Abstract

We show that populations of identical uncoupled neurons exhibit partial phase synchronization when stimulated with independent, random unidirectional current spikes with interspike time intervals drawn from a Poisson distribution. We characterize this partial synchronization using the phase distribution of the population, and consider analytical approximations and numerical simulations of phase-reduced models and the corresponding conductance-based models of typical Type I (Hindmarsh–Rose) and Type II (Hodgkin–Huxley) neurons, showing quantitatively how the extent of the partial phase synchronization depends on the magnitude and mean interspike frequency of the stimulus. Furthermore, we present several simple examples that disprove the notion that phase synchrony must be strongly related to spike synchrony. Instead, the importance of partial phase synchrony is shown to lie in its influence on the response of the population to stimulation, which we illustrate using first spike time histograms.

1 Introduction

Synchronized neural activity is believed to be important for various brain functions, including visual processing (Gray et al. 1989; Usrey and Reid 1999), odor identification (Friedrich and Stopfer 2001), signal encoding (Stevens and Zador 1998), cortical processing (Singer 1993), learning (Stent 1973), and memory (Klimesch 1996). It can also be detrimental. For example, resting tremor in patients with Parkinson’s disease has been linked to synchronization of a cluster of neurons in the thalamus and basal ganglia (Pare et al. 1990). Similarly, essential tremor and epileptic seizures are commonly associated with synchronously firing neurons (Elble and Koller 1990; Traub et al. 1989), which can become (partially or fully) synchronized due to coupling and/or stimulation by common inputs.

Even common inputs that are random or noisy can lead to synchronization (Goldobin and Pikovsky 2005a, b; Nakao et al. 2005). This applies to a broad range of neuron models (Ritt 2003; Teramae and Tanaka 2004), with little constraint on intrinsic properties. It has also been shown experimentally that some neurons, in particular olfactory bulb mitral cells, can synchronize in this manner in vitro (Galán et al. 2006). This is relevant to spike timing reliability experiments, which found that repeated injection of the same fluctuating current into a single cortical neuron leads to a more reproducible spiking pattern than injection of a constant current (Mainen and Sejnowski 1995). Indeed, an experiment in which multiple uncoupled neurons are subjected to a common input is equivalent to an experiment in which a single neuron is subjected to the same input over multiple trials, as in Mainen and Sejnowski (1995), so that spike timing reliability can be viewed as synchronization across trials.

Typically, as in the references cited above, synchrony in the context of neuroscience is discussed in terms of synchronization of action potentials (spikes). Synchronization of spike times is a natural way to quantify the dynamic behavior of a population of neurons, since typically the only observable quantities are voltages. There is, however, another form of synchrony that can play an important role in the dynamic response of a population of oscillatory neurons to stimulus.

In this paper, we consider partial phase synchronization, a characteristic that provides information about the dynamical state of a population of oscillatory neurons not easily obtained by studying spike synchrony alone. The concept of partial phase synchronization applies to populations of oscillatory neurons, each of which evolves in time according to dynamics that can be represented by a one-dimensional phase oscillator (a “simple clock”, in the terminology of Winfree 2001). Here, the phase of a neuron relates its state, in time, to the firing of an action potential (or other marker event on its periodic orbit), as described for example in Guckenheimer (1975) and Brown et al. (2004a). The dynamical state of a population of phase oscillators can be characterized by the distribution of their phases (over the unit circle). Partial phase synchronization refers to the degree to which this phase distribution possesses a single dominant mode, meaning that there is a higher density of neurons with phases near this region than anywhere else on the circle. Complete phase synchronization is the limiting case of partial phase synchronization where all the neurons have exactly the same phase, which yields a phase distribution in the form of a Dirac delta function.

After specifying the neuron and stimulus models we consider, we present an intuitive description of the mechanism by which partial phase synchronization can occur. We then consider a case in which partial phase synchronization occurs in a population of identical uncoupled neurons that each receive different (uncorrelated) spike trains with interspike time intervals drawn from a Poisson distribution, which could represent, for example, the background activity of other neurons (Softky and Koch 1993). We then present a detailed theory for calculating the long-time phase distribution, the results of which are compared with simulation data for populations of phase oscillators and conductance-based neuron models. Two different neural models are studied: Hindmarsh–Rose (Rose and Hindmarsh 1989), a prototypical Type I neuron, and Hodgkin–Huxley (Hodgkin and Huxley 1952), a prototypical Type II neuron (Rinzel and Ermentrout 1998). These models are considered for parameter regions in which the neurons spike periodically in time. We show that the simulation results for a population of phase oscillators very closely match those for a population of conductance-based neuron models exposed to the same type of stimulus, and both sets of simulation data yield long-time phase distributions that are very similar to the theoretical predictions. This verifies that the concept of phase synchronization, although developed based on phase oscillators, is an effective and accurate tool for modeling populations of conductance-based neuron models. We then exploit the computational efficiency of simulating phase oscillator models in order to map how the degree of phase synchronization depends on the magnitude and mean spike frequency of the stimulus.

The balance of the paper will be devoted to discussing the practical implications of partial phase synchrony to the response of the population. We will develop several simple examples that illustrate some of the complex relationships between phase synchronization and spike synchronization. We stress that phase synchronization does not necessarily imply spike synchronization, and spike synchronization guarantees phase synchronization only in the weakest sense. The two phenomena are distinct, and both are important dynamical characteristics of a neural population.

2 Methods

2.1 Models

2.1.1 Conductance-based models

We consider a population of N identical neurons with dynamics governed by a conductance-based model of the form

for i = 1, ⋯ , N. For the i th neuron, \(V_i \in \mathbb{Re}\) is the voltage across the membrane, \({\bf n}_i \in \mathbb{Re}_{[0,1]}^{m}\) is the vector of gating variables, \(C \in \mathbb{Re}^+\) is the membrane capacitance, \(I_g: \mathbb{Re} \times \mathbb{Re}^m \rightarrow \mathbb{Re}\) is the sum of the membrane currents, \(I_b \in \mathbb{Re}\) is a constant baseline current, and \(I_i: \mathbb{Re} \rightarrow \mathbb{Re}\) is the current stimulus.
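A general form consistent with these definitions is sketched below. It assumes that \(I_g\) carries the sign of each ionic current, and the gating vector field \({\bf G}\) is a label introduced here for illustration only:

\[
C \frac{dV_i}{dt} = I_g(V_i, {\bf n}_i) + I_b + I_i(t), \qquad \frac{d{\bf n}_i}{dt} = {\bf G}(V_i, {\bf n}_i).
\]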

We choose prototypical systems to represent two common types of neurons (Rinzel and Ermentrout 1998). As a Type I neuron model, we consider the Hindmarsh–Rose equations (Rose and Hindmarsh 1989), which represent a reduction of the Connor model for crustacean axons (Connor et al. 1977):

As a Type II neuron model, we consider the Hodgkin–Huxley equations, which model the squid Loligo giant axon (Hodgkin and Huxley 1952):

We hope the use of these prototypical models will provide the reader with some familiarity and intuition.

2.1.2 Phase models

We choose baseline current, I b , values such that each neuron’s only attractor is a stable periodic orbit: I b = 5 mA for the Hindmarsh–Rose model, and I b = 10 mA for the Hodgkin–Huxley model. Since each system has a stable periodic orbit at the prescribed I b value, it is useful to map the system to phase coordinates (Brown et al. 2004a; Guckenheimer 1975; Kuramoto 1984; Winfree 1974, 2001). Following Guckenheimer (1975), there exists a homeomorphism h: \(\mathbb{Re} \times \mathbb{Re}^m \rightarrow \mathbb{S}^1\) mapping any point on the periodic orbit γ to a unique point on the unit circle θ ∈ [0,2π). Furthermore, in a neighborhood of the periodic orbit, the phases are determined by isochrons, whose level-sets are the sets of all initial conditions for which the distance between trajectories starting on the same isochron goes to zero as t → ∞ (Josić et al. 2006). By convention, when θ i = 0 the ith neuron fires. In the limit of small perturbations, the stimulus spikes serve to nudge the state slightly off the periodic orbit. In this way, the state may be moved onto a different isochron resulting in a difference in phase, with a magnitude and direction determined by the phase response curve (PRC). This reduction enables the dynamics of an (m + 1)-dimensional ordinary differential equation (ODE) neuron model to be represented by the evolution of a scalar phase variable. A recent discussion of this framework was communicated in Gutkin et al. (2005).

We can therefore model our neural population by a set of one-dimensional phase models - one for each neuron. In the presence of stimuli I i (t), this phase reduction yields the following uncoupled N-dimensional system of equations for the phases θ i of a population of N identical neurons (Brown et al. 2004a; Gutkin et al. 2005):

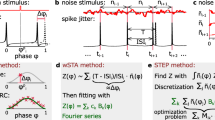

Here θ i ∈ [0,2 π), ω = 2π/T where T is the period of the periodic orbit, and Z(θ) is the PRC. Of particular interest are perturbations in the voltage direction, i.e. \(Z_V(\theta) = \frac{\partial \theta}{\partial V}\). The software package XPPAUT (Ermentrout 2002) was used to numerically calculate PRCs for the Hindmarsh–Rose and Hodgkin–Huxley models. For computational convenience, we use approximations to these PRCs shown in Fig. 1: the Hindmarsh–Rose PRC is approximated by a curve-fit of the form \(Z_V(\theta) \approx \frac{K}{\omega}(1-\cos(\theta))\) where K ≈ 0.0036 (mV ms)\(^{-1}\) for I b = 5 mA (Brown et al. 2004a, b), cf. Ermentrout (1996); the Hodgkin–Huxley PRC is approximated as a Fourier series with 21 terms.
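Written out for perturbations in the voltage direction, and consistent with the phase jump \(\Delta \theta = Z_V(\theta) \bar{I}/C\) used in Section 2.2.1, the phase model for each neuron can be sketched as

\[
\frac{d\theta_i}{dt} = \omega + \frac{Z_V(\theta_i)}{C}\, I_i(t), \qquad i = 1, \ldots, N.
\]

In the absence of stimulus this reduces to uniform rotation at the natural frequency ω.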

2.1.3 Stimulus model

To model the independent random stimuli, we suppose that each neuron receives δ-function current inputs of strength \(\bar{I}\) at times determined by a Poisson process with mean frequency α:

where \(t_i^k\) is the time of the k th input to the i th neuron. The times of these inputs are determined by drawing the interspike intervals from the distribution

We emphasize that the neurons in our models are not coupled to each other, and that each one receives the same baseline current I b but a different current stimulus I i (t).
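The input times described above can be generated as in the following minimal sketch. It assumes the standard property of a Poisson process with rate α, namely that interspike intervals are exponentially distributed with density \(p(\tau) = \alpha e^{-\alpha \tau}\). The function name `poisson_input_times` and the parameter values in the usage lines are illustrative choices, not taken from the simulations reported here.

```python
import numpy as np

def poisson_input_times(alpha, t_final, rng):
    """Return increasing input times in [0, t_final) for one neuron.

    alpha   : mean input frequency (spikes/ms)
    t_final : length of the time window (ms)
    rng     : numpy.random.Generator supplying the random draws
    """
    times = []
    # Exponential interspike intervals with mean 1/alpha (assumed form).
    t = rng.exponential(1.0 / alpha)
    while t < t_final:
        times.append(t)
        t += rng.exponential(1.0 / alpha)
    return np.array(times)

# Independent spike trains for three uncoupled neurons (illustrative values).
rng = np.random.default_rng(0)
trains = [poisson_input_times(alpha=0.1, t_final=1000.0, rng=rng)
          for _ in range(3)]
```

Because each call draws its own intervals, the trains share the same statistics but are uncorrelated across neurons.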

In our analysis, we are interested in determining how the population-level behavior depends on α and \(\bar{I}\). Softky and Koch (1993) found that the mean excitatory spike frequency in certain brain neurons is about 83 Hz or α = 0.083 ≈ 0.1 spikes/ms. This falls at the high end of the gamma-rhythm range (Kopell 2000), and sets the scale for biologically relevant α values. We base our \(\bar{I}\) scaling on a unit 1 mA, so that a spike gives an instantaneous voltage change of order \(\bar{I}/C = 1\) mV. Since the dynamic range of a periodically firing neuron is approximately 100 mV, this corresponds to a small perturbation in the depolarizing direction (Tass 2000).

2.2 Mechanism for partial phase synchronization

2.2.1 Intuitive description

We take partial phase synchronization to mean that there is a higher probability of a neuron having a certain range of phases than another range of equal size. The following illustrates qualitatively how partial phase synchronization can occur for a population of neurons subjected to the previously described stimuli. Take, for example, the PRC Z V (θ) = 1 − cos(θ). Recall the time evolution of a neuron is governed by Eq. (3). Now imagine a set of four such neurons on the unit circle with this PRC starting at \(\{\theta_1 , \theta_2 , \theta_3 , \theta_4 \} = \{ 0 , \frac{\pi}{2} , \pi , \frac{3\pi}{2} \}\). These are shown as black markers on Fig. 2(a) and are labeled according to their ith indices. Figure 2(a) also shows the phase of the four neurons in the absence of stimuli after some time interval Δt has elapsed: each neuron has advanced in phase by Δθ = ωΔ t to positions indicated with open markers, labeled i′. This behavior is termed drift and is simply due to the natural frequency of the neurons. If each of the neurons was exposed to a unit stimulus during this same time interval Δt, their phases advance additionally, as determined by the PRC, by \(\Delta \theta = Z_V(\theta) \bar{I}/C\) (if the PRC contains negative values, the phase can also be retarded). The position of each of the neurons after drift and stimulus is indicated by striped markers labeled by i′′. Notice that the position of neuron 1 (starting at θ 1 = 0) with stimulus (1′′) is the same as without stimulus (1′). This is because Z V (0) = 0 for this example.

If all four neurons are subjected to stimulus, it is apparent from the i′′ locations in Fig. 2(a) that the distribution of neurons has changed. What began as uniform has become asymmetric. The situation becomes more apparent if many more neurons are displayed, as in Fig. 2(b). Here we see a definite “bunching” around θ = 0. Many neurons have similar phases, hence phase synchronization.

The above description assumed each neuron receives the same input. Conceptually, one can envision a similar argument if all neurons receive unidirectional independent Poisson-type stimuli with the same statistics, i.e. mean interspike frequency α and magnitude \(\bar{I}\).

2.2.2 Theoretical development

Suppose that each neuron in a population received the same non-random stimulus I(t). For example, I(t) might be a step function stimulus (Brown et al. 2004a) or a sinusoidal stimulus. We are interested in the probability that a neuron will have a phase between θ and θ + dθ at time t, given by ρ(θ,t)dθ, where ρ(θ,t) is the probability distribution function for the population of neurons. The phase synchronization can be represented by the shape of ρ(θ,t) over θ ∈ [0,2π) at time t. The following partial differential equation can be derived for N → ∞ for such a population of neurons (e.g., Brown et al. 2004a):
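For reference, conservation of probability for phases advected by the phase model gives a continuity equation. A sketch, assuming the phase velocity \(\omega + Z_V(\theta) I(t)/C\) for a common input I(t):

\[
\frac{\partial \rho(\theta,t)}{\partial t} = -\frac{\partial}{\partial \theta}\left[ \left( \omega + \frac{Z_V(\theta)}{C}\, I(t) \right) \rho(\theta,t) \right].
\]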

For the present problem with independent random inputs I i (t), the situation is more complicated. We proceed by deriving an expansion of the density evolution that is conducive to perturbation methods consistent with the neuron models and random inputs under consideration. For the values of mean spike frequency, α, and neuron natural firing frequency, ω, used in this paper, it is reasonable to consider the ratio ω/α as \(\mathcal{O}(1)=\mathcal{O}(\epsilon^0)\) for the Hodgkin–Huxley model and \(\mathcal{O}(\epsilon)\) for the Hindmarsh–Rose model, where ε is a small parameter.

2.2.3 Kramers–Moyal expansion

The probability distribution ρ(θ,t) obeys the Kramers–Moyal expansion (Coffey et al. 2004)

where

Here \(\mathbb E\) denotes the expected value, and θ(0) = θ 0 for the realizations used to calculate \(D^{(n)}(\theta_0)\). Now

where Δθ is the total jump in θ due to the random Poisson inputs in [0,Δt). For sufficiently small Δt, we expect that either no inputs occur, in which case Δθ = 0, or one input occurs, giving

For n = 1 and sufficiently small Δt, we have

Using

gives

so that

Similarly for n > 1,

so that

Finally, letting

where Z d and ε are nondimensional, we obtain the following equation for the steady (\(\partial/\partial t = 0\)) density ρ s (θ):

We now consider the small ε limit, corresponding to random inputs with small amplitude. We substitute

into Eq. (16) and solve at successive orders of ε. We divide this into two cases based on the relative size of ω/α.
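The coefficients entering this expansion can be sketched as follows. The sketch assumes the standard normalization of the Kramers–Moyal expansion and that, over a short interval Δt, a single input arrives with probability approximately αΔt and produces the jump \(Z_V(\theta_0)\bar{I}/C\):

\[
\frac{\partial \rho}{\partial t} = \sum_{n=1}^{\infty} \left( -\frac{\partial}{\partial \theta} \right)^{n} \left[ D^{(n)}(\theta)\, \rho \right], \qquad
D^{(n)}(\theta_0) = \frac{1}{n!} \lim_{\Delta t \rightarrow 0} \frac{\mathbb{E}\left[ (\theta(\Delta t) - \theta_0)^n \right]}{\Delta t}.
\]

With \(\theta(\Delta t) - \theta_0 = \omega \Delta t + \Delta\theta\), the drift term contributes only at n = 1, while the jumps contribute at every order:

\[
D^{(1)}(\theta) = \omega + \frac{\alpha \bar{I}}{C} Z_V(\theta), \qquad
D^{(n)}(\theta) = \frac{\alpha}{n!} \left( \frac{\bar{I}\, Z_V(\theta)}{C} \right)^{n} \quad (n > 1).
\]

Each successive coefficient carries one more power of the jump size, which is why a small input amplitude leads naturally to a perturbation expansion.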

2.2.4 Hodgkin–Huxley: \(\frac{\omega}{\alpha} = {\cal O}(1)\)

This case applies when the ratio of natural frequency to mean spike frequency is of order 1, which corresponds to the Hodgkin–Huxley model in our analysis.

At \({\cal O}(\epsilon^0)\), we find that

where the value of the constant follows from the normalization

At \({\cal O}(\epsilon)\), we obtain

which has solution

The value of the constant k 1 is determined by the normalization condition

Thus, to \({\cal O}(\epsilon)\),

At \({\cal O}(\epsilon^2)\), we obtain

This has solution

Using the normalization condition

we obtain the following approximation for ρ s (θ) accurate to \({\cal O}(\epsilon^2)\):

where

The truncation at \({\cal O}(\epsilon^2)\) is equivalent to formulating the problem in terms of a Fokker–Planck equation, a form also known as the diffusion equation.

2.2.5 Hindmarsh–Rose: \(\frac{\omega}{\alpha} = {\cal O}(\epsilon)\)

This case applies when the natural frequency is much smaller than the mean spike frequency, which corresponds to the Hindmarsh–Rose model in the regime studied in this paper.

We let

where \(\tilde{\omega} = {\cal O}(1)\). At \({\cal O}(\epsilon)\) we obtain

This has solution

where

A useful interpretation for this result is that, on average, the current \(\alpha \bar{I}\) enters each neuron during every time unit. If such current came in uniformly, so that \(I(t) = \alpha \bar{I}\), Eq. (6) would have a steady distribution given by Eq. (31).
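This interpretation can be made explicit with a short worked step, assuming the density is advected at the phase velocity \(\omega + Z_V(\theta)\,\alpha\bar{I}/C\). Setting the time derivative to zero requires the probability flux to be constant in θ:

\[
\frac{d}{d\theta}\left[ \left( \omega + \frac{\alpha \bar{I}}{C} Z_V(\theta) \right) \rho_s(\theta) \right] = 0
\quad \Longrightarrow \quad
\rho_s(\theta) = \frac{c}{\omega + \frac{\alpha \bar{I}}{C} Z_V(\theta)},
\]

where the constant c is fixed by \(\int_0^{2\pi} \rho_s(\theta)\, d\theta = 1\). The density is largest where the phase velocity is smallest. For a PRC proportional to \(1 - \cos(\theta)\) this occurs at θ = 0, in line with the peak location reported for the Hindmarsh–Rose model in Section 3.2.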

At \({\cal O}(\epsilon^2)\) we obtain

This has solution

Using the normalization condition

we obtain the following approximation for ρ s (θ) accurate to \({\cal O}(\epsilon)\):

where

A different approach for the derivation of the steady probability distribution for a related problem is given in Nakao et al. (2005), which is primarily concerned with showing phase synchronization in the case in which all I i (t) in Eq. (3) are identical. Here the dynamics are reduced to a random phase map, and the evolution of the density associated with this map is described by a generalized Frobenius–Perron equation. The steady distribution can be found numerically, or analytically in certain limits. We find that our theory described above gives good agreement with our numerical results, and provides a straightforward alternative to the approach of Nakao et al. (2005).

2.3 Simulation methods

2.3.1 Conductance-based models

A numerical routine was constructed to simulate large populations of neurons described by (m + 1)-dimensional conductance-based models. Since the neurons are not coupled, the simulations can be conducted on a neuron-by-neuron basis. A second-order Runge–Kutta method with small fixed time step Δt was used for \(\mathcal{O}((\Delta t)^2)\) accuracy and compatibility with the Poisson-distributed stimuli. In our numerical models, we approximate the δ-function by a rectangular spike of duration Δt and magnitude 1/Δt.

To obtain a uniform initial phase distribution across the population, the (m + 1)-dimensional periodic orbit is calculated at run-time as a set of (m + 2)-tuples of the states and time. The time is then scaled to [0,2π), and initial states are assigned by drawing a scalar θ 0 from a uniform random number generator and using the periodic orbit to interpolate the initial data. Once the initial condition has been set, the system is integrated. Periodically throughout the time evolution of each neuron, the program computes its phase using a routine which will presently be described, yielding a sampled time-series of the neuron’s phase even though the equations are not in phase-reduced form.

Intuitively, the phase should be related to the time it would take the neuron to fire, beginning at its present state, in the absence of stimuli. Computationally, some additional care is required. We use the Hodgkin–Huxley equations as an example to illustrate some of the computational complexities. For the I b values considered, the vector field of this system contains a spiral source near the periodic orbit (Rinzel and Miller 1980), an example of a phase singularity (Winfree 2001). Therefore, it is possible for a trajectory on the periodic orbit passing near this source to be pushed arbitrarily close to it by stimulus. A neuron with a state very near the spiral source may take a significant amount of time to evolve back to a neighborhood close to the periodic orbit, especially if the (positive) real parts of the unstable eigenvalues are small. This situation is extremely rare for the models and parameter ranges we consider. In fact, it did not occur for any of the simulations which generated the data presented in this paper. However, to be useful in a general setting, the phase calculation scheme must be able to identify this case and alert the program that a phase singularity has been reached. In most settings dealing with behavior of large populations, these cases can be dismissed without loss of statistical significance, since they appear to occur on a set of extremely small measure.

To compute phase, we use the current state vector as the initial (t = 0) data for a new simulation which is run in absence of stimulus. This simulation is integrated until two spikes are observed. Let the time it takes for a spike (above a set voltage threshold) to occur be T 1 (a large upper bound on the allowable T 1 is used to identify possible phase singularities). Let the time at which the next spike occurs be T 2. The interspike interval between the two is then T int = T 2 − T 1. Then phase can be computed as

An important component of this algorithm is its ability to correctly identify a spike. We use a selection of logical checks which test the voltage time series for maxima above a set threshold, and have a prescribed minimum interspike time interval. Obviously, some knowledge of the spike magnitude and period is essential, but this is readily available since the computation of the periodic orbit at run-time provides all the necessary information. Using this method, one can simulate N neurons for P sample steps and receive, as output, an N ×P matrix containing the discrete time evolution of θ i as the values in row i.
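The final step of this phase calculation can be sketched as below. The formula used, \(\theta = 2\pi(1 - T_1/T_{\rm int})\), is an assumption that follows from the convention that θ = 0 at a spike together with uniform phase advance on the unstimulated orbit. The function name and the `t1_max` argument (standing in for the upper bound on T 1) are hypothetical, and spike detection itself is not shown.

```python
import math

def phase_from_spike_times(t1, t2, t1_max):
    """Phase in [0, 2*pi) from the first two unstimulated spike times.

    t1     : time until the first spike, measured from the current state
    t2     : time at which the second spike occurs
    t1_max : upper bound on t1; exceeding it flags a possible phase singularity
    Returns None when the bound is exceeded.
    """
    if t1 > t1_max:
        return None
    t_int = t2 - t1  # interspike interval of the unstimulated neuron
    # Assumed convention: theta = 0 at a spike, so a neuron about to fire
    # (t1 near 0) has phase near 2*pi, which wraps to 0.
    theta = 2.0 * math.pi * (1.0 - t1 / t_int)
    return theta % (2.0 * math.pi)
```

A neuron halfway between spikes, with t1 equal to half of the interspike interval, is assigned phase π under this convention.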

The above method provides an accurate and robust way of simulating and determining phase information for large populations of uncoupled neurons of arbitrary dimension with a wide range of forcing functions and environmental parameters. Not limited to small perturbations, the utility of this method is constrained only by the relative stiffness of the neuron model and the geometry of the basin of attraction of the periodic orbit. However, such flexibility comes at the price of computational speed, which is strongly dependent on the dimension of the neuron model and the type of solver used.

2.3.2 Phase-reduced models

We are interested in the population dynamics over as wide a \((\alpha,\bar{I})\) range as possible. In order to more efficiently map the parameter space, we use the phase-reduced form of the neuron equations. We have implemented an algorithm which very efficiently simulates Eq. (3) for large N and for long times. It exploits the fact that Eq. (3) can be solved exactly for the time intervals for which no inputs are present. One gets times at which inputs occur by recognizing that the time interval τ between subsequent inputs for an individual neuron can be obtained by sampling the distribution (5). When an input comes for the i th neuron, we instantaneously let

Again, the uncoupled assumption of the population allows each θ i to be simulated independently, and the program creates an N ×P output matrix of the discrete time evolution of θ i .
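An event-driven scheme of this kind can be sketched as follows. This is an illustration under stated assumptions rather than the routine used for the reported results: between inputs the phase advances exactly by ωτ, each input applies the jump \(\theta \rightarrow \theta + Z_V(\theta)\bar{I}/C\), and interspike intervals are exponential with mean 1/α. All function and variable names, the `kick` argument (standing for \(\bar{I}/C\)), and the usage values are hypothetical.

```python
import numpy as np

def simulate_phase_population(n_neurons, omega, prc, kick, alpha,
                              t_final, dp, rng):
    """Return an N x P array of phases sampled every dp time units.

    prc  : callable giving Z_V(theta)
    kick : jump scale I_bar / C applied at each input
    """
    n_samples = 1 + int(round(t_final / dp))
    theta_out = np.empty((n_neurons, n_samples))
    for i in range(n_neurons):
        theta = rng.uniform(0.0, 2.0 * np.pi)  # uniform initial phase
        t = 0.0
        next_input = rng.exponential(1.0 / alpha)
        for k in range(n_samples):
            t_sample = k * dp
            # Process every input arriving up to this sample time.
            while next_input <= t_sample:
                theta += omega * (next_input - t)  # exact drift to the input
                theta += prc(theta) * kick         # instantaneous jump
                t = next_input
                next_input += rng.exponential(1.0 / alpha)
            theta += omega * (t_sample - t)        # exact drift to the sample
            t = t_sample
            theta_out[i, k] = theta % (2.0 * np.pi)
    return theta_out

# Illustrative usage with a Hindmarsh-Rose-like PRC (K/omega)(1 - cos(theta)).
omega_hr = 2.0 * np.pi / 312.0
hr_prc = lambda th: (0.0036 / omega_hr) * (1.0 - np.cos(th))
theta_demo = simulate_phase_population(
    n_neurons=200, omega=omega_hr, prc=hr_prc, kick=1.0,
    alpha=0.1, t_final=1000.0, dp=10.0, rng=np.random.default_rng(0))
```

Since no time stepping is needed between inputs, the cost per neuron scales with the number of inputs and samples rather than with an integration step.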

3 Results

We simulate populations of uncoupled neurons each subjected to independent spike trains of set magnitude, \(\bar{I}\), with interspike intervals drawn from a Poisson distribution parameterized by mean spike frequency α. Our results include grayscale plots of ρ(θ,t) showing the time evolution of the phase distribution of the population, time-averaged distribution curves, and mappings of the distribution peak value and location as a function of \(\bar{I}\) and α. Comparisons are made between simulations of the full conductance-based models, simulations of the phase models, and theoretical estimates.

For notational convenience, we will refer to the N ×P output matrix as Θ. We remind the reader that the ith row of Θ represents the time-series values of θ i , the phase of neuron i, as it evolves from initial time t 0 to final time t f . While the differential equations are solved using a small time step Δt, we report phase using a larger time step Δp. This ensures the displayed data will not have spurious characteristics due to limitations of graphics resolution, and results in a dramatic performance increase in the simulations of the full conductance-based models. Therefore, the P columns of Θ represent the (t f − t 0)/ Δp sample points, plus a left-concatenated column of the initial phases, i.e. P = 1 + (t f − t 0)/ Δp.

3.1 Phase distribution dynamics

The phase density of the population at each sample point, ρ(θ,t) is computed from Θ by taking an appropriately scaled histogram of the corresponding column of Θ. For example, ρ(θ) at the kth sample point is calculated by

where hist(.,.) is the standard histogram binning function, n bins is the number of bins dividing the interval [0,2 π), and e k is a P ×1 vector of zeros with a 1 in the kth entry. The argument Θ e k is simply the kth column of Θ, representing the phase of all the neurons at time t = t 0 + (k − 1) Δp, so that k = 1 implies t = t 0. The scaling used in Eq. (39) gives the normalizing condition

where Δθ is the bin size 2 π/ n bins. Equation (39) returns an n bins ×1 vector discretization of ρ(θ,t).
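The scaled histogram can be sketched as follows, assuming the scaling is chosen so that the bin values times the bin width sum to one. The function name `phase_density` is illustrative, and `numpy.histogram` stands in for the generic hist(.,.) function.

```python
import numpy as np

def phase_density(theta_column, n_bins):
    """Discretized phase density from one column of the phase matrix.

    theta_column : 1-D array of phases in [0, 2*pi), one entry per neuron
    n_bins       : number of equal bins dividing [0, 2*pi)
    Returns (rho, edges) with sum(rho) * d_theta equal to 1.
    """
    counts, edges = np.histogram(theta_column, bins=n_bins,
                                 range=(0.0, 2.0 * np.pi))
    d_theta = 2.0 * np.pi / n_bins
    # Divide by N * d_theta so the histogram integrates to one.
    rho = counts / (theta_column.size * d_theta)
    return rho, edges
```

For a uniform phase distribution each entry of `rho` is close to 1/(2π), which gives a baseline against which the peak value of a partially synchronized distribution can be compared.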

Populations of 1,000 neurons were simulated, beginning with a uniform phase distribution at t 0 = 0, and subjected to random sequences of spikes with magnitude \(\bar{I}=1\) mA. An integration time step of Δt = 0.01 ms was used. Since the time scales of the Hindmarsh–Rose and Hodgkin–Huxley equations are significantly different, separate simulation parameters are necessary, as given in Table 1.

Figure 3(a, b) shows our results for the simulations of the full conductance-based models, to be compared with Fig. 3(c, d), which shows the results from the phase-reduced model simulations. It is apparent that the phase reduction yields both qualitatively and quantitatively similar results. Since the phase response curve for the Hodgkin–Huxley system is of relatively small magnitude, we have used an α value of 1, rather than 0.1, to more clearly illustrate the dynamic behavior of the phase distribution.

One notices in these figures a behavior that can be described as “breathing”, i.e. there is an oscillation (with period of approximately 200 ms for the Hindmarsh–Rose model and approximately 15 ms for the Hodgkin–Huxley model) about a mean distribution. We found numerically that such oscillations persist for long times of the order of \(10^6\) ms. It is worth noting that this oscillatory behavior is exhibited in both the phase-reduced and conductance-based model simulations, suggesting that it may not be an artifact of the numerics, but rather something inherent in the dynamics which is not predicted by the theoretical framework. For the purposes of our subsequent analysis, we view these oscillations as a secondary effect, to be pursued in future work. To illustrate the average shape of the ρ(θ,t) distribution curves, we average each simulation over the last 25% of the integration time interval, which filters the oscillatory effects of the “breathing” behavior.

Figure 4(a) shows that theoretical predictions match the numerical data very closely for the Hindmarsh–Rose system. The results for the Hodgkin–Huxley system are qualitatively similar, as illustrated in Fig. 4(b), but display a slight mismatch of the θ location of the distribution peak.

Comparison of theoretical distribution estimate with averaged simulation data for both conductance-based and phase-reduced models for Hindmarsh–Rose (a) and Hodgkin–Huxley (b). Solid lines represent full conductance-based model simulation data, dashed lines represent phase-reduced model simulation data, and dotted lines represent the theoretical estimates

3.2 Parametric study

The computationally efficient phase-reduced model allows for a more complete mapping of the population response in parameter space. As a characterization of the population response, we consider the magnitude, ρ max, and location, θ max, of the peak of the t → ∞ averaged probability distribution. As in Section 3.1, we consider populations of 1,000 neurons. The Hindmarsh–Rose neurons are simulated for 1,000 ms (natural period ≈ 312 ms). The Hodgkin–Huxley neurons are simulated for 100 ms (natural period ≈ 14.6 ms).

Figure 5 compares the phase model results to the theoretical prediction using Eq. (36) for the Hindmarsh–Rose system over 0 < α ≤ 3 spikes/ms and \(0 < \bar{I} \le 5\) mA, for the ρ max characteristic. We find qualitative and reasonable quantitative agreement. For simulations of the full conductance-based model, simulations of the phase model, and theoretical predictions, the location θ max of the probability distribution function peak ρ max stays at θ max = 0.

The plots in Fig. 6 show our results over the same ranges of α and \(\bar{I}\) for the Hodgkin–Huxley system. Figure 6(a) illustrates the more complex parameter dependence of ρ max. While the theory predicts that θ max will be independent of α and \(\bar{I}\), Fig. 6(b) shows that it is weakly dependent on them. Since our theory for this model is based on the ratio ω/α being \(\mathcal{O}(1)\), we have plotted the theoretical results over a reduced region of α and \(\bar{I}\) parameter space, as shown in Fig. 6(d) to be compared with the simulation results reproduced over this reduced range in Fig. 6(c). We see that the theoretical results qualitatively capture the trend of the data, but start to differ quantitatively as α and \(\bar{I}\) increase. Importantly, for both the Hindmarsh–Rose and Hodgkin–Huxley models, we have shown that the degree of synchronization, as measured by the peak of the probability distribution function of the phase, increases with both the size and frequency of the stimuli, and can become quite substantial.

Hodgkin–Huxley population-level response characteristics. Numerical ρ max over (\(\alpha, \bar{I}\)) parameter space (a). Numerical θ max over (\(\alpha, \bar{I}\)) parameter space (b). Comparison of numerical results (c) to theoretical predictions (d) over the range of (\(\alpha, \bar{I}\)) for which the assumption \(\omega/\alpha = \mathcal{O}(1)\) is reasonable

4 Discussion

4.1 Relationship between partial phase synchrony and spike synchrony

The importance of studying partial phase synchronization lies not in its relationship to spike synchrony, but in its characterization of the dynamical state of the population, which governs the response of the population to future stimulus. Before addressing the practical implications of partial phase synchrony, we will illustrate some important points regarding the relationship of phase synchrony and spike synchrony by discussing several simple examples. We will show that, in the general case of a population of stimulated neurons, the degree of partial phase synchronization is only weakly related to spike synchrony.

In the following scenarios, we consider a population of N identical uncoupled phase oscillators each obeying the following ODE:

In the absence of input, i.e. I i (t) ≡ 0, there is a strong relationship between phase synchrony and spike synchrony for populations of uncoupled identical oscillators. If all oscillators have identical phase, it is obvious that they will cross the 0/2π spiking threshold at identical times. At the opposite extreme, if the distribution of the population is uniform, it follows that the spike times will be uniform in time (and thus desynchronized).

In the presence of input, coupling, or broad distributions of natural frequency, this strong relationship no longer holds. Since we have restricted our attention to populations of identical uncoupled neurons in this paper, we will develop several simple examples that illustrate how this relationship breaks down in the presence of input stimulus.

Our primary concern is showing the mathematical relationship between phase synchrony and spike synchrony, so we will assume that there are no constraints on the inputs we can use, and the oscillators themselves are governed by phase response curves that allow them to be controlled to achieve any prescribed dynamics:

This assumption is not true in general for real neurons (since the phase response curves are often nonlinear and non-invertible). The question of whether a population of neurons with a given phase response curve can be driven to achieve specific dynamics when stimulated by inputs from a restricted set is a control theoretic problem and an aim of our future research. For the time being, we will follow our controllability assumption, which will allow us to develop examples that are simple and easy to verify.

In order to quantify partial phase synchronization, we introduce the Kuramoto order parameter (Kuramoto 1984):

\[ r(t) = \frac{1}{N} \sum_{j=1}^{N} e^{\mathrm{i}\theta_j(t)} \tag{41} \]

In particular, we consider the magnitude of the order parameter, |r(t)|, which ranges from zero for a uniform phase distribution to one for a completely synchronized phase distribution (a Dirac delta function).
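The two limiting values of |r| are easy to confirm numerically. The following minimal sketch assumes the usual definition of the Kuramoto order parameter as the population average of exp(iθ); the function name, the population size, and the common phase 0.7 are hypothetical.

```python
import numpy as np

def kuramoto_order_parameter(theta):
    """Complex order parameter: the population mean of exp(i * theta)."""
    theta = np.asarray(theta, dtype=float)
    return np.mean(np.exp(1j * theta))

n = 1000  # illustrative population size

# Phases spread evenly around the circle: |r| is zero up to round-off.
r_uniform = abs(kuramoto_order_parameter(2.0 * np.pi * np.arange(n) / n))

# All phases identical (a Dirac delta distribution): |r| equals one.
r_sync = abs(kuramoto_order_parameter(np.full(n, 0.7)))

print(r_uniform, r_sync)
```

Any partially synchronized population falls between these two extremes.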

4.1.1 Example 1: phase synchrony without spike synchrony

Consider a population of N (uncoupled) phase oscillators, labeled \(\theta_1, \ldots, \theta_N\), evolving according to the following ODE:

with the following initial conditions:

This population will have uniform interspike intervals, i.e. no spike synchrony whatsoever, while possessing an order parameter |r| →1 as Δ→0 and N → ∞, which indicates an arbitrarily high degree of phase synchronization.

What has been done here is to define a neighborhood around θ = π, with interval measure equal to 2Δ, where the neurons move very slowly. Outside this neighborhood the neurons advance very quickly. We have set up the initial conditions such that most of the oscillators (all but two) are in the small region where they move slowly. The one that starts at zero quickly moves to the starting edge of the π-neighborhood phase interval. During the same time interval, the last oscillator, \(\theta_N\), advances quickly from the end of the π-neighborhood phase interval around the circle to the 0/2π spiking threshold. Also during this time interval, all the other neurons slowly advance in order along the π-neighborhood phase interval. After the interspike time interval, the neurons have rotated positions, but the overall population distribution is identical to where it started from, so after relabeling the oscillators, we are back to the starting configuration. Now if we make Δ small, we increase the level of phase synchronization, but the firing continues to be completely uniform (desynchronized). In fact, as Δ approaches zero, all the neurons except one will be arbitrarily close to π. Even when the remaining neuron sits at the opposite phase, the triangle inequality gives \(|r| \ge \frac{N-2}{N} = 1- \frac{2}{N}\) in this limit. Furthermore, as we increase the number of neurons, |r| → 1 asymptotically. The value of |r| will, of course, never reach 1, but it can be made arbitrarily close.

We illustrate this with a numerical example consisting of a set of N = 6 phase oscillators with natural frequency ω = 1. We set Δ = 0.5 so that the trajectories will not be overly crowded in the π-neighborhood and the plotted results can be easily understood. Figure 7(a) shows the trajectories generated by Eq. (42) along with the magnitude of the order parameter |r(t)|. The interspike time intervals here are all equal to the ratio of the natural period T to the number of neurons N, so there is no spike synchrony whatsoever. Yet the magnitude of the order parameter is at all times above 0.5. This value can be driven arbitrarily close to one by increasing the number of neurons and decreasing Δ. We note that all the phase trajectories are in solid black, because it is not important to be able to differentiate between them. Any set of trajectories following such paths, regardless of which neuron is on which trajectory at a given time, will generate the same population-level results. This convention will be used in the next example, as well.

Fig. 7 Illustrations showing that partial phase synchrony and spike synchrony need not be strongly related. (a) shows the scenario from Example 1 where there is a high degree of phase synchronization but no spike synchronization. (b) shows the scenario from Example 2 where there is complete spike synchronization but only small time intervals of phase synchronization. The bottom figures show a quantification of phase synchrony, as represented by the magnitude of the Kuramoto order parameter. The results can be sharpened by increasing the number of neurons, and reducing the parameters Δ and ε
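The construction in Example 1 can be sketched numerically. The code below does not reproduce Eq. (42) or its initial conditions; it assumes one piecewise-constant phase velocity that matches the verbal description: each oscillator takes T/N to travel from 0 to π − Δ, then (N − 2)T/N to cross the slow interval [π − Δ, π + Δ], then T/N to reach 2π, and the N oscillators are staggered in time by T/N. All function and variable names are hypothetical.

```python
import numpy as np

def example1_phases(t, n=6, delta=0.5, omega=1.0):
    """Phases (shape len(t) x n) under the assumed fast/slow/fast profile (needs n > 2)."""
    period = 2.0 * np.pi / omega
    # Breakpoints of one oscillator's piecewise-linear phase over one period.
    knots_t = [0.0, period / n, period - period / n, period]
    knots_theta = [0.0, np.pi - delta, np.pi + delta, 2.0 * np.pi]
    # Oscillator j runs j*T/n ahead of oscillator 0 along the same trajectory.
    offsets = np.arange(n) * period / n
    s = (np.atleast_1d(t)[:, None] + offsets[None, :]) % period
    return np.interp(s, knots_t, knots_theta)

def order_parameter_magnitude(theta):
    """|r| computed across the population (last axis)."""
    return np.abs(np.exp(1j * theta).mean(axis=-1))

n, omega = 6, 1.0
period = 2.0 * np.pi / omega
t = np.linspace(0.0, period, 2001)

# Parameters quoted in the text: N = 6, Delta = 0.5, omega = 1.
r_mag = order_parameter_magnitude(example1_phases(t, n=n, delta=0.5, omega=omega))

# Oscillator j reaches 2*pi whenever t + j*T/n is a multiple of T.
first_spikes = np.sort(period - np.arange(n) * period / n)
interspike = np.diff(first_spikes)

# Sharper case: more oscillators and a narrower slow interval.
r_sharp = order_parameter_magnitude(example1_phases(t, n=200, delta=0.01, omega=omega))

print("min |r|, N=6, Delta=0.5:", r_mag.min())
print("interspike intervals:", interspike)
print("min |r|, N=200, Delta=0.01:", r_sharp.min())
```

Under these assumptions the interspike intervals are all T/N while |r| stays above 0.5 for N = 6 and Δ = 0.5, and it moves close to one for the larger, tighter population.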

4.1.2 Example 2: spike synchrony with minimal phase synchrony

To further simplify the following example, we will assume that we can prescribe the phase trajectory for the ith oscillator directly, rather than via an ODE, according to the following equation:

The population of oscillators evolving according to Eq. (44) has complete spike synchrony, but the trajectories have been configured such that the distribution will be nearly uniform except near the spike times, where the distribution will collapse toward a Dirac delta distribution, then expand again to be nearly uniform after the spike. Thus we have shown that, even in the presence of complete spike synchrony, the phase synchrony can be confined to intervals of arbitrarily small measure. To quantify this, we note that the integral of the order parameter magnitude

can be made arbitrarily small by letting ε → 0. This means that the phase synchrony of this population is zero except on a set of arbitrarily small (but still positive) measure. We conclude that, although spike synchrony must be accompanied by phase synchrony at the instants of the spikes, we cannot say more about their relationship without considering controllability of the oscillators with respect to possible trajectories.

We illustrate this point with a small population of 6 oscillators with natural period T = 1. Figure 7(b) shows the trajectories generated by Eq. (44) as well as the associated |r(t)|. For clarity, we choose ε = 0.1. The magnitude of the order parameter is zero everywhere except in an ε-neighborhood of the spike times. All of the oscillators spike together, which means that there is complete spike synchrony. By reducing ε, we can confine the periodic phase synchrony to arbitrarily small time intervals centered at the spike time.
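A comparable sketch for Example 2 is given below. Eq. (44) is not reproduced; instead the code assumes one simple family of prescribed trajectories with the stated properties: after each common spike the phases fan out linearly over a time ε to evenly spaced plateau values, hold there, and then converge linearly onto 2π during the last ε of the period. Function names and the plateau values are hypothetical.

```python
import numpy as np

def example2_phases(t, n=6, eps=0.1, period=1.0):
    """Prescribed phases (shape len(t) x n) that all reach 2*pi at multiples of the period."""
    # Evenly spaced plateau phases; their unit vectors sum to zero.
    plateau = 2.0 * np.pi * (np.arange(n) + 0.5) / n
    s = np.atleast_1d(np.asarray(t, dtype=float)) % period
    fan_out = np.clip(s / eps, 0.0, 1.0)                      # 0 -> 1 on [0, eps]
    collapse = np.clip((s - (period - eps)) / eps, 0.0, 1.0)  # 0 -> 1 on [T - eps, T]
    return (fan_out[:, None] * plateau[None, :]
            + collapse[:, None] * (2.0 * np.pi - plateau)[None, :])

def order_parameter_magnitude(theta):
    """|r| computed across the population (last axis)."""
    return np.abs(np.exp(1j * theta).mean(axis=-1))

t = np.linspace(0.0, 1.0, 2001)

# Values quoted in the text: 6 oscillators, T = 1, eps = 0.1.
r_mag = order_parameter_magnitude(example2_phases(t, eps=0.1))
# Grid average of |r|, approximating (1/T) times its integral over one period.
mean_r = r_mag.mean()

# A narrower neighborhood confines the phase synchrony further.
mean_r_small = order_parameter_magnitude(example2_phases(t, eps=0.01)).mean()

print("|r| at the spike and at mid-period:", r_mag[0], r_mag[1000])
print("mean |r| for eps = 0.1 and eps = 0.01:", mean_r, mean_r_small)
```

In this construction |r| equals one at the spike, vanishes on the plateau, and its time average is bounded by 2ε/T, so it shrinks with ε while the spikes remain perfectly synchronized.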

4.1.3 Poisson inputs

For a population of neurons subjected to independent Poisson inputs as described in Section 2.1.3, the independence of the inputs implies that the spike times will be uncorrelated. It is intuitively clear from Eq. (41) and Fig. 4 that the magnitude of the order parameter r can nevertheless be non-zero when there are such inputs.

From a mathematical standpoint, we can illustrate this by considering the continuum limit as N → ∞ and asymptotically as t → ∞,

The last relation follows from the fact that the absolute value of the integral is equal to the magnitude of the first Fourier component: when \(\rho_s\) is unimodal and not too sharply peaked, we expect that

for some \(\theta_0\), from which the relation follows. The values of \(\rho_{\max}\) are precisely the results computed in Section 3.2 and displayed in Figs. 5 and 6. We note in this case that \(\rho_{\min} \approx \frac{1}{\pi} - \rho_{\max} \le \frac{1}{2 \pi}\). The neurons subjected to Poisson inputs thus have partial phase synchronization, with an order parameter increasing with both the magnitude of the stimulus and the mean spike frequency, although there is no spike synchronization. In the next section, we will discuss how a population that is partially phase-synchronized reacts differently to stimulus than if its phases were uniformly distributed. This will illustrate the importance of considering phase synchronization in addition to spike synchronization when studying the dynamical properties of populations of oscillatory neurons.
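The link between the peak of the phase density and the order parameter can be checked numerically. The sketch below assumes a purely sinusoidal steady-state density, ρ(θ) = 1/(2π) + (ρ_max − 1/(2π)) cos(θ − θ_0), which is one form consistent with the relation ρ_min ≈ 1/π − ρ_max quoted above; for such a density the first Fourier component gives |r| = π ρ_max − 1/2. The values ρ_max = 0.25 and θ_0 = 2.0 are hypothetical and are not taken from Figs. 5 or 6.

```python
import numpy as np

def order_parameter_from_density(rho, theta):
    """Continuum-limit order parameter: integral of rho(theta) * exp(i*theta) over one cycle."""
    dtheta = theta[1] - theta[0]
    # A plain Riemann sum is very accurate for smooth periodic integrands.
    return np.sum(rho * np.exp(1j * theta)) * dtheta

theta = np.linspace(0.0, 2.0 * np.pi, 4096, endpoint=False)

rho_max = 0.25   # hypothetical peak value of the density
theta_0 = 2.0    # hypothetical location of the peak
amplitude = rho_max - 1.0 / (2.0 * np.pi)
rho = 1.0 / (2.0 * np.pi) + amplitude * np.cos(theta - theta_0)

r = order_parameter_from_density(rho, theta)
predicted = np.pi * rho_max - 0.5

print("numerical |r|:", abs(r))
print("pi * rho_max - 1/2:", predicted)
print("rho_min and 1/pi - rho_max:", rho.min(), 1.0 / np.pi - rho_max)
```

For a uniform density (ρ_max = 1/(2π)) the same expression gives |r| = 0, and |r| grows linearly with ρ_max as long as the sinusoidal approximation is reasonable.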

4.2 Effects of partial phase synchronization

The degree of partial phase synchronization affects the manner in which the population responds to stimulus, as can most clearly be seen by constructing a histogram of the first spike times of each neuron after receiving a step stimulus of 1 mA. For both the Hindmarsh–Rose and Hodgkin–Huxley systems, populations of 1,000 phase models are initialized with uniform phase distribution. We expose the population to the step input and track when the next zero-crossing (firing) occurs. Figure 8(a) shows the first spike time histogram (FSTH) for Hindmarsh–Rose and Fig. 8(b) shows the FSTH for Hodgkin–Huxley. To illustrate the effect of partial phase synchronization, we repeat the above simulations starting from the partially synchronized distribution shown in Fig. 4(a) and (b). The results are shown below the FSTH plots for the uniform cases, in Fig. 8(c) and (d). We see that for the Hindmarsh–Rose system, the partial phase synchronization tends to flatten the FSTH somewhat. For the Hodgkin–Huxley system, using the curve from the α = 1 case for consistency, we see a very pronounced change in shape of the FSTH. All four FSTH plots are tabulated over 1,000 realizations, in order to show the character of the distributions independent of the randomness inherent in any individual realization. These results show that while partial phase synchrony does not imply firing synchrony, it can play an important role in determining how the population responds to a common stimulus.

Fig. 8 First spike time histograms for Hindmarsh–Rose (a), (c) and Hodgkin–Huxley (b), (d). (a) and (b) begin from a uniform distribution. (c) and (d) begin from the partially synchronized distributions shown in Fig. 4. This analysis tracks the time of the first spike after the unit step-function stimulus is turned on. 1,000 realizations are used
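The first spike time histogram procedure can be sketched for a generic phase model. The code below does not use the Hindmarsh–Rose or Hodgkin–Huxley phase response curves from the paper. It assumes the canonical Type I curve Z(θ) = 1 − cos θ as a stand-in, a unit step input in arbitrary units, and a hypothetical cosine-shaped partially synchronized density with ρ_max = 0.25 peaked at π. All names and parameter values are illustrative.

```python
import numpy as np

def time_to_spike(theta0, omega=1.0, current=1.0, n_grid=20001):
    """First spike time after a step input for d(theta)/dt = omega + Z(theta)*current,
    with the ASSUMED phase response curve Z(theta) = 1 - cos(theta)."""
    grid = np.linspace(0.0, 2.0 * np.pi, n_grid)
    speed = omega + current * (1.0 - np.cos(grid))  # positive for omega > 0, current >= 0
    # Cumulative trapezoid integral of dt = d(theta) / speed from 0 up to each grid point.
    dt = 0.5 * (1.0 / speed[1:] + 1.0 / speed[:-1]) * np.diff(grid)
    elapsed = np.concatenate(([0.0], np.cumsum(dt)))
    # Time still needed to travel from theta0 to the 2*pi threshold.
    return elapsed[-1] - np.interp(theta0, grid, elapsed)

def sample_phases(n, rho_max=None, theta_peak=np.pi, rng=None):
    """Draw n phases from a uniform density or, if rho_max is given, from the
    assumed density 1/(2*pi) + a*cos(theta - theta_peak), a = rho_max - 1/(2*pi)."""
    rng = np.random.default_rng() if rng is None else rng
    if rho_max is None:
        return rng.uniform(0.0, 2.0 * np.pi, n)
    a = rho_max - 1.0 / (2.0 * np.pi)  # requires rho_max < 1/pi so the density stays positive
    grid = np.linspace(0.0, 2.0 * np.pi, 4097)
    cdf = grid / (2.0 * np.pi) + a * (np.sin(grid - theta_peak) + np.sin(theta_peak))
    # Inverse-CDF sampling by interpolation.
    return np.interp(rng.uniform(0.0, 1.0, n), cdf, grid)

rng = np.random.default_rng(0)
bins = np.linspace(0.0, time_to_spike(0.0), 41)

# Population of 1,000 phase models, uniform initial phases.
fsth_uniform, _ = np.histogram(time_to_spike(sample_phases(1000, rng=rng)), bins=bins)

# Same population size, partially synchronized initial phases.
fsth_partial, _ = np.histogram(
    time_to_spike(sample_phases(1000, rho_max=0.25, rng=rng)), bins=bins)

print("uniform start:", fsth_uniform)
print("partially synchronized start:", fsth_partial)
```

Because the first spike time is a fixed decreasing function of the initial phase, any change in the initial phase distribution reshapes the histogram, which is the effect the figure illustrates for the two neuron models.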

4.3 Conclusion

We have shown that populations of identical uncoupled neurons exhibit partial phase synchronization when stimulated with independent unidirectional current spikes with interspike time intervals drawn from a Poisson distribution. We characterized this partial synchronization by the phase distribution for the population, using analytical approximations and numerical simulations of phase-reduced models and conductance-based models of typical Type I (Hindmarsh–Rose) and Type II (Hodgkin–Huxley) neurons. The results from the different approaches agree well with each other. We found that the degree of partial phase synchronization, as measured by the peak of the phase distribution, increases with both the size and frequency of the stimuli, and can become quite substantial.

We have shown that partial phase synchronization, a distinct phenomenon from spike synchronization, is an important consideration when using phase-reduced models to infer dynamical characteristics of spiking neurons. Our results show that neural populations subjected to background activity from other neurons do not have a uniform distribution of phases, as is sometimes assumed in simulation studies. We show that such non-uniformity leads to a different population-level response to other stimuli, suggesting that noisy inputs must be carefully incorporated into simulation studies in order to obtain biologically realistic results.

The present study isolated the effect of random stimuli by considering populations of identical uncoupled neurons. It would be interesting to explore phase synchronization due to noisy inputs for heterogeneous and/or coupled neural populations.

References

Brown, E., Moehlis, J., & Holmes, P. (2004a). On the phase reduction and response dynamics of neural oscillator populations. Neural Computation, 16, 673–715.

Brown, E., Moehlis, J., Holmes, P., Clayton, E., Rajkowski, J., & Aston-Jones, G. (2004b). The influence of spike rate and stimulus duration on noradrenergic neurons. Journal of Computational Neuroscience, 17, 13–29.

Coffey, W. T., Kalmykov, Y. P., & Waldron, J. T. (2004). The Langevin Equation: With applications to stochastic problems in physics, chemistry, and electrical engineering. Singapore: World Scientific.

Connor, J. A., Walter, D., & McKown, R. (1977). Neural repetitive firing: Modifications of the Hodgkin–Huxley axon suggested by experimental results from crustacean axons. Biophysical Journal, 18, 81–102.

Elble, R. J., & Koller, W. C. (1990). Tremor. Baltimore: Johns Hopkins University Press.

Ermentrout, G. B. (1996). Type I membranes, phase resetting curves, and synchrony. Neural Computation, 8, 979–1001.

Ermentrout, G. B. (2002). Simulating, analyzing, and animating dynamical systems: A guide to XPPAUT for researchers and students. Philadelphia: SIAM.

Friedrich, R. W., & Stopfer, M. (2001). Recent dynamics in olfactory population coding. Current Opinion in Neurobiology, 11, 468–474.

Galán, R. F., Fourcaud-Trocmé, N., Ermentrout, G. B., & Urban, N. N. (2006). Correlation-induced synchronization of oscillations in olfactory bulb neurons. Journal of Neuroscience, 26(14), 3646–3655.

Goldobin, D. S., & Pikovsky, A. (2005a). Synchronization and desynchronization of self-sustained oscillators by common noise. Physical Review E, 71, 045201.

Goldobin, D. S., & Pikovsky, A. (2005b). Synchronization of self-sustained oscillators by common white noise. Physica A, 351, 126–132.

Gray, C. M., König, P., Engel, A. K., & Singer, W. (1989). Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature, 338, 334–337.

Guckenheimer, J. (1975). Isochrons and phaseless sets. Journal of Mathematical Biology, 1, 259–273.

Gutkin, B. S., Ermentrout, G. B., & Reyes, A. D. (2005). Phase response curves give the responses of neurons to transient inputs. Journal of Neurophysiology, 94, 1623–1635.

Hodgkin, A. L., & Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. Journal of Physiology, 117, 500–544.

Josić, K., Shea-Brown, E. T., & Moehlis, J. (2006). Isochron. Scholarpedia, 2509.

Klimesch, W. (1996). Memory processes, brain oscillations and EEG synchronization. International Journal of Psychophysiology, 24, 61–100.

Kopell, N. (2000). We got rhythm: Dynamical systems of the nervous system. Notices of the AMS, 47(1), 6–16.

Kuramoto, Y. (1984). Chemical oscillations, waves, and turbulence. Berlin: Springer.

Mainen, Z. F., & Sejnowski, T. J. (1995). Reliability of spike timing in neocortical neurons. Science, 268, 1503–1506.

Nakao, H., Arai, K., Nagai, K., Tsubo, Y., & Kuramoto, Y. (2005). Synchrony of limit cycle oscillators induced by random external impulses. Physical Review E, 72, 026220.

Pare, D., Curró Dossi, R., & Steriade, M. (1990). Neuronal basis of the Parkinsonian resting tremor: A hypothesis and its implications for treatment. Neuroscience, 35, 217–226.

Rinzel, J., & Ermentrout, G. B. (1998). Analysis of neural excitability and oscillations. In C. Koch & I. Segev (Eds.), Methods in neuronal modeling (pp. 251–291). MIT Press.

Rinzel, J., & Miller, R. N. (1980). Numerical calculations of stable and unstable periodic solutions to the Hodgkin–Huxley equations. Mathematical Biosciences, 49, 27–59.

Ritt, J. (2003). Evaluation of entrainment of a nonlinear neural oscillator to white noise. Physical Review E, 68(4), 041915.

Rose, R., & Hindmarsh, J. (1989). The assembly of ionic currents in a thalamic neuron I. The three-dimensional model. Proceedings of the Royal Society of London. Series B, 237, 267–288.

Singer, W. (1993). Synchronization of cortical activity and its putative role in information processing and learning. Annual Review of Physiology, 55, 349–374.

Softky, W. R., & Koch, C. (1993). The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. Journal of Neuroscience, 13(1), 334–350.

Stent, G. S. (1973). A physiological mechanism for Hebb’s postulate of learning. Proceedings of the National Academy of Sciences, 70, 997–1001.

Stevens, C. F., & Zador, A. M. (1998). Input synchrony and the irregular firing of cortical neurons. Nature Neuroscience, 1, 210–217.

Tass, P. A. (2000). Effective desynchronization by means of double-pulse phase resetting. Europhysics Letters, 53, 15–21.

Teramae, J., & Tanaka, D. (2004). Robustness of the noise-induced phase synchronization in a general class of limit cycle oscillators. Physical Review Letters, 93(20), 204103.

Traub, R. D., Miles, R., & Wong, R. K. S. (1989). Model of the origin of rhythmic population oscillations in the hippocampal slice. Science, 243, 1319–1325.

Usrey, W. M., & Reid, R. C. (1999). Synchronous activity in the visual system. Annual Review of Physiology, 61, 435–456.

Winfree, A. (1974). Patterns of phase compromise in biological cycles. Journal of Mathematical Biology, 1, 73–95.

Winfree, A. (2001). The geometry of biological time (2nd ed.). New York: Springer.

Acknowledgements

This work was supported by the National Science Foundation grant NSF-0547606 and a University of California Council on Research and Instructional Resources Faculty Research Grant. P.D. acknowledges a National Science Foundation IGERT Fellowship in Computational Science and Engineering. G.B. is partially supported by NIH Grant R01 GM078993. J.M. acknowledges an Alfred P. Sloan Research Fellowship in Mathematics. We thank the anonymous referees for helpful comments.

Additional information

Action Editor: G. Bard Ermentrout

Cite this article

Danzl, P., Hansen, R., Bonnet, G. et al. Partial phase synchronization of neural populations due to random Poisson inputs. J Comput Neurosci 25, 141–157 (2008). https://doi.org/10.1007/s10827-007-0069-z

DOI: https://doi.org/10.1007/s10827-007-0069-z