Abstract

Parent-mediated interventions for young children with autism spectrum disorder (ASD) are highly under-utilized in community settings. A challenge to the dissemination of these programs is that provider training protocols must teach both the intervention techniques and the parent coaching strategies. The current study assesses the feasibility of an innovative multimodal training protocol to teach community-based intervention providers Project ImPACT (Improving Parents as Communication Teachers). A total of 30 providers (90% female; 76% Caucasian, 13.3% Multiracial/Other, 3.3% Hispanic/Latino, and 3.3% Asian/Pacific Islander) participated in Phase 1, and 15 of these participants (80% female; 60% Caucasian, 33.3% multiracial, and 6.6% Hispanic/Latino) participated in Phase 2. In Phase 1, providers completed questionnaires and videotaped interactions before and after a web-based tutorial and interactive workshop focused on intervention techniques. In Phase 2, providers completed questionnaires and videotaped interactions before and after a second interactive workshop focused on parent coaching. Results from Phase 1 indicate that the addition of the workshop resulted in higher ratings of training satisfaction (p < .01), training adequacy (p < .01), and self-efficacy (p < .01) of the intervention techniques. Results from Phase 2 indicate that the addition of the second workshop resulted in greater training adequacy of the parent coaching strategies (p < .01). These results suggest that use of this training protocol is a feasible way to increase access to high quality training in evidence-based intervention for providers working with children with ASD in the community; however, larger scale community-based trials of the training protocol are an important next step.

Introduction

There has been a dramatic increase in the number of individuals diagnosed with autism spectrum disorder (ASD), with prevalence rates reaching 1 in 64 (Zablotsky et al. 2015). Individuals with ASD often require intensive and specialized intervention, yet access to behavioral and therapeutic services remains the largest unmet service need for these individuals in the United States (Chiri and Warfield 2012). Factors impeding access to evidence-based services include a lack of compatibility between the interventions studied in research and those that are feasible in practice settings, difficulty translating evidence-based practices from university laboratory settings to community settings, and a lack of appropriately trained providers to deliver evidence-based practices in community settings (Brookman-Frazee et al. 2012; Chiri and Warfield 2012; Dingfelder and Mandell 2011; Garland et al. 2013). Recent efforts have focused on developing and improving strategies for the dissemination and implementation of effective ASD interventions, including early intervention, in real-world practice settings (Drahota et al. 2012; Schreibman et al. 2015; Vismara et al. 2009; Wood et al. 2015).

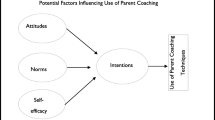

While a growing body of work has examined the effectiveness of interventions in practice settings, less is known about how providers and organizations learn about, adopt and implement these evidence-based interventions in the first place (Beidas and Kendall 2010; Sanders and Turner 2005). In order to effectively and efficiently translate ASD interventions into community settings, however, a better understanding of the process by which providers learn about and use interventions is critical. In fact, the successful transportation of interventions from research settings to community settings is rooted in the training and support of community-based providers (Herschell et al. 2010). When providers are asked about barriers to implementing new interventions, they report concerns about competing priorities and responsibilities, difficulties obtaining materials and resources, a lack of support from administration, and difficulties finding time to use the intervention (e.g., Langley et al. 2010; Massey et al. 2005). In addition to organization-level barriers, factors specific to the individual provider are known to influence adoption and implementation of a new intervention. According to the consolidated framework for implementation research (CFIR), “characteristics of the individual” influencing implementation include provider knowledge and beliefs about the intervention, individual stage of change, degree of identification with the organization, personality traits, and, importantly, self-efficacy (Damschroder et al. 2009). Self-efficacy is one of the most widely studied individual characteristics and research suggests it plays a strong role in individual behavior change (National Cancer Institute 2005). In this context, higher self-efficacy is associated with greater likelihood of embracing change and sustaining the use of a novel intervention, even when faced with obstacles (Damschroder et al. 2009).

This research suggests that it is important to provide adequate training and support to community providers as a means to increase their self-efficacy and skill development in a novel intervention, and subsequently increase the likelihood that they implement the novel intervention in practice settings (Beidas and Kendall 2010; Brookman-Frazee et al. 2012; Miller et al. 2004; Vismara et al. 2009). Indeed, from a systems-contextual (SC) perspective, quality training as reflected in the availability, content and method of training, along with contextual variables (e.g., organizational support for the intervention, therapist self-efficacy), is necessary for effective dissemination and implementation to occur (Beidas and Kendall 2010). The importance of quality training for community-based providers is reflected in the challenges observed within the ASD early intervention field; despite positive attitudes about evidence-based interventions in general, providers do not use many evidence-based ASD early interventions in practice settings because they are not adequately trained in and do not feel comfortable using such approaches (Brookman-Frazee et al. 2010). This may be particularly salient for early intervention providers expected to use evidence-based parent-mediated intervention approaches, given that teachers and other early intervention providers are rarely trained in parent education strategies, including parent coaching approaches (Ingersoll and Dvortcsak 2006).

Unfortunately, there is no gold standard with respect to provider training approaches (Addis et al. 1999) and there is limited information about which specific teaching strategies are most effective for training community-based providers in effective intervention approaches, including those for individuals with ASD (Beidas and Kendall 2010; Brookman-Frazee et al. 2012; Stadnick et al. 2013). As such, there is a recognized need for the development and evaluation of cost-effective, creative and efficient training models supporting specialized training which will result in high levels of self-efficacy and skill in using specific ASD intervention approaches (Thomson et al. 2009).

To date, many provider training protocols have been developed in research settings under the assumption that the training procedures used in clinical efficacy research would also be effective in community settings (Sholomskas et al. 2005). The standard clinical efficacy trial training includes the selection of therapists who are experienced and committed to a particular treatment, intensive didactic seminars with role play and practice, and at least a year of intensive supervision (Sholomskas et al. 2005). This kind of training procedure has been used in early intervention programs for ASD. For example, providers participating in the UK Young Autism Project (UK YAP) were trained using a manual, seven half-day seminars on Applied Behavior Analysis (ABA) and related issues, and 60 h of supervised practicum experience (Hayward et al. 2009). Although providers demonstrated competencies across the EBPs emphasized in the UK YAP (Eikeseth et al. 2009), it is unrealistic to expect that such an intensive training model could be replicated in a diverse range of community settings. This is particularly true for early intervention providers within the ASD field, who have relatively high rates of turnover and who come from diverse training backgrounds (Kazemi et al. 2015; Sholomskas et al. 2005; Stahmer 2007).

Given their intensity, clinical efficacy trial training protocols are used infrequently in community settings. Instead, the most common methods of disseminating EBPs across mental health areas are the distribution of written materials (e.g., manuals) and/or brief “one-shot” didactic trainings (Herschell et al. 2010; Odom et al. 2010). However, there is limited evidence supporting the effectiveness of these approaches as standalone training and dissemination tools (Miller et al. 2004). For example, Sholomskas et al. (2005) found that some providers demonstrated improvements in knowledge and use of intervention strategies in response to only reading a manual. Yet, these providers demonstrated gains that were smaller and less well maintained than those of providers trained in a more intensive format. Thus, manuals and written information may be beneficial, but not sufficient, for training and supporting providers’ use of evidence-based intervention strategies.

One aspect of provider training that appears to be especially important is the opportunity to receive additional supervision and/or consultation following didactic training (Henggeler et al. 2013, 2002; Miller et al. 2004). Much of the time, this additional consultation involves live “on the job” coaching and feedback. Prior research has examined a number of different consultation models, with varying levels of intensity and support provided depending on factors such as complexity of the intervention, feasibility (e.g., time, location, staff), and cost. For example, Locke et al. (2015) relied on 12 hands-on coaching sessions (two, 45 min sessions per week over 6 weeks) to train school personnel to implement specific strategies to facilitate peer engagement during recess for youth with ASD. Other studies have relied on video review for a less intensive consultation approach; providers training in An Individualized Mental Health Intervention (AIM HI) participated in 10, 1-h group supervision meetings which included feedback from video review (Brookman-Frazee et al. 2012). While there are limited data indicating comparative effectiveness of the various consultation models, the provision of some form of follow-up consultation in “real world” settings, after didactic training, appears to be an important component of training.

Recent advances in computer and internet technology suggest the potential of online instruction and video conferencing to provide remote consultation and supervision. Vismara et al. (2009) used videoconferencing to successfully train and supervise community-based therapists to implement the Early Start Denver Model (ESDM), an evidence-based practice for ASD (Rogers and Dawson 2009). As these investigators emphasize, it is critical to examine the extent to which computer and internet technology can be used as a vehicle for increasing community providers’ efficacy and skill as they are delivering evidence-based ASD interventions in community settings to meet the growing demands for such services (Vismara et al. 2009).

The current study is a pilot, quasi-experimental investigation of an innovative training protocol combining internet-based learning with live instruction and remote consultation to train community-based providers in Project ImPACT (Ingersoll and Dvortcsak 2010), an evidence-based parent-mediated naturalistic developmental behavioral intervention (NDBI; Schreibman et al. 2015) for children with ASD. The current study sought to examine the feasibility, acceptability, and initial effectiveness of a two-phase multimodal protocol to train community-based providers in Project ImPACT. We hypothesized that the multimodal protocol would be feasible and acceptable to providers, and that participation in the training would result in changes in provider experience (e.g., self-efficacy) and provider behavior (e.g., intervention fidelity).

Method

Participants

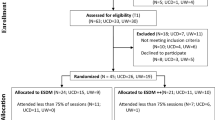

A total of 30 providers, from three early intervention community intervention sites, participated in the first phase of training. The three sites were selected to participate in this study based on: (1) an expressed interest in learning Project ImPACT; (2) at least one provider actively providing intervention to children with ASD, ages 18 months to 6 years; and (3) completion of written informed consent. Participating sites represented a range of service delivery settings including a public Early Childhood Special Education (ECSE) program, a hospital-based clinic, and a private intervention agency. Community intervention sites were located between 90 and 300 miles from the research lab.

Over the course of the study, there was significant staff turnover at the community agencies; nearly one-third of the participating providers moved positions or left their organization. Two of the three sites experienced changes in administrators and lead providers, and chose to discontinue participation after Phase 1. Thus, only one of the community sites (15 providers) participated in Phase 2 of the study. Provider demographics for the Phase 1 and Phase 2 samples are presented in Table 1.

After enrolling in the study, sites opened up participation to interested providers who met the above criteria. None of the providers had prior training in Project ImPACT. Before enrolling, each provider identified at least one family on their caseload with whom they could learn and practice the Project ImPACT intervention. Eligible families needed to have a child between 18 months and 6 years with ASD or social communication delays, be currently receiving services within the identified community site, and provide parental consent for videotaping.

Procedure

Intervention

Project ImPACT is a parent-mediated intervention that focuses on teaching parents to increase their child’s social engagement, communication, imitation and play during daily play and routine activities. Project ImPACT was developed in collaboration with parents, teachers, and community providers in order to be compatible with community-based service delivery models. The resulting curriculum can be implemented in either a group or individual format over 12 weeks. Initial evaluations of Project ImPACT have demonstrated the efficacy of the individual model when delivered within a research setting (Ingersoll and Wainer 2013a), and the effectiveness of the group model when delivered in community settings (Ingersoll and Wainer 2013b; Stadnick et al. 2015). Throughout the process of piloting the Project ImPACT program in community settings, it was clear that adequate training and supervision were essential to support providers in delivering Project ImPACT. Moreover, feedback from providers indicated the need for a phased approach to learning the intervention, in which they received training and consultation in using the Project ImPACT techniques directly with a child (i.e., intervention techniques) prior to receiving training in how to support parents in learning Project ImPACT in a parent-mediated format (i.e., parent coaching strategies). This phased approach to training seemed critical as it ensured provider comfort with the Project ImPACT intervention prior to being asked to teach the intervention to parents. Working with community providers early on also highlighted the need to consider alternative and flexible training protocols which could be used to train providers across a diverse range of practice settings, with diverse background knowledge and experiences, and located in diverse geographical areas. Thus, we developed a multimodal training protocol (see below) to introduce Project ImPACT to community-based providers.

Multimodal training protocol

Training was implemented in two phases, each lasting roughly 3 months. Phase 1 involved training providers in the use of the intervention techniques and Phase 2 involved training providers in the parent coaching strategies (Fig. 1).

Phase 1

Providers were given access to the Project ImPACT tutorial, a self-directed website, approximately 2 weeks prior to the training workshop. The Project ImPACT tutorial included written text, video examples, and interactive exercises to introduce providers to the Project ImPACT intervention as it would be delivered directly with a child. The tutorial included information about the intervention techniques, as well as assessment and goal setting, and took approximately 5 h to complete. After completing the tutorial, providers attended a one-day interactive workshop led by Project ImPACT trainers (master’s-level clinicians trained directly by the developers of Project ImPACT). The workshop consisted of a brief review of the intervention techniques, video review, and role play of the intervention techniques. Upon completion of the workshop, providers participated in three remote consultation sessions (approximately one per month) with the Project ImPACT trainers. Prior to each consultation session, providers uploaded video of themselves using Project ImPACT directly with a child on their caseload to a secure, password-protected file transfer program (FTP). Videos were immediately downloaded, saved to the secure university network, and then deleted from the FTP. The trainer reviewed the video for fidelity of implementation and then sent a secure email with written feedback on the quality of the provider’s implementation of each of the intervention techniques, as well as an overall summary of the session. Providers then met with the Project ImPACT trainer over Skype for 30 min to discuss the feedback and problem-solve barriers or concerns.

Providers were asked to complete a series of questionnaires and videotaped provider-child interactions at three different time points: (1) before completing the web-based Project ImPACT tutorial (Time 1); (2) immediately after completing the Project ImPACT tutorial (Time 2); and (3) immediately after completing the Phase 1 interactive workshop (Time 3).

Phase 2

In Phase 2 (beginning approximately 6 months after the Phase 1 workshop), the providers received training in the Project ImPACT parent coaching strategies. This phase began with a second, one-day workshop focused entirely on coaching parents to use Project ImPACT. The workshop included discussion and role play of collaborative goal setting, video review, and role play of parent coaching. After attending the workshop, providers participated in three remote consultation sessions with the Project ImPACT trainers (approximately one per month). Providers uploaded video of a Project ImPACT parent coaching session to a secure, password-protected FTP, which was reviewed for fidelity using the ImPACT parent coaching fidelity form. Videos were immediately downloaded, saved to the secure university network, and then deleted from the FTP. Providers were sent a secure email with written feedback on their use of each of the coaching strategies, as well as an overall summary of the session. Providers then met with the Project ImPACT trainer over Skype for 30 min to discuss the feedback and problem-solve any barriers or concerns.

Providers were asked to complete a series of questionnaires and videotaped parent coaching sessions at three additional time points: (4) before the Phase 2 interactive parent coaching workshop (Time 4); (5) after the Phase 2 interactive parent coaching workshop (Time 5); and (6) at follow-up (12-months after Time 1) (Time 6).

Measures

Demographics

Providers indicated their gender, race/ethnicity, professional background, years of experience working with children with ASD, total number of clients with ASD, average number of hours per week treating clients with ASD, and location of service provision prior to beginning the Project ImPACT tutorial in Phase 1.

Training outcomes

Training satisfaction

Satisfaction with the training protocol was assessed using the Project ImPACT Training Evaluation Form following the Phase 1 and Phase 2 interactive workshops, which included the question of “How satisfied were you with the training?” and was rated on a scale of 1 (not at all satisfied) to 7 (very satisfied).

Adequacy

Adequacy of training in Project ImPACT was assessed across time points using the two questions “Do you feel adequately trained to use the Project ImPACT intervention techniques?” and “Do you feel adequately trained to conduct parent coaching in Project ImPACT?” (Seng et al. 2006). Providers rated each question on a scale from 1 (no, definitely not) to 7 (yes, definitely).

Self-efficacy

Self-efficacy was assessed across time points using the two questions “Do you feel you now have the skills to implement the Project ImPACT intervention techniques directly with a child?” and “Do you feel you now have the skills to implement the Project ImPACT parent coaching intervention?” (Seng et al. 2006). Providers rated each question on a scale from 1 (no, definitely not) to 7 (yes, definitely).

Fidelity

During Phase 1, provider use of the Project ImPACT intervention techniques was rated on a scale of 1 (provider does not implement during the session) to 5 (provider implements throughout the session) using the Project ImPACT Fidelity Form (Ingersoll and Dvortcsak 2010). An overall fidelity score was calculated, and based on prior research (Ingersoll and Wainer 2013a, b), we considered an average of 4 or above (80%) as implementing Project ImPACT with fidelity. The Project ImPACT Parent Coaching Fidelity Form (Ingersoll and Dvortcsak 2010) was used to determine the extent to which providers were using the 20 parent coaching strategies at Phase 2. Provider behavior was rated as observed (1), partially observed (0.5) or not observed (0), and a proportion of coaching strategies observed was calculated. We considered an average of 80% as implementing the parent coaching strategies with fidelity.
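The two scoring rules described above can be illustrated with a minimal sketch. It assumes that the overall Phase 1 score is the plain mean of the 1 to 5 technique ratings and that the Phase 2 score is the sum of the observed codes divided by the number of strategies coded. The function names and the example ratings are hypothetical and are not taken from the study data or from the Project ImPACT fidelity forms.

```python
from statistics import mean


def intervention_fidelity(ratings, threshold=4.0):
    """Average 1-5 technique ratings and compare with the fidelity benchmark.

    Assumption: the overall score is an unweighted mean of the ratings.
    A mean of 4 on the 5-point scale corresponds to the 80% benchmark.
    """
    if not ratings or any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be non-empty and on the 1-5 scale")
    score = mean(ratings)
    return score, score >= threshold


def coaching_fidelity(codes, threshold=0.80):
    """Proportion of parent coaching strategies observed.

    Each code is 1 (observed), 0.5 (partially observed), or 0 (not observed).
    """
    if not codes or any(c not in (0, 0.5, 1) for c in codes):
        raise ValueError("codes must be non-empty and each be 0, 0.5, or 1")
    proportion = sum(codes) / len(codes)
    return proportion, proportion >= threshold


# Hypothetical ratings for one session (illustrative only).
phase1_ratings = [4, 5, 4, 3, 4]
# Hypothetical codes for 20 coaching strategies: 16 observed,
# 2 partially observed, 2 not observed.
phase2_codes = [1] * 16 + [0.5] * 2 + [0] * 2

print(intervention_fidelity(phase1_ratings))
print(coaching_fidelity(phase2_codes))
```

In this sketch a session meets the benchmark when the score is at or above the threshold, which matches the "4 or above" wording for Phase 1; treating the 80% coaching benchmark the same way is an assumption.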

Barriers to use

Expected and actual barriers to Project ImPACT use were assessed at Time 1, Time 4 and Time 6. The questionnaires were based on the Barriers Interview conducted by Seng et al. (2006) for the Triple P Positive Parenting Program. Nineteen potential barriers were listed and providers rated the extent to which each would (or did) serve as a barrier in their use of Project ImPACT on a scale from 1 (not at all) to 5 (extremely). Potential barriers fell into the categories of workplace characteristics, program/provider fit, and program management.

Intervention use

Providers reported on the number of families with whom they used Project ImPACT in a direct and/or parent-mediated format.

Data Analyses

For Phase 1, paired t-tests were used to examine differences in training satisfaction and training adequacy in the Project ImPACT intervention techniques from Time 2 to Time 3. Repeated measures ANOVAs, with Bonferroni post hoc analyses, were used to examine differences in provider self-efficacy and provider fidelity of the Project ImPACT intervention techniques across time points. For Phase 2, repeated measures ANOVAs, with Bonferroni post hoc analyses, were used to examine differences in training adequacy and self-efficacy of the parent coaching strategies from Time 4 to Time 5 and Time 6. Due to the significant administrative and staff turnover towards the end of Phase 1 and the beginning of Phase 2, only three providers sent in videos at Time 4. Thus, we are unable to report baseline fidelity of parent coaching strategies prior to Phase 2 training. However, summary fidelity data for the parent coaching strategies are reported below. Paired t-tests were used to examine differences between expected and actual barriers at Time 4 and Time 6. Descriptive statistics were used to characterize provider use of Project ImPACT in their practice settings at Time 6.
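For readers less familiar with these tests, the following is a minimal sketch of a paired t statistic and a Bonferroni-adjusted significance level, written with the Python standard library only. The function names and the ratings are hypothetical illustrations, not the study's data or analysis code, and the p-value itself would still come from a t table or a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(before, after):
    """Return (t, df) for a paired t-test on two matched lists of ratings."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1  # degrees of freedom = number of pairs - 1


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance level under a Bonferroni correction."""
    return alpha / n_comparisons


# Hypothetical 1-7 adequacy ratings from five providers (illustrative only).
time2 = [4, 5, 4, 5, 6]
time3 = [6, 6, 5, 7, 6]

t, df = paired_t(time2, time3)
print(f"t({df}) = {t:.2f}")

# Three pairwise post hoc comparisons across three time points.
print(f"adjusted alpha = {bonferroni_alpha(0.05, 3):.4f}")
```

Because the degrees of freedom equal the number of complete pairs minus one, a reported t(25) implies 26 providers with ratings at both time points.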

Results

Phase 1

Twenty-nine of the 30 providers participated in all portions of the first phase of training (e.g., tutorial, workshop and remote consultation) over the course of approximately 3 months. Providers rated satisfaction with the Project ImPACT tutorial rather highly (M = 4.94, SD = .73), although they felt that the training was significantly improved with the addition of the Phase 1 interactive workshop (M = 6.31, SD = .69), t(25) = 7.29, p < .01. Ratings of training adequacy also increased significantly from Time 2 (M = 4.59, SD = 1.05) to Time 3 (M = 5.89, SD = .80), t(26) = 7.39, p < .01.

Results of repeated measures ANOVA, with Bonferroni post hoc analyses, indicated significant increases in provider self-efficacy of the Project ImPACT intervention techniques from pre-training (Time 1; M = 2.70, SD = 2.10) to after the Project ImPACT tutorial (Time 2; M = 4.39, SD = 1.14), to after the Phase 1 interactive workshop (Time 3; M = 5.93, SD = 0.78), F(3,36) = 11.76, p < .01 (Fig. 2). Repeated measures ANOVA with Bonferroni post hoc analyses also indicated changes in fidelity, with significant improvement from pre-training (Time 1; M = 2.27, SD = 1.07) and the Project ImPACT tutorial (Time 2; M = 2.44, SD = 0.87) to the third remote consultation session, F(1.85,5.55) = 13.42, p < .01. By the third remote consultation session, providers were implementing Project ImPACT with fidelity (M = 4.1, SD = 0.57) (see Fig. 3).

Phase 2

Fourteen (of 15) providers completed the second phase of training over approximately 3 months. The one provider who did not complete this phase had moved positions within the organization and was no longer providing direct care to children and families. Providers were highly satisfied with the Phase 2 parent coaching interactive workshop (M = 6.73, SD = 0.44). Results of repeated measures ANOVA, with Bonferroni post hoc analyses, indicated increases in ratings of training adequacy in parent coaching strategies from the pre-coaching workshop (Time 4; M = 5.00, SD = 0.63), to after the Phase 2 interactive parent coaching workshop (Time 5; M = 6.17, SD = 0.41), to the 12-month follow up (Time 6; M = 6.67, SD = 0.52); F(1.69,5.19) = 17.17, p < .01 (Fig. 4). Repeated measures ANOVA did not reveal significant differences with respect to ratings of self-efficacy of parent coaching strategies from Time 4 (M = 5.83, SD = 0.98) to Time 5 (M = 6.50, SD = 0.55) or Time 6 (M = 6.83, SD = 0.41), F(1.19,2.61) = 3.68, n.s. (Fig. 4).

Summary fidelity data from each parent coaching consultation video suggested a trend in improvements in parent coaching fidelity from the first (87%) to the second and third (92% for both) consultation sessions. Importantly, providers were implementing the parent coaching strategies with fidelity after the Phase 2 interactive parent coaching workshop.

Follow Up

There were no significant differences between expected (M = 1.09, SD = 0.99) and actual (Time 4: M = 1.94, SD = 1.37; Time 6: M = 1.69, SD = 0.77) barriers to use of Project ImPACT for workplace characteristics, F(1.47,1.83) = 1.60, n.s. There were no significant differences between expected (M = 0.76, SD = 1.13) and actual (Time 4: M = 1.73, SD = 1.46; Time 6: M = 1.06, SD = 0.11) barriers to use of Project ImPACT for program/provider fit, F(1.40,2.51) = 1.37, n.s. Similarly, there were no significant differences between expected (M = 0.88, SD = 0.66) and actual (Time 4: M = 2.45, SD = 1.55; Time 6: M = 1.39, SD = 0.35) barriers to use of Project ImPACT for program management, F(1.05,8.61) = 3.95, n.s. In general, providers reported few barriers to use at the 12-month follow up (Fig. 5). At the 12-month follow up, all of the 14 providers who completed Phase 2 had used Project ImPACT in their practice setting. On average, providers used it as a direct intervention with two clients, ran two parent coaching groups, and used the individual parent coaching model with one family.

Discussion

The current study investigated the feasibility and effectiveness of an innovative training protocol combining internet-based learning with live instruction and remote supervision to train community-based providers in Project ImPACT. Prior research suggests that community-based providers can effectively teach parents to use these evidence-based intervention strategies with their children with ASD (Ingersoll and Wainer 2013b; Stadnick et al. 2015). The current research represents an initial effort to understand the methods that can be used to effectively and efficiently train providers in using Project ImPACT in community settings, thus directly addressing recent calls to examine cost-effective, creative and efficient professional and paraprofessional training models for ASD intervention practices (Thomson et al. 2009).

Results from this work suggest that providers found the innovative training protocol to be feasible, acceptable and satisfactory in preparing them to use Project ImPACT directly with a child, as well as in a parent-mediated format. Providers rated both the Project ImPACT tutorial and the interactive workshops highly, with increases in training satisfaction across stages of training. Importantly, providers also reported significant increases in their self-efficacy when using the Project ImPACT intervention strategies across Phase 1 (e.g., Project ImPACT tutorial, interactive workshop, remote consultation). These increases were also reflected in changes in provider behavior, with significant increases in fidelity of implementation from pre-training and the Project ImPACT tutorial to the final consultation session. The three remote consultation sessions were particularly beneficial in achieving the Project ImPACT fidelity benchmark of 80% or higher. Taken together, these results suggest that each aspect of the Phase 1 training protocol may contribute to changes in provider perception of skill and behavior, both of which are important factors to supporting continued implementation of parent-mediated intervention.

During Phase 2, there were no statistically significant changes across stages of training with respect to provider self-efficacy in the parent coaching strategies. This may be due, in part, to the fact that the providers already had access to the Project ImPACT manuals which included explicit instructions for how to deliver the intervention in a parent-mediated format. While often not sufficient as a standalone training approach, specific and detailed manuals can serve as a type of facilitation strategy which is associated with greater levels of behavior change, including higher levels of intervention fidelity (Turner and Sanders 2006). In this study, some of the providers may have already implemented Project ImPACT as a parent-mediated intervention prior to the Phase 2 workshop and consultation sessions, and therefore knowledge of, and comfort with, the parent coaching strategies may have come from “on the job learning” before explicit training. However, it is important to note that participation in Phase 2 did result in significant increases in providers’ perceptions of the adequacy of their training in the parent coaching strategies. The extent to which providers feel adequately trained, and their overall training experiences, are critical for supporting long-term implementation (Aarons and Palinkas 2007), as community providers who reported insufficient training are less likely to use evidence-based parenting interventions relative to those who feel adequately prepared (Sanders et al. 2009). Indeed, high levels of training adequacy in the current study corresponded with high rates of Project ImPACT use at the 12-month follow-up time point.

High rates of continued use of Project ImPACT at follow-up parallel the research literature suggesting that effective training approaches improve characteristics specific to the individual provider (e.g., self-efficacy), and thereby increase the likelihood that the use of a novel intervention will be sustained. Another possible reason for providers’ sustained use of Project ImPACT at follow-up is their report of very few structural and organization-level barriers to implementing Project ImPACT within their service agency. In fact, the providers who remained in Phase 2 of the research study had substantial administrative investment and support in their learning and use of Project ImPACT. Taken together, these results may underscore the added benefit of organization-level support and planning prior to the enrollment in a multimodal training protocol.

The current study contributes to the growing body of research implicating the role of computer and internet technology in increasing access to information about evidence-based ASD interventions. By capitalizing on self-directed learning platforms, such as the Project ImPACT tutorial, we were able to offer comprehensive yet flexible training in the Project ImPACT techniques, and were able to dedicate limited workshop time to applied training activities, resulting in a more efficient training approach. Furthermore, using remote video review and conferencing capabilities allowed for a detailed consultation process that would not have otherwise been possible given the geographical distance between the study investigators and the community-based sites. The use of video review and video conferencing can serve a particularly important role in supporting implementation, as research has indicated that “quality control” procedures like ongoing supervision and consultation are critical for ensuring continued treatment integrity and supporting the sustainability of a program in community settings (e.g., Garland and Schoenwald 2013; Wood et al. 2015).

Several limitations of this study are acknowledged. First, community-based service organizations often experience high rates of staff turnover (Grindle et al. 2009); the organizations involved in this study were no exception. Due to changes in administration and staff turnover, nearly half of the providers from Phase 1 were not able to participate in Phase 2. While a training protocol such as the one highlighted in this study represents an important aspect of supporting implementation, it is certainly not sufficient. Future implementation studies must address barriers related to outer (e.g., district policy, social factors outside of the organization) and inner (e.g., organizational culture and climate) factors concurrently, as these clearly impact dissemination, adoption, and implementation of evidence-based interventions (Damschroder et al. 2009). Second, due to the high rates of turnover and related missing data, we were not able to collect sufficient information about parent coaching fidelity prior to Phase 2 training. As such, the extent to which providers were already using parent coaching strategies correctly before the second interactive workshop remains unknown. However, data suggest improvements in ratings of adequacy of training, along with a trend in improvement in parent coaching fidelity across consultation sessions. Third, the use of a quasi-experimental design limits the conclusions that can be drawn regarding the causal effect of the training on changes in provider behavior. Fourth, although this research adhered to confidentiality standards as outlined by the university’s IRB, issues such as HIPAA compliance, as well as licensing and credentialing policies and reimbursement schedules, were not directly addressed within the current work. These matters must be closely considered when utilizing telehealth and web-based learning in clinical settings, given the unique security, confidentiality and accountability issues associated with telehealth service delivery (Gros et al. 2013).

Finally, due to limited funding and time constraints, we were unable to collect data related to child- and parent-level outcomes. However, successful implementation reflects both treatment effectiveness and implementation factors (e.g., training and adoption, cost, acceptability; Proctor et al. 2011). A critical next step is to conduct a Type 2 Hybrid Effectiveness-Implementation trial (Curran et al. 2012) examining the Project ImPACT provider training protocol and parent-mediated intervention across community-based settings. This would allow for detailed data collection at multiple levels, including clinical outcomes data (e.g., parent/child/family outcomes) and implementation outcomes (e.g., provider support, sustainability). Given that formative evaluation is often a part of Type 2 Hybrid studies (Brown et al. 2008), collecting and analyzing both clinical and implementation data within a larger trial would also allow for local adaptations along the way in order to support maximal uptake of the intervention in community settings. Although a relatively unexplored research strategy within the ASD intervention field, this approach has been utilized by researchers in other areas (Brown et al. 2008) and can continue to push the translation of evidence-based ASD interventions into community settings in a way that is efficient, effective and sustainable.

In summary, data from this study suggest that an innovative protocol combining internet-based learning with live instruction and remote supervision can successfully train community-based providers in Project ImPACT. This work represents a promising training approach that capitalizes on existing dissemination and implementation literature to address the need for increased training in evidence-based ASD services as they are moved into community settings. Such an approach has the potential to address the growing demands for high-quality early intervention services for young children with ASD.

References

Aarons, G. A., & Palinkas, L. A. (2007). Implementation of evidence-based practice in child welfare: Service provider perspectives. Administration and Policy in Mental Health and Mental Health Services Research, 34, 411–419.

Addis, M. E., Wade, W. A., & Hatgis, C. (1999). Barriers to dissemination of evidence-based practices: Addressing practitioners’ concerns about manual-based psychotherapies. Clinical Psychology: Science and Practice, 6, 430–441.

Beidas, R. S., & Kendall, P. C. (2010). Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice, 17, 1–30.

Brookman-Frazee, L. I., Drahota, A., & Stadnick, N. (2012). Training community mental health therapists to deliver a package of evidence-based practice strategies for school-age children with autism spectrum disorders: A pilot study. Journal of Autism and Developmental Disorders, 42, 1651–1661.

Brookman-Frazee, L., Drahota, A., Stadnick, N., & Palinkas, L. A. (2012). Therapist perspectives on community mental health services for children with autism spectrum disorders. Administration and Policy in Mental Health and Mental Health Services Research, 39, 365–373.

Brookman-Frazee, L. I., Taylor, R., & Garland, A. F. (2010). Characterizing community-based mental health services for children with autism spectrum disorders and disruptive behavior problems. Journal of Autism and Developmental Disorders, 40, 1188–1201.

Brown, A. H., Cohen, A. N., Chinman, M. J., Kessler, C., & Young, A. S. (2008). EQUIP: Implementing chronic care principles and applying formative evaluation methods to improve care for schizophrenia: QUERI series. Implementation Science, 3, 1.

Chiri, G., & Warfield, M. E. (2012). Unmet need and problems accessing core health care services for children with autism spectrum disorder. Maternal and Child Health Journal, 16, 1081–1091.

Curran, G. M., Bauer, M., Mittman, B., Pyne, J. M., & Stetler, C. (2012). Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care, 50, 217.

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4, 1.

Dingfelder, H. E., & Mandell, D. S. (2011). Bridging the research-to-practice gap in autism intervention: An application of diffusion of innovation theory. Journal of Autism and Developmental Disorders, 41, 597–609.

Drahota, A., Aarons, G. A., & Stahmer, A. C. (2012). Developing the autism model of implementation for autism spectrum disorder community providers: Study protocol. Implementation Science, 7, 1.

Eikeseth, S., Hayward, D., Gale, C., Gitlesen, J. P., & Eldevik, S. (2009). Intensity of supervision and outcome for preschool aged children receiving early and intensive behavioral interventions: A preliminary study. Research in Autism Spectrum Disorders, 3, 67–73.

Garland, A. F., Haine-Schlagel, R., Brookman-Frazee, L., Baker-Ericzen, M., Trask, E., & Fawley-King, K. (2013). Improving community-based mental health care for children: Translating knowledge into action. Administration and Policy in Mental Health and Mental Health Services Research, 40, 6–22.

Garland, A., & Schoenwald, S. K. (2013). Use of effective and efficient quality control methods to implement psychosocial interventions. Clinical Psychology: Science and Practice, 20, 33–43.

Grindle, C. F., Kovshoff, H., Hastings, R. P., & Remington, B. (2009). Parents’ experiences of home-based applied behavior analysis programs for young children with autism. Journal of Autism and Developmental Disorders, 39, 42–56.

Gros, D. F., Morland, L. A., Greene, C. J., Acierno, R., Strachan, M., Egede, L. E., et al. (2013). Delivery of evidence-based psychotherapy via video telehealth. Journal of Psychopathology and Behavioral Assessment, 35, 506–521. doi:10.1007/s10862-013-9363-4.

Hayward, D. W., Gale, C. M., & Eikeseth, S. (2009). Intensive behavioural intervention for young children with autism: A research-based service model. Research in Autism Spectrum Disorders, 3, 571–580.

Henggeler, S. W., Chapman, J. E., Rowland, M. D., Sheidow, A. J., & Cunningham, P. B. (2013). Evaluating training methods for transporting contingency management to therapists. Journal of Substance Abuse Treatment, 45, 466–474.

Henggeler, S. W., Schoenwald, S. K., Liao, J. G., Letourneau, E. J., & Edwards, D. L. (2002). Transporting efficacious treatments to field settings: The link between supervisory practices and therapist fidelity in MST programs. Journal of Clinical Child and Adolescent Psychology, 31, 155–167.

Herschell, A. D., Kolko, D. J., Baumann, B. L., & Davis, A. C. (2010). The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review, 30, 448–466.

Ingersoll, B., & Dvortcsak, A. (2006). Including parent training in the early childhood special education curriculum for children with autism spectrum disorder. Journal of Positive Behavior Interventions, 8, 79–87.

Ingersoll, B., & Dvortcsak, A. (2010). Teaching social communication: A practitioner’s guide to parent training for children with autism. New York: Guilford Press.

Ingersoll, B., & Wainer, A. (2013a). Initial efficacy of Project ImPACT: A parent-mediated social communication intervention for young children with ASD. Journal of Autism and Developmental Disorders, 43, 2943–2952.

Ingersoll, B. R., & Wainer, A. L. (2013b). Pilot study of a school-based parent training program for preschoolers with ASD. Autism, 17, 434–448.

Kazemi, E., Shapiro, M., & Kavner, A. (2015). Predictors of intention to turnover in behavior technicians working with individuals with autism spectrum disorder. Research in Autism Spectrum Disorders, 17, 106–115.

Langley, A. K., Nadeem, E., Kataoka, S. H., Stein, B. D., & Jaycox, L. H. (2010). Evidence-based mental health programs in schools: Barriers and facilitators of successful implementation. School Mental Health, 2, 105–113.

Locke, J., Olsen, A., Wideman, R., Downey, M. M., Kretzmann, M., Kasari, C., & Mandell, D. S. (2015). A tangled web: The challenges of implementing an evidence-based social engagement intervention for children with autism in urban public school settings. Behavior Therapy, 46, 54–67.

Massey, O. T., Armstrong, K., Boroughs, M., Henson, K., & McCash, L. (2005). Mental health services in schools: A qualitative analysis of challenges to implementation, operation, and sustainability. Psychology in the Schools, 42, 361–372.

Miller, W. R., Yahne, C. E., Moyers, T. B., Martinez, J., & Pirritano, M. (2004). A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology, 72, 1050.

National Cancer Institute (2005). Theory at a glance: A guide for health promotion practice.

Odom, S. L., Boyd, B. A., Hall, L. J., & Hume, K. (2010). Evaluation of comprehensive treatment models for individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 40, 425–436.

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., et al. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38, 65–76.

Rogers, S. J., & Dawson, G. (2009). Early Start Denver Model for young children with autism. New York, NY: Guilford Press.

Sanders, M. R., Prinz, R. J., & Shapiro, C. J. (2009). Predicting utilization of evidence-based parenting interventions with organizational, service-provider and client variables. Administration and Policy in Mental Health and Mental Health Services Research, 36, 133–143.

Sanders, M. R., & Turner, K. M. (2005). Reflections on the challenges of effective dissemination of behavioural family intervention: Our experience with the Triple P–Positive Parenting Program. Child and Adolescent Mental Health, 10, 158–169.

Seng, A. C., Prinz, R. J., & Sanders, M. R. (2006). The role of training variables in effective dissemination of evidence-based parenting interventions. International Journal of Mental Health Promotion, 8, 20–28.

Schreibman, L., Dawson, G., Stahmer, A. C., Landa, R., Rogers, S. J., McGee, G. G., et al. (2015). Naturalistic developmental behavioral interventions: Empirically validated treatments for autism spectrum disorder. Journal of Autism and Developmental Disorders, 45, 2411–2428.

Sholomskas, D. E., Syracuse-Siewert, G., Rounsaville, B. J., Ball, S. A., Nuro, K. F., & Carroll, K. M. (2005). We don’t train in vain: A dissemination trial of three strategies of training clinicians in cognitive-behavioral therapy. Journal of Consulting and Clinical Psychology, 73, 106.

Stadnick, N. A., Drahota, A., & Brookman-Frazee, L. (2013). Parent perspectives of an evidence-based intervention for children with autism served in community mental health clinics. Journal of Child and Family Studies, 22, 414–422.

Stadnick, N. A., Stahmer, A., & Brookman-Frazee, L. (2015). Preliminary effectiveness of Project ImPACT: A parent-mediated intervention for children with autism spectrum disorder delivered in a community program. Journal of Autism and Developmental Disorders, 45, 2092–2104.

Stahmer, A. C. (2007). The basic structure of community early intervention programs for children with autism: Provider descriptions. Journal of Autism and Developmental Disorders, 37, 1344–1354.

Thomson, K., Martin, G. L., Arnal, L., Fazzio, D., & Yu, C. T. (2009). Instructing individuals to deliver discrete-trials teaching to children with autism spectrum disorders: A review. Research in Autism Spectrum Disorders, 3, 590–606.

Turner, K. M., & Sanders, M. R. (2006). Dissemination of evidence-based parenting and family support strategies: Learning from the Triple P—Positive Parenting Program system approach. Aggression and Violent Behavior, 11, 176–193.

Vismara, L. A., Young, G. S., Stahmer, A. C., Griffith, E. M., & Rogers, S. J. (2009). Dissemination of evidence-based practice: Can we train therapists from a distance? Journal of Autism and Developmental Disorders, 39, 1636–1651.

Wood, J. J., McLeod, B. D., Klebanoff, S., & Brookman-Frazee, L. (2015). Toward the implementation of evidence-based interventions for youth with autism spectrum disorders in schools and community agencies. Behavior Therapy, 46, 83–95.

Zablotsky, B., Black, L. I., Maenner, M. J., Schieve, L. A., & Blumberg, S. J. (2015). Estimated prevalence of autism and other developmental disabilities following questionnaire changes in the 2014 National Health Interview Survey. National Health Statistics Reports, 87, 1–21.

Author Contributions

A.L.W. designed the study, conducted data analysis, and led manuscript writing and preparation. K.P. executed the study, supervised data collection, and assisted with manuscript writing and preparation. B.R.I. collaborated with the design of the study and assisted with manuscript writing and preparation.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

This research adhered to basic ethical considerations for the protection of human participants in research and was overseen by Michigan State University’s Institutional Review Board.

Informed Consent

In adherence with ethical standards, as overseen by Michigan State University’s IRB, all participants completed informed consent prior to enrolling in the present research study.

Cite this article

Wainer, A.L., Pickard, K. & Ingersoll, B.R. Using Web-Based Instruction, Brief Workshops, and Remote Consultation to Teach Community-Based Providers a Parent-Mediated Intervention. J Child Fam Stud 26, 1592–1602 (2017). https://doi.org/10.1007/s10826-017-0671-2

DOI: https://doi.org/10.1007/s10826-017-0671-2