Abstract

Portable touch-screen devices have been the focus of a notable amount of intervention research involving individuals with autism. Additionally, popular media has widely circulated claims that such devices and academic software applications offer tremendous educational benefits. A systematic search identified 19 studies that targeted academic skills for individuals with autism. Most studies used the device’s built-in video recording or camera function to create customized teaching materials, rather than commercially-available applications. Analysis of potential moderating variables indicated that participants’ functioning level did not influence outcomes. However, participant operation of the device, as opposed to operation by an instructor, produced significantly larger effect size estimates. Results are discussed in terms of recommendations for practitioners and future research.


Introduction

Individuals with autism spectrum disorder (ASD) constitute a growing proportion of students receiving special education services and they experience unique challenges (e.g., social-communication deficits) which impede acquisition and generalization of skills (American Psychiatric Association 2013; Office of Special Education Programs 2017). Although individuals with ASD present with diverse skill profiles, they often exhibit poor performance on academic skills relative to their cognitive abilities, suggesting that they require individualized instruction and supports (Keen et al. 2016; King et al. 2016). As emphasis on access to general education curriculum and settings has increased, so have the academic expectations of students with ASD, further increasing the need to identify effective practices for teaching academic content to this population of students (e.g., the Common Core State Standards 2010; Fleury et al. 2014; King et al. 2016).

In addition to difficulties in traditional academics, many students with ASD display minimal appropriate engagement during classroom activities (Fleury et al. 2014). Engagement behaviors (e.g., participation in group activities, appropriate use of materials, on-task behavior) are often considered fundamental by teachers and have been linked to academic outcomes (Fleury et al. 2014; Koegel et al. 2010). For example, academic participation and success during grade school positively predict participation in postsecondary education and competitive employment, domains in which individuals with ASD are greatly underrepresented (Migliore et al. 2012).

One increasingly popular option for presenting academic content and engaging students with ASD is the use of touch-screen device technology (Kagohara et al. 2013). Portable touch-screen devices such as iPads and Android tablets are widely available and have a number of features which make them potentially desirable for use in educational contexts with individuals with ASD. Researchers have found that some individuals with ASD prefer technology-based instruction and perform better during interventions that include electronic devices (Kagohara et al. 2013; Shane and Albert 2008). Previous literature also suggests that these devices may reduce the frequency of adult-delivered prompts during instruction, which can decrease the likelihood of prompt-dependency (Mechling 2011; Smith et al. 2015). Additionally, these mainstream devices may be less stigmatizing, more affordable, and offer additional functions compared to many devices specifically designed to serve as assistive technology (e.g., highly-specialized speech generating devices).

Parents and teachers report that they find portable touch-screen devices appealing and use them frequently with individuals with ASD (Clark et al. 2015; King et al. 2017). These devices have also received attention and widespread endorsement in popular media (Knight et al. 2013). For example, a recent article in Parenting advertises 11 “expert-recommended apps” for individuals with autism and describes how their use may improve skills across a variety of domains without any references to supporting research (Willets 2017). Given the apparent enthusiasm and purported adoption of these devices in academic programs for individuals with ASD, it is important for systematic reviews to illuminate how their use is supported by empirical research.

Previous reviews on interventions incorporating touch-screen devices with individuals with ASD have focused more broadly across skill domains (Hong et al. 2017; Kagohara et al. 2013). Kagohara et al.’s (2013) systematic review included 15 intervention studies, but only one of the included studies targeted academic skills. Specifically, researchers used an iPad to present instructional videos (video modeling) to successfully teach two students with ASD to check the spelling of words (Kagohara et al. 2012). A more recent meta-analysis by Hong et al. (2017) examined 36 studies that used touch-screen devices in treatment programs for individuals with ASD. Nine of those studies directly targeted academic skills (e.g., reading, writing, arithmetic) or an increase in engagement in academic tasks. Although the nine studies produced large effect size estimates, no moderating variables related to intervention characteristics were identified. The broad focus across skill domains (e.g., academics, communication, vocational skills) precluded more nuanced conclusions regarding academic outcomes, limiting the ability to offer guidance for practitioners. In terms of academic engagement, a number of peer-reviewed studies have successfully increased engagement in academic settings by teaching self-monitoring, providing choices, and using peer supports (e.g., Goodman and Williams 2007; Koegel et al. 2010; McCurdy and Cole 2014). However, despite the recognized importance of engagement in the classroom, we were unable to locate any systematic reviews focused on the academic engagement of students with ASD.

The current meta-analysis builds on previous reviews by focusing directly on studies involving students with ASD that used touch-screen devices to target academic skills and increase engagement in academic tasks (on-task behavior). Further, variables that were not coded and summarized in previous reviews (e.g., intervention dosage, participant functioning level) were extracted from included studies and analyzed. Specifically, this review (a) describes the characteristics, features, and functions of touch-screen devices and applications that have been used in previous research; (b) identifies specific target skills and teaching procedures; and (c) calculates effect size estimates in order to analyze potential moderating variables. Overall, a review of this nature is intended to inform evidence-based practice and offer suggestions for future research.

Method

Protocol Registration and PRISMA Guidelines

The procedures for this meta-analysis were registered with the PROSPERO International prospective register of systematic reviews (Ledbetter-Cho et al. 2017a), a database which publishes protocols from systematic reviews prior to the initiation of data extraction in an effort to reduce reporting bias (Moher et al. 2015). The meta-analysis procedures were conducted in accordance with PRISMA guidelines (Moher et al. 2009), a set of evidence-based reporting procedures designed to increase the quality of systematic reviews.

Search Strategy

A systematic search was conducted in the following four electronic databases: Educational Resources Information Center (ERIC), Medline, Psychology and Behavioral Sciences Collection, and PsycINFO. Search terms were designed to identify studies that included participants with an autism diagnosis (i.e., autis*, ASD, Asperger*, or pervasive developmental disord*) and the use of a touch-screen device (i.e., mobile technolog*, pocket PC, phone, portable media, Mp3, palmtop comp*, handheld comp*, PDA, personal digital assis*, multimedia device, iPhone, iPod, iPad, portable electronic devi*, or tablet). The search was limited to peer-reviewed articles published in English from 2000 to 2017. Consistent with other reviews examining comparable technology, the year 2000 was chosen because touch-screen mobile devices became widely available following this time period (Mechling 2011; Nashville 2009). The first author subsequently conducted ancestry searches of included articles identified through the electronic database search.
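The two term groups described above can be combined into a single Boolean search string. The following is a minimal sketch only: the terms are taken from the text, but the `OR`/`AND` syntax, the quoting of multi-word phrases, and the helper names `or_group` and `build_query` are illustrative assumptions, since the exact query entered into each database is not reported.

```python
# Terms copied from the search description; everything else is hypothetical.
POPULATION_TERMS = ["autis*", "ASD", "Asperger*", "pervasive developmental disord*"]
DEVICE_TERMS = [
    "mobile technolog*", "pocket PC", "phone", "portable media", "Mp3",
    "palmtop comp*", "handheld comp*", "PDA", "personal digital assis*",
    "multimedia device", "iPhone", "iPod", "iPad",
    "portable electronic devi*", "tablet",
]


def or_group(terms):
    """Join terms with OR, wrapping multi-word phrases in double quotes."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(population, devices):
    """Require one population term AND one device term (assumed syntax)."""
    return f"{or_group(population)} AND {or_group(devices)}"


query = build_query(POPULATION_TERMS, DEVICE_TERMS)
print(query)
```

Database interfaces differ in how they treat truncation symbols inside quoted phrases, so a string of this kind would likely need adjusting for each database.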

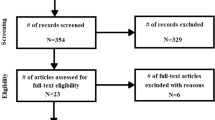

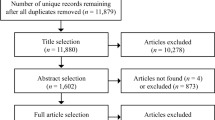

The initial database search yielded a total of 427 records. Following the removal of duplicates and non-intervention articles (e.g., systematic reviews, commentaries), the first author screened the full text of 136 articles for inclusion. Nineteen met our predetermined inclusion criteria, 17 from database searches and two from ancestry searches. Figure 1 outlines the search and screening process.

Study Selection

Studies were required to meet multiple inclusion criteria that were determined prior to literature searches. First, studies must have provided intervention to a minimum of one individual diagnosed with an autism spectrum disorder (i.e., Asperger’s, ASD, autism, Autistic Disorder, or Pervasive Developmental Disorder-Not Otherwise Specified [PDD-NOS]) per author report, a medical professional, school diagnostic criteria, or alignment with criteria from the Diagnostic and Statistical Manual of Mental Disorders (DSM). If a study included participants who were not diagnosed with an ASD, only the data from participants with ASD were analyzed. Second, only studies that used experimental designs with the potential to demonstrate a functional relation between the intervention and dependent variable (e.g., multiple baseline design, reversal design, group design with appropriate randomization and controls) were considered. Additionally, studies must have utilized touch-screen mobile devices (e.g., iPods, iPads, personal digital assistants) in intervention delivery.

Finally, studies were required to target specific academic skills or academic engagement behaviors. Specific academic skills were defined as students’ accuracy during activities in the content areas of language arts, science, social studies, writing, or mathematics (Knight et al. 2013; Machalicek et al. 2008; Root et al. 2017). Academic engagement behaviors consisted of on-task behaviors that took place within the context of an academic task and were necessary for accurate performance (e.g., engagement with academic materials; on-task behavior; Koegel et al. 2010; McCurdy and Cole 2014). Interrater agreement on the application of the inclusion criteria was assessed for 20% of articles in the database and ancestry searches and reached 100%.

Data Extraction and Coding

Data extracted from each study are reported in Table 1 and are summarized in terms of: (a) participant characteristics, (b) intervention materials and procedures, (c) dependent variables, (d) outcomes, and (e) research design and rigor. The costs of applications used in the studies are displayed in Table 2. The first author coded and summarized variables from all included studies. Co-authors independently verified the accuracy of the summaries for 30% of studies (Watkins et al. 2014). Interrater agreement was calculated on all coded variables by dividing the number of agreements by the total number of items and multiplying by 100. Interrater agreement was scored across 142 items (e.g., setting, implementer, effect size estimates) and reached 96%. Disagreements were resolved by discussion among co-authors.
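The agreement formula described above (agreements divided by total items, multiplied by 100) can be sketched as follows. The function name `percent_agreement` and the toy codes are hypothetical and are not data from the review.

```python
def percent_agreement(coder_a, coder_b):
    """Item-by-item agreement: agreements / total items * 100."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same non-empty set of items.")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * agreements / len(coder_a)


# Hypothetical codes for five items (e.g., setting or implementer).
first_coder = ["classroom", "teacher", "iPad", "strong", "home"]
second_coder = ["classroom", "teacher", "iPad", "strong", "clinic"]
print(percent_agreement(first_coder, second_coder))  # 4 of 5 items agree -> 80.0
```

This index does not correct for chance agreement; a statistic such as kappa would be needed for that purpose.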

Each participant’s functioning level was coded as lower, medium, or higher based upon the framework outlined by Reichow and Volkmar (2010). Specifically, individuals with limited vocal communication and/or an IQ below 55 were categorized as lower functioning. Participants were classified as medium functioning when they presented with emerging vocal communication and/or an IQ between 55 and 85. Individuals with well-developed vocal communication and/or an IQ above 85 were categorized as higher functioning.
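The coding rule above can be expressed as a small decision function. This is a sketch under stated assumptions: the text joins the communication and IQ criteria with "and/or" and does not say how conflicts were resolved, so the choice to let IQ take precedence when it is reported, the treatment of an IQ of exactly 55 or 85 as medium, and the names `functioning_level` and `vocal` are all illustrative.

```python
from typing import Optional


def functioning_level(iq: Optional[float] = None, vocal: Optional[str] = None) -> str:
    """Return 'lower', 'medium', 'higher', or 'undetermined'.

    vocal is expected to be 'limited', 'emerging', or 'well-developed'.
    Assumption: a reported IQ is used first; vocal communication is the fallback.
    """
    if iq is not None:
        if iq < 55:
            return "lower"
        if iq <= 85:  # boundary values 55 and 85 assumed to fall in 'medium'
            return "medium"
        return "higher"
    by_vocal = {"limited": "lower", "emerging": "medium", "well-developed": "higher"}
    return by_vocal.get(vocal, "undetermined")


print(functioning_level(iq=70))
print(functioning_level(vocal="limited"))
print(functioning_level())
```

The `undetermined` outcome mirrors cases in which a study provides too little information to assign a level.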

In order to summarize outcomes using visual analysis, authors examined the data from included studies to code a success estimate for each intervention (Reichow and Volkmar 2010; Watkins et al. 2017). The success estimate provides a ratio of the number of implementations of intervention where an effect was observed out of the total number of implementations (Reichow and Volkmar 2010). Success is determined by employing visual analysis as described by the What Works Clearinghouse (Kratochwill et al. 2010; i.e., level, trend, stability, immediacy of effect, non-overlap, and consistency of data).

The Evaluative Method for Determining Evidence-Based Practices in Autism was applied to included studies to determine the quality of research (Reichow et al. 2008). This method has precedent in systematic reviews of applied intervention research and has demonstrated validity and reliability (Wendt and Miller 2012; Whalon et al. 2015). Studies were coded as having strong, adequate, or weak methodological strength based upon the number of primary and secondary quality indicators that they displayed. Primary quality indicators consist of descriptions of participants, independent and dependent variables, baseline conditions, visual analysis of data, and evaluation of experimental control. Secondary quality indicators consist of interobserver agreement (IOA), kappa, treatment fidelity, the use of blind raters, the evaluation of maintenance and generalization of behavior change, and social validity.

Studies coded as having strong methodological rigor received high ratings on all primary quality indicators and displayed a minimum of three secondary quality indicators. Studies classified as adequate received high ratings on a minimum of four primary quality indicators and included two secondary quality indicators. Studies with weak methodological rigor received high ratings on fewer than four primary quality indicators and/or included fewer than two secondary quality indicators.
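The three rating rules can be summarized as a simple classification. The sketch below is a simplification that assumes six primary indicators (counted from the list given above) and reads "included two secondary quality indicators" as "at least two"; the function name `rigor_rating` is hypothetical, and the full evaluative method may include conditions not described here.

```python
N_PRIMARY = 6  # assumption: number of primary indicators counted from the text


def rigor_rating(primary_high: int, secondary_present: int) -> str:
    """Classify methodological rigor from counts of quality indicators.

    primary_high: primary indicators that received a high rating.
    secondary_present: secondary indicators displayed by the study.
    """
    if primary_high == N_PRIMARY and secondary_present >= 3:
        return "strong"
    if primary_high >= 4 and secondary_present >= 2:
        return "adequate"
    return "weak"


print(rigor_rating(6, 4))
print(rigor_rating(5, 2))
print(rigor_rating(6, 1))
```

Note that a study with high ratings on every primary indicator is still rated weak under these rules if it displays fewer than two secondary indicators.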

Meta-analysis

In addition to using visual analysis to report outcomes, we calculated nonparametric effect size estimates in an effort to enable broader comparisons across studies. Given that there is no consensus regarding the most appropriate effect size metric for single-case research designs, we adhered to the current recommendation and utilized multiple approaches to estimating effect size. We calculated the improvement rate difference (IRD) and nonoverlap of all pairs (NAP; Kratochwill et al. 2013; Pustejovsky and Ferron 2017).

IRD is equivalent to the difference between the rate of improvement in baseline and treatment phases and has been widely applied to medical research (Parker et al. 2009). Advantages of IRD include its alignment with the Phi coefficient and compatibility with visual analysis (Parker et al. 2009). IRD scores above .70 indicate a large treatment effect, .50 to .70 moderate, and scores below .50 indicate small or questionable effects (Parker et al. 2009). NAP represents the proportion of data that are improved across contrasting phases following pairwise comparisons and is mathematically equivalent to the area under the curve (AUC; Parker and Vannest 2009). Advantages of NAP include its ability to produce valid confidence intervals and its alignment with visual analysis. NAP scores at or above .93 indicate a large treatment effect, .66 to .92 moderate, and scores at or below .65 indicate a small effect (Parker and Vannest 2009).
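To make the NAP definition concrete, the sketch below compares every baseline point with every treatment point, counting an improved pair as 1 and a tie as 0.5, and then applies the interpretive benchmarks quoted above. It is not the online calculator used in this review; the function names and the example data are hypothetical, and values falling between the published bands (e.g., between .65 and .66) are assigned to the lower band as an assumption.

```python
def nap(baseline, treatment, increase_is_improvement=True):
    """Nonoverlap of all pairs: share of baseline-treatment pairs showing improvement."""
    pairs = len(baseline) * len(treatment)
    if pairs == 0:
        raise ValueError("Both phases need at least one data point.")
    score = 0.0
    for b in baseline:
        for t in treatment:
            if t == b:
                score += 0.5  # ties count as half an improvement
            elif (t > b) == increase_is_improvement:
                score += 1.0
    return score / pairs


def interpret_nap(value):
    """Benchmarks quoted in the text (Parker and Vannest 2009)."""
    if value >= 0.93:
        return "large"
    if value >= 0.66:
        return "moderate"
    return "small"


def interpret_ird(value):
    """Benchmarks quoted in the text (Parker et al. 2009)."""
    if value > 0.70:
        return "large"
    if value >= 0.50:
        return "moderate"
    return "small or questionable"


# Hypothetical session data (e.g., percentage of steps completed correctly).
baseline_phase = [2, 3, 3, 4]
treatment_phase = [5, 6, 4, 7, 8]
value = nap(baseline_phase, treatment_phase)
print(value, interpret_nap(value))
```

In this example, 19 of the 20 pairs show improvement and one pair is tied, giving a NAP of 19.5/20 = .975, which falls in the large range.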

In order to prepare data for effect size calculations, graphs from each study were saved as images and imported into the WebPlotDigitizer data extraction software (Rohatgi 2017). WebPlotDigitizer has demonstrated validity and reliability for extraction of data from single-case design graphs (Moeyaert et al. 2016). Graphed data were converted into numerical data and exported into an Excel spreadsheet which organized the raw data from each phase of individual studies. IRD and NAP were calculated using online software (Pustejovsky 2017).

Effect sizes were calculated for individual participants as well as at the study level. For studies employing multiple baseline, multiple probe, reversal, or combined designs, data from all adjacent AB phases were contrasted (Chen et al. 2016; Pustejovsky and Ferron 2017). For multielement designs, effect sizes were calculated by conducting between-condition comparisons (i.e., contrasting the data from the two intervention conditions). Two separate IRD and NAP scores were reported for studies using alternating treatment designs. Specifically, effect sizes were calculated by contrasting baseline phases with best treatment phases and by conducting between-condition comparisons (Chen et al. 2016; Pustejovsky and Ferron 2017).

In an effort to identify potential moderating variables, average IRD and NAP scores were calculated for different study and participant variables (e.g., participant functioning level, research rigor) and are reported in Table 3. We used the Statistical Package for the Social Sciences (SPSS) to conduct the Mann–Whitney U test to determine if differences between effect size estimates in the different groups were statistically significant (i.e., yielded a p-value of less than .05; Mann and Whitney 1947). The Mann–Whitney U test is appropriate for data with a non-normal distribution, such as the effect sizes calculated for the current meta-analysis, and is comparable to a non-parametric version of a t test (McKnight and Najab 2010).
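For readers unfamiliar with the test, the following sketch shows how a Mann–Whitney U statistic and an approximate two-sided p-value can be computed for two groups of effect sizes. It is an illustration, not the SPSS procedure used here: it relies on the normal approximation with a tie correction and no continuity correction, so its p-values may differ slightly from SPSS output, and the function name and example values are hypothetical.

```python
import math
from collections import Counter


def mann_whitney_u(x, y):
    """Return (U, two-sided p) using the normal approximation with tie correction."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("Both groups need at least one observation.")
    # U for group x: pairs where x exceeds y, with ties counted as one half.
    u1 = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over groups of tied values.
    tie_term = sum(t**3 - t for t in Counter(list(x) + list(y)).values()) / (n * (n - 1))
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    if sigma == 0:
        return u, 1.0  # all values identical, no evidence of a difference
    z = (u - n1 * n2 / 2) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))
    return u, p


# Hypothetical NAP scores for two groups of cases.
participant_operated = [0.95, 0.90, 1.00, 0.85]
instructor_operated = [0.60, 0.70, 0.80, 0.88]
u_stat, p_value = mann_whitney_u(participant_operated, instructor_operated)
print(u_stat, round(p_value, 3))
```

With samples this small an exact test would usually be preferred; the normal approximation is shown only because it is compact.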

Results

The procedures and outcomes of the 19 studies included in this meta-analysis are categorized by the domain of the targeted skills (i.e., academic skills or engagement behaviors) and presented in Table 1. All studies utilized single-case research designs and were published across six different peer-reviewed journals. Table 2 summarizes the variety and cost of the software applications utilized in the studies. Table 3 reports the average effect sizes and standard deviations and indicates statistically significant differences between groups when examining specific study variables.

Participant, Setting, and Implementer Characteristics

A total of 53 individuals (including six females) diagnosed with an ASD participated in the included studies and ranged in age from 2 to 19 years (M = 10 years and 5 months). Participants included 32 children (coded for individuals ages birth through 11) and 21 adolescents (ages 12–21). Individuals received classification as lower functioning (n = 17), medium (n = 16), and higher functioning (n = 14) according to criteria outlined by Reichow and Volkmar (2010). For six participants, the level of functioning could not be determined due to limited information in the studies. Interventions were most often conducted in classrooms (n = 16), followed by homes (n = 2), and clinics (n = 2). One study was conducted across two locations (Neely et al. 2013). Interventions were implemented by researchers (n = 13) and teachers (n = 6).

Devices and Software Applications

Devices priced at less than $600.00 in US dollars (USD) were used in the majority of studies and consisted of iPads (n = 15; $329.00), iPods (n = 1; $199.00), and a Samsung tablet (n = 1; $599.00). One study utilized a smart phone, which retails for $724.00, and the remaining study included an HP iPAQ mobile device for which pricing data were not available.

Table 2 displays the variety and current cost in USD of the software applications used in the included studies and reveals that the applications utilized by most researchers were cost free (n = 13). Eight studies used applications that ranged in cost from $1.99 to $13.99 (M = $5.99). The applications described in two studies were not available for commercial purchase nor was the cost reported (e.g., I-Connect; Clemons et al. 2016). Two studies each used two applications or device features in their investigation (Lee et al. 2015; Neely et al. 2013).

Pre-training on Devices

In 14 of the included studies, participants operated the touch-screen device during intervention. Of these, six did not provide participants with pre-training on the device (e.g., Burton et al. 2013). The use of prompting (e.g., verbal prompts, gestural prompts) was described in seven studies, including three studies that reported teaching participants to use the device within the context of a mastered skill (e.g., Spriggs et al. 2015). In the remaining five studies, the instructor presented and manipulated the touch-screen device during intervention.

Intervention Procedures and Dosage

In addition to the use of a touch-screen device, operated either by participants (n = 14 studies) or instructors (n = 5 studies), intervention packages included a variety of evidence-based procedures to teach the targeted skills. Studies often described a form of prompting (n = 9) to evoke the targeted skill, including least-to-most hierarchies (n = 3), priming (n = 2), verbal prompts (n = 2), time delay, and a system of most-to-least prompts (n = 1 each). Three studies utilized error correction procedures (e.g., replaying the video model and instructing the participant to perform the skill a second time; Cihak et al. 2010a). The use of reinforcement (e.g., delivery of a preferred item) was described in seven studies (e.g., Clemons et al. 2016). Five studies merely provided participants with the touch-screen device and did not describe the use of any prompts, a program for reinforcement, or the delivery of any supplemental instructional procedures (e.g., Spriggs et al. 2015; Van der Meer et al. 2015).

Session length was not reported in seven studies, precluding calculation of the total dosage of intervention. For the remaining studies, session length ranged from 5 to 30 min (M = 15 min) and sessions were implemented one to four times per week (M = 3). The total length of interventions ranged from 1.5 to 12 h (M = 5 h and 10 min), with the majority of interventions lasting no more than 5 h.

Target Behaviors

Specific academic skills were targeted in eight studies. Five studies taught participants to complete mathematics skills (e.g., comparing prices, double-digit subtraction; Weng and Bouck 2014; Yakubova et al. 2016) and two studies targeted reading comprehension (e.g., Zein et al. 2016). One study taught both paragraph-writing and mathematics (Spriggs et al. 2015).

Researchers targeted academic engagement in the eleven remaining studies. Seven studies targeted on-task behavior during academic work, including five studies that taught participants to self-monitor their behavior (e.g., Clemons et al. 2016; Crutchfield et al. 2015). Independent transitions between activities were targeted in three studies (e.g., Cihak et al. 2010a) and two studies compared participants’ engagement in academic tasks during teacher-led and iPad-assisted instruction (Lee et al. 2015; Neely et al. 2013).

Four studies evaluated collateral behaviors that were not directly targeted by intervention components. Specifically, three studies targeting on-task behavior during academic work also measured participants’ challenging behavior (Lee et al. 2015; Neely et al. 2013; Zein et al. 2016). Following an intervention that taught four participants to self-monitor their on-task behavior during class, researchers measured participants’ scores on a vocabulary assessment that was not utilized during intervention (Xin et al. 2017).

Intervention Effectiveness

Intervention outcomes, success estimates, and effect sizes of individual studies are reported in Table 1. Given that some studies reported multiple dependent variables (e.g., Lee et al. 2015) or utilized designs which necessitated the calculation of two effect sizes (e.g., Weng and Bouck 2014), IRD and NAP were calculated for a total of 28 variables. Effect sizes for dependent variables ranged from small to large, with most variables producing large effect sizes (n = 17; 61%), followed by moderate (n = 6; 21%), and small (n = 5; 18%). These effect size estimates were consistently aligned with the success estimates determined for each study using visual analysis (see Table 1).

Effect size estimates and their statistical significance were also examined across different variables of the included studies and are reported in Table 3. Participant functioning level did not significantly influence treatment effectiveness, with IRD and NAP scores indicating large effects across functioning levels. Participant age did impact treatment outcomes, with adolescent participants producing significantly higher effect size estimates than children (U_IRD = 377.5, p = .037; U_NAP = 376.5, p = .036). Effect sizes increased with ratings of methodological rigor. Studies with weak research rigor produced moderate effect size estimates but did not contain enough cases for statistical analysis. NAP scores indicated a significant difference between studies with adequate and strong methodological rigor (U_NAP = 324, p = .038) while IRD scores did not reach statistical significance (U_IRD = 331.5, p = .051).

With regard to intervention characteristics, interventions in which the participant operated the device (i.e., physically manipulated the device during intervention) resulted in significantly higher effect sizes in comparison to studies in which the instructor manipulated the device (U_IRD = 218, p = .007; U_NAP = 208, p = .004). Additionally, interventions that provided the participant with pre-training on the device prior to intervention produced significantly better treatment outcomes than those without pre-training (U_IRD = 254.5, p < .001; U_NAP = 250, p < .001). Interventions consisting of video modeling and self-monitoring produced large effect size estimates. Self-monitoring interventions resulted in significantly better treatment outcomes in comparison to explicit instruction interventions (U_IRD = 99.5, p = .001; U_NAP = 100.5, p = .002). Studies using visual supports and social stories did not contain enough cases for statistical analysis but produced moderate to large treatment effects.

Examination of the targeted skills revealed that studies teaching specific academic skills produced the largest effect size estimates, followed by interventions targeting engagement and challenging behavior. However, no statistically significant differences were found. Finally, intervention dosage did not significantly influence outcomes. Effect size estimates ranged from moderate to large and studies with the largest dosage produced the largest effects.

Research Strength

All included studies used single-case research designs to evaluate intervention effects on participants’ academic skills and engagement. No group designs met inclusion criteria. Studies were most commonly awarded ratings of strong methodological rigor (n = 9). Eight studies met criteria for adequate methodological rigor, with the remaining two studies receiving ratings of weak rigor. Adequate and weak ratings were due to overlap and instability in the data (n = 7), a lack of secondary quality indicators, or a lack of detailed participant description (n = 1 each).

Discussion

This meta-analysis identified 19 studies that incorporated touch-screen devices into interventions targeting the academic skills (n = 8) or academic engagement behaviors (n = 11) of 53 students with ASD. The majority of studies produced moderate to large treatment effects across participant functioning levels and received methodological ratings of adequate or strong. These findings support the conclusions of previous reviews that suggested interventions using touch-screen devices are generally found to be effective and that research in this area is increasing (Hong et al. 2017; Kagohara et al. 2013). In conjunction with the touch-screen device, most studies used teaching procedures with robust support in the research base (e.g., prompting hierarchies, systematic reinforcement), which likely contributed to the positive outcomes reported.

Most studies utilized widely available devices (e.g., iPads) and cost-free software applications. It is somewhat surprising, however, that so few commercially designed educational applications were investigated (see Table 2). Rather than using pre-configured applications designed for intervention, researchers often used the device’s inherent video or photograph functions to create individualized teaching materials (e.g., video-enhanced activity schedules). Future research should examine the effectiveness of additional commercially designed educational applications on the market, such as those targeting reading or mathematics (e.g., Starfall®, Show Me Math®). In addition to the relative effectiveness of the various applications, usability and other social validity variables should be considered in future comparisons of software and device options.

Only eight studies targeted performance on specific academic skills such as writing, math, and reading comprehension, indicating a clear need for future research on the utility of touch-screen devices for teaching these skills (Kagohara et al. 2013). Six of these studies utilized video modeling or prompting, supporting previous research which has found video modeling effective for teaching a variety of skills to individuals with ASD (Bellini and Akullian 2007). Five studies taught students to utilize the touch-screen device to monitor their on-task behavior during academic work, including one in which participants monitored their own stereotypy (Crutchfield et al. 2015). Although students with ASD have been taught to use these devices, some tasks may be more complicated to perform on the device than others (i.e., they may require additional steps). For example, students may acquire the skills necessary to play the video model more efficiently than they acquire the skills necessary to use the same device for self-management. Because all but two self-monitoring interventions were implemented within the context of independent work, future research should evaluate the efficacy and social validity of technology-based self-monitoring during teacher-led instruction or group work.

The examination of unintended adverse effects of interventions that use touch-screen devices may have important implications for applied practice. Researchers have suggested that the use of electronic devices in teaching programs for individuals with ASD may lead to increases in untargeted stereotypy or challenging behavior (King et al. 2017; Ramdoss et al. 2011). Alternatively, interventions may produce desirable collateral effects across different skill domains, potentially increasing intervention efficiency (Ledbetter-Cho et al. 2017b; McConnell 2002). The results of the current review are promising, with three studies reporting collateral improvements in challenging behavior during interventions incorporating touch-screen devices (Lee et al. 2015; Neely et al. 2013; Zein et al. 2016) and one finding untargeted academic improvements (Xin et al. 2017). However, these findings must be interpreted with caution given the small number of studies that investigated the impact of the interventions on untargeted dependent variables.

Variations in the technology features utilized in intervention packages did not appear to influence treatment outcomes. Components such as voice-over narration and video modeling versus video prompting did not contain enough cases for statistical analysis but the data that were available indicated similar outcomes. These findings are consistent with previous studies that have reported success using various formats and approaches to video modeling (Bellini and Akullian 2007). Based on these results, practitioners should consider individualizing technology features and teaching procedures based upon the learner’s preferences (e.g., conduct a preference assessment on device features prior to intervention).

Studies were primarily conducted in applied settings, such as schools, supporting claims that individuals with ASD can benefit from using touch-screen devices in natural contexts. However, interventions were overwhelmingly implemented by researchers. This is concerning given that some adult instruction appears to be necessary for learners to acquire targeted skills. Specifically, with the exception of three studies (Burton et al. 2013; Hart and Whalon 2012; Van der Meer et al. 2015), interventionists used instructional procedures (e.g., prompts, reinforcement) in addition to providing participants with the touch-screen device. Future research that utilizes natural intervention agents and describes the process for training them in replicable detail would be beneficial in determining the feasibility of such interventions. Indeed, classroom teachers have indicated that they feel underprepared to implement interventions involving technology and desire training in this area (Clark et al. 2015).

Regarding moderating variables, interventions in which the participant operated the device produced significantly larger effect size estimates compared to interventions in which the adult manipulated the device (see Table 3). It is possible that requiring students to operate the application increases attending to relevant stimuli, decreasing the need for adult-delivered prompts and increasing independence (Kimball et al. 2004). Additionally, some individuals may enjoy interacting with technology and be more likely to correctly perform the targeted academic skills. Providing participants with pre-training on the device prior to introducing intervention also produced significantly improved outcomes. Participants who did not receive pre-training may have experienced difficulty during intervention due to the necessity of acquiring two skills simultaneously (i.e., navigating the software and learning the targeted skill).

Interventions with adolescent participants produced significantly higher effect size estimates than those with children. This finding could be due to the fact that the targeted academic skills and engagement/self-monitoring behaviors may have been more developmentally appropriate for older participants (Lifter et al. 2005). Alternatively, the finding that adolescents benefited more may be due to some characteristic of the interventions more likely to be used with adolescent participants (e.g., self-monitoring, video modeling). Finally, the methodological rigor of the included studies was also found to moderate intervention effectiveness, with studies that received higher quality ratings producing significantly higher effect size estimates. This is most likely due to the method used to appraise research quality: studies with non-overlap of data across adjacent phases received higher marks for methodological rigor which contributed to larger effect size estimates (Reichow et al. 2008).

Limitations

Because all of the options for estimating effect sizes from single-case design studies have limitations, we followed current recommendations to employ multiple measures (IRD and NAP) that estimate the degree of improvement following intervention (Maggin and Odom 2014). Although alternative effect size measures which could potentially provide a more fine-grained analysis through regression models are beginning to appear in the literature (e.g., standardized mean difference statistics), these measures cannot currently be applied to many of the designs utilized by the included studies (e.g., multielement designs; Pustejovsky and Ferron 2017; Shadish et al. 2014).
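As an illustration of one of the two nonoverlap measures named above, the following is a minimal sketch of NAP based on its general definition (Parker and Vannest 2009): every baseline data point is compared with every intervention data point, an improving pair counts as 1, a tie counts as 0.5, and the total is divided by the number of pairs. The function name, argument names, and data values are hypothetical and are not drawn from the included studies, and the sketch is not the software used to produce the estimates in this review.

```python
from itertools import product


def nap(baseline, intervention, increase_is_improvement=True):
    """Nonoverlap of All Pairs for one baseline/intervention phase contrast.

    Returns a value between 0 and 1; 0.5 corresponds to chance-level overlap.
    """
    if not baseline or not intervention:
        raise ValueError("Both phases need at least one data point.")

    score = 0.0
    for a, b in product(baseline, intervention):
        # For behaviors targeted for reduction, a lower intervention
        # value is the improvement, so the comparison is reversed.
        if not increase_is_improvement:
            a, b = b, a
        if b > a:
            score += 1.0   # intervention point improves on baseline point
        elif b == a:
            score += 0.5   # ties receive half credit
    return score / (len(baseline) * len(intervention))


# Hypothetical data: percentage of correct responses per session.
baseline_phase = [10, 20, 15]
intervention_phase = [20, 40, 55, 60]
print(round(nap(baseline_phase, intervention_phase), 3))
```

Because the measure is built only from pairwise comparisons, it reflects the degree of overlap between phases rather than the magnitude of change, which is one reason it is reported alongside a second measure here.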

To ensure a minimum level of study quality, we restricted our search to peer-reviewed publications that used an experimental design with the potential to demonstrate a functional relation. Studies that met these criteria were included in the analysis, even if they had ratings of weak methodological rigor, in an effort to provide a comprehensive review of a small research base. Although there are concerns with including less methodologically rigorous studies in meta-analyses, further restricting inclusion criteria may have inflated positive outcomes (Sham and Smith 2014).

Because the included studies differed across a number of variables (e.g., intervention components, dosage, participant age), the moderator analyses should be interpreted cautiously. For example, interventions in which the participant operated the device included many studies with video modeling, self-monitoring, and explicit instruction. These intervention components, rather than who operated the device, may have contributed to the positive outcomes observed. Finally, interrater agreement was not assessed for the entry of search terms during the database search.

Implications for Practice

Despite these limitations, results from the current meta-analysis provide evidence that intervention packages incorporating touch-screen devices may be effective in improving the academic skills and related engagement behaviors of students with ASD in applied settings. Only eight of the included studies targeted specific academic skills, indicating that there is limited empirical support for the use of touch-screen devices in teaching academic content. The majority of included studies utilized instructor-created teaching materials. Touch-screen devices are only as effective as the underlying instructional procedures, and ineffective teaching procedures are not likely to become effective merely because they are delivered via a touch-screen device. Practitioners are encouraged to individualize lessons presented on touch-screen devices based on the needs of the student and to ensure that the instruction provided by the device is aligned with the evidence base.

This meta-analysis suggests that touch-screen devices are useful in improving academic skills and academic engagement in students with ASD. However, these devices should be viewed as a supplement to carefully planned instruction involving evidence-based teaching practices. Finally, given the promising outcomes from interventions in which pre-training was conducted, educators should consider training the student to use the device and its software prior to introducing the targeted skill.

References

Asterisk denotes articles included in the current meta-analysis

American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th edn.). Arlington, VA: American Psychiatric Publishing.

Bellini, S., & Akullian, J. (2007). A meta-analysis of video modeling and video self-modeling interventions for children and adolescents with autism spectrum disorders. Exceptional Children, 73, 264–287. https://doi.org/10.1177/001440290707300301.

*Burton, C. E., Anderson, D. H., Prater, M. A., & Dyches, T. T. (2013). Video self-modeling on an iPad to teach functional math skills to adolescents with autism and intellectual disability. Focus on Autism & Other Developmental Disabilities, 28, 67–77. https://doi.org/10.1177/1088357613478829.

Chen, M., Hyppa-Martin, J. K., Reichle, J. E., & Symons, F. J. (2016). Comparing single case design overlap-based effect size metrics from studies examining speech generating device interventions. American Journal on Intellectual and Developmental Disabilities, 121, 169–193. https://doi.org/10.1352/1944-7558-121.3.169.

*Cihak, D., Fahrenkrog, C., Ayres, K. M., & Smith, C. (2010a). The use of video modelling via a video iPod and a system of least prompts to improve transitional behaviors for students with autism spectrum disorders in the general education classroom. Journal of Positive Behavior Interventions, 12, 103–115. https://doi.org/10.1177/1098300709332346.

*Cihak, D. F., Wright, R., & Ayres, K. M. (2010b). Use of self-modeling static-picture prompts via a handheld computer to facilitate self-monitoring in the general education classroom. Education and Training in Autism and Developmental Disabilities, 45, 136–149.

Clark, M. L. E., Austin, D. W., & Craike, M. J. (2015). Professional and parental attitudes toward iPad application use in autism spectrum disorder. Focus on Autism and Other Developmental Disabilities, 30, 174–181. https://doi.org/10.1177/1088357614537353.

*Clemons, L. L., Mason, B. A., Garrison-Kane, L., & Wills, H. P. (2016). Self-monitoring for high school students with disabilities. Journal of Positive Behavior Interventions, 18, 145–155. https://doi.org/10.1177/1098300715596134.

*Crutchfield, S., Mason, R., Chambers, A., Wills, H., & Mason, B. (2015). Use of a self-monitoring application to reduce stereotypic behavior in adolescents with autism: A preliminary investigation of I-Connect. Journal of Autism & Developmental Disorders, 45, 1146–1155. https://doi.org/10.1007/s10803-014-2272-x.

*Finn, L., Ramasamy, R., Dukes, C., & Scott, J. (2015). Using WatchMinder to increase the on-task behavior of students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 45, 1408–1418. https://doi.org/10.1007/s10803-014-2300-x.

Fleury, V. P., Hedges, S., Hume, K., Browder, D. M., Thompson, J. L., Fallin, K., … Vaughn, S. (2014). Addressing the academic needs of adolescents with autism spectrum disorder in secondary education. Remedial and Special Education, 35, 68–79. https://doi.org/10.1177/0741932513518823.

Goodman, G., & Williams, C. M. (2007). Interventions for increasing the academic engagement of students with autism spectrum disorders in inclusive classrooms. Teaching Exceptional Children, 39, 53–61. https://doi.org/10.1177/004005990703900608.

Hart, J. E., & Whalon, K. J. (2012). Using video self-modeling via iPads to increase academic responding of an adolescent with autism spectrum disorder and intellectual disability. Education and Training in Autism and Developmental Disabilities, 47, 438–446.

Hong, E. R., Gong, L., Nici, J., Morin, K., Davis, J. L., Kawaminami, S., … Noro, F. (2017). A meta-analysis of single-case research on the use of tablet-mediated interventions for persons with ASD. Research in Developmental Disabilities, 70, 198–214. https://doi.org/10.1016/j.ridd.2017.09.013.

*Jowett, E. L., Moore, D. W., & Anderson, A. (2012). Using an iPad-based video modeling package to teach numeracy skills to a child with an autism spectrum disorder. Developmental Neurorehabilitation, 15, 304–312. https://doi.org/10.3109/17518423.2012.682168.

Kagohara, D. M., Sigafoos, J., Achmadi, D., O’Reilly, M., & Lancioni, G. (2012). Teaching children with autism spectrum disorders to check the spelling of words. Research in Autism Spectrum Disorders, 6, 304–310. https://doi.org/10.1016/j.rasd.2011.05.012.

Kagohara, D. M., van der Meer, L., Ramdoss, S., O’Reilly, M. F., Lancioni, G. E., Davis, T. N., … Sigafoos, J. (2013). Using iPods® and iPads® in teaching programs for individuals with developmental disabilities: A systematic review. Research in Developmental Disabilities, 34, 147–156. https://doi.org/10.1016/j.ridd.2012.07.027.

Keen, D., Webster, A., & Ridley, G. (2016). How well are children with autism spectrum disorder doing academically at school? An overview of the literature. Autism, 20, 276–294. https://doi.org/10.1177/1362361315580962.

Kimball, J. W., Kinney, E. M., Taylor, B. A., & Stromer, R. (2004). Video enhanced activity schedules for children with autism: A promising package for teaching social skills. Education and Treatment of Children, 27, 280–298.

King, A. M., Brady, K. W., & Voreis, G. (2017). “It’s a blessing and a curse”: Perspectives on tablet use in children with autism spectrum disorder. Autism & Developmental Language Impairments, 2, 1–12. https://doi.org/10.1177/2396941516683183.

King, S. A., Lemons, C. J., & Davidson, K. A. (2016). Math interventions for students with autism spectrum disorder: A best-evidence synthesis. Exceptional Children, 82, 443–462. https://doi.org/10.1177/0014402915625066.

Knight, V., McKissick, B. R., & Saunders, A. (2013). A review of technology-based interventions to teach academic skills to students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 43, 2628–2648. https://doi.org/10.1007/s10803-013-1814-y.

Koegel, L. K., Singh, A. K., & Koegel, R. L. (2010). Improving motivation for academics in children with autism. Journal of Autism and Developmental Disorders, 40, 1057–1066. https://doi.org/10.1007/s10803-010-0962-6.

Kratochwill, T. R., Hitchcock, J. H., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2010). Single case designs technical documentation. What Works Clearinghouse: Procedures and standards handbook (version 1.0). Retrieved from https://ies.ed.gov/ncee/wwc/Document/229.

Kratochwill, T. R., Hitchcock, J. H., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2013). Single-case intervention research design standards. Remedial and Special Education, 34, 26–38. https://doi.org/10.1177/0741932512452794.

Ledbetter-Cho, K., Lang, R., O'Reilly, M., Watkins, L., & Lim, N. (2017a). A meta-analysis of instructional programs using touch-screen mobile devices to teach academic skills to individuals with autism spectrum disorders. PROSPERO International Registrar of Systematic Reviews.

Ledbetter-Cho, K., Lang, R., Watkins, L., O’Reilly, M., & Zamora, C. (2017b). Systematic review of the collateral effects of focused interventions for children with autism spectrum disorder. Autism and Developmental Language Impairments. https://doi.org/10.1177/2396941517737536.

*Lee, A., Lang, R., Davenport, K., Moore, M., Rispoli, M., van der Meer, L., … Chung, C. (2015). Comparison of therapist implemented and iPad-assisted interventions for children with autism. Developmental Neurorehabilitation, 18, 97–103. https://doi.org/10.3109/17518423.2013.830231.

Lifter, K., Ellis, J., Cannon, B., & Anderson, S. R. (2005). Developmental specificity in targeting and teaching play activities to children with pervasive developmental disorders. Journal of Early Intervention, 27, 247–267. https://doi.org/10.1177/105381510502700405.

Machalicek, W., O’Reilly, M. F., Beretvas, N., Sigafoos, J., Lancioni, G., Sorrells, A., Lang, R., & Rispoli, M. (2008). A review of school-based instructional interventions for students with autism spectrum disorders. Research in Autism Spectrum Disorders, 2, 395–416. https://doi.org/10.1016/j.rasd.2007.07.001.

Maggin, D. M., & Odom, S. L. (2014). Evaluating single-case research data for systematic review: A commentary for the special issue. Journal of School Psychology, 52, 237–241. https://doi.org/10.1016/j.jsp.2014.01.002.

Mann, H. B., & Whitney, D. R. (1947). On a test of whether one of two random variables is stochastically larger than the other. The Annals of Mathematical Statistics, 18, 50–60. https://doi.org/10.1214/aoms/1177730491.

McConnell, S. R. (2002). Interventions to facilitate social interaction for young children with autism: Review of available research and recommendations for educational intervention and future research. Journal of Autism & Developmental Disorders, 32, 351–372. https://doi.org/10.1023/A:1020537805154.

McCurdy, E. E., & Cole, C. L. (2014). Use of a peer support intervention for promoting academic engagement of students with autism in general education settings. Journal of Autism and Developmental Disorders, 44, 883–893. https://doi.org/10.1007/s10803-013-1941-5.

McKnight, P. E., & Najab, J. (2010). Mann-Whitney U Test. In The Corsini Encyclopedia of Psychology. Hoboken: Wiley.

Mechling, L. C. (2011). Review of twenty-first century portable electronic devices for persons with moderate intellectual disabilities and autism spectrum disorders. Education and Training in Autism and Developmental Disabilities, 46, 479–498.

Migliore, A., Timmons, J., Butterworth, J., & Lugas, J. (2012). Predictors of employment and postsecondary education of youth with autism. Rehabilitation Counseling Bulletin, 55, 176–184. https://doi.org/10.1177/0034355212438943.

Moeyaert, M., Maggin, D., & Verkuilen, J. (2016). Reliability, validity, and usability of data extraction programs for single-case research designs. Behavior Modification, 40, 874–900. https://doi.org/10.1177/0145445516645763.

Moher, D., Liberati, A., Tetzlaff, J., & Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Annals of Internal Medicine, 151, 264–269. https://doi.org/10.1371/journal.pmed.1000097.

Moher, D., Shamseer, L., Clarke, M., Ghersi, D., Liberati, A., Petticrew, M., … Stewart, L. A. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews, 4, 1.

Nashville, N. (2009). History of the palmtop computer, Artipot. Retrieved from http://www.artipot.com/articles/378059/the-history-of-the-palmtopcomputer.htm on June 21, 2017.

National Governors Association Center for Best Practices & Council of Chief State School Officers (2010). Common core state standards. Washington, DC: National Governors Association Center for Best Practices & Council of Chief State School Officers.

*Neely, L., Rispoli, M., Camargo, S., Davis, H., & Boles, M. (2013). The effect of instructional use of an iPad® on challenging behavior and academic engagement for two students with autism. Research in Autism Spectrum Disorders, 7, 509–516. https://doi.org/10.1016/j.rasd.2012.12.004.

Office of Special Education Programs. (2017). Thirty-eighth annual report to congress on the implementation of the Individuals with Disabilities Education Act. Washington, DC: U.S. Department of Education.

Parker, R. I., & Vannest, K. (2009). An improved effect size for single-case research: Nonoverlap of all pairs. Behavior Therapy, 40, 357–367. https://doi.org/10.1016/j.beth.2008.10.006.

Parker, R. I., Vannest, K., & Brown, L. (2009). The improvement rate difference for single-case research. Exceptional Children, 75, 135–150.

Pustejovsky, J. E. (2017). Single-case effect size calculator (Version 0.1) [Web application]. Retrieved from https://jpusto.shinyapps.io/SCD-effect-sizes.

Pustejovsky, J. E., & Ferron, J. M. (2017). Research synthesis and meta-analysis of single-case designs. In J. M. Kaufmann, D. P. Hallahan & P. C. Pullen (Eds.), Handbook of special education (2nd ed.). New York, NY: Routledge.

Ramdoss, S., Lang, R., Mulloy, A., Franco, J., O’Reilly, M., Didden, R., & Lancioni, G. (2011). Use of computer-based interventions to teach communication skills to children with autism spectrum disorders: A systematic review. Journal of Behavioral Education, 20, 55–76. https://doi.org/10.1007/s10864-010-9112-7.

Reichow, B., & Volkmar, F. (2010). Social skills interventions for individuals with autism: Evaluation for evidence-based practices within a best evidence synthesis framework. Journal of Autism and Developmental Disorders, 40, 149–166. https://doi.org/10.1007/s10803-009-0842-0.

Reichow, B., Volkmar, F. R., & Cicchetti, D. V. (2008). Development of the evaluative method for evaluating and determining evidence-based practices in autism. Journal of Autism and Developmental Disorders, 38, 1311–1319. https://doi.org/10.1007/s10803-007-0517-7.

Rohatgi, A. (2017). WebPlotDigitizer user manual version 3.12. Retrieved from https://arohatgi.info/WebPlotDigitizer/userManual.pdf.

Root, J. R., Stevenson, B. S., Davis, L. L., Geddes-Hall, J., & Test, D. W. (2017). Establishing computer-assisted instruction to teach academics to students with autism as an evidence-based practice. Journal of Autism and Developmental Disorders, 47, 275–284. https://doi.org/10.1007/s10803-016-2947-6.

Shadish, W. R., Hedges, L. V., & Pustejovsky, J. E. (2014). Analysis and meta-analysis of single-case designs with a standardized mean difference statistic: A primer and applications. Journal of School Psychology, 52, 123–147. https://doi.org/10.1016/j.jsp.2013.11.005.

Sham, E., & Smith, T. (2014). Publication bias in studies of an applied behavior-analytic intervention: An initial analysis. Journal of Applied Behavior Analysis, 47, 663–678. https://doi.org/10.1002/jaba.146.

Shane, H. C., & Albert, P. D. (2008). Electronic screen media for persons with autism spectrum disorders: Results of a survey. Journal of Autism and Developmental Disorders, 38, 1499–1508. https://doi.org/10.1007/s10803-007-0527-5.

*Siegel, E. B., & Lien, S. E. (2015). Using photographs of contrasting contextual complexity to support classroom transitions for children with autism spectrum disorders. Focus on Autism and Other Developmental Disabilities, 30, 100–114. https://doi.org/10.1177/1088357614559211.

*Smith, B. R., Spooner, F., & Wood, C. L. (2013). Using embedded computer-assisted explicit instruction to teach science to students with autism spectrum disorder. Research in Autism Spectrum Disorders, 7, 433–443. https://doi.org/10.1016/j.rasd.2012.10.010.

Smith, K. A., Shepley, S. B., Alexander, J. L., & Ayres, K. M. (2015). The independent use of self-instructions for the acquisition of untrained multi-step tasks for individuals with an intellectual disability: A review of the literature. Research in Developmental Disabilities, 40, 19–30. https://doi.org/10.1016/j.ridd.2015.01.010.

*Spriggs, A., Knight, V., & Sherrow, L. (2015). Talking picture schedules: Embedding video models into visual activity schedules to increase independence for students with ASD. Journal of Autism & Developmental Disorders, 45, 3846–3861. https://doi.org/10.1007/s10803-014-2315-3.

*Van der Meer, J., Beamish, W., Milford, T., & Lang, W. (2015). iPad-presented social stories for young children with autism. Developmental Neurorehabilitation, 18, 75–81. https://doi.org/10.3109/17518423.2013.809811.

Watkins, L., O’Reilly, M., Kuhn, M., Gevarter, C., Lancioni, G. E., Sigafoos, J., & Lang, R. (2014). A review of peer-mediated social interaction interventions for students with autism in inclusive settings. Journal of Autism and Developmental Disorders, 45, 1070–1083. https://doi.org/10.1007/s10803-014-2264-x.

Watkins, L., O’Reilly, M., Ledbetter-Cho, K., Lang, R., Sigafoos, J., Kuhn, M., Lim, N., Gevarter, C., & Caldwell, N. (2017). A meta-analysis of school-based social interaction interventions for adolescents with autism spectrum disorder. Review Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s40489-017-0113-5.

Wendt, O., & Miller, B. (2012). Quality appraisal of single-subject experimental designs: An overview and comparison of different appraisal tools. Education and Treatment of Children, 35, 235–268. https://doi.org/10.1353/etc.2012.0010.

*Weng, P.-L., & Bouck, E. C. (2014). Using video prompting via iPads to teach price comparison to adolescents with autism. Research in Autism Spectrum Disorders, 8, 1405–1415. https://doi.org/10.1016/j.rasd.2014.06.014.

Whalon, K. J., Conroy, M. A., Martinez, J. R., & Werch, B. L. (2015). School-based peer-related social competence interventions for children with autism spectrum disorder: A meta-analysis and descriptive review of single case research design studies. Journal of Autism and Developmental Disorders, 45, 1513–1531. https://doi.org/10.1007/s10803-015-2373-1.

Willets, M. (2017). 11 expert-recommended autism apps for kids. Parenting. Retrieved from http://www.parenting.com/gallery/autism-apps.

*Xin, J. F., Sheppard, M. E., & Brown, M. (2017). Brief report: Using iPads for self-monitoring of students with autism. Journal of Autism and Developmental Disorders, 47, 1559–1567. https://doi.org/10.1007/s10803-017-3055-y.

*Yakubova, G., Hughes, E. M., & Hornberger, E. (2015). Video-based intervention in teaching fraction problem-solving to students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 45, 2865–2875. https://doi.org/10.1007/s10803-015-2449-y.

*Yakubova, G., Hughes, E., & Shinaberry, M. (2016). Learning with technology: Video modeling with concrete-representational-abstract sequencing for students with autism spectrum disorder. Journal of Autism & Developmental Disorders, 46, 2349–2362. https://doi.org/10.1007/s10803-016-2768-7.

*Zein, F., Gevarter, C., Bryant, B., Son, S.-H., Bryant, D., Kim, M., & Solis, M. (2016). A comparison between iPad-assisted and teacher-directed reading instruction for students with autism spectrum disorder. Journal of Developmental and Physical Disabilities, 28, 195–215. https://doi.org/10.1007/s10882-015-9458-9.

Acknowledgments

The research described in this article was supported in part by Grant H325H140001 from the Office of Special Education Programs, U.S. Department of Education. Nothing in the article necessarily reflects the positions or policies of the federal government, and no official endorsement by it should be inferred.

Author information

Contributions

KLC contributed to the formulation of the review questions and procedures, conducted initial searches, provided summaries of the included studies, and wrote the first draft of the manuscript. MO and RL contributed to the formulation of the review questions and procedures and the writing of the final manuscript. LW and NL contributed data coding and analysis. All authors have approved the final version of the manuscript.

About this article

Cite this article

Ledbetter-Cho, K., O’Reilly, M., Lang, R. et al. Meta-analysis of Tablet-Mediated Interventions for Teaching Academic Skills to Individuals with Autism. J Autism Dev Disord 48, 3021–3036 (2018). https://doi.org/10.1007/s10803-018-3573-2

DOI: https://doi.org/10.1007/s10803-018-3573-2