Abstract

Most approaches aiming to reduce behavior problems among youth with Autism Spectrum Disorder (ASD) focus on individual students; however, school personnel also need professional development to better support students. This study targeted teachers’ skill development to promote positive outcomes for students with ASD. The sample included 19 teachers in two non-public special education settings serving students with moderate to severe ASD. Participating teachers received professional development and coaching in classroom management, with guided practice in a mixed-reality simulator. Repeated-measures ANOVAs examining externally-conducted classroom observations revealed statistically significant improvements in teacher management and student behavior over time. Findings suggest that coaching and guided practice in a mixed-reality simulator are perceived as acceptable and may reduce behavior problems among students with ASD.

Introduction

The social, communication, and behavioral symptoms of Autism Spectrum Disorder (ASD), and the high co-morbidity with an intellectual disability (CDC 2014), complicate the education of students identified as having these disabilities (Ben Itzchak et al. 2008). Children with moderate to severe ASD, in particular, are at risk for exhibiting behavior problems including aggression and self-injurious behaviors, which can interfere with learning and impact student safety (Munson et al. 2008; Sullivan and Bradshaw 2012). These behavior problems prove to be challenging for teachers to manage. Yet, relatively few teachers have training in the use of evidence-based interventions for students with ASD while addressing behavior problems in the classroom (Scheuermann et al. 2003; Shyman 2012). Targeting teacher practices is consistent with the public health approach to prevention (O’Connell et al. 2009) and is likely an effective and cost-efficient way of addressing the complex needs of multiple children with ASD simultaneously. However, literature on how to support in-service teachers’ skill development is lacking. This study aimed to address this gap by exploring the extent to which a teacher-tailored coaching approach that utilized a state-of-the-art mixed-reality simulator was associated with increased teacher capacity to address the diverse behavioral and learning needs of students with ASD.

The Need for Supporting Teachers of Students with ASD

The rate of ASD diagnoses more than doubled between 2002 and 2010 (CDC 2014) with the most recent estimates peaking at 1 in 45 children (Zablotsky et al. 2015). As such, over 455,000 students with ASD are served under the Individuals with Disabilities Education Act (IDEA) in schools, representing the highest number in history (Shattuck 2006; USDOE 2015). Although schools are an important service provider for individuals with ASD, many educators struggle to meet the unique needs of students with ASD. In fact, parents of children with ASD report frustration with the educational system and experience difficulty obtaining appropriate services (Bitterman et al. 2008; Spann et al. 2003) and ensuring the receipt of quality services for their children (Kasari et al. 1999). Dissatisfaction is highest among parents of children with more severe and complex presentations of ASD (Zablotsky et al. 2012). States have similarly shown concern, and created task forces and commissions to provide guidance to service providers such as schools (e.g., Maryland Commission on Autism 2012). Therefore, the optimal means of delivering educational services to students with ASD is a pressing concern.

Although there is a growing body of research on evidence-based behavioral and instructional strategies to be used with children with ASD (Eldevik et al. 2009), these studies largely provide child-centered interventions in highly controlled settings. The translation of such evidence-based interventions into special education classrooms is lacking (NRC 2001; Rumsey et al. 2000). Sadly, a survey of 185 special education directors and autism specialists/consultants in Georgia (i.e., 74 % of the targeted sample; covering 57 % of districts in Georgia) indicated that fewer than 5 % used evidence-based practices with their students with ASD across all grade levels (i.e., pre-K–12). Furthermore, among the 40 evidence-based strategies asked about, just three were endorsed as being commonly used (i.e., discrete trial training; Learning Experiences: An Alternative Program for Preschoolers and Parents; and pivotal response; Morrier et al. 2011). Of note, the preschool teachers in this study were the most likely to report using evidence-based strategies. The proportion of teachers who reported using these evidence-based practices was not significantly higher among those working in self-contained special education classrooms than in general education, mixed, or other settings serving students with ASD (Morrier et al. 2011). In sum, there is insufficient use of such interventions and thus, work to improve the dissemination and implementation of evidence-based practices is needed.

The absence of evidence-based strategies in classrooms serving students with ASD may be attributed to the lack of appropriate, specialized training for teachers (Bellini et al. 2011). For example, few graduate teacher preparation programs provide training in core therapeutic techniques for students with ASD (Hyman and Levy 2013; Lerman et al. 2004; Scheuermann et al. 2003). A survey of 87 higher education institutions in 43 states indicated that 41 % of the graduate programs did not offer ASD-related coursework; half of the programs reported that there was no state-established list of autism competencies for educators (Barnhill et al. 2011). Further, general and special educators often lack basic classroom and behavioral management training (Baker 2005; Reinke et al. 2011). Taken together, there is a clear need for additional training in evidence-based interventions for in-service teachers (Kucharczyk et al. 2012).

Potential Utility of Coaching and Guided Practice

There is a growing body of research highlighting the importance of coaching and active learning embedded within the classroom context for enhancing teacher skills (Garet et al. 2001; Kretlow and Bartholomew 2010). More specifically, school-based coaching is defined as professional support provided to teachers as a means of improving their skills (Denton and Hasbrouck 2009); it allows teachers to target skill areas in which their students struggle while receiving on-going, performance-based feedback (Pas et al. 2014; Pas and Newman 2013). Research has shown that coaching increases teachers’ use and fidelity of evidence-based practices (Lerman et al. 2008; Noell et al. 2002; Reinke et al. 2008). A typical approach is for a coach and teacher to meet outside of classroom time to discuss teachers’ practices. Although the focus on immediate feedback or modeling is not common, research suggests it is an effective mode of professional development (Scheeler et al. 2004). There is also increasing interest in using technology as a tool to guide teachers in learning new skills. One such technology is simulation, which provides the opportunity to engage in guided practice that may not otherwise be available in the classroom, enables individualization to teacher needs, and avoids exposing real students to the harms of teachers’ unsuccessful attempts at using new skills (Dieker et al. 2007; Dieker and Rodriguez 2014).

TeachLivE™ is one such immersive, mixed-reality simulator that provides teachers with an opportunity to practice new skills and to receive immediate feedback (Dieker et al. 2008). TeachLivE™ was developed by researchers at the University of Central Florida to be an immersive, mixed-reality (i.e., part real, part synthetic) simulator where the teacher interfaces with five computer-generated, animated student avatars, with unique personalities who react to the human teacher (Dieker et al. 2008). This small-group instruction setting mirrors that of self-contained classrooms and therefore is particularly relevant to special educators of students with ASD. TeachLivE™ is not scripted; rather, it is dynamic and responsive to the teacher’s behavior, making it a more true-to-life experience. It is also modifiable, such that the virtual students’ behavior can be tailored as needed (Dieker and Rodriguez 2014). Given the complex behaviors of students with ASD, TeachLivE™ allows for specialized and focused practicing. Such out-of-classroom practice can prevent potentially unsafe behaviors that may emerge in classrooms when teachers would typically try new techniques.

Coaching teachers within a mixed-reality setting has the potential to improve teachers’ classroom management practices, by transforming teachers’ experience and generating new knowledge (Kolb 2014) and by allowing for immediate performance feedback and teacher reflection, without the distractions of a real classroom (Hayes et al. 2013). Further, research on experiential learning demonstrates that mobile technologies can improve knowledge creation and retention, as compared to traditional “paper and pencil” strategies (Lai et al. 2007). Applied to this study, the continued learning and support of teachers while they practice their new skills in a simulator has the potential to transform teachers’ experiences (Kolb 2014); in turn, this should create more meaningful and sustained changes in teacher skills than a more traditional approach whereby a coach may more simply relay new information to the teacher. TeachLivE™ has not yet been rigorously tested, but has gained much recognition for its ingenuity and promise (Zhu et al. 2011). Preliminary research indicated that providing six pre-service teachers with coaching within the TeachLivE™ simulator, as a means to teach them to use a specific behavioral prompting procedure to use with a student with ASD, was followed by an improvement in teacher use of this skill (Garland et al. 2016). Further, teachers were able to comfortably interact with the avatars (Dieker and Rodriguez 2014; Elford et al. 2013).

Current Study

The aim of the current study was to explore the preliminary effects, acceptability, and feasibility of the coaching and TeachLivE™ intervention in self-contained classrooms serving students with moderate to severe ASD. We utilized a formalized coaching model called the Classroom Check-Up (CCU; Reinke et al. 2011). Prior research has shown that the CCU coaching model is associated with improvements in teacher behavior management and, subsequently, reduced problematic student behaviors (Reinke et al. 2007, 2008), particularly when teachers receive visual performance feedback (e.g., charts of their behaviors; Reinke et al. 2007). General educators have found the CCU to be effective, non-intrusive, confidence evoking, and socially valid (Reinke et al. 2008). Based on earlier work with both the CCU coaching model (Pas et al. in press; Reinke et al. 2008) and the TeachLivE™ simulator (Dieker et al. 2008), we hypothesized that participating teachers would demonstrate improved use of proactive, positive behavior management strategies and that students would display a reduction in behavior problems. Further, we hypothesized that this intervention would be feasible and acceptable, with teachers being particularly receptive to the mixed-reality practice and reflection time via coaching. The overarching goal of this study was to provide initial evidence of promise, feasibility, and acceptability of this professional development model for use with teachers of students with ASD.

Method

Participants

This study was conducted in two non-public educational settings with teachers serving at least one student with moderate to severe ASD. These two settings employ 41 lead teachers and provide services to about 350 students in total, about 60 % of whom were identified as having ASD. School 1 served students aged 5–13 years and School 2 served students aged 14–21 years. Students at these schools were grouped into classrooms based on either moderate needs (i.e., were in need of social and attentional support, but fewer academic and emotional supports) or moderate to severe needs (i.e., were in need of intensive behavioral, emotional, and academic supports; displayed sensory difficulties and general emotion and behavior dysregulation). Students attend these schools through funding provided by the local school system combined with funds provided by the federal and state departments of education. The local school systems’ Individualized Education Program (IEP) team determined the placement as the least restrictive environment in which to implement each student’s IEP.

The sample included 19 white teachers, the majority of whom were women and had a master’s degree. On average, teachers’ experiences placed them as mid-career, ranging from early (i.e., 3 years of experience) to late (i.e., maximum of 25 years) career. Class sizes were small (i.e., never more than 12 students per classroom), and each classroom had at least two adults: the lead teacher and either a teaching assistant or student aide. The largest number of adults observed in one classroom was six. About half of the teachers served students with moderate to severe needs and half served those with mainly moderate disabilities. See Table 1 for additional details and school-specific data.

Procedure

Recruitment

The school administrators asked 19 teachers to participate, all of whom agreed. Those not approached met exclusionary criteria, including that they did not serve any students with ASD (i.e., n = 4), were involved in a different study using TeachLivE™ to promote teachers’ use of discrete trial training (i.e., n = 5), or were already being coached (n = 11). Coaching and TeachLivE™ were part of the regular education practice, but could only be extended to a small number of teachers each year because of resource limitations (i.e., administrative staff to provide coaching and funds to pay for TeachLivE™ sessions). The resources for this study enabled the schools to provide coaching to all teachers of students with ASD and to examine the outcomes using objective observations conducted by researchers. At the start of this study (i.e., baseline), none of the participating teachers were engaged in coaching or use of the mixed-reality simulator.

Intervention

As noted above, the coaches utilized the CCU (Reinke et al. 2011), a data-driven coaching framework, and provided guided practice using the mixed-reality TeachLivE™ virtual classroom within the coaching (Dieker et al. 2008). The lead author and practitioner partners tailored the CCU and TeachLivE™ processes to ensure alignment with the school’s already-existing use of the TeachLivE™ technology. The CCU is a staged problem-solving process that targets teacher classroom management skills (Reinke et al. 2011), specifically addressing use of positive behavioral supports such as setting clear behavior expectations, providing students with behavior-contingent praise, and increasing opportunities to respond (Sutherland et al. 2000, 2002).

Following the CCU procedure (Reinke 2006), the coach first met with each teacher for an interview to learn more about the teacher and his/her identified areas of strength and weakness. During this interview and other stages of this process, the CCU coach used motivational interviewing techniques (Reinke et al. 2008, 2011) by listening for “change talk” or language that the teacher used that either explicitly or implicitly demonstrated his/her motivation to change a behavior. After the interview, the coach collected classroom management data during three classroom visits. Following data collection, the coach provided feedback on strengths and areas for improvement. Again, the coach used motivational interviewing communication skills, such as paraphrasing and summarizing, to highlight the teachers’ motivations/desires to improve specific elements of the classroom. Based on these conversations, the coach and teacher pair co-developed an action plan. The pairing of both motivational interviewing and data-based feedback and decision making targets the often-encountered obstacle of implementation fidelity common to school-based consultation (Reinke et al. 2008), by allowing the coach to help the teacher identify acceptable strategies and garnering teacher commitment and thus, implementation fidelity (Reinke 2006; Reinke et al. 2012; for greater detail, see Reinke et al. 2011). Each teacher selected 1–2 target behaviors for promoting positive student behavior in the classroom.

Approximately 2 weeks following the action planning, the teacher practiced the targeted skill(s) in the TeachLivE™ simulator. A TeachLivE™ simulator was already set up at one of the schools. The simulator was located in an approximately 10 × 15 room that was reserved for this purpose; the virtual avatar classroom was projected onto a 60-inch screen at the front of the room. The teacher stood facing the screen that displayed the five avatar students sitting at desks and facing the teacher. A webcam and an Xbox Kinect allowed for the perspective of the classroom to change as the teacher walked around the room (e.g., to allow the teacher to “move closer” to a specific student). The middle or high school avatars were used, depending on the grade level(s) the teachers in the session taught. The avatars are a diverse student sample, including both genders and students of different racial and ethnic backgrounds.

Coaches also went to the TeachLivE™ session to collect data on the target skill(s) and to provide immediate feedback. Teachers attended hour-long lab sessions in pairs and were able to observe one another practicing. The TeachLivE™ format was such that one teacher practiced for 10 min while the coach collected data, that teacher received immediate feedback, watched the other teacher practice and receive feedback, and then repeated that cycle for a second time. Following the first lab session, the coach conducted another classroom observation to determine whether skills transferred from the simulator into the classroom, and also provided feedback to the teacher on their progress toward increasing their use of specific classroom management strategies. The timeframe from the interview through the final follow-up observation in the classroom was about 10 weeks. Teachers were then scheduled for a second and third TeachLivE™ session in the simulator.

Coaching Supervision and Dosage

Each school was served by one of two coaches: one was a doctorate-level clinical psychologist and the second was a former teacher who was an Ed.D. candidate in a school of education. Both were trained in the CCU and supervised in a bi-weekly meeting led by the lead author. To ensure that the intervention was implemented with fidelity, the lead author viewed videos of every teacher interview, feedback, and action planning session, and a school administrator was present for every TeachLivE™ session.

Data Collection

The data collection was conducted by two master’s-level research assistants, who served as trainers for this observational measure in other studies conducted by co-authors on this study; they were not involved in the coaching process, but rather only collected data for the purpose of the research. These trainers reached 80 % inter-observer agreement in their training using the observational protocol; they have also conducted it in dozens of schools and continue to re-calibrate each year. For each of the two observations per time point, the observer spent approximately 3 min acclimating to the classroom and providing some basic information about the classroom (e.g., the number of students and staff in the room). The observer then spent 15 min conducting event-based tallies (see next section) followed by completion of Likert-type survey items regarding teacher classroom management, student behavior, and the engagement of students in meaningful participation. The number of students and adults present in the classrooms during baseline observation appears in Table 1. Coaches logged the amount of time spent with each teacher, following each contact. Both coaches and teachers provided their feedback about the intervention, at the end of the study, on a paper survey. The Institutional Review Board at the researchers’ institution approved this study.

Measures

Classroom Observations

The Assessing School Settings: Interactions of Students and Teachers (ASSIST; Rusby et al. 2001) classroom observation includes both event-based tallies and global ratings of teacher and student behaviors. The tallies included counts of teacher use of classroom management strategies and student behavior. Specifically, proactive behavioral expectations included all verbal (e.g., explaining, prompting) and physical (e.g., modeling) demonstrations of behavioral expectations prior to a behavior becoming a problem. Reactive behavior management included teacher cues (e.g., touch, gesture, proximity, and comment) in response to inappropriate behavior (i.e., excluding disapproval). Opportunities to respond (OTRs) were defined as behavioral or instructional prompts requiring an immediate response to the teacher, a peer, or a publicly (not privately) written response. Approval was defined as recognizing students’ performance by providing a tangible item, verbal praise, approving gestures, or physical contact. Disapproval was the threat or use of a tangible punitive consequence, verbal criticism or sarcasm, or gestural or physical contact to demonstrate dissatisfaction with behavior. Student non-compliance was defined as an instance in which one or more students did not follow an adult directive. Disruptions were defined as behaviors that interfered with the activity of another student(s), the entire classroom, or the teacher, as indicated by the target person or group being taken off task. Physical aggression was defined as aversive physical contact, and verbal aggression was defined as verbal disapproval or critical judgment. Profanity was coded separately from verbal aggression.

The survey items completed at the end of the observation comprised seven global rating scales. Each item had a 5-point Likert scale, ranging from never (scored 0) to almost continuously/often occurred (scored 4). Items on each scale were averaged to create the scale score. Included in the scales were ratings of teacher positive behavioral management (4 items; α = .68; “Teacher gives clear instructions and directives to students”), teacher monitoring (4 items; α = .83; “Teacher positions him/herself so they can see most of the room area”), teacher anticipation (6 items; α = .85, “Teacher anticipates when students may have problems behaviorally”), teacher control (5 items; α = .82; “There is evidence of classroom routines—students know what they’re supposed to be doing”), teacher and student meaningful participation (9 items, α = .85; “Students have opportunities to take leadership roles in the classroom”), student compliance (7 items, α = .92; “Students consistently follow rules appropriate to settings”), and student socially disruptive behavior (7 items, α = .66; “Students are irritable or sarcastic toward the teacher”; see Debnam et al. (2015) and Pas et al. (2015) for additional information about the ASSIST).
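The scale scoring described above (item ratings averaged into a scale score, with internal consistency summarized by α) can be illustrated with a minimal sketch. The ratings below are hypothetical and are not study data; the function names are illustrative, and the use of sample variances in the Cronbach's alpha formula is an assumption, since the source does not state how α was computed.

```python
from statistics import mean, variance


def scale_score(item_ratings):
    """Average the 0-4 item ratings on one scale into a single scale score."""
    return mean(item_ratings)


def cronbach_alpha(items):
    """Cronbach's alpha for a list of per-item rating lists.

    Each inner list holds one item's ratings, ordered by the same observations.
    Sample variances (n - 1 denominator) are assumed here.
    """
    k = len(items)
    totals = [sum(obs) for obs in zip(*items)]  # total score per observation
    item_var_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


# Hypothetical ratings: 4 items (rows) rated across 5 observations (columns).
ratings = [
    [3, 2, 4, 3, 1],
    [3, 3, 4, 2, 1],
    [2, 2, 4, 3, 0],
    [3, 2, 3, 3, 1],
]

first_observation = [item[0] for item in ratings]
print("Scale score, first observation:", scale_score(first_observation))
print("Cronbach's alpha:", round(cronbach_alpha(ratings), 2))
```

Averaging rather than summing keeps every scale on the same 0 to 4 metric regardless of how many items it contains, which is what allows the scale means reported later to be compared directly.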

Data were collected at three time points: prior to the coaching (i.e., baseline), following the coaching and mixed-reality exposure (i.e., post-test), and then about 3 months later (i.e., follow-up). Data from the two observations per time point were averaged and used as single scores at post-test and follow-up. Fifteen of the 19 teachers had complete data at all three time points. With regard to the four teachers without complete data, one teacher went on extended medical leave after coaching, and thus only was present in the school during the baseline data collection. The other three were not available for the follow-up data because coaching occurred later in the school year. These teachers had baseline and post-test data. Observers provided complete data during each observation conducted.

Coaching Dosage Data

The coaches logged the amount of time they met with each teacher, as well as the specific amount of time spent on each core coaching activity (e.g., interview, data collection, feedback, TeachLivE™ session) after each teacher contact. These data were used to determine the amount of time spent with each teacher and applied to determine the feasibility of the model.

Acceptability of Coaching

Both the participating teachers and coaches provided information regarding the coaching relationship via the coach-teacher alliance measures (see Johnson et al. 2016). These parallel coach and teacher measures included four subscales (Bradshaw et al. 2009a, b) including an assessment of how each participant viewed the working relationship (e.g., “The coach/teacher and I worked together collaboratively”; teacher α = .76; coach α = .88); the coaching process which assessed how competently the steps of coaching were conducted (e.g., “The coach/I communicated effectively”; teacher α = .85; coach α = .79); teacher investment in coaching scale which assessed how much the teacher valued and perceived the coaching to be worthwhile (e.g., “The work I did with the teacher/coach was important”; teacher α = .88; coach α = .78); and perceived benefits of the coaching scale which assessed improvements in teacher skills and positive impact on students (e.g., “The students benefit from the work with the coach/teacher”, teacher α = .71; coach α = .91). The coach form additionally assessed perceived barriers to the coaching using 6 items (α = .76; e.g., “reported work-related problem”). All responses were provided on a 5-point Likert-type scale (0 = never to 4 = always).

Acceptability of TeachLivE™

For this study, we also adapted an additional scale comprised of eight items regarding the acceptability of the use of TeachLivE™ technology within the CCU process. This scale included items regarding teacher comfort and perceived relevance (e.g., “The TeachLivE™ lab provided added benefits to the coaching experience”) of the mixed-reality experience (teacher form: α = .70; coach form: α = .64). All responses were provided on a 5-point Likert scale (0 = never to 4 = always).

Analyses

We first examined the changes over time on the ASSIST tallies and global ratings regarding teacher and student behavior using repeated-measures MANOVAs. Repeated-measures ANOVAs were then used to determine whether there were significant changes on each of the ten event-based tallies and seven global ASSIST scales. Pair-wise comparisons indicated whether there were differences between baseline and post-test, baseline and follow-up, and post-test and follow-up. Cohen’s d effect sizes were also calculated to indicate the magnitude of differences between time points, as a function of standard deviation units. Specifically, Cohen’s d effect sizes were calculated by subtracting the unadjusted baseline means from the follow-up means, and dividing by the baseline standard deviation, so that increases over time yield positive values. Effect sizes of 0.2 are considered small; 0.5 are moderate; and 0.8 are large (Cohen 1988). Descriptive analyses were used to summarize the acceptability data. Conclusions about feasibility are drawn based on the implementation data and the acceptability of the intervention.
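The effect size calculation described above can be sketched as follows. The tallies are hypothetical, not study data. Two assumptions are made: the sign convention (follow-up minus baseline, so that increases are positive) is chosen to match the signs of the values reported in the Results, and the sample standard deviation (n − 1 denominator) is used for the baseline, which the source does not specify.

```python
from statistics import mean, stdev


def cohens_d(baseline, follow_up):
    """Difference in means, scaled by the baseline standard deviation.

    Assumed sign convention: follow-up minus baseline, so growth is positive.
    Assumed SD: sample standard deviation of the baseline scores.
    """
    return (mean(follow_up) - mean(baseline)) / stdev(baseline)


# Hypothetical 15-min tallies for five teachers at two time points.
baseline = [6, 9, 7, 10, 8]
follow_up = [11, 14, 12, 13, 15]

print("Cohen's d:", round(cohens_d(baseline, follow_up), 2))
```

Scaling by the baseline standard deviation alone, rather than a pooled value, expresses change relative to the spread that existed before any coaching took place.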

Results

Changes in Observational Data Over Time

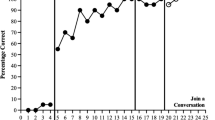

The repeated-measures MANOVA for the tallied teacher behavior indicated significant effects for time, F (2, 13) = 4.33, p = .04, partial η² = .40, for tally, F (3, 12) = 43.46, p < .01, partial η² = .92, and for the interaction between time and tally, F (6, 9) = 5.25, p = .01, partial η² = .79, indicating that tallies changed significantly over time and that these effects varied across tallies. Therefore, follow-up ANOVAs were conducted for each individual teacher behavior tally on the ASSIST (see Table 2 for all ANOVA findings and Fig. 1 for graphic depiction). The ANOVAs demonstrated significant improvements in the tallied observations of teachers’ use of proactive behavioral expectations, F (2, 28) = 6.73, p < .01, partial η² = .33. Specifically, teachers communicated approximately one proactive behavioral expectation every 2 min at baseline (i.e., M = 7.80 instances in 15 min), which increased to approximately one per minute at post-test (M = 12.63) and follow-up (M = 12.83). Similarly, there were significant improvements in the teachers’ use of approval, F (2, 28) = 8.12, p < .01, partial η² = .37. Teachers used more than twice as many approvals at post-test (M = 24.53) and nearly twice as many at follow-up (M = 18.50), as compared to baseline (M = 11.00). For both proactive behavioral expectations and approval, the pairwise comparisons demonstrated a significant increase between baseline and both subsequent data collections; however, the post-test and follow-up rates were not significantly different from each other, suggesting a sustained effect. The increasing trend in use of reactive behavior management and opportunities to respond did not reach statistical significance. Teachers were never observed using disapprovals; therefore, no analyses were conducted on this outcome.
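The per-minute rates and relative increases quoted above follow directly from the reported means and the 15-min tally window. A brief worked sketch, using only the means stated in the text (the function names are illustrative):

```python
OBSERVATION_MINUTES = 15  # length of the event-based tally period


def per_minute(mean_tally):
    """Convert a mean tally per 15-min observation into a per-minute rate."""
    return mean_tally / OBSERVATION_MINUTES


def fold_change(later_mean, baseline_mean):
    """Express a later mean as a multiple of the baseline mean."""
    return later_mean / baseline_mean


# Means reported in the text above.
expectations = {"baseline": 7.80, "post-test": 12.63, "follow-up": 12.83}
approvals = {"baseline": 11.00, "post-test": 24.53, "follow-up": 18.50}

for label, m in expectations.items():
    print(f"{label}: {per_minute(m):.2f} proactive expectations per minute")

for label in ("post-test", "follow-up"):
    ratio = fold_change(approvals[label], approvals["baseline"])
    print(f"{label}: {ratio:.2f} times the baseline approval count")
```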

The repeated-measures MANOVA for the tallied student behavior indicated a significant effect for tally, F (4, 11) = 10.72, p < .01, partial η² = .80, but not for time or the interaction between time and tally, indicating that student tallies differed from one another. In follow-up ANOVA testing, one student behavioral rating differed significantly over time; specifically, the rate of student non-compliance reduced significantly over time, F (2, 28) = 3.58, p = .04, partial η² = .20, with rates being significantly lower at follow-up (M = 0.13 instances in 15 min) than at post-test (M = 1.00). The presence of just one indicator changing over time explains the results of the MANOVA.

A MANOVA also indicated significant improvements in the ASSIST teacher global rating scales over time, F (2, 8) = 10.64, p < .01, partial η² = .73, by global scale F (7, 3) = 46.17, p < .01, partial η² = .95, and for the interaction between teacher global scale and time, F (4, 6) = 19.28, p < .01, partial η² = .97. The same was true for the MANOVA for the student global rating scales; F (2, 13) = 7.60, p < .01, partial η² = .54 for time, F (2, 13) = 564.24, p < .01, partial η² = .99 by global scale, and F (4, 11) = 9.34, p < .01, partial η² = .77 for the interaction between student global scale and time. The follow-up ANOVAs indicated significant changes on ratings of teacher proactive behavior management, F (2, 28) = 6.92, p < .01, partial η² = .33, with ratings for teacher behavioral management increasing incrementally over the three data collections and the significant difference emerging between the baseline (i.e., M = 2.92) and follow-up (i.e., M = 3.36) rating. Similarly, teacher control, F (2, 28) = 17.11, p < .01, partial η² = .55, teacher monitoring, F (2, 28) = 14.10, p < .01, partial η² = .50, and teacher and student meaningful participation, F (2, 28) = 9.81, p < .01, partial η² = .41, increased significantly over time. On all three scales, there was a significant increase between baseline and both subsequent data collections; however, the post-test and follow-up rates were not significantly different from each other, suggesting a sustained effect (Ms = 3.43, 3.67, and 3.77 for teacher control, Ms = 2.95, 3.50, and 3.29 for monitoring, and Ms = 1.71, 2.22, and 2.10 for participation at baseline, post-test, and follow-up). There were also marginally significant improvements over time in ratings of student socially disruptive behaviors, F (2, 28) = 3.19, p = .057, partial η² = .19 (see Table 2; Fig. 1).
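For readers wishing to check the reported effect sizes, partial η² for a univariate F test can be recovered from the F statistic and its degrees of freedom through the standard identity partial η² = (F × df effect) / (F × df effect + df error). The sketch below applies that identity to the follow-up ANOVA values reported above. It is offered as a consistency check rather than a description of how the authors computed the values, and small discrepancies can arise because the published F values are rounded.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (scale, F, df_effect, df_error) as reported for the follow-up ANOVAs above.
reported = [
    ("teacher proactive behavior management", 6.92, 2, 28),
    ("teacher control", 17.11, 2, 28),
    ("teacher monitoring", 14.10, 2, 28),
    ("meaningful participation", 9.81, 2, 28),
    ("student socially disruptive behavior", 3.19, 2, 28),
]

for scale, f_value, df1, df2 in reported:
    eta = partial_eta_squared(f_value, df1, df2)
    print(f"{scale}: partial eta squared = {eta:.2f}")
```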

Effect Size Estimates

We computed Cohen’s d effect sizes as an indication of the magnitude of the difference between the baseline ASSIST scales and the follow-up scores (see Table 2). These effect sizes are helpful in light of the relatively small sample size and the potential for low power to detect significant effects (Cohen 1992) and are more interpretable than a partial η2 (Levine and Hullett 2002). With regard to the tallied teacher data, the largest effect sizes were in the moderate to large range and were observed for teacher approval (d = 1.06), proactive behavioral expectations (d = 0.92), and opportunities to respond (d = 0.47). With regard to the tallied student behaviors, the largest effect sizes were in the moderate range and were observed for non-compliance (d = −0.36) and student disruptions (d = −0.29). Across all measures, the largest Cohen’s d estimates (reported in Table 2) were observed for the global ratings of teacher control (d = 2.66), teacher monitoring (d = 1.74), meaningful participation (d = 1.60), and teacher proactive behavior management (d = 1.43), which were all in the large range.
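For readers less familiar with Cohen’s d, the sketch below shows one common way to compute a standardized mean difference between two time points. The text does not state which variant was used (for example, pooled SD, baseline SD, or a repeated-measures correction), so the root-mean-square of the two standard deviations used here is an assumption, and the function name and input values are hypothetical rather than taken from Table 2.

```python
import math


def cohens_d(mean_baseline: float, mean_followup: float,
             sd_baseline: float, sd_followup: float) -> float:
    """Standardized difference (follow-up minus baseline).

    Assumption: the standardizer is the root mean square of the two SDs,
    which equals the pooled SD when both time points have the same n.
    """
    pooled_sd = math.sqrt((sd_baseline ** 2 + sd_followup ** 2) / 2)
    return (mean_followup - mean_baseline) / pooled_sd


# Hypothetical values chosen for illustration only (not the study data).
d_increase = cohens_d(mean_baseline=2.0, mean_followup=3.0,
                      sd_baseline=1.0, sd_followup=1.0)
d_decrease = cohens_d(mean_baseline=1.0, mean_followup=0.5,
                      sd_baseline=1.0, sd_followup=1.0)
print(f"increase: d = {d_increase:.2f}")
print(f"decrease: d = {d_decrease:.2f}")
```

With this sign convention, a positive d indicates an increase from baseline to follow-up and a negative d indicates a decrease, which matches the direction of the negative values reported for the student behavior tallies.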

Coaching Dosage Data

Data from the review of videos and coaching logs indicated that the intervention was implemented as intended. All teachers received the components of the CCU and received coaching in TeachLivE™ at least once. The only variability in implementation was with regard to the number of TeachLivE™ sessions attended by teachers. All teachers were offered the opportunity to participate in the TeachLivE™ simulator on three occasions with the first session scheduled following action planning and the other two sessions scheduled following the coach follow-up observation in the classroom. All of the teachers participated in the first session; only 26.3 % of teachers stopped after this session. The majority of teachers (i.e., 73.7 %) attended a second session, and less than a third (i.e., 31.6 %) participated all three times.

Teachers received just over 7 h of coaching and TeachLivE™ time (i.e., M = 7.15 h, SD = 1.45 h). It is important to note that approximately 28 % of the total time logged by coaches was spent observing in the classroom (i.e., collecting data that they used for feedback; these observations were conducted separately from the ASSIST observations). Thus, of the approximately 7 h of coaching time, on average 4.11 h (SD = 1.65 h) required face-time with the teachers, suggesting a high level of efficiency.
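The dosage figures above can be translated into teacher counts and hours with simple arithmetic. The sketch below is a reading aid, not part of the original analysis: the counts are inferred from the reported percentages and the 19 coaching cases rather than reported directly, and the variable and function names are hypothetical.

```python
N_TEACHERS = 19  # number of coaching cases reported in the text

# Attendance percentages as reported in the text.
reported_percentages = {
    "stopped after first session": 26.3,
    "attended a second session": 73.7,
    "attended all three sessions": 31.6,
}


def implied_count(percent: float, total: int) -> int:
    """Nearest whole number of teachers implied by a reported percentage."""
    return round(percent / 100 * total)


counts = {label: implied_count(pct, N_TEACHERS)
          for label, pct in reported_percentages.items()}

for label, count in counts.items():
    # Recompute the percentage from the inferred count as a consistency check.
    print(f"{label}: {count} of {N_TEACHERS} ({100 * count / N_TEACHERS:.1f} %)")

TOTAL_HOURS = 7.15      # mean total coaching and TeachLivE time per teacher
FACE_TIME_HOURS = 4.11  # mean time requiring face-time with the teacher

observation_hours = 0.28 * TOTAL_HOURS           # "approximately 28 %" of logged time
face_time_share = FACE_TIME_HOURS / TOTAL_HOURS  # share of total time that was face-time

print(f"classroom observation: about {observation_hours:.1f} h")
print(f"face-time share of total: about {face_time_share:.0%}")
```

Under these assumptions, the percentages correspond to 5, 14, and 6 of the 19 teachers, roughly 2 h of the logged time was classroom observation, and a little over half of the total time required teachers' direct participation.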

Acceptability

Coaches provided the ratings on alliance surveys regarding each of the 19 coaching cases; 8 of the 19 teachers also provided ratings. There were no statistically significant differences between teachers who provided the alliance ratings and those who did not on the number of TeachLivE™ sessions attended or on any baseline ASSIST data. As we have found in other research (Johnson et al. 2016), the ratings provided by teachers regarding the working relationship, coaching process, investment in, and perceived benefits of the coaching were high overall (i.e., average ratings ranged from 3.13 to 3.69 by teachers; see Table 3 for full listing of average ratings). Thus, teachers, on average, rated the four alliance scales positively (i.e., in the often to always range). Similarly, the coaches also viewed the relationship, process, and investment positively (i.e., average ratings of 2.99 to 3.26 or often to always agreeing with the positively-worded statements). Coaches, on average, reported sometimes (i.e., M = 2.50; SD = 0.61) observing benefits to the teachers. Ratings of TeachLivE™ were more moderate by both groups, with teachers reporting an average score of 2.77 (SD = 0.60), and coaches reporting an average score of 3.05 (SD = 0.39). Finally, coaches reported that barriers were never to seldom present (i.e., M = 0.27; range = 0 to 1.50).

Discussion

Research demonstrates that special educators are lacking training and support in implementing evidence-based strategies when instructing students with ASD (Bellini et al. 2011; Morrier et al. 2011), who often have difficult behavioral and emotional symptoms that interfere with learning. Thus, this study used a teacher-focused professional development approach to broadly promote teachers’ use of behavior management strategies and positive student behaviors (e.g., Garet et al. 2001; Reinke 2008), targeting special education teachers working in a self-contained educational setting. Specifically, we implemented guided practice in a mixed-reality simulator approach (Dieker et al. 2008) within an existing tailored coaching model (Reinke et al. 2011) to improve teaching practices. The results indicated that this intervention shows promise as an effective and acceptable intervention with special educators.

Observations conducted by research assistants using a standardized tool (i.e., the ASSIST) regarding teachers’ classroom management strategies and students’ behavior at three time points revealed that the participating teachers evidenced significant improvements in classroom management strategies as well as improvements in student behavior. Specifically, relative to baseline, the post-test and follow-up classroom observations suggested significant gains in teachers’ frequency and observer ratings of positive behavioral expectations, the frequency of approvals, and ratings of teacher monitoring and anticipation. In other words, a range of scales captured improvements in teachers’ classroom behavior management over the course of the school year. Importantly, many of the gains in teachers’ skills that occurred post-test were sustained at follow-up. Interestingly, the significant declines in tallied student behavior problems only emerged at the follow-up observations (i.e., not at post-test), which may suggest that these changes take a little longer to emerge. This pattern aligns with the notion that student behavior change may only occur following sustained improvements in classroom management (e.g., Burke et al. 2011; MacSuga and Simonsen 2011; Pisacreta et al. 2011). Finally, observations indicated improvements in meaningful participation, indicating that students were provided more frequent opportunities to engage in the classroom instruction in the later data collections.

All teachers were able to successfully complete the coaching and guided practice; earlier studies have similarly shown such fidelity with regular education teachers (Dieker and Rodriguez 2014; Elford et al. 2013; Reinke et al. 2008). The active time required by teachers was approximately 4 h on average, thus indicating that the intervention can be implemented within teachers’ busy schedules. This time commitment included up to three sessions in the TeachLivE™ simulator, with the majority of teachers attending on two occasions. The variability in teacher attendance in TeachLivE™ may have related to a few simulator-specific barriers. Logistically, the coach returned to the teachers’ classrooms following the first simulator session, then scheduled a second session within reasonable proximity (i.e., weeks). The third session in the simulator followed breaks in the school year, and anecdotally, it seemed there was a loss of momentum in scheduling and thus attendance. Had the coaches provided additional in-classroom follow-up after the second session or scheduled all three sessions closely together, there may have been more consistent attendance for all three sessions. Finally, teachers were scheduled to practice within the simulator after school hours, which may have served as a barrier, particularly during the latter part of the school year. Further research is needed regarding the optimal number of simulator sessions and the means for promoting attendance and teacher behavior change.

Both teachers and coaches generally rated the alliance scales and the work in the TeachLivE™ simulator highly, indicating acceptability of the intervention. When specifically asked about their discomfort or the level of awkwardness in the first and second TeachLivE™ sessions, teachers’ responses on average were below a 2 (i.e., averaging a response of rarely to sometimes) and therefore generally suggested a fair degree of comfort. However, these responses also suggested room for improvement; perhaps more time is needed for teachers to become more familiar with the simulator. Open-ended comments from teachers indicated a desire for greater tailoring of the simulator, which was utilized with those who attended a third session. Perhaps teachers who did not attend the third session disengaged because of concerns that it was not tailored enough to their needs.

Limitations and Strengths

Given the developmental, non-experimental nature of this work, the sample size was small and conclusions are not causal. Furthermore, not all teachers provided their feedback on the alliance survey. Coaches felt that teachers were very busy at the end of the school year, when surveys were administered, and may have forgotten; all teachers were given two reminders. The sample size is a challenge more broadly within this field, given that teachers who address the needs of students with ASD are typically spread across schools, and therefore researchers would need to engage a large number of schools to attain a large teacher sample. Working with a greater number of similar settings (e.g., those with multiple classrooms serving students with moderate to severe ASD) in a public school setting would allow for the addition of a control condition of teachers and/or a coached condition which did not include TeachLivE™. Such a design would allow for stronger conclusions regarding the causal impact of the intervention on teacher and student outcomes as well as a determination of generalizability. A larger sample may also provide more diversity in the teaching staff. Future data collection regarding teacher perceptions will additionally be offered as an online measure, which may improve response rates. Despite the small sample, significant improvements on several outcomes were observed following coaching, and many of the effect sizes were in the moderate to large range (Cohen 1992), particularly for the global teacher ratings. Other strengths of the study included the use of objective and externally-conducted repeated observations of both student and teacher outcomes.

The overall prevalence rate of negative student behaviors (e.g., profanity, aggression) was relatively low in this setting, despite the multiple observations conducted in each classroom. Thus, our ability to detect statistically significant improvements over time on these scales was limited. Furthermore, teacher disapproval was never coded in this setting and therefore could not be improved upon or analyzed. Prior research in regular education high schools indicates that, on average, teachers display fewer than 1 act of disapproval per 15 min (see Pas et al. 2015); this low prevalence of teacher use of disapproval is notable given the severity of ASD and other diagnostic symptomatology among this student population. Further examination of teacher practices in settings such as these, using the ASSIST, would advance our understanding of the use of positive behavioral management among special educators serving students with ASD.

Conclusions

Teachers play an important role in improving the outcomes for students with ASD. Yet, few interventions for this population have targeted teachers as the primary change agent (NRC 2001; Rumsey et al. 2000). This study leveraged a novel mixed-reality simulator as a means for providing guided practice of skills to teachers embedded within a staged problem-solving coaching process. This simulator can offer a multitude of skill-building opportunities to teachers, as well as to students, if applied and examined further. With the emerging technological developments in education, this study is both timely and unique.

Taken together, the current study provided preliminary evidence of the potential impacts of a teacher-focused coaching model to support students with ASD. Specifically, our findings suggested that the CCU coaching model combined with mixed-reality simulated practice may be an effective approach for promoting both teacher and student behavioral change. The coaching process was relatively efficient, requiring just about 4 h of active teacher participation, and was generally well-received by teachers. Furthermore, the data suggest that this intervention is acceptable to teachers and therefore a potentially feasible approach (Bowen et al. 2009), particularly if further attention is paid to how the simulator is introduced to teachers and how they are provided access to it. Our experience suggests that maximizing the accessibility of the simulator and including activities such as helping teachers to become familiar with the simulator, ensuring that there is a proper infrastructure for scheduling the sessions within close proximity of one another, and having coaches continue to follow up within the classroom may further increase acceptability, feasibility, and the potential impact of this approach. Additional experimental research is needed to determine the causal influence of this novel model of professional development on teacher and student behavior and to determine the most essential components of this intervention.

References

Baker, P. H. (2005). Managing student behavior: How ready are teachers to meet the challenge? American Secondary Education, 33, 51–64.

Barnhill, G. P., Polloway, E. A., & Sumutka, B. M. (2011). A survey of personnel preparation practices in autism spectrum disorders. Focus on Autism and Other Developmental Disabilities, 26(2), 75–86. doi:10.1177/1088357610378292.

Bellini, S., Henry, D., & Pratt, C. (2011). From intuition to data: Using logic models to measure professional development outcomes for educators working with students on the autism spectrum. Teacher Education and Special Education, 34, 37–51. doi:10.1177/0888406410384153.

Ben Itzchak, E., Lahat, E., Burgin, R., & Zachor, A. D. (2008). Cognitive, behavior, and intervention outcome in young children with autism. Research in Developmental Disabilities, 29, 447–458.

Bitterman, A., Daley, T. C., Misra, S., Carlson, E., & Markowitz, J. (2008). A national sample of preschoolers with autism spectrum disorders: Special education services and parent satisfaction. Journal of Autism and Developmental Disorders, 38, 1509–1517. doi:10.1007/s10803-007-0531-9.

Bowen, D. J., Kreuter, M., Spring, B., Cofta-Woerpel, L., Linnan, L., Weiner, D., … Fernandez, M. (2009). How we design feasibility studies. American Journal of Preventive Medicine, 36, 452–457. doi:10.1016/j.amepre.2009.02.002.

Bradshaw, C. P., Pas, E. T., Domitrovich, C. E., Reinke, W. M., Herman, K., & Poduska, J. M. (2009a). Measure of coach and teacher alliance—teacher report. Unpublished measure. Baltimore, MD: Johns Hopkins University.

Bradshaw, C. P., Pas, E. T., Domitrovich, C. E., Reinke, W. M., Herman, K., & Poduska, J. M. (2009b). Measure of coach and teacher alliance—coach report. Unpublished measure. Baltimore, MD: Johns Hopkins University.

Burke, R. V., Oats, R. G., Ringle, J. L., Fichtner, L. O., & DelGaudio, M. B. (2011). Implementation of a classroom management program with urban elementary schools in low-income neighborhoods: Does program fidelity affect student behavior and academic outcomes? Journal of Education for Students Placed at Risk, 16, 201–218. doi:10.1080/10824669.2011.585944.

Centers for Disease Control (CDC) Autism and Developmental Disabilities Monitoring Network Surveillance Year 2010 Principal Investigators. (2014). Prevalence of autism spectrum disorders among children aged 8 years-Autism and developmental disabilities Monitoring Network, 11 Sites, United States, 2010. MMWR Morbidity and Mortality Weekly Report, 63(2), 1–22.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd Ed.). Hillsdale, NJ: Lawrence Erlbaum Associates.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112, 155–159.

Debnam, K. J., Pas, E. T., Bottiani, J., Cash, A. H., & Bradshaw, C. P. (2015). An examination of the association between self-reported and observed culturally responsive teaching practices. Psychology in the Schools, 52, 533–548. doi:10.1002/pits.21845.

Denton, C. A., & Hasbrouck, J. (2009). A description of instructional coaching and its relationship to consultation. Journal of Educational and Psychological Consultation, 19, 150–175.

Dieker, L. A., Hynes, M. C., Hughes, C. E., & Smith, E. (2008). Implications of mixed reality and simulation technologies on special education and teacher preparation. Focus on Exceptional Children, 40(6), 1–20.

Dieker, L. A., Hynes, M. C., Stapleton, C., & Hughes, C. E. (2007). Virtual classrooms: STAR simulator. New Learning Technology SALT, 4, 1–22.

Dieker, L. A., Rodriguez, J. A., Lignugaris, B., Hynes, M. C., & Hughes, C. E. (2014). The potential of simulated environments in teacher education: Current and future possibilities. Teacher Education and Special Education, 37, 21–33. doi:10.1177/0888406413512683.

Eldevik, S., Hasting, R. P., Hughes, J. C., Jahr, E., Eikeseth, S., & Cross, S. (2009). Meta-analysis of early intensive behavioral intervention for children with autism. Journal of Clinical Child & Adolescent Psychology, 38, 439–450. doi:10.1080/15374410902851739.

Elford, M., Carter, R. A. Jr., & Aronin, S. (2013). Virtual reality check: Teachers use bug-in-ear coaching to practice feedback techniques with student avatars. JSD: The Learning Forward Journal, 34, 40–43.

Garet, M. S., Porter, A. C., Desimone, L., Birman, B. F., & Yoon, K. S. (2001). What makes professional development effective? Results from a national sample of teachers. American Educational Research Journal, 38, 915–945.

Garland, K. M. V., Holden, K., & Garland, D. P. (2016). Individualized clinical coaching in the TLE TeachLivE lab: Enhancing fidelity of implementation of System of Least Prompts among novice teachers of students with autism. Teacher Education and Special Education, 39, 47–59. doi:10.1177/0888406415600769.

Hayes, A. T., Straub, C. L., Dieker, L. A., Hughes, C. E., & Hynes, M. C. (2013). Ludic learning: Exploration of TLE TeachLivE™ and effective teacher training. International Journal of Gaming and Computer-Mediated Simulations, 52(2), 23–26.

Hyman, S. L., & Levy, S. E. (2013). Autism spectrum disorders. In M. L. Batshaw, N. J. Roizen & G. R. Lotrecchiano (Eds.), Children with disabilities (7th Ed., pp. 345–368). Baltimore, MD: Brookes.

Johnson, S. R., Pas, E. T., & Bradshaw, C. P. (2016). Understanding and measuring coach-teacher alliance: A glimpse inside the ‘black box’. Prevention Science, 17, 439–449. doi:10.1007/s11121-016-0633-8.

Kasari, C., Freeman, S. F. N., Bauminger, N., & Alkin, M. C. (1999). Parental perspectives on inclusion: Effects of autism and down syndrome. Journal of Autism and Developmental Disorders, 29, 297–305.

Kolb, D. A. (2014). Experiential learning: Experience as the source of learning and development (2nd Ed.). Upper Saddle River, NJ: Pearson Education.

Kretlow, A. G., & Bartholomew, C. C. (2010). Using coaching to improve the fidelity of evidence-based practices: A review of studies. Teacher Education and Special Education, 33, 279–299.

Kucharczyk, S., Shaw, E., Smith Myles, B., Sullivan, L., Szidon, K., & Tuchman-Ginsberg, L. (2012). Guidance & coaching on evidence-based practices for learners with Autism Spectrum Disorders. Chapel Hill: The University of North Carolina, Frank Porter Graham Child Development Institute, National Professional Development Center on Autism Spectrum Disorders.

Lai, C., Yang, J., Chen, F., Ho, C., & Chan, T. (2007). Affordances of mobile technologies for experiential learning: The interplay of technology and pedagogical practices. Journal of Computer Assisted Learning, 23, 326–337. doi:10.1111/j.1365-2729.2007.00237.x.

Lerman, D. C., Tetreault, A., Hovanetz, A., Strobel, M., & Garro, J. (2008). Further evaluation of a brief, intensive teacher-training model. Journal of Applied Behavior Analysis, 41, 243–248. doi:10.1901/jaba.2008.41-243.

Lerman, D. C., Vorndran, C. M., Addison, L., & Kuhn, S. C. (2004). Preparing teachers in evidence-based practices for young children with autism. School Psychology Review, 33, 510–526.

Levine, T. R., & Hullett, C. R. (2002). Eta squared, partial eta squared, and misreporting of effect size in communication research. Human Communication Research, 28, 612–625. doi:10.1111/j.1468-2958.2002.tb00828.x.

MacSuga, A. S., & Simonsen, B. (2011). Increasing teachers’ use of evidence-based classroom management strategies through consultation: Overview and case studies. Beyond Behavior, 20(2), 4–12.

Maryland Commission on Autism. (2012). Addressing the needs of individuals with Autism Spectrum Disorders in Maryland: Recommendations for a statewide system of care in response to HG § 13-2806(2). Maryland Commission on Autism. Retrieved from http://dhmh.maryland.gov/autism/documents/final_autism_report_10-5-2012.pdf.

Morrier, M. J., Hess, K. L., & Heflin, L. J. (2011). Teacher training for implementation of teaching strategies for students with Autism Spectrum Disorders. Teacher Education and Special Education, 34, 119–132. doi:10.1177/0888406410376660.

Munson, J., Dawson, G., Sterling, L., Beauchaine, T., Zhou, A., Koehler, E., … Abbott, R. (2008). Evidence of latent classes of IQ in young children with Autism Spectrum Disorder. Journal of Autism and Developmental Disabilities, 113, 439–452.

Noell, G. H., Duhon, G. J., Gatti, S. L., & Connell, J. E. (2002). Consultation, follow-up, and implementation of behavior management interventions in general education. School Psychology Review, 31, 217–234.

NRC. (2001). Educating children with autism. Washington, DC: National Academy Press.

O’Connell, M. E., Boat, T., & Warner, K. E. (2009). Preventing mental, emotional, and behavioral disorders among young people: Progress and possibilities. Washington, DC: Committee on the Prevention of Mental Disorders and Substance Abuse Among Children, Youth and Young Adults: Research Advances and Promising Interventions; Institute of Medicine; National Research Council. The National Academies Press.

Pas, E. T., Bradshaw, C. P., & Cash, A. (2014). Coaching classroom-based preventive interventions. In M. Weist, N. Lever, C. P. Bradshaw & J. Owens (Eds.), Handbook of school mental health (2nd Ed., pp. 255–268). New York: Springer.

Pas, E. T., Cash, A. H., O’Brennan, L., Debnam, K., & Bradshaw, C. P. (2015). Profiles of high school student behavior in the classroom: Associations with teacher behavior management strategies and classroom composition. Journal of School Psychology, 53, 137–148. doi:10.1016/j.jsp.2014.12.005.

Pas, E. T., Larson, K., Reinke, W., Herman, K., & Bradshaw, C. P. (in press). Implementation and acceptability of an adapted Classroom Check-Up coaching model to promote culturally responsive classroom management. The Education & Treatment of Children.

Pas, E. T., & Newman, D. L. (2013). Teacher mentoring, coaching, and consultation. In J. A. C. Hattie & E. M. Anderman (Eds.), International handbook of student achievement (pp. 152–154). New York: Routledge Publishing Company.

Pisacreta, J., Tincani, M., Connell, J. E., & Axelrod, S. (2011). Increasing teachers’ use of a 1:1 praise-to-behavior correction ratio to decrease student disruption in general education classrooms. Behavioral Interventions, 26, 243–260. doi:10.1002/bin.341.

Reinke, W. M. (2006). The classroom check-up: Advanced tools for effective coaching. In R. Sprick, J. Knight, W. M. Reinke & T. McKale (Eds.), Coaching for positive classrooms: Supporting teachers with classroom management (pp. 139–167). Eugene, OR: Pacific Northwest Publishing.

Reinke, W. M., Herman, K., Darney, D., Pitchford, J., Becker, K., Domitrovich, C. E., & Ialongo, N. S. (2012). Using the classroom check-up model to support implementation of PATHS to PAX. Advances in School Mental Health Promotion, 5(3), 220–232.

Reinke, W. M., Herman, K., & Sprick, R. (2011). Motivational interviewing for effective classroom management: The classroom check-up. New York, NY: Guilford Press.

Reinke, W. M., Lewis-Palmer, T., & Martin, E. (2007). The effect of visual performance feedback on teacher use of behavior-specific praise. Behavior Modification, 31, 247–263. doi:10.1177/0145445506288967.

Reinke, W. M., Lewis-Palmer, T., & Merrell, K. (2008). The classroom check-up: A classwide teacher consultation model for increasing praise and decreasing disruptive behavior. School Psychology Review, 37, 315–332.

Reinke, W. M., Stormont, M., Herman, K. C., Puri, R., & Goel, N. (2011). Supporting children’s mental health in schools: Teacher perceptions of needs, roles, and barriers. School Psychology Quarterly, 26, 1–13. doi:10.1037/a0022714.

Rumsey, J. M., Vitiello, B., Cooper, J., & Hirtz, D. (2000). Special issue: Treatments for people with autism and other pervasive developmental disorders: Research perspectives—editorial preface. Journal of Autism and Developmental Disorders, 30, 369–371.

Rusby, J. C., Taylor, T., & Milchak, C. (2001). Assessing School Settings: Interactions of Students and Teachers (ASSIST) observation system. Unpublished manual.

Scheeler, M. C., Ruhl, K. L., & McAfee, J. K. (2004). Providing performance feedback to teachers: A review. Teacher Education and Special Education, 27, 396–407. doi:10.1177/088840640402700407.

Scheuermann, B., Webber, J., Boutot, E. A., & Goodwin, M. (2003). Problems with personnel preparation in Autism Spectrum Disorders. Focus on Autism and Other Developmental Disabilities, 18, 197–206. doi:10.1177/10883576030180030801.

Shattuck, P. T. (2006). The contribution of diagnostic substitution to the growing administrative prevalence of autism in US special education. Pediatrics, 117, 1028–1037. doi:10.1542/peds.2005-1516.

Shyman, E. (2012). Teacher education in Autism Spectrum Disorders: A potential blueprint. Education and Training in Autism and Developmental Disabilities, 47, 187–197.

Spann, S. J., Kohler, F. W., & Soenksen, D. (2003). Examining parents’ involvement in and perceptions of special education services: An interview with families in a parent support group. Focus on Autism and Other Developmental Disabilities, 18, 228–237.

Sullivan, T., & Bradshaw, C. P. (2012). Introduction to the special issue of Behavioral Disorders: Serving the needs of youth with disabilities through school-based violence prevention efforts. Behavioral Disorders, 37(3), 129–132.

Sutherland, K. S., Wehby, J. H., & Copeland, S. R. (2000). Effect of varying rates of behavior-specific praise on the on-task behavior of students with EBD. Journal of Emotional and Behavioral Disorders, 8, 2–8.

Sutherland, K. S., Wehby, J. H., & Yoder, P. J. (2002). Examination of the relationship between teacher praise and opportunities for students with EBD to respond to academic requests. Journal of Emotional and Behavioral Disorders, 10, 5–13.

U.S. Department of Education (USDOE), National Center for Education Statistics. (2015). Digest of Education Statistics, 2013 (NCES 2015-011), Chap. 2. Retrieved from https://nces.ed.gov/fastfacts/display.asp?id=64.

Zablotsky, B., Black, L. I., Maenner, M. J., Schieve, L. A., & Blumberg, S. J. (2015). Estimated prevalence of autism and other developmental disabilities following questionnaire changes in the 2014 National Health Interview Survey. National Health Statistics Reports, no 87. Hyattsville, MD: National Center for Health Statistics.

Zablotsky, B., Boswell, K., & Smith, C. (2012). An evaluation of school involvement and satisfaction of parents of children with autism spectrum disorders. American Journal on Intellectual and Developmental Disabilities, 117, 316–330.

Zhu, J., Moshell, J. M., Ontanon, S., Erbiceanu, E., & Hughes, C. E. (2011). Why can’t a virtual character be more like a human: A mixed-initiative approach to believable agents. Lecture Notes in Computer Science, 6774, 289–296.

Acknowledgments

The authors would like to acknowledge the developers of the Classroom Check-Up, Drs. Wendy Reinke and Keith Herman, and of TeachLivE™, Drs. Lisa Dieker, Charles Hughes, and Michael Hynes.

Author Contributions

ETP conceived of the study, participated in its design and coordination, conducted the data analyses, and drafted the manuscript; SRJ delivered the intervention, participated in the presentation of results, and helped to draft the manuscript; KL delivered the intervention and helped to draft and review the manuscript; LB conceived of the study, participated in its design and coordination, assisted with implementation, and provided critical review of the manuscript; RC conceived of the study, participated in the design, and provided critical review of the manuscript; CPB conceived of the study, participated in the design of the study, assisted with interpretation and presentation of results, and revised the manuscript for critical intellectual content. All authors read and approved the final manuscript.

Funding

This study was funded by the Wendy Klag Center for Autism and Developmental Disabilities at the Johns Hopkins Bloomberg School of Public Health, through a faculty award to the first author. Additional support for this work comes from the Institute of Education Sciences (R305A150221; R324A110107) and National Institute of Mental Health (T32 MH019545-23).

Ethics declarations

Conflict of Interest

Each author declares that she has no conflicts of interest.

Ethical Approval

The researchers’ Institutional Review Board reviewed this research and found it to be exempt. Therefore, this article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent

Because this research was exempt, it was considered to not involve human participants.

Additional information

Kristine Larson was affiliated with the Johns Hopkins University, School of Education, Baltimore, Maryland, USA during the conduct of the study. She has been affiliated with the Bloomberg School of Public Health since the writing of this manuscript began.

Cite this article

Pas, E.T., Johnson, S.R., Larson, K. et al. Reducing Behavior Problems Among Students with Autism Spectrum Disorder: Coaching Teachers in a Mixed-Reality Setting. J Autism Dev Disord 46, 3640–3652 (2016). https://doi.org/10.1007/s10803-016-2898-y

DOI: https://doi.org/10.1007/s10803-016-2898-y