Abstract

Understanding text can increase access to educational, vocational, and recreational activities for individuals with autism spectrum disorder (ASD); however, limited research has been conducted investigating instructional practices to remediate or compensate for these comprehension challenges. The current comprehensive literature review expanded previous reviews and evaluated research quality using Reichow (Evidence-based practices and treatments for children with autism, pp 25–39. doi:10.1007/978-1-4419-6975-0_2, 2011) criteria for identifying evidence-based practices. Three questions guided the review: (a) Which approaches to comprehension instruction have been investigated for students with ASD?; (b) Have there been a sufficient number of acceptable studies using a particular strategy to qualify as an evidence-based practice for teaching comprehension across the content areas?; and (c) What can educators learn from the analysis of high quality studies? Of the 23 studies included in the review, only 13 achieved high or adequate ratings. Results of the review suggest that both response-prompting procedures (e.g., model-lead-test, time delay, system of least prompts) and visual supports (e.g., procedural facilitators) can increase comprehension skills in content areas of ELA, math, and science. Authors conclude with a discussion of (a) research-based examples of how to use effective approaches, (b) implications for practitioners, and (c) limitations and future research.

Introduction

Expressive and receptive social communication deficits are key diagnostic criteria for autism spectrum disorder (ASD; DSM-5, 2013). When compared to controls matched for cognitive functioning, a number of studies have found differences in the receptive communication of children with ASD at the word, phrase, and sentence level of communication (Eskes et al. 1990; Prior and Hall 1979; Tager-Flusberg 1981). These difficulties in listening comprehension can have a profound effect on a child’s ability to comprehend what they read. In fact, it has been suggested that a child’s maximum level of reading comprehension is determined by the child’s level of listening comprehension (Biemiller 1999). Nation et al. (2006) established that vocabulary and oral language comprehension scores are highly correlated with reading comprehension scores, offering support for the assertion that deficits in reading comprehension may accompany impairments in comprehending oral language for children with ASD.

Predictors of Comprehension Challenges for Children with ASD

For typically developing children, listening comprehension and early decoding ability are reliable predictors of later reading achievement (Woolley 2011). In contrast, many children with autism spectrum disorder (ASD) are skilled word decoders, but have challenges in reading comprehension (e.g., Nation et al. 2006; Whalon et al. 2009). Reading comprehension scores of students with ASD are consistently lower than those of matched controls but the reasons for these difficulties are not entirely understood (Frith and Snowling 1983; O’Conner and Klein 2004; Snowling and Frith 1986). Evidence suggests oral language, application of background knowledge, ability to make inferences from text, and even social skills can impact a child’s reading comprehension skills. Each of these areas will be discussed in subsequent sections.

The Simple View of Reading (Gough and Tunmer 1986) contends that word recognition and oral language are both important elements of reading comprehension ability, and results from several studies support this association for students with ASD. For example, Snowling and Frith (1986) compared students with ASD, students with intellectual disabilities, and typically developing students matched for “mental” and reading age on their ability to recall factual questions and general knowledge questions. The authors concluded that students with lower verbal ability (regardless of disability category) had a more difficult time applying relevant background knowledge and comprehending text. In a review of the literature on comprehension abilities, Ricketts (2011) found that factors including word recognition, oral language, nonverbal ability, and working memory were associated with reading comprehension difficulties for individuals with ASD. Randomized control trials and longitudinal studies have confirmed these findings, suggesting a causal role of oral language abilities on the comprehension skills of students with ASD (Carroll and Snowling 2004; Clarke et al. 2010; Nation et al. 2010).

Not only does oral language play a role in the acquisition of comprehension skills, but skilled text comprehenders must also be able to decipher the meanings of words, analyze word structure, draw upon background knowledge, and make inferences from the text (Randi et al. 2010). Since students with ASD have deficits in many of these areas, it would follow they would also have difficulty comprehending text. For example, researchers have found that students with high functioning autism have deficits in applying background knowledge, specifically in making global and abstract connections (Wahlberg and Magliano 2004). Children with ASD also have problems resolving anaphoric reference and in monitoring their own reading (O’Conner and Klein 2004). Similarly, some studies have shown that students with Asperger syndrome could comprehend factual information, but had challenges in making inferences from the text (e.g., Griswold et al. 2002; Myles and Simpson 2002). One reason for this may be that students with ASD have difficulty understanding abstract or figurative oral language (e.g., use of metaphor), which can impede reading comprehension skills (The American Psychiatric Association 2013).

Impairments in social and communication abilities may also predict lower reading comprehension scores. For example, Jones et al. (2009) reported a significant association between the severity of social and communication impairment and reading comprehension. Lending support to these findings, Ricketts et al. (2013) found that social behavior and social cognition predict reading comprehension after controlling for the variance explained by word recognition and oral language. The semantics and social nature of some texts may compound these comprehension difficulties, possibly due to social communication deficits characteristic of individuals with ASD. Some children with ASD have more difficulty comprehending highly social texts than less social texts (Brown et al. 2013). From these studies, it would appear challenges in applying background knowledge, knowing how to make inferences, oral language ability, and social skills contribute to the factors affecting reading comprehension in children and youth with ASD.

Research on Effective Practices for Comprehension for Students with ASD

Reading comprehension is considered to be “the most important academic skill learned in school” (Mastropieri and Scruggs 1997, p. 1), as students who are unable to extract meaning from text have not made the transition from learning to read to reading to learn. Studies have clearly documented that reading for meaning is problematic for children with ASD, but unfortunately, limited research is available on how to effectively teach comprehension strategies to this population based on the results from previous literature reviews. For example, of the 11 studies that met criteria for inclusion in a comprehension literature review for students with ASD, only four studies evaluated instructional methods to enhance text comprehension (Chiang and Lin 2007). In a more general review of reading interventions, Whalon et al. (2009) examined 11 studies encompassing one or more of the NRP’s components of reading (i.e., phonemic awareness, phonics, reading fluency, vocabulary, and comprehension strategies). Only five of the 11 studies related specifically to reading interventions targeting vocabulary development and comprehension. Of these, interventions included peer delivered instruction (e.g., cooperative learning groups) and one to one instructional delivery (i.e., prompting system to teach a student to act out single directions, procedural facilitation). While neither of these reviews specifically excluded studies on comprehension across content areas, the purpose, scope, and findings of the reviews did not contribute to the literature beyond the content area of ELA, including reading.

To date, reviews have not evaluated instructional strategies in listening and reading comprehension across content areas (e.g., science, social studies), likely due to the limited number of studies available in the literature at the time of the reviews. Comprehension in the content areas can be especially challenging for all students due to the complex vocabulary, semantics, and differences in text structure. Since prior reviews suggested future research in this area and recent presentations at national conferences have highlighted novel strategies, it seems an updated review is warranted. Fortunately, several studies have emerged since these reviews that may offer guidance on effective practices for promoting text-based comprehension skills for students with ASD in content areas. Since text-based comprehension is a skill needed in all content areas (e.g., math content frequently includes word problems), the current review seeks to include studies that addressed comprehension across content areas. Students with listening or reading comprehension challenges will need effective interventions across content, and it is likely that text-based strategies for listening comprehension will resemble those for reading comprehension. Further, the structures of narrative and expository texts differ, and therefore the strategies used to comprehend them may vary. Even so, the review may reveal patterns across content areas that can be used to guide educators in teaching comprehension skills.

The era of evidence-based practices (EBP) combined with increased attention given to standards-based instruction requires educators of students with ASD to use effective strategies based on empirical evidence. While broad reviews of EBP for children with ASD to promote academic and other skills exist (e.g., Wong et al. 2014), evidence-based methods for fostering text comprehension in core content areas are needed. Reichow (2011) and Reichow et al. (2008) have suggested an evaluative approach in the field of ASD to determine EBP. Reichow and colleagues (2008, 2011) propose criteria similar to the Horner et al. (2005) criteria, but with the specific population of students with ASD and their unique needs in mind (e.g., including bodies of research from medical, psychological, and educational areas). Benefits of using the Reichow (2011) criteria include: (a) the evaluation of both single case and group research within the same review; (b) rubrics with operational definitions; (c) the delineation of primary (essential) and secondary (non-essential) quality indicators; (d) guidelines for the determination of research report strength (e.g., strong, moderate, weak); and (e) criteria for the overall determination of an EBP. The purpose of the current literature review is to update previous reviews by evaluating the body of evidence on text-based, content area comprehension strategies for students with ASD using this established framework. Three questions will guide the review: (a) Which approaches to comprehension instruction have been investigated for children and youth with ASD across content areas?; (b) Have there been a sufficient number of quality studies that use a particular strategy to qualify as an evidence-based practice for teaching comprehension across the content areas?; and (c) What can educators and researchers learn from the analysis of high quality studies?

Method

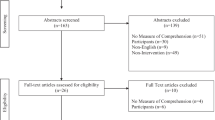

Search Procedures

The authors of this review examined the existing literature on teaching text-based comprehension skills across content areas to students with ASD. In order to determine effective practices for teaching comprehension in content areas to students with ASD, the authors conducted a comprehensive literature review. Using combinations of the following terms: “autis*”, “Asperger’s”, “ASD”, “content”, “core content”, “common core”, “comprehension”, “strategy”, “intervention”, “procedure”, “reading”, “ELA”, “Story-based lessons”, “math”, “word problems”, “science”, “social studies”, the authors searched for all available literature. The following databases were used: ERIC, PsycINFO, Master File Premier, and Academic File Premier. The search terms were entered into all databases simultaneously. The authors did not restrict the electronic search by year.

In addition to the electronic search, the authors conducted hand searches of the following journals: Journal of Autism and Developmental Disorders, Journal of Applied Behavior Analysis, Focus on Autism and Other Developmental Disabilities, and Research in Autism Spectrum Disorders. For the hand search, authors searched editions published from 2009 to the present in order to find articles published after the literature review conducted by Whalon et al. (2009). The authors also completed an ancestral search of all articles uncovered by the hand and electronic searches. Searching the reference lists, the authors looked for articles related to ASD and text comprehension.

Inclusion Criteria

The authors established the following criteria for study inclusion: (a) used a single case (SCD) or group research design, (b) included one or more participants with ASD, (c) appeared in a peer-reviewed journal, (d) included comprehension results (e.g., graphs were required for SCD), (e) used an intervention to increase text-based comprehension skills, and (f) examined comprehension skills in any academic content area in the context of an instructional lesson in a school setting. Text-based comprehension for the purposes of this review included listening comprehension and comprehension of vocabulary and language concepts, as long as the participants were required to answer comprehension questions, provide definitions, or apply content to novel situations derived from text (vs. pictures or objects); in contrast, studies that focused only on vocabulary and definitions were excluded. Studies that examined rote skills such as sight word reading and coin counting were not included in this study, unless comprehension was also measured. Researchers excluded SCD studies that did not include graphs for comprehension data (e.g., Allor et al. 2010). Group design studies were excluded if they had sample sizes of twenty or fewer participants (e.g., Mashal and Kasirer 2011; O’Conner and Klein 2004). Two group design studies were excluded because they included both students with and without ASD; disaggregated data had to be available if the study included students other than those with ASD (Browder et al. 2010; Goodwin et al. 2012). Comparison studies of children and youth with ASD to matched controls were also excluded unless an intervention was used. Studies examining comprehension in the context of functional skills only were not included (e.g., Dogoe et al. 2011). Of the studies examined during the search process, 23 single case design studies met the inclusion criteria and were rated in this review. No group design studies met the inclusion criteria.

Quality Analysis Using Reichow (2011) Criteria

Using a scoring sheet developed by one of the researchers, the authors rated the studies according to the criteria set forth by Reichow (2011). In this rating system, there are six primary quality indicators, including: (a) participant characteristics; (b) independent variable; (c) baseline condition; (d) dependent variable; (e) visual analysis; and (f) experimental control. For each of these categories, the rater assigns research report strength as being high (H) quality, acceptable (A) quality, or unacceptable (U; Reichow 2011), based on the operational definitions available. In this rating system, the rater also notes the presence or absence of secondary quality indicators, including: (a) interobserver agreement; (b) kappa; (c) blind raters; (d) fidelity; (e) generalization or maintenance; and (f) social validity (Table 1).

In order for a study to receive an overall strong rating, it must score high on all primary indicators and demonstrate the presence of at least three secondary indicators. For a study to receive an overall adequate rating, it must have at least four high scores on the primary indicators with no unacceptable ratings, and demonstrate the presence of at least two secondary indicators. Studies with unacceptable scores on any primary indicators, fewer than four high ratings, or fewer than two secondary indicators exhibited are given an overall rating of weak.
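The decision rules in the preceding paragraph can be illustrated with a minimal sketch. The function name `rate_study` and the input format (a list of six primary ratings coded as "H", "A", or "U", plus a count of secondary indicators present) are assumptions made for illustration; they are not part of the Reichow (2011) scoring sheet.

```python
from typing import Sequence

VALID_RATINGS = {"H", "A", "U"}  # high, acceptable, unacceptable


def rate_study(primary: Sequence[str], secondary_present: int) -> str:
    """Return 'strong', 'adequate', or 'weak' for one study.

    primary: ratings for the six primary quality indicators.
    secondary_present: number of secondary indicators evidenced (0-6).
    Hypothetical helper; the thresholds follow the rules stated in the text.
    """
    if len(primary) != 6 or any(r not in VALID_RATINGS for r in primary):
        raise ValueError("expected six primary ratings coded H, A, or U")
    if not 0 <= secondary_present <= 6:
        raise ValueError("secondary_present must be between 0 and 6")

    highs = sum(r == "H" for r in primary)
    has_unacceptable = "U" in primary

    # Strong: high on all primary indicators and at least three secondary.
    if highs == 6 and secondary_present >= 3:
        return "strong"
    # Adequate: at least four highs, no unacceptable, at least two secondary.
    if highs >= 4 and not has_unacceptable and secondary_present >= 2:
        return "adequate"
    # Everything else is weak.
    return "weak"
```

Under this reading of the rules, a study with six high primary ratings but only two secondary indicators would fall into the adequate category rather than the strong one.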

Descriptive Characteristics of Strong and Adequate Studies

Researchers recorded the descriptive characteristics of strong and adequate studies (see Table 2). The following study characteristics are included: (a) reference; (b) participants; (c) setting; (d) targeted skills; (e) dependent variable/measures; (f) independent variable/intervention; (g) research designs; and (h) results/outcomes. Authors then examined the strong and adequate studies to determine effective interventions for teaching comprehension skills to students with ASD.

Determination of Evidence-Based Practices

Reichow et al. (2008) and Reichow (2011) provide an EBP status formula that can be used to identify evidence-based practices, in which both single-case and group designs can be combined to evaluate the overall quantity and methodological quality of a practice with respect to its potential as an EBP. The formula is: $(\text{Group}_S \times 30) + (\text{Group}_A \times 15) + (\text{SSED}_S \times 4) + (\text{SSED}_A \times 2) = Z$, where, “$\text{Group}_S$ is the number group research design studies earning a strong rating, $\text{Group}_A$ is the number group research design studies earning an adequate rating, $\text{SSED}_S$ is the number of participants for whom the intervention was successful from SCD studies earning a strong rating, $\text{SSED}_A$ is the number of participants for whom the intervention was successful from SSED studies earning an adequate rating, and Z is the total number of points for an intervention” (Reichow 2011, p. 32). Based on this formula, reviewers can calculate practices as being established EBP (Z = 60+), probable EBP (Z = 31–59), or not an EBP [Z = 0–30; see Reichow (2011) for a thorough description of this process].
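As a rough illustration of the arithmetic, the point formula and cutoffs described above can be sketched as follows. The function and parameter names are hypothetical; only the weights (30, 15, 4, 2) and the cutoffs (60+, 31–59, 0–30) come from the text.

```python
def ebp_points(group_strong: int, group_adequate: int,
               ssed_strong_participants: int,
               ssed_adequate_participants: int) -> int:
    """Total points Z for one practice.

    Group terms count studies; SSED terms count participants for whom
    the intervention was successful in strong or adequate single case studies.
    """
    counts = (group_strong, group_adequate,
              ssed_strong_participants, ssed_adequate_participants)
    if any(c < 0 for c in counts):
        raise ValueError("counts must be non-negative")
    return (30 * group_strong + 15 * group_adequate
            + 4 * ssed_strong_participants + 2 * ssed_adequate_participants)


def ebp_status(z: int) -> str:
    """Map a point total Z onto the three EBP categories."""
    if z >= 60:
        return "established EBP"
    if z >= 31:
        return "probable EBP"
    return "not an EBP"
```

For example, with no group studies, a practice supported by 10 successful participants from strong single case studies and 10 from adequate ones (made-up counts, not data from this review) would total 4 × 10 + 2 × 10 = 60 points and just reach established status.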

Interrater Reliability for Quality Analysis

Interrater reliability was conducted after the second author coded each study according to Reichow (2011) indicators. The first author and two undergraduate students conducted reliability on six randomly assigned articles coded for quality criteria (38.3 % of the articles) and three of the articles coded for descriptive information (30 % of the articles). Using a point-by-point method, the authors divided the number of agreements by the total number of indicators, and then multiplied by 100. The researchers obtained an acceptable reliability score of 84.7 % for quality criteria and 84.6 % for descriptive information (most disagreements concerned participants, baseline, and visual analysis). Disagreements were discussed, but data reported in the manuscript were retained from the second author’s original analysis.
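The point-by-point calculation described above (agreements divided by the total number of indicators, multiplied by 100) can be sketched in a few lines. The function name and the example ratings are illustrative assumptions, not the authors' coding data.

```python
from typing import Sequence


def point_by_point_agreement(rater_a: Sequence[str],
                             rater_b: Sequence[str]) -> float:
    """Percentage of indicators on which two raters gave the same code."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must code the same indicators")
    if not rater_a:
        raise ValueError("at least one indicator is required")
    # Count positions where the two codes match exactly.
    agreements = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * agreements / len(rater_a)


# Hypothetical codes for four indicators; the raters disagree on the third.
example = point_by_point_agreement(["H", "A", "U", "H"], ["H", "A", "H", "H"])
```

Here three of the four codes match, so `example` evaluates to 75.0.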

Results

Study Quality

After conducting searches for studies that met inclusion criteria, the researchers found 23 single case studies to include in the review, and no group studies. Of these studies, five achieved strong ratings (Bethune and Wood 2013; Riesen et al. 2003; Rockwell et al. 2011; Smith et al. 2013; Stringfield et al. 2011), because they met all primary indicators with high ratings. Eight studies achieved adequate ratings (Browder et al. 2007; Burton et al. 2013; Carnahan and Williamson 2013; Flores and Ganz 2007, 2009; Knight et al. 2013; Mims et al. 2012; Whalon and Hanline 2008). These studies had no unacceptable primary indicator ratings. Ten studies scored overall weak ratings (Armstrong and Hughes 2012; Browder et al. 2012; Dugan et al. 1995; Hua et al. 2012; Kamps et al. 1994, 1995; Muchetti 2013; Schenning et al. 2013; Secan et al. 1989; Zakas et al. 2013). The 13 adequate and strong studies were subsequently reviewed for descriptive characteristics (i.e., participants, settings, skills, dependent variables and measures, independent variables, research designs, and results).

Participants

The researchers examined characteristics of the participants of the 13 studies that achieved strong or adequate ratings. Thirty-four students with ASD participated, including 29 males and five females. Ages of the participants ranged from 7 to 15 years of age (mean age = 11.5). All but one of the studies provided information about the cognitive functioning of the participants (Carnahan and Williamson 2013); five studies included children and youth with IQs higher than 85 (average/above average), five studies included students in the 70-85 IQ range (one standard deviation below the mean), five included children and youth with IQs in the 55 to 70 IQ range (two standard deviations below the mean), and three included students with IQs less than 55 (three standard deviations below the mean).

Settings

Most (n = 10) of the 13 studies occurred in a special education school or class setting (Bethune and Wood 2013; Browder et al. 2007; Burton et al. 2013; Flores and Ganz 2007, 2009; Knight et al. 2013; Stringfield et al. 2011), or in private school settings (Carnahan and Williamson 2013; Flores and Ganz 2007, 2009). Two studies included same age peers in the interventions. One of these occurred in the general education classroom (Riesen et al. 2003) and one in an intervention room within the school (Whalon and Hanline 2008). Mims et al. (2012) also conducted their study in an intervention room within a school, although they did not include same-age peers in their study. A tutoring setting (the researcher’s home) was used in one study (Rockwell et al. 2011). One study had pre-training in a special education classroom with intervention and generalization in an inclusive classroom (Smith et al. 2013).

A variety of people implemented the interventions across the 13 studies; however, researchers implemented instructional interventions in the majority (n = 8) of the studies (Bethune and Wood 2013; Carnahan and Williamson 2013; Flores and Ganz 2007, 2009; Knight et al. 2013; Mims et al. 2012; Rockwell et al. 2011; Smith et al. 2013). Typical intervention agents implemented or helped to implement the intervention in five of the studies, including: teachers (Browder et al. 2007; Burton et al. 2013; Stringfield et al. 2011), researchers combined with teachers (Whalon and Hanline 2008), and paraeducators (Riesen et al. 2003).

Targeted Skills and Content Areas

Although the review sought to include any content area (e.g., Math, History, Economics, Geography) only skills in the areas of ELA, Science, and Math were represented across the 13 studies, with reading comprehension most frequently studied. Many studies included ELA skills beyond ‘typical’ reading studies (e.g., story-based lessons with adapted books, access to biographies, examination of language skills, pre-literacy skills). Story comprehension instruction occurred in five of the studies (Bethune and Wood 2013; Browder et al. 2007; Mims et al. 2012; Stringfield et al. 2011; Whalon and Hanline 2008). Browder et al. (2007) also addressed literacy pre-skills such as turning pages.

Two studies examined reasoning and language skills as a precursor to reading comprehension (Flores and Ganz 2007, 2009). For example, Flores and Ganz (2009) addressed deductive and inductive reasoning skills as well as picture analogies. The other study by Flores and Ganz (2007) taught making inferences, using facts, and understanding analogies.

Four studies examined comprehension within the context of science instruction (Carnahan and Williamson 2013; Knight et al. 2013; Riesen et al. 2003; Smith et al. 2013). Carnahan and Williamson (2013) evaluated comprehension of science questions, Venn diagram completion, and number of propositions; Knight et al. (2013) examined comprehension of science concepts (e.g., convection; precipitation); Riesen et al. (2003) addressed science vocabulary definitions; and Smith et al. (2013) focused on science terms and applications.

Two studies addressed comprehension in the context of math instruction. Rockwell et al. (2011) taught a student with ASD to choose the correct sign and solve different types of word problems and Burton et al. (2013) used video self-modeling to solve story problems.

Dependent Variables/Measures

The majority of studies (n = 9) used researcher developed probes to determine the effectiveness of the interventions on the targeted skills for each participant (Carnahan and Williamson 2013; Flores and Ganz 2007, 2009; Knight et al. 2013; Mims et al. 2012; Riesen et al. 2003; Rockwell et al. 2011; Smith et al. 2013; Stringfield et al. 2011). Three studies created task analyses. Researchers targeting language and reasoning composed probe questions related to predetermined concepts (Flores and Ganz 2007, 2009; Riesen et al. 2003; Rockwell et al. 2011).

Six studies measured student ability to answer comprehension questions (Bethune and Wood 2013; Carnahan and Williamson 2013; Knight et al. 2013; Mims et al. 2012; Stringfield et al. 2011; Whalon and Hanline 2008). Stringfield et al. (2011) also required participants to fill out a story map correctly and Knight et al. (2013) asked students to complete a graphic organizer. In addition to examining question responses, Whalon and Hanline (2008) measured the frequency of questions asked by students.

Independent Variables/Interventions

Eleven of the 13 studies used response-prompting strategies. Five studies used a model-lead-test technique in which the teacher fades his or her level of support during a teaching demonstration (Flores and Ganz 2007, 2009; Knight et al. 2013; Rockwell et al. 2011; Smith et al. 2013), and one of these used a model-test explicit instruction format within a computer-based program (Smith et al. 2013). Four studies used a system of least prompts (Bethune and Wood 2013; Browder et al. 2007; Mims et al. 2012; Stringfield et al. 2011). Task analyses were used in four studies (Browder et al. 2007; Burton et al. 2013; Knight et al. 2013; Smith et al. 2013). Time delay was used in three studies (Browder et al. 2007; Knight et al. 2013; Riesen et al. 2003). Two studies used response-prompting systems as part of published Direct Instruction programs. Two studies used modeling of examples and non-examples (Knight et al. 2013; Stringfield et al. 2011), and one study used simultaneous prompting (Riesen et al. 2003). In addition to the use of response prompting strategies, eight studies employed the use of visual supports (Browder et al. 2007; Burton et al. 2013; Knight et al. 2013; Mims et al. 2012; Rockwell et al. 2011; Smith et al. 2013; Stringfield et al. 2011; Whalon and Hanline 2008).

Research Designs

All 13 of the studies examined used single case research designs. Seven studies used multiple probe designs, either across participants (Browder et al. 2007; Knight et al. 2013; Mims et al. 2012; Smith et al. 2013) or across behaviors (Flores and Ganz 2007, 2009; Rockwell et al. 2011). Multiple baseline across students was used in four studies (Bethune and Wood 2013; Burton et al. 2013; Stringfield et al. 2011; Whalon and Hanline 2008). One study used a reversal (Carnahan and Williamson 2013) and one used adapted alternating treatments (Riesen et al. 2003) to evaluate student outcomes.

Study Results

All 13 studies in this review demonstrated positive student outcomes. All studies measuring reading comprehension reported improvement on comprehension questions (Bethune and Wood 2013; Mims et al. 2012; Stringfield et al. 2011; Whalon and Hanline 2008). Browder et al. (2007) found that the participants increased the number of literacy behaviors in the task analysis of steps they completed independently, literacy preskills, and comprehension questions.

The two studies that measured comprehension pre-skills found that students met all criteria on all skills in both studies. In addition, the participants maintained growth on the new concepts (Flores and Ganz 2007, 2009).

In the four studies that measured science skills, participants were able to define science vocabulary (Knight et al. 2013; Riesen et al. 2003), identify science terms (Smith et al. 2013) and untrained exemplars of these terms (Knight et al. 2013; Smith et al. 2013), and increase the number of science comprehension questions answered correctly (Carnahan and Williamson 2013). Riesen et al. (2003) demonstrated that, for the participant with ASD, simultaneous prompting was more efficient than time delay.

In the Burton et al. (2013) and Rockwell et al. (2011) studies in math, participants also demonstrated improvement. The participant in the Rockwell et al. (2011) study increased story problem comprehension on three separate problem types, maintained growth, and generalized to new problem types. Participants in the Burton et al. (2013) study showed immediate increase in number of steps completed after implementation of intervention.

Reliability

All of the studies examined demonstrated acceptable criteria for interobserver agreement (IOA). Twelve of the 13 studies demonstrated IOA of at least 90 % (Bethune and Wood 2013; Browder et al. 2007; Burton et al. 2013; Carnahan and Williamson 2013; Flores and Ganz 2007, 2009; Knight et al. 2013; Mims et al. 2012; Riesen et al. 2003; Rockwell et al. 2011; Smith et al. 2013; Stringfield et al. 2011). The other study also demonstrated acceptable reliability at 85–90 % (Whalon and Hanline 2008). Further, all 13 of the studies in this review included measures of procedural reliability, and demonstrated acceptable procedural reliability, with studies scoring 92 % or above.

Social Validity

Eight of the 13 studies in this review included social validity measures (Bethune and Wood 2013; Browder et al. 2007; Burton et al. 2013; Carnahan and Williamson 2013; Mims et al. 2012; Smith et al. 2013; Stringfield et al. 2011; Whalon and Hanline 2008). Of these, three used Likert scales (Bethune and Wood 2013; Browder et al. 2007; Mims et al. 2012), two used interviews (Stringfield et al. 2011; Whalon and Hanline 2008), and three used a questionnaire (Burton et al. 2013; Carnahan and Williamson 2013; Smith et al. 2013). The studies that examined social validity also demonstrated positive results. Browder et al. (2007), for example, indicated that teachers found the training used in the intervention practical and simple. Mims et al. (2012) found that teachers were satisfied with the intervention. Stringfield et al. (2011) indicated that two of the students were more confident about their reading skills, although only one indicated that he would continue to use the story map taught in the intervention.

Determination of Evidence-Based Practice

Reichow (2011) has developed a method for determining the EBP status of a practice, in which individual studies are rated as strong, adequate, or weak. Taken together, the strong and adequate studies are then evaluated to determine the overall strength of the practice. Reichow (2011) has suggested a formula, in which the number of participants for whom the intervention was successful from both single case research design studies and group research design studies is taken into account. Based on this formula, practices can be considered established EBP (Z = 60+), probable EBP (Z = 31–59), or not an EBP (Z = 0–30). Using this formula, response prompting strategies and visual supports can be considered established interventions to teach comprehension skills across content areas (ELA, Math story problems, and Science) for children and youth with ASD. In the current review, response prompting strategies obtained a Z score of 76 and visual supports received a Z score of 80 (well above the 60+ cutoff to be considered EBP).

Study Limitations

Five of the 13 studies did not include a social validity measure (Flores and Ganz 2007, 2009; Knight et al. 2013; Riesen et al. 2003; Rockwell et al. 2011). Social validity measures are a valuable aspect of a study, since they provide an important demonstration of the intervention’s practical relevance, indicating that the changed behaviors have a positive impact on the participants’ success outside of the confines of the study (Kazdin 1977). Two studies did not include generalization or maintenance data (Browder et al. 2007; Whalon and Hanline 2008). Generalization and maintenance data provide valuable information about the effectiveness of the intervention in novel contexts across time, demonstrating the intervention’s effectiveness in untaught situations after intervention is withdrawn (Gast 2010). None of the studies included in this review used blind raters, which is one of Reichow’s (2011) secondary indicators. Only one of the 13 studies included a Kappa measure (Whalon and Hanline 2008), which is also one of Reichow’s (2011) criteria. The use of blind raters and Kappa measures reduces the risk of bias when reporting results.

Discussion

The purpose of this comprehensive literature review was to (a) examine both the quality and quantity of studies to evaluate the various approaches that have been used to teach text-based comprehension skills across content areas to students with ASD, (b) determine whether a sufficient number of quality studies exists to warrant an established EBP status for a particular strategy, and (c) provide implications for practitioners by analyzing the quality studies. Results of this review suggest response prompting strategies and visual supports can be considered established interventions to teach comprehension skills across content areas (ELA, Math story problems, and Science) for children and youth with ASD.

These findings support previous conclusions that response prompting strategies are effective in teaching academic skills to students with moderate and severe disabilities, including students with ASD. For example, Browder et al. (2009) found time delay to be an EBP for teaching picture and sight word recognition to students with severe disabilities (including ASD). Similarly, Spooner et al. (2012) evaluated methods for teaching academic skills to students with severe disabilities (including ASD), and suggested teachers use time delay and task analytic instruction across content areas to teach various skills (e.g., discrete math facts, matching science terms to definitions). However, these previous reviews did not evaluate text-based comprehension or students with ASD, specifically. Previous reviews on reading comprehension strategies for children and youth with ASD have yielded limited conclusions, due to the low number of studies in this area (e.g., Chiang and Lin 2007; Randi et al. 2010; Whalon et al. 2009). In contrast to the previous reviews, the current review suggests that the model-lead-test (MLT) strategy may be gaining momentum in the field of ASD for teaching comprehension across content areas. The MLT strategy was the most frequently cited response prompting strategy (N = 5) of the 13 strong and adequate studies, and was used across content areas, including one study that used a model-test and computer assisted embedded instruction in science (Smith et al. 2013). The system of least prompts was used in four studies across content areas. Time delay, modeling of examples and non-examples, direct instruction, and simultaneous prompting were also used across studies.

In addition to response prompting strategies, visual supports (i.e., graphic organizers, visual diagrams, video self-modeling, story cards, use of picture symbols to aid comprehension, visuals of key phrases, picture analogies) were also found to be an established EBP for teaching text-based comprehension skills in the current review. Visual supports have been suggested by the National Professional Development Center on ASD (NPDC 2010) as an evidence-based practice for teaching various adaptive skills (e.g., task engagement, transitions, play skills, interaction skills, and reducing self-injurious behaviors) to students with ASD across grade levels. In the NPDC’s analysis, visual supports included “pictures, written words, objects within the environment, arrangement of the environment or visual boundaries, schedules, maps, labels, organization systems, timelines, and scripts” (NPDC 2010, p. 1); however, activity schedules and scripts were the most frequently cited type of visual support used. Visual activity schedules, specifically, are considered an evidence-based practice for (a) increasing on-task, on-schedule, and appropriate and independent transitions; (b) improving latency to task from task direction, percentage of correctly completed responses, task, or task analysis steps; and (c) decreasing level of prompts necessary for transitions (Knight et al. 2014). Given these findings, it may not be surprising that visual supports could also be used across content areas to increase text-based comprehension skills; however, in the current analysis, none of the studies reviewed used visual activity schedules to increase comprehension skills. While the types of visual support varied from study to study, graphic organizers and visual diagrams were used most often.
Although reviewers found response prompting strategies and visual supports overall to be established EBP for teaching comprehension skills to students with ASD, there were not enough quality studies to establish specific forms of prompting procedures (e.g., time delay) or visual supports (e.g., graphic organizers) as either promising or established.

Implications for Practice

Interestingly, the MLT strategy was the most frequently used response prompting strategy across studies. For teachers new to this strategy, we will provide a brief description followed by illustrative examples from the literature reviewed. The MLT strategy is a systematic, explicit method based on direct instruction that provides frequent opportunities for students to correctly practice academic skills, reducing student error throughout the process (Carnine et al. 1997). This strategy involves the teacher modeling the problem or skill for the student, leading them through the problem, and then testing them on what they have learned. During the model phase, the teacher demonstrates the skill by modeling verbally or using a demonstration. An example of the model phase would be, “My turn. A snake is an example of a reptile.” Modeling can also be used to illustrate higher order, metacognitive processes involved in comprehension (e.g., asking questions to clarify the text). During the lead phase, the instructor completes the skill with the students or asks them to respond as a group. For example, the teacher would say, “With me. A snake is an example of a …”, followed by the teacher and students saying “reptile” in unison. The process is repeated with examples and non-examples of the skill or concept until the students can perform the skill automatically (e.g., “A bear is not an example of a reptile.”). The test phase is used to assess whether the students understand the skill or concept independently. In the test phase, the teacher would say, “What is an example of a reptile?” If students are correct, they should be reinforced and if they are incorrect, error correction and additional examples may be provided (Sayeski et al. 2003).

In their review of comprehension strategies, Randi et al. (2010) suggested DI (of which MLT is an aspect) as a promising method for increasing reading comprehension in this population, but did not mention MLT specifically. In both of the Flores and Ganz (2007, 2009) studies, MLT was used as part of the Direct Instruction package in ELA to increase inferences, use of facts, and analogies; and to increase deductive and inductive reasoning and comprehension of picture analogies, respectively. Rockwell et al. (2011) used direct instruction, including MLT, to teach a student with ASD to use a schematic diagram and to recognize the salient features of the mathematical problem types (i.e., group problems, change problems, and compare problems; e.g., change problems consist of a beginning amount, change amount indicated by an action, and an ending amount). MLT can also be separated from a prescribed DI package to teach a range of skills. For example, in the Knight et al. (2013) study, MLT was used to teach examples and non-examples of science concepts related to convection. Researchers used MLT with examples and non-examples to teach the science concepts (e.g., “My turn. This is not evaporation. It shows water, which is a liquid, but it does not turn to gas.”), as well as where to place vocabulary words on the graphic organizers. Students were tested using novel exemplars of the science concepts and graphic organizers. Similarly, Smith et al. (2013) used an iPad to deliver a computer-assisted model-test format to teach students how to identify and apply science vocabulary in a general education setting.

One benefit of using MLT combined with examples and non-examples is that it may help with concept formation, by providing children with ASD with the most salient attributes that distinguish one concept from another. Randi et al. (2010) suggest “concept formation may guide children with ASD to more abstract forms of reasoning and category formation based on prototypes” (p. 898). Results from the studies described above lend credence to this idea, since students in the studies were required to recognize the relevant features of the stimulus, use more abstract forms of reasoning, and categorize.

Visual supports also highlight the most significant aspects of the stimulus for students with ASD. Specifically, graphic organizers and visual diagrams can be used to organize the important information for the students or to call students’ attention to the most relevant details of the lesson. For example, in the Stringfield et al. (2011) study, upper elementary aged students learned to use a story map of story elements as a strategy for answering comprehension questions. Once completed, the story map contained the following story grammar elements from the story: characters, time, and place; and the beginning, middle, and end of the story. Mims et al. (2012) used two graphic organizers; the first was used to organize sequence of events in the story (What happened first? Next? Last?), and the second displayed rules for answering “wh” questions (e.g., “When you hear ‘what’, listen for a thing. When you hear ‘why’, listen for because.”). These were used to assist middle school students in answering “wh” comprehension questions about biographies. In the Bethune and Wood (2013) study, students aged 8–10 also used graphic organizers as a strategy to answer “wh” questions; however, in this study, students sorted words into corresponding columns (i.e., each column was labeled according to the type of wh-question as follows: Who? [person], Where? [place], What? [thing], and What doing? [event]). Completed graphic organizers were then used to answer “wh” questions after students read a brief passage. Results of these studies lend support to recent findings in neuropsychology indicating individuals with ASD may have better visual processing ability than verbal ability compared to matched controls. For example, based on their findings from an fMRI study showing individuals with HF ASD showed more activation in the parietal and occipital regions of the brain, authors suggested individuals with HF ASD relied more on visual imagery as an adaptation to understand sentences compared to a control group. Roth et al. (2012) compared the auditory brainstem responses (ABR) of young children suspected of having ASD to children with and without a language delay, finding those suspected of having ASD had more abnormalities than either of the other two groups. Authors support the notion of an auditory deficiency, noting “an auditory processing deficit may be at the core of these two disorders” (p. 23). The permanent nature of written text may assist students with ASD in reading comprehension due to their enhanced visual ability, since learners can review important details and reread passages (Randi et al. 2010); similarly, visual supports may assist students with ASD in text-based comprehension across content areas by enabling them to return to and organize information in order to make meaningful connections.

In addition to using visual supports, practitioners should consider teaching students with ASD skills to scaffold their own cognitive processes (i.e., strategy instruction) as well as assisting students in practicing comprehension skills (e.g., using content enhancements). Content enhancements are instructional devices (e.g., graphic organizers, computer assisted instruction) used to facilitate the selection, organization, and presentation of difficult to understand material and make the text more meaningful and accessible. In contrast, strategy instruction teaches students how to learn methods of actively processing and learning from the text (Gajria et al. 2007; Gersten et al. 2001). Consistent with previous reviews (e.g., Chiang and Lin 2007; Randi et al. 2010; Whalon et al. 2009), the current review suggests researchers may still be more interested in teaching students to use content enhancements or to practice comprehension skills, as the number of studies addressing these skills far outweighed the number of studies teaching strategy skills. Further, the current review indicates most studies using strategy instruction included students with HF ASD or students with average or above-average intelligence. While teachers should consider individual student needs and characteristics, results suggest they may be limiting instruction to practicing comprehension skills or using content enhancements based on preconceived notions of what students can do (e.g., IQ scores). Instead, teachers could reflect on the purpose of instruction. For example, if the purpose of instruction is to assist students in actively processing the content, then content enhancements would be effective; however, if the instructional goal is “how to learn” when generating main ideas, summarizing information, predicting, questioning, or clarifying text, a cognitive strategy approach may be more beneficial.

Finally, practitioners should consider the type of text and content area of instruction when selecting interventions. Readers are often more challenged by comprehension of expository material than narrative texts. Many students experience difficulties with expository text due to the large volume of unfamiliar and technical vocabulary, as well as differences from narrative texts in terms of text structure and level of difficulty (Gajria et al. 2007). Gersten et al. (2001) summarize the reasons why expository text can be challenging: (a) expository text involves reading long passages without prompts from a conversational partner (e.g., dialogue), (b) expository text structure is often more abstract than narrative structure, and (c) expository texts use more complicated and varied structures than do narratives. In addition to the style of expository text, vocabulary in content areas is often more difficult to decode and pronounce, may be absent from the students’ listening or speaking vocabulary, and terms are often presented in rapid succession. Content is usually new and unfamiliar to the student, going beyond their everyday experiences. Science content, for example, includes many unfamiliar concepts and in higher density than found in narrative materials. Special features of expository text can present challenges as well; science texts often contain graphics and illustrations that contribute directly to the information presented in the text. Students with disabilities need careful introduction of the graphics to determine the interrelationships between the concepts presented in the illustration.

Some experts suggest narrative text may actually be more challenging for students with ASD due to the need to understand the author’s intent, and characters’ feelings, perceptions, and motives (e.g., Happe 1994; Randi et al. 2010). At the heart of these difficulties lie some of the defining characteristics of ASD, including social and communication challenges, which may not be as easy for teachers to address as difficult vocabulary, unfamiliar concepts or graphics, or failure to differentiate text structures. Although researchers have suggested that different strategies should be used for comprehending varying text structures or content areas for children with learning disabilities (e.g., Gajria et al. 2007; Gersten et al. 2001), findings from the current analysis suggest students with ASD should be taught to use both content enhancements and strategy instruction through the use of response prompting methods and explicit teaching of visual supports across content or text structures.

Limitations of the Review

Although 13 of the 23 studies reviewed were considered strong or adequate, limitations to the review process exist. Of the studies reviewed, no group research design studies were found that met criteria for review. In addition, while the criteria set forth by Reichow et al. (2008) and Reichow (2011) are the only ones recommended for studies involving individuals with ASD that can be used with both single-case and group design research studies, they are relatively new. Several studies were excluded from subsequent analysis because they were considered weak, even though none of the primary indicators were considered unacceptable (i.e., three adequate and three high primary indicators is considered a weak study). Further, when conducting applied research in educational settings, it can be difficult to obtain accurate diagnostic information regarding a student’s ASD label (apart from the educational label used to provide services based on the IEP). In most of the studies reviewed, authors provided a thorough description of the students (including characteristics associated with ASD), but did not provide other identifying information related to the diagnosis of ASD. In fact, most of the studies did not describe the severity of ASD, but did provide information about co-morbid disabilities. Only eight of the 13 strong and adequate studies included a social validity measure.

As a field, experts continue to struggle with both the definition of and the evaluative criteria needed to determine an EBP, and while methodological rigor should not be sacrificed, realistic considerations for applied settings may need to be taken into account. Another limitation of the current review was the settings and intervention agents, as most of the studies were conducted in 1:1 or self-contained settings by researchers, and only two included typically developing peers. Further, although studies in the content area of social studies were included in the review, none were found to be adequate. Additionally, the ages of students in the studies ranged from 7 to 15, indicating young adults may not be getting instruction in comprehension of content areas. Finally, since none of the effective practices found in the review were used in isolation, future research should conduct component analyses to determine the most beneficial aspects of the treatment.

Future Research

Comprehension challenges for this population have been well documented in the literature since Kanner’s observations (1943), yet the overall number of studies in the current review that met inclusion criteria is low, and the number of adequate and strong studies is lower still. Although the number of strong and adequate studies is low overall, and we concur with Randi et al. (2010) that it is unwise to prescribe particular interventions without considering individual student characteristics, our findings suggest that response prompting strategies combined with visual supports can be useful to teach text-based comprehension skills across content areas (e.g., science vocabulary, wh-questions, steps completed in a task analysis based on a math story problem).

As the prevalence of ASD continues to rise, it seems likely that children on the spectrum will be increasingly served in inclusive settings. Future research should continue to evaluate methods to increase text-based comprehension in these settings, across age groups (especially with young adults), alongside typically developing peers, in small groups, by typical intervention agents, and in various content areas (e.g., social studies). Additional rigorous research is needed that includes accurate diagnostic information, measures of social validity, Kappa measures, and blind raters. If the field is to continue to evaluate and validate EBP, individual researchers interested in promoting comprehension interventions for children and youth with ASD should consider working as a research community, replicating promising interventions and strategies across research teams to establish evidence-based practices. Specifically, the MLT strategy emerged as a novel approach to aid children with ASD in listening and reading comprehension across content areas; however, additional empirical studies are needed to establish it as an EBP. Similarly, graphic organizers were also used in many of the studies, so future research should continue to evaluate this strategy across core content areas and within the spectrum of children with ASD. Further, researchers should continue to evaluate methods for teaching students varying text structures and content areas to determine if there is a need for specialized instruction in these areas. Since studies in the area of social studies did not meet criteria for quality in this review, rigorous studies are critically needed in this content area. Researchers could also evaluate specific practices for listening or reading comprehension. Since much of the current research examined comprehensive treatment packages, future research should use component analyses to identify the most effective aspects of the interventions.

Previous literature reviews have appeared to make an impact on subsequent comprehension intervention research for this population. For example, Chiang and Lin (2007) recommended future evaluations of the NRP-identified methods for teaching text comprehension (e.g., graphic and semantic organizers, story structure, and question answering of wh-questions). In the span between the previous review and the current review, researchers have seemingly followed these suggestions, as several of the studies in the current review used these strategies (e.g., graphic and semantic organizers were used by seven of the 13 quality studies). We hope that the current review not only provides practitioners with effective interventions to promote comprehension across content areas for children with ASD, but that it also inspires researchers to fine-tune our understanding of how best to teach comprehension skills in core content to students with ASD.

References

References marked with an asterisk indicate studies included in the review

Allor, J. H., Mathes, P. G., Roberts, J. K., Jones, F. G., & Champlin, T. M. (2010). Teaching students with moderate intellectual disabilities to read: An experimental examination of a comprehensive reading intervention. Education and Training in Autism and Developmental Disabilities, 45(1), 3–22.

American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Washington, DC: Author.

**Armstrong, T. K., & Hughes, M. T. (2012). Exploring computer and storybook interventions for children with high functioning autism. International Journal of Special Education, 27(3), 88–99.

**Bethune, K. S., & Wood, C. L. (2013). Effects of wh-question graphic organizers on reading comprehension skills of students with autism spectrum disorders. Education and Training in Autism and Developmental Disabilities, 48(2), 236–244.

Biemiller, A. (1999). Language and reading success. Cambridge, MA: Brookline Books.

Browder, D. M., Ahlgrim-Delzell, L., Spooner, F., Mims, P. J., & Baker, J. N. (2009). Using time delay to teach literacy to students with severe developmental disabilities. Exceptional Children, 75, 343–364.

**Browder, D. M., Jimenez, B. A., Spooner, F., Saunders, A., Hudson, M., & Bethune, K. S. (2012). Early numeracy instruction for students with moderate and severe developmental disabilities. Research & Practice for Persons with Severe Disabilities, 37(4), 308–320.

Browder, D. M., Trela, K., Courtade, G. R., Jimenez, B., Knight, V., & Flowers, C. (2010). Teaching mathematics and science standards to students with moderate and severe developmental disabilities. Journal of Special Education, 46(1), 26–35.

**Browder, D. M., Trela, K., & Jimenez, B. (2007). Training teachers to follow a task analysis to engage middle school students with moderate and severe developmental disabilities in grade appropriate literature. Focus on Autism and Other Developmental Disabilities, 22(4), 206–219.

Brown, H. M., Oram-Cardy, J., & Johnson, A. (2013). A meta-analysis of the reading comprehension skills of individuals on the autism spectrum. Journal of Autism and Developmental Disorders, 43(4), 932–955.

**Burton, C. E., Anderson, D. H., Prater, M. A., & Dyches, T. T. (2013). Video self-modeling on an iPad to teach functional math skills to adolescents with autism and intellectual disability. Focus on Autism and Other Developmental Disabilities, 28(2), 67–77.

**Carnahan, C. A., & Williamson, P. S. (2013). Does Compare-Contrast Text Structure Help Students With Autism Spectrum Disorder Comprehend Science Text? Exceptional Children, 79(3), 347–363.

Carnine, D., Silbert, J., & Kameenui, E. J. (1997). Direct instruction reading (3rd ed.). Columbus, OH: Merrill.

Carroll, J. M., & Snowling, M. J. (2004). Language and phonological skills in children at high risk of reading difficulties. Journal of Child Psychology and Psychiatry, 45(3), 631–640.

Chiang, H. M., & Lin, Y. H. (2007). Reading comprehension instruction for students with autism spectrum disorders: A review of the literature. Focus on Autism and Other Developmental Disabilities, 22(4), 259–267.

Clarke, P. J., Snowling, M. J., Truelove, E., & Hulme, C. (2010). Ameliorating children’s reading-comprehension difficulties: A randomized controlled trial. Psychological Science, 21, 1106–1116.

Dogoe, M. S., Banda, D. R., Lock, R. H., & Feinstein, R. (2011). Teaching generalized reading of product warning labels to young adults with autism using the constant time delay procedure. Education and Training in Autism and Developmental Disabilities, 46(2), 204–213.

**Dugan, E., Kamps, D., Leonard, B., Watkins, N., Rheinberger, A., & Stackhaus, J. (1995). Effects of cooperative learning groups during social studies for students with autism and fourth-grade peers. Journal of Applied Behavior Analysis, 28, 175–188.

Eskes, G., Bryson, S., & McCormick, T. (1990). Comprehension of concrete and abstract words in autistic children. Journal of Autism and Developmental Disorders, 20, 61–73.

**Flores, M. M., & Ganz, J. B. (2007). Effects of Direct Instruction for teaching statement inference, use of facts, and analogies to students with developmental disabilities and reading delays. Focus on Autism and Other Developmental Disabilities, 22(4), 244–251.

**Flores, M. M., & Ganz, J. B. (2009). Effects of direct instruction on the reading comprehension of students with autism and developmental disabilities. Education and Training in Developmental Disabilities, 44(1), 39–53.

Frith, U., & Snowling, M. (1983). Reading for meaning and reading for sound in autistic and dyslexic children. British Journal of Developmental Psychology, 1, 329–342.

Gajria, M., Jitendra, A. K., Sood, S., & Sacks, G. (2007). Improving comprehension of expository text in students with LD: A research synthesis. Journal of Learning Disabilities, 40, 210–225.

Gast, D. L. (2010). Single subject research methodology in behavioral sciences. New York, NY: Routledge.

Gersten, R., Fuchs, L. S., Williams, J. P., & Baker, S. (2001). Teaching reading comprehension strategies to students with learning disabilities: A review of research. Review of Educational Research, 71, 279–320.

Goodwin, A., Fein, D., & Naigles, L. R. (2012). Comprehension of wh-questions precedes their production in typical development and autism spectrum disorders. Autism Research, 5(2), 109–123.

Gough, P. B., & Tunmer, W. E. (1986). Decoding, reading, and reading disability. Remedial and Special Education, 7(1), 6–10.

Griswold, D. E., Barnhill, G. P., Myles, B. S., Hagiwara, T., & Simpson, R. L. (2002). Asperger syndrome and academic achievement. Focus on Autism and Other Developmental Disabilities, 17(2), 94–102.

Happe, F. (1994). An advanced test of theory of mind: Understanding of story characters’ thoughts and feelings by able autistic, mentally handicapped, and normal children and adults. Journal of Autism and Developmental Disorders, 24, 129–154.

Horner, R. H., Carr, E. G., Halle, J., McGee, G., Odom, S., & Wolery, M. (2005). The use of single-subject research to identify evidence-based practice in special education. Exceptional Children, 71(2), 165–179.

**Hua, Y., Hendrickson, J. M., Therrien, W. J., Woods-Groves, S., Ries, P. S., & Shaw, J. J. (2012). Effects of combined reading and question generation on reading fluency and comprehension of three young adults with autism and intellectual disability. Focus on Autism and Other Developmental Disabilities, 27(3), 135–146.

Jones, C. R. G., Happé, F., Golden, H., Marsden, A. J. S., Tregay, J., Simonoff, E., et al. (2009). Reading and arithmetic in adolescents with autism spectrum disorders: Peaks and dips in attainment. Neuropsychology, 23(6), 718–728.

Kazdin, A. E. (1977). Assessing the clinical or applied significance of behavior change through social validation. Behavior Modification, 1, 427–452.

**Kamps, D. M., Barbetta, P. M., Leonard, B. R., & Delquadri, J. (1994). Classwide peer tutoring: An integration strategy to improve reading skills and promote interactions among students with autism and general education peers. Journal of Applied Behavior Analysis, 27, 49–61.

**Kamps, D. M., Leonard, B., Potucek, J., & Garrison-Harrell, L. (1995). Cooperative learning groups in reading: An integration strategy for students with autism and general classroom peers. Behavioral Disorders, 21(1), 89–109.

Kanner, L. (1943). Autistic disturbances of affective contact. Nervous Child, 2(3), 217–250.

**Knight, V. F., Spooner, F., Browder, D. M., Smith, B. R., & Wood, C. L. (2013). Using systematic instruction and graphic organizers to teach science concepts to students with autism spectrum disorders and intellectual disability. Focus on Autism and Other Developmental Disabilities, 28(2), 115–126.

Knight, V., Spriggs, A., & Sartini, E. (2014). Evaluating visual activity schedules as evidence-based practice for individuals with autism spectrum disorder. Journal of Autism and Developmental Disorders. doi:10.1007/s10803-014-2201-z.

Mashal, N., & Kasirer, A. (2011). Thinking maps enhance metaphoric competence in children with autism and learning disabilities. Research in Developmental Disabilities, 32, 2045–2054.

Mastropieri, M. A., & Scruggs, T. E. (1997). Best practices in promoting reading comprehension in students with learning disabilities 1976 to 1996. Remedial and Special Education, 18(4), 198–213.

**Mims, P. J., Hudson, M. E., & Browder, D. M. (2012). Using read-alouds of grade level biographies and systematic prompting to promote comprehension for students with moderate and severe developmental disabilities. Focus on Autism and Other Developmental Disabilities, 27(2), 67–80.

**Mucchetti, C. A. (2013). Adapted shared reading at school for minimally verbal students with autism. Autism, 17(3), 358–372.

Myles, B. S., & Simpson, R. L. (2002). Asperger syndrome: An overview of characteristics. Focus on Autism and Other Developmental Disabilities, 17(3), 132–137.

Nation, K., Clarke, P., Wright, B., & Williams, C. (2006). Patterns of reading ability in children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 36(7), 911–919.

Nation, K., Cocksey, J., Taylor, J. S., & Bishop, D. V. (2010). A longitudinal investigation of early reading and language skills in children with poor reading comprehension. Journal of Child Psychology and Psychiatry, 51, 1031–1039.

National Professional Development Center on ASD (NPDC). (2010). Evidence-base for visual supports. Module: Visual Supports. Retrieved from http://autismpdc.fpg.unc.edu/sites/autismpdc.fpg.unc.edu/files/VisualSupports_EvidenceBase.pdf

O’Connor, I. M., & Klein, P. D. (2004). Exploration of strategies for facilitating the reading comprehension of high-functioning students with autism spectrum disorders. Journal of Autism and Developmental Disorders, 34(2), 115–127.

Prior, M., & Hall, L. (1979). Comprehension of transitive and intransitive phrases by autistic, retarded, and normal children. Journal of Communication Disorders, 12, 103–111.

Randi, J., Newman, T., & Grigorenko, E. L. (2010). Teaching children with autism to read for meaning: Challenges and possibilities. Journal of Autism and Developmental Disorders, 40, 890–902.

Reichow, B. (2011). Development, procedures, and application of the evaluative method for determining evidence-based practices in autism. In B. Reichow et al. (Eds.), Evidence-based practices and treatments for children with autism (pp. 25–39). doi:10.1007/978-1-4419-6975-0_2

Reichow, B., Volkmar, F. R., & Cicchetti, D. V. (2008). Development of the evaluative method for evaluating and determining evidence-based practices in autism. Journal of Autism and Developmental Disorders, 38, 1311–1319.

Ricketts, J. (2011). Research review: Reading comprehension in developmental disorders of language and communication. Journal of Child Psychology and Psychiatry, 52(11), 1111–1123.

Ricketts, J., Jones, C. R., Happé, F., & Charman, T. (2013). Reading comprehension in autism spectrum disorders: The role of oral language and social functioning. Journal of Autism and Developmental Disorders, 43, 807–816.

**Riesen, T., McDonnell, J., Johnson, J. W., Polychronis, S., & Jameson, M. (2003). A comparison of constant time delay and simultaneous prompting within embedded instruction in general education classes with students with moderate to severe disabilities. Journal of Behavioral Education, 12(4), 241–259.

**Rockwell, S. B., Griffin, C. G., & Jones, H. A. (2011). Schema-based instruction in mathematics and the word problem-solving performance of a student with autism. Focus on Autism and Other Developmental Disabilities, 26(2), 87–95.

Roth, D. A., Muchnik, C., Shabtai, E., Hildesheimer, M., & Henkin, Y. (2012). Evidence for atypical auditory brainstem responses in young children with suspected autism spectrum disorders. Developmental Medicine & Child Neurology. doi:10.1111/j.1469-8749.2011.04149.x.

Sayeski, K., Paulsen, K., & the IRIS Center. (2003). Early reading. Retrieved from http://iris.peabody.vanderbilt.edu/case_studies/ICS-002.pdf

**Schenning, H., Knight, V., & Spooner, F. (2013). Effects of structured inquiry and graphic organizers on social studies comprehension by students with autism spectrum disorders. Research in Autism Spectrum Disorders, 7, 526–540.

**Secan, K. E., Egel, A. L., & Tilley, C. S. (1989). Acquisition, generalization, and maintenance of question-answering skills in autistic children. Journal of Applied Behavior Analysis, 22, 181–196.

**Smith, B. R., Spooner, F., & Wood, C. L. (2013). Using embedded computer-assisted explicit instruction to teach science to students with autism spectrum disorder. Research in Autism Spectrum Disorders, 7, 433–443.

Snowling, M., & Frith, U. (1986). Comprehension in “hyperlexic” readers. Journal of Experimental Child Psychology, 42(3), 392–415.

Spooner, F., Knight, V. F., Browder, D., & Smith, B. R. (2012). Evidence-based practices for teaching academic skills to students with severe developmental disabilities. Remedial and Special Education, 33, 374–387. doi:10.1177/074193251142634.

**Stringfield, S. G., Luscre, D., & Gast, D. L. (2011). Effects of a story map on accelerated reader postreading test scores in students with high-functioning autism. Focus on Autism and Other Developmental Disabilities, 26(4), 218–229.

Tager-Flusberg, H. (1981). Sentence comprehension in autistic children. Applied Psycholinguistics, 2, 5–24.

Wahlberg, T., & Magliano, J. P. (2004). The ability of high function individuals with autism to comprehend written discourse. Discourse Processes, 38(1), 119–144.

Whalon, K. J., Al Otaiba, S., & Delano, M. E. (2009). Evidence-based reading instruction for individuals with autism spectrum disorders. Focus on Autism and Other Developmental Disabilities, 24(1), 3–16.

**Whalon, K., & Hanline, M. F. (2008). Effects of a reciprocal questioning intervention on the question generation and responding of children with autism spectrum disorder. Education and Training in Developmental Disabilities, 43, 367–387.

Wong, C., Odom, S. L., Hume, K., Cox, A. W., Fettig, A., Kucharczyk, S., et al. (2014). Evidence-based practices for children, youth, and young adults with autism spectrum disorder. Chapel Hill: The University of North Carolina, Frank Porter Graham Child Development Institute, Autism Evidence-Based Practice Review Group. Retrieved from http://autismpdc.fpg.unc.edu/sites/autismpdc.fpg.unc.edu/files/2014_EBP_Report.pdf

Woolley, G. (2011). Reading comprehension: Assisting children with learning difficulties. doi:10.1007/978-94-007-1174-7_2.

**Zakas, T. L., Browder, D. M., Ahlgrim-Delzell, L., & Heafner, T. (2013). Teaching social studies content to students with autism using a graphic organizer intervention. Research in Autism Spectrum Disorders, 7, 1075–1086.

Acknowledgments

The authors would like to thank Rebecca Moody and Ryane Williamson of Vanderbilt University for their contributions to this project.

Cite this article

Knight, V.F., Sartini, E. A Comprehensive Literature Review of Comprehension Strategies in Core Content Areas for Students with Autism Spectrum Disorder. J Autism Dev Disord 45, 1213–1229 (2015). https://doi.org/10.1007/s10803-014-2280-x

DOI: https://doi.org/10.1007/s10803-014-2280-x