Abstract

Over the past fifteen years many schools have utilized aggression prevention programs. Despite these apparent advances, many programs are not examined systematically to determine the areas in which they are most effective. One reason for this is that many programs, especially those in urban under-resourced areas, do not utilize outcome measures that are sensitive to the needs of ethnic minority students. The current study illustrates how a new knowledge-based measure of social information processing and anger management techniques was designed through a partnership-based process to ensure that it would be sensitive to the needs of urban, predominately African American youngsters, while also having broad potential applicability for use as an outcome assessment tool for aggression prevention programs focusing upon social information processing. The new measure was found to have strong psychometric properties within a sample of urban predominately African American youth, as item analyses suggested that almost all items discriminate well between more and less knowledgeable individuals, that the test-retest reliability of the measure is strong, and that the measure appears to be sensitive to treatment changes over time. In addition, the overall score of this new measure is moderately associated with attributions of hostility on two measures (negative correlations) and demonstrates a low to moderate negative association with peer and teacher report measures of overt and relational aggression. More research is needed to determine the measure’s utility outside of the urban school context.

There has been a proliferation of school-based aggression prevention programs conducted across American schools in the wake of the Columbine shooting and other similar horrific episodes of school violence (Modzeleski 2007). However, many of the school-based aggression prevention programs being conducted have not demonstrated that they are effective (Leff et al. 2001) and/or that they are able to be implemented or evaluated in a consistent and systematic manner (Leff et al. 2009b; Perepletchikova et al. 2007). Another critique of many aggression prevention programs is that initiatives do not always measure program effectiveness or outcomes in a culturally-sensitive and/or developmentally appropriate manner (see Leff et al. 2006). This is especially true in low income urban samples, as the majority of outcome measures have been normed and validated with suburban and/or middle class Caucasian youth, possibly disempowering urban youth and undermining the validity of the measures used (Fantuzzo et al. 1997; Leff et al. 2006). In fact, there has been a recent emphasis upon researchers ensuring that the assessment tools being used are culturally sensitive and appropriate for samples comprised of minority youth (Tucker and Herman 2002; U.S. Public Health Service 2001). A third limitation of many aggression prevention programs is that they often measure relatively distal outcomes consisting of aggressive behavior change. However, many aggression prevention programs teach students to recognize and utilize problem solving steps, which may represent a more proximal outcome (e.g., Leff et al. 2009a; Lochman and Wells 2002). Typically, this is assessed by presenting youth with a sample vignette and asking how they would interpret and react in the context of a socially provocative situation. 
Although attributions of intentionality (i.e., how a participant interprets the intent of others) and related constructs have shown some association with the development and maintenance of aggression (see Orobio de Castro et al. 2002), prevention programs often first teach youth an awareness or general knowledge of the social problem solving steps thought to be important in adequately processing and reacting to social stimuli (e.g., Crick and Dodge 1994; Lochman and Wells 2002). This general knowledge of social and emotional processing steps may be a more global skill that underlies or is a precursor for actual social processing change in specific situations. Nevertheless, there is a paucity of research on measures that assess one’s awareness and knowledge of social and emotional processing.

The goal of the current study is to highlight how a new measure assessing students’ general knowledge of social and emotional problem solving steps was developed through a participatory action research (PAR) framework and initially evaluated with a sample of urban predominately African American youth. When using a PAR approach, researchers carefully adapt empirically-based best practice procedures by collaborating with research participants and key stakeholder groups (see Leff et al. 2004, or Nastasi et al. 2000, for other examples of this process). Employing a PAR model for intervention or measurement design can allow for a psychometrically-sound, culturally-responsive, and meaningful intervention or measure (Hughes 2002; Nastasi et al. 2000). In the current study, the authors used a social information processing model of aggression as the starting point to develop a knowledge-based measure to better understand the way youth perceive different social interactions within their environment. Items used in this new measure were thought to have utility for measuring the types of general knowledge that are often taught in school-based aggression and bullying prevention programs.

Social Information Processing Model

Many aggression prevention programs are designed to decrease aggressive behavior by modifying how students process and interpret social cues and select behavioral responses (e.g., Coping Power Program, Lochman and Wells 2003; Lochman and Wells 2004; Brain Power Program, Hudley 2003; Hudley et al. 1998; Hudley and Graham 1993; Second Step, Frey et al. 2005; Van Schoiack-Edstrom et al. 2002). These programs were heavily influenced by Crick and Dodge’s (1994) reformulated social information processing (SIP) theory, a social cognitive model of social adjustment that involves six discrete processes that occur in a relatively brief amount of time, exert bidirectional influence through feedback loops, and ultimately result in a behavioral outcome. Specifically, components of SIP include attending to and encoding internal and external cues in a social situation, interpreting cues (e.g., attributing hostile intent), clarifying socioemotional goals (e.g., avoid conflict, regulate anger), generating and evaluating possible responses to the situation, and enacting a behavioral response. Given that many aggression interventions teach students strategies for slowing down and recognizing social cognitive cues in the context of potential social conflicts (e.g., Frey et al. 2005; Hudley et al. 1998; Lochman and Wells 2004), it follows that a knowledge-based measure of general social and emotional processing steps is needed to improve the evaluation of outcomes for social cognitive retraining aggression prevention programs. Further, given that many traditional outcome measures have been viewed as culturally insensitive for urban low-income youth and/or not developmentally appropriate (Leff et al. 2006), the current study utilizes a partnership-based methodology to ensure the relevance and meaning of such a measure with an urban 3rd–5th grade sample of youth.

Measures Typically Used to Measure Aspects of Social Information Processing

Social information processing assessment tools utilized in the literature tend to be vignette-based and try to elicit a child’s social goals (Renshaw and Asher 1983), attributional style or tendency to make a hostile attributional bias (Crick 1995; Crick et al. 2002; Leff et al. 2006), and/or outcome evaluation in the context of a potential social conflict (Crick and Werner 1998). While these represent an important range of measures, a more general measure of knowledge about SIP steps, such as the one developed in the current study, may ultimately be beneficial as a mediating variable and/or an outcome assessment measure for aggression prevention programs that focus upon social cognitive retraining. In other words, a new measure was desired that went beyond asking children how they would interpret and react in an ambiguous social situation that ended poorly (e.g., a vignette in which someone bumps into you from behind, and then asking the child whether or not they think that the action was on purpose or by accident) and instead identified whether or not they had a general understanding or knowledge of the sequential steps thought to be important for social and emotional processing (e.g., what is the first thing you would do if someone bumps you from behind?). Given that many school-based aggression prevention best practice programs have a social cognitive retraining emphasis in which they train participants in the awareness of the sequential social problem-solving steps (e.g., Leff et al. 2009a, b; Lochman and Wells 2003; Lochman and Wells 2004), this type of measure would have widespread applicability, especially if scores on this measure were associated with attributional or behavioral indices of functioning.

Although several SIP-based aggression prevention programs have developed instruments designed to assess whether or not participants learned material covered in the curriculum and specifically a knowledge and/or awareness of SIP steps, these instruments have yet to establish external validity. Thus, existing measures of SIP appear valid within the context of a specific intervention study but have yet to establish generalizability across studies and populations. For example, the Responding in Peaceful and Positive Ways (RIPP) Knowledge Test (Farrell et al. 2001b) was developed to determine if participants in a school-based universal violence prevention program had acquired an understanding of the problem-solving model covered in that particular curriculum. The instrument utilizes a multiple-choice format, includes items assessing curriculum-specific content (e.g., knowledge of acronyms used as teaching tools), and was designed for use with a population of primarily African-American middle-school students from low-income families. To date, the RIPP Knowledge Test has been used as part of an evaluation of the efficacy of the RIPP intervention with 6th and 7th grade populations. Students who completed the intervention demonstrated higher scores on the instrument, indicating greater knowledge of the social problem-solving model taught in the intervention compared to students who did not receive the intervention. This effect was greater for boys than girls and remained significant six months post-treatment (Farrell et al. 2003). In addition, correlational analyses indicated an inverse relation between knowledge of the problem-solving model and behavioral outcomes (e.g., violent behavior, nonphysical aggression) pre- and post-intervention, providing support for the role of social cognition as a predictor of aggressive behavior. 
Although these data provide initial evidence of construct validity, the RIPP Knowledge Test is unlikely to establish validity as an instrument with utility outside of the RIPP program given the focus on content and terminology that is specific to the intervention curriculum. In addition, other test items focus on behavioral response selection as opposed to assessing individual components of the guiding SIP model. The instrument presented in the current study was designed to fill an important gap in the field by assessing SIP components in the context of an intervention without curriculum-specific content.

Other intervention programs with well-established efficacy regarding the decrease of aggressive behavior (e.g., Coping Power Program, Lochman and Wells 2003; Lochman and Wells 2004; Lochman et al. 2009) have not assessed whether behavior change was mediated by changes in social knowledge or attitudes. Specifically, the Coping Power Program, a school-based secondary prevention program for aggressive children and adolescents, uses a group format to increase social skills including social problem solving and SIP, and a parent component to improve parents’ stress management strategies and communication with their children. Despite demonstrating efficacy regarding behavioral outcomes (e.g., frequency of aggressive behavior, delinquency, drug usage) and an increase in more adaptive responses on hypothetical vignettes of social situations, change in children’s general knowledge of social and emotional processing has yet to be systematically assessed. As SIP is a core feature of theories underlying empirically supported interventions, it follows that a child’s general knowledge of social and emotional processing may be an important target for assessment and curriculum when evaluating treatment outcomes.

Several instruments have been developed to assess SIP as part of cross-sectional studies of aggressive behavior in adolescence (e.g., Social Problem-Solving Inventory for Adolescents, Frauenknecht and Black 1995; The Social Cognitive Skills Test, van Manen et al. 2002). Although these instruments have demonstrated construct validity and the ability to discriminate between aggressive and non-aggressive adolescents, to date they have not been used to measure changes in knowledge over time. For example, the Social Cognitive Skills Test (van Manen et al. 2002) was designed to assess children’s social cognitive development by examining the quality of responses to six short stories involving common social situations. Based on Selman and Byrne’s structural-developmental theory of social cognition (1974), the Social Cognitive Skills Test has shown that aggressive elementary school students exhibit developmentally younger SIP strategies compared to non-aggressive peers. Despite its utility in identifying different SIP approaches in target groups, the instrument has not been used to demonstrate developmental changes over time and/or changes attributable to an SIP-based intervention.

Overall, despite recent advances in the development of interventions that target social cognition, significant gaps in the assessment of cognitive mediators of aggressive behavior remain. As a result, broad-based utility of existing instruments designed to assess SIP in childhood is limited by a number of factors. Although instruments have been developed to evaluate changes in students’ knowledge of curriculum-specific content as a result of treatment, these measures have yet to establish external validity and are unlikely to generalize to other populations. In addition, many interventions with well-established efficacy in decreasing physical aggression have yet to provide data that changes in behavior are accompanied by changes in SIP. Finally, there is a dearth of culturally sensitive instruments designed to assess SIP in middle childhood.

Use of PAR in Measurement Development

The supplement to the Surgeon General’s Report on Mental Health (U.S. Public Health Service, 2001) stresses the importance of developing new assessment and intervention techniques so that they are maximally responsive to the needs of minority cultural groups. A number of researchers have recently advocated for the use of a participatory action research (PAR) framework to develop interventions and measurement tools that are both psychometrically sound and culturally-responsive (Leff et al. 2004; Nastasi et al. 2000). The PAR approach combines scientific empirical research with key stakeholder feedback to help ensure that the resulting assessment measures are both scientifically sound and culturally-responsive. In the current study, we utilize a partnership-based approach to design a measure of general knowledge related to social and emotional processing steps and anger management skills that is based upon the reformulated SIP model of aggression (Crick and Dodge 1994) and that would be responsive to the needs of urban predominately African American youth. Thus, we aimed to have questions that assessed youth’s knowledge of identifying signs of physiological arousal, attributional processing, and response selection and evaluation, in addition to other anger management strategies and techniques (e.g., how to enter a challenging social group without causing a conflict; what to do if you are the bystander of aggression) that are routinely taught in aggression prevention and intervention programs in the school setting. Feedback from youth and community partners was solicited in order to ensure that the questions were understandable and worded appropriately for 3rd through 5th graders, response choices were interpreted as intended, the multiple choice format was similar to routine school quizzes and tests, and any ambiguity in language was changed. 
This combination of questions derived from theory and empirical findings integrated with feedback across important dimensions provides confidence for the researcher in the cultural sensitivity and ecological validity of the resultant measure.

Uses of the New Measure and Goals for the Current Paper

The measure described in this paper will be of utility to both school-based practitioners and researchers. For example, a general knowledge-based measure of SIP steps and related anger management techniques can be used by school psychologists and counselors to better understand how urban youth respond to school-based anger management programs. In addition, a knowledge-based measure may serve as an important mediator variable in behavioral outcomes and therapeutic change for school-based aggression interventions (Farrell et al. 2001a; Kazdin 1998).

The aims of the current paper are threefold. The first goal is to illustrate how a participatory action research framework can be used in the creation of psychometrically strong and culturally-sensitive outcome assessment measures. Second, the current paper will help readers understand how a partnership-based methodology was employed in the design of a knowledge-based measure. The final goal of the paper is to determine the psychometric soundness of the new measure, and to discuss future research steps and practice implications.

Methods

Stages of Measurement Development

In the current manuscript, we detail several stages of measurement development. The first stage includes the use of a participatory action research (PAR) framework to generate items assessing SIP steps and related anger management strategies for the Knowledge of Anger Problem Solving (KAPS) Measure. Also during the first stage, researchers designed and pilot tested items for possible inclusion on the new measure based upon extensive feedback from 37 youth attending an inner-city summer camp and from several community representatives, including teachers and community advocates, who worked within the local school systems. In the second stage, the researchers investigated the test-retest reliability with a school-based sample of 18 urban African American youth. In the final stage, instrument psychometrics were examined with a sample of 224 3rd and 4th grade students who were attending an extremely large urban public elementary school.

Phase 1: Item Development

Participants in the Item Development Phase

To create items that would be developmentally appropriate and culturally sensitive, the research team designed questions based on SIP theory and then partnered with several different groups of predominately urban African American youth to make adaptations to items as appropriate. Twenty-two girls and 15 boys attending an urban summer day camp participated in the initial item development phase of the study. All 37 of these youth were African American, including 8 entering 3rd grade, 15 entering 4th grade, and 14 entering 5th grade in local elementary schools within the urban setting. In addition, the research team discussed the potential items with several 3rd–5th grade teachers and community advocates who had worked in various roles within the local school district.

Item Development Process and Procedure

After initially developing items based upon the SIP literature, best practice aggression prevention programs, and consultation with several content area experts (see Footnote 1), the researchers partnered with youth attending a summer day camp program from which the later psychometric sample was drawn. Youth participating in this step of the study were individually administered the questions in order to ensure that any feedback as to the question stem, response choice, or wording was accurately recorded. This helped to ensure that the questions based upon empirically-based SIP strategies (Crick and Dodge 1994) and developmental-ecological systems theory (Bronfenbrenner 1986) were adapted to be developmentally and culturally appropriate. Comments provided by respondents in terms of readability, understandability, and response choice options for each question were collated, reviewed, and used to improve the wording of items. In addition, several teachers and community advocates also helped to ensure that the wording and response choice format were culturally-sensitive and age-appropriate. The teachers suggested that the questionnaire use a multiple-choice format so that it would be similar to the types of tests and assessments often used within the school district setting. This iterative process of questionnaire development is a key aspect of the participatory action research approach (Leff et al. 2003; Nastasi et al. 2000).

Version 1 of the KAPS was individually administered to six youth at the summer camp. This original version of the questionnaire contained 13 multiple-choice items and two true/false items. Initial feedback was positive, with one item being viewed as being too wordy, and another as needing some clarification. Also, youth suggested that we underline key words in several questions in order to help participants more readily understand the main point being asked. The participating teachers and community advocates suggested that we make one item briefer, and similar to the youth, suggested that we highlight key words for each question.

Following this feedback, the research team reworded one item, clarified the question stem of a second item, and underlined key words to help improve understandability across items. Version 2 of the KAPS was then individually administered to a sample of 12 youth attending the summer camp. Based upon a review of the item responses and oral feedback given by these participating youth, the research team determined that 10 of the 15 questions appeared to be worded appropriately and were of variable difficulty level, which was desired at this stage of item development. However, five of the items appeared to be relatively easy as almost all respondents chose the correct response. The research team next worked to make modifications to these five items (which included four multiple choice items and one true-false item) such that the questions were adapted and/or the response options were fine-tuned. Also, the two true-false items were made into a multiple choice format in order to be consistent with other items.

These modifications were made for Version 3 of the KAPS, which was subsequently administered to an additional 19 youth attending the summer camp. Overall, participants found this version of the questionnaire to be understandable and straightforward, and relatively few suggestions were made for modification. Almost all participants answered two items correctly, leading the test developers to modify one of the questions to increase its difficulty level. In contrast, researchers decided to keep the other question without modification because it covered an important topic and because they judged it appropriate to have an “easy” item on the questionnaire. Researchers also counterbalanced the positioning of the correct multiple-choice response before finalizing the measure. Finally, the researchers discussed all potential changes with the teacher and community consultants, who had a favorable impression of the final modifications. The KAPS was finalized following this discussion.

Phase 2: Test-Retest Reliability Analysis

Participants in the Test-Retest Reliability Phase

Eighteen students (10 boys, 8 girls) attending an urban elementary school were individually administered the knowledge-based measure (see Footnote 2). All students were African American and in the 3rd grade (n = 5) or 5th grade (n = 13). At the end of each administration, they were also asked to comment on the readability and understandability of the new measure. Two weeks after the initial administration, respondents were re-administered the same measure.

Test-Retest Reliability of the KAPS

Participants in this administration reported that they understood and were able to answer all items. In addition, descriptive analyses found sufficient variability in responses and overall test scores. Thus, no items were changed at this phase. Two-week test-retest reliability was high (r(16) = 0.85), suggesting that scores were stable across time.
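As an illustration of the statistic reported above, the following is a minimal sketch of a test-retest correlation, assuming that the coefficient is a Pearson correlation between total scores at the two administrations. The function name `retest_reliability` and the scores used in the usage note are hypothetical and are not the study data. The degrees of freedom are n − 2, which is why a sample of 18 respondents is reported as r(16).

```python
from math import sqrt


def retest_reliability(time1, time2):
    """Return (r, df) for paired total scores from two administrations.

    r is the Pearson correlation; df = n - 2 is the value conventionally
    reported in parentheses, e.g. r(16) for n = 18 respondents.
    """
    if len(time1) != len(time2):
        raise ValueError("each respondent needs a score at both time points")
    n = len(time1)
    if n < 3:
        raise ValueError("at least three respondents are required")
    mean1, mean2 = sum(time1) / n, sum(time2) / n
    sxy = sum((a - mean1) * (b - mean2) for a, b in zip(time1, time2))
    sxx = sum((a - mean1) ** 2 for a in time1)
    syy = sum((b - mean2) ** 2 for b in time2)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return sxy / sqrt(sxx * syy), n - 2
```

For example, calling `retest_reliability` with 18 made-up scores at each time point returns the correlation together with 16 degrees of freedom.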

Phase 3: Psychometric Study

Participants in the Psychometric Study

Two hundred and twenty-four 3rd and 4th grade students attending a large urban public elementary school participated in the study (M age = 9.44 years). All 3rd and 4th graders attending the school were eligible to participate, and 78% of eligible children obtained signed parental permission and were present on the days of the testing. The majority of the participants were African American (73.6%) or bi-racial including African American (9.3%) and from low-income homes. Participants were taking part in a larger intervention study in which classrooms of youth were randomly assigned to a 20-session classroom-based relational and physical aggression prevention program or to a no treatment control condition. The intervention, called Preventing Relational Aggression in Schools Everyday (PRAISE; Leff et al. 2008), was modeled after an indicated intervention with high risk girls that has shown promise for decreasing participant levels of relational and physical aggression, lessening girls’ tendency to make hostile attributions, improving social standing, and decreasing loneliness (e.g., Leff et al. 2007a; Leff et al. 2009a). The PRAISE Program is delivered by two to three graduate student facilitators within the classroom. It combines multiple sessions on attribution re-training (e.g., how to identify signs of physiological arousal, how to accurately interpret others’ intentions, how to generate and evaluate alternatives) with sessions related to promoting empathy and perspective taking skills. It was thought that youth participating in the program would experience increased knowledge of social information processing steps during the course of the intervention due to the emphasis upon the social cognitive component (attribution re-training). The program uses cartoons, videos, and role plays as the primary teaching modalities. 
In the current sample, the initial assessment study took place as part of the pre-intervention assessment battery, with the second assessment being conducted post-intervention, approximately four months later.

Approach to Item Analyses

The third phase of test development examined the psychometric properties of the final version of the KAPS, using a classical item analysis approach (Nunnally and Bernstein 1994). Items were evaluated with respect to discrimination, difficulty, and distractor quality. Item discrimination refers to the ability of an item to distinguish more knowledgeable individuals from less knowledgeable individuals by comparing performance on a single item to performance on other items that measure the same construct (i.e., overall knowledge score of SIP steps). The point biserial (p-bis) correlation between an item’s response and the complete set of items on the same test form is the measure of discrimination that was used for this analysis. Item difficulty refers to the proportion of participants who respond correctly to an item; this is reported in the results and corresponding tables as the p-value (see Footnote 3). Finally, distractor quality is evaluated by examining the relative frequency with which each of the incorrect response alternatives was selected, as well as by examining the p-bis correlation between the endorsement of each incorrect response and performance on all other items. If an incorrect response alternative is frequently selected by respondents, including respondents who otherwise respond correctly to other items, then this is an indication of a problem with the distractor. When a distractor is frequently selected by respondents who otherwise perform well (as evidenced by high p-bis values), it may be that the particular response alternative is confusing or misunderstood. It is also possible that such a distractor is partially correct. Thus, any distractors with high p-bis values should be checked for their accuracy and clarity.

An adequate multiple choice test is expected to demonstrate the following characteristics with respect to item statistics: (a) item difficulty values (p-value) are variable across test items, with a minimum value of approximately 0.15; (b) item discrimination values (p-bis) are greater than approximately 0.20; and (c) distractor (p-bis) discrimination values are less than 0.05 (Nunnally and Bernstein 1994).
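The item statistics and criteria described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function names and the data layout are hypothetical, and the sketch assumes that both item and distractor p-bis values are computed against the score on all other items (a "corrected" item-rest correlation). The three constants are the approximate thresholds quoted in the text.

```python
from math import sqrt

# Approximate thresholds quoted in the text (Nunnally and Bernstein 1994).
MIN_DIFFICULTY = 0.15
MIN_DISCRIMINATION = 0.20
MAX_DISTRACTOR_PBIS = 0.05


def pearson(x, y):
    """Pearson r; when x is coded 0/1 this equals the point-biserial."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined when either variable is constant
    return sxy / sqrt(sxx * syy)


def item_analysis(responses, key):
    """responses: one list of chosen options per respondent; key: correct options."""
    n_items = len(key)
    scored = [[int(r[i] == key[i]) for i in range(n_items)] for r in responses]
    totals = [sum(row) for row in scored]
    report = []
    for i in range(n_items):
        item = [row[i] for row in scored]
        rest = [t - s for t, s in zip(totals, item)]  # score on all other items
        distractors = {}
        for option in sorted({r[i] for r in responses} - {key[i]}):
            chose = [int(r[i] == option) for r in responses]
            # (proportion selecting the distractor, its p-bis with the rest score)
            distractors[option] = (sum(chose) / len(chose), pearson(chose, rest))
        report.append({
            "difficulty": sum(item) / len(item),    # proportion correct
            "discrimination": pearson(item, rest),  # item p-bis
            "distractors": distractors,
        })
    return report


def meets_criteria(item_stats):
    """Apply the three thresholds above to one item's statistics."""
    return (
        item_stats["difficulty"] >= MIN_DIFFICULTY
        and item_stats["discrimination"] > MIN_DISCRIMINATION
        and all(p < MAX_DISTRACTOR_PBIS for _, p in item_stats["distractors"].values())
    )
```

An item whose statistics are undefined (for example, because every respondent chose the same option) yields `nan` and is treated as not meeting the criteria, which flags it for manual review.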

In the psychometric study, we investigated the measure’s item properties at two time periods. First, the responses of all participants at baseline were analyzed to determine general baseline test properties. That is, we were interested in the individual item difficulties, discrimination, and distractor quality to determine whether the measure met basic psychometric requirements. Second, we examined the same item properties post-intervention, dividing the sample by group (intervention and control groups analyzed separately). This second analysis offered the opportunity to look at the reliability (consistency) of item properties at two time points and the effect of learning on knowledge scores.

Once item properties were deemed acceptable from a test development standpoint, we investigated changes in test scores from baseline to post-intervention. To do so, a repeated measures analysis of variance (RM ANOVA) was conducted to investigate changes from pre- to post-test for each group. From this analysis, we expected a significant correlation between Time 1 and Time 2 scores for participants from both groups. In addition, we expected significant knowledge score gains for the intervention group only. The primary focus of this analysis was to investigate the test’s sensitivity to detecting changes in knowledge following exposure to the curriculum. An RM ANOVA was used for the analysis in order to maximize statistical power and parsimony (i.e., limit Type I error from multiple tests).

A correlational analysis was also conducted in order to examine whether the KAPS was associated with several commonly used hostile attributional bias vignette measures (Crick 1995; Hughes et al. 2004; Leff et al. 2006), peer nominations of social behavior (Crick and Grotpeter 1995), and teacher reports of youth’s aggressive behavior (Crick 1996).

Measures

Knowledge of Anger Problem Solving (KAPS) Measure

The KAPS is a 15 item multiple choice measure. Four items related to physiological arousal and the importance of staying calm once you recognize that you are having a reaction to an event or situation (Questions #3, 5, 11, and 14). Four items related to attributions of intentionality (Questions #1, 7, 9, and 12). Finally, seven items related to choices one can make in a range of different situations (Questions #2, 4, 6, 8, 10, 13, and 15), including trying to enter a social group, being involved with rumors, and being the bystander of aggression. The girls’ version of the KAPS is contained in the Appendix.
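The item groupings listed above can be expressed as a simple scoring map. The sketch below is illustrative only: the question numbers come from the text, but the function name, the content-area labels, and the idea of reporting a count per content area alongside the overall score are assumptions, and the answer key passed in is supplied by the user (no actual KAPS key is reproduced here).

```python
# Question numbers for each content area, as listed in the text.
SUBSCALES = {
    "physiological_arousal": [3, 5, 11, 14],
    "attributions_of_intent": [1, 7, 9, 12],
    "response_choices": [2, 4, 6, 8, 10, 13, 15],
}


def score_kaps(answers, key):
    """Score one respondent.

    answers: dict mapping question number to the chosen option
             (unanswered questions may be omitted and count as incorrect).
    key:     dict mapping question number (1-15) to the correct option.
    Returns the number correct per content area and overall.
    """
    correct = {q: int(answers.get(q) == key[q]) for q in key}
    scores = {
        name: sum(correct[q] for q in items)
        for name, items in SUBSCALES.items()
    }
    scores["total"] = sum(correct.values())
    return scores
```

A respondent who misses Question 3 and skips Question 2, for instance, would receive 3 of 4 on the arousal items, 4 of 4 on the attribution items, 6 of 7 on the response choice items, and an overall score of 13.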

Peer Nominations of Aggression and Prosocial Behavior

To identify youth’s level of relational and physical aggression, and their prosocial behavior, a standardized unlimited peer nomination procedure was used (see Terry 2000). Following standard sociometric scoring procedures, youth completed a series of peer nominations for items related to relational aggression (e.g., spread rumors, ignore or stop talking to others when mad at them, try to keep others from being in their group, 5 items), physical aggression (e.g., starts fights, hit or push others, 3 items), and a prosocial behavior item (e.g., does nice things for others, helps others, or cheers others up). Raw score nominations on five peer nomination items specific to relational aggression were standardized within each nominating group (the grade), resulting in a final relational aggression z-score for each child. A similar procedure was employed for peer nominations of physical aggression and for prosocial behavior. The test-retest reliability, stability across time and situation, and concurrent and predictive validity have been well-established for peer nomination methods across diverse samples of youth (e.g., Olweus 1991; Kupersmidt and Coie 1990), and several studies have investigated and found significant correlations among peer nominations and teacher report indices of aggression for African American youth (Coie and Dodge 1988; Hudley 1993). In the current study, intercorrelations ranged from 0.63 to 0.75 (p < 0.001) for items related to relational aggression and from 0.82 to 0.87 (p < 0.001) for items related to physical aggression. Finally, the intercorrelation between the two items related to prosocial behavior was 0.76 (p < 0.001).
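The within-grade standardization described above can be illustrated with a minimal sketch. It assumes each child's raw nominations have already been summed across the relevant items, and that the population standard deviation is used; neither detail is specified in the procedure, and the function and variable names are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardize_within_group(records):
    """Return {child_id: z-score}, standardizing raw nomination counts
    within each nominating group (here, the grade).

    records: list of (child_id, grade, raw_nominations) tuples.
    Assumption: population SD is used; a sample SD is equally plausible.
    """
    by_grade = defaultdict(list)
    for _child_id, grade, raw in records:
        by_grade[grade].append(raw)

    # Mean and SD of raw nominations for each grade.
    stats = {g: (mean(v), pstdev(v)) for g, v in by_grade.items()}

    z_scores = {}
    for child_id, grade, raw in records:
        m, sd = stats[grade]
        # Guard against a grade in which every child has the same count.
        z_scores[child_id] = 0.0 if sd == 0 else (raw - m) / sd
    return z_scores
```

A child's z-score then expresses how many standard deviations above or below the grade mean that child's nomination count falls, which makes scores comparable across grades of different sizes.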

Measure of Hostile Attributional Bias (HAB)

A cartoon-based version of a well-established HAB measure (Crick 1995; Crick et al. 2002) was used to determine students’ level of HAB in relationally and instrumentally provocative social situations. Participants are shown the cartoon illustrations while the corresponding vignette is read aloud. Participants respond to two questions to determine their level of HAB. A recent study demonstrated strong psychometric properties of this cartoon-based adaptation combined with higher acceptability ratings than the traditional written vignette measure for a sample of urban African American girls (Leff et al. 2006). A parallel study has demonstrated similar findings for a sample of urban African American boys (Leff et al. 2007b).

Social Cognitive Assessment Profile, SCAP

A modified version of the SCAP, another hypothetical stories measure (Hughes et al. 2004; Yoon et al. 2000), was used to obtain a second measure of children’s intentionality (e.g., HAB). The SCAP is administered by reading 8 vignettes (four relationally provocative and four instrumentally provocative); participants are then asked several questions following each vignette to assess their perception of why the event occurred. The original measure uses an open-ended response format to measure attributions of intentionality, as responses are coded as hostile, non-hostile, or not scoreable (see Hughes et al. 2004). In our pilot testing it became clear that it was difficult for our research team to determine the level of intentionality or hostility when administering the measure in this manner. As a result, we utilized two close-ended intentionality questions, based upon open-ended responses obtained during pilot testing, that were similar to the HAB measure described above. A recent study has demonstrated the SCAP’s psychometric properties (confirmatory factor analysis, internal consistency, discriminative and predictive validities), and its relevance for African American youngsters (Hughes et al. 2004). In the current study, the overall HAB score across the eight vignettes was utilized (following the procedure used by Hughes and colleagues).

Teacher Report Measure of Aggression and Social Behavior

The Children’s Social Behavior Questionnaire (CSB; Crick 1996) was completed by the classroom teacher for each of the participating children. The Relational Aggression and Physical Aggression subscales were calculated. The reliability and validity of these subscales have been established for an ethnically diverse sample of youth drawn from a Midwestern city (see Crick 1996). In addition, the CSB has been used in a number of studies with low income youth (e.g., Crick et al. 2005; Murray-Close et al. 2006). In the current study strong internal consistency was demonstrated for each subscale: Relational Aggression (7 items; α = 0.95), Physical Aggression (4 items; α = 0.94), and Prosocial Behavior (4 items; α = 0.80).
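For readers less familiar with the internal consistency coefficient reported above, the following is a minimal sketch of Cronbach's α using the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The data layout and function name are illustrative assumptions and do not reflect the software actually used in the study.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a set of item ratings.

    scores: list of respondents, each a list of k numeric item ratings.
    Uses sample variances for both the items and the total score.
    """
    k = len(scores[0])
    # Variance of each item across respondents.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Variance of the summed scale score.
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)
```

Values near 1 indicate that the items vary together closely, as with the subscale coefficients reported here.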

Procedure

Youth were administered the knowledge-based measure in small groups (three to four same-sex peers per group) in conjunction with the other self-report measures. The order of presentation of the measures was counterbalanced across groups. A trained research assistant read each of the 15 multiple-choice questions out loud while each student followed along on a paper copy. Youth were instructed to answer each question on their page without talking out loud. If they had questions they were instructed to raise their hand so that the research assistant could assist them. The questionnaire took between 5–10 min to complete and there were no issues with the administration procedures.

Results

Results of the item analyses are reported in Table 1. This table provides the item difficulty and item discrimination values at baseline (full sample) and at post-intervention (intervention and control groups separately). At baseline, there was a wide range of difficulty across items, from a low of 14% correct to a high of 85% correct. Point-biserial (p-bis) correlations revealed generally strong discrimination across items, ranging from a low value of 0.10 to a high of 0.54. As described above, these values represent the correlation between a single item’s response and the complete set of items on the same test form, which demonstrates each item’s ability to discriminate more from less knowledgeable individuals. These results indicate that the first two criteria for item adequacy (variability in item difficulty and high item discrimination) were generally met at baseline.
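The two item statistics described above can be sketched as follows. This is an illustrative example only: the answer key and response layout are hypothetical (the scoring key is not reproduced here), and the point-biserial is computed as a Pearson correlation between the dichotomously scored item and the total score on the complete set of items, which is one common way to obtain it.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns NaN if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return math.nan
    return sxy / math.sqrt(sxx * syy)


def item_analysis(responses, key):
    """Return a list of (difficulty, discrimination) tuples, one per item.

    responses: list of answer lists, one per child (e.g. ["a", "c", ...]).
    key: hypothetical list of correct options, one per item.
    """
    # Score each response as 1 (correct) or 0 (incorrect).
    scored = [[int(ans == k) for ans, k in zip(row, key)] for row in responses]
    totals = [sum(row) for row in scored]

    results = []
    for i in range(len(key)):
        item = [row[i] for row in scored]
        difficulty = sum(item) / len(item)      # proportion correct (p-value)
        discrimination = pearson(item, totals)  # point-biserial with total
        results.append((difficulty, discrimination))
    return results
```

Note that the total score here includes the item being evaluated, matching the description in the text; a corrected item-total correlation would instead exclude it.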

Following intervention, separate item statistics were calculated for the intervention and control groups. It was expected that the test characteristics would generally be stable from baseline to post-intervention for the control group, with similar patterns of difficulty and discrimination. In contrast, it was expected that difficulty (e.g., p-values representing the percent of correct responses) and discrimination values would increase for the intervention group, due to learning gains as a result of participation in the intervention. In addition, it was expected that item difficulty p-values would be higher for the intervention group than the control group as well as higher at post-intervention than the baseline measurement. Results of the item analysis at follow-up are also reported in Table 1. As expected, and similar to baseline, item difficulties (intervention group range 0.29 to 0.83 at post-intervention; control group range 0.16 to 0.83 at post-intervention) and discrimination (intervention group range 0.22 to 0.60 at post-intervention; control group range 0.13 to 0.65 at post-intervention) met item adequacy requirements. In addition, for 13 out of the 15 items, item p-values were higher for the intervention group at post-test than at baseline (for the full sample). This demonstrates knowledge gains from pre- to post-intervention. In addition, comparing intervention and control group item statistics at post-intervention only, the p-values for the intervention group were generally higher than the control group (13 out of 15 items), which further supports the impact of the intervention on knowledge gains.

Finally, we investigated distractor quality for each item, at baseline and post-intervention, by calculating the p-bis for each incorrect response. At baseline and at post-intervention (group statistics calculated separately), the p-bis values for the vast majority of distractors were less than 0.05. However, there were two items (7, 14) that each had one problematic response (distractor) at baseline and at post-intervention. This finding indicates that there may be a problem with these two items, though it is easily resolved by adjusting the one problematic response choice for each item. Furthermore, the distractor confusion may explain why these were two of the most difficult test items (see Table 1).
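A distractor analysis of the kind described above can be sketched as follows. For each incorrect option, the example correlates an indicator of having chosen that option with the total test score; a well-functioning distractor should correlate weakly or negatively with the total. The answer key, option labels, and function names are hypothetical, and this is a sketch under those assumptions rather than the procedure actually used.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns NaN if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return math.nan
    return sxy / math.sqrt(sxx * syy)


def distractor_pbis(responses, key, item_index, options=("a", "b", "c", "d")):
    """Return {option: p-bis} for every incorrect option of one item.

    responses: list of answer lists, one per child.
    key: hypothetical list of correct options, one per item.
    """
    # Total score for each child across the complete set of items.
    totals = [sum(int(a == k) for a, k in zip(row, key)) for row in responses]

    result = {}
    for opt in options:
        if opt == key[item_index]:
            continue  # skip the keyed (correct) response
        chose = [int(row[item_index] == opt) for row in responses]
        result[opt] = pearson(chose, totals)
    return result
```

A distractor with a clearly positive p-bis is being selected by higher-scoring children, which is the pattern that would flag a response choice for revision.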

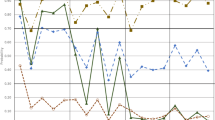

Descriptive statistics for the test as a whole are reported in Table 2 for baseline (full sample) and for post-intervention (intervention and control groups). This table reveals the difficulty of this knowledge test. Overall, mean test scores were only moderate, though participant scores ranged across the full scale, from 0 to 15 points. In addition, test scores increased from baseline to post-intervention, for both groups, though there was a larger mean increase for the intervention group. The results of the RM ANOVA also revealed greater gains from baseline to post-intervention for the intervention group than the control group, with a significant interaction between time and group (F(1, 208) = 40.41, p < 0.001, partial η² = 0.161). This partial η² corresponds to a large effect size (Cohen 1988; see Footnote 4). Figure 1 illustrates the changes from baseline to post-intervention for the two groups.
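The relationship between the reported partial η² and the Cohen's d given in the accompanying footnote can be checked with a short sketch. It assumes the common conversion through Cohen's f (f = √(η² / (1 − η²)), d = 2f), which applies to two groups of equal size; the conversion actually used is not stated, so this is offered only as a plausibility check.

```python
from math import sqrt


def partial_eta_squared_to_d(eta_sq):
    """Convert (partial) eta squared to Cohen's d via Cohen's f.

    Assumption: two equal-sized groups, so that d = 2 * f.
    """
    f = sqrt(eta_sq / (1 - eta_sq))  # Cohen's f
    return 2 * f


d = partial_eta_squared_to_d(0.161)
print(round(d, 2))  # 0.88
```

Under this assumption, the reported partial η² of 0.161 yields d ≈ 0.88, consistent with the value given in the footnote.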

Age and gender differences were also examined. There was not a significant difference between girls (M = 6.64; SD = 2.67) and boys (M = 6.13; SD = 2.26) on the total score of the KAPS, t(226) = 2.40. However, 4th graders (M = 6.70; SD = 2.61) tended to score higher on the measure than 3rd graders (M = 5.99; SD = 2.27), t(226) = 4.77, p < 0.05 (see Footnote 5).

Correlational analyses indicated that the overall score on the KAPS was moderately associated with attributions of intentionality in relational situations (r = −0.26, p < 0.01) and in instrumental situations (r = −0.36, p < 0.01) on a commonly used hostile attributional bias measure (Crick 1995; Leff et al. 2006). Similar results were found when correlating the overall score of the KAPS with another measure of social cognitive processing (Hughes et al. 2004). For instance, the KAPS was moderately associated with attributions of intentionality on the SCAP (r = −0.35, p < 0.001). In addition, there were low-moderate correlations between the overall KAPS score and teacher reports of students’ physical aggression (r = −0.19, p < 0.01) and relational aggression (r = −0.16, p < 0.05). Finally, the overall score on the KAPS was negatively related to peer nominations of overt aggression (r = −0.16, p < 0.05) and positively related to peer nominations of prosocial behavior (r = 0.19, p < 0.01).

In summary, item-level analyses suggest that 13 of the 15 items demonstrate strong psychometric properties across all item statistics, while 2 of the 15 items demonstrate adequate item statistics. These findings, combined with results suggesting that the test has strong test-retest reliability, is low-moderately associated with similar though distinct constructs (hostile attributions and ratings of student behavior by teachers and peers), and shows robust sensitivity to treatment effects, indicate that the KAPS has much potential for use with urban African American youth.

Discussion

The current study illustrates how a participatory action research framework can be used to create an empirically-supported, psychometrically-sound, and culturally-responsive social information processing (SIP) measure for use with urban predominately African American youth. This measure adds to the literature base because it was designed to have wide applicability for urban elementary school populations, so that it would likely be an appropriate assessment and/or outcome tool for any aggression prevention program with urban youth that utilizes a SIP theory or model (Crick and Dodge 1994). Prior SIP knowledge measures have limited applicability outside of the specific violence prevention program for which they were designed (e.g., Farrell et al. 2001b). Given that SIP and the developmental-ecological systems are central theories underlying many empirically-supported aggression interventions, children’s general knowledge of social and emotional processing as measured through the KAPS provides a fruitful avenue for assessment and intervention planning.

Results from the current study on the KAPS suggest that the measure has strong psychometric properties for our sample of urban African American youth. For instance, item analyses revealed that almost all items are strong across all indices including their ability to discriminate between more and less knowledgeable individuals, and that the test is a challenging one with variable levels of difficulty across items. In addition, a large number of individuals randomized to a relational and physical aggression intervention at the classroom level exhibited a significant increase on the knowledge total score from pre- to post-treatment as compared to similar youth randomly assigned to a no-treatment control condition during the intervention time period. Further, total scores on the measure are moderately associated with attributions of intentionality across two separate measures, and low-moderately associated with teacher reports of relational and physical aggression, and peer nominations of overt aggression and prosocial behavior. Finally, test-retest reliability of the overall score is relatively high, suggesting that the test is stable across short time periods. In sum, the new KAPS has favorable psychometric properties. The item analyses suggest that the items ranged in terms of their level of difficulty, discriminate well between more and less knowledgeable youth, and have strong distractor quality. Further, the measure demonstrates strong test-retest reliability and sensitivity to treatment change over time.

The use of a participatory action research approach to measurement development, in which questions are taken from empirically-supported SIP steps and modified based upon feedback from local youth and school/community stakeholders, helps to ensure that the measure is psychometrically sound and meaningful. This is extremely important within the context of measurement design for urban African American youth. For instance, a number of prior studies have demonstrated that standard psychological measurement tools are not always sensitive to the needs of ethnic minority youth (e.g., Fantuzzo et al. 1997). In fact, research suggests stronger acceptability and cultural appropriateness for urban African American youth when measures are designed by incorporating extensive stakeholder feedback (Leff et al. 2006). Thus, the current research not only resulted in a well-designed measure with widespread utility, but also illustrates how to use a partnership-based process in the design of culturally-sensitive and psychometrically strong outcome measures for urban ethnic minority youth. Further, the current study is meaningful given the widespread problems of aggression in urban school settings (Black and Krishnakumar 1998; Peskin et al. 2006; Witherspoon et al. 1997) and the fact that no prior instrument has been developed and validated for use within this key context.

This study had a number of limitations but also suggests several directions for future research. First, the psychometric study was conducted at one large urban elementary school. Although this school appears to be representative of the larger urban school district from which it was drawn, broader psychometric testing is desirable to ensure that the psychometric properties are similar across a greater number of urban elementary schools. Second, the knowledge instrument was designed specifically to be meaningful for and sensitive to the needs of 3rd–5th grade urban African American youth. While findings are promising in this regard, future research needs to be conducted to determine whether the measure would be sensitive to the needs of other cultural groups of youth. As such, it is unclear as to whether this measure would be appropriate for older or younger youth, or for youth from non-urban settings. Clearly this is an important avenue for future research on the new knowledge-based measure. Finally, additional research is needed to examine whether greater knowledge is associated with less aggressive behavior as well as other variables often explored in aggression prevention programs, such as empathy and prosocial behavior.

The results of the research may have implications for school practitioners and researchers. For instance, school-based practitioners may use this knowledge-based measure of SIP and related anger management techniques to provide feedback to schools on how well their aggression programs are impacting youth knowledge. Given that the test can be administered in small groups and is relatively brief, it is a feasible outcome assessment measure even within busy schools. It is also important to understand not only whether or not a given intervention reduces aggressive behavior, but also through which mechanisms such a reduction occurs. The KAPS offers a means to explore whether knowledge of SIP in particular mediates aggression reduction over time, an area that was largely unexplored in past research.

Notes

Forty youth completed the test at time period one. The first twenty participants were selected to participate in the re-test administration. Eighteen of these 20 youth were available on the dates of the re-test administration.

This standard terminology should not be confused with the p-value associated with significance testing.

The partial η² is equivalent to a Cohen’s d of 0.88, which is also considered a large effect (Cohen 1988).

Given that the correlations between grade and total score of the KAPS at pre- (r = 0.14) and post-intervention (r = 0.01) are relatively small, we decided that it was unnecessary to conduct a covariate analysis.

References

Black, M. M., & Krishnakumar, A. (1998). Children in low-income urban settings: interventions to promote mental health and well-being. The American Psychologist, 53, 635–646.

Bronfenbrenner, U. (1986). Ecology of the family as a context for human development: research perspectives. Developmental Psychology, 22, 723–742.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Erlbaum.

Coie, J. D., & Dodge, K. A. (1988). Multiple sources of data on social behavior and social status in the school: a cross-age comparison. Child Development, 59, 815–829.

Crick, N. R. (1995). Relational aggression: the role of intent attributions, feelings of distress, and provocation type. Development and Psychopathology, 7, 313–322.

Crick, N. R. (1996). The role of overt aggression, relational aggression, and prosocial behavior in the prediction of children’s future social adjustment. Child Development, 67, 2317–27.

Crick, N. R., & Dodge, K. A. (1994). A review and reformulation of social information-processing mechanisms in children’s social adjustment. Psychological Bulletin, 115, 74–101.

Crick, N. R., & Grotpeter, J. K. (1995). Relational aggression, gender, and social psychological adjustment. Child Development, 66, 710–722.

Crick, N. R., & Werner, N. E. (1998). Response decision processes in relational and overt aggression. Child Development, 69, 1630–1639.

Crick, N. R., Grotpeter, J. K., & Bigbee, M. (2002). Relationally and physically aggressive children’s intent attributions and feelings of distress for relational and instrumental peer provocations. Child Development, 73, 1134–1142.

Crick, N. R., Murray-Close, D., & Woods, K. (2005). Borderline personality features in childhood: a short-term longitudinal sample. Development and Psychopathology, 17, 1051–1070.

Fantuzzo, J. W., Coolahan, K., & Weiss, A. (1997). Resiliency partnership-directed research: Enhancing the social competencies of preschool victims of physical abuse by developing peer resources and community strengths. In D. Cicchetti & S. Toth (Eds.), Developmental perspective on trauma: Theory, research and intervention (pp. 463–490). Rochester: University of Rochester Press.

Farrell, A. D., Meyer, A. L., Kung, E. M., & Sullivan, T. N. (2001a). Development and evaluation of school-based violence prevention programs. Journal of Clinical Child Psychology, 30, 207–220.

Farrell, A. D., Meyer, A. L., & White, K. S. (2001b). Evaluation of Responding in Peace and Positive Ways (RIPP): a school-based prevention program for reducing violence among urban adolescents. Journal of Clinical Child Psychology, 30, 451–463.

Farrell, A. D., Meyer, A. L., Sullivan, T. N., & Kung, E. M. (2003). Evaluation of the Responding in Peaceful and Positive Ways (RIPP) seventh grade violence prevention curriculum. Journal of Child and Family Studies, 12, 101–120.

Frauenknecht, M., & Black, D. R. (1995). Social Problem-Solving Inventory for Adolescents (SPSI-A): development and preliminary psychometric evaluation. Journal of Personality Assessment, 64, 522–539.

Frey, K. S., Nolen, S. B., Van Schoiack-Edstrom, L., & Hirschstein, M. K. (2005). Evaluating a school-based social competence program: linking behavior, goals and beliefs. Journal of Applied Developmental Psychology, 26, 171–200.

Hudley, C. A. (1993). Comparing teacher and peer perceptions of aggression: an ecological approach. Journal of Educational Psychology, 85, 377–384.

Hudley, C. A. (2003). Cognitive-behavioral intervention with aggressive children. In M. Matson (Ed.), Neurobiology of aggression: Understanding and preventing violence (pp. 275–288). Totowa: Humana.

Hudley, C., & Graham, S. (1993). An attributional intervention to reduce peer-directed aggression among African American boys. Child Development, 64, 124–138.

Hudley, C., Britsch, B., Wakefield, W., Smith, T., DeMorat, M., & Cho, S. (1998). An attribution retraining program to reduce aggression in elementary school students. Psychology in the Schools, 35, 271–282.

Hughes, J. N. (2002). Commentary: participatory action research leads to sustainable school and community improvement. School Psychology Review, 32, 38–43.

Hughes, J. N., Meehan, B., & Cavell, T. (2004). Development and validation of a gender-balanced measure of aggression relevant social cognition. Journal of Clinical Child & Adolescent Psychology, 33(2), 292–302.

Kazdin, A. E. (1998). Research design in clinical psychology (3rd ed.). New York: Macmillan.

Kupersmidt, J. B., & Coie, J. D. (1990). Preadolescent peer status, aggression, and school adjustment as predictors of externalizing problems in adolescence. Child Development, 61, 1350–1362.

Leff, S. S., Power, T. J., Manz, P. H., Costigan, T. E., & Nabors, L. A. (2001). School-based aggression prevention programs for young children: current status and implications for violence prevention. School Psychology Review, 30, 343–360.

Leff, S. S., Power, T. J., Costigan, T. E., & Manz, P. H. (2003). Assessing the climate of the playground and lunchroom: implications for bullying prevention programming. School Psychology Review, 32, 418–430.

Leff, S. S., Costigan, T. E., & Power, T. J. (2004). Using participatory-action research to develop a playground-based prevention program. Journal of School Psychology, 42, 3–21.

Leff, S. S., Crick, N. R., Power, T. J., Angelucci, J., Haye, K., Jawad, A., et al. (2006). Understanding social cognitive development in context: partnering with urban African American girls to create a hostile attributional bias measure. Child Development, 77, 1351–1358.

Leff, S. S., Angelucci, J., Goldstein, A. B., Cardaciotto, L., Paskewich, B., & Grossman, M. (2007a). Using a participatory action research model to create a school-based intervention program for relationally aggressive girls: The Friend to Friend Program. In J. Zins, M. Elias, & C. Maher (Eds.), Bullying, victimization, and peer harassment: Handbook of prevention and intervention (pp. 199–218). New York: Haworth.

Leff, S. S., Simeral, Khera, G. S., & Grossman, M. B. (2007b). Developing a hostile attributional bias measure for urban African American Boys. In N. Werner (Chair), Relational Aggression and Social Information Processing in Middle Childhood: Recent Developments and Future Directions. Symposium presented at the biennial meeting of the Society for Research in Child Development, Boston, MA. (March)

Leff, S. S., Paskewich, B., & Gullan, R. L. (2008). The Preventing Relational Aggression in Schools Everyday (PRAISE) Program: Implications for school psychologists and counselors. In S. Leff (Chair), Bullying Prevention Programming: Implications for School-Based Professionals. Symposium conducted at the National Association of School Psychologists Annual Convention, New Orleans, LA. (February)

Leff, S. S., Gullan, R. L., Paskewich, Abdul-Kabir S., Jawad, A. F., Grossman, M., Munro, M. A., et al. (2009a). An initial evaluation of a culturally-adapted social problem solving and relational aggression prevention program for urban African American relationally aggressive girls. Journal of Prevention & Intervention in the Community, 37, 260–274.

Leff, S. S., Hoffman, J. A., & Gullan, R. L. (2009b). Intervention integrity: new paradigms and applications. School Mental Health, 1, 103–106.

Lochman, J. E., & Wells, K. C. (2002). Contextual social-cognitive mediators and child outcome: a test of the theoretical model in the Coping Power program. Development and Psychopathology, 14, 945–967.

Lochman, J. E., & Wells, K. C. (2003). Effectiveness of the Coping Power Program and of classroom intervention with aggressive children: outcomes at a 1-year follow-up. Behavior Therapy, 34, 493–515.

Lochman, J. E., & Wells, K. C. (2004). The Coping Power program for preadolescent aggressive boys and their parents: outcome effects at the 1-year follow-up. Journal of Consulting and Clinical Psychology, 72, 571–578.

Lochman, J. E., Boxmeyer, C., Powell, N., Qu, L., Wells, K. C., & Windle, M. (2009). Dissemination of the Coping Power program: importance of intensity of counselor training. Journal of Consulting and Clinical Psychology, 77, 397–409.

Modzeleski, W. (2007). School-based violence prevention programs: offering hope for school districts. American Journal of Preventive Medicine, 33, S107–S108.

Murray-Close, D., Crick, N. R., & Galotti, K. M. (2006). Children’s moral reasoning regarding physical and relational aggression. Social Development, 15, 345–372.

Nastasi, B. K., Varjas, K., Schensul, S. L., Silva, K. T., Schensul, J. J., & Ratnayake, P. (2000). The participatory intervention model: a framework for conceptualizing and promoting intervention acceptability. School Psychology Quarterly, 15, 207–232.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric Theory (3rd Ed.). McGraw Hill.

Olweus, D. (1991). Bully/Victim problems among schoolchildren: Basic facts and effects of a school based intervention program. In K. H. Rubin & D. Pepler (Eds.), Development and treatment of childhood aggression. Hillsdale: Erlbaum.

Orobio de Castro, B., Joop, D. B., Veerman, J. W., & Koops, W. (2002). The effects of emotion regulation, attribution, and delay prompts on aggressive boys’ social problem solving. Cognitive Therapy and Research, 27, 153–166.

Perepletchikova, F., Treat, T., & Kazdin, A. (2007). Treatment integrity in psychotherapy research: analysis of the studies and examination of the associated factors. Journal of Consulting and Clinical Psychology, 75, 829–841.

Peskin, M. F., Tortolero, S. R., & Markham, C. M. (2006). Bullying and victimization among Black and Hispanic adolescents. Adolescence, 41, 467–484.

Renshaw, P. D., & Asher, S. R. (1983). Children’s goals and strategies for social interaction. Merrill-Palmer Quarterly, 29, 353–374.

Selman, R. L., & Byrne, D. F. (1974). A structural developmental analysis of levels of role-taking in middle childhood. Child Development, 45, 803–806.

Terry, R. (2000). Recent advances in measurement theory and the use of sociometric techniques. In A. Cillessen & W. Bukowski (Eds.), Recent advances in the measurement of acceptance and rejection in the peer system: New directions in child and adolescent development (pp. 27–53). San Francisco: Jossey-Bass.

Tucker, C. M., & Herman, K. C. (2002). Using culturally-sensitive theories and research to meet the academic needs of low-income African American children. The American Psychologist, 57, 762–773.

U.S. Public Health Service. (2001). The surgeon general’s mental health supplement on culture, race and ethnicity. Washington, DC: Department of Health and Human Services.

Van Manen, T. G., Prins, P. J. M., & Emmelkamp, P. M. G. (2002). Social cognitive skills test. Houten: Bohn Stafleu Van Loghum.

Van Schoiack-Edstrom, L., Frey, K. S., & Beland, K. (2002). Changing adolescents’ attitudes about relational and physical aggression: an early evaluation of a school-based intervention. School Psychology Review, 31, 201–216.

Witherspoon, K., Speight, S., & Thomas, A. (1997). Racial identity attitudes, school achievement, and academic self-efficacy among African American high school students. Journal of Black Psychology, 23, 344–357.

Yoon, J., Hughes, J., Cavell, T., & Thompson, B. (2000). Social cognitive differences between aggressive-rejected and aggressive-non-rejected children. Journal of School Psychology, 38, 551–570.

Acknowledgements

This research was supported by three NIMH grants to the first author, K23-MH01728, R34MH072982, R01MH075787. This research was made possible, in part, by the School District of Philadelphia. Opinions contained in this report reflect those of the authors and do not necessarily reflect those of the School District of Philadelphia.

Appendix

Knowledge Measure—Girls Version

Circle the best answer while we read each question out loud to you.

-

1.

If you can’t tell if someone did something on purpose, what is the best thing to do?

-

a.

Tell the person to leave you alone.

-

b.

Assume it was an accident.

-

c.

Tell the teacher.

-

d.

Start a rumor about the other person.

-

a.

-

2.

If you hear that other kids are spreading rumors about your classmate (that is, talking about her behind her back or saying mean things about her), what is the best thing you can do?

-

a.

Tell the kids that you are going to fight them if they don’t stop spreading rumors.

-

b.

Ignore it.

-

c.

Tell the kids, “I’m not going to be mean. I’m not going to spread rumors.”

-

d.

Spread a rumor about them to get back at them.

-

a.

-

3.

Which of the following is the best way to stay calm (not get upset) if someone is mean to you?

-

a.

Stomp your feet.

-

b.

Hit a pillow.

-

c.

Count to 10.

-

d.

Tell an adult.

-

a.

-

4.

At recess your friends are playing ball but you want to play tag with them. What is the best way to get them to stop playing ball and play tag instead?

-

a.

Tell them that tag is much more fun than playing ball.

-

b.

Take the ball from them so that they will have to play tag with you.

-

c.

Wait until they seem tired of playing ball, and then ask them to play tag.

-

d.

Hang around and see if someone else suggests playing tag.

-

a.

-

5.

Ariel is standing in line in the lunchroom. Kim bumps into her from behind. Ariel feels really angry. What should Ariel do next?

-

a.

Try to calm down and think about what to do.

-

b.

Tell the teacher.

-

c.

Push Kim back.

-

d.

Say to Kim, “Watch where you’re going!”

-

a.

6. If you have an argument with your best friend, what is the best way to deal with it?

a. Just ignore it and the argument will probably go away.
b. Tell other kids not to be friends with her.
c. Tell her that you want to fight at recess.
d. Think about what her side of the story is.

7. In the lunch room one of the kids says there is not room at the table for you. How can you tell whether this kid is being mean or not?

a. Ask other kids at the table what they think.
b. Look at the kid’s face and body to learn more about the situation.
c. Ask an adult for help.
d. Tell the kid that you should be able to sit at the table and see if the kid lets you.

8. You want to play dodgeball during recess. The game has already started. What should you do?

a. Wait until the game has stopped, and then ask if you can play.
b. Watch the game until the other kids notice you.
c. Jump into the game as soon as possible.
d. Ask an adult to tell the other kids to let you play.

9. When you’re having an argument (or disagreement), what is the best reason to pay attention to the other kid’s face and body?

a. So you can tell the teacher exactly what happens.
b. Because you need to be ready to fight.
c. Because it can help you figure out how she is feeling.
d. So you can make fun of her.

10. Which of the following is the best way to stay out of a fight?

a. Only play with the kids you know at recess.
b. Don’t back down if someone is messing with you.
c. Make sure to sit with your friends at the lunch table.
d. Stop and think before you do things.

11. What is the best way to keep calm (not get upset) in an argument?

a. Walk away from the situation.
b. Take deep breaths.
c. Talk to a friend.
d. Talk to an adult.

12. Crystal bumps into Amber in the hallway. When Amber looks at Crystal, Crystal has a surprised look on her face. Do you think Crystal bumped Amber:

a. On Purpose.
b. By Accident.
c. I don’t know.

13. A kid from another classroom is bullying your friend. What is the best way that you could help stop the bullying?

a. Look for something else to do.
b. Ignore it.
c. Talk to an adult about it.
d. Tell the bully that if she doesn’t leave your friend alone, she’ll have to fight you.

14. You are waiting to play a game on the playground. Someone cuts in line in front of you. What should you do first?

a. Get back your place in line.
b. Ask the other kid why she cut in line.
c. Tell an adult.
d. Figure out how you are feeling before doing anything.

15. Brittany tells you a secret: she is wearing dirty clothes because she did not have anything clean to wear. You tell the secret to some other kids. What do you think will happen next?

a. Brittany’s feelings will be hurt.
b. Nothing. Brittany is probably used to having other kids tell her secrets.
c. Brittany will be sorry that she has told other kids’ secrets before.
d. Nothing. Brittany probably won’t find out.

Answer Key:

(1) b
(2) c
(3) c
(4) c
(5) a
(6) d
(7) b
(8) a
(9) c
(10) d
(11) b
(12) b
(13) c
(14) d
(15) a
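For readers who wish to tabulate responses electronically, the answer key can be applied as in the following minimal sketch. It assumes one point per correct item and no credit for blank or unrecognized answers; this scoring rule, along with the names `ANSWER_KEY` and `score_responses`, is an illustrative assumption and is not specified in this appendix.

```python
# Answer key transcribed from the appendix (item number -> correct option).
ANSWER_KEY = {
    1: "b", 2: "c", 3: "c", 4: "c", 5: "a",
    6: "d", 7: "b", 8: "a", 9: "c", 10: "d",
    11: "b", 12: "b", 13: "c", 14: "d", 15: "a",
}


def score_responses(responses):
    """Return the number of items answered correctly.

    Assumption (not stated in the appendix): one point per correct item;
    missing or unrecognized answers earn no credit.
    `responses` maps item number -> circled letter.
    """
    total = 0
    for item, correct in ANSWER_KEY.items():
        answer = responses.get(item)
        # Compare case-insensitively and ignore surrounding whitespace.
        if isinstance(answer, str) and answer.strip().lower() == correct:
            total += 1
    return total


# Hypothetical respondent: items 1-3 answered, the rest left blank.
example = {1: "b", 2: "a", 3: "C"}
print(score_responses(example))  # 2
```

Under this assumed rule, total scores range from 0 to 15, with higher scores indicating more correct answers.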

About this article

Cite this article

Leff, S.S., Cassano, M., MacEvoy, J.P. et al. Initial Validation of a Knowledge-Based Measure of Social Information Processing and Anger Management. J Abnorm Child Psychol 38, 1007–1020 (2010). https://doi.org/10.1007/s10802-010-9419-9

DOI: https://doi.org/10.1007/s10802-010-9419-9