Abstract

Adaptive learning gives learners control of context, pace, and scope of their learning experience. This strategy can be implemented in online learning by using the “Adaptive Release” feature in learning management systems. The purpose of this study was to use learning analytics research methods to explore the extent to which the adaptive release feature affected student behavior in the online environment and course performance. Existing data from two sections of an online pre-service teacher education course at a Southeastern university were analyzed for this study. Both sections were taught by the same instructor over a 15-week period. One section was designed with the adaptive release feature for content release and the other did not have the adaptive release feature. All other elements of the course were the same. Data from five interaction measures were analyzed (logins, total time spent, average time per session, content modules accessed, time between module open and access) to explore the effect of the adaptive release feature. The findings indicated a significant difference in average login session length between the sections with and without adaptive release. Considered as the average time of module access across the entire course, adaptive release did not systematically change when students accessed course materials. The findings also indicated significant differences between the experimental and control courses, especially for the first course module. This study has implications for instructors and instructional designers who design blended and online courses.

1 Introduction

Student learning behavior is highly variable, especially in online courses. Some students prefer to have the option to move ahead, while other students work according to the course schedule. Others struggle to get their work done by the due dates. Varying the delivery of the content to adapt to each learner’s needs is called adaptive learning. Learning management systems (LMS) are one of the most highly adopted academic technologies in higher education; 2014 survey results indicate that 85 % of faculty and 83 % of higher education students used the LMS in one or more courses (Dahlstrom et al. 2014). The increased use of the LMS makes it easy to integrate adaptive learning into a course. Prior research has demonstrated that frequency of use of the LMS is directly related to achievement (Peled and Rashty 1999; Morris et al. 2005; Campbell 2007; Dawson et al. 2008; Fritz 2011; Whitmer et al. 2012); however, the use of learning analytics on LMS data to understand how course design affects student achievement is still in its infancy. Can log files from student use of the LMS help us to understand the effectiveness or implications of changes in online course design?

An early definition of learning analytics was written by George Siemens in a blog post: “Learning analytics is the use of intelligent data, learner-produced data, and analysis models to discover information and social connections, and to predict and advise on learning” (2010). The introduction of learning analytics techniques into education research now enables the examination of student success in online learning based on students’ actual use of technology-enabled resources. The purpose of this research is to study the effects of Blackboard’s timed adaptive release feature on different measures of student interaction with the online course (logins, total time spent, average time per session, content modules accessed, time between module open and access). In the following sections, we review the literature on learning analytics followed by the literature on adaptive learning.

2 Learning Analytics

Fournier et al. (2011) define learning analytics as “the measurement, collection, analysis, and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (p. 3). Learning analytics is a relatively new field that has emerged in higher education and refers to data-driven decision making practices at the university administrative level, and also applies to student teaching and learning concerns (Baepler and Murdoch 2010). The goal of learning analytics is to assist teachers and schools in adapting educational opportunities to students’ needs and abilities (van Harmelen and Workman 2012). In their Analytics Series, van Harmelen and Workman (2012) propose that analytics can be used in learning and teaching to identify students at risk in order to provide positive interventions, provide recommendations to students in relation to course materials and learning activities, detect the need for and measure the results of pedagogic improvements, tailor course offerings, identify high performing teachers and teachers who need assistance with teaching methods, and assist in the student recruitment process. They also believe there are inherent risks in using learning analytics: students and academic staff may be unable to interpret analytics, students and academic staff may be unable to respond effectively to danger signs revealed by analytics, response interventions may be ineffectual because of misinterpreted data, and the continual monitoring of analytics could lead students to fail at developing a self-directed approach to education and learning, including failing to develop life-long learning skills.

Learning analytics focuses on methods and models to answer significant questions that affect student learning and enables human tailoring of responses, including such actions as revising instructional content, intervening with at-risk students, and providing feedback. In a recent report, Bienkowski et al. (2012) suggest that a key application of learning analytics is in the monitoring and predicting of student learning performance. They propose that learning analytics systems apply models to answer queries such as: When are students ready to move on to the next topic, and when are they falling behind in a course? They also propose to apply models to identify when students are at risk of not completing a course and what grade a student is likely to get without intervention.

Research exploring the use of adaptive release and learning analytics is still in its infancy. Armani (2004) recommended using time, user traits, user navigation behaviors, and users’ knowledge/performance as the attributes on which to base adaptive release. This study used only one of these criteria (namely, time) to release materials, a choice made for feasibility and clarity in research design.

LMSs such as Blackboard Learn and Moodle capture and store large amounts of user activity and interaction data. User tracking variables include measures like number and duration of visits to the course site, LMS tools accessed, messages read or posted, and content pages visited. Data such as these can be representative of learner behavior; however, as stated by Ferguson (2012), “the depth of extraction and aggregation, reporting and visualization functionality of these built in analytics” (p. 4) is rudimentary at best. Ferguson also highlights the fact that while learner activity and performance are tracked by the LMS, other significant data records are stored in a multitude of different places, thus straining collection efforts and fully informed analyses. Additionally, there is no available guidance for instructors to indicate which of the tracked variables are pedagogically relevant. However, in the field’s current state, a learning analytics approach “merges data collation with statistical techniques and predictive modeling that can be subsequently used to inform both pedagogical practice and policy” (Macfadyen and Dawson 2010, p. 591).

Macfadyen and Dawson (2010) collected data from the Blackboard Vista LMS to determine if time spent interacting with an LMS affected student grades. The researchers identified fifteen variables, including frequency of usage of course materials and tools supporting content delivery, as well as overall engagement and discussion actions, that were directly correlated with student final grades. Additionally, they tracked data indicating total time spent on certain tool-based activities. Their findings yielded a regression model of student success that explained more than 30 % of the variation in student final grades. Furthermore, the tracking variables analyzed identified students at risk of failure with 70 % accuracy, and correctly flagged as at risk 80 % of the students who actually did fail the course. They summarized that these predictors of success are dependent on many variables, the most salient being overall course design and student comprehension of course goals. Their study demonstrates that pedagogically relevant information can be discerned from LMS generated tracking data.

Whitmer et al. (2012) collected detailed log files, which recorded every student interaction with the LMS and the time spent on each activity and paired them with student characteristics and course performance data tables from the student information system. This study showed improved learning outcomes in the form of higher average grades on the final course project, but it also revealed a higher rate of drops, withdrawal, and final grades. They discovered a direct correlation between LMS usage and the student’s final grade. The more time students spent on the LMS, the higher their final grade.

Ryabov (2012) recognized that a majority of learning analytics related studies focused primarily on student participation in online discussion forums and student self-reported data. He utilized Park and Kerr’s method to test whether student letter grades are affected by the time students spend logged in to Blackboard. The study consisted of 286 students with various majors and found a significant relationship between the time students spent logged in to Blackboard and the grade achieved in the course. The study reported that the average student had a GPA of 2.78, had taken 22.5 credit hours before the sociology course, and spent 4.7 h a week interacting with the online course. Furthermore, the study supported the hypothesis that time spent online directly affects final grade, revealing that students who received a final grade of A (31 %) spent 5.4 h online and those receiving a B (38 %) spent 4.9 h. Only 4.5 % of the students in the study received an F; however, their grade was reflective of the 2.1 h they spent on online course work. This led to the conclusion that more time online is needed not only to improve failing grades, but also to move from average and below-average grades (C and D) to better grades (A and B) (Ryabov 2012).

Research by Beer et al. (2012) revealed a much more complex reality that could limit the value of some analytics based strategies. They accumulated data from three LMS and over 80,000 individual students across over 11,000 course offerings. The results indicated a direct relationship between student forum contributions and final grades within the Moodle LMS. The 6453 students who received a failing grade averaged 0.4 forum posts and 0.7 forum replies. Their study determined that several factors, including differing educational philosophies, staff and student familiarity with the technologies, staff and student educational backgrounds, course design, the teacher’s conception of learning and teaching, the level and discipline of the course, and institutional policies and processes contributed to the variation in final grades.

Dawson et al. (2008) at Queensland University of Technology in Australia examined the online behavior trends of a large science class that used the Blackboard Learn LMS. Their analysis aimed to identify differences between high performing students and those requiring early learning support interventions. The researchers found that students who actively participated in the online discussion forum activities achieved a mean grade 8 % higher than students who did not participate. Their results also indicated a significant difference between low and high performing students in terms of the number of online sessions attended, with low performing students attending far fewer online sessions. Dawson et al. (2008) concluded that successful students spent a greater amount of time online in both discussion and content areas of the online site.

Ifenthaler and Widanapathirana (2014) reported two case studies on developing and validating the learning analytics framework on student profiles, learning profiles and curriculum profiles. The first case study focused on studying student profiles to provide early personalized interventions to facilitate learning. The second case study focused on studying learning profiles and described how learners continually evolve and change due to various learning experiences.

The use of learning analytics is still in its infancy, yet the process can be powerful in giving meaning to interactions within a learning environment. It can provide scope for personalized learning and for the creation of more effective learning environments and experiences (Fournier et al. 2011).

3 Adaptive Learning

Adaptive learning refers to applying what is known about learners and their content interactions to change learning resources and activity in order to improve individual student success and satisfaction (Howard et al. 2006). Adaptive learning places the learner in the center of the learning experience, making content dynamic and interactive. As suggested by Shute and Towle (2003) “the goal of adaptive (e)learning is aligned with exemplary instruction: delivering the right content, to the right person, at the proper time, in the most appropriate way” (p. 113). Adaptive learning technologies offer an advanced learning environment that can meet the needs of different students. These types of systems model a student’s knowledge, objectives, and preferences to adapt the learning environment for each student to best support their individual learning (Kelly and Tangney 2006). However, the challenge in improving student learning and performance is dependent on correctly identifying individual learner characteristics.

Adaptive learning employs an active strategy that gives learners control of context, pace, and scope of the learning experience. Lin et al. (2005) describe a teacher-oriented adaptive system as having five specific functionalities related to curriculum setting (see Table 1). They suggest that such an environment with adjustable functions not only supports varying teaching styles, but also allows for calculation and prediction of student performance.

Learning management systems are powerful integrated systems that support a myriad of activities performed by instructors and students during the learning process. Common features of a learning management system are communication tools, navigational tools, course management tools, assessment tools and authoring tools (Robson 1999). Typically, the LMS assists the instructors to post announcements to students enrolled in a course, provide instructional materials, facilitate discussions, lead chat sessions, communicate with students, conduct assessments and monitor and grade student progress. Students use the LMS to access learning resources, communicate with the instructor and their peers and collaborate on projects and discussions. However, the majority of LMS applications are used in a “one size fits all students” approach that lacks personalized support (Brusilovsky 2004).

Adaptive learning provides a customized context for students to interact with LMS resources and activities. Magoulas et al. (2003) contend that the “adaptive functionality is reflected in the personalization of the navigation and content areas and is implemented through the following technologies: Curriculum Sequencing allows the gradual presentation of the outcome concepts for a learning goal based on student’s progress; Adaptive Navigation Support helps students navigate in the lesson contents according to their progress; and Adaptive Presentation offers individualized content depending on the learning style of the student” (p. 516).

The Blackboard Learn LMS has an adaptive feature called “Adaptive Release”. This tool allows instructors and course designers to release course related content based on default rules or ones they create. Put simply, this allows instructors to control what content is made available to which students and under what conditions they are allowed to access it. Rules can be created for individuals or groups, based on set criteria related to date, time, activity scores or attempts, and the review status of other items in the course. The tool allows instructors to release content gradually and avoid overwhelming students, some of whom may need more time to process information. While there are several ways to adapt students’ learning, in this study we focused on adapting content based on “time” of release, which falls under the “Adaptive Curriculum Setting” feature described by Lin et al. (2005) and includes selection of course properties and arrangement of course materials.

4 Purpose of the Study

The purpose of this research is to study the effects of Blackboard’s timed adaptive release feature on student interaction and performance within the online course. Specifically the following questions are answered in this study:

1. Is there consistency between different measures of student interaction (i.e., logins, total time spent, average time per session, content modules accessed, time between module open and access) with the online course?

2. Do students from the timed adaptive and timed non-adaptive release course sections interact differently with the online course?

3. Do students from the timed adaptive and timed non-adaptive release course sections perform differently with the online course?

4.1 Hypothesis

Null Hypothesis H01

There is no significant difference between groups (with timed adaptive release and without timed adaptive release) on student interaction as measured by logins, total time spent, average time per session, content modules accessed, and time between module open and access in an asynchronous online course.

Null Hypothesis H02

There is no significant difference between groups (with timed adaptive release and without timed adaptive release) on student performance as measured by final grade in an asynchronous online course.

5 Method

5.1 Context

Data from two sections of an online pre-service teacher education course (EDN 303—Instructional Technology) from a Southeastern university in the United States were analyzed for this study. Both the sections of the course were taught by the same instructor.

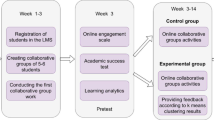

5.1.1 Section 1: Group with Timed Adaptive Release

The course section was taught over a 15-week period and had seven modules, each of which included a variety of instructional components including an e-learning module, a quiz, and hands-on projects. This course section used the timed adaptive release feature, releasing instructional materials only after a certain date. This course section had 25 students enrolled, of which 23 (92 %) were Caucasian, 1 (4 %) was African American, and 1 (4 %) was Hispanic/Latino; 20 (80 %) were Female and 5 (20 %) were Male. The average GPA prior to this course for the students in this section was 3.18 (Fig. 1).

5.1.2 Section 2: Group Without Timed Adaptive Release

The course sections were identical to each other except for the timed adaptive release feature. This course section did not use the timed adaptive release feature, and all the materials were available to the students from the start of the semester. There were 18 students enrolled in this section of the course, of which 16 (89 %) were Caucasian and 2 (11 %) were African American; 17 (94 %) were Female and 1 (6 %) was Male. The average GPA prior to this course for the students in this section was 3.30.

5.2 Participants

Student demographics are summarized in Table 2. Across the course sections with and without timed adaptive release there were 43 students, Male = 6 (14 %) and Female = 37 (86 %). There were 39 (91 %) Caucasian, 3 (7 %) African American, and 1 (2 %) Hispanic student in the course sections. About half (51 %) of the students were from pre-elementary education.

5.3 Data Sources

There were three sets of data analyzed in this study: (1) Blackboard course use data; (2) gradebook data; (3) student demographic data. Blackboard course reports were run from the Blackboard v9 instructor reports feature to extract the detailed access data. Individual reports were run for each student and the results exported into a single Excel file. Likewise, the gradebook was exported into an Excel file at the end of the course. The student demographic data (race/ethnicity, major, etc.) were retrieved by the registrar’s office from the database of record, the Student Information System. These data sources were merged into a single file using unique student identifiers and analyzed using Stata.
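The merge step described above can be sketched as follows. This is a minimal illustration only: the study performed the merge on Excel exports and analyzed the result in Stata, so the Python form, the function name `merge_by_student`, the field names, and the sample records are all hypothetical.

```python
def merge_by_student(usage_rows, grade_rows, demo_rows, key="student_id"):
    """Inner-join three lists of dict records on a shared student identifier.

    Only students present in all three sources are kept (an assumption;
    the source does not say how unmatched records were handled).
    """
    grades = {row[key]: row for row in grade_rows}
    demos = {row[key]: row for row in demo_rows}
    merged = []
    for row in usage_rows:
        sid = row[key]
        if sid in grades and sid in demos:
            combined = {}
            combined.update(demos[sid])
            combined.update(grades[sid])
            combined.update(row)
            merged.append(combined)
    return merged


# Hypothetical records standing in for the three exported data sources.
usage = [{"student_id": "s01", "logins": 42}, {"student_id": "s02", "logins": 17}]
grades = [{"student_id": "s01", "final_grade": "A"}, {"student_id": "s02", "final_grade": "B+"}]
demos = [{"student_id": "s01", "major": "Elementary Education"}]

merged = merge_by_student(usage, grades, demos)
```

Here the second student is dropped because no demographic record matches, which shows why a unique identifier shared across all sources matters for this kind of analysis.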

The measures in Table 3 were used to measure the frequency and time of student access to course materials and activities in this study. These measures are justified as the main indicators used in previously cited research.

5.4 Data Transformations and Analysis

Once the data was imported in the source format, it was transformed to provide variables that were better suited to the analysis planned. The course access data was reduced from 156 total items (which included overview pages and other items) to 35 core content materials. Four students who withdrew from the course were removed from the data set due to incomplete data about these students. One extreme outlier in student time was identified and also removed from the sample, resulting in the total population of 39 students.

Initial descriptive analysis was conducted to characterize the study results and to ensure that the data met the assumptions required for correlations; namely, that there were sufficient observations for the number of variables included in the analysis, that the data was not missing entire observations or individual values, and that the values were normally distributed in the population. This analysis was conducted on all data sources and individual variables considered for inclusion in the analysis.

A variable called “Module Access Difference” was created and calculated as the difference between the scheduled date of that item in the syllabus and the date the student first accessed the item. In the case of the control group, students could have accessed materials before the intended start time, resulting in a negative value (i.e., “early”). In the case of the experimental group, the students could only access the materials at or after that date, resulting in only non-negative values. This variable was calculated for each of the content items accessed. As discussed in more detail in the results section, we found that there was low variation in student grade in both the control and the experimental group, which prevented using grade as an outcome variable. As a replacement, “module access difference” was used as the outcome variable. Although this variable does not describe changes in learning outcomes, it does indicate differences in student behavior in terms of when students accessed course materials and activities. Such a difference would indicate a meaningful, substantive effect of using timed adaptive release. Further empirical research or literature review could establish the relationship between these changes and student learning outcomes. In addition to final grade, student demographic characteristics were of interest but were found to have insufficient variation among students in the course to be included in the analysis.
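As a minimal sketch of how the “Module Access Difference” variable could be computed, assuming the difference is expressed in whole days (the source does not state the unit) and using hypothetical dates and a hypothetical function name:

```python
from datetime import date


def module_access_difference(scheduled, first_access):
    """Days from the scheduled date to first access.

    Negative values mean the item was opened early, which is only
    possible when materials are available before the scheduled date.
    """
    return (first_access - scheduled).days


# Hypothetical scheduled date for one content item.
scheduled = date(2013, 1, 14)

early = module_access_difference(scheduled, date(2013, 1, 11))  # opened before schedule
late = module_access_difference(scheduled, date(2013, 1, 16))   # opened after schedule
```

Under this sign convention, a student in the control section can produce a negative value, while a student in the timed adaptive release section cannot.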

A one-way analysis of variance was conducted between the experimental condition and overall course module access difference to test for significant differences between the standard course and the course with timed adaptive release applied. Histograms of access differences for each course were created to illustrate the differences between courses in a more approachable manner.
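The one-way analysis of variance described above compares between-group and within-group variation. The sketch below computes the F statistic by hand for illustration; it is not the Stata procedure the study used, and the function name and sample values are hypothetical.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))

    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical module access differences (days) for two small groups.
control = [-3, -1, 0, 2]
adaptive = [1, 2, 3, 6]

f_stat, df_between, df_within = one_way_anova_f([control, adaptive])
```

With two groups, the between-groups degrees of freedom are 1, which matches the form F(1, 37) reported for the 39 students in the results.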

To deepen this course-wide analysis and examine the impact at different points of the course, a multivariate analysis of variance test was conducted between the experimental condition and the access differences for each module. As in the course-wide access data, histograms for differences for modules with significant effects were created to display the results in a more approachable manner.

After completing tests for experimental condition, a pairwise correlation matrix was created with a larger set of variables describing LMS activity (time spent in course, number of course logins, number of items accessed, module access difference, experimental/control condition). This analysis identified additional relationships between measures of student activity in the course.
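A pairwise correlation matrix of this kind can be sketched with Pearson coefficients. The code below is an illustration under stated assumptions: the source does not name the correlation coefficient used, and the variable names and values are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def correlation_matrix(columns):
    """Map every pair of column names to its Pearson correlation."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical activity measures for four students.
data = {
    "logins": [10, 20, 30, 40],
    "items_accessed": [20, 25, 33, 41],
    "avg_session_minutes": [30, 22, 15, 12],
}

matrix = correlation_matrix(data)
```

In this made-up sample, logins correlate positively with items accessed and negatively with average session length, the same direction of relationships the analysis was designed to detect.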

6 Results

Descriptive data showing the interactions of students with course resources and activities are displayed in Table 4. Table 4 shows combined data from the 39 students enrolled in both course sections until the end of the term. Course logins has a very large range and standard deviation, showing that some students logged in frequently and others logged in less frequently, with a distribution that does not follow a normal bell curve. Interestingly, the values for total items accessed and time spent had a much lower variation, indicating that although students may have had high variability in the frequency of login, they spent more similar amounts of effort with course materials. This is especially the case with the number of “total items” accessed, which indicates how many of the course content modules were accessed by students. This variable has a relatively low range and small standard deviation, indicating that most of the students accessed the course materials (44.92 out of 52 total items). The total access difference has a high range, which is expected given the experimental condition, which affects when students can access course materials.

End of semester course grades were used as the data set to measure student performance. There was very little dispersion in grades in either course, which is common in courses for Education majors (e.g., two-thirds of the students earned a ‘B+’ or better). As a result, we were unable to run analysis to determine if there were differences between the timed adaptive and no timed adaptive release treatments based on course grades.

In addition, there was not much variability in the demographics of the course participants. Hence we were unable to run analysis to correlate demographics with the different course access and interaction variables. The course was skewed female (87 %), predominantly white (92 %), and largely of immediate post-high school age groups (71 % < 24 years old) which is typical for pre-service teacher education courses.

6.1 Consistency Between Measures of Student Interaction

In Table 5, the pairwise correlation between the key measures of student interaction with course materials is displayed. Overall, there is consistency between the measures but not equivalence between them, which demonstrates some interesting student behaviors. The number of logins and content items accessed had the strongest effect size, indicating that students who logged in more frequently were more likely to access each of the content items in the course. Students who logged in more frequently were also more likely to access materials early in the timed adaptive release course.

Weaker effects were found between the number of logins and total time spent, suggesting that students logging in more frequently spent more time, but not a large amount of additional time. This observation is corroborated by the negative result for average session length; students logging in more frequently usually had shorter lengths of time spent in each session. Taken as a whole, these results show that there is consistency between these behaviors, along with some different expressions of student activity within the LMS.

6.2 Course Section Comparison

ANOVA results indicated that there was a significant difference between the use of timed adaptive release and average login session length at the p < .05 level [F(1,37) = 6.10, p = 0.0183], with an adjusted R2 of 0.12. This result indicates that students in the timed adaptive release course spent more time per session than students in the control course. Although the low effect size suggests that limited conclusions should be drawn, this result illustrates that students with earlier potential access did spend more time in the course materials, a positive effect of time on task.
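The reported adjusted R2 can be cross-checked against the reported F statistic using standard identities for a single-predictor model. The sketch below assumes n = 39 observations, implied by the degrees of freedom F(1, 37); the function names are hypothetical.

```python
def r_squared_from_f(f_stat, df_model, df_resid):
    """Recover R^2 from an F statistic and its degrees of freedom."""
    return (f_stat * df_model) / (f_stat * df_model + df_resid)


def adjusted_r_squared(r2, n_obs, n_predictors):
    """Adjust R^2 for sample size and number of predictors."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Values taken from the reported result F(1, 37) = 6.10.
r2 = r_squared_from_f(6.10, df_model=1, df_resid=37)
adj = adjusted_r_squared(r2, n_obs=39, n_predictors=1)
```

The unadjusted value comes to roughly 0.14 and the adjusted value rounds to 0.12, consistent with the figure reported above.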

Considered as the average across the entire course, timed adaptive release did not systematically change when students accessed course materials. However, the results of the MANOVA test (Roy’s largest root = 1.2629, F(1,37) = 5.41, p < 0.001) indicated significant differences between the experimental and control courses if individual modules were considered as separate dependent variables. In Table 6 the results by module show that this result was caused by different access of the first course module only. Later modules were accessed with a much smaller difference, suggesting that when students are in stream with multiple commitments, they are less likely to access materials early.

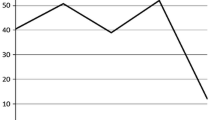

Almost 50 % of the students in the non-timed adaptive release course accessed the course prior to the start date, with less than 25 % accessing the course more than 5 days after the start of the course. In the timed adaptive release course, approximately 50 % of the students accessed the course immediately after the course start, with the remainder accessing the course more than 5 days after the start of the course. This access may have resulted in different preparation and approach to the course resources. This behavior was not evident in later modules. The difference in access times for Module 1 between the non-timed adaptive and timed adaptive release course sections is illustrated in Figs. 2 and 3.

7 Discussion

The first hypothesis in this study addressed whether there was a significant difference between groups (with timed adaptive release and without timed adaptive release) on student interaction as measured by logins, total time spent, average time per session, content modules accessed, and time between module open and access in an asynchronous online course. Learning analytics data from two groups of students in similar courses, one with the time-based adaptive release feature enabled and one in which it was not enabled, were analyzed in this study.

The overall findings indicate that students only access materials early for the first course module. This difference may indicate a likelihood of students to “browse” course materials in advance of the course start dates, as they are preparing for the term, determining expectations for different courses, and trying to anticipate the experience and requirements for courses that term. It is notable that this browsing behavior only extended to the first course module, as the students in the control group had access to all course modules. This behavior may indicate that this browsing activity is shallow and suggests that course design should include key materials at an easily accessible point in the course design.

The use of timed adaptive release did not create a “gate” that led students to access materials when they were released, as might be hoped as one impact of timed adaptive release. However, the slight increase in session length for timed adaptive release is a sign of a positive educational impact of this intervention: with more average time per session, it is likely that students had increased exposure to learning materials and activities. By limiting access to future course modules, students had a lower amount of total potential resources, and appear to have spent more time when they were logged in.

In this study, time spent accessing the materials, total items accessed, and login attempts were the three data points analyzed to answer the research question on differences in student engagement between the timed adaptive release and no time-based adaptive release treatments. The significant correlations between the variables selected in this study indicate that they are indeed useful measures through which student behavior can be observed. The differences and low to moderate effect sizes indicate that they measure different underlying activities, which is useful for attempting to understand more deeply how students engage with LMS-provided materials.
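As an illustration of how such interaction measures might be derived, the sketch below aggregates per-session records into logins, total time, and average time per session for each student. It assumes a simplified log format of (student identifier, session minutes) pairs; the function name, field names, and sample records are hypothetical and do not reflect the LMS export used in the study.

```python
from collections import defaultdict


def interaction_measures(sessions):
    """Aggregate session records into per-student interaction measures.

    sessions: iterable of (student_id, minutes) tuples, one per login session.
    Returns a dict mapping student_id to logins, total minutes, and
    average minutes per session.
    """
    totals = defaultdict(lambda: {"logins": 0, "total_minutes": 0.0})
    for student_id, minutes in sessions:
        totals[student_id]["logins"] += 1
        totals[student_id]["total_minutes"] += minutes
    return {
        sid: {**m, "avg_minutes_per_session": m["total_minutes"] / m["logins"]}
        for sid, m in totals.items()
    }


# Hypothetical illustration records; these are NOT data from the study.
sessions = [("s1", 30), ("s1", 10), ("s2", 45)]
measures = interaction_measures(sessions)
print(measures)
```

Keeping the three measures side by side makes it easy to see that they can diverge: a student with many short sessions and a student with few long ones may have similar total time but very different averages.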

The second hypothesis in this study was that there would be no significant difference between groups (with timed adaptive release and without timed adaptive release) in student performance, as measured by final grade in an asynchronous online course. Because there was very little dispersion in final grades in either course, we were unable to run an analysis to determine whether course grades differed between the timed adaptive and no timed adaptive release treatments.

Lin et al. (2005) recommended five different adaptive features beneficial for online courses: adaptive curriculum setting, adaptive co-teaching and privileges setting, adaptive reward setting, adaptive assessment setting, and adaptive information sharing setting. The timed adaptive release feature used in this study was one aspect of adaptive curriculum setting. The timed-release functionality can also be used for adaptive assessment setting and has been recommended by Kleinman (2005) as a strategy to encourage academic honesty, especially when students are given online open-book and/or essay exams. Timed adaptive release can also support co-teaching and privileges setting if multiple instructors are teaching the same online course at varied times. It can also be used with adaptive reward setting: with the emergence of badges in online courses, the timed completion of online course activities can be used to generate badges that increase student motivation while participating in online courses. Timed adaptive release can also be combined with adaptive information sharing, where instructors can open discussion forums at various times and may set different privileges for different students.

Another area that is related to adaptive learning is cognitive modeling (Anderson 1983; Elkind et al. 1990; Wenger 1987). Cognitive modeling can be used to simulate or predict human behavior or performance on tasks similar to the ones modeled. It draws on artificial intelligence applications such as expert systems, natural language processing, and neural networks. While the LMSes currently on the market do not include cognitive modeling and intelligent tutoring features (Sonwalkar 2007), these may appear in the near future.

7.1 Limitations

This study had a small number of students with relatively low demographic diversity. It was also limited to a single course subject. The same findings may not hold in another version of the course, with different student demographics, or in other academic subjects. A small distribution in grades (or other outcome measures) prevented analysis of the impact of any changes in behavior on grades, although given the small changes in behavior it is unlikely that changes in outcomes would have resulted. From a course design perspective, time-based adaptive release is only one of many ways that this feature can be implemented, and different results may occur if other release conditions (e.g., content mastery) are used to trigger the release. No other data were collected directly from the students through methods such as surveys or interviews. Because this study relied solely on the learning analytics approach to investigate adaptive learning, the exact reasons for early access are unknown.

7.2 Implications for Future Research and Improvement of Practice

The limited findings from timed release in this study suggest that performance-based adaptive release, along with time-based adaptive release, should be investigated as course design functionality that could help students better plan their use of time with online course materials. The lack of a difference attributable to timed release suggests that relying on timing as a method to affect student behaviors has limited impact. Learning Analytics research that investigates changes in course behaviors as well as outcomes based on alternate adaptive release techniques could provide greater insights into the potential of adaptive release to affect student interaction and learning.

For Learning Analytics, this study suggests a methodology and approach that can be used to investigate other types of course design changes. Through descriptive data about how students interact with course materials, we can examine that interaction at a level of detail not available through outcome-only research. Time of access, time on task, login frequency, and similar measures can be used as proxies for learning outcomes that are more meaningful than grades and that reflect a wider spectrum of behaviors. For example, if a change in course design (such as increased multimedia) is related to students logging in more frequently, it can be inferred that students are more engaged in the course, independent of the outcomes reflected in grades.

This study has implications for instructors, instructional designers, and any other stakeholders interested in better understanding the impact of online learning design on student behaviors and outcomes. Although the study found relatively small differences in behavior between the two groups, it still informs designers and instructors about whether it is important to provide adaptive release of content with respect to time. Future studies should replicate this work with a larger sample, and adaptive release should also be tested with other triggers such as performance-based release and release based on student characteristics. Studies should also be conducted with data sets that include a wide grade dispersion, so that the effect on student performance can be measured, and with data sets that include varied student demographics.

References

Anderson, J. R. (1983). The architecture of cognition. New York, NY: Psychology Press.

Armani, J. (2004). Shaping learning adaptive technologies for teachers: A proposal for an adaptive learning management system. In Proceedings of IEEE international conference on advanced learning technologies, 2004 (pp. 783–785).

Baepler, P., & Murdoch, C. J. (2010). Academic analytics and data mining in higher education. Retrieved from http://dspaceprod.georgiasouthern.edu:8080/jspui/bitstream/10518/4069/1/_BaeplerMurdoch.pdf.

Beer, C., Jones, D., & Clark, D. (2012). Analytics and complexity: Learning and leading for the future. In ASCILITE conference (Vol. 2012, No. 1). Retrieved from http://beerc.files.wordpress.com/2009/11/ascillite2012_final_submission.pdf.

Bienkowski, M., Feng, M., & Means, B. (2012). Enhancing teaching and learning through educational data mining and learning analytics: An issue brief (pp. 1–57). Washington, DC: Office of Educational Technology, US Department of Education.

Brusilovsky, P. (2004). Knowledge tree: A distributed architecture for adaptive e-learning. In Proceedings of the 13th international World Wide Web conference on Alternate track papers & posters. ACM (pp. 104–113).

Campbell, J. P. (2007). Utilizing student data within the course management system to determine undergraduate student academic success: An exploratory study. A. G. Rud. United States—Indiana, Educational Studies.

Dahlstrom, D. E., Brooks, C., & Bichsel, J. (2014). The current ecosystem of learning management systems in higher education: Student, faculty, and IT perspectives. Research report. Louisville, CO: ECAR, September 2014. Available from http://www.educause.edu/ecar.

Dawson, S., McWilliam, E., & Tan, J. P. L. (2008). Teaching smarter: How mining ICT data can inform and improve learning and teaching practice. In ASCILITE 2008, Melbourne (pp. 221–230).

Elkind, J. I., Card, S. K., & Hochberg, J. (Eds.). (1990). Human performance models for computer-aided engineering. San Diego, CA: Academic Press.

Ferguson, R. (2012). The state of learning analytics in 2012: A review and future challenges. Technical Report KMI-12-01, Knowledge Media Institute, The Open University, UK. Retrieved from http://kmi.open.ac.uk/publications/techreport/kmi-12-01.

Fournier, H., Kop, R., & Sitlia, H. (2011). The value of learning analytics to networked learning on a personal learning environment. In 1st international conference on learning analytics and knowledge, Banff, Canada, 27 February–1 March 2011.

Fritz, J. (2011). Classroom walls that talk: Using online course activity data of successful students to raise self-awareness of underperforming peers. The Internet and Higher Education, 14(2), 89–97.

Howard, L., Remenyi, Z., & Pap, G. (2006). Adaptive blended learning environments. In International Conference on Engineering Education (pp. 23–28).

Ifenthaler, D., & Widanapathirana, C. (2014). Development and validation of a learning analytics framework: Two case studies using support vector machines. Technology, Knowledge and Learning, 19(1–2), 221–240.

Kelly, D., & Tangney, B. (2006). Adapting to intelligence profile in an adaptive educational system. Interacting with Computers, 18(3), 385–409.

Kleinman, S. (2005). Strategies for encouraging active learning, interaction, and academic integrity in online courses. Communication Teacher, 19(1), 13–18.

Lin, C. B., Young, S. S. C., Chan, T. W., & Chen, Y. H. (2005). Teacher-oriented adaptive Web-based environment for supporting practical teaching models: a case study of “school for all”. Computers & Education, 44(2), 155–172.

Macfadyen, L. P., & Dawson, S. (2010). Mining LMS data to develop an “early warning system” for educators: A proof of concept. Computers & Education, 54(2), 588–599.

Magoulas, G. D., Papanikolaou, Y., & Grigoriadou, M. (2003). Adaptive web-based learning: accommodating individual differences through system’s adaptation. British Journal of Educational Technology, 34(4), 511–527.

Morris, L., Finnegan, C., & Wu, S. (2005). Tracking student behavior, persistence, and achievement in online courses. Internet and Higher Education, 8, 221–231.

Peled, A., & Rashty, D. (1999). Logging for success: Advancing the use of WWW logs to improve computer mediated distance learning. Journal of Educational Computing Research, 21(4), 413–431.

Robson, R. (1999). Course support systems: The first generation. International Journal of Telecommunications in Education, 5(4), 271–282.

Ryabov, I. (2012). The effect of time online on grades in online sociology courses. MERLOT Journal of Online Learning and Teaching, 8(1), 13–23.

Shute, V., & Towle, B. (2003). Adaptive e-learning. Educational Psychologist, 38(2), 105–114.

Sonwalkar, N. (2007). Adaptive learning: A dynamic methodology for effective online learning. Distance Learning, 4(1), 43–46.

van Harmelen, M., & Workman, D. (2012). Analytics for learning and teaching. CETIS Analytics Series, 1(3), 1–41.

Wenger, E. (1987). Artificial intelligence and tutoring systems: Computational and cognitive approaches to the communication of knowledge. Los Altos, CA: Morgan Kaufmann.

Whitmer, J., Fernandes, K., & Allen, W. (2012). Analytics in progress: Technology use, student characteristics, and student achievement. EDUCAUSE Review. Retrieved online from http://www.educause.edu/ero/article/analytics-progress-technology-use-student-characteristics-and-studentachievement.

Cite this article

Martin, F., Whitmer, J.C. Applying Learning Analytics to Investigate Timed Release in Online Learning. Tech Know Learn 21, 59–74 (2016). https://doi.org/10.1007/s10758-015-9261-9

DOI: https://doi.org/10.1007/s10758-015-9261-9