Abstract

We introduce a design-based research framework, learning axes and bridging tools, and demonstrate its application in the preparation and study of an implementation of a middle-school experimental computer-based unit on probability and statistics, ProbLab (Probability Laboratory, Abrahamson and Wilensky 2002 [Abrahamson, D., & Wilensky, U. (2002). ProbLab. Northwestern University, Evanston, IL: The Center for Connected Learning and Computer-Based Modeling, Northwestern University. http://www.ccl.northwestern.edu/curriculum/ProbLab/]). ProbLab is a mixed-media unit, which utilizes traditional tools as well as the NetLogo agent-based modeling-and-simulation environment (Wilensky 1999) [Wilensky, U. (1999). NetLogo. Northwestern University, Evanston, IL: The Center for Connected Learning and Computer-Based Modeling http://www.ccl.northwestern.edu/netlogo/] and HubNet, its technological extension for facilitating participatory simulation activities in networked classrooms (Wilensky and Stroup 1999a) [Wilensky, U., & Stroup, W. (1999a). HubNet. Evanston, IL: The Center for Connected Learning and Computer-Based Modeling, Northwestern University]. We will focus on the statistics module of the unit, Statistics As Multi-Participant Learning-Environment Resource (S.A.M.P.L.E.R.). The framework shapes the design rationale toward creating and developing learning tools, activities, and facilitation guidelines. The framework then constitutes a data-analysis lens on implementation cases of student insight into the mathematical content. Working with this methodology, a designer begins by focusing on mathematical representations associated with a target concept—the designer problematizes and deconstructs each representation into a pair of historical/cognitive antecedents (idea elements), each lying at the poles of a learning axis. 
Next, the designer creates bridging tools, ambiguous artifacts bearing interaction properties of each of the idea elements, and develops activities with these learning tools that evoke cognitive conflict along the axis. Students reconcile the conflict by means of articulating strategies that embrace both idea elements, thus integrating them into the target concept.

“Such problems [—especially problems like that of composing a poem, inventing a machine, or making a scientific discovery—] are intimations of the potential coherence of hitherto unrelated things, and their solution establishes a new comprehensive entity, be it a new power, a new kind of machine, or a new knowledge of nature” (Polanyi 1967, p. 44).

“Not that I mean as sufficing for invention the bringing together of objects as disparate as possible; most combinations so formed would be entirely sterile. But certain among them, very rare, are the most fruitful of all” (Poincaré 1897/2003, p. 51).

1 Introduction

1.1 Objective

The objective of this paper is to contribute to mathematics-education research a new design-oriented framework for fostering conceptual understanding. The proposed framework outlines a principled design-research methodology for implementing constructivist/constructionist pedagogy in the form of concept-targeted learning environments, including objects, activities, and facilitation emphases for teachers. These learning environments are designed to structure opportunities for students to reinvent mathematical concepts through problem-solving interactions with artifacts that problematize for students their mathematical understandings and foster reflection that stimulates insight. The framework guides designers along a research path from diagnosis of a design problem through to design of learning tools and, eventually, analysis of data from implementing the design. The framework emerged over several projects (Abrahamson 2004; Abrahamson and Cendak 2006; Abrahamson and Wilensky 2004a; Fuson and Abrahamson 2005), yet we focus in this paper on a single project, in which we targeted basic statistical concepts. To demonstrate the proposed framework, we explain the design rationale, objects, and activities and then present empirical data of students engaging in these activities and interpret student insight in light of the framework. Ultimately, the framework expresses a reflection on our design practice, so as both to ground this practice in the learning sciences and to formulate guidelines for design in diverse settings and for a range of mathematical content domains.

In the remainder of this Introduction, we explain the intellectual roots of our design-oriented framework (e.g., Freudenthal 1986; Papert 1991; Piaget and Inhelder 1952; von Glasersfeld 1987). In the Design section, we situate the design rationale of our experimental middle-school unit (Abrahamson and Wilensky 2002) within previous work (the Connected Probability project, Wilensky 1997), explain our choice of technology-based learning environments for implementing the unit (NetLogo and HubNet), and then detail the focal activity discussed in this paper (S.A.M.P.L.E.R., Abrahamson and Wilensky 2004a). In the Results and Discussion section, we present a set of episodes from our empirical data. Finally, we discuss implications of our proposed framework for theory of learning and design.

1.2 Conceptual composites, learning axes, bridging tools, and learning issues: a generic example

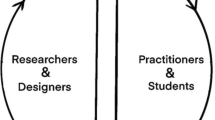

We research students’ mathematical cognition, focusing on: the nature of mathematical intuition, reasoning, and learning; the relations among mathematical intuition, reasoning, and learning; and the roles that carefully designed artifacts may play in supporting students’ development of deep conceptual understanding grounded in tacit perceptual and experiential knowledge as well as in their prior mathematical understandings. That is, we are interested in investigating the relations between human reasoning and artifacts within social contexts created specifically to facilitate mathematical learning, such as classrooms. But we are also interested in effecting change, that is, in leveraging insight into students’ mathematical learning so as to increase mathematical literacy in the general population. Thus, our work lies at the intersection of theory and practice: our “deliverables” are both models of mathematical learning and mathematical artifacts that we design and research. We have therefore found design-based research (see Fig. 1, below) a suitable methodological approach for coordinating our investment in both theory and practice and for making the two synergistic (Brown 1992; Cobb et al. 2003; Collins 1992; Collins et al. 2004; Edelson 2002). As we now explain, our methodology is informed by considerations of how people develop mathematical tools and work with them.

Fig. 1 Design-for-learning activity framework. A theory of design enfolded within a theory of learning (top) is applied to mathematical content in the form of domain analysis (middle), which then guides the iterated development and research of experimental learning tools in a sequence of empirical studies with participant students.

1.2.1 Overview of research activity

Our research on mathematical cognition encompasses a broad set of issues pertaining to the invention, use, and learning of mathematical concepts. We are particularly interested in the artifacts—mathematical representations, computing devices, and ritualized procedures around these objects—that mediate numeracy practices and, possibly, conceptual understanding. For example, we examine reciprocal relations between media, forms of representations, and content (Wilensky and Papert 2007) and how these impact historical and individual learning. Thus, in preparing to design learning tools for a particular mathematical concept, we study the historical and contemporary mathematical objects that have become canonical in supporting cultural practice associated with the concept. Also, we investigate how learners come to engage in activities with these objects.

A study of phylogenic, ontogenetic, and ethnomethodological aspects of mathematical practice related to a target concept is relevant to design. Constructivist designers have argued that, just as cultures took millennia to evolve these cognitively ergonomic artifacts, so students, who share with their ancestors the capacity to see, touch, count, and imagine, need opportunities to re-invent these artifacts (Artigue 2002; Gravemeijer 1994; Wilensky 1997). Only thus, we believe, can students appropriate these objects as thinking tools; only thus do students develop trust in their personal mathematical agency; only thus do students develop critical numeracy (see also Gal 2005).

These virtues—mathematical fluency, intellectual confidence, and critical reasoning—are assets for citizens of democratic society. Yet, at the same time, we cannot expect each student to reinvent the wheel. We are therefore searching for an optimal balance between “free range” environments and overly prescriptive curricula (von Glasersfeld 1992)—a balance that would resonate with professional-development needs and enable wide distribution of instructional units.

This section introduces four key design-research constructs. These constructs have emerged in our work as useful for organizing the development of mathematical objects as well as research on student engagement with these objects: (1) conceptual composite; (2) learning axis; (3) bridging tool; and (4) learning issue. We will use a generic example that should be adequate to convey the meaning and application of these constructs. The constructs will later be demonstrated within the context of more complex mathematical content—probability and statistics—in subsequent sections that discuss the development and research of the design that is the focus of this paper. But before we get to the heart of the paper it is useful to step back and ask: What would we want of a design-research framework that purported to accountably implement constructivist/constructionist pedagogical philosophy?

1.2.2 Criteria toward creating a design-for-learning research framework

In order for the desired design framework to accountably implement constructivist/constructionist pedagogical philosophy, it would need to satisfy a set of criteria. We have been seeking to create learning environments that:

1. capitalize on students’ proto-mathematical intuitions pertaining to the target concept (students’ ecological intelligence, Gigerenzer 1998);
2. elicit students’ holistic strategies, heuristics, perceptual judgments, experiential acumen, vocabulary, and previous mathematical understandings pertaining to a class of situated problems that exemplify the target concept (the phenomena under inquiry);
3. provide materials and activities that enable students to concretize and reflect on isolated elements of their intuitive strategy through attention to properties of the materials and actions with these materials;
4. challenge students’ strategy by presenting situations in which the isolated elements of the strategy appear to be incompatible (even though they are in fact complementary; students initially do not have the conceptual structures for explaining the apparent incompatibility and, therefore, take it to imply error, despite a lingering unarticulated sense that their initial intuitions are in fact correct);
5. enable students to recognize the complementarity of the isolated strategy elements and articulate this insight qualitatively in the form of new mathematical understanding;
6. foster student appropriation of normative symbolical inscriptions as problem-solving tools that warrant, extend, and sustain the initial intuitive convictions.

The above criteria outline an “unpack–repack,” or “breakdown–buildup,” design-for-learning strategy: the criteria purport to frame the design of learning environments in which students unpack their intuitive qualitative strategies into elements, reflect on these elements and relations among them, and then re-pack the elements into mathematical problem-solving tools. Thus the “psychological” becomes “epistemological” (Papert 2000). We now explain the design framework we created in an attempt to satisfy the above criteria.

1.2.3 Overview of framework constructs

Conceptual composites, learning axes, bridging tools, and learning issues are theoretical constructs informing our design-based research in mathematics education. These constructs, which have emerged through our studies of student learning in diverse mathematical domains, enable us to articulate our design rationales in terms of our understanding of how students learn mathematics. In turn, the constructs enable us to couch data of students’ mathematical learning in terms of their interactions with our designs. We will now explain our key constructs.

1.2.4 Conceptual composite: a domain-analysis technique

Mathematical ideas can be captured in a range of sign systems, such as symbols, diagrams, or words. For example, a particular cardinal can be expressed, respectively, as “2,” “o o,” or “two.” Diagrams are unique: unlike symbols and words, diagrams are inherently given to ambiguity, because they are more loosely tied to the semiotic system (“compiler”) governing the interpretation of signs. For example, “2” and “two” (at least in their inscribed form) refer unambiguously to the cardinal property, whereas “o o” might be interpreted as eyes, coins, or “oo” as in “book.” The inherent ambiguity of diagrams requires that a learner adopt a conventional way of seeing the diagram so as to participate in a social practice that utilizes these diagrams unambiguously. Addressing this inherent constraint of diagrams is central to the constructivist pedagogical enterprise, where students engage in activities with objects such as visual representations. Moreover, phenomenological analysis implicates a central role of the visual modality (and, more generally, embodied multimodality) in mathematical learning. That is, we assume that core mathematics-learning processes transpire as negotiations of multimodal images. Therefore, ambiguity could play a constructive role in design frameworks that purport to be sensitive to the cultural evolution of conventional diagrams as well as foster personal learning processes that emulate this cultural evolution. Ambiguity, as we now explain, can create learning opportunities.

Mathematical representations, we posit, are conceptual composites, i.e., they enfold a historical coordination of two or more ideas. Each of these ideas, or conceptual elements, is associated with one way of selectively attending to the representation when using it. For example, an array can lend itself to at least two ways of seeing (see Fig. 2, below). The mathematical expression ab = ba, which defines the commutative nature of the multiplication operation, expresses the equivalent cardinality of the products of two different multiplications, each associated with a unique way of seeing the diagram. To wit, the pictures on the left and right of Fig. 2 foreground, respectively, the 3-groups-of-2 and 2-groups-of-3 visual parsing of the 3-by-2 central array (we will further clarify this example in the next sections). Notwithstanding, one could presumably use the commutative property of multiplication without understanding it. However, is such practice desirable?

The composite nature of mathematical representations is often covert—one can use these concepts without appreciating which ideas they enfold or how these ideas are coordinated. Consequently, standard mathematical tools may be opaque—learners who, at best, develop procedural fluency with these tools, may not develop a sense of understanding, because they do not have opportunities to build on the embedded ideas, even if each one of these embedded ideas is familiar and robust. Moreover, such consequent lack of connected understanding (Wilensky 1997) remains concealed from assessment, due to students’ reasonably effective procedural skills, i.e., the effective procedural performance acts as a smokescreen obscuring the problematic conceptual understanding. Lacking deep understanding, in turn, is detrimental in terms of students’ mathematical cognition and affect: it hinders the potential generativity, creativity, and satisfaction of engaging in mathematical reasoning (Wilensky 1995, 1997) and compromises students’ performance in solving problems (Bereiter and Scardamalia 1985).

By depicting mathematical representations as expressing ideas in need of coordination, the construct of conceptual composites underscores a general design problem. Namely, there is a tension between, on the one hand, the phylogeny of a mathematical concept (how it may have evolved over millennia) and, on the other hand, common classroom ontogeny (a curricular need to learn a new concept within several weeks, if not days or hours). This tension, we conjecture, can be partially addressed while still abiding by constructivist/constructionist pedagogical philosophy. We propose to provide students with the complementary ideas of a target concept and facilitate problem-solving activities that encourage students to construct the concept as a coordination of these elements. The following constructs convey a design template for facilitating such coordination in diverse mathematical content domains.

1.2.5 Learning axis: a design-researcher’s articulation of a target mathematical concept as idea elements in need of coordination

The learning axis, a design-theory construct, extends between two necessary and complementary components of a mathematical concept. That is, a particular learning axis expresses a conceptual-composite analysis of a mathematical representation toward creating learning tools. The two conceptual elements at the poles of a learning axis are each within the age-appropriate learner’s comfort zone. The notion of learning axis positions these differentiated conceptual elements as potentially co-present within the learner’s attention and reasoning. Student learning is stimulated when the two elements are experienced as competing and the student attempts to construct logical reconciliation (“bridging”) of these competing perceptions. Such cognitive conflict is mediated through problem-solving activities with a bridging tool.

1.2.6 Bridging tool: an “ambiguous” artifact affording a learning axis

A bridging tool is a hybrid representation bearing structural properties of each of two identified conceptual-composite elements at the poles of a learning axis—it is perceptually similar to each element, so it can concurrently afford either. That is, the bridging tool constitutes a single diagrammatic substrate carrying both idea elements couched in the same visual language. In so doing, the bridging tool backgrounds the similarity of the idea elements, thus accentuating their difference. The juxtaposition of the elements, in turn, enables their coordination and integration into the standard representation. Thus, in the proposed framework students learn by clarifying the bridging tool’s ambiguity. That is, conceptual learning is accomplished as a rule-expressed coordination of the bridging tool’s competing disambiguations—a coordination that reconciles the tension created by the ambiguity. Students’ sense of purpose underlying this activity is created by problem-solving contexts.

In Fig. 2, bottom center, we see an example of a picture that could serve as a bridging tool in a mathematics-education design for the commutativity of multiplication (ab = ba). Two of the possible interpretations of this picture—the picture’s meanings—are as 3 columns each made up of 2 X’s or as 2 rows each made up of 3 X’s. These meanings are strongly suggested in the pictures on the left (3*2) and on the right (2*3), but the meanings are equally likely for the central picture. One might construct the commutative property of multiplication as a rule that relaxes the tension inherent in the object’s interpreted ambiguity. That is, to reconcile these competing meanings, a learner would need to discover how these meanings might be complementary (“AND”) rather than mutually exclusive (“OR”). Thus, commutativity is constructed as the reconciliation of the conflict created by the competing affordances of a single object (see the summary section 1.2.8 for further explanation of bridging tools).
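The two readings of the central array can be made concrete in a short Python sketch. It is offered only as an illustration of the example above: the function names are hypothetical and are not part of the design described in this paper. One function counts the X's column by column (3 columns of 2), the other row by row (2 rows of 3), and the check at the end expresses the reconciliation ab = ba for this array.

```python
def count_as_columns(rows: int, cols: int) -> int:
    """Count the X's as `cols` columns, each made up of `rows` X's."""
    return sum(rows for _ in range(cols))


def count_as_rows(rows: int, cols: int) -> int:
    """Count the X's as `rows` rows, each made up of `cols` X's."""
    return sum(cols for _ in range(rows))


def render_array(rows: int, cols: int) -> str:
    """Draw the ambiguous central picture: a rows-by-cols block of X's."""
    return "\n".join(" ".join("X" * cols) for _ in range(rows))


if __name__ == "__main__":
    rows, cols = 2, 3  # the array discussed in the text
    print(render_array(rows, cols))
    # Both ways of seeing the same picture yield the same cardinality.
    assert count_as_columns(rows, cols) == count_as_rows(rows, cols)
```

The sketch deliberately keeps one picture and two counting procedures, mirroring the idea that a single object affords both interpretations.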

1.2.7 Learning issue

Learning axes are articulated as part of the domain analysis, at a point in the tool-development process when a learning axis could be implemented in a plurality of bridging tools that would each potentially facilitate students’ negotiation along the axis. Yet only once the learning axis has been implemented in the form of an actual learning tool, a bridging tool, do students have context for engaging the axis. Once implemented in a design, a learning axis is manifest in students’ behavior in the form of a learning issue, a design-specific articulation of the learning axis in terms of what students need to be able to see and do with the particular bridging tools. So the learning issues are the design-specific challenges on the way to basic mastery of a concept.

Although the design does not prescribe a specific instructional sequence along the learning issues, the set of learning issues constitutes landmarks in students’ individual learning trajectories through the unit, and it is assumed that students understand a unit only by having struggled with all the key learning issues.

1.2.8 Summary and intellectual foundations of the framework

The learning-axes-and-bridging-tools framework reflects an interpretation of intuition as a double-edged pedagogical sword—intuition instills a favorable sense of familiarity with situational contexts together with a possibly misleading sense of conceptual understanding (see Papert 2000, on a differentiation between psychology and epistemology). We wish to support students in sustaining positive affective disposition toward their intuitive reasoning while reflecting on this reasoning and recognizing the added value of adopting mathematical problem-solving tools that amplify these intuitions. To do so, we guide students through activities with artifacts designed to elicit intuitive judgment yet foster a reflective, analytic, formal counterpart to this intuition. This program has roots in research on students’ learning, as we now discuss.

Given supportive learning environments, learners are inclined to reinvent core aspects of commonly used mathematical, computational, and, in general, quantitative–symbolical artifacts and procedures (Abrahamson et al. 2006; Bamberger and Ziporyn 1991; diSessa et al. 1991; Papert 1980). The learning axes approach attempts to leverage students’ capacity to reinvent mathematics by articulating for designers domain-analysis principles by which to identify conceptual elements and activity contexts that foster student reinvention of target concepts. Specifically, we attempt to create for students a problem space for which two different notions each appear useful, but where it is not initially clear how these notions may be combined to solve the problem. So problem spaces that give rise to learning axes include objects that both contextualize the problem and stimulate the complementary notions that will contribute to the solution of the problem—objects that we call bridging tools.

Bridging tools (Abrahamson 2004, 2006a; Fuson and Abrahamson 2005) are pedagogical artifacts and activities that tap and stimulate students’ previous mathematical knowledge, situational understandings, and kinesthetic schemas and link these reciprocally to mathematical representations. Figure 3 (below) builds on the apprehending-zone model (Abrahamson 2004; Fuson and Abrahamson 2005), a mathematics-education model of design, teaching, and learning, to further explain bridging tools. A bridging tool is created in the Design Tools Space (see Fig. 3, lower tier). Through participating in the Classroom Activity Space (see Fig. 3, middle tier), students construct meanings for the bridging tool and link these meanings. A unique attribute of bridging tools is that each bridging tool is designed to evoke at least two meanings that are complementary in understanding the target concepts. Each of these meanings is an affordance of the tool within some activity context, and each affordance supports a subconstruct of the target domain (see Fig. 3, the dashed arrows rising from the bridging tool). Students negotiate and reconcile these complementary meanings to construct a new mathematical concept in their Internalized Space (see Fig. 3, top tier; note vertical axis).

Our design of bridging tools is informed by cognitive, pedagogical, and socio-constructivist assumptions and motivations that have led us to regard learning tools as more than computation devices for carrying out solution procedures or scaffolds towards some alleged abstract understandings. We assume that mathematical instruments can play pivotal roles in mediating to students mathematical understandings. Specifically, bridging tools can potentially embody and convey dilemmas and solutions inherent in a mathematical domain. Using bridging tools, students come to emulate thought processes that the designer sensed are conducive to the construction of central ideas of the target domain.

By focusing on bridging tools as organizing mathematics-education learning environments rather than on the mathematical concepts, we wish to foreground a design principle that learning environments should create opportunities for students to construct new ideas, and that presenting students with completely “baked” ideas may defeat the objective that students themselves construct the concepts (see, e.g., von Glasersfeld 1990). Sometimes, we conjecture, outright clarity deprives a learner of opportunities to construct understandings. In its ambiguity, the bridging tool sidesteps ready-made clarity, creating instead a generative semiotic node. Thus, the classroom activities and classroom episodes that we present in this paper attempt to convey the plausibility of designing for learning opportunities rather than designing directly for concepts. Bridging tools play a pivotal role in suspending a concept-driven pedagogy of definitions, formulas, and word problems. Using bridging tools, students are to experience the challenges inherent in understanding mathematical concepts and initiate discussion of these challenges. So bridging tools are “half baked” by the designers yet require learners’ active participation to become well done as emergent mathematical constructs, i.e., to become personal understandings that are sufficiently shared in the classroom (see also Cobb et al. 1997, on the emergent perspective on learning).

One assumption of our design framework, coming from Abrahamson (2004), is that students can construct mathematical concepts as reconciliations of the competing interpretations inherent in a bridging tool. The idea of learning as reconciliation is not new. In 1837, William Whewell wrote the following words about students’ intuitive understanding of the fundamental axioms of geometry: “The student’s clear apprehension of the truth of these is a condition of the possibility of his pursuing the reasoning on which he is invited to enter” (Whewell 1837/1989, p. 40). Learning, according to Whewell, is the process of an individual student grounding formalisms in her intuition, and this learning process is fostered through discourse (see also Schön 1981, on learning as synthesizing the intuitive and the formal). Following Whewell, we attempt to help students ground formal constructs and symbolical inscriptions in their perception and understanding-in-action intuition (Wilensky 1991, 1993; Abrahamson 2004).

The idea of understanding-in-action, a hallmark of constructivist pedagogy (e.g., von Glasersfeld 1987), can be seen as rooted in phenomenological philosophy (e.g., Heidegger 1927/1962; Merleau-Ponty 1945/1962) and in Gibson’s (1977) construct ‘affordance,’ which is widely used in the learning-sciences literature. We wish to extend the idea of learning as reconciliation by submitting that reconciliation or synthesis can transpire not only between intuition and formalism but also between two intuitions grounded in one and the same object: learning occurs as construction when learners attempt to reconcile two competing interpretations of a phenomenon in the context of some designed activity (see Poincaré 1897/2003, Polanyi 1967, and Steiner 2001, on the “mental combinatorics” of mathematical creativity; see Piaget 1952, on how the idea of volume arises in conservation tasks; see Minsky 1985, on hierarchies in mental structures; see Case and Okamoto 1996, on central conceptual structures; see Forman and Pufall 1988, on epistemic conflict; see Fauconnier and Turner 2002, on conceptual blends).

Finally, note that not every ambiguous figure is a bridging tool in the sense that we have been using it to discuss design for mathematics-learning environments (see Fig. 4, below). If I say “duck” and you say “rabbit” (Fig. 4a), we might learn something about visual perception. But if I say “2 rows of 3 X’s” and you say “3 columns of 2 X’s” (Fig. 4b), let’s not call the whole thing off—rather, we might learn something about mathematics.

Having discussed the proposed design framework as well as its application, theoretical constructs, and foundations, we will now turn to the case of a design used in our empirical study with middle school students studying probability and statistics.

2 Design

This section introduces the ProbLab experimental unit for middle-school probability and statistics (Abrahamson and Wilensky 2002). We explain the initial design problem and how we treated it using the learning-axes-and-bridging-tools design framework. Next, we support our choice of media for implementing the design. The bulk of this section is a description of parts of ProbLab relevant to the empirical study discussed in this paper.

2.1 Design problem and domain analysis toward design rationale

The Connected Probability project (Wilensky 1997) and its current research unit, ProbLab (Abrahamson and Wilensky 2002), are an attempt to respond to a century of theoretical and empirical studies reporting and analyzing student difficulty in the domain of probability and statistics (von Mises 1928/1957; Piaget 1952; Fischbein 1975; Hacking 1975, 2001; Simon and Bruce 1991; Konold 1989; Shaughnessy 1992; Wilensky 1993, 1995, 1997; Biehler 1995; Papert 1996; Gigerenzer 1998; Maher et al. 1998; Metz 1998; Henry 2001; Jones et al. 2007). Authors have critiqued as detrimental to student learning the symbolical notation of the domain (Gigerenzer 1998), embedded assumptions in learning environments regarding randomness (e.g., Henry 2001; Maher et al. 1998), and a general disconnect in most mathematics curricula between student real-world experiences and formal mathematical expressions (Wilensky 1997; Pratt 2000). Our reading of this body of literature is that there are pairs of juxtaposed subconstructs, or idea elements, inherent in the domain, such as theoretical- versus empirical probability (Hacking 2001), dependent- versus independent events (Abrahamson et al. 2006), exploratory data analysis versus probability (Biehler 1995), single trials versus expected values (von Mises 1928/1957; Hacking 2001), and the ultimate tenuousness of statistical measurement versus “true” population properties (Abrahamson and Wilensky 2004a; Liu and Thompson 2002).

We propose that student difficulty with these juxtaposed subconstructs could be treated not as confusions indicating poor learning but as tensions that could be generative of inquiry, reflection, discussion, and deep understanding. That is, we regard these juxtaposed subconstructs as potentially framing powerful learning experiences. We interpret each pair of juxtaposed subconstructs as inter-defining through dialectical semiosis: “theoretical probability” has little if any meaning without some understanding of “empirical probability,” and vice versa. Our approach is to decompose the domain constructs, e.g., distribution, into idea components (the poles of the learning axis) and create bridging tools and activities that elicit students’ intuitive and analytic resources for each component and help students recompose the target constructs as capstones bridging the conceptual semiosis (on students’ intuitive probability, see Fischbein 1975; Piaget and Inhelder 1975).

2.2 ProbLab: implementing the design rationale in the form of bridging tools

The promise of the computer as a medium for mathematics-learning environments, in general (e.g., Noyles and Noss 1992), and for probability and statistics, in particular, has long been discussed by Papert (1980, 1996) and others (e.g., Finzer 2000; Konold 2004; Wilensky 1993, 1995). Oft-cited advantages of the computer environment are high-speed errorless data processing, dynamic-visualization capabilities, and interactive facilities that can support exploration and the testing of conjecture (e.g., Pratt 2004; Pratt et al. 2006). The design used in this study was part of ProbLab (Abrahamson and Wilensky 2002), a computer-based experimental unit that extends the Connected Probability project (Wilensky 1997). Technology employed included NetLogo, a multi-agent modeling-and-simulation environment for researching, teaching, and learning science, social science, and other phenomena, and HubNet, a technological infrastructure built on NetLogo that enables participatory simulation activities (Resnick and Wilensky 1998; Wilensky and Stroup 1999b, 2000) in a networked classroom. A participatory simulation is a form of collaborative inquiry-based activity that is distinguished from regular group learning by virtue of having the learners play the roles of elements in a complex system that they are collectively studying. For example, each student plays the role of an animal in a herd through which a contagious disease is being propagated, so that students investigate the complex dynamics of epidemics. The learners interact with one another according to individual “rules” while monitoring for group-level transformation along focal dimensions (see also Berland and Wilensky 2004; Collela et al. 1998; Klopfer et al. 2005).

We chose to work in the NetLogo environment due to its authoring functionalities, multi-agent structure, and topology, as follows. The NetLogo authoring functionalities enabled us to create original interactive modules and modify them iteratively in between implementation cycles, even during a classroom intervention. The NetLogo multi-agent structure enabled us to construct probability experiments in which multiple computer avatars simultaneously select between two properties (as though one were tossing many coins at once). The NetLogo topology enabled us to structure the unit so as to establish relations between probability and statistics activities: namely, the same visual metaphors constituted either a stochastic device or a population, as we now explain.

The NetLogo view, the interface area where the simulated phenomena are visually represented, is a reticulated matrix of patches, which are square-shaped independent computational agents with fixed positions on the Cartesian plane and properties such as color. The environment supports assigning rule-based procedures to these independent agents, “running” these procedures, and monitoring group-level outcomes, e.g., ratios in color distribution resulting from hundreds of patches each choosing randomly between being green or blue, all with the same p value. Over numerous trials, the user witnesses the emergence of distributions, at the macro level, that converge on the p value (of the micro decisions). In a statistics simulation, the user samples squares from a matrix of thousands of the identical green/blue patches.
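The micro-to-macro relation described above can be sketched outside NetLogo. The following Python fragment is a minimal illustration, not the ProbLab code: the function name `green_proportion`, the patch counts, and the fixed seed are assumptions made here for the sake of a reproducible example.

```python
import random

def green_proportion(n_patches, p, rng):
    """Each patch independently turns green with probability p;
    return the share of green patches in the whole view."""
    greens = sum(1 for _ in range(n_patches) if rng.random() < p)
    return greens / n_patches

rng = random.Random(0)  # fixed seed: an assumption, used only for reproducibility
p = 0.5                 # the shared p value of the micro decisions

# A handful of patches can stray far from p ...
small_views = [green_proportion(9, p, rng) for _ in range(5)]
# ... whereas thousands of patches settle close to it.
large_view = green_proportion(10_000, p, rng)

print("9 patches per view:", small_views)
print("10,000 patches:", large_view)
```

With only nine patches, the green share can land far from p, whereas with thousands of patches it typically lies within a percentage point or two of p.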

These features of the NetLogo modeling environment were all aligned with our design rationale. First, the colorful tessellation of the view supports users in engaging perceptual judgment of color proportions so as to explore relations between micro and macro aspects of stochastic phenomena. For example, when thousands of patches choose independently between green and blue with equal likelihoods, the overall “mosaic” appears about half green and half blue. Second, the shared visual language of the probability and statistics modules enables nuanced differentiation between procedures associated with both concepts, such as sampling, and investigation of epistemological aspects of these concepts, such as the realness of sample spaces (Abrahamson 2006b).

2.3 Overview of ProbLab bridging tools and activities

The unit revolves around the 9-Block, a mathematical object embodied in three different activities: theoretical probability, empirical probability, and statistics. A 9-Block is a 3-by-3 matrix in which each of the nine squares can be either green or blue. The unit begins with a collaborative construction project in which the classroom builds the combinatorial sample space of the 9-block (the combinations tower). Next, students work with computer microworlds in which the 9-Block is embodied in the form of a stochastic device, where each of the nine squares independently chooses between green and blue. Students analyze outcome distributions from running these simulations. Finally, students work in S.A.M.P.L.E.R., a statistics activity, in which they take and analyze 9-block samples from a hidden population of thousands of green and blue squares (for other objects, a suite of over 20 models, and activities, see Abrahamson and Wilensky 2002; Abrahamson 2006a; Abrahamson et al. 2006).

2.3.1 The combinations tower

To build the combinatorial sample space of all possible green/blue 9-blocks, students use paper, crayons, scissors, and glue (see Fig. 5a–c, below). Abrahamson et al. (2006) report on how a 6th-grade classroom self-organized to engineer, strategize, and produce this challenging mathematical object comprised of 512 distinct elements. Students create collections of 9-blocks (Fig. 5a), with particular attention to avoiding duplicates. Students are then guided to construct this sample space in the form of a histogram, according to the number of green squares in the 9-blocks (see Fig. 5d, for a sketch of this histogram). The heights of the histogram columns correspond to the coefficients of the binomial expansion of $(a + b)^9$, namely 1, 9, 36, 84, 126, 126, 84, 36, 9, and 1. For example, there is only a single combination (1) with no green squares, there are 9 different combinations with exactly one green square, 36 with exactly two green squares, and so on. Students notice the symmetry and general emerging shape of the distribution and use this knowledge to inform their search for new combinations. Students paste the 9-blocks they have created onto a poster, grouped in columns according to the number of green squares and without duplicates (Fig. 5b). This histogram, the combinations tower, grows into a narrow and very tall chart that begins near the floor and extends up to the ceiling (Fig. 5c). Importantly, the activity of constructing the combinations tower is not framed as relating to probability. Nevertheless, as we will discuss in a later section, the classroom refers to this display as such in subsequent probability activities.
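The column heights of the combinations tower can be checked by brute-force enumeration. The sketch below is an illustration only; encoding a 9-block as a tuple of the letters "G" and "B" is an assumption of this example and is not part of the classroom materials.

```python
from collections import Counter
from itertools import product
from math import comb

# Enumerate every green/blue coloring of the nine squares (hypothetical encoding).
blocks = list(product("GB", repeat=9))

# Group the 9-blocks by their number of green squares, as in the tower's columns.
tower = Counter(block.count("G") for block in blocks)
heights = [tower[k] for k in range(10)]

print(len(blocks))  # 512 distinct 9-blocks
print(heights)      # [1, 9, 36, 84, 126, 126, 84, 36, 9, 1]

# The same heights follow from the binomial coefficients C(9, k).
assert heights == [comb(9, k) for k in range(10)]
```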

2.3.2 9-Blocks—a NetLogo simulation

The NetLogo model 9-Blocks (Abrahamson and Wilensky 2004b; see Fig. 6a, next page) was designed to help students understand outcome distributions obtained in empirical-probability experiments in terms of theoretical probability. Nine patches independently select between green and blue (in the default version of the model, p = .5). The procedure then counts up how many green squares are in the 9-block outcome and records this number in a histogram. For instance, if the 9-block has 6 green squares in it (see Fig. 6a), the 7th column from the left will rise up one unit (the left-most column corresponds to zero greens). From trial to trial, the histogram grows incrementally, progressively taking on a stable shape similar to the combinations tower (compare Fig. 6a and b). Classroom discussion focuses on explaining how a random procedure could possibly produce a distribution identical in shape to an artifact that had been derived through meticulous combinatorial analysis.
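The procedure just described can be approximated in a few lines of Python. This is a hedged sketch rather than the NetLogo model itself: the function `run_nine_blocks`, the number of trials, and the seed are assumptions introduced for illustration.

```python
import random
from math import comb

def run_nine_blocks(trials, p=0.5, seed=1):
    """Repeat the 9-block experiment: nine squares each turn green with
    probability p, and the count of greens raises one histogram column."""
    rng = random.Random(seed)
    histogram = [0] * 10  # column index = number of green squares (0..9)
    for _ in range(trials):
        greens = sum(rng.random() < p for _ in range(9))
        histogram[greens] += 1  # e.g., 6 greens raises the 7th column from the left
    return histogram

trials = 20_000
empirical = [count / trials for count in run_nine_blocks(trials)]
theoretical = [comb(9, k) / 512 for k in range(10)]  # tower heights as proportions

# Largest discrepancy between the empirical and theoretical column proportions.
max_gap = max(abs(e - t) for e, t in zip(empirical, theoretical))
print(round(max_gap, 4))
```

The comparison is made on proportions rather than raw counts, because the empirical histogram records far more than 512 outcomes.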

2.3.3 S.A.M.P.L.E.R.: a HubNet participatory-simulation activity

S.A.M.P.L.E.R., Statistics As Multi-Participant Learning-Environment Resource (Abrahamson and Wilensky 2002), is a participatory-simulation activity built in NetLogo and enabled by the HubNet technological infrastructure.

In S.A.M.P.L.E.R. (see Fig. 7, next page), students each take individual samples from a population and use these samples to estimate a target property of this population. The “population” is a matrix of thousands of green or blue squares (Fig. 7a), and the target property being measured is the population’s greenness, i.e., the proportion of green in the population. A feature of the activity is that population squares can be “organized”—all green to the left, all blue to the right (Fig. 7b). This “organizing” indexes the proportion of green as a part-to-whole linear extension that maps onto scales both in a slider (above it) and in a histogram of students’ collective guesses (below it). Students participate through clients (in the current version of S.A.M.P.L.E.R., these clients run on students’ personal computers). These clients are hooked up to the facilitator’s server. Students take individual samples from the population (Fig. 7c), and analyze these samples so as to establish their best guess for the population’s target property. (Note that whereas all students sample from the same population, by default each student only sees their own samples, unless these are “pooled” on the server.) Students input their individual guesses and these guesses are processed through the central server and displayed as a histogram on the server’s interface that is projected onto a classroom overhead screen (Fig. 7d).

The histogram shows all student guesses and the classroom mean guess and interfaces with the contour of the self-indexing green–blue population immediately above it. Note the small gap (Fig. 7d, middle) between the classroom mean guess and the true population index. Because a classroom-full of students takes different samples from the same population, the histogram of collective student input typically approximates a normal distribution, and the mean approximates the true greenness value. The students identify with the data points they have created on the plot (“I am the 37”... “So am I!”... “Oh no... who is the 81?!”). So students can reflect both on their individual guesses as compared to their classmates’ guesses and on the classroom guess as compared to the population’s true value of greenness. Such reflection and the discussion it stimulates are conducive to understanding distribution.
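The claim that the classroom mean approximates the true greenness can be illustrated with a small simulation. The sketch rests on simplifying assumptions that are not taken from the study: each simulated student's guess is simply the percentage of green in that student's own sample, each student sees 45 squares (five 9-blocks), and squares are drawn independently with the population's greenness as the probability of green.

```python
import random
from statistics import mean

def simulate_class_guesses(true_greenness, n_students, squares_per_student, seed=2):
    """Return one guess (in percent) per student, taken as the share of
    green squares in that student's own random sample."""
    rng = random.Random(seed)
    guesses = []
    for _ in range(n_students):
        greens = sum(rng.random() < true_greenness for _ in range(squares_per_student))
        guesses.append(100 * greens / squares_per_student)
    return guesses

# Hypothetical classroom: 20 students, 45 sampled squares each, 50% green population.
guesses = simulate_class_guesses(0.5, n_students=20, squares_per_student=45)
class_mean = mean(guesses)

print(sorted(round(g) for g in guesses))  # individual guesses spread around 50
print(round(class_mean, 1))               # the mean typically sits closer to 50
```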

Students each have a limited “sampling allowance,” so they must sample strategically. The allowance is replenished at the beginning of each sampling round. For each new hidden population, students each receive 100 points. At each guess, as many points are deducted from individual students as their guess is off from the true value, e.g., a “60%” guess for a 50% green population causes a 10-point deduction. However, before the results are revealed, students are required to choose whether to link their bet to their own input or the classroom average, e.g., a “60%” guess for a 50% green population would cause a deduction of only 2 points, if the student committed to the group guess and this guess is “52%” (Abrahamson et al. 2006; Abrahamson and Wilensky 2004a; see Surowiecki 2004, on the power of group guessing; for detailed explanation of the S.A.M.P.L.E.R. activity, see the NetLogo Participatory-Simulation Guide, Wilensky and Stroup 2005).
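The scoring rule can be written out as a short function. This is a sketch of the rule as stated above, not the S.A.M.P.L.E.R. source code; the names `deduction`, `own_guess`, `group_guess`, and `commit_to_group` are hypothetical.

```python
def deduction(own_guess, true_value, group_guess=None, commit_to_group=False):
    """Points lost in one round: the distance, in percentage points, between
    the value the student committed to and the population's true greenness."""
    if commit_to_group:
        if group_guess is None:
            raise ValueError("a group guess is needed when committing to the group")
        committed = group_guess
    else:
        committed = own_guess
    return abs(committed - true_value)

START_POINTS = 100  # each student receives 100 points per hidden population

# The two worked examples from the text, for a 50% green population:
print(deduction(60, 50))                                        # 10
print(deduction(60, 50, group_guess=52, commit_to_group=True))  # 2
print(START_POINTS - deduction(60, 50))                         # 90
```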

3 Methodology

The implementations were opportunities to study students’ reasoning on problem-solving tasks involving situations that pertain to the study of probability and statistics. These situations and framing activity contexts were designed so as to support students’ invention and practice of mathematical constructs initially experienced as personally innovative solution procedures. Because we are interested both in student cognition and in developing learning tools, these interventions were opportunities both for eliciting students’ reasoning and for evaluating the instructional design. Specifically, analysis of students’ spontaneous behavior—their actions and utterances—was to inform modification of the emerging theory and design rationale as well as the objects and activities. Thus, subsequent studies would use improved materials, and the activity facilitation would be informed by a better understanding of how the theoretical model should be implemented so as to optimize students’ opportunities for discovery learning. Ultimately, the goal of these iterated studies is to achieve resonance between the theoretical model and the pedagogical practice; to articulate emergent findings and integrate them into the model; to create a coherent design-for-learning framework that will guide other educational researchers and practitioners in the development, implementation, and study of mathematics-learning.

3.1 Participants

S.A.M.P.L.E.R. was enacted in two 6th-grade classrooms (n = 20; n = 18) in a middle school in a very heterogeneous urban/suburban district (school demographics: 48% White; 35% African-American; 15% Hispanic; 2% Asian; 29% free/reduced lunch; 5% ESL). The teacher, a White woman, was in her 3rd year of teaching. The rotating research team on site also included four graduate students completing their doctoral studies in the Learning Sciences. The first author, who was also the lead designer of the ProbLab activities, took an active role in co-facilitating the lessons with the teacher. The other team-member roles included collecting video data and field notes, eliciting student ideas through on-the-fly interviews during classroom activities, and addressing software and hardware issues that often occur in technology-based pilot studies. The second author (the PI) monitored the study through daily debriefing meetings, in which we consulted on the objectives and methodology so that the underlying pedagogical philosophy was implemented.

3.2 Procedure

The intervention spanned 2 weeks: in the first week, two 80-min periods of work on the combinations tower interspersed with work on NetLogo models; in the second week, three 80-min periods of work on S.A.M.P.L.E.R., for a total of five double-period lessons per classroom. In these implementations, NetLogo models were operated by the teacher and discussed by the students. Footnote 1

Activity introductions and summaries were facilitated by the lead researcher or the teacher. Otherwise, lesson time was dominated by individual and group work, with occasional classroom discussions, some of which were spontaneous and others initiated by the facilitators. Students were encouraged to lead discussion from the front of the classroom, by referring to the computer interfaces projected on the overhead screen and calling on other participating students.

A posttest was administered as another measure of students’ understanding and so as to elicit students’ feedback on the experimental unit.

3.3 Materials

The classroom was arranged for this implementation in a horseshoe shape (see Fig. 8a, next page). Twenty students, each seated at an individual laptop computer (Macintoshes running the OS9 operating system), were connected via a 24-port switch to the facilitator’s laptop computer. We ran S.A.M.P.L.E.R. in NetLogo 1.3.1. Footnote 2 The facilitator, whether teacher, researcher, or student, often stood at the opening of the horseshoe to present the overhead projection (Fig. 8b). During the lesson, the facilitator had access to all students, and they could consult each other (Fig. 8c).

The posttest consisted of items that required students to address sampling strategies—both their personal strategies and group strategies. Specifically, students were asked to explain their favorite sampling strategy, to comment on whether it is better to commit to one’s own guess or to commit to the group guess, and whether the group should first input individual guesses and only then discuss the input or first discuss and then guess (for results of these posttests, see Abrahamson et al. 2006; Abrahamson and Wilensky 2005, where we describe the range in student responses as well as some fluency limitations in implementing their correct intuitions; this paper focuses on analyzing selected episodes from the classroom data from the perspective of the emergent framework).

3.4 Data collected

Two video cameras filmed all lessons: one was carried by a researcher to capture student work and discussion and the other one was typically positioned on a tripod at the back of the classroom as a backup but occasionally carried by another researcher. During individual work, the researchers interacted with students, asking them to explain their strategies, actions, and thoughts. Thus, the bulk of the data are either of classroom discussion or on-the-fly interviews. Because the researchers were consistent in interacting with all students, the data sample the classroom activity. Every day, the design team wrote extensive field notes during and immediately after the lessons and during a first run through the video data. Verbal and electronic communications within the design team and with the teacher were recorded to track the rationale of day-to-day modifications of the design. Finally, we collected students’ posttest responses.

3.5 Data analysis

The researchers individually examined the videotaped classroom data and the daily field notes. Researchers each marked in the tapes episodes they sensed could be of significance for understanding and improving the learning potential of students participating in the classroom activities. In research meetings, we discussed our selected episodes, many of which were chosen by more than a single researcher. These episodes tended to portray students who either had difficulty understanding the activities or made insightful comments about the meaning of mathematical representations (on collaborative microgenetic-analysis methodology, see Schoenfeld et al. 1991). Our discussions resulted in the delineation of required modifications of the learning tools and necessary emphases in the facilitation (see Abrahamson and Cendak 2006, for a subsequent study that applied these conclusions). Also, we evaluated whether the emergent theoretical model generates productive insights into the data, i.e., whether the model sensitizes us to nuances of student actions and utterances such that we understand these better. In particular, we attempted to articulate students’ conceptual learning as action-based negotiation and resolution of competing meanings embedded in the learning tools.

4 Results and discussion: analysis of student learning through the analytical lenses of the learning axes and bridging tools framework

In earlier sections, we have demonstrated our use of the learning-axes-and-bridging-tools framework in the analysis of mathematical domains and how this analysis, in turn, informs our design of bridging tools for classroom activities. In this section we demonstrate how the framework can be used also as analytic lenses on classroom data. Using the same lenses for domain analysis, design, facilitation, and data analysis helps us evaluate the efficacy of our materials, activities, and facilitation in implementing the design philosophy. In particular, we select episodes in the data that, to our judgment, demonstrate student insight and inventiveness, and we work to articulate this insight in terms of the bridging tools the student was working with, the activity that contextualized the student’s work, the underlying learning axis apparently stimulated in this activity, and the invented statistical construct, where the axis cohered as an articulated reconciliation of cognitive conflict.

We now examine five brief classroom episodes so as to explain five of the learning axes and statistical constructs that are enfolded in the bridging tools and emerge through student participation in classroom problem-solving activities. In these episodes, the learning axis, bridging tool, and construct are, respectively: (a) local-versus-global interpretation of the S.A.M.P.L.E.R. population stimulates the construction of “sample”; (b) theoretical-probability-versus-statistical interpretation of a collection of 9-blocks stimulates the construction of “sample distribution in the population”; (c) theoretical-versus-empirical-probability interpretation of the combinations tower stimulates the construction of “sample space”; (d) range-versus-cluster interpretation of a histogram stimulates the construction of “variance” and “balance”; and (e) individual-versus-social interpretation of a histogram stimulates the construction of “sample mean” and “distribution.” For each learning axis, we demonstrate through classroom data students’ negotiation between the poles of the axis. The section ends with a summary of the structural elements of these episodes.

4.1 Episode one: the local–global learning axis coheres as a sample

Within the design sequence, an introductory activity engages students in determining the greenness of a population that is completely revealed (students see all of the tiny squares in the green–blue mosaic). This activity occurs at a point before students have discussed sampling and before the facilitator has enabled students to sample. Nevertheless, students performed quasi-sampling actions. Namely, close attention to students’ verbal descriptions and gestures reveals that their guesses were informed both by counting tiny squares in selected spatial locations (“local” actions) and by eyeballing the greenness value of the entire population (“global” actions). So students were using two different methods: local enumeration actions and a global perceptual judgment. Importantly, students did not appear, initially, to be aware that they were using two different methods, nor did they appear to coordinate these methods as complementary. Yet, through discussion with their peers and the facilitator, students had opportunities to connect between these strategies, as the following transcription demonstrates.

Researcher: [standing by the student, Devvy, who is working on his individual laptop computer] What are you doing here?

Devvy: [gazing at the S.A.M.P.L.E.R. population, index finger hopping rapidly along adjacent locations in the population; see Fig. 9, below] Counting the squares.

Res: What did you come up with?

Dev: [hands off screen, gazing at screen; mumbles, hesitates] Around 60 percent or 59 percent.

Res: Sorry ... so, show me exactly what you’re counting here.

Dev: Green squares, [right index on screen, swirls in one location, then hops to another location, unfurling fingers] ‘cause it says, “Find the percentage of the green squares.”

Res: Uhm’hmm

Dev: So if you were to look at it [left hand, fingers splayed, brushes down the whole population and off the screen] and sort of average it out, [touches the ‘input’ button] it’d probably equ... [index on population, rubbing up and down at center, using rapid little motions and wandering off to the left and then down] it’d probably go to 59 or 60.

Res: And how did you get that number?

Dev: [index strokes population along diagonal back and forth] Because it’s almost even, but I think there’s a little bit more green than blue.

Devvy’s actions are not statistically rigorous: he is not taking equally sized samples, nor is he systematically counting the number of green squares in each sample or methodically averaging values from these counts. But his actions are proto-statistical (see Resnick 1992). Without any formal background in statistical analysis, Devvy is going through the motions of this practice, if qualitatively: skimming the population, attending to selected locations, comparing impressions from these locations, and determining a global value. Moreover, Devvy appears to acknowledge the tenuousness of his methods or their execution in qualifying his suggested strategy as “sort of average it out.”

Whereas Devvy’s spontaneous local and global methods are as yet disconnected, both methods are grounded in the same object, the S.A.M.P.L.E.R. population. This common ground constitutes the platform or arena upon which Devvy may negotiate the competing mental resources. Devvy may have already begun building a micro-to-macro continuum by attending to mid-level clusters of tiny squares, i.e., “samples” (see Levy and Wilensky 2004, in press, on the role of the mid-level constructions in student reasoning about multi-agent phenomena). Through participating in the S.A.M.P.L.E.R. activities, Devvy’s proportional judgments could possibly be connected to his acts of counting. Yet, at this point in the classroom activities, this student’s limited fluency in applying proportional constructs does not enable him to quantify his proportional judgment in terms of the local data. Therefore, he begins with a local narrative but, when pressed for an exact answer, he switches to a global approximation.

In summary of this episode, 6th-grade students have personal resources that are relevant to statistical reasoning (Devvy had been identified by the teacher as the lowest achieving student in the class). Specifically, in the context of determining the greenness of the S.A.M.P.L.E.R. population—the bridging tool in this episode—students have structured opportunities to invent sampling as an action that reconciles enumeration and perceptual judgment.

4.2 Episode two: the theoretical–statistical learning axis coheres as a distribution

Luke is seated to Devvy’s right. Footnote 3 He, too, is looking at the S.A.M.P.L.E.R. population (see Fig. 10a). Luke responds to Devvy’s guess of 59%. In his own observation, Luke refers to the combinations tower that students had built during the previous week and that is now attached to the wall near him (see Fig. 10b):

Luke: Ok, the reason I think “50 percent” is ‘cause when you make that tower [turns in his seat to face the combinations tower], it’s gonna be equal for green and blue [equal total numbers of green and blue squares]. So if this [the S.A.M.P.L.E.R. population] were to be all of the combinations, it’ll be equal green and blue—50%. [The correct answer was indeed 50%. We had not informed students of this value.]

The S.A.M.P.L.E.R. population and the combinations tower are physically distinct objects in the classroom. Luke’s insight is that we can couch the S.A.M.P.L.E.R. population of thousands of squares as a collection of discrete 9-blocks. The connection that Luke builds between these objects is not associated with any particular new object (compare to Devvy’s invention of a sample). It is grounded in and facilitated by the bridging tool “9-block,” yet it is essentially not about 9-blocks per se but about the distribution of 9-blocks in the population. This insight occurred at a point in the unit where “distribution” had not been named or otherwise symbolized. So combinatorics-based construal of a population may provide basic tools for statistical analysis. Footnote 4 If Luke had not participated in constructing the combinations tower, he might not have been able to use it as a resource for his insight (see the project-before-problem design principle, Papert 1996).
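Luke's claim that the tower holds equal numbers of green and blue squares can be verified by counting. The following sketch only illustrates that arithmetic for his hypothetical population made up of all 512 combinations; the variable names are assumptions of this example.

```python
from math import comb

# All squares in the tower: 512 blocks of 9 squares each.
total_squares = 9 * 2 ** 9

# Green squares: each of the C(9, k) blocks in column k contributes k greens.
green_squares = sum(k * comb(9, k) for k in range(10))

greenness = green_squares / total_squares

print(total_squares)  # 4608
print(green_squares)  # 2304
print(greenness)      # 0.5
```

The result follows from the symmetry of the tower: every 9-block has a mirror-image block with green and blue swapped, so the two colors must balance overall.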

4.3 Episode three: the theoretical-versus-empirical-probability axis coheres as a sample space

On the last day of our intervention, we asked students to address a fundamental principle of probability: that a distribution emerging from randomly generated outcomes gradually comes to resemble the anticipated distribution produced through theoretical analysis. Figure 11 (above) features the 9-Blocks model (on the left) and a computer-generated picture of the combinations tower (center). While the 9-Blocks experiment was running, Emma volunteered to explain why one of the central columns in its outcome distribution was growing taller than most other columns. Footnote 5

Emma: “Maybe because there’s more of that kind of combination. Just basically, because if there’s 512 different combinations, and we know that there’s more [possible combinations] in the middle columns, [then] even though there’re duplicates, there’s still going to be more combinations in the middle columns. [The student is now using a pointer to explain what the class is watching on the screen] Even though these patterns [in the empirical live run, on left] may have duplicates in this [the combinations tower, center], it’s still counting all the patterns, so it’s going to have the same shape. ... It’s going to be the same shape, because it’s basically the same thing. Because in the world there are more patterns of these than there are of the other ones.”

Emma notices that the tower and the distribution are alike in shape. Moreover, she explains why these two representations should be alike in shape. She does so despite the possibly confusing fact that the empirically generated histogram records many more samples than just the five-hundred-and-twelve 9-blocks in the static combinations tower. Emma’s assertion that “in the world” there are relatively more of one type of pattern as compared to the other suggests that she is attending to the proportions between counts and not (only) to the absolute difference between them. That is, by virtue of comparing between the representations, Emma first came to think of the combinations tower as a theoretical-probability tool. The combinations tower is not just the collection of all combinations and permutations. Rather, it represents propensity: it is a template for gauging relative frequencies through multiplicative comparison. Due to its unique histogram-like configuration, the combinations tower constitutes a bridging tool for grounding the idea of a sample space.

4.4 Episode four: the range-versus-cluster learning axis coheres as variance

For the last day of enacting S.A.M.P.L.E.R., we designed a competitive game between the two study classrooms. A monitor on the screen tracked students’ average score from round to round (the classroom’s points), and the classroom with the highest score at the end of five rounds was to be the winner. Also, we disabled students’ choice between committing to their own guess or the group guess—all students had to go with the group guess. These combined circumstances engendered a higher-than-usual collaboration in the classroom. The following episode occurred during the third round in one of the classrooms.

All students had taken their full quota of samples and were discussing how to process the collective classroom data. Becky has been walking around the classroom, observing her classmates’ screens and adding up the total green squares on all of these screens. Now, she has just rushed to the teacher, with the following idea: (1) students should each call out their personal guess for the population greenness, but they should not input that guess; (2) someone should calculate the average of these guesses; and (3) all students should input this average. This way, Becky contends, the class as a whole would minimize the error, which she describes as the collective distances from the true value in the population, and would thus minimize the loss of points. Jerry replies that this strategy is redundant and error prone—that all students should just input their own guess and let the computer calculate the mean automatically. (Technically speaking, Jerry is correct—that is precisely what the computer procedure does.) Becky disagrees. Now she is vehemently pensive. It is as though Becky is worried about the variance of the distribution, whereas Jerry reckons that the variance is irrelevant for the task at hand. Becky would like a strong guess, whereas Jerry is comfortable to ignore the guess density.

Becky: If we’re closer to the average, won’t the average be closer and we’ll [lose less points?]

Jerry: It’s the same thing, because this is just like adding up the whole classroom—we’re adding it up on the computer.

-

Becky: If we get this [= if we first calculate the class average independently of the computer] and then people change their guess to be closer to the average...

-

Jer: [Change] our guess?

-

Becky: Yeah.

-

Jer: No, [points to screen] [inaudible]

-

Becky: I’m adding up how many [blue] they have [and then subtracting to determine how many green they have]

-

Jer: I know, but [the computer is finding the average], so it will be the same answer. It will be more precise, though.

-

Becky: I’m adding up how many blues.

-

Jer: I know, but still, then we reverse it. [because the proportion of green and blue complements 100%]

-

Becky: And then if they... and people can change their answer closer [to the average]

-

Jer: But then, well, if we all just put it in [= input our guesses], then since we’re all going with the group guess, it’ll all... we’ll all go with the average, so it’ll be the same thing. It’ll be a precise average, down to the decimal.

-

Becky: Yes, but couldn’t we get less points taken off if people changed their guesses so it’s closer to the average, since the average will be more precise?

-

Jer: It won’t matter, ’cause we’re going with the group guess, so they’ll automatically guess the average. We all are guessing the average, no matter what. We have no choice—we’re guessing the average, since we’re going with the group guess, and the group guess is the average. We’re all guessing the average. We’re all guessing exactly the average, down to the millionth.

-

Becky: Ok, José got only 4 blue. His average will be really high up, won’t that change the average?

-

Jer: Yeah, but still, it still takes the average. [Becky rushes back to her seat]

What’s in a mean? Should it reflect the range of the sampling distribution? If both the mean and the range are important in some activity, perhaps some new mathematical construct is needed that captures both ideas? The context of the guessing game, and in particular the high stakes invested in the classroom mean, created an opportunity to ground in the histogram the idea of variance, an index of the sampling distribution that had not been previously discussed in the classroom forum. Becky naively assumed that the tighter the cluster of a set of guesses, the higher its accuracy. Whereas this intuition is sensible and is reflected in statistical measures of confidence, a dense and a sparse cluster of guesses may be equally accurate as a whole, and in fact a sparse set of guesses may be more accurate than a dense one. Even if these issues are not resolved immediately, they are raised through argumentation grounded in personally meaningful mathematical reasoning: the conventional tools are problematized and the domain is complexified.
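Jerry's arithmetic point, and the observation that the spread of a cluster does not determine its accuracy, can be checked with a short sketch. The guesses and the true value below are made-up illustrative numbers, not classroom data, and `group_error` is a hypothetical helper. The sketch assumes that the group guess is the arithmetic mean of the inputs, as described in the episode.

```python
from statistics import mean, pstdev

def group_error(guesses, true_value):
    """Absolute distance between the group guess (the mean) and the true value."""
    return abs(mean(guesses) - true_value)

# Hypothetical numbers for illustration only (not classroom data).
true_value = 50
sparse = [30, 40, 50, 60, 70]   # widely spread guesses, mean 50
dense = [44, 45, 46, 47, 48]    # tightly clustered guesses, mean 46

# Becky's plan: everyone replaces their own guess with the class average.
becky_plan = [mean(sparse)] * len(sparse)

# Jerry's point: the mean is unchanged, so the group guess is unchanged.
assert mean(becky_plan) == mean(sparse)

# Spread and accuracy are separate properties: here the sparse set is
# more accurate as a whole than the dense one.
print(pstdev(sparse), group_error(sparse, true_value))  # larger spread, error 0
print(pstdev(dense), group_error(dense, true_value))    # smaller spread, error 4
```

Under these assumptions, tightening the cluster changes the standard deviation but leaves the group guess, and hence the points lost, exactly where it was.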

4.5 Episode five: the personal–social learning axis coheres around the histogram mean

During the second day of implementing S.A.M.P.L.E.R., a unique moment of potential learning occurred at a point where students had all input their guesses for the population’s greenness (see Fig. 12, below). Whereas most of the students’ guesses clustered around the classroom mean of 47.2% (the tall thin line), there was a lone guess of 81% far off to the right. The true value of the population was 50% green, as indicated by the contour between the colored areas immediately above and to the right of the mean. So the outlying guess was instrumental in “pulling” the classroom mean up, to the benefit of the many students who had committed to the group guess. As it turned out, many students assumed that the outlying guess was perforce detrimental to the precision of the group guess (“How can it be good if it’s so way off?”). This moment of potential learning is delicate—the facilitator must assess whether exposing the outlying student may be conducive to classroom learning and to improving that student’s peer esteem as a mathematician.

-

Teacher: Do we know whoever that is for sure? [who guessed 81%]

-

Researcher: We can figure out. [walks over to facilitator’s computer to determine the value of that guess—it turns out to be 81%, and Jade identifies herself as the guesser]

-

Teacher: Oh, it was Jade. Ok. [Jade had been identified by the teacher as low achieving in mathematics]

-

Res: [to Jade] Ok, you put in 81. Now, this is something very interesting. This is really really interesting. Now, on the one hand... so... ok, so... [addresses classroom] What do you think of Jade’s guess? ... More hands—what do you think of that guess?

-

Jade: Terrible. [laughing, somewhat uncomfortably, preempting anticipated ridicule]

-

Riv: She probably just uncovered a lot of... more green than blue when she was clicking.

-

Res: Ok, I’ll... let’s get some more input... Jonathan?

-

Jonathan: I think that it’s a good thing that she guessed so high, because otherwise the average would have been lower.

-

Res: Could you come up and explain that? [Jonathan walks over to the screen, uses a pointer, see Fig. 12, above]

-

Jon: Uhhm... because the average includes everyone’s guess, so that, say she guessed, like, down here [on the far left side of the distribution] like in the 40’s or the 30’s, well then the average would have been lower, and the average would have been farther away from the actual thing. So like...’cause... if she moved it [her guess] like down here [to the 40’s], the average would have been lower, because the total before you divide would have been lower. So, the lower the total before you divide, the lower the number would be. The average would be, like, more down here—it would be farther away from the actual... from the actual guess... from the actual answer.

-

Res: So, so Jonathan, people who went with the group guess, what should they think about Jade’s guess?

-

Jon: They should, like, thank her for guessing so high, ’cause that’s what got them—that’s what got them close enough to the actual answer.

-

Students: Thank you Jade, thank you Jade.

Jade’s episode is an example of how a facilitator working in a networked classroom can tap the classroom’s social dynamics to ground mathematical understanding in a shared experience. The histogram serves as a bridging tool between Jade’s individual guess, which she constructed within her computer environment, and the classroom distribution and mean. Had the entire distribution of guesses not been available as a shared display (if, for example, we had been working only with monitors showing various central-tendency outputs), this moment could not have been spun through social tension into learning and new esteem for a student who, apparently, struggles in mathematics. Also, given students’ evidently limited understanding of “mean” coming into this design, Jade’s episode may have afforded the classroom an opportunity to construe the mean in a new way that was more meaningful than the algorithm for computing it.
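Jonathan's reasoning about "the total before you divide" can be traced with the figures reported in the episode (classroom mean 47.2%, Jade's guess 81%, true value 50%). The following is a minimal sketch rather than a reconstruction of the classroom data: the number of guesses is not reported, so the class size of 20 is an assumption made only for illustration, and both helper functions are hypothetical.

```python
def mean_without(group_mean, n, guess):
    """Mean of the remaining n - 1 guesses after one guess is removed."""
    return (group_mean * n - guess) / (n - 1)

def mean_with_replacement(group_mean, n, old_guess, new_guess):
    """Mean of n guesses after one guess is changed from old_guess to new_guess."""
    return (group_mean * n - old_guess + new_guess) / n

# Reported in the episode: classroom mean 47.2, Jade's guess 81, true value 50.
GROUP_MEAN, JADE, TRUE_VALUE = 47.2, 81, 50
N = 20  # ASSUMPTION: the class size is not reported; 20 is hypothetical.

others_only = mean_without(GROUP_MEAN, N, JADE)
if_jade_low = mean_with_replacement(GROUP_MEAN, N, JADE, 40)  # "in the 40's"

print(round(abs(GROUP_MEAN - TRUE_VALUE), 2))   # error with Jade's guess of 81
print(round(abs(others_only - TRUE_VALUE), 2))  # error without her guess
print(round(abs(if_jade_low - TRUE_VALUE), 2))  # error had she guessed 40
```

The direction of the effect does not depend on the assumed class size: because 81 lies above the mean of 47.2, removing or lowering that guess can only move the mean down, farther from the true value of 50.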

4.6 Summary

We have discussed five classroom episodes that we have interpreted as cases of student and/or classroom negotiation between some pair of affordances of a designed object within some activity context (see Table 1, below). In each case, a different object in the design constituted a bridging tool between these affordance antipodes. Student interaction with this bridging tool within the classroom forum supported coordination of idea elements, was instrumental in achieving the design-facilitated classroom tasks, and reflected common domain-specific practices. The design fosters opportunities for students to tap, shape, and coordinate their implicit skills by providing artifacts around which conceptual structures (concepts-in-action) cohere as useful bits of knowledge.

5 Conclusion

We have proposed a mathematics-education design-for-learning framework that provides a set of tools for coherently conducting domain analysis, design, instructional facilitation, data collection, and analysis of student multimodal reasoning in researching student learning. The framework is grounded in phenomenological philosophy, genetic epistemology, artificial-intelligence research, creativity studies, and cognitive-science perspectives on mathematics education. We demonstrated an implementation of this framework in a middle-school experimental unit on probability and statistics that was embodied in mixed media, including traditional tools, computer-based simulations, and a networked-classroom participatory simulation activity. In particular, we presented five episodes from classroom data that showed students tackling core constructs of the target domain by way of negotiating conflicting interpretations of mathematical artifacts. We conclude that the framework is suitable for implementing the intended pedagogical vision that students learn through personally re-constructing historical mathematical concepts.

5.1 Implications for mathematics-education pedagogy and design

If necessity be the mother of all invention, ambiguity is the father of all re-invention. Ambiguity plays a central role in the design-for-learning framework presented in this paper. We position ambiguity halfway between confusion and clarity, and we value ambiguity as a catalyst of generative cognitive conflict toward insight. Accordingly, our design framework lays out principles for crafting ambiguous mathematical objects, objects that give rise to two disambiguations. These dual interpretations of a single referent “are stimulus-synonymous without having the same meaning in any acceptable defined sense of ‘meaning’” (Quine 1960, p. 46). By affording two activities that are phenomenologically disparate yet mathematically complementary, the bridging tool anchors intrasubjective stimulus synonymy. By embedding these tools in classroom collaborative activities, the synonymy becomes intersubjective.

Learning mathematics is a process of coordinating mental action models into new schemas. On these new schemas ride mathematical terminology, symbolical notation, and solution procedures. The action models do not become coordinated haphazardly. Rather, the coordinating is grounded in objects in the learning environment and stimulated by some task that problematizes the object. von Glasersfeld (1992) clarifies that radical constructivism is no more about letting students dwell benignly in their blooming buzzing confusion than it is about dictating clarity. Rather, the idea is to create learning environments that foster opportunities for students to construct mathematical understanding. It is the objective of the learning-axes-and-bridging-tools design framework to create sufficient constraints so as to land students halfway between confusion and clarity; this is achieved by providing crafted objects and activities that encourage student appropriation of mathematical constructs as personally invented problem-solving tools.

A set of guidelines follows from the work reported herein for designers of mathematics learning environments:

(a) analyze the target mathematical domain to identify its key constructs and representations;

(b) determine and isolate the action models inherent to making sense of these constructs and representations;

(c) formulate hypotheses as to the learning challenges inherent to reconciling the isolated action models as complementary;

(d) identify or create ambiguous (hybrid) objects affording these competing action models;

(e) embed these objects in activities that bring out the ambiguity; and

(f) design a learning environment that stimulates individual students to struggle with the ambiguity and facilitates student argumentation.

5.2 Limitations and future work

We do not claim that LA & BT (the learning axes and bridging tools framework) is a design-for-learning panacea for mathematics education. The theoretical model addresses a certain class of mathematical topics, each structured as a pair of conceptual building blocks that need to be fitted together and that can both, in turn, be embedded as perceptions of a single artifact (object, computer-based simulation, etc.). At this point, we cannot fathom the proportion of mathematical topics that can be fashioned as abiding by these necessary constraints (but see Abrahamson 2006c, for application of the framework to other mathematical concepts). Some of the numerous questions we are still asking are:

-

Why may it be challenging to conjoin complementary bits of knowledge? For instance, are these conceptual components initially construed as contradictory?

-

Are the axis components by necessity dialectically co-defining within each conceptual composite?

-

What of learning systems, rather than axes? Does learning advance as a gradual pairing of stable-enough concepts, or can several bits of knowledge come together all at once?

-

Are some learning axes in principle unbridgeable?

-

What is the nature of the relationship between intuitive and formal mathematical knowledge? Do intuitive perceptual judgments ever become articulated, or are they essentially only validated through appropriation of formal procedures (see Abrahamson and Cendak 2006)?

-

Might “simple” concepts, e.g., multiplication, also be, in fact, opaque composites in need of bridging?