Abstract

The focus of this work is to examine the relationship between subjective and objective measures of prestige of journals in our field. Findings indicate that items pulled from Clarivate, Elsevier, and Google all have statistically significant elements related to perceived journal prestige. Just as perceived prestige was related to several widely used bibliometric metrics, so were altmetric scores.

The proliferation of higher education journals began as early as the 1980s (Bayer, 1983) and has continued to accelerate; scholars in the field of higher education now have more journals than ever in which to place their work and from which to cite. This proliferation leaves scholars with many choices of where to submit work but also with questions about which journals to prioritize, as both submitter and reader, in order to maximize exposure to the desired audience and to advance their careers. If understanding about these journals is limited, then readers and authors alike will be unable to make fully informed decisions about what to read and cite as well as where to submit. People making decisions about the quality of their work, in both formative and summative capacities, may be uninformed as well.

Studies of journals can provide journal users with important data and thus have value, for example to journal editors as well as to institutions and scholars. The information such studies produce can affect submission trends, subscription levels, and advertising rates (Haas et al., 2007; Podsakoff et al., 2005). Such studies may also be meaningful for both institutions and specific academic programs, as scholarly publication productivity and prestige relate to institutional and program status (Dey et al., 1997; Drew & Karpf, 1981; Hartley & Robinson, 1997; Ranis & Walters, 2004). Further, knowledge about journals, and perceptions of their prestige, have consequences for faculty, since those factors can and do influence promotion and tenure decisions, resultant faculty status, and salary increases (Corby, 2003; Davis & Astin, 1987; Fox, 1985; Gaston, 1978; Nelson et al., 1983; O’Neil & Sachis, 1994; Tahai & Meyer, 1999).

Multiple studies have also shown that the publication expectations for faculty have been growing over time (Bok, 2015; Gappa et al., 2007; Geiger, 2010; Gonzales, 2012; Youn & Price, 2009). These increased expectations have faculty seeking to publish more, and focusing more heavily on high-prestige outlets (Schuster & Finkelstein, 2006; Youn & Price, 2009). Bok (2015) indicated institutions are shifting hiring practices in the same direction, targeting authors at the assistant level with greater and greater levels of pre-existing publication productivity. Or perhaps graduate students are increasingly trained to understand the importance of publication and to pursue it while enrolled, before seeking a position. Ball suggests, “Ranking journals and publications is not just an academic exercise. Such schemes are increasingly used by funding agencies to assess the research of individuals and departments” (Ball, 2006). As such, journal prestige and publication in prestigious journals are critically important for promotion and tenure decisions (see, e.g., Heckman & Moktan, 2020; Lindahl, 2018).

For this reason, journals in the field of higher education represent an important area of inquiry. In particular, information about the quality or reputation of journals is important to understand, since perceptions of quality can affect usage. Reputational standing and perception of quality are linked to the concept of prestige (Bray & Major, 2011). Understanding prestige is clearly important for authors and those evaluating their work, as is understanding whether prestige is something purely subjective or whether it can be measured and encapsulated by the metrics available in a still-developing field of study like higher education administration. This is the focus of our work here: to examine the relationship between subjective and objective measures of prestige of journals in our field.

Background

In 2011, we published our first look at the prestige of journals in higher education. We chose the concept of “prestige” since by definition, it refers to the quality or standing of something, rather than “status,” which by definition implies a consideration of standing relative to others. We wanted a consensus about general levels of quality and reputation among users. In particular, our original work was (and current work is) grounded in conflict-based prestige theory (see Wegener, 1992). Wegener conceptualized prestige as having four main categories: rational conflict theories (in which prestige has its foundation in esteem), rational order theories (in which the basis of prestige exists through achievement), subjective order theories (in which prestige is considered upon the basis of charisma), and normative conflict theories (in which the concept of prestige has its basis in honor). In the pair of order-based theories, prestige is a characteristic of the individual person or entity in question, while in the pair of conflict theories, prestige is instead linked to the way it is socially ascribed or aggregates. In the quadrant of prestige theories we utilize, the conflict-based prestige theory, it is suggested that prestige exists and can be assessed by the perceived values that others ascribe. In other words, prestige is prestige; the Journal of Higher Education, for instance, has prestige because the members of the field of study of higher education view it as in a position of honor, or worthy of esteem. As a result, those journals that are viewed as being good, as being worthy of attention or merit, garner more submissions and more attention, which in turn makes them more likely to attract top manuscripts, which reinforces views on their merit and worth.

Perhaps because of this cycle and the importance of perception to prestige, in addition to possible metrics or measures that also factor in, the study of prestige can and should include both objective and subjective measures. It would also be of interest to ascertain whether objective and subjective measures that factor into prestige are related to each other. However, at the root of prestige in the conflict-based model is the perception of prestige and the sense of consensus regarding those perceptions within a group or population. Thus, using an approach drawing upon conflict-based prestige theory, we began our work by asking higher education faculty for their perceptions of the prestige level of journals in the field of study of higher education. Our further examination then linked these subjective perceptions to objective measures, creating the bifurcated focus suggested by Wegener (1992) in focusing more holistically on prestige.

For this 2011 work, we surveyed faculty in the field of higher education. In addition to gaining a general understanding of the perceived quality of the 50 journals in our study, we found that journals our participants considered to be “top tier” tended to be generalist in nature, while more specialized journals tended to be lower in overall ranking. Top tier journals tended to be cited more, but journals as a whole had relatively low citation rates and low representation in databases such as the Social Sciences Citation Index.

This conundrum fit with one of the questions that arose from our 2011 study: the nature of prestige for journals. Is prestige related to longevity, the amount the journal was cited, or something else? Do we just know prestige when we see it? In short, is prestige something faculty view as existing without a basis, or is perceived prestige related to specific metrics, which would allow us to develop a prestige scale based on evidence? This raises the question of whether such a scale would then have validity. Based on a conflict theory approach to prestige, in which it can be accumulated from the acknowledgement and esteem of others, we believe prestige is as it is viewed by the people considering the topic. Thus, journal prestige in higher education is what the collective ascribes as prestige to those journals. Fitting objective metrics (see Appendix A for data sources and descriptions, and Appendix Table 6 for the variables drawn from each source with descriptions) to the subjective measure of prestige therefore offers a valid attempt to unpack how that prestige is formed and whether it fits data-driven patterns.

Research Review

Research on academic journals in general is fairly limited, particularly as focused on specific disciplines and fields of study. Little work has been done in the field of higher education administration literature specifically. However, some studies of the use of objective measures to link to prestige exist across different specialties. In a study across institutions in the US and the UK, Wellington and Torgerson (2005) coded findings from 161 items into five main categories: entry and acceptance and the process of refereeing, the editor and editorial board, the authors’ reputation, content quality of the journal, and objective data such as circulation, citation data, and readership. However, these findings were not consistent across higher education institutions, and they differed across national systems of higher education. The findings from US faculty indicated that the content of the journal (29.8%) was the most important in defining perceived status by the faculty, followed by readership (21.3%). Only 8.5% mentioned the status of the authors, and a minimal 2% commented on the publishers.

Content, as viewed by respondents, was an important aspect for faculty, and offers an interesting but cautionary message. While faculty may engage in work that is publishable and important, it is possible that even if the work is exemplary and groundbreaking, it may still be viewed as “lesser” just because of the content area. Faculty have to continually work against such pressures in opening new vistas for examination, something important to remember and consider in looking at prestige. Packwood et al. (1997) also examined what faculty considered characteristics of a good journal. Their findings suggested three categories valued by faculty: quality and clarity of the writing, breadth and scope of the journal, and timeliness and originality of the articles.

Objective and Subjective Data

While only a few studies of objective and subjective data on academic journals exist, these offered some lessons for our work. DeJong and St. George (2018), in their objective and subjective review of criminal justice journals, for example, found that Thomson Reuters’ journal impact factor (JIF) may not be the best measure of quality in terms of linking subjective scales to objective ones, whereas Google Scholar’s h-index and Elsevier’s CiteScore are more closely related to subjective journal ratings (see the Appendix for additional information about these sources). Callaham et al. (2002) found that the impact factor of the journal in which a work was published is the strongest factor in its ultimate usefulness, when usefulness is determined by number of citations.

Of the few studies on the status of journals in higher education specifically, research typically has relied upon one of two main approaches: an objective approach (e.g., Bayer, 1983; Haas et al., 2005; Nelson et al., 1983) using information like citation rates on the Social Sciences Citation Index (SSCI), or a subjective approach in which respondents share what their perceptions are rather than applying specific metrics (e.g., Bayer, 1983; Bray & Major, 2011). While both measures provide important data, the solution offered by Wegener (1992) is to combine the two. However, metrics for the field of higher education remain relatively underpopulated, and it has not been possible to address how much objective and subjective measures coincide, let alone to consider combining them. It is important to note some of the limitations to using and blindly accepting some of the metrics available, particularly in fields of study as opposed to disciplines. For example, available metrics may be less predictive of prestige in low consensus fields (see Biglan, 1973) than in high consensus fields. Similarly, the way we access data may be a challenge in incorporating metrics evenly across fields, or in using studies from one field to understand another; circulation rates, for instance, may not be the best measure of usage (Nelson et al., 1983), something we see argued more and more with the rise of various altmetrics, which can provide more nuance to how we look at journal articles, how they are accessed, and how things like abstract views compare to full text downloads.

One of the more common approaches, and one we hear suggested by various administrators, is the inclusion of journals in, or measures from, the Social Sciences Citation Index (SSCI) (see Bayer, 1983; Nelson et al., 1983). However, while the SSCI is a useful database for some fields and for measuring citation metrics from those, it is of limited utility for higher education. Consider that few of the journals included in this study are even listed in the SSCI. We have heard administrators argue that inclusion in the SSCI is itself an indicator of prestige, suggesting faculty should focus on getting their work into those journals as greater evidence of the importance of their work. However, it remains to be seen whether this is true, or whether instead the SSCI is simply a poor measure for some fields rather than a prestige indicator. If the latter, then treating the SSCI as a prestige factor would be highly deleterious to the work of faculty in the field, because it suggests that journals not in the SSCI are limited in some way. Given how few journals in the field are in the SSCI, overstating the perceived value of SSCI inclusion would have a chilling effect on several areas of research. Citation measures alone, whether included in the SSCI or not, are not an indicator of originality or a measure of creativity either and can be skewed across fields (Oromaner, 1981; Thelwall & Wilson, 2014). The measure of journal or publication status is not wholly objective and can show little consensus across measures and the platforms on which they are compiled (see Smart, 1983; Harzing & Van der Wal, 2009).

Meanwhile, subjective measures suffer from many disagreements about how best to capture prestige as well (Doreian, 1989). Who should be selected to determine prestige? Department chairs, particularly in amalgamated multi-program departments, often do not know enough about the myriad of journals to have an informed opinion. Even within a field like higher education administration, specialization is occurring rapidly enough that many professionals may have difficulty knowing all the journals across sub-specializations to know how valued they are by specialists in those areas. There is also the difficulty of deciding which journals should be selected and how the rating instrument is constructed (Doreian, 1989).

Today, there exists no lack of evaluation systems, just a lack of complete ones capturing journals across the field of higher education administration. A breakdown of objective measures highlights key ways journal quality is measured objectively across different rating systems, which will guide our discussion. The challenge for higher education professionals in considering their work or evaluating the work of others is that most of these systems only evaluate or include a small portion of the journals in our field. Relying on one measure leaves many journals out of any consideration. (Note: we do not include Academic Analytics as it does not provide a clear, accessible inventory of its journals and their metrics or measures for them).

Methods

The research questions driving this study were two-fold: 1) what factors are deemed as important to journal prestige, and 2) how do the expressed factors of prestige match with rankings of individual journal prestige. To answer these questions, we asked a wide array of professionals for their perceptions of journal prestige, what factors they thought mattered for prestige, and then set out to gather data on those factors to determine how subjective and objective measures of prestige related.

For data, we sought information across a wide array of categories, including respondent background characteristics as well as perceptions of factors of prestige, in addition to specific levels of prestige for each of the journals included in this study.

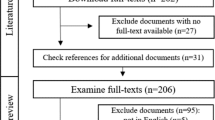

In order to conduct this study, we compiled a comprehensive list of journals in higher education, realizing that the pantheon of journals in the field is a moving target. The initial review of journals in the field indicated 149 publications. We next sought to categorize journals into sub-specializations in the field as well, a topic that seemed both critically important and controversial at the same time. To that end, we made initial categorizations of journals based on their expressed missions, placing them into one of ten categories. The ASHE and AERA Division J content areas for proposal submissions provided a starting point that was used as a proxy for current ways the field is divided by other experts.

We then went through editorial boards, authors, and ASHE and AERA J presenters to establish a panel of experts within each content area to examine initial journal categorizations and to suggest any deletions, additions, or revisions. This led to some journals being included across more than one specialization area, and to a final grouping of ten categories: 22 journals that focused generally across higher education, 19 focused on Student issues, 27 in the category of Teaching and Learning, 14 categorized as having an Organization and Administration focus, 32 focused on Policy issues, 13 grouped on Context, Foundations, and Methods, 12 focused on Comparative/International topics, 7 grouped on Community College topics, 19 focused on Minority and Underrepresented Groups, and 18 focused on Developmental and Continuing Education. The survey was then piloted with these individuals to ensure ease of navigation as well as to confirm journal inclusion as indicated.

The list of study participants was drawn from authors who had written across the journals selected, as well as from ASHE presentations, creating a respondent list of 1,023 with a final sample size of 988. Our goal was to send to rank and file professionals across the field of study of higher education. Three hundred forty-two respondents completed the survey, leading to a response rate of 34.4%. In spite of our best efforts, self-selection bias is a possibility; it is challenging to determine the respondents’ representativeness given we do not have exact figures for the sample to whom the survey was sent or for the larger population represented by that sample. What we do know is that the sample was diverse across several categories and more limited in respondent diversity in others. One hundred twelve identified as male, and one hundred twenty-six identified as female, with 2 gender non-conforming, 1 transgender, and 6 providing an unlisted identity; 27 percent chose to not respond. Just under seven percent were Asian American, twelve percent of the respondents were African American, three percent Pacific Islander, three percent Hispanic, and three percent identified themselves as multi-racial or mixed, with roughly 50 percent Caucasian.

All respondents were asked for their perceptions of the prestige of journals that had a generalist focus, and then of journals within their specialty or specialties. They were also asked to provide their perceptions of which criteria mattered the most for prestige. To gauge their perceptions, we asked respondents to place each journal in their area into one of three categories; to avoid confusion between high and low, we labeled these options “First Tier”, “Second Tier” and “Third Tier.” Given that prestige was being framed by Wegener’s theory, we treated prestige for this element as a social construct developed from how people perceived the journals and placed no specific criteria on why a given journal was or was not prestigious, or how to determine if a journal was prestigious. Instead, we simply asked how prestigious the journal in question was.

We then gathered specific information from the databases listed in our literature review to create a database of journal metrics to compare to the subjective score of prestige and to their perceptions of what factors mattered for prestige. Unfortunately, this reduced the number of journals to 75 using Elsevier, while the number from the SSCI reduced to 27 for those analyses. While this limits our findings, it also highlights the importance of developing a sense of how metrics matter, so we can more broadly consider prestige even when the journals themselves are not included in specific metrics.

With the data in hand, we ran correlations to look for the relationship between subjective prestige and the various associated objective metrics such as impact factors, SCITE score, etc. (see Appendix Table 6 for a complete list and explanation of variables used). We also looked for multi-collinearity issues before creating z-scores for all items and seeking to develop a regression model to predict prestige; this allows for the development of a scale comprised of all the items that fit with prestige.
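The two basic steps described above, standardizing each metric and correlating it with the subjective prestige score, can be sketched as follows. This is a minimal illustration rather than the analysis actually run for the study: the data values are invented, the function names are ours, and the use of the population standard deviation for z-scores is an assumption, since the text does not specify it.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and (population) standard deviation 1."""
    m = mean(values)
    s = pstdev(values)  # assumption: population SD; the source does not say
    return [(v - m) / s for v in values]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical data: lower prestige score = more prestigious journal.
prestige = [1.2, 1.5, 2.1, 2.4, 2.8]
cited_half_life = [12.0, 10.5, 9.0, 7.5, 6.0]

r = pearson_r(prestige, cited_half_life)
# Standardizing both variables leaves the correlation unchanged.
r_standardized = pearson_r(z_scores(prestige), z_scores(cited_half_life))
```

Because z-scoring is a linear rescaling, it does not alter the correlations; its purpose here is to put metrics measured on very different scales on a common footing before they enter a regression model.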

Findings

Descriptives provide some informative data (see Table 1). These data are particularly helpful in contextualizing those other higher education journals that are not included in one or more of these databases (Clarivate or SCImago). The average number of citable items was 90.14, with 98 percent of content being considered citable documents. The average cited half-life is 9.9 years, while the average citing half-life is 8.9 years. The average journal impact factor in 2020 is 3.20, with a 5-year average JIF of 4.12. Journal impact factor without self-citation dropped to 2.80, while average JCI in 2020 is 1.92. The average Google Scholar h-index score is 44.14. Average acceptance rate across all journals was 22.46 percent, and the average age of the journals in the field was 55 years. On average, journals published 4.73 times per year, with an average journal article length of 23.3 pages. Average time to review was 2.9 months. The average Altmetric score across journals was 30.98.

Faculty perceptions of the importance of various prestige criteria are presented in Table 2; data were scored on a scale of 1–5, with 1 being very important. Peer review is far and away the single strongest criterion faculty identified for journal prestige, with a mean of 1.28. Acceptance rate (2.23) is the next most important criterion perceived to relate to prestige, followed by scholarly focus (2.3), impact factor (2.31), inclusion on SSCI (2.43), and longevity (2.43).

In response to research question one, we started by calculating a prestige score for each journal, based on the mean score for each journal across all respondents. This became our dependent variable, a subjective measure of prestige. We then examined the objective measures’ correlation with subjective prestige. Remember that a lower score was more prestigious, so negative relationships indicate increased prestige with an increase in the correlated variable. We found that, while the Clarivate (SSCI) measures were the strongest in their relationship with perceived prestige, items pulled from Clarivate, Elsevier, and Google all have statistically significant elements related to perceived prestige (see Table 3). The strongest relationship with prestige is cited half-life (r(20) = -0.710, p < 0.001), meaning that higher journal prestige correlated with a higher cited half-life, or higher median age of citations. This was followed by citing half-life (r(20) = -0.551, p < 0.05), again negative, which means that an increase in prestige coincided with an increase in the median age of cited works. Thus, journals with older citations and older cited works tended to be more prestigious.
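The construction of the dependent variable can be illustrated with a short sketch. It assumes, as the sign convention above implies, that "First Tier" is coded 1 and "Third Tier" is coded 3; the journal names, ratings, and function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def prestige_scores(ratings):
    """Average the tier ratings each journal received across respondents.

    ratings: iterable of (journal, tier) pairs, with tier assumed to be
    1 (First Tier), 2 (Second Tier), or 3 (Third Tier).
    """
    by_journal = defaultdict(list)
    for journal, tier in ratings:
        by_journal[journal].append(tier)
    return {journal: mean(tiers) for journal, tiers in by_journal.items()}


# Hypothetical responses from a handful of raters.
ratings = [
    ("Journal A", 1), ("Journal A", 1), ("Journal A", 2),
    ("Journal B", 3), ("Journal B", 2),
]
scores = prestige_scores(ratings)
# A lower mean indicates higher perceived prestige under this coding.
```

Under this coding, a metric that rises with perceived prestige produces a negative correlation with the score, which is why the signs reported here read in the opposite direction from what one might first expect.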

Other factors included immediacy (r(20) = 0.501, p < 0.05), which means that as perceived prestige went up, the time it took for articles to be cited went down. The opposite was true with article length (r(47) = -0.480, p < 0.001); longer articles were correlated with higher prestige levels. A significant age of journal relationship (r(47) = -0.461, p < 0.001) indicated that the longer a journal has been in existence, the higher its prestige. Oddly, a lower journal impact factor from 2020 (r(20) = 0.445, p < 0.05) also correlated with higher journal prestige. Also correlated with higher prestige were a lower acceptance rate (r(47) = 0.436, p < 0.01), a higher h-index (r(54) = -0.300, p < 0.05), and a higher SJR score (r(53) = -0.289, p < 0.05).

Considering our specific list of journals recommended by a panel of professionals across the field and various sub-specializations, different databases were missing a quarter to over half of the higher education administration publication outlets. Thus, in considering research question two, we decided to first test a model that did not require the inclusion of a given journal in any database. In other words, we wanted to see how solid a predictor of subjective prestige we could create with readily available data; thus, if a journal was not included in Elsevier, Clarivate, Google, or Cabells, would we still be able to predict prestige? With that in mind, we were concerned with making a model that looked at relationships between variables that are generally easy to find. Our rationale is that many journals in higher education are not included in any of these databases; we wanted to construct a best model for prestige based on the indicators found to be significant in this study, so that it could serve those publishing in journals that are not, for whatever reason, included in these main databases. Table 4 outlines this base model; using only age of the journal, length of the accepted manuscript, and acceptance rate of the journal, items that can be obtained from most journals directly rather than relying on a specific database, yields a regression model in which these variables combine to predict prestige [F(3, 37) = 11.908, R² = 0.491, p < 0.001].
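As a rough consistency check on the statistics reported above, the F statistic of a linear regression can be recovered from R² and the degrees of freedom through the standard identity F = (R²/k) / ((1 − R²)/df), where k is the number of predictors and df is the residual degrees of freedom. The sketch below applies that identity; the function name is ours, and a small gap from the reported F is expected because R² is rounded to three decimals.

```python
def f_from_r2(r2, k, df_resid):
    """Overall F statistic of a linear regression from R-squared.

    r2: coefficient of determination
    k: number of predictors (numerator degrees of freedom)
    df_resid: residual (denominator) degrees of freedom
    """
    return (r2 / k) / ((1 - r2) / df_resid)


# Base model: three predictors (journal age, article length,
# acceptance rate) and 37 residual degrees of freedom.
f_base = f_from_r2(0.491, 3, 37)
```

The value computed this way lands within rounding distance of the reported F(3, 37) = 11.908, which suggests the reported R² and F are mutually consistent.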

In developing a more comprehensive model to see how well we could predict prestige from all rating and ranking factors, we encountered multiple issues with multi-collinearity. For example, we were very interested in including the Altmetric score to see how it related; however, while it was a Cabells calculated score, it alone had a collinearity above 0.8 (our threshold for removing one of the variables) with several Clarivate scores, including JIF 2020, JIF 5 year, JIF without self-citation, immediacy, and JCI 2020. Any collinearity magnitude greater than or equal to 0.8 was used as grounds for removing one of the related variables in construction of a regression model.
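The 0.8 screening rule described above can be sketched as a simple greedy filter over pairwise correlations. The text does not say which member of a collinear pair was dropped, so keeping the earlier-listed variable is purely an assumption of this sketch, as are the variable names and data values.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def drop_collinear(columns, threshold=0.8):
    """Keep a variable only if |r| with every already-kept one is below threshold.

    columns: dict mapping variable name -> list of values (insertion order
    decides which member of a collinear pair survives; an assumption here).
    """
    kept = []
    for name in columns:
        if all(abs(pearson_r(columns[name], columns[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical metrics for five journals.
columns = {
    "jif_2020": [1.0, 2.0, 3.0, 4.0, 5.0],
    "altmetric": [10.0, 21.0, 29.0, 41.0, 50.0],   # nearly collinear with JIF
    "journal_age": [40.0, 12.0, 55.0, 20.0, 33.0],
}
kept = drop_collinear(columns)
```

In this toy example the altmetric column is removed because it tracks the impact factor almost perfectly, mirroring the situation described above in which the Altmetric score could not sit alongside several Clarivate measures in the same model.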

A factor analysis and a z-scored set of variables were used to create a model that predicted 68% of the variance in prestige but was not statistically significant [F(6, 3) = 1.056, R² = 0.679, p = 0.525]. Working across the items that needed to be removed for collinearity, we went through multiple iterations before finally reaching a model that predicted 97% of the variance in prestige (although note the subject-to-variable ratio was low) and was significant at the 0.05 level (see Table 5). Yet, interestingly, in this model no one variable is statistically significant.

One point for further consideration was the findings related to the altmetric scores provided by Cabells. Remember, altmetrics do not have a significant relationship with prestige of higher education journals directly. They did, however, have a strong correlation with several other strong, widely regarded impact measures. For example, they have significant relationships with the five-year JIF (r = 0.925**), Google Scholar’s h median (r = 0.608*), SJR (r = 0.679**), Elsevier’s journal rank (r = -0.499**), JIF percentile (r = 0.574**), and number of references per document (r = 0.325*). While some may dismiss altmetrics and the importance of considering broadly the impact of a journal and its pervasion into general discourse across other platforms and in measures other than citations, it appears those concerns may be a bit less daunting than they may originally seem. Instead, it seems that having a footprint in those spaces relates well to more widely accepted measures of impact, and is thus worth further consideration.

Discussion

There has been a great deal of discussion on prestige and journal quality over the years. The interest in our 2011 work was immediate and very focused on practical uses for the knowledge of journal prestige. Like Yuen’s (2018) study of the relationship between various impact metrics, our study too finds robust correlations across many of these metrics, despite their different databases and mathematical approaches.

The findings present some confirmation of things we would expect based on literature and past research. There is a wealth of significant correlations between objective quality measures such as impact factors, eigenvalues, and scores. Blind peer review was important, and remains the coin of the realm, but we mostly selected only peer reviewed journals, so assessment of its true impact here was limited and could use further development in subsequent analyses. Acceptance rate mattered strongly, too; the ability to say no to many authors who want to be published in a given journal continues to be a solid proxy of how we collectively view a journal. As several prestigious journals have a long time to publication, these data would be helpful to include in future examinations. Unfortunately, such data were not widely reported and included, so their relative weight could not be analyzed here.

One of the more interesting questions is about faculty perceptions of what should matter for prestige, and comparing those with actual relationships of those measures with prestige. For example, article length mattered, but respondents did not feel it would or should. More prestigious journals, though, give authors more collective space in which to present their work. By far the strongest individual correlations with prestige were citing- and cited half-life, with cited half-life by far predicting the most variance in prestige.

A final note must be considered for altmetrics as well. While not useful as a tool for understanding journal prestige, altmetrics did correlate strongly with several impact scores that are widely respected and utilized. It is understood that many faculty are slow to adapt to new technology, and are slow to shift to utilizing social media platforms to advance the audience for their work. This finding suggests they would be wise to seek those opportunities. Furthermore, there is reason to believe this approach, and citation of those metrics, should be a helpful tool for junior faculty for whom citation scores will trail behind altmetric scores which provide much greater immediacy. It will be interesting to analyze the changing role of altmetrics, altmetrics scales and individual indicators included within them to follow their relationships with known factors like citation scores as well as with overall senses of journal prestige.

Conclusion

Prestige as a subjective measure is in fact significantly related to several quantitative measures across journals, and the prestige scores of at least many of the higher rated journals can be predicted relatively accurately from metrics compiled across the multiple databases used in this study. However, this does not mean that these relationships would hold true across other journals that are not currently included in these various databases. Further examination into the nature of the journals that are included here (i.e., whether they are generalist in nature, whether they are over- or underrepresented from specific sub-specialties, etc.) would be of great help in understanding more completely, in the absence of these metrics for journals that are not captured across these databases, how much weight we can put into the prestige scales. That is, we would argue that prestige is prestige; a wide swath of faculty have provided feedback on these subjective measures of prestige. This argument may not sway a quantitatively minded dean or promotion and tenure committee. If we can suggest strongly that these prestige scores do in fact relate strongly to the data that are known to exist, then in the absence of those data, these prestige scores could be a good proxy of the collective quantitative model of quality. In other words, when we know age of journal, article length, and acceptance rate, regardless of whether the journal is in SSCI, Clarivate, or SCImago, we can get a sense of the relative prestige of the journal. Thus, available prestige rankings of higher education journals can be used with confidence by the various individuals and committees that assess faculty members for such matters as reappointment, tenure and promotion, and annual salary adjustments.

We must reiterate that, just as prestige was strongly related to several impact factors, so were altmetric scores. This fact suggests that engagement with a piece of scholarship in public discourse is of inherent value, and it also indicates the weight and suasion of the piece in question. Citation scores and affiliated metrics are an increasingly limited way to conceptualize the measurement of scholarship; altmetrics and generally known data about the journals should be used in examining the effect of scholarship as well.

Data Availability

Not applicable for this work.

Code Availability

Not applicable for this work.

References

Ball, P. (2006). Prestige is factored into journal ranking. Nature, 439, 770. https://doi.org/10.1038/439770a

Bayer, A. E. (1983). Multi-method strategies for defining “core” higher education journals. The Review of Higher Education, 6, 103–113.

Bergstrom, C. (2007). Eigenfactor: Measuring the value and prestige of scholarly journals. College & Research Libraries News, 68(5), 314–316.

Biglan, A. (1973). The characteristics of subject matter in different academic areas. Journal of Applied Psychology, 57(3), 195–203.

Bok, D. (2015). Higher education in America. Princeton University Press.

Bray, N. J., & Major, C. H. (2011). Status of journals in the field of higher education. The Journal of Higher Education, 82(4), 479–503.

Callaham, M., Wears, R. L., & Weber, E. (2002). Journal prestige, publication bias, and other characteristics associated with citation of published studies in peer-reviewed journals. JAMA, 287(21), 2847–2850. https://doi.org/10.1001/jama.287.21.2847

Clarivate. (2021a). Journal citation reports: Reference guide. https://clarivate.com/webofsciencegroup/wp-content/uploads/sites/2/2021/06/JCR_2021_Reference_Guide.pdf. Accessed 28 March 2022.

Clarivate. (2021b). Introducing the journal citation indicator. https://clarivate.com/wp-content/uploads/dlm_uploads/2021/05/Journal-Citation-Indicator-discussion-paper-2.pdf. Accessed 28 March 2022.

Clarivate. (2022). Journal citation reports: Data. https://incites.help.clarivate.com/Content/Indicators-Handbook/ih-journal-citation-reports.htm. Accessed 28 March 2022.

Corby, K. (2003). Constructing core journal lists: Mixing science and alchemy. Libraries and the Academy, 3(2), 207–217.

Davis, D. E., & Astin, H. S. (1987). Reputational standing in academe. Journal of Higher Education, 58(3), 261–275.

DeJong, C., & St. George, S. (2018). Measuring journal prestige in criminal justice and criminology. Journal of Criminal Justice Education, 29(2), 290–309.

Dey, E. L., Milem, J. F., & Berger, J. B. (1997). Changing patterns of publication productivity: Accumulative advantage or institutional isomorphism? Sociology of Education, 70, 308–323.

Doreian, P. (1989). On the ranking of psychological journals. Information Processing and Management, 25(2), 205–214.

Drew, D. E., & Karpf, R. (1981). Ranking academic departments: Empirical findings and a theoretical perspective. Research in Higher Education, 14(4), 305–320.

Fox, E. (1985). International schools and the international baccalaureate. Harvard Educational Review, 55(1), 53–69.

Gappa, J. M., Austin, A. E., & Trice, A. G. (2007). Rethinking faculty work and workplaces: Higher education’s strategic imperative. Jossey-Bass.

Gaston, J. (1978). The reward system in British and American science. Wiley.

Geiger, R. L. (2010). Optimizing research and teaching: The bifurcation of faculty roles in research universities. In J. C. Hermanowicz (Ed.), The American academic profession: Transformation in contemporary higher education (pp. 21–43). Johns Hopkins University Press.

Gonzales, L. D. (2012). Responding to mission creep: Faculty members as cosmopolitan agents. Higher Education, 64(3), 337–353.

Google Scholar. (2022). Metrics. https://scholar.google.com/citations?view_op=metrics_intro&hl=en. Accessed 28 March 2022.

Gu, X., & Blackmore, K. (2017). Characterisation of academic journals in the digital age. Scientometrics, 110(3), 1333–1350.

Hartley, J. E., & Robinson, M. D. (1997). Economic research at national liberal arts colleges: School rankings. Journal of Economic Education, 28(4), 337–349.

Harzing, A. W., & van der Wal, R. (2009). A Google Scholar h-index for journals: An alternative metric to measure journal impact in economics and business. Journal of the American Society for Information Science and Technology, 60(1), 41–46. https://doi.org/10.1002/asi.20953

Heckman, J. J., & Moktan, S. (2020). Publishing and promotion in economics: The tyranny of the top five. Journal of Economic Literature, 58(2), 419–470.

Lindahl, J. (2018). Predicting research excellence at the individual level: The importance of publication rate, top journal publications, and top 10% publications in the case of early career mathematicians. Journal of Informetrics, 12(2), 518–533.

Mañana-Rodríguez, J. (2015). A critical review of SCImago journal & country rank. Research Evaluation, 24(4), 343–354.

Nelson, T. M., Buss, A. R., & Katzko, M. (1983). Rating of scholarly journals by chairpersons in the social sciences. Research in Higher Education, 19(4), 469–497.

Oromaner, M. (1981). The quality of scientific scholarship and the “graying” of the academic profession: A skeptical view. Research in Higher Education, 15(3), 231–239.

Packwood, A., Scanlon, M., & Weiner, G. (1997). Getting published: A study of writing, refereeing and editing practices. Sociological Research Online, 2(3), 67–68.

Podsakoff, P., Mackenzie, S., Bachrach, D., & Podsakoff, N. (2005). The influence of management journals in the 1980s and 1990s. Strategic Management Journal, 26, 473–488.

Ranis, S. H., & Walters, P. B. (2004). Education research as a contested enterprise: The deliberations of the SSRC-NAE Joint Committee on Education Research. European Educational Research Journal, 3(4), 795–806.

Schuster, J. H., & Finkelstein, M. J. (2006). The restructuring of academic work and careers: The American faculty. Johns Hopkins University Press.

Smart, J. C. (1983). Perceived quality and citation rates of education journals. Research in Higher Education, 19(2), 175–182.

Tahai, A., & Meyer, M. J. (1999). A revealed preference study of management journals’ direct influences. Strategic Management Journal, 20(3), 279–296.

Thelwall, M., & Wilson, P. (2014). Distributions for cited articles from individual subjects and years. Journal of Informetrics, 8(2014), 824–839.

Wegener, B. (1992). Concepts and measurement of prestige. Annual Review of Sociology, 18, 253–280.

Wellington, J., & Torgerson, C. J. (2005). Writing for publication: What counts as a ‘high status, eminent academic journal’? Journal of Further and Higher Education, 29(1), 35–48.

Youn, T. I. K., & Price, T. M. (2009). Learning from the experience of others: The evolution of faculty tenure and promotion rules in comprehensive institutions. Journal of Higher Education, 80(2), 204–237. https://doi.org/10.1353/jhe.0.0041

Yuen, J. (2018). Comparison of impact factor, eigenfactor metrics, and Scimago journal rank indicator and h-index for neurosurgical and spinal surgical journals. World Neurosurgery, 119(4), e328–e337.

Author information

Contributions

All authors contributed to the study conception and design. All authors read and approved the final manuscript.

Ethics declarations

Ethical Concerns

Part of the data was collected following Institutional Review Board approved procedures, while the rest was general access data.

Conflicts of Interest

The authors have no conflicts of interest to disclose.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Data Sources

Cabell’s Scholarly Analytics

Cabell’s has rebranded its scholarly analytics as “Journalytics,” which covers over 11,000 journals across 18 disciplines. It presents information on journal acceptance rate, type of review (double blind, blind, editorial, etc.), time to review, length of published articles, and time to publication post review, as well as an SCITE index and an Altmetric Report score. The SCITE score is calculated by dividing the number of supporting citations that articles receive by the combined number of supporting and contrasting citations, across a minimum of 100 citations. The Altmetric score is developed from attention across Facebook, blogs, and Twitter, and is reported as a median number to show the amount of attention articles generate.
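The SCITE calculation described above can be sketched in a few lines. This is only an illustration of the ratio as worded in this appendix, not Cabell’s implementation: the function name scite_index is hypothetical, and the sketch assumes the 100-citation minimum applies to the combined supporting and contrasting citations.

```python
from typing import Optional


def scite_index(supporting: int, contrasting: int,
                min_citations: int = 100) -> Optional[float]:
    """Share of supporting citations among supporting + contrasting ones.

    Assumption: the 100-citation minimum is applied to the combined
    count; below that threshold no score is reported (None).
    """
    total = supporting + contrasting
    if total < min_citations:
        return None
    return supporting / total


# 90 supporting and 10 contrasting citations give a score of 0.9.
print(scite_index(90, 10))  # 0.9
# Too few classified citations: no score.
print(scite_index(5, 5))    # None
```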

Clarivate Journal Citation Reports (Social Sciences Citation Index)

Clarivate (formerly Thomson Reuters) includes all journals in the Web of Science Core Collection (WSCC). It is widely known for its journal impact factor (JIF), developed in 1955, which is “defined as citations to the journal in the Journal Citation Reports™ (JCR) year to items published in the previous two years, divided by the total number of scholarly items, also known as citable items, (these comprise articles and reviews) published in the journal in the previous two years” with the logic that “Each cited reference in a scholarly publication is an acknowledgement of influence. JCR therefore aggregates all citations to a given journal in the numerator regardless of cited document type” (Clarivate, 2021a). Citations used in the calculation come from the WSCC: Science Citation Index Expanded, Social Sciences Citation Index, Arts & Humanities Citation Index, Conference Proceedings Citation Index, and Book Citation Index.
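As a worked illustration of the quoted JIF definition, the ratio can be written as a small function. The function and parameter names are hypothetical, and the numbers in the example are invented for illustration rather than taken from any journal in this study.

```python
def journal_impact_factor(citations_in_jcr_year: int,
                          citable_items_prev_two_years: int) -> float:
    """Citations received in the JCR year to items from the previous two
    years, divided by the citable items (articles and reviews) published
    in those two years."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("a journal needs at least one citable item")
    return citations_in_jcr_year / citable_items_prev_two_years


# Invented example: 300 citations in the JCR year to items from the
# previous two years, which together contained 120 citable items.
print(journal_impact_factor(300, 120))  # 2.5
```

Note that the numerator counts citations to any document type while the denominator counts only citable items, which is the asymmetry the quoted passage describes.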

Clarivate provides a range of metrics (see Clarivate, 2022), including citable items, percent of articles in citable items, cited half-life (the half-life indicates the number of years at which point half of the citations to the articles in the journal are more recent than that time), citing half-life, total articles, percent of OA Gold (articles in journals that are in the Directory of Open Access Journals), total citations, 2020 journal impact factor (JIF), 5 year JIF, JIF without self-citation, 2020 journal citation indicator (JCI) (see Clarivate, 2021b), calculated eigenfactor (meant to be like JIF but with weighted values for the articles included; see Bergstrom, 2007), normalized eigenfactor, article influence score, and JIF percentile and quartile. One challenge here is that, of the 183 journals included in our study, only 27 were included in the WSCC. So while there is a plethora of metrics, using them as an indicator of the quality of faculty publications in higher education administration will leave many areas of study and publications underrepresented and undervalued.
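The cited half-life described in the list of metrics above can be illustrated with a simplified, whole-year sketch. It assumes a list of citation counts grouped by the age of the cited items, with the most recent publication year first; the function name and the example counts are hypothetical, and the published figure may be computed with finer (fractional) resolution than this sketch attempts.

```python
from typing import List, Optional


def cited_half_life(citations_by_age: List[int]) -> Optional[int]:
    """Smallest number of years (counting back from the most recent
    publication year) that accounts for at least half of all citations.

    citations_by_age[0] holds citations to items from the most recent
    year, citations_by_age[1] to items one year older, and so on.
    Whole-year simplification; returns None when there are no citations.
    """
    total = sum(citations_by_age)
    if total == 0:
        return None
    running = 0
    for years, count in enumerate(citations_by_age, start=1):
        running += count
        if running * 2 >= total:
            return years
    return None


# Invented counts: 10, 30, 40, and 20 citations to items aged 1-4 years.
# Half of the 100 citations is reached within the third year.
print(cited_half_life([10, 30, 40, 20]))  # 3
```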

Elsevier (i.e., Scopus and SCImago)

The SCImago journal rankings are publicly available metrics built from information in the Scopus database, which is linked to Elsevier. The database and its combination of metrics led to the development of SCImago’s own SCImago Journal Rank (SJR) indicator, which is the average number of weighted citations received in a given year by the documents published in the journal over the past three years. Other indicators include: H index, total documents (2020), total documents (3 years), total references (2020), total cites (3 years), citable docs (3 years) (citable documents include articles, reviews, and presentations), cites per document (2 years) (often used as an impact factor or score), and references per document (2020). As with other indicators, though, transparency and methodological concerns exist for SJR and other Elsevier factors (see Mañana-Rodríguez, 2015). Alternatively, Gu and Blackmore (2017) found SJR to be better than the alternatives and thus used it as the fundamental score for scholarly impact.

Google Scholar

Google Scholar (2022) provides an h5-index and an h5-median score. The h5-index is the h-index for articles that have been published in the last five years, while the h5-median represents the median number of citations for the articles that make up the h5-index.
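The two Google Scholar measures can be made concrete with a short sketch. It assumes the input is a list of citation counts for a journal’s articles from the last five years; the function names and the example counts are hypothetical and are not drawn from the study data.

```python
from statistics import median
from typing import List


def h_index(citations: List[int]) -> int:
    """Largest h such that h articles each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def h5_median(citations: List[int]) -> float:
    """Median citation count of the articles that make up the h-core."""
    h = h_index(citations)
    core = sorted(citations, reverse=True)[:h]
    return median(core) if core else 0


# Invented five-year citation counts for seven articles.
counts = [25, 18, 12, 7, 5, 3, 1]
print(h_index(counts))    # 5: five articles have at least 5 citations
print(h5_median(counts))  # 12: median of 25, 18, 12, 7, 5
```

Restricting the input to articles from the last five years is what turns the ordinary h-index into the h5-index; the calculation itself is otherwise unchanged.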

Appendix B

Table

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bray, N.J., Major, C.H. Impact Factors, Altmetrics, and Prestige, Oh My: The Relationship Between Perceived Prestige and Objective Measures of Journal Quality. Innov High Educ 47, 947–966 (2022). https://doi.org/10.1007/s10755-022-09635-4

DOI: https://doi.org/10.1007/s10755-022-09635-4