Abstract

This article examines the impact of a faculty professional development effort on the understanding, confidence, and attitudes of education faculty related to program assessment activities. We applied literature on the adoption of innovations, institutional effectiveness, and professional development to a case study of a series of workshops on assessment. The results suggest that faculty members had improved skills and knowledge, understanding, confidence, and attitudes regarding assessment following the workshops. The opportunity for faculty members to collaborate with colleagues on assessment and to develop an understanding of the kinds of supports in place for assessment work were important elements in the gains.

Assessment of student learning at the program level consumes substantial time, energy and resources in higher education. However, it is unevenly embraced by faculty members. Wergin (2005), who argued that the faculty had not yet internalized the value of assessment, noted that regional accrediting commissions believe their member institutions have come to view assessment as “mechanistic” (p. 31). Indeed, much accreditation work on campuses is driven by accreditor expectations, giving it the feel of an external mandate that the faculty often resists.

This article reports on the initial phase of a study on the effectiveness of a professional development program designed to shape faculty attitudes, confidence, and understanding related to program assessment. The study addressed a single question: What effect did a series of assessment workshops have on faculty members’ attitudes, self-confidence, and understanding related to program assessment?

Conceptual Framework

The professional development workshops and this research are informed by literature on the diffusion of innovation, faculty development, and institutional effectiveness. While many factors influence the adoption of innovations like program assessment, the adoption of innovation is a social process through which we engage with others to explore, interpret, and accept change.

Program Assessment as Innovation

The term “program assessment” is used in various ways throughout higher education. Most broadly, it is used synonymously with the phrase “program review” to indicate a process through which institutions evaluate and take stock of the overall health and effectiveness of individual academic programs. Bresciani (2006), for example, described outcomes-based assessment program review as a process for examining whether programs have met goals in multiple areas (e.g., services, research, student learning, faculty development). More narrowly, program assessment is used as a variation on “assessment of student learning” to describe the collection and use of student learning data to inform improvement at the program level (as opposed to individual student intervention and remediation). In such cases, program assessment is seen as part of the overall program review process, serving as one measure of a program’s health.

Assessment activities are part of a larger genre of institutional effectiveness efforts, driven at least in part by calls for accountability from government, parents and students, among others. Regional accrediting bodies (e.g., The Higher Learning Commission) require assessment at a campus level while professional accrediting organizations (e.g., education, nursing) have similar and, at times, more detailed expectations at the program level. While the specifics of the process vary, internal program reviews are typically done by standing committees of the faculty or academic senate and may require formal approval of the final report by that body.

For the purposes of this article, we use the terms program assessment and assessment interchangeably to describe a process that calls on the faculty to articulate programmatic learning outcomes, collect data on student attainment of those outcomes, and review the aggregated data to inform program improvement efforts (Palomba and Banta 1999). Thus, we embrace the more restrictive definition articulated above, with the primary role of program assessment of student learning understood as that of faculty use in program improvement.

Program assessment often challenges how faculty members are accustomed to working. Traditionally, they develop, implement, and maintain what they see as their own courses. While faculty members regularly assess their individual students and courses, all too seldom do they come together to examine data about the program as a whole. Much of faculty work is independent and conducted in relative isolation from colleagues. Assessment at the program level calls on faculty members to employ new “technology” and thus is an innovation that they typically see as novel and different (Rogers 2003).

Knowledge of and Attitudes Toward Assessment and Innovation

Before they can decide whether or not to adopt an innovation, individuals must first know and understand the principles behind it (Hall and Hord 2001; Rogers 2003). Related to assessment, faculty members might need to know how to draft learning outcomes, to understand organizational expectations, and to recognize support for practice; and they must understand the program improvement process. Knowledge of the process is essential in the early stages of a proposed change when there is ambiguity about expectations. For example, individuals may be anxious about the changes, their roles in these changes, and the level of organizational support that really exists for the innovation (Hall and Hord 2001). Knowledge of an innovation, or lack thereof, can foster or inhibit acceptance.

Yet knowledge of the process alone is not sufficient; attitudes typically drive the final decision of whether or not to adopt changes in practice as potential users ask whether they are confident that making a change will be possible and worthwhile. Individuals evaluate innovations, in part, based on the innovation’s attributes—such as relative advantage over other practices, compatibility with current practices and culture, and complexity (Rogers 2003).

There is some evidence and ample perception that faculty members shy away from institutional effectiveness activities, including systematic program assessment (Ewell 2002; Welsh and Metcalf 2003; Wergin 2005). Despite such wariness, there is nothing inherent in the concept of assessment itself, or in the activities appropriately following from it, that makes it inevitable that faculty members will respond negatively to the idea; after all, they assess individual student learning both formally and informally hundreds of times in a given semester.

A significant factor in faculty members’ reluctance to engage in assessment is the way in which assessment and institutional effectiveness are linked to the idea of accountability (often through accreditation). This notion, rooted in the structural or bureaucratic paradigm of organizations (Birnbaum 2000; Bolman and Deal 2003; Walvoord 2004), means that the conversation usually focuses on proving that students are succeeding or that assessment is being done. The tone is one in which the faculty must justify its merit, worth, and effectiveness. In contrast, faculty culture is much more concerned with autonomy and collegiality, viewing accountability as more appropriately determined by one’s peers within a discipline or field of study than by accreditors. Faculty members often see assessment as imposed from above, driven by external pressures, and posing threats to their autonomy, curricular control, and academic freedom (Walvoord 2004; Wehlburg 2008; Welsh and Metcalf 2003). Moreover, they note that assessment activities at the program level represent new work in a day already filled with numerous time demands (Wehlburg 2008). The result is a culture gap in which faculty members perceive accountability-driven assessment as at odds with their culture, priorities, and practices.

On the other hand, there is evidence that faculty members can find value in institutional effectiveness activities such as assessment. These individuals are willing to engage in such activities if they feel the purpose for the activities is improvement rather than accountability, if there is real involvement of faculty members in the activities, and if a definition of quality is based on outcomes rather than inputs (Welsh and Metcalf 2003). Moving beyond the initial act of measurement to using data for program improvement can also generate buy-in (Palomba and Banta 1999; Walker et al. 2007; Wehlburg 2008; Wright 2002). In addition, perceived organizational support for the innovation can be a key factor in influencing individual attitudes. Faculty members must believe that leaders value the innovation and that the organization is behind it if they are to be willing implementers of the change (Hall and Hord 2001).

The goal of faculty engagement in program assessment activities is reflected in Wehlburg’s (2008) idea of transformative assessment, where assessment is practiced as a faculty-owned, faculty-driven endeavor rather than a top-down mandate. Transformative assessment respects the unique context and goals of individual programs, builds on existing work, captures data that are meaningful to the program faculty, and is sustainable (Walvoord 2004; Wehlburg 2008). Moreover, it is built upon a foundation of trust, shared goals and guidelines, and a common language (Wehlburg 2008). Achieving such a system requires time, resources, and leadership (Walvoord 2004; Wehlburg 2008).

If they are to adopt new practices, faculty members must have time to understand the innovation, to build the skills to carry it out, and to adopt the attitude that change is desirable. Shifting the conversation around assessment from accountability to program improvement and engaging them in driving the process may close the culture gap that fosters wary attitudes.

Innovation as a Social Process

At its core, the process for achieving this goal is a highly social one. Berquist (1992) observed that faculty members frequently adopt or reject innovations based on word of mouth from colleagues, which shapes their knowledge of and attitudes about an innovation. Sahin and Thompson (2006) found that collegial interaction was more powerful than one-on-one mentoring in facilitating technology adoption. It is through such exchanges that faculty members build knowledge of the innovation; explore its comparative advantages; and determine its suitability for their practices, values, and culture (Rogers 2003). Knowledge and attitudes are not shaped in a vacuum, but rather through interaction with one’s colleagues and the administrative support structures that surround them. Individuals come to understand an innovation not just through their interactions with the innovation itself, but also through interactions with their colleagues.

Given the highly social nature of innovation adoption and diffusion, faculty members must have the opportunity to work with their peers to collaborate, learn about, and make sense of assessment practices. These principles suggest the value of a sustained professional development effort to build faculty members’ knowledge, attitudes, and confidence around assessment.

Professional Development

Professional development is one way to ensure that the target group understands the proposed innovation and feels that they have the skills and knowledge necessary to implement it. In the case of assessment, if faculty members are to “own” this work and be motivated to change practice, they must understand their role and how the process will work, as well as be confident in their abilities. Professional development that is ongoing, systematic and integrated, with regular sessions focused upon a common theme, is more likely to influence practice than episodic or unsystematic experiences (Boyle et al. 2005; Murray 1999). This approach complements the need to hear the same message multiple times in multiple ways if individuals are to understand an innovation and move toward adoption (Hall and Hord 2001).

Professional development can also provide the social environment needed to support adoption. Such a structure gives faculty members the opportunity to exchange ideas, explore the feasibility of new practices, try out and observe the innovation, and determine with colleagues ways in which it fits with organizational culture and practice (Rogers 2003). The opportunity for individuals to work together to establish a network or community focused on exploring and achieving common goals can be a central element of changing practice (Stigmar 2008; Sullivan et al. 2006; Wolverton et al. 1998).

The framework discussed above suggests the potential to implement a system of program assessment that is driven and supported by the faculty. Doing so requires ongoing, systematic professional development as well as the recognition that the acceptance of change and innovation is a social process. These concepts and lessons informed our professional development efforts and the related study.

The Assessment Workshop Series

A four-part assessment workshop series took place monthly during spring 2008 in the College of Education at California State University, Long Beach. Several premises provided the foundation for the series. First, the faculty must lead assessment for such efforts to thrive rather than be driven by compliance. Second, assessment challenges faculty members to think and act in new ways as they collect and use data for program improvement. Third, for faculty members to embrace assessment they must have the chance to work together to build skills and knowledge, develop confidence in their skills, establish positive attitudes, and explore its relevance for their programs and practice.

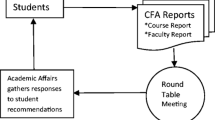

The workshop series, supported by the college dean and department chairs and planned by two of the authors (Haviland and Turley), was an effort to develop the transformative system of assessment (Wehlburg 2008) described above. Yet the series also began with a clear, tangible goal: the creation of an assessment plan by each program for use starting in fall 2008. Each workshop covered a single topic: writing student learning outcomes (SLOs), identifying appropriate evidence to assess each SLO, creating rubrics, and interpreting and using student performance data for program improvement. Workshops were sequential; each workshop built on the work done in the previous workshops (e.g., how to create learning outcomes leading to identifying evidence to assess those outcomes). They were structured to help faculty members collaborate to make immediate progress in applying what they were learning for the benefit of their programs. For each workshop, the same outside expert presented and led a discussion on the topic for one hour. In the remaining 2 hours, program faculty worked together to apply the skills and knowledge covered by the presenter.

A total of 53 faculty members were invited by the dean to participate in the series. Approximately 44 individuals participated in any single workshop (variation was due to prior obligations and to the fact that two or three invitees opted not to participate at all). Nearly all college programs sent multiple faculty members. Most participants were full-time tenured or tenure-track faculty members in the college, representing approximately half of the college’s tenured/tenure-track faculty. Participants were nominated by department chairs, invited by the dean, and received a modest stipend from the college.

The Study

This research is an instrumental case study (Stake 1995), which provides a framework for a manageable study over the long term and, given an understanding of context generated through the method, allows for hypotheses to be generated regarding similar institutions (Murray 2008). We report on the effectiveness of sustained professional development to support faculty adoption of an innovation; in this case, that innovation is program assessment. Thus, the study assumes the value and merit of assessment as an activity and focuses on the professional development outcomes. Quantitative and qualitative methods were implemented concurrently, with integration of the methods occurring throughout the study (Creswell 2003).

The research, which had been approved by the university’s institutional review board, was conducted in the College of Education at California State University, Long Beach. The University is an urban, Master’s-granting public institution in the Los Angeles metropolitan region and enrolls more than 35,000 students. The college, with approximately 75 full-time faculty, serves nearly 3,000 students each year and offers more than 20 academic programs. Five affiliated credential programs are housed in other colleges on campus.

We used three data sources for this study. We administered pre- and post-workshop surveys before and after the series. Participants also completed an evaluation of each workshop. Finally, two of the researchers conducted interviews with seven workshop participants within 4 weeks of the conclusion of the workshop series.

Pre- and Post-Workshop Series Surveys

We developed the pre- and post-workshop surveys, which were administered anonymously online, using the Stages of Concern Questionnaire created by George et al. (2006) as a model. We identified three main categories of concerns that might facilitate or impede faculty members’ assessment work (attitudes regarding the value of assessment, understanding of assessment and the way the system would work in the college, and confidence in the ability to carry out assessment work).

Thirty-six of the original invitees (68%) completed the pre-workshop survey; 24 (45%) completed the post-workshop survey. While the response rate to the initial survey was relatively strong, we believe the timing of the post-workshop survey (at the end of the academic year) contributed to its lower response rate. Nonetheless, the order of the contribution of each academic program to the total participants in each survey remained unchanged over time. This implies that the data attrition or missing data found in the second survey occurred in a random rather than systematic fashion.

Our effort to match participants using pre- and post-survey identifiers based on a respondent-created numeric identifier on the surveys resulted in identifying just 16 respondents who completed both surveys. This relatively low figure could well be because some respondents were unable to recall their identifier at the second administration and thus input an identifier that did not match any of the pre-workshop surveys. The matched cases alone were used in statistical analyses with the repeated measures employed in this study.

Descriptive and inferential statistics guided pre- and post-workshop survey data analysis. Cronbach’s alpha (1951) was obtained to assess the reliability of each set of attribute items (attitudes, understanding, confidence), using matched individual responses on the pre-workshop survey. For those sets of items where reliability was satisfactory (understanding [α = .76], confidence [α = .82]), exploratory factor analysis was conducted on the pre-workshop survey responses to measure the construct validity of the item sets. Both understanding and confidence retained one factor whose eigenvalue was larger than 1.0. Once the understanding and confidence items were identified as psychological scales, a paired t test was conducted using the means of the item scores from both surveys for each scale to examine the effect of the workshop on faculty attributes. Because the attitude items failed to demonstrate psychometric quality sufficient to be considered a scale, a paired t test was conducted on individual item scores.
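For readers less familiar with these statistics, the two core computations described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function names `cronbach_alpha` and `paired_t` and all of the sample ratings are hypothetical, and the study's own software is not identified in the text.

```python
from math import sqrt
from statistics import mean, stdev, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a set of items.

    rows: one list per respondent, each holding that respondent's
    scores on the k items of the candidate scale.
    """
    k = len(rows[0])
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def paired_t(pre, post):
    """Paired t statistic and degrees of freedom for matched scores.

    Differences are taken as post minus pre; reversing the order
    only flips the sign of t.
    """
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical ratings for four respondents on a three-item scale.
example_rows = [[3, 4, 3], [5, 5, 6], [2, 3, 2], [6, 5, 6]]
print("alpha:", round(cronbach_alpha(example_rows), 3))

# Hypothetical matched mean scale scores before and after the series.
example_pre = [3, 4, 5, 4]
example_post = [4, 6, 5, 6]
print("t, df:", paired_t(example_pre, example_post))
```

With 16 matched respondents, as in this study, the paired test has 15 degrees of freedom, which is consistent with the t(15) value reported for the attitude items.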

Workshop Evaluations

Workshop participants completed evaluations following each session. These evaluations asked participants about their learning in a workshop, their level of comfort with the knowledge and skills, and their satisfaction with the experience. Between 21 and 32 participants completed each workshop evaluation, with an average return of 25 evaluations per session. With approximately 44 faculty members participating in each session, the average return represents a 57% response rate on workshop evaluations. Survey results were analyzed using descriptive statistics.
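The response rates reported for the surveys and the workshop evaluations follow directly from the counts given in the text. The short sketch below reproduces that arithmetic; the helper name `response_rate` is an illustrative assumption, while the counts themselves are those stated above.

```python
def response_rate(returned, eligible):
    """Percentage of eligible individuals who returned an instrument."""
    return 100 * returned / eligible


INVITED = 53           # faculty members invited to the series
PER_SESSION = 44       # approximate attendance at any single workshop

pre_survey = response_rate(36, INVITED)       # pre-workshop survey
post_survey = response_rate(24, INVITED)      # post-workshop survey
evaluations = response_rate(25, PER_SESSION)  # average evaluation return

print(round(pre_survey), round(post_survey), round(evaluations))
# prints: 68 45 57
```

Note that the survey rates use the 53 invitees as the denominator, whereas the evaluation rate uses the approximate per-session attendance of 44.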

Interviews

Two researchers (Haviland and Turley) interviewed seven workshop participants in May 2008, immediately after the workshop series concluded. Interviews ranged from 30 to 60 minutes in length. Interviewees were chosen to represent a cross-section of departments/affiliated programs, experiences with assessment, and years of service in the college. In the interviews we explored participants’ reactions to the workshops, with particular attention to the impact on their attitudes, confidence, and understanding regarding assessment. Due to transcription problems, one interview could not be used in the analysis. Two researchers coded the transcripts using multiple “first cycle” coding methods (Saldaña 2009), first identifying general codes based on the conceptual framework of the study and anticipated needs (provisional codes). We coded transcripts independently before aligning our coding and identifying new categories based on an analysis of emerging themes. A software program was used to facilitate manipulation of the data.

Results

Analysis of quantitative and qualitative data provided evidence that the workshops influenced faculty members’ understanding of and, to some degree, attitudes regarding assessment. The workshops also influenced confidence, although not in the way conceptualized at the start of this research. Finally, the findings echo the importance of interaction with colleagues as a way to shape understanding, attitudes, and confidence related to assessment, highlighting the importance of social processes in the adoption of an innovation.

Impact on Understanding

A paired t test on the mean scale score for understanding was not statistically significant, and an omnibus test of the single group MANOVA with repeated measures on individual item scores of the scale failed to show a change in understanding. However, as shown in Fig. 1, faculty members’ self-reported need for understanding dropped on five items in the scale, indicating increased knowledge and understanding of the assessment system. Moreover, a univariate test revealed a statistically significant (F(1,14) = 7.508, p = .016) change on the sixth item score of the scale (It is not clear to me how the new assessment system is better than what we currently have), suggesting that participants had greater understanding of assessment after the workshop.

Fig. 1 Pre- and post-workshop average self-ratings on understanding items. Respondents answered items according to the following scale: 0 = Statement seems irrelevant; 1–2 = Statement is not at all true of me at this time; 3–5 = Statement is somewhat true of me now; 6–7 = Statement is very true of me at this time.

This finding is mirrored by session evaluation data asking participants how clear they were about the role of the session topic in the college’s assessment system and the college’s expectations for that topic in the overall assessment system. Anywhere from approximately 70% to 95% of respondents said they were either Clear or Extremely Clear about the topic and college expectations regarding it, with the results generally in the range of 80%.

Interviews further support the interpretation that the workshops built faculty members’ understanding of the assessment system. Participants repeatedly talked about the importance of building a common understanding of the system. One faculty member said, “…prior to these assessment workshops, everybody was sort of doing their own thing, but there really wasn’t any cohesiveness to the assessment piece.” The professor added, “I think this unified us as a college.”

This understanding shaped a sense that there was now a common system in place. One faculty member observed,

What I think the workshop looked at, and talked about, is OK, when are you going to collect data for that, and coming up with a system….Just getting, I think the most important thing overall, if I had to pick one thing, is getting everybody in line and we’re all going to be on the same page with working together and coming up with a plan that’s systematic. I think that’s one of the biggest things the workshop accomplished.

These comments echo the findings on the scale’s sixth item, suggesting faculty members left the series with a clearer sense of the assessment system, its purpose, and their role in it.

Impact on Confidence

The workshops were designed to give participants the skills and knowledge necessary to engage in ongoing program assessment, with the assumption that doing so would positively influence confidence in their ability to carry out assessment work.

Workshop participants were positive about their gains in skills and knowledge made during the workshop series. Pre- and post-workshop surveys asked participants to “rate [their] learning needs related to assessment practices.” Choices ranged from a 1 (I have little or no knowledge/understanding of how to do this) to a 4 (I have strong knowledge/understanding of how to do this). Figure 2 reflects the percentage of people who rated their knowledge or understanding of an item as a 3 or 4 on pre- and post-workshop series self-assessments. Participants reported learning in all key areas of assessment practice, with an average gain of .62 on a four-point scale. Of note were gains in ability to draft an assessment plan, organize a meeting to review data, and identify elements of an assessment plan.

Despite these gains, evidence of any positive impact on participants’ confidence in their ability to engage in assessment practices was limited when significance tests were conducted using the matched participant responses on the confidence scale items. Neither the paired t test (p = .451) on the mean scale scores nor the single group MANOVA (p = .562) with repeated measures on individual items revealed significant changes in confidence among the matched respondents. Of course, because small sample size is known to affect the power of a study (Stevens 1996), it is possible the small sample in this study made it difficult to detect changes in confidence.

Descriptive statistics provide a modest indication that faculty members became more confident in their ability to engage in assessment over time. The pre-workshop survey revealed a moderate negative correlation (r = -.635) between participants’ desire for understanding and their confidence in their ability to carry out assessment work. Initially, the greater the need faculty members felt to understand the assessment system, the less confident they were in their ability to do assessment. As they became clearer about expectations regarding assessment, confidence (the mean of which climbed from 4.6 to 4.8 on a scale of 7) may have risen enough to weaken the negative relationship; by the end of the workshop series, this negative correlation was no longer statistically significant. In many ways this fact makes sense: the less participants understood the expectations regarding assessment, the less confident they felt doing it. This finding suggests that changes in confidence might be more linked to changes in faculty members’ understanding of roles and expectations related to the assessment system than to gains in skills and knowledge.
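The link between the size of a correlation and its statistical significance can be made concrete with a brief sketch. It computes Pearson's r from paired scores and converts a given r into the t statistic (with n − 2 degrees of freedom) conventionally used to test it. The function names and sample data are hypothetical, and the n of 16 in the last line is an assumption made only for illustration, since the text does not state how many respondents the correlation was based on.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


# Hypothetical scores: higher need for understanding, lower confidence.
need_for_understanding = [6, 5, 4, 3, 2]
confidence = [3, 4, 4, 5, 6]
print("r:", round(pearson_r(need_for_understanding, confidence), 3))

# The reported pre-workshop r with an ASSUMED n of 16 (not given in the text).
print("t:", round(r_to_t(-0.635, 16), 3))
```

Because the t statistic shrinks toward zero as r weakens, a correlation that is significant at one administration can fall below the significance threshold at the next even when the sample size is unchanged.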

The interviews lend support to this interpretation and suggest that we had initially thought of confidence in the wrong way. Interviewees did not feel they had lacked confidence in their ability to do assessment. Instead, they linked their confidence to a sense of the value of participation grounded in their understanding of college expectations and the system. The workshops left them more confident that carrying out assessment work would be worthwhile because the individuals saw a common college assessment system. One faculty member commented, “Well, I think any time you go to a meeting, and if you are on board with everyone else, you are going to feel confident that way.” Another explained, “It made me see how… this may actually work…” A third participant, contrasting the workshops with past experiences, said, “…it was easier to be more receptive…the process is a lot clearer, and what we’re going to be doing is going to be built upon in the future.”

Faculty members did not need more confidence in their assessment skills and knowledge. Rather, they feared that their assessment efforts might be undone by lack of consistency or a change of direction by the college. The professional development effort helped them gain confidence that the assessment system would remain in place with some level of stability.

Impact on Attitudes

Figure 3 shows that only item A2 (Concerned about assessment requiring changes in my teaching practice) showed a statistically significant increase over time (t(15) = -2.457, p = .027). This result suggests faculty members actually became more concerned about whether or not assessment would require changes in their teaching. While not statistically significant, item A4 (Not interested in participating in assessment efforts) saw an increase in the mean, indicating faculty members were slightly less interested in participating in assessment efforts in the college. These items suggest less positive attitudes after the workshops.

Fig. 3 Pre- and post-workshop average self-ratings on attitude items. Respondents answered items according to the following scale: 0 = Statement seems irrelevant; 1–2 = Statement is not at all true of me at this time; 3–5 = Statement is somewhat true of me now; 6–7 = Statement is very true of me at this time.

However, while not statistically significant, other items suggest positive changes in attitudes. The decline in the rating for item A5 (Preoccupied with things other than assessment) indicates that the workshops drew faculty members’ attention toward assessment. The increase in the rating for item A1 (Concerned with issues or priorities other than assessment) suggests participants were less worried about assessment following the workshops. Similarly, items A3 (Would like to develop working relationships with our faculty and outside faculty to do assessment) and A6 (Want to coordinate my work on assessment with others to maximize its impact), both of which deal with the desire to work with others to make assessment work, saw rating gains.

The qualitative data collected during interviews offer additional insight into how attitudes might have been affected. Perhaps the starkest example of a change in attitude came from a participant who said,

I was really tired of hearing about assessment. We’ve been dealing with [it] since 2002, and I had had it…. But the assessment workshops sort of turned my attitude around. OK, alright, now I’m ready to talk about assessment again. I don’t want to put it on the back burner any more. Now I’m ready to address them [the learning outcomes].

Other faculty members, all of whom described changes in attitudes, suggested that much of this change was connected to an improved understanding of the assessment system and confidence in consistent implementation. Many of the comments described a sense of a more cohesive system, with greater clarity about faculty members’ role and the support they would receive.

Still, particularly given the variability in the quantitative data, attitudes might best be described as reflecting cautious optimism. While perhaps less worried about assessment efforts overall and willing to work with others in the process, faculty members indicated concern about the likelihood that assessment would affect their teaching practices. This concern may have been because, as they gained a greater understanding of how program assessment works, they recognized that it would almost certainly prompt changes in pedagogy and other practices.

The Value of Working with Colleagues

During the interviews, we asked participants what the most effective aspect of the workshop series was. By far the most common answer was the opportunity faculty members had to work with colleagues on assessment. One participant explained,

It was very effective having the faculty together so that we could talk about things…because when we do have faculty meetings, we are usually dealing with other kinds of housekeeping issues, but to be able to sit and just talk about assessment and how it was conducted in our program, I think that was just so helpful.

Carving out time to discuss assessment with colleagues in the midst of many other demands was essential for helping participants make progress, and they recognized its value.

Table I presents data from surveys completed after each workshop related to participants’ perceptions of the value of working with colleagues. Participants rated the value of this interaction on a scale of 1 (Not at All Valuable) to 4 (Extremely Valuable). Data in Table I reflect the percentage of respondents who rated an item as a 3 or a 4 for the workshop. All the ratings are reasonably high, although workshops two and four, which had the lowest ratings, included substantially less program-level collaboration than planned. Nonetheless, in nearly every instance, more than three-quarters of respondents found value in collaborating with colleagues.
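The percentage described above (the share of respondents rating an item 3 or 4 on the four-point scale) can be illustrated with a minimal sketch. The function name `percent_valuable` and the sample ratings are hypothetical and are not drawn from the study's data; the sketch shows only the arithmetic, not the authors' actual procedure.

```python
from typing import Sequence


def percent_valuable(ratings: Sequence[int], threshold: int = 3) -> float:
    """Return the percentage of ratings at or above `threshold` on the 1-4 scale."""
    if not ratings:
        raise ValueError("no ratings supplied")
    if any(r < 1 or r > 4 for r in ratings):
        raise ValueError("ratings must be between 1 and 4")
    # Count responses of 3 or 4 (with the default threshold).
    favorable = sum(1 for r in ratings if r >= threshold)
    return 100.0 * favorable / len(ratings)


# Hypothetical responses for one workshop (illustrative only, not study data).
example_ratings = [4, 3, 2, 4, 3, 1, 4, 3]
print(f"{percent_valuable(example_ratings):.1f}%")  # prints "75.0%"
```

In this illustrative case, six of eight respondents gave a rating of 3 or 4, which would sit right at the three-quarters mark mentioned in the text.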

These findings illuminate the central role that colleagues played in helping to build a common understanding of the assessment innovation.

Discussion

The workshops served their most immediate and concrete purposes. The faculty members in each of the more than 20 programs with participants in the workshop series crafted sound assessment plans. In the aftermath of the series, all programs have gone on to develop and use rubrics to assess student learning, and many have implemented their assessment plans with growing sophistication. As a result, the College recently had a successful review of its assessment work by its national accrediting body. Moreover, the above discussion speaks to the kind of impact the workshops had on faculty members’ understanding of, attitudes toward, and confidence regarding assessment. Administrators and some faculty members have a sense of cautious optimism that assessment work is increasingly done with a sense of faculty investment and ownership, and not merely out of compliance. Perhaps even more striking is what these outcomes suggest for the potential of professional development efforts to support adoption of innovation and changes in culture.

The findings from this study support the idea that change and innovation are highly social processes and that professional development can be structured to leverage this interaction in ways that foster the adoption of innovation. Researchers (Berquist 1992; Rogers 2003; Sahin and Thompson 2006; Stigmar 2008; Sullivan et al. 2006; Wolverton et al. 1998) have spoken to the importance of providing those being asked to adopt new practices with the interactions, network, and community necessary to support adoption and success. Faculty members who participated in the workshop series commented repeatedly on the importance of having time to work with colleagues. This sentiment arose not just because they were able to accomplish necessary tasks, but also because they had the chance to explore the innovation with each other and consider how they might use it within the context and culture of their own programs. They were not simply aligning with college expectations; they were deciding amongst themselves how they could make this innovation work within their programs and daily practice. As they did so, they built an understanding of assessment and confidence in their ability to work with it, both individually and as a program faculty. This social process mediated gains in understanding of and confidence in the value of doing assessment.

Working with colleagues may have had another benefit: the sheer number of faculty members participating in the process underscored the message that assessment was driven and owned by the faculty, not administrators or accreditors. One interviewee noted that the workshops and the overall assessment effort seemed less “top down” than in the past. The real and perceived participation of the faculty in leading assessment and the corresponding changes in understanding, confidence, and attitudes echo findings (Welsh and Metcalf 2003) that faculty involvement is a key step in nurturing support for institutional effectiveness activities.

This study also confirms findings by others (Boyle et al. 2005; Murray 1999; Sahin and Thompson 2006) that professional development is more meaningful when it takes place over time, is focused on a topic with clear and attainable goals for learning and growth, and integrates collaboration with colleagues for support. Growth in assessment skills and knowledge, improvements in understanding, and gains in confidence suggest that faculty participants benefited from receiving manageable amounts of information about assessment over an extended period. Respondents commented on greater clarity and alignment regarding what the college would be doing with assessment, what the role of faculty members would be, and how the system would work. The importance of this point for implementation cannot be overstated. It reflects the value of a sustained professional development effort as a means for conveying consistent messages on multiple occasions (Hall and Hord 2001).

This study also suggests that more may be needed than just professional development that facilitates collegial interaction. The findings indicate the importance of administrative support for and facilitation of assessment work in generating faculty buy-in. Real and perceived administrative support is important in faculty members’ decisions regarding the adoption of an innovation in general (Hall and Hord 2001; Sahin and Thompson 2006) and of assessment in particular (Palomba and Banta 1999; Peterson and Vaughn 2002; Wehlburg 2008). While the workshops supported the social nature of innovation, they were also a symbol of administrative support for assessment and a venue through which faculty members became aware of a system of support. If the visibility of faculty members’ participation made it clear who was driving the effort, the workshops, together with other steps (e.g., creation of an assessment office), spoke to a broader structure to support faculty work. The presence of this structure helped make the innovation tangible, showed organizational buy-in, and made the ongoing practice of assessment seem attainable.

It is important to remember that these findings apply to one college of education at a university in one region of the country and are not generalizable in the traditional quantitative sense. The discussion section does link findings from the study to the literature on innovations and professional development, and we hope this study will therefore add to empirical support of practices reflected in that literature. However, research on faculty from other fields and at other institutions will be critical in adding depth to and challenging or refining these findings.

Conclusion

Despite potential benefits, program assessment efforts on many campuses are often implemented with a compliance mentality accompanied by limited faculty support. This research suggests that ongoing, focused faculty professional development on assessment, together with visible administrative support, can play a positive role in nurturing faculty understanding, confidence, and attitudes regarding assessment. Among the key aspects of this approach are the opportunity for faculty members to engage in meaningful assessment-related work with their colleagues, the chance to hear information in manageable doses, and the ability to see how individual and program-level work is supported at the organizational level.

References

Berquist, W. H. (1992). The four cultures of the academy: Insights and strategies for improving leadership in collegiate organizations. San Francisco, CA: Jossey-Bass.

Birnbaum, R. (2000). Management fads in higher education: Where they come from, what they do, why they fail. San Francisco, CA: Jossey-Bass.

Bolman, L. G., & Deal, T. E. (2003). Reframing organizations: Artistry, choice and leadership (3rd ed.). San Francisco, CA: Jossey-Bass.

Boyle, B., Lamprianou, I., & Boyle, T. (2005). A longitudinal study of teacher change: What makes professional development effective? Report of the second year of the study. School Effectiveness and School Improvement, 16(1), 1–27.

Bresciani, M. J. (2006). Outcomes-based academic and co-curricular review: A compilation of institutional good practices. Sterling, VA: Stylus.

Creswell, J. W. (2003). Research design: Qualitative, quantitative, and mixed methods approaches (2nd ed.). Thousand Oaks, CA: Sage Publications.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334.

Ewell, P. T. (2002, April). Perpetual movement: Assessment after twenty years. Speech given to the American Association of Higher Education Annual Meeting. Boston, MA. Retrieved on August 13, 2009, from www.teaglefoundation.org/learning/pdf/2002_ewell.pdf

George, A. A., Hall, G. E., & Stiegelbauer, S. M. (2006). Measuring implementation in schools: The stages of concern questionnaire. Austin, TX: Southwest Educational Development Laboratory/SEDL.

Hall, G. E., & Hord, S. M. (2001). Implementing change: Patterns, principles, and potholes. Needham Heights, MA: Allyn and Bacon.

Murray, J. P. (1999). Faculty development in a national sample of community colleges. Community College Review, 27(3), 47–64.

Murray, J. P. (2008). New faculty members’ perceptions of the academic work life. Journal of Human Behavior in the Social Environment, 17(1/2), 107–128.

Palomba, C. A., & Banta, T. W. (1999). Assessment essentials: Planning, implementing, and improving assessment in higher education. San Francisco, CA: Jossey-Bass.

Peterson, M. W., & Vaughn, D. S. (2002). Promoting academic improvement: Organizational and administrative dynamics that support student assessment. In T. W. Banta (Ed.), Building a scholarship of assessment (pp. 26–46). San Francisco, CA: Jossey-Bass.

Rogers, E. M. (2003). The diffusion of innovations (4th ed.). New York, NY: Free Press.

Sahin, I., & Thompson, A. (2006). Using Rogers’ theory to interpret instructional computer use by COE faculty. Journal of Research on Technology in Education, 39(1), 81–104.

Saldaña, J. (2009). The coding manual for qualitative researchers. Thousand Oaks, CA: Sage.

Stake, R. E. (1995). The art of case study research. Thousand Oaks, CA: Sage.

Stevens, J. (1996). Applied multivariate statistics for the social sciences (3rd ed.). Mahwah, NJ: Erlbaum.

Stigmar, M. (2008). Faculty development through an educational action programme. Higher Education Research and Development, 27(2), 107–120.

Sullivan, A. M., Lakoma, M. D., Billings, J. A., Peters, A. S., & Block, S. D. (2006). Creating enduring change: Demonstrating the long-term impact of a faculty development program in palliative care. Journal of General Internal Medicine, 21(9), 907–914.

Walker, G. E., Golde, C. M., Jones, L., Bueschel, A. C., & Hutchings, P. (2007, December 14). The importance of intellectual community. The Chronicle of Higher Education, B6–B8.

Walvoord, B. A. (2004). Assessment clear and simple: A practice guide for institutions, departments, and general education. San Francisco, CA: Jossey-Bass.

Wehlburg, C. M. (2008). Promoting integrative and transformative assessment: A deeper focus on student learning. San Francisco, CA: Jossey-Bass.

Welsh, J. F., & Metcalf, J. (2003). Faculty and administrative support for institutional effectiveness activities. The Journal of Higher Education, 74(4), 445–468.

Wergin, J. F. (2005). Taking responsibility for student learning: The role of accreditation. Change: The Magazine of Higher Learning, 37(1), 30–33.

Wolverton, M., Gmelch, W. H., & Sorenson, D. (1998). The department chair as double agent: The call for department change and renewal. Innovative Higher Education, 22(3), 203–215.

Wright, B. D. (2002). Accreditation and the scholarship of assessment. In T. W. Banta (Ed.), Building a scholarship of assessment (pp. 240–258). San Francisco, CA: Jossey-Bass.

Acknowledgements

This article is based on ongoing research into the implementation of a unit-wide assessment system. We thank the faculty members who supported this research through participating in interviews and completing surveys, as well as the College of Education for funding data collection efforts.

Cite this article

Haviland, D., Shin, SH. & Turley, S. Now I’m Ready: The Impact of a Professional Development Initiative on Faculty Concerns with Program Assessment. Innov High Educ 35, 261–275 (2010). https://doi.org/10.1007/s10755-010-9140-1

DOI: https://doi.org/10.1007/s10755-010-9140-1