Abstract

With the advent of mass higher education and the consequent absorption of significant national resources, both public and private, it is inevitable that universities are increasingly expected to meet a range of societal needs. They are expected to ‘connect’ with society at large. In this paper, we argue that connectivity is best integrated with research, teaching and scholarship and should not be relegated to a ‘third stream’. We compare degrees of connectivity of 50 national systems of higher education using ten indicators, making a distinction between domestic and international connectivity. The strongest finding is that smaller countries exhibit the highest level of international connectivity. The higher education systems in countries with large absolute numbers of researchers such as the USA, China and Japan are relatively self-contained compared with countries such as Ireland, Switzerland and Singapore. Another finding is the relative insularity of the education sector in Eastern Europe, including the Russian Federation. When differences in levels of economic development are allowed for, among lower-income countries South Africa stands out as having a well-connected higher education sector.

Introduction

Universities are institutions that undertake a wide range of activities, but the importance attached to each activity by various societal stakeholders may differ from that attached by the sector itself. In broad terms universities: teach students; undertake research and train researchers; and engage with the rest of society, both nationally and internationally. In the past decade, the popularity of international rankings that are based primarily on research performance has led to a much-increased emphasis on that activity. University administrators, especially those in Europe, Asia and Oceania, deem these rankings to be important (Hazelkorn 2007, 2008; Marginson and van der Wende 2007) with consequent effects on mission statements that emphasise research. Hundreds of universities throughout the world wish to be in the top 50 or 100 of the major international rankings. This goal can be achieved only through research performance. Furthermore, many governments in Europe and Asia have tried to increase their national presence in the rankings through measures such as targeting funds on selected universities, encouraging amalgamations of existing institutions and performance-based funding (van der Wende 2014).

Although research performance dominates the international rankings, research is narrowly defined, predominantly by articles published and citations in quality journals. It does not include, for example, the more informal research links with industry (Dill and van Vught 2014). In the Shanghai Academic Ranking of World Class Universities (ARWU) publications and citations have a weight of two-thirds; the other third is an impact measure represented by Nobel prizes and Fields medals. Research links with the rest of society are not included, except insofar as Nobel prizes might be thought to represent contributions that are highly valued by the community. The Times Higher Education ranking incorporates a wider list of attributes, although citations to journal articles are the single most important measure with a weight of 30%. Research (a survey measure plus research income and papers per head) is also weighted at 30%.Footnote 1

A related concern is that international rankings may bias research activity away from regional/national issues and engagement and towards areas that are of international interest. Worldwide citation counts are higher if the research is of interest to an international audience. In addition, access to the World Wide Web may have exacerbated this trend by increasing the ability of researchers to cite international work.

It is in part to modify the biases created by the importance of international rankings that attention has been turned towards the full spectrum of activities undertaken by universities and the importance of domestic and international linkages. For example, the triple helix model explores the institutional relationships between universities, industry and government (Etzkowitz and Leydesdorff 1995, 2000 and, for a survey of the extensive literature, Meyer et al. 2014). Linkages with industry and government are important for economic growth, especially in developing countries. A World Bank (2012, p. 6) report on higher education in East Asia concluded that ‘…higher education is failing to deliver skills for growth and research for innovation because of widespread disconnects between higher education institutions and other skill and research users and providers’. The OECD (2007) has also examined the role of universities as drivers of economic development.

More generally, there is a growing demand for greater transparency and a wider range of measures to cover the needs of all stakeholders (Van Vught and Westerheijden 2010, p. 20). This reflects the international movement towards sustainability reporting (see the discussion of the Global Reporting Initiative in Dumay et al. 2010).

The aim of this paper is to measure the degree of connectivity that higher education systems have with the external world, both domestically and internationally. It is an attempt to shed some light on the challenge posed by Jongbloed et al. (2008, p. 322) to show empirically the links between higher education and societal and stakeholder demands. We focus on systems (countries) rather than institutions in order to allow for diversity of institutions. This paper commences with a discussion of the related idea of the ‘third mission’, a concept which has occasioned considerable interest, especially in Europe. This then leads to a discussion of connectivity which we present as a much broader concept than the third mission. We emphasise that connectivity is an attribute of all three university functions: research, teaching and scholarship. In practice, data are available for only a subset of connectivity measures. We use ten measures of connectivity that are widely available and construct measures of national and international connectivity for each of the 50 countries included in our sample. The analysis is then extended to an examination of how the extent of connectivity varies with levels of economic development.

What is the third mission?

With the advent of mass higher education and the consequent absorption of significant national resources, both public and private, it is inevitable that universities are increasingly expected to meet a range of societal needs. In recent times, a formal literature that discusses the importance and nature of a third stream of activities in addition to research and teaching has grown up, principally in Europe. However, what is encompassed by ‘the third stream’ differs between authors.

The concept of a third mission as set out by Montesinos et al. (2008) contains three modules: international activity, lifelong learning, and science and technological parks. Within each module, a number of indicators are proposed. A more recent project, ‘European Indicators and Ranking Methodology for University Third Mission’ (labelled E3M), was sponsored by the European Commission. The group of researchers behind this project adopted a wider definition of third mission activities grouped into three modules: related to research (technology transfer and innovation), education (lifelong learning and training) and social engagement (voluntary work, consultancy by students and staff, public access to lectures, and concerts and facilities). Details are provided in Marhl and Pausits (2011) and European Commission (2012b). In an attempt to widen the number of indicators used to judge the attributes and performance of universities, the European Union provided seed money for a new set of indicators known as U-Multirank (www.u-multirank.eu). In addition to teaching and research, the indicators also cover knowledge transfer, international orientation and regional engagement. It is intended primarily as a consumer guide for prospective students. To the best of our knowledge, U-Multirank is the first large-scale attempt to provide these wider measures.

In 2002, a report commissioned by the Russell Group of research universities in the UK was published under the title ‘Measuring Third Stream Activities’ (Molas-Gallart et al. 2002). In this report, the analytical framework distinguishes between capabilities and activities. The two main capabilities are knowledge capabilities and physical facilities. The three main activities are labelled as teaching, research and communication, but in the authors’ views, third stream activities arise from all three types of activities where non-academic communities are involved. The report goes on to list 34 indicators grouped under twelve headings. In a paper that examines the mission of universities from a US perspective, Douglass (2014, p. 2) has profiled what he calls the ‘flagship university model’. ‘It is a model that does not ignore international standards of excellence focused largely on research productivity, but is grounded in national and regional service, and with a specific set of characteristics and responsibilities…’. Douglass suggests that one or two such universities should exist in each geographic region of a country or, for developing countries, in each nation. In addition to world class performance in research and teaching, Douglass sees the flagship university as also engaging in third mission type activities, such as regional economic engagement, technology transfer, links with secondary schools and other tertiary institutions, and providing leadership in governance and management.

Virtually all of the measures of the third mission discussed in the papers mentioned above are activities. These are hard enough to measure, but impact is even harder. In practice, impact measures would require reporting back by the external parties as to whether their needs were met. However, there do exist some measures for teaching that capture impact. For example, registrations in Massive Open Online Courses (MOOCs) are a measure of the value of teaching to individuals outside the providing institutions. A related measure is the extent of external use of material on an institution’s website, although this applies more to the impact of research than of teaching. The amount of licensing revenues collected by technology transfer offices is another example of an impact measure.

A broader concept: connectivity

A wider issue is whether the expression ‘third mission’ lowers the status of the attributes of a university that are encompassed by the term. Is a bronze medal good enough? The approach of the Molas-Gallart et al. (2002) report mirrors our belief that links with external groups flow through many channels and the concept of a third mission in addition to research and teaching narrows the concept of engagement too much. Our use of the term ‘connectivity’ also serves to emphasise that the flow of information and activity between the university sector and the rest of society is bi-directional (Casper 2013), something the third mission literature often downplays.

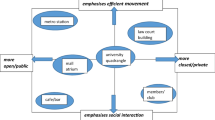

We argue that the concept of a separate ‘third stream’ only makes sense if we measure research narrowly as in the international rankings, namely publications and citations. Many of the activities usually listed under the heading of the third mission can be embedded in ‘research’ and ‘teaching and learning’ (noted also by Jongbloed et al. 2008, p. 313), although to complete the taxonomy, we need to add ‘scholarship’. By scholarship, we mean all those activities that utilise the specialised knowledge of academics that cannot be categorised as research or teaching, such as editorial functions on scientific journals and media engagement. Research activity includes not only publications and citations, but joint activity with industry, international collaboration, patents obtained and so on. Similarly, teaching and learning and scholarship encompass not only teaching to undergraduate and postgraduate students but activities such as continuing education, public lectures and media activities.Footnote 2 The activities included under the three headings of research, teaching and learning, and scholarship can be divided into three groups: core (internal) activities, domestic connectivity and international connectivity. The schematic representation is given in Box 1.

Another limitation of the expression ‘third mission’ is that it tends to convey the idea of the university reaching out to society, but universities also need to import ideas and if necessary adapt them to national needs. This is particularly true for low-income countries, where the process of catching up to high-income countries involves importing and modifying existing technology. This technology transfer tends to yield higher returns for low-income countries than does research. But low-income countries need a core of trained people and organisational structures if the process of technology transfer is to be successful (see Schaaper 2014; Archibugi and Pietrobelli 2003; Sorensen 1999).

Institutional versus national connectivity

A nation’s tertiary education sector needs to engage in all the activities listed in Box 1, but it is not essential that each institution engages in all activities (e.g. Douglass 2014). Lifelong learning programs might be best carried out by universities that are less research intensive, some institutions may concentrate on meeting regional needs, and so on. What matters within the spectrum of higher education institutions is whether all activities are well covered at the national level. This view echoes that of the distinguished President of the Carnegie Foundation from 1979 to 1995, Boyer (1992, p. 91), who argued that ‘every college and university should also seek to find its own special niche within the spectrum’. More recently, Sánchez-Barrioluengo (2014) and West (2009) point out the deficiencies of the ‘one-size-fits-all’ model.

The mission of the higher education system as a whole must include criteria that are above the responsibilities of individual institutions. These include national policy on the types of institutions permitted, movement of students between different types of institutions, articulation from secondary to tertiary levels of education, governance structures and monitoring of quality. National evaluations encompass both institutional diversity and governmental policy settings.

Quantitative measures of connectivity in national systems of higher education

The ability to estimate all the connectivity variables listed in Box 1 is limited by the availability of internationally comparable data. One set of data that are available relate to links with industry and business. These links are important for knowledge transfer, which can occur in both directions (Abramo et al. 2009, 2011). We use three such measures: the IMD survey of the views of business executives on the extent of knowledge transfer (IMD); the World Economic Forum survey of research collaboration (WEF); and the CWTS-Leiden data on joint publications between university and industry researchers (UIC), which is further split between domestic and international industry partners. The two surveys have the advantage of encompassing all channels of communication between universities and the private sector.

International research links are also measured more broadly by the number of publications co-authored with international researchers (Pubs). International research links facilitate the import of new ideas and practices into a nation. The practical impact of university research is measured by patent applications by universities to the World Intellectual Property Organization (Patents).Footnote 3 Student movement, both inflows (In Std) and outflows (Out Std), is included as a measure of international linkages that are both immediate and long lasting. Relationships developed during study persist into working life. The two final measures of connectivity relate to online connectivity: one measure is the dissemination of the output of universities as measured by full-text files on the web (Web Files); the second measure is the extent to which these files are accessed, which is a measure of impact (Web Impact).

Relating back to Box 1, the big gap in the available measures is connectivity with the general population through activities such as public lectures and media work, although the web measures pick up an element of this.

Precise definitions of variables and their sources are given in Box 2. Six of the measures are taken from the Connectivity module of the 2014 U21 ranking of national systems of higher education (see www.universitas21.com and Williams et al. 2013). The additional measures used here are WEF, Patents, Out Std and the split of UIC between domestic and international.

Some 50 countries from all continents are included. The selection of countries was made on the basis of national research performance, as measured by the US National Science Foundation, supplemented by ensuring that all G20 countries were included. It was necessary to exclude three countries (Egypt, Pakistan and Tunisia) that met the criteria because of the poor quality of their national data. Descriptive statistics are given in Table 1.

In Table 2, we show how each country ranks on the various measures. Consistent with the taxonomy set out in Box 1, we group the variables into those relating to domestic connectivity and to international connectivity. Domestic connectivity comprises the two surveys of business (IMD and WEF) and joint articles with researchers in domestic firms. International connectivity comprises five measures: joint publications with international researchers (both in general and with international firms), student movement, both inflows and outflows, and patents. Patents are treated as a measure of international connectivity because applications made to WIPO have a global scope of protection (de Rassenfosse et al. 2014, p. 722). The web-based measures reflect both domestic and international connectivity, and separation is not possible.

In the two surveys that rate links between universities and business (IMD and WEF), eight countries make the top ten in each survey. In alphabetical order, these are Finland, Germany, Israel, the Netherlands, Sweden, Switzerland, the UK and the USA. The lowest ranked countries in the two surveys on university–business links are in Eastern Europe, including the Russian Federation. Joint publications with industry (domestic) are highest in Japan, followed in rank order by Korea, the USA, Denmark, Sweden, Finland, Norway and the Netherlands. Any difference between the survey results and the ranking on joint papers reflects the type of links between universities and industry. Ireland, Israel and Malaysia, for example, rank much higher on the surveys than on joint publications, which could suggest that the links have a strong operational component. Conversely, Greece and Slovenia rate more highly on joint publications than on the surveys.

The country with the highest proportion of articles written with industry (international) is Austria, followed by Sweden, the Netherlands, Denmark and Belgium. Looking at all joint articles with industry (domestic plus international), the four Nordic countries occupy four of the top eight positions, with the other four countries being the Netherlands, Austria, Japan and Korea. For collaboration with international authors of all affiliations, the country with the highest proportion of articles jointly authored is Switzerland, followed by Hong Kong SAR, Belgium, Denmark and Austria.

Patent applications per head of population are highest for universities in Israel, followed in rank order by Singapore, Ireland, Korea, Switzerland, Denmark, the USA and the UK. The percentage of students who are international (inbound) is highest in Singapore, Australia and the UK. The relative numbers of outbound students are highest in Hong Kong SAR, Slovakia and Ireland.

The top five countries for the average number of open access full text files per institution are Hong Kong SAR, Saudi Arabia, Taiwan–China, Indonesia and Croatia. For the impact measure, websites of US institutions are on average accessed the most, followed by those of Australia, Hong Kong SAR, Canada and the UK.

The results for the eight individual indicators are summarised in radar charts for a selected number of countries in Fig. 1. Each indicator is converted to scores out of 100 where the observed highest score is set at 100. Switzerland scores higher than average on all indicators; the USA is relatively much stronger on domestic connectivity and web impact than it is on international connectivity; China rates well below average on international indicators; Japan is above average on domestic indicators, but a little below average on international indicators except for joint publications. The pattern of results for the UK and Australia is very similar: generally, outperforming except for outbound students.
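The rescaling described above can be sketched in a few lines of Python. This is an illustration only: the function name `scale_to_top_100` and the sample values are hypothetical and are not taken from the underlying data.

```python
def scale_to_top_100(raw_values):
    """Rescale an indicator so that the highest observed value maps to 100."""
    top = max(raw_values.values())
    if top <= 0:
        raise ValueError("the highest observed value must be positive")
    return {country: 100.0 * value / top for country, value in raw_values.items()}


# Hypothetical raw indicator values for three unnamed countries.
example_raw = {"A": 40.0, "B": 25.0, "C": 10.0}
example_scores = scale_to_top_100(example_raw)
```

Under this scaling, a score is read as a percentage of the best-performing country on that indicator, so scores are comparable across indicators measured in different units.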

We now combine the individual measures of connectivity to obtain aggregate measures.Footnote 4 An overall measure of connectivity is constructed by averaging the scores out of 100 for the nine indicators listed in Box 2 with half weights on each of the two web-based measures.Footnote 5 Scores are calculated for domestic connectivity and international connectivity with equal weights on the components. Table 3 displays the resultant country scores [columns (1), (4) and (7)] and ranks [columns (2), (5) and (8)]. The top ten ranked countries for overall connectivity are, in rank order, Switzerland, Hong Kong SAR, Singapore, Ireland, Sweden, Israel, Denmark, the UK, Austria and the Netherlands. But the aggregate ranking hides important differences between domestic and international ranking. The USA is ranked first for domestic connectivity but 31st for international connectivity. Similarly, Japan is ranked third for domestic connectivity but 38th for international. The corresponding ranks for Korea are sixth and 23rd. Germany and China are also ranked more highly for domestic connectivity than international. The pattern is clear: countries with a large higher education system have a strong web of internal connections, which reduces the perceived need for external links. The top three nations for international connectivity are Ireland, Switzerland and Singapore; all countries ranked in the top eight have populations below ten million. The three countries where the international rank is highest compared with the domestic rank are Bulgaria, Slovakia and Greece.
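The aggregation step can be illustrated with a short sketch. It assumes that the overall score is a weighted average in which the two web-based measures each carry half the weight of the other indicators, and that the domestic and international scores are simple averages of their components. The indicator keys, the function names and the sample scores are hypothetical; Box 2 is not reproduced here, so the exact list of indicators entering the overall score is an assumption.

```python
# Groupings follow the text; the key names themselves are illustrative.
DOMESTIC = ["IMD", "WEF", "UIC_domestic"]
INTERNATIONAL = ["UIC_international", "Pubs", "Patents", "In_Std", "Out_Std"]
WEB = ["Web_Files", "Web_Impact"]


def weighted_average(scores, weights):
    """Weighted mean of the scores named in `weights`."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def connectivity_scores(scores):
    """Return domestic, international and overall scores for one country."""
    domestic = weighted_average(scores, {name: 1.0 for name in DOMESTIC})
    international = weighted_average(scores, {name: 1.0 for name in INTERNATIONAL})
    # Assumption: full weight on non-web indicators, half weight on each web measure.
    overall_weights = {name: 1.0 for name in DOMESTIC + INTERNATIONAL}
    overall_weights.update({name: 0.5 for name in WEB})
    overall = weighted_average(scores, overall_weights)
    return {"domestic": domestic, "international": international, "overall": overall}


# Hypothetical scores out of 100 for a single country.
hypothetical = {
    "IMD": 80.0, "WEF": 70.0, "UIC_domestic": 60.0,
    "UIC_international": 50.0, "Pubs": 40.0, "Patents": 30.0,
    "In_Std": 20.0, "Out_Std": 10.0,
    "Web_Files": 90.0, "Web_Impact": 100.0,
}
result = connectivity_scores(hypothetical)
```

Halving the web weights means that the two web measures together count as one indicator, which prevents online dissemination from dominating the overall score.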

Connectivity and economic development

We argued above that connectivity is particularly important for economic development. We now examine how the various types of connectivity vary with levels of development and what countries have high levels of connectivity relative to countries at similar stages of development. As a measure of economic development, we use GDP per capita in 2011 measured in thousands of US dollars at purchasing power parity.

Each measure of connectivity is regressed on GDP per capita and, if appropriate, its square. For all measures except web files, the level of connectivity shows a statistically significant increase with GDP per head. A linear model was sufficient to capture the variation for all measures except joint publications with industry (UIC) and patents, where a quadratic model fitted best.Footnote 6 The regression results are summarised in Table 4. The strongest relationship between the connectivity measures and economic development occurs for the two surveys of business (IMD and WEF): links between business and universities are strongest in advanced economies. The weakest relationship is between students studying abroad and economic development; cultural and linguistic factors are presumably important in explaining differences here.
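A regression of this kind can be sketched as follows, assuming ordinary least squares (the estimation method is not stated in the text). The functions `fit_polynomial` and `predict` are hypothetical helpers written for this illustration, and the data are invented; degree 1 gives the linear model and degree 2 adds the squared GDP term.

```python
def fit_polynomial(x, y, degree):
    """Least squares fit of y on 1, x, ..., x**degree via the normal equations."""
    n = degree + 1
    # Build (X'X) and (X'y), where X has columns x**0 .. x**degree.
    a = [[sum(xi ** (r + c) for xi in x) for c in range(n)] for r in range(n)]
    b = [sum((xi ** r) * yi for xi, yi in zip(x, y)) for r in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            raise ValueError("design matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(a[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = (b[r] - tail) / a[r][r]
    return coeffs  # [intercept, slope] or [intercept, slope, curvature]


def predict(coeffs, x_value):
    """Fitted value at x_value for the polynomial with the given coefficients."""
    return sum(c * x_value ** k for k, c in enumerate(coeffs))


# Invented data: GDP per capita (thousands of US dollars at PPP) and a score.
gdp = [5.0, 10.0, 20.0, 30.0, 40.0, 50.0]
linear_score = [10.0 + 1.5 * g for g in gdp]
curved_score = [2.0 + 1.5 * g - 0.01 * g * g for g in gdp]

linear_fit = fit_polynomial(gdp, linear_score, 1)
quadratic_fit = fit_polynomial(gdp, curved_score, 2)
```

In applied work a statistical package would normally be used instead, since it also reports the standard errors needed to judge statistical significance.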

The regression results provide a broad overview but of greater interest is to highlight those countries where the higher education system exhibits greater connectivity compared with other countries at similar stages of development. We do this by calculating deviations from the fitted regression lines for each connectivity measure. (For web files, we deviate from the mean value.) To compensate for the fact that deviations below the line cannot exceed 100 % whereas those above the line are unbounded, we calculate the difference between the actual and predicted values divided by the average of actual and predicted values. We then calculate average percentage deviations for total connectivity and for the domestic and international components with weights as before. In the last step, countries are ranked in order using the spectrum of positive and negative deviations. The results are presented in Table 3: total connectivity in column (3); domestic connectivity in column (6); and international connectivity in column (9).
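The deviation measure described above, the difference between actual and predicted values divided by their average, can be written as a small function. The name `symmetric_deviation` and the sample numbers are hypothetical; the result is expressed in per cent.

```python
def symmetric_deviation(actual, predicted):
    """Percentage deviation of actual from predicted, relative to their average."""
    midpoint = (actual + predicted) / 2.0
    if midpoint == 0:
        raise ValueError("actual and predicted must not average to zero")
    return 100.0 * (actual - predicted) / midpoint


# Hypothetical scores: the same gap above and below the fitted line.
above = symmetric_deviation(60.0, 40.0)
below = symmetric_deviation(40.0, 60.0)
floor = symmetric_deviation(0.0, 50.0)
```

For non-negative values this measure lies between -200 and +200 per cent, and a given gap produces the same magnitude whether the country sits above or below the fitted line, which is the symmetry the adjustment is designed to achieve.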

The effect of adjusting connectivity measures for levels of economic development can be measured by comparing the adjusted country ranks with the original rankings. For the total measure of connectivity, the largest improvement in rankings occasioned by adjusting for income levels occurs for South Africa. The country rises 28 places compared with the original rankings to number one. The other countries that exhibit high levels of connectivity relative to their level of economic development are Malaysia (22 places higher), Portugal (16 places higher) and China (10 places higher). Countries that rank lower than countries at similar income levels include Australia, Japan, Norway, Taiwan–China and the USA. After adjusting for levels of GDP per head, the ten top ranked countries for connectivity are South Africa, Switzerland, Denmark, Ireland, Israel, Portugal, the UK, Austria, Finland and Belgium.

The aggregate measures hide differences between domestic and international connectivity. After adjusting for levels of development, Japan is ranked first for domestic connectivity but 40th for international connectivity. The next ranked countries for (adjusted) domestic connectivity are South Africa, Korea and India. GDP-adjusted international connectivity is ranked highest in Ireland, Switzerland, South Africa and Denmark.

Concluding remarks

This paper argues that connectivity is a component of all three functions of universities: research, teaching and scholarship. It is also a two-way process with external groups. This concept of connectivity has been operationalised through the use of ten measures, distinguishing between domestic and international connectivity, for 50 countries. The strongest finding is that smaller countries exhibit the highest level of international connectivity. The university systems in countries with large absolute numbers of researchers such as the USA, China, Japan and Korea are relatively self-contained compared with countries such as Ireland, Switzerland and Singapore. Another finding is the relative insularity of the university sector in Eastern Europe, including the Russian Federation. When differences in levels of economic development are allowed for, South Africa stands out as having a well-connected university sector.

The principal caveat to the findings is that we use ‘only’ ten measures of connectivity and the domestic measures are limited to surveys and joint publications with industry. The measures need to be supplemented by measures of connectivity with the other sectors of the economy: government (national and regional), households and not-for-profits. Unfortunately, data limitations preclude such extensions, at least for the range of 50 countries evaluated in this paper. The multitude of ways in which universities interact with households, such as continuing education and contributions to the public debate through lectures and media work, means that household connectivity measures are probably best obtained by surveys.

The World Bank (2012) and others have emphasised that the connectivity of the higher education sector influences labour market outcomes and economic growth through new research, the transfer of existing knowledge and appropriate skills training. Our data potentially enable an empirical check on this view, although such an exercise would require attention to appropriate lags between improved levels of connectivity and a stronger economy. Hence, regular updates of the data are desirable.

The nature of government funding for research influences connectivity between universities and private industry, but there is no ‘best’ model. In Israel and Sweden, for example, our measured strong connectivity between universities and domestic industry owes much to government policy that promotes these linkages (European Commission 2012a, p. 8). On the other hand, the strong domestic connectivity in the USA is more organic and owes less to government policy (Lane 2008). It is interesting to note that government funding models are being widened in scope in several countries. Funding based narrowly on research publications and not their impact was found in Australia to have led to a fall in the average quality as measured by citations (Butler 2003). Subsequently, a wider range of measures of research performance was used. In the UK, under the 2014 Research Excellence Framework, 20% of research funds were allocated on the basis of the ‘social, economic and cultural impact of research’ (www.hefce.ac.uk).

Finally, we note that there is an inherent conflict between governments (and universities) wanting institutions to be high up in the existing international (research) rankings and the demands by business and households for greater linkages with universities. Two solutions are emerging: a broadening of the coverage of rankings (such as the U-Multirank model) and governments funding institutions differentially to ensure that only an appropriate limited number of institutions are able to compete in international research rankings, while others carry out more general missions (Eggins 2014; Olsen and Slaughter 2014).

Notes

1. The Times Higher Education ranking does include internationalisation and links to industry measures, with a combined weight of ten per cent. Internationalisation is measured by the percentages of international students and staff. Delgado-Márquez et al. (2013) use the Times data to show that the degree of internationalisation exerts a positive influence on a university’s reputation.

2. As an interesting example of media engagement, Smith (2013) documents the linkages between academics and the BBC in post-war Britain.

3. The World Intellectual Property Organization (WIPO) acts as an international patents office and, under the Patent Co-operation Treaty, provides a written report to applicants, which facilitates the awarding of national patents. For this reason WIPO does not have data on patents awarded; nevertheless, because of the cost and time involved, application to WIPO implies that the invention is important.

4. In aggregating the data, missing values have been dealt with as follows. International students: values for Argentina and Mexico set equal to the percentage figure for Chile; India given the figure for Indonesia. Outbound enrolment: Singapore and Taiwan-China set at the third-quartile value. IMD knowledge transfer: Iran, Saudi Arabia and Serbia given the first-quartile value. WEF survey: Taiwan-China estimated from its rank in the IMD survey. Taking slightly different values as placeholders does not alter the overall score significantly.

5. Williams and de Rassenfosse (2014) provide a detailed discussion of the methodologies for aggregating performance measures into a ranking in the specific context of the higher education literature.

6. In order to capture the non-linearity in UIC we fitted the quadratic to countries with GDP per capita over $16,000 and for countries below that level we used their average UIC score as the predicted value.
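The two-part prediction rule for UIC can be sketched as below. The function names, the quadratic coefficients and the sample data are hypothetical. The note does not say how a country exactly at the $16,000 cut-off is treated, so the sketch assumes it falls in the lower group.

```python
GDP_THRESHOLD = 16.0  # thousands of US dollars, the cut-off stated in the note


def low_income_mean(gdp, uic):
    """Average UIC score of countries at or below the threshold (assumed inclusive)."""
    below = [u for g, u in zip(gdp, uic) if g <= GDP_THRESHOLD]
    return sum(below) / len(below)


def predicted_uic(gdp_value, quadratic_coeffs, mean_below):
    """Quadratic prediction above the threshold, group average otherwise."""
    if gdp_value > GDP_THRESHOLD:
        intercept, slope, curvature = quadratic_coeffs
        return intercept + slope * gdp_value + curvature * gdp_value ** 2
    return mean_below


# Hypothetical data and coefficients, for illustration only.
sample_gdp = [8.0, 12.0, 30.0]
sample_uic = [4.0, 6.0, 50.0]
mean_below = low_income_mean(sample_gdp, sample_uic)
high_income_prediction = predicted_uic(30.0, (1.0, 2.0, -0.01), mean_below)
low_income_prediction = predicted_uic(10.0, (1.0, 2.0, -0.01), mean_below)
```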

References

Abramo, G., D’Angelo, C. A., & Di Costa, F. (2009). Research collaboration and productivity: Is there correlation? Higher Education, 57, 155–171.

Abramo, G., D’Angelo, C. A., & Di Costa, F. (2011). University-industry research collaboration: A model to access university capability. Higher Education, 62, 163–181.

Altbach, P. (2003). The costs and benefits of world-class universities. International Higher Education, 33(Fall), 5–8.

Archibugi, D., & Pietrobelli, C. (2003). The globalisation of technology and its implications for developing countries: Windows of opportunity or further burdening? Technological Forecasting and Social Change, 70(9), 861–863.

Boyer, E. L. (1992). Scholarship reconsidered: Priorities of the professoriate. Issues in Accounting Education, 7(1), 87–91.

Butler, L. (2003). Explaining Australia’s increased share of ISI publications—The effects of a funding formula based on publication counts. Research Policy, 32, 143–155.

Casper, S. (2013). The spill-over theory reversed: The impact of regional economies on the commercialization of university science. Research Policy, 42, 1313–1324.

de Rassenfosse, G., Dernis, H., Guellec, D., Picci, L., & van Pottelsberghe, B. (2014). The worldwide count of priority patents: A new indicator of inventive activity. Research Policy, 42, 720–737.

Delgado-Márquez, B. L., Escudero-Torres, M. Á., & Hurtado-Torres, N. E. (2013). Being highly internationalised strengthens your reputation: An empirical investigation of top higher education institutions. Higher Education, 66(5), 619–633.

Dill, D., & van Vught, F. (2014). The contribution of universities to innovation: Insights from a comparative study of the leading OECD nations. In A. Maldonado-Maldonado & R. Bassett (Eds.), The forefront of international higher education: A Festschrift in honour of Philip G. Altbach (pp. 209–222). Dordrecht: Springer.

Douglass, J. (2014). Profiling the flagship university model—An exploratory proposal for changing the paradigm from ranking to relevancy. Research and Occasional Paper Series 5.14, Center for Studies in Higher Education, University of California, Berkeley.

Dumay, J., Guthrie, J., & Farneti, F. (2010). GRI sustainability reporting guidelines for public and third sector organisations. Public Management Review, 12(4), 531–548.

Eggins, H. (2014). Global diversity: Emerging trends. In A. Maldonado-Maldonado & R. Bassett (Eds.), The forefront of international higher education: A Festschrift in honour of Philip G. Altbach (pp. 209–222). Dordrecht: Springer.

Etzkowitz, H., & Leydesdorff, L. (1995). The triple helix—University–industry–government relations: A laboratory for knowledge-based economic development. EASST Review, 14(1), 14–19.

Etzkowitz, H., & Leydesdorff, L. (2000). The dynamics of innovation: From national systems and ‘Mode 2’ to a triple helix of university–industry–government relations. Research Policy, 29(2), 109–123.

European Commission. (2012a). Overview of higher education in Israel. Tempus Programme. http://eacea.ec.europa.eu/tempus/

European Commission. (2012b). Green paper: Fostering and measuring ‘Third Mission’ in higher education institutions. Lifelong Learning Programme.

Hazelkorn, E. (2007). The impact of league tables and ranking systems on higher education decision making. Higher Education Management and Policy, 19(2), 87–110.

Hazelkorn, E. (2008). Learning to live with league tables and ranking: The experience of institutional leaders. Higher Education Policy, 21(2), 193–215.

Jongbloed, B., Enders, J., & Salerno, C. (2008). Higher education and its communities: Interconnections, interdependencies and a research agenda. Higher Education, 56(3), 303–324.

Lane, N. (2008). US science and technology: An uncoordinated system that seems to work. Technology in Society, 30, 248–263.

Marginson, S., & van der Wende, M. (2007). To rank or to be ranked: The impact of global rankings in higher education. Journal of Studies in International Education, 11(3/4), 306–329.

Marhl, M., & Pausits, A. (2011). Third mission indicators for new ranking. Evaluation in Higher Education, 5(1), 43–64.

Meyer, M., Grant, K., Morlacchi, P., & Weckowska, D. (2014). Triple Helix indicators as an emergent area of enquiry: A bibliometric perspective. Scientometrics, 99, 151–174.

Molas-Gallart, J., Salter, A., Patel, P., Scott, A., & Duran, X. (2002). Measuring third stream activities: Final report to the Russell Group of universities. Falmer: Science and Technology Policy Research Unit, University of Sussex.

Montesinos, P., Carot, J. M., Martinez, J.-M., & Mora, F. (2008). Third mission ranking for world class universities: Beyond teaching and research. Higher Education in Europe, 33(2/3), 259–271.

OECD. (2007). Higher education and regions: Globally competitive, locally engaged. Paris: OECD.

Olsen, J., & Slaughter, S. (2014). Forms of capitalism and creating world-class universities. In A. Maldonado-Maldonado & R. Bassett (Eds.), The forefront of international higher education: A Festschrift in honour of Philip G. Altbach (pp. 267–279). Dordrecht: Springer.

Sánchez-Barrioluengo, M. (2014). Articulating the ‘three-missions’ in Spanish universities. Research Policy, 43, 1760–1773.

Schaaper, M. (2014). The human factor in innovation. Chapter 2 in Cornell University, INSEAD and WIPO. The Global Innovation Index 2014: The human factor in innovation, Fontainebleau, Ithaca and Geneva.

Smith, D. N. (2013). Academics, the ‘cultural third mission’ and the BBC: Forgotten histories of knowledge creation, transformation and impact. Studies in Higher Education, 38(5), 663–677.

Sorensen, A. (1999). R&D, learning and phases of economic growth. Journal of Economic Growth, 4(4), 429–445.

Tijssen, R. J. W. (2012). Co-authored research publications and strategic analysis of public–private collaboration. Research Evaluation, 21(3), 204–215.

Van der Wende, M. C. (2014). On mergers and missions: Implications for institutional governance and government steering. In Y. Cheng, Q. Wang, & N. Liu (Eds.), How world-class universities affect global higher education (pp. 137–152). Rotterdam: Sense.

Van Vught, F., & Westerheijden, D. F. (2010). Multidimensional ranking: A new transparency tool for higher education and research. Higher Education Management and Policy, 22(3), 31–56.

West, P. W. A. (2009). A Faustian bargain? Institutional responses to national and international rankings. Higher Education Management and Policy, 21(1), 9–18.

Williams, R., & de Rassenfosse, G. (2014). Pitfalls in aggregating performance measures in higher education. Studies in Higher Education. doi:10.1080/03075079.2014.914912

Williams, R., de Rassenfosse, G., Jensen, P., & Marginson, S. (2013). The determinants of quality national higher education systems. Journal of Higher Education Policy and Management, 35(6), 599–611.

World Bank. (2012). Putting higher education to work: Skills and research for growth in East Asia. Washington, DC: World Bank.

Wu, W., & Zhou, Y. (2012). The third mission stalled? Universities in China’s technological progress. Journal of Technology Transfer, 37(6), 812–827.

Acknowledgments

Gaétan de Rassenfosse’s current affiliation is College of Management, Ecole polytechnique fédérale de Lausanne (EPFL), Lausanne, Switzerland. The article was written when Gaétan was a senior research fellow at the University of Melbourne. This work forms part of the Universitas 21 sponsored project Ranking National Systems of Higher Education located at the Melbourne Institute, University of Melbourne (www.universitas21.com). We are indebted to Robert Tijssen (CWTS-Leiden) and Isidro Aguillo (Webometrics) for the provision of data. We are most grateful to the Editor and two anonymous referees who provided very insightful comments on an earlier draft.

Cite this article

de Rassenfosse, G., Williams, R. Rules of engagement: measuring connectivity in national systems of higher education. High Educ 70, 941–956 (2015). https://doi.org/10.1007/s10734-015-9881-y

DOI: https://doi.org/10.1007/s10734-015-9881-y