Abstract

This study investigated the relationship between multiple predictors of academic achievement, including course experience, students’ approaches to learning (SAL), effort (amount of time spent on studying) and prior academic performance (high school grade point average, HSGPA), among 442 first semester undergraduate psychology students. Correlation analysis showed that all of these factors were related to first semester examination grade in psychology. Profile analyses showed significant mean level differences between subgroups of students. A structural equation model showed that surface and strategic approaches to learning were mediators between course experience and exam performance. This model also showed that SAL, effort and HSGPA were independent predictors of exam performance, even when controlling for the effect of the other predictors. Hence, academic performance is both indirectly affected by the learning context as experienced by the students and directly affected by the students’ effort, prior performance and approaches to learning.

Introduction

First semester undergraduate psychology students comprise a highly diverse group of students as regards prior academic achievement, learning motives and strategies, experience of the quality of education, and effort. All of these variables are possible predictors of academic achievement as indicated by examination grade (Diseth 2007). However, the relationship between these variables may be complex in terms of direct, indirect and independent effects as predictors of academic achievement (Lizzio et al. 2002; Richardson 2006).

Undergraduate studies in psychology attract students for several reasons, including interest in the topic, possibilities for combinations with other subjects in tertiary education, and possibilities for further studies in psychology which may lead to a professional career as a psychologist. Consequently, some students intend to continue their psychology studies beyond the first semester, whereas others do not. Furthermore, some students may not show up at the exam for various reasons (e.g. drop-out or withdrawal). Finally, some first semester psychology students fail the final exam. Hence, undergraduate psychology students constitute several subgroups which may be interesting to compare.

The main purpose of the present study is, first, to investigate the relative contribution of course experience, students’ approaches to learning (motives and learning strategies), effort (number of hours spent studying), and prior academic achievement (HSGPA) as predictors of academic achievement, and, second, to examine whether the abovementioned subgroups of students have different mean levels of scores on these variables. This research may provide more complete knowledge about the predictors of academic achievement, which may guide students and teachers in efforts to enhance learning and academic achievement in subgroups of students.

Students’ approaches to learning and course experience

The correspondence between students’ intentions, motives and learning strategies, conceptualised as students’ approaches to learning (SAL) (Entwistle et al. 2000), is considered to be an important aid for course, curriculum and assessment design (Coffield et al. 2004) and may encourage a more systematic approach to academic teaching (Trigwell and Prosser 1991). A deep approach is defined as the intention to understand the learning material and demonstrating an interest in ideas, combined with the learning strategies “use of evidence” and “relating of ideas”. In contrast, a surface approach is characterised by the motives of avoiding failure and fulfilling minimum course requirements by reproducing the learning material (“rote learning”). Finally, an intention to succeed and the motive of achieving high examination grades by organising time and the learning environment constitute a strategic approach. This approach is characterised by great effort, finding the right conditions for studying, effective monitoring of time and effort, attention to examination demands and criteria, and adjustment of studying according to perceived demands.

Students’ approaches to learning are assumed to be partly a result of contextual influences. For example, Honkimäki et al. (2004) investigated whether innovative teaching experiments by university teachers are reflected in students’ study orientations and their subjective learning experiences and outcomes. They found that motivational problems and undesirable study behaviour (reproduction and competition) decreased among students participating in innovative courses which included different kinds of activating instruction with an emphasis on interactive forms of learning and studying. However, there were no differences in the meaning orientation or in problems with study skills, suggesting that the pedagogical innovations were of most benefit to those students who usually had problems with motivation. Furthermore, Sadlo and Richardson (2003) found that the implementation of a problem-based curriculum had desirable effects on the quality of learning. These effects were partly mediated by students’ perceptions of their academic environment. This latter point has also been emphasised by other researchers, who conclude that it is the student’s perception of the learning context rather than the context in an objective sense which influences SAL most directly (Biggs 2001; Entwistle 1987; Laurillard 1979; Richardson 2003), in particular the students’ responses to perceived situational demands (Biggs 1999; Entwistle and Tait 1990; Lizzio et al. 2002; Newble and Clarke 1987).

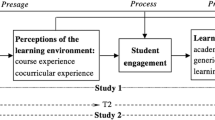

This interplay between the learning context, perception of the learning context, and approaches to learning has been explained by Biggs (2003). He maintained that SAL has a foundation in phenomenography and constructivism, which both recognize the importance of students’ perceptions of learning activities. He developed a model to describe ‘the interactive relationship among student factors, teaching context, the on-going approaches to a particular task and student learning outcomes’ (Biggs 2001, p. 135). This model is referred to as the 3P model, in which presage refers both to student factors (prior knowledge, ability, and learning orientations) and to the teaching context (the curriculum, teaching methods and assessment, and the institutional environment). Furthermore, process concerns the students’ approaches to learning in their current learning task. Previous research has shown that students switch between these approaches depending on how they respond to the learning context (Volet and Chalmers 1992). Finally, product is about the outcome of learning in terms of understanding and examination grade. The student factors and teaching context interact to determine how students approach learning tasks (including assessment tasks), and ultimately how their behaviour may affect their learning outcomes (Biggs 2003).

Ramsden (1992) subsequently developed a model of student learning in context to demonstrate how the interaction between the students’ learning intention (to understand or to reproduce) and the process of studying were reflected in the quality of understanding. In this model, students’ approaches to learning mediated the relationship between perceived learning demands and learning outcome.

Students’ perception of the learning context is often referred to as course experience and is measured by means of the course experience questionnaire—CEQ (Ramsden 1991). This inventory has been designed as a performance indicator of teaching effectiveness at the level of the whole course or degree across disciplines and institutions about which students have direct experience (Lizzio et al. 2002). A higher order structure of the CEQ, consisting of a teaching quality factor (good teaching, clear goals and standards, generic skills, and appropriate assessment) and workload (appropriate workload) has been observed (Diseth 2007; Wilson et al. 1997). But other studies have shown a single higher order factor for the CEQ (Diseth et al. 2006; Lawless and Richardson 2002).

Previous research has shown consistent relations between course experience and SAL (Diseth 2007; Diseth et al. 2006; Kember and Leung 1998; Lawless and Richardson 2002; Lizzio et al. 2002; Prosser and Trigwell 1999; Richardson and Price 2003; Wilson et al. 1997). For example, a multivariate analysis (SEM) showed that perceived teaching quality was positively related to deep and strategic approaches, but negatively related to surface approach, whereas perceived appropriate workload was positively related to deep approach, but negatively related to surface approach (Diseth 2007). Furthermore, Richardson (2003) found correlations between an overall measurement of course experience and deep (r = .31), surface (r = −.44), and strategic (r = .03) approaches to learning. Also, Kember and Leung (1998) found significant relations between perceived workload and surface approaches to learning. Finally, Lizzio et al. (2002) found meaningful, significant relations between course experience variables and deep/surface approaches to learning.

Kember and Leung (1998) suggested that the causal relationship between SAL and CE may be bi-directional, but research has shown that demographic variables (age and gender) and course experience are independent predictors of approaches to learning (Diseth et al. 2006; Richardson 2003; Richardson and Price 2003). This finding has been considered as indirect support for the assumption that course experience influences approaches to learning. This line of reasoning is also supported by previous research. For example, Lizzio et al. (2002) analysed the responses of 2,130 students and found that perceptions of teaching environments (course experience) influence learning outcomes (academic achievement) both directly and indirectly.

However, more recently, Richardson (2006) suggested that “…any direct effects of demographic variables on students’ perceptions of their academic environment may have been masked by indirect but opposing effects that were mediated by variations in their study behaviour [SAL]” (p. 870). He found evidence for multiple causal relationships as follows: (1) demographic variables → course experience, (2) demographic variables → study behaviour (SAL), (3) course experience → study behaviour (SAL), and (4) study behaviour (SAL) → course experience. This suggested a bi-directional causal relationship between course experience and study behaviour (SAL) (Richardson 2006). These multiple causal relations are also consistent with the fact that SAL may be both variable and consistent (Ramsden 1988), as approaches to learning are a function of individual student characteristics, such as personality (Diseth 2003) and the learning context (Biggs 2001; Newble and Hejka 1991; Sadler 1989; Sambell and McDowell 1998).

Prediction of academic achievement

As regards prediction of academic achievement, previous research has generally shown that deep and strategic approaches to learning are positively correlated and a surface approach negatively correlated with academic achievement (Cano 2005; Diseth 2003; Entwistle and Ramsden 1983; Watkins 2001), although a combination of surface and strategic approaches may be positively related to examination grade among undergraduate science students or whenever fact-oriented assessment is utilised (Entwistle et al. 2000). For example, Newstead (1992) found that academic achievement was positively correlated with meaning orientation (deep approach) (r = .22) and achieving orientation (strategic approach) (r = .32) in a study of psychology students’ academic achievement at different stages in their study. Similarly, Sadler-Smith (1997) found that academic achievement was positively correlated with deep approach (r = .26) and strategic approach (r = .14) in a sample of undergraduate business students. However, it may be concluded that the relationship between SAL and achievement is often moderate or rather weak (Diseth 2003; Duff 2003), suggesting that other factors may be equally important as predictors of academic achievement.

The relationship between course experience and academic achievement has been less studied, but Richardson and Price (2003) found a positive correlation between an overall measure of perceived course experience and assigned marks for coursework. Also, Lizzio et al. (2002) found that the CEQ factor ‘good teaching’ significantly predicted GPA among students in several faculties. Finally, Diseth (2007) found a correlation between examination grade and the higher order course experience factor of teaching quality (r = .31) and appropriate workload (r = .28). However, this study also showed that the effect of course experience as a predictor of examination grade disappeared in a SEM which also included SAL.

Whereas both CE and SAL may predict academic achievement, other factors are obviously also influential. For example, Touron (1983) found that secondary school marks are among the best predictors of academic performance. Furthermore, academic achievement (test scores) has been found to correlate with HSGPA in a sample of undergraduate psychology students (Cavell and Woehr 1994; Woehr and Cavell 1993). However, the same studies did not show a significant relationship between the amount of time spent on studying and academic achievement. Schuman et al. (1985) concluded that it is sometimes possible to find a significant relationship between the amount of study time and academic achievement, but that this relationship is very small. Subsequent research has generally accepted these findings, but their generalizability may be questioned (Plant et al. 2005). For example, Rau and Durand (2000) found that the amount of study was related to GPA (r = .23), but the real benefit was seen for students studying over 14 h a week. Finally, Plant et al. (2005) found that the amount of study only emerged as a significant predictor of cumulative GPA when the quality of study and previously attained performance were taken into consideration.

Of particular relevance to the present study is an investigation by Diseth et al. (2006), who studied the relationship between perceived experience of educational quality (course experience), learning motives and strategies (students’ approaches to learning, SAL) and examination grade in a sample of 486 first semester students. This study showed a consistent relationship between course experience and SAL, but the prediction of examination grade was weak. Moreover, this study did not control for other variables, such as effort and previous academic performance. Furthermore, the previously mentioned study by Diseth (2007) investigated a sample of undergraduate psychology students in their second semester of study. This study showed that course experience had an indirect effect on examination grade via SAL. In addition, previous academic performance, as indicated by high school grade point average (HSGPA), had an independent effect on academic performance, even when controlling for the effect of SAL and course experience. However, students in the second semester probably constitute a less heterogeneous group, because they have actively chosen to continue studies in psychology beyond the first semester. They may therefore, on average, be more motivated and show more effort than students in the first semester, so the results from the Diseth (2007) study may not necessarily generalize to first semester students. Nevertheless, the results from that study are in accordance with previous research by Kember and Leung (1998) and Lizzio et al. (2002).

Current issues and hypotheses

The present study aims at providing a further exploration of the relationship between course experience, SAL and academic achievement by using a larger sample of first semester psychology students. An additional aim is to increase the number of variables compared to the Diseth et al. (2006) study by including previous academic performance (high school GPA) and effort (amount of time spent on studying). In addition, mean level differences of these variables in the group of students who, for some reason (e.g. withdrawal), fail to take the exam will be compared to the students who do take the exam. Similarly, the group of students who submit an exam but do not pass the exam (grade F) will be compared to the students who do pass (grade A–E). These issues are interesting because they may provide information about the possible causes of not taking the exam or not passing the exam, and this group of students represents a potential resource for the undergraduate psychology programme. Finally, some of the students in the first semester are determined to continue their psychology studies beyond the first semester, and the present study will explore whether these students differ from the rest of the students in terms of their mean level of scores on measurement of course experience, SAL, effort, and academic performance.

The theoretical rationale for the present research resembles the previously mentioned 3P model (Biggs 2001), in which the product of academic learning (examination grade) is a result of both presage variables (HSGPA) and the learning process (course experience and SAL). Course experience may partly be considered as a process variable because it reflects the students’ perception of the learning environment, but also partly as a presage variable, insofar as it reflects the learning environment in an “objective” sense. Presage variables may affect the product both directly and indirectly, via process variables. Thus, SAL may mediate between CE and academic achievement (cf. Ramsden 1992).

Hence, it is expected that both course experience and SAL will predict examination grade, in accordance with previous research (Cano 2005; Diseth 2003; Entwistle and Ramsden 1983; Watkins 2001) and that these predictor variables will be related (Diseth 2007; Diseth et al. 2006; Kember and Leung 1998; Lawless and Richardson 2002; Lizzio et al. 2002; Prosser and Trigwell 1999; Richardson and Price 2003; Wilson et al. 1997). Furthermore, both effort and prior academic performance may have independent effects on examination grade (Rau and Durand 2000; Touron 1983), also when controlling for the effect of course experience and SAL. These effects may be direct or indirect, via SAL.

As regards differences between subgroups of students, there is little previous research to guide any specific hypotheses. However, students who aim at further psychology studies beyond the first semester are expected to score higher on course experience, deep and strategic approaches, effort, and achievement, but lower on surface approaches, in comparison with the group of students who do not intend to continue such studies. The same pattern of findings may be expected in the group of students who pass, as opposed to those who fail the exam.

Methods

Participants and context

The target group was all of the 756 students who had registered for the PSYK100 course at the University of Bergen, Norway. This course is part of the first semester of a 1-year introductory level program of study. PSYK100 is an introductory course in psychology for undergraduate students. However, the students may choose to take only the first semester (for example in combination with other subjects at the university). The formal programme, consisting of lectures and seminar groups, was the same for all of the participating students. The final examination was administered at the end of the semester, and this exam was the only means of grading the student.

Of the total of 756, 32 students had to be excluded from the study. Of these, 29 could not be located due to incorrect address information, and 3 respondents replied that they were not enrolled in this course after all. Hence, the population consisted of 724 students, of whom 482 replied (response rate: 66.6%). In addition, 40 students had to be deleted from the sample for various reasons (e.g. some students had not indicated their student number, so that it was not possible to access their examination grade, and some students did not take the exam for medical reasons). Thus, the final sample consisted of 442 students, giving a response rate of 61%. Of this sample, 385 students (296 female and 89 male, mean age 21.2 years, SD = 3.99) actually took the final exam. The rest of the students (57 students: 45 female and 12 male, mean age 25.75 years, SD = 6.11) did not.
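The sample figures above can be retraced with simple arithmetic. The following is a minimal sketch using only the counts reported in the text; the variable names are illustrative and not part of the study.

```python
# Counts reported in the text; variable names are illustrative only.
registered = 756
excluded = 29 + 3            # incorrect address + not enrolled after all
population = registered - excluded

replied = 482
deleted = 40                 # e.g. missing student number, medical absence
final_sample = replied - deleted

# Response rates as percentages of the eligible population.
initial_rate = 100 * replied / population
final_rate = 100 * final_sample / population

print(population, final_sample)
print(round(initial_rate, 1), round(final_rate, 1))
```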

Procedure

The inventory was distributed to the students at five different lecture hours in order to reach as many students as possible. In the first two lectures, the students were given 10 min at the end of the first lecture session, in addition to a 15 min break, to respond to the inventory. In the last three lectures, the inventories and stamped envelopes were handed out to the students who had not already replied, and they were asked to complete the inventory at home and mail it. In addition, inventories were sent to students by mail. The students were sent a written reminder by mail, and a text message was sent on two occasions. The entire data collection was finished 1 month before the final exam.

Measures

Course experience questionnaire

An adapted version of the CEQ (Ramsden 1991), consisting of 20 items measuring the 4 factors of good teaching (6 items), clear goals and standards (4 items), appropriate workload (6 items), and appropriate assessment (4 items) was used. Most of these items were adapted from Byrne and Flood (2003). In addition, 2 items covering perceived textbook volume and difficulty for this particular course were included in the appropriate workload scale. The students were instructed to indicate how they actually perceived the course as a whole (not with reference to e.g. any particular lecturer) on a scale ranging from 5 (“definitely agree”) to 1 (“definitely disagree”).

Students’ approaches to learning: ASSIST

An abbreviated version of the approaches and study skills inventory for students—ASSIST (Entwistle 1997) was employed to measure deep, surface and strategic approaches to learning. A Norwegian version of this inventory has been described and validated previously (Diseth 2001). The ASSIST originally comprises a 52-item questionnaire which is based on statements made by university students asked what they usually do when they go about learning. For the present study, the number of items was reduced from 52 to 24 in order to ease the data collection, in accordance with strategies in previous research on SAL (Kember and Leung 1998). Previous research (Diseth et al. 2006; Diseth 2007) showed that reliability, factor structure, and predictive validity were good even though the number of items was reduced. The participants were instructed to reply according to how they actually study at present in this particular course, and they indicated their relative agreement or disagreement with the statements on a 5-point scale (5—“agree”, 1—“disagree”). The participants were instructed not to mark no. 3 (“unsure”) unless they really had to or if the item did not apply to their learning situation, in accordance with the original inventory (Entwistle 1997).

Academic achievement

The students sat a 6-hour exam, in which they had to answer 3 out of 6 essay-like questions and a 90-item multiple choice (MC) test. The essays contributed 2/3 and the MC 1/3 of the total grade. The students were only given a total grade score, using a scale from A (excellent) to F (fail). These grades were converted for data analytic purposes as follows: A = 6, B = 5 … F = 1.
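The grade conversion can be written as a simple lookup. The sketch below is illustrative only: the text states A = 6, B = 5 and F = 1, and the values for C, D and E are assumed here to follow the same one-point steps. The function names are hypothetical.

```python
# Letter-to-number conversion described in the text (A = 6, B = 5 ... F = 1).
# ASSUMPTION: C, D and E follow the same one-point steps (4, 3, 2).
GRADE_POINTS = {"A": 6, "B": 5, "C": 4, "D": 3, "E": 2, "F": 1}

def to_points(grades):
    """Convert a list of letter grades to the numeric scale used for analysis."""
    return [GRADE_POINTS[g.upper()] for g in grades]

def passed(grade):
    """Grades A to E count as a pass; F is a fail."""
    return grade.upper() != "F"

print(to_points(["A", "C", "F"]))
```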

Effort

This was measured by asking the students the total amount of time they spent studying during a typical study week. The students were asked to reply to the following item: “How much time do you spend on studying for this course during a typical study week, on average (including lectures, seminars, reading, and other study activities)?”. Previous research has supported the validity of this measure (Diseth 2007).

Prior academic performance

The students were asked to report their high school grade point average (HSGPA) on a scale ranging from 1 to 6 with two decimal intervals.

Results

Measurement models

In order to assess the construct validity of each scale, separate confirmatory factor analyses were performed for each construct before including them in a subsequent SEM (as shown below) in accordance with guidelines by Jöreskog (1993). Table 1 shows that the fit indexes for all of the constructs in the ASSIST and the CEQ are appropriate for further analysis, according to recommended cut-off values for these indexes (Byrne 2001).

Descriptive statistics and correlations

Descriptive statistics displayed in Table 2 showed that the alpha reliability for the variables was satisfactory, and that this sample is generally neither particularly satisfied nor dissatisfied with the course experience. Furthermore, there were relatively high levels of deep and strategic approaches and a low level of surface approach. Finally, this group of students reported that they spent an average of 25.17 h per week studying (effort). However, the standard deviations show that there were relatively large differences between the students, particularly with respect to effort. Academic achievement (examination grade) was significantly correlated with good teaching (r = .14, p < .05), appropriate workload (r = .16, p < .01), appropriate assessment (r = .21, p < .01), CEQ total (r = .23, p < .01), deep approach (r = .30, p < .01), surface approach (r = −.35, p < .01), strategic approach (r = .43, p < .01), HSGPA (r = .27, p < .01), and effort (r = .44, p < .01).

Difference between student groups

Independent samples t-tests were performed in order to investigate mean level differences between (1) students who passed (n = 280) and students who failed (n = 81) the exam, and between (2) students who intended to continue studying psychology (n = 260) and students who did not (n = 182). Except for prior academic performance (HSGPA), students who did not plan to continue studying psychology were significantly different from the rest of the students, as shown in Table 3. They reported being less satisfied with the quality of education; they had lower levels of deep and strategic approaches, but a higher level of surface approach; they spent less time on their studies; and they demonstrated lower performances on their psychology exams. Table 3 also shows that the group of students who failed the exam (grade F) had lower ratings of “appropriate workload”, “appropriate assessment” and “CEQ total” (all of the course experience scales), compared to those who passed the exam. They also demonstrated lower levels of deep and strategic approaches, but higher levels of surface approaches to learning. These students also had lower HSGPA levels and spent less time on their studies (effort). All of these differences were significant at the 1% level, and the effect sizes for each of the variables varied strongly according to guidelines by Cohen (1988), who defined effect sizes as small (d = .2), medium (d = .5), and large (d = .8). However, some of these differences may be educationally trivial due to large sample sizes.
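To illustrate how such effect sizes are obtained, the sketch below computes Cohen's d with a pooled standard deviation and labels it with the Cohen (1988) benchmarks cited above. It is a hedged illustration only: the data are made up, the function names are hypothetical, the "negligible" label for values below .2 is an added convention, and the pooled-SD formula is one common variant that the study may or may not have used.

```python
import math
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)

def label_effect(d):
    """Label |d| with Cohen's (1988) benchmarks: .2 small, .5 medium, .8 large."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"  # ASSUMPTION: label for |d| < .2, not from the text

# Made-up weekly study hours, for illustration only (not the study's data).
passed_hours = [30, 25, 28, 22, 27]
failed_hours = [20, 18, 24, 15, 21]
d = cohens_d(passed_hours, failed_hours)
print(round(d, 2), label_effect(d))
```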

Additionally, the mean level for students who failed to take the final exam (n = 73) for some reason (e.g. withdrawal) was compared to the rest of the students. This analysis showed that students who failed to take the exam scored significantly higher on the CEQ scale appropriate workload (p < .05), but lower on good teaching (p < .05) and strategic approach (p < .01). Hence, they were less satisfied with the teaching and they were less strategic, but they were less dissatisfied with the workload. This latter finding may reflect that this group of students is less concerned about the amount of work required. These students also spent less time on their studies during a typical study week (18.6 h as opposed to 25.5 h, p < .01), and they had lower HSGPA (p < .01). However, they were equal to the rest of the students with respect to the levels of deep and surface approaches.

Profile analysis

While the independent sample t-test accounts for mean level differences between separate variables, profile analysis explores multivariate relations (cf. Tabachnick and Fidell 2007). Two profile analyses were performed on all of the CEQ and ASSIST variables in order to investigate group differences between (1) students who passed and students who failed the exam, and between (2) students who intended to continue studying psychology and students who did not. HSGPA, effort, and examination grade were not included in this analysis, because all variables in a profile analysis must be measured on the same scale.

Mean values were used for data screening, and general linear modelling (SPSS) was used for the major analysis. No univariate or multivariate outliers were detected. By means of Hotelling’s criterion, the profiles (Figs. 1, 2) were found to deviate significantly from flatness (Hotelling’s trace for pass/fail groups of students (Fig. 1) = .12, F(6,311) = 119.54, p < .001, partial η² = .10; Hotelling’s trace for continue/not continue students (Fig. 2) = .08, F(6,311) = 4.15, p < .001, partial η² = .07). Wilks’ criterion showed that the profiles deviated significantly from parallelism (Wilks’ lambda for pass/fail groups of students (Fig. 1) = .90, F(6,311) = 5.97, p < .001, partial η² = .10; Wilks’ lambda for continue/not continue students (Fig. 2) = .93, F(6,311) = 4.15, p < .001, partial η² = .07).
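When an effect has a single hypothesis dimension, as in a comparison of two groups, the multivariate statistics are linked by exact identities: F equals Hotelling's trace multiplied by the ratio of error to hypothesis degrees of freedom, Wilks' lambda equals 1 / (1 + trace), and partial eta squared equals trace / (1 + trace). The sketch below applies these identities to the values reported for the continue/not continue comparison. It assumes that this single-dimension case applies to the tests reported here; the function names are hypothetical.

```python
def f_from_hotelling(trace, df_hyp, df_err):
    """Exact F for Hotelling's trace when the effect has one hypothesis dimension."""
    return trace * df_err / df_hyp

def wilks_from_hotelling(trace):
    """Wilks' lambda implied by Hotelling's trace in the one-dimension case."""
    return 1 / (1 + trace)

def partial_eta_squared(trace):
    """Partial eta squared implied by Hotelling's trace (equals 1 - lambda here)."""
    return trace / (1 + trace)

# Values reported for the continue/not continue comparison.
trace, df_hyp, df_err = 0.08, 6, 311

f_value = f_from_hotelling(trace, df_hyp, df_err)
wilks = wilks_from_hotelling(trace)
eta_sq = partial_eta_squared(trace)
print(round(f_value, 2), round(wilks, 2), round(eta_sq, 2))
```

With the reported trace of .08, the identities give F = 4.15, Wilks' lambda = .93 and partial η² = .07, matching the values reported for this comparison.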

Structural equation modeling

A structural equation model was developed by means of the AMOS 4.0 programme (Arbuckle 1999) in order to investigate the relationship between the above variables. The estimation method was maximum likelihood, which makes estimates based on maximizing the probability (likelihood) that the observed covariances are drawn from a population assumed to be the same as that reflected in the coefficient estimates (Pampel 2000).

In this model (Fig. 3), the observed course experience factors (good teaching, clear goals and standards, appropriate assessment, and appropriate workload) were accounted for by a single latent higher order course experience factor, partly for psychometric and theoretical reasons, as explained in the introduction, and partly in order to produce a more parsimonious model. This latent course experience factor was set to predict deep, surface and strategic approaches to learning. Next, surface and strategic approaches to learning predicted examination grade, but the positive path between deep approach and examination grade was not significant. Error covariances between some of the observed course experience (CEQ) factors and between most of the SAL factors were added because these variables are theoretically and empirically related (cf. Entwistle et al. 2000; Lizzio et al. 2002). Hence, application of error covariances is also necessary as a means of providing better model fit (cf. Byrne 2001).

A separate regression analysis showed that the students’ age predicted deep and surface (but not strategic) approaches to learning (SAL), also when controlling for the effect of course experience on SAL, thus supporting the assumption that course experience causes SAL, and not the opposite, in accordance with Richardson and Price (2003).

Furthermore, the average amount of time spent on studying per week (effort) and prior academic achievement (HSGPA) were set as predictors of examination grade. In addition, effort was also set as a predictor of deep and strategic approaches to learning. All of the paths were significant at the 1% level (except deep → examination grade). In sum, this model showed that (1) course experience factors may be accounted for by a latent higher order factor, (2) strategic and surface approaches to learning predicted examination grade, (3) these approaches to learning also functioned as mediators between course experience and examination grade, (4) both effort and HSGPA functioned as independent predictors of examination grade, and (5) effort had an additional indirect effect on examination grade via the strategic approach.

The whole model showed appropriate fit to the data (χ² = 81.60, df = 26, p < .001, χ²/df = 3.14, TLI = .87, SRMR = .047, CFI = .94, RMSEA = .067) according to recommended cut-off values for three indexes: the CFI, which provides an evaluation of the difference between an independence model and the specified model (Bentler 1990; Browne and Cudeck 1993); the RMSEA, which estimates how well the model, with optimally chosen parameter values, would fit the population covariance matrix if it were available (Hu and Bentler 1995); and the SRMR, which is the average difference between the predicted and observed variances and covariances in the model, based on standardized residuals (Pampel 2000). However, the χ²/df ratio should preferably have been below 2, and the TLI should be close to .95. On the other hand, the TLI and the SRMR tend to underestimate model fit in larger samples, as opposed to the CFI and the RMSEA, which are not affected by sample size (Byrne 2001; Fan et al. 1999). The predictor variables in this model accounted for 33% of the variance in examination grade. Alternative paths between the presage, process and product variables were tested, but they were not significant, and were therefore not included. Hence, there was no significant relationship between, for example, prior academic achievement and SAL. A direct path between CEQ and examination grade was tested, but it was non-significant, and produced only marginally different fit indexes (χ² = 81.58, df = 25, p < .001, χ²/df = 3.26, TLI = .87, SRMR = .047, CFI = .94, RMSEA = .069, χ² difference = 0.02, df difference = 1).
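
The chi-square/df ratios quoted above follow directly from the reported chi-square values and degrees of freedom. As a small check, the Python sketch below recomputes them and compares each with the preferred value of 2 mentioned in the text. The function name and the dictionary labels are illustrative assumptions; the numbers are those reported in this paragraph.

```python
def normed_chi_square(chi_square: float, df: int) -> float:
    """Chi-square divided by its degrees of freedom."""
    if df <= 0:
        raise ValueError("df must be positive")
    return chi_square / df


# (chi-square, df) pairs as reported in the text.
reported = {
    "mediation model (Fig. 3)": (81.60, 26),
    "with direct CEQ -> grade path": (81.58, 25),
}

for label, (chi_square, df) in reported.items():
    ratio = normed_chi_square(chi_square, df)
    verdict = "below" if ratio < 2 else "above"
    print(f"{label}: chi2/df = {ratio:.2f} ({verdict} the preferred value of 2)")
```

Both ratios come out above 2, which is the limitation the text acknowledges.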

Finally, an alternative model examining the direct effect of all the variables as predictors of examination grade (without mediation) was tested (Fig. 4). However, this model produced poor fit indexes (χ² = 328.10, df = 29, p < .001, χ²/df = 11.31, TLI = .50, SRMR = .134, CFI = .68, RMSEA = .15), and it was significantly different from the original model (Fig. 3: χ² difference = 246.50, df difference = 3, p < .01).

The alternative model also showed that the relationship between CEQ and examination grade (shown in the correlation analysis, Table 2) disappeared when controlling for the effect of the other independent variables: the path from CEQ to examination grade was non-significant. Hence, the mediation model in Fig. 3 may be considered to be the best.
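
The model comparisons in this section are chi-square difference tests: the difference between the two chi-square values is itself evaluated as a chi-square with degrees of freedom equal to the difference in df. The Python sketch below recomputes both comparisons from the reported values, treating the models as nested, as the comparisons in the text do. The function names are assumptions, and the tail probability uses the closed-form sums available for integer df, so that only the standard library is needed.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus a finite correction sum.
    total = 0.0
    for i in range(1, (df - 1) // 2 + 1):
        total += half ** (i - 0.5) / math.gamma(i + 0.5)
    return math.erfc(math.sqrt(half)) + math.exp(-half) * total


def chi2_difference_test(chi2_restricted, df_restricted, chi2_full, df_full):
    """Return (delta chi-square, delta df, p) for two nested models."""
    delta_chi2 = chi2_restricted - chi2_full
    delta_df = df_restricted - df_full
    return delta_chi2, delta_df, chi2_sf(delta_chi2, delta_df)


# Alternative model (Fig. 4) against the mediation model (Fig. 3).
d1, ddf1, p1 = chi2_difference_test(328.10, 29, 81.60, 26)
# Mediation model against the same model with a direct CEQ -> grade path.
d2, ddf2, p2 = chi2_difference_test(81.60, 26, 81.58, 25)

print(f"Fig. 4 vs Fig. 3: delta chi2 = {d1:.2f}, delta df = {ddf1}, p < .01: {p1 < 0.01}")
print(f"direct path added: delta chi2 = {d2:.2f}, delta df = {ddf2}, p > .05: {p2 > 0.05}")
```

The first comparison reproduces the reported difference of 246.50 on 3 df. The second shows that adding the direct path changes the chi-square by only 0.02 on 1 df, consistent with that path being non-significant.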

Discussion

The main purposes of the present study were to include measures of first semester undergraduate psychology students’ course experience, approaches to learning, effort and previous academic performance in a model which specified the direct, indirect, and independent effects of these variables as predictors of examination grade, and to investigate how subgroups of students differed in their mean level scores on these variables.

The correlation analysis clearly showed an advantage of deep and strategic approaches, and a disadvantage of surface approach regarding academic achievement. However, the SEM analysis showed that the positive effect of a deep approach disappeared when controlling for the effect of surface and strategic approaches. Hence, it may be more important to discourage a surface approach than to encourage a deep approach if the goal is to improve performance. On the other hand, encouragement of a deep approach is advantageous in itself, because it increases the quality of learning in terms of understanding of the learning material. Accordingly, Trigwell and Prosser (1991) suggested that a deep approach to learning is encouraged more effectively by using assessment methods and teaching practices which aim at deep learning and conceptual understanding, rather than by trying to discourage a surface approach to learning.

The structural equation model (SEM) displayed in Fig. 3 also showed that effort (in terms of the amount of time spent on studying) had an independent effect on examination grade, even when controlling for the effect of course experience, SAL and HSGPA. In fact, effort also had an indirect effect on examination grade, via the strategic approach to learning. It is interesting to compare this finding with Plant et al. (2005), who stated that it is the quality of studying (e.g. strategic approach), not the effort, which matters most for examination grade. The present findings indicate that both are important, and that students who invest more effort also have more strategic approaches to learning, which ultimately leads to better academic achievement. Hence, examination grade is not only a matter of effort or prior achievement (HSGPA); it is also a matter of how the students utilize their study time, in terms of motivation/intention and learning strategies. Consequently, students should be made aware that their academic achievement is partly determined by their effort and motives/learning strategies (SAL), and that the students themselves can improve these factors.

In addition, SAL is predicted by the students’ experience of the course quality (course experience), which may be related to the actual education quality, insofar as previous research has shown that students’ evaluations are valid and reliable (Marsh 1987; Wachtel 1998). Hence, enhancing education quality may have an indirect effect on achievement, via students’ approaches to learning. For example, a deep approach may be encouraged by the students’ opportunity to manage their own learning (Ramsden and Entwistle 1981), the students’ perceptions of good teaching and “freedom in learning” (Entwistle and Smith 2002), lecturers’ interest, support and enthusiasm (Ramsden 1979), and finally by teaching and assessment methods which produce active and long-term engagement with learning tasks, stimulating and considerate teaching, and clearly stated academic expectations (Ramsden 1992). On the other hand, a surface approach is induced by assessment methods which emphasise the recall of trivial procedural knowledge and create anxiety, cynical or conflicting messages about rewards, excessive amounts of material in the curriculum, and lack of interest in and background knowledge of the subject matter (Ramsden 1992). Heavy workload and fact-based assessment are also sources of a surface approach (Entwistle and Smith 2002). In fact, the earliest research on students’ approaches to learning demonstrated that the students’ expectations of learning demands affected their learning approach, and that these expectations were effectively communicated by the assessment demands (Marton and Säljö 1976). Accordingly, Scouller (1998) found that students who prepared for multiple choice assignments adopted a surface approach, whereas students preparing for an essay assignment adopted a deep approach. Hence, it is important to recognise that examination form has an important effect on the students’ approaches to learning. However, the present sample was given an assignment consisting of both multiple choice questions and essays, which may potentially encourage both deep and surface approaches to learning.

The direction of causality between CE and SAL may be the reverse, as discussed in the introduction (cf. Richardson 2006). However, the alternative SEM (Fig. 4) showed no independent effect of CE as predictor of examination grade when controlling for the effect of SAL. In addition, CE and demographic variables were independent predictors of SAL. Hence, the current findings give support to the majority of previous research which maintains that CE causes SAL (cf. Diseth 2007; Lizzio et al. 2002).

As regards differences between subgroups of students, there were considerable mean level differences in course experience, SAL, effort and examination grade between the students who did not intend to continue psychology studies and the rest of the students. Students who intended to continue studying psychology had more favourable course experience and approaches to learning (higher deep/strategic, lower surface), showed higher effort, and performed better at the exam than those who did not intend to continue studying psychology. However, their mean HSGPA was similar to that of the students who did not intend to continue studying psychology. This may indicate a substantial mean level difference in motivation and appreciation of the program of study, but not in academic ability (HSGPA).

There were also some interesting mean level differences between the students who failed the exam and the students who passed. For instance, the students who failed had lower ratings on the course experience factors of appropriate workload and appropriate assessment, but not on the factors of good teaching or clear goals and standards. Hence, this group of students may not find it more difficult to know what they are expected to learn (clear goals and standards), but they may have more of a problem with the method of assessment and the amount of work required by the course. Furthermore, the students who failed showed a less advantageous pattern of approaches to learning (lower deep and strategic, higher surface approach) as compared to the rest of the students. They had lower HSGPA and spent less time on their studies. Hence, this group of students may benefit from more help in managing the workload. However, these students also need to show improved effort, motivation and learning strategies, and the lower HSGPA in this group of students may reflect lower levels of academic ability.

Finally, the students who for some reason failed to take the final exam were less strategic, showed less effort, and were less dissatisfied with the workload. This may reflect a lack of engagement or a lack of opportunity to work with the studies. But it is also interesting to note that the group of students who did not take the final exam was equal to the other students with respect to deep and surface approaches to learning. Hence, they may be equally interested in the study, but fail to take the exam for other reasons (e.g. lack of effort and achievement motivation).

The present results are generally in accordance with previous research on the relationship between presage, process and product variables, but they also show some interesting additional findings. For example, previous research (Diseth et al. 2006) was unable to account for SAL as a mediator between course experience and examination grade, and it did not control for the effect of effort and previous academic performance (HSGPA). Both of these factors were included in the current investigation. Furthermore, Diseth (2007) conducted research on second semester psychology students, which in reality is a selected group of students because many students do not study psychology beyond the first semester. Also, the earlier study failed to show a direct effect of effort on examination grade, as opposed to the current results.

In conclusion, the present study has contributed to the research on predictors of academic achievement by investigating the interrelationship between several variables which accounted for a substantial amount of variance in examination grade. When attempting to understand and improve academic achievement, the joint effects of course experience interacting with students’ approaches to learning, previous academic achievement (HSGPA), and the students’ own effort should all be considered. However, the mean level differences of these variables between subgroups of students show that some students have a greater need for support and guidance. This should be taken into account in educational practice and in future research on predictors of academic achievement.

References

Arbuckle, J. L. (1999). AMOS users’ guide version 4.0. Chicago: SPSS Corporation.

Bentler, P. M. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107, 238–246.

Biggs, J. (1999). Teaching for quality learning at university. Buckingham: Open UP and SRHE.

Biggs, J. (2001). Enhancing learning: A matter of style or approach? In R. J. Sternberg & L. F. Zhang (Eds.), Perspectives on thinking, learning, and cognitive styles (pp. 73–103). London: Lawrence Erlbaum.

Biggs, J. B. (2003). Teaching for quality learning at university. UK: SRHE and Open University Press.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 445–455). Newbury Park, CA: Sage.

Byrne, B. M. (2001). Structural equation modelling with AMOS. Basic concepts, applications, and programming. London: Lawrence Erlbaum.

Byrne, M., & Flood, B. (2003). Assessing the teaching quality of accounting programmes: An evaluation of the course experience questionnaire. Assessment & Evaluation in Higher Education, 28, 135–145.

Cano, F. (2005). Epistemological beliefs and approaches to learning: Their change through secondary school and their influence on academic performance. British Journal of Educational Psychology, 75, 203–221.

Cavell, T. A., & Woehr, D. J. (1994). Predicting introductory psychology test scores: An engaging and useful topic. Teaching of Psychology, 21, 108–110.

Coffield, F., Moseley, D., Hall, E., & Ecclestone, K. (2004). Should we be using learning styles? What research has to say to practice. London: Learning and Skills Research Centre.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum.

Diseth, Å. (2001). Validation of a Norwegian version of the approaches and study skills inventory for students (ASSIST): Application of structural equation modelling. Scandinavian Journal of Educational Research, 45, 381–394.

Diseth, Å. (2003). Personality and approaches to learning as predictors of academic achievement. European Journal of Personality, 17, 143–155.

Diseth, Å. (2007). Approaches to learning, course experience and examination grade among undergraduate psychology students: Testing of mediator effects and construct validity. Studies in Higher Education, 32, 373–388.

Diseth, Å., Pallesen, S., Hovland, A., & Larsen, S. (2006). Course experience, approaches to learning and academic achievement. Education and Training, 48, 156–169.

Duff, A. (2003). Quality of learning on an MBA programme: the impact of approaches to learning on academic performance. Educational Psychology, 23, 127–139.

Entwistle, N. (1987). A model of the teaching–learning process. In J. T. E. Richardson, M. W. Eysenck, & D. Warren Piper (Eds.), Improving learning: New perspectives (pp. 178–198). London: Kogan Page.

Entwistle, N. J. (1997). The approaches and study skills inventory for students (ASSIST). Edinburgh: Centre for Research on Learning and Instruction, University of Edinburgh.

Entwistle, N., & Ramsden, P. (1983). Understanding student learning. London: Croom Helm.

Entwistle, N., & Smith, C. (2002). Personal understanding and target understanding: Mapping influences on the outcomes of learning. British Journal of Educational Psychology, 72, 321–342.

Entwistle, N., & Tait, H. (1990). Approaches to learning, evaluation of teaching, and preferences for contrasting academic environments. Higher Education, 19, 169–194.

Entwistle, N., Tait, H., & McCune, V. (2000). Patterns of response to an approaches to studying inventory across contrasting groups and contexts. European Journal of Psychology of Education, 15, 33–48.

Fan, X., Wang, L., & Thompson, B. (1999). Effects of sample size, estimation method, and model specification on structural equation modeling fit indexes. Structural Equation Modeling, 6, 56–83.

Honkimäki, S., Tynjälä, P., & Valkonen, S. (2004). University students’ study orientations, learning experiences and study success in innovative courses. Studies in Higher Education, 29, 431–449.

Hu, L.-T., & Bentler, P. M. (1995). Evaluating model fit. In R. H. Hoyle (Ed.), Structural equation modelling: Concepts, issues, and applications (pp. 76–99). Thousand Oaks, CA: Sage.

Jöreskog, K. G. (1993). Testing structural equation models. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 294–317). Newbury Park, CA: Sage.

Kember, D., & Leung, D. Y. P. (1998). Influences upon students’ perceptions of workload. Educational Psychology, 18, 293–307.

Laurillard, D. (1979). The process of student learning. Higher Education, 8, 395–409.

Lawless, C. J., & Richardson, J. T. E. (2002). Approaches to studying and perceptions of academic quality in distance education. Higher Education, 44, 257–282.

Lizzio, A., Wilson, K., & Simons, R. (2002). University students’ perceptions of the learning environment and academic outcomes: Implications for theory and practice. Studies in Higher Education, 27, 27–52.

Marsh, H. W. (1987). Students’ evaluation of university teaching: Research findings, methodological issues, and directions for future research. International Journal of Educational Research, 11, 253–388.

Marton, F., & Säljö, R. (1976). On qualitative differences in learning: I—outcome and process. British Journal of Educational Psychology, 46, 4–11.

Newble, D. I., & Clarke, R. (1987). Approaches to learning in a traditional and an innovative medical school. In J. T. E. Richardson, M. W. Eysenck, & D. Warren Piper (Eds.), Student learning: Research in education and cognitive psychology (pp. 39–48). Milton Keynes: Open University Press.

Newble, D., & Hejka, E. J. (1991). Approaches to learning of medical students and practicing physicians: Some empirical evidence and its implications for medical education. Educational Psychology, 11, 333–342.

Newstead, S. E. (1992). A study of two ‘quick-and-easy’ methods of assessing individual differences in student learning. British Journal of Educational Psychology, 62, 299–312.

Pampel, F. C. (2000). Logistic regression: A primer. Thousand Oaks, CA: Sage.

Plant, E. A., Ericsson, K. A., Hill, L., & Asberg, K. (2005). Why study time does not predict grade point average across college students: Implications of deliberate practice for academic performance. Contemporary Educational Psychology, 30, 96–116.

Prosser, M., & Trigwell, K. (1999). Understanding learning and teaching: The experience in higher education. Buckingham, UK: Open University Press.

Ramsden, P. (1979). Student learning and perceptions of the academic environment. Higher Education, 8, 411–427.

Ramsden, P. (1988). Situational influences on learning. In R. R. Schmeck (Ed.), Learning strategies and learning styles (pp. 159–184). New York: Plenum Press.

Ramsden, P. (1991). A performance indicator of teaching quality in higher education: The course experience questionnaire. Studies in Higher Education, 16, 129–150.

Ramsden, P. (1992). Learning to teach in higher education. London: Routledge.

Ramsden, P., & Entwistle, N. (1981). Effects of academic departments on students’ approaches to studying. British Journal of Educational Psychology, 51, 368–383.

Rau, W., & Durand, A. (2000). The academic ethic and college grades: Does hard work help students to “make the grade?”. Sociology of Education, 73, 19–38.

Richardson, J. T. E. (2003). Approaches to studying and perceptions of academic quality in a short web-based course. British Journal of Educational Technology, 34, 433–442.

Richardson, J. T. E. (2006). Investigating the relationship between variations in students’ perceptions of their academic environment and variations in study behaviour in distance education. British Journal of Educational Psychology, 76, 867–893.

Richardson, J. T. E., & Price, L. (2003). Approaches to studying and perceptions of academic quality in electronically delivered courses. British Journal of Educational Technology, 34, 45–56.

Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instructional Science, 18, 119–144.

Sadler-Smith, E. (1997). ‘Learning style’: Frameworks and instruments. Educational Psychology, 17, 51–63.

Sadlo, G., & Richardson, J. T. E. (2003). Approaches to studying and perceptions of the academic environment in students following problem-based and subject-based curricula. Higher Education Research and Development, 22, 253–274.

Sambell, K., & McDowell, L. (1998). The construction of the hidden curriculum: Messages and meanings in the assessment of student learning. Assessment and Evaluation in Higher Education, 23, 391–402.

Schuman, H., Walsh, E., Olson, C., & Etheridge, B. (1985). Effort and reward: The assumption that college grades are affected by the quantity of study. Social Forces, 63, 945–966.

Scouller, K. (1998). The influence of assessment method on students’ learning approaches: Multiple choice question examination versus assignment essay. Higher Education, 35, 453–472.

Tabachnick, B., & Fidell, L. (2007). Using multivariate statistics. Boston: Pearson.

Touron, J. (1983). The determinants of factors related to academic achievement in the university: Implications for the selection and counselling of students. Higher Education, 12, 399–410.

Trigwell, K., & Prosser, M. (1991). Improving the quality of student learning: The influence of learning context and student approaches to learning on learning outcomes. Higher Education, 22, 251–266.

Volet, S. E., & Chalmers, D. (1992). Investigation of qualitative differences in university students’ learning goals based on an unfolding model of stage development. British Journal of Educational Psychology, 62, 17–34.

Wachtel, H. (1998). Student evaluation of college teaching effectiveness: A brief review. Assessment and Evaluation in Higher Education, 23, 191–211.

Watkins, D. (2001). Correlates of approaches to learning: A cross-cultural meta-analysis. In R. J. Sternberg & L. F. Zhang (Eds.), Perspectives on thinking, learning, and cognitive styles (pp. 165–195). Mahwah, NJ: Lawrence Erlbaum.

Wilson, K. L., Lizzio, A., & Ramsden, P. (1997). The development, validation and application of the course experience questionnaire. Studies in Higher Education, 22, 33–53.

Woehr, D. J., & Cavell, T. A. (1993). Self-report measures of ability, effort, and non-academic activity as predictors of introductory psychology test scores. Teaching of Psychology, 20, 156–160.

Cite this article

Diseth, Å., Pallesen, S., Brunborg, G.S. et al. Academic achievement among first semester undergraduate psychology students: the role of course experience, effort, motives and learning strategies. High Educ 59, 335–352 (2010). https://doi.org/10.1007/s10734-009-9251-8

DOI: https://doi.org/10.1007/s10734-009-9251-8