Abstract

Surface-wave dispersion analysis is widely used in geophysics to infer a shear-wave velocity model of the subsoil for a wide variety of applications. The shear-wave velocity model is obtained from the solution of an inverse problem based on the dispersive propagation of surface waves in vertically heterogeneous media. The analysis can be based either on active-source measurements or on seismic noise recordings. This paper discusses the most typical choices for the collection and interpretation of experimental data, providing a state-of-the-art review of the different steps involved in surface wave surveys. In particular, the different strategies for processing experimental data and for solving the inverse problem are presented, along with their advantages and disadvantages. Some issues related to the characteristics of passive surface wave data and their use in the H/V spectral ratio technique are also discussed as additional information to be used independently or in conjunction with dispersion analysis. Finally, some recommendations for the use of surface wave methods are presented, and future research trends on this topic are outlined.

1 Introduction

Surface waves have been studied in seismology for the characterization of the Earth’s interior since the 1920s, but their widespread use started during the 1950s and 1960s thanks to the increased possibilities of numerical analysis and to improvements in instrumentation for recording seismic events associated with earthquakes (Dziewonski and Hales 1972; Aki and Richards 1980; Ben-Menahem and Singh 2000). Geophysical applications at regional scales for the characterization of geological basins make use of seismic signals from explosions (Malagnini et al. 1995) and microtremors (Horike 1985). Engineering applications started in the 1950s with the Steady State Rayleigh Method (Jones 1958), but their frequent use only began over the last two decades, initially with the introduction of the SASW (Spectral Analysis of Surface Waves) method (Stokoe II et al. 1994) and then with the spread of multistation methods (Park et al. 1999; Foti 2000). The recent interest in surface wave methods in shallow geophysics is shown by numerous workshops and sessions at international conferences and by dedicated issues of international journals (EAGE, Near Surface Geophysics, November 2004; Journal of Engineering and Environmental Geophysics, June and September 2005). A comprehensive literature review on the topic is reported by Socco et al. (2010b).

Despite the different scales, the aforementioned applications rely on the same basic principles. They are founded on geometrical dispersion, which makes the velocity of Rayleigh waves frequency dependent in vertically heterogeneous media. High frequency (short wavelength) Rayleigh waves propagate in shallow zones close to the free surface and are informative about their mechanical properties, whereas low frequency (long wavelength) components sample deeper layers. Surface wave methods use this property to characterize materials over a very wide range of scales, from microns to kilometres. The essential differences between applications lie in the frequency range of interest and the spatial sampling, as detailed in the following sections.

The basic principles of guided waves also find similar applications related to different waves. One example is electromagnetic waves generated by ground-penetrating radar (GPR) (Haney et al. 2010), which can be analysed to provide information on the water saturation of shallow sediments (Strobbia and Cassiani 2007; van der Kruk et al. 2010).

Surface wave tests are typically devoted to the determination of a small strain stiffness profile for the site under investigation. Moreover, as shown by Malagnini et al. (1995) and Rix et al. (2000), surface wave data can also be used to characterize the dissipative behaviour of soils. Although this aspect will not be covered in detail in the present paper, it certainly deserves attention and further research considering the difficulties in getting reliable estimates of damping using geophysical methods. Also, the laboratory testing procedures on which damping estimates often rely are not representative of the behaviour of the whole soil deposit. Other relevant contributions to damping estimation from surface wave data are provided by Lai et al. (2002), Xia et al. (2002), Foti (2004), Albarello and Baliva (2009), and Badsar et al. (2010).

Another use of surface wave data is based on the analysis of noise horizontal-to-vertical spectral ratios (NHV) from single-station measurements of seismic noise. The ratio of the horizontal to the vertical spectral components of seismic records was originally proposed as a tool for determining the resonance frequency of a soil deposit by Nogoshi and Igarashi (1970, 1971). Subsequently, the technique was revised by Nakamura (1989) and became widely used thanks to its cost effectiveness. The original interpretation was based on the idea that peaks in the NHV were associated mainly with different amplifications of body waves propagating from the interior of the Earth. Nowadays, it is widely accepted that in most cases the peaks are associated with the surface wave content of the vertical and horizontal signals, and as such they can be analysed to provide an estimate of the resonance frequencies of the layered system, which take the same values as those pertinent to the amplification of shear waves (Fäh et al. 2001; Malischewsky and Scherbaum 2004). Inverse analysis can also be applied to the ellipticity of Rayleigh waves alone, or to both Rayleigh and Love waves, to provide information on the S-wave velocity below a site (Fäh et al. 2003), or in joint interpretation schemes with surface wave dispersion curves (Arai and Tokimatsu 2005; Parolai et al. 2005).

The current paper is organised as follows: after a general overview on surface wave dispersion analysis, with the main focus on phase velocity, different experimental techniques either based on active-source or passive source measurements are discussed. Approaches and strategies proposed in the literature for the solution of the inverse problem are then covered. Finally some recommendations for the use and selection of surface wave methods for site characterization are reported.

2 Surface Wave Dispersion Analyses

In order to summarize the concept behind the use of geometrical dispersion for soil characterization, let us assume that the stratified medium in Fig. 1a is characterized by increasing stiffness, hence increasing shear-wave velocity, with depth. In such a situation, a high frequency Rayleigh wave (i.e., a short wavelength, Fig. 1b), travelling in the top layer will have a velocity of propagation slightly lower than the velocity of a shear wave in the first layer. On the other hand, a low frequency wave (i.e., a long wavelength, Fig. 1c) will travel at a higher velocity because it is influenced also by the underlying stiffer materials. This concept can be extended to several frequency components. The phase velocity vs. wavelength (Fig. 1d) plot will hence show an increasing trend for longer wavelengths. Considering the relationship between wavelength and frequency, this information can be represented as a phase velocity versus frequency plot (Fig. 1e). This graph is usually termed a dispersion curve. This example shows, for a given vertically heterogeneous medium, that the dispersion curve will be associated with the variation of medium parameters with depth. This is the so called forward problem. It is important, however, to recognize the multimodal nature of surface waves in vertically heterogeneous media, i.e., several modes of propagation exist and higher modes can play a relevant role in several situations. Only the fundamental mode dispersion curve is presented in Fig. 1.
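The change of representation between the phase velocity versus frequency plot and the phase velocity versus wavelength plot relies only on the relationship λ = V/f. A minimal sketch is given below; the function name and the numerical values of the curve are hypothetical and serve only to illustrate a normally dispersive trend.

```python
import numpy as np

def wavelength_from_dispersion(freq_hz, phase_vel):
    """Return the wavelength (m) for each (frequency, phase velocity) pair."""
    freq_hz = np.asarray(freq_hz, dtype=float)
    phase_vel = np.asarray(phase_vel, dtype=float)
    return phase_vel / freq_hz

# Hypothetical fundamental-mode curve (illustrative values, not field data)
freq = np.array([5.0, 10.0, 20.0, 40.0])        # Hz
vel = np.array([400.0, 300.0, 220.0, 180.0])    # m/s
lam = wavelength_from_dispersion(freq, vel)     # m, longest at the lowest frequency
```

In this illustrative curve the longest wavelengths are associated with the highest velocities, as expected when stiffness increases with depth.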

If the dispersion curve is estimated on the basis of experimental data, it is then possible to solve the inverse problem, i.e., the model parameters are identified on the basis of the experimental data collected on the boundary of the medium. This is the essence of surface wave methods.

Figure 2 outlines the standard procedure for surface wave tests, which can be subdivided into three main steps:

1. Acquisition of experimental data;
2. Signal processing to obtain the experimental dispersion curve;
3. An inversion process to estimate the shear wave velocity profile at the site.

It is very important to recognize that the above steps are strongly interconnected and their interaction must be adequately accounted for during the whole interpretation process.

Appealing alternatives for the interpretation of surface wave data are the inversion of field data based on full waveform simulations and the inversion of the Fourier frequency spectra of observed ground motion (Szelwis and Behle 1987), but these strategies are rarely used because of their complexity. Moreover, the experimental dispersion curve is informative about trends to be expected in the final solution, so that its visual inspection is important for the qualitative validation of the results. Indeed, engineering judgment plays a certain role in test interpretation. Since the site and the acquisition are never “ideal”, the results of fully automated interpretation procedures must also be carefully examined, with special attention paid to intermediate results during each step of the interpretation process. A deep knowledge of the theoretical aspects and experience are hence essential.

Surface wave data can also be used to characterize the dissipative behaviour of soils. Indeed, the spatial attenuation of surface waves is associated with the internal dissipation of energy. Using a procedure analogous to the one outlined in Fig. 2 it is possible to extract from field data the experimental attenuation curve, i.e., the coefficient of attenuation of surface waves as a function of frequency, and then use this information in an inversion process that aims to estimate the damping ratio profile for the site (Lai et al. 2002; Foti 2004).

The primary use of surface wave testing is related to site characterization in terms of shear wave velocity profiles. The VS profile is indeed of primary interest for seismic site response, vibration of foundations and vibration transmission in soils. Other applications are related to the prediction of ground settlement and to soil-structure interaction. Comparisons of results from surface wave tests and borehole tests are frequent in the technical and scientific literature, showing the reliability of the method (see, for example, Fig. 3).

With respect to the evaluation of seismic site response, it is worth noting the affinity between the model used for the interpretation of surface wave tests and the model adopted for most site response studies. Indeed, the application of equivalent linear elastic methods is often associated with layered models; see, for example, the code SHAKE (Schnabel et al. 1972). This affinity is also particularly important in the light of equivalence problems, which arise because of the non-uniqueness of the solution in inverse problems. Indeed, profiles which are equivalent in terms of Rayleigh wave propagation are also equivalent in terms of seismic amplification (Foti et al. 2009).

Many seismic building codes (e.g., NEHRP 2000; CEN 2004) introduce the weighted average of the shear wave velocity profile in the shallowest 30 m to discriminate classes of soil to which a similar site amplification effect can be associated. The so-called VS,30 can also be evaluated very efficiently with surface wave methods because its average nature does not require the high resolution provided by seismic borehole methods, such as Cross-Hole tests and Down-Hole tests (Moss 2008; Comina et al. 2011).
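As an illustration, the sketch below computes VS,30 from a layered profile, assuming the travel-time-weighted definition VS,30 = 30 / Σ(h_i / V_S,i) commonly adopted in building codes. The function name, the input layout and the example profile are hypothetical.

```python
def vs30(thickness_m, vs_mps):
    """Time-averaged shear-wave velocity over the top 30 m.

    thickness_m: layer thicknesses from the surface downwards (m).
    vs_mps: shear-wave velocity of each layer (m/s); a final extra entry,
            if present, is taken as the half-space velocity.
    """
    depth = 0.0
    travel_time = 0.0
    for h, v in zip(thickness_m, vs_mps):
        h_used = min(h, 30.0 - depth)   # clip the layer at 30 m depth
        if h_used <= 0.0:
            break
        travel_time += h_used / v
        depth += h_used
    if depth < 30.0:
        # Profile shallower than 30 m: extend with the last velocity given
        travel_time += (30.0 - depth) / vs_mps[-1]
    return 30.0 / travel_time

# Hypothetical profile: 5 m at 150 m/s, 10 m at 300 m/s, half-space at 600 m/s
example_vs30 = vs30([5.0, 10.0], [150.0, 300.0, 600.0])
```

Because the result depends only on the total vertical travel time, thin layers that are poorly resolved by the inversion have a limited effect on it.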

A brief discussion of each step involved in surface wave testing is reported in the following section.

2.1 Acquisition

Surface wave data are typically collected on the surface using a variable number of receivers, which can be deployed in either one-dimensional (1D) or two-dimensional (2D) geometries. Several variations can be introduced in the choice of receivers and acquisition device, as well as in the generation of the wavefield.

The receivers adopted for testing related to exploration geophysics and engineering near surface applications are typically geophones (velocity transducers). Accelerometers are more often used for the characterization of pavement systems because in this case, the need for high frequency components makes the use of geophones not optimal.

The advantage of using geophones instead of accelerometers arises because geophones do not need a power supply, whereas accelerometers do. Moreover, in cases where surface waves are extracted from seismic noise recordings, accelerometers do not generally have the necessary sensitivity. On the other hand, low frequency geophones (natural frequency less than 2 Hz) tend to be bulky and very vulnerable because the heavy suspended mass can be easily damaged during deployment on site.

Several devices can be used for the acquisition and storage of signals. Basically, any device having an A/D converter and the capability to store the digital data can be adopted, ranging from seismographs to dynamic signal analyzers to purpose-made acquisition systems built using acquisition boards connected to PCs or laptops. Commercial seismographs for geophysical prospecting are typically the first choice because they are designed to be used in the field, hence they are physically very robust. New generation seismographs comprise scalable acquisition blocks to be used in connection with field computers, hence allowing preliminary processing of data on site.

As far as the generation of the wavefield is concerned, several different sources can be used, provided they generate sufficient energy in the frequency range of interest for the application. Impact sources are often preferred because they are quite low cost and allow for fast testing. A variety of impacts can be used, ranging from small hammers for high frequency signals (10–200 Hz) to large falling weights, which generate low frequency signals (2–40 Hz). Appealing alternatives are controlled sources, which are able to generate a harmonic wave, hence assuring very high quality data. The size of such sources ranges from relatively small electromagnetic shakers to large truck-mounted vibroseis. Their drawback is the cost and the need for longer acquisition processes on site. However, the latter aspect could be circumvented using swept-sine signals as input.

A different perspective is the use of seismic noise analysis. In this case the need for the source is avoided by recording background noise and the test is undertaken using a “passive” approach. Seismic noise consists of both cultural noise generated by human activities (traffic on highways, construction sites, etc.) and that associated with natural events (sea waves, wind, etc.). A great advantage is that seismic noise is usually rich in low frequency components, whereas high frequency components are strongly attenuated when they travel through the medium and are typically not detected. Hence, seismic noise surveys provide useful information for deep characterization (tens or hundreds of meters), whereas the level of detail close to the surface is typically low. In seismic noise surveys, however, the choice of the appropriate instrument is crucial (see e.g., Strollo et al. 2008 and references therein). Indeed, it is worth noting that due to the very low environmental seismic noise amplitude (i.e., the displacements involved are generally in the range \( 10^{-4} \) to \( 10^{-2} \) mm), a prerequisite for high quality seismic noise recordings is the selection of A/D converters with adequate dynamic ranges (i.e., at least 19 bits).

The limitation in resolution close to the surface can be overcome by combining active and passive measurements, or with the new generation of low cost systems (Picozzi et al. 2010a) using a large number of sensors and high sampling rates.

2.2 Processing

The field data are processed to estimate the experimental dispersion curve, i.e., the relationship between phase velocity and frequency. The different procedures apply a variety of signal analysis tools, mainly based on the Fourier Transform. Indeed, using Fourier analysis, it is possible to separate the different frequency components of a signal that are subsequently used to estimate phase velocity using different approaches in relation to the testing configuration and the number of receivers. Alternative procedures are based on the group velocity of surface wave data, which can be obtained with the Multiple Filter Technique (Dziewonski et al. 1969) and its modifications (Levshin et al. 1992; Pedersen et al. 2003). These techniques do not suffer from spatial aliasing which affects estimates of phase velocity. However, here we will focus on phase velocity analysis, which is more widespread in the field of seismic site characterisation.

Some equipment allows for pre-processing of experimental data directly in the field. Indeed, the simple visual screening of time traces is not always sufficient because surface wave components are grouped together and, without signal analysis, it is not possible to judge the quality of the data. In particular, an assessment of the frequency range with high signal quality can be very useful for deciding whether the acquisition setup needs to be changed or additional experimental data need to be gathered. Ohrnberger et al. (2006) first proposed the use of a wireless mobile ad-hoc network of standard seismological stations equipped with highly sensitive, but also very expensive, Earth Data digitizers for site-effect estimation. To overcome the resolution problem posed by the reduced number of stations available, the authors proposed to repeat the measurements using consecutive arrays with different sizes. Recently, Picozzi et al. (2010a) presented a new system, named GFZ-WISE, for performing dense 2D seismic ambient-noise array measurements. Since the system is made up of low-cost wireless sensing units that can form dense wireless mesh networks, raw data can be communicated to a user’s external laptop connected to any node of the network, allowing the user to perform real-time quality control and analysis of seismic data.

2.3 Inversion

The solution of the nonlinear inverse problem is the final step in the test interpretation. Assuming a model for the soil deposit, the model parameters that minimize an objective function representing the distance between the experimental and the numerical dispersion curves are identified. The objective function can be expressed in terms of any mathematical norm (usually the RMS) of the difference between experimental and numerical data points. In practice, the set of model parameters that produces a solution of the forward problem (a numerical dispersion curve) as close as possible to the experimental data (the experimental dispersion curve of the site) is selected as the solution of the inverse problem (e.g., Fig. 4).
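A minimal sketch of an RMS-type misfit between experimental and numerical dispersion curves is given below. The function name and the optional weighting by data uncertainty (`sigma`) are assumptions introduced for illustration; the forward solver that would produce `v_num` is not shown.

```python
import numpy as np

def rms_misfit(v_exp, v_num, sigma=None):
    """RMS distance between experimental and numerical phase velocities.

    v_exp, v_num: phase velocities sampled at the same frequencies (m/s).
    sigma: optional standard deviations of the experimental data; if given,
           residuals are normalised and the misfit becomes dimensionless.
    """
    v_exp = np.asarray(v_exp, dtype=float)
    v_num = np.asarray(v_num, dtype=float)
    resid = v_exp - v_num
    if sigma is not None:
        resid = resid / np.asarray(sigma, dtype=float)
    return float(np.sqrt(np.mean(resid ** 2)))

# Hypothetical curves sampled at two frequencies
misfit = rms_misfit([200.0, 300.0], [210.0, 290.0])
```

Any search strategy can then be described as looking for the model whose numerical curve gives the smallest value of such a function.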

This goal can be reached using a variety of strategies. A major distinction arises between Local Search Methods (LSM), which minimize the difference starting from a tentative profile and searching in its vicinity, and Global Search Methods (GSM), which attempt to explore the entire parameter space of possible solutions. As can also be intuitively imagined, both methods present advantages and drawbacks.

LSMs are undoubtedly faster since they require a limited number of runs of the forward Rayleigh wave propagation problem, but since the solution is searched in the vicinity of a tentative profile, there is the risk of being trapped in local minima. On the other hand, LSMs allow the estimation of the resolution and model covariance matrices, which are powerful tools for verifying the existence of trade-offs among model parameters, and for assessing the confidence bounds for the unknown parameters.

GSMs, on the other hand, require a much bigger computational effort since a large number of forward calculations is needed, so that the approach is quite time consuming. However, GSMs are considered inherently stable methods, because they require the computation of the forward problem and of the cost function only, avoiding any potentially numerically unstable process (e.g., matrix inversion and partial derivative estimates).

In general, surface wave dispersion curve inverse problems are inherently ill-posed and a unique solution does not exist. A major consequence is the so-called equivalence problem, i.e., several shear wave velocity profiles can be equivalent with respect to the experimental dispersion curve, meaning that the numerical dispersion curve associated with each of these profiles is at the same distance from the experimental dispersion curve. A meaningful evaluation of equivalent profiles also has to take into account the uncertainties in the experimental data. Additional constraints and a priori information from borehole logs or other geophysical tests are useful elements in resolving the equivalence problem.

3 Active Source Methods

3.1 Spectral Analysis of Surface Waves (SASW)

The traditional SASW method uses either impulsive sources such as hammers, which create a wave-train with components over a broad frequency range, or steady-state sources such as vertically oscillating hydraulic or electro-mechanical vibrators that sweep through a pre-selected range of frequencies, typically between 5 and 200 Hz. Rayleigh waves are detected by a pair of transducers placed along a straight line passing through the source, at distances D and D + X from it. The signals at the receivers are digitised and recorded by a dynamic signal analyser and are then analysed in the frequency domain. The Fast Fourier Transform is computed for each signal and the cross-power spectrum between the two receivers is calculated. Multiple signals are averaged to improve the estimate of the cross-power spectrum. The phase velocity \( V_{R} \) is obtained from the phase difference of the signals using the following relationship:

$$ V_{R} (\omega ) = \frac{\omega X}{\Uptheta_{12} (\omega )} \quad (1) $$

in which \( \Uptheta_{12} (\omega ) \) is the cross-power spectrum phase between two receivers, ω is the angular frequency and X is the inter-receiver spacing.
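The two-receiver procedure can be sketched numerically as follows, assuming the usual relationship \( V_{R} (\omega ) = \omega X/\Uptheta_{12} (\omega ) \) with the unwrapped cross-power spectrum phase. The function name, the Gaussian pulse and the non-dispersive synthetic medium (200 m/s) are assumptions chosen so that the expected result is known; this is an illustration, not the processing chain of any specific software.

```python
import numpy as np

def sasw_phase_velocity(s1, s2, dt, spacing):
    """Phase velocity from the cross-power spectrum phase of two receivers.

    s1, s2: time series at the near and far receiver (same length).
    dt: sampling interval (s); spacing: inter-receiver distance X (m).
    """
    freq = np.fft.rfftfreq(len(s1), dt)
    S1 = np.fft.rfft(s1)
    S2 = np.fft.rfft(s2)
    # With this sign choice the phase is +omega * delay when s2 lags s1
    cross = S1 * np.conj(S2)
    theta = np.unwrap(np.angle(cross))
    omega = 2.0 * np.pi * freq
    with np.errstate(divide="ignore", invalid="ignore"):
        velocity = omega * spacing / theta   # undefined at zero frequency
    return freq, velocity

# Synthetic check: a pulse travelling at 200 m/s between receivers 10 m apart
dt = 0.001
t = np.arange(1024) * dt
X, v_true = 10.0, 200.0

def pulse(t0):
    return np.exp(-((t - t0) / 0.005) ** 2)

s1 = pulse(0.30)
s2 = pulse(0.30 + X / v_true)
freq, v = sasw_phase_velocity(s1, s2, dt, X)
band = (freq >= 5.0) & (freq <= 100.0)   # band where the pulse has energy
```

In the band where the pulse carries energy, `v[band]` recovers the imposed velocity; outside it the phase is dominated by numerical noise and the estimate is meaningless, which is the numerical counterpart of a low signal-to-noise ratio in the field.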

One critical aspect of the above procedure is the influence of the signal-to-noise ratio. Indeed, the measurement of phase difference is a very delicate task. The necessary check on the signal-to-noise ratio is usually accomplished using the coherence function (Santamarina and Fratta 1998), whose value is equal to 1 for linearly correlated signals in the absence of noise. Only the frequency ranges having a high value of the coherence function are used for the construction of the experimental dispersion curve. It must be remarked that the coherence function must be evaluated using several pairs of signals, leading to the necessity of repeating the test using the same receiver setup.
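A sketch of the coherence check is given below, assuming the standard magnitude-squared coherence estimated from spectra averaged over repeated records. The function name and the synthetic signals (random sources, a scaled and delayed copy at the second receiver, additive noise) are assumptions for illustration.

```python
import numpy as np

def coherence(shots1, shots2):
    """Magnitude-squared coherence from repeated records.

    shots1, shots2: arrays of shape (n_shots, n_samples) holding the
    records at receiver 1 and receiver 2 for each repetition of the test.
    """
    S1 = np.fft.rfft(shots1, axis=1)
    S2 = np.fft.rfft(shots2, axis=1)
    g12 = np.mean(S1 * np.conj(S2), axis=0)      # averaged cross-power spectrum
    g11 = np.mean(np.abs(S1) ** 2, axis=0)       # averaged auto-power spectra
    g22 = np.mean(np.abs(S2) ** 2, axis=0)
    return np.abs(g12) ** 2 / (g11 * g22)

rng = np.random.default_rng(0)
n_shots, n_samples = 20, 512
src = rng.standard_normal((n_shots, n_samples))
rec2_clean = 0.5 * np.roll(src, 50, axis=1)                  # linear, noise-free
rec2_noisy = rec2_clean + rng.standard_normal((n_shots, n_samples))

coh_clean = coherence(src, rec2_clean)          # equal to 1 at all frequencies
coh_noisy = coherence(src, rec2_noisy)          # well below 1
coh_single = coherence(src[:1], rec2_noisy[:1]) # a single pair always gives 1
```

The last line shows why several pairs of signals are needed: with a single pair the estimate is identically 1 regardless of the noise level, so it carries no information on signal quality.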

As an example, Fig. 5 shows the spectral quantities for a pair of receivers with 18 m spacing, selected from a test performed using a weight-drop source. Together with the cross-power spectrum phase, the coherence function and the auto-power spectra at the two receivers are reported. These other quantities give a clear picture of the frequency range over which most of the energy is concentrated and hence there is a high signal-to-noise ratio.

Fig. 5 Example of a two-receiver data elaboration (source: 130 kg weight-drop, inter-receiver distance 18 m): a cross-power spectrum phase (wrapped); b coherence function; c auto-power spectrum (receiver 1); d auto-power spectrum (receiver 2) (Foti 2000)

Other important concerns are near-field effects and spatial aliasing in the recorded signals. In this respect, usually a filtering criterion (function of the testing setup) is applied to the dispersion data (Ganji et al. 1998), e.g., only frequencies for which the following relationship is satisfied are retained:

where \( \lambda_{R} \left( \omega \right) = V_{R} \left( \omega \right)/f \) is the estimated wavelength, D is the source-first geophone distance, and X is the inter-receiver spacing (Fig. 6). Typically, the receiver positions are such that X and D are equal, in accordance with the results of some parametric studies about the optimal test configuration (Sanchez-Salinero 1987).

Fig. 6 Acquisition schemes for 2-station SASW: a common receiver mid-point; b common source (Foti 2000)

The above filtering criterion assumes that near-field effects are negligible if the first receiver is placed at least half a wavelength away from the source for a given frequency in the spectral analysis. Such an assumption is acceptable in a normally dispersive site, i.e., a site having stiffness increasing with depth, but it can be too optimistic for more complex situations (Tokimatsu 1995). For this reason and in order to avoid a significant loss of data, inversion methods that take into account near field effects have been proposed (Roesset et al. 1991, Ganji et al. 1998).

For the aforementioned considerations, a single testing configuration gives information only for a particular frequency range, which is dependent on the receiver positions. The test is then repeated using a variety of geometrical configurations that include adapting the source type to the actual configuration, i.e., lighter sources (hammers) are used for high frequencies (small receiver spacing) and heavier ones (weight-drop systems) for low frequencies (large receiver spacing). Usually five or six setups are used, moving source and receivers according to a common-receiver-midpoint scheme (Nazarian and Stokoe II 1984).

Typically, the test is repeated for each testing configuration in a forward and reverse direction, moving the source from one side to the other with respect to the receivers (Fig. 6a). Such a procedure is quite time consuming, but it is required to avoid the drift that can be caused by instrument phase shifts between the receivers, since the analysis process is based on a delicate phase difference measurement. Yet, very often the measurements are conducted using a common source scheme (Fig. 6b) in order to avoid the need for moving the source, especially when it cannot be easily moved (i.e., large and heavy sources).

Finally, the information collected in several testing configurations is assembled (Fig. 7) and averaged to estimate the experimental dispersion curve at the site, which will be used for the subsequent inversion process.

Fig. 7 Assembling dispersion curve branches in the SASW method (Foti 2000)

A crucial task in the interpretation of the SASW test is the unwrapping of the cross-power spectrum phase. The phase is obtained in modulo-2π form, which is very difficult to interpret and unsuitable for further processing (Poggiagliolmi et al. 1982). The passage to an unwrapped (full-phase) curve is necessary for the computation of the time delay as a function of frequency (see Eq. 1).

Usually, automated algorithms are applied for this task (Poggiagliolmi et al. 1982), but external noise can produce fictitious jumps in the wrapped phase, which drastically damage the results. The operator may not always be able to correct such unwrapping errors on the basis of judgement and, in any case, this is a subjective procedure, which precludes the automation of the process. The unwrapping procedure begins in the low frequency range, where the signal-to-noise ratio is very low, and an error in the unwrapping at low frequencies also affects the velocity estimates in the frequency range where the signal-to-noise ratio is good. An automated procedure based on a least-squares interpolation of the cross-power spectrum phase has also been proposed (Nazarian and Desai 1993).
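The propagation of an unwrapping error can be illustrated with a short sketch based on NumPy's `unwrap`. The linear phase (a constant 0.05 s delay) and the size and position of the phase errors are hypothetical values chosen to trigger a fictitious jump.

```python
import numpy as np

freq = np.linspace(0.0, 100.0, 201)            # Hz
delay = 0.05                                   # s, constant time delay
true_phase = 2.0 * np.pi * freq * delay        # full (unwrapped) phase

# Noise-free case: the modulo-2*pi phase is unwrapped correctly
wrapped = np.angle(np.exp(1j * true_phase))
unwrapped = np.unwrap(wrapped)

# Noisy case: phase errors on two low-frequency samples (hypothetical values)
error = np.zeros_like(true_phase)
error[10] = 2.0
error[11] = 4.0
wrapped_noisy = np.angle(np.exp(1j * (true_phase + error)))
unwrapped_noisy = np.unwrap(wrapped_noisy)

# Offset left on all frequencies above the corrupted samples
offset = unwrapped_noisy[12:] - true_phase[12:]
```

In the noisy case every sample above the corrupted ones is shifted by a full cycle (2π), so the time delay, and hence the phase velocity, is biased even at frequencies where the data themselves are clean.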

3.2 Multi-channel Analysis of Surface Waves (MASW)

The use of a multi-station testing setup can introduce several advantages in surface wave testing. In this case, the motion generated by the source is detected simultaneously at several receiver locations in line with the source itself. This testing setup is similar to the one used for seismic refraction/reflection surveys, providing interesting synergies between different methods (Foti et al. 2003; Ivanov et al. 2006; Socco et al. 2010a).

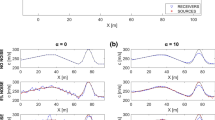

For surface wave analysis, the experimental data are typically transformed from the time-offset domain to different domains, where the dispersion curve is easily extracted from the spectral maxima. For example, by applying a double Fourier transform to field data, the dispersion curve can be identified as the maxima in the frequency-wavenumber domain (Fig. 8). Other methods use different transforms obtaining similar results, e.g., the ω-p (frequency-slowness) domain representation obtained by the slant-stack transform (McMechan and Yedlin 1981) or the MASW method (Park et al. 1999). The formal equivalence of these approaches can be proved considering the mathematical properties of the different transforms (Santamarina and Fratta 1998) and there is practically no difference in the obtained dispersion curves (Foti 2000). An alternative method for extracting the surface wave dispersion curve from multistation data is based on the linear regression of phase versus offset at each frequency (Strobbia and Foti 2006).

Fig. 8 Example of processing of experimental data using the frequency-wavenumber analysis: a field data; b fk domain; c dispersion curve (Foti 2005)

In theory, transform-based methods allow the identification of several distinct Rayleigh modes. Tselentis and Delis (1998) showed that the fk spectrum for surface waves in layered media can be written as the following sum of modal contributions:

$$ F(f,k) = \sum\limits_{m} S_{m} (f)\left[ \sum\limits_{n = 1}^{N} e^{ - \alpha_{m} (f)x_{n} } e^{i\left( k - k_{m} (f) \right)x_{n} } \right] \quad (3) $$

where \( S_{m} \) is a source function, N is the number of receivers, \( x_{n} \) is the distance from the source of the nth receiver, and \( \alpha_{m} \) and \( k_{m} \) are, respectively, the attenuation and the wavenumber for the mth mode. Note that the above expression is valid in the far field (Aki and Richards 1980) and that the geometrical spreading is neglected because it can be accounted for in processing (Tselentis and Delis 1998). Observing the quantity in the square brackets in Eq. 3, it is evident that, if material attenuation is neglected, the maxima of the energy spectrum are obtained for \( k = k_{m} (f) \). Furthermore, it can be shown that if the search for the maxima is conducted without neglecting the material attenuation, the conclusion is the same, i.e., the accuracy is not conditioned by material attenuation (Tselentis and Delis 1998).

Once the modal wavenumbers have been estimated for each frequency, they can be used to evaluate the dispersion curve, recalling that phase velocity is given by the ratio between frequency and wavenumber.
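A minimal sketch of the picking of spectral maxima in the frequency-wavenumber domain is given below. For each frequency, the receiver spectra are summed with trial phase shifts \( e^{ikx_{n}} \) and the wavenumber giving the largest amplitude is converted to phase velocity as 2πf/k. The function name, the array geometry and the single-mode synthetic dispersion law are assumptions for illustration; attenuation, noise and higher modes are ignored.

```python
import numpy as np

def fk_dispersion(traces, dt, offsets, k_trial, fmin, fmax):
    """Phase velocity picked at the f-k spectral maximum of each frequency.

    traces: array (n_receivers, n_samples); offsets: receiver distances (m);
    k_trial: trial wavenumbers (rad/m); fmin, fmax: analysis band (Hz).
    """
    freq = np.fft.rfftfreq(traces.shape[1], dt)
    U = np.fft.rfft(traces, axis=1)                    # (n_receivers, n_freq)
    band = (freq >= fmin) & (freq <= fmax)
    steer = np.exp(1j * np.outer(k_trial, offsets))    # (n_k, n_receivers)
    spectrum = np.abs(steer @ U[:, band])              # (n_k, n_band)
    k_peak = k_trial[np.argmax(spectrum, axis=0)]
    return freq[band], 2.0 * np.pi * freq[band] / k_peak

# Synthetic single-mode wavefield with a hypothetical dispersion law
dt, nt = 0.002, 1024
offsets = 2.0 + 2.0 * np.arange(24)                    # 24 receivers, 2 m apart
f = np.fft.rfftfreq(nt, dt)
v_true = 150.0 + 150.0 * np.exp(-f / 15.0)             # m/s, decreasing with f
amp = np.exp(-((f - 20.0) / 15.0) ** 2)                # source spectrum
U_syn = amp * np.exp(-1j * np.outer(offsets, 2.0 * np.pi * f / v_true))
traces = np.fft.irfft(U_syn, n=nt, axis=1)

k_trial = np.linspace(0.01, 1.5, 3000)                 # below pi/dx = 1.57 rad/m
f_pick, v_pick = fk_dispersion(traces, dt, offsets, k_trial, 10.0, 30.0)
```

The trial wavenumbers are kept below π/Δx, the spatial aliasing limit for the 2 m receiver spacing assumed here, and the picked curve follows the imposed dispersion law within the step of the wavenumber grid.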

Using a very large number of signals (256), Gabriels et al. (1987) were able to identify six experimental Rayleigh modes for a site. They then used these modes for the inversion process. The possibility of using modal dispersion curves is a great advantage with respect to methods giving only a single dispersion curve (such as the two-station method) because having more information means a better constrained inversion. Nevertheless, it has to be considered that, in standard practice, the number of receivers for engineering applications is typically small and the resulting reduced spatial sampling strongly affects the resolution of the surface wave test. Receiver spacing influences aliasing in the wavenumber domain, so that if high frequency components are sought, the spacing must be small. On the other hand, the total length of the receiver array influences the resolution in the wavenumber domain. With a finite number of receivers, this generates a trade-off similar to the one existing between resolution in time and in frequency, as the smallest wavenumber difference that can be resolved is inversely proportional to the total length of the acquisition array. Using a simple 2D Fourier Transform on the original dataset to obtain the experimental fk domain would lead to a wavenumber sampling not sufficient to obtain a reliable estimate of the dispersion curve. The use of zero padding or advanced spectral analysis techniques such as beamforming or MUSIC (Zywicki 1999) makes it possible to locate the correct position of the maxima in the fk panel (Fig. 9).

Effect of zero padding on resolution in the wavenumber domain: slice of the fk panel for a given frequency (Foti 2005)

Unfortunately, once a survey has been carried out with a certain array configuration, no signal analysis strategy can improve the real resolution. Hence, in the subsequent signal analysis stage, it will no longer be possible to separate modal contributions when more than a single mode plays a relevant role in the propagation (Foti et al. 2000). This aspect is exemplified in Fig. 10, where slices of the fk spectrum for a given frequency are reported for two different synthetic datasets. If a large number of receivers is used to estimate the fk spectrum, the resolution is very high and the energy peaks are well defined, but if the number of receivers is low, the resolution is very poor. With poor resolution it is only possible to locate a single peak in the fk panel, which in principle is associated not with a single mode but with several superposed modes. The concept of apparent phase velocity has been introduced to denote the velocity of propagation corresponding to this single peak representing several modes (Tokimatsu 1995). In the example of Fig. 10a, the fundamental mode is the dominant mode in the propagation, meaning that almost all energy is associated with this mode. In this situation, the apparent phase velocity is the phase velocity associated with the fundamental mode, and the inversion process can be simplified by inverting the apparent dispersion curve as a fundamental mode. This situation is usual in soil deposits where stiffness increases with depth with no marked impedance jumps between different layers. On the contrary, in the example of Fig. 10b, the fundamental mode is still the one carrying the most energy, but it is no longer dominant, meaning that higher modes play a relevant role in the propagation. If few receivers are used, a single peak will be observed and a single value of the phase velocity will be obtained. 
This value is not necessarily the phase velocity of one of the modes involved in the propagation, but it is rather a sort of average value, often referred to as the apparent phase velocity or effective phase velocity (Tokimatsu 1995). In this case, it is no longer possible to use inversion processes based on the fundamental mode or on modal dispersion, but it is necessary to use an algorithm that can account for mode superposition effects (Lai 1998) and for the actual testing configuration (O’Neill 2004). This situation is usual when strong impedance contrasts are present in the soil profile or in inversely dispersive profiles, i.e., profiles in which soft layers underlie stiff layers.

Influence of the effective wavenumber resolution on the dispersion curve a dominant fundamental mode b relevant higher modes (Foti 2005)

The dispersion curves obtained with fk analysis on the synthetic dataset used for Fig. 10b are reported in Fig. 11. As explained above, if a sufficiently high number of receivers is used, it will be possible to obtain the modal dispersion curves (Fig. 11a), whereas with the number of receivers used in standard practice, a single apparent dispersion curve will be obtained (Fig. 11b).

Influence of wavenumber resolution on the dispersion curve from synthetic data: a 256 receivers; b 24 receivers (Foti 2000)

As mentioned above, the apparent dispersion curve is dependent on the spatial array so that if higher modes are relevant for a given site, the inversion process will be cumbersome. On the other hand, if the fundamental mode is dominant, the inversion process can be noticeably simplified. However, it is not always clear from the simple inspection of the experimental dispersion curve if higher modes are involved.

4 Passive Source Methods

4.1 Refraction Microtremor (ReMi)

Similarly to the MASW method, the multi-station approach can be applied to seismic noise recordings collected by 1D arrays. This technique, generally known as Refraction Microtremor (ReMi), was recently introduced by Louie (2001), who proposed as a basis for the velocity spectral analysis the p-τ transformation, or “slantstack”, described by Thorson and Claerbout (1985). This transformation takes a record section of multiple seismograms, with seismogram amplitudes relative to distance and time (x-t), and converts them to amplitudes relative to the ray parameter p (the inverse of apparent velocity) and an intercept time τ. It is familiar to array analysts as “beam forming”, and has objectives similar to those of the two-dimensional Fourier-spectrum or “f-k” analysis as described by Horike (1985).

The p-τ transform is a simple line integral across a seismic record A(x,t) in distance x and time t:

where the slope of the line p = dt/dx is the inverse of the apparent velocity V a in the x direction. In practice, x is discretized into nx intervals at a finite spacing dx, that is, x = j dx where j is an integer. Likewise, time is discretized with t = i dt (with dt usually 0.001-0.01 s). The discrete form of the p-τ transform, for negative and positive p = p0 + l dp and for τ = k dt, is called the slantstack:

The calculation starts from an initial p0 = −p max where p max defines the inverse of the minimum velocity that will be tested. np is set to vary between one and two times nx. dp typically ranges from 0.0001 to 0.0005 s/m, and is set to cover the interval from −p max to p max in 2np slowness steps. In this way, energy propagating in both directions along the refraction receiver line will be considered. Amplitudes at times t = τ + p x falling between sampled time points are estimated by linear interpolation.
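The discrete slantstack described above can be sketched in a few lines of Python. This is an illustrative implementation under stated assumptions, not the code of Louie (2001): the record is a list of equally spaced traces, the slowness axis runs from −p max to p max in 2np steps, and amplitudes between samples are obtained by linear interpolation. The function name, the argument names and the synthetic record are hypothetical.

```python
def slant_stack(record, dx, dt, p_max, n_p):
    """Discrete p-tau transform of record[j][i] (trace j at x = j*dx, t = i*dt).

    Returns the slowness axis and panel[l][k], the sum over traces of the
    amplitude at t = k*dt + p_l * j*dx.
    """
    n_t = len(record[0])
    dp = p_max / n_p
    slownesses = [-p_max + l * dp for l in range(2 * n_p + 1)]
    panel = []
    for p in slownesses:
        row = [0.0] * n_t
        for j, trace in enumerate(record):
            shift = p * j * dx / dt  # delay in samples, possibly fractional
            for k in range(n_t):
                pos = k + shift
                if pos < 0 or pos > n_t - 1:
                    continue  # outside the recorded time window
                i0 = min(int(pos), n_t - 2)
                w = pos - i0
                # linear interpolation between neighbouring samples
                row[k] += (1.0 - w) * trace[i0] + w * trace[i0 + 1]
        panel.append(row)
    return slownesses, panel


# Synthetic record: a spike moving across 4 traces with slowness 2 (dx = dt = 1)
n_x, n_t = 4, 20
record = [[0.0] * n_t for _ in range(n_x)]
for j in range(n_x):
    record[j][3 + 2 * j] = 1.0
slownesses, panel = slant_stack(record, dx=1.0, dt=1.0, p_max=4.0, n_p=4)
```

In this synthetic case the four spikes align only along the line with slowness 2 and intercept sample 3, so the stacked amplitude is largest there.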

The distances used in ReMi analysis are simply the distances between geophones starting from one end of the array. As described by Thorson and Claerbout (1985), the traces do not have to sample the range of distance evenly. The intercept times after transformation are thus simply the arrival times at one end of the array.

Each trace in the p-τ transformed record contains the linear sum across a record at all intercept times, at a single slowness or velocity value. The next step takes each p-τ trace in A(p, τ) (Eq. 5) and computes its complex Fourier transform F A (p,f) in the τ or intercept time direction and its power spectrum S A (p,f)

Then, the two p-τ transforms of a record obtained by considering the forward and reverse directions of propagation along the receiver line are summed together. To sum energy from the forward and reverse directions into one slowness axis that represents the absolute value of p, |p|, the slowness axis is folded and summed about p = 0.
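The folding step can be illustrated with a small sketch. It assumes a power panel sampled on a symmetric slowness axis with an odd number of rows, the central row being p = 0; counting the p = 0 row only once is a choice made here for illustration. The function name and the numbers are hypothetical.

```python
def fold_slowness_axis(power):
    """Fold power[l][m] (slowness index l, frequency index m) about p = 0.

    Row 0 of the result is p = 0; row n holds the sum of the power at
    +n*dp and -n*dp, i.e. the power at |p| = n*dp.
    """
    n_rows = len(power)
    if n_rows % 2 == 0:
        raise ValueError("expected an odd number of slowness rows")
    centre = n_rows // 2
    folded = [list(power[centre])]
    for offset in range(1, centre + 1):
        forward = power[centre + offset]
        reverse = power[centre - offset]
        folded.append([a + b for a, b in zip(forward, reverse)])
    return folded


# Hypothetical panel: 5 slowness rows (-2dp .. +2dp) and 2 frequencies
power = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]
folded = fold_slowness_axis(power)
```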

This operation completes the transform of a record from distance-time (x-t) into the p-frequency (p-f) space. The ray parameter p for these records is the horizontal component of slowness (inverse velocity) along the array. In analyzing more than one record from a ReMi deployment the individual records’ p-f images are added point-by-point into an image of summed power.

Therefore, the slowness-frequency analysis produces a record of the total spectral power considering all records from a site, which plots within slowness-frequency (p-f) axes. If one identifies trends within this domain where a coherent phase has significant power, then the slowness-frequency picks can be plotted on a typical frequency-velocity diagram for dispersion analysis. The p-τ transform is linear and invertible, and can in fact be completed equivalently in the spatial and temporal frequency domains (Thorson and Claerbout 1985). Following Louie (2001), because linear geophone arrays are used and the location of environmental seismic noise sources cannot be estimated, an interpreter cannot simply pick the phase velocity of the largest spectral ratio at each frequency as a dispersion curve, as MASW analyses effectively do. On the contrary, an interpreter must try to pick the lower edge of the lowest-velocity, but still reasonable, peak ratio. Since the arrays are linear and do not record an on-line triggered source, some noise energy will arrive obliquely and appear on the slowness-frequency images as peaks at apparent velocities V a higher than the real in-line phase velocity v (for a plane wave arriving at an angle θ from the array axis, V a = v/cos θ ≥ v). In the presence of an isotropic or weakly heterogeneous wave field, it can be demonstrated (Louie 2001; Mulargia and Castellaro 2008) that out-of-line wave fronts do not significantly affect the Rayleigh wave dispersion curve. However, this is not true when markedly directional effects exist. Cox and Beekman (2010) have shown the high experimental uncertainty associated with array orientation and source position. In this respect, 2D arrays (see next section) provide the capability to resolve obliquely incident energy. Examples of the application of the ReMi technique can be found in Stephenson et al. (2005) and Richwalski et al. (2007).

Interestingly, Fig. 12 shows that, starting from the same 1D recording dataset, the dispersion curve obtained using the ESAC signal analysis (see the following section for details on the ESAC analysis) automatically corresponds to the lower edge of the energy maxima within the ReMi frequency-velocity plot. Combining the ReMi and ESAC analyses would therefore make it possible to eliminate the manual velocity-picking step introduced in Louie (2001), which is questionable and unclear, especially for inexperienced interpreters.

4.2 Two-dimensional (2D) Arrays

Seismic arrays were originally proposed at the beginning of the 1960s as a new type of seismological tool for the detection and identification of nuclear explosions (Frosch and Green 1966). Since then, seismic arrays have been applied at various scales for many geophysical purposes. At the seismological scale, they were used to obtain refined velocity models of the Earth’s interior (e.g., Birtill and Whiteway 1965; Whiteway 1966; Kværna 1989; Kárason and van der Hilst 2001; Ritter et al. 2001; Krüger et al. 2001). A recent review on array applications in seismology can be found in Douglas (2002) and in Rost and Thomas (2002). At smaller scales, since the pioneering work of Aki (1957), seismic arrays have been used for the characterization of surface wave propagation, and the extraction of information about the shallow subsoil structure (i.e., the estimation of the local S-wave velocity profile). Especially in the last decades, due to the focus of seismologists and engineers on estimating the amplification of earthquake ground motion as a function of local geology, and the improvements in the quality and computing power of instrumentation, interest in analyzing seismic noise recorded by arrays (e.g., among others, Horike 1985; Hough et al. 1992; Ohori et al. 2002; Okada 2003; Scherbaum et al. 2003; Parolai et al. 2005) has grown.

4.2.1 Frequency-wavenumber (f-k) Based Methods

The phase velocity of surface waves can be extracted from noise recordings obtained by 2D seismic arrays by using different methods, originally developed for monitoring nuclear explosions. Here we will illustrate the two most frequently used methods for f-k analysis: the Beam-Forming Method (BFM) (Lacoss et al. 1969) and the Maximum Likelihood Method (MLM) (Capon 1969).

The estimate of the f-k spectra P b (f,k) by the BFM is given by:

where f is the frequency, k the two-dimensional horizontal wavenumber vector, n the number of sensors, ϕ lm the estimate of the cross-power spectra between the lth and the mth data, and X l and X m are the coordinates of the lth and mth sensors, respectively.

The MLM gives the estimate of the f-k spectra P m (f,k) as:

Capon (1969) showed that the resolving power of the MLM is higher than that of the BFM; however, the MLM is more sensitive to measurement errors.

From the peak in the f-k spectrum occurring at coordinates k xo and k yo for a certain frequency f 0, the phase velocity c 0 can be calculated by:

An extensive description of these methods can be found in Horike (1985) and Okada (2003).
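The beam-forming estimate and the conversion of its peak into a phase velocity can be sketched as follows. The sketch assumes the double-sum form of the BFM, with the cross-spectra weighted by exp{i k·(X l − X m)}, and a phase velocity equal to 2πf 0 divided by the modulus of the peak wavenumber; sign conventions differ between authors, and the sensor coordinates, wavenumber grid and synthetic plane wave are hypothetical.

```python
import cmath
import math


def beamform_power(cross_spectra, coords, kx, ky):
    """Beam-forming f-k estimate at (kx, ky) for one frequency."""
    total = 0.0 + 0.0j
    for l, (xl, yl) in enumerate(coords):
        for m, (xm, ym) in enumerate(coords):
            phase = kx * (xl - xm) + ky * (yl - ym)
            total += cross_spectra[l][m] * cmath.exp(1j * phase)
    return total.real


def peak_phase_velocity(cross_spectra, coords, f0, k_axis):
    """Grid search of the f-k peak; returns (phase velocity, peak wavenumber)."""
    _, kx0, ky0 = max(
        (beamform_power(cross_spectra, coords, kx, ky), kx, ky)
        for kx in k_axis
        for ky in k_axis
    )
    k_norm = math.hypot(kx0, ky0)
    if k_norm == 0.0:
        raise ValueError("peak at k = 0 corresponds to infinite velocity")
    return 2.0 * math.pi * f0 / k_norm, (kx0, ky0)


# Synthetic noise-free plane wave at 5 Hz recorded by 5 irregular sensors (m)
coords = [(0.0, 0.0), (10.0, 0.0), (3.0, 9.0), (-7.0, 5.0), (-4.0, -8.0)]
k_axis = [0.02 * i for i in range(-10, 11)]        # rad/m
k_true = (k_axis[14], k_axis[13])                  # about (0.08, 0.06) rad/m
field = [cmath.exp(-1j * (k_true[0] * x + k_true[1] * y)) for x, y in coords]
cross_spectra = [[a * b.conjugate() for b in field] for a in field]
c0, k_peak = peak_phase_velocity(cross_spectra, coords, 5.0, k_axis)
```

With real data the cross-spectra would be averaged over many time windows, and the wavenumber grid would be chosen according to the resolution and aliasing limits of the array.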

As discussed in Sect. 2.2 about the MASW method, the f-k analyses presented here can be also applied to recordings collected using 1D geometry.

The estimates EPb and EPm of the true Pb and Pm f-k spectra may be considered the convolution of the true functions with a frequency window function Wf and the wavenumber window functions WB and WM for the BFM and MLM, respectively (Lacoss et al. 1969). The first window function, Wf, is the transfer function of the tapering function applied to the signal time windows (Kind et al. 2005). The function WB is referred to differently by various authors (e.g., “spatial window function” by Lacoss et al. 1969, and “beam-forming array response function” by Capon 1969), and is hereafter termed the Array Response Function (ARF). The ARF depends only on the distribution of stations in the array, and for the wavenumber vector k0 has the form (Horike 1985)

Simply speaking, it represents a kind of spatial filter for the wavefield. The main advantage of the MLM with respect to the BFM involves the use of an improved wavenumber window WM. That is, for a wavenumber k0, this window function may be expressed in the form

where

and qjl represents the elements of the cross-power spectral matrix. It is evident that WM depends not only on the array configuration through the function WB, but also on the quality (i.e., signal-to-noise ratio) of the data (Horike 1985). In fact, the wavenumber response is modified by using the weights Aj (f, k0), which depend directly on the elements qlj(f). In practice, WM allows the monochromatic plane wave travelling at a velocity corresponding to the wavenumber k0 to pass undistorted, while it suppresses, in an optimum least-squares sense, the power of those waves travelling with velocities corresponding to wavenumbers other than k0 (Capon 1969). Or, in other words, coherent signals are associated with large weights of Aj and their energy is emphasized in the f-k spectrum. On the contrary, if the coherency is low, the weights Aj are small and the energy in the f-k spectrum is damped (Kind et al. 2005). This automatic change of the main-lobe and side-lobe structure for minimizing the leakage of power from the remote portion of the spectrum has a direct positive effect on the Pm function, and consequently on the following velocity analysis. However, considering the dependence of WM on WB, it is clear that the array geometry is a factor having a strong influence on both EPb and EPm. In fact, similarly to every kind of filter, several large side lobes located around the major central peak can remain in the f-k spectra (Okada 2003) and determine serious biases in the velocity and back-azimuth estimates. In particular, the side-lobe height and main-lobe width within WB control the leakage of energy and resolution, respectively (Zywicki 1999).

As a general criterion, the error in the velocity analysis due to the presence of spurious peaks in the f-k spectra may be reduced using distributions of sensors for which the array response approaches a two-dimensional δ-function. For that reason, it is considered good practice to undertake a preliminary evaluation of the array response when the survey is planned. Irregular configurations of even only a few sensors should be preferred, because they allow one to obtain a good compromise between a large aperture, which is necessary for sharp main peaks in the EPb and EPm, and small inter-sensor distances, which are needed for large aliasing periods (Kind et al. 2005).
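A preliminary evaluation of the array response can be sketched with a few lines of Python. The sketch assumes the normalized form of the ARF, i.e., the squared modulus of the sum of exp{i (k − k0)·X j} over the sensors divided by n²; the half-width search along the k x axis is a simplification introduced here, and all names and coordinates are hypothetical.

```python
import cmath
import math


def array_response(coords, kx, ky, kx0=0.0, ky0=0.0):
    """Normalized array response at (kx, ky) for a wave with wavenumber k0."""
    n = len(coords)
    s = sum(cmath.exp(1j * ((kx - kx0) * x + (ky - ky0) * y)) for x, y in coords)
    return abs(s) ** 2 / n ** 2


def main_lobe_half_width(coords, dk=0.001, k_limit=10.0):
    """First kx (rad/m) at which the response falls below one half."""
    n_steps = int(k_limit / dk)
    for i in range(1, n_steps + 1):
        k = i * dk
        if array_response(coords, k, 0.0) < 0.5:
            return k
    return None


# Two sensors 10 m apart: the response along kx is cos^2(5 kx)
pair = [(0.0, 0.0), (10.0, 0.0)]
half_width = main_lobe_half_width(pair)

# Hypothetical irregular 2D array (coordinates in m)
coords = [(0.0, 0.0), (10.0, 0.0), (3.0, 9.0), (-7.0, 5.0), (-4.0, -8.0)]
centre_value = array_response(coords, 0.0, 0.0)
```

Mapping the response over a grid of wavenumbers shows the main-lobe width and the side-lobe positions before any sensor is deployed.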

Figure 13 shows an example of a suitable 2D array configuration and its array response function, and uses a simple example to clarify the role and importance of the array response function. Figure 13b depicts the ideal f-k plot related to a 5 Hz wave with a velocity of 300 m/s, for an optimal distribution of sensors and of seismic sources in the far field (left panel), the array response function (middle panel), and the convolution of the two (right panel). In particular, the latter plot is the f-k image that can effectively be estimated using a finite number of sensors and the selected geometry in the optimal case of a noise-free dataset. Comparing the ideal (left panel) and experimental (right panel) f-k images, it is clear that the use of a limited number of sensors introduces blurring effects. As discussed in Picozzi et al. (2010b), the removal by deconvolution of the array response from the f-k estimates can improve the phase-velocity estimation, reducing the relevant level of uncertainty.

It is worth noting that the array transfer function is also a powerful tool for the planning of surface wave surveys. Indeed, once the array geometry is designed, it is possible to evaluate a priori the array resolution with respect to large wavelengths, and the aliasing related to short wavelengths. Figure 14a shows for the array configuration of Fig. 13a an example of poor resolution with respect to a 1 Hz wave with 300 m/s velocity. That is to say, within the experimental f-k image resulting from the convolution of the ideal f-k spectra and the array response function (Fig. 14a, right panel) it is not possible to identify the ideal circle of wavenumbers related to the wave propagation, but rather a unique wide peak with the maximum in the centre of the f-k image corresponding to infinite velocity.

On the other hand, Fig. 14b depicts for the same array configuration the aliasing effects hampering the f-k spectra image for a 30 Hz wave and 300 m/s velocity. In fact, for such a short wavelength, the final f-k image (Fig. 14b, right panel) starts to be corrupted by aliasing related artefacts that make it difficult to identify the correct wave velocity.

It is worth noting that given an array configuration, it is straightforward to exploit the array transfer function for identifying those wavelengths (i.e., combinations of phase velocities and frequencies) for which the array resolution is not adequate or those affected by aliasing (Fig. 14c). This aspect has to be carefully taken into account for planning the surveys, considering that the size of the array has to be specifically tuned for the frequency range of interest.

4.2.2 SPatial Auto-Correlation (SPAC) and Extended Spatial Auto-Correlation (ESAC)

Aki (1957, 1965) showed that phase velocities in sedimentary layers can be determined using a statistical analysis of ambient noise. He assumed that noise represents the sum of waves propagating without attenuation in a horizontal plane in different directions with different powers, but with the same phase velocity for a given frequency. He also assumed that waves with different propagation directions and different frequencies are statistically independent. A spatial correlation function can therefore be defined as

where u(x, y,t) is the velocity observed at point (x,y) at time t; r is the inter-station distance; λ is the azimuth and < > denotes the ensemble average. An azimuthal average of this function is given by

For the vertical component, the power spectrum ϕ(ω) can be related to ϕ(r) via the zeroth order Hankel transform

where ω is the angular frequency, c(ω) is the frequency-dependent phase velocity, and J 0 is the zero order Bessel function. The space-correlation function for one angular frequency ω 0, normalized to the power spectrum, will be of the form

By fitting the azimuthally averaged spatial correlation function obtained from measured data to the Bessel function, the phase velocity c(ω 0) can be calculated. A fixed value of r is used in the spatial autocorrelation method (SPAC). However, Okada (2003) and Ohori et al. (2002) showed that, since c(ω) is a function of frequency, better results are achieved by fitting the spatial-correlation function at each frequency to a Bessel function, which depends on the inter-station distances (extended spatial autocorrelation, ESAC). For every couple of stations the function ϕ(ω) can be calculated in the frequency domain by means of (Malagnini et al. 1993; Ohori et al. 2002; Okada 2003):

where m S jn is the cross-spectrum for the mth segment of data, between the jth and the nth station; M is the total number of used segments. The power spectra of the mth segments at station j and station n are m S jj and m S nn , respectively.

The space-correlation values for every frequency are plotted as a function of distance, and an iterative grid-search procedure can then be performed using equation (20) in order to find the value of c(ω 0) that gives the best fit to the data. The tentative phase velocity c(ω 0) is generally varied over large intervals (e.g., between 100 and 3,000 m/s) in small steps (e.g., 1 m/s). The best fit is achieved by minimizing the root mean square (RMS) of the differences between the values calculated with Eqs. (16) and (15). Data points that differ by more than two standard deviations from the value obtained with the minimum-misfit velocity can be removed before the next iteration of the grid search. Using this procedure, Parolai et al. (2006) allowed a maximum of three grid-search iterations. An example of the application of this procedure is shown in Fig. 15.
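A single pass of such a grid search can be sketched as follows. The sketch assumes that the normalized space-correlation at distance r is modelled by J 0(ω 0 r/c); the Bessel function is evaluated from its integral representation to avoid external libraries, and the outlier rejection and repeated iterations described above are omitted. Function names, distances and the synthetic data are hypothetical.

```python
import math


def bessel_j0(x, n_steps=64):
    """J0(x) = (1/pi) * integral over [0, pi] of cos(x sin(theta)), midpoint rule."""
    h = math.pi / n_steps
    return sum(math.cos(x * math.sin((i + 0.5) * h)) for i in range(n_steps)) / n_steps


def esac_fit(distances, correlations, freq_hz, c_min=100.0, c_max=3000.0, c_step=1.0):
    """Return (best phase velocity, RMS misfit) for one frequency."""
    omega = 2.0 * math.pi * freq_hz
    n_c = int(round((c_max - c_min) / c_step)) + 1
    best_c, best_rms = None, float("inf")
    for i in range(n_c):
        c = c_min + i * c_step
        squared = [
            (obs - bessel_j0(omega * r / c)) ** 2
            for r, obs in zip(distances, correlations)
        ]
        rms = math.sqrt(sum(squared) / len(squared))
        if rms < best_rms:
            best_c, best_rms = c, rms
    return best_c, best_rms


# Synthetic noise-free correlations at 5 Hz for a true velocity of 300 m/s
distances = [5.0, 10.0, 20.0, 30.0, 40.0, 50.0]
correlations = [bessel_j0(2.0 * math.pi * 5.0 * r / 300.0) for r in distances]
best_c, best_rms = esac_fit(distances, correlations, 5.0)
```

Storing the RMS for every tested velocity, instead of only the minimum, would give the misfit curves that reveal the plateaus and edge minima discussed for real data.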

The ESAC method was adopted to derive the phase velocities for all frequencies composing the Fourier spectrum of the data. Figure 15 (top) shows examples of the space-correlation values computed from the data together with the best-fitting Bessel function. Figure 15 (bottom) shows the corresponding RMS errors as a function of the tested phase velocities, exhibiting clear minima. For high frequencies, the absolute minimum sometimes corresponds to the minimum velocity chosen for the grid-search procedure. This solution is then discarded, because a smooth variation of the velocity between close frequencies is required. At frequencies higher than a certain threshold, the phase velocity might increase linearly. This effect is due to spatial aliasing, which limits the upper bound of the usable frequency band and depends on the S-wave velocity structure at the site and on the minimum inter-station distance. At low frequencies, the RMS error function clearly indicates the lower boundary for acceptable phase velocities, but might not be able to constrain the higher ones (a plateau can appear in the RMS curves). The frequency below which phase differences can no longer be resolved depends on the maximum inter-station distance and on the S-wave velocity structure below the site; below this frequency, a wide range of velocities will explain the observed small phase differences. Zhang et al. (2004) clearly pointed out this problem in Equation (3a) of their article.

Figure 16 shows examples of the final ESAC dispersion curve compared with those obtained from the f-k BFM and MLM analyses. Interestingly, all curves in this example look normally dispersive and are in good agreement with each other over a wide range of frequencies. However, at lower frequencies (i.e., below 5 Hz) the f-k methods provide larger estimates of phase velocity than ESAC. This point was originally discussed by Okada (2003), who defined this ‘f-k degeneration effect’ and concluded that f-k methods are able to use wavelengths up to two to three times the largest interstation distance, whereas with the ESAC method one may investigate wavelengths up to 10 to 20 times the largest interstation distance, making it more reliable in the low-frequency range.

Phase velocity dispersion curves obtained by ESAC (red line), MLM (blue line) and BFM (green line) analysis. The dotted line indicates the theoretical aliasing limit calculated as 4·f·dmin, where dmin is the minimum interstation distance. The factor 4 is used instead of the generally used factor 2, because the minimum distance in the array appears only once. The dashed grey line indicates the lower frequency threshold of the analysis based on 2·π·f·Δk, where Δk is calculated as the half-width of the main peak in the array response function. The continuous grey line indicates the lower frequency threshold of the analysis based on the criterion f·dmax, where dmax is the maximum interstation distance in the array

Over the last decade, new developments of the SPAC method based on the use of few stations and circular arrays have been proposed with the aim of extracting the Rayleigh and Love wave dispersion velocities (see, among others, Tada et al. 2006; Asten 2006; García-Jerez et al. 2008).

4.3 Seismic Noise Horizontal-to-Vertical Spectral Ratio (NHV)

Nakamura (1989) revised the seismic noise Horizontal-to-Vertical (H/V) spectral ratio technique, first proposed by Nogoshi and Igarashi (1970, 1971). The basic principle is that average spectral ratios of ambient vibrations in the horizontal and vertical directions at a single site can supply useful information about the seismic properties of the local subsoil. Since then, in the field of site effect estimation, a large number of studies using this low-cost, fast and therefore attractive technique have been published (e.g., Field and Jacob 1993; Lermo and Chavez-Garcia 1994; Mucciarelli 1998; Bard 1998; Parolai et al. 2001). However, attempts to provide standards for the analysis of seismic noise have only recently been carried out (Bard 1998; SESAME 2003; Picozzi et al. 2005a). An analogous approach was also proposed by considering earthquake records (Lermo and Chavez-Garcia 1993) but, not being directly related to surface wave analysis (at least in its original form), it will not be discussed here.

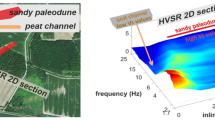

Theoretical considerations (e.g., Tuan et al. 2011) and numerical modelling (e.g., Lunedei and Albarello 2010) suggest that the pattern of the H/V ratios vs. frequency (NHV curve) presents a complex relationship with subsoil major features. On the other hand, most researchers, on the basis of comparison of noise H/V spectral ratios and earthquake site response, agree that, at least with respect to simple stratigraphic configurations, the maximum of the NHV curve provides a fair estimate of the fundamental resonance frequency of a site. This parameter is directly linked to the thickness of the soft sedimentary cover and this makes NHV curves an effective exploratory tool for seismic microzoning studies and geological surveys.

Recent studies (Yamanaka et al. 1994; Ibs-von Seht and Wohlenberg 1999; Delgado et al. 2000a, b; Parolai et al. 2001; D’Amico et al. 2008) showed that noise measurements can be used to map the thickness of soft sediments. Quantitative relationships between this thickness and the fundamental resonance frequency of the sedimentary cover, as determined from the peak in the NHV spectral ratio were calculated for different basins in Europe (e.g., Ibs-von Seht and Wohlenberg 1999; Delgado et al. 2000a).

The approach is based on the assumption that in the investigated area, lateral variations of the S-wave velocity are minor and that it mainly increases with depth following a relationship such as

where v s0 is the surface shear wave velocity, Z = z/z 0 (with z 0 = 1 m) and x describes the depth dependence of velocity. Taking this into account and considering the well-known relation among f r (the resonance frequency), the average S-wave velocity of soft sediments \( \overline{{V_{s} }} \), and its thickness h,

the dependency between thickness and f r thus becomes

where f r is to be given in Hz, v s0 in m/s and h in m.
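The link between resonance frequency and thickness can be checked numerically. The sketch below assumes the power-law profile v s(z) = v s0 (1 + Z)^x with 0 ≤ x < 1 and the quarter-wavelength relation f r = V s/(4h) with V s the travel-time average velocity; integrating the travel time over the layer then gives h = [v s0 (1 − x)/(4 f r) + 1]^(1/(1 − x)) − 1. Function names and parameter values are hypothetical.

```python
def thickness_from_resonance(f_r, v_s0, x):
    """Thickness h (m) from the resonance frequency f_r (Hz).

    Assumes v_s(z) = v_s0 * (1 + z)**x with z in metres and 0 <= x < 1.
    """
    if not 0.0 <= x < 1.0:
        raise ValueError("x must satisfy 0 <= x < 1")
    return (v_s0 * (1.0 - x) / (4.0 * f_r) + 1.0) ** (1.0 / (1.0 - x)) - 1.0


def resonance_from_thickness(h, v_s0, x):
    """Resonance frequency (Hz) from the thickness, f_r = 1 / (4 * travel time)."""
    travel_time = ((1.0 + h) ** (1.0 - x) - 1.0) / (v_s0 * (1.0 - x))
    return 1.0 / (4.0 * travel_time)


# Uniform layer (x = 0): reduces to h = v_s0 / (4 f_r)
h_uniform = thickness_from_resonance(2.0, 200.0, 0.0)

# Round trip for a hypothetical velocity gradient
f_r = resonance_from_thickness(50.0, 200.0, 0.3)
h_back = thickness_from_resonance(f_r, 200.0, 0.3)
```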

Moreover, from (19), an empirical relationship between f r and h is expected in the approximate form

that can be parameterized from empirical observations, generally applying grid search procedures.
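As an illustration of how such a relationship can be parameterized, the sketch below fits h = a·f r^b to paired observations. Instead of a grid search, it uses an ordinary least-squares fit in log-log space, a simpler substitute chosen here for brevity; the power-law form, the function name and the synthetic data are assumptions.

```python
import math


def fit_power_law(frequencies, thicknesses):
    """Least-squares fit of log(h) = log(a) + b * log(f_r); returns (a, b)."""
    xs = [math.log(f) for f in frequencies]
    ys = [math.log(h) for h in thicknesses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = s_xy / s_xx
    a = math.exp(mean_y - b * mean_x)
    return a, b


# Synthetic observations generated with a = 100 and b = -1.4
frequencies = [0.5, 1.0, 2.0, 4.0]
thicknesses = [100.0 * f ** -1.4 for f in frequencies]
a_fit, b_fit = fit_power_law(frequencies, thicknesses)
```

With field data, thicknesses known from boreholes would replace the synthetic values, and the scatter about the fitted line would give a first idea of the uncertainty of the depth estimates.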

The above approximate interpretation can be easily extended to the case of a two-layer sedimentary cover (D’Amico et al. 2008). Despite the fact that relatively large errors affect the depth estimates provided by this approach (D’Amico et al. 2004), it can be considered as a useful proxy for exploratory purposes.

A possible limitation of this approach is the presence of thick sedimentary covers. In this case, NHV peaks could occur at very low frequency, i.e., below the minimum frequency that can actually be monitored by the available experimental tools. It is worth noting, however, that the generally available seismological/geophysical equipment allows detection of possible NHV maxima occurring above 0.1–0.5 Hz (see Strollo et al. 2008 for a detailed discussion of this issue and of how to address a priori the choice of the equipment). Considering realistic Vs profiles for soft sedimentary covers, resonance frequencies at or below these values correspond to thicknesses of several hundred meters. In these cases, lithostatic loads provide a strong compaction of sediments, with an expected increase of the relevant rigidity and of the corresponding Vs value at depth up to values similar to those of the underlying bedrock. This implies that impedance contrasts at the bottom of a thick sedimentary cover tend to become vanishingly small, so that such a structural configuration might become of minor interest when looking for amplification effects (but not for variations of ground motion). However, for general microzonation purposes, since the existence of large impedance contrasts at large depths might not be known a priori, and examples of large impedance contrasts at depth are known in the literature (e.g., Parolai et al. 2001; Parolai et al. 2002), it is advisable to select the equipment considering its technical characteristics, which make it possible to identify a priori the frequency bands where the fundamental resonance frequency peak can be estimated only under certain high noise level conditions (Strollo et al. 2008). Furthermore, when geological surveys are of concern, instrument bandwidth limitations could lead to an ambiguous interpretation of a flat NHV curve (outcropping bedrock or very deep sedimentary cover?). Local geological indications could in this case help in identifying the most reliable interpretation.

5 Inversion Methods

5.1 The Forward Modelling of the Rayleigh Wave Dispersion Curve

The basic element of the inversion procedure is the availability of a fast and reliable tool for solving the forward problem. Theoretical modelling suggests that the dispersion curves of the fundamental and higher mode Rayleigh waves and the NHV spectral ratio, while mainly depending on the S-wave velocity structure, also depend on the density and P-wave velocity structure. Concerning the damping profile, numerical experiments indicate that the sensitivity of the Rayleigh and Love wave dispersion curves is relatively weak, while the NHV curve is much more sensitive to this parameter (Lunedei and Albarello 2009).

Several procedures exist to compute expected surface waves amplitudes and propagation velocities (both for the fundamental and higher modes) in the case of a flat weakly or strongly dissipative layered Earth (e.g., Buchen and Ben-Hador 1996; Lai and Rix 2002). In general, modal characteristics of surface waves are provided in implicit form (zeroes of the normal equation) and this implies that numerical aspects play a major role (e.g., Lai and Wilmanski 2005). Thus, the effectiveness of available numerical protocols (e.g., Herrmann 1987) mainly relies on their capability in reducing numerical instabilities (mode jumping, etc.).

In order to simplify the problem, the dominance of a fundamental propagation mode is commonly assumed. However, several studies (e.g., Tokimatsu et al. 1992; Foti 2000; Zhang and Chan 2003; Parolai et al. 2006) showed that for sites with S-wave velocities varying irregularly with depth (low velocity layers embedded between high velocity ones) a higher mode or even multiple modes dominate certain frequency ranges. This results in an inversely dispersive trend in these frequency ranges. Therefore, due to the contribution of higher modes of Rayleigh waves, the obtained phase velocity has to be considered an apparent one. Moreover, other studies (Karray and Lefebvre 2000) showed that, even at sites with S-wave velocity increasing with depth, the fundamental mode does not always dominate (see also Sect. 2.2). Tokimatsu et al. (1992) formulated the apparent phase velocity derived from noise-array data as the superposition of multiple-mode Rayleigh waves. Ohori et al. (2002) adopted this formulation, making use of the method of Hisada (1994) for calculating the dispersion curves. Assuming that source and receivers are located only at the surface, Tokimatsu et al. (1992) proposed that the apparent phase velocity is related to the multiple-mode Rayleigh waves through:

where c_si(f) is the apparent phase velocity, and c_m(f) and A_m(f) are the phase velocity of the mth Rayleigh wave mode and the corresponding medium response (Harkrider 1964), respectively. A_m(f) is related to the power spectrum density function of the mth mode, M is the maximum mode order at each frequency, and r is the shortest distance between sensors. Parolai et al. (2006) confirmed that, for subsoil profiles with low velocity layers leading to apparent dispersion curves, an inversion that considers only the fundamental mode yields artifacts in the derived S-wave velocity profiles.
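The superposition described above can be sketched numerically. The equation itself is not reproduced in this text, so the weighted-cosine form written in the docstring below is an assumption recalled from the cited literature and should be checked against Tokimatsu et al. (1992) before use. The function name and arguments are hypothetical. The inverse cosine is taken on its principal branch, so the sketch is only meaningful while the sensor spacing r does not exceed half the apparent wavelength.

```python
import math

def apparent_phase_velocity(f, r, c_modes, a_modes):
    """Apparent phase velocity at frequency f (Hz) for sensor spacing r (m).

    Assumed relation (not quoted from this paper):
        cos(2*pi*f*r / c_s) =
            sum_m A_m^2 * c_m * cos(2*pi*f*r / c_m) / sum_m A_m^2 * c_m
    c_modes: modal phase velocities c_m(f); a_modes: medium responses A_m(f).
    """
    weights = [a * a * c for a, c in zip(a_modes, c_modes)]
    numerator = sum(w * math.cos(2.0 * math.pi * f * r / c)
                    for w, c in zip(weights, c_modes))
    rhs = numerator / sum(weights)
    rhs = max(-1.0, min(1.0, rhs))   # guard against round-off outside [-1, 1]
    phase = math.acos(rhs)           # principal value, in [0, pi]
    if phase == 0.0:
        raise ValueError("apparent velocity is unbounded for zero phase lag")
    return 2.0 * math.pi * f * r / phase
```

With a single mode the weighted average collapses and the apparent velocity equals the modal one; with two modes of comparable response the result falls between the two modal velocities, which is how a dominant higher mode pulls the apparent curve away from the fundamental one.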

The presence of higher modes is also responsible for another problem. The number of existing modes depends on the frequency (Aki and Richards 1980): this implies that, depending on the subsoil structure, abrupt changes exist in this number as a function of frequency (modal truncation). In particular, when higher modes play a significant role, their sudden disappearance results in unrealistic jumps in the computed dispersion and NHV curves. To reduce this problem, as suggested by Picozzi and Albarello (2007), a number of fictitious very thick layers (of the order of km) have to be added below the model to prevent artefacts. Of course, the parameters of these layers cannot be resolved by the experimental curves and simply have the role of preventing modal truncation effects.

Beyond these problems, one should be aware that surface waves only represent a part of the existing wave field. Other seismic phases (near field, body waves) also exist and could play a major role. During active surveys, this problem can be resolved by selecting suitable source-receiver distances. However, in passive procedures it is not possible to select suitable sources and some problems could arise. In general, seismic array procedures (fk, ESAC, SPAC) make it possible to identify and remove the effect of such waves. However, this cannot be done in a single station setting (NHV). These effects have been explored theoretically (Albarello and Lunedei 2010; Lunedei and Albarello 2010) by modelling the average complete noise wave field generated by surface point sources. These studies revealed that the surface wave solution only holds (above the fundamental resonance frequency of a site) when a source-free area of the order of several tens to hundreds of meters (depending on the subsoil configuration) exists around the receiver.

As discussed in Sect. 1, surface wave dispersion analyses rely on the basic assumption that the medium can be approximated by a 1D geometry; that is to say, isotropic and laterally homogeneous layers. Of course, any violation of this assumption, such as the presence of lateral heterogeneities in the subsoil structure, makes the forward modelling discussed here inadequate. In general, lateral velocity variations dramatically affect both amplitudes and propagation velocities. However, it can be shown (e.g., Snieder 2002) that when the wavelength of concern is much larger than the horizontal scale length of the structural variation, local modes can be considered. These are defined at each horizontal location (x, y) as the modes that the system would have if the medium were laterally homogeneous. That is, the properties of the medium at that particular location (x, y) can be considered to extend laterally to infinity. In this approximation, 1D models can be applied, making it possible to develop approximate surface wave tomographic approaches (Picozzi et al. 2008a).

5.2 The Forward Modelling of Horizontal-to-Vertical Spectral Ratio curve

Arai and Tokimatsu (2000, 2004) proposed an improved forward modelling scheme for the calculation of NHV spectral ratios. Moreover, this scheme has been successfully applied in a joint inversion scheme of NHV and dispersion curves by Parolai et al. (2005), Arai and Tokimatsu (2005), Picozzi and Albarello (2007), and D’Amico et al. (2008).

Arai and Tokimatsu (2000) showed that NHV spectral ratios can be better reproduced if the contribution of higher modes of Rayleigh waves and of Love waves is also taken into account. They suggest calculating the NHV spectral ratio as:

where the subindex s stands for surface waves, and P_VS and P_HS are the vertical and horizontal powers of surface waves (Rayleigh and Love), respectively.

The vertical power of the surface waves is only determined by the vertical power of the Rayleigh waves (P_Vr), while the horizontal power must consider the contribution of both Rayleigh (P_Hr) and Love waves (P_HL). The following equations can therefore be used:

where A is the medium response, k is the wavenumber, u/w is the H/V ratio of the Rayleigh mode at the free surface, j is the mode index, and α is the ratio between the horizontal and vertical loading forces, L_H/L_V. Parolai et al. (2005) and Picozzi et al. (2005b) showed that varying α over a large range did not significantly change the NHV shape. Therefore, they used α = 1.
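The power sums described above can be sketched as follows. The equations themselves are not reproduced in this text, so the expressions in the comments are an assumption recalled from Arai and Tokimatsu (2004) and should be verified against that paper. The function name and the tuple layout of the inputs are hypothetical.

```python
import math

def nhv_surface_waves(rayleigh_modes, love_modes, alpha=1.0):
    """NHV ratio at one frequency from modal quantities.

    rayleigh_modes: list of (A, k, uw) per Rayleigh mode, with uw = u/w.
    love_modes:     list of (A, k) per Love mode.
    Assumed expressions (not quoted from this paper):
        P_Vr = sum_j (A/k)^2 * (1 + alpha^2/2 * uw^2)
        P_Hr = sum_j (A/k)^2 * uw^2 * (1 + alpha^2/2 * uw^2)
        P_HL = sum_j (A/k)^2 * alpha^2/2
        NHV  = sqrt((P_Hr + P_HL) / P_Vr)
    """
    half_alpha_sq = 0.5 * alpha ** 2
    p_vr = 0.0
    p_hr = 0.0
    for a, k, uw in rayleigh_modes:
        common = (a / k) ** 2 * (1.0 + half_alpha_sq * uw ** 2)
        p_vr += common            # vertical power: Rayleigh waves only
        p_hr += common * uw ** 2  # horizontal power carried by Rayleigh waves
    p_hl = sum((a / k) ** 2 * half_alpha_sq for a, k in love_modes)
    return math.sqrt((p_hr + p_hl) / p_vr)
```

Under these assumed expressions, a single Rayleigh mode without Love waves returns the modal ellipticity |u/w| regardless of α, and any Love contribution can only raise the ratio.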

A basic problem of these inversion procedures is the choice of frequency band to be considered for the inversion of the NHV curve. As an example, the NHV values around the maximum have been discarded by Parolai et al. (2006) and instead taken into account by Picozzi and Albarello (2007) and D’Amico et al. (2008). Recent theoretical studies (Lunedei and Albarello 2010; Albarello and Lunedei 2010; Tuan et al. 2011) indicated that the NHV curve around the fundamental resonance frequency f_0 (i.e., around the NHV maximum) can be significantly affected by the damping profile in the subsoil and by the distribution of sources around the receiver. In particular, they showed that sources located within a few hundred meters of the receiver can generate seismic phases that strongly affect the shape of the NHV curve around and below f_0. This implies that, unless a large source-free area exists around the receiver, the inversion of the NHV shape (around and below f_0) carried out using forward models based on surface waves only might provide biased results.

5.3 Inversion Procedures

The inversion task can be accomplished with a number of strategies. A first-order classification of inversion procedures distinguishes between Local Search Methods (LSM) and Global Search Methods (GSM). A wide variety of local and global search techniques have been proposed to solve the non-linear inverse problem. In this work we briefly outline the following: the Linearized Inversion, the Simplex Downhill Method (Nelder and Mead 1965), the Monte Carlo approach and the Genetic Algorithm (e.g., Goldberg 1989). Other global search methods proposed for surface wave dispersion inversion are Simulated Annealing (Beaty et al. 2002), the Neighbourhood Algorithm (Sambridge 1999a, b; Wathelet et al. 2004), and the Coupled Local Minimizers (Degrande et al. 2008).

5.3.1 Linearized Inversion (LIN)

As previously discussed, when linearized inversion methods are used, the final model inherently depends on an assumed initial model because of the existence of local optimal solutions. When an appropriate initial model is generated using a priori information about the subsurface structure, linearized inversions can find an optimal solution that is the global minimum of a misfit function.

The inverse problem is generally solved using Singular Value Decomposition (SVD, Press et al. 1986), and the Root Mean Square (RMS) of the differences between observed and theoretical phase velocities (or, in the case of single station measurements, between observed and theoretical NHV) is minimized. Because of the non-linearity of the problem, the inversion is repeated until the RMS ceases to change significantly. Iterative inversion techniques such as the simultaneous iterative reconstruction technique (Van der Sluis and Van der Vorst 1987) are also used, but they do not provide any advantage with respect to using SVD.
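The stopping rule described above can be sketched independently of the solver. In the minimal example below, the linearized update is a scalar Gauss-Newton step on a hypothetical one-parameter toy problem; it stands in for the SVD solution of the linearized system and is not the scheme used in the cited works. All names, the toy forward model and the tolerance are assumptions made for illustration.

```python
import math

def rms_misfit(observed, predicted):
    """Root mean square of the differences between two equally long curves."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted))
                     / len(observed))

def iterate_until_stable(model, forward, update, observed, tol=1e-3, max_iter=50):
    """Repeat the linearized update until the RMS changes by less than tol."""
    previous = rms_misfit(observed, forward(model))
    for _ in range(max_iter):
        model = update(model)
        current = rms_misfit(observed, forward(model))
        if abs(previous - current) < tol:
            return model, current
        previous = current
    return model, previous

# Hypothetical toy problem: predicted[i] = m**2 * X[i], data generated with m = 3.
X = [1.0, 2.0, 3.0]
OBSERVED = [9.0 * x for x in X]

def toy_forward(m):
    return [m * m * x for x in X]

def toy_update(m):
    """Scalar Gauss-Newton step (stand-in for the SVD-based update)."""
    jac = [2.0 * m * x for x in X]
    res = [o - p for o, p in zip(OBSERVED, toy_forward(m))]
    return m + sum(j * r for j, r in zip(jac, res)) / sum(j * j for j in jac)
```

Calling `iterate_until_stable(1.0, toy_forward, toy_update, OBSERVED)` starts from a deliberately poor initial model. In this toy case the RMS first increases before decreasing, which illustrates why the rule monitors the change in RMS rather than its value at a single iteration.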

5.3.2 Simplex Downhill Method (SDM)

Ohori et al. (2002) proposed using the SDM, originally outlined by Nelder and Mead (1965), to minimize the sum of squared differences between observed and theoretical phase velocities, normalized by the squared observed velocities. For multi-dimensional minimizations, the algorithm requires an initial estimate. Generally, two chosen starting points are provided. The solution with the minimum misfit is adopted and the inversion is then repeated, restarting from this solution. The SDM quickly and easily locates a minimum, although it may miss the global one.
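The misfit and the restart strategy described above can be sketched as follows. To keep the example free of external dependencies, the local optimizer is a simple coordinate pattern search, which is a stand-in and not the Nelder-Mead simplex itself; any derivative-free minimizer with the same call signature could be passed instead. All function names and default step sizes are hypothetical.

```python
def normalized_misfit(observed, theoretical):
    """Sum of squared differences, each normalized by the squared observed value."""
    return sum(((o - t) / o) ** 2 for o, t in zip(observed, theoretical))

def pattern_search(misfit, start, step=50.0, shrink=0.5, min_step=1e-3):
    """Stand-in derivative-free local search (NOT the Nelder-Mead simplex)."""
    model = list(start)
    best = misfit(model)
    while step > min_step:
        improved = False
        for i in range(len(model)):
            for delta in (step, -step):
                trial = list(model)
                trial[i] += delta
                value = misfit(trial)
                if value < best:
                    model, best, improved = trial, value, True
        if not improved:
            step *= shrink  # refine the search once no axis move helps
    return model

def best_start_then_restart(minimize, misfit, starts):
    """Run from each starting point, keep the best result, then restart from it."""
    candidates = [minimize(misfit, s) for s in starts]
    best = min(candidates, key=misfit)
    return minimize(misfit, best)
```

Because each run only ever accepts moves that lower the misfit, the restarted solution cannot be worse than the best of the initial runs, but nothing in this procedure guarantees that the global minimum has been reached.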

5.3.3 Monte Carlo Method (MC)

In Monte Carlo (MC) procedures (Press 1968; Tarantola 2005) the space of model parameters is randomly explored and the numerical dispersion curves associated with each of several possible shear wave velocity profiles are compared to the experimental dispersion curve. In contrast to linearized inversion schemes, MC inversion schemes require only an evaluation of the functions, not their derivatives. One of the main problems is the need to explore a sufficient number of profiles in order to obtain an adequate sampling of the model parameter space. An efficient algorithm for the inversion of surface wave data makes use of the scaling properties of the dispersion curves (Socco and Boiero 2008). These properties are linked to the scaling of the modal solution with the wavelength. If model parameters are scaled, the corresponding modal dispersion curve scales accordingly. In particular, both the phase velocities and frequencies scale if all the layer velocities are scaled, while only the frequencies scale if all the layer thicknesses are scaled (Socco and Strobbia 2004). A multimodal Monte Carlo inversion based on a modified misfit function (Maraschini et al. 2010) has recently been proposed by Maraschini and Foti (2010).
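The scaling property described above can be illustrated with a short sketch. It assumes that all layer velocities are multiplied by one common factor and all layer thicknesses by another, with densities unchanged; the function name and argument names are hypothetical. Since wavelengths scale with thickness and phase velocities with layer velocity, frequencies scale with the ratio of the two factors.

```python
def scale_dispersion_curve(freqs, phase_vels, velocity_factor=1.0, thickness_factor=1.0):
    """Dispersion curve of a model whose layer velocities and thicknesses are scaled.

    freqs, phase_vels: curve computed once for the reference model.
    velocity_factor:   common multiplier applied to all layer velocities.
    thickness_factor:  common multiplier applied to all layer thicknesses.
    """
    # Wavelength scales with thickness, phase velocity with layer velocity,
    # hence frequency = velocity / wavelength scales with their ratio.
    new_freqs = [f * velocity_factor / thickness_factor for f in freqs]
    new_vels = [c * velocity_factor for c in phase_vels]
    return new_freqs, new_vels
```

In a Monte Carlo search this means that a curve computed once for a randomly drawn profile also represents the whole family of scaled versions of that profile, so each draw can be rescaled toward the experimental curve without a new forward computation.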

5.3.4 Modified Genetic Algorithm (GA)

With this algorithm, a search area is defined for both the S-wave velocity and thickness of the layers. An initial population of a limited number of individuals (e.g., 30) is generated and genetic operations are applied in order to generate a new population of the same size. This new population is reproduced based on a fitness function for each individual (Yamanaka and Ishida 1996). For surface wave inversion, the fitness function can be defined considering the average of the differences between the observed and the theoretical phase velocities. In addition to the crossover and mutation operations, two more genetic operations can be used to improve convergence, namely elite selection and dynamic mutation. Elite selection ensures that the best model appears in the next generation, replacing the worst model in the current one. To avoid premature convergence of the solution to a local minimum, the dynamic mutation operation is used to increase the variety in the population. Therefore, GA is a non-linear optimization method that simultaneously searches locally and globally for optimal solutions by using several models (Parolai et al. 2005).
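One generation of such a search, with elite selection, can be sketched as follows. This is a minimal illustration and not the scheme of Yamanaka and Ishida (1996): the parent selection (best half of the population), the single-point crossover, the fixed mutation rate and all names are assumptions, and dynamic mutation is not implemented. Models are plain lists of parameters, and the fitness is expressed as a misfit, so lower values are better.

```python
import random

def next_generation(population, misfit, bounds, rng, mutation_rate=0.1):
    """Build a new population of the same size; lower misfit means a better model.

    population: list of models, each a list of floats (e.g. velocities, thicknesses).
    bounds:     list of (low, high) search limits, one pair per parameter.
    rng:        a random.Random instance, so runs can be repeated with new seeds.
    """
    ranked = sorted(population, key=misfit)
    elite = list(ranked[0])                        # best model of this generation
    parents = ranked[:max(2, len(ranked) // 2)]    # assumed: keep the best half
    children = []
    while len(children) < len(population):
        p1, p2 = rng.sample(parents, 2)
        cut = rng.randrange(1, len(p1)) if len(p1) > 1 else 0
        child = p1[:cut] + p2[cut:]                # single-point crossover
        for i, (low, high) in enumerate(bounds):
            if rng.random() < mutation_rate:       # mutation inside the search area
                child[i] = rng.uniform(low, high)
        children.append(child)
    # Elite selection: the best previous model replaces the worst new one.
    worst = max(range(len(children)), key=lambda i: misfit(children[i]))
    children[worst] = elite
    return children
```

Because the elite model is always carried over, the best misfit in the population can never increase from one generation to the next, while mutation keeps drawing new values from the whole search area.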

Since this inversion applies a probabilistic approach using random numbers and finds models near the global optimal solution, it is repeated several times by varying the initial random number. The optimal model is selected considering the minimum of the chosen fitness function. Recently, Picozzi and Albarello (2007) suggested combining the GA inversion with a linearized one. In practice, the linearized inversion is started using as the input model the best model of the GA inversion, which is expected to lie close to the global minimum solution.

5.3.5 Rayleigh Wave Dispersion Curve Inversion

Parolai et al. (2006) compared different algorithms for the inversion of Rayleigh wave dispersion curves using a data-set of seismic noise recordings from different sites in the Cologne area. In particular, these authors considered the linearized inversion (e.g., Tokimatsu et al. 1991), the simplex downhill method (Nelder and Mead 1965), and the non-linear optimization method that uses a genetic algorithm (e.g., Goldberg 1989).

Figure 17 shows the inversion results for the different methods. Parolai et al. (2006) showed that when constraining the total thickness of the sedimentary cover from geological and geotechnical information, linearized local search inversions can provide very similar results to those from global search methods. However, in an area with a completely unknown structure, the genetic algorithm inversion is the preferred method. In fact, although the computations are more time-consuming, this method is less dependent upon a priori information, hence making this inversion scheme the most appealing method for deriving reliable S-wave velocity profiles.

Fig. 17 Results from inverting the apparent Pulheim dispersion curve. The insets show the starting model for LIN and SDM (dashed), together with the borehole model. Light gray indicates the models tested in the GA inversion. Note that the S-wave velocity in the bedrock of the tested models varied by up to 3,300 m/s (data from Parolai et al. 2006).

5.3.6 NHV Inversion