Abstract

Over the past four or five decades many advances have been made in earthquake ground-motion prediction and a variety of procedures have been proposed. Some of these procedures are based on explicit physical models of the earthquake source, travel-path and recording site while others lack a strong physical basis and seek only to replicate observations. In addition, there are a number of hybrid methods that seek to combine benefits of different approaches. The various techniques proposed have their adherents and some of them are extensively used to estimate ground motions for engineering design purposes and in seismic hazard research. These methods all have their own advantages and limitations that are not often discussed by their proponents. The purposes of this article are to summarise existing methods and the most important references, to provide a family tree showing the connections between different methods and, most importantly, to discuss the advantages and disadvantages of each method.

1 Introduction

The accurate estimation of the characteristics of the ground shaking that occurs during damaging earthquakes is vital for efficient risk mitigation in terms of land-use planning and the engineering design of structures to adequately withstand these motions. This article has been prompted by a vast, and rapidly growing, literature on the development of various methods for ground-motion prediction. In total, this article surveys roughly two dozen methods proposed in the literature. Only about half are commonly in use today. Some techniques are still in development and others have never been widely used, owing to their limitations or to a lack of the tools, constraints on input parameters or data needed for their application.

Earthquake ground-motion estimation that transforms event parameters, e.g. magnitude and source location, to site parameters, either time-histories of ground motions or strong-motion parameters (e.g. peak ground acceleration, PGA, or response spectral displacement), is a vital component within seismic hazard assessment, be it probabilistic or deterministic (scenario-based). Ground-motion characteristics of interest depend on the structure or effects being considered (e.g. McGuire 2004). At present, there are a number of methods being used within research and engineering practice for ground-motion estimation; however, it is difficult to understand how these different procedures relate to one another and to appreciate their strengths and weaknesses. Hence, the choice of which technique to use for a given task is not easy to make. The purpose of this article is to summarise the links between the different methods currently in use and to discuss their advantages and disadvantages. The details of the methods will not be discussed here; these can be found within the articles cited. Only a brief description, a list of required input parameters and the possible outputs are given. The audience of this article includes students and researchers in engineering seismology but also seismic hazard analysts responsible for providing estimates for engineering projects and earthquake engineers seeking to understand limits on the predictions provided by hazard analyses. Numerous reviews of ground-motion simulation techniques have been published (e.g. Aki 1982; Shinozuka 1988; Anderson 1991; Erdik and Durukal 2003) but these have had different aims and scopes from those of this survey.

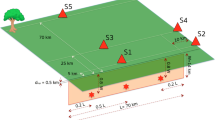

Only methods that can be used to estimate ground motions of engineering significance are examined here, i.e. those motions from earthquakes with moment magnitude Mw greater than 5 at source-to-site distances <100 km for periods between 0 and 4 s (but extending to permanent displacements for some special studies). In addition, focus is given to the estimation of ground motions at flat rock sites since it is common to separate the hazard at the bedrock from the estimation of site response (e.g. Dowrick 1977) and because site response modelling is, itself, a vast topic (e.g. Heuze et al. 2004). Laboratory models, including foam models (e.g. Archuleta and Brune 1975), are not included because it is difficult to scale up to provide engineering predictions from such experiments.

Section 2 summarises the different procedures that have been proposed within a series of one-page tables (owing to the vast literature in this domain, only brief details can be given) and through a diagram showing the links between the methods. The problem of defining an earthquake scenario is discussed in Section 3. Section 4 is concerned with the testing of methods using observations. The article concludes with a discussion of how to select the most appropriate procedure for a given task.

2 Summaries of Different Procedures

As described by Ólafsson et al. (2001) there are basically two approaches to the construction of models for the prediction of earthquake ground motions: the mathematical approach, where a model is analytically based on physical principles, and the experimental one, where a mathematical model, which is not necessarily based on physical insight, is fitted to experimental data. In addition, there are hybrid approaches combining elements of both philosophies. Earthquakes are so complex that physical insight alone is currently not sufficient to obtain a reasonable model. Ólafsson et al. (2001) term those models that only rely on measured data ‘black-box’ models.

Figure 1 summarises the links between the different methods described in Tables 1–22. Each table briefly: (1) describes the method; (2) lists the required input parameters (bold for those parameters that are invariably used, italic for parameters that are occasionally considered and normal font for those parameters that are often implicitly, but not often explicitly, considered) and the outputs that can be reliably obtained; (3) lists a maximum of a dozen key references (preference is given to: the original source of the method, journal articles that significantly developed the approach and review articles) including studies that test the approach against observations; (4) lists the tools that are easily available to apply the approach (public domain programs with good documentation help encourage uptake of a method; see Notes); (5) gives the rough level of use of the technique in practice and in research; and finally (6) summarises the advantages and disadvantages/limitations of the method. The following sections introduce each of the four main types of methods.

2.1 Empirical Methods

The three methods described in this section are closely based on strong ground motion observations. Such empirical techniques are the most straightforward way to predict ground motions in future earthquakes and they are based on the assumption that shaking in future earthquakes will be similar to that observed in previous events. The development of these methods roughly coincided with the recording of the first strong-motion records in the 1930s but they continue to be improved. Empirical methods remain the most popular procedure for ground-motion prediction, especially in engineering practice. Tables 1–3 summarise the three main types of empirical methods.

2.2 Black-box Methods

This section describes four methods (Tables 4–7) that can be classified as black-box approaches because they do not seek to accurately model the underlying physics of earthquake ground motion but simply to replicate certain characteristics of strong-motion records. They are generally characterised by simple formulations with a few input parameters that modify white noise so that it more closely matches earthquake shaking. These methods were generally developed in the 1960s and 1970s for engineering purposes to fill gaps in the small observational datasets then available. With the great increase in the quantity and quality of strong-motion data and the development of powerful techniques for physics-based ground-motion simulation, this family of prediction techniques has become less important although some of the procedures are still used in engineering practice.
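As a rough illustration of what "modifying white noise" can mean, the sketch below shapes Gaussian white noise with a smooth time envelope and scales the result to a target peak acceleration. It is not one of the specific published procedures summarised in Tables 4–7; the function name `enveloped_noise`, the envelope shape and every default value are assumptions made purely for illustration.

```python
import math
import random


def enveloped_noise(duration_s=20.0, dt=0.01, target_pga=0.3,
                    peak_time_s=4.0, seed=0):
    """Return (times, accelerations) for white noise shaped by an envelope.

    All defaults are hypothetical; target_pga is in arbitrary units.
    """
    rng = random.Random(seed)
    n = int(round(duration_s / dt))
    times = [i * dt for i in range(n)]
    # Envelope (t/tp) * exp(1 - t/tp): starts at 0, equals 1 at t = tp,
    # then decays, mimicking build-up and decay of shaking.
    envelope = [(t / peak_time_s) * math.exp(1.0 - t / peak_time_s)
                for t in times]
    noise = [rng.gauss(0.0, 1.0) for _ in times]
    shaped = [e * w for e, w in zip(envelope, noise)]
    # Scale so that the largest absolute value matches the target peak.
    peak = max(abs(a) for a in shaped)
    scale = target_pga / peak
    return times, [a * scale for a in shaped]


times, acc = enveloped_noise()
print(len(acc), max(abs(a) for a in acc))
```

A signal built this way matches only the chosen peak and the rough shape of the envelope; its frequency content is still that of white noise, which is one reason such approaches need additional filtering steps to resemble recorded motions.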

2.3 Physics-based Methods

Although this class of methods was simply called the ‘mathematical approach’ by Ólafsson et al. (2001), the recent advances in the physical comprehension of the dynamic phenomena of earthquakes and in simulation technology mean that we prefer the name ‘physics-based methods’. These techniques often consist of two stages: simulation of the generation of seismic waves (through fault rupture) and simulation of wave propagation. Due to this separation it is possible to couple the same source model with differing wave propagation approaches or different source models with the same wave propagation code (e.g. Aochi and Douglas 2006). In this survey emphasis is placed on wave propagation techniques.

Source models that have been used extensively for ground-motion prediction include theoretical works by: Haskell (1969), Brune (1970, 1971), Papageorgiou and Aki (1983), Gusev (1983), Joyner (1984), Zeng et al. (1994) and Herrero and Bernard (1994). Such insights are introduced into prescribed earthquake scenarios, called ‘kinematic’ source models. It is well known that the near-source ground motion is significantly affected by source parameters, such as the point of nucleation on the fault (hypocentre), rupture velocity, slip distribution over the fault and the shape of the slip function (e.g. Miyake et al. 2003; Mai and Beroza 2003; Tinti et al. 2005; Ruiz et al. 2007). This aspect is difficult to take into account in empirical methods. Recently it has become possible to introduce a complex source history numerically simulated by pseudo- or fully-dynamic modelling (e.g. Guatteri et al. 2003, 2004; Aochi and Douglas 2006; Ripperger et al. 2008) into the prediction procedure. Such dynamic simulations including complex source processes have been shown to successfully simulate previous large earthquakes, such as the 1992 Landers event (e.g. Olsen et al. 1997; Aochi and Fukuyama 2002). This is an interesting and on-going research topic but we do not review it in this article.

All of the physics-based deterministic methods convolve the source function with synthetic Green’s functions (the Earth’s response to a point-source double couple) to produce the motion at ground surface. Erdik and Durukal (2003) provide a detailed review of the physics behind ground-motion modelling and show examples of ground motions simulated using different methods. Tables 8–18 summarise the main types of physics-based procedures classified based on the method used to calculate the synthetic seismograms in the elastic medium for a given earthquake source. Most of these are based on theoretical concepts introduced in the 1970s and 1980s and intensively developed in the past decade when significant improvements in the understanding of earthquake sources and wave propagation (helped by the recording of near-source ground motions) were coupled with improvements in computer technology to develop powerful computational capabilities. Some of these methods are extensively used for research purposes and for engineering projects of high-importance although most of them are rarely used in general engineering practice due to their cost and complexity.
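The convolution step described above can be sketched in a few lines. The example below is a minimal discrete approximation under stated assumptions: the function name `convolve_source_with_green` is hypothetical, the source function is a toy pulse, and the Green's function is replaced by a delayed unit-area impulse, whereas in practice it would come from a wave-propagation code of the kind summarised in Tables 8–18.

```python
def convolve_source_with_green(source, green, dt):
    """Discrete approximation of u(t) = integral of s(tau) * g(t - tau) dtau.

    source and green are equally sampled sequences with time step dt.
    """
    out = [0.0] * (len(source) + len(green) - 1)
    for i, s in enumerate(source):
        for j, g in enumerate(green):
            # Each source sample excites a delayed, scaled copy of the
            # Green's function; dt approximates the integral measure.
            out[i + j] += s * g * dt
    return out


dt = 0.5                          # hypothetical time step (s)
source = [0.0, 1.0, 0.0]          # toy source function
green = [0.0, 0.0, 1.0 / dt]      # unit-area impulse arriving two samples late
motion = convolve_source_with_green(source, green, dt)
print(motion)
```

With an impulsive Green's function the output is simply the source pulse delayed by the travel time, which makes the separation into source and propagation stages easy to see: swapping either input leaves the other untouched.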

2.4 Hybrid Methods

To benefit from the advantages of two (or more) different approaches and to overcome some of their disadvantages a number of hybrid methods have been proposed. These are summarised in Tables 19–22. These techniques were developed later than the other three families of procedures, which are the bases of these methods. Since their development, mainly in the 1980s and 1990s, they have been increasingly used, especially for research purposes. Their uptake in engineering practice has been limited until now, although they seem to be gaining in popularity due to the engineering requirement for broadband time-histories, e.g. for soil–structure interaction analyses.

3 Earthquake Scenario

Before predicting the earthquake ground motions that could occur at a site it is necessary to define an earthquake scenario or scenarios, i.e. earthquake(s) that need(s) to be considered in the design (or risk assessment) process for the site. The methods proposed in the literature to define these scenarios (e.g. Dowrick 1977; Hays 1980; Reiter 1990; Anderson 1997a; Bazzurro and Cornell 1999; Bommer et al. 2000) are not discussed here. In this section the focus is on the level of detail required to define a scenario for different ground-motion prediction techniques, which have varying degrees of freedom. In general, physics-based (generally complex) methods require more parameters to be defined than empirical (generally simple) techniques. As the number of degrees of freedom increases sophisticated prediction techniques can model more specific earthquake scenarios, but it becomes difficult to constrain the input parameters. The various methods consider different aspects of the ground-motion generation process to be important and set (either explicitly or implicitly) different parameters to default values. However, even for methods where a characteristic can be varied it is often set to a standard value due to a lack of knowledge. In fact, when there is a lack of knowledge (epistemic uncertainty) the input parameters should be varied within a physically realistic range rather than fixed to default values. Care must be taken to make sure that parameters defining a scenario are internally consistent. For example, asperity size and asperity slip contrast of earthquake ruptures are generally inversely correlated (e.g. Bommer et al. 2004).
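One simple way to vary poorly constrained inputs rather than fixing them to defaults is to draw each scenario from user-supplied ranges. The sketch below assumes uniform, independent sampling; the function name `sample_scenarios`, the parameter names and the numerical ranges are hypothetical placeholders and are not values recommended by this article.

```python
import random


def sample_scenarios(ranges, n, seed=0):
    """Draw n scenarios, each parameter uniformly within its (low, high) range."""
    rng = random.Random(seed)
    return [
        {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
        for _ in range(n)
    ]


# Hypothetical ranges, for illustration only.
hypothetical_ranges = {
    "stress_drop_mpa": (1.0, 10.0),
    "rupture_velocity_fraction": (0.7, 0.9),
}

for scenario in sample_scenarios(hypothetical_ranges, n=3):
    print(scenario)
```

Independent sampling ignores correlations between parameters, so a practical workflow would still need a check that each sampled scenario is internally consistent, for instance with respect to the inverse relation between asperity size and slip contrast mentioned above.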

The basic parameters required to define a scenario for almost all methods are magnitude and source-to-site distance (note that, as stated in Section 1, hazard is generally initially computed for a rock site and hence site effects are not considered here). In addition, other gross source characteristics, such as the style-of-faulting mechanism, are increasingly being considered. An often implicit general input variable for simple techniques is ‘seismotectonic regime’, which is explicitly accounted for in more complex approaches through source and path modelling. In this article, we assume that kinematic source models (where the rupture process is a fixed input) are used for ground-motion simulations. Dynamic source modelling (where the rupture process is simulated by considering stress conditions) is a step up in complexity from kinematic models and it remains mainly a research topic that is very rarely used for generating time-histories for engineering design purposes. Dynamic rupture simulations have the advantage over kinematic source models in proposing various possible rupture scenarios of different magnitudes for a given seismotectonic situation (e.g. Anderson et al. 2003; Aochi et al. 2006). However, it is still difficult to tune the model parameters for practical engineering purposes (e.g. Aochi and Douglas 2006) (see Section 2.3 for a discussion of dynamic source models).

Many factors (often divided into source, path and site effects) have been observed to influence earthquake ground motions, e.g.: earthquake magnitude (or in some approaches epicentral macroseismic intensity), faulting mechanism, source depth, fault geometry, stress drop and direction of rupture (directivity); source-to-site distance, crustal structure, geology along wave paths, radiation pattern and directionality; and site geology, topography, soil–structure interaction and nonlinear soil behaviour. The combination of these different, often inter-related, effects leads to dispersion in ground motions. The varying detail of the scenarios (i.e. not accounting for some factors while modelling others) used for the different techniques consequently leads to dispersion in the predictions. The unmodelled effects, which can be important, are ignored and consequently predictions from some simple techniques (e.g. empirical ground-motion models) contain a bias due to the (unknown) distribution of records used to construct the model with respect to these variables (e.g. Douglas 2007). There is more explicit control in simulation-based procedures. Concerning empirical ground-motion models McGuire (2004) says that ‘only variables that are known and can be specified before an earthquake should be included in the predictive equation. Using what are actually random properties of an earthquake source (properties that might be known after an earthquake) in the ground motion estimation artificially reduces the apparent scatter, requires more complex analysis, and may introduce errors because of the added complexity.’

In empirical methods, parameters that cannot yet be estimated before the earthquake, e.g. stress drop and details of the fault rupture, are by definition within the range of possibilities because observed ground motions are used. When using simulation techniques, varying numbers of these parameters need to be chosen, which can be difficult. On the other hand, only a limited and unknown subset of these parameters is sampled by empirical methods since not all possible earthquakes have been recorded. In addition, due to the limited number of strong-motion records from a given region, possible regional dependence of these parameters cannot usually be accounted for by empirical procedures since records from a variety of areas are combined in order to obtain a sufficiently large dataset.

Various prediction methods account for possible regional dependence (e.g. Douglas 2007) in different ways. Methods based on observed ground motions implicitly hope that the strong-motion records capture the complete regional dependence and that the range of possible motions is not underestimated. However, due to limited databanks it is not often possible to only use records from small regions of interest; data from other areas usually need to be imported. Physics-based methods explicitly model regional dependence through the choice of input parameters, some of which, e.g. crustal structure, can be estimated from geological information or velocimetric (weak-motion) data, while others, e.g. stress parameters, can only be confidently estimated based on observed strong-motion data from the region. If not available for a specific region parameters must be imported from other regions or a range of possible values assumed.

Although this article discusses neither site effects nor their modelling, it is important that the choice of which technique to use for a task is made considering the potential use of the ground-motion predictions on rock as input to a site response analysis. For example, predictions from empirical methods are for rock sites whose characteristics (e.g. velocity and density profiles and near-surface attenuation) are limited by the observational database available and therefore rock cannot usually be explicitly defined by the user; however, approximate adjustments to unify predictions at different rock sites can be made (e.g. Cotton et al. 2006). In addition, the characteristics of the rock sites within observational databases are generally poorly known (e.g. Cotton et al. 2006) and therefore the rock associated with the prediction is ill-defined. In contrast, physics-based techniques generally allow the user to explicitly define the characteristics of the rock site and therefore more control is available. The numerical resolution of each method puts limits on the velocities and thicknesses of the layers that can be adequately treated. Black-box approaches generally neglect site effects; when they do not, the parameters controlling the type of site are, as in empirical techniques, constrained based on (limited) observational databases.

4 Testing of Methods

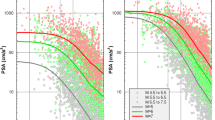

Predicted ground motions should be compared to observations for the considered site, in terms of amplitude, frequency content, duration, energy content and more difficult to characterise aspects, such as the ‘look’ of the time-histories. This verification of the predictions is required so that the ground-motion estimates can be used with confidence in engineering and risk analyses. Such comparisons take the form of either point comparisons for past earthquakes (e.g. Aochi and Madariaga 2003), visually checking a handful of predictions and observations in a non-systematic way, or more general routine validation exercises, where hundreds of predictions and observations are statistically compared to confirm that the predictions are not significantly biased and do not display too great a scatter (a perfect fit between predictions and observations is not expected, or generally possible, when making such general comparisons) (e.g. Atkinson and Somerville 1994; Silva et al. 1999; Douglas et al. 2004). In a general comparison it is also useful to check the correlation coefficients between various strong-motion parameters (e.g. PGA and relative significant duration, RSD) to verify that they match the correlations commonly observed (Aochi and Douglas 2006).
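The correlation check mentioned above can be sketched as follows. This is a minimal example assuming that PGA and RSD have already been extracted for each record; the function names `pearson_r` and `correlation_gap` are hypothetical, and a plain Pearson coefficient is used here as one possible choice rather than the measure used in the cited study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_gap(sim_pga, sim_rsd, obs_pga, obs_rsd):
    """Difference between simulated and observed PGA-RSD correlations."""
    return pearson_r(sim_pga, sim_rsd) - pearson_r(obs_pga, obs_rsd)
```

A gap close to zero suggests that the simulated set reproduces the observed relationship between the two parameters; how large a gap is acceptable is a judgement that depends on the size of both sets.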

For those techniques that are based on matching a set of strong-motion intensity parameters, such as the elastic response spectral ordinates, it is important that the fit to non-matched parameters is used to verify that they are physically realistic, i.e. to check the internal consistency of the approach. For example, black-box techniques that generate time-histories to match a target elastic response spectrum can lead to time-histories with unrealistic displacement demand and energy content (Naeim and Lew 1995).

A potentially useful approach, although one that is rarely employed, is to use a construction set of data to calibrate a method and then an independent validation set of data to test the predictions. Using such a two-stage procedure will demonstrate that any free parameters tuned during the first step do not need further modifications for other situations. Such a demonstration is important when there is a trade-off between parameters whereby various choices can lead to similar predicted ground motions for a given scenario.
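The two-stage procedure can be illustrated with a deliberately simple case in which the only free parameter is a constant shift applied to the logarithm of the predictions. The names `mean_log_residual` and `calibrate_then_validate` and the single-parameter model are assumptions for illustration; real methods typically have several free parameters.

```python
import math


def mean_log_residual(observed, predicted):
    """Mean of ln(observed / predicted); zero indicates no average bias."""
    return sum(math.log(o / p) for o, p in zip(observed, predicted)) / len(observed)


def calibrate_then_validate(construction, validation):
    """Tune a log-shift on one data set, then test it on an independent set.

    Each argument is a list of (observed, uncalibrated_prediction) pairs.
    Returns (shift, remaining bias on the validation set).
    """
    obs_c, pred_c = zip(*construction)
    shift = mean_log_residual(obs_c, pred_c)      # step 1: calibration
    obs_v, pred_v = zip(*validation)
    adjusted = [p * math.exp(shift) for p in pred_v]
    return shift, mean_log_residual(obs_v, adjusted)  # step 2: validation
```

If the remaining bias on the validation set is small, the tuned parameter did not need further modification for the new data; a large remaining bias signals that the calibration does not transfer.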

One problem faced by all validation analyses is access to all the required independent parameters, such as local site conditions, in order that the comparisons are fair. If a full set of independent variables is not available then assumptions need to be made, which can lead to uncertainty in the comparisons. For example, Boore (2001), when comparing observations from the Chi-Chi earthquake to shaking predicted by various empirical ground-motion models, had to make assumptions on site classes due to poor site information for Taiwanese stations. These assumptions led to a lack of precision in the level of over-prediction of the ground motions.

Until recently most comparisons between observations and predictions were visual or based on simple measures of goodness-of-fit, such as: the mean bias and the overall standard deviation sometimes computed using a maximum-likelihood approach (Spudich et al. 1999). Scherbaum et al. (2004) develop a statistical technique for ranking various empirical ground-motion models by their ability to predict a set of observed ground motions. Such a method could be modified for use with other types of predictions. However, the technique of Scherbaum et al. (2004) relies on estimates of the scatter in observed motions, which are difficult to assess for techniques based on ground-motion simulation, and the criteria used to rank the models would probably require modification if applied to other prediction techniques. Assessment of the uncertainty in simulations requires considering all sources of dispersion—modelling (differences between the actual physical process and the simulation), random (detailed aspects of the source and wave propagation that cannot be modelled deterministically at present) and parametric (uncertainty in source parameters for future earthquakes) (Abrahamson et al. 1990). The approach developed by Abrahamson et al. (1990) to split total uncertainty into these different components means that the relative importance of different source parameters can be assessed and hence aids in the physical interpretation of ground-motion uncertainty.
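The simple goodness-of-fit measures mentioned at the start of this paragraph can be computed as below. This sketch uses ordinary sample statistics of natural-log residuals, not the maximum-likelihood approach cited above, and the function name `bias_and_sigma` is hypothetical.

```python
import math


def bias_and_sigma(observed, predicted):
    """Mean bias and sample standard deviation of ln(observed / predicted)."""
    residuals = [math.log(o / p) for o, p in zip(observed, predicted)]
    n = len(residuals)
    bias = sum(residuals) / n
    # Sample variance with n - 1 in the denominator; needs at least 2 pairs.
    variance = sum((r - bias) ** 2 for r in residuals) / (n - 1)
    return bias, math.sqrt(variance)
```

A positive bias means the method under-predicts on average, a negative bias that it over-predicts, and the standard deviation summarises the scatter of observations about the predictions.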

In addition to this consideration of different types of uncertainty, work has been undertaken to consider the ability of a simulation technique to provide adequate predictions not just for a single strong-motion intensity parameter but many. Anderson (2004) proposes a quantitative measure of the goodness-of-fit between synthetic and observed accelerograms using ten different criteria that measure various aspects of the motions, for numerous frequency bands. This approach could be optimised to require less computation by adopting a series of strong-motion parameters that are poorly correlated (orthogonal), and hence measure different aspects of ground motions, e.g. amplitude characterised by PGA and duration characterised by RSD. A goodness-of-fit approach based on the time-frequency representation of seismograms, as opposed to strong-motion intensity parameters as in the method of Anderson (2004), is proposed by Kristeková et al. (2006) to compare ground motions simulated using different computer codes and techniques. Since it has only recently been introduced this procedure has yet to become common but it has the promise to be a useful objective strategy for the validation of simulation techniques by comparing predicted and observed motions and also by internal comparisons between methods. Some comprehensive comparisons of the results from numerical simulations have been made in the framework of recent research projects and workshops (e.g. Day et al. 2005; Chaljub et al. 2007b).

If what is required from a method is a set of ground motions that include the possible variability in shaking at a site from a given event then it is important to use a method that introduces some randomness into the process (e.g. Pousse et al. 2006) to account for random and parametric uncertainties. For example, results from physically based simulation techniques will not reproduce the full range of possible motions unless a stochastic element is introduced into the prediction, through the source or path. However, if what is required from a technique is the ability to give the closest prediction to an observation then this stochastic element is not necessarily required.

5 Synthesis and Conclusions

Dowrick (1977) notes that ‘[a]s with other aspects of design the degree of detail entered into selecting dynamic input [i.e. ground-motion estimates] will depend on the size and vulnerability of the project’. This is commonly applied in practice where simple methods (GMPEs, representative accelerograms or black-box methods) are applied for lower importance and less complex projects whereas physics-based techniques are used for high importance and complex situations (although invariably in combination with simpler methods). Methods providing time-histories are necessary for studies requiring non-linear engineering analyses, which are becoming increasingly common. Dowrick (1977) believes that ‘because there are still so many imponderables in this topic only the simpler methods will be warranted in most cases’. However, due to the significant improvements in techniques, knowledge, experience and computing power this view from the 1970s is now less valid. Simple empirical ground-motion estimates have the advantage of being more defensible and are more easily accepted by decision makers due to their close connection to observations. Simulations are particularly important in regions with limited (or non-existent) observational databanks and also for site-specific studies, where the importance of different assumptions on the input parameters can be studied. However, reliable simulations require good knowledge of the propagation media and they are often computationally expensive.

One area where physics-based forward modelling breaks down is in the simulation of high-frequency ground motions where the lack of detail in source (e.g. heterogeneities of the rupture process) and path (e.g. scattering) models means high frequencies are poorly predicted. Hanks and McGuire (1981) state that ‘[e]vidently, a realistic characterization of high-frequency strong ground motion will require one or more stochastic parameters that can account for phase incoherence.’ In contrast, Aki (2003) believes that ‘[a]ll these new results suggest that we may not need to consider frequencies higher than about 10 Hz in Strong Motion Seismology. Thus, it may be a viable goal for strong motion seismologists to use entirely deterministic modeling, at least for path and site effects, before the end of the twenty-first century.’

The associated uncertainties within ground-motion prediction remain high despite many decades of research and increasingly sophisticated techniques. The unchanging level of aleatory uncertainties within empirical ground-motion estimation equations over the past thirty years is an obvious example of this (e.g. Douglas 2003). However, estimates from simulation methods are similarly affected by large (and often unknown) uncertainties. These large uncertainties oblige earthquake engineers to design structures with large factors of safety that may not be required.

The selection of the optimum method for ground-motion estimation depends on what data are available for assessing the earthquake scenario, resources available and experience of the group. Currently the choice of method used for a particular study is generally controlled by the experience and preferences of the worker and the tools and software available to them rather than it being necessarily selected based on what is most appropriate for the project.

There are still a number of questions concerning ground-motion prediction that need to be answered. These include the following: possible regional dependence of ground motions (e.g. Douglas 2007), the effect of rupture complexity on near-source ground motion (e.g. Aochi and Madariaga 2003), the spatial variability of shaking (e.g. Goda and Hong 2008) and the determination of upper bounds on ground motions (e.g. Strasser et al. 2008). All these questions are difficult to answer at present due to the lack of near-source strong-motion data from large earthquakes in many regions (few near-source data exist outside the western USA, Japan and Taiwan). Therefore, there is a requirement to install, keep operational and improve, e.g. in terms of spatial density (Trifunac 2007), strong-motion networks in various parts of the world. In addition, the co-location of accelerometers and high-sample-rate instruments using global navigation satellite systems (e.g. the Global Positioning System, GPS) could help improve the prediction of long-period ground motions (e.g. Wang et al. 2007).

In addition to the general questions mentioned above, more specific questions related to ground-motion prediction can be posed, such as: what is the most appropriate method to use for varying quality and quantity of input data and for different seismotectonic environments? how can the best use be made of the available data? how can the uncertainties associated with a given method be properly accounted for? how can the duration of shaking be correctly modelled? These types of questions are rarely explicitly investigated in articles addressing ground-motion prediction. In addition, more detailed quantitative comparisons of simulations from different methods for the same scenario should be conducted through benchmarks.

Over time the preferred techniques will tend to move to the top of Fig. 1 (more physically based approaches requiring greater numbers of input parameters) (e.g. Field et al. 2003) since knowledge of faults, travel paths and sites will become sufficient to constrain input parameters. Such predictions will be site-specific as opposed to the generic estimations commonly used at present. Due to the relatively high cost and difficulty of ground investigations, detailed knowledge of the ground subsurface is likely to continue to be insufficient for fully numerical simulations for high-frequency ground motions, which require data on 3D velocity variations at a scale of tens of metres. In the distant future when vast observational strong-motion databanks exist including records from many well-studied sites and earthquakes, more sophisticated versions of the simplest empirical technique, that of representative accelerograms, could be used where selections are made not just using a handful of scenario parameters but many, in order to select ground motions from scenarios close to that expected for a study area.

Notes

Some of the programs for ground-motion prediction are available for download from the ORFEUS Seismological Software Library: http://www.orfeus-eu.org/Software/softwarelib.html

References

Abrahamson NA, Shedlock KM (1997) Overview. Seismological Research Letters 68(1):9–23

Abrahamson NA, Somerville PG, Cornell CA (1990) Uncertainty in numerical strong motion predictions. In: Proceedings of the fourth U.S. national conference on earthquake engineering, vol 1, pp 407–416

Abrahamson N, Atkinson G, Boore D, Bozorgnia Y, Campbell K, Chiou B, Idriss IM, Silva W, Youngs R (2008) Comparisons of the NGA ground-motion relations. Earthquake Spectra 24(1):45–66. doi:10.1193/1.2924363

Aki K (1982) Strong motion prediction using mathematical modeling techniques. Bulletin of the Seismological Society of America 72(6):S29–S41

Aki K (2003) A perspective on the history of strong motion seismology. Physics of the Earth and Planetary Interiors 137:5–11

Aki K, Larner KL (1970) Surface motion of a layered medium having an irregular interface due to incident plane SH waves. Journal of Geophysical Research 75(5):933–954

Aki K, Richards PG (2002) Quantitative Seismology. University Science Books, Sausalito, California, USA

Akkar S, Bommer JJ (2006) Influence of long-period filter cut-off on elastic spectral displacements. Earthquake Engineering and Structural Dynamics 35(9):1145–1165

Ambraseys NN (1974) The correlation of intensity with ground motion. In: Advancements in Engineering Seismology in Europe, Trieste

Ambraseys NN, Douglas J, Sigbjörnsson R, Berge-Thierry C, Suhadolc P, Costa G, Smit PM (2004a) Dissemination of European strong-motion data, vol 2. In: Proceedings of thirteenth world conference on earthquake engineering, paper no. 32

Ambraseys NN, Smit P, Douglas J, Margaris B, Sigbjörnsson R, Ólafsson S, Suhadolc P, Costa G (2004b) Internet site for European strong-motion data. Bollettino di Geofisica Teorica ed Applicata 45(3):113–129

Anderson JG (1991) Strong motion seismology. Reviews of Geophysics 29(Part 2):700–720

Anderson JG (1997a) Benefits of scenario ground motion maps. Engineering Geology 48(1–2):43–57

Anderson JG (1997b) Nonparametric description of peak acceleration above a subduction thrust. Seismological Research Letters 68(1):86–93

Anderson JG (2004) Quantitative measure of the goodness-of-fit of synthetic seismograms. In: Proceedings of thirteenth world conference on earthquake engineering, paper no. 243

Anderson G, Aagaard BT, Hudnut K (2003) Fault interactions and large complex earthquakes in the Los Angeles area. Science 302(5652):1946–1949, doi:10.1126/science.1090747

Aochi H, Douglas J (2006) Testing the validity of simulated strong ground motion from the dynamic rupture of a finite fault, by using empirical equations. Bulletin of Earthquake Engineering 4(3):211–229, doi:10.1007/s10518-006-0001-3

Aochi H, Fukuyama E (2002) Three-dimensional nonplanar simulation of the 1992 Landers earthquake. Journal of Geophysical Research 107(B2). doi:10.1029/2000JB000061

Aochi H, Madariaga R (2003) The 1999 Izmit, Turkey, earthquake: Nonplanar fault structure, dynamic rupture process, and strong ground motion. Bulletin of the Seismological Society of America 93(3):1249–1266

Aochi H, Cushing M, Scotti O, Berge-Thierry C (2006) Estimating rupture scenario likelihood based on dynamic rupture simulations: The example of the segmented Middle Durance fault, southeastern France. Geophysical Journal International 165(2):436–446, doi:10.1111/j.1365-246X.2006.02842.x

Aoi S, Fujiwara H (1999) 3D finite-difference method using discontinuous grids. Bulletin of the Seismological Society of America 89(4):918–930

Apsel RJ, Luco JE (1983) On the Green’s functions for a layered half-space. Part II. Bulletin of the Seismological Society of America 73(4):931–951

Archuleta RJ, Brune JN (1975) Surface strong motion associated with a stick-slip event in a foam rubber model of earthquakes. Bulletin of the Seismological Society of America 65(5):1059–1071

Atkinson GM (2001) An alternative to stochastic ground-motion relations for use in seismic hazard analysis in eastern North America. Seismological Research Letters 72:299–306

Atkinson GM, Boore DM (2006) Earthquake ground-motion prediction equations for eastern North America. Bulletin of the Seismological Society of America 96(6):2181–2205. doi:10.1785/0120050245

Atkinson GM, Silva W (2000) Stochastic modeling of California ground motion. Bulletin of the Seismological Society of America 90(2):255–274

Atkinson GM, Somerville PG (1994) Calibration of time history simulation methods. Bulletin of the Seismological Society of America 84(2):400–414

Atkinson GM, Sonley E (2000) Empirical relationships between modified Mercalli intensity and response spectra. Bulletin of the Seismological Society of America 90(2):537–544

Baker JW, Cornell CA (2006) Spectral shape, epsilon and record selection. Earthquake Engineering and Structural Dynamics 35(9):1077–1095, doi:10.1002/eqe.571

Bao HS, Bielak J, Ghattas O, Kallivokas LF, O’Hallaron DR, Shewchuk JR, Xu JF (1998) Large-scale simulation of elastic wave propagation in heterogeneous media on parallel computers. Computer Methods in Applied Mechanics and Engineering 152(1–2):85–102

Bazzurro P, Cornell CA (1999) Disaggregation of seismic hazard. Bulletin of the Seismological Society of America 89(2):501–520

Beresnev IA, Atkinson GM (1998) FINSIM: A FORTRAN program for simulating stochastic acceleration time histories from finite faults. Seismological Research Letters 69:27–32

Berge C, Gariel JC, Bernard P (1998) A very broad-band stochastic source model used for near source strong motion prediction. Geophysical Research Letters 25(7):1063–1066

Beyer K, Bommer JJ (2007) Selection and scaling of real accelerograms for bi-directional loading: A review of current practice and code provisions. Journal of Earthquake Engineering 11(S1):13–45, doi:10.1080/13632460701280013

Bommer JJ, Acevedo AB (2004) The use of real earthquake accelerograms as input to dynamic analysis. Journal of Earthquake Engineering 8(Special issue 1):43–91

Bommer JJ, Alarcón JE (2006) The prediction and use of peak ground velocity. Journal of Earthquake Engineering 10(1):1–31

Bommer JJ, Ruggeri C (2002) The specification of acceleration time-histories in seismic design codes. European Earthquake Engineering 16(1):3–17

Bommer JJ, Scott SG, Sarma SK (2000) Hazard-consistent earthquake scenarios. Soil Dynamics and Earthquake Engineering 19(4):219–231

Bommer JJ, Abrahamson NA, Strasser FO, Pecker A, Bard PY, Bungum H, Cotton F, Fäh D, Sabetta F, Scherbaum F, Studer J (2004) The challenge of defining upper bounds on earthquake ground motions. Seismological Research Letters 75(1):82–95

Boore DM (1973) The effect of simple topography on seismic waves: Implications for the accelerations recorded at Pacoima Dam, San Fernando valley, California. Bulletin of the Seismological Society of America 63(5):1603–1609

Boore DM (1983) Stochastic simulation of high-frequency ground motions based on seismological models of the radiated spectra. Bulletin of the Seismological Society of America 73(6):1865–1894

Boore DM (2001) Comparisons of ground motions from the 1999 Chi-Chi earthquake with empirical predictions largely based on data from California. Bulletin of the Seismological Society of America 91(5):1212–1217

Boore DM (2003) Simulation of ground motion using the stochastic method. Pure and Applied Geophysics 160(3–4):635–676, doi:10.1007/PL00012553

Boore DM (2005) SMSIM—Fortran programs for simulating ground motions from earthquakes: Version 2.3—A revision of OFR 96-80-A. Open-File Report 00-509, United States Geological Survey, modified version, describing the program as of 15 August 2005 (Version 2.30)

Bouchon M (1981) A simple method to calculate Green’s functions for elastic layered media. Bulletin of the Seismological Society of America 71(4):959–971

Bouchon M, Sánchez-Sesma FJ (2007) Boundary integral equations and boundary elements methods in elastodynamics. In: Advances in Geophysics: advances in wave propagation in heterogeneous Earth, vol 48, Chap 3. Academic Press, London, UK, pp 157–189

Brune JN (1970) Tectonic stress and the spectra of seismic shear waves from earthquakes. Journal of Geophysical Research 75(26):4997–5009

Brune JN (1971) Correction. Journal of Geophysical Research 76(20):5002

Bycroft GN (1960) White noise representation of earthquake. Journal of The Engineering Mechanics Division, ASCE 86(EM2):1–16

Campbell KW (1986) An empirical estimate of near-source ground motion for a major, mb = 6.8, earthquake in the eastern United States. Bulletin of the Seismological Society of America 76(1):1–17

Campbell KW (2002) A contemporary guide to strong-motion attenuation relations. In: Lee WHK, Kanamori H, Jennings PC, Kisslinger C (eds) International handbook of earthquake and engineering seismology, Chap 60. Academic Press, London

Campbell KW (2003) Prediction of strong ground motion using the hybrid empirical method and its use in the development of ground-motion (attenuation) relations in eastern North America. Bulletin of the Seismological Society of America 93(3):1012–1033

Campbell KW (2007) Validation and update of hybrid empirical ground motion (attenuation) relations for the CEUS. Tech. rep., ABS Consulting, Inc. (EQECAT), Beaverton, USA, Award number: 05HQGR0032

Cancani A (1904) Sur l’emploi d’une double échelle sismique des intensités, empirique et absolue. Gerlands Beitr z Geophys 2:281–283, not seen. Cited in Gutenberg and Richter (1942)

Chaljub E, Komatitsch D, Vilotte JP, Capdeville Y, Valette B, Festa G (2007a) Spectral-element analysis in seismology. In: Advances in geophysics: advances in wave propagation in heterogeneous earth, vol 48, chap 7. Academic Press, London, UK, pp 365–419

Chaljub E, Tsuno S, Bard PY, Cornou C (2007b) Analyse des résultats d’un benchmark numérique de prédiction du mouvement sismique dans la vallée de Grenoble. In: 7ème Colloque National AFPS 2007, in French

Chen XF (2007) Generation and propagation of seismic SH waves in multi-layered media with irregular interfaces. In: Advances in geophysics: advances in wave propagation in heterogeneous earth, vol 48, chap 4. Academic Press, London, UK, pp 191–264

Chen M, Hjörleifsdóttir V, Kientz S, Komatitsch D, Liu Q, Maggi A, Savage B, Strand L, Tape C, Tromp J (2008) SPECFEM 3D: User manual version 1.4.3. Tech. rep., Computational Infrastructure for Geodynamics (CIG), California Institute of Technology (USA); University of Pau (France). URL: http://www.gps.caltech.edu/jtromp/research/downloads.html

Cotton F, Scherbaum F, Bommer JJ, Bungum H (2006) Criteria for selecting and adjusting ground-motion models for specific target regions: Application to central Europe and rock sites. Journal of Seismology 10(2):137–156, doi:10.1007/s10950-005-9006-7

Dalguer LA, Irikura K, Riera JD (2003) Simulation of tensile crack generation by three-dimensional dynamic shear rupture propagation during an earthquake. Journal of Geophysical Research 108(B3), article 2144

Dan K, Watanabe T, Tanaka T, Sato R (1990) Stability of earthquake ground motion synthesized by using different small-event records as empirical Green’s functions. Bulletin of the Seismological Society of America 80(6):1433–1455

Day SM, Bradley CR (2001) Memory-efficient simulation of anelastic wave propagation. Bulletin of the Seismological Society of America 91(3):520–531

Day SM, Bielak J, Dreger D, Graves R, Larsen S, Olsen KB, Pitarka A (2005) Tests of 3D elastodynamic codes. Final report for Lifelines Project 1A03. Pacific Earthquake Engineering Research Center, University of California, Berkeley, USA

Dormy E, Tarantola A (1995) Numerical simulation of elastic wave propagation using a finite volume method. Journal of Geophysical Research 100(B2):2123–2133

Douglas J (2003) Earthquake ground motion estimation using strong-motion records: A review of equations for the estimation of peak ground acceleration and response spectral ordinates. Earth-Science Reviews 61(1–2):43–104

Douglas J (2007) On the regional dependence of earthquake response spectra. ISET Journal of Earthquake Technology 44(1):71–99

Douglas J, Suhadolc P, Costa G (2004) On the incorporation of the effect of crustal structure into empirical strong ground motion estimation. Bulletin of Earthquake Engineering 2(1):75–99

Douglas J, Bungum H, Scherbaum F (2006) Ground-motion prediction equations for southern Spain and southern Norway obtained using the composite model perspective. Journal of Earthquake Engineering 10(1):33–72

Dowrick DJ (1977) Earthquake resistant design—a manual for engineers and architects. Wiley, London

Erdik M, Durukal E (2003) Simulation modeling of strong ground motion. In: Earthquake engineering handbook, chap 6, CRC Press LLC, Boca Raton, FL, USA

Esteva L, Rosenblueth E (1964) Espectros de temblores a distancias moderadas y grandes. Boletin Sociedad Mexicana de Ingenieria Sismica 2:1–18, in Spanish

Faccioli E, Maggio F, Paolucci R, Quarteroni A (1997) 2D and 3D elastic wave propagation by a pseudo-spectral domain decomposition method. Journal of Seismology 1(3):237–251

Field EH, Jordan TH, Cornell CA (2003) OpenSHA: A developing community-modeling environment for seismic hazard analysis. Seismological Research Letters 74(4):406–419

Florsch N, Fäh D, Suhadolc P, Panza GF (1991) Complete synthetic seismograms for high-frequency multimode SH-waves. Pure and Applied Geophysics 136:529–560

Frankel A (1995) Simulating strong motions of large earthquakes using recordings of small earthquakes: The Loma Prieta mainshock as a test case. Bulletin of the Seismological Society of America 85(4):1144–1160

Frankel A, Clayton RW (1986) Finite-difference simulations of seismic scattering—Implications for the propagation of short-period seismic-waves in the crust and models of crustal heterogeneity. Journal of Geophysical Research 91(B6):6465–6489

Gallovič F, Brokešová J (2007) Hybrid k-squared source model for strong ground motion simulations: Introduction. Physics of the Earth and Planetary Interiors 160(1):34–50, doi:10.1016/j.pepi.2006.09.002

Goda K, Hong HP (2008) Spatial correlation of peak ground motions and response spectra. Bulletin of the Seismological Society of America 98(1):354–365, doi:10.1785/0120070078

Graves RW (1996) Simulating seismic wave propagation in 3D elastic media using staggered-grid finite differences. Bulletin of the Seismological Society of America 86(4):1091–1106

Guatteri M, Mai PM, Beroza GC, Boatwright J (2003) Strong ground motion prediction from stochastic-dynamic source models. Bulletin of the Seismological Society of America 93(1):301–313, doi:10.1785/0120020006

Guatteri M, Mai PM, Beroza GC (2004) A pseudo-dynamic approximation to dynamic rupture models for strong ground motion prediction. Bulletin of the Seismological Society of America 94(6):2051–2063, doi:10.1785/0120040037

Gusev AA (1983) Descriptive statistical model of earthquake source radiation and its application to an estimation of short-period strong motion. Geophysical Journal of the Royal Astronomical Society 74:787–808

Gutenberg B, Richter CF (1942) Earthquake magnitude, intensity, energy, and acceleration. Bulletin of the Seismological Society of America 32(3):163–191

Guzman RA, Jennings PC (1976) Design spectra for nuclear power plants. Journal of The Power Division, ASCE 102(2):165–178

Hadley DM, Helmberger DV (1980) Simulation of strong ground motions. Bulletin of the Seismological Society of America 70(2):617–630

Hancock J, Watson-Lamprey J, Abrahamson NA, Bommer JJ, Markatis A, McCoy E, Mendis R (2006) An improved method of matching response spectra of recorded earthquake ground motion using wavelets. Journal of Earthquake Engineering 10(Special issue 1):67–89

Hancock J, Bommer JJ, Stafford PJ (2008) Numbers of scaled and matched accelerograms required for inelastic dynamic analyses. Earthquake Engineering and Structural Dynamics, doi:10.1002/eqe.827, in press

Hanks TC (1979) b values and ω^−γ seismic source models: Implications for tectonic stress variations along active crustal fault zones and the estimation of high-frequency strong ground motion. Journal of Geophysical Research 84(B5):2235–2242

Hanks TC, McGuire RK (1981) The character of high-frequency strong ground motion. Bulletin of the Seismological Society of America 71(6):2071–2095

Hartzell SH (1978) Earthquake aftershocks as Green’s functions. Geophysical Research Letters 5(1):1–4

Hartzell S, Leeds A, Frankel A, Williams RA, Odum J, Stephenson W, Silva W (2002) Simulation of broadband ground motion including nonlinear soil effects for a magnitude 6.5 earthquake on the Seattle fault, Seattle, Washington. Bulletin of the Seismological Society of America 92(2):831–853

Haskell NA (1969) Elastic displacements in the near-field of a propagating fault. Bulletin of the Seismological Society of America 59(2):865–908

Hays WW (1980) Procedures for estimating earthquake ground motions. Geological Survey Professional Paper 1114, US Geological Survey

Heaton TH, Helmberger DV (1977) A study of the strong ground motion of the Borrego Mountain, California, earthquake. Bulletin of the Seismological Society of America 67(2):315–330

Herrero A, Bernard P (1994) A kinematic self-similar rupture process for earthquakes. Bulletin of the Seismological Society of America 84(4):1216–1228

Hershberger J (1956) A comparison of earthquake accelerations with intensity ratings. Bulletin of the Seismological Society of America 46(4):317–320

Heuze F, Archuleta R, Bonilla F, Day S, Doroudian M, Elgamal A, Gonzales S, Hoehler M, Lai T, Lavallee D, Lawrence B, Liu PC, Martin A, Matesic L, Minster B, Mellors R, Oglesby D, Park S, Riemer M, Steidl J, Vernon F, Vucetic M, Wagoner J, Yang Z (2004) Estimating site-specific strong earthquake motions. Soil Dynamics and Earthquake Engineering 24(3):199–223, doi:10.1016/j.soildyn.2003.11.002

Hisada Y (2008) Broadband strong motion simulation in layered half-space using stochastic Green’s function technique. Journal of Seismology 12(2):265–279, doi:10.1007/s10950-008-9090-6

Housner GW (1947) Characteristics of strong-motion earthquakes. Bulletin of the Seismological Society of America 37(1):19–31

Housner GW (1955) Properties of strong-ground motion earthquakes. Bulletin of the Seismological Society of America 45(3):197–218

Housner GW, Jennings PC (1964) Generation of artificial earthquakes. Journal of The Engineering Mechanics Division, ASCE 90:113–150

Irikura K, Kamae K (1994) Estimation of strong ground motion in broad-frequency band based on a seismic source scaling model and an empirical Green’s function technique. Annali di Geofisica XXXVII(6):1721–1743

Jennings PC, Housner GW, Tsai NC (1968) Simulated earthquake motions. Tech. rep., Earthquake Engineering Research Laboratory, California Institute of Technology, Pasadena, California, USA

Joyner WB (1984) A scaling law for the spectra of large earthquakes. Bulletin of the Seismological Society of America 74(4):1167–1188

Joyner WB, Boore DM (1986) On simulating large earthquakes by Green’s function addition of smaller earthquakes. In: Das S, Boatwright J, Scholz CH (eds) Earthquake source mechanics, Maurice Ewing Series 6, vol 37. American Geophysical Union, Washington, D.C., USA

Joyner WB, Boore DM (1988) Measurement, characterization, and prediction of strong ground motion. In: Proceedings of earthquake engineering & soil dynamics II, Geotechnical division, ASCE, pp 43–102

Jurkevics A, Ulrych TJ (1978) Representing and simulating strong ground motion. Bulletin of the Seismological Society of America 68(3):781–801

Kaka SI, Atkinson GM (2004) Relationships between instrumental ground-motion parameters and modified Mercalli intensity in eastern North America. Bulletin of the Seismological Society of America 94(5):1728–1736

Kamae K, Irikura K, Pitarka A (1998) A technique for simulating strong ground motion using hybrid Green’s functions. Bulletin of the Seismological Society of America 88(2):357–367

Kanamori H (1979) A semi-empirical approach to prediction of long-period ground motions from great earthquakes. Bulletin of the Seismological Society of America 69(6):1645–1670

Käser M, Iske A (2005) ADER schemes on adaptive triangular meshes for scalar conservation laws. Journal of Computational Physics 205(2):486–508

Kaul MK (1978) Spectrum-consistent time-history generation. Journal of The Engineering Mechanics Division, ASCE 104(EM4):781–788

Kennett BLN, Kerry NJ (1979) Seismic waves in a stratified half-space. Geophysical Journal of the Royal Astronomical Society 57:557–583

Kohrs-Sansorny C, Courboulex F, Bour M, Deschamps A (2005) A two-stage method for ground-motion simulation using stochastic summation of small earthquakes. Bulletin of the Seismological Society of America 95(4):1387–1400, doi:10.1785/0120040211

Koketsu K (1985) The extended reflectivity method for synthetic near-field seismograms. Journal of the Physics of the Earth 33:121–131

Komatitsch D, Martin R (2007) An unsplit convolutional perfectly matched layer improved at grazing incidence for the seismic wave equation. Geophysics 72(5):SM155–SM167

Komatitsch D, Tromp J (1999) Introduction to the spectral element method for three-dimensional seismic wave propagation. Geophysical Journal International 139(3):806–822

Komatitsch D, Vilotte JP (1998) The spectral element method: An efficient tool to simulate the seismic response of 2D and 3D geological structures. Bulletin of the Seismological Society of America 88(2):368–392

Komatitsch D, Liu Q, Tromp J, Süss P, Stidham C, Shaw JH (2004) Simulations of ground motion in the Los Angeles basin based upon the spectral-element method. Bulletin of the Seismological Society of America 94(1):187–206

Kristeková M, Kristek J, Moczo P, Day SM (2006) Misfit criteria for quantitative comparison of seismograms. Bulletin of the Seismological Society of America 96(5):1836–1850, doi:10.1785/0120060012

Lee VW, Trifunac MD (1985) Torsional accelerograms. Soil Dynamics and Earthquake Engineering 4(3):132–139

Lee VW, Trifunac MD (1987) Rocking strong earthquake accelerations. Soil Dynamics and Earthquake Engineering 6(2):75–89

Lee Y, Anderson JG, Zeng Y (2000) Evaluation of empirical ground-motion relations in southern California. Bulletin of the Seismological Society of America 90(6B):S136–S148

Levander AR (1988) Fourth-order finite-difference P-SV seismograms. Geophysics 53(11):1425–1436

LeVeque RJ (2002) Finite Volume Methods for Hyperbolic Problems. Cambridge University Press, Cambridge, UK

Luco JE, Apsel RJ (1983) On the Green’s functions for a layered half-space. Part I. Bulletin of the Seismological Society of America 73(4):909–929

Lysmer J, Drake LA (1972) A finite element method for seismology. In: Bolt BA (ed) Methods in Computational Physics. Academic Press Inc., New York, USA

Ma S, Archuleta RJ, Page MT (2007) Effects of large-scale surface topography on ground motions as demonstrated by a study of the San Gabriel Mountains, Los Angeles, California. Bulletin of the Seismological Society of America 97(6):2066–2079, doi:10.1785/0120070040

Mai PM, Beroza GC (2003) A hybrid method for calculating near-source, broadband seismograms: Application to strong motion prediction. Physics of the Earth and Planetary Interiors 137(1–4):183–199, doi:10.1016/S0031-9201(03)00014-1

Maupin V (2007) Introduction to mode coupling methods for surface waves. In: Advances in geophysics: advances in wave propagation in heterogeneous earth, vol 48, chap 2. Academic Press, London, UK, pp 127–155

McGuire RK (2004) Seismic Hazard and Risk Analysis. Earthquake Engineering Research Institute (EERI), Oakland, California, USA

Miyake H, Iwata T, Irikura K (2003) Source characterization for broadband ground-motion simulation: Kinematic heterogeneous source model and strong motion generation area. Bulletin of the Seismological Society of America 93(6):2531–2545, doi:10.1785/0120020183

Moczo P, Kristek J, Galis M, Pazak P, Balazovjech M (2007a) The finite-difference and finite-element modeling of seismic wave propagation and earthquake motion. Acta Physica Slovaca 57(2):177–406

Moczo P, Robertsson JOA, Eisner L (2007b) The finite-difference time-domain method for modeling of seismic wave propagation. In: Advances in geophysics: advances in wave propagation in heterogeneous Earth, vol 48, chap 8. Academic Press, London, UK, pp 421–516

Montaldo V, Kiremidjian AS, Thráinsson H, Zonno G (2003) Simulation of the Fourier phase spectrum for the generation of synthetic accelerograms. Journal of Earthquake Engineering 7(3):427–445

Mora P, Place D (1994) Simulation of the frictional stick-slip instability. Pure and Applied Geophysics 143(1–3):61–87

Motazedian D, Atkinson GM (2005) Stochastic finite-fault modeling based on a dynamic corner frequency. Bulletin of the Seismological Society of America 95(3):995–1010, doi:10.1785/0120030207

Mukherjee S, Gupta VK (2002) Wavelet-based generation of spectrum-compatible time-histories. Soil Dynamics and Earthquake Engineering 22(9–12):799–804

Murphy JR, O’Brien LJ (1977) The correlation of peak ground acceleration amplitude with seismic intensity and other physical parameters. Bulletin of the Seismological Society of America 67(3):877–915

Naeim F, Lew M (1995) On the use of design spectrum compatible time histories. Earthquake Spectra 11(1):111–127

Nau RF, Oliver RM, Pister KS (1982) Simulating and analyzing artificial nonstationary earthquake ground motions. Bulletin of the Seismological Society of America 72(2):615–636

Ólafsson S, Sigbjörnsson R (1995) Application of ARMA models to estimate earthquake ground motion and structural response. Earthquake Engineering and Structural Dynamics 24(7):951–966

Ólafsson S, Remseth S, Sigbjörnsson R (2001) Stochastic models for simulation of strong ground motion in Iceland. Earthquake Engineering and Structural Dynamics 30(9):1305–1331

Olsen K, Madariaga R, Archuleta RJ (1997) Three-dimensional dynamic simulation of the 1992 Landers earthquake. Science 278:834–838

Olsen KB, Day SM, Minster JB, Cui Y, Chourasia A, Faerman M, Moore R, Maechling P, Jordan T (2006) Strong shaking in Los Angeles expected from southern San Andreas earthquake. Geophysical Research Letters 33(L07305). doi:10.1029/2005GL025472

Oprsal I, Zahradnik J (2002) Three-dimensional finite difference method and hybrid modeling of earthquake ground motion. Journal of Geophysical Research 107(B8). doi:10.1029/2000JB000082

Ordaz M, Arboleda J, Singh SK (1995) A scheme of random summation of an empirical Green’s function to estimate ground motions from future large earthquakes. Bulletin of the Seismological Society of America 85(6):1635–1647

Panza GF (1985) Synthetic seismograms: The Rayleigh waves modal summation. Journal of Geophysics 58:125–145

Panza GF, Suhadolc P (1987) Complete strong motion synthetics. In: Bolt BA (ed) Seismic strong motion synthetics. Academic Press, Orlando, pp 153–204

Papageorgiou AS, Aki K (1983) A specific barrier model for the quantitative description of inhomogeneous faulting and the prediction of strong ground motion. Part I. Description of the model. Bulletin of the Seismological Society of America 73(3):693–702

Pavic R, Koller MG, Bard PY, Lacave-Lachet C (2000) Ground motion prediction with the empirical Green’s function technique: an assessment of uncertainties and confidence level. Journal of Seismology 4(1):59–77

Pitarka A, Irikura K, Iwata T, Sekiguchi H (1998) Three-dimensional simulation of the near-fault ground motion for the 1995 Hyogo-ken Nanbu (Kobe), Japan, earthquake. Bulletin of the Seismological Society of America 88(2):428–440

Pitarka A, Somerville P, Fukushima Y, Uetake T, Irikura K (2000) Simulation of near-fault strong-ground motion using hybrid Green’s functions. Bulletin of the Seismological Society of America 90(3):566–586

Place D, Mora P (1999) The lattice solid model to simulate the physics of rocks and earthquakes: Incorporation of friction. Journal of Computational Physics 150(2):332–372

Pousse G, Bonilla LF, Cotton F, Margerin L (2006) Non stationary stochastic simulation of strong ground motion time histories including natural variability: Application to the K-net Japanese database. Bulletin of the Seismological Society of America 96(6):2103–2117, doi:10.1785/0120050134

Power M, Chiou B, Abrahamson N, Bozorgnia Y, Shantz T, Roblee C (2008) An overview of the NGA project. Earthquake Spectra 24(1):3–21, doi:10.1193/1.2894833

Reiter L (1990) Earthquake Hazard Analysis: Issues and Insights. Columbia University Press, New York

Ripperger J, Mai PM, Ampuero JP (2008) Variability of near-field ground motion from dynamic earthquake rupture simulations. Bulletin of the Seismological Society of America 98(3):1207–1228, doi:10.1785/0120070076

Ruiz J, Baumont D, Bernard P, Berge-Thierry C (2007) New approach in the kinematic k^-2 source model for generating physical slip velocity functions. Geophysical Journal International 171(2):739–754, doi:10.1111/j.1365-246X.2007.03503.x

Sabetta F, Pugliese A (1996) Estimation of response spectra and simulation of nonstationary earthquake ground motions. Bulletin of the Seismological Society of America 86(2):337–352

Scherbaum F, Cotton F, Smit P (2004) On the use of response spectral-reference data for the selection and ranking of ground-motion models for seismic-hazard analysis in regions of moderate seismicity: The case of rock motion. Bulletin of the Seismological Society of America 94(6):2164–2185, doi:10.1785/0120030147

Scherbaum F, Cotton F, Staedtke H (2006) The estimation of minimum-misfit stochastic models from empirical ground-motion prediction equations. Bulletin of the Seismological Society of America 96(2):427–445, doi:10.1785/0120050015

Shi B, Brune JN (2005) Characteristics of near-fault ground motions by dynamic thrust faulting: Two-dimensional lattice particle approaches. Bulletin of the Seismological Society of America 95(6):2525–2533, doi:10.1785/0120040227

Shinozuka M (1988) Engineering modeling of ground motion. In: Proceedings of ninth world conference on earthquake engineering, vol VIII, pp 51–62

Shome N, Cornell CA, Bazzurro P, Carballo JE (1998) Earthquakes, records and nonlinear responses. Earthquake Spectra 14(3):469–500

Silva WJ, Lee K (1987) State-of-the-art for assessing earthquake hazards in the United States; report 24: WES RASCAL code for synthesizing earthquake ground motions. Miscellaneous Paper S-73-1, US Army Corps of Engineers

Silva W, Gregor N, Darragh B (1999) Near fault ground motions. Tech. rep., Pacific Engineering and Analysis, El Cerrito, USA, PG & E PEER—Task 5.A

Sokolov V, Wald DJ (2002) Instrumental intensity distribution for the Hector Mine, California, and the Chi-Chi, Taiwan, earthquakes: Comparison of two methods. Bulletin of the Seismological Society of America 92(6):2145–2162

Souriau A (2006) Quantifying felt events: A joint analysis of intensities, accelerations and dominant frequencies. Journal of Seismology 10(1):23–38, doi:10.1007/s10950-006-2843-1

Spudich P, Xu L (2003) Software for calculating earthquake ground motions from finite faults in vertically varying media. In: IASPEI handbook of earthquake and engineering seismology, chap 85.14. Academic Press, Amsterdam, The Netherlands, pp 1633–1634

Spudich P, Joyner WB, Lindh AG, Boore DM, Margaris BM, Fletcher JB (1999) SEA99: A revised ground motion prediction relation for use in extensional tectonic regimes. Bulletin of the Seismological Society of America 89(5):1156–1170

Strasser FO, Bommer JJ, Abrahamson NA (2008) Truncation of the distribution of ground-motion residuals. Journal of Seismology 12(1):79–105, doi:10.1007/s10950-007-9073-z

Swanger HJ, Boore DM (1978) Simulation of strong-motion displacements using surface-wave modal superposition. Bulletin of the Seismological Society of America 68(4):907–922

Takeo M (1985) Near-field synthetic seismograms taking into account the effects of anelasticity: The effects of anelastic attenuation on seismograms caused by a sedimentary layer. Meteorology & Geophysics 36(4):245–257

Tavakoli B, Pezeshk S (2005) Empirical-stochastic ground-motion prediction for eastern North America. Bulletin of the Seismological Society of America 95(6):2283–2296, DOI: 10.1785/0120050030

Tinti E, Fukuyama E, Piatanesi A, Cocco M (2005) A kinematic source-time function compatible with earthquake dynamics. Bulletin of the Seismological Society of America 95(4):1211–1223, DOI: 10.1785/0120040177

Trifunac MD (1971) A method for synthesizing realistic strong ground motion. Bulletin of the Seismological Society of America 61(6):1739–1753

Trifunac MD (1976) Preliminary analysis of the peaks of strong earthquake ground motion – dependence of peaks on earthquake magnitude, epicentral distance, and recording site conditions. Bulletin of the Seismological Society of America 66(1):189–219

Trifunac MD (1990) Curvograms of strong ground motion. Journal of The Engineering Mechanics Division, ASCE 116:1426–1432

Trifunac MD (2007) Recording strong earthquake motion—instruments, recording strategies and data processing. Tech. Rep. CE 07-03. Department of Civil Engineering, University of Southern California

Trifunac MD, Brady AG (1975) On the correlation of seismic intensity scales with the peaks of recorded strong ground motion. Bulletin of the Seismological Society of America 65(1):139–162

Tufte ER (2006) Beautiful Evidence. Graphics Press, Cheshire, Connecticut, USA

Tumarkin A, Archuleta R (1994) Empirical ground motion prediction. Annali di Geofisica XXXVII(6):1691–1720

Vanmarcke EH (1979) Representation of earthquake ground motion: Scaled accelerograms and equivalent response spectra. State-of-the-Art for Assessing Earthquake Hazards in the United States 14, Miscellaneous Paper S-73-1, U.S. Army Corps of Engineers, Vicksburg, Mississippi, USA

Vanmarcke EH, Gasparini DA (1976) Simulated earthquake motions compatible with prescribed response spectra. Tech. Rep. R76-4. Dept. of Civil Engineering, Massachusetts Inst. of Technology, Cambridge, USA

Virieux J, Madariaga R (1982) Dynamic faulting studied by a finite difference method. Bulletin of the Seismological Society of America 72(2):345–369

Wald DJ, Quitoriano V, Heaton TH, Kanamori H (1999) Relationships between peak ground acceleration, peak ground velocity, and modified Mercalli intensity in California. Earthquake Spectra 15(3):557–564

Wang R (1999) A simple orthonormalization method for stable and efficient computation of Green’s functions. Bulletin of the Seismological Society of America 89(3):733–741

Wang GQ, Boore DM, Tang G, Zhou X (2007) Comparisons of ground motions from collocated and closely-spaced 1-sample-per-second Global Positioning System (GPS) and accelerograph recordings of the 2003, M6.5 San Simeon, California, earthquake in the Parkfield Region. Bulletin of the Seismological Society of America 97(1B):76–90, DOI: 10.1785/0120060053

Watson-Lamprey J, Abrahamson N (2006) Selection of ground motion time series and limits on scaling. Soil Dynamics and Earthquake Engineering 26(5):477–482

Wennerberg L (1990) Stochastic summation of empirical Green’s functions. Bulletin of the Seismological Society of America 80(6):1418–1432

Wong HL, Trifunac MD (1978) Synthesizing realistic ground motion accelerograms. Tech. Rep. CE 78-07. Department of Civil Engineering, University of Southern California

Woodhouse JH (1974) Surface waves in a laterally varying layered structure. Geophysical Journal of the Royal Astronomical Society 37:461–490

Zeng Y, Anderson JG (1995) A method for direct computation of the differential seismogram with respect to the velocity change in a layered elastic solid. Bulletin of the Seismological Society of America 85(1):300–307

Zeng Y, Anderson JG, Yu G (1994) A composite source model for computing realistic synthetic strong ground motions. Geophysical Research Letters 21(8):725–728

Acknowledgements

The design of the diagram in this article has benefited from advice contained in the book by Tufte (2006). Some of the work presented in this article was funded by the ANR project ‘Quantitative Seismic Hazard Assessment’ (QSHA). The rest was funded by internal BRGM research projects. We thank the rest of the BRGM Seismic Risks unit for numerous discussions on the topics covered in this article. Finally, we thank two anonymous reviewers for their careful and detailed reviews, which led to significant improvements to this article.

Cite this article

Douglas, J., Aochi, H. A Survey of Techniques for Predicting Earthquake Ground Motions for Engineering Purposes. Surv Geophys 29, 187–220 (2008). https://doi.org/10.1007/s10712-008-9046-y

DOI: https://doi.org/10.1007/s10712-008-9046-y