Abstract

This paper discusses the role of levels and level-bound theoretical terms in neurobiological explanations under the presupposition of a regularity theory of constitution. After presenting the definitions of the constitution relation and of the notion of a mechanistic level in the sense of the regularity theory, the paper develops a set of inference rules that allow one to determine whether two mechanisms referred to by one or more accepted explanations belong to the same level or to different levels. The rules are characterized as underlying level distinctions in neuroscience research.



1 Introduction

Taking explanatory practice in physics as a starting point, much philosophy of science in the 20th century presupposed that generally accepted explanations in the special sciences, too, conform either to a deductive-nomological (cf. Hempel and Oppenheim 1948; Hempel 1965) or to a unificationist model of explanation (cf. Friedman 1974; Kitcher 1989). Both of these models assign laws of nature a central role in explanatory practice in the sense that they require every acceptable explanans to contain at least one law of nature.

The law-affine picture of explanation has been attacked by, among others, Bechtel and Richardson (1993), Machamer et al. (2000), Bechtel and Abrahamsen (2005), and Craver (2002, 2007). A view common to these authors is that, notably in neurobiology, the explanatory work is done by a description of the mechanism responsible for a to-be-explained phenomenon. Lawlike regularities subsuming the phenomenon play a secondary role in an explanation, if they play a role at all (however, cf. Fazekas and Kertész 2011).

The definition of a mechanism given by Machamer et al. describes it as consisting of “...entities and activities organized such that they are productive of regular changes from start or set-up to finish or termination conditions.” (Machamer et al. 2000, 3). Bechtel and Abrahamsen extend this definition by describing a mechanism as “...a structure performing a function in virtue of its component parts, component operations, and their organization. The orchestrated functioning of the mechanism is responsible for one or more phenomena.” (Bechtel and Abrahamsen 2005, 423)

It is important to distinguish what Machamer et al. describe as “being productive of regular changes from start or set-up to finish or termination conditions” and what Bechtel and Abrahamsen call being “responsible for one or more phenomena” (emphases added) from causing a phenomenon or event. When mechanisms are characterized as producing, or as being responsible for, a phenomenon, they are not believed to precede the phenomenon temporally, as is definitional of causes. Rather, the idea is that the mechanisms underlie, realize, or instantaneously determine the initially identified phenomenon. Philosophers of neuroscience have sometimes characterized this non-causal and instantaneous relation between the phenomena and their mechanisms as a relation of “constitution”, “composition”, or “constitutive relevance”.Footnote 1 Since mechanisms can sometimes become explananda themselves, in the sense that their occurrence is explained by other “lower-level” mechanisms constituting them, the idea of a distinction of “mechanistic levels” has entered the picture.

With the introduction of these two notions, a host of new questions has emerged within the philosophy of neuroscience. An obvious problem to be solved in this context is to offer a satisfactory conceptual analysis of the constitution relation, assuming it is a relation at all. Call this the “definitional question”. Furthermore, certain general questions arise concerning the establishment and discovery of singular mechanistic explanations. These questions are analogous to questions about causal discovery and inference. Even when it is agreed that causation has something to do with INUS-regularities (cf. Mackie 1974), it is not immediately clear what procedures and inferences allow us to establish a true causal INUS-regularity statement. Call this the “methodological question”. Determining whether two mechanisms referred to by one or more accepted explanations belong to the same level or to different levels can be non-trivial in the course of integrating certain research results. Call this the “integrative question”. Characterizing the status of mechanistic explanations and the general methodology for their establishment in relation to concrete methods in neuroscience that are based on, for instance, mathematical modeling and curve fitting of various kinds is a further problem. This may be called the “comparative question”. Moreover, deciding which non-fundamental mechanistic terms, if any at all, ought to have a place in a completed explanation of a given phenomenon can be a matter of dispute. This is the “simplification question”. Finally, when a completed explanation in fact makes reference to mechanisms located on different levels, a deep philosophical problem concerns the ontological interpretability of the mechanistic terms occurring in that explanation. Call this the “ontological question”.

This paper sides with the mechanists in their general approach to explanation. It takes the mechanistic framework to be widely adequate for explanation in neuroscience, and it mainly tries to refine the response that the regularity theory of constitution has given to the “definitional question” (cf. Harbecke 2010; Couch 2011) by providing new answers to the “methodological” and the “integrative” questions on the basis of this theory. The aim will be realized by showing how certain inference rules formulated within the regularity framework guide level distinctions and level identifications in light of certain kinds of empirical evidence.

The paper is structured as follows. Section 2 sketches an example of a currently accepted explanation in neurobiology that is used as a test case for the subsequent discussions. Some existing answers to the definitional question are presented in Sect. 3, namely the manipulationist and the regularity theories of constitution. The latter of the two is characterized as more powerful than the former. The definition of a “level” in the regularity framework is explicated, and it is shown how the theory reconstructs parts of the example mentioned in Sect. 2. Section 4 develops the mentioned inference rules as plausible answers to the integrative question. Section 5 summarizes the results and points to some puzzles that will have to be left for future research, among which are the “comparative”, “simplification”, and “ontological” questions.

2 An Example

The test case for theories about the logical structure of neurobiological explanations is always their application to certain widely accepted research results in neuroscience. One such result that has become a standard case in the recent philosophy of neurobiology is the description of the neural mechanisms underlying spatial memory acquisition in rats (cf. Churchland and Sejnowski 1992, ch. 5; Craver and Darden 2001, 115–119; Craver 2002, Sect. 2; Bickle 2003, chs. 3–5; Craver 2007, 165–170). I will present the main results of research on this topic in this section.

The received explanation of spatial memory and learning in rats identifies the phenomenon of long-term potentiation (LTP) at neural synapses within the rat’s hippocampus as a mechanism underlying memory formation. Roughly speaking, LTP is an enhancement in synaptic efficacy following a high-frequency stimulation of an afferent pathway. Long before the discovery of hippocampal LTP, it was known that lesions in the hippocampus of rats are correlated with various kinds of learning impairments (cf. Kimble 1963). After LTP had been discovered in the rabbit perforant path (cf. Bliss and Lømo 1973), it was immediately identified as a candidate mechanism underlying the phenomenon of learning (Lømo 2003, 618). The activity-dependent synaptic plasticity characteristic of LTP is precisely what one would expect to find in a neural mechanism determining memory. Morris et al. (1982) eventually showed that the learning impairment correlates with hippocampal lesions and, hence, with the absence of hippocampal LTP in this region.

Obviously, research did not stop here. Rather, LTP was itself subsequently analyzed in terms of the lower-level mechanisms underlying it. In particular, as Harris et al. (1984) were able to show, hippocampal LTP involves activation of \(N\)-methyl-D-aspartate (NMDA) receptors on CA1 pyramidal cells. In a series of experiments conducted on rats navigating through a Morris Water Maze (cf. Morris 1984; Morris et al. 1986; Davis et al. 1992), Morris and his collaborators then showed that a selective inhibition of hippocampal NMDA-receptor activity leads to an impairment of spatial learning similar to that induced by hippocampal lesions.

The mechanism underlying NMDA-receptor activation is extremely complex. It is now believed that the NMDA-receptor channels of pyramidal cells are blocked by Mg\(^{2+}\) ions at resting potential (cf. Churchland and Sejnowski 1992, 255–270). If the postsynaptic membrane is strongly depolarized through a train of high-frequency stimuli and through the activity of other receptors, the Mg\(^{2+}\) ions are repelled, whereby the blockade of the NMDA receptors is lifted. As a result, an increased flux of Na\(^{+}\), K\(^{+}\), and Ca\(^{2+}\) ions through the channels occurs. The resulting Ca\(^{2+}\) rise within the dendrite then activates calcium-dependent kinases (Ca\(^{2+}\)/calmodulin-dependent kinase and protein kinase C). These processes add new receptors to the postsynaptic dendrite, alter their sensitivity to glutamate, or increase their capacity to transmit Ca\(^{2+}\) ions (cf. Toni et al. 1999). Along all these pathways, the sensitivity of the postsynaptic receptors increases, an effect that can last for up to several hours.

With these results in the background, the neurobiological explanation of spatial memory has been described as involving at least the following central phenomena and mechanisms (cf. also Craver and Darden 2001, 118; Craver 2007, 166):

1. The development of spatial memory in the rat
2. The generating of a spatial map within the rat’s hippocampus
3. The long-term potentiation of synapses of CA1 pyramidal cells
4. The activation of NMDA-receptors at the synapses of CA1 pyramidal cells

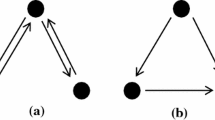

The explanation itself then essentially consists in a conjunction of claims about the constitutive relationships holding between these phenomena and mechanisms. Arguably, it is this relation of constitution that scientists generally have in mind when they say that a mechanism “is responsible for” (Bliss and Lømo 1973, 331), “gives rise to” (Morris et al. 1986, 776), “plays a crucial role in” (Davis et al. 1992, 32), “contributes to”, “forms the basis of” (both Bliss and Collingridge 1993, 38), “underlies” (Lømo 2003, 619; Frey et al. 1996, 703), or “is constitutively active in” (Malenka et al. 1989, 556) a phenomenon. Along with this conjunctive claim stating various constitutive relationships comes a more or less explicit distinction of levels that has sometimes been illustrated by pictures such as Fig. 1. If this reconstruction of the explanation is adequate, and if the example summarized above is paradigmatic for theories in neurobiology, it is evident that the notion of constitution plays a central role in standard neurobiological explanations.

Fig. 1 Levels in the hierarchical organization of the realization of spatial memory in rats. Taken from Craver 2007, 166

3 Some Answers to the Definitional Question

Since the relata of mechanistic constitution do not have distinct instances, constitution is not a causal relation. Not having distinct instances does not imply that the instances are identical. It merely says that the space-time regions instantiating the relata of mechanistic constitution overlap. But since constitution is not causation, it becomes significant to ask what relation precisely working scientists have in mind when they describe a mechanism as “responsible for”, “giving rise to”, “playing a crucial role in”, “contributing to”, “forming the basis of”, “underlying”, or “being constitutively active in” a phenomenon.

The literature in the philosophy of neurobiology currently contains two main proposals for an analysis of the notion of mechanistic constitution. They both have in common that they distinguish constitution from identity, although the latter is typically considered a borderline case of constitution. Moreover, both approaches take for granted that the notion of supervenience as it has been discussed mainly in the philosophy of mind (cf. McLaughlin and Bennett 2008) is of little help for a better understanding of constitution. One of the reasons is that supervenience is construed as a relation between sets of properties, whereas constitution as it is referred to in neurobiological explanations is clearly either a relation between instantiations of mechanisms (= mechanistic events) or types of mechanistic events.Footnote 2

One of the mentioned theories has been termed a “manipulationist” analysis. It has been defended notably by Craver (2007). The second has been described as a “regularity theory” of constitution. The basic ideas have been developed by Harbecke (2010) and Couch (2011).

3.1 Manipulation and Mechanistic Constitution

Craver (2007) construes the relata of constitution, or in his terms: of “constitutive relevance”, as certain kinds of complex events which can be referred to by first-order sentences such as “\(x\) \(\phi \)’s” and “\(y\) \(\psi \)’s”. According to the author, a mechanism such as \(x\)’s \(\phi \)-ing is constitutively relevant to, or simply constitutes, a given phenomenon referred to as \(y\)’s \(\psi \)-ing, if, and only if:

(i) [\(x\)] is part of [\(y\)]; (ii) in the conditions relevant to the request for explanation there is some change to [\(x\)]’s \(\phi \)-ing that changes [\(y\)]’s \(\psi \)-ing; and (iii) in the conditions relevant to the request for explanation there is some change to [\(y\)]’s \(\psi \)-ing that changes [\(x\)]’s \(\phi \)-ing. (Craver 2007, 153)

A “change” as referred to in this quote is thought of by Craver as an ideal manipulation or intervention as it is familiar from interventionist theories of causation (cf. Woodward 2003).Footnote 3 To be sure, conditions (ii) and (iii) can also be read as specifying a connection between types given that the dependencies of the relevant changes of \(x\)’s \(\phi \)-ing and \(y\)’s \(\psi \)-ing are presupposed as grounded in certain regular dependencies between the types \(\phi \) and \(\psi \). In this sense, the definition can be interpreted as characterizing general constitution as well. Whether Craver’s definition is supposed to specify general or singular constitution makes little difference for the criticism that has been expressed against it from different sides.

The most severe objection against the account points out that Craver’s attempt to customize Woodward’s central ideas for an analysis of constitution fails. Woodward’s definition of an independent intervention \(I\) demands, among other things, that “[a]ny directed path from [the intervention] \(I\) to [the effect variable] \(Y\) goes through [the cause variable] \(X\)” (Woodward 2003, 98). Since causes have no spatiotemporal overlap with their effects, there always is a fact of the matter as to whether a given intervention fulfills this criterion.

If mechanistic constitution is defined analogously, the problem is that a corresponding criterion for an independent intervention that acts only upon the mechanism constituting a given phenomenon is not satisfiable in any obvious way. In particular, the requirement would demand that an antagonist injected into the rat’s brain that blocks NMDA receptors affect spatial learning not directly but only via the blocking of NMDA-receptor activity. However, since both NMDA-receptor activity and spatial memory acquisition take place in the very same spatiotemporal region, this seems questionable. Any intervention on a macro-phenomenon such as spatial learning is “fat-handed” with respect to the underlying mechanisms such as NMDA-receptor activity. Surgical top-down interventions are generally impossible. Moreover, manyFootnote 4 bottom-up manipulations have the same feature: there is no fact of the matter as to whether a massive injection of the antagonist affects spatial learning directly or indirectly. Since the independent intervention criterion is an essential conceptual ingredient in the mutual manipulability account of mechanistic constitution, the approach faces a serious challenge.Footnote 5

3.2 Regularity Constitution

The regularity-based analysis of mechanistic constitution has been developed partly as a response to the unsatisfiability problem reconstructed in the previous section. In contrast to the manipulationist theory, the regularity theory explicitly declares general constitution, i.e. constitution between types of mechanistic events, to be the primary analysandum, where types are sets of individuals. This move is analogous to choices made by regularity theories of causation (cf. Mackie 1974; May 1999; Graßhoff and May 2001; Baumgartner 2008). Singular constitution, i.e. constitution between instantiations of mechanisms, is to be interpreted derivatively in terms of general constitution. The choice is ontologically committing in the sense that it transforms the “components and their activities” into “compactivities”, so to speak. For instance, the property of “being activated” that is instantiated by an NMDA-receptor becomes “hosting an active NMDA-receptor”, a property or type that is then had by a space-time region. Space-time regions are the only individuals accepted into the ontology of this framework.

This certainly is a non-standard way of speaking about mechanisms. Craver, for instance, typically characterizes mechanisms as an activity “\(\phi \)-ing” of one or more objects/entities denoted by “\(x\)”, “\(y\)” etc. In other words, mechanisms, according to Craver, are events. Nevertheless, since science is typically interested not in events but in kinds of events, the commitment to an ontology consisting of space-time regions and mechanistic types may be more adequate to reconstruct pertinent explanations.

For the definition of regularity constitution, mechanistic types are expressed by Greek letters ‘\(\phi \)’ and ‘\(\psi \)’. Furthermore, capital letters ‘\(\mathbf {X}\)’, ‘\(\mathbf {X}_1\)’, ‘\(\mathbf {X}_2\)’,...,‘\(\mathbf {X}_n\)’ are used to express conjunctions of types that can be co-instantiated (either in the same individual or in “co-located” individuals). The formulation goes as follows (cf. Harbecke 2010, 275–278):Footnote 6

- Constitution: A mechanistic type \(\phi \) constitutes another mechanistic type \(\psi \) (written as “\(\mathbf {C}\phi \psi \)”) if, and only if:

(i) \(\phi \) is contained in a minimally sufficient condition \(\phi \mathbf {X}_1\) of \(\psi \), such that...

(ii) \(\phi \mathbf {X}_1\) is a disjunct in a disjunction \(\phi \mathbf {X}_1 \vee \mathbf {X}_2\vee \ldots \vee \mathbf {X}_n\) of type conjunctions minimally sufficient for \(\psi \) that is minimally necessary for \(\psi \), such that...

(iii) if \(\phi \) and \(\mathbf {X}_1\) are co-instantiated, then (a) their instances are a mereological part of an instance of \(\psi \), and (b) this instance of \(\psi \) is a mereological part of the mentioned fused instances of \(\phi \) and \(\mathbf {X}_1\).

The main idea underpinning this definition is that mechanistic constitution is a relation between mechanistic types that are regularly, but not redundantly, co-instantiated such that their instances are mereologically related.Footnote 7 Since often no single mechanism is sufficient for the occurrence of a given phenomenon, the definition makes reference to complex mechanisms involving a range of mechanistic properties. Additionally, since sometimes more than one mechanism can secure the occurrence of the given phenomenon, the definition also allows for alternative constitutive conditions. The mereological requirement is introduced in order to ensure that the mechanisms constituting a given phenomenon must occur in the same place and time as the latter. All of these ideas are expressed by conditions (i)–(iii)(a).
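As a rough illustration of conditions (i) and (ii), the following Python sketch checks minimal sufficiency and minimal necessity over a small table of cases. It rests on several assumptions that are not part of the theory itself: each case stands for a space-time region and is represented by the set of mechanistic types it instantiates; co-instantiation in a finite table stands in for the lawful regularities the definition actually requires; a conjunction only counts as sufficient if it is instantiated at least once; and the mereological condition (iii) is not modelled. The type names, including the alternative condition ‘K’, are hypothetical.

```python
from itertools import combinations

# A case is a space-time region, represented (by assumption) as the
# frozenset of mechanistic types it instantiates.
Case = frozenset


def sufficient(conj, psi, cases):
    """conj is sufficient for psi if it is instantiated at least once and
    every case instantiating all of conj also instantiates psi."""
    hits = [c for c in cases if conj <= c]
    return bool(hits) and all(psi in c for c in hits)


def minimally_sufficient(conj, psi, cases):
    """Sufficient for psi, and no proper sub-conjunction is sufficient."""
    if not sufficient(conj, psi, cases):
        return False
    return not any(
        sufficient(frozenset(sub), psi, cases)
        for k in range(len(conj))
        for sub in combinations(conj, k)
    )


def necessary(disjuncts, psi, cases):
    """Every case instantiating psi instantiates at least one disjunct."""
    return all(any(d <= c for d in disjuncts) for c in cases if psi in c)


def minimally_necessary(disjuncts, psi, cases):
    """Necessary for psi, and no proper sub-disjunction is necessary."""
    if not necessary(disjuncts, psi, cases):
        return False
    return not any(
        necessary(sub, psi, cases)
        for k in range(len(disjuncts))
        for sub in combinations(disjuncts, k)
    )


def satisfies_i_and_ii(phi, x1, disjuncts, psi, cases):
    """Conditions (i) and (ii) of Constitution for phi, given the rest of
    its conjunction x1 and the full disjunction; (iii) is omitted."""
    conj = Case({phi}) | x1
    return (
        conj in disjuncts
        and all(minimally_sufficient(d, psi, cases) for d in disjuncts)
        and minimally_necessary(disjuncts, psi, cases)
    )


# Hypothetical data: F, F1, F2 jointly yield G; K is an alternative route.
cases = [
    Case({"F", "F1", "F2", "G"}),
    Case({"F", "F1"}),
    Case({"F", "F2"}),
    Case({"F1", "F2"}),
    Case({"K", "G"}),
    Case(),
]
disjunction = [Case({"F", "F1", "F2"}), Case({"K"})]
print(satisfies_i_and_ii("F", Case({"F1", "F2"}), disjunction, "G", cases))
```

In this toy table the sketch prints True: \(FF_1F_2\) and the hypothetical \(K\) are each minimally sufficient for \(G\), and their disjunction is minimally necessary for it, whereas no proper part of \(FF_1F_2\) suffices on its own.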

Condition (iii)(b) is added for the following reasons. First, it captures an intuitive relationship between the mechanisms realizing a given phenomenon and that phenomenon, in the sense that an instantiation of the former is required to occupy no less space or time than the phenomenon it induces. It would be strange to think that, when a set of neural mechanisms constituting a learning process in the rat occurs, that learning process partly takes place outside the rat. Or, if it actually does, condition (iii)(b) demands that further lower-level mechanistic aspects outside the rat’s body also be relevant.

The second reason is that, with condition (iii)(b), Constitution provides a criterion for reduction. Since mutual parthood implies identity, mutual constitution, for instance, of a conjunction of types \(\phi \mathbf {X}_1\) with a type \(\psi \) ensures that \(\phi \mathbf {X}_1\) and \(\psi \) are not only sufficient and necessary for one another but also coextensive. And since lawful coextensiveness can reasonably be considered sufficient for type identity (cf. Mellor 1977, 308–309), it can be inferred that \(\phi \mathbf {X}_1 = \psi \).Footnote 8

To see how the definition interprets explanations presented by working scientists, consider again how the acquisition of spatial memory by rats was made intelligible by a specification of the underlying neural mechanisms (cf. Sect. 2). The essence of the described research results was the claim that the acquisition of spatial memory is (partly) constituted by the generation of a spatial map within the rat’s hippocampus, which in turn is (partly) constituted by a long-term potentiation of synapses of CA1 pyramidal cells, which in turn is (partly) constituted by NMDA-receptor activity. If the second-order operator ‘\(\Rightarrow _c\)’ is used to capture the criteria specified by Constitution (from now on, I will sometimes speak of a type conjunction being “\(c\)-minimally sufficient” for another type in this sense) and ‘\(\mathbf {Y}_1\)’, ‘\(\mathbf {Y}_2\)’,...,‘\(\mathbf {Y}_n\)’ are used to express disjunctions of conjunctions of properties all of which are minimally sufficient for the type on the right-hand side of the biconditional, the above-mentioned research results can be expressed by the following proposition:

\[(F\mathbf {X}_1 \vee \mathbf {Y}_1 \Rightarrow _c G) \wedge (G\mathbf {X}_2 \vee \mathbf {Y}_2 \Rightarrow _c H) \wedge (H\mathbf {X}_3 \vee \mathbf {Y}_3 \Rightarrow _c I) \qquad (\text{P}_{SL}')\]

where the type symbols are intended as follows:

- \(I\): The development of spatial memory in the rat
- \(H\): The generating of a spatial map within the rat’s hippocampus
- \(G\): The long-term potentiation of pyramidal cells in the hippocampus
- \(F\): The activation of NMDA-receptors at the synapses of CA1 pyramidal cells

Proposition P \(_{SL}'\) says: “(If NMDA-receptor activation at the synapses of certain neurons within a rat’s hippocampus is instantiated together with certain other properties in an appropriate way, then a long-term potentiation of CA1 pyramidal cells is instantiated in the same place at the same time) and (If a long-term potentiation of CA1 pyramidal cells is instantiated together with certain other properties, then...) and...” and so on. The central hypothesis associated with the regularity theory of constitution is that this is essentially the relationship that scientists have in mind when they say that a mechanism “is responsible for”, “gives rise to”, “plays a crucial role in”, “contributes to”, “forms the basis of”, “underlies”, or “is constitutively active in” a phenomenon.

Note that P \(_{SL}'\) is still fairly cautious in character. In particular, it remains silent about which other types occur within the relevant \(c\)-minimally sufficient conditions and whether there are further \(c\)-minimally sufficient conditions for the types occurring on the right-hand side of the conditionals. This actually captures well the way a material theory is usually expressed at any given point in the history of neurobiological research. As research goes on, the material theory is extended piecemeal and, in the optimal case, completed at some point.

For instance, suppose that, after more research has been conducted through the implementation of adequate difference tests,Footnote 9 some of those types that in conjunction with \(F\) are \(c\)-minimally sufficient for \(G\) have been identified. These types, call them ‘\(F_1\)’ and ‘\(F_2\)’, can perhaps be thought of as further mechanistic types involving other receptors at the neuron’s synapses. Based on the empirical findings, the initial theory P \(_{SL}'\) is now transformed into the theory P \(_{SL}''\), which has extracted some of the types over which \(\mathbf {X}_1\) initially quantified and has made them explicit (\(\mathbf {X}_1\) is transformed into \(\mathbf {X}'_1\), as the latter quantifies over fewer types than the former):

\[(FF_1F_2\mathbf {X}'_1 \vee \mathbf {Y}_1 \Rightarrow _c G) \wedge (G\mathbf {X}_2 \vee \mathbf {Y}_2 \Rightarrow _c H) \wedge (H\mathbf {X}_3 \vee \mathbf {Y}_3 \Rightarrow _c I) \qquad (\text{P}_{SL}'')\]

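Once \(F_1\) and \(F_2\) are identified, it may turn out that the type conjunction \(FF_1F_2\) is already \(c\)-minimally sufficient for \(G\) and that, hence, \(\mathbf {X}'_1\) is in fact empty. In this case, the initial material theory will be transformed into P \(_{SL}'''\):

\[(FF_1F_2 \vee \mathbf {Y}_1 \Rightarrow _c G) \wedge (G\mathbf {X}_2 \vee \mathbf {Y}_2 \Rightarrow _c H) \wedge (H\mathbf {X}_3 \vee \mathbf {Y}_3 \Rightarrow _c I) \qquad (\text{P}_{SL}''')\]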

Even after P \(_{SL}'''\) has been established, it may still not be clear whether, for instance, \(\mathbf {Y}_1\) is empty or not, i.e. whether the type conjunction \(FF_1F_2\) is the only \(c\)-minimally sufficient condition of \(G\). (As it happens, Grover and Teyler (1992) have shown that \(\mathbf {Y}_1\) is not empty, as there are forms of LTP in hippocampal area CA3 that are not constituted by NMDA-receptor activation.) If, however, after neurobiology had been completed, \(\mathbf {Y}_1\) turned out to be empty (because no further \(c\)-minimally sufficient conditions for \(G\) could be identified), the first conjunct of P \(_{SL}'''\) would take the following final form:

\[FF_1F_2 \Rightarrow _c G\]

In such a case, it can be inferred that \(G\) is identical to the type conjunction \(FF_1F_2\), i.e.: \(FF_1F_2 = G\). The reason is that, in this case, \(FF_1F_2\) is not only minimally sufficient and minimally necessary for, but also co-extensive with, \(G\). In other words, the constitution is mutual. And since a lawful coextensiveness of types can be considered sufficient for an identity of types (cf. again Mellor 1977, 308–309), the identity between the mutually constituting types follows. On the other hand, should \(\mathbf {Y}_1\) not turn out empty, the type conjunction \(FF_1F_2\) is not the only \(c\)-minimally sufficient condition for \(G\) and it is therefore not identical to \(G\). This shows how Constitution provides an empirical criterion for the reduction and non-reduction of types.
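The step from mutual constitution to identity can be illustrated with a small Python sketch that compares the extension of the conjunction \(FF_1F_2\) with that of \(G\). It assumes, purely for illustration, a finite list of hypothetical regions, each represented by the set of types it instantiates; the extra type ‘K’ is a hypothetical stand-in for a non-empty \(\mathbf {Y}_1\). In a finite table, equal extensions are at most evidence for the lawful coextensiveness that the argument (cf. Mellor 1977) actually requires.

```python
def extension(types, regions):
    """Indices of the regions that instantiate every type in `types`."""
    return {i for i, r in enumerate(regions) if set(types) <= r}


def coextensive(conj, psi, regions):
    """True if conj and psi are instantiated in exactly the same regions."""
    return extension(conj, regions) == extension({psi}, regions)


FF1F2 = {"F", "F1", "F2"}

# Y_1 non-empty: one G-region is reached via the hypothetical route K.
with_alternative = [{"F", "F1", "F2", "G"}, {"K", "G"}, {"F", "F1"}]
# Y_1 empty: FF1F2 is the only c-minimally sufficient condition of G.
without_alternative = [{"F", "F1", "F2", "G"}, {"F", "F1"}, set()]

print(coextensive(FF1F2, "G", with_alternative))     # False
print(coextensive(FF1F2, "G", without_alternative))  # True
```

Only in the second table do the two extensions coincide, which mirrors the contrast drawn above between the reduction and the non-reduction of \(G\).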

4 The Integrative Question

Apart from offering a faithful reconstruction of certain examples of neurobiological explanations, the regularity theory of constitution also provides a way to specify a distinction of mechanistic “levels” in the sense mentioned in Sect. 1. As mechanistic types were presupposed by the regularity theory as the primary relata of constitution, a level is bound to be a scientifically significant set of mechanistic or phenomenal types. For instance, the set of mechanisms involving functional brain regions may be classified as a level. Furthermore, the set of mechanisms involving activities of neural networks may be another one.

To be sure, the mere definition of a level as a scientifically significant set of mechanistic or phenomenal types does not provide any principles for deciding whether two mechanisms referred to by one or more accepted explanations belong to the same level, or to different levels. Moreover, the theory says nothing about how levels relate to each other in the first place. Section 1 referred to this problem as the “integrative question”, as it concerns the integration of accepted research results into a single theory.

This section aims to provide some general principles that specify a range of admissible integrative inferences and rules for level distinctions. The claim behind this enterprise is that working scientists must have in mind some rules reminiscent of the abstract principles described here when they integrate constitutive explanations and distinguish between different levels, given that the relevant integrations and level distinctions are justifiable at all. In order to state the principles with sufficient precision, I use calligraphic letters ‘\({\mathcal {L}}_1\)’, ‘\({\mathcal {L}}_2\)’,\(\ldots \), ‘\({\mathcal {L}}_n\)’ to denote levels. Variables running over the domain of levels will be symbolized as ‘\({\fancyscript{L}}_i\)’, ‘\({\fancyscript{L}}_j\)’, and ‘\({\fancyscript{L}}_h\)’.

As a very fundamental principle of the integrative project, each mechanistic type is taken to belong to a single level only. Much of what has been said about constitution would stand without this assumption, but it seems to give a more realistic picture of the notion as it is used in science.

- LL1: For any mechanistic type \(\phi \) and any levels \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\), if \(\phi \) belongs to \({\fancyscript{L}}_i\) as well as to \({\fancyscript{L}}_j\), then \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\) are identical. In short: \(\forall \phi \forall {\fancyscript{L}}_i( {\fancyscript{L}}_i\phi \rightarrow \forall {\fancyscript{L}}_j( {\fancyscript{L}}_j\phi \rightarrow {\fancyscript{L}}_i = {\fancyscript{L}}_j))\).

To simplify notation, I will also introduce a two-place third-order type ‘\(\mathbf {C}(\_,\_)\)’ that is meant to express the notion of constitution according to the definition of Sect. 3.2. Additionally, I introduce a two-place relation “...is lower than, or identical to,...”, which I will symbolize below as ‘\(\le \)’. This relation, in turn, is definable within the regularity approach in terms of the notion of constitution. The idea can be expressed as follows:

- LL2: For any levels \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\), \({\fancyscript{L}}_i\) is lower than, or identical to, \({\fancyscript{L}}_j\) if, and only if, there are mechanistic types \(\phi \) and \(\psi \) such that \(\phi \) is a member of \({\fancyscript{L}}_i\), \(\psi \) is a member of \({\fancyscript{L}}_j\), and \(\phi \) constitutes \(\psi \) (cf. Harbecke 2010, 278). In short: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j({\fancyscript{L}}_i\le {\fancyscript{L}}_j \leftrightarrow \exists \phi \exists \psi ({\fancyscript{L}}_i\phi \wedge {\fancyscript{L}}_j\psi \wedge \mathbf {C}\phi \psi ))\).
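LL1 and LL2 can be sketched in Python for the example of Sect. 2. The level labels ‘L1’ to ‘L4’, the assignment of \(F\), \(G\), \(H\), and \(I\) to them, and the list of constitution facts are hypothetical illustrations read off the research results summarized there; the reflexive pairs are added because constitution, as defined in Sect. 3.2, is reflexive. Note that LL2 by itself, as coded here, yields L1 ≤ L2 and L2 ≤ L3 but not L1 ≤ L3 unless a fact \(\mathbf {C}FH\) is recorded explicitly.

```python
def satisfies_ll1(membership):
    """LL1: no mechanistic type is paired with two distinct levels.
    `membership` is an iterable of (type, level) pairs."""
    level_of = {}
    for t, lvl in membership:
        if level_of.setdefault(t, lvl) != lvl:
            return False
    return True


# Hypothetical level assignment for the types of Sect. 2.
membership = [("F", "L1"), ("G", "L2"), ("H", "L3"), ("I", "L4")]
assert satisfies_ll1(membership)
level_of = dict(membership)  # well defined because LL1 holds

# Constitution facts C(phi, psi) from the example, plus reflexivity.
constitution = {("F", "G"), ("G", "H"), ("H", "I")}
constitution |= {(t, t) for t in level_of}


def leq(li, lj):
    """LL2: li <= lj iff some type in li constitutes some type in lj."""
    return any(
        level_of[phi] == li and level_of[psi] == lj
        for phi, psi in constitution
    )


print(leq("L1", "L2"), leq("L2", "L1"), leq("L1", "L1"), leq("L1", "L3"))
# True False True False
```

Encoding the level assignment as a dictionary is one simple way of building LL1 into the data: a type can then only ever be looked up under a single level.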

The reason that the two levels could be identical is that constitution as defined above is a reflexive relation. So, if \(\phi \) and \(\psi \) are the same mechanistic type, it would still be the case that \(\phi \) constitutes \(\psi \), or \(\mathbf {C}\phi \psi \). But it would be absurd to claim that \(\phi \) and \(\psi \) belong to two different levels.

On the other hand, cases seem possible in which constitution does not run both ways, because \(\phi \), or the conjunction of types in which \(\phi \) is contained, is not the only \(c\)-minimally sufficient condition of \(\psi \). Presumably, it is results like these on the basis of which scientists are willing to draw a distinction between mechanistic levels (even though the notion of a “level” itself is seldom clarified in scientific papers). As mentioned above, Grover and Teyler (1992) have argued that there are forms of LTP in hippocampal area CA3 that are not constituted by NMDA-receptor activation. This supports the conclusion that LTP as a mechanistic type belongs to a higher mechanistic level than the different neural processes realizing it in hippocampal areas CA1 and CA3.

One way to express the reasoning behind this example goes as follows. First, I specify when a level is neither lower than nor identical to another level.

- LL3: For any levels \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\), any conjunction of mechanistic types \(\mathbf {X}\), and any type \(\psi \): if \(\psi \) belongs to \({\fancyscript{L}}_j\), all members of \(\mathbf {X}\) constitute \(\psi \) and belong to \({\fancyscript{L}}_i\), and no type outside \(\mathbf {X}\) both belongs to \({\fancyscript{L}}_i\) and constitutes \(\psi \), then, if \(\psi \) does not constitute \(\mathbf {X}\), \({\fancyscript{L}}_j\) is neither lower than nor identical to \({\fancyscript{L}}_i\). In short: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j\forall \mathbf {X}\forall \psi ({\fancyscript{L}}_j\psi \wedge \forall \phi (\phi \in \mathbf {X} \rightarrow {\fancyscript{L}}_i\phi \wedge \mathbf {C}\phi \psi ) \wedge \lnot \exists \gamma (\gamma \notin \mathbf {X} \wedge {\fancyscript{L}}_i\gamma \wedge \mathbf {C}\gamma \psi ) \rightarrow (\lnot \mathbf {C}\psi \mathbf {X} \rightarrow \lnot ({\fancyscript{L}}_j \le {\fancyscript{L}}_i)))\).Footnote 10

The antecedent of this conditional expresses the case mentioned above, in which a second minimally sufficient condition of the phenomenon in question inhabits the same level as the first (both minimally sufficient conditions are quantified over by \(\mathbf {X}\) in this case). The reasoning behind this rule corresponds to some extent to arguments against the identity of co-instantiated types based on multiple realizability (cf. Putnam 1967; Fodor 1974, 1997). However, since realization is not to be confused with constitution as defined above, a direct application of these arguments to the case of constitution would require further discussion. In a second step, the following rules characterize the “...is lower than, or identical to,...” relation as a total order:

- LL4 (Reflexivity): For any level \({\fancyscript{L}}\), \({\fancyscript{L}}\) is lower than, or identical to, itself. In short: \(\forall {\fancyscript{L}}({\fancyscript{L}}\le {\fancyscript{L}})\).

- LL5 (Transitivity): For any levels \({\fancyscript{L}}_i\), \({\fancyscript{L}}_j\), and \({\fancyscript{L}}_h\), if \({\fancyscript{L}}_i\) is lower than, or identical to, \({\fancyscript{L}}_j\), and \({\fancyscript{L}}_j\) is lower than, or identical to, \({\fancyscript{L}}_h\), then \({\fancyscript{L}}_i\) is lower than, or identical to, \({\fancyscript{L}}_h\). In short: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j\forall {\fancyscript{L}}_h(({\fancyscript{L}}_i\le {\fancyscript{L}}_j) \wedge ({\fancyscript{L}}_j\le {\fancyscript{L}}_h) \rightarrow ({\fancyscript{L}}_i\le {\fancyscript{L}}_h))\).

- LL6 (Antisymmetry): For any levels \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\), if \({\fancyscript{L}}_i\) is lower than, or identical to, \({\fancyscript{L}}_j\), and \({\fancyscript{L}}_j\) is lower than, or identical to, \({\fancyscript{L}}_i\), then \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\) are identical. In short: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j(({\fancyscript{L}}_i\le {\fancyscript{L}}_j) \wedge ({\fancyscript{L}}_j\le {\fancyscript{L}}_i) \rightarrow ({\fancyscript{L}}_i = {\fancyscript{L}}_j))\).

- LL7: For any levels \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\), either \({\fancyscript{L}}_i\) is lower than, or identical to, \({\fancyscript{L}}_j\), or \({\fancyscript{L}}_j\) is lower than, or identical to, \({\fancyscript{L}}_i\). In short: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j(({\fancyscript{L}}_j\le {\fancyscript{L}}_i) \vee ({\fancyscript{L}}_i\le {\fancyscript{L}}_j))\).
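Rules LL4–LL7 are the familiar conditions for a total order on a set. A minimal Python sketch can check each condition separately on a finite relation, assuming that levels are represented by hypothetical string labels and the relation “...is lower than, or identical to,...” by a set of ordered pairs; the function name `total_order_report` is likewise hypothetical.

```python
from itertools import product


def total_order_report(levels, le):
    """Check rules LL4-LL7 on a finite set of level labels.

    levels -- iterable of (hypothetical) level labels.
    le     -- set of (a, b) pairs, read as "a is lower than, or identical to, b".
    Returns a dict saying which of the four conditions hold.
    """
    levels = list(levels)
    pairs = list(product(levels, repeat=2))
    triples = list(product(levels, repeat=3))
    return {
        # LL4 (reflexivity): every level is <= itself.
        "reflexive": all((a, a) in le for a in levels),
        # LL5 (transitivity): a <= b and b <= c imply a <= c.
        "transitive": all((a, c) in le for a, b, c in triples
                          if (a, b) in le and (b, c) in le),
        # LL6 (antisymmetry): a <= b and b <= a imply a == b.
        "antisymmetric": all(a == b for a, b in pairs
                             if (a, b) in le and (b, a) in le),
        # LL7: any two levels are comparable one way or the other.
        "total": all((a, b) in le or (b, a) in le for a, b in pairs),
    }


if __name__ == "__main__":
    names = ["L1", "L2", "L3"]
    chain = {(a, b) for i, a in enumerate(names)
             for j, b in enumerate(names) if i <= j}
    print(total_order_report(names, chain))
    print(total_order_report(names, chain - {("L1", "L3")}))
```

For the three-level chain every condition comes out true; deleting the single pair ('L1', 'L3') breaks both transitivity and totality while leaving reflexivity and antisymmetry intact.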

We can then add a further requirement, which determines that, if a level is neither lower than nor identical to another one, the former must be higher than the latter.

- LL8: For any levels \({\fancyscript{L}}_i\) and \({\fancyscript{L}}_j\), \({\fancyscript{L}}_i\) is neither lower than nor identical to \({\fancyscript{L}}_j\) if, and only if, \({\fancyscript{L}}_j\) is lower than, and not identical to, \({\fancyscript{L}}_i\). In short: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j(\lnot ({\fancyscript{L}}_i\le {\fancyscript{L}}_j) \leftrightarrow ({\fancyscript{L}}_j<{\fancyscript{L}}_i))\).

...where the operator ‘\(<\)’ is defined as follows: \(({\fancyscript{L}}_j<{\fancyscript{L}}_i)\leftrightarrow (({\fancyscript{L}}_j\le {\fancyscript{L}}_i) \wedge ({\fancyscript{L}}_j\ne {\fancyscript{L}}_i))\). Level identity and level location defined in this way may imply the existence of a large number of mechanistic levels for some data sets. Whenever more than one \(c\)-minimally sufficient condition is identified for a chosen mechanistic type, the type is located on a higher level than the conjuncts of each of these \(c\)-minimally sufficient conditions. This shows that, at least according to the regularity theory, the overall hierarchy of mechanisms in neurobiology is quite different from classical models of the scientific hierarchy such as those sketched by Morgan (1923, 11) or Oppenheim and Putnam (1958, 9). Moreover, it shows that the distinction between mechanistic levels is not based on a measure such as the size of objects, as discussed (and criticized) by Kim (2002). Mechanistic types instantiated in regions of different sizes can well be members of the same level.
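Given the definition of ‘\(<\)’, LL8 can be checked mechanically on any finite relation. The sketch below again assumes hypothetical string labels and a set of ordered pairs for ‘\(\le \)’; the helper names are hypothetical. It may help to note that, under this definition, the biconditional in LL8 already follows from LL4, LL6 and LL7, so a relation can fail the check only if it violates one of those rules.

```python
from itertools import product


def strictly_lower(le, a, b):
    """a < b, defined as: a <= b and a != b."""
    return (a, b) in le and a != b


def satisfies_ll8(levels, le):
    """LL8: not (L_i <= L_j) holds exactly when L_j < L_i, for all pairs."""
    return all(((li, lj) not in le) == strictly_lower(le, lj, li)
               for li, lj in product(levels, repeat=2))


if __name__ == "__main__":
    labels = ["A", "B"]
    # A two-level chain A <= B (plus reflexive pairs) satisfies LL8.
    print(satisfies_ll8(labels, {("A", "A"), ("B", "B"), ("A", "B")}))  # True
    # Dropping ("A", "B") leaves A and B incomparable, violating LL7 and LL8.
    print(satisfies_ll8(labels, {("A", "A"), ("B", "B")}))  # False
```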

Even with assumptions LL1–LL8, the “integrative question” is still only partially answered. The remaining, and much more complicated, problem is how to determine whether two mechanisms referred to by one or more accepted explanations belong to the same level, or to different levels, if they are not related by constitution. Bechtel (2005) and Craver (2007, 191) have sometimes declared this problem unsolvable.

In my view, at least in some cases there is in fact an answer to the same-or-different-levels question. Such cases become salient if we presume that there is causation between, and not only within, mechanisms, and if we accept Bechtel’s and Craver’s claim that causation as referred to in neuroscience is essentially intra-level or level-bound (Craver and Bechtel 2007, 553; cf. also Woodward 2008 and the notion of causal proportionality). The relevant causal relation is the second-order relation “...is causally relevant for...”. A level-bound causal theory says that mechanistic types are causally relevant only for mechanistic types that belong to the same level. A Woodwardian theory of causation,Footnote 11 or a regularity theory, may offer this option. If causation is denoted by ‘\(\mathbf {K}\)’, the following rules arguably match how one would reason within a mechanistic theory.

- LL9: For any \(\phi \) and \(\psi \), if either \(\phi \) causes \(\psi \) or \(\psi \) causes \(\phi \), they belong to the same level. In short: \(\forall \phi \forall \psi (\mathbf {K}\phi \psi \vee \mathbf {K}\psi \phi \rightarrow \forall {\fancyscript{L}}_i ({\fancyscript{L}}_i\phi \rightarrow {\fancyscript{L}}_i\psi ))\).

- LL10: For any \(\phi \), \(\psi \), and \(\gamma \), if \(\phi \) constitutes \(\psi \) and either \(\psi \) causes \(\gamma \) or \(\gamma \) causes \(\psi \), then there is a \(\delta \) that constitutes \(\gamma \) and belongs to the same level as \(\phi \). In short: \(\forall \phi \forall \psi \forall \gamma (\mathbf {C}\phi \psi \wedge (\mathbf {K}\psi \gamma \vee \mathbf {K}\gamma \psi ) \rightarrow \exists \delta (\mathbf {C}\delta \gamma \wedge \forall {\fancyscript{L}}_i( {\fancyscript{L}}_i\phi \rightarrow {\fancyscript{L}}_i\delta )))\).
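Because LL9 applies whichever way the causal arrow points, types linked by a chain of causal relevance must all share their levels. Assuming a finite list of types and causal links (all names hypothetical), a standard union-find structure can group the types that LL9 forces onto a common level. The sketch models LL9 only; it does not implement the constitution step of LL10.

```python
def same_level_classes(types, causes):
    """Group types that LL9 forces onto a common level.

    types  -- iterable of (hypothetical) mechanistic type names.
    causes -- iterable of (phi, psi) pairs, read as "phi is causally relevant
              for psi"; both names must occur in types.
    Returns the groups as a sorted list of sorted lists.
    """
    parent = {t: t for t in types}

    def find(t):
        # Follow parent links to the group representative, halving the path.
        while parent[t] != t:
            parent[t] = parent[parent[t]]
            t = parent[t]
        return t

    # LL9 is symmetric in phi and psi, so each causal link merges two groups.
    for phi, psi in causes:
        root_phi, root_psi = find(phi), find(psi)
        if root_phi != root_psi:
            parent[root_phi] = root_psi

    groups = {}
    for t in parent:
        groups.setdefault(find(t), []).append(t)
    return sorted(sorted(g) for g in groups.values())


if __name__ == "__main__":
    print(same_level_classes(["a", "b", "c", "d"], [("a", "b"), ("c", "b")]))
    # prints [['a', 'b', 'c'], ['d']]
```

Here `a` and `c` never stand in a direct causal relation, yet both are linked to `b`, so LL9 places all three on one level; `d` is left unconstrained.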

A further step would be to accept a general supervenience claim with respect to the different levels. On the basis of such a claim, the following inference rule can be formulated.

- LL11: For any \(\phi \), \(\psi \), and \({\fancyscript{L}}_i\), if \(\psi \) belongs to \({\fancyscript{L}}_i\) and is constituted by \(\phi \), then for every \(\gamma \) that belongs to the same level as \(\psi \), there is a \(\delta \) that constitutes \(\gamma \) and belongs to the same level as \(\phi \). In short: \(\forall \phi \forall \psi \forall {\fancyscript{L}}_i({\fancyscript{L}}_i\psi \wedge \mathbf {C}\phi \psi \rightarrow \forall \gamma ({\fancyscript{L}}_i\gamma \rightarrow \exists \delta (\mathbf {C}\delta \gamma \wedge \forall {\fancyscript{L}}_j({\fancyscript{L}}_j\phi \rightarrow {\fancyscript{L}}_j\delta ))))\).
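LL11 can likewise be read as a test over a finite set of constitution facts. The following Python sketch reads the rule as quantifying over every \(\gamma \) on the level of \(\psi \). It assumes, as a simplification, that each type sits on a single level; the function name and the toy data are hypothetical. It reports every triple for which LL11 would require a constituent \(\delta \) that the data do not contain.

```python
def ll11_violations(level_of, constitutes):
    """List (phi, psi, gamma) triples for which LL11 lacks a required constituent.

    level_of    -- dict from mechanistic type to the single level assumed to host it.
    constitutes -- set of (phi, psi) pairs, read as "phi constitutes psi".
    LL11: if phi constitutes psi, every gamma on psi's level must be
    constituted by some delta on phi's level.
    """
    def C(a, b):
        # Constitution is reflexive in the regularity theory.
        return a == b or (a, b) in constitutes

    violations = []
    for phi, psi in sorted(constitutes):
        for gamma in sorted(level_of):
            if level_of[gamma] != level_of[psi]:
                continue
            if not any(C(delta, gamma) and level_of[delta] == level_of[phi]
                       for delta in level_of):
                violations.append((phi, psi, gamma))
    return violations


if __name__ == "__main__":
    # Hypothetical data: F constitutes G, but nothing on F's level constitutes H.
    lv = {"E": "low", "F": "low", "G": "high", "H": "high"}
    print(ll11_violations(lv, {("F", "G")}))              # [('F', 'G', 'H')]
    print(ll11_violations(lv, {("F", "G"), ("E", "H")}))  # []
```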

Taken together, assumptions LL1–LL11 provide some answers to the integrative question. They allow one to determine, at least in some cases, whether two mechanistic or phenomenal types occurring in one or more accepted constitutive explanations belong to the same level or to different levels.

The downside is that the explicated inference rules do so only under heavy background assumptions, such as the assumptions that causation is essentially level-bound and that supervenience holds. Nevertheless, as already claimed above, if the level distinctions and integrations of constitutive explanations made by working scientists are justifiable at all, they must be grounded in rules at least remotely reminiscent of assumptions LL1–LL11.

For instance, with respect to the example presented in Sect. 2, a distinction of levels might have proceeded as follows. As mentioned, according to the regularity theory, the explanation of spatial memory in rats can be expressed by the following proposition P \(_{SL}'\):

Among other things, rule LL2 allows us to infer from the relationships \(\mathbf {C}FG\), \(\mathbf {C}GH\), \(\mathbf {C}HI\), \(\mathbf {C}\mathbf {X}_1G\), \(\mathbf {C}\mathbf {X}_2H\), and \(\mathbf {C}\mathbf {X}_3I\) implied by P \(_{SL}'\) the following claims: \({\mathcal {L}}_{F}\le {\mathcal {L}}_{G}\) (meaning “the level hosting active NMDA-receptors at the synapses of hippocampal pyramidal cells is lower than, or identical to, the level hosting hippocampal pyramidal cells instantiating long-term potentiation”), \({\mathcal {L}}_{G}\le {\mathcal {L}}_{H}\), \({\mathcal {L}}_{H}\le {\mathcal {L}}_{I}\), \({\mathcal {L}}_{\mathbf {X}_1}\le {\mathcal {L}}_{G}\), \({\mathcal {L}}_{\mathbf {X}_2}\le {\mathcal {L}}_{H}\), \({\mathcal {L}}_{\mathbf {X}_3}\le {\mathcal {L}}_{I}\).

Rule LL5 (Transitivity) allows us to infer from these formulae, for instance, the following claims: \({\mathcal {L}}_{F}\le {\mathcal {L}}_{H}\), \({\mathcal {L}}_{F}\le {\mathcal {L}}_{I}\), \({\mathcal {L}}_{G}\le {\mathcal {L}}_{I}\), \({\mathcal {L}}_{\mathbf {X}_1}\le {\mathcal {L}}_{H}\), \({\mathcal {L}}_{\mathbf {X}_2}\le {\mathcal {L}}_{I}\). These conclusions are certainly adequate, for we do want to say that, if a lower-level mechanism (such as the activation of NMDA-receptors at the synapses of CA1 pyramidal cells) constitutes a higher-level mechanism (such as the generation of a spatial map within the rat’s hippocampus) of a phenomenon (such as the development of spatial memory in the rat), then that lower-level mechanism is also on a lower level than, or the same level as, that phenomenon.Footnote 12
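The closure step licensed by LL5 can be carried out mechanically. The Python sketch below applies transitivity to the six LL2 claims listed above, writing the level labels as strings (an illustrative encoding, not notation from the theory; `transitive_closure` is a hypothetical helper). Since constitution itself is not obviously transitive, the closure is computed over the level claims only.

```python
def transitive_closure(pairs):
    """Close a set of (a, b) pairs, read as "a <= b", under transitivity (LL5)."""
    closure = set(pairs)
    while True:
        # Compose every a <= b with every b <= d to obtain a <= d.
        derived = {(a, d) for a, b in closure for c, d in closure if b == c}
        if derived <= closure:
            return closure
        closure |= derived


# The six LL2 claims listed above, with level labels written as strings.
ll2_claims = {
    ("L_F", "L_G"), ("L_G", "L_H"), ("L_H", "L_I"),
    ("L_X1", "L_G"), ("L_X2", "L_H"), ("L_X3", "L_I"),
}
closed = transitive_closure(ll2_claims)
for claim in sorted(closed - ll2_claims):
    print(claim)
```

Running it prints six derived claims: the five listed in the text together with \({\mathcal {L}}_{\mathbf {X}_1}\le {\mathcal {L}}_{I}\). No claim such as \({\mathcal {L}}_{G}\le {\mathcal {L}}_{F}\) is derived.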

If it should now happen, as presumed above, that two further constitutive factors, \(F_1\) and \(F_2\), of \(G\) have been identified whilst \(\mathbf {Y}_1\) turns out to be empty (because no further \(c\)-minimally sufficient conditions for \(G\) can be identified), the first conjunct of the initial theory takes the following final form:

In such a case, \(G\) becomes minimally sufficient for \(FF_1F_2\). And since \(G\) and \(FF_1F_2\) share all their instances, \(G\) also turns out to be a \(c\)-minimally sufficient condition for \(FF_1F_2\). In other words, the following two claims are true: \(\mathbf {C}G(FF_1F_2)\) and \(\mathbf {C}(FF_1F_2)G\), from which it can be inferred by LL2 that \({\mathcal {L}}_{G}\le {\mathcal {L}}_{FF_1F_2}\) and \({\mathcal {L}}_{FF_1F_2}\le {\mathcal {L}}_{G}\). Rule LL6 then allows us to infer that \({\mathcal {L}}_{G}={\mathcal {L}}_{FF_1F_2}\). Hence, mutual constitution implies that the levels on which the mutually constituting factors are located are identical. This is desirable, since we do not want to say that two mechanisms are identical but inhabit different levels.
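The appeal to LL6 amounts to a simple check: any two distinct level labels related by ‘\(\le \)’ in both directions are identified. This Python sketch assumes a pair-set encoding of ‘\(\le \)’ with string labels; the function name is hypothetical.

```python
def levels_identified_by_ll6(le):
    """Apply LL6 (antisymmetry) to a set of "<=" claims.

    le -- set of (a, b) pairs, read as "a is lower than, or identical to, b".
    Returns the unordered pairs of distinct labels that LL6 identifies,
    i.e. those related by "<=" in both directions.
    """
    return {frozenset((a, b)) for a, b in le if a != b and (b, a) in le}


# LL2 applied to C G(FF1F2) and C (FF1F2)G yields both of these claims.
claims = {("L_G", "L_FF1F2"), ("L_FF1F2", "L_G")}
print(levels_identified_by_ll6(claims))
```

The result contains the single unordered pair of 'L_G' and 'L_FF1F2'; a one-directional claim such as ('a', 'b') alone identifies nothing.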

Suppose, on the other hand, that \(\mathbf {Y}_1\) is not empty, as suggested by Grover and Teyler (1992). According to these researchers, there are forms of LTP in hippocampal area CA3 that are not constituted by NMDA receptor activation. In other words, there are further \(c\)-minimally sufficient conditions of \(G\) besides the one containing \(F\). In this case, the antecedent of rule LL3 is satisfied. For if we include all the factors that constitute \(G\) in the conjunction \(\mathbf {X}\) (such that there is no factor on the same level that constitutes \(G\) but is not contained in \(\mathbf {X}\)), then \(G\) does not constitute \(\mathbf {X}\) in turn. The reason is that, by assumption, the conjunction \(\mathbf {X}\) contains more than one \(c\)-minimally sufficient condition of \(G\), which means that \(G\) is not sufficient for \(\mathbf {X}\): an instance of \(G\) does not guarantee that all factors contained in \(\mathbf {X}\) are instantiated. As a consequence, we can infer that \(\lnot ({\mathcal {L}}_{G}\le {\mathcal {L}}_{FF_1F_2})\).

With this result in place, we can now infer with LL7 that \({\mathcal {L}}_{FF_1F_2}\le {\mathcal {L}}_{G}\). An even stronger claim can be obtained with rule LL8, namely \({\mathcal {L}}_{FF_1F_2}<{\mathcal {L}}_{G}\). This is as it should be. We want to be able to conclude that the level hosting the activated NMDA-receptors at the synapses of CA1 pyramidal cells is lower than the level hosting hippocampal pyramidal cells instantiating long-term potentiation, given that there are ways to secure the occurrence of LTP in other hippocampal cells that do not involve activated NMDA-receptors. Put differently, we have good reasons to believe that it was inference rules of this kind that motivated scientists and philosophers to conclude that activated NMDA-receptors at the synapses of CA1 pyramidal cells are located on a strictly lower level than hippocampal pyramidal cells instantiating long-term potentiation.
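The two steps just described, first LL3 and then LL7 and LL8, can be sketched in Python as follows. The sketch rests on assumptions that go beyond the text: each atomic type sits on one level, a conjunction is encoded as a `frozenset` of type names, whether \(G\) constitutes \(\mathbf {X}\) is supplied as a constitution fact rather than derived from regularities, and `Z` is a hypothetical stand-in for an NMDA-receptor-independent condition of LTP on the same level as \(F\), \(F_1\) and \(F_2\). The function names are likewise hypothetical.

```python
def ll3_not_le(level_of, constitutes, X, psi):
    """Sketch of LL3: return (L_j, L_i) if LL3 yields not (L_j <= L_i), else None.

    level_of    -- dict from atomic type names to the single level assumed to host them.
    constitutes -- set of (a, b) pairs read as "a constitutes b"; either side may be
                   a frozenset encoding a conjunction of types.
    X           -- frozenset of type names: the conjunction quantified over in LL3.
    psi         -- name of the constituted type.
    """
    def C(a, b):
        return a == b or (a, b) in constitutes  # constitution is reflexive

    x_levels = {level_of[phi] for phi in X}
    if len(x_levels) != 1:
        return None  # LL3 needs all members of X on one level L_i
    (level_i,) = x_levels
    level_j = level_of[psi]
    if not all(C(phi, psi) for phi in X):
        return None  # every member of X must constitute psi
    if any(level_of[g] == level_i and C(g, psi) for g in level_of if g not in X):
        return None  # some constituent of psi on L_i lies outside X
    if C(psi, X):
        return None  # psi constitutes X in turn, so LL3 is silent
    return (level_j, level_i)


def apply_ll7_ll8(not_le):
    """From not (a <= b), LL7 yields b <= a and LL8 yields b < a."""
    a, b = not_le
    return {"LL7": (b, a, "<="), "LL8": (b, a, "<")}


if __name__ == "__main__":
    lv = {"F": "L_FF1F2", "F1": "L_FF1F2", "F2": "L_FF1F2",
          "Z": "L_FF1F2", "G": "L_G"}
    cons = {("F", "G"), ("F1", "G"), ("F2", "G"), ("Z", "G")}
    X = frozenset({"F", "F1", "F2", "Z"})
    verdict = ll3_not_le(lv, cons, X, "G")
    print(verdict)  # ('L_G', 'L_FF1F2')
    print(apply_ll7_ll8(verdict))
```

If the data also recorded that \(G\) constitutes \(\mathbf {X}\) (the mutual-constitution case), or if `Z` were left out of `X`, the sketch returns `None`, since the antecedent of LL3 is then not satisfied.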

5 Conclusion

The paper started off by sketching an example of a currently accepted explanation in neurobiology that was used as a test case for points made in later parts of the article. Two analytical approaches to the notion of constitution as it appears in neurobiological explanations were then presented in Sect. 3, namely the manipulationist and the regularity theories of constitution. The regularity theory was characterized as more powerful than the manipulationist approach. It was then shown how the regularity theory defines the notion of a “mechanistic level” and how it reconstructs the example of Sect. 2. Subsequently, Sect. 4 offered some inference rules that allow one to integrate several independently established constitutive explanations into a single theory. It was argued that these inference rules guide the level distinctions and level identifications that scientists make in light of certain kinds of empirical evidence.

Due to limits of space, the paper had to leave certain further questions unanswered. Among these are what Sect. 1 described as the “methodological”, the “comparative”, the “simplification”, and the “ontological” questions. Furthermore, the real test case for the rules explicated in Sect. 4 consists in their application to more than a few actual cases of widely accepted multi-level explanations in neuroscience. These questions and problems will be the targets of future research on the mechanistic approach to neurobiological explanations.

Notes

The term “composition” has been used by Machamer et al. (2000, 13), Bechtel and Abrahamsen (2005, 426), and Craver (2007, 164); “constitution” occurs in Craver (2007, 153); “constitutive relevance” is found in Craver (2007, 139). It is safe to say that the authors intend these terms to be largely synonymous. For the sake of terminological unity, from now on I will use the term “constitution” to denote the relation that is referred to by these expressions.

For more details on the connection of supervenience and mechanistic constitution, see Harbecke (2014).

Craver explicitly uses the term “manipulation” in other versions of his definition, as for instance in (2007, 159): “In sum, I conjecture that to establish that [\(x\)]’s \(\phi \)-ing is relevant to [\(y\)]’s \(\psi \)-ing it is sufficient that one be able to manipulate [\(y\)]’s \(\psi \)-ing by intervening to change [\(x\)]’s \(\phi \)-ing (by stimulating or inhibiting) and that one be able to manipulate [\(x\)]’s \(\phi \)-ing by manipulating [\(y\)]’s \(\psi \)-ing. To establish that a component is irrelevant, it is sufficient to show that one cannot manipulate [\(y\)]’s \(\psi \)-ing by intervening to change [\(x\)]’s \(\phi \)-ing and that one cannot manipulate [\(x\)]’s \(\phi \)-ing by manipulating [\(y\)]’s \(\psi \)-ing.” The fact that Craver has in mind a Woodwardian kind of ideal manipulation/intervention is made explicit on pp. 94–98 in his (2007).

Of course, it is sometimes possible to intervene on micro-levels without inducing a change in the supervening properties. That is, there exist surgical bottom-up interventions. I thank an anonymous referee for pointing this out to me.

For a similar argument, cf. Franklin-Hall (forthcoming).

Note that in Harbecke (2010, 277), I initially divided condition (iii) of Constitution into two separate conditions. I thank an anonymous referee for pointing out to me that the initial formulation contained an ambiguity with respect to the conditional in condition (iii). The present formulation avoids this problem.

The mereological theory presupposed here is General Extensional Mereology (GEM) as explicated by Varzi (2009).

Note that this view differs from a notion of type identity defended by Lewis (1985), according to which only a metaphysically necessary coextensiveness is sufficient for type identity. The problem with this view is that it excludes a posteriori discoveries of type identities in science, as actual scientists have access only to our world and its laws. In response to this problem, D. H. Mellor has suggested, with respect to the discussion about the identity of the natural kind types ‘...is water’ and ‘...is H\(_2\)O’: “One might try arguing, however, that the requirements of natural kind identity have been pitched too high. Perhaps what is needed is coextensiveness, not in all possible worlds, but in this world and in those nearest to it. After all, science is supposed to give us essences; yet the most scientists can show us in fact is lawful coextensiveness between ‘..is water’ and ‘...is H\(_2\)O’. That is, we suppose they can show that not only are all samples of water samples of H\(_2\)O, but that if anything were a sample of water it would be a sample of H\(_2\)O. Now that need not be a claim about all possible worlds, since the consequent of a true subjunctive conditional need not be true in all possible worlds in which the antecedent is true; it need only be true in those worlds which are sufficiently like ours.” (Mellor 1977, 308) I subscribe to this view here: lawful factual coextensiveness is sufficient for mechanistic type identity. In other words, my identity claim presupposes a kind of epistemic “law”, which states that for all mechanistic types \(\phi \) and \(\psi \): \(\Box _{nom}\forall x(\phi x \leftrightarrow \psi x) \rightarrow \phi = \psi \) (where “\(\Box _{nom}\)” designates nomological necessity).

The questions about adequate difference tests are part of the “methodological question” that I do not focus on here. As a first hint, however, it should be pointed out that adequate rules for determining constitutive relationships should reflect the known rules for determining causal relevance in the context of a regularity theory of causation (cf. Baumgartner and Graßhoff 2004, ch. 9).

Note that, because in Sect. 3.2 mechanistic types were declared to be sets of individuals, a conjunction of mechanistic types is also a mechanistic type. For instance, the set (= type) corresponding to the conjunctive mechanistic predicate “\(\phi \wedge \psi \)” is identical to the intersection of the sets (= types) corresponding to the mechanistic predicates “\(\phi \)” and “\(\psi \)” (and there is nothing more to being a conjunction of types than being the set that corresponds to a conjunctive mechanistic predicate whose conjuncts refer to types). It is therefore perfectly possible that a mechanistic type \(\psi \) constitutes a conjunction \(\mathbf {X}\) of mechanistic types. Moreover, note that LL3 could also be shortened as follows: \(\forall {\fancyscript{L}}_i\forall {\fancyscript{L}}_j\forall \mathbf {X}\forall \psi ({\fancyscript{L}}_j\psi \wedge \forall \phi (\phi \in \mathbf {X} \leftrightarrow {\fancyscript{L}}_i\phi \wedge \mathbf {C}\phi \psi ) \rightarrow (\lnot \mathbf {C}\psi \mathbf {X} \rightarrow \lnot ({\fancyscript{L}}_j \le {\fancyscript{L}}_i)))\). I have chosen the longer alternative since it brings out more clearly the requirement that there be no condition constituting \(\psi \) on level \({\fancyscript{L}}_i\) outside of \(\mathbf {X}\).

Note that these claims cannot be established directly on the basis of constitutive assumptions. Mechanistic constitution as defined above is not obviously transitive because, whereas sufficiency is transitive, minimal sufficiency is not. Hence, rule LL5 is non-redundant: it is required to establish the mentioned claims.

References

Baumgartner, M. (2008). Regularity theories reassessed. Philosophia, 36(3), 327–354.

Baumgartner, M. (2009). Interventionist causal exclusion and non-reductive physicalism. International Studies in the Philosophy of Science, 23(2), 161–178.

Baumgartner, M. (2010). Interventionism and epiphenomenalism. Canadian Journal of Philosophy, 40(3), 359–383.

Baumgartner, M. (2013). Rendering interventionism and non-reductive physicalism compatible. Dialectica, 67(1), 1–27.

Baumgartner, M., & Graßhoff, G. (2004). Kausalität und kausales Schliessen: eine Einführung mit interaktiven Übungen. Bern: Bern Studies in the History and Philosophy of Science.

Bechtel, W. (2005). The challenge of characterizing operations in the mechanisms underlying behavior. Journal of the Experimental Analysis of Behavior, 84(3), 313–325.

Bechtel, W., & Abrahamsen, A. (2005). Explanation: A mechanist alternative. Studies in History and Philosophy of Biological and Biomedical Sciences, 36(2), 421–441.

Bechtel, W., & Richardson, R. (1993). Discovering complexity: Decomposition and localization as scientific research strategies. Princeton: Princeton University Press.

Bickle, J. (2003). Philosophy and neuroscience: A ruthlessly reductive account. Dordrecht: Kluwer.

Bliss, T., & Collingridge, G. (1993). A synaptic model of memory: Long-term potentiation in the hippocampus. Nature, 361(6407), 31–39.

Bliss, T., & Lømo, T. (1973). Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. The Journal of Physiology, 232(2), 331–356.

Churchland, P. S., & Sejnowski, T. J. (1992). The computational brain. Cambridge, MA: MIT Press.

Couch, M. (2011). Mechanisms and constitutive relevance. Synthese, 183(3), 375–388.

Craver, C. (2002). Interlevel experiments and multilevel mechanisms in the neuroscience of memory. Philosophy of Science, 69(3), 83–97.

Craver, C. (2007). Explaining the brain. New York: Oxford University Press.

Craver, C., & Darden, L. (2001). Discovering mechanisms in neurobiology. In P. Machamer, R. Grush, & P. McLaughlin (Eds.), Theory and method in the neurosciences (pp. 112–137). Pittsburgh: University of Pittsburgh Press.

Craver, C. F., & Bechtel, W. (2007). Top-down causation without top-down causes. Biology & Philosophy, 22(4), 547–563.

Davis, S., Butcher, S., & Morris, R. (1992). The NMDA receptor antagonist D-2-amino-5-phosphonopentanoate (D-AP5) impairs spatial learning and LTP in vivo at intracerebral concentrations comparable to those that block LTP in vitro. Journal of Neuroscience, 12(1), 21–34.

Fazekas, P., & Kertész, G. (2011). Causation at different levels: Tracking the commitments of mechanistic explanations. Biology and Philosophy, 26(3), 1–19.

Fodor, J. (1974). Special sciences (or: the disunity of science as a working hypothesis). Synthese, 28(2), 97–115.

Fodor, J. (1997). Special sciences: Still autonomous after all these years. Noûs 31 (Supplement: Philosophical Perspectives, 11, Mind, Causation, and World), pp. 149–163.

Franklin-Hall, L. (forthcoming). High-level explanation and the interventionist’s ‘variables problem’. British Journal for the Philosophy of Science.

Frey, U., Frey, S., Schollmeier, F., & Krug, M. (1996). Influence of actinomycin D, a RNA synthesis inhibitor, on long-term potentiation in rat hippocampal neurons in vivo and in vitro. The Journal of Physiology, 490(Pt 3), 703.

Friedman, M. (1974). Explanation and scientific understanding. The Journal of Philosophy, 71(1), 5–19.

Graßhoff, G., & May, M. (2001). Causal regularities. In W. Spohn, M. Ledwig, & M. Esfeld (Eds.), Current issues in causation (pp. 85–114). Paderborn: Mentis.

Grover, L., & Teyler, T. (1992). N-methyl-D-aspartate receptor-independent long-term potentiation in area CA1 of rat hippocampus: Input-specific induction and preclusion in a non-tetanized pathway. Neuroscience, 49(1), 7–11.

Harbecke, J. (2010). Mechanistic constitution in neurobiological explanations. International Studies in the Philosophy of Science, 24(3), 267–285.

Harbecke, J. (2014). The role of supervenience and constitution in neuroscientific research. Synthese, 191(5), 725–743.

Harris, E., Ganong, A., & Cotman, C. (1984). Long-term potentiation in the hippocampus involves activation of N-methyl-D-aspartate receptors. Brain Research, 323(1), 132–137.

Hempel, C., & Oppenheim, P. (1948). Studies in the logic of explanation. Philosophy of Science, 15(2), 135–175.

Hempel, C. G. (1965). Aspects of scientific explanation. In C. G. Hempel (Ed.), Aspects of scientific explanation and other essays in the philosophy of science (pp. 331–496). New York: Macmillan.

Kim, J. (2002). The layered model: Metaphysical considerations. Philosophical Explorations, 5(1), 2–20.

Kimble, D. (1963). The effects of bilateral hippocampal lesions in rats. Journal of Comparative and Physiological Psychology, 56(2), 273.

Kitcher, P. (1989). Explanatory unification and the causal structure of the world. In P. Kitcher & W. Salmon (Eds.), Scientific explanation (pp. 410–505). Minneapolis: University of Minnesota Press.

Lewis, D. (1985). Modal realism at work: Properties. In On the plurality of worlds. London: Basil Blackwell.

Lømo, T. (2003). The discovery of long-term potentiation. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 358, 617–620.

Machamer, P., Darden, L., & Craver, C. (2000). Thinking about mechanisms. Philosophy of Science, 67(1), 1–25.

Mackie, J. (1974). The cement of the universe. Oxford: Clarendon Press.

Malenka, R., Kauer, J., Perkel, D., Mauk, M., Kelly, P., Nicoll, R., et al. (1989). An essential role for postsynaptic calmodulin and protein kinase activity in long-term potentiation. Nature, 340(6234), 554–557.

May, M. (1999). Kausales Schließen. Eine Untersuchung zur kausalen Erklärung und Theorienbildung. Ph.D. thesis, University of Hamburg, Germany.

McLaughlin, B., & Bennett, K. (2008). Supervenience. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Fall 2008 ed.).

Mellor, D. H. (1977). Natural kinds. British Journal for the Philosophy of Science, 28(4), 299–312.

Morgan, C. L. (1923). Emergent evolution. London: Williams and Norgate.

Morris, R. (1984). Developments of a water-maze procedure for studying spatial learning in the rat. Journal of Neuroscience Methods, 11(1), 47–60.

Morris, R., Anderson, E., Lynch, G., & Baudry, M. (1986). Selective impairment of learning and blockade of LTP by an NMDA receptor antagonist AP5. Nature, 319, 774–776.

Morris, R., Garrud, P., Rawlins, J., & O’Keefe, J. (1982). Place navigation impaired in rats with hippocampal lesions. Nature, 297(5868), 681–683.

Oppenheim, P., & Putnam, H. (1958). Unity of science as a working hypothesis. In H. Feigl, M. Scriven, & G. Maxwell (Eds.), Concepts, theories, and the mind-body problem (Minnesota Studies in the Philosophy of Science, Vol. 2, pp. 3–36). Minneapolis: University of Minnesota Press.

Putnam, H. (1980/1967). The nature of mental states. In N. Block (Ed.), Readings in philosophy of psychology, vol. 1, pp. 223–231. Cambridge: Harvard University Press.

Toni, N., Buchs, P.-A., Nikonenko, I., Bron, C., & Muller, D. (1999). LTP promotes formation of multiple spine synapses between a single axon terminal and a dendrite. Nature, 402(6760), 421–425.

Varzi, A. (2009). Mereology. In E. N. Zalta (Ed.), Stanford encyclopedia of philosophy (Summer 2009 edition). URL: http://plato.stanford.edu/entries/mereology/.

Woodward, J. (2003). Making things happen: A theory of causal explanation. New York: Oxford University Press.

Woodward, J. (2008). Mental causation and neural mechanisms. In J. Hohwy & J. Kallestrup (Eds.), Being Reduced: New Essays on Reduction, Explanation, and Causation (pp. 218–262). New York: Oxford University Press, USA.

Woodward, J. (2011). Interventionism and causal exclusion (unpublished). PhilSci-Archive; URL: http://philsci-archive.pitt.edu/8651/.

Acknowledgments

Thanks to Carl Craver, Eli Dresner, Ariel Fürstenberg, Arnon Levy, Gualtiero Piccinini, Oron Shagrir, the Philosophy of Science Reading Group at the Philosophy-Neuroscience-Psychology program at Washington University in St. Louis (fall semester 2013/2014), and two anonymous reviewers for helpful comments on an earlier version of this paper. The author gratefully acknowledges the support of the Deutsche Forschungsgemeinschaft (DFG), grant no. HA 6349/2-1.


Cite this article

Harbecke, J. Regularity Constitution and the Location of Mechanistic Levels. Found Sci 20, 323–338 (2015). https://doi.org/10.1007/s10699-014-9371-1
