Abstract

Studies of early writing recognize that learning to write is a complex process requiring students to attend both to the composition of the text and to the transcription of ideas. The research discussed here examined six dimensions of early writing (text structure, sentence structure, vocabulary, spelling, punctuation, and handwriting) and how each dimension relates to message construction. Specifically, this research examined the relationships between the authorial and secretarial aspects of writing in order to support formative assessment and teaching. It also considered whether there were underlying clusters of students who engaged with the various dimensions in differing ways as they learned to craft texts. The analysis showed a clear conceptualization of the authorial and secretarial aspects of writing, as reflected in a tool for analysing writing: the three authorial dimensions and three secretarial dimensions of writing were well defined statistically. Three clusters of students were identified as having varying degrees of competence across the six dimensions: a consistently low-scoring group, a consistently medium-scoring group, and a consistently high-scoring group. Differences in attainment between socio-economic status (SES) groups and between genders were evident across the writing dimensions; however, the variance explained by gender and SES was small to moderate. The study has implications for supporting students who are currently struggling with the challenges of early writing. The results suggest that when teachers address the dimensions of writing in combination, based on an informed analysis of students’ needs, they can focus their teaching and select instructional approaches that increase its efficacy.

1 Introduction

Effective writing instruction is dependent upon teacher awareness of the complexity of the writing process, acknowledgment of the multiple layers and intersections between the various aspects of language learning, and appreciation of the many pathways young learners may take on their journey towards proficiency (Askew 2009; Clay 2001). A deep knowledge of writing acquisition processes and the skills that support students to learn to write and create meaningful texts is necessary for teachers to be able to assess students’ writing and subsequently design and implement teaching programmes to facilitate the craft of writing. To provide some clarity for teachers, our analysis of Year 1 students’ writing has identified six dimensions of writing that can be used to describe the qualities of the writing of this age group alongside six levels of attainment to map students’ learning. The Writing Analysis Tool, emanating from this mapping, enables teachers to identify the writing skills profile of young writers and to plan for instruction, which can provide students with focused input at critical points in their learning (Mackenzie et al. 2015; Mackenzie and Scull 2016).

The purpose of this paper is to establish the structure and validity of the Writing Analysis Tool and the six dimensions of writing identified—text structure, sentence structure, vocabulary, spelling, punctuation, and handwriting—to define the writing competence of Year 1 students. Further, the analysis considers various combinations of the dimensions of writing identified by the tool that may make teaching more focused and learning more efficient. For example, teaching sentence structure and spelling together may not be as advantageous to learners as an integrated approach to teaching sentence structure and text structure. The contention that there are typologies of young writers is also considered, again with the intention of guiding classroom teaching and targeting instruction. It is proposed that when teachers know what factors or dimensions work in combination, for various groups of students, they can strengthen teaching practices and enrich students’ learning.

1.1 The dimensions of writing

In the current era, writing has come of age, “eclipsing reading as the literate experience of consequence” (Brandt 2015, p. 3), and making the teaching and learning of writing a high priority for teachers. However, of all the language activities humans engage in, writing is the most constructed and elaborate and, for most of us, the most difficult (Bromley 2007). Even defining writing can be complex. While it is tempting to use a common-sense definition of writing as “producing and inscribing words” (Brandt 2015, p. 92), our understanding of multimodality requires us to consider writing as text creation or production that goes beyond linguistic understandings of writing to include multiple modes of expression (Kalantzis et al. 2016). For young children in particular, a large body of literature supports a strong relationship between drawing and writing (see for example Genishi and Dyson 2009; Kress and Bezemer 2009; Mills 2011), with children’s drawings integral to the emergent writing process (Mackenzie 2011). Young children very often use a range of semiotic modes as they create texts that combine drawing, symbols, letters, words, and talk. However, as children transition into school, teachers often focus more on the teaching of written language (Mackenzie 2014). This focus on words may reflect teachers’ understanding of written text forms as central even to multimodal texts. Their decision to focus on written language is supported by literature showing that success with written text creation is essential for learning (Prain and Hand 2016) and academic success (Fang and Wang 2011; Puranik and Lonigan 2014), as well as important to reading (Cutler and Graham 2008) and to literacy more generally (Mackenzie and Petriwskyj 2017).

Writing involves the use of the most elaborate manifestation of language to communicate messages to others (Lo Bianco et al. 2008). It requires making connections and constructing meaning, and knowing how to apply the conventions of grammar, spelling, punctuation, and form. In addition, writing requires developing control over vocabulary choices and syntactic competence that permits effective self-expression. The integration of skills related to the composition of messages and the recording of ideas draws on a range of conventions that allow others to access the meaning encoded in texts. The terms authorial and secretarial, as described by Peters and Smith (1993), have been used to group these dimensions of writing. The authorial dimensions consider the composition of ideas and information communicated through the text, while the secretarial dimensions take account of the surface features and conventions of writing that allow a writer to accurately record written messages.

The authorial dimensions of writing are primarily linked to the communication of messages and the organization of texts that take into account the intended audience and purpose. Knowing how texts are structured to convey meaning and having a developing awareness of the range of organizational options available is critical as students learn to sequence their ideas (Christie 2005; Christie and Dreyfus 2007). Young children who have considerable experience with book language from being read to recognize the different registers of oral and written language (Purcell-Gates 1994), and most can confidently discriminate between oral and written forms of communication by eight years of age (Perera 1986). However, many are unable to produce in writing the semantic complexity they control quite naturally in speech (Halliday 2016). While in oral language meaning can be negotiated, written texts must be structured in ways that can be understood without the need for clarification from an absent author (Mackenzie 2020). Writing is also “more lexically dense than speech and hierarchically ordered, with more integration, embeddedness, and subordination” (Myhill 2009, p. 407). Therefore, there is much for the young writer to learn about written structures at the text and sentence levels. This includes control over correct sentence grammar, a variety of sentence types, and the way in which ideas are linked and related in texts (Derewianka 2011). Similarly, students need to develop a breadth of vocabulary that will allow them to make lexical choices appropriate to topic and audience, and to communicate meanings with precision using field-specific and technical vocabulary (Beck et al. 2002; Nagy and Townsend 2012).

For young students, learning to write also involves developing an understanding of the secretarial dimensions of writing. Beginning writers are challenged by the conventions of print as they record messages that can be read by others (Abbott et al. 2010). They must develop knowledge of the alphabetic principle, including distinguishing, identifying, and writing letters, and linking sounds to letters. They must also demonstrate an increasing understanding of the accurate application of culturally determined spelling systems or rules (Wulff et al. 2008). Further, the use of punctuation symbols that arrange ideas into units of meaning is related to developing control over the variety of forms of representation and conventions of language (Olson 2009). In addition, students must learn to write efficiently and legibly by hand. The relationship between compositional skill and handwriting fluency is well documented (Graham 2009/2010; Medwell and Wray 2008; Schlagal 2007; Torrance and Galbraith 2006), with efficient, automatic handwriting freeing up a young writer’s working memory to concentrate on the message being created (Boscolo 2008; James and Engelhardt 2012).

1.2 Assessing and grouping students for instruction

While the complexity of learning to write is acknowledged, “the challenges for teachers are equally daunting as they grapple with trying to meet the diverse needs of students, curriculum requirements and the expectations of employers and the community” (Mackenzie 2009, p. 60). Regardless of a teacher’s pedagogical approach, effective support for students’ writing requires a clear conceptualization of all aspects of the writing process, an understanding of possible progressions in students’ learning, and access to integrated writing analysis systems (Troia 2007). In addition to a range of valid approaches for assessing individual student progress, it is important that teachers are supported to interpret student work samples reliably (Mackenzie and Scull 2015). Drawing on authentic practices that reflect and measure what the student is learning, teachers are able to identify specific information regarding students’ performance and clearly conceptualize learning goals (Black and Wiliam 2003). The relationship between assessment and teaching is further emphasized by Wiliam et al. (2004), who found that teachers who make good use of student data are able to almost double the rate of students’ learning. Such effective use of data requires a positive attitude towards data collection, knowledge of appropriate data collection methods, and a commitment to the appropriate analysis and effective use of the data at all levels (Griffin 2009; Wiliam et al. 2004).

According to Pressley et al. (2001), exceptional early years teaching “requires well-informed teachers who routinely identify children’s instructional needs and offer targeted lessons that foster development” (p. 49). Further, Wray et al. (2000) argue that effective teachers group students according to the needs of the learners and the task involved, with organizational patterns chosen for their purpose. Taking into account the broad range of within-class groupings, flexible skill-focused groups that are strategically constructed based on students’ learning needs and identified through continuous assessment processes support teaching efficiencies (Jones and Henriksen 2013). Therefore, knowing how to group students, and for what purpose, is vital to effective teaching and learning; yet, achieving a strategic balance through the use of a range of classroom grouping structures remains one of the most difficult dilemmas faced by teachers (Baines et al. 2003). In an analysis of teachers’ practice and student performance conducted by Castle et al. (2005), teachers attributed improvements in students’ literacy outcomes to flexible groupings based on the use of assessment data to make focused instruction possible.

1.3 Student cohort groups and writing

Differentiated attainment patterns for writing remain an ongoing concern, with large-scale national and international testing regimes providing clear evidence of the outcomes for particular student cohort groups (Australian Curriculum, Assessment and Reporting Authority 2017; Thomson et al. 2017). The Australian National Assessment Program, Literacy and Numeracy (NAPLAN) trend data for writing show that female students consistently score higher than male students in Years 3 and 5 in each state and territory (ACARA 2015, 2016, 2017). Explanations for gender differences point to differences in social and behavioural skills (Diprete and Jennings 2012), teacher expectations (Below et al. 2010), and girls’ better preparedness for school (Whitehead 2006). However, it is often gender in concert with other factors, such as ethnicity and social class, that affects outcomes (Lindsay and Muijs 2006). The influence of socio-economic status on school and student performance is particularly powerful (Teese and Lamb 2009; Organisation for Economic Cooperation and Development 2010). Specific to writing, the level of parental education is associated with achievement levels (ACARA 2017), and NAPLAN writing test results indicate that students from higher-income families consistently achieve better outcomes at Year 3 and Year 5 (ACARA 2015, 2016, 2017). Moreover, the low SES of students and schools also affects curriculum content, further limiting the breadth and depth of curriculum provision (Sawyer 2017).

Our previous study of Year 1 students’ writing showed small differences in growth trajectories by gender and SES (Mackenzie et al. 2015). Despite these general patterns of achievement, to ensure equality of outcomes, teachers working in the early years of schooling with boys or with students from low SES backgrounds may need to differentiate their instruction, selecting some dimensions or aspects of the writing process for focused teaching above others. However, we need to guard against disadvantaging vulnerable students by narrowing the curriculum, and instead enable teaching that provides access to the rich and varied discourse patterns and linguistic conventions necessary for ongoing success in schooling and in the larger society (Delpit 2012).

1.4 The present study

The terms authorial and secretarial have been used to describe aspects of writing in the past (Peters and Smith 1993) and have become part of the teaching lexicon, yet there has been no statistical analysis of the ways in which these aspects may work in combination, or of how combinations of the dimensions of writing might inform assessment for teaching and the grouping of students for instruction. One aim of this study is to confirm the structure of a model that is theoretically defined and relevant to early years education. A second aim is to consider the grouping of students based on their writing skill profiles. The influence of students’ gender and SES background is also analysed to account for cohort differences. Therefore, the research questions under consideration are:

(1) Can a concise measure of the writing process, comprising six dimensions representing the authorial and secretarial aspects of writing, be developed?

(2) What is the strength of the relationship between the dimensions of writing (items) and the authorial and secretarial aspects (factors) of writing?

(3) Are there typologies of students that emerge from clustering the items of the Writing Analysis Tool?

(4) Are there differences in students’ writing performance based on SES and gender?

2 Method

2.1 Participants

The analysis was conducted using writing samples from Year 1, collected at the end of the school year via teacher networks from 75 schools in New South Wales and Victoria, Australia. Teacher networks were approached to gather a representative sample of SES groups, location, and cultural and linguistic student backgrounds.

This resulted in a database of writing samples from 1799 students. In line with statistical techniques for calculating sample size, given the six items under consideration, a sample of between 200 and 300 is recommended (Kyriazos 2018). Therefore, 250 samples were selected from the total data set, using systematic sampling with a fixed periodic interval set to reach the desired sample size. The proportion of students from SES groups and cultural and linguistic backgrounds in the sample selected was representative of the larger data set. The current sample of 250 consists of more females (n = 131) than males (n = 119).
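The systematic sampling step can be illustrated with a short Python sketch. The paper does not report the start point or how the fractional interval (1799 / 250 ≈ 7.2) was handled, so the integer-arithmetic rounding and the `offset` parameter below are assumptions, and `systematic_sample` is a hypothetical helper rather than the authors' procedure.

```python
def systematic_sample(population, n, offset=0):
    """Draw n items at a fixed periodic interval k = N / n.

    Index i is floor((offset + i * N) / n), computed with integer arithmetic so
    that exactly n distinct items are returned even when N is not a multiple
    of n. offset must lie in [0, N); offset // n is the starting position
    within the first interval (a random offset gives the usual random start).
    """
    N = len(population)
    if not 0 < n <= N:
        raise ValueError("n must be between 1 and len(population)")
    if not 0 <= offset < N:
        raise ValueError("offset must be in [0, len(population))")
    return [population[(offset + i * N) // n] for i in range(n)]


# Hypothetical IDs for the 1799 collected writing samples.
sample_ids = list(range(1, 1800))
sample = systematic_sample(sample_ids, 250)
print(len(sample), sample[:5])  # 250 [1, 8, 15, 22, 29]
```

A random start could be drawn with `random.randrange(len(population))` and passed as `offset`; the fixed start of 0 is used here only to make the example reproducible.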

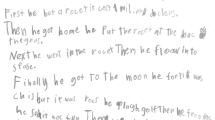

2.2 Procedure

During regular classroom writing sessions, teachers were asked to collect a sample of writing produced independently by each student in their class. Teachers were given a prompt that was not tied to any classroom teaching approach, cultural context, or curriculum (“Today you can choose to write about anything you like”), and students were given 20 minutes to complete the writing task. Data were collected in the second half of the second year of school; the participating students had therefore been exposed to writing instruction for 18 months prior to data collection. The researchers left the choice of topic open and did not request drawings, thereby allowing teachers to guide students as to what “writing” was. While a small number of texts included drawings, there were too few for drawings to be included in the analysis. The analysis tool was designed for the data the study provided, which were written texts, and these gave us the opportunity to examine texts from the perspective of the authorial and secretarial elements described earlier. Teachers did not correct the texts, and students were not prompted to revise their writing; as such, “first draft” texts were collected for analysis. Teachers de-identified the writing samples and recorded demographic details of the students, such as school, class, gender, and language background, before sending the samples to the researchers. The study was performed with approval from the University’s Human Research Ethics Committee and with permission from the participating educational institutions. Parental consent was obtained before students participated.

2.3 Data analysis

The Year 1 texts were first rated by the researchers using the multivector Writing Analysis Tool (Mackenzie et al. 2015) (see Appendix). The tool was developed in an earlier stage of the research project through ratings of observable categories of writing evident in the texts collected. The analysis focused on six dimensions of writing, namely text structure, sentence structure, punctuation, spelling, vocabulary, and handwriting/legibility; for each dimension, one of six levels of attainment was assigned. The six levels were based on the increasing levels of complexity evident in the writing samples and on understandings of expected progressions in learning, alongside a clear recognition of what young writers are capable of achieving in each dimension (ACARA 2012; Mackenzie et al. 2013). Each dimension is scored independently, and a total numeric score is not intended to be assigned to any one text. To refine the tool, the research team (three researchers and a research assistant) coded a total of 210 texts. Members of the team first coded the texts independently; results were then compared and consensus achieved (Mackenzie et al. 2013). Importantly, this involved reviewing the descriptors to refine the gradient of text complexity so that they explicitly described the sequence of learning evident in the texts, ensuring verification, comprehension, and completeness (Morse et al. 2002). This process resulted in each rater developing a high level of familiarity with the tool. Two researchers coded the 250 texts analysed in this paper, with ten per cent selected for inter-coder reliability. Points of ambiguity or difference were identified and discussed until agreement was reached (Bradley et al. 2007).
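The text does not state which agreement statistic was computed on the ten per cent reliability subsample. As a hedged illustration only, the sketch below computes simple percent agreement and unweighted Cohen's kappa for two coders' 1–6 ratings on one dimension; the ratings are invented, and because the levels are ordered, a weighted kappa could be argued to be more appropriate.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Proportion of texts given the same level by both coders."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Unweighted Cohen's kappa: observed agreement corrected for chance."""
    p_observed = percent_agreement(coder_a, coder_b)
    n = len(coder_a)
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of the two coders' marginal proportions per level.
    p_chance = sum(counts_a[level] * counts_b[level]
                   for level in set(counts_a) | set(counts_b)) / (n * n)
    if p_chance == 1:
        return 1.0  # both coders used one identical level throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical spelling levels (1-6) assigned by two coders to six texts.
coder_a = [3, 4, 4, 2, 5, 3]
coder_b = [3, 4, 3, 2, 5, 3]
print(round(percent_agreement(coder_a, coder_b), 3))  # 0.833
print(round(cohens_kappa(coder_a, coder_b), 3))       # 0.769
```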

The Index of Community Socio-educational Advantage (ICSEA) was used to measure SES, consistent with previous research (Anderson and Curtin 2014). ICSEA values measure the level of advantage/disadvantage, remoteness, and the presence of groups with specific needs in Australian schools. The standardized values range from approximately 500 to 1300, with a median of 1000 and a standard deviation (SD) of 100 (ACARA 2013); approximately two-thirds of schools in Australia have an ICSEA value between 900 and 1100. A four-group classification was anticipated, including a group more than 1 SD below 1000 (ICSEA < 900); however, as only one student fell in this range, that student was allocated to the low average group. A three-group categorization was therefore used to reflect the range of participating schools: a low average ICSEA group, n = 76 (LAI: ICSEA score < 999), a high average ICSEA group, n = 98 (HAI: ICSEA range 1000–1099), and an above average ICSEA group, n = 76 (AAI: ICSEA score > 1100).
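The grouping rule can be written as a small function. This is a hedged sketch: `icsea_group` is a hypothetical helper, and because the cut-points as written (< 999, 1000–1099, > 1100) leave the values 999 and 1100 unassigned, it assumes 999 belongs to LAI and 1100 to AAI. Scores below 900 fall into LAI, mirroring the reallocation of the single student in that range.

```python
from collections import Counter


def icsea_group(score):
    """Map a school ICSEA value to the three SES groups used in the study.

    Assumption: 999 is treated as LAI and 1100 as AAI, since the stated
    cut-points leave both values unassigned. Values below 900 (more than
    1 SD below 1000) are folded into LAI rather than forming a fourth group.
    """
    if score < 1000:
        return "LAI"  # low average, including the < 900 case
    if score < 1100:
        return "HAI"  # high average, 1000-1099
    return "AAI"      # above average, 1100 and over


# Hypothetical school ICSEA values for a handful of students.
scores = [880, 962, 999, 1000, 1045, 1099, 1100, 1163]
print(Counter(icsea_group(s) for s in scores))
```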

The statistics program used for each analysis was SPSS (IBM Corp. Released 2017). After checking univariate and multivariate normality, correlational analysis, confirmatory factor analysis, and cluster analysis were used to address the research questions. As the correlation analysis indicated that all items were associated, a confirmatory factor analysis was completed to validate the structure of the factors and items. Confirmatory factor analysis is a statistical procedure that is an “extension of factor analysis in which specific hypotheses about the structure of the factor loadings and intercorrelations are tested” (StatSoft Online Dictionary 2016a). This procedure provides a range of statistical indicators of how strongly items represent constructs. To assess the fit of a proposed model, a number of indices are recommended (Byrne 1998, 2001): the ratio of χ2 to degrees of freedom (χ2/df < 2.0 indicating a good fit; Hooper et al. 2008), the root mean square residual (RMR, appropriate here as all items have the same range; Kline 2005), the goodness-of-fit index (GFI), the adjusted goodness-of-fit index (AGFI), and the Tucker and Lewis index (TLI), which indicate the goodness of fit of the model (Tanaka 1987; Tucker and Lewis 1973). For the GFI, AGFI, comparative fit index (CFI), and TLI, acceptable levels of fit are above 0.90 (Marsh et al. 1988). For the root mean square error of approximation (RMSEA), values less than 0.05 indicate good fit and values of 0.05–0.08 indicate moderate fit (Browne and Cudeck 1993); for RMR, values < 0.05 are acceptable (Hooper et al. 2008).
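The cut-offs listed above can be collected into a small checker. This is a sketch for illustration: the thresholds are the conventions cited in the text rather than strict rules, `assess_fit` is a hypothetical helper, and the example values are those reported for the six-item model in the Results.

```python
def assess_fit(indices):
    """Label each fit index against the cut-offs cited in the text."""
    verdicts = {"CMIN/df": "good" if indices["CMIN/df"] < 2.0 else "poor"}
    for name in ("GFI", "AGFI", "CFI", "TLI"):
        verdicts[name] = "acceptable" if indices[name] > 0.90 else "poor"
    rmsea = indices["RMSEA"]
    if rmsea < 0.05:
        verdicts["RMSEA"] = "good"
    elif rmsea <= 0.08:
        verdicts["RMSEA"] = "moderate"
    else:
        verdicts["RMSEA"] = "poor"
    verdicts["RMR"] = "acceptable" if indices["RMR"] < 0.05 else "poor"
    return verdicts


reported = {"CMIN/df": 1.86, "RMR": 0.026, "GFI": 0.979, "AGFI": 0.946,
            "TLI": 0.970, "RMSEA": 0.059, "CFI": 0.984}
for index, verdict in assess_fit(reported).items():
    print(f"{index}: {verdict}")
```

On these conventions, the reported RMSEA of 0.059 falls in the moderate band, while the other indices meet their thresholds.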

Cluster analysis was carried out to group the students and establish whether there were underlying groups or typologies of students on the basis of the six ratings of the writing tool. K-means and hierarchical cluster procedures were completed to establish whether underlying groups were present. Cluster analysis is “an exploratory data analysis tool which aims at sorting different objects into groups in a way that the degree of association between two objects is maximal if they belong to the same group and minimal otherwise” (Sarstedt and Mooi 2014; StatSoft Online Dictionary 2016b). Solutions with two to five groups were considered, and the best solution was identified by inspection of the agglomeration schedule and of dendrograms using average linkage. As all rating scale scores ranged from 1 to 6 and all SDs were less than 1, standardizing the scores was considered unnecessary.
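A minimal k-means pass over the six unstandardized ratings can be sketched as below (Lloyd's algorithm with Euclidean distance). The authors ran their clustering in SPSS, whose choice of starting centres differs; here the initial centroids are simply picked by hand, and the ratings are invented to show three well-separated profiles.

```python
import math


def kmeans(points, initial_centroids, max_iter=100):
    """Lloyd's k-means with Euclidean distance on raw (unstandardized) scores."""
    centroids = [list(c) for c in initial_centroids]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each student joins the nearest centroid.
        new_labels = [min(range(len(centroids)),
                          key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the solution has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an emptied cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical ratings: text, sentence, vocabulary, spelling, punctuation, handwriting.
ratings = [
    [2, 2, 2, 2, 1, 2], [2, 1, 2, 2, 1, 2], [1, 2, 2, 2, 1, 2],  # low profile
    [3, 3, 3, 3, 2, 3], [4, 3, 3, 3, 2, 3], [3, 3, 4, 3, 2, 3],  # medium profile
    [4, 4, 4, 4, 3, 4], [5, 4, 4, 5, 3, 4], [4, 5, 4, 4, 4, 4],  # high profile
]
labels, centroids = kmeans(ratings, [ratings[0], ratings[3], ratings[6]])
print(labels)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```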

3 Results

The structure of the writing process represented by the six dimensions was tested using a confirmatory factor analysis with maximum likelihood estimation, completed using data from the 250 Year 1 texts. The ratio of 250 respondents to six items is 41.67:1, which is considered ample by Costello and Osborne (2005). The six items representing the dimensions of the writing process were loaded into the model, and the fit indices showed that the model was a very good fit (CMIN/df = 1.86; RMR = .026; GFI = .979; AGFI = 0.946; TLI = .970; RMSEA = 0.059; CFI = .984). The model was parsimonious and did not require adjustment or covarying of any error terms (Fig. 1). The standardized weights are presented in Table 1. The model tests showed an appropriate goodness of fit, with a well-ordered hierarchical, multivariate factor structure in which three dimensions loaded on each of the two aspects of writing.
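As a rough consistency check, the point estimate of RMSEA depends on χ2 and df only through their ratio, RMSEA = sqrt(max(χ2/df − 1, 0) / (N − 1)), so it can be recovered from the reported CMIN/df. The sketch below assumes this common formula; some programs use N rather than N − 1, and because CMIN/df is rounded to two decimals the result is approximate.

```python
import math


def rmsea_from_ratio(cmin_df, n, use_n_minus_1=True):
    """Point estimate of RMSEA from the chi-square / df ratio and sample size."""
    denominator = n - 1 if use_n_minus_1 else n
    return math.sqrt(max(cmin_df - 1.0, 0.0) / denominator)


print(round(rmsea_from_ratio(1.86, 250), 3))         # 0.059
print(round(rmsea_from_ratio(1.86, 250, False), 3))  # 0.059
```

Both variants give approximately 0.059, matching the reported RMSEA.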

As recommended by Ketchen and Shook (1996), a principal components analysis with orthogonal rotation was used to check that multicollinearity was not an issue. The overall significance of the correlation matrix was assessed using Bartlett’s test of sphericity, and sampling adequacy was also assessed (Hair et al. 1995; Tabachnick and Fidell 1996). The Kaiser–Meyer–Olkin measure of sampling adequacy was very good at .82. Bartlett’s test of sphericity was significant (χ2 (15, n = 250) = 445.15, p < .001), indicating that the correlation matrix departs from an identity matrix and is therefore suitable for factoring. The two-factor solution accounted for 67.68% of the variance, with the first factor accounting for 36.25% and a substantial 31.43% accounted for by the second. The scree plot indicated that the two-factor solution was the best representation of the data. Finally, the rotated component matrix of the principal components analysis (Table 2) indicates the independence of the items to factors. Taken together, this indicates the absence of multicollinearity.
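For reference, the standard form of Bartlett’s test of sphericity is given below, where n is the sample size, p the number of variables, and |R| the determinant of their correlation matrix. With the six writing dimensions (p = 6), the test has 15 degrees of freedom; the two factors’ shares of variance also sum to the reported total (36.25% + 31.43% = 67.68%).

```latex
\chi^2 = -\left[(n - 1) - \frac{2p + 5}{6}\right]\ln\lvert\mathbf{R}\rvert,
\qquad
df = \frac{p(p - 1)}{2} = \frac{6 \times 5}{2} = 15
```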

The correlations between the factor scores and the higher-order factors of the model (Table 3) were significant in each pairwise combination, ranging from low to high and most frequently falling in the moderate to high range. Like the coefficients of the confirmatory factor analysis, the correlations of the six dimensions of the writing tool with each other were moderate. The correlations of the first-order factors with the two aspects of the writing process, secretarial and authorial, were high, whereas the association between the secretarial and authorial factors was moderate. The alpha reliabilities of the factors were sufficiently high (Boyle 1991).
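The alpha reliability of a three-item factor can be computed with the standard Cronbach formula, α = k/(k − 1) · (1 − sum of item variances / variance of total scores). The sketch below is illustrative only: the per-factor alphas are not reproduced here, and the ratings for the three authorial dimensions are invented.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    items: a list of k lists, each holding one item's ratings for the same
    students in the same order. Uses sample variances (n - 1 denominator).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(ratings) for ratings in zip(*items)]  # each student's total
    sum_item_variances = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_variances / variance(totals))


# Hypothetical authorial ratings (1-6) for five students.
text_structure = [2, 3, 4, 4, 5]
sentence_structure = [2, 3, 3, 4, 5]
vocabulary = [1, 3, 4, 4, 5]
alpha = cronbach_alpha([text_structure, sentence_structure, vocabulary])
print(round(alpha, 3))  # 0.967
```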

To establish whether there were underlying profiles of students on the six dimensions of the writing process, a K-means and hierarchical cluster procedure was completed (following Clatworthy et al. 2005 and MacCallum et al. 2002, which used Euclidean distance). Inspection of the dendrogram and agglomeration schedule (Everitt et al. 2001) showed that the clearest separation, indicating the best solution, was the three-cluster solution. The final solution had a high cluster (n = 104; 41.60%), a medium cluster (n = 90; 36.0%) and a low cluster (n = 56; 22.40%).
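The hierarchical step can be sketched as follows: average linkage repeatedly merges the two clusters with the smallest mean Euclidean distance between their members, and the list of merge distances forms the agglomeration schedule. Reading the schedule for the largest jump between successive coefficients is one common heuristic; the authors also inspected dendrograms, so `largest_jump_k` is a hypothetical simplification, and the ratings are invented.

```python
import math
from itertools import combinations


def average_linkage_schedule(points):
    """Agglomerative clustering with average linkage and Euclidean distance.

    Returns one (members_a, members_b, coefficient) tuple per merge, in merge
    order; the coefficients form a simple agglomeration schedule.
    """
    clusters = [[i] for i in range(len(points))]
    schedule = []

    def average_distance(a, b):
        total = sum(math.dist(points[i], points[j]) for i in a for j in b)
        return total / (len(a) * len(b))

    while len(clusters) > 1:
        # Find the pair of current clusters with the smallest average distance.
        ia, ib = min(combinations(range(len(clusters)), 2),
                     key=lambda pair: average_distance(clusters[pair[0]],
                                                       clusters[pair[1]]))
        a, b = clusters[ia], clusters[ib]
        schedule.append((a, b, average_distance(a, b)))
        clusters = [c for k, c in enumerate(clusters) if k not in (ia, ib)]
        clusters.append(a + b)
    return schedule


def largest_jump_k(schedule, n_points):
    """Suggest a cluster count: stop merging just before the largest jump."""
    coefficients = [d for _, _, d in schedule]
    jumps = [coefficients[i + 1] - coefficients[i]
             for i in range(len(coefficients) - 1)]
    i = max(range(len(jumps)), key=jumps.__getitem__)
    return n_points - (i + 1)  # clusters remaining after i + 1 merges


# Hypothetical ratings on the six dimensions for nine students.
ratings = [
    [1, 1, 1, 1, 1, 1], [2, 1, 1, 1, 1, 1], [1, 2, 1, 1, 1, 1],
    [3, 3, 3, 3, 3, 3], [4, 3, 3, 3, 3, 3], [3, 4, 3, 3, 3, 3],
    [5, 5, 5, 5, 5, 5], [6, 5, 5, 5, 5, 5], [5, 6, 5, 5, 5, 5],
]
schedule = average_linkage_schedule(ratings)
for a, b, coefficient in schedule:
    print(a, b, round(coefficient, 3))
print("suggested clusters:", largest_jump_k(schedule, len(ratings)))  # 3
```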

Three ANOVAs compared the dependent variables (DVs) across cluster profiles, SES, and gender of participants. In each ANOVA, the DVs comprised the six dimensions of writing, the two aspects of writing, and the combined writing process. There were no three-way interactions involving cluster, SES, and gender, and no two-way interactions among these factors; therefore, main effects were analysed separately.

An ANOVA with Bonferroni-adjusted post hoc comparisons was used to compare the DVs defined above across cluster groups (independent variable, IV; three levels). The writing dimensions, and the authorial and secretarial aspects, differed significantly and by a large magnitude between each pair of clusters (low/medium/high), with two exceptions: the medium and high clusters did not differ significantly on text structure and sentence structure, and the low and medium clusters did not differ significantly on spelling and punctuation (Table 4). Figure 2 shows the general pattern of the low cluster consistently falling well below the other two groups, particularly for the authorial dimensions. For the three secretarial dimensions, the general pattern is that the high cluster separates from the other two groups.
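The Bonferroni adjustment for the pairwise comparisons can be shown in a few lines: with three groups there are three pairwise comparisons, so each raw p-value is multiplied by 3 (capped at 1), which is equivalent to testing each comparison at α = .05/3 ≈ .017. The p-values below are hypothetical and are not taken from Table 4.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


groups = ["low", "medium", "high"]
pairs = list(combinations(groups, 2))  # 3 pairwise comparisons
raw_p = [0.0004, 0.001, 0.03]          # hypothetical raw p-values, one per pair
for (g1, g2), p in zip(pairs, bonferroni_adjust(raw_p)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{g1} vs {g2}: adjusted p = {p:.4f} ({verdict})")
```

In this invented example the medium versus high comparison (raw p = .03) would be significant without adjustment but not after it.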

A second ANOVA, with Bonferroni adjustment of post hoc tests, was used to analyse the ratings for each dimension of writing (DVs) by SES group (IV; three levels). In general, the above average group separated from the other two groups, with relatively consistent significant differences; however, the magnitude of difference was not as large as that between the naturally occurring clusters (see above). The exception to this pattern was punctuation, for which the high average group had the highest rating (see Table 4).

A third ANOVA (see Table 4) was used to analyse the dimensions of writing (DVs) by gender (IV; males and females). Females were rated significantly and consistently higher than males on every dimension except vocabulary, where there was no significant difference.

In reference to the second-order factors of authorial and secretarial and the third-order factor of writing process, the pattern of ratings showed a significant difference between pairwise combinations of the three cluster groups. A similar and consistently significant pattern was also present for the SES groups. The exception, for SES, was that there was no significant difference between the low average and high average groups on overall secretarial aspects of writing.

Importantly, the magnitude of the differences, and the effect sizes, for the first-, second-, and third-order factors varied widely across the cluster, SES, and gender comparisons. Effect sizes for each of the dimensions and aspects of writing were small for gender and SES. By comparison, the effect sizes for the differences between the clusters based on text sample ratings were far greater in magnitude.
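The contrast in effect sizes can be illustrated with eta squared, η² = SS_between / SS_total, the proportion of variance in a rating explained by group membership (in a one-way design this equals partial eta squared). Which effect-size statistic was reported is not stated here, so this choice is an assumption, and the nine ratings below are invented: grouped by cluster they yield a large η², while the same scores grouped by gender yield a small one.

```python
def eta_squared(groups):
    """Eta squared for a one-way design: SS_between / SS_total."""
    scores = [x for group in groups for x in group]
    grand_mean = sum(scores) / len(scores)
    ss_total = sum((x - grand_mean) ** 2 for x in scores)
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    return ss_between / ss_total


# The same nine hypothetical spelling ratings, grouped two different ways.
by_cluster = [[2, 2, 3], [3, 4, 4], [5, 5, 6]]  # low, medium, high
by_gender = [[2, 3, 4, 5, 6], [2, 3, 4, 5]]     # hypothetical gender split
print(round(eta_squared(by_cluster), 3))  # 0.871
print(round(eta_squared(by_gender), 3))   # 0.036
```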

4 Discussion

When students are learning to write, they need to develop automatic control over a range of different aspects of the writing process, moving from ideas to the composition and recording of the message and the monitoring of text production (Clay 2016). This requires attention to meaning construction while, at the same time, attending to the conventions of writing that allow the message to be read and understood by others. Capturing this complexity to support effective teaching decisions requires careful observation and analysis of students’ written texts. Moreover, teachers need to appreciate the ways in which students learn to write and be aware of the evidence that signals growth and competence (Scull and Mackenzie 2018). The analysis carried out to assess the validity of the Writing Analysis Tool addresses the central tenets of this issue.

Over time, the terms authorial and secretarial aspects of writing have become part of teachers’ lexicon, used to describe early writing (Corden 2003; Emilia and Tehseem 2013; Peters and Smith 1993; Ruttle 2004). The findings from this study help to define the parameters of these aspects of the writing process. The results provide clear evidence that text structure, sentence structure, and vocabulary are dimensions of writing associated with the authorial aspect of writing. Similarly, spelling, punctuation, and handwriting are associated with the secretarial aspect of writing. The correlations among the dimensions provide further evidence of the relationships between the six dimensions of writing, aligning them with the two aspects of the writing process. Starting from the analysis of young students’ texts, the dimensions captured in the Writing Analysis Tool (Mackenzie et al. 2013) can be used to describe the components of early writing with accuracy and confidence, while the tool also allows for the analysis of writing to support appropriate teaching decisions across the spectrum of skills required. Recent research suggests that teachers emphasize print conventions, aligned with the secretarial aspects of text construction, when teaching writing (Mackenzie 2014), with data from the USA suggesting that handwriting is often prioritized in early writing instruction (Bingham et al. 2017). Findings from this study support both the communicative purpose and the ways ideas are expressed as central, and equally important, features of texts. Use of the tool can help ensure that teachers consider a broad range of skills to frame their teaching, increasing opportunities for composition and using composition as a context for teaching the mechanics and conventions of text construction.

The analysis of students’ texts in this study points to the need for targeted teaching that acknowledges the particular relationships among the authorial aspects of writing, including the functions of texts and the expression of ideas, while attending to the ways ideas are represented and the vocabulary choices made to ensure that messages are precise and accurate. These skills shape the communicative purpose of written texts (Scull and Mackenzie 2018). Connecting the authorial dimensions of writing when teaching (for example, teaching sentence structure and vocabulary together) allows students to build the grammatical complexity and lexical density that are common features of more sophisticated texts (Christie 2005). Dimensions taught concurrently may also support each other, making learning easier than when they are taught in isolation.

As writing demands increase (Brandt 2015), precision in conveying messages becomes a critical twenty-first-century skill. The results of the study discussed here reinforce the centrality of efficient transcription skills, which allow a writer to focus on the task of creating meaning (Kiefer et al. 2015). Conversely, poor transcription skills can constrain thinking, planning, and translating processes. As Limpo et al. (2017) note, “For instance, if students are concerned with how to produce letter forms or with how to spell a word, they may either forget already developed ideas or disregard basic rules in sequencing words (e.g. subject-verb agreement)” (p. 27).

Across the data sets showing mean scores for the dimensions of writing, punctuation scores are consistently lower than those for the other dimensions measured. While an experienced teacher of young writers can often infer where punctuation should go, other readers may be confused if students omit or misuse punctuation. Yet punctuation is important for the organization and correct interpretation of written texts (Davalos-Esparza 2017; Figueras 2001). Davalos-Esparza (2017) has shown that punctuation at either end of a sentence develops before punctuation within a sentence, and Hall (2009) suggests that punctuation becomes increasingly challenging for students as they write more, with its subtleties often quite perplexing for them. Mackenzie et al. (2013) add that, while young writers’ punctuation may initially be limited to capital letters at the start of sentences and for proper nouns, full stops at the end of sentences, question marks, and commas for lists, students as young as 6–7 years can demonstrate control over a range of punctuation to enhance text meaning.

Communicative purpose, an awareness of audience, and the expression of ideas are key features of texts; they are of equal importance and need to be explicitly taught to support young writers. The analysis of students’ varying skill profiles makes clear that teachers can use data obtained from student work samples to focus and inform their teaching. Knowledge of achievement patterns and students’ profiles, alongside an awareness of the evidence that indicates competence across the authorial and secretarial aspects of writing, can suggest which aspects of the writing process teachers might notice and teach.

The students’ texts analysed in this study revealed areas of strength and need across the high-, medium- and low-profile groups. The results showed large variability across the skill profiles of Year 1 writers, and while the differences between groups were significant, we recognize that differences in development could plausibly be attributed to classroom instructional approaches and teachers’ expectations, in terms of both text quality and quantity (Harmey and Rodgers 2017). The high-profile group of Year 1 writers demonstrated competence across both aspects of writing, with higher levels of authorial competence evident. These young writers were aware of the ways language is structured and patterned for particular purposes and demonstrated an increasing awareness of appropriate vocabulary use. They appeared to pay less attention to the secretarial aspects of writing. As noted earlier, the writing samples collected for analysis were first-draft texts, so for some of these students, attention to conventions such as spelling and punctuation may follow the composition and initial recording of ideas, during review and editing. The medium-profile group showed a similar pattern, with high achievement levels for the authorial skills, yet their secretarial skills were significantly lower than those of the high-profile group. Rather than reflecting inattention and occasional errors, this may indicate the need for focused teaching to ensure the clear recording of messages and an increased sensitivity to writing conventions.

The low-profile group was well below the other two groups on the authorial factors, indicating the need for careful teaching that supports students to organize their ideas or information into a text that follows the conventions of a particular text type, with an increased awareness of how sentences are constructed and how to choose words that add precision to their writing. Furthermore, these students need targeted instruction that builds knowledge of spelling patterns, an understanding of the ways in which punctuation supports sentence construction, and an awareness of how handwriting assists composing and how messages should be recorded legibly and fluently. It is important here to avoid narrow approaches to teaching literacy that focus solely on basic skill areas or “constrained skills” (Paris 2005), as is often the case for the lowest-achieving students (Luke 2014).
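The low-, medium- and high-profile groups discussed above came from a cluster analysis of students' scores on the six dimensions. The sketch below shows the general idea using plain k-means with k = 3 in standard-library Python. It swaps in k-means purely for illustration: the deterministic seeding rule (students with the lowest, median and highest total score), the naming of clusters by centroid mean, and the input format are assumptions, not the procedure used in the study.

```python
from statistics import mean


def squared_distance(p, q):
    """Squared Euclidean distance between two rating profiles."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_profiles(profiles, iterations=100):
    """profiles: list of six-rating tuples (one per student, at least three students).

    Returns (labels, centroids): one of 'low', 'medium', 'high' per student, and a
    dict mapping each label to its centroid, with clusters named by centroid mean.
    """
    k = 3
    # Assumed deterministic seeding: lowest, median and highest total score.
    order = sorted(range(len(profiles)), key=lambda i: sum(profiles[i]))
    seeds = [order[0], order[len(order) // 2], order[-1]]
    centroids = [list(profiles[i]) for i in seeds]
    assignment = [None] * len(profiles)
    for _ in range(iterations):
        # Assign every student to the nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c])) for p in profiles
        ]
        if new_assignment == assignment:
            break  # converged: no student changed cluster
        assignment = new_assignment
        # Move each centroid to the mean profile of its members.
        for c in range(k):
            members = [profiles[i] for i, a in enumerate(assignment) if a == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [mean(col) for col in zip(*members)]
    ranked = sorted(range(k), key=lambda c: mean(centroids[c]))
    names = dict(zip(ranked, ("low", "medium", "high")))
    labels = [names[a] for a in assignment]
    return labels, {names[c]: centroids[c] for c in range(k)}
```

Naming clusters by their overall centroid mean mirrors the low/medium/high description, but it hides within-cluster differences such as the authorial-over-secretarial pattern noted for the higher groups; inspecting each centroid dimension by dimension recovers that detail.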

The data from this study suggest that gender- and SES-specific outcomes may not be highly relevant to students in the early years of schooling. Although higher levels of attainment were recorded for girls, after only 2 years at school, gender and SES make little difference overall, with the exception of vocabulary, which appears to be a concern for boys. In essence, the variance explained by gender and SES is small to moderate. By comparison, the differences between the three cluster groups were considerably greater, as the students in the sample varied far more on the dimensions and aspects of writing. This is encouraging, because teachers have greater influence over the learning of writing than they have over the effects of gender and SES. Consequently, while the overall range of skill profiles is large, it can be mediated by the ways in which teachers design instruction to optimally facilitate learning. This also suggests the need for policy and funding that prioritize teaching interventions in the early years of schooling, to strengthen outcomes for young learners and to reduce the achievement gap as early as possible (Gross et al. 2006).
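The claim that gender and SES explain a small-to-moderate share of variance can be read in terms of an effect size such as eta squared: the proportion of total variance in a score accounted for by group membership. The Python sketch below computes it for a one-way grouping and applies widely used rule-of-thumb cut-offs of roughly .01 (small), .06 (medium) and .14 (large). This section does not restate which effect-size statistic the study reported, so treat the functions as a generic illustration; the function names and input format are assumptions.

```python
from statistics import mean


def eta_squared(groups):
    """Proportion of total variance explained by group membership (SS_between / SS_total).

    groups maps a group label (e.g. a gender or SES category) to a list of scores.
    Assumes the pooled scores are not all identical, otherwise SS_total is zero.
    """
    pooled = [score for scores in groups.values() for score in scores]
    grand_mean = mean(pooled)
    ss_total = sum((score - grand_mean) ** 2 for score in pooled)
    ss_between = sum(len(scores) * (mean(scores) - grand_mean) ** 2 for scores in groups.values())
    return ss_between / ss_total


def effect_size_label(eta2):
    """Rule-of-thumb labels using cut-offs of about .01, .06 and .14."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "negligible"
```

Comparing the eta squared for a demographic grouping with that for cluster membership on the same score is one way to express the contrast drawn above between the modest demographic differences and the much larger differences between cluster groups.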

Despite the recognized affordances of small group teaching (Jones and Henriksen 2013; Wray et al. 2000), challenges remain for effective implementation, as teachers are responsible for decisions about the composition and nesting of groups within larger classroom organizational structures and differentiated teaching practices (Baines et al. 2003). Drawing on the results presented, we suggest that analysing students’ writing gives teachers instructionally relevant data that help identify students’ needs and facilitate planning for learning that takes into account students’ profiles across the dimensions of writing. Specifically, this supports teachers to move away from fixed ability groups, for which there is clear evidence of negative effects on student achievement, particularly for low-achieving and minority students, towards needs-based instruction and focused small group support (Castle et al. 2005). We suggest that, when working with small groups of students with similar needs, teachers draw on a range of well-respected approaches to teaching writing that specifically target authorial or secretarial writing skills as required. For example, to develop skills related to text and sentence structure, teachers can use approaches such as modelled and shared writing, or use mentor texts (Dorfman and Cappelli 2007; Hill 2012) to deepen students’ appreciation of complex sentence structures and vocabulary. Small group approaches such as interactive writing (Mackenzie 2015; Nicolazzo and Mackenzie 2018) can be used to target the teaching of secretarial skills, including spelling and handwriting, within the context of meaningful text construction.
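One simple way to operationalize grouping students with like needs is to place together students whose lowest-rated dimension is the same, and to tag each group with the aspect (authorial or secretarial) that dimension belongs to. The Python sketch below is a hypothetical rule, not a procedure proposed in the study: the dimension identifiers, the tie-breaking order, and the choice of a single focus dimension per student are all assumptions, and in practice a teacher would weigh the whole profile rather than one rating.

```python
from collections import defaultdict

# Hypothetical identifiers for the six dimensions, tagged by aspect as in the text.
# Dict order matters: it is used to break ties between equally low ratings.
DIMENSIONS = {
    "text_structure": "authorial",
    "sentence_structure": "authorial",
    "vocabulary": "authorial",
    "spelling": "secretarial",
    "punctuation": "secretarial",
    "handwriting": "secretarial",
}


def focus_dimension(ratings):
    """Lowest-rated dimension; ties go to the dimension listed first in DIMENSIONS."""
    return min(DIMENSIONS, key=lambda d: ratings[d])


def need_based_groups(class_ratings):
    """class_ratings maps a student ID to a dict of dimension -> rating (1 to 6).

    Returns a dict mapping (aspect, dimension) to the sorted IDs of students
    who share that focus dimension.
    """
    groups = defaultdict(list)
    for student, ratings in class_ratings.items():
        dim = focus_dimension(ratings)
        groups[(DIMENSIONS[dim], dim)].append(student)
    return {key: sorted(ids) for key, ids in groups.items()}
```

Because groups are rebuilt from each new round of ratings, membership changes as profiles change, which is the property that distinguishes needs-based grouping from fixed ability groups.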

5 Limitations and future directions

The conceptualization of the six-factor model for writing was based on the analysis of Year 1 students’ texts using the Writing Analysis Tool. The dimensions of writing used to describe students’ attainment patterns across the authorial and secretarial aspects of writing pertain to students who are beginning to develop control over the writing process. A limitation of the study reported here arose from the single-mode writing samples provided by teachers; accordingly, the analysis tool was designed to analyse single-mode, written texts. We acknowledge that writing in contemporary times is more often multimodal, and that recognizing and encouraging students’ use of multiple modes is particularly important in the early stages. Future research examining the writing of children in the early years of school should include children’s drawings. Further, while the analysis covers a range of achievement levels, students in later years of schooling may display a different pattern of development, and the associations between the dimensions of writing could vary for these cohorts. Additional data collection and analysis will be required to examine students’ writing competence and to report patterns of achievement across a wider range of year levels. Having moved closer to describing attainment patterns and possible within-class groupings for teaching writing, we propose next to ascertain when, and for whom, grouping based on the analysis tool is effective, and to investigate how teachers use the tool to strengthen the effectiveness of their teaching. This sets the agenda for the next stage of our inquiry.

6 Conclusion

Assessment is integral to the effective teaching of writing and is deeply connected to the ways teachers design and deliver classroom teaching programmes to support students’ learning. However, this is predicated on the systematic observation and analysis of writing, drawing on well-informed, classroom-based assessment tools and analysis frames. The Writing Analysis Tool described in this paper is intended to contribute to effective assessment and teaching practice by taking into account students’ specific learning needs and classroom groupings to facilitate focused instruction. Further, we argue that the tool provides evidence that enables teachers to address young students’ learning needs efficiently, by attending to the dimensions of writing in combination rather than singly.

References

Abbott, R. D., Berninger, V. W., & Fayol, M. (2010). Longitudinal relationships of levels of language in writing and between writing and reading in grades 1 to 7. Journal of Educational Psychology,102(2), 281–298.

Anderson, M., & Curtin, E. (2014). LLEAP 2013 Survey Report: Leading by evidence to maximise the impact of philanthropy in education. Melbourne: Australian Council for Educational Research.

Askew, B. J. (2009). Using an unusual lens. In B. Watson & B. J. Askew (Eds.), Marie Clay’s search for the impossible in children’s literacy (pp. 101–127). Auckland: Pearson Education.

Australian Curriculum, Assessment and Reporting Authority (ACARA). (2012). Australian Curriculum: English. Version 3.0. Sydney: Australian Curriculum, Assessment and Reporting Authority. Retrieved from https://www.australiancurriculum.edu.au/f-10-curriculum/english/. Accessed 28 May 2014.

Australian Curriculum, Assessment and Reporting Authority (ACARA). (2013). Guide to understanding 2013 Index of Community Socio-educational Advantage (ICSEA) values. Retrieved from http://www.acara.edu.au/verve/_resources/Guide_to_understanding_2013_ICSEA_values.pdf. Accessed 10 April 2015.

Australian Curriculum, Assessment and Reporting Authority (ACARA). (2015). NAPLAN achievement in reading, persuasive writing, language conventions and numeracy: National report for 2015. Sydney: ACARA.

Australian Curriculum, Assessment and Reporting Authority (ACARA). (2016). NAPLAN achievement in reading, writing, language conventions and numeracy: National report for 2016. Sydney: ACARA.

Australian Curriculum, Assessment and Reporting Authority (ACARA). (2017). NAPLAN achievement in reading, writing, language conventions and numeracy: National report for 2017. Sydney: ACARA.

Baines, E., Blatchford, P., & Kutnick, P. (2003). Changes in grouping practices over primary and secondary school. International Journal of Educational Research,39, 9–34.

Beck, I. L., McKeown, M. G., & Kucan, L. (2002). Bringing words to life: Robust vocabulary instruction. New York: The Guilford Press.

Below, J. L., Skinner, C. H., Fearrington, J. L., & Sorrell, C. A. (2010). Gender differences in early literacy: Analysis of kindergarten through fifth-grade dynamic indicators of basic early literacy skills probes. School Psychology Review,39(2), 240–257.

Bingham, G. E., Quinn, M. F., & Gerde, H. K. (2017). Examining early childhood teachers’ writing practices: Associations between pedagogical supports and children’s writing skills. Early Childhood Research Quarterly,39, 35–46.

Black, P., & Wiliam, D. (2003). In praise of educational research: Formative assessment. British Educational Research Journal,29(5), 623–637.

Boscolo, P. (2008). Writing in primary school. In C. Bazerman (Ed.), Handbook of research on writing: History, society, school, individual, text (pp. 293–309). New York: Lawrence Erlbaum Associates.

Boyle, G. J. (1991). Does item homogeneity indicate internal consistency or item redundancy in psychometric scales? Personality and Individual Differences,12(3), 291–294.

Bradley, E. H., Curry, L. A., & Devers, K. J. (2007). Qualitative data analysis for health services research: developing taxonomy, themes, and theory. Health Services Research,42(4), 1758–1772.

Brandt, D. (2015). The rise of writing: Redefining mass literacy. Cambridge: Cambridge University Press.

Bromley, K. (2007). Best practices in teaching writing. In L. B. Gambrell, L. M. Morrow, & M. Pressley (Eds.), Best practices in literacy instruction (pp. 243–263). New York: The Guilford Press.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equations models (pp. 36–62). Newbury Park, CA: Sage.

Byrne, B. M. (1998). Structural equation modeling with LISREL, PRELIS, and SIMPLIS: Basic concepts, applications, and programming. Mahwah, NJ: Erlbaum.

Byrne, B. M. (2001). Structural equation modeling with AMOS: Basic concepts, applications, and programming. London: Lawrence Erlbaum.

Castle, S., Deniz, C. B., & Tortora, M. (2005). Flexible grouping and student learning in a high-needs school. Education and Urban Society,37(2), 139–150.

Christie, F. (2005). Language education in the primary years. Sydney: University of New South Wales Press.

Christie, F., & Dreyfus, S. (2007). Letting the secret out: Mentoring successful writing in secondary English studies. Australian Journal of Language and Literacy,33(3), 235–247.

Clatworthy, J., Buick, D., Hankins, M., Weinman, J., & Horne, R. (2005). The use and reporting of cluster analysis in health psychology: A review. British Journal of Health Psychology,10(3), 329–358.

Clay, M. M. (2001). Change over time: In children’s literacy development. Portsmouth, NH: Heinemann.

Clay, M. M. (2016). Literacy lessons designed for individuals (2nd ed.). Auckland: Global Education Systems.

Corden, R. (2003). Writing is more than ‘exciting’: Equipping primary children to become reflective writers. Literacy,37(1), 18–26.

Costello, A. B., & Osborne, J. W. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research & Evaluation,10(7), 1–9.

Cutler, L., & Graham, S. (2008). Primary grade writing instruction: A national survey. Journal of Educational Psychology,100, 907–919.

Davalos-Esparza, D.-A. (2017). Children’s reflections on the uses and functions of punctuation: The role of modality markers. Journal for the Study of Education and Development,40(3), 429–466.

Delpit, L. D. (2012). ‘Multiplication is for white people’: Raising expectations for other people’s children. New York, NY: The New Press.

Derewianka, B. (2011). A new grammar companion for teachers (2nd ed.). Sydney: NSW Primary English Teaching Association Australia.

Diprete, T. A., & Jennings, J. L. (2012). Social and behavioral skills and the gender gap in early educational achievement. Social Science Research,41(1), 1–15.

Dorfman, L. R., & Cappelli, R. (2007). Mentor texts: Teaching writing through children’s literature, K-6. Portland: Stenhouse.

Emilia, E., & Tehseem, T. (2013). A synthesis of approaches to teaching writing: A case study in an Australian primary school. Pakistan Journal of Social Sciences,33(1), 121–135.

Everitt, B., Landau, S., & Leese, M. (2001). Cluster analysis (4th ed.). London: Arnold.

Fang, Z., & Wang, Z. (2011). Beyond rubrics: Using functional language analysis to evaluate student writing. Australian Journal of Language & Literacy,34(2), 147–165.

Figueras, C. (2001). Pragmática de la puntuación. Barcelona: Octaedro.

Genishi, C., & Dyson, A. H. (2009). Children, language and literacy: Diverse learners in diverse times. New York and London: Teachers College Press.

Graham, S. (2009–2010). Want to improve children’s writing? Don’t neglect their handwriting. American Educator, 33, 20–40.

Griffin, P. (2009). Use of assessment data. In C. M. Wyatt-Smith & J. J. Cumming (Eds.), Educational assessment in the 21st century (pp. 187–212). Dordrecht: Springer.

Gross, J., Jones, D., Raby, M., & Tolfree, T. (2006). The long term costs of literacy difficulties. London: KPMG Foundation.

Hair, J. F., Anderson, R. E., Tatham, R. L., & Black, W. C. (1995). Multivariate data analysis with readings. Englewood Cliffs, NJ: Prentice Hall.

Hall, N. (2009). Developing an understanding of punctuation. In R. Beard, D. Myhill, J. Riley, & M. Nystrand (Eds.), The SAGE handbook of writing development (pp. 271–284). London: Sage.

Halliday, M. A. K. (2016). Aspects of language and learning. Berlin, Heidelberg: Springer.

Harmey, S. J., & Rodgers, E. M. (2017). Differences in the early writing development of struggling children who beat the odds and those who did not. Journal of Education for Students Placed at Risk,22(3), 157–177.

Hill, S. (2012). Developing early literacy: Assessment and teaching (2nd ed.). Melbourne: Eleanor Curtain Publishing.

Hooper, D., Coughlan, J., & Mullen, M. R. (2008). Structural equation modelling: Guidelines for determining model fit. The Electronic Journal of Business Research Methods,6(1), 53–60.

IBM Corp. (2017). IBM SPSS Statistics for Windows, Version 25.0. Armonk, NY: IBM Corp.

James, K., & Engelhardt, L. (2012). The effects of handwriting experience on functional brain development in pre-literate children. Trends in Neuroscience and Education,1(1), 32–42. https://doi.org/10.1016/j.tine.2012.08.001.

Jones, C. D., & Henriksen, B. M. (2013). Skills-focused small group literacy instruction in the first grade: An inquiry and insights. Journal of Reading Education,38(2), 25–30.

Kalantzis, M., Cope, B., Chan, E., & Dalley-Trim, L. (2016). Literacies (2nd ed.). Melbourne: Cambridge University Press.

Ketchen, D. J., & Shook, C. L. (1996). The application of cluster analysis in strategic management research: an analysis and critique. Strategic Management Journal,17(6), 441–458.

Kiefer, M., Schuler, S., Mayer, C., Trumpp, N. M., Hille, K., & Sachse, S. (2015). Handwriting or typewriting? The influence of pen- or keyboard-based writing training on reading and writing performance in preschool children. Advances in Cognitive Psychology, 11(4), 136–146.

Kline, R. B. (2005). Principles and practice of structural equation modeling (2nd ed.). New York: The Guilford Press.

Kress, G., & Bezemer, J. (2009). Writing in a multimodal world of representation. In R. Beard, D. Myhill, J. Riley, & M. Nystrand (Eds.), The SAGE handbook of writing development (pp. 167–181). Los Angeles: SAGE.

Kyriazos, T. A. (2018). Applied psychometrics: Sample size and sample power considerations in factor analysis (EFA, CFA) and SEM in general. Psychology,9(08), 2207–2230.

Limpo, T., Alves, R. A., & Connelly, V. (2017). Examining the transcription-writing link: Effects of handwriting fluency and spelling accuracy on writing performance via planning and translating in middle grades. Learning and Individual Differences,53, 26–36.

Lindsay, G., & Muijs, D. (2006). Challenging underachievement in boys. Educational Research,48(3), 313–332.

Lo Bianco, J., Scull, J., & Ives, D. A. (2008). The words children write: Research summary of the Oxford Wordlist Research Study (Report for Oxford University Press). Melbourne: Oxford University Press.

Luke, A. (2014). On explicit and direct instruction. Australian Literacy Association Hot Topics, 1–4. https://www.alea.edu.au/documents/item/861.

MacCallum, R. C., Zhang, S., Preacher, K. J., & Rucker, D. (2002). On the practice of dichotomization of quantitative variables. Psychological Methods,7(1), 19–40.

Mackenzie, N. M. (2009). Becoming a writer: Language use and ‘scaffolding’ writing in the first six months of formal schooling. The Journal of Reading, Writing & Literacy,4(2), 46–63.

Mackenzie, N. M. (2011). From drawing to writing: What happens when you shift teaching priorities in the first six months of school? Australian Journal of Language & Literacy,34(3), 322–340.

Mackenzie, N. M. (2014). Teaching early writers: Teachers’ responses to a young child’s writing sample. Australian Journal of Language and Literacy,37(3), 182–191.

Mackenzie, N. M. (2015). Interactive writing: A powerful teaching strategy. Practical Literacy: The Early and Primary Years,20(3), 36–38.

Mackenzie, N. M. (2020). Writing in the early years. In A. Woods & B. Exley (Eds.), Literacies in early childhood: Foundations for equity and quality (pp. 179–192). Melbourne, VIC: Oxford University Press.

Mackenzie, N. M., & Petriwskyj, A. (2017). Understanding and supporting young writers: Opening the school gate. Australasian Journal of Early Childhood,42(2), 78–87.

Mackenzie, N. M., & Scull, J. (2015). Literacy: Writing. In S. McLeod & J. McCormack (Eds.), An introduction to speech, language and literacy (pp. 396–443). Melbourne: Oxford University Press.

Mackenzie, N. M., & Scull, J. (2016). Using a writing analysis tool to monitor student progress and focus teaching decisions. Practical Primary: The Early and Primary Years,21(2), 35–38.

Mackenzie, N. M., Scull, J., & Munsie, L. (2013). Analysing writing: The development of a tool for use in the early years of schooling. Issues in Educational Research,23(3), 375–391.

Mackenzie, N. M., Scull, J., & Bowles, T. (2015). Writing over time: An analysis of texts created by year one students. The Australian Educational Researcher,42(5), 567–593.

Marsh, H. W., Balla, J. R., & McDonald, R. P. (1988). Goodness-of-fit indexes in confirmatory factor analysis: The effect of sample size. Psychological Bulletin, 103(3), 391–410.

Medwell, J., & Wray, D. (2008). Handwriting—A forgotten language skill? Language and Education,22(1), 34–47.

Mills, K. (2011). ‘I’m making it different to the book’: Transmediation in young children’s multimodal and digital texts. Australasian Journal of Early Childhood,36, 56–65.

Morse, J. M., Barrett, M., Mayan, M., Olson, K., & Spiers, J. (2002). Verification strategies for establishing reliability and validity in qualitative research. International Journal of Qualitative Methods,1(2), 1–19.

Myhill, D. (2009). Becoming a designer. In R. Beard, D. Myhill, J. Riley, & M. Nystrand (Eds.), The SAGE handbook of writing development (pp. 402–414). Los Angeles: SAGE.

Nagy, W., & Townsend, D. (2012). Words as tools: Learning academic vocabulary as language acquisition. Reading Research Quarterly,47(1), 91–108.

Nicolazzo, M., & Mackenzie, N. M. (2018). Teaching writing strategies. In N. M. Mackenzie & J. Scull (Eds.), Understanding and supporting young writers from birth to 8 (pp. 189–211). Oxfordshire, UK: Routledge.

Olson, D. R. (2009). The history of writing. In R. Beard, D. Myhill, J. Riley, & M. Nystrand (Eds.), The SAGE handbook of writing development. London: SAGE.

Organisation for Economic Cooperation and Development (OECD). (2010). PISA 2009 at a glance. Paris: Author. Retrieved May 28, 2015 from http://www.oecd.org/pisa/pisaproducts/pisa2009/pisa2009keyfindings.htm.

Paris, S. (2005). Reinterpreting the development of reading skills. Reading Research Quarterly,40(2), 184–202.

Perera, K. (1986). Grammatical differentiation between speech and writing in children aged 8-12. In A. Wilkinson (Ed.), The writing project (pp. 90–108). Oxford: Oxford University Press.

Peters, M. L., & Smith, B. (1993). Spelling in context strategies for teachers and learners. Windsor: NFER-Nelson.

Prain, V., & Hand, B. (2016). Coming to know more through and from writing. Educational Researcher,45(7), 430–434.

Pressley, M., Wharton-McDonald, R., Allington, R., Block, C. C., Morrow, L., Tracey, D., et al. (2001). A study of effective first-grade literacy instruction. Scientific Studies of Reading,5(1), 35–58.

Puranik, C. S., & Lonigan, C. J. (2014). Emergent writing in preschoolers: Preliminary evidence for a theoretical framework. Reading Research Quarterly, 49(4), 453–467.

Purcell-Gates, V. (1994). Relationship between parental literacy skills and functional uses of print and children’s ability to learn literacy skills. Washington, DC: National Institute for Literacy.

Ruttle, K. (2004). What goes on inside my head when I’m writing? A case study of 8–9-year-old boys. Literacy,38(2), 71–77.

Sarstedt, M., & Mooi, E. (2014). A concise guide to market research: The process, data, and methods using IBM SPSS Statistics. Berlin: Springer.

Sawyer, W. (2017). Garth Boomer Address 2017: Low SES contexts and English. English in Australia,52(3), 11–20.

Schlagal, B. (2007). Best practices in spelling and handwriting. In S. Graham, C. A. MacArthur, & J. Fitzgerald (Eds.), Best practices in writing instruction (pp. 179–201). New York: The Guilford Press.

Scull, J., & Mackenzie, N. M. (2018). Developing authorial skills: Text construction, sentence construction and vocabulary development. In N. M. Mackenzie & J. Scull (Eds.), Understanding and supporting young writers from birth to 8 (pp. 165–188). Oxfordshire: Routledge.

StatSoft Online Dictionary. (2016a). Confirmatory factor analysis. Retrieved from http://www.statsoft.com/Textbook/Structural-Equation-Modeling. Accessed 30 Nov 2016.

StatSoft Online Dictionary. (2016b). Cluster analysis. Retrieved from http://www.statsoft.com/Textbook/Cluster-Analysis. Accessed 30 Nov 2016.

Tabachnick, B. G., & Fidell, L. S. (1996). Using multivariate statistics. Northridge, CA: HarperCollins.

Tanaka, J. S. (1987). “How big is big enough?”: Sample size and goodness of fit in structural equation models with latent variables. Child Development, 58(1), 134–146.

Teese, R., & Lamb, S. (2009). Low achievement and social background: Patterns, processes and interventions. In Document de réflexion présenté au colloque de. http://lowsesschools.nsw.edu.au/Portals/0/upload/resfile/Low_achievement_and_social_backgound_2008.pdf. Accessed 28 May 2015.

Thomson, S., Hillman, K., Schmid, M., Rodrigues, S., & Fullarton, J. (2017). Reporting Australia’s results PIRLS 2016: Australia’s perspective. Melbourne: Australian Council for Educational Research.

Torrance, M., & Galbraith, D. (2006). The processing demands of writing. In C. A. MacArthur, S. Graham, & J. Fitzgerald (Eds.), Handbook of writing research (pp. 67–80). New York: The Guilford Press.

Troia, G. (2007). Research in writing instruction: What we know and what we need to know. In M. Pressley, A. Billman, K. Perry, K. Refitt, & J. M. Reynolds (Eds.), Shaping literacy achievement: Research we have, research we need (pp. 129–156). New York, NY: Guilford Press.

Tucker, L. R., & Lewis, C. (1973). A reliability coefficient for maximum likelihood factor analysis. Psychometrika,38(1), 1–10.

Whitehead, J. M. (2006). Starting school—Why girls are already ahead of boys. Teacher Development,10(2), 249–270.

Wiliam, D., Lee, C., Harrison, C., & Black, P. (2004). Teachers developing assessment for learning: Impact on student achievement. Assessment in Education: Principles, Policy and Practice,11(1), 49–65.

Wray, D., Medwell, J., Fox, R., & Poulson, L. (2000). The teaching practices of effective teachers of literacy. Educational Review,52(1), 75–84.

Wulff, K., Kirk, C., & Gillon, G. (2008). The effects of integrated morphological awareness intervention on reading and spelling accuracy and spelling automaticity: A case study. New Zealand Journal of Speech-Language Therapy,63(3), 24–40.

Appendix

Mackenzie, Scull & Munsie, Writing Analysis Tool, 2009–2013, © All rights reserved

| Rating | Text structure | Sentence structure and grammatical features | Vocabulary | Spelling | Punctuation | Handwriting/legibility |
|---|---|---|---|---|---|---|
| 1 | No clear message | Random words | Records words of personal significance, such as their own name or those of family members | Random letters/letter-like symbols | No evidence of punctuation | Letter-like forms with some recognizable letters |
| 2 | One or more ideas (not related) | Shows an awareness of correct sentence parts, including noun/verb agreement. Meaning may be unclear | Uses familiar, common words (e.g. like, went) and one-, two- and three-letter high-frequency words (e.g. I, my, to, the, a, see, me) | Semi-phonetic: consonant framework, alongside representation of dominant vowel sounds. Correct spelling of some two- and three-letter high-frequency words (e.g. the, my, to, can) | Some use of capital letters and/or full stops | Mix of upper- and lower-case letters and/or reversals or distortions (e.g. hnr/a d/bp/v y/i l) |
| 3 | Two or three related ideas. May also include other unrelated ideas | Uses simple clauses, with nouns, verbs and adverbs, which may be linked by “and”. Meaning clear | Everyday vocabulary, for example, Oxford first 307 word list plus proper nouns (particular to the child’s cultural context, e.g. Fruit Fly Circus, Sydney Opera House) | Phonetic spelling: plausible attempts with most sounds in words represented. Correct spelling of three- and four-letter high-frequency words (e.g. the, like, come, have, went) | Correct use of capital letters and full stops at the start and end of sentences | Mostly correct letter formations, yet may contain poor spacing, positioning, or messy corrections |
| 4 | Four or more sequenced ideas. Clearly connected | Uses simple and compound sentence/s with appropriate conjunctions (e.g. and, but, then). Use of adverbial phrases to indicate when, where, how, or with whom | Uses a range of vocabulary, including topic-specific words (e.g. a story about going to the zoo might include animal names and behaviours) | Use of orthographic patterns or common English letter sequences; if incorrect, they are plausible alternatives (e.g. er for ir or ur; cort for caught). Use of some digraphs (ck, ay). Correct use of inflections (ed, ing). Correct spelling of common words (e.g. was, here, they, this) | Some use, either correct or incorrect, of any of the following: proper noun capitalization, speech marks, question mark, exclamation mark, commas for lists, apostrophe for possession | Letters correctly formed, mostly well spaced and positioned |
| 5 | Evidence of structure and features of text type, e.g. recount, narrative, report, letter | Uses a variety of sentence structures: simple, compound and complex. Pronoun reference is correct to track a character or object over sentences | Demonstrates a variety of vocabulary choices. Includes descriptive or emotive language | Use of some irregular spelling patterns (e.g. light, cough). Application of spelling rules (e.g. hope/hoping, skip/skipping). Correct spelling of more complex common words (e.g. there, their, where, were, why, who) | Uses a range of punctuation correctly | Regularity of letter size, shape, placement, orientation, and spacing |
| 6 | Complex text which shows strong evidence of the features of text type, purpose, and audience | Demonstrates variety of sentence structures and sentence length, and uses a range of sentence beginnings. Sentences flow in a logical sequence throughout the text and show a consistent use of tense | Correct use of unique field or technically specific vocabulary. Use of figurative language such as metaphor and/or simile | Correct spelling of most words, including multisyllabic and phonetically irregular words. Makes plausible attempts at unusual words | Demonstrates control over a variety of punctuation to enhance text meaning | Correct, consistent, legible, appearing to be fluent |

Cite this article

Scull, J., Mackenzie, N.M. & Bowles, T. Assessing early writing: a six-factor model to inform assessment and teaching. Educ Res Policy Prac 19, 239–259 (2020). https://doi.org/10.1007/s10671-020-09257-7

DOI: https://doi.org/10.1007/s10671-020-09257-7