Abstract

Criteria of acceptability for mathematical proofs are field-dependent. In topology, though not in most other domains, it is sometimes acceptable to appeal to visual intuition to support inferential steps. In previous work (De Toffoli and Giardino in Erkenntnis 79(3):829–842, 2014; Lolli, Panza, Venturi (eds) From logic to practice, Springer, Berlin, 2015; Larvor (ed) Mathematical cultures, Springer, Berlin, 2016) my co-author and I aimed at spelling out how topological proofs work on their own terms, without appealing to formal proofs which might be associated with them. In this article, I address two criticisms that have been raised in Tatton-Brown (Erkenntnis, 2019. https://doi.org/10.1007/s10670-019-00180-92019) against our approach: (1) that it leads to a form of relativism according to which validity is equated with social agreement and (2) that it implies an antiformalizability thesis according to which it is not the case that all rigorous mathematical proofs can be formalized. I reject both criticisms and suggest that our previous case studies provide insight into the plausibility of two related but quite different theses.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Mathematics is very successful. We are proving more and more sophisticated propositions with an ever-increasing cornucopia of techniques, notations, methods, and abstract concepts linking separate areas of the discipline. Certain achievements even reach the ears of non-specialists: Wiles’ proof of Fermat’s Last Theorem and Perelman’s proof of the Poincaré Conjecture are two examples. The number of informal proofs is vastly greater than that of formal proofs and as a result restricting mathematics to what has been formally proven would strip it of many of its exciting successes. As Tom Hales (2008) explains,

proofs are written in a way to make them easily understood by mathematicians. Routine logical steps are omitted. An enormous amount of context is assumed on the part of the reader. (p. 1371)

ProofsFootnote 1 are enthymematic valid deductive arguments—it is because they contain all sorts of shortcuts that the reader must be appropriately trained to check their correctness. Formal proofs, whose steps are either axioms or derived from previous steps with the aid of explicit inference rules, can instead be mechanically checked. Formal proofs were first defined at the end of the 19th century by the fathers of modern logic, Frege, Russell, Hilbert, and others. Moreover, after a turbulent period of foundational uncertainties, in the first half of the 20th century, set theory became widely recognized as able to provide foundations for all mathematics, that is, all provable results should be in principle provable in the language of set theory,Footnote 2 even if Gödel showed that no single formal system would suffice.Footnote 3 In practice, Bourbaki’s encyclopedic enterprise is an ambitious example of how to organize standard mathematics starting with set theoretic notions.

Oliver Tatton-Brown (2019)Footnote 4 argues that formalizability is the mark of rigor and criticizes recent literature that emphasizes how mathematical proofs are accepted in practice without discussing formal proofs. The main target is a paper Valeria Giardino and I (2016)Footnote 5 coauthored that is centered around Alexander’s theorem, a topological result connecting knots to braids. According to Tatton-Brown, we overemphasize the role of intuition in mathematics and put forward some sort of anti-formalizability claim. Tatton-Brown’s worries have some legitimacy. Putting our previous work into context and disambiguating some of the terminology, however, I will show that our previous work did not make the claims that Tatton-Brown takes it to make. When our work is understood in context, it becomes clear (or so I will suggest) that our project was not in opposition, but rather orthogonal to his.

It is worth noting right away that I agree with (TB19) that while informal proofs are much more common and much more elusive than formal proofs, the two are related. Consider the role of independence results in mathematical practice. After Cohen’s 1963 proof of the independence of the Continuum Hypothesis (CH) from ZFC, a reasonable mathematician would have known better than spend any effort to prove CH in standard mathematics.Footnote 6 That is, the existence of a formal proof (i.e., in principle formal provability) is a sine qua non condition for the existence of an informal proof (i.e., provability)—nobody would disagree with this. A locus of disagreement is instead the type of relation that holds between formal and informal proofs: is this a structured relation? Is it helpful to understand mathematical practice?Footnote 7

In what follows, I will first clarify the difference between an abstract overarching criterion of rigor and context-dependent criteria governing which arguments mathematicians accept in practice. I consider cases in which a special type of intuition is accepted in professional contexts because it does not threaten the reliability of the arguments in question. In Sect. 2, I will briefly go through the issues that (TB19) raises in relation to Alexander’s theorem. Afterward, I will address his particular worries about anti-formalizability and relativism and give a positive proposal about how to resolve them. The considerations to follow will not only respond to the concerns raised in (TB19), but have also a more general breadth, contributing to clarifying the terms at play in the formalizability debate in the philosophy of mathematics.

1.1 Rigor

The majority of mathematicians pay scant, if any, attention to foundational issues and probably never entered the thickets of set theory. Fields medalist Vaughan F. R. Jones (1998, p. 205) confesses: “my own notion of set is very primitive, certainly not going beyond ‘naïve set theory.’” Mathematicians are to logicians as physicists are to mathematicians, claims Jones: both groups push the worry to the other camp when made aware that their proofs are “riddled with holes.” “Let the logician worry about it,” replies the mathematician. Fine, but for rigorous proofs the logician should be able to satisfy her concerns. The logician will not able to fill the gaps all by herself, she will need the help of a mathematician to access the “enormous amount of context” that is assumed in the proof. This context is what mathematical communities shareFootnote 8: background propositional knowledge, knowledge-how, and the available representational resources.

Mark Steiner (1975, p. 100), inquiring about what it takes to achieve mathematical knowledge, considers a mathematician with a proof and a logician posing questions. Prompted by the logician, the mathematician will draw from her expertise unstated premises and enough details to convert the proof into something that the scrupulous logician could in turn convert into a formal proof. According to Steiner, the logician plays the role of a Socratic midwife:

I am so far like the midwife that I cannot myself give birth to wisdom […] Those who frequent my company at first appear, some of them, quite unintelligent, but, as we go further with our discussions, all who are favored by heaven make progress at a rate that seems surprising to others as well as to themselves, although it is clear that they have never learned anything from me. The many admirable truths they bring to birth have been discovered by themselves from within. But the delivery is heaven’s work and mine. (Theaetetus, 150c-d)Footnote 9

In our context, the logician’s lack of wisdom is her lack of knowledge of the relevant mathematical area, and the mathematician’s apparent unintelligence derives from her gappy proof. If the logician succeeds in delivering a formal proof from the mind of the mathematician, the latter had knowledge all along, and this implies that her proof was rigorous.

Bourbaki, Mac Lane, and others characterized rigor with a variation on the same theme. I will not quibble with this standard characterization; my aim here is not to provide yet another definition of rigor.Footnote 10 I will work with Burgess’ (2015) characterization, a version of which is endorsed and further detailed by Tatton-Brown. In short, according to Burgess, a rigorous proof is a proof that contains enough detail to convince (for the right reasons) the relevant audience that a formal proof exists. Moreover, it has to convince for first-order reasons; testimony or higher-order evidence are not relevant. This is a clear and general definition. However, it implies that in order to evaluate whether a proof is acceptable as rigorous, we have to wade into its context and clear the murky waters by asking who is the “relevant audience” and what is “enough” to convince such relevant audience for the right reasons. This is the task I have been pursuing.

1.2 Criteria of Acceptability

As Burgess acknowledges, his characterization of rigor can “provide some guidance” but is inadequate to analyze specific cases:

Lots of room for disagreement, even among experts, is contained in that word “enough,” as is room for recognition that what is enough for one audience may not be enough for another. (pp. 97–98)

Basically, for Burgess, the relevant details are giving the reader steps of the purported formal proof. That is why he does not admit diagrams into rigorous proofs—but this is not essential for his view, and I claim that in fact some types of diagrams meet his desiderata.

In previous work in collaboration with Valeria Giardino, (DG15) and (DG16),Footnote 11 I aimed to show that diagrams and visualizations may be sufficient to convince the relevant audience that an inferential step in a proof is correct. This is not because diagrams can be understood as steps of a formal proof,Footnote 12 but because they support high-level inferences that the relevant readers recognize as correct—and since correctness implies formalizability, the readers further infer that they are formalizable.

This is key. In some cases, mathematicians don’t accept a proof because it is formalizable; they accept a proof for other reasons (e.g., visualizations they know to be reliable), and then they infer that it is formalizable. The order of explanation is thus reversed when compared to Burgess’ and Tatton-Brown’s accounts. More generally, Giardino and I aimed to determine how the contours of enough detail are drawn in specific mathematical practices.Footnote 13

A natural concern with such a project is that criteria of acceptability do not admit of a principled philosophical analysis and, even if they do, they are not of interest for philosophy, but only for sociology or psychology. I will dispel this concern by showing that a philosophical analysis is not only possible, but critical for understanding actual mathematical practice. It is however true that acceptability, unlike rigor, is a context-dependent notion. This does not imply that criteria of acceptability are subjective or socially constructed, however. To clarify terminology and avoid verbal quarrelsFootnote 14 we can distinguish between:

-

1.

the criterion of rigor

and

-

2.

criteria of acceptability for rigorous proofs.

-

(1)

Rigor is a very general and context-independent criterion. It is factual: rigor implies correctness. A proof is rigorous only if it can be formalized and the relevant audience can be convinced of it (for the right reasons). This is a relatively uncontroversial criterion: reasonable mathematicians do not waste their time trying to prove mathematical propositions that have been shown to be independent from their assumptions—such as CH. This is a neat criterion, but it is too general to be implemented in actual mathematical practice.

-

(2)

Criteria of acceptability for rigorous proofs are the criteria that determine whether an argument is suitable for publication and for acceptance in a particular context. These criteria depend on the mathematical community to which the proof is addressed. While some communities accept proofs as rigorous only if they are very close to formal proofs, others are more permissive. While topologists tend to accept some specific appeal to intuition, others (e.g., von Neumann algebras specialistsFootnote 15) don’t.Footnote 16 Moreover, criteria of acceptability can be applied, for example, to Gauss’ proofs and to all other pre-19th century mathematical proofs, which were developed in a time when the notion of formal systems was not yet delineated.

By considering proofs in practice, it quickly becomes evident that the normative dimension at play for accepting rigorous proofs is more variegated and complex than the one involved in assessing formal proofs alone and cannot be spelled out adequately without entering into the details of the various cases. The criteria of acceptability for rigorous proofs—arising from the experience of mathematicians in recognizing what types of inferences are reliable—are going to determine what types of gaps are acceptable, and what types of high-level inferences are permissible in a specific contextFootnote 17,Footnote 18.

The criteria of acceptability for (rigorous) proofs are supposed to track correctness; however, unlike rigor, they are not a guarantee. There are clear historical cases in which the criteria of acceptability for proofs, even in professional contexts, were shown to be inadequate and required revision. As observed in (TB19), in the 17th century, when Leibniz and Newton introduced infinitesimals, their method was more or less acceptable by period mathematicians. With the work of Cauchy and culminating with Weierstraß, the method of infinitesimals was banned and replaced by early versions of ε-δ calculus.Footnote 19 Moreover, nowadays, Weierstraß’ proofs are also not accepted as rigorous.

Another example is the Italian school of algebraic geometry whose work straddled the end of the 19th century and the first three decades of the 20th century. It produced many fruitful ideas, but it was too speculative for its methods to be acceptable. Only with the later work of Oscar Zariski, a student of Guido Castelnuovo, were its intuitive ideas given an acceptable form.Footnote 20

This is the past, one might think: now we have a clear definition of rigor, and the criteria involved in accepting rigorous proofs cannot lead us astray anymore. But is this really so? Unfortunately not. There are always developments requiring new criteria, and new discoveries challenging current ones. For example, in the recent and fast-growing sub-field of symplectic geometry, the criteria of acceptability are a matter of controversy between the experts. As Kevin Hartnett (2017) explains:

Research developed rapidly, but without the shared background knowledge typically found in mature areas of mathematics. This made it hard for mathematicians to tell when new results were completely correct.

Dusa McDuff and Katrin Wehrheim, two prominent mathematicians, started questioning published results and criticizing methodological choices of Kenji Fukaya and collaborators.Footnote 21

We have not removed criticisms of the arguments in [FO, FOOO],Footnote 22 since for many years these have been the only available references on this topic (and still are the only published sources). So we think it important to give a coherent account of the construction in the simplest possible case, showing where arguments have been lacking and how one might hope to fill them. (McDuff and Wehrheim 2015, p. 169)

The criticized party disagrees, maintaining that the charges are unfair. Hartnett summarizes the gist of the discussion:

Wehrheim and McDuff would raise questions about Fukaya’s work. Fukaya and his collaborators would then write long, detailed answers. Whether those answers were satisfying depended on who was reading them. From Fukaya’s perspective, his work on Kuranishi structures was complete and correct from the start. “In a math paper you cannot write everything, and in my opinion this 1996 paper contained a usual amount of detail. I don’t think there was anything missing,” he said. Others disagree. (Hartnett 2017, emphasis added)

It could very well be that in the not so distant future, formal verification will be required for proofs to be accepted as rigorous, at least for some areas of mathematics or for some types of proofs.Footnote 23 This was the vision of Vladimir Voevodsky, who realized that part of what is now acceptable as rigorous mathematics should perhaps not be acceptable after all (sometimes is even straightforwardly wrong)—starting with his results leading to his Fields medal in 2002.Footnote 24

Criteria of acceptability for rigorous proofs are not carved in stone (as the criterion for rigor might be), but they are indexed to a mathematical community in a particular time. Often, such criteria are stable for fields in which the background knowledge has solidified and more volatile for recent areas. They reliably—but not perfectly—track rigorous proofs. There is a trade-off between reliability and effort. We can always perform additional checks, but only at the cost of hindering the progress of mathematics. When mathematicians can simplify their lives by using cognitive abilities to perform high-level mathematical inferences reliably, they will just do so. Shortcuts can be dangerous, but they are indispensable for mathematical growth.

1.3 Topological Intuition

One type of shortcut frequently chosen in topology is made possible by spatio-temporal and kinesthetic intuition. Differently than flatlanders, when inquiring in low-dimensional topology we can appeal to our intuition of three-dimensional space:Footnote 25

If we were two-dimensional creatures, then proving this theorem [Alexander’s theorem] would be another story entirely and would require much more formal argument. (Jones 1998, p. 212)

Giardino and I started with the observation that some proofs accepted as rigorous in topology include inferential steps appealing to intuition. Not all appeals to intuition are, however, admissible. Hans Hahn’s (1931/1980) lecture Crisis in Intuition offers plenty of cautionary tales. Our goal was exactly to explain when and why intuition can be reliable and thus acceptable in specific contexts. Intuition can be invoked in rigorous proofs only if it is shared by mathematicians with the appropriate training and is systematically linked to precise mathematical concepts and operations.

When high-level inferences rely on idiosyncratic appeals of intuition, they are not acceptable as rigorous. The work of William Thurston the Hyperbolization TheoremFootnote 26 and much before the works of Henri Poincaré that triggered the Bourbaki projectFootnote 27 are well-documented examples.

A specific type of intuition at play in topological proofs is what Giardino and I labeled enhanced manipulative imagination in a study of knot diagrams (DG14). This intuition develops via ordinary human interaction with concrete objects as well as specific training; it is then redeployed in mathematics when practitioners imagine spatial transformations and perform manipulations on diagrams that are easily interpreted as well-defined mathematical operations (e.g., ambient isotopies). The use of enhanced manipulative imagination is subject to specific norms. Referring to a topological proof showing the equivalence of different presentations of a 3-manifold, we wrote:

In order to understand the proof and check its validity, practitioners have to use their ability to imagine topological transformations correctly. For example, they have to interpret the transitions between the pictures as homeomorphisms. (DG15, p. 331, emphasis added)

Another example has to do with how topologists imagine manipulating knot diagrams in a way that is accurately cashed out in terms of Reidemeister moves (DG14). We concluded that the processes for checking the correctness of proofs

are context-dependent processes that in low-dimensional topology rely on our manipulative imagination and more generally on our intuition of three-dimensional space, duly trained according to the specific practice. (DG15, p. 333, emphasis added)

Although intuition is linked by training to precise mathematical operations, it is not in virtue of this fact alone dispensable. In practice, arguments relying on diagrams and intuition are cognitively manageable and are reliable alternatives to arguments that do not rely on them—I will later suggest that in some cases eliminating the appeal to intuition in a proof would relevantly change the proof at issue.

Mathematicians are certainly convinced that they can link widely acceptable visualizations to precise mathematical concepts, which in turn are convertible (with the help of the patient logician) into formal arguments. Seldom is this actually tested in practice, however. And even with the proof assistant technology available today, formalizing a proof remains a “major undertaking,” (Hales, p. 1372) often unfeasible in practice.Footnote 28 Thanks to their experience, mathematicians are good at picking out and relying on only those visualizations that are in fact correct (and thus convertible into formal steps). They are however indifferent with respect to the various choices that have to be taken for converting their proofs into formal ones.Footnote 29

In (DG16), a paper presenting a case study in braid theory, we intended neither to argue that proofs cannot be formalized at all, nor to advocate social constructivism for mathematics; rather, we were analyzing the criteria of acceptability for proofs (and for rigorous proofs) by focusing on how proofs are situated in a specific socio-cognitive setting. This approach was made clearer in (DG15), a previous, but deeply connected paper:Footnote 30

Of course, it is still possible to translate visual arguments into formal ones. Nevertheless […] the formal version might be complete, but it remains inadequate. As a consequence, once we accept the existence of arguments structured in sequences of pictures, we realize that although there might be good reasons to reduce the reasoning to formal statements, this move would add nothing to the topological reasoning behind the argument. (p. 334, emphasis added)

Our objective was precisely to analyze such topological reasoning and determine when it is acceptable in proofs and what the social, cognitive, and material conditions are that make this possible. Tatton-Brown takes issue with (DG16), starting with the choice of case study. One apt consideration is that we interpreted Alexander’s result as it is read today, rather than in 1923, when the criteria of acceptability for knot and braid theory were still fluid.Footnote 31 Here, I aim to respond to the two main charges of (TB19). I will show that our views are consistent with most of Tatton-Brown’s claims, but not with what he attributes to us. The two main charges are:

(REL) RELATIVISM Mathematicians’ agreement regarding the correctness of proofs is determined solely by social factors.

De Toffoli and Giardino write as though each community’s ways of reasoning are automatically accurate about the community’s chosen subject matter (p. 10)

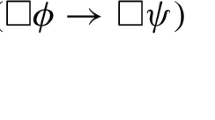

(AFT) ANTI-FORMALIZABILITY THESIS It is not the case that all rigorous mathematical proofs can be formalized (in some sense).

They aim to use a case study to attack a version of the standard view of proof […] in which the correctness of mathematical proofs is connected with their formalizability in some sense (p. 1)

In (DG16) we did not address the problem of how a proof can be connected with a formal proof, but this does not by itself imply that we endorsed AFT. Our attitude was similar to the one emerging from the Princeton Companion to Mathematics (Gowers 2008).Footnote 32 Editor Timothy Gowers, starts by quoting a passage of Bertrand Russell’s The Principles of Mathematics in which pure mathematics is defined in strictly logical terms as

Pure Mathematics is the class of all propositions of the form p implies q, where p and q are propositions containing one or more variables, the same in the two propositions, and neither p nor q contains any constants except logical constants. And logical constants are all notions definable in terms of the following: Implication, the relation of a term to a class of which it is a member, the notion of such that, and any such further notions as may be involved in the general notion of propositions of the above form. In addition to these, mathematics uses a notion which is not a constituent of the propositions which it considers, namely the notion of truth. (p. ix)

Gowers goes on to explain that the Princeton Companion “is about everything that Russell’s definition leaves out.” Again, this does not imply AFT. It rather threatens a sharper version of it, in which the connection between a proof and a formal proof is substantially defined, and the notion of formal proof is specified. In our work, we started with the assumptions, shared with Gowers, that (1) logical foundations are not among the concerns of mainstream mathematics and (2) mathematics was practiced well before the introduction of ZFC and featured proofs that were correct even without reference to formal systems.

As stated in (TB19), the “standard view” is problematic from the foundational point of view. What formal system are we talking about?Footnote 33 What does it mean to be formalizable (I will address this in Sect. 4)? Moreover, there are principled philosophical arguments against the theoretical commitments of every formal system, even the widely accepted ZFC. For instance, the fact that mathematicians tend to prefer constructive proofs is a pushback against the law of excluded middle. Another example is the still controversial Axiom of Choice.

Tatton-Brown’s charges are raised in connection with what he takes to be a misinterpretation of Alexander’s theorem connecting knots to braids in (DG16). In the next section, I address a few crucial mathematical passages to clarify my own perspective as one of the two co-authors of the previous studies.Footnote 34 This single-authored response will not employ the rhetoric of (DG16), nor answer Tatton-Brown’s related concerns.Footnote 35 What I hope to do in the present context is respond to the two worries that he raises and conclude with some general remarks on the role of intuition in mathematics and a positive proposal regarding how to recover reasonable versions of REL and AFT.

2 Alexander’s Theorem

Alexander’s theorem states that every link (i.e., a disjoint union of knots) is the closure of a braid.Footnote 36 The crux of the proof is to show that any knot is equivalent to a reelFootnote 37—that is, a knot that progresses monotonically around an axis. This was first proven by the Princeton mathematician James W. Alexander (1923), to whom the theorem is now attributed.

Given the definition of braid and its closure, it is obvious that every reel is the closure of a braid. However, since (DG16) was addressed to non-mathematicians and this initial step already involves visualization, we took the time to explain it (DG16, p. 40). Our exposition did not aim at mathematical or historical precision but was rather meant to be suggestive of peculiar techniques used in topology. What we presented was just a sketch, and not a full-fledged rigorous proof. Moreover, we departed from Alexander’s original proof since instead of working with polygonal knots up to elementary transformations (Fig. 1), we also worked with smooth knots up to ambient isotopies (Fig. 2).

We chose smooth knots because they are easier to visualize (and to draw) than polygonal knots.Footnote 38 We were inspired by the informal presentation of Alexander’s theorem by Jones (1998), who also aimed at illustrating to a broad audience the importance of intuition in topology.

The main idea in Alexander’s proof is to perform certain moves on polygonal knot diagrams to modify them into diagrams of reels. These moves correspond to elementary transformations in three dimensions (that crucially do not alter the knot type), such as complicated versions of the ones shown in Fig. 1. Echoing Jones, we instead performed the vivid “throw over the shoulder” move on smooth knots. Roughly, we imagine we (our body, standing straight) are an axis—corresponding to point O in (DG16, p. 42)—and have a huge physical knot around us that we want to wind around us all in the same direction. When we identify a portion of knot that is going the wrong way, we take it and throw it over our shoulders (or under our feet) so that it turns in the right direction (DG16, p. 42).Footnote 39

This move is inspired by Alexander’s, but it diverges from it in important ways. The smooth setting introduces the delicate issue of limiting the portion of the knot to modify, which is unproblematic in the polygonal setting. Details can be filled in, but neither we nor Jones did so. Our goal was different:

I would like to illustrate [how intuition plays a role in knot theory] by giving a proof of a simple result due to Alexander […]. It was explained to me by Joan Birman within five minutes. (Jones 1994, p. 209)

As it is to be expected, in her influential monograph on braids, Birman (1974) explains a proof for such a “simple result” in far more than five minutes to her audience of specialists and addresses details that are left out even by Alexander.Footnote 40

Birman presented a rigorous proof. Jones (and we, following him) reported a sketch of a proof in a different setting to a broad audience. What about Alexander’s original proof? It falls somewhere in between. The operations are well-defined, but it is laid out in just two pages and goes quickly. Note that Alexander published it in the PNAS (Proceedings on the National Academy of Sciences of the United States of America), a journal that does not aim at publishing rigorous proofs but instead publishes quick announcements.Footnote 41 Different contexts call for different levels of detail.

2.1 Legitimate Operations

Alexander (1923) writes that his diagrammatic move is legitimate since it “obviously corresponds to an isotopic transformation of the space curve” (p. 94, emphasis added).Footnote 42 Why is this obvious? What convinces us that such diagrammatic moves are legitimate is our visualization of the spatial arrangements that makes us even understand what these moves are.

Such diagrammatic moves correspond in space to elementary transformations. These are general and more complicated versions of the particular one depicted in Fig. 1 in which segment AB is replaced by two segments, AC and BC. Tatton-Brown claims that the only obstacle to performing such moves would be to find a point C up above or down below the rest of the knot (see his Figures 4, 5, pp. 17–18):

Does such a point [C] exist? Obviously yes. As we are visualizing it, the region vertically above [a, b] is free from obstructions, so if we take c to be enormously high up then the triangle [a, b, c] will go almost straight up from the line segment [a, b], and will not hit anywhere in K[.] (emphasis added, p. 17)

But actually, if it is not already obvious that such three-dimensional moves can be performed and do not alter the knot type, and therefore project diagrammatically into legitimate operations, then the very possibility of performing a Reidemeister II moveFootnote 43 is questioned—which is not in line with actual practice.Footnote 44 In the Reidemeister II move (see Fig. 3), the two segments corresponding to the two branches in the diagram ending on points A and B could correspond to segments in space having different heights and different slopes. How do we know that point C in Fig. 3 exists? Of course, we could give some detailed explanation (similar to Tatton-Brown’s details), for instance by appealing to compactness arguments, to prove that some slope exists. However, in practice this is never required!

Visualizations of the kind that is needed to see that the local move in Fig. 3 is legitimate (that is, that it does not alter the knot type) enable us to perform clearly identifiable diagrammatic moves by exploiting our imagination of three-dimensional knots. Tatton-Brown agrees that topologists rely on visualization to understand and prove results. Our main point was that such moves are accepted by specific communities of mathematicians without further justification.

Brendan Larvor (2012) uses the notion of “permissible actions” to describe the type of high-level context-specific inferences that are acceptable in rigorous proofs. In (DG16) we used this notion to isolate those visualizations that are acceptable in topological contexts. We acknowledged that such permissible actions need to comply with constraints since they correspond to well-defined mathematical operations. However, such constraints are not necessarily stated in advance and often form an open-ended list.Footnote 45

Our account of diagrammatic moves suffers from another problem according to Tatton-Brown—namely, we

describe Alexander’s proof as based on the manipulation of concrete spatio-temporal objects which is inaccurate as Alexander’s proof is based on knots being a finite union of straight line segments, which are not concrete and have zero width. (p. 21)

But the core point of Alexander’s proof is precisely to perform diagrammatic manipulations according to “legitimate operations.” Moreover, a diagram is not a concrete object, but a representation coming with an interpretation and rules of use that are partially conventional. A concrete figure can be interpreted as a knot diagram, which is a projection of a knot into a plane—and the interpretation obliges us to disregard some of the perceptual features of the figure, such as its color, the width of the lines, and even the specific geometric shape.Footnote 46 In particular, we interpreted the perceptual segments of the figure as one-dimensional straight segments. Our point was similar to the one known to every student of Euclidean geometry: when we see that two lines intersect, what we actually perceive is a small two-dimensional region, and not a dimensionless mathematical point.

2.2 The Termination Problem

In contrast to Alexander’s terse style, Tatton-Brown’s methodical approach uncovers the structure of the proof. The problem here is not to find single permissible moves, but to show that a finite number of them is sufficient to transform the knot diagram we start with into a diagram of a reel—that is, that the process terminates. In the smooth setting we adopt, the situation is particularly delicate because, as explained above, the individual moves themselves are not precisely defined. However, even in the polygonal case, perhaps Alexander should have been more explicit about it—like Birman in her monograph.Footnote 47 It is thus misleading to characterize Alexander’s proof as “perfectly rigorous” (p. 24). I would rather say that Alexander’s proof satisfied the acceptability criteria of the time and of the journal in which it was published.

Visualizing the spatial configuration, it is possible to realize that Alexander’s procedure can be chosen so that it terminates after a finite number of moves and that we could create a smooth analog of it inspired by the throw-over-the-shoulder move. However, as Tatton-Brown shows with a counterexample, there is no guarantee that it will. Moreover, the smooth setting is trickier to formalize than the (discrete) polygonal one, and thus Tatton-Brown is right to claim that in changing the setting we are changing the proof in a relevant way.

3 Local Criteria of Acceptability and the Relativism Worry

The high-level inferences (diagrammatic or not) that are acceptable in one sub-field might not be acceptable in another.Footnote 48 Does this imply that “mathematicians [are] split into distinct communities, each […] with their own standards of correctness” (p. 10, emphasis added)? I stand by the claim that reasoning in different sub-fields can be radically different, exploiting heterogeneous representational systems enabling the use of various cognitive abilities. However, the correctness of a deductive argument is a factual matter. What changes with context are not the “standard of correctness,” but the criteria of acceptability. Unfortunately in (DG15) and (DG16) we used the misleading expression “local criteria of validity.” This was intended to indicate that the criteria used to detect validity, or better, correctness (‘validity’ is in fact often conceived as a technical term applicable only to formal arguments) are local.Footnote 49 The expression was inspired by Larvor (2012), who claimed that if we admit topic-specific inferential actions on different representations, such as diagrams, “the cost is that we have to abandon the hope of establishing a general test for validity” (p. 723).

Although to understand the pulse of mathematical practice it is useful to think in terms of local factors, I take issue with the idea that “standards of correctness” are “standards which each individual community defines, without any further justification being supplied or called for” (p. 10). In the literature, similar claims are not unheard of. For example, Ruben Hersh writes that “what mathematicians at large sanction and accept is correct” (Hersh 2014, p. 149). I disagree with such a claim, as does Tatton-Brown. In my view, there is a relation between correctness and acceptance; but this is not a constitutive relation, it rather arises from the reliability of mathematicians to track correct proofs.Footnote 50

Tatton-Brown’s charge of REL is implausible for a number of reasons. Two were explicit in the very work that Tatton-Brown is discussing: (1) because different mathematical fields interact—a central point of the case study presented (DG16) where we discussed the relation between topology and abstract algebra and (2) because the moves on the diagrams are allowed only if they can be interpreted as specific mathematical operations (e.g., ambient isotopies).Footnote 51

4 The Role of Visualization and the Formalizability Worry

Different proofs can be given for the same proposition. Moreover, the same proof can be presented in different ways. Proofs can be defined as equivalence classes of proof presentations; but how do we choose the right equivalence relation? That is, how are proofs individuated? This question admits of different answers in different contexts.Footnote 52 As Marcus Giaquinto (2008) explains:

There is no context-invariant answer to this, and even within a context there may be some indeterminacy. Usually mathematicians are happy to regard two presentations as presenting the same proof if the central idea is the same in both cases. But if one’s main concern is with what is involved in thinking through a proof, its central idea is not enough to individuate it: the overall structure, the sequence of steps and perhaps other factors affecting the cognitive processes involved will be relevant. (p. 24)

For example, is Euclid’s proof that there are infinitely many primes a proof by contradiction? If we want to be historically accurate and able to detect the fine logical structure of proofs, then we should answer negatively. It is not a proof by contradiction, but it contains one. However, if we are interested in the ‘main idea’ of the proof, then we can say that it is equivalent to a proof by contradiction. If we individuate proofs in a way that is sensitive to the particular reasoning that is needed to go through them and to regard them as correct, as we did in previous work, then converting a topological appeal to intuition, such as Alexander’s, into a formal proof would change it, since the properly topological reasoning would be lost:

the reasoning involved in this specific proof cannot be identified exclusively with propositional reasoning, and even less with formal reasoning. (DG16, p. 43)

This statement does not imply that the proof cannot be formalized at all. Alexander’s proof consists of performing transformations on an arbitrary knot diagram. Note that a specific diagram is a diagram of a particular knot, and thus the proof cannot just consist in transforming it via permissible moves, but must explain how similar moves would be applicable to all other relevant diagrams.Footnote 53 In fact, the “legitimate operations” of Alexander’s algorithm can be defined without specifying the whole diagram.Footnote 54

In general, any proof relying on diagrams could be converted into a combinatorial proof; for the latter it suffices to encode a knot diagram by a sequence or matrix of numbers, and then describe the diagrams in these terms. This is not far from Alexander’s own approach to knot theory. He belonged to a modernist trend among mathematicians that opted for more formal styles—a combinatorial approach—compared to the previous generation of topologists.Footnote 55

In (DG16) we aimed at understanding the nature of and the social, cognitive, and material conditions for topological understanding. Our problem was not whether a proof could in principle be formalized, or whether a diagrammatic proof could be converted into a diagram-free proof. Of course both are possible, but

phrasing the problem of diagrams in proofs in terms of the possibility of eliminating them by translating the proof into a sequence of symbols misses the core of the issue. […] the fundamental problem is not whether a proof can contain diagrams or not, but whether it carries […] mathematical knowledge. (De Toffoli 2017, p. 183, emphasis added)

Even if we can provide a formalization, we should also explain how an argument is accepted in practice without appealing to any formalizations. After all, as Jones (1998) claims: “One is expected to ‘see’ results in this field, and once the result […] has been ‘seen,’ it requires no further discussion” (p. 212). One way to explain this is to understand which types of visualizations can be used reliably. However, as mentioned above, our emphasis on visualization is not in the spirit of Alexander’s formalist tendencies.

In general, it is possible, but possibly very difficult, to formalize proofs relying on intuition. With respect to Alexander’s result, Jones himself does not question the possibility of formalizing even his vague argument in the smooth setting, but he thinks that doing so “would be a nightmare” (1998, p. 212).

5 Conclusion

I have argued that the analysis put forward by Giardino and myself in earlier work did not lead us to endorse either a form of relativism (REL) or an anti-formalizability thesis (AFT), although it did include some ambiguous passages that lent themselves to readings along these lines. I have explained not only why we are not committed to these two theses, but also how more moderate versions of them might be made plausible. Here are the two amendments that I propose:

REL* The standards of acceptability of (rigorous) proofs depend on the shared background of the audience to whom the proof is addressed.

AFT* There is a reasonable way to individuate proofs such that if topological proofs involving visualization are converted into formal proofs, they are thereby transformed into a different proof.Footnote 56

Without visualizations the understanding of many topological proofs would be compromised, if not completely lost. Such visualizations are however not naïve or without constraints, but enabled by a specific form of intuition developed with specific training and applied to specific domains.

The fact that criteria of acceptability are local means that the correctness of proofs can be evaluated without direct reference to formal proofs. This does not contradict the claim that formalizations of some kind are in principle possible or that partial and ad hoc formalizations would theoretically help solve instances of mathematical disagreement.Footnote 57 It simply shows that formalizations are generally not useful for understanding how the actual practice of mathematics can produce stable results known by mathematicians via proofs involving intuition.

Far from denying the importance of logic in understanding mathematics, our previous work started with the assumption that logical analysis is not enough. We set ourselves among those philosophers who, to use José Ferreirós (2016) words,

have acknowledged the limits of logical analysis in understanding mathematical knowledge, and they have set out to introduce new types of theoretical discourse that complements or supplements logic. (p. 25)

It should be clear by now that my previous and current work is, to paraphrase Tim Gowers, about everything that formalization is not.

Notes

As it is customary, I use the term ‘proof’ as a success term. If not specified otherwise, I will use the term ‘proof’ to mean ‘informal proof.’

I am here focusing on classical logic and leaving aside issues raised by rejection of the law of excluded middle or of other non-classical logical choices.

The issue is however by no means settled. For example, the acceptance of large cardinal axioms is still a matter of debate. Moreover, nowadays category theory and homotopy type theory offer alternative foundations.

From now referred to as (TB19).

From now referred to as (DG16).

Note that not all mathematicians are reasonable. Some did actually try the impossible and proposed arguments doomed to be refuted. (TB19, p. 8) also mentions independence results. Thanks to John Burgess for conversations about this issue.

See Ferreirós (2016, Ch. 2) for a discussion of mathematical communities.

Translated by Cornford.

See Hamami (2019) for a meticulous analysis of mathematical rigor and the historical underpinning of the present account.

De Toffoli and Giardino (2014, 2015, 2016) are here labelled (DG14), (DG15), and (DG16). After publishing a paper on knot diagrams in Erkenntnis in 2014, Giardino and I wrote an initial draft on the topic of low-dimensional topology for two conferences which later was developed and split into two papers, then published in 2015 and 2016 as chapters in the two conference proceedings.

Although they could be—as in formal diagrammatic proofs.

In a similar vein, Jeremy Avigad (2020) proposes an account of how traditional proofs are reliable, tracking existence of formal proofs.

As one referee pointed out, part of the disagreement with Tatton-Brown derives from the fact that we talked past each other.

Von Neumann’s density theorem is the example that Jones (1998) uses to contrast Alexander’s theorem.

I am putting aside the (interesting) phenomenon of computer-assisted proofs or proofs requiring collaborations to focus on the phenomenon of proof that can be grasped by a single mathematician.

Don Fallis (2003) proposes a taxonomy and analysis of different gaps in mathematical proofs.

This is in line with Tatton-Brown. However, he takes the criteria of acceptability to be the criteria of rigor; this is where our projects take different directions.

Still, not by sheer chance infinitesimal worked pretty well in practice and in the right hands and this has been partially revindicated by Robinson’s non-standard analysis.

See Parikh (2009).

Thanks to Kevin Buzzard for discussions on the shortcomings of contemporary standard of acceptability for rigorous proofs and to Patrick Massot for pointing me to this specific controversy.

These are papers by Fukaya and co-authors published in main mathematical journals: K. Fukaya and K. Ono, Arnold conjecture and Gromov–Witten invariants, Topology 38 (1999), 933–1048, and K. Fukaya, Y.-G. Oh, H. Ohta, and K. Ono, Lagrangian Intersection Theory, Anomaly and Obstruction, Parts I and II, AMS/IP Studies in Advanced Mathematics, Amer. Math. Soc. and Internat. Press.

See Avigad and Harrison (2014). Computer assisted proofs are among the first proof candidates for formal verification. Great achievements have already been obtained; among the most pathbreaking are the formalizations of the Four-Color Theorem by Georges Gonthier in 2004 and of the proof of the Kepler Conjecture by Tom Hales and his collaborators of the Flyspek project in 2017.

After ten years and various vicissitudes, he found a mistake in his previous work.

I use the term ‘intuition’ in sense of the German word ‘Anschauung.’ There are other types of intuition that play a role in mathematics. For example, mathematical intuition á la Gödel is what leads us to the justification of the axioms of set theory. Thanks to one of the anonymous referees for this point.

“A grand insight delivered with beautiful but insufficient hints, the proof was never fully published” (Jaffe and Quinn 1993, p. 7).

“Poincaré claimed too much, proved too little, and his “reckless” methods could not be imitated. […] Dieudonné suggests that casual reasoning is a childhood disease of mathematical areas and says, “…after 1910… uniform standards of what constitutes a correct proof became universally accepted in topology… this standard has remained unchanged ever since.” But in fact, there have been many further episodes.” (Jaffe and Quinn 1993, p. 8).

For example, Hales estimated that the process of formalizing his proof of the Kepler Conjecture in HOL Light took 20 working years (distributed across many people) to complete.

This is in line with Burgess’ (2015) claim that mathematicians are indifferent to foundational choices.

As explained in footnote 11, there is continuity between (DG15) and (DG16).

For instance, in the second quarter of the 20th century, Artin, the father of braid theory, shows changes of mind with respect to his own methodology, particularly with the use of diagrams. See Friedman (2019) for a detailed historical account of the issue.

See Kennedy (2009) for a review emphasizing this point.

Of course, if any formal system is allowed, then this thesis is utterly trivial since we can always add more axioms.

For reasons of space, I cannot go into the mathematical details, but I limit myself to addressing some specific critiques.

See for example (DG16, p. 27). The strong criticism of a logic-based approach to mathematics was in line with early work in the philosophy of mathematical practice, and in particular with Lakatos’ (1963, p. X) discussion of the dangers of a formalist philosophy. This was a starting point in Giardino’s doctoral research.

See (DG16) and (TB19) for the details.

Alexander does not use such terminology, which is adopted in Dalvit (2011).

Elsewhere, Alexander writes: “In the figures, we shall picture a knot by a smooth curve rather than by a polygon. A purist may think of the curve as a polygon consisting of so many tiny sides that it gives an impression of smoothness.” (Alexander 1928, 276). Admittedly, we could have taken this approach as well.

Jones’ illustration reported in (DG16, p. 42) is bad since it depicts the very simple case in which no extra crossings are created by performing the move (and seems to suggest that all cases are that simple–but they are not).

If one counts the setting up of preliminary results, she takes six pages (pp. 39–44), but she proves a slightly more general result.

Thanks to Michael Barany for conversation on the policies of the PNAS at the time of Alexander’s publication.

Even if Alexander’s paper does not include diagrams of knots, the author asks the reader to imagine them.

The Reidemeister theorem establishes that all transformations on knot diagrams that leave invariant the represented knot type can be decomposed into a sequence of three simple local diagrammatic moves. In three dimensions, the second Reidemeister move consists in bringing a strand under or above another strand. Diagrammatically this leads to the introduction of two new crossings, as in Fig. 3.

Thanks to John Sullivan for this point.

This goes against Tatton-Brown’s (2019, p. 11) claim that in our framework the permissible actions form a “fixed list.”

See (DG14) for the case of knot diagrams and (De Toffoli 2019, Ch. 2–3) for general discussion of how only a subset of all perceptual features of a figure can be relevant for the corresponding diagram.

Birman is very clear in her own version of the proof and bypasses the problem completely, since her operation is done in parallel rather than sequentially.

In (DG16) we used what Thurston calls “shared mental model[s]” (1994, p. 174) to characterize the context formed by a particular sub-field. However, this expression is infelicitous because mental models are generally considered as subjective representations (and therefore not “shared”).

This is clearer in (DG15).

Jeremy Avigad (2020) addresses exactly the question of where the reliability of mathematicians comes from. In his terminology, he wants to understand how the robustness of informal mathematical proofs (which is a condition for us to get knowledge from them) can be squared with the fragility of formal proofs, in which a single tiny error invalidates the whole argument.

See also (De Toffoli 2017).

See (De Toffoli 2019, Ch. 1).

Clearly, this is the same generality problem that arises in the case of Euclidean diagrams.

Thanks to one of the referees for suggesting that I discuss this point.

I plan to say more about this in future work.

This is suggested in (TB19). However, in practice, the possibility of resolving disagreement in this way is often impracticable. This is exemplified by the persistent disagreement with respect to the abc Conjecture. The Japanese mathematician Shinichi Mochizuki put forward a putative proof in 2012 that has been challenged by the Fields medalist Peter Scholze and remains controversial to this day.

References

Alexander, J. (1923). A lemma on systems of knotted curves. Proceedings of the National Academy of Sciences of the United States of America, 9(3), 93.

Alexander, J. (1928). Topological invariants of knots and links. Transactions of the American Mathematical Society, 30(2), 275–306.

Ashton, Z. (2020). Audience role in mathematical proof development. Synthese. Audience role in mathematical proof development.

Avigad, J. (2020). Reliability of mathematical inference. Synthese. https://doi.org/10.1007/s11229-019-02524-y.

Avigad, J., & Harrison, J. (2014). Formally verified mathematics. Communications of the ACM, 57(4), 66–75.

Azzouni, J. (2004). The derivation-indicator view of mathematical practice. Philosophia Mathematica, 12(3), 81–105.

Birman, J. (1974). Braids, links, and mapping class groups (AM-82). Princeton: Princeton University Press.

Dalvit, E. (2011). New proposals for the popularization of braid theory. Trento: Università degli Studi di Trento.

De Toffoli, S. (2017). `Chasing’ the diagram: The use of visualizations in algebraic reasoning. The Review of Symbolic Logic, 10(1), 158–186.

De Toffoli, S. (2019). Epistemic roles of mathematical diagrams. Stanford: Stanford University.

De Toffoli, S., & Giardino, V. (2014). Roles and forms of diagrams in knot theory. Erkenntnis, 79(3), 829–842.

De Toffoli, S., & Giardino, V. (2015). An inquiry into the practice of proving in low-dimensional topology. In G. Lolli, M. Panza, & G. Venturi (Eds.), From logic to practice (pp. 315–336). Berlin: Springer.

De Toffoli, S., & Giardino, V. (2016). Envisioning transformations—the practice of topology. In B. Larvor (Ed.), Mathematical cultures (pp. 25–50). Berlin: Springer.

Epple, M. (2004). Knot invariants in Vienna and Princeton during the 1920s: Epistemic configurations of mathematical research. Science in Context, 17(1/2), 131–164.

Fallis, D. (2003). Intentional gaps in mathematical proofs. Synthese, 134, 45–69.

Ferreirós, J. (2016). Mathematical knowledge and the interplay of practices. Princeton: Princeton University Press.

Friedman, M. (2019). Mathematical formalization and diagrammatic reasoning: The case study of the braid group between 1925 and 1950. British Journal for the History of Mathematics, 34(1), 43–59. https://doi.org/10.1080/17498430.2018.1533298.

Giaquinto, M. (2008). Visualizing in mathematics. In P. Mancosu (Ed.), The philosophy of mathematical practice (pp. 22–42). Oxford: Oxford University Press.

Gowers, T. (Ed.). (2008). The Princeton companion to mathematics (3rd ed.). Princeton: Princeton University Press.

Hahn, H. (1980). The crisis in intuition. In Empiricism, Logic and Mathematics (pp. 73–102). Springer, Berlin.

Hales, T. C. (2008). Formal proofs. Notices of the American Mathematical Society, 55(11), 1370–1380.

Hamami, Y. (2019). Mathematical rigor and proof. The Review of Symbolic Logic, 1, 1. https://doi.org/10.1017/S1755020319000443.

Hartnett, K. (2017). A Fight to Fix Geometry’s Foundations. Quanta Magazine.

Hersh, R. (2014). Experiencing mathematics: What do we do, When we do mathematics?. Providence: American Mathematical Society.

Jaffe, A., & Quinn, F. (1993). Theoretical mathematics: Toward a cultural synthesis of mathematics and theoretical physics. Bulletin of the American Mathematical Society, 29(1), 1–13.

Jones, V. F. R. (1998). A credo of sorts. In H. G. Dales & G. Oliveri (Eds.), Truth in mathematics (pp. 203–214). Oxford: Oxford University Press.

Kennedy, J. (2009). Review: Princeton companion to mathematics. Bulletin of Symbolic Logic, 15(4), 431–436.

Lakatos, I. (1963). Proofs and refutations. Cambridge: Cambridge University Press.

Leitgeb, H. (2009). On formal and informal provability. In Ø. Linnebo & O. Bueno (Eds.), New waves in philosophy of mathematics (pp. 263–299). London: Palgrave Macmillan.

McDuff, D., & Wehrheim, K. (2015). Kuranishi atlases with trivial isotropy: The 2013 state of affairs. arXiv:1208.1340 [math].

Parikh, C. (2009). The Unreal Life of Oscar Zariski. Berlin: Springer.

Rav, Y. (1999). Why do we prove theorems? Philosophia Mathematica, 7(3), 5–41.

Steiner, M. (1975). Mathematical knowledge. Ithaca: Cornell University Press.

Tanswell, F. (2015). A problem with the dependence of informal proofs on formal proofs. Philosophia Mathematica, 23(3), 295–310.

Tatton-Brown, O. (2019). Rigor and intuition. Erkenntnis. https://doi.org/10.1007/s10670-019-00180-9.

Acknowledgements

Special thanks to Juliette Kennedy for her extensive and valuable feedback. Thanks are also due to Jeremy Avigad, John Burgess, Andrew Chignell, Tom Ryckman, and John Sullivan their suggestions. I wish to thank my previous co-author, Valeria Giardino for long conversations on the topic. Lastly, I wish to thank the two anonymous referees for their valuable comments, which helped me to greatly improve this paper.

Funding

The funding was provided by Princeton University.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

De Toffoli, S. Reconciling Rigor and Intuition. Erkenn 86, 1783–1802 (2021). https://doi.org/10.1007/s10670-020-00280-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10670-020-00280-x