Abstract

Research has demonstrated that in controlled experiments in which small groups are tutored by researchers, reading-strategy instruction is highly effective in fostering reading comprehension (Palincsar & Brown, Cognition and Instruction, 1(2), 117–175, 1984). It is unclear, however, whether reading-strategy interventions are equally effective in whole-classroom situations in which the teacher is the sole instructor for the whole class. This meta-analysis focuses on the effects of reading-strategy interventions in whole-classroom settings. Results of studies on the effectiveness of reading-strategy interventions in whole-classroom settings were summarized (Nstudies = 52, K = 125) to determine the overall effects on reading comprehension and strategic ability. In addition, moderator effects of intervention, study, and student characteristics were explored. The analysis demonstrated a very small effect on reading comprehension (Cohen’s d = .186) for standardized tests and a small effect (Cohen’s d = .431) on researcher-developed reading comprehension tests. A medium overall effect was found for strategic ability (Cohen’s d = .786). Intervention effects tended to be lower for studies that did not control for the hierarchical structure of the data (i.e., multilevel analyses). For interventions in which “setting reading goals” was part of the reading-strategy package, effects tended to be larger. In addition, effects were larger for interventions in which the trainer was the researcher as opposed to teachers, and effect sizes tended to be larger for studies conducted in grades 6–8. Implications of these findings for future research and educational practice are discussed.

Introduction

Many students struggle with reading comprehension (e.g., Organization for Economic Co-operation and Development [OECD] 2014). Since reading comprehension is a fundamental skill in all school subjects, problems with this skill have serious implications for students’ educational success and, consequently, for their later societal careers. From the literature, it is known that students who are struggling readers have problems reading strategically (Paris et al. 1983). Good readers monitor their understanding of the text, while making use of different reading strategies such as predicting, activating prior knowledge, summarizing during reading, question generating, and clarifying (e.g., Palincsar and Brown 1984). Therefore, interventions aimed at fostering reading comprehension in low achievers are often based (or focused) on this type of reading strategies (Pressley and Afflerbach 1995).

Many studies have demonstrated positive effects of reading strategy interventions on reading comprehension, and previous meta-analyses established that the effects of these interventions are quite large (e.g., Rosenshine and Meister 1994; Sencibaugh 2007; Swanson 1999). However, many studies have been conducted in controlled settings in which experimenters are instructors (as opposed to teachers) and in which instruction is given to small groups of students (as opposed to classrooms in which multiple groups of students work simultaneously). Therefore, it is unclear whether reading-strategy interventions are as effective in whole-classroom settings as they are in more controlled settings (Droop et al. 2016). This is an important gap in the current research base, considering that reading comprehension strategies have found their way into curriculum materials in the last decades.

This meta-analysis is carried out to provide more insight into the effects of reading-strategy interventions on reading comprehension in whole-classroom settings. In addition, it explores moderator effects of intervention-, study-design-, and student characteristics.

Teaching Reading Strategies and Didactic Principles

Since the 1980s, and after Durkin’s study (1978) demonstrating that comprehension instruction was virtually non-existent in elementary classrooms, research into reading comprehension instruction through the use of reading strategies has increased rapidly (Duke and Pearson 2002). The underlying idea is that reading comprehension is a complex process in which the reader interacts with the text to construct a mental representation of the text, or a situation model (Kintsch 1988, 1998). Hence, if readers understand how they can use comprehension skills as they read, their comprehension will be stimulated.

A reading strategy is a mental tool a reader uses on purpose to monitor, repair, or bolster comprehension (Afflerbach and Cho 2009). The use of reading strategies is a deliberate and goal-directed attempt to construct meaning of text (Afflerbach et al. 2008), and as such, can refer to both metacognitive and cognitive strategies that aid the process of reading (Dole et al. 2009). While cognitive strategies are used to improve cognitive performance, metacognitive strategies are used to monitor and evaluate the process of problem solving. Thus, metacognition is used to regulate one’s cognitive activities (Veenman et al. 2006). During reading, one can use metacognitive strategies such as monitoring whether one can understand the text. If comprehension fails (metacognition), one must choose an appropriate cognitive strategy to repair comprehension.

Researchers have suggested many different strategies (Pressley and Afflerbach 1995). They may involve an awareness of reading goals, the activation of relevant background knowledge, the allocation of attention to major content while ignoring irrelevant details, the evaluation of the validity of text content, comprehension monitoring, visualizing, summarizing, self-questioning and making and testing interpretations, predictions, and drawing conclusions (Duke et al. 2011; Palincsar and Brown 1984).

There is a variety of approaches directed at instructing reading strategies to foster reading comprehension relevant to our study. For example, one of the approaches is Reciprocal Teaching (Palincsar and Brown 1984; Palincsar et al. 1987), which was influenced by Vygotskian theories of learning and development (Vygotsky 1978). Reciprocal teaching consists of a set of three principles: (a) teaching comprehension-fostering reading strategies; (b) expert modeling, scaffolding and fading; and (c) students taking turns in practicing reading strategies and discussing with other students. The term “reciprocal” refers to the practice of students and teacher alternating the role of “expert”, as students become increasingly skilled. In the whole-classroom approach, the role of expert in leading the group discussion is assigned to students in the group, replacing the adult tutor. Otherwise, the three principles mentioned above remain the same when reciprocal teaching (RT) is transferred to whole-classroom settings.

Another approach is called Collaborative Strategic Reading (CSR). CSR is heavily influenced by Reciprocal Teaching, as it underscores the importance of strategy use in small groups. In CSR, the students in groups have more differentiated roles (leader, clunk expert, gist pro) than in reciprocal teaching and there is more attention to whole-class instruction (Vaughn et al. 2013). In addition, there is the approach called Concept-Oriented Reading Instruction (CORI), in which more emphasis is placed on motivational engagement support provided by the teacher. Central to CORI is the construct of reading engagement (Guthrie et al. 2007). Engaged readers are internally motivated to read, and CORI tries to increase students’ “motivational attributes”, which include intrinsic motivation, self-efficacy, social disposition for reading, and mastery goals for reading. It does so, for example, by providing choice in reading materials to increase students’ intrinsic motivation, emphasizing the importance of reading, supporting competence, and stimulating collaboration (Guthrie and Klauda 2014). Other approaches emphasize self-regulatory strategies (for example, Mason et al. 2013 and Jitendra et al. 2000), while Durukan (2011) integrates reading-strategy instruction with writing strategies. Although these approaches differ, they also have important similarities. The most important similarities are the use of whole-classroom strategy instruction, the modeling of strategies, and students practicing in small groups.

Interventions using reading strategies according to the above-mentioned approaches do not always appear to be successful in improving reading comprehension (De Corte et al. 2001; Edmonds et al. 2009; Fogarty et al. 2014; McKeown et al. 2009; Simmons et al. 2014; Vaughn et al. 2013). The complexity of the didactic principles used in these interventions, which combine strategy instruction, modeling and guided group work, may explain why it can be difficult to achieve improvements in reading comprehension in whole-classroom situations. In such situations, it is hard to maintain implementation quality of the intervention, given that one instructor (teacher or researcher) has to supervise several groups of students simultaneously, as opposed to small-group tutoring. This explanation is supported by a few qualitative studies that show that teachers in whole-classroom settings face problems in the implementation of interventions using the principles of strategy instruction, modeling and group work (Duffy 1993; Seymour and Osana 2003; Hacker and Tenent 2002). Teachers found it hard to induce strategic thinking in students (Duffy 1993). In addition, students showed poor application of reading strategies and poor discourse skills while collaborating (Hacker and Tenent 2002), in which case students become too distracted to form coherent representations of text content (McKeown et al. 2009). As a consequence, the teachers were hindered in changing from a teacher-centered to a student-centered approach.

Effects of Reading-Strategy Interventions: Findings from Previous Reviews and Meta-Analyses

The report of the National Reading Panel (2000) identified 16 types of interventions directed at reading comprehension, of which six were regarded to be effective. Five of these types can be defined as reading strategy interventions: (1) comprehension monitoring, (2) graphic and semantic organizers, (3) generating questions, (4) summarizing and (5) multiple strategy instruction.

In the past decades, several systematic meta-analyses on the effects of several types of interventions for fostering reading comprehension (including reading strategies) have been conducted (e.g., Berkeley et al. 2010; Edmonds et al. 2009; Slavin et al. 2009; Scammacca et al. 2015; Swanson 1999). Most of those meta-analyses are directed at a specific group of students, for example students with learning disabilities (Berkeley et al. 2010; Swanson 1999), adolescent struggling readers (Edmonds et al. 2009; Slavin et al. 2008) or elementary students (Slavin et al. 2009) and include a wide variety of interventions aimed at fostering reading comprehension. In these meta-analyses, interventions focusing specifically on reading strategies yield mixed results. Effect sizes for reading strategy interventions range from large to very small: Edmonds et al. (2009), for instance, established an average effect of d = 1.23, whereas Slavin et al. (2009) found an overall effect size of d = 0.21.

Meta-analyses that focus specifically on the effects of reading-strategy interventions have been conducted by Rosenshine and Meister (1994), Chiu (1998) and, more recently, Sencibaugh (2007). In the review by Rosenshine and Meister (1994), sixteen experimental studies of reciprocal teaching, conducted between 1984 and 1992, were summarized. The authors found an overall positive effect on reading comprehension, with a median Cohen’s effect size value (d = .32) for standardized tests and a large Cohen’s effect size value (d = .88) for researcher-developed tests.

Chiu (1998) synthesized studies that incorporate metacognitive interventions to foster reading comprehension, which involved reading strategies such as self-questioning, summarizing or inferencing. He analyzed 43 studies, ranging from second grade to college, which were conducted between 1978 and 1995. The overall effect size was larger for researcher-developed tests (d = .61) than for standardized tests (d = .24) and effect sizes were larger when researchers delivered instruction compared to teachers.

Sencibaugh (2007) focused on students with learning disabilities and analyzed 15 studies, conducted between 1985 and 2005, testing the effect of reading-strategy interventions on reading comprehension. He distinguished interventions with a focus on “auditory-language dependent” strategies (such as summarizing, self-questioning, inferencing) and “visually-dependent” strategies (such as semantic organizers or visual attention therapy). The overall effect of the former was 1.18 and of the latter 0.94. However, no separate effect sizes were reported for researcher-developed tests (n = 10) and standardized tests (n = 5).

Taken together, these meta-analyses have made relevant contributions to the growing insight into effective reading-strategy interventions. However, an important question remains: it is unclear how the above findings relate to the context of whole-classroom instruction. The overall effect sizes presented in previous meta-analyses do not make clear whether the strategy interventions are successful in such whole-classroom contexts. We believe that this is a significant omission in the research literature, because the teaching of reading strategies has become a standard part of the reading curriculum in primary and secondary education. Hence, a focused meta-analysis on the effectiveness of reading strategy instruction in whole-classroom settings is needed to shed light on whether such teaching is fruitful in regular educational practice. Based on the previous discussion, it can be hypothesized that effects of strategy instruction on reading comprehension are less convincing in whole-classroom studies than was found in previous meta-analyses.

Additionally, previous meta-analyses give rise to two questions. First is the finding that teachers are less successful in delivering strategy instruction than researchers. This can be concluded from the moderation analyses reported in Chiu (1998) and Scammacca et al. (2015). A possible explanation for this finding is provided by Seymour and Osana (2003) stating on the basis of teacher interviews that teachers found the strategies to be taught hard to understand (for example the distinction between strategies such as questioning and clarifying). In addition, Duffy (1993) reported that teachers were not familiar with the definitions of strategies to be taught.

Secondly, there is the issue of standardized tests versus researcher-developed tests. Standardized tests are reading comprehension tests that are independent of the specific objectives of the intervention and can be used nation-wide, for example the Gates-MacGinitie Reading Test and the Woodcock-Johnson Tests. Researcher-developed tests, on the other hand, are specifically designed to measure the type of reading comprehension targeted by the intervention. In previous meta-analyses, effect sizes were significantly larger for researcher-developed than for standardized tests (Chiu 1998; De Boer et al. 2014; Rosenshine and Meister 1994; Swanson 1999). Therefore, we hypothesize that this difference between the two types of tests will also appear in our analysis. This issue is relevant for educational practice, especially if the analysis focuses on whole classrooms. In that context, it is important to decide whether the teaching of reading strategies should be directed at performance on standardized reading tests or at more specific reading objectives (that can be achieved by the use of reading strategies).

The Present Study

The goal of the study is to estimate the effects of reading strategy interventions in whole-classroom settings on students’ reading comprehension and strategic abilities. In addition, we explore the moderating effects of intervention-, student-, and study-design characteristics.

In terms of intervention characteristics, we focus on type of reading strategies instructed, type of trainer (teacher vs experimenter) and type of didactic principle used (modeling, group work and scaffolding). As explained above, these variables may influence the effect size of strategy interventions. In addition, moderating effects of type of educational context (language or content area classes) are analyzed, because these contexts are quite different in nature for the application of reading strategies (general reading comprehension vs knowledge acquisition) (Guthrie and Davis 2003; De Milliano 2013). Finally, moderation effects of duration of the intervention are included, as Scammacca et al. (2015) found that shorter interventions generally result in larger effect sizes.

As reading-strategy interventions focused on different student populations, we also analyzed moderation effects of grade (3–12) and different types of readers (typical or low-achieving), as research showed that strategy interventions are most effective for low-achieving readers (Edmonds et al. 2009).

Finally, we took into account several study-design characteristics that are important in determining the validity of the studies (Cooper et al. 2009). We focused on design of the experiment, as it is documented that studies in which a quasi-experimental design is used show larger effect sizes compared to studies with a randomized design (Lipsey 2003). In addition, we checked whether the hierarchical structure of the data (students within classes within schools) was taken into account in the studies (multilevel vs not multilevel analyzed) and used this variable as a moderator. This is specifically of importance because our analysis focuses on classroom interventions and therefore multilevel analysis (separating classroom-level and student-level variance) avoids overestimation of effect-sizes (Hox 2010). Finally, the type of control condition (business-as-usual vs controlled control group) was used as a moderator. Controlled control groups refer to conditions in which the control students were given a different intervention (for example a vocabulary intervention) or one of the components of the reading strategy intervention. It is plausible that differences between experimental conditions and controlled control groups are smaller than differences between experimental conditions and business-as-usual control groups.

The following research questions are addressed:

1. What are the effects of reading strategy interventions in whole classroom settings on students’ reading comprehension measured by standardized and researcher-developed tests?
2. Which intervention-, student-, and study-design characteristics moderate the effects of reading strategy interventions?

Method

Inclusion Criteria

We chose the year 2000 as a starting point for our literature search, because from this year onwards the instruction of reading strategies started to become more and more mainstream in education (Pressley 2002). Criteria for inclusion of studies in this meta-analysis were as follows:

1. The participants were in grades 3–12.
2. The study measured the effects of reading strategy-interventions on students’ reading comprehension skills in regular classroom settings.
3. The dependent measure(s) included quantitative measures of reading comprehension.
4. The study compared an experimental group, participating in the intervention, to a control group that did not.
5. The article was written in English, but the study could have taken place in any country.
6. Pretest data were available.
7. The information provided should be sufficient for calculating effect sizes.

The following exclusion criteria were utilized:

1. The study’s treatment focuses on strategies that pertain to decoding and morphemic strategies.
2. The treatment takes place in foreign language classes (e.g., Chinese students learning English).
3. The treatment is implemented outside the classroom (e.g., one-on-one tutoring or remedial teaching).
4. The treatment takes place during a summer school.
5. The treatment is lab-based (e.g., experiments in which students are given individual instruction with a computer).
6. The treatment is a curriculum-wide program in which it does not become clear which specific reading strategies are taught and how this was done (e.g., Success-for-All).
7. The student population of the study consists mainly of students with a developmental disorder (e.g., autism or ADHD), students are deaf or hearing impaired, or students suffer from aphasia.
8. The study design is a single-subject or single-case research design.

Literature Search

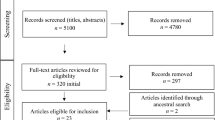

Two databases were accessed: ERIC and PsycINFO. Search queries consisted of synonyms of “reading comprehension,” “intervention,” “strategy-instruction,” and “children.” See Appendix A for the search syntax. Articles had to be peer-reviewed and written in English. The initial search was carried out in April 2012 and resulted in 2422 articles, of which 31 articles met the inclusion criteria. A search update on 11 May 2015 resulted in 1088 articles, with an additional 16 articles that met the criteria. Snowballing resulted in 5 more articles. An overview of the database search and selection is presented in Appendix B. Thus, a total of 52 articles were included in the meta-analysis.

Coding Procedures

Based on Cooper et al. (2009), we devised a coding scheme containing both theoretical and statistical elements. This scheme was piloted and refined until the first two authors reached agreement on all topics. At the start of the second round of literature search, two coders joined the team. They had completed training on how to use the coding scheme and had reached a high level of reliability.

Interrater reliability was measured at the level of the decision to include articles to be coded. From the first batch of the literature search (until 2012), twenty articles were randomly selected. The two new coders (who were unaware of which articles had thus far been included in the meta-analysis) independently checked the twenty articles and, based on the aforementioned criteria, decided which of those should be included in the meta-analysis and which should not. Overall interrater reliability between all coders was calculated as percentage agreement, which reached 86%.
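The percentage-agreement measure described above can be illustrated with a minimal sketch. The function name and the include/exclude decisions below are hypothetical and are not the coders' actual data; the sketch only shows how agreement between two coders on twenty articles would be computed.

```python
def percent_agreement(decisions_a, decisions_b):
    """Percentage of articles on which two coders made the same decision."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("Both coders must rate the same non-empty set of articles.")
    matches = sum(a == b for a, b in zip(decisions_a, decisions_b))
    return 100.0 * matches / len(decisions_a)


# Hypothetical include (True) / exclude (False) decisions for 20 articles.
coder_1 = [True] * 8 + [False] * 12
coder_2 = [True] * 6 + [False] * 14

# The coders disagree on two articles, so they agree on 18 of 20.
agreement = percent_agreement(coder_1, coder_2)
```

Percentage agreement does not correct for chance agreement; it is shown here only because it is the measure reported in the text.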

Regular meetings between the coders were held to discuss particular issues or concerns, to decide collaboratively how to interpret the aforementioned criteria in cases of doubt, and to resolve other coding problems, such as the definition of types of reading strategies.

The coding scheme included the following five elements: intervention characteristics, student characteristics, study design characteristics, measurement characteristics, and statistics.

Intervention Characteristics

Intervention characteristics pertain to who implemented the treatment (researcher/teacher/“other”), the educational context in which the study took place (language classes/content area classes/other), whether the treatment included scaffolding, modeling and/or group work to teach reading strategies, the duration of the intervention (total number of sessions, number of hours [time per session × number of sessions], number of weeks, and an “Intensity” variable in which the total number of hours was divided by the number of weeks), and which reading strategies were taught.
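The duration variables described above can be made concrete with a small sketch. The class name, field names, and example values are assumptions made for illustration; only the two formulas (hours = time per session × number of sessions, intensity = hours / weeks) come from the text.

```python
from dataclasses import dataclass


@dataclass
class Duration:
    """Duration variables for one intervention (hypothetical field names)."""
    sessions: int
    minutes_per_session: float
    weeks: float

    @property
    def total_hours(self) -> float:
        # Number of hours = time per session x number of sessions.
        return self.sessions * self.minutes_per_session / 60.0

    @property
    def intensity(self) -> float:
        # Intensity = total number of hours divided by number of weeks.
        return self.total_hours / self.weeks


# Hypothetical intervention: 24 sessions of 45 minutes spread over 12 weeks.
example = Duration(sessions=24, minutes_per_session=45, weeks=12)
```

For this hypothetical intervention, the total is 18 hours and the intensity is 1.5 hours per week.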

To guide the coding of reading strategies, we took the seminal work of Pressley and Afflerbach (1995) as a starting point in identifying and sorting the many reading strategies that are described and reported in experimental studies. These authors analyzed 40 think-aloud studies and reported strategies that were executed by good readers as they go through a text.

Reading strategies included goal-directed activities that occur before a text is read, during reading, and after the reading of the text is completed. For example, setting reading goals is a reading strategy utilized before one starts reading, while inferencing is used during reading. Summarizing has been observed both during and after reading, and therefore is listed both under “during reading” and “after reading.” In our analysis, we included all strategies that were explicitly directed at the comprehension of text on the word-, sentence- or whole-text level. Strategies that were directed at focusing students’ attention to specific ways of improving their text comprehension (e.g., clarifying word meanings, setting boundaries for monitoring comprehension or error detection), were taken together in a strategy called “explicit monitoring strategies.” Examples of such comprehension directed strategies are: clarifying the meaning of a word, error detection and fix-up strategies such as rereading. Strategies only used for decoding words, such as spelling, phonemic analysis and phonics were excluded.

Table 1 lists the strategies that we coded with the accompanying studies. For each reading strategy, we coded whether the reading strategy was taught (1), or not (0).

Student Characteristics

With regard to student characteristics, we wanted to be able to differentiate among student populations. Therefore, we coded the grade(s) in which the study took place and type of student (typical students/learning disabled/low-achieving or struggling readers).

Study-Design Characteristics

As for study design characteristics, we coded the design of the study (randomized, quasi-experimental, matched, and “other”), whether multilevel analysis was used, and type of control group (business as usual or controlled).

Outcome Measures

With respect to the outcome measures, we coded reading comprehension measured with standardized and researcher developed tests. Furthermore, as many studies also reported on measures for strategic ability (i.e., the quality of application of reading strategies), strategy knowledge and/or self-reported strategy use, we also included these in our analysis. We distinguished immediate posttests and delayed posttests in our analysis. Thus, we ran analyses for five outcome measures (i.e., reading comprehension standardized, reading comprehension researcher-developed, strategic ability, strategy knowledge, and self-reported strategy-use).

In cases in which multiple outcome measures (for example, three measures of strategic ability) were reported for one of our outcome measures, we decided to include one of those based on the following decision tree:

1. If both subtests and a total score of the instrument were analyzed and reported, we used the total score in our analysis (this was often the case for reading comprehension).
2. If different instruments were reported and it was not possible to include a total score, we chose an instrument that measured summarizing or main idea identification (this was often the case for measures of strategic ability).
3. If (1) and (2) were not available, we chose the first measure that was described in the study.
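The decision tree above can be sketched as a small selection function. The dictionary keys (`name`, `is_total_score`, `construct`) and the example measures are hypothetical; the coding scheme itself does not specify a data format.

```python
# Constructs preferred under rule 2 (labels are illustrative assumptions).
PREFERRED_CONSTRUCTS = {"summarizing", "main idea identification"}


def select_measure(measures):
    """Pick one measure from a list given in the order reported in the study."""
    if not measures:
        raise ValueError("At least one measure is required.")
    # Rule 1: prefer a reported total score.
    for measure in measures:
        if measure.get("is_total_score"):
            return measure
    # Rule 2: otherwise prefer summarizing or main idea identification.
    for measure in measures:
        if measure.get("construct") in PREFERRED_CONSTRUCTS:
            return measure
    # Rule 3: otherwise take the first measure described in the study.
    return measures[0]


# Hypothetical study reporting two strategic-ability instruments, no total score.
reported = [
    {"name": "question generation", "is_total_score": False, "construct": "questioning"},
    {"name": "summary quality", "is_total_score": False, "construct": "summarizing"},
]
chosen = select_measure(reported)
```

In this hypothetical case, rule 1 does not apply, so rule 2 selects the summarizing instrument.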

To determine the effect size (Cohen’s d), we coded group size (n experimental and n control), whether and which covariates were used in the specific statistical comparison by the authors, pretest- and posttest values (means and standard deviations) and the type of statistic the effect size is based on (t-value, F value, regression weight) with accompanying degrees of freedom and p values.
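As an illustration of how the coded values can be turned into an effect size, the sketch below uses the standard textbook formulas for Cohen's d from posttest means and standard deviations, for converting an independent-samples t-value into d, and for the approximate sampling variance of d. These are assumptions about a typical computation; the text does not state the exact formulas used (for example, how pretest values or covariates entered), and all numbers are hypothetical.

```python
import math


def cohens_d(mean_e, sd_e, n_e, mean_c, sd_c, n_c):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_e - 1) * sd_e ** 2 + (n_c - 1) * sd_c ** 2) / (n_e + n_c - 2)
    return (mean_e - mean_c) / math.sqrt(pooled_var)


def d_from_t(t_value, n_e, n_c):
    """Cohen's d recovered from an independent-samples t-value."""
    return t_value * math.sqrt(1.0 / n_e + 1.0 / n_c)


def variance_of_d(d, n_e, n_c):
    """Approximate sampling variance of d, used later for weighting."""
    return (n_e + n_c) / (n_e * n_c) + d ** 2 / (2.0 * (n_e + n_c))


# Hypothetical posttest data: experimental vs control group, 50 students each.
d_means = cohens_d(mean_e=52.0, sd_e=10.0, n_e=50, mean_c=48.0, sd_c=10.0, n_c=50)
d_t = d_from_t(t_value=2.0, n_e=50, n_c=50)
var_d = variance_of_d(d_means, n_e=50, n_c=50)
```

Both routes give d = 0.4 for these hypothetical values, since a 4-point difference with a pooled standard deviation of 10 corresponds to t = 2.0 with 50 students per group.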

Method of Analysis

In a number of studies, multiple experiments were described, or multiple samples were researched, resulting in more than one experimental comparison within one study. For that reason, the unit of analysis was “experimental comparison.” As described above, we distinguished five outcome measures, and also distinguished between immediate and delayed posttests. Thus, for one experimental comparison it was possible to have 10 outcome measures, which were analyzed separately.

To calculate the average effect sizes (Cohen’s d) (Cohen 1988) for our outcome measures, a random effects model was used. To take into account differences in sample size between the comparisons, the effect sizes are weighted based on the variances within the samples and the between-study variation.
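The weighting described above can be sketched as follows. The text does not name the estimator of between-study variance; the sketch assumes the DerSimonian-Laird method, a common choice for random effects models, and uses hypothetical effect sizes and variances.

```python
import math


def random_effects_pool(effects, variances):
    """Pool effect sizes with a random effects model (DerSimonian-Laird tau^2)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("Need at least two effect sizes with matching variances.")
    # Fixed-effect weights use only the within-comparison variance.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * di for wi, di in zip(w, effects)) / sum_w
    # Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (di - fixed_mean) ** 2 for wi, di in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - df) / c)
    # Random effects weights add tau^2 to each within-comparison variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * di for wi, di in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, se, tau2, q


# Two hypothetical comparisons with equal sampling variance.
pooled, se, tau2, q = random_effects_pool([0.1, 0.5], [0.04, 0.04])
```

For these hypothetical inputs, Q = 2.0 and the estimated between-study variance is 0.04, so each comparison receives a weight of 1 / (0.04 + 0.04) = 12.5 and the pooled effect is 0.3 with a standard error of 0.2.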

To analyze whether the variance in effect sizes can be attributed to differences in intervention-, student-, and study-design characteristics, moderation analyses were carried out using mixed effects models for categorical moderators (for example, type of design or reading strategy yes/no). The Q-statistic was calculated to analyze between-group differences for the categorical moderators (Lipsey and Wilson 2001). To examine how continuous variables are related to variation in effect sizes, a random effects meta-regression was used. The random effects meta-regression model allows for within-study variation as well as between-study variation. This procedure applied to all variables concerning duration of the intervention (number of sessions, total number of hrs, number of weeks, and intensity: hrs/weeks).
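The between-group Q-statistic for a categorical moderator can be sketched as follows, assuming that each subgroup has already been pooled (for example with a random effects model) so that a mean effect and standard error are available per subgroup. The subgroup values are hypothetical. The p-value helper applies only to tests with one degree of freedom, where the chi-square tail probability reduces to the complementary error function.

```python
import math


def q_between(subgroup_means, subgroup_ses):
    """Between-group Q and its degrees of freedom for pooled subgroup effects."""
    weights = [1.0 / se ** 2 for se in subgroup_ses]
    grand_mean = sum(w * m for w, m in zip(weights, subgroup_means)) / sum(weights)
    q = sum(w * (m - grand_mean) ** 2 for w, m in zip(weights, subgroup_means))
    return q, len(subgroup_means) - 1


def p_value_df1(q):
    """Chi-square tail probability for df = 1 only."""
    return math.erfc(math.sqrt(q / 2.0))


# Hypothetical subgroups, e.g. moderator absent vs present.
q_stat, df = q_between([0.20, 0.50], [0.05, 0.10])

# Check against a Q(1) value reported in the Results section.
p_reported = p_value_df1(10.599)
```

For the hypothetical subgroups, Q = 7.2 with df = 1. Applied to the Q(1) = 10.599 reported under Main Effects, the helper returns a p-value that rounds to .001, consistent with the value given there.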

Publication bias was tested by applying Duval and Tweedie’s trim-and-fill method (Duval and Tweedie 2000). A random effects model was used to estimate if there were any interventions missing in the meta-analysis.

All analyses were performed by a statistician (Author 5), with a registered version of the Comprehensive Meta-Analysis software package (version 3; Biostat, Englewood, NJ).

Results

Descriptives

A total of 52 articles met our eligibility criteria. Within those 52 articles, 125 eligible effects were found. Of those 125 effects, 89 effects measured reading comprehension. Sixty-one effects concerned reading comprehension standardized tests as dependent variable, of which 9 were delayed posttests. For reading comprehension researcher-developed tests, 28 effects were found, of which 4 were delayed posttests. For strategic ability 22 effects were found, of which 5 were delayed posttests. For strategy knowledge a total of 8 effects were found, of which 3 were delayed posttests. Finally, for self-reported strategy-use 6 (immediate) effects were found.

Mean duration of the interventions was 47.11 h, with a standard deviation of 55.01. The range of duration in hours was 6–233. The interventions were spread over 17.47 weeks on average with a standard deviation of 11.37. In Appendix C an overview of the key characteristics is given of all experimental comparisons in the meta-analysis.

Main Effects

First, main overall effect sizes for our outcome measures were analyzed. In Table 2, an overview of the effect sizes per outcome measure is displayed. All overall effects were positive, but not all were significantly different from zero. For reading comprehension, the effect sizes for both immediate standardized measures (Cohen’s d = 0.186) and delayed standardized measures (Cohen’s d = 0.167) can be considered trivial (Cohen 1988), whereas the effect size for researcher-developed measures was significant but small for immediate tests (Cohen’s d = 0.431), and large for delayed researcher-developed tests (Cohen’s d = 0.947). The difference between the effect sizes for standardized and researcher-developed tests of reading comprehension is also significant, Q(1) = 10.599, p = .001, with effects on researcher-developed tests being larger.

In terms of reading-strategy-related outcome measures, the immediate measures of strategic ability, strategy knowledge, and self-reported strategy use were significantly different from zero, with a medium effect size for strategic ability (Cohen’s d = 0.786) and small effect sizes for strategy knowledge (Cohen’s d = 0.366) and self-reported strategy use (Cohen’s d = 0.358). For the delayed reading strategy measures, effect sizes were small and trivial, and, probably due to the small number of comparisons, not significantly different from zero.

Heterogeneity analyses (Table 2) and forest plots (Appendix D) show large and significant variation in effect sizes across the studies, which justifies moderation analyses for our three dependent variables of interest (immediate measures of reading comprehension standardized, researcher-developed tests, and strategic ability).

Moderator Effects

We chose to analyze only moderator effects on “immediate” outcome measures, as only a few studies included delayed measures. For the same reason, we did not analyze moderator effects for strategy knowledge (n = 5) and strategy-use self-report (n = 6). All significant moderators of the three outcome measures (reading comprehension standardized, reading comprehension researcher-developed, and strategic ability) are presented in Table 3.

Intervention Characteristics

As a first step, moderation analyses were performed on the three overarching categories of reading strategies (“before reading,” “during reading” and “after reading”). None of those moderation analyses were significant, apart from “before reading” for strategic ability (see Table 3). Effect sizes tended to be less strong for studies in which “before reading” strategies were taught.

In the next step, the separate reading strategies were examined. Only “setting reading goals” made a significant contribution to the overall effect size on all three outcome variables (Table 3). Interestingly, this contribution was positive for both reading comprehension outcome measures, but the strategy was associated with smaller effects on strategic ability. In other words, when “setting reading goals” was part of the intervention, the overall effect size for strategic ability was lower than when it was not part of the intervention. Furthermore, “underline important information” was a significant contributor to strategic ability.

In addition, a number of other reading strategies had less impact compared to when those strategies were not taught in the intervention. For reading comprehension standardized, this was the case for “mental imagery” and “memorizing.” For researcher-developed tests, this was the case for “comprehension directed strategies” and the use of “graphic organizers/visual representation.” Lastly, for strategic ability, this was the case for “predicting” and “prior knowledge.”

As for other intervention characteristics, “modeling” (applying reading strategies while thinking aloud by the teacher) was a significant contributor for measures of strategic ability, but not for the reading comprehension measures. The educational context in which the intervention took place mattered only in the case of strategic ability. A higher overall effect size was obtained in language-arts classes compared to content area classes. The type of trainer of the intervention only mattered in the case of researcher-developed tests with the effect size for researchers as trainers being larger than the effect size of teachers as trainers.

We did not find significant contributions of the following intervention characteristics: scaffolding, group work, questioning, summarizing (during reading), inferencing, text structure, paraphrasing, hinge words, summarizing (after reading), and duration in weeks, number of sessions, total hours of the intervention (time per session × number of sessions) and intensity of the intervention (total hours/number of weeks).
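The two derived duration variables named above follow directly from their definitions: total hours is time per session multiplied by the number of sessions, and intensity is total hours divided by the number of weeks. A minimal sketch of that arithmetic follows. The class name, field names, and example values are hypothetical and do not describe any study in the meta-analysis.

```python
from dataclasses import dataclass


@dataclass
class InterventionDuration:
    hours_per_session: float
    n_sessions: int
    n_weeks: float

    def __post_init__(self) -> None:
        if self.n_weeks <= 0:
            raise ValueError("n_weeks must be positive")

    @property
    def total_hours(self) -> float:
        # time per session x number of sessions
        return self.hours_per_session * self.n_sessions

    @property
    def intensity(self) -> float:
        # total hours / number of weeks, i.e. hours of instruction per week
        return self.total_hours / self.n_weeks


# Hypothetical intervention: forty 45-minute lessons spread over 10 weeks.
example = InterventionDuration(hours_per_session=0.75, n_sessions=40, n_weeks=10)
print(example.total_hours, example.intensity)
```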

Student Characteristics

When looking at student characteristics (Table 3), reader type mattered only for strategic ability measures, with a higher overall effect size for low-achievers compared to typically developing students. Grade was a significant contributor for researcher-developed tests, with the largest overall effect size for students in grades 6–8.

Study-Design Characteristics

When looking at study-design characteristics as moderators (Table 3), whether the data were analyzed with multilevel analysis mattered in the case of standardized reading comprehension tests. As expected, significantly higher effect sizes were found for studies that did not control for the hierarchical structure of the data. In addition, the type of control condition mattered only for researcher-developed tests of reading comprehension. A higher overall effect size was observed when the control condition was business-as-usual rather than a controlled control group. Design (randomized experiment vs. quasi-experiment) did not influence effect sizes on the three outcome measures.

Publication Bias

We also tested for publication bias by applying the trim-and-fill method (Duval and Tweedie 2000). The funnel plot for reading comprehension standardized (immediate) showed evidence of asymmetry (see Appendix E). Adding the “missing” studies imputed with the trim-and-fill method shifted the effect size from Cohen’s d = 0.186 to Cohen’s d = 0.115 (0.093; 0.208), which remained significant. Egger’s test (Egger et al. 1997) confirmed the presence of publication bias, t(50) = 2.087, p = .042. The opposite was found for researcher-developed tests (immediate), with evidence of asymmetry on the right side of the funnel plot (see Appendix E). With the trim-and-fill method, the effect size shifted from Cohen’s d = 0.431 to Cohen’s d = 0.522 (0.382; 0.662), but Egger’s regression intercept (Egger et al. 1997) did not confirm this, t(22) = 1.489, p = .151, suggesting only a weak indication of publication bias. No indications of publication bias were found for strategic ability (immediate), which showed a symmetric funnel plot (see Appendix E) and a nonsignificant Egger’s regression intercept, t(15) = 0.507, p = .620.
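To make the logic of Egger's regression concrete, the sketch below regresses each standardized effect (d divided by its standard error) on its precision (1 divided by the standard error) with ordinary least squares. An intercept far from zero suggests funnel-plot asymmetry, and the test statistic is the intercept divided by its standard error, with k - 2 degrees of freedom. This is a minimal illustration with invented data, not the software or the data used in the meta-analysis; the function name and all numbers are assumptions.

```python
import math


def egger_regression(effects, std_errors):
    """Return (intercept, slope, se_intercept) of the OLS fit d/SE ~ 1/SE."""
    k = len(effects)
    if k != len(std_errors) or k < 3:
        raise ValueError("need at least 3 effects with matching standard errors")
    x = [1.0 / se for se in std_errors]                      # precision
    y = [d / se for d, se in zip(effects, std_errors)]       # standardized effect
    mean_x = sum(x) / k
    mean_y = sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = residual_ss / (k - 2)
    se_intercept = math.sqrt(sigma2 * (1.0 / k + mean_x ** 2 / sxx))
    return intercept, slope, se_intercept


# Invented data in which smaller studies (larger SE) show larger effects.
effects = [0.45, 0.30, 0.28, 0.15, 0.12, 0.10]
std_errors = [0.30, 0.22, 0.20, 0.12, 0.10, 0.08]
intercept, slope, se_intercept = egger_regression(effects, std_errors)
t_stat = intercept / se_intercept  # compare against a t distribution, df = k - 2
print(f"intercept = {intercept:.3f}, t({len(effects) - 2}) = {t_stat:.3f}")
```

Turning the t statistic into a p-value requires the t distribution, which is left to a statistics package here.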

Conclusions and Discussion

This study set out to summarize the overall effects of interventions aimed at instructing reading strategies on both reading comprehension and strategic ability in whole-classroom settings. In addition, it was determined whether intervention-, student-, and study design characteristics influenced the effects on reading comprehension and strategic ability. To establish the overall effects of reading strategies interventions, a search of literature published from 2000 onwards yielded a total of 52 studies, which comprised 125 effect sizes.

We found a significant, but very small, effect on reading comprehension (Cohen’s d = .186) for standardized tests and a small significant effect on researcher-developed reading comprehension tests (Cohen’s d = .431). Given these results, our expectation that effects of reading strategy instruction in classroom-based studies are less convincing than those found in previous meta-analyses is confirmed. As expected, effects were significantly larger when researcher-developed tests were used compared to standardized tests. A significant medium overall effect was found for strategic ability tests (Cohen’s d = .786) and significant, but small effects for strategy knowledge (Cohen’s d = .366) and self-reported strategy use (Cohen’s d = .358). For delayed tests, we found a significant, but very small effect on standardized reading comprehension tests (Cohen’s d = 0.167) and a large effect for researcher-developed reading comprehension tests (Cohen’s d = 0.947). No significant effects were found for delayed tests of strategic ability and knowledge.

Moderation effects were analyzed for intervention characteristics (type of strategies instructed, type of trainer, didactic principles, educational context and duration). Of all the types of strategies distinguished in this study, only “setting reading goals” was found to positively moderate the effects on reading comprehension tests (both standardized and researcher-developed). Some strategies did not positively impact effect sizes. For standardized tests of reading comprehension, this was the case for “mental imagery” and “memorizing.” For researcher-developed tests, this was the case for “explicit monitoring strategies” and the use of “graphic organizers/visual representation.” Lastly, for strategic ability, this was the case for “predicting,” “setting reading goals” and “prior knowledge.” Intervention characteristics that positively influenced effect sizes were “modeling” (strategic ability), the educational context of language arts classes (strategic ability), and researchers as type of trainers (researcher-developed tests).

For student and study-design characteristics, larger effect sizes were obtained for low-achievers compared to typically developing students (strategic ability), for students in grades 6–8 (researcher-developed tests), and when control classes were business-as-usual (researcher-developed tests). It should be noted that all moderation analyses are exploratory in nature and are not based on explicit manipulation of experimental variables.

Main Effects of Reading-Strategy Interventions

Our findings concur with those of the meta-analyses of Rosenshine and Meister (1994) and Chiu (1998). They also found quite small effects (d = .32 and d = .24, respectively) of reading strategy interventions on standardized tests. The finding that effects on standardized tests are hard to accomplish with reading strategy interventions has already been recorded in several instances (Paris et al. 1984). The transfer of instructed strategies to standardized reading tests is probably difficult to accomplish, because it may require quite different strategies than were provided in the instruction, such as how to handle multiple-choice questions and how to interpret typical reading comprehension questions that are posed in standardized tests with limited time for thinking and using strategies. In sum, the results of our study call into question whether the skills needed for achieving higher scores on standardized measures of reading comprehension can be improved by teaching students how to apply a limited set of reading strategies. On top of that, they show that there is a systematic difference in the skills required for standardized tests and researcher-developed tests, which justifies treating both measures separately.

On the other hand, we found a stronger effect size for researcher-developed reading comprehension tests, suggesting that such tests are better suited to capture the learning effects of strategy instruction on reading comprehension than standardized tests. Assuming that researcher-developed tests for reading comprehension measure significant aspects of students’ comprehension of texts, this shows that reading strategies taught in whole classrooms may be a valuable addition to students’ reading development. In addition, our finding that for delayed tests there was a large effect on researcher-developed measures of reading comprehension (d = .947) gives substantial support to the usefulness of instruction in reading strategies. This is an important finding because it shows that the reading strategies taught in interventions are quite durable. Possibly, this is the result of the students using the strategies effectively, even after the intervention has stopped.

Furthermore, we found a medium-sized effect of reading strategy interventions on strategic ability (d = .786). Tests for strategic ability require students to apply the learned strategies, and this application is scored qualitatively. This finding is of relevance because it shows that application of strategies is improved by strategy training. In addition, we found small effects on students’ strategy knowledge and self-reports of strategy use, indicating that both knowledge about the different strategies and students’ awareness of the type of strategies that are taught can be increased by the reading strategy interventions.

Moderation of Intervention Characteristics

Our analysis of moderation effects of types of strategies taught showed that only a few reading strategies affected the effect sizes positively, while others were associated with smaller effect sizes in comparison with interventions that did not include that specific strategy. In most of the studies included in the meta-analysis, several strategies were taught in combination; only in a few cases did interventions focus on a single reading strategy (DiCecco and Gleason 2002; Jitendra et al. 2000; Lubliner and Smetana 2005; Miller et al. 2011; Ng et al. 2013; Redford et al. 2012). This means that the overall effect sizes for reading comprehension and strategic ability have to be attributed to the teaching of different combinations of reading strategies instead of one of the types of strategies distinguished. As a consequence, the results of our moderation analysis for types of reading strategies should be interpreted in terms of whether it matters if one or another type of strategy is part of the package offered. In most cases, our findings show that this is not the case: it does not seem to matter if, for example, inferencing is part of the package of reading strategies taught. In some cases (see Table 3), inclusion of some types of strategies in the package (e.g., explicit monitoring strategies, or the use of graphic organizers) results in lower effect sizes on reading comprehension in comparison to studies that do not contain these types. That, however, does not mean that such types used in isolation would result in negative effects. It only means that packages of strategies without them result in higher effect sizes.

An exception to the above is the positive effect of one of the 15 types of trained strategies on the measures for reading comprehension. This strategy, “setting reading goals,” requires students to critically reflect on their reading goals before reading. The finding that this type of strategy is effective is based on only 3 experimental comparisons for standardized tests and one experimental comparison for researcher-developed tests. Therefore, the finding must be treated with caution. However, it is interesting that this type of strategy stands out as an important aspect of reading strategy instruction, because goal setting may be one of the main determinants of a successful task approach, especially when it is important to select relevant information from texts (Rouet and Britt 2011). We also found that “setting reading goals” had a lower impact on measures of strategic ability. However, there was only one experimental comparison for the combination of “setting reading goals” and strategic ability, so the result cannot be generalized.

For strategic ability, we also found that “underline important information” and the didactic principle of “modeling” were positive contributors. Modeling refers to trainers (and eventually students) thinking aloud while reading and thereby exposing their cognitive process of comprehending texts and making clear how to use reading strategies when reading. It appears that this is a useful practice in comparison to approaches that do not contain modeling, because it increases effects on strategic ability. “Underline important information” is a reading strategy that was instructed in four studies (Guthrie et al. 2004; Ponce et al. 2012; Souvignier and Mokhlesgerami 2006; Sung et al. 2008). In these studies, strategic ability was measured by underlining important parts of a text, which is closely aligned to the strategy “Underlining important information.” This may explain the effect found on strategic ability.

As expected, effect sizes for reading comprehension (researcher-developed) were smaller for interventions in which instruction was given by the teacher compared to interventions in which researchers provided instruction, an outcome which is in line with earlier findings (Chiu 1998; Scammacca et al. 2015). Thus, researchers seem to be better able to deliver the interventions in whole-classroom settings than teachers. This supports the results of qualitative studies in which teachers were followed implementing reading strategy instruction in their classrooms (Duffy 1993; Seymour and Osana 2003; Hacker and Tenent 2002). They found that teachers in whole-classroom settings face problems in the implementation of interventions directed at instructing reading strategies. For example, teachers found the didactic principles of reciprocal teaching and the specific reading strategies that had to be taught hard to understand (Seymour and Osana 2003), teachers found it hard to induce strategic thinking in students (Duffy 1993), and students showed poor application of reading strategies and poor discourse skills while collaborating when teachers implemented reciprocal teaching in their classrooms (Hacker and Tenent 2002).

An interesting finding is that the duration of the intervention did not influence the overall effect size. Thus, how long interventions lasted did not matter for the effect size. It should be noted that there were very few long interventions (> 1 year), which may have prevented this effect from reaching significance.

Moderation Effects of Study-Design and Student Characteristics

As mentioned previously, effect sizes can be overestimated when there is no control for the hierarchical structure of the data (students within classes). This is especially important in our meta-analysis because we are mainly interested in effects at the classroom level. We therefore used the variable multilevel analysis (yes/no) as a moderator. It turned out that multilevel analysis was carried out in only 10 of the 52 studies. Moderation analyses showed that significantly higher effect sizes (Cohen’s d = .305) were obtained for standardized reading comprehension tests when studies did not control for the hierarchical structure of the data, in comparison to studies that did use multilevel analysis (d = .106). Although not significant, the same trend was present for researcher-developed tests and strategic ability. These findings stress the importance of taking into account the hierarchical structure of the data in interventions that are directed at the class level (Hox 2010).
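One standard way to see why clustering matters is the design effect, 1 + (m - 1) × ICC, where m is the average class size and ICC is the intraclass correlation. It expresses how much the variance of an estimate is inflated when students are nested within classes but treated as independent. The sketch below is offered only as background on that general formula; it is not the moderator analysis reported here, and the class size, number of classes, and ICC are hypothetical values chosen for illustration.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation due to clustering: 1 + (m - 1) * ICC."""
    if cluster_size < 1 or not 0.0 <= icc <= 1.0:
        raise ValueError("cluster_size must be >= 1 and icc must lie in [0, 1]")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_total: float, cluster_size: float, icc: float) -> float:
    """Number of independent observations the clustered sample is worth."""
    return n_total / design_effect(cluster_size, icc)


# Hypothetical study: 20 classes of 25 students, ICC = 0.20.
deff = design_effect(cluster_size=25, icc=0.20)
n_eff = effective_sample_size(n_total=500, cluster_size=25, icc=0.20)
print(f"design effect = {deff:.1f}, effective n = {n_eff:.1f}")
```

With these assumed values, 500 students carry roughly the information of fewer than 100 independent students, which is why analyses that ignore the class level can overstate the precision of their estimates.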

As expected, for researcher-developed reading comprehension tests, effect sizes tended to be larger in studies with a business-as-usual control group than in studies with a controlled control group. Controlled control groups refer to conditions in which the control students were given a different intervention (for example a vocabulary intervention) or one of the components of the reading strategy intervention. This finding seems logical given that the difference between a controlled control group and the intervention group is most likely smaller than the difference between a business-as-usual control group and the intervention group.

In terms of student characteristics, it seems that students in grades 6–8 profited the most from reading strategy interventions to improve reading comprehension, as measured by researcher-developed tests. A possible explanation is that middle-grade students are increasingly required to learn from texts in school, so that learning reading strategies is of more direct use to them. In addition, low-achievers’ strategic ability improved more from strategy instruction than was the case for typically developing students. This is a rather puzzling result that should be treated with caution, because it is based on a small sample of studies (3) involving low-achievers and is only marginally significant. Consequently, further research is needed before firm conclusions can be drawn from this result.

Limitations

We chose to only include published articles as a way to ensure research quality, but this means that we did not take into account unpublished research reports. Generally, effects estimated in published work tend to be higher than in unpublished reports, which could affect the results of the meta-analysis. For that reason, we used the trim-and-fill method (Duval and Tweedie 2000) to estimate whether our meta-analysis was subject to publication bias. For reading comprehension standardized tests the effect size decreased significantly (from d = .186 to d = .115), while for researcher-developed tests there was a significant increase (from d = .431 to d = .522). No indications of publication bias were found for strategic ability. Thus, there was a small publication bias for our reading comprehension outcome measures, but this does not change our main conclusions for these measures.

Another limitation was that we could not include implementation quality as a moderator. Implementation quality refers to the degree to which interventions are carried out as intended. This moderator is especially important in whole-classroom contexts, in which teachers rather than researchers are often the trainers. Many of the studies that we analyzed do not give enough information about the quality of implementation to include this variable in our analysis, such as the extent to which teachers and trainers worked according to protocol, the way that students responded to instruction, and the quality of training and coaching of teachers. It would be valuable for future research to find out whether interventions with high implementation quality succeed in improving students’ reading comprehension skills more than interventions with lower implementation quality, as it is known that implementation quality is important in finding effects, especially when studies are performed outside strongly controlled settings (Hulleman and Cordray 2009).

Finally, we should be careful in generalizing the moderation effects. First, we need to acknowledge the fact that the moderation analyses are exploratory in nature, and as such, represent correlational relationships and not cause-effect relationships. Second, many of the moderation analyses were done with small sample sizes, which limits the power of the analyses.

Suggestions for Future Research

As demonstrated, studies using multilevel analysis controlling for the hierarchical nature of classroom level interventions showed lower effect sizes, especially in the case of standardized reading comprehension tests. This finding was mirrored also in other effect sizes, although the differences were not significant, presumably because of a small number of cases for comparison. These findings should be considered as a strong recommendation for future studies directed at classroom level interventions, to use multilevel analysis.

Another issue is that some studies cannot be included in meta-analyses, because of inadequate or missing data to calculate the effect size. We therefore call for more rigorous descriptions of statistical data in future research. Both journal editors and researchers should take care in registration of statistical results and should take into account that studies might be used in a meta-analysis in the future. For example, presenting pretest data is indispensable in determining a proper effect size. Also presenting student characteristics (e.g., gender or age) of each condition, as opposed to characteristics that apply to the whole student sample, is helpful to accurately synthesize data. For intervention studies in particular, it is interesting to be able to include moderators pertaining to intervention duration (e.g., intervention duration in terms of total hours and number of weeks) and more elaborate descriptions of what the intervention specifically entailed are helpful in determining whether a study is eligible for coding (e.g., training procedures for the trainers who delivered the intervention, what strategies were taught, what kind of tasks were the students required to do in the lessons, what didactic principles were underlying the intervention).
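To illustrate why pretest data matter for computing an effect size, the sketch below shows one common pretest-posttest-control formulation: the difference in mean gains between the two conditions, divided by the pooled pretest standard deviation. This is an assumption made for illustration and not necessarily the formula used in this meta-analysis. The function name and all numbers are hypothetical, and no small-sample bias correction is applied.

```python
import math


def pretest_adjusted_d(
    pre_mean_t: float, post_mean_t: float, pre_sd_t: float, n_t: int,
    pre_mean_c: float, post_mean_c: float, pre_sd_c: float, n_c: int,
) -> float:
    """Difference in mean gains divided by the pooled pretest SD."""
    if n_t < 2 or n_c < 2:
        raise ValueError("each group needs at least 2 students")
    pooled_var = (
        (n_t - 1) * pre_sd_t ** 2 + (n_c - 1) * pre_sd_c ** 2
    ) / (n_t + n_c - 2)
    gain_t = post_mean_t - pre_mean_t   # gain in the intervention condition
    gain_c = post_mean_c - pre_mean_c   # gain in the control condition
    return (gain_t - gain_c) / math.sqrt(pooled_var)


# Hypothetical study: both groups start at 50; intervention gains 8, control gains 4.
d = pretest_adjusted_d(
    pre_mean_t=50, post_mean_t=58, pre_sd_t=10, n_t=30,
    pre_mean_c=50, post_mean_c=54, pre_sd_c=10, n_c=30,
)
print(f"d = {d:.2f}")
```

Without the pretest means, the control group's own gain could not be subtracted, and without the pretest standard deviations the gain difference could not be standardized in this way.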

A third suggestion for future research pertains to the mediating effect of strategy-use by the students. The only feature that was experimentally manipulated in the studies included in the meta-analysis was the presence or absence of an intervention aimed at instructing reading strategies. The underlying assumption in the studies is that the strategies taught are also used by the students, but we cannot be sure that was always the case (Donker et al. 2014). It would be interesting to investigate in future research how strategy-use by students mediates the level of reading comprehension.

Implications for Educational Practice

Our meta-analysis gives rise to some important implications for the use of interventions aimed at fostering reading strategies in whole-classroom contexts. In the first place, the question whether reading strategy interventions can be effective for improving students’ reading comprehension can be answered in the affirmative. Although the overall effect size of strategy interventions on researcher-developed tests appears to be small, the effect on the delayed measures is stronger (large) and shows that strategy instruction can be considered to be quite durable in achieving effects on reading comprehension. The researcher-developed tests used in our analyzed interventions are presumably directed at reading comprehension skills that are needed for specific types of comprehension problems, which may arise in textbooks used in school (e.g., in content area teaching). For that reason, the results of our meta-analysis give support to the teaching of reading strategies for the purpose of improving such textbook reading in school (in contrast to improving achievement on standardized reading tests).

As the studies used a package of multiple strategy instruction to foster students’ reading comprehension, our findings do support the recommendations provided by the National Reading Panel (2000). The panel concludes that teaching of multiple reading strategies “leads to increased learning of the strategies, to specific transfer of learning, to increased memory and understanding of new passages, and, in some cases, to general improvements in comprehension” (p. 4–52). In addition, our study suggests that the strategy called “setting reading goals” is a promising one for adding to such packages of multiple strategies, since it has shown to have a positive moderating effect on reading comprehension. This type of strategy requires that students think beforehand what the purpose of reading is, for example, knowing something, answering questions or enjoying a story (e.g., Aaron et al. 2008). This means that teachers should make their students think and be aware of their purpose for reading and ask questions that elicit such awareness. Setting reading goals is an important type of strategy in task-oriented reading, which is often asked for in content-area classes. In task-oriented reading, students need to select relevant parts of the text, specific for the task at hand, they need to know when to skip information, and they need to know when to search for information (Vidal-Abarca et al. 2010). To do this successfully, knowing the goal(s) of reading is necessary. Thus, especially for content-area teachers, asking their students to think about their reading goals might be a valuable addition to learning from texts.

Our study has made it clear that reading strategy instruction seems to be especially effective in the middle grades (6–8) and the earlier grades (3–5), as substantial effects on researcher-developed measures of reading comprehension were found (d = .618 and d = .387, respectively). This implies that instructing reading strategies in whole-classroom settings should begin from the earliest grades to be effective. In contrast, for the group of older students (grades 9–12), the effects on reading comprehension are negligible. Although the results for the latter group were derived from only two experimental studies, it remains doubtful whether strategy instruction for this oldest group in our analysis is fruitful.

Lastly, we need to reflect on the result that teachers are not as proficient as researchers in successfully implementing an intervention aimed at instructing reading strategies. This finding calls for more emphasis in teacher education on the teaching of reading strategies to foster reading comprehension. As the National Reading Panel (2000) recommends, such instruction should be extensive, especially on how teachers should teach those strategies. In order for teachers to know how strategies should be taught, it seems necessary that teachers have a deeper understanding of the reading comprehension process. This deeper understanding should start during teacher education. For example, student-teachers could be stimulated to think aloud while reading a text and reflect on what reading strategies they use and whether the use of those reading strategies is helpful in understanding. In addition, in their apprenticeships, they could do the same with their younger students, to acquaint themselves with the reading problems that they are confronted with.

Conclusion

Summarizing our findings, we conclude that reading-strategy interventions in whole classroom settings can be beneficial, especially for students in grades 3–8. Larger effects were found for researcher-developed tests of reading comprehension, compared to standardized tests, which shows that researcher-developed tests are more sensitive to the specific reading strategies learned by students. In addition, the finding that delayed (researcher-developed) tests demonstrated a large effect on reading comprehension is certainly an important support for the case that strategy interventions may be quite durable in achieving effects on reading comprehension in whole-classroom settings.

References

*Included in the meta-analysis

*Aaron, P. G., Joshi, R. M., Gooden, R., & Bentum, K. E. (2008). Diagnosis and treatment of reading disabilities based on the component model of reading: An alternative to the discrepancy model of LD. Journal of Learning Disabilities, 41, 67–84. https://doi.org/10.1177/0022219407310838

Afflerbach, P., & Cho, B. (2009). Identifying and describing constructively responsive comprehension strategies in new and traditional forms of reading. In S. E. Israel & G. G. Duffy (Eds.), Handbook of research on reading comprehension. New York: Routledge.

Afflerbach, P., Pearson, P., & Paris, S. G. (2008). Clarifying differences between reading skills and reading strategies. The Reading Teacher, 61(5), 364–373. https://doi.org/10.1598/RT.61.5.1.

*Alfassi, M. (2009). The efficacy of a dialogic learning environment in fostering literacy. Reading Psychology, 30(6), 539–563. https://doi.org/10.1080/02702710902733626.

*Allor, J. H., Mathes, P. G., Roberts, J. K., Cheatham, J. P., & Champlin, T. M. (2010). Comprehensive reading instruction for students with intellectual disabilities: Findings from the first three years of a longitudinal study. Psychology in the Schools, 47(5), 445–466. https://doi.org/10.1002/pits.20482.

*Andreassen, R., & Bråten, I. (2011). Implementation and effects of explicit reading comprehension instruction in fifth-grade classrooms. Learning and Instruction, 21(4), 520–537. https://doi.org/10.1016/j.learninstruc.2010.08.003.

*Berkeley, S., & Riccomini, P. J. (2011). QRAC-the-code: A comprehension monitoring strategy for middle school social studies textbooks. Journal of Learning Disabilities, 46(2), 154–165. https://doi.org/10.1177/0022219411409412.

Berkeley, S., Scruggs, T. E., & Mastropieri, M. A. (2010). Reading comprehension instruction for students with learning disabilities, 1995–2006: A meta-analysis. Remedial and Special Education, 31(6), 423–436.

*Calhoon, M. B. (2005). Effects of a peer-mediated phonological skill and reading comprehension program on reading skill acquisition for middle school students with reading disabilities. Journal of Learning Disabilities, 38(5), 424–433.

*Cantrell, S. C., Almasi, J. F., Carter, J. C., Rintamaa, M., & Madden, A. (2010). The impact of a strategy-based intervention on the comprehension and strategy use of struggling adolescent readers. Journal of Educational Psychology, 102(2), 257–280.

*Chambers Cantrell, S., Almasi, J. F., Rintamaa, M., & Carter, J. C. (2016). Supplemental reading strategy instruction for adolescents: A randomized trial and follow-up study. The Journal of Educational Research, 109(1), 7–26. https://doi.org/10.1080/00220671.2014.917258.

Chiu, C. W. T. (1998). Synthesizing metacognitive interventions: What training characteristics can improve reading performance? Paper presented at the annual meeting of the American Educational Research Association, San Diego.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale: Erlbaum.

Cooper, H., Hedges, L. V., & Valentine, J. C. (2009). The handbook of research synthesis and meta-analysis. New York: Russell Sage Foundation.

De Boer, H., Donker, A. S., & Van der Werf, M. P. C. (2014). Effects of the attributes of educational interventions on students’ academic performance: A meta-analysis. Review of Educational Research, 84(4), 509–545. https://doi.org/10.3102/0034654314540006.

De Corte, E., Verschaffel, L., & Van de Ven, A. (2001). Improving text comprehension strategies in upper primary school children: A design experiment. British Journal of Educational Psychology, 71(4), 531–559. https://doi.org/10.1348/00070990115866.

De Milliano, I. (2013). Literacy development of low-achieving adolescents; The role of engagement in academic reading and writing. Dissertation, University of Amsterdam.

*Denton, C. A., Wexler, J., Vaughn, S., & Bryan, D. (2008). Intervention provided to linguistically diverse middle school students with severe reading difficulties. Learning Disabilities Research and Practice, 23(2), 79–89.

*DiCecco, V. M., & Gleason, M. M. (2002). Using graphic organizers to attain relational knowledge from expository text. Journal of Learning Disabilities, 35(4), 306–320.

Dole, J. A., Nokes, J. D., & Drits, D. (2009). Cognitive strategy instruction. In S. E. Israel & G. G. Duffy (Eds.), Handbook of research on reading comprehension (pp. 347–372). New York: Routledge.

Donker, A. S., De Boer, H., Kostons, D., van Ewijk, C. D., & Van der Werf, M. P. C. (2014). Effectiveness of learning strategy instruction on academic performance: A meta-analysis. Educational Research Review, 11, 1–26. https://doi.org/10.1016/j.edurev.2013.11.002.

Droop, M., Van Elsäcker, W., Voeten, M. J. M., & Verhoeven, L. (2016). Long-term effects of strategic reading instruction in the intermediate elementary grades. Journal of Research on Educational Effectiveness, 9(1), 77–102. https://doi.org/10.1080/19345747.2015.1065528.

Duffy, G. G. (1993). Teachers’ progress toward becoming expert strategy teachers. The Elementary School Journal, 94(2), 109–120. Retrieved from http://www.jstor.org/stable/1001963.

Duke, N. K., & Pearson, P. D. (2002). Effective practices for developing reading comprehension. In A. E. Farstrup & S. J. Samuels (Eds.), What research has to say about reading instruction (3rd ed., pp. 205–242). Newark: International Reading Association.

Duke, N. K., Pearson, P. D., Strachan, S. L., & Billman, A. K. (2011). Essential elements of fostering and teaching reading comprehension. In S. J. Samuels & A. E. Farstrup (Eds.), What research has to say about reading instruction (4th ed., pp. 51–93). Newark: International Reading Association.

Durkin, D. (1978–1979). What classroom observations reveal about reading comprehension instruction. Reading Research Quarterly, 15, 481–553.

*Durukan, E. (2011). Effects of cooperative integrated reading and composition (CIRC) technique on reading-writing skills. Educational Research and Reviews, 6(1), 102–109.

Duval, S., & Tweedie, R. (2000). Trim and fill: A simple funnel-plot–based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56(2), 455–463. https://doi.org/10.1111/j.0006-341X.2000.00455.x.

Edmonds, M. S., Vaughn, S., Wexler, J., Reutebuch, C., Cable, A., Tackett, K. K., & Schnakenberg, J. W. (2009). A synthesis of reading interventions and effects on reading comprehension outcomes for older struggling readers. Review of Educational Research, 79(1), 262–300. https://doi.org/10.3102/0034654308325998.

Egger, M., Davey Smith, G., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. British Medical Journal, 315(7109), 629–634.

*Elbro, C., & Buch-Iversen, I. (2013). Activation of background knowledge for inference making: Effects on reading comprehension. Scientific Studies of Reading, 17(6), 435–452. https://doi.org/10.1080/10888438.2013.774005.

*Faggella-Luby, M., & Wardwell, M. (2011). RTI in a middle school: Findings and practical implications of a tier 2 reading comprehension study. Learning Disability Quarterly, 34(1), 35–49.

*Fang, Z., & Wei, Y. (2010). Improving middle school students’ science literacy through reading infusion. Journal of Educational Research, 103(4), 262–273.

*Fogarty, M., Oslund, E., Simmons, D., Davis, J., Simmons, L., Anderson, L., Clemens, N., & Roberts, G. (2014). Examining the effectiveness of a multicomponent reading comprehension intervention in middle schools: A focus on treatment fidelity. Educational Psychology Review, 26(3), 425–449. https://doi.org/10.1007/s10648-014-9270-6.

*Graves, A. W., Brandon, R., Duesbery, L., McIntosh, A., & Pyle, N. B. (2011a). The effects of tier 2 literacy instruction in sixth grade: Toward the development of a response-to-intervention model in middle school. Learning Disability Quarterly, 34(1), 73–86.

*Graves, A. W., Duesbery, L., Pyle, N. B., Brandon, R. R., & McIntosh, A. S. (2011b). Two studies of tier II literacy development: Throwing sixth graders a lifeline. Elementary School Journal, 111(4), 641–661.

Guthrie, J. T., & Davis, M. H. (2003). Motivating struggling readers in middle school through an engagement model of classroom practice. Reading & Writing Quarterly, 19(1), 59–85.

*Guthrie, J. T., & Klauda, S. L. (2014). Effects of classroom practices on reading comprehension, engagement, and motivations for adolescents. Reading Research Quarterly, 49(4), 387–416.

*Guthrie, J. T., Wigfield, A., Barbosa, P., Perencevich, K. C., Taboada, A., Davis, M. H., … & Tonks, S. (2004). Increasing reading comprehension and engagement through concept-oriented reading instruction. Journal of Educational Psychology, 96(3), 403–423.

Guthrie, J. T., McRae, A., & Klauda, S. L. (2007). Contributions of concept-oriented reading instruction to knowledge about interventions for motivations in reading. Educational Psychologist, 42(4), 237–250. https://doi.org/10.1080/00461520701621087.

*Guthrie, J. T., McRae, A., Coddington, C. S., Lutz Klauda, S., Wigfield, A., & Barbosa, P. (2009). Impacts of comprehensive reading instruction on diverse outcomes of low- and high-achieving readers. Journal of Learning Disabilities, 42(3), 195–214. https://doi.org/10.1177/0022219408331039.

Hacker, D. J., & Tenent, A. (2002). Implementing reciprocal teaching in the classroom: Overcoming obstacles and making modifications. Journal of Educational Psychology, 94(4), 699–718. https://doi.org/10.1037//0022-0663.94.4.699.

Hox, J. J. (2010). Multilevel analysis: Techniques and applications (2nd ed.). New York: Routledge.

Hulleman, C. S., & Cordray, D. S. (2009). Moving from the lab to the field: The role of fidelity and achieved relative intervention strength. Journal of Research on Educational Effectiveness, 2(1), 88–110. https://doi.org/10.1080/19345740802539325.

*Jitendra, A., Hoppes, M. K., & Xin, Y. (2000). Enhancing main idea comprehension for students with learning problems: The role of a summarization strategy and self-monitoring instruction. The Journal of Special Education, 34(3), 127–139.

Johnston, P. (1984). Assessment in reading. In P. D. Pearson, R. Barr, M. L. Kamil, & P. Mosenthal (Eds.), Handbook of reading research (pp. 147–182). New York: Longman.

*Kim, A., Vaughn, S., Klingner, J. K., Woodruff, A. L., Reutebuch, C. K., & Kouzekanani, K. (2006). Improving the reading comprehension of middle school students with disabilities through computer-assisted collaborative strategic reading. Remedial and Special Education, 27(4), 235–249. https://doi.org/10.1177/07419325060270040401.

Kintsch, W. (1988). The role of knowledge in discourse comprehension: A construction-integration model. Psychological Review, 95(2), 163–182. https://doi.org/10.1037/0033-295X.95.2.163.

Kintsch, W. (1998). Comprehension: A paradigm for cognition. Cambridge: Cambridge University Press.

*Klingner, J. K., Vaughn, S., Arguelles, M. E., Tejero Hughes, M., & Ahwee Leftwich, S. (2004). Collaborative strategic reading: “Real-World” lessons from classroom teachers. Remedial and Special Education, 25(5), 291–302. https://doi.org/10.1177/07419325040250050301.

*Lau, K., & Chan, D. W. (2007). The effects of cognitive strategy instruction on Chinese reading comprehension among Hong Kong low achieving students. Reading & Writing, 20(8), 833–857. https://doi.org/10.1007/s11145-006-9047-5.

*Lederer, J. M. (2000). Reciprocal teaching of social studies in inclusive elementary classrooms. Journal of Learning Disabilities, 33(1), 91–106.

*Lee, Y. (2014). Promise for enhancing children’s reading attitudes through peer reading: A mixed method approach. The Journal of Educational Research, 107(6), 482–492. https://doi.org/10.1080/00220671.2013.836469.

Lipsey, M. W. (2003). Those confounded moderators in meta-analysis: Good, bad, and ugly. Annals of the American Academy of Political and Social Science, 587(1), 69–81. https://doi.org/10.1177/000271620225079.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks: Sage Publications.

*Lovett, M. W., Lacerenza, L., De Palma, M., & Frijters, J. C. (2012). Evaluating the efficacy of remediation for struggling readers in high school. Journal of Learning Disabilities, 45(2), 151–169. https://doi.org/10.1177/0022219410371678.

*Lubliner, S., & Smetana, L. (2005). The effects of comprehensive vocabulary instruction on Title I students’ metacognitive word-learning skills and reading comprehension. Journal of Literacy Research, 37, 163–200. https://doi.org/10.1207/s15548430jlr3702_3.

*Lucariello, J. M., Butler, A. G., & Tine, M. T. (2012). Meet the “reading rangers”: Curriculum for teaching comprehension strategies to urban third graders. Penn GSE Perspectives on Urban Education, 9(2), 12.

*Lundberg, I., & Reichenberg, M. (2013). Developing reading comprehension among students with mild intellectual disabilities: An intervention study. Scandinavian Journal of Educational Research, 57(1), 89–100. https://doi.org/10.1080/00313831.2011.623179.

*Mason, L. H., Davison, M. D., Hammer, C. S., et al. (2013). Knowledge, writing, and language outcomes for a reading comprehension and writing intervention. Reading & Writing, 26(7), 1133–1158. https://doi.org/10.1007/s11145-012-9409-0.

*McCown, M., & Thomason, G. (2014). Informational text comprehension: Its challenges and how collaborative strategic reading can help. Reading Improvement, 51(2), 237–253.

*McKeown, M. G., Beck, I. L., & Blake, R. G. K. (2009). Rethinking reading comprehension instruction: A comparison of instruction for strategies and content approaches. Reading Research Quarterly, 44(3), 218–253.

*Miller, C. A., Darch, C. B., Flores, M. M., Shippen, M. E., & Hinton, V. (2011). Main idea identification with students with mild intellectual disabilities and specific learning disabilities: A comparison of explicit and basal instructional approaches. Journal of Direct Instruction, 11, 15–29.

National Reading Panel. (2000). Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction. Washington: National Institute of Child Health and Human Development.

Ng, C. C., Bartlett, B., Chester, I., & Kersland, S. (2013). Improving reading performance for economically disadvantaged students: Combining strategy instruction and motivational support. Reading Psychology, 34(3), 257–300.

*Orbea, J. M. M., & Villabeitia, E. M. (2010). The teaching of reading comprehension and metacomprehension strategies. A program implemented by teaching staff. Anales de Psicología/Annals of Psychology, 26(1), 112–122.

Organisation for Economic Co-operation and Development [OECD] (2014). “Profile of student performance in reading”, in PISA 2012 Results: What students know and can do (Volume I, Revised edition, February 2014): Student performance in mathematics, reading and science, OECD Publishing.

Palincsar, A. S., & Brown, A. (1984). Reciprocal teaching of comprehension-fostering and comprehension-monitoring activities. Cognition and Instruction, 1(2), 117–175. https://doi.org/10.1207/s1532690xci0102_1.

Palincsar, A. S., Brown, A., & Martin, S. M. (1987). Peer interaction in reading comprehension instruction. Educational Psychologist, 22(3-4), 231–253. https://doi.org/10.1080/00461520.1987.9653051.

Paris, S. G., Lipson, M. Y., & Wixson, K. K. (1983). Becoming a strategic reader. Contemporary Educational Psychology, 8(3), 293–316.

Paris, S. G., Cross, D. R., & Lipson, M. Y. (1984). Informed strategies for learning: A program to improve children’s reading awareness and comprehension. Journal of Educational Psychology, 76(6), 1239–1252.

*Ponce, H. C., López, M. J., & Mayer, R. E. (2012). Instructional effectiveness of a computer-supported program for teaching reading comprehension strategies. Computers & Education, 59(4), 1170–1183. https://doi.org/10.1016/j.compedu.2012.05.013.

Pressley, M. (2002). Metacognition and self-regulated learning. In A. E. Farstrup & S. J. Samuels (Eds.), What research has to say about reading instruction (pp. 291–309). Newark: International Reading Association.

Pressley, M., & Afflerbach, P. (1995). Verbal protocols of reading: The nature of constructively responsive reading. Hillsdale: Erlbaum.

*Proctor, C. P., Dalton, B., Uccelli, P., Biancarosa, G., Mo, E., Snow, C., et al. (2011). Improving comprehension online: Effects of deep vocabulary instruction with bilingual and monolingual fifth graders. Reading and Writing, 24(5), 517–544.

*Radcliffe, R., Caverly, D., Hand, J., & Franke, D. (2008). Improving reading in a middle school science classroom. Journal of Adolescent and Adult Literacy, 51(5), 398–408.

*Redford, J. S., Thiede, K. W., Wiley, J., & Griffin, T. D. (2012). Concept mapping improves metacomprehension accuracy among 7th graders. Learning and Instruction, 22(4), 262–270.

*Reis, S. M., Eckert, R. D., McCoach, D. B., Jacobs, J. K., & Coyne, M. (2008). Using enrichment reading practices to increase reading fluency, comprehension, and attitudes. The Journal of Educational Research, 101(5), 299–315. https://doi.org/10.3200/JOER.101.5.299-315.