Abstract

The aim of this study was to explore the differential relationships between students’ science performance and their ICT availability, ICT use, and attitudes toward ICT, based on data from the Programme for International Student Assessment (PISA) 2015 for countries and regions in South/Latin America, Europe, and Asia and Pacific. Before 2015, the PISA tests were paper-based, but in 2015, for the first time, a computer-based test was used. The mean score in science performance decreased dramatically in PISA 2015. In order to propose a plausible reason for this decrease, the relationships between students’ science performance and students’ ICT availability, ICT use, and attitudes toward ICT were examined. The ICT Development Indexes (IDI) of 35 countries were used to investigate whether the relationships vary across countries. Two-level regression was employed for the analysis, taking into account plausible values and sample weights. The results indicated that the share of total variance explained by ICT related factors differed among countries and regions. After controlling for major student- and school-level variables, the variance in science scores explained by ICT use, availability, and attitudes increased with countries’ IDI scores in South/Latin America, whereas it decreased in Europe. In Asia and Pacific, explained variances across countries were similar. Further implications are discussed, emphasizing the importance of region-based perspectives.

1 Introduction

Science education helps people handle the social, economic, and environmental challenges of globalization because science improves people’s problem-solving ability and literacy skills, not only for their academic and/or business lives but also for their daily lives (Royal Society, 2014; Valladares, 2021). According to the Organisation for Economic Co-operation and Development (OECD, 2016a), science literacy is “increasingly linked to economic growth and is necessary for finding solutions to complex social and environmental problems, all citizens, not just future scientists and engineers, need to be willing and able to confront science-related dilemmas” (p. 16). Given the importance of science for coping with global challenges, scientific literacy is a crucial concern for all countries because of its potential for social transformation (Valladares, 2021). In light of these concerns, international large-scale assessments allow countries to evaluate the effectiveness of their educational systems.

The Programme for International Student Assessment (PISA), directed by the OECD, is one of the large-scale international assessments. PISA receives great attention from researchers and policy makers because of its multidimensional aspects. PISA also creates an opportunity for comparisons with respect to reading literacy, mathematical literacy, and scientific literacy. These comparisons can be made not only at the cross-national level, but also at student and school levels (OECD, 2016b, 2017). The PISA tests were paper-based until 2015. In 2015, for the first time, computer-based tests were administered in almost all countries (Kastberg et al., 2016), and the OECD average science performance score was the lowest since the first cycle of PISA. Information and Communication Technology (ICT) related factors may be among the reasons for the drop in science scores. There may be differences among countries in the effects of ICT related factors on science performance. If such differences exist, then different policy recommendations would be warranted for particular countries. Additionally, rapid developments in technology have led to a shift in education from traditional classroom learning environments to online or technology-integrated learning environments (Srijamdee & Pholphirul, 2020). Therefore, understanding the role of ICT related factors in students’ academic performance has been of prime importance in the twenty-first century (Oliver, 2002).

The purpose of this study was to explore the differential relationship between students’ science performance and students’ ICT availability, ICT use, and attitudes toward ICT in PISA 2015 for 35 countries located geographically in South/Latin America, Europe, and Asia and Pacific. We investigated the relative contribution of ICT related factors to the explained variance in science performance for each country. Afterwards, we explored the relationship between the relative explained variance in science performance and the ICT Development Index (IDI) of countries. The IDI has been published annually since 2009 by the International Telecommunication Union (ITU), the United Nations (UN) specialized agency for ICTs, in order to track and compare the ICT development of countries (ITU, 2015). To calculate the IDI, data are collected in three main areas, namely ICT access, ICT use, and ICT skills, based on 11 indicators (ITU, 2015).

2 Literature review

In the following sections, we describe the importance of ICT related factors for science performance and the student- and school-level variables that need to be controlled in order to isolate the relative contribution to explained variance by ICT related factors.

2.1 Importance of students’ ICT availability, ICT use, and attitudes toward ICT on academic performance

Research on the relationship between students’ reading, science, and mathematics performance and ICT related factors usually shows statistically significant positive or negative relationships, but at different levels for various countries. For example, Srijamdee and Pholphirul (2020) investigated students in Thailand as a representative of developing countries in order to understand the relationship between ICT familiarity and educational outcomes. The results showed that if students use ICT resources for educational purposes, then their science, reading, and mathematics outcomes improve, a result similar to that obtained for developed countries. However, if students do not use ICT resources for education, their educational outcomes either do not change or might worsen (Srijamdee & Pholphirul, 2020). They stated that students’ ICT use positively affects their educational outcomes if the time is limited to “1-30 min per day on weekdays in schools, and 2-4 hours per day on weekends” (p. 2968). Park and Weng (2020), using PISA 2015 data, found a small but positive and statistically significant relationship between attitudes toward ICT and science, mathematics, and reading performance across 39 countries. At the same time, ICT use at school and at home was negatively associated with the academic performance of students (Park & Weng, 2020). Park and Weng (2020) also conducted country-level analyses using the gross domestic product (GDP) and Gini coefficients of countries, which are indicators of countries’ economic levels. They found that the relationships of students’ ICT use for schoolwork at school and perceived ICT autonomy with achievement varied with both countries’ GDP per capita and their Gini coefficients. While the relationships between these two ICT variables and achievement were stronger in countries with higher GDP per capita, there was an inverse relationship between the Gini coefficient of countries and these two ICT variables (Park & Weng, 2020).
Hu et al. (2018) found that ICT availability at school, ICT interest, competence, and autonomy were positively correlated with science performance, whereas ICT availability at home and enjoyment of social interaction with ICT were negatively related to science performance. Hu et al. (2018) also noticed that students’ academic use and entertainment use of ICT were negatively associated with science performance for some countries and positively associated for others. Furthermore, their results showed that students from countries with higher ICT skills according to IDI level tended to show higher academic performance (Hu et al., 2018). Finally, Petko et al. (2017) showed that while ICT entertainment use and schoolwork use at school and home were negative indicators for many countries, students’ positive attitudes toward ICT had statistically significant and positive effects on all three subject areas for 30 participating countries. There was a large variation among these countries in terms of the degree of relationships (Petko et al., 2017). These studies indicated that the effects of ICT related factors on science performance varied across countries, but none of them conducted region-based analyses. Additionally, these studies did not control for many important student- and school-level variables. Lastly, these studies focused only on the relationship between ICT related factors and academic performance rather than on how much of the total variance can be explained by ICT related factors. Hence, the current study fills this gap in the literature by focusing on the variance in science performance explained by ICT related factors, including availability, use, and attitudes, depending on the IDI levels and regions of countries, after controlling for student- and school-level variables.

2.2 Factors predicting science performance of students

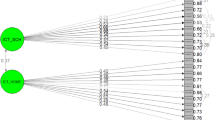

In order to identify the unique relationship between ICT availability, ICT use, and attitudes toward ICT and science performance, it is necessary to control for other salient variables that are related to science performance. Prior attempts to explain student outcomes appear to show a tension between focusing primarily on student-level variables and focusing on school-level variables. A widely adopted theory that focuses on student-level variables is Equality of Educational Opportunity (Coleman et al., 1966), which states that student-level factors are more important in explaining student outcomes, and hence those variables should be studied extensively. In the other camp, focusing on school-level variables, the Heyneman-Loxley effect (Heyneman & Loxley, 1983) states that school-level variables are more important for student outcomes. This tension can be resolved by synthesizing both approaches, forming a conceptual framework that combines student- and school-level factors and does not place an emphasis on either level a priori. Such a conceptual framework allows for capturing the effects of variables at both levels. Effects of important school-level variables can be missed if only student-level variables are included, and vice versa. In order to delineate the unique effects of the ICT-related variables, any other relevant variable needs to be accounted for. As shown in Fig. 1, in this conceptual framework, student-level factors are students’ science attitudes, students’ personal factors, students’ considerations about classroom learning environments, and parental factors. School-level factors are physical factors of schools and teacher-related factors.

2.2.1 Student-level factors

The Equality of Educational Opportunity theory, developed by Coleman et al. (1966), is widely used to clarify the importance of student-level factors for academic achievement. Coleman et al. (1966) claimed that the impacts of students’ personal factors on academic achievement are clearly greater than those of school-level factors. They found that differences among students within the same school were roughly four times larger than the differences between schools (Coleman et al., 1966).

PISA includes student-level variables that explain science performance. Prior research showed that self-efficacy (Maddux, 2002; Tzung-Jin, 2021), enjoyment of science (Hampden-Thompson & Bennett, 2013), instrumental motivation (Kula-Kartal & Kutlu, 2017), epistemological beliefs (OECD, 2016b), science-related activities (Grabau & Ma, 2017), achievement motivation (Steinmayr et al., 2019), sense of belonging to school (St-Amand et al., 2017), parents’ education (Güzeller & Şeker, 2016), parental emotional support (Perera, 2014), and ESCS (Dolu, 2018) are positively related to science performance. On the other hand, test anxiety has been shown to be negatively related to science performance (Ergene, 2011). The direction of the relationship between inquiry-based learning environment and science performance (Cairns & Areepattamannil, 2019) remains a controversial issue (Cairns, 2019). These student-level variables are included in the present study to control for the combined effects of non-ICT-related student-level variables on science performance. Such an approach allows for identifying the unique contribution of each ICT-related variable to explaining science performance.

2.2.2 School-level factors

Schools have an impact on students’ academic performance in all countries, to different degrees (Acar & Öğretmen, 2012; Martins & Veiga, 2010; Srijamdee & Pholphirul, 2020). According to Heyneman and Loxley (1983), the impacts of school-based factors on academic performance are larger than those of personal factors, especially for lower-income countries. They showed that in low-income countries school and teacher quality have a larger impact on pupils’ academic performance than their social and economic status. However, Baker et al. (2002) tested this assertion using the Third International Mathematics and Science Study (TIMSS) 1994 data and found that the effect of family background on academic performance was increasing, especially in poorer nations.

The importance of school-level factors is most evident in the large between-school variance observed in PISA studies. For example, the OECD average between-school variance is 37% based on PISA 2006 (Alacacı & Erbaş, 2010). Among the most salient factors that account for the between-school variance are physical factors (Areepattamannil, 2014; Sousa et al., 2012) and teacher certification levels (Grabau, 2016; Fuchs & Wößmann, 2007). School-level variables are included together with the non-ICT-related student-level variables in the present study to capture the combined effects of non-ICT-related variables at both levels on science performance. This approach allows for distinguishing the unique contributions of each of the ICT availability, ICT use, and ICT attitudes variables to predicting science performance.

2.3 The present study

In the PISA 2015 cycle, the test was given to students in computer-based form for the first time, and the OECD’s science performance average fell to its lowest level. Asking what might have caused this decrease, we proposed that there might be a differential relationship across countries and regions between students’ science performance and the ICT related factors. We hypothesized that for countries and regions that have relatively lower levels of ICT resources, ICT related factors might explain more variance in science performance, whereas for countries and regions that have relatively higher levels of ICT resources, the explained variance might be constant or decrease. By controlling for student- and school-level factors that are related to science performance, we aimed to explore the relationship between the variance of science performance explained by ICT related factors and the ICT development level of countries, by region. The following research question was investigated:

To what extent do the uniquely explained variances of science performance in PISA 2015 by ICT availability, ICT use, and attitudes towards ICT differ among countries and regions?

3 Method

3.1 Participants

In 2015, nearly 540,000 students from 72 countries, aged between 15 years and three months and 16 years and two months, participated in PISA 2015 (OECD, 2017). These students were selected via two-stage stratified sampling to represent 29 million students. In the present study, the data of 35 countries from three different regions of the world that have all the control and ICT related variables were included. Detailed information on participating students, coverage of the national desired target population, and the regions of the countries is given in Appendix 1. Listwise deletion was used to handle missing values in the study.

3.2 Instruments

All data in this study were downloaded from the OECD’s public PISA 2015 database (OECD, 2019a) and the ITU database (ITU, 2015). PISA collects information not only at the student level but also at the school and parent levels for science literacy, mathematics literacy, and reading literacy cross-nationally. The independent variables of the current study were the ICT related factors: ICT availability, ICT use, and attitudes toward ICT. These variables were measured by the related student questionnaires. ICT availability had two subscales: the index of access to ICT at home and the index of access to ICT at school. ICT use had three subscales: ICT use outside of school for leisure, ICT use outside of school for schoolwork, and use of ICT at school in general. Attitudes toward ICT had four subscales: students’ ICT interest, students’ perceived ICT competence, students’ perceived autonomy related to ICT use, and students’ ICT as a topic in social interaction. A summary of all student- and school-level control variables, as well as the ICT related variables and their abbreviations, is given in Table 1 (OECD, 2019b).

3.3 Data analysis

We conducted two-level regression analyses in order to answer the research question. Sample size, linearity, homoscedasticity, independence of errors, normality of residuals, and multicollinearity are the assumptions of two-level regression (Field, 2009; Keith, 2014; Pallant, 2007; Tabachnick & Fidell, 2007). All the assumptions were checked and found to be met. Mplus 7.3 (Muthén & Muthén, 1998–2014) was used to conduct the analysis as it can take into account plausible values and sampling weights simultaneously.
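To illustrate what "taking plausible values into account" commonly involves, the sketch below pools one estimate per plausible value with Rubin's combination rules. This is an assumption about the general technique, not a description of the settings used in Mplus for this study; the function name and the three example estimates are hypothetical, and the per-value sampling variances (which in PISA would normally come from the survey weights) are simply supplied as inputs.

```python
from math import sqrt
from statistics import mean, variance


def pool_plausible_values(estimates, sampling_variances):
    """Pool one estimate per plausible value using Rubin's rules.

    estimates: the same statistic (e.g. a regression coefficient)
        computed once per plausible value.
    sampling_variances: squared standard error of each estimate.
    Returns (pooled_estimate, pooled_standard_error).
    """
    m = len(estimates)
    if m < 2 or m != len(sampling_variances):
        raise ValueError("need >= 2 estimates and matching variances")
    pooled = mean(estimates)            # average over plausible values
    within = mean(sampling_variances)   # average sampling variance
    between = variance(estimates)       # variance across plausible values
    total = within + (1 + 1 / m) * between
    return pooled, sqrt(total)


# Hypothetical coefficients from three plausible values
estimate, std_error = pool_plausible_values(
    [10.0, 12.0, 11.0], [4.0, 4.0, 4.0]
)
print(estimate, round(std_error, 3))
```

The pooled standard error is larger than the average per-value standard error because the spread across plausible values (measurement uncertainty) is added to the sampling uncertainty.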

In order to obtain the uniquely explained variance of science performance by ICT related factors, the following procedures were followed. First, a baseline model with related student- and school-level control variables was fitted to the data to obtain the initial explained variances (R2 values) for each country. Second, the ICT availability variables were added to the baseline model (Model 1) and R2 values were obtained. Third, by removing ICT availability variables and adding the ICT use variables to the baseline model (Model 2), R2 values were obtained. Finally, the ICT use variables were removed and the attitudes toward ICT variables were added to the baseline model (Model 3) and R2 values were obtained. The R2 differences between each model and the baseline model (ΔR2) were calculated.
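The bookkeeping in this procedure can be sketched as follows. The R2 values are hypothetical placeholders for a single country, not values from Table 2, and the function name `delta_r2` is introduced only for illustration; fitting the two-level models themselves is not shown.

```python
def delta_r2(baseline_r2, model_r2):
    """Return R2 change of each model relative to the baseline model.

    baseline_r2: R2 of the model with control variables only.
    model_r2: mapping from ICT block name to the R2 of the model that
        adds only that block to the baseline.
    """
    return {
        block: round(r2 - baseline_r2, 3)
        for block, r2 in model_r2.items()
    }


# Hypothetical R2 values for one country
baseline = 0.40
models = {
    "availability": 0.42,  # Model 1: baseline + ICT availability
    "use": 0.45,           # Model 2: baseline + ICT use
    "attitudes": 0.43,     # Model 3: baseline + attitudes toward ICT
}
changes = delta_r2(baseline, models)
print(changes)
```

Because each ICT block is added to the baseline separately rather than cumulatively, the three ΔR2 values are not expected to sum to the R2 gain of a model containing all ICT variables at once.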

Then, the relationships between ΔR2 values and IDI levels of the countries were plotted for each model to examine whether the R2 changes differed for some groups of countries. The importance of ICT related factors in predicting science performance might differ according to countries’ ICT Development Index. In these plots, the best-fit lines and 95% confidence interval bands were drawn using linear regression with the least squares method, for countries grouped by region. The unstandardized regression coefficients (b), t and p values are reported for each trend line. Due to the small number of data points in each region, the interpretations of results and conclusions are based on visual inspection of the trends. While we realize that this is a limitation, we argue that the plots and associated statistics allow the readers to judge our conclusions.
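A minimal sketch of one regional trend line is given below, assuming ordinary least squares with ΔR2 as the outcome and IDI as the predictor. The four data points are hypothetical, the function name `trend_line` is illustrative, and the p value and confidence band are omitted; the sketch returns only the slope b, its t statistic, and the degrees of freedom (n − 2).

```python
from math import sqrt


def trend_line(idi, delta_r2):
    """Least squares fit of delta_r2 on idi for one region.

    Returns (b, t, df): the unstandardized slope, its t statistic,
    and the residual degrees of freedom (n - 2).
    """
    n = len(idi)
    if n < 3 or n != len(delta_r2):
        raise ValueError("need >= 3 paired observations")
    mean_x = sum(idi) / n
    mean_y = sum(delta_r2) / n
    sxx = sum((x - mean_x) ** 2 for x in idi)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(idi, delta_r2))
    b = sxy / sxx                      # slope
    a = mean_y - b * mean_x            # intercept
    sse = sum((y - (a + b * x)) ** 2 for x, y in zip(idi, delta_r2))
    df = n - 2
    se_b = sqrt(sse / df / sxx)        # standard error of the slope
    t = b / se_b if se_b > 0 else float("inf")
    return b, t, df


# Hypothetical region with four countries
b, t, df = trend_line([4.0, 5.0, 6.0, 7.0], [0.010, 0.020, 0.020, 0.040])
print(round(b, 4), round(t, 3), df)
```

With so few countries per region, a sizeable slope can still have a modest t statistic, which is why the interpretation relies on visual inspection rather than on significance alone.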

4 Results

4.1 Explained variance in multilevel regression models

The second column of Table 2 shows the variance of science performance explained by the control variables for each country (baseline model). The columns to its right present the differences in explained variance when each set of ICT related factors was added to the baseline model. These R2 differences reflect the unique relationships between ICT related factors and science performance when student- and school-level variables were controlled.

As shown in Table 2, on average, ICT use explained more variance in science performance than attitudes toward ICT and ICT availability. The highest explained variance for ICT availability was in Croatia (0.044), for ICT use in Brazil (0.056), and for attitudes toward ICT in Macao (0.046). This means that ICT related factors could explain around 5% additional variance in science performance. Note that this variance is explained over and above all the controlled student- and school-level variables. Finland is of interest, as for all of the ICT related factors the unique variance explained was less than 1%. First, this finding showed that ICT related factors could predict students’ science performance while controlling for many important student- and school-level variables. Second, it was concluded that the relationship between ICT related factors and science performance differed for each country and region.

4.2 The differential relationships in explained variances across geographical regions

Having observed differential relationships in the variance of science performance explained by ICT related factors, we examined the patterns of these relationships. The relationships between R2 changes and countries’ ICT Development Indexes were investigated.

4.2.1 ICT availability

Based on Fig. 2, a relationship between the variance of science performance explained by ICT availability in the countries and regions and their IDI scores was observed. The solid blue, green, and red trend lines and the gray bands surrounding each line were obtained using linear regression, regressing R2 change on IDI scores. These trend lines are based on few data points, which lowers the sensitivity to detect statistical significance; hence, instead of statistical significance, we focus on visual inspection and interpretation. In South/Latin America, the explained variances increase as IDI scores of countries increase (b = 0.008, t (5) = 1.334, p = 0.24). Conversely, in Europe, the variances of science performance explained by ICT availability decrease as IDI scores increase (b = −0.005, t (21) = −1.755, p = 0.09). In Asia and Pacific, the explained variance is similar across countries (b = 0.001, t (3) = 0.21, p = 0.84). Overall, it was observed that if a country had a lower IDI, ICT availability was more important in predicting science performance, whereas when a country had a high IDI, ICT availability played a smaller role in predicting science performance. This means that in countries with lower IDI, ICT availability could make a difference to students’ science performance. When the IDI level is high, the science performance of students is more related to variables other than ICT availability.

4.2.2 ICT use

Fig. 3 shows that ICT use followed a similar pattern to ICT availability with respect to the IDI scores of countries and regions. There was a relationship between the variance of science performance explained by ICT use and the IDI levels of countries. In South/Latin America, the explained variances increase as IDI scores of countries increase (b = 0.008, t (5) = 1.334, p = 0.24). On the other hand, in Europe, the explained variances decrease as IDI levels of countries increase (b = −0.007, t (21) = −2.259, p = 0.04). For Asia and Pacific, the explained variances do not change with the IDI level of countries across the region (b = 0.001, t (3) = 0.308, p = 0.78). Therefore, if a country had a lower IDI, students’ ICT use outside of school for leisure and schoolwork, and at school in general, was more important in predicting science performance, whereas when a country had a high IDI, the explained variance decreased. This means that in countries with lower IDI, ICT use could make a difference to students’ science performance. When the IDI level is high, the science performance of students is more related to variables other than ICT use.

4.2.3 Attitudes toward ICT

Based on Fig. 4, a relationship between the variances explained by attitudes toward ICT in the countries and regions and their IDI scores was observed. In South/Latin America, the explained variances increase as IDI scores of countries increase (b = 0.006, t (5) = 1.931, p = 0.11). Conversely, in Asia and Pacific, the explained variances decrease slightly as IDI scores of countries increase (b = −0.001, t (3) = −0.582, p = 0.60). Finally, in Europe, there was no change in the explained variances with the IDI level of countries across the region (b = −0.000, t (21) = −0.104, p = 0.92). Overall, for countries with low IDI, a higher IDI level was associated with more variance in science performance explained by attitudes toward ICT, whereas for countries with high IDI, a higher IDI level was not associated with a change in the explained variance. Notably, in Europe the variance in students’ science performance explained by attitudes toward ICT does not change with the IDI levels of countries.

5 Discussion and conclusion

The purpose of this study was to investigate to what extent there was a differential relationship between ICT related factors and science performance across countries and regions. The overall result of the study highlighted that, in PISA 2015, there was a differential relationship between the variance of science performance explained by ICT availability, ICT use, and attitudes toward ICT and the IDI scores of countries, depending on the region. The conclusion is important because it was obtained after controlling for many student- and school-level variables.

The major result of the study showed that when IDI levels of countries are low, ICT related factors become more important in explaining variance in students’ science performance. This finding is in line with the results of Srijamdee and Pholphirul (2020) that ICT familiarity is important for students’ science, mathematics, and reading outcomes in developing countries because “countries with low ICT levels (and low ranks) are primarily from developing world” (ITU, 2009). More specifically, for ICT use, when IDI levels of countries increase in Europe, the explained variance decreases. The reason for this could be that many countries in Europe have the highest IDI scores (ITU, 2009), and so students in these countries might be using ICT resources for longer than the optimal time limits of “1-30 min per day on weekdays in schools and 2-4 hours per day on weekends” (Srijamdee & Pholphirul, 2020, p. 2968).

Hu et al. (2018) claimed that IDI levels of countries had a positive influence on the science performance of students. Consistent with Hu et al. (2018), in this study we found that the variances explained by ICT factors were also related to the national ICT development level. It was observed that when a country had a low ICT Development Index (IDI) value, students’ ICT availability, use, and attitudes toward ICT could explain more variance in science performance. Beyond a certain level of IDI scores, students’ ICT availability, use, and attitudes toward ICT would make a very limited difference in explaining variation in PISA 2015 science performance. Beyond that, we noticed that the importance of countries’ IDI levels for how much ICT factors explain science performance changes according to the regions of countries.

In PISA-focused studies, the importance of ICT related factors varied across countries. For example, Park and Weng (2020) stated that students’ ICT use for schoolwork at school and perceived ICT autonomy had more impact on the academic performance of students in countries with high GDP per capita. Hu et al. (2018) also showed the changing significance of ICT use for many participating countries and found negative or no relationship in PISA 2015. Petko et al. (2017) showed that positive attitudes toward ICT were a statistically significant predictor of science performance in 30 of the 39 participating countries, but at different levels. The results of the current study concurred with these findings and offered a more comprehensive perspective on the differential relationship. In South/Latin America, as IDI levels of countries increase, the variances explained by ICT availability, ICT use, and attitudes toward ICT increase. In Europe, as IDI levels of countries increase, the variances explained by ICT availability and ICT use decrease. However, the variance explained by attitudes toward ICT did not change with the IDI levels of countries. In Asia and Pacific, as IDI levels of countries increase, the variances explained by ICT availability and ICT use increase, but the variances explained by attitudes toward ICT decrease.

On the other hand, although we controlled for many important student- and school-level variables, there is still a relationship between ICT factors and science performance. To illustrate, the medians of the explained variances are 1.6% for ICT availability, 2.7% for ICT use, and 2.1% for attitudes toward ICT, and these three ICT factors can make a difference of up to 4.4% in Croatia, 5.6% in Brazil, and 4.6% in Macao, respectively, as shown in Table 2. As a result, even if there is a differential relationship for countries and regions, ICT factors can be important for explaining the PISA 2015 science performance of students in almost all countries and regions after controlling for many variables.

There are many contradictory studies on the role of ICT related factors in the academic performance of students (Hu et al., 2018; Petko et al., 2017; Zhang & Liu, 2016). One of the reasons for the contradictory results could be the choice of controlled variables. In the current study, student- and school-level variables were chosen according to the conceptual framework. For that reason, the reported results provide unique contributions to the literature. Furthermore, a region-based approach to searching for trends appears to have potential for understanding similarities and differences in the contribution of different variables to students’ science performance.

For policymakers, the results imply that not only ICT development levels but also the region of a country may need to be considered in making educational policy decisions. For example, countries in South/Latin America may benefit from continuing investment in ICT infrastructure and from providing ways for students to access ICT resources, to increase ICT availability and consequently ICT use. On the other hand, for most countries in Europe such a recommendation is not warranted. It appears that ICT availability is already saturated in Europe; hence, instead of further investment in ICT infrastructure, focusing efforts on guiding how students use ICT appears to be more relevant. As discussed, one reason for the lower variance in science performance explained by ICT related factors at higher IDI scores might be that students spend too much time using ICT. If this is the case for developed countries, one such policy guidance may involve recommending effective amounts of ICT use time. A second implication for educational policy is that it may be more effective to consider ICT related factors separately from each other rather than producing a cumulative perspective. For example, in Europe, while the variances explained by ICT availability remain almost constant with respect to increasing IDI levels, the variances explained by ICT use decrease with increasing IDI levels.

This study has a few limitations. First, only 35 of the 72 countries that participated in PISA 2015 could be included in the current study because only these 35 countries had ICT related datasets. Hence, other countries had to be excluded. This is a major concern for two of the three regions we investigated because the data points were limited. Further research is required to replicate the current results. Such research is only possible with data from additional countries; hopefully, more countries will choose to take part in the ICT related data collection in the following PISA cycles.

Data availability

All data were taken from the official OECD website: https://www.oecd.org/pisa/data/2015database/

References

Acar, T., & Öğretmen, T. (2012). Analysis of 2006 PISA science performance via multilevel statistical methods. Education and Science, 37(163), 178–189.

Alacacı, C., & Erbaş, A. K. (2010). Unpacking the inequality among Turkish schools: Findings from PISA 2006. International Journal of Educational Development, 30(2), 182–192. https://doi.org/10.1016/j.ijedudev.2009.03.006.

Areepattamannil, S. (2014). What factors are associated with reading, mathematics, and science literacy of Indian adolescents? A multilevel examination. Journal of Adolescence, 37(4), 367–372. https://doi.org/10.1016/j.adolescence.2014.02.007.

Baker, D. P., Goesling, B., & Letendre, G. K. (2002). Socioeconomic status, school quality, and national economic development: A cross-national analysis of the “Heyneman Loxley effect” on mathematics and science achievement. Comparative Education Review, 46(3), 291–312. https://doi.org/10.1086/341159.

Cairns, D. (2019). Investigating the relationship between instructional practices and science achievement in an inquiry-based learning environment. International Journal of Science Education, 41(15), 2113–2135. https://doi.org/10.1080/09500693.2019.1660927.

Cairns, D., & Areepattamannil, S. (2019). Exploring the relations of inquiry-based teaching to science achievement and dispositions in 54 countries. Research in Science Education, 49(1), 1–23. https://doi.org/10.1007/s11165-017-9639-x.

Coleman, J. S., Campbell, E. Q., Hobson, C. J., McPartland, F., Mood, A. M., Weinfeld, G. D., & York, R. L. (1966). Equality of educational opportunity. U.S. Government Printing Office.

Dolu, A. (2018). Mathematical analysis of equal opportunities in Turkey in education using PISA 2015 results. Suleyman Demirel University the Journal of Faculty of Economics and Administrative Sciences, 23(3), 924–935.

Ergene, T. (2011). The relationship among test-anxiety, study habits, achievement motivation, and academic performance among Turkish high school students. Education and Science, 36(160), 320–330.

Field, A. (2009). Discovering statistics using SPSS: And sex and drugs and rock ‘n’ roll (3rd ed.). Sage.

Fuchs, T., & Wößmann, L. (2007). What accounts for international differences in student performance? A reexamination using PISA data. Empirical Economics, 32, 433–464. https://doi.org/10.1007/s00181-006-0087-0.

Grabau, L. J. (2016). Aspects of science engagement, student background, and school characteristics: Impact on science achievement of U.S. students. Theses and dissertations--educational, school, and counseling psychology, 5.

Grabau, L. J., & Ma, X. (2017). Science engagement and science achievement in the context of science instruction: A multilevel analysis of U.S. students and schools. International Journal of Science Education, 39(8), 1045–1068. https://doi.org/10.1080/09500693.2017.1313468.

Güzeller, C. O., & Şeker, F. (2016). Variables associated with students’ science achievement in the programme for international student assessment (PISA 2009). Necatibey Faculty of Education Electronic Journal of Science and Mathematics Education, 10(2), 1–20.

Hampden-Thompson, G., & Bennett, J. (2013). Science teaching and learning activities and students' engagement in science. International Journal of Science Education, 35(8), 1–19. https://doi.org/10.1080/09500693.2011.60809.

Heyneman, S. P., & Loxley, W. A. (1983). The effect of primary-school quality on academic achievement across twenty-nine high- and low-income countries. American Journal of Sociology, 88(6), 1162–1194. https://doi.org/10.1086/227799.

Hu, X., Gong, Y., Lai, C., & Leung, F. K. S. (2018). The relationship between ICT and student literacy in mathematics, reading, and science across 44 countries: A multilevel analysis. Computers & Education, 125(1), 1–13. https://doi.org/10.1016/j.compedu.2018.05.021.

ITU (International Telecommunication Union) (2009). Measuring the information society. Retrieved from https://www.itu.int/ITU-D/ict/publications/idi/material/2009/MIS2009_w5.pdf

ITU (International Telecommunication Union) (2015). Measuring the information society. Retrieved from https://www.itu.int/en/ITU-D/Statistics/Documents/publications/misr2015/MISR2015-ES-E.pdf

Kastberg, D., Chan, J. Y., & Murray, G. (2016). Performance of US 15-year-old students in science, reading, and mathematics literacy in an international context: First look at PISA 2015 (NCES 2017-048). U.S. Department of Education. Washington, DC: National Center for Education Statistics. Retrieved [date] from http://nces.ed.gov/pubsearch.

Keith, T. Z. (2014). Multiple regression and beyond: An introduction to multiple regression and structural equation modeling. Routledge.

Kula-Kartal, S., & Kutlu, Ö. (2017). Identifying the relationship between motivational features of high and low performing students and science literacy achievement in PISA 2015 Turkey. Journal of Education and Training Studies, 5(12), 146–154. https://doi.org/10.11114/jets.v5i12.2816.

Maddux, J. E. (2002). Self-efficacy: The power of believing you can. In C. R. Snyder & S. J. Lopez (Eds.), Handbook of positive psychology (pp. 277–287). Oxford University Press.

Martins, L., & Veiga, P. (2010). Do inequalities in parents’ education play an important role in PISA students’ mathematics achievement test score disparities? Economics of Education Review, 29(6), 1016–1033. https://doi.org/10.1016/j.econedurev.2010.05.001.

Muthén, L. K., & Muthén, B. O. (1998–2014). Mplus (version 7.3) [computer software]. Los Angeles: Author.

OECD. (2016a). PISA 2015 results in focus. PISA, OECD Publishing, Paris.

OECD. (2016b). PISA 2015 results (volume I): Excellence and equity in education. PISA, OECD Publishing, Paris. https://doi.org/10.1787/9789264266490-en.

OECD. (2017). PISA 2015 technical report. OECD Publishing, Paris.

OECD. (2019a). PISA 2015 database. Retrieved from https://www.oecd.org/pisa/data/2015database/

OECD. (2019b). Science performance (PISA) (indicator). OECD Publishing, Paris. https://doi.org/10.1787/91952204-en (Accessed on 28 October 2019).

Oliver, R. (2002). The role of ICT in higher education for the 21st century: ICT as a change agent for education. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.83.9509&rep=rep1&type=pdf

Pallant, J. (2007). SPSS survival manual-a step by step guide to data analysis using SPSS for windows (3rd ed.). Maidenhead: Open University Press.

Park, S., & Weng, W. (2020). The relationship between ICT-related factors and student academic achievement and the moderating effect of country economic indexes across 39 countries: Using multilevel structural equation modelling. Educational Technology & Society, 23(3), 1–15.

Perera, L. D. H. (2014). Parents' attitudes towards science and their children's science achievement. International Journal of Science Education, 36(180), 3021–3041. https://doi.org/10.1080/09500693.2014.949900.

Petko, D., Cantieni, A., & Prasse, D. (2017). Perceived quality of educational technology matters: A secondary analysis of students’ ICT use, ICT-related attitudes, and PISA 2012 test scores. Journal of Educational Computing Research, 54(8), 1070–1091. https://doi.org/10.1177/0735633116649373.

Royal Society (Great Britain). (2014). Vision for science and mathematics education.

Sousa, S., Park, E. J., & Armor, D. J. (2012). Comparing effects of family and school factors on cross-national academic achievement using the 2009 and 2006 PISA surveys. Journal of Comparative Policy Analysis, 14(5), 449–468. https://doi.org/10.1080/13876988.2012.726535.

Srijamdee, K., & Pholphirul, P. (2020). Does ICT familiarity always help promote educational outcomes? Empirical evidence from PISA-Thailand. Education and Information Technologies, 25, 2933–2970. https://doi.org/10.1007/s10639-019-10089-z.

Steinmayr, R., Weidinger, A. F., Schwinger, M., & Spinath, B. (2019). The importance of students’ motivation for their academic achievement: Replicating and extending previous findings. Frontiers in Psychology, 10, 1730. https://doi.org/10.3389/fpsyg.2019.01730.

St-Amand, J., Girard, S., & Smith, J. (2017). Sense of belonging at school: Defining attributes, determinants, and sustaining strategies. Journal of Education, 5(2), 105–119.

Tabachnick, B. G., & Fidell, L. S. (2007). Using multivariate statistics (5th ed.). Pearson Education.

Tzung-Jin, L. (2021). Multi-dimensional explorations into the relationships between high school students’ science learning self-efficacy and engagement. International Journal of Science Education, 1–15. https://doi.org/10.1080/09500693.2021.1904523.

Valladares, L. (2021). Scientific literacy and social transformation. Science & Education. https://doi.org/10.1007/s11191-021-00205-2.

Zhang, D., & Liu, L. (2016). How does ICT use influence students’ achievements in math and science over time? Evidence from PISA 2000 to 2012. Eurasia Journal of Mathematics, Science & Technology Education, 12(10), 2431–2449. https://doi.org/10.12973/eurasia.2016.1297.

Funding

No funds, grants, or other support was received.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Sümeyye Arpacı, Fatih Çağlayan Mercan, and Serkan Arıkan. This draft of the manuscript was written by Sümeyye Arpacı, Fatih Çağlayan Mercan, and Serkan Arıkan, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Sümeyye Arpacı is the corresponding author.

Ethics declarations

Conflicts of interest/competing interests

The authors have no conflict of interest to report.

Code availability

Mplus 7.3 (Muthén & Muthén, 2014) was used to conduct the analysis.

Ethics approval

Not applicable.

Consent to participate & publication

Not applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This manuscript expands on the Master of Science thesis completed and published by Sümeyye Arpacı in 2020 at Bogazici University, for which Fatih Çağlayan Mercan was the thesis advisor and Serkan Arıkan was the co-advisor.

Appendix

About this article

Cite this article

Arpacı, S., Mercan, F.Ç. & Arıkan, S. The differential relationships between PISA 2015 science performance and, ICT availability, ICT use and attitudes toward ICT across regions: evidence from 35 countries. Educ Inf Technol 26, 6299–6318 (2021). https://doi.org/10.1007/s10639-021-10576-2

DOI: https://doi.org/10.1007/s10639-021-10576-2