Abstract

Accounting firms are reporting the use of Artificial Intelligence (AI) in their auditing and advisory functions, citing benefits such as time savings, faster data analysis, increased levels of accuracy, more in-depth insight into business processes, and enhanced client service. AI, an emerging technology that aims to mimic the cognitive skills and judgment of humans, promises competitive advantages to the adopter. As a result, all of the Big 4 firms are reporting its use and their plans to continue with this innovation in areas such as audit planning risk assessments, tests of transactions, analytics, and the preparation of audit work-papers, among other uses. As the uses and benefits of AI continue to emerge within the auditing profession, there is a gradual awakening to the fact that unintended consequences may also arise. Thus, we heed the call of numerous researchers not only to explore the benefits of AI but also to investigate the ethical implications of the use of this emerging technology. By combining two futuristic ethical frameworks, we forecast the ethical implications of the use of AI in auditing, given its inherent features, nature, and intended functions. We provide a conceptual analysis of the practical ethical and social issues surrounding AI, using past studies as well as our inferences based on the reported use of the technology by auditing firms. Beyond the exploration of these issues, we also discuss responsibility for the policy and governance of emerging technology.

Introduction

The ethics of emerging technology is evoking the interest of a broader population beyond ethicists. Academia, professionals, policymakers, regulators, and developers of these technologies are increasingly becoming aware of the existence of valid ethical concerns that stem from the use of these technologies (Wright and Schultz 2018; Aicardi et al. 2018). The term ‘emerging technologies’ itself has been the subject of much debate, with little consensus in the literature on what technologies qualify as emergent (Rotolo et al. 2015). Rotolo et al. (2015) define emerging technology as being of “radical novelty, relatively fast growth, prominent impact, uncertainty, and ambiguity.” Any technology that meets this definition would be of high risk due to the potentially significant impact of the uncertainties surrounding the technology. For example, emerging technologies experience rapid growth, which would make timely remediation of unanticipated risks unfeasible. With this backdrop, ethics researchers have sought the best approach for identifying the ethical risks surrounding emerging technology during their introductory phase (Allen et al. 2005; Wright 2011; Brey 2012; Stahl et al. 2017). This endeavor has proven to be challenging since the ethical implications of using emerging technology usually become more evident after long-term use.

Emerging technologies promise faster, cheaper, and more accurate analysis of massive data, which has led to an unprecedented surge in their use in almost every area of business. With good reason, auditors are also increasingly relying on emerging technologies, such as artificial intelligence (AI) systems that use sophisticated algorithms. However, these types of technologies have come under the scrutiny of technology bodies (e.g., the US Public Policy Council of the Association for Computing Machinery). In general, the concern is that machine learning algorithms programmed on the basis of cognitive tasks previously performed by humans can easily incorporate the limitations inherent in human judgment. For example, research by IBM identifies that bias in the training data and algorithms of AI systems could ultimately lead the users of these systems to unfair decisions (IBM 2018). AI is a technology that is programmed to mimic human judgment and cognitive skills and can be designed to take environmental cues. Based on these cues, AI systems can assess risks in order to make decisions or predictions, or to take actions. Unlike other software, AI systems “learn” from data and can evolve over time through exposure to new data, without being explicitly programmed by a human (Shaw 2019).

The Big 4 audit firms have made, and continue to make, significant investments in AI for both their advisory and assurance practices (Issa et al. 2016). In the assurance practice, AI is being used to perform auditing and accounting procedures such as the review of general ledgers, tax compliance, the preparation of work-papers, data analytics, expense compliance, fraud detection, and decision-making. AI promises the capability to review unstructured data in real time and to provide a concise analysis of numerical, textual, and visual data. In the face of big data (relevant and irrelevant), intelligent systems can effectively point an auditor towards higher risk areas (Brown-Liburd et al. 2015). However, as companies and auditors rely more and more on AI, they may make several underlying assumptions. The first is that these systems are always accurate; the second is that they will always behave within the desired constraints; and the third is that any divergence from those constraints will be detectable and correctable. These assumptions do not always hold, which has ethical, legal, and economic implications.

There are projections that 30% of corporate audits will be performed by AI by 2025 (World Economic Forum 2015). Omoteso et al. (2010) interviewed accounting firms, including the Big 4 firms, and observed that technology is indeed reshaping the role of auditors. They found that continuous auditing and AI were among the technologies anticipated to gain prominence within the profession. It remains undetermined what a massive rollout of AI in place of (or to complement) professionals in accounting and auditing will bring, and how it will affect audit quality. However, when technologies influence human actions, a morally justifiable form of technology is required (Verbeek 2006).

As such, we draw on two established ethical frameworks for emerging technology to forecast the ethical implications of AI in an audit context. Given the uncertainty about AI’s future impact on the auditing profession, the frameworks used in our conceptual analysis provide an insightful approach to analyzing its ethical implications. The motivation for our analysis comes from the resounding call for research into the impact of the use of AI in accounting and auditing (e.g., Issa et al. 2016; Sutton et al. 2016; Kokina and Davenport 2017). Researchers’ interest in AI parallels the increasing use of AI by accounting firms (EY 2017a; KPMG 2017; Deloitte 2018; PWC 2018).

The overall objective of this conceptual analysis is to identify potential ethical issues that the audit profession should consider as it adopts AI technologies. As such, we neither review ethical theory nor perform a detailed analysis of the technical design of AI. Instead, we focus on the practical ethical issues that arise from the features of AI at the technology and artifact levels. We then consider these issues in the context of the nature of auditing, the social functions that auditing is intended to serve, and the existing ethical codes of conduct that auditors must follow. The ethical code of conduct consists of enforceable rules as well as principles that guide behavior (i.e., responsibilities, public interest, objectivity, due care, and integrity). However, the code does not presently consider the current or future use of emerging technologies such as AI. For example, we do not know whether and how the requirements under the code can be met, given the unique features of AI. One such scenario is an auditor’s reliance on a complex AI algorithm to perform an audit task: if the auditor does not understand the algorithm’s reasoning, the auditor’s exercise of due professional care could be compromised. Given how rapidly the technology is advancing, the profession will have less time to consider the ethical challenges of AI. A proactive, rather than reactive, approach to the ethical implications of AI is therefore warranted.

There is a need for ethical governance of AI within the firms that implement it. Regulatory guidance and oversight, supported by updated standards that adapt to technological advances, are also required. Regulatory bodies, such as the Public Company Accounting Oversight Board (PCAOB) and the International Auditing and Assurance Standards Board (IAASB), aim to initiate oversight programs to anticipate and respond to the risks posed by the use of emerging technologies (PCAOB 2018; IAASB 2018). One of the Big 4 firms has already observed that “AI technologies are rapidly outpacing the organizational governance and controls that guide their use” in firms (Cobey et al. 2018). Given this situation, this paper seeks to inform practitioners, academics, and regulators about the ethical implications of the use of AI at various levels of the profession.

The paper proceeds as follows. First, we provide a general introduction to AI. Then, we lay out the case for ethical research into the use of AI in auditing. Next, we describe the two futuristic frameworks used in this paper. These frameworks form the basis of our methodological analysis of potential ethical implications associated with the use of AI. Specifically, we first provide a general analysis of AI technology and its resultant artifacts, independent of its application to auditing. In the second step of the methodological analysis, we examine the current use of AI by auditing firms and build on the ethical issues identified in the first step of our methodology. We then project what the ethical and social implications will be for the auditing profession when using this emerging technology. The last step of the methodological analysis proposes the necessary steps towards responsibility, policies, and the governance of AI within the profession. Finally, we provide concluding remarks.

AI Technology and Artifacts

The Oxford English Dictionary defines AI as “the capacity of computers or other machines to exhibit or simulate intelligent behavior” (OED Online 2019). We take ‘intelligent behavior’ to include attributes such as the capacity to observe and perceive one’s surroundings, the ability to extract information from speech or text, learning from acquired information, and the use of such information to make decisions, among other intelligence-related activities. Huang and Rust (2018) describe four levels of intelligence that AI systems can exhibit: mechanical, analytical, intuitive, and empathetic. Although AI is not a new technology, having been conceptualized in the 1940s (Copeland 2004), it is still considered an emerging technology because the techniques used to implement it are evolving radically (Stahl et al. 2013, 2017). Additionally, companies are currently investing significant resources in the development and proliferation of AI. Stahl et al. (2017) observe that AI techniques are “currently undergoing major developments that will dramatically increase their social impact.” One of the Big 4 audit firms anticipates that ongoing business investment in AI will yield global productivity gains of some $6.6 trillion by 2030.

In its report, PwC (2017) describes three types of AI artifacts that will generate these financial gains. The first type, Assisted AI systems, supports humans in making decisions or taking actions. Assisted AI systems exhibit mechanical intelligence, which enables them to perform routine, repetitive tasks. Humans who use these systems retain decision-making responsibility. Assisted AI artifacts are usually applied to existing procedures. For example, a Microsoft research team reported an AI application that could transcribe conversational speech into text as accurately as professional human transcribers (Xiong et al. 2016). Such an application can help businesses transcribe customer calls to gain better insight into customer needs and to evaluate the performance of support agents (Microsoft 2019).

The second type, Augmented AI systems, supplements human decision-making and increasingly learns from human and environmental interactions (PwC 2017). These systems thus exhibit analytical intelligence, which enables the AI to learn from data and process information for problem-solving. In this setting, humans and AI are co-decision-makers. Augmented AI artifacts enable companies to perform activities that were not previously possible. For example, Topol (2019) documents a host of AI applications in the medical field that rapidly scan patient data and provide clinicians with accurate interpretations to facilitate diagnosis.

Finally, the third type, Autonomous AI systems, can adapt to different situations and thereby act independently, without human assistance. In this setting, humans delegate decision-making to AI. Autonomous AI systems exhibit both intuitive and empathetic intelligence. Intuitive intelligence enables the AI to adjust creatively and effectively to novel situations, and empathetic intelligence enables it to understand human emotions and to respond to and influence humans appropriately. Because it operates without human intervention, Autonomous AI would require enhanced intuitive intelligence to deal with new situations, and perhaps the more advanced empathetic intelligence to interact with humans effectively. Huang and Rust (2018) give numerous examples of autonomous AI applications in the service industry, including chatbots that provide direct customer support.
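As a compact summary of the three artifact types, the following Python sketch records, for each type, the intelligence level and the decision role that the text attributes to it. It is only an illustrative encoding; the names `DecisionRole`, `ArtifactType`, `ARTIFACT_TYPES`, and `human_in_loop` are hypothetical and do not come from PwC (2017) or Huang and Rust (2018).

```python
from dataclasses import dataclass
from enum import Enum


class DecisionRole(Enum):
    """Who holds decision-making authority for an AI artifact."""
    HUMAN_DECIDES = "human retains decision-making"
    CO_DECISION = "human and AI are co-decision-makers"
    DELEGATED = "human delegates decision-making to AI"


@dataclass(frozen=True)
class ArtifactType:
    name: str
    intelligence: tuple[str, ...]  # intelligence level(s) the artifact exhibits
    role: DecisionRole


# Hypothetical encoding of the three artifact types described in the text.
ARTIFACT_TYPES = (
    ArtifactType("assisted", ("mechanical",), DecisionRole.HUMAN_DECIDES),
    ArtifactType("augmented", ("analytical",), DecisionRole.CO_DECISION),
    ArtifactType("autonomous", ("intuitive", "empathetic"), DecisionRole.DELEGATED),
)


def human_in_loop(artifact: ArtifactType) -> bool:
    """True when a human still makes, or shares, the final decision."""
    return artifact.role is not DecisionRole.DELEGATED


for a in ARTIFACT_TYPES:
    print(f"{a.name:<11} {', '.join(a.intelligence):<22} {a.role.value}")
```

Read top to bottom, the table makes the progression explicit: each step from assisted to autonomous moves decision authority further away from the human.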

As AI artifacts progress from assisted to autonomous, the benefits and cost savings of such systems become more apparent. However, as autonomous AI systems assume the roles of humans with minimal or no supervision, new ethical and social risks come into play. We dedicate a later section of this conceptual analysis to dissecting the ethical principles put at risk by the different AI artifacts.

Current and Future Applications of AI in Auditing

We examined the websites of accounting firms to identify current and future applications of AI in auditing. Although some of the material on these sites was promotional or described proofs of concept or pilots, the AI applications detailed there indicate the possibilities of the technology in auditing practice. We also collaborated with an AI auditing software firm (see Footnote 1) whose clients (small- and medium-sized accounting firms) provided details of their use of AI.

Table 1 combines the responses from the small- and medium-sized firms and the information identified from the websites of the Big 4 firms. Some of the current AI applications include the identification of audit areas with higher risk (PwC 2016), the review of all transactions in order to select the riskiest for testing (Bowling and Meyer 2019), and the analysis of all entries within the general ledger so as to detect anomalies (PWC 2018). AI is also used to conduct analytics to identify financial misstatements or even fraud (Persico and Sidhu 2017). One of the Big 4 audit firms reports the use of enhanced automation using a combination of techniques to generate “audit-ready work-papers” (EY 2016a). All Big 4 firms have piloted the use of AI to evaluate inventory controls through the use of drones that perform inspections (EY 2017b; Deloitte 2018; KPMG 2018; PwC 2019).
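To make the idea of scanning every general ledger entry for anomalies concrete, here is a minimal Python sketch that applies a robust statistical outlier test (the modified z-score based on the median absolute deviation). This is a deliberately simple stand-in and not the machine learning the firms describe; the ledger data, the `flag_anomalies` function, and the commonly used 3.5 cut-off are illustrative assumptions.

```python
from statistics import median


def flag_anomalies(entries, threshold=3.5):
    """Return the IDs of journal entries whose amounts are outliers.

    entries: list of (entry_id, amount) pairs (hypothetical ledger format).
    Uses the modified z-score 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation; scores above `threshold` are flagged.
    """
    if not entries:
        return []
    amounts = [amount for _, amount in entries]
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread in the data, so nothing stands out
    return [
        entry_id
        for entry_id, amount in entries
        if abs(0.6745 * (amount - med) / mad) > threshold
    ]


# Hypothetical ledger: (entry ID, amount); JE-105 is an unusually large posting.
ledger = [
    ("JE-101", 1200.0),
    ("JE-102", 980.0),
    ("JE-103", 1105.0),
    ("JE-104", 1010.0),
    ("JE-105", 25000.0),
    ("JE-106", 1150.0),
]
print(flag_anomalies(ledger))  # ['JE-105']
```

The median-based score is used instead of a plain mean and standard deviation so that a single very large entry cannot inflate the spread and hide itself.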

Etheridge et al. (2000) provide an analysis of different AI techniques that could be used to assess the going concern of a company. They illustrate how an AI can learn the relationships between various independent variables/financial ratios to determine the financial health of a company. Although the surveyed firms in Table 1 have yet to use AI for this purpose, some indicated that they anticipated doing so within the next 2 years. Another possible use of AI in auditing is the monitoring of the automated internal controls of clients (Hunton and Rose 2010). Lastly, AI techniques such as speech and facial recognition can enable AI to perform interviews. Dickey et al. (2019) infer from prior literature that AI can successfully be used to detect deception in speech or nervousness in facial patterns. This capability can be useful in fraud interviews.

Davenport and Raphael (2017) describe Deloitte’s ‘Cognitive Audit’ strategy, which first standardizes the audit process and then digitizes the standardized processes. The digitized tasks are then automated, and advanced analytics are applied to the audit. Finally, cognitive (augmented) technology is used to transform the audit. Despite the growth of AI in auditing, Chan and Vasarhelyi (2011) observed that the use of AI might be limited for complex judgments that require professional skepticism, such as the evaluation of management estimates. However, they leave open the possibility that advances in AI may someday make it possible to automate such complex tasks.

Projecting into the future, it is probable that as AI technology matures, routine lower-level audit tasks will become AI functions. An example of such a task is generating requests for evidence from audit clients and documenting that evidence. Many of the functions currently performed by staff-level auditors will be taken on by Augmented AI, whose results will be reviewed by the auditor. On the audit client’s end, the evidence requested by the auditor’s AI could be generated by the client’s Assisted or Augmented AI. Once the technology matures, the AI systems on the auditor’s and the client’s sides may communicate directly. After several cycles of use, the need for human review may diminish, shifting this use from the Augmented level toward the Autonomous level.

Our projection is in line with Huang and Rust (2018), who reviewed the emergence of AI in the service industry. They theorize that “AI job replacement occurs fundamentally at the task level, rather than the job level, and for ‘lower’ (easier for AI) intelligence tasks first. AI first replaces some of a service job’s tasks, a transition stage seen as augmentation, and then progresses to replace human labor entirely when it can take over all of a job’s tasks.” Ideally, the auditor would be aware of the type of AI artifact in use (assisted, augmented, or autonomous) and of its benefits and risks. The auditor would not relinquish responsibility to the technology; instead, the auditor would treat it as a complement to professional judgment and exercise an appropriate level of skepticism.

The Case for a Futuristic Analysis of the Ethical Impact of AI Use in Auditing

The accounting and auditing profession embraced AI in the early 1980s, when the AI in use was much simpler than today’s. These precursor systems, such as expert systems, supported professionals in decision-making. They consisted of a rich knowledge base of rules and facts provided by domain experts. Professionals could query these expert systems, which could provide recommendations for specific scenarios (Vasarhelyi 1989). The ethical implications of expert systems were not evident during the initial years of implementation (Elliott et al. 1985). However, in the 1990s, some researchers began to observe the ethical effects of expert systems, including their lack of cognitive skills (e.g., intelligence, emotions, and values) and their bias (Khalil 1993). Despite these ethical concerns, the profession embraced newer AI techniques at the turn of the 21st century. However, it is not evident that today’s AI systems have addressed the earlier concerns.

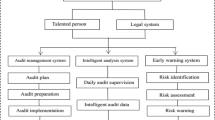

The AI systems used today have evolved significantly from their expert-system predecessors. With their increased sophistication comes a new set of ethical concerns, which form the focus of our conceptual analysis. The new AI systems do not require rules and facts to be pre-populated and fed to them by humans. Instead, these systems feed on data: they study input data, such as customers’ previous transactions, and from those data build models (i.e., algorithms) to perform descriptive and predictive tasks. These systems can then proceed autonomously to perform a task such as posting transactions, rejecting transactions, or conducting tests of transactions. Because of their complexity and continuous self-evolution, the basis of their decisions is not always evident to humans. Figure 1 depicts the data-driven and intelligence aspects of AI.
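The contrast between rule-fed expert systems and data-fed AI can be illustrated with a toy Python sketch. The amounts, labels, and 10,000 cut-off are hypothetical, and a one-feature “decision stump” stands in for the far more complex models that real systems build; the point is only that the learned rule comes from the data, and shifts when the data change, rather than being written by a human.

```python
def expert_rule(amount: float) -> bool:
    """Expert-system style: a human expert writes the rule in advance."""
    return amount > 10_000


def learn_threshold(history: list[tuple[float, bool]]) -> float:
    """Data-driven style: pick the amount threshold that best reproduces past
    labels (a one-feature decision stump).

    history: (amount, was_flagged) pairs from previously reviewed transactions.
    Candidate thresholds are the observed amounts; ties keep the first found.
    """
    best_threshold, best_correct = history[0][0], -1
    for candidate, _ in history:
        correct = sum((amount > candidate) == flagged for amount, flagged in history)
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


# Hypothetical labeled history of reviewed transactions.
history = [(500, False), (2_000, False), (4_800, False),
           (5_200, True), (9_000, True), (15_000, True)]
threshold = learn_threshold(history)  # 4_800 for this data


def learned_rule(amount: float) -> bool:
    """Flag a transaction using the threshold learned from history."""
    return amount > threshold


print(expert_rule(7_000), learned_rule(7_000))  # False True

# New labeled data moves the learned rule without anyone rewriting code.
retrained = learn_threshold(history + [(4_000, True)])  # 2_000
```

Even in this tiny example, explaining why a transaction of 7,000 is flagged requires knowing the training history, which hints at why the basis of decisions in far larger models can be hard to trace.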

In response to rapid technological adoption within auditing practice, the International Auditing and Assurance Standards Board (IAASB) formed a technology working group to source feedback from various stakeholders (regulators, oversight bodies, accounting firms, academics, and professional bodies, among others). The stakeholders observed that “data is being used differently than in past audits,” with resultant “legal and regulatory challenges” (IAASB 2018, p. 7). Although the stakeholders did not deem the current standards “broken,” there was consensus that “practical guidance” (IAASB 2018, p. 6) was required to reflect the digital era in which the profession now operates, with regulators calling for standards to be revised in a “way that reflects current technology” (IAASB 2018, p. 9).

In light of this state of affairs, this paper aims to tease out the possible ethical and social challenges of an AI-enabled audit, which could inform the ‘practical guidance’ currently sought by the auditing profession. We adopt futuristic ethics frameworks to analyze the ethical issues that should be considered when auditors use AI. We ground our discussion in practical ethics, which serves as a bridge between theory and practice by relating professional codes of conduct to the social context within which the professional operates (Thompson 2007). Specifically, in later sections of this paper, we explore how the use of AI within the auditing profession can affect adherence to the profession’s ethical principles. We recommend an interactive approach among stakeholders to mitigate the resulting risks.

Futuristic Ethics Frameworks

There are three distinct approaches to ethics in emerging technology. One approach is to ignore ethics when dealing with emerging technology, in the belief that raising such concerns would stifle innovation. The hope in this approach is that the benefits of the technology will ultimately outweigh its drawbacks. This option would not be prudent and would only defer the problem. A second, conservative approach seeks to address ethical issues only when they materialize or become reliably predictable. This approach would also be imprudent for emerging technology, whose pace of adoption is significantly faster than that of other types of technology. Additionally, when ethical issues stem from an inherent feature of the technology, changing that feature once the technology is in use can be complicated, costly, or unfeasible.

For this reason, we select the third approach. The approach followed in this paper is a futuristic one, which aims to forecast the ethical implications of using emerging technology (Brey 2012), here in the particular field of auditing. The purpose of this futuristic approach is not to point to “one true future,” but instead to initiate dialogue on the possible impacts of the technology, inform policy discussions, and provide some focus for future research (Stahl et al. 2010).

Two established frameworks guide the futuristic ethical analysis of emerging technology, as captured in Table 2. One is the Ethics of Emerging Information and Communication Technologies (ETICA) framework, developed by a European consortium (Stahl et al. 2010). It has been used and extended in several studies (e.g., Wakunuma and Stahl 2014; Oleksy et al. 2012). Given the uncertainty of the future impact of emerging technology, and the potentially unlimited range of uses and resulting outcomes, the ETICA framework proposes a forecasting approach. ETICA guides the researcher through the following steps: (i) identifying the features of the technology that pose ethical concerns; (ii) ethically analyzing the actual applications of the emerging technology; (iii) performing a bibliometric analysis (see Footnote 2) of the literature to identify other ethical issues not captured in prior steps; and (iv) evaluating the identified ethical issues in order to rank them, review and critique governance, and provide policy recommendations.

The second framework used in the conceptual analysis is Anticipatory Technology Ethics (ATE) (Brey 2012), which builds on the ETICA framework. The ATE framework calls for the ethics researcher to perform five steps. First, at the technology level, the researcher considers the features of the technology that are of ethical concern, independent of its current or potential use. This level involves identifying the inherent and consequential risks of the technology. Second, at the artifact level, the researcher considers the “physical configuration that, when operated in the proper manner and the proper environment, produces the desired result.” At this level, the researcher focuses on the artifacts independent of their actual applications and identifies the risks associated with their intended use. Third, at the application level, the researcher studies the actual use of an emerging technology’s artifacts and considers the unintended consequences for the users of the applications and other stakeholders. Fourth, the researcher evaluates the potential importance of the issues identified. The fifth step, in which the researcher can design a feedback stage, is optional. There are additional optional stages beyond the fifth step. One is the responsibility assignment stage, where “moral responsibilities are assigned to relevant actors for ethical outcomes at the artifact and application levels.” Another is the governance stage, which provides policy recommendations.

Table 2 shows how our methodology maps to the ETICA and ATE frameworks. In the first step, we analyze AI technology and its artifacts. In the second step, we analyze the application of AI in auditing. In the third step, we review governance and provide policy recommendations.

Method of Analysis

We combine the ETICA and ATE approaches, as captured in Table 2 and further detailed in the Appendix. While ETICA and ATE have overlapping steps, each framework has unique steps that contribute to a more comprehensive analysis. Specifically, ETICA’s bibliometric approach allows for an objective analysis of publications on emerging technology. The principal purpose of this approach, as documented by Stahl et al. (2010), is to obtain a dataset of abstracts of academic publications on the emerging technology. This dataset then undergoes textual analysis and visualization (i.e., term frequency counts and the visual mapping of co-occurrence links between words within the dataset). The main benefit of such an analysis is that it quickly highlights the ethical issues associated with a particular emerging technology, as captured in the academic literature.
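The textual-analysis step described above (term frequency counts and co-occurrence links between words) can be sketched in a few lines of Python. This is a hedged illustration, not the ETICA consortium’s actual tooling: the three sample abstracts and the stopword list are invented for the example, and co-occurrence is counted once per abstract, which is only one of several reasonable choices.

```python
import re
from collections import Counter
from itertools import combinations

# Illustrative stopword list; a real analysis would use a fuller one.
STOPWORDS = {"the", "of", "and", "a", "in", "to", "for", "on", "is", "with", "by", "as", "an"}


def tokenize(text):
    """Lowercase the text and keep alphabetic tokens that are not stopwords."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def term_frequencies(abstracts):
    """Count how often each term appears across all abstracts."""
    counts = Counter()
    for abstract in abstracts:
        counts.update(tokenize(abstract))
    return counts


def cooccurrence_links(abstracts):
    """Count, for each pair of terms, how many abstracts contain both."""
    links = Counter()
    for abstract in abstracts:
        terms = sorted(set(tokenize(abstract)))  # sorted so pairs are canonical
        links.update(combinations(terms, 2))
    return links


# Hypothetical abstracts standing in for the retrieved publication dataset.
abstracts = [
    "Privacy and bias risks of machine learning in auditing.",
    "Algorithmic bias and transparency in AI decision making.",
    "Privacy, data protection and transparency of AI systems.",
]
tf = term_frequencies(abstracts)
links = cooccurrence_links(abstracts)
print(tf["privacy"], tf["bias"])                            # 2 2
print(links[("bias", "privacy")], links[("ai", "transparency")])  # 1 2
```

The pair counts play the role of edge weights: feeding them to a network-visualization tool would produce the kind of co-occurrence map the bibliometric step relies on.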

The ATE framework builds on the ETICA framework by focusing the ethical analysis at a more granular level (i.e., technology, artifacts, application) and by using an ethical checklist to prompt consideration of ethical questions at each level of the emerging technology. The benefit of using ethical questions to guide such a futuristic analysis is that the ethical issues of emerging technologies often do not become apparent until much later in their use. It is therefore useful to follow a set of predeveloped ethical questions to guide the assessment of the values at risk when a technology is in use (Brey 2012). We follow the approach suggested by the ATE framework and use the technology ethics checklist provided by Wright (2011). Figure 2 summarizes the ethical questions we considered relevant at each level of analysis.

Ethical principles at risk with AI. Ethical issues were identified through the bibliometric analysis, the use of the technology checklist in Wright (2011), and the code of ethics for the auditing profession

While both the ETICA and ATE frameworks call for an analysis of the ethical and social impact of emerging technology, we focus on the ethical dimension of the social issues that arise with the use of AI, thereby merging our discussion of ethical and social issues at each level of analysis. Consistent with the frameworks, we consider first the technology level, then the artifact level, and finally the application level. To identify the ethical and social risks at each level of analysis, we performed the ordered steps summarized in Table 3 and expounded upon in the Appendix. Table 4 summarizes the publications used in the bibliometric analysis. The following section analyzes the results.
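The idea of walking a predeveloped checklist level by level can be sketched as a small Python data structure. The questions below merely paraphrase concerns raised elsewhere in this paper and are hypothetical; they are not the actual questions in Wright (2011) or in Figure 2, and `CHECKLIST`, `LEVELS`, and `open_questions` are illustrative names.

```python
# Hypothetical checklist: questions paraphrase concerns raised in this paper,
# NOT the actual questions in Wright (2011) or Figure 2.
CHECKLIST = {
    "technology": [
        "Why are data retrieved, and for how long are they retained?",
        "Can the inputs behind a given output be determined?",
    ],
    "artifact": [
        "Who retains decision-making responsibility: the human, both, or the AI?",
    ],
    "application": [
        "What unintended consequences arise for users and other stakeholders?",
    ],
}

LEVELS = ("technology", "artifact", "application")  # order of analysis


def open_questions(answers):
    """Walk the levels in order and return (level, question) pairs that
    have no recorded answer yet.

    answers: dict mapping question text to the analyst's notes.
    """
    pending = []
    for level in LEVELS:
        for question in CHECKLIST[level]:
            if not answers.get(question):
                pending.append((level, question))
    return pending


# Hypothetical analyst notes after partially completing the technology level.
answers = {"Can the inputs behind a given output be determined?": "Only partially."}
for level, question in open_questions(answers):
    print(f"[{level}] {question}")
```

Keeping the levels in a fixed tuple enforces the technology, artifact, application ordering, so unresolved technology-level questions always surface first.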

Ethical and Social Impact Analysis

Step 1: Bibliometric Analysis and Ethical Checklist

The bibliometric analysis identified numerous AI-related ethical issues. However, we selected only the issues that we deemed relevant based on how AI is currently used, and is projected to be used, in auditing. In the following section, we summarize the issues related to those features of AI that have a direct impact on the auditing profession. These unique features differentiate AI from regular software (e.g., ERPs, spreadsheets, and CAATs) and therefore pose new ethical issues not previously encountered with the software used in accounting and auditing. Subsequently, we summarize the audit-relevant ethical issues associated with the various AI artifacts. The analysis of these artifacts makes it evident that as their sophistication progresses from assisted to autonomous, the magnitude of the ethical risks grows accordingly.

Results of the AI Technology Analysis

Through the bibliometric analysis, we identified the following features of AI that have ethical and social implications. We explore the ethical implications of these features in an auditing context in Step 2.

Intelligence

The intelligence capability of AI sets it apart from other software that follows coded rules, such as Robotic Process Automation (RPA). The Association of Chartered Certified Accountants (ACCA 2017) observes that “historically, machines simply executed programs developed by humans. They were ‘doers’ rather than ‘thinkers.’ Now with complex pattern recognition-based machine learning tools, it is possible for systems to engage in discretionary decision-making.” However, the intelligence exhibited by current AI systems is considered ‘weak AI’: the AI can perform (and possibly outperform) a human in one specific task, but it lacks general characteristics of the human brain such as “self-understanding, self-control, self-consciousness and self-motivation” (Lu et al. 2018). This lack could result in violations of ethical principles such as safety and nonmaleficence.

Data (Detailed Capture of Human Activities) and Retrieval

The development of AI systems is a data-intensive process that requires large datasets to train accurate algorithms, which may raise ethical issues such as privacy, confidentiality, and data protection. Tucker (2018) sheds light on privacy concerns with AI that exceed those previously witnessed in other applications. One such concern is data persistence (i.e., the data in AI may outlive the human who generated it). Another is data repurposing (i.e., data may be used beyond the purpose for which they were generated). Lastly, there is the possibility of data spillover (i.e., the data of other, unwilling parties may be collected through their association with the data subject). Horvitz and Mulligan (2015) observe that “machine learning (an AI technique) and inference makes it increasingly difficult for individuals to understand what others can know about them based on what they have explicitly or implicitly shared.” As such, why data are retrieved and how long they are retained are pertinent ethical questions.

Computation Complexity

One of the significant capabilities of AI is that it can solve complex decision problems at speeds that are not humanly possible. However, the algorithms that form the backbone of AI may evolve to become so complex that even their developers find them difficult to understand (Preece 2018). This opacity makes it challenging to determine which inputs were considered in the decision-making process and when outputs are erroneous (ACM 2017). AI’s complexity can thus result in a lack of transparency, which is a “precondition for public trust and confidence. A lack of transparency risks undermining support for or interest in a technology” (Wright 2011).

Additionally, the complexity of AI can make the technology inaccessible to non-specialists, including regulators. Kirkpatrick (2016) conveys the sentiments of an AI developer who concluded that “I am afraid that our technical understanding is still so limited that regulation at this point could easily do more harm than good.” If regulators cannot understand how AI works at its core, how can it be effectively regulated?

Results of the AI Artifact Analysis

As captured in Fig. 2, we consider both the nature and intended function of each AI artifact (i.e., assisted, augmented, or autonomous) when determining their ethical and social risks (Dahlbom et al. 2002). While it is easy to observe what the intended functions of these AI artifacts are, their nature is not as apparent. In determining the nature of the AI artifact, we consider the various levels of intelligence that the artifact exhibits (Huang and Rust 2018). For example, augmented AI systems used to assist in decision-making exhibit analytical intelligence, which enables the AI system to learn from data and process information for problem-solving. This analytical intelligence is embedded in the sophisticated algorithms of the AI system. As such, the complexity of the algorithms may result in a lack of transparency regarding the AI’s decision-making process (e.g., what inputs it considered, and why the AI recommends specific actions). By considering the intelligence of the AI artifacts, we were able to determine what their capabilities are, as well as their constraints. Through this process, the possible ethical issues that could result from the intended use of these artifacts became clearer.

Assisted AI

Through the bibliometric analysis, we identified trust as a critical term that co-occurred with assistive technology. Since the function of Assisted AI is to support “humans in making decisions or taking actions” (PwC 2017), individuals using Assisted AI would need to trust that the AI will perform its supportive role as intended. Bisantz and Seong (2001) conducted a literature review on the established system factors that influence a person’s trust in decision aids. They identified factors including the system’s predictability, dependability, reliability, robustness, understandability, explication of intention, usefulness, and user familiarity.

Augmented AI

Augmented AI is more advanced than Assisted AI since some decision-making tasks are delegated to it. As such, we considered that the values at risk with Assisted AI would be of a greater magnitude in the case of Augmented AI. The bibliometric analysis did not yield significant insight into the key terms that co-occurred with Augmented AI within the literature. This may be because Augmented AI has not yet been well studied. Alternatively, the lack of associated ethical terms may be because Augmented AI is referred to by other names, such as cognitive technologies (Davenport and Raphael 2017). Given that AI is still considered an emerging technology, its terminology has yet to crystallize.

Therefore, we relied on the Wright (2011) ethical checklist to identify the ethical/social issues associated with Augmented AI. One of the ethical principles possibly at risk is the autonomy of the user when AI exerts undue influence over novice users who lack the experience to interact appropriately with the system (Arnold and Sutton 1998; Hampton 2005). Another ethical principle at risk is accountability. Wright (2011) calls for ethics researchers to consider if “technology is complex and responsibility is distributed, can mechanisms be created to ensure accountability?” Considering the use of Augmented AI developed by a third party, if the technology and the human share decision-making responsibilities, who should be held accountable for AI’s decisions in the case of litigation? The user who is the co-decision-maker, the firm that purchased the AI, or the third-party software developer?

Autonomous AI

Autonomous AI, the most sophisticated AI artifact, can operate on its own. These artifacts can adapt to their environments and perform tasks that would previously have been unsafe or impossible for a human to do (e.g., the use of drones to perform inventory inspections of assets in remote locations). However, the greatest challenge for these artifacts is that because they operate independently, humans may not have full visibility into their actions and decisions. A new set of risks emerges from the use of these systems, in addition to the values at risk associated with Assisted and Augmented AI.

Through our bibliometric analysis, we observed that between 2012 and 2016, the key terms that co-occurred with autonomous systems shifted gradually from words related to the use of Autonomous AI (e.g., agent, robot, prediction, cognition, and simulation) towards more ethics-related words (e.g., future challenge, trust, threat, security, protection, and intervention). We inferred from this analysis that as the use of a sophisticated technology grows, academic research on its ethical issues increases, yielding more insight into the technology’s impact.

In addition to the ethical/social issues associated with Assisted and Augmented AI discussed above, the ethical principles at risk with the use of Autonomous AI include non-isolation. Wright (2011) encourages researchers to consider whether a technology will replace human contact and what the impact of that would be. Autonomous AI could be used as a substitute for human workers, resulting in an “invisible workforce.” One of the Big 4 audit firms released a publication titled “As we say robot, will our children say colleague?” (EY 2016b), which captures the impact of robotics in the workplace. Another principle at risk is beneficence. Wright (2011) raises social and ethical questions such as “Does the project serve broad community goals and values or only the goals of the data collector?”

Step 2: Analysis at the Application Level

Results of the Auditing AI Application Analysis

In assessing the ethical implications of the use of AI in auditing, we consider the three dimensions of practical ethics (Thompson 2007). First, at the individual (auditor) level, we consider the practical issues arising from the use of AI in light of the code of ethics. Second, we analyze the institutional (audit firm) level, which Thompson describes as an intermediate dimension (e.g., school, employer) that influences most individuals. However, the institutional level has received little attention in ethics research, which has focused more on the individual and societal levels. Lastly, at the socio-political (profession and societal) level, we address issues around decision-making, policy, governance, and regulation.

Auditor Level

(i) Due Care Due professional care concerns what auditors do and how well they do it. The AICPA Code of Ethics (Sect. 300.060) requires that “a member should observe the profession’s technical and ethical standards, strive continually to improve competence and the quality of services, and discharge professional responsibility to the best of the member’s ability.” As such, auditors are expected to explain the rationale for their decisions and to apply their skills and knowledge in good faith to evaluate audit evidence objectively. If an auditor using AI cannot understand the rationale for its decisions or actions, how could they rely on the technology without impairing their due professional care? Consider an AI used to perform tasks such as sample selection and risk assessments. If the AI is a ‘black box,’ it may be difficult for the auditor to justify the choice of specific samples or processes for testing. In such situations, auditors may exhibit automation bias and complacency, i.e., reduced skepticism and excessive trust in the accuracy of the AI system (Parasuraman and Manzey 2010). As such, developing explainable AI (Samek et al. 2017) is a critical step towards ensuring that an AI-enabled audit is consistent with professional standards.

Under current auditing standards, auditors may be liable when there is an audit failure due to faulty auditing decision aids or resultant erroneous audit judgments (Specht et al. 1991). Should individual auditors (e.g., engagement partners) be held responsible for technologies developed by their firm or by third parties, such as Autonomous AI, over which they have no control? Verbeek (2006) questions how responsibility should be assigned in situations where actions and decisions spring from the combined and complex interactions between humans and technologies.

The IAASB (2018) notes that the use of third-party tools in the audit would pose a challenge that current standards may not adequately address. If left unresolved, this could result in a responsibility gap. Matthias (2004) observes that, traditionally, the user of a machine is liable for the consequences of its operation. However, Matthias reflects that with self-learning AI systems, users would be unable to predict the systems’ future behavior and, as such, should not be held morally responsible for them. This situation gives rise to a responsibility gap. A proposed responsibility mapping in light of this emerging gap is discussed in Step 3.

(ii) Professional Skepticism and Judgment When AI systems are used to support professionals in judgment and decision-making, their impact could be beneficial due to the robustness of AI. For example, when an auditor has a large amount of data to analyze, intelligent decision systems can effectively point the auditor towards higher-risk areas (Brown-Liburd et al. 2015). However, the long-term use of decision aids may lead auditors to focus only on the issues identified by the decision aid and to overlook factors or issues the system does not identify (Seow 2011). Indeed, prior research demonstrates that as familiarity with a technology increases, users can become desensitized and resort to habitual behavior, which may cause them to act against their own instincts (e.g., Goddard et al. 2012). Westermann, Bedard, and Earley (2015) interview 30 audit partners and summarize that “while technology has improved audit efficiency and effectiveness, partners are concerned that the use of technology diminishes the development of appropriate attitudes/behaviors (e.g., critical thinking).”

Hampton (2005) suggests that novice users lack the experience required to evaluate the results of an intelligent decision aid, which leads them to over-rely on the system more than experienced users do. Skitka et al. (1999) propose that over-reliance on automated decision aids, such as AI, can lead to two types of errors: errors of omission and errors of commission. Errors of omission arise when decision-makers fail to take appropriate action because the system did not inform them that such action was required, despite non-automated indicators of the required action. Errors of commission arise when decision-makers follow system directives even though more valid non-automated indicators suggest that the system is wrong.
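To make the two error types concrete, the Python sketch below encodes Skitka et al.’s (1999) definitions under a deliberately simplified, hypothetical model in which each decision reduces to three yes/no facts: whether the aid recommended action, whether valid non-automated evidence called for action, and whether the decision-maker acted. The `Decision` class and `classify_reliance_error` function are our illustrative assumptions, not constructs from the cited study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    """One decision in a simplified, hypothetical model of aid-supported judgment."""
    aid_recommends_action: bool          # did the decision aid flag that action is needed?
    independent_evidence_says_act: bool  # do valid non-automated indicators say action is needed?
    decision_maker_acted: bool           # did the human actually take the action?


def classify_reliance_error(d: Decision) -> Optional[str]:
    """Label a decision as an omission error, a commission error, or neither (None)."""
    # Omission: the aid stayed silent, other evidence called for action,
    # and the decision-maker did not act.
    if (not d.aid_recommends_action
            and d.independent_evidence_says_act
            and not d.decision_maker_acted):
        return "omission"
    # Commission: the aid directed action, other evidence indicated the aid was
    # wrong, and the decision-maker followed the aid anyway.
    if (d.aid_recommends_action
            and not d.independent_evidence_says_act
            and d.decision_maker_acted):
        return "commission"
    return None


# Example: an account the aid did not flag, although manual cues suggested testing it.
print(classify_reliance_error(Decision(False, True, False)))  # omission
print(classify_reliance_error(Decision(True, False, True)))   # commission
print(classify_reliance_error(Decision(True, True, True)))    # None
```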

Accounting firms argue that AI will not entirely supplant human judgment. However, even if firms believe that the AI they use is not entirely autonomous, the auditor’s unquestioning reliance on AI may render the technology autonomous in practice. For example, while the auditor makes the final judgment, if the auditor always does what the algorithm recommends because s/he believes the algorithm is unbiased and objective, then the algorithm is as good as autonomous. In other words, if auditors never contradict algorithms that make decisions about complex audit tasks, then those algorithms should be considered autonomous. It also remains unknown how AI would operate in the gray areas of accounting (e.g., subjective judgments), and how it would explain its judgment in such cases, as would be required of the traditional auditor.
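The claim that unquestioning reliance makes an algorithm as good as autonomous can be made operational, under assumptions of our own, as an override rate: the share of AI recommendations that the auditor changed. The Python sketch below uses a hypothetical log of (recommendation, final judgment) pairs; the function names and the 50-decision minimum are illustrative choices, not an established audit metric.

```python
from typing import Sequence, Tuple

# Each entry is a hypothetical (AI recommendation, auditor's final judgment) pair.
DecisionLog = Sequence[Tuple[str, str]]


def override_rate(log: DecisionLog) -> float:
    """Share of decisions in which the auditor's final judgment differs from the AI's recommendation."""
    if not log:
        raise ValueError("decision log is empty")
    overrides = sum(1 for recommended, final in log if recommended != final)
    return overrides / len(log)


def effectively_autonomous(log: DecisionLog, min_decisions: int = 50) -> bool:
    """Hypothetical rule: enough decisions and zero overrides means the human check is never exercised."""
    return len(log) >= min_decisions and override_rate(log) == 0.0


always_agrees = [("test", "test")] * 60
one_override = [("test", "test")] * 59 + [("skip", "test")]

print(effectively_autonomous(always_agrees))  # True
print(effectively_autonomous(one_override))   # False
```

A zero override rate is also consistent with an AI that happened to be right every time, so such a flag would prompt a review of the auditor’s reliance rather than prove overreliance.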

An auditor’s judgment skills or expertise are a product of their “ability, motivation, and experience” (Libby and Luft 1993). As emerging technology takes over the more routine tasks previously performed by auditors, it is essential to consider whether these routine tasks gave novice auditors experience that shaped their performance, which may be lost when such tasks are automated. Arnold and Sutton (1998) refer to this as “deskilling,” whereby an auditor’s professional judgment may not be as well developed as that of counterparts who did not rely on advanced technological tools. Previous literature has shown that the antecedents of performance are experience, knowledge, and ability (Ashton and Ashton 1995). If ‘manual’ or ‘routine’ tasks are removed from the professional’s experience, it is essential to consider the impact on the professional’s performance and judgment. This would be a critical step towards ensuring that new auditors are technically competent and can subsequently rely on AI applications appropriately without impairing their professional judgment. Thus, the audit profession would need to reflect on the impact of AI on the training of new auditors. For example, training auditors on how to “think about problems” has been shown to improve their performance on ill-structured tasks, their mental representations, and their skeptical thinking (Plumlee et al. 2015).

(iii) Auditor Competence The code of ethics requires that a “member has the necessary competence to complete those services according to professional standards and to apply the member’s knowledge and skill with reasonable care and diligence” (AICPA 2014). Accounting professionals have an ethical responsibility to be technically competent and to continually improve both themselves and the quality of their services. This raises the question of whether the current auditing and accounting curriculum keeps pace with emerging technology well enough to prepare future auditors to be competent and relevant in the new technological auditing environment. What knowledge and skills future professionals will require is a pertinent question that prior research has sought to answer (Curtis et al. 2009), but because the answer changes rapidly as technology evolves, academia has to ask this question continuously. A proactive approach to updating the curriculum to address this gap is proposed in Step 3.

(iv) Independence The code of ethics requires auditors to exhibit “Independence in appearance - the avoidance of facts and circumstances that are so significant that a reasonable and informed third party would be likely to conclude that a firm’s or an assurance team member’s integrity, objectivity or professional skepticism has been compromised” (IESBA 2018). The Association of Chartered Certified Accountants (ACCA 2017) identifies a potential independence violation for external auditors who use AI on audit engagements, cautioning that the external auditor should avoid assuming management responsibilities. Austin et al. (2019) interviewed various stakeholders (client management, audit partners, technologists, and standard setters) and found that external auditors were increasingly providing their clients with data-driven insights, which standard setters considered a threat to auditor independence. Additionally, while some client managers felt that auditors would be better off relying on the clients’ own data analytics tools, which provide insight into the company’s operations, the auditors were hesitant to do so because it would impair their independence.

Although independence has been a source of tension since the introduction of the Sarbanes–Oxley Act (Humphrey 2008), that tension is further exacerbated by the use of AI (e.g., the extent to which management and auditors can share and rely on insights from each other’s AI systems), warranting more practical guidance.

Audit Firm Level

(v) Confidentiality and Data Security The international code of ethics for professional accountants requires compliance with the principle of confidentiality concerning information obtained through professional and business relationships (IESBA 2018). Auditors have to be mindful of the confidentiality of the information that clients share with them during an audit, which the audit firm generally retains. If client data are used in AI applications, audit firms have to ensure that the data are protected against security breaches, confidentiality risks, and the commingling of data from multiple clients, for example, when AI is used to analyze business risk across an industry to gain insight into client risk factors. This issue may be particularly relevant for firms that use third-party AI platforms. How sure can the firm be that confidential information is not placed in a context where it is not adequately protected?
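One way to picture the commingling risk is a store in which each client’s data sit in their own partition, raw records are released only to that client’s engagement, and cross-client (industry) analysis works on per-client aggregates rather than pooled records. The Python sketch below is hypothetical: the `ClientPartitionedStore` class, the `min_clients` threshold, and the field name are our illustrative assumptions, not a description of any firm’s platform, and a real system would likely also need access control, encryption, and audit logging.

```python
from collections import defaultdict
from statistics import mean


class ClientPartitionedStore:
    """Hypothetical store that keeps each client's records in a separate partition."""

    def __init__(self, min_clients: int = 3):
        # Assumed threshold: industry aggregates are released only when at least
        # this many clients contribute, so no single client's figures stand out.
        self._partitions = defaultdict(list)
        self._min_clients = min_clients

    def add(self, client_id: str, record: dict) -> None:
        self._partitions[client_id].append(record)

    def records_for(self, engagement_client_id: str, client_id: str) -> list:
        """Return raw records only to the engagement for that same client."""
        if engagement_client_id != client_id:
            raise PermissionError(
                f"engagement {engagement_client_id!r} may not read {client_id!r} data")
        return list(self._partitions.get(client_id, []))

    def industry_average(self, field: str) -> float:
        """Average of per-client means; raw records are never pooled across clients."""
        per_client = [mean(r[field] for r in recs)
                      for recs in self._partitions.values() if recs]
        if len(per_client) < self._min_clients:
            raise ValueError("too few clients to release an industry aggregate")
        return mean(per_client)


store = ClientPartitionedStore()
for client, dso in [("A", 30), ("A", 50), ("B", 60), ("C", 20)]:
    store.add(client, {"days_sales_outstanding": dso})

print(store.industry_average("days_sales_outstanding"))  # 40
```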

Another potential ethical issue is how the data used to train AI are obtained. Issa et al. (2016) observe, “unfortunately, due to statutory limitations, auditors do not have an ocean of data like those provided by Google or Facebook.” Although some publicly available datasets can be used to train AI, it is not always possible to find the specific data required by a given AI application from public sources. When accounting and audit firms source training datasets, whether by buying them or by using client data to train algorithms, confidentiality breaches could result. The criteria auditors should use when buying training data for their algorithms are also not fully defined, which emphasizes the need for more practical guidance around these emerging ethical dilemmas.

(vi) Data Quality (Inclusion/Exclusion) The code of ethics necessitates that the “professional accountant shall comply with the principle of objectivity, which requires an accountant not to compromise professional or business judgment because of bias, conflict of interest or undue influence of others.” AI that is trained using insufficient and non-diverse data is bound to produce biased output (Cobey et al. 2018). Osoba and Welser (2017) define this problem as “an algorithm’s data diet: with limited human direction, an artificial agent is only as good as the data it learns from.” Objectivity is an essential ethical standard that auditors must exhibit, especially when faced with complex judgments. When AI is used to provide audit judgment, objectivity can be compromised when the training data are biased.

The accuracy of an AI algorithm is undermined by the ‘class imbalance’ problem present in many datasets (Chawla et al. 2004), whereby some classes have too few data points to be adequately represented. Chawla et al. (2004) explain that, as in the real world, some classes have many more instances than others, resulting in inaccurate assumptions about the less represented classes. They cite the example of a financial dataset in which fraud instances are underrepresented (usually significantly outnumbered by legitimate transactions), which hinders the ability of an AI algorithm trained on such a dataset to predict fraud accurately. As such, it is increasingly important to apply verification mechanisms within the profession, such as those described in Step 3, to ensure that AI systems used to support or automate audit procedures are free from algorithmic bias, and that the audit firm has quality control mechanisms in place to continuously monitor AI algorithms.
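The effect Chawla et al. describe can be illustrated with a toy calculation. The Python sketch below uses synthetic numbers of our own choosing (1,000 transactions, 1% fraudulent) and a deliberately naive model that never flags fraud; it reaches 99% accuracy while detecting no fraud at all. Recall and balanced accuracy are standard metrics that expose this gap, and checking them is one plausible element of the verification mechanisms mentioned above, not a procedure prescribed by any auditing standard.

```python
def imbalance_metrics(y_true, y_pred):
    """Accuracy, recall, and balanced accuracy for binary labels (1 = fraud, 0 = legitimate)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # frauds correctly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # frauds missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # legitimate, correctly passed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # legitimate, wrongly flagged
    accuracy = (tp + tn) / len(pairs)
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return {
        "accuracy": accuracy,
        "recall": recall,
        "balanced_accuracy": (recall + specificity) / 2,
    }


# Synthetic data: 1,000 transactions, of which 10 (1%) are fraudulent.
y_true = [1] * 10 + [0] * 990
# A degenerate model that never flags fraud, the majority-class behavior that
# training on heavily imbalanced data can push a classifier towards.
y_pred = [0] * len(y_true)

print(imbalance_metrics(y_true, y_pred))
# {'accuracy': 0.99, 'recall': 0.0, 'balanced_accuracy': 0.5}
```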

(vii) Non-Isolation The audit profession exhibits diversity in human culture and experience, which also plays a role in performance. When accounting and auditing teams have diverse backgrounds, personalities, experiences, and knowledge, they exhibit higher performance (Trotman et al. 2015). The large-scale rollout of automated AI to complement or replace some roles of the professional in an auditing environment will fundamentally change team dynamics, influencing human behavior and performance. Bowers et al. (1996) observe that automated systems might have “unintended effects on team communication and coordination”; as a result, human contact within and across audit teams and with clients may be cut off, resulting in human isolation.

The code of ethics requires that “a professional accountant shall take reasonable steps to ensure that those working in a professional capacity under the accountant’s authority have appropriate training and supervision” (IESBA 2018). Westermann et al. (2015) observe that while technology can be useful in delivering consistent training across the practice through e-learning tools, its use can reduce the on-the-job coaching opportunities present in the traditional audit, because more senior auditors (e.g., partners) spend less time at client locations as technology reduces the need for their physical presence. This issue is potentially exacerbated when automated systems coordinate and mediate human interactions.

Westermann et al. (2015) cite audit partners who observe that “as auditors focus more on the computer interface (‘hide behind their computers,’ ‘stuck to their laptops’), verbal interchange is reduced both between auditors and client personnel and among engagement team members… valuable information on the client is lost when the auditor cannot ‘see the person’s reaction and look them in the eye.’” The auditor’s observation of non-verbal cues may become increasingly important in this digital age, where more avenues for fraud may exist. For example, Austin et al. (2019) report regulators’ concern that client management may become increasingly familiar with the data analytics tools auditors use, which may create new avenues for misreporting (e.g., creating fictitious entries in accounts that management expects auditors not to test with data analytics, and which would therefore be less likely to be detected).

Another area at risk of human isolation is recruitment. AI systems could be used to optimize job descriptions, post job listings to online portals, and perform first-level screening of applicants by considering their resumes, social media profiles, and recorded video responses, which can be subjected to video analytics to assess their personalities (EY 2016b). The ethical implications of using such automated recruitment tools in place of human recruiters are largely unexplored. Dattner et al. (2019) observe that “many of these technologies promise to help organizations improve their ability to find the right person for the right job, and screen out the wrong people for the wrong jobs, faster and cheaper than ever before.” However, “using AI, big data, social media, and machine learning, employers will have ever-greater access to candidates’ private lives, private attributes, and private challenges and states of mind.”

The use of a candidate’s personal information in the recruitment process may be unethical or even illegal. Traditionally, psychometric assessments that have been scientifically derived and tested for different jobs have been used legally in recruitment. However, Dattner et al. (2019) suggest that AI algorithms with access to applicants’ social media accounts can obtain personal information (e.g., marital status, gender, political orientation, and mental state). If no measures are in place to curb or detect the use of such information in the recruitment process, ethical and legal issues may arise. For example, social media analytics could be used to infer the mental state of applicants. This practice may violate the Americans with Disabilities Act, which prohibits employment discrimination on the basis of disability, including mental health conditions. In Step 3, we consider how regulators can establish mechanisms for validating and verifying the ethical compliance of such algorithms and for monitoring the impact of AI on human interactions.

Profession and Societal Level

(viii) Audit Quality Across the Profession If some firms employ AI while others do not, what will be the resultant difference in audit quality? Will two tiers of audit quality and standards emerge? Access to AI can potentially lead to a significant increase in the efficiency of firms’ accounting and auditing processes, providing them with a competitive advantage. The impact of AI on the competitive landscape between large and small accounting firms is unknown. Will the cost of AI drop significantly, enabling smaller accounting firms to leverage the technology and become more competitive? Alternatively, will the initial AI investment required ultimately create a barrier to entry?

Austin et al. (2019) observed through interviews that many small audit firms did not adopt data analytics because of financial constraints, indicating that cost is a factor in technology adoption. In 2006, the chair of the PCAOB observed that the “four remaining largest audit firms currently audit almost 99% of the market capitalization of companies listed in the United States” (Olson 2006). The Big 4 firms retain this client base because they have the capacity to audit Fortune 500 companies and a global presence. One of the recommendations of the Advisory Committee on the Auditing Profession (2016) to the U.S. Department of the Treasury was to “reduce barriers to the growth of smaller auditing firms consistent with an overall policy goal of promoting audit quality.” Efforts to reduce barriers to growth for small and medium-sized auditing firms could include access to validated off-the-shelf AI software, which would allow them to reap the benefits of AI and take on more audit work.

(ix) Beneficence One likely impact of Autonomous AI on the profession and society is the loss of certain types of jobs. Autonomous AI, creating the ‘invisible workforce,’ may soon perform many back-office tasks. Because accounting and auditing are services, and people have historically made up the most significant part of accounting firms’ resources, it is essential to explore the impact of AI on recruitment and, indirectly, on the number of students electing to major in accounting if traditional accounting and auditing jobs decrease significantly.

Further, time allocation, reporting, and billing may become more convoluted for the profession. There is ongoing discussion of whether the pricing model for CPA firms may shift from billable hours to value pricing or project pricing as a result of the change in the level of human resources used in audit and accounting processes (Tysiac and Drew 2018). Austin et al. (2019) observed that audit fee conflicts between clients and auditors arose when data analytics was used in the audit: clients expected audit fee reductions from the use of technology, while auditors were reluctant to reduce fees because of the significant investments incurred in implementing the technology. With the increased use of AI, such tension between client and auditor is likely to grow, which may lead the public to perceive the auditing profession as “commercialized.” Auditors are obliged “to act in a way that will serve the public interest, honor the public trust, and demonstrate a commitment to professionalism.” If there is a shift from more easily justified billable auditor hours towards the allocation of AI fixed costs across clients, increased transparency on how AI is used across these clients may be needed to foster trust in the resultant billing system.

(x) Transparency The code of ethics requires that “A professional accountant shall be honest and truthful and shall not make exaggerated claims for the services offered by, or the qualifications or experience of, the accountant” (IESBA 2018). When audit firms declare their use of AI systems without disclosing the actual capabilities and limitations of such systems, an expectation gap can emerge in which stakeholders (auditors, clients, shareholders, and the public) have different expectations of the AI-enabled audit. Recognizing that the use of AI can result in such ethical dilemmas, the European Commission’s High-Level Expert Group on Artificial Intelligence observed that there is a need for AI-implementing firms to “provide, in a clear and proactive manner, information to stakeholders (customers, employees, etc.) about the AI system’s capabilities and limitations, allowing them to set realistic expectations” (HLEG 2018). We explore the resolution of such an expectation gap in Step 3.

(xi) Deprofessionalization Brougham and Haar (2018) examine the impact AI will have on the future workplace. They cite futurists’ predictions that by 2025, one-third of existing jobs could be taken over by AI-related technology. They observe that using AI in place of a human is an enticing option since AI can work 24 hours without breaks, and currently, employers do not need to pay employee benefits or taxes for AI. Additionally, they observe that automation will result in a “widening divide between the ‘haves and the have nots.’” Although both Assisted and Augmented AI benefit the adopter of the technology, the financial benefits of AI become more visible with Autonomous AI.

Austin et al. (2019) describe the drive towards a “new way of thinking” within the audit profession, whereby auditors are now being “forced” to think like scientists. Another possible shift in the audit profession is the inclusion of data scientists on audit engagements or, at the extreme, the replacement of auditors by data analytics firms whose mindset differs from that of the traditional auditor (Brown-Liburd et al. 2015; Earley 2015; Richins et al. 2017). Either way, the face of the audit profession is likely to change, and it is worth considering what this means when the auditor is no longer the traditional CPA bound by the profession’s codes of ethics.

Step 3: Review of Governance and Policy Recommendations

While AI has numerous advantages, the ethical risks associated with its use need to be remediated so that the profession can realize these benefits in full (HLEG 2018). Having examined the capabilities, challenges, and ethical and social values at risk with the use of AI in auditing, as discussed above and summarized in Table 2, we now turn to the final phase of the ethical framework: the review of governance and the provision of policy recommendations. The aim of this section is neither to rehash the issues discussed in Step 2 nor to resolve each ethical issue individually. Instead, it provides a high-level analysis of the ideal governance and policy structures. These mechanisms would lay the foundation for a proactive approach to addressing the ethical risks posed by emerging technology, such as those highlighted in Step 2.

Review of Governance

The current governance structures for AI are somewhat amorphous. One of the Big 4 audit firms has warned that the use of AI is increasingly outpacing the governance and controls that guide its use in organizations, and has highlighted the need for guiding principles for the purposeful design of ethical AI and for agile governance in the face of emerging technology (Cobey et al. 2018). Significant gaps remain in the current governance of AI within the audit profession. For example, should an audit firm report its use of AI? If so, to whom (e.g., its clients, regulators, or users of financial statements)? In how much detail should it report AI usage, issues, and impact on the audit engagement? What monitoring controls should audit firms put in place for the different levels of AI (Assisted, Augmented, and Autonomous)? Who would be technically competent to audit the AI used by auditing firms? Although these questions may initially seem far-fetched, consider what it would mean if 30% of the audit were performed by AI, as projected for 2025 (WEF 2015). Would it then be essential to know which parts of the audit the AI performed (e.g., documentation versus judgment), and how reliable and trustworthy the AI system is?

Currently, the regulator, client, and shareholder may not have full visibility into which audit work is performed by the professional and which is handled by AI. The profession therefore needs to consider the design of appropriate monitoring and reporting controls for the AI-enabled audit environment. It would also be worthwhile to consider whether a shift in the performance of auditing tasks towards automation would affect the overall responsibility of the human auditor, and whether such a shift would alter the public’s expectation of the auditor, creating an expectation gap. One possibility is that when audit firms advertise their use of AI to inspect the full population of transactions, stakeholders’ expectations may escalate from reasonable assurance, which is the statutory requirement of an audit, towards full assurance, which is not the current mandate of auditors.

The PCAOB (2016) observes that “the opaque nature of the audit process and the audit results can cause a number of ‘gaps’ between what investors and other market participants expect or need and what an audit is designed to provide.” It is therefore increasingly vital for all stakeholders who rely on the results of the audit to have some level of transparency into what the audit process entails. In an AI-enabled audit, transparency into the capabilities and limitations of the AI used would help establish a common expectation of the level of assurance the audit provides.

There are several areas that should be considered in fostering effective governance of an AI-enabled audit environment.

(i) A collaborative effort between stakeholders

In an AI-enabled audit, tension may arise between the various stakeholders (i.e., auditors, audit firms, clients, client investors, the audit profession, regulators, and society). As captured in Table 5, each stakeholder group has its own rights and responsibilities and faces its own risks from the implementation of AI, and the rights of one group may pose risks to another. For example, while audit firms have the right to implement AI, such implementation may pose risks to auditors, the profession, and society. Auditors may experience isolation as they increasingly interact with AI in place of their human colleagues. Likewise, while AI may enhance audit quality by making the audit process more effective and efficient, the high cost of implementing AI may widen the gap within the profession between the big audit firms and small and medium-sized audit firms. AI implementation may also harm society: eliminating tasks currently performed by less experienced auditors could lead to job losses and weaken the Certified Public Accountant (CPA) pipeline.

Not only are there tensions between the interests of the different stakeholders, but there is also tension in the implementation of the ethical principles themselves (HLEG 2018). For example, transparency may come at the cost of privacy. While both principles are core to developing trustworthy AI, it may be difficult to ensure both at the same time. To make AI more transparent, developers can provide an explanation facility whereby the AI conveys the basis for its decisions to users. Although such a facility could increase the trust a user places in the AI, privacy may be violated if the explanations can be used to draw inferences about other people. Wachter and Mittelstadt (2019) observe that “data protection law is meant to protect people’s privacy, identity, reputation, and autonomy, but is currently failing to protect data subjects from the novel risks of inferential analytics.” They warn that efforts to provide explanations to AI users may raise ethical issues if these explanations inadvertently disclose others’ private information. It is therefore important to strike a careful balance when governing the ethics of AI.

Thompson (2007) addresses the question of who should regulate the ethics of a profession, highlighting the tension between the model profession, which effectively self-regulates, and the reality in which professionals are market-oriented. Thompson suggests that in such a situation, a process of continuous deliberation and interaction among the stakeholders involved is as important as deciding who has the right to make decisions on ethical matters. Dillard and Yuthas (2001) describe the responsibility ethic as the involvement and interconnectedness of stakeholders, with each party anticipating the behavior and responses of the others when determining its own ethical behavior. Dillard and Yuthas emphasize the need for stakeholders to develop trust in the other stakeholders within the system. When stakeholders perceive that more powerful, self-interested parties exist within the system, “the ethic of responsibility becomes an ethic of manipulation and exploitation.”

There is therefore a need for feedback loops between the stakeholders as part of governance, as illustrated in Fig. 3. These feedback loops are essential when addressing ethical issues that affect multiple stakeholders. Such issues include fee models for AI-enabled audits, the impact of job automation, redefining the role and required competence of the auditor, regulating auditor transparency about AI use, the extent to which AI-generated information is shared and relied on between clients and auditors, and the use of client data to develop algorithms, among others.

Several professional bodies and regulators, such as the PCAOB and IAASB, have commissioned task forces to examine the effect of emerging technology on the profession. These task forces have embraced a multi-stakeholder approach to gaining insight into the changes to regulations and standards that would be required. It would be vital to sustain this engagement of stakeholders as a more enduring effort to maintain dialog around emerging technology. Formalizing stakeholder collaboration in this way would establish the feedback loops required between the stakeholders. It would be equally important to involve academia in this open, inclusive, and progressive collaboration, which would drive proactive, relevant, and practical academic research into the use of emerging technology within the profession.

(ii) Verification and validation mechanism

At the technology level, we propose that a consortium of technologists, ethicists, and policymakers establish best-practice standards for AI development (footnote 5). These standards would address the ethical issues that stem from the technical features of AI. One of the prominent features of AI is its complexity and opacity, which make it difficult for other stakeholders (e.g., auditors) to understand. The technology consortium would be best placed to standardize methodologies for verifying the ethical and structural integrity of AI systems and validating that AI fulfills its intended use (Liu and Yang 2004). Such verification and validation methodologies could guide the accreditation of third-party AI systems that are subsequently used by non-technology firms. Accreditation of third-party AI could enhance the level of trust that users place in an otherwise non-transparent system. A more pressing question is who would perform such accreditation. Ideally, an independent technology body would provide this service. We discuss suggested policies for the verification and validation of AI by such an independent body in the policy recommendations section.

(iii) Monitoring mechanism

At the artifact level, we propose that accounting and auditing regulators set standards for monitoring controls around the use and ethical impact of each of the AI artifacts within the auditing profession. Because the level of risk increases as the level of intelligence and functionality of AI moves from assistive towards autonomous, it is vital that monitoring controls factor in the intelligence and function of the different artifacts. We cover policy recommendations for the monitoring controls within the subsequent section.
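As a minimal illustration of monitoring controls that scale with the level of AI, the Python sketch below applies two tiered rules to a log of AI-performed procedures: output from Assisted or Augmented AI is treated as an exception unless an auditor's review is documented, and every procedure performed by Autonomous AI is routed to continuous monitoring. The log structure, the field names, and the function `monitoring_exceptions` are hypothetical assumptions for illustration, not an existing standard or tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class AILevel(Enum):
    ASSISTED = "Assisted"
    AUGMENTED = "Augmented"
    AUTONOMOUS = "Autonomous"


@dataclass
class LogEntry:
    """One hypothetical entry in an AI operation log."""
    procedure: str
    level: AILevel
    reviewer: Optional[str] = None  # auditor who documented a review, if any


def monitoring_exceptions(log: list[LogEntry]) -> dict[str, list[str]]:
    """Apply two illustrative, level-dependent controls to an AI operation log.

    - Assisted/Augmented output without a documented auditor review is an exception.
    - Every Autonomous procedure is routed to continuous monitoring.
    """
    result: dict[str, list[str]] = {"missing_review": [], "autonomous_monitoring": []}
    for entry in log:
        if entry.level is AILevel.AUTONOMOUS:
            result["autonomous_monitoring"].append(entry.procedure)
        elif entry.reviewer is None:
            result["missing_review"].append(entry.procedure)
    return result


# Usage with a small, made-up log
log = [
    LogEntry("draft work-paper", AILevel.ASSISTED, reviewer="reviewer A"),
    LogEntry("journal entry risk scoring", AILevel.AUGMENTED),
    LogEntry("three-way match", AILevel.AUTONOMOUS),
]
print(monitoring_exceptions(log))
# {'missing_review': ['journal entry risk scoring'], 'autonomous_monitoring': ['three-way match']}
```

Recording the AI level as an explicit field on each log entry is what allows the control to tighten as functionality moves from assistive towards autonomous.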

Policy Recommendations

Technology Policies The development of verification, validation, and accreditation policies would help ensure the physical and psychological safety of humans who interact with these systems (Anderson 2008); the fairness, accuracy, and objectivity of the AI (Sprigman 2018); and that a value-sensitive design approach has been followed in developing the technology (Wright 2011; HLEG 2018). These policies mitigate ethical issues arising from the inherent features of AI, as captured in the first step of our methodology (e.g., AI’s complexity, which can limit transparency and accessibility, and the need for large datasets to train AI, which could result in data protection issues). A consortium of ethicists, technologists, and policymakers should consider what accreditation documentation should accompany AI systems. Such accreditation would be especially important for AI systems that are developed by third parties but used by independent firms that do not have access to the underlying models. The accreditation documentation may include information such as the source of the data used to train the AI, efforts undertaken to overcome known AI issues (e.g., algorithmic bias), and the monitoring controls in place to ensure the accuracy of self-evolving algorithms. Such documentation may be the first step towards demystifying AI.
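To make the proposed documentation more concrete, the following Python sketch models an accreditation record holding the three kinds of information listed above (training data sources, efforts to address known issues such as algorithmic bias, and monitoring controls) and reports which sections are still empty. The class name `AccreditationRecord` and its fields are hypothetical; no standardized schema of this kind is defined in the text.

```python
from dataclasses import dataclass, field


@dataclass
class AccreditationRecord:
    """Hypothetical documentation accompanying a third-party AI system.

    The three content fields mirror the examples named in the text;
    the schema itself is illustrative, not an existing standard.
    """
    system_name: str
    training_data_sources: list[str] = field(default_factory=list)
    known_issue_mitigations: dict[str, str] = field(default_factory=dict)
    monitoring_controls: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Return the names of documentation sections that are still empty."""
        missing = []
        if not self.training_data_sources:
            missing.append("training_data_sources")
        if not self.known_issue_mitigations:
            missing.append("known_issue_mitigations")
        if not self.monitoring_controls:
            missing.append("monitoring_controls")
        return missing

    def is_complete(self) -> bool:
        return not self.missing_sections()


# Usage: a record that documents data sources and bias mitigation but no monitoring controls
record = AccreditationRecord(
    system_name="vendor anomaly model",
    training_data_sources=["anonymized general ledger extracts"],
    known_issue_mitigations={"algorithmic bias": "reweighted training sample"},
)
print(record.missing_sections())  # ['monitoring_controls']
```

A completeness check of this sort would only confirm that each section is documented; judging whether the documented content is adequate would remain a task for the accrediting body.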

Artifact Policies Artifact policies should promote the use of AI, as intended, to enhance audit quality in a standardized manner that benefits all stakeholders. Based on our prior discussion, we offer the following policy suggestions at the artifact level:

(i) Auditing regulators should adopt the verification, validation, and accreditation policies defined at the technology policy level and customize them to the different types of AI artifacts used in auditing. Customizing accreditation policies at the artifact level would lead to the design of monitoring and reporting controls that guide the artifact’s usage by audit firms. One example of such a policy would be the continuous monitoring of Autonomous AI’s operation logs as it performs audit procedures. Another example is documenting the audit professional’s review of the work of Assisted and Augmented AI, to ensure that auditors continue to apply professional skepticism and judgment when using AI.

(ii) Auditing regulators and standard setters should consider the ethical implications of AI applications used in auditing, to provide practical guidance to firms. For example, should standards such as ISA 620: Using the work of an auditor’s expert (IFAC 2009) be enhanced to include AI systems? The standard already addresses the need for the auditor to assess the expert’s competence, capability, and objectivity. Additionally, the standard provides guidance on the evaluation of the expert’s work. As such, this standard would provide an excellent baseline to develop guidance for the auditor’s reliance on AI.

Another standard to consider in light of AI usage is ISA 530: Audit sampling and other means of testing (IFAC 2004). Automated tools can enable auditors to test all transactions within the client’s financial records (Byrnes et al. 2018), an approach that would be superior to traditional sampling. Given increasing computational capabilities, it would be worthwhile for regulators to consider how the profession as a whole can migrate towards AI to enhance audit quality. Before requiring such a migration, it would be necessary to address the challenges faced by those who have attempted this approach. One challenge has been that full population testing yields hundreds or even thousands of exceptions, which may take an unreasonable amount of time for the auditor to investigate (CPAB Exchange 2019). Approaches such as prioritizing high-risk exceptions when the automated system flags a large number of anomalies could be adopted into auditing standards (Issa and Kogan 2014).
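The prioritization idea can be sketched in Python, assuming a simple weighted red-flag score rather than the specific technique of Issa and Kogan (2014): every transaction in the population is tested against a set of rules, each triggered rule adds its weight to the transaction's risk score, and only the highest-scoring exceptions are passed to the auditor. The rules, weights, materiality threshold, and names such as `prioritized_exceptions` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Transaction:
    txn_id: str
    amount: float
    posted: date
    manual: bool


# Hypothetical red-flag rules and weights; a real engagement would derive
# these from its own risk assessment.
MATERIALITY = 50_000.0
RULES = {
    "above_materiality": (lambda t: t.amount >= MATERIALITY, 3),
    "weekend_posting": (lambda t: t.posted.weekday() >= 5, 2),  # Saturday or Sunday
    "round_amount": (lambda t: t.amount % 1_000 == 0, 1),
    "manual_entry": (lambda t: t.manual, 1),
}


def score(t: Transaction) -> int:
    """Sum the weights of every rule the transaction triggers."""
    return sum(weight for check, weight in RULES.values() if check(t))


def prioritized_exceptions(population: list[Transaction], top_n: int) -> list[tuple[str, int]]:
    """Test the full population, then return the top_n exceptions by risk score."""
    scored = [(t.txn_id, score(t)) for t in population]
    exceptions = [pair for pair in scored if pair[1] > 0]  # drop transactions with no red flags
    exceptions.sort(key=lambda pair: pair[1], reverse=True)  # highest risk first
    return exceptions[:top_n]


# Usage with three made-up transactions (9 March 2019 was a Saturday)
population = [
    Transaction("T1", 75_000.0, date(2019, 3, 9), manual=True),
    Transaction("T2", 1_234.56, date(2019, 3, 11), manual=False),
    Transaction("T3", 2_000.0, date(2019, 3, 12), manual=True),
]
print(prioritized_exceptions(population, top_n=5))  # [('T1', 7), ('T3', 2)]
```

Capping the output with `top_n` reflects the concern raised above: the full population is still tested, but the auditor's investigation time is directed at the riskiest exceptions first.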