Abstract

We investigated whether face-specific processes, as indicated by the N170 in event-related brain potentials (ERPs), are modulated by the emotional significance of facial expressions. Results showed that emotional modulations over the temporo-occipital electrodes typically used to measure the N170 were less pronounced when ERPs were referred to the mastoids than when an average reference was applied. This offers a potential explanation for why the literature has so far yielded conflicting evidence regarding effects of emotional facial expressions on the N170. However, the spatial distributions of the N170 and of emotion effects across the scalp were distinguishable at the same time point, suggesting different neural sources for the N170 and emotion processing. We conclude that the N170 component itself is unaffected by emotional facial expressions, and that overlapping activity from the emotion-sensitive early posterior negativity accounts for amplitude modulations over typical N170 electrodes. Our findings are consistent with traditional models of face processing that assume face and emotion encoding to be parallel and independent processes.

Faces are stimuli of vital importance in the human environment and are therefore presumably processed by a dedicated neural network. In addition, emotional expressions have important implications during social interaction and may further raise the relevance of perceived faces. Although a mechanism that encodes both faces and emotion-related information at the same time may seem parsimonious, it could in fact make it difficult to recognize a person independently of his or her emotional expression. Therefore, the mechanisms that account for the high proficiency in face processing might be separate from those that encode emotional meaning (Haxby et al. 2000). The present study provides evidence for this claim by showing a dissociation of face- and emotion-specific processes, suggesting that both have evolved rather independently in humans.

In event-related brain potentials (ERPs), face selectivity is reflected by an enhancement of the so-called N170, a negative deflection peaking around 150–200 ms over temporo-occipital sites (e.g., Bentin et al. 1996; Rossion et al. 2000). Functionally, the processes reflected by the N170 have been related to stages of face-specific structural encoding (Bentin and Deouell 2000; Eimer 2000a, b; Itier and Taylor 2004; but see Thierry et al. 2011), that is, the generation of a holistic internal face representation used by later processes such as the explicit recognition of a person (cf. Bruce and Young 1986; Eimer et al. 2011).

Brain imaging studies in humans suggest that the N170 stems from a network in posterior regions of the extrastriate visual cortex. This network comprises the inferior occipital and the middle lateral fusiform gyri, termed the occipital face area (OFA) and fusiform face area (FFA), respectively, as well as the superior temporal sulcus in the lateral temporal cortex (Haxby et al. 2000; see also Dalrymple et al. 2011; Deffke et al. 2007; Ishai 2008; Itier and Taylor 2004; Sadeh et al. 2010). Although the scalp location of the N170 is consistent with the relative location of this face-selective network, the precise contributions of the different brain regions to the N170 remain controversial. Most consistently, the FFA in the fusiform gyrus has been reported to be selectively activated by faces (e.g., Kanwisher et al. 1997, 1999; Puce et al. 1996). However, according to a recent lesion study, the FFA is neither sufficient nor necessary for generating the face-selective N170 component (Dalrymple et al. 2011). In fact, the N170 may result from simultaneous activations in different brain regions that are recruited to varying degrees depending on the task at hand (Calder and Young 2005; Hoffman and Haxby 2000).

Critically, face-unspecific, domain-general functions may be engaged concurrently with face-specific processes (for review, see Palermo and Rhodes 2007; Ishai 2008). Traditionally, it has been assumed that structural encoding of a face and encoding of its changeable features, such as emotional expressions, take place independently and in parallel (Bruce and Young 1986). In line with this idea, many ERP studies found no influence of emotional expressions on N170 amplitudes; however, at variance with traditional accounts, a substantial number of studies reported the N170 to be modulated by emotional facial expressions (see Table 1), mainly with larger amplitudes for negative relative to neutral faces.

To date, the reasons for these contradictory findings remain unclear. Thus, the question of whether the N170, as an index of face-specific processes, is affected by emotion has not been conclusively answered. Importantly, a closer look at the opposing lines of evidence reveals a striking methodological difference: The large majority of studies that found emotion effects on the N170 amplitude used the average activity across all electrodes as reference (average reference; e.g., Batty and Taylor 2003), whereas most studies that did not find such effects used single or paired common references located at or close to the mastoids (linked earlobes or mastoids; e.g., Ashley et al. 2004; Pourtois et al. 2005).

Although different reference choices do not alter the topography of ERPs across the scalp (i.e., the pattern of potential differences between electrodes), they do change the amplitudes measured at individual electrode sites. This follows from theoretical considerations and has repeatedly been shown over the last four decades (e.g., Brandeis and Lehmann 1986; Koenig and Gianotti 2009; Lehmann 1977; for a recent review, Michel and Murray 2012). For the N170, this was demonstrated empirically by Joyce and Rossion (2005): The more posterior the reference, that is, the closer it is to the electrodes where the N170 is typically measured, the smaller the N170 peak amplitude at these sites. In contrast, a fronto-centro-parietal positivity, the vertex positive potential (VPP), which has been suggested to be a polarity reversal of the posterior N170, became increasingly pronounced with a more posterior reference site. The same should apply to emotional modulations over posterior electrodes in the N170 time window (see Junghöfer et al. 2006).

By definition, the potential at the site of the reference electrode is zero. Hence, any experimental effect is also zero at the location of the reference, and the chances of observing a significant effect at a given electrode are higher the further the reference is from the site where the effect is maximal. If the reference happens to be located at the site of a maximal experimental effect, the effect will appear displaced to entirely different sites. Therefore, using a common reference electrode makes it hard to draw conclusions about the actual location of an effect unless one has a priori knowledge about its distribution. The average reference, in contrast, depends much less on prior assumptions about electrically neutral locations and thus tends to leave effects at the sites where they are in fact maximal (see Koenig and Gianotti 2009).
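A minimal numerical sketch of this principle, using made-up values on a hypothetical five-electrode chain (not data from this study): re-referencing subtracts the reference signal from every channel, so the effect becomes zero wherever the reference sits, and a posterior effect shrinks as the reference moves toward it, while the differences between electrodes (the topography) stay the same.

```python
import numpy as np


def rereference(data: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """Express every channel relative to a reference signal.

    data: (n_channels, n_samples) potentials; ref: (n_samples,) reference potential.
    """
    return data - ref[np.newaxis, :]


def average_reference(data: np.ndarray) -> np.ndarray:
    """Use the mean across all channels (sample by sample) as the reference."""
    return rereference(data, data.mean(axis=0))


# Hypothetical effect (e.g., angry minus neutral, in microvolts) at one time point on a
# toy electrode chain from frontal (row 0) to posterior (row 4); maximal posteriorly.
effect = np.array([[1.0], [0.5], [0.0], [-1.5], [-3.0]])

frontal_ref = rereference(effect, effect[0])     # reference far from the maximum
posterior_ref = rereference(effect, effect[3])   # reference close to the maximum
avg_ref = average_reference(effect)              # channel mean as reference

# Effect at the most posterior electrode under each reference
print(frontal_ref[4, 0], posterior_ref[4, 0], round(avg_ref[4, 0], 2))  # prints: -4.0 -1.5 -2.4
```

All three versions share identical inter-electrode differences (`np.diff` along the channel axis), which is why topographic comparisons do not depend on the reference even though single-electrode amplitudes do.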

Importantly, the emotion-sensitive early posterior negativity (EPN) also arises during the time window of the N170. The EPN consists of a relative increase in negativity over temporo-occipital electrodes for emotional compared with neutral stimuli; it begins around 150 ms after stimulus onset and is most pronounced between 250 and 300 ms. It has been associated with enhanced perceptual encoding of emotional stimuli in extrastriate visual cortex and occurs spontaneously, that is, even when emotion is not relevant to the current task (for review, Schupp et al. 2006; Rellecke et al. 2012). Like the N170 peak amplitude, the EPN amplitude has been reported to be most pronounced for threat-related expressions (e.g., Schupp et al. 2004; Rellecke et al. 2011), and to be observed mainly in ERPs referred to an average reference rather than to mastoid or earlobe common references (Junghöfer et al. 2006). In the latter case, emotion effects seem to occur primarily over fronto-central regions (see Eimer and Holmes 2007), which may reflect a polarity reversal of the EPN (see Junghöfer et al. 2006), similar to the inverse relation between the VPP and the N170.

Since the EPN yields more negative amplitudes for emotional facial expressions over temporo-occipital regions, at least in average-referenced ERPs, it must be considered that the emotion effect is not necessarily carried by the N170 component itself. Instead, emotion effects at typical N170 electrodes may be due to superimposed EPN activity. Note that focussing only on ERPs at posterior sites of the scalp cannot distinguish between the N170 and the EPN, as both components manifest as enhanced negativities. Thus, a more promising approach to separating N170- and EPN-related activity is to compare the components' overall spatial distributions across the scalp (topographies) in the same time window. Differences in scalp distribution indicate that the neural generator configurations of the N170 and EPN differ in at least some respect (Lehmann and Skrandies 1980; McCarthy and Wood 1985; Skrandies 1990). Such a difference may consist in (partially) non-overlapping generator sources, but also in different relative contributions from the same sources. Neither kind of difference would be expected if the N170 and EPN reflected the same process; finding one would therefore support the traditional notion that face- and emotion-specific processes are carried out independently and in parallel (Bruce and Young 1986).

By comparing scalp distributions during face processing within the same time window, two previous studies have already distinguished EPN from N170 activity, showing that emotion effects on temporo-occipital amplitudes were due to the former but not the latter component (Rellecke et al. 2011; Schacht and Sommer 2009). The first aim of the present study was to replicate these findings and to show that face- and emotion-specific encoding are in fact based on independent, parallel processes, as indicated by distinct scalp distributions of the N170 and the EPN, respectively. The second aim was to assess, by applying different reference montages to the same data, to what degree the choice of referencing technique (average reference vs. mastoid reference) alters emotion effects at typical N170 electrodes. This might explain the contradictory reports in the literature regarding emotion effects on the posterior N170 amplitude. Moreover, if topographical differences emerged between emotion effects and the N170 in the same time window, this would suggest that previously reported emotional modulations of the N170 in fact reflect superimposed EPN activity.

The first part of this investigation involved a reanalysis of data from the study by Rellecke and co-workers (2011), in which faces with angry, happy, and neutral expressions had been presented in a simple face–word decision task requiring only superficial processing. In that study, we had compared N170 and EPN effects based on ERP mean amplitudes averaged across 150–200 ms after face onset. In contrast, other studies focussed on emotion effects on the N170 peak amplitude rather than on activity averaged over a fixed interval (see Table 1). To allow a closer comparison with previous reports, the current reanalysis assessed emotion effects and the corresponding topographies at the exact time of the N170 peak over temporo-occipital electrodes.

The second experiment used a design similar to that of the original study by Rellecke et al. (2011) but additionally included inverted images of the same stimuli. Inversion preserves all physical properties of an image while disrupting the holistic configuration of diagnostic facial features relevant for expression recognition (Bartlett and Searcy 1993), and thus interferes with emotion processing in faces (cf. Ashley et al. 2004; Eimer and Holmes 2002). Since emotional expression categories naturally differ in physical stimulus characteristics, face inversion is useful for ascertaining the validity of early emotion effects: If emotion effects occur only for upright but not for inverted faces, they are likely to reflect the recognition of emotional meaning rather than physical differences between expression types.

Experiment 1

Methods

Participants

Twenty-four participants between 18 and 35 years of age (11 female) contributed data to the experiment. They were reimbursed with course credits or payment. All participants were right-handed (according to Oldfield 1971), native German speakers with normal or corrected-to-normal vision and without any psychotropic medication or any history of psychiatric condition according to self-report.

Stimuli

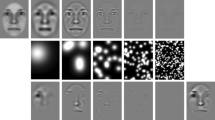

Colour portraits of 50 different persons (25 female) displaying angry, happy, and neutral expressions were taken from the Karolinska database (Lundqvist et al. 1998) and the NimStim Face Stimulus Set (Tottenham et al. 2009), yielding 150 pictures in all. All images were edited to a uniform format by applying a mask with an elliptical aperture that framed the stimuli within an area of 126 × 180 pixels (4.45 × 6.35 cm) and left only the facial area visible (see Fig. 1a). Luminance (as computed by Photoshop™) and contrast (SD of luminance divided by mean luminance; cf. Delplanque et al. 2007) of each image were automatically adjusted in Photoshop by eliminating extreme pixels; multivariate analyses of variance confirmed that facial expressions did not differ on these parameters (mean luminance and contrast: angry = 122 and 0.3; happy = 123 and 0.3; neutral = 123.2 and 0.3), Fs(2,147) ≤ 2.4, ps = .690 and .095, respectively. Each face stimulus was presented at the centre of the screen on a dark grey background and appeared only once during the experiment.
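The contrast measure above (SD of luminance divided by mean luminance) can be sketched as follows. This is an illustration under assumptions, not the procedure actually used: the luminance formula Photoshop applied is not stated, so the Rec. 601 luma weights below are an assumed stand-in, and restricting the computation to an elliptical aperture (the hypothetical helper `ellipse_mask`) is likewise an assumption.

```python
import numpy as np


def luminance(rgb):
    """Per-pixel luminance from an RGB array of shape (..., 3).

    Assumption: Rec. 601 luma weights; the exact formula Photoshop used is not stated.
    """
    rgb = np.asarray(rgb, dtype=float)
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114


def ellipse_mask(width=126, height=180):
    """Hypothetical helper: True inside an ellipse inscribed in a width x height frame."""
    y, x = np.mgrid[0:height, 0:width]
    cy, cx = (height - 1) / 2, (width - 1) / 2
    return ((x - cx) / (width / 2)) ** 2 + ((y - cy) / (height / 2)) ** 2 <= 1.0


def luminance_and_contrast(rgb, mask=None):
    """Mean luminance and contrast, defined as SD of luminance divided by its mean."""
    lum = luminance(rgb)
    if mask is not None:
        lum = lum[mask]          # only pixels inside the aperture (assumed)
    mean = lum.mean()
    return mean, lum.std() / mean


# Usage with a uniform grey stand-in image of the stimulus size (180 rows x 126 columns)
grey = np.full((180, 126, 3), 128.0)
m, c = luminance_and_contrast(grey, ellipse_mask())
```

Per-image values computed this way could then be compared across the three expression categories, as the multivariate analysis above does for the published parameters.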

In addition, 150 word stimuli were included (white Arial letters; mean length: 6.3 ± 1.5 letters, 3.1 ± 0.6 syllables; height: 8 mm; maximal width: 39 mm) for purposes other than the present report (see, Rellecke et al. 2011).

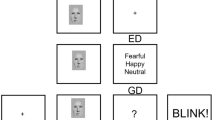

Procedure

The study was performed in accordance with the Declaration of Helsinki. After giving written informed consent, participants were seated at a viewing distance of 55 cm in front of a computer screen in a dimly lit, sound-attenuated room. Participants were instructed to classify each stimulus as “face” or “word” as quickly and accurately as possible by pressing one of two horizontally arranged buttons. Each trial started with a fixation cross presented for 500 ms at the centre of the screen, followed by a face or word displayed for 1,000 ms at the same location. To minimize ocular artifacts, participants were asked to look at the fixation cross before stimulus appearance and to avoid movements and eye blinks during a trial. Responses had to be given within 1,500 ms after stimulus onset, and the next trial always started 1,500 ms after stimulus onset. A practice block of about 12 trials was conducted using stimuli not used in the experiment proper; practice trials were repeated until performance was adequate. The main experiment consisted of 300 trials (150 faces and 150 words) divided into six blocks of 50 trials each, with a break after each block. The stimulus–response mapping was counterbalanced across participants, and stimulus presentation was fully randomized. Each stimulus appeared once per participant, yielding 50 trials per experimental cell.
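The trial structure described above can be summarized in a short sketch. The helper `build_session`, the stimulus labels, and the rule for counterbalancing (alternating the button assignment by participant number) are hypothetical illustrations; the text states only that the mapping was balanced across participants and that presentation was fully randomized.

```python
import random

FIXATION_MS = 500              # fixation cross before each stimulus
STIMULUS_MS = 1000             # face or word on screen
ONSET_TO_NEXT_TRIAL_MS = 1500  # response window; next trial starts 1,500 ms after onset


def build_session(face_ids, word_ids, participant, n_blocks=6, seed=None):
    """Hypothetical helper: shuffle all trials once and split them into equal blocks."""
    trials = [("face", s) for s in face_ids] + [("word", s) for s in word_ids]
    if len(trials) % n_blocks:
        raise ValueError("trials must divide evenly into blocks")
    random.Random(seed).shuffle(trials)          # full randomization, each stimulus once
    size = len(trials) // n_blocks
    blocks = [trials[i * size:(i + 1) * size] for i in range(n_blocks)]
    # Assumed counterbalancing rule: alternate button assignment by participant number
    if participant % 2:
        mapping = {"face": "left", "word": "right"}
    else:
        mapping = {"face": "right", "word": "left"}
    return blocks, mapping


faces = [(person, emo) for person in range(50) for emo in ("angry", "happy", "neutral")]
words = [f"word_{i}" for i in range(150)]       # placeholder labels for the 150 words
blocks, mapping = build_session(faces, words, participant=1, seed=1)
trial_ms = FIXATION_MS + ONSET_TO_NEXT_TRIAL_MS   # one trial cycle: 2,000 ms
```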

EEG Recording and Pre-Processing

The electroencephalogram (EEG) was recorded from 56 electrodes according to the extended 10–20 system (Pivik et al. 1993). Most electrodes were mounted within an electrode cap (Easy-Cap™). The left mastoid electrode (A1) was used as initial reference and AFz served as ground. Four additional external electrodes were used for monitoring the vertical and horizontal electrooculogram. Impedance was kept below 5 kΩ, using ECI electrode gel (Expressive Constructs Inc., Worcester, MA). All signals were amplified (Brain Amps) with a band pass of 0.032–70 Hz; the sampling rate was 250 Hz. Offline, the continuous EEG was corrected for blinks with BESA software (Brain Electrical Source Analysis, MEGIS Software GmbH) and segmented into epochs from −200 to +1,000 ms relative to stimulus onset. These epochs were recalculated to average reference (AR) and to average mastoid reference (MR; the mean of the left and right mastoid), yielding two separate sets of ERPs comprising 57 and 55 electrodes, respectively. ERPs were computed from the edited data set and baseline-corrected relative to the 200-ms pre-stimulus interval. Epochs containing incorrect responses were discarded, as were epochs with amplitudes exceeding −200 or +200 μV or voltage steps larger than 100 μV between consecutive sampling points in any channel (artefacts).
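The re-referencing, baseline, and rejection steps above can be sketched with NumPy, assuming epochs stored as an array of shape (epochs, channels, samples) in microvolts. The channel labels are hypothetical, and treating A2 as one of the 56 recorded channels is an inference from the stated counts (56 recorded plus the implicit A1 gives 57 for AR; dropping both mastoids gives 55 for MR). Applying the rejection criteria to the AR epochs is also an assumption, and the BESA blink correction is not reproduced.

```python
import numpy as np

SFREQ = 250.0                                         # Hz
TIMES = np.arange(-200.0, 1000.0, 1000.0 / SFREQ)     # ms; 300 samples (end excluded, assumed)


def add_implicit_reference(epochs, ch_names, ref_name="A1"):
    """Append the online reference as an explicit all-zero channel (0 by definition)."""
    zeros = np.zeros((epochs.shape[0], 1, epochs.shape[2]))
    return np.concatenate([epochs, zeros], axis=1), ch_names + [ref_name]


def to_average_reference(epochs):
    """AR: subtract the mean across all channels at every sample."""
    return epochs - epochs.mean(axis=1, keepdims=True)


def to_linked_mastoids(epochs, ch_names, mastoids=("A1", "A2")):
    """MR: subtract the mean of both mastoids, then drop the mastoid channels."""
    idx = [ch_names.index(m) for m in mastoids]
    ref = epochs[:, idx, :].mean(axis=1, keepdims=True)
    keep = [i for i in range(len(ch_names)) if i not in idx]
    return (epochs - ref)[:, keep, :], [ch_names[i] for i in keep]


def baseline_correct(epochs, times, tmin=-200.0, tmax=0.0):
    """Subtract each channel's mean over the pre-stimulus interval [tmin, tmax)."""
    window = (times >= tmin) & (times < tmax)
    return epochs - epochs[:, :, window].mean(axis=2, keepdims=True)


def artifact_free(epochs, max_abs=200.0, max_step=100.0):
    """True for epochs without |amplitude| > max_abs or sample-to-sample steps > max_step."""
    too_large = np.abs(epochs).max(axis=(1, 2)) > max_abs
    too_steep = np.abs(np.diff(epochs, axis=2)).max(axis=(1, 2)) > max_step
    return ~(too_large | too_steep)


# Usage with simulated epochs recorded against A1 (hypothetical channel labels)
rng = np.random.default_rng(0)
names = [f"E{i}" for i in range(55)] + ["A2"]          # 56 recorded channels
raw = rng.normal(0.0, 5.0, size=(10, 56, TIMES.size))
full, full_names = add_implicit_reference(raw, names)  # 57 channels including A1
ar = baseline_correct(to_average_reference(full), TIMES)
mr, mr_names = to_linked_mastoids(full, full_names)
mr = baseline_correct(mr, TIMES)
keep = artifact_free(ar)
```

Because A1 is zero in the recorded data, the linked-mastoid reference reduces to subtracting half of A2 from every channel.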

Data Analysis

N170 peak amplitudes were automatically detected as local minima between 100 and 200 ms at P10, P9, PO10, and PO9, separately, in both AR and MR data. These electrodes were chosen because they showed the largest N170 peak amplitudes among electrode sites that were symmetrical across hemispheres. Peak amplitudes were analyzed by means of repeated measures analysis of variance (ANOVA; α = .05) with the factors Reference (AR, MR) and Emotion (angry, happy, neutral); Electrode was included as an additional four-level within-subject factor. For peak amplitudes showing a significant main effect of Emotion and/or an Emotion × Reference interaction, Bonferroni-adjusted post hoc tests were performed by means of separate ANOVAs on AR and MR data, involving pair-wise comparisons of emotion categories.
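A minimal sketch of window-restricted peak detection. The text specifies only "local minima between 100 and 200 ms"; choosing the most negative local minimum in the window, and falling back to the plain window minimum when no interior minimum exists, are assumptions of this sketch.

```python
import numpy as np


def n170_peak(erp, times, tmin=100.0, tmax=200.0):
    """Latency (ms) and amplitude of the most negative local minimum in [tmin, tmax].

    erp: 1-D ERP of one electrode and condition; times: matching latencies in ms.
    Falls back to the window minimum if no local minimum lies in the window (assumed).
    """
    erp = np.asarray(erp, dtype=float)
    idx = np.flatnonzero((times >= tmin) & (times <= tmax))
    minima = [i for i in idx
              if 0 < i < erp.size - 1 and erp[i] < erp[i - 1] and erp[i] <= erp[i + 1]]
    best = min(minima or list(idx), key=lambda i: erp[i])
    return float(times[best]), float(erp[best])


# Synthetic ERP sampled at 250 Hz: an N170-like trough at 160 ms plus a larger,
# later negativity at 300 ms that lies outside the search window
times = np.arange(-200.0, 1000.0, 4.0)
erp = (-10.0 * np.exp(-((times - 160.0) / 15.0) ** 2)
       - 20.0 * np.exp(-((times - 300.0) / 30.0) ** 2))
latency, amplitude = n170_peak(erp, times)
```

Restricting the search to the window matters here: the global minimum of this synthetic waveform is the later negativity, not the N170.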

For those pairs of emotion categories that yielded significantly different peak amplitudes at N170 electrodes (P10, P9, PO10, and PO9), ERPs across the scalp at the exact time point of the mean peak latency (averaged across N170 electrodes, for each participant separately) were analyzed by means of repeated measures ANOVAs with the within-subject factors Emotion (two levels) and Electrode (57 levels in AR data, 55 levels in MR data).
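Extracting the scalp map at each participant's mean peak latency might look like the sketch below. The helper name `map_at_mean_peak` and the choice of the nearest sample to the mean latency are assumptions; the simulated data only illustrate the array layout (participants × conditions × electrodes) that such an Emotion × Electrode ANOVA would take as input.

```python
import numpy as np


def map_at_mean_peak(erps, times, peak_latencies):
    """Scalp map at the sample nearest to a participant's mean N170 peak latency.

    erps: (n_channels, n_samples) ERP of one participant and condition.
    peak_latencies: that participant's peak latencies (ms) at P10, P9, PO10, PO9.
    """
    t = float(np.mean(peak_latencies))
    i = int(np.argmin(np.abs(times - t)))
    return float(times[i]), erps[:, i]


# Simulated example: three hypothetical participants, two conditions, 57 electrodes (AR)
rng = np.random.default_rng(0)
times = np.arange(-200.0, 1000.0, 4.0)
maps = []
for subject in range(3):
    lat = rng.choice(times[(times >= 150) & (times <= 170)], size=4)
    angry, neutral = rng.normal(size=(2, 57, times.size))
    maps.append([map_at_mean_peak(angry, times, lat)[1],
                 map_at_mean_peak(neutral, times, lat)[1]])
maps = np.asarray(maps)   # (participants, conditions, electrodes)
```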

To test whether Emotion effects were distinguishable from the N170 with regard to their topography, amplitude differences were eliminated by vector scaling (McCarthy and Wood 1985). Vector scaling adjusts for effects of amplitude by dividing the voltage at each electrode by the root mean square of activity across all electrodes (i.e. global field power, GFP; Skrandies 1990) for the same time point and condition. Therefore, one can infer that any difference across electrodes between two conditions is due to the spatial distribution of ERPs rather than amplitude. After adjusting for amplitude, repeated measures ANOVAs were performed, including all electrodes as within-subject factor levels. Note that in order to avoid comparing overlapping data sets, different emotion conditions were used for the calculation of the N170 and Emotion effect topographies (e.g. N170 to happy vs. Emotion effect of angry minus neutral expressions).
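Vector scaling as described above can be sketched in a few lines: each map is divided by its root mean square across electrodes, so maps that differ only in amplitude become identical, whereas maps with different shapes remain different. For average-referenced data the mean across electrodes is zero, so this RMS equals the spatial standard deviation (global field power). The simulated arrays below are placeholders, not study data.

```python
import numpy as np


def vector_scale(topo):
    """Divide scalp map(s) by their root mean square across electrodes (last axis)."""
    topo = np.asarray(topo, dtype=float)
    return topo / np.sqrt(np.mean(topo ** 2, axis=-1, keepdims=True))


# Toy maps: b has the same shape as a at four times the amplitude; c has a different shape
a = np.array([2.0, -1.0, -1.0])
b = 4.0 * a
c = np.array([1.0, 1.0, -2.0])

# Per-participant use with simulated (participant x electrode) maps: the N170 map for one
# condition and the Emotion effect from two other conditions, as in the text
rng = np.random.default_rng(0)
n170_happy = rng.normal(size=(24, 57))
angry, neutral = rng.normal(size=(2, 24, 57))
scaled = np.stack([vector_scale(n170_happy), vector_scale(angry - neutral)], axis=1)
```

A Map × Electrode interaction computed on `scaled` then tests for differences in shape rather than amplitude.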

The average reference sets the mean ERP amplitude across all electrodes to zero within each condition. Therefore, for repeated measures ANOVAs on AR data that include all electrodes across the scalp, only effects in interaction with Electrode are meaningful. To keep results comparable across the two reference conditions, only interactions with Electrode are reported for both AR and MR data. Degrees of freedom were Huynh–Feldt corrected to account for violations of the sphericity assumption; we report the original degrees of freedom, the correction factor epsilon (ε), and corrected p-values.
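For readers who want to reproduce the correction factor, here is a sketch of the standard Greenhouse–Geisser estimate and the single-group Huynh–Feldt formula for one within-subject factor. It is not the software used in the study, and statistics packages differ slightly in the exact Huynh–Feldt variant they implement. For a two-level factor crossed with Electrode, the interaction is equivalent to the Electrode effect on difference scores, so epsilon can be computed from those differences.

```python
import numpy as np


def orthonormal_contrasts(k):
    """k x (k-1) matrix of orthonormal contrasts (columns orthogonal to the ones vector)."""
    basis = np.column_stack([np.ones(k), np.eye(k)[:, : k - 1]])
    q, _ = np.linalg.qr(basis)           # first column of q spans the constant vector
    return q[:, 1:]


def gg_epsilon(x):
    """Greenhouse-Geisser epsilon for one within-subject factor; x: (n_subjects, k_levels)."""
    k = x.shape[1]
    c = orthonormal_contrasts(k)
    v = c.T @ np.cov(x, rowvar=False) @ c     # covariance of the orthonormal contrasts
    return np.trace(v) ** 2 / ((k - 1) * np.trace(v @ v))


def hf_epsilon(x):
    """Huynh-Feldt epsilon (single-group formula), capped at 1."""
    n, k = x.shape
    e = gg_epsilon(x)
    denom = (k - 1) * (n - 1 - (k - 1) * e)
    if denom <= 0:
        raise ValueError("formula requires n - 1 > (k - 1) * epsilon_GG")
    return min((n * (k - 1) * e - 2) / denom, 1.0)


rng = np.random.default_rng(1)
scores = rng.normal(size=(24, 5))              # simulated: 24 participants x 5 levels
eps_gg, eps_hf = gg_epsilon(scores), hf_epsilon(scores)

# Emotion (2 levels) x Electrode interaction via simulated difference maps (24 x 57)
angry, neutral = rng.normal(size=(2, 24, 57))
eps_interaction = gg_epsilon(angry - neutral)
```

The corrected p-value is then obtained by multiplying both degrees of freedom by ε before looking up the F distribution.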

Results

Reaction times (M = 424 ms) and error rates (missed and incorrect responses, M = 2.6 %) were both unaffected by Emotion, Fs(2,46) < 1.8, ps ≥ .174 (see Rellecke et al. 2011).

N170 peak latency did not vary between the selected electrodes, F(3,69) = 1.7, p = .200 (mean peak latency: M = 158 ms, range 156–163 ms). A main effect of Reference on peak amplitudes was observed, F(1,23) = 69.7, p < .001, ηp² = .752. As can be seen in Fig. 2a, this effect was due to much more pronounced peak amplitudes in AR relative to MR data. There was also an effect of Emotion, F(2,46) = 21.0, p < .001, ηp² = .477, which interacted with Reference, F(2,46) = 13.8, p < .001, ηp² = .376. Post hoc comparisons of emotion categories revealed that peak amplitudes in both AR and MR data differed significantly for angry (M = −15.5 and −8.9 μV) compared with neutral (M = −13.5 and −8.1 μV) and happy expressions (M = −13.8 and −8.2 μV); however, the Emotion effect was stronger in AR than in MR data, Fs(2,46) = 26.1 versus 7.2, ε (AR only) = .832, ps < .01, ηp²s = .532 versus .239. As depicted in Fig. 2a, AR and MR data both showed increased peak amplitudes between 100 and 200 ms for angry relative to neutral and happy expressions, but these effects were more pronounced in average-referenced ERPs.
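The reported effect sizes can be cross-checked from each F ratio and its degrees of freedom, since partial eta squared equals F·df_effect / (F·df_effect + df_error). A Huynh–Feldt correction scales both degrees of freedom by the same ε, so it cancels in this ratio. Small discrepancies arise from rounding of the published F values (e.g., F = 13.8 gives .375 against the reported .376).

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio and its (uncorrected) degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


# Cross-check against values reported for Experiment 1
for f, df1, df2, reported in [(69.7, 1, 23, .752), (21.0, 2, 46, .477), (9.4, 56, 1288, .290)]:
    print(f"F({df1},{df2}) = {f}: {partial_eta_squared(f, df1, df2):.3f} (reported {reported:.3f})")
```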

Fig. 2 Effects of emotional facial expressions and reference at the time of the N170 peak amplitude. ERPs to angry (red), happy (blue), and neutral (grey) facial expressions averaged across typical N170 electrodes (P10, P9, PO10, PO9) in average reference (solid lines) and mastoid reference (dashed lines). For illustration purposes, ERP curves were filtered at 15 Hz. Larger maps (range: −16 to 16 μV) depict the topography of the N170 (averaged across facial expressions); surrounding smaller maps (range: −2 to 2 μV) depict Emotion effects of angry relative to neutral (left) and happy expressions (right) at the same time point in average reference (bottom) and mastoid reference (top). a In Experiment 1, peak amplitudes differed for angry relative to neutral and happy expressions in both average reference and mastoid reference. b In Experiment 2, peak amplitudes differed between angry and neutral expressions (upright faces) only in average reference. Inversion of the stimuli completely eliminated this emotion effect (right graph).

Effects across all electrodes were also significant for angry compared with neutral and happy expressions in both AR, Fs(56,1288) = 9.4 and 9.5, εs = .079 and .116, ps < .001, ηp²s = .290 and .292, and MR data, Fs(54,1242) = 5.9 and 9.9, εs = .075 and .090, ps < .001, ηp²s = .203 and .300. As can be seen in Fig. 2a, Emotion effects were associated with an increased posterior negativity accompanied by a fronto-centro-parietal positivity for angry relative to other facial expressions in both AR and MR data. Notably, the posterior negativity was stronger in AR data, whereas the anterior positivity was more pronounced in MR data.

Comparison of vector-scaled topographies of the N170 (for happy or neutral expressions) and of the emotion effects (angry relative to neutral or angry relative to happy expressions, respectively) yielded significant differences in both AR, Fs(56,1288) = 5.8 and 5.7, εs = .131 and .147, ps < .001, ηp²s = .202 and .198, and MR data, Fs(54,1242) = 7.5 and 6.3, εs = .143 and .163, ps < .001, ηp²s = .247 and .215. Note that topographies are independent of the reference, so AR and MR data yield highly similar results; the slightly different F-values for topographical comparisons within each data set are due to the different numbers of included electrodes. As apparent from Fig. 2a, topographies of the Emotion effects showed more pronounced centro-parietal positivities than the N170 component in both AR and MR data.

Discussion

As indicated in the introduction, we hypothesized that ERP effects over posterior sites of the scalp would be more pronounced with an average than with a mastoid reference. This was confirmed by larger overall peak amplitudes over typical N170 electrodes, and by their stronger modulation by emotional facial expressions, in average- relative to mastoid-referenced data. Therefore, effects of emotional facial expressions coinciding with the N170 peak over temporo-occipital electrodes appear more likely to surface in average-referenced ERPs. In contrast, in ERPs referred to the mastoids, posterior peak amplitudes and their modulation by Emotion were less pronounced at N170 electrodes owing to the spatial proximity of the reference site (cf. Junghöfer et al. 2006).

Importantly, comparison of vector-scaled topographies showed that the overall spatial distributions of the Emotion effect and the N170 differed. This suggests that effects of emotional facial expressions on amplitudes at typical N170 electrodes do not reflect a modulation of the N170 component itself (or at least not solely of the N170) but rather superimposed activity of an emotion-sensitive component. As hypothesized, a likely candidate is the EPN, which is most pronounced for threat-related expressions (e.g., Schupp et al. 2004; Rellecke et al. 2011, 2012), so increased negative amplitudes for angry relative to other facial expressions can be expected at the time of the N170 peak.

Topographies of the EPN and N170 seemed to differ mainly in the distribution of an accompanying fronto-centro-parietal positivity. Such fronto-centro-parietal positivities are very likely polarity reversals of the EPN and N170, respectively (Joyce and Rossion 2005; Junghöfer et al. 2006). The different scalp topographies of the EPN and N170 suggest at least partly distinct neural generators for emotion- and face-specific encoding, respectively, which is in accordance with traditional models of face processing that assume both mechanisms to proceed in parallel and independently (cf. Bruce and Young 1986).

Experiment 2

Since inversion is known to interfere with face and emotional expression processing by disrupting the coherence of diagnostic facial features (e.g., Eimer 2000b; Eimer and Holmes 2002; Haxby et al. 1999), we also included inverted stimuli in “Experiment 2”. If the increased negativity for emotional (angry) expressions over N170 electrodes was indeed based on the recognition of emotional meaning in faces, it should occur only for upright, not for inverted faces. We again expected emotion effects and the N170 peak amplitude over temporo-occipital sites to be more pronounced in average- than in mastoid-referenced data.

Methods

Twenty participants between 21 and 32 years of age (six female) contributed data to the experiment. They were reimbursed with course credits or payment. All participants were right-handed (Oldfield 1971), native German speakers with normal or corrected-to-normal vision and without any psychotropic medication or any history of psychiatric condition according to self-report.

The same stimuli were used as in “Experiment 1”, but each face and word stimulus was also presented inverted, that is, flipped upside down (see Fig. 1a, b), yielding a total stimulus set of 600 items comprising 150 upright and 150 inverted images each for faces and words.
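The claim that inversion leaves physical image properties intact can be checked directly: flipping only rearranges pixels, so luminance statistics such as mean and contrast are unchanged. In the sketch below, `np.flipud` is an assumed stand-in for the flipping operation and the random array is a hypothetical luminance image; a 180° rotation (`np.rot90(img, 2)`) would preserve these statistics equally.

```python
import numpy as np


def invert(img):
    """Turn an image upside down by reversing its row order (assumed implementation)."""
    return np.flipud(img)


def luminance_stats(lum):
    """Mean luminance and contrast (SD / mean) of a 2-D luminance array."""
    return lum.mean(), lum.std() / lum.mean()


rng = np.random.default_rng(0)
face = rng.uniform(0, 255, size=(180, 126))   # stand-in luminance image (hypothetical)
upside_down = invert(face)
```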

The procedure was identical to that of “Experiment 1”, except that the main experiment consisted of 600 trials divided into 12 blocks. EEG recording, pre-processing, and statistical analyses of ERPs were identical to “Experiment 1”, except for the inclusion of Orientation (upright, inverted) as an additional factor.

Results

Reaction times (M = 404 ms) and mean error rates (M = 2.3 %) were both unaffected by Emotion and Orientation, Fs ≤ 2.3, ps ≥ .145.

Again, N170 peak latencies did not differ between the selected electrodes, F(3,57) = 2.0, p = .147 (mean peak latency: M = 170 ms, range 164–174 ms). As in “Experiment 1”, a main effect of Reference was observed for peak amplitudes at N170 electrodes, F(1,19) = 74.7, p < .001, ηp² = .797. As can be seen in Fig. 2b, this difference was again due to larger peak amplitudes in AR relative to MR data. This time, there was no main effect of emotional facial expression on peak amplitudes over N170 electrodes, F(2,38) = 1.2, p = .305. However, an Emotion × Reference interaction was again observed, F(2,38) = 5.1, p < .05, ηp² = .211. In addition, an Emotion × Orientation interaction emerged, F(2,38) = 6.1, p < .01, ηp² = .244. Post hoc tests showed that these interactions were due to emotional facial expressions affecting peaks at N170 electrodes only for upright stimuli in AR data, F(2,38) = 6.5, p < .05, ηp² = .254 (with significantly larger amplitudes for angry [M = −13.4 μV] relative to neutral expressions [M = −12.1 μV] and a trend for angry versus happy expressions [M = −12.5 μV], p = .072), whereas Emotion effects were entirely absent in MR data and did not occur for inverted faces in AR data, Fs(2,38) ≤ 3.8, ps ≥ .064. As can be seen in Fig. 2b, face inversion eliminated the Emotion effect on peak amplitudes at N170 electrodes in average-referenced ERPs.

For angry relative to neutral upright facial expressions in AR data, ERPs across all electrodes also differed significantly, F(56,1064) = 3.1, ε = .098, p < .01, ηp² = .142. As can be seen in Fig. 2b (AR data), angry expressions induced an increased temporo-occipital negativity, this time accompanied by an anterior positivity centred more strongly on centro-parietal regions. Again, comparison of vector-scaled topographies yielded significant differences between the Emotion effect (angry minus neutral) and the N170 component (for happy expressions), F(56,1064) = 6.9, ε = .111, p < .001, ηp² = .267 (AR data).

Discussion

Once again, peak amplitudes at N170 electrodes and their emotional modulation were more pronounced when ERPs were referred to an average reference than when a mastoid reference was applied. Again, the overall spatial distributions of the original N170 and the Emotion effect differed significantly at the same time point, suggesting that the N170 component itself is unaffected by emotion. Instead, emotional amplitude modulations coinciding with the N170 peak over temporo-occipital electrodes seem to originate from superimposed EPN activity (Schacht and Sommer 2009; Rellecke et al. 2011). A further finding is that Emotion effects occurred only for upright but not for inverted faces. This supports the notion that the EPN is based on the processing of emotional meaning rather than of low-level physical features in facial stimuli.

However, unlike in the first experiment, ERPs referred to the mastoids did not show any Emotion effect on peak amplitudes over N170 electrodes this time. Notably, Emotion effects were smaller overall in “Experiment 2” than in “Experiment 1”: The effect of angry relative to neutral expressions across electrodes was larger in “Experiment 1” (ηp² = .290) than in “Experiment 2” (upright faces; ηp² = .142). Thus, in mastoid-referenced data, the likelihood of obtaining emotional modulations of peak amplitudes between 100 and 200 ms at N170 electrodes seems to decrease with the overall impact of emotional facial expressions on ERPs; that is, the closer the reference is to posterior regions and the weaker the emotion effect across electrodes, the less likely it is that Emotion effects are detected at temporo-occipital electrodes at the time of the N170 component (cf. Junghöfer et al. 2006).

The overall decrease of the effect of emotional facial expressions in “Experiment 2” relative to “Experiment 1” is most likely due to the use of inverted stimuli. Because task and trial sequencing were exactly the same as in “Experiment 1”, the intermixed, random presentation of inverted and upright stimuli is the most plausible reason for the reduced impact of emotional facial expressions. Since inverted and upright faces are processed differently (Haxby et al. 1999, 2000), presenting stimuli of different orientations in random order could have changed the participants' implicit processing strategy (cf. Rellecke et al. 2012). The scalp distribution of the Emotion effects also seemed to differ, with the anterior positivity centred more strongly on centro-parietal electrodes in “Experiment 2”, which further suggests a somewhat different quality of emotion processing in the two experiments.

General Discussion

We investigated whether face-specific processing as indicated by the N170 entails the encoding of emotion. Previous studies on this question have yielded conflicting evidence, which is likely due to the different referencing procedures applied (cf., Junghöfer et al. 2006).

Replicating findings of Joyce and Rossion (2005), we found that the face-elicited N170 peak amplitude over typical temporo-occipital electrodes decreased when the reference was located at the mastoids relative to when the average reference was used. The same held true for effects of emotional facial expressions: Emotion effects coinciding with the N170 peak at temporo-occipital electrodes were more pronounced when ERPs were referred to the average reference than when the mastoids were used. More generally, the closer the recording and reference sites are located to each other, the less pronounced effects in the ERP become, because activity picked up at both sites cancels out in their potential difference. This offers an explanation as to why many studies using the average reference reported emotional modulations of the N170 peak amplitude, while others using more posterior reference sites did not find such an effect. Moreover, it appears conceivable that reports of emotional expression effects in the N170 time window over anterior sites in linked-earlobe reference montages (for review, Eimer and Holmes 2007) reflect the positive pole of the EPN, shifted toward frontal electrodes by a reference located at or near the mastoids.
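The dependence on reference site follows from the fact that every channel records a potential difference, so activity shared by the recording and reference sites cancels. The toy sketch below contrasts the average reference with a linked-mastoid reference for an invented emotion-minus-neutral difference map; the channel set, the values, and the assumption that the mastoids pick up part of the posterior negativity are all hypothetical, not data from this study:

```python
def rereference(potentials, reference_sites):
    """Return every channel relative to the mean of `reference_sites`.
    Passing all channels as reference sites yields the average reference."""
    ref = sum(potentials[s] for s in reference_sites) / len(reference_sites)
    return {site: value - ref for site, value in potentials.items()}


# Hypothetical emotion-minus-neutral difference map (µV) at the N170 peak.
# The mastoids (M1, M2) are assumed to pick up part of the posterior negativity.
effect = {"Fz": 1.0, "Cz": 0.8, "Pz": 0.2, "P7": -1.5, "P8": -2.0,
          "O1": -1.0, "O2": -1.2, "M1": -0.8, "M2": -1.0}

avg_ref = rereference(effect, list(effect))
mastoid_ref = rereference(effect, ["M1", "M2"])

for site in ("P8", "Fz"):
    print(f"{site}: average {avg_ref[site]:.2f}, mastoid {mastoid_ref[site]:.2f}")
# P8: average -1.39, mastoid -1.10   (posterior negativity shrinks)
# Fz: average 1.61, mastoid 1.90     (frontal positivity grows)
```

In this toy map the posterior effect is attenuated under the mastoid reference while the frontal positivity is enlarged, which mirrors the pattern discussed above. In real recordings, the average reference only approximates a reference-free potential, and its quality depends on electrode coverage.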

Note that the site of reference alone does not completely determine whether effects of emotional facial expressions are observed over temporo-occipital electrodes in the N170 time window. It is still possible to obtain emotion effects with a mastoid reference, as we did in “Experiment 1” (see also Williams et al. 2006), or to find no emotion effect with the average reference (e.g., Dennis and Chen 2007). This hints at other experimental factors relevant to the occurrence of increased posterior negativities for emotional facial expressions at the N170 peak; most likely, these are factors that affect the overall magnitude of the emotion effect in the N170 time window. We thus conclude that the site of reference merely determines the likelihood that effects of emotional facial expressions are detected at the N170 peak.

Most importantly, given that the overall spatial distributions of the N170 and the Emotion effect differed at the same time point (in both mastoid- and average-referenced data), emotional modulations of the N170 peak amplitude as reported by others appear spurious. In fact, the different topographies of the N170 and EPN indicate, at least to some degree, that different neural sources are active in parallel for face- and emotion-related processes, respectively, which is in line with traditional models of face processing (Bruce and Young 1986). Therefore, emotional modulations coinciding with the N170 peak at temporo-occipital electrodes seem to reflect a component superimposed on the N170 waveform. The topography of this effect resembled the emotion-sensitive EPN (Schupp et al. 2006). Notably, as must be the case from simple mathematical considerations, changing the reference from mastoids to average did not change the distinct topographical shapes of the N170 and the Emotion effect (EPN) but only shifted potentials toward more positive or negative values (most apparent in Fig. 2a; see Junghöfer et al. 2006). That is, changing the reference subtracts the same value from every electrode at a given time point, which leaves all topographical features (the relative amplitudes between any given pair of locations) intact except for the position of the zero equipotential line.
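The claim that re-referencing preserves every topographical feature except the zero line can be checked directly: since one value is subtracted from all channels, every pairwise potential difference is unchanged. The sketch below also computes global field power (Skrandies 1990) and the global dissimilarity of Lehmann and Skrandies (1980); both are defined on the average-referenced map and are therefore independent of the recording reference. Channel names and values are invented, and this is an illustration under those assumptions, not the authors' analysis code:

```python
import itertools
import math


def rereference(potentials, reference_sites):
    """Return every channel relative to the mean of `reference_sites`."""
    ref = sum(potentials[s] for s in reference_sites) / len(reference_sites)
    return {site: value - ref for site, value in potentials.items()}


def average_reference(potentials):
    """Average reference: subtract the mean over all channels."""
    return rereference(potentials, list(potentials))


def pairwise_differences(potentials):
    """Potential difference for every pair of channels, i.e. the map's shape."""
    return {(a, b): potentials[a] - potentials[b]
            for a, b in itertools.combinations(sorted(potentials), 2)}


def same_shape(x, y, tol=1e-9):
    """True if two versions of a map agree on every pairwise difference."""
    dx, dy = pairwise_differences(x), pairwise_differences(y)
    return all(math.isclose(dx[k], dy[k], abs_tol=tol) for k in dx)


def global_field_power(potentials):
    """Spatial standard deviation of the average-referenced map."""
    avg = average_reference(potentials)
    return math.sqrt(sum(v * v for v in avg.values()) / len(avg))


def global_dissimilarity(u, v):
    """Difference between GFP-normalized, average-referenced maps
    (0 = identical shape, 2 = polarity-inverted shape)."""
    au, av = average_reference(u), average_reference(v)
    gu, gv = global_field_power(u), global_field_power(v)
    return math.sqrt(sum((au[s] / gu - av[s] / gv) ** 2 for s in au) / len(au))


# Hypothetical maps (µV) at a single time point; channels and values are invented.
n170 = {"Fz": 1.5, "Cz": 1.0, "Pz": 0.5, "P7": -3.0, "P8": -4.0, "M1": -1.0, "M2": -1.2}
emotion = {"Fz": 0.8, "Cz": 0.6, "Pz": -0.2, "P7": -0.9, "P8": -1.1, "M1": 0.1, "M2": 0.0}
mastoids = ["M1", "M2"]

for label, topo in (("N170", n170), ("Emotion", emotion)):
    m_ref, a_ref = rereference(topo, mastoids), average_reference(topo)
    # Shape and GFP agree whichever reference the map was expressed in.
    print(label, same_shape(m_ref, a_ref),
          math.isclose(global_field_power(m_ref), global_field_power(a_ref)))

diss_mastoid = global_dissimilarity(rereference(n170, mastoids), rereference(emotion, mastoids))
diss_average = global_dissimilarity(average_reference(n170), average_reference(emotion))
# Same dissimilarity under both references, and nonzero for differently shaped maps.
print(math.isclose(diss_mastoid, diss_average), diss_average > 0)
```

A dissimilarity above zero between two maps that survives any change of reference is one way of expressing that two components, such as the N170 and the EPN, differ in topography rather than only in strength.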

In sum, our findings suggest that apparent effects of emotional facial expressions on the N170 peak amplitude may be misleading and at least in part reflect processes other than a modulation of the N170 component itself. Instead, emotion effects coinciding with the N170 peak over temporo-occipital electrodes appear to consist of EPN activity at the same latency; given the high human expertise in face processing, it is plausible that emotional meaning can be extracted at such an early stage. The detectability of the overlapping EPN depends on the referencing procedure, with emotion effects at posterior electrodes being less pronounced when reference sites are more posterior. Our data are in line with a functional dissociation of processes related to face (N170) and emotion (EPN) encoding, as suggested by traditional models of face processing.

References

Ashley V, Vuilleumier P, Swick D (2004) Time course and specificity of event-related potentials to emotional expressions. Neuroreport 15(1):211–216

Bartlett JC, Searcy J (1993) Inversion and configuration of faces. Cogn Psychol 25:281–316

Batty M, Taylor MJ (2003) Early processing of the six basic facial emotional expressions. Cogn Brain Res 17:613–620

Bentin S, Deouell LY (2000) Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cogn Neuropsychol 17(1/2/3):35–54

Bentin S, Allison T, Puce A, Perez E, McCarthy G (1996) Electrophysiological studies of face perception in humans. J Cogn Neurosci 8(6):551–565

Blau VC, Maurer U, Tottenham N, McCandliss BD (2007) The face-specific N170 component is modulated by emotional facial expression. Behav Brain Funct 3(7). doi:10.1186/1744-9081-3-7

Brandeis D, Lehmann D (1986) Event-related potentials of the brain and cognitive processes: approaches and applications. Neuropsychologia 24(1):151–168

Bruce V, Young A (1986) Understanding face recognition. Br J Psychol 77:305–327

Caharel S, Courtay N, Bernard C, Lalonde R, Rebaï M (2005) Familiarity and emotional expression influence an early stage of face processing: an electrophysiological study. Brain Cogn 59:96–100

Calder AJ, Young AW (2005) Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci 6:641–651

Dalrymple KA, Oruc I, Duchaine B, Pancaroglu R, Fox CJ, Iaria G, Handy TC, Barton JJS (2011) The anatomic basis of the right face-selective N170 in acquired prosopagnosia: a combined ERP/fMRI study. Neuropsychologia 49:2553–2563

Deffke I, Sander T, Heidenreich J, Sommer W, Curio G, Lueschow A (2007) MEG/EEG sources of the 170 ms response to faces are co-localized in the fusiform gyrus. Neuroimage 35:1495–1501

Delplanque S, N’diaye K, Scherer K, Grandjean D (2007) Spatial frequencies or emotional effects? A systematic measure of spatial frequencies for IAPS pictures by a discrete wavelet analysis. J Neurosci Methods 165:144–150

Dennis TA, Chen C-C (2007) Neurophysiological mechanisms in the emotional modulation of attention: the interplay between threat sensitivity and attentional control. Biol Psychol 76:1–10

Eimer M (2000a) The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport 11:2319–2324

Eimer M (2000b) Effects of face inversion on the structural encoding and recognition of faces. Evidence from event-related brain potentials. Cogn Brain Res 10:145–158

Eimer M, Holmes A (2002) An ERP study on the time course of emotional face processing. Neuroreport 13(4):427–431

Eimer M, Holmes A (2007) Event-related brain potential correlates of emotional face processing. Neuropsychologia 45:15–31

Eimer M, Holmes A, McGlone FP (2003) The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn Affect Behav Neurosci 3(2):97–110

Eimer M, Gosling A, Nicholas S, Kiss M (2011) The N170 component and its links to configural face processing: a rapid neural adaptation study. Brain Res 1376:76–87

Haxby JV, Ungerleider L, Clark VP, Schouten JL, Hoffman EA, Martin A (1999) The effect of face inversion on activity in human neural systems for face and object perception. Neuron 22:189–199

Haxby JV, Hoffman EA, Gobbini MI (2000) The distributed human neural system for face perception. Trends Cogn Sci 4(6):223–233

Hendriks MCP, van Boxtel GJM, Vingerhoets JFM (2007) An event-related potential study on the early processing of crying faces. Neuroreport 18(7):631–634

Hoffman EA, Haxby JV (2000) Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci 3(1):80–84

Holmes A, Vuilleumier P, Eimer M (2003) The processing of emotional facial expression is gated by spatial attention: evidence from event-related potentials. Cogn Brain Res 16:174–184

Holmes A, Winston JS, Eimer M (2005) The role of spatial frequency information for ERP components sensitive to faces and emotional facial expressions. Cogn Brain Res 25:508–520

Ishai A (2008) Let’s face it: it’s a cortical network. Neuroimage 40:415–419

Itier RJ, Taylor MJ (2004) N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb Cortex 14:132–142

Joyce C, Rossion B (2005) The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clin Neurophysiol 116:2613–2631

Junghöfer M, Peyk P, Flaisch T, Schupp HT (2006) Neuroimaging methods in affective neuroscience: selected methodological issues. In: Anders S, Ende G, Junghöfer M, Kissler J, Wildgruber D (eds) Progress in brain research, vol 156. Elsevier, Amsterdam, pp 123–143

Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17(11):4302–4311

Kanwisher N, Stanley D, Harris A (1999) The fusiform face area is selective for faces not animals. Neuroreport 10:183–187

Koenig T, Gianotti LRR (2009) Scalp field maps and their characterization. In: Michel CM, Koenig T, Brandeis D, Gianotti LRR, Wackermann J (eds) Electrical neuroimaging. Cambridge University Press, Cambridge, pp 25–47

Lehmann D (1977) The EEG as scalp field distribution. In: Remond A (ed) EEG informatics. A didactic review of methods and applications of EEG. Elsevier, Amsterdam, pp 365–384

Lehmann D, Skrandies W (1980) Reference-free identification of components of checkerboard-evoked multichannel potential fields. Electroencephalogr Clin Neurophysiol 48:609–621

Leppänen JM, Kauppinen P, Peltola MJ, Hietanen JK (2007) Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Res 1166:103–109

Leppänen JM, Hietanen JK, Koskinen K (2008) Differential early ERPs to fearful versus neutral facial expressions: a response to the salience of the eyes. Biol Psychol 78:150–158

Lundqvist D, Flykt A, Öhman A (1998) Karolinska directed emotional faces, KDEF. Digital Publication, Stockholm

McCarthy G, Wood CC (1985) Scalp distributions of event-related potentials: an ambiguity associated with analysis of variance models. Electroencephalogr Clin Neurophysiol 62:203–208

Michel CM, Murray MM (2012) Towards the utilization of EEG as a brain imaging tool. Neuroimage 61:371–385

Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9:97–113

Palermo R, Rhodes G (2007) Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia 45(1):75–92

Pegna AJ, Landis T, Khateb A (2008) Electrophysiological evidence for early non-conscious processing of fearful facial expressions. Int J Psychophysiol 70:127–136

Pivik RT, Broughton RJ, Coppola R, Davidson RJ, Fox N, Nuwer MR (1993) Guidelines for the recording and quantitative analysis of electroencephalographic activity in research contexts. Psychophysiology 30:547–558

Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P (2005) Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum Brain Mapp 26:65–79

Proverbio AM, Brignone V, Matarazzo S, Del Zotto M, Zani A (2006) Gender difference in hemispheric asymmetry for face processing. BMC Neurosci 7(44). doi:10.1186/1471-2202-7-44

Puce A, Allison T, Asgari M, Gore JC, McCarthy G (1996) Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J Neurosci 16(16):5205–5215

Rellecke J, Palazova M, Sommer W, Schacht A (2011) On the automaticity of emotion processing in words and faces: event-related brain potentials from a superficial task. Brain Cogn 77(1):23–32

Rellecke J, Sommer W, Schacht A (2012) Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biol Psychol 90(1):23–32

Righart R, de Gelder B (2005) Context influences early perceptual analysis of faces: an electrophysiological study. Cereb Cortex 16(9):1249–1257

Rossion B, Gauthier I, Tarr MJ, Despland P, Bruyer R, Linotte S, Crommelinck M (2000) The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport 11(1):69–74

Sadeh B, Podlipsky I, Zhdanov A, Yovel G (2010) Event-related potential and functional MRI measures of face-selectivity are highly correlated: a simultaneous ERP-fMRI investigation. Hum Brain Mapp 31:1490–1501

Schacht A, Sommer W (2009) Emotions in word and face processing: early and late cortical responses. Brain Cogn 69:538–550

Schupp HT, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO (2004) The facilitated processing of threatening faces: an ERP analysis. Emotion 4:189–200

Schupp HT, Flaisch T, Stockburger J, Junghöfer M (2006) Emotion and attention: event-related brain potential studies. In: Anders S, Ende G, Junghöfer M, Kissler J, Wildgruber D (eds) Progress in brain research, vol 156. Elsevier, Amsterdam, pp 31–51

Schyns PG, Petro LS, Smith ML (2007) Dynamics of visual information integration in the brain for categorizing facial expressions. Curr Biol 17:1580–1585

Skrandies W (1990) Global field power and topographic similarity. Brain Topogr 3(1):137–141

Sprengelmeyer R, Jentzsch I (2006) Event related potentials and the perception of intensity in facial expressions. Neuropsychologia 44:2899–2906

Stekelenburg JJ, de Gelder B (2004) The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport 15(5):777–780

Thierry G, Martin CD, Downing P, Pegna AJ (2007) Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nat Neurosci 10(4):505–511

Todd RM, Lewis MD, Meusel LA, Zelazo PD (2008) The time course of social-emotional processing in early childhood: ERP responses to facial affect and personal familiarity in a Go-Nogo task. Neuropsychologia 46(2):595–613

Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, Nelson C (2009) The NimStim set of facial expressions: judgements from untrained research participants. Psychiatry Res 168:242–249

Williams LM, Palmer D, Liddell BJ, Song L, Gordon E (2006) The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. Neuroimage 31:458–467

Wronka E, Walentowska W (2011) Attention modulates emotional expression processing. Psychophysiology 48(8):1047–1056

Acknowledgments

This research was supported by the German Initiative of Excellence, Cluster of Excellence 302 “Languages of Emotion”, Grant 209 to AS and WS. We thank Guillermo Recio, Sebastian Rose, and Olga Shmuilovich for assistance in data collection, and Thomas Pinkpank and Rainer Kniesche for technical support.

Cite this article

Rellecke, J., Sommer, W. & Schacht, A. Emotion Effects on the N170: A Question of Reference?. Brain Topogr 26, 62–71 (2013). https://doi.org/10.1007/s10548-012-0261-y

DOI: https://doi.org/10.1007/s10548-012-0261-y