Abstract

In this paper a direct approximation method on the sphere, constructed by generalized moving least squares, is presented and analyzed. It is motivated by numerical solution of partial differential equations on spheres and other manifolds. The new method generalizes the finite difference methods, someway, for scattered data points on each local subdomain. As an application, the Laplace–Beltrami equation is solved and the theoretical and experimental results are given. The new approach eliminates some drawbacks of the previous methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The moving least squares (MLS) has recently become a popular method for scattered data approximation on bounded domains in Euclidean spaces. This method was introduced by Lancaster and Salkauskas [15] with a special case going back to an earlier paper by Shepard [23]. In the nineties and thereafter it has been used extensively for solving partial differential equations (PDEs) in sciences and engineering; see [1, 3]. The error analysis has been studied by several authors; see [2, 18, 28] and the references therein.

The MLS approximation was developed for pure function approximation on spheres in [27]. The application of the classical MLS for solving PDEs on spheres and other manifolds is much involved, because the PDE operators should be evaluated for non-close-form and complicated shape functions. This is one of the reasons why this approximation technique has been rarely used for solving PDEs on manifolds. In this paper, we avoid the classical approach and suggest a direct approximation using a generalized moving least squares (GMLS). The idea of GMLS was introduced in [20] on bounded domains in \(\mathbb {R}^d\) and used to construct a fast numerical method based on local weak-forms for solving PDEs in [19].

In this paper, we adapt the direct approach on spheres

and use it for numerical solution of spherical PDEs. The new technique eliminates the action of operators on shape functions and replaces it by a much cheaper evaluation on spherical harmonics. In fact, GMLS recovers test functionals directly from values at nodes, without any detour via shape functions. The method is meshless, because the unknown quantities are parameterized entirely in terms of scattered nodes. On the other hand, this method generalizes the finite difference methods (FDM) for irregular points on the sphere.

To carry out the error analysis, we first prove that there exists a family of stable local polynomial reproductions on the sphere. To do this, we prove an analogous Bernstein inequality for spherical harmonics on spherical caps, and we then use the notion of norming sets to prove the unisolvency. Afterward, we show that the presented GMLS approximation constructs an example for this family. Finally, we estimate the error using a standard argument for stable local polynomial reproductions. The error analysis in the PDE part falls into a recently framework for nodal meshless methods by Schaback [22]. As an application, we present some numerical results for the Laplace–Beltrami equation.

The paper is organized as follows. In Sect. 2, a short review on spherical harmonics is given. In Sect. 3, it is proved that there exists a family of stable local polynomial reproductions based on a set of scattered points on the sphere. In Sect. 4, the GMLS approximation is formulated on the sphere, and in Sect. 5 it is shown that the presented GMLS approximation constructs an example of the stable local polynomial reproductions. Then, the error analysis for some special and important cases of differential operators is given. In Sect. 6, the application of the new method for solving PDEs is presented and analyzed. Finally, in Sect. 7 some experimental results are given to justify the theoretical error and stability bounds.

2 Spherical harmonics

Spherical harmonics are restrictions to the unit sphere \(\mathbb {S}^d\) of polynomials Y which satisfy

where \(\varDelta \) is the Laplacian operator in \(\mathbb {R}^{d+1}\). The space of all spherical harmonics of degree \(\ell \) on \(\mathbb {S}^d\) is denoted by \({\mathcal H}_\ell ^d={\mathcal H}_\ell ^d(\mathbb {S}^d)\), and has an \(L_2\) orthonormal basis

where

where \(\varGamma \) is the known Gamma function. We have

where \(d\sigma \) is the surface measure of the unit sphere. The space of spherical harmonics of order m or less will be denoted by

with dimension \(N(d+1,m)\).

A spherical cap on \(\mathbb {S}^d\) with center \(x_0\in \mathbb {S}^d\) and geodesic radius \(\delta \) is defined by

where \(\mathrm {dist}(x,y)\) is the geodesic distance between two points x, y on \(\mathbb {S}^d\) defined by \(\mathrm {dist}(x,y)=\arccos (x^Ty)\).

3 Local polynomial reproduction on the sphere

In this section, we prove the existence of a family of functions, based on a set of scattered points on the sphere, called “local polynomial reproductions”. An analogous result on bounded domains in \(\mathbb {R}^d\) is given in [28]. In the forthcoming section, we show that the generalized moving least squares approximation of this paper constructs an example of this family. In doing so, we present the following definition from [13, 28] and then we employ a known result of Theorem 3.1.

Definition 3.1

Let V be a finite dimensional vector space with norm \(\Vert \cdot \Vert _V\) and let \(\varLambda \in V^*\) (the dual space of V) be a finite set consisting of N functionals. We will say that \(\varLambda \) is a norming set for V if the mapping \(T:V\rightarrow T(V)\subseteq \mathbb {R}^N\) defined by \(T(v)=(\mu (v))_{\mu \in \varLambda }\) is injective. T is called the sampling operator.

If \(\varLambda \) is a norming set for V, then \(T^{-1}:T(V)\rightarrow V\) exists, and if \(\mathbb {R}^N\) has the norm \(\Vert \cdot \Vert _{\mathbb {R}^N}\) and its dual \(\mathbb {R}^{N*}=\mathbb {R}^N\) has the norm \(\Vert \cdot \Vert _{\mathbb {R}^{N*}}\), then equipping T(V) with the induced norm, we have

Also, we can simply show that

which means that \(\Vert \cdot \Vert _V\) and \(\Vert T(\cdot )\Vert _{\mathbb {R}^N}\) are equivalent norms on V. Thus \(\varLambda \) allows us to equip V with an equivalent norm via the operator T. This is the origin of term norming set or norm generating set [13].

The proof of the following theorem can be found in [17, 28].

Theorem 3.1

Suppose V is a finite-dimensional normed linear space and \(\varLambda =\{\mu _1,\ldots ,\mu _N\}\) is a norming set for V, T being the corresponding sampling operator. For every \(\lambda \in V^*\) there exists a vector \(u\in \mathbb {R}^N\) depending only on \(\lambda \) such that for every \(v\in V\),

and

In the sequel, Theorem 3.1 will be used for \(V=\mathcal P_m^d\) and \(\mu _j=\delta _{x_j}\) along with \(\ell _\infty \)-norm on \(\mathbb {R}^N\) and \(\ell _1\)-norm on its dual.

To have a well-defined approximation based on multivariate polynomials we should impose a condition on the distribution of points to guarantee the solvability.

Definition 3.2

A set point \(X=\{x_1,\ldots ,x_N\}\subset \mathbb {S}^d\) with \(N\geqslant \mathrm {dim}({\mathcal P}_m^d)\) is called \({\mathcal P}_m^d\)-unisolvent, if the zero function is the only function from \({\mathcal P}_m^d\) that vanishes on X.

The following proposition links the notions of norming sets and unisolvency of scattered points. Here \(\delta _x\) denotes the point evaluation functional at x, defined by \(\delta _x(v)=v(x)\).

Proposition 3.1

The functionals \(\varLambda =\{\delta _{x_1},\ldots , \delta _{x_N}\}\) form a norming set for \({\mathcal P}_m^d\) if and only if X is \({\mathcal P}_m^d\)-unisolvent.

To demonstrate the unisolvency of a set point X on a spherical cap or any subset of \(\mathbb {S}^d\), we require a variation of the Markov inequality on spheres. The restriction of any spherical harmonic Y of degree m to a great circle is a univariate trigonometric polynomial of degree less than or equal to m. A simple inequality says that if \(p_m\) is a trigonometric polynomial of degree \(\leqslant m\) then

This is the best possible bound, for there is equality if \(p_m(t) = \sin mt\). A simple proof can be found in [6, Section 4]. A variation of this inequality which holds on an interval shorter than the period is called the Videnskii’s inequality [26]: if \(\delta \in (0,\pi )\) and

then

If we assume \(\delta <\pi /2\) then (3.1) holds true for all \(m\geqslant 1\). Moreover, (3.2) is trivial for \(m=0\) independent of \(\delta \). Thus, (3.2) is guaranteed for all \(m\in \mathbb {N}_0\) and \(\delta \in (0,\pi /2)\).

Let \(x_0\in \mathbb {S}^d\) and \(\delta >0\) and let Y be any spherical harmonic in \({\mathcal P}_m^d|_{B(x_0,\delta )}\). For \(x,y\in B(x_0,\delta )\), let \(\mathcal C\) be the geodesic arc (part of a great circle) passing through x and y, and let p be the restriction of Y to \(\mathcal C\). We have

leading to an integrated form of the Markov inequality as below

Now, we are in situation that we can prove the following theorem.

Theorem 3.2

Let \(x_0\in \mathbb {S}^d\) and \(\delta \in (0,\pi /2)\) be given. Suppose \(B(x_0,\delta )\) is a spherical cap on \(\mathbb {S}^d\), and let \(m\in \mathbb {N}_0\) be fixed. Suppose that \(h>0\) and the set \(X'=X\cap B(x_0,\delta )\) satisfy

-

(1)

\(h\leqslant \frac{\delta }{16 m^2}\),

-

(2)

for every \(B(x,h)\subset B(x_0,\delta )\) there is a center \(x_j\in X'\cap B(x,h)\);

then \(\varLambda =\{\delta _x: x\in X'\}\) is a norming set for \(\mathcal P_m^d\) and the norm of the inverse of the associated sampling operator is bounded by 2.

Proof

Let \(B = B(x_0,\delta )\). Choose an arbitrary \(Y\in \mathcal P_m^d\) with \(\Vert Y\Vert _{\infty ,B}=1\). There exists \(x\in \overline{B}\) with \(|Y(x)|=\Vert Y\Vert _{\infty ,B}=1\). Assume that \(x\notin X'\). Consider a great circle \(\mathcal C\) which passes through \(x_0\) and x. If \(\mathrm {dist}(x_0,x)\leqslant h\) set \(x'=x_0\), otherwise let \(x'\in B\) be a point on \(\mathcal C\) between \(x_0,x\) with \(\mathrm {dist}(x,x')=h\). Using condition (2) there exists a point \(x_j\in X'\cap B\) such that \(\mathrm {dist}(x',x_j)\leqslant h\). Thus \(\mathrm {dist}(x,x_j)\leqslant 2h\). Applying the integrated Markov inequality (3.3), using the fact that \(\cot (\delta /2)\leqslant 2/\delta \) and using condition (1), we can write

This shows that \(|Y(x_j)|\geqslant 1/2\), thus the sampling operator T exists and

Finally, if \(x\in X'\) then for \(x=x_j\) we have \(|Y(x_j)|=1\). Thus the smaller upper bound 1, instead of 2, will be achieved for \(\Vert T^{-1}\Vert \). \(\square \)

In case \(h=h_X\) condition (2) of Theorem 3.2 is automatically satisfied.

It is worth to note that, Theorem 3.2 of [12] proves a similar statement with a special definition for h and a different upper bound on it. Its proof uses the Videnskii’s inequality and the norming sets as well. Although we should refer to [12] for the origin of the idea, our condition on h covers that of [12] and our proof, which follows the standard argument of the corresponding case in \(\mathbb {R}^{d+1}\) [28], is shorter and simpler. Also, see [10] for a global estimation on the sphere.

Since we are finally interested in solving equations containing the Laplace–Beltrami operator \(\varDelta _0\) and/or its corresponding gradient operator \(\nabla _0\), we will try to obtain some Bernstein bounds for these operators. The Laplace–Beltrami operator on \(\mathbb {S}^d\) is the spherical part of the Laplace operator in \(\mathbb {R}^{d+1}\). It plays an important role for analysis on the sphere. Let \(x=(x^1,\ldots ,x^{d+1})\in \mathbb {R}^{d+1}\). In the spherical-polar coordinates \(x=ry\), \(r>0\), \(y\in \mathbb {S}^d\), the Laplace operator satisfies

where \(\varDelta _0\) is independent of derivatives with respect to the radial direction r. For example in case \(d=1\) and in the polar coordinates \((x^1,x^2)=(r\cos \theta , r\sin \theta )\in \mathbb {R}^2\), \(r>0\), \(0\leqslant \theta \leqslant 2\pi \), we have

which means that the Laplace–Beltrami operator on \(\mathbb {S}^1\) is \(\varDelta _0=d^2/d\theta ^2\). In case \(d=2\), and in the spherical polar coordinates

we have

which gives

On the other side, the spherical gradient \(\nabla _0\) satisfies

where \(\nabla _0\) is the spherical part of \(\nabla =(\partial _1,\ldots ,\partial _{d+1})\) and involves only derivatives in y. Here \(\partial _j:= {\partial }/{\partial x^j}\). See [5] for proof. The following lemma can also be easily established [5].

Lemma 3.1

Let \(f\in C^2(\mathbb {S}^d)\). Define \(F(x):=f(x/\Vert x\Vert _2)\), \(x\in \mathbb {R}^{d+1}\). Then

The usual Laplacian \(\varDelta \) satisfies the dot product \(\varDelta =\nabla \cdot \nabla \), and the analogue of this identity also holds on the sphere, i.e. \(\varDelta _0=\nabla _0\cdot \nabla _0\).

Now, we introduce the operators \(D_{ij}\) to express some explicit relations between spherical and Euclidian differential operators. Using this, we can apply some know bounds from Euclidian spaces to prove the analogous bounds on spheres.

Definition 3.3

For \(x=(x^1,\ldots ,x^{d+1})\in \mathbb {R}^{d+1}\) and \(1\leqslant i\ne j \leqslant d+1\), define

where \(\theta _{ij}\) is the angle of polar coordinates in the \((x^i,x^j)\)-plane, defined by \((x^i,x^j)=r_{ij}(\cos \theta _{ij},\sin \theta _{ij})\), \(r_{ij}\geqslant 0\) and \(0\leqslant \theta _{ij}\leqslant 2\pi \).

The first equality in (3.6) shows that \(D_{ij}\) acts on \(\mathbb {R}^{d+1}\), yet the second equality shows that it acts on \(\mathbb {S}^d\). With notations introduced in Lemma 3.1, Theorem 8.2 of [5] proves that

Besides, Lemma 8.6 of the same reference proves

where \((\nabla _0)_j\) is the j-th component of \(\nabla _0\).

A Bernstein inequality for multivariate polynomials in \(\mathbb {R}^{d+1}\) is known from [28] which is stated in the following proposition.

Proposition 3.2

([28] Proposition 11.6) Suppose that \(\varOmega \subset \mathbb {R}^{d+1}\) is bounded and satisfies an interior cone condition with radius \(r>0\) and angle \(\theta \in (0,\pi /2)\). If \(p\in \mathbb {P}_m(\mathbb {R}^{d+1})\) (the space of multivariate polynomials of degree at most m on \(\mathbb {R}^{d+1}\)) and \(\alpha \in \mathbb {N}_0\) is a multi-index for which \(|\alpha |\leqslant m\) then

Note that, a set \(\varOmega \subset \mathbb {R}^{d+1}\) is said to satisfy an interior cone condition if there exist an angle \(\theta \in (0,\pi /2)\) and a radius \(r>0\) such that for every \(x\in \varOmega \) a unit vector \(\xi (x)\) exists such that the cone

is contained in \(\varOmega \).

Proposition 3.2 can be used to prove the following theorem.

Theorem 3.3

Let \(x_0\in \mathbb {S}^d\) and \(\delta \in (0,\pi /8]\). Then for \(Y\in {\mathcal P}_m^d\) we have the following inequalities for \(x\in B(x_0,\delta )\),

where \(C_{1,m,d}=20dm^2\) and \(C_{2,m,d}=200d(d+1)m^4\). The first inequality is interpreted component-wise.

Proof

Define \(\varOmega := \mathrm {Cone}(0,x_0,\delta ,1){\setminus } \{0\}\subset \mathbb {R}^{d+1}\). If \(\delta \leqslant \pi /8\), one can prove that \(\varOmega \) itself satisfies an interior cone condition with radius \(r=1/4\) and angle \(\theta =\delta \). Define \(\widetilde{Y}(x) :=Y(x/\Vert x\Vert _2)\) for \(x\in \varOmega \). It is clear that \(\widetilde{Y}\in \mathbb {P}_m(\mathbb {R}^{d+1})\). Definition 3.3 and Proposition 3.2 imply

The inequality in the fourth line above uses the fact that \(\sin \delta \geqslant \frac{4}{5}\delta \) for \(\delta \in (0,\pi /8)\) and the inequality in the last line uses the homogenous property of \(\widetilde{Y}\). By restricting x to the sphere and by using (3.8) we simply get (3.9). Bound (3.10) can be established by a simple induction. \(\square \)

Now we can prove, by applying Theorems 3.1 and 3.3, the existence of a stable local polynomial reproduction based on a set point X on \(\mathbb {S}^d\). We restrict ourselves to some special differential operators by introducing the following notation.

Notation 1

Let \(L_0:=Id\) where Id is the identity operator, \(L_1:=\nabla _0\) and \(L_2:=\varDelta _0\). Note that, the subscript on L refers to the order of the related differential operator.

Theorem 3.4

Assume that \(m\in \mathbb {N}\) is given. For every set \(X = \{x_1,\ldots ,x_N\}\subset \mathbb {S}^d\) with fill distance \(h_X\leqslant \frac{\pi }{128m^2}=:h_0\), every \(x\in \mathbb {S}^d\) and every \(k\in \{0,1,2\}\) there exist numbers \(u_1^{(k)}(x),\ldots , u_N^{(k)}(x)\) such that

-

(1)

\(\sum _{j=1}^N u_j^{(k)}(x)Y(x_j) = L_k Y(x)\), for all \(Y\in {\mathcal P}_m^d\),

-

(2)

\( \sum _{j=1}^N |u_j^{(k)}(x)| \leqslant C_{k,1} h_X^{-k}\),

-

(3)

\( u_j^{(k)}(x)=0\) \(\mathrm {if}\) \(\mathrm {dist}(x,x_j)> C_{2}h_X\),

where \(L_k\) are introduced in Notation 1, \(C_{0,1}=2\), \(C_{1,1}=\frac{5}{2}d\), \(C_{2,1}=(5/4)^2d(d+1)\) and \(C_{2} = 16 m^2\).

Proof

Let \(x\in \mathbb {S}^d\) be given. Choose \(\delta :=C_2h_X\) where \(C_2= 16 m^2\). Since \(h_X\leqslant \pi /(128m^2)\) we have \(\delta \leqslant \pi /8\) as is required for the previous inequalities. The fill-distance \(h_X\) and \(X'=X\cap B(x,\delta )\) satisfy both conditions of Theorem 3.2. Thus \(\{\delta _{x_j}: x_j\in X'\}\) is a norming set for \(\mathcal P_m^d|_{B(x,\delta )}\) and \(\Vert T^{-1}\Vert \leqslant 2\), where T is the associated sampling operator. By applying Theorem 3.1 for \(V=\mathcal P_m^d|_{B(x,\delta )}\), \(X=X'\), \(N=|X'|\), \(\lambda =\delta _x\circ L_k\), \(\mu _j = \delta _{x_j}\) and \(\ell _\infty \)-norm on \(\mathbb {R}^{|X'|}\) (\(\ell _1\)-norm on its dual) and by using (3.10) and (3.9) we have

Finally, by setting \(u_j^{(k)}(x)=0\) for \(x_j\in X{\setminus } X'\) we have (1) and (2). For (3), we realize that \(\mathrm {dist}(x,x_j)>\delta =C_2h_X\) means that \(x_j\notin B(x,\delta )\), and thus \(u_j^{(k)}(x)=0\). \(\square \)

4 Generalized moving least squares on spheres

Let \(u\in C^{m+1}(\mathbb {S}^d)\) for some \(m\geqslant 0\). Note that the smoothness of a function u on the spheres (or any manifold) is defined by the smoothness of \(u\circ T\) with homeomorphic mapping \(T:U\subset \mathbb {R}^d\rightarrow \mathbb {S}^d\) where U is open. Assume that \(X=\{x_1,\ldots ,x_N\}\subset \mathbb {S}^d\) is a set of scattered points with fill distance \(h_X\). For a given functional \(\lambda \in C^{m+1}(\mathbb {S}^d)^*\), we are going to recover \(\lambda (u)\) from values \(u(x_j)\), \(j=1,\ldots ,N\). The functional \(\lambda \) can, for instance, describe point evaluations of u and its derivatives up to order m. Therefore, we assume that \(\lambda \) is finally localized at some \(x\in \mathbb {S}^d\). Let L be a linear differential operator of order at most m, and for \(x\in \mathbb {S}^d\) define \(\lambda :=\delta _x\circ L\). The moving least squares approximation makes such recovery possible, by first approximating u by

where \(a_j\) are shape functions, and then approximating \(\lambda (u)=Lu(x)\) by \(\lambda (s_{u,X})=Ls_{u,X}(x)\),

This approach can be called the shape function or the trial space approach which is the usual techniques for solving partial differential and integral equations in numerical analysis. In the context of MLS it requires the action of L on shape functions which are complicated to evaluate. Here we follow an alternative approach which directly approximates Lu(x) from nodal values \(u(x_j)\) by

The new approach is called the direct approach. In general, \(L a_j\) is not identical to \(a_j^L\). If \(L= Id\) then the classical moving least squares (4.1) is resulted for pure function approximation. However, we desire to consider some more general functionals motivated by numerical solution of PDEs in strong and weak forms.

Let \(w:\mathbb {S}^d\times \mathbb {S}^d\rightarrow [0,\infty )\) be a continuous function. For a given \(x\in \mathbb {S}^d\) the value \(s_{u,X}^L(x)\) of generalized moving least squares approximation is given by \(s_{u,X}^L(x)=L Y^*(x)\) where \(Y^*\) is the solution of

Note that, the weight function w is chosen independent of L which makes the minimization problem (4.4) independent of L, and thus makes the new method different from the classical approximation (4.2). If we expand the optimal solution \(Y^*(x)\) in terms of spherical harmonics then we have

where the optimal vector \(b^*\) depends subtly on x via the weights. To have a local representation we choose a compactly supported weight function w, and for simplicity we let w to be a radial function. Thus we choose a continuous function \(\phi :[0,\pi )\rightarrow [0,\infty )\) with \(\phi (r)>0\) for \(r\in [0,1/2]\), and \(\phi (r)=0\) for \(r\geqslant 1\). We define for some \(0<\delta <\pi \),

For some reasons in analysis and implementation, it is more interesting to write the approximation in terms of shape functions and nodal values as in (4.3). For this, there is an alternative minimization problem on \(a^L\) instead of b. The proof of the following theorem is the same as the corresponding result in \(\mathbb {R}^d\) [20]. Also, see [27, 28] for a special case where L is the identity operator. Note that, this theorem makes a connection to the Backus–Gilbert optimality which was discovered in [4] for the classical moving least squares.

Theorem 4.1

Suppose that for every \(x\in \mathbb {S}^d\) the set \(\{x_j: j\in I(x,\delta ,X)\}\) is \(\mathcal P_m^d\)-unisolvent where \(I(x,\delta ,X)\equiv I(x):=\{j\in \{1,2,\ldots ,N\}: \mathrm {dist}(x,x_j)\leqslant \delta \}\). Then problem (4.4) with (4.5) is uniquely solvable and the solution \(s_{u,X}^L(x)=L Y^*(x)\) can be represented as

where the coefficients \(a_j^{L*}\) are determined by minimizing

under the constraints

In the following we drop the star from notation and write \(a^L\) instead of \(a^{L*}\) for simplicity. As a consequence of Theorem 4.1 by using the classical Lagrange multiplier approach, as it is done in [20, 28] in case \(\mathbb {R}^d\), functions \(a_j^L\) have representation

where, due to our assumption on unisolvency, the \(\beta _{\ell \,k}^L\) are the unique solution of the following system of linear equations

where \(0\leqslant \ell '\leqslant m,\; 1\leqslant k'\leqslant N(d,\ell )\). In a matrix form, this system can be rewritten as

where \(P(x)\in \mathbb {R}^{|I(x)|\times N(d+1,m)}\) has components \(Y_{\ell \,k}(x_j)\) and W(x) is a diagonal matrix of size |I(x)| carrying the weights \(\phi _\delta (\mathrm {dist}(x,x_j))\) on its diagonal. The right hand side vector \(Y^L(x)\in \mathbb {R}^{N(d+1,m)}\) has components \(L Y_{\ell 'k'}(x)\). The system is clearly positive semi-definite and using the assumption on unisolvency it is positive definite. Thus,

For approximation at any point \(x\in \mathbb {S}^d\) a small linear system of equation should be solved. The computational complexity of the approximant at a single point x is bounded by \({\mathcal O}(N(d+1,m)^3+N(d+1,m)^2|I(x)|+|I(x)|)\). Since in practice |I(x)| is much smaller than N and it is comparable with \(N(d+1,m)\), the complexity at every point is of order \(N(d+1,m)^3\). Recall that \(N(d+1,m)\) is the dimension of space \(\mathcal P_m^d\) of spherical harmonic basis functions.

From (4.8) we see that it suffices to evaluate L on space \(\mathcal P_m^d\), not on a certain trial space spanned by shape functions \(a_j\). This significantly speeds up numerical calculations in comparison with the classical moving least squares (4.2).

The error estimation for special cases \(L = L_0, L_1, L_2\) (see Notation 1) will be given in the next section.

5 Error analysis

In this section we estimate the error \(L u(x)-s_{u,X}^L(x)\) for some special L in terms of the fill distance \(h_X\). We will assume that all set of centers X are quasi-uniform. To be more precise, we define the separation distance \(q_X\) to be the radius of the largest ball that can be placed around every point in X such that no two balls overlap, i.e.

In addition, the mesh ratio \(\rho _X\) is defined by

which measures how uniformly the points are placed. If we have a sequence of point sets X, they are said to be quasi-uniform if there exists a global constant \(c_{\mathrm {qu}}>1\) such that \(\rho _X\leqslant c_{\mathrm {qu}}\), independent of X.

According to Notation 1, assume that L is specialized in \(L_0=Id\), \(L_1=\nabla _0\) or \(L_2=\varDelta _0\). Functions \(a_j^{(k)}:=a_j^{L_k}\), \(k\in \{0,1,2\}\), from generalized moving least squares approximation provide a local polynomial reproduction in sense of Theorem 3.4. Assume that \(h_0\), \(C_{k,1},\, k=0,1,2\) and \(C_2\) denote the constants from Theorem 3.4. Suppose that X satisfies \(\rho _X\leqslant c_{\mathrm {qu}}\) and \(h_X\leqslant h_0\), and let \(\delta = 2C_2h_X\). The first property (polynomial reproduction)

follows from the construction process of the generalized moving least squares approximation. Since \(a_j^{(k)}\) are supported in \(B(x_j,\delta )\) and \(\delta =2C_2h_X\), the third property holds with constant \(C_2\) replaced by \(2C_2\), i.e.

The proof of the second property (stability bound) invites more challenge. The same argument as given in [27] yields

where \(\widetilde{C}_{k,1}=C_{k,1}(1+2c_{\mathrm {qu}}C_2)^{d/2}(\pi /2)^{(d-1)/2}\sqrt{C_\phi /c_\phi }\) in which \(\displaystyle c_\phi :=\min _{r\in [0,1/2]}\phi (r)\) and \(C_\phi :=\Vert \phi \Vert _{L_\infty [0,1]}\).

These properties can be used to obtain the convergence order for smooth functions.

Theorem 5.1

Suppose that \(X=\{x_1,\ldots ,x_N\}\subset \mathbb {S}^d\) is quasi-uniform with constant \(c_{\mathrm {qu}}\). Fix \(m\in \mathbb {N}_0\) with \(m\geqslant k\). Suppose further that \(s_{u,X}^{L_k}\) is the generalized moving least squares approximation of \(u\in C^{m+1}(\mathbb {S}^d)\) for \(\delta = 2C_2h_X\) where \(C_2\) is defined in Theorem 3.4. Then there exist positive constants \(h_0\) and \(C=C(u,m,d,k,\phi ,c_{\mathrm {qu}})\) such that for every X with \(h_X\leqslant h_0\) and every \(x\in \mathbb {S}^d\) the estimate

holds.

Proof

We follow the standard proof of [27, Theorem 4] for pure function approximation by MLS and modify it for derivative approximation by GMLS. First, for any \(Y\in {\mathcal P}_m^d\), the local polynomial reproduction properties of GMLS, described before the theorem, can be used to write

Now we bound the right-hand side by inserting a proper Y. In Euclidean cases, the Taylor expansion usually gives a simple choice. Unfortunately, the Taylor formula can not be defined on spheres. Thus we use a convenient map T to make a relation between subsets of \(\mathbb {S}^d\) and \(\mathbb {R}^d\). Without restriction we can assume that \(x=(0,\ldots ,0,1)^T\); the north pole. In this case \(B(x,\delta )=\{y\in \mathbb {S}^d: y_{d+1}>\cos \delta \}\). We define the bijective map \(T:U\rightarrow B(x,\delta )\) by \(\widetilde{y}\mapsto (\widetilde{y},\sqrt{1-\Vert \widetilde{y}\Vert _2^2})^T\), where

The inverse of T is given by \(T^{-1}(y)=\widetilde{y} = (y_1,\ldots ,y_d)^T\). Using this simple chart, \(u\in C^{m+1}(B(x,\delta ))\) means \(v = u\circ T\in C^{m+1}(U)\). The Taylor expansion of v around origin is

where \({\mathcal A} = \{\beta \in \mathbb {N}_0^{d+1}: |\beta |\leqslant m, \,\beta _{d+1}=0\}\) and \({\mathcal B} = \{\beta \in \mathbb {N}_0^{d+1}: |\beta |= m+1,\, \beta _{d+1}=0\}\). Here \(Y_m(y)=\sum _{\beta \in {\mathcal A}}c_\beta y^\beta \) is a polynomial of degree at most m. It can be easily verified (for example by using operators \(D_{ij}\)) that \(Y_m(x)=u(x)\), \(\nabla _0Y_m(x)=\nabla _0u(x)\) and \(\varDelta _0 Y_m(x)=\varDelta _0 u(x)\). This will eliminate the first term in the right hand side of (5.2), if we are allowed to insert \(Y_m\) instead of Y into (5.2). We can do this because \(Y_m\) can be expressed in terms of spherical harmonics of degree at most m. To finalize the proof it is enough to bound \(|E_m(y)|=|u(y)-Y_m(y)|\). In doing so, we have

Inserting this bound into (5.2) gives the desired result. The new constant in the final bound depends further on k, \(\phi \) and \(c_\mathrm {qu}\) via \(\widetilde{C}_{k,1}\). \(\square \)

6 Solving PDEs by GMLS

Consider the operator equation

where L is a well-posed linear differential operator of order k, for some \(k>0\) and f is a given known function. In practice, usually f is only known on limited scattered points \(z_1,z_2,\ldots ,z_M\in \mathbb {S}^d\) and one should approximate the solution in terms of this limited information. Scattered data approximation methods are applicable in this situation. We give a simple algorithm using the GMLS approximation.

Assume that the true solution of (6.1) is denoted by \(u^*\). We first approximate \(L u^*(z_\ell )\) for \(1\leqslant \ell \leqslant M\) by the GMLS approximation (4.3) based on a set of trial points \(X=\{x_1,\ldots ,x_N\}\) which are well distributed on whole \(\mathbb {S}^d\) to fulfill the requirements of the approximation. Since L contains derivatives of order k, the degree of spherical harmonic basis functions, m, should be equal or bigger than k. This leads to

for nodal values \(u^*(x_1), \ldots , u^*(x_N)\). Assume that the exact values \(u^*(x_j)\) are replaced by approximate values \(u_j\) to have

The above linear system can be written in matrix form

with

The linear system (6.2) is an unsymmetric system of equations. The square system of certain meshless methods may be singular. But one can bypass this problem by overtesting i.e. choosing M (the number of test points) larger than N (the number of trial points). This leads to an overdetermined system of equations which can be handled by standard numerical linear algebra techniques. We assume that the matrix A is set up by sufficiently thorough testing so that the matrix has rank \(N\leqslant M\).

This approach is called direct discretization, because it bypasses shape functions. It is the same as the standard technique for generalized finite differences. The theoretical analysis of this method falls into a framework given by Schaback [22] for nodal meshless methods. Although it was given for operator equations on Euclidean spaces, one can simply proceed with the framework to see that it actually works for a general case including PDE problems on manifolds and particularly on spheres.

Let’s specialize the Schaback’s theory for our numerical scheme for a differential equation of the form

where \(\omega \) is a positive constant. Such PDE arises from discretizing the heat equation on the sphere. We should take three ingredients in to account for analysis, namely consistency, stability and numerical linear algebra solver. Let’s start with the latter first.

So far we have denoted the vector of approximate nodal values \(u_j\) by \(\varvec{u}\). Hereafter, the new notations \(\varvec{u}^*\) and \(\widetilde{\varvec{u}}\) will be respectively denoted as the vector of exact nodal values \(u^*(x_j)\) and the vector of approximate nodal values \(\widetilde{u}_j\) that is obtained by some numerical method that solves the system (6.2) approximately. We impose the following condition on the numerical procedure that produces \(\widetilde{\varvec{u}}\),

where \(\Vert \cdot \Vert _q\) is the q-norm on \(\mathbb {R}^N\). Note that (6.4) can be obtained with \(K(A)=1\) if \(\widetilde{\varvec{u}}\) is calculated via minimization of the residual \(\Vert A\varvec{u}-\varvec{f}\Vert _q\) over all \(\varvec{u}\in \mathbb {R}^N\), or with \(K(A) = 0\) if \(\varvec{f}\) is in the range of A. Once the approximated nodal vector \(\widetilde{\varvec{u}}\) is obtained, u and its derivatives can be approximated in any point \(x\in \mathbb {S}^d\) again via GMLS approximation (4.3) by replacing \(u(x_j)\) by \(\widetilde{u}_j\). This is a postprocessing calculation which is independent of the PDE itself.

We assume that the true solution lies in some regularity subspace U that carries a strong norm or seminorm \(\Vert \cdot \Vert _U\), and there is a consistency error bound

where we expect that \(\tau (x,L,X)\) is small provided that u has enough smoothness and the discretization quality keeps up with the smoothness. We have provided such bound for GMLS approximation in Theorem 5.1 for some special and important choices of differential operators \(L_k\) of order k and for \(U=C^{m+1}(\mathbb {S}^d)\), \(m\geqslant k\). In our cases we found \(\tau (x,L_k,X) = Ch_X^{m+1-k}\) independent of x.

For stability we again assume that the stiffness matrix A has no rank loss. Thus the stability constant

is finite for any choice of discrete norms \(\Vert \cdot \Vert _q\) and \(\Vert \cdot \Vert _p\) on \(\mathbb {R}^N\) and \(\mathbb {R}^M\), respectively. This constant can be explicitly calculated for standard norms and it measures the conditioning of the final (global) linear system (6.2). One hopes that \(C_S(A)\) is reasonably small.

Now we have the following theorem which gives the errors on nodal values [22].

Theorem 6.1

Under the above assumptions we have

where \(\varvec{\tau }=(\tau (z_1,L,X), \ldots , \tau (z_M,L,X))\). This leads to

for PDE (6.3) when it is numerically solved by the presented GMLS approximation with \(m\geqslant 2\).

But a question is still unanswered. How can one estimate the stability constant \(C_S(A)\)? The literature has no theoretical estimator for this constant in terms of discretization quality. However, Schaback [22] has proposed some numerical estimators which allow users to check the error and stability of their methods.

In case \(p=q=2\),

for the N positive singular values \(\sigma _1,\ldots ,\sigma _N\) of A, and these are obtainable by singular value decomposition (SVD).

The (q, p)-norm of the pseudoinverse of A, defined by

overestimates \(C_S(A)\). Thus one can calculate the pseudoinverse and take the (q, p)-norm to have a close grip on the stability.

A simple possibility, restricted to square systems, is to use the fact that MATLAB’s \(\texttt {condest}\) command estimates the \(L_1\) condition number, which is the \(L_\infty \) condition number of the transpose. Thus

is an estimate of the \(L_\infty \) norm of \(A^{-1}\). This is computationally very cheap for sparse matrices and turns out to work fine on the examples in Sect. 7, but an extension to non-square matrices is missing.

7 Numerical experiments

Before going to the experimental results, we should note that the computation of \(a^L(x)\) from (4.8) suffers from a numerical instability caused by roundoff errors. This goes back to the computation of spherical harmonics on small spherical caps which leads to nearly dependent columns in matrix P(x). To obtain a reliable solution, we avoid both matrix inverting and Matlab’s backslash command (for the square systems) in favour of using the QR factorization for normal linear systems as below. Recall

from (4.8). Decompose \(\sqrt{W} P=QR\) where Q is unitary and R is upper triangular to get \(P^TWP = R^TR\). By some simple calculations, \(RB^T = Q^T\sqrt{W}\). Using backward substitution, B is derived from this and \(a^L\) can be calculated directly. This technique stabilizes our calculations, significantly.

Now, we present the results of a numerical experiment for approximating the solution of differential equation (6.3) where the true solution is given by the following Franke’s function on the unit sphere \(\mathbb {S}^2\) [16]. To be more precise, in the spherical coordinates (3.4) we define

where \(x=(x^1,x^2,x^3)\), and compute f via the formula (3.5). Function \(u^*\) is independent of \(x^3\), but it of course depends on both \(\theta \) and \(\phi \) of spherical coordinates. The results for the 3-dimensional Franke’s function (see for example [24]) are roughly the same.

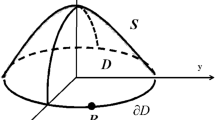

The first type of points, used to construct the approximate solutions, is generated using the equal area partitioning algorithm given in [21]. Two sets of these points are plotted in Fig. 1.

Results are presented in Table 1 for \(m=2\) and different number of points. The fill distance \(h_X\) is of order \({\mathcal O}(N^{-1/2})\).

Since N is approximately quadrupled (or \(h_X\) is approximately halved) row by row, the approximated orders in columns 3-6 of the table are calculated via formula

where G(N) is the value of \(\Vert \varvec{e}\Vert _2\), \(\Vert \varvec{a}^L\Vert _1\), \(C_S\) or \(\widetilde{C}_S\) at level N.

No overtesting is applied here and the final linear systems are solved using the backslash operator of Matlab. The third column shows the order of convergence at the nodal points of \(\Vert e\Vert _2=\Vert u^*-\widetilde{u}\Vert _2\), while the fourth column shows the order of growth of Lebesgue function \(\sum _{j=1}^N |a_j^L(x)|\) at sample point \(x=z_{\lfloor N/2\rfloor }\). The observed order of convergence is even better than the expected theoretical order \(m-1\). The Lebesgue function grows polynomially like \(h_X^{-2}\) as it was proved theoretically in Sect. 5. The orders of growth (here decay) of the stability constants \(C_S\) and \(\widetilde{C}_S\) are reported in the last two columns. The numbers are approximately approaching to zero which show that \(C_S\) and \(\widetilde{C}_S\) behave (approximately) independent of \(h_X\).

The sparsity patterns of the (global) final linear system for \(N=3201\) and \(N=12{,}801\) are shown in Fig. 2.

In order to check how the choice of the testing points influence the results, the same results are obtained in Table 2 for spherical t-designs on \(\mathbb {S}^2\) with \(N=t^2/2+t+{\mathcal O}(1)\) points which are described and distributed at the website [29]. The orders are roughly the same as those given in Table 1 for equal area partitioning points. This is expectable because both types of set points are quasi-uniform and they have a nearly identical fill-distance. This also holds for the minimum energy points of Hardin and Saff [11].

The true solution we considered so far (the Franke’s function) is an infinitely smooth function. Now, we turn to a finitely smooth function on \(\mathbb {S}^2\) as a true solution for (6.3). Let \(\{\xi _1,\ldots ,\xi _n\}\) be a set of n points on the sphere and let \(\phi (r)=(1-r)_{+}^6(35r^2+18r+3)\) for \(r\geqslant 0\). Define

for some known coefficients \(c_k\). Since \(\phi \) is a \(C^4\) function so is u. In experiments we assume that \(n=100\) and \(\{\xi _1,\ldots ,\xi _{100}\}\) are 100 scattered minimal energy points on \(\mathbb {S}^2\) [11]. Moreover, we set

The right hand side function f is calculated using the fact that \(\varDelta _0 \varphi (x^T\xi )=\mathcal L\varphi (t)\) for \(t=x^T\xi \) with

In Table 3 the orders are presented for \(m=3\) in two cases: \(M=N\) (without overtesting) and \(M=2N\) (with overtesting). We observe that overtesting improves both the order of convergence and the stability constant \(C_S\) at finer levels.

As we pointed out, the GMLS method generalizes the finite difference method for unstructured node layouts. In this direction, another well-established approach is the radial basis function-generated finite difference (RBF–FD) method [25]. Both techniques directly approximate the differential operators from function values at scattered nodes in small subdomains. GMLS uses the space of spherical harmonics as basis functions in a certain way which has been discussed in this paper, while RBF–FD uses the exactness of operator values on the space of translates of an RBF (instead of the space of polynomials in the standard finite difference method) on each local subdomain. We refer the reader to [8] (and the references therein) for a comprehensive discussion about the RBF–FD method. Here, we present some numerical results for comparison. The well-known RBFs

when restricted to the sphere, will be employed. The results of the RBF–FD method highly depend on the type of RBF and the scaling parameter \(\varepsilon \). We assign different values to \(\varepsilon \) but we care about the conditioning of the local matrices. Our numerical tests show that the behaviours of \(C_S\) and \(\widetilde{C}_S\) are more or less the same as those of the GMLS method, no matter what the value of \(\varepsilon \) is. Note that, \(C_S\) or \(\widetilde{C}_S\) measures the conditioning of the (global) final matrix A which is different from the conditioning of the local matrices for each stencil. The \(\Vert \cdot \Vert _{2}\) errors of the presented GMLS method are compared with the RBF–FD method (IMQ and Wendland’s function) in Fig. 3 for Franke’s function. As we can see, depending on the scaling parameter \(\varepsilon \), the RBF–FD method may give better or worse results than the GMLS method.

Small values of \(\varepsilon \) produce more accurate results until the calculation suddenly breaks down due to the increasing ill-conditioning of the local linear systems. The RBF interpolation itself is not unstable in function space even in the flat limit. Thus, a stable numerical algorithm would overcome that apparent problem.

Numerical tests by the Gaussian kernel do not converge for large values of \(\varepsilon \) in this example. As discussed in [7, 9, 14, 30], the use of RBF-QR, RBF-GA or Contour-Padé algorithm for small values of \(\varepsilon \) should lead to convergent results. Due to the need of this special treatment, we did not consider the Gaussian here and we restricted ourselves to the above mentioned basis functions.

Finally we note that, augmenting the RBF–FD stencils with spherical harmonic terms and using a special technique (such as RBF-QR, RBF-GA and Contour-Padé algorithms) for handling the flat limit case will turn this method more accurate than the GMLS method at the price of increasing the computational cost.

8 Conclusions and final remarks

A generalized moving least squares approximation was constructed on spheres to approximate an operator equation from scattered points on local spherical caps. The method avoided the action of the operator on the complicated shape functions and replaced it by much cheaper evaluation on spherical harmonics. Thus the computational cost was reduced remarkably compared to the classical moving least squares approach. It was applied and analyzed for some PDE problems on the sphere. The method provided the optimal algebraic rate of convergence and the final linear system is sparse and well-conditioned.

Finally, we note that the direct computation of spherical harmonics on small spherical caps leads to an instability when the discretization becomes finer. This is a disadvantage not only for this approach but also for all numerical methods based on local polynomial reproduction on the sphere, including the classical MLS. We suggested to use the QR factorization for solving the local normal linear systems. Other computational tricks, which avoid forming the ill-conditioned systems and are applicable in a general case, will be welcome in a future work.

References

Atluri, S.N., Shen, S.: The Meshless Local Petrov–Galerkin (MLPG) Method. Tech Science Press, Encino, CA (2002)

Belytschko, T., Krongauz, Y., Organ, D.J., Fleming, M., Krysl, P.: Meshless methods: an overview and recent developments. Comput. Methods Appl. Mech. Eng. 139, 3–47 (1996)

Belytschko, T., Lu, Y.Y., Gu, L.: Element-free Galerkin methods. Int. J. Numer. Methods Eng. 37, 229–256 (1994)

Bos, L.P., Salkauskas, K.: Moving least squares are Backus–Gilbert optimal. J. Approx. Theory 59, 267–275 (1989)

Dai, F., Xu, Y.: Approximation Theory and Harmonic Analysis on Spheres and Balls. Springer, New York (2013)

Devore, R.A., Lorentz, G.G.: Constructive Approximation. Springer, New York (1993)

Fornberg, B., Lehto, E., Powell, C.: Stable calculation of Gaussian-based RBF–FD stencils. Comput. Math. Appl. 65, 627–635 (2013)

Fornberg, B., Flyer, N.: Solving PDEs with radial basis functions. Acta Numer. 24, 215–258 (2015)

Fornberg, B., Piret, C.: A stable algorithm for flat radial basis functions on a sphere. SIAM J. Sci. Comput. 30, 60–80 (2007)

Golitschek, M.V., Light, W.A.: Interpolation by polynomilas and radial basis functions on spheres. Constr. Approx. 17, 1–18 (2001)

Hardin, D.P., Saff, E.B.: Discretizing manifolds via minimum energy points. Not. Am. Math. Soc. 51, 1186–1194 (2004)

Hesse, K., Le Gia, Q.T.: Local radial basis function approximation on the sphere. Bull. Aust. Math. Soc. 77, 197–224 (2008)

Jetter, K., Stöckler, J., Ward, J.D.: Error estimates for scattered data interpolation on spheres. Math. Comput. 68, 733–747 (1999)

Larsson, E., Lehto, E., Heryudono, A., Fornberg, B.: Stable computation of differentiation matrices and scattered node stencils based on Gaussian radial basis functions. SIAM J. Sci. Comput. 35, A2096–A2119 (2013)

Lancaster, P., Salkauskas, K.: Surfaces generated by moving least squares methods. Math. Comput. 37, 141–158 (1981)

Le Gia, Q.T., Sloan, I., Wendland, W.: Multiscale RBF collocation for solving PDEs on spheres. Numer. Math. 121, 99–125 (2012)

Mhaskar, H.N., Narcowich, F.J., Ward, J.D.: Spherical Marcinkiewicz–Zygmund inequalities and positive quadrature. Math. Comput. 70, 1113–1130 (2001)

Mirzaei, D.: Analysis of moving least squares approximation revisited. J. Comput. Appl. Math. 282, 237–250 (2015)

Mirzaei, D., Schaback, R.: Direct meshless local Petrov–Galerkin (DMLPG) method: a generalized MLS approximation. Appl. Numer. Math. 33, 73–82 (2013)

Mirzaei, D., Schaback, R., Dehghan, M.: On generalized moving least squares and diffuse derivatives. IMA J. Numer. Anal. 32, 983–1000 (2012)

Saff, E.B., Kuijlaars, A.B.J.: Distributing many points on a sphere. Math. Intell. 19, 5–11 (1997)

Schaback, R.: Error Analysis of Nodal Meshless Methods. In: Griebel, M., Schweitzer, M.A. (eds.) Meshfree Methods for Partial Differential Equations VIII, Lecture Notes in Computational Science and Engineering, vol. 115, pp. 117–143, Springer, Berlin (2017)

Shepard, D.: A two-dimensional interpolation function for irregularly-spaced data. In: Proceedings of the 23th National Conference ACM, pp. 517–523 (1968)

Sommariva, A., Womersley, R. S.: Integration by RBF Over the Sphere. Preprint UNSW (2005).http://www.math.unipd.it/~marcov//pdf/AMR05_17.pdf

Tolstyth, A.I.: On using RBF-based differencing formulas for unstructured and mixed structured-unstructured grid calculations. In: Proceedings of the 16th IMACS World Congress, pp. 4606–4624 (2000)

Videnskii, V.S.: Markov and Bernstein type inequalities for derivatives of trigonometric polynomials on an interval shorter than the period. Dokl. Akad. Nauk SSSR 130, 13–16 (1960). (In Russian)

Wendland, H.: Moving least squares approximation on the sphere. In: Lyche, T., Schumaker, L.L. (eds.) Mathematical Methods in CAGD, pp. 1–10. Vanderbilt University Press, Nashville, TN (2001)

Wendland, H.: Scattered Data Approximation. Cambridge University Press, Cambridge (2005)

Womersley, R.: Efficient Spherical Designs with Good Geometric Properties (2015). http://web.maths.unsw.edu.au/~rsw/Sphere/EffSphDes/index.html

Wright, G.B.: Radial Basis Function Interpolation: Numerical and Analytical Developments. Ph.D. thesis, University of Colorado (2003)

Acknowledgements

I wish to express my deep gratitude to anonymous reviewers for their helpful comments which improved the quality of the paper. Special thanks go to Dr. Maryam Namazi (University of Isfahan) for careful proofreading. This research was in part supported by a Grant from IPM (No. 95650077).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Elisabeth Larsson.

Rights and permissions

About this article

Cite this article

Mirzaei, D. Direct approximation on spheres using generalized moving least squares. Bit Numer Math 57, 1041–1063 (2017). https://doi.org/10.1007/s10543-017-0659-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-017-0659-8