Abstract

Bark beetle populations have drastically increased in magnitude over the last several decades leading to the largest-scale tree mortality ever recorded from an insect infestation on multiple wooded continents. When the trees die, the loss of canopy and changes in water and nutrient uptake lead to observable changes in hydrology and biogeochemical cycling. This review aims to synthesize the current research on the effects of the bark beetle epidemic on nutrient cycling and water quality while integrating recent and relevant hydrological findings, along with suggesting necessary future research avenues. Studies generally agree that snow depth will increase in infested forests, though the magnitude is uncertain. Changes in evapotranspiration are more variable as decreased transpiration from tree death may be offset by increased understory evapotranspiration and ground evaporation. As a result of such competing hydrologic processes that can affect watershed biogeochemistry along with the inherent variability of natural watershed characteristics, water quality changes related to beetle infestation are difficult to predict and may be regionally distinct. However, tree-scale changes to soil–water chemistry (N, P, DOC and base cation concentrations and composition) are being observed in association with beetle outbreaks which ultimately could lead to larger-scale responses. The different temporal and spatial patterns of bark beetle infestations due to different beetle and tree species lead to inconsistent infestation impacts. Climatic variations and large-scale watershed responses provide a further challenge for predictions due to spatial heterogeneities within a single watershed; conflicting reports from different regions suggest that hydrologic and water quality impacts of the beetle on watersheds cannot be generalized. 
Research regarding the subsurface water and chemical flow-paths and residence times after a bark beetle epidemic is lacking and needs to be rigorously addressed to best predict watershed or regional-scale changes to soil–water, groundwater, and stream water chemistry.

Introduction

The current bark beetle (Dendroctonus ponderosae) epidemic in western North America presents challenging water-resource problems. Bark beetle outbreaks are typically endemic and result in minimal tree mortality in affected watersheds; however, the recent outbreak has affected an unprecedented number of lodgepole pine trees (Pinus contorta) in the Rocky Mountain West, with some watersheds reaching 100 % tree mortality (Raffa et al. 2008) and over 5 million ha infested in both the western US and British Columbia (Meddens et al. 2012). Outbreaks are not specific to North America, and analogous increases in the magnitude of infestation have been observed in both Europe and Asia (e.g. Huber et al. 2004; Tokuchi et al. 2004). Due to the current scale of infestations, there has been an increase in research addressing water resource effects (both quality and quantity) resulting from bark beetle outbreaks.

Prior bark beetle hydrology reviews have focused on water quantity impacts from tree die-off and draw heavily on past findings relating to forest management and harvesting practices (Hélie et al. 2005; Schnorbus et al. 2010; Adams et al. 2011; Pugh and Gordon 2012). However, the different processes affecting water supply have a direct and quantifiable impact on nutrient cycling and hence, water quality (Bricker and Jones 1995). Recent work has developed conceptual frameworks of the coupled biogeochemical and biogeophysical impacts of the bark beetle (Pugh and Small 2012; Edburg et al. 2012); and model simulations suggest that given the large variability in beetle outbreak dynamics and heterogeneity in watersheds, a large range of nutrient responses is possible (Edburg et al. 2011). We expand upon these frameworks by critically synthesizing literature relating to the effects of the bark beetle epidemics on water quality, while integrating relevant research on water quantity and hydrology, to better understand interrelated feedbacks. While bark beetles may infest many varieties of coniferous trees, which may alter the watershed biogeochemical response, our emphasis on water quality mostly focuses on the repercussions of beetle-induced die-off of lodgepole pine (Pinus contorta), Douglas-fir (Pseudotsuga menziesii) and Engelmann spruce trees (Picea engelmannii), which form most of the recent literature. It has also been shown that biogeochemical cycling post beetle infestation, particularly nitrogen cycling, does not significantly differ between host species (Griffin and Turner 2012).

As the climate changes throughout most forested regions, outbreak characteristics of bark beetle infestations are predicted to intensify (Ayres and Lombardero 2000; Dale et al. 2001; Williams and Liebhold 2002). With warming temperatures, outbreaks are predicted to shift towards higher latitudes and higher elevations (Dale et al. 2001; Williams and Liebhold 2002; Jönsson et al. 2009) and insect biodiversity will generally decrease (Williams and Liebhold 1995; Fleming 1996; Coley 1998). In addition, adaptation to the changing climate has resulted in a second insect generation per growing season, further intensifying the epidemic (Mitton and Ferrenberg 2012). Until sufficient vegetation regrowth and forest renewal occur, the deforested zone shifts from a carbon sink to a carbon source, which may further contribute to climate change (Kurz et al. 2008).

Changes in canopy cover, interception and evapotranspiration have the potential to alter hydrologic processes including streamflow and soil moisture along with forest biogeochemistry (Fig. 1). Larval feeding and the introduction of blue-staining fungus carried by bark beetles immediately restrict water and nutrient flow to the tree (Reid 1961). The needles on an attacked tree progress in color from green to red within 2–3 years after attack; several years later, the tree appears grey as the needles drop to the forest floor faster than the typical annual litter-fall (Kim et al. 2005), thus decreasing canopy cover and interception of precipitation. Along with needle drop, tree branches and trunks fall to the forest floor, and their decay leaches increased amounts of carbon, phosphorus, and base cations into the soil matrix (Yavitt and Fahey 1986), potentially altering the forest biogeochemistry. Tree-fall rate varies with climate, tree diameter, land geomorphic characteristics, forest management practices and tree species, and tree fall can occur anywhere from 5 to 25 years post infestation (Mitchell and Preisler 1998). All of the inherent changes in a bark beetle infested forest could ultimately contribute to detrimental and complex changes to both water quality and quantity that vary both spatially and temporally from the onset of infestation.

Fig. 1 A conceptual image depicting the continuously changing hydrologic and biogeochemical cycles during the various phases of bark beetle infestation. The top portion of the figure contains the visual representation of the three primary phases of infestation, with the accompanying elements of the hydrologic cycle. Fluxes are denoted with arrows and storage reservoirs as rectangles with the associated increase or decrease in the process depicted by the fill departure above or below the N.S.C. (“no significant change”) line. While differences in changes of each variable have been observed due to catchment characteristics, climate and infestation characteristics, the filled-in portion displays the general trend even though magnitude may vary. T transpiration, E ground evaporation, I interception, SWE snow water equivalent, θ soil moisture, A ablation and Q water yield. The bottom of the figure displays the temporal trends associated with the different phases of infestation and the expected alterations in biogeochemical cycling. An up arrow indicates concentrations above baseline, a down arrow indicates concentrations below baseline and a horizontal dash indicates no significant change (with the size of the arrow indicating magnitude). Asterisks (*) indicate that the trend depicts the majority of published results, although occasional studies have not observed it. See Tables 1 and 2 for additional clarification.

While bark beetle infestations create somewhat different changes in hydrology and biogeochemistry, a large pool of literature exists on other types of land disturbances that have similar post-disturbance phenomena. The two most common land disturbances compared to bark beetle infestation are tree harvest and fire. Adams et al. (2011) conducted an extensive review of the ecohydrological similarities and differences between tree mortality associated with canopy die-off, forest harvesting practices and fire; some of the different responses that are important to distinguish are highlighted below. One of the main differences is that in high severity fires and many tree harvesting practices, there is entire loss of the overstory canopy, while in bark beetle infestations mortality is not necessarily continuous across the entire watershed. In general, fire is not as similar to bark beetle infestations as tree harvesting, as fire completely alters ground surface vegetation and soil surface properties. Forest harvesting is similar in many ways to bark beetle infestations, inasmuch as both disturbances result in diminished canopy and reduced vegetative nutrient and water uptake; however, forest harvesting also often includes soil disturbance, soil compaction and road construction and maintenance, all of which contribute to differences in hydrological and biogeochemical processes. Beetle-induced tree mortality also occurs in phases, with the tree initially losing its ability to take up water and nutrients, followed by needle discoloration and, several years later, actual needle drop. This slower transition results in less stark changes to forest biogeochemistry, as soil buffering and surviving vegetation can often compensate, reducing nutrient export and dampening observed hydrological changes (Griffin et al. 2013). In comparison, forest harvesting occurs on a much shorter timescale with complete canopy removal associated with immediate tree death.
With the above highlighted differences between bark beetle infestations and other land disturbances, it becomes apparent that even with similarities we can draw upon from the extensive pool of literature on non-bark beetle canopy-changing disturbances, it is necessary to review and synthesize the impacts bark beetle infestations can have on hydrological and biogeochemical processes.

Coupled hydrologic and biogeochemical shifts

Observed water quantity impacts from widespread insect outbreaks range from tree-scale processes, such as evapotranspiration, soil moisture and snow accumulation and melt, to watershed-scale effects, such as water yield and peak flow rates (Fig. 1). These perturbations can have collective effects on water quality and make understanding hydrologic changes an essential first step to water quality predictions; this is particularly important in regions like Colorado’s Front Range, where the majority of the water supply is generated from mountain snowmelt. While the magnitude may vary, most studies show similar trends in tree-scale water quantity processes such as soil moisture and snow accumulation with increasing die-off (Table 1). As more processes and diverse environmental variables are incorporated at larger scale, the response of large watersheds is more uncertain and varies between watersheds (Stednick and Jensen 2007; Weiler et al. 2009; Adams et al. 2011). The sections below highlight recent research on the effects of beetle infestation on water quantity and further integrate this understanding to explain how alterations to the water budget can affect water quality.

Snow accumulation and ablation

Snow pack accumulation typically increases with reduced interception as trees progress from green to grey canopy phases (Boon 2007; Boon 2009; Teti 2009; Pugh and Small 2012). However, observations also suggest that net accumulation may be minimal due to increases in ablation (i.e. combined melt, sublimation, and wind removal) which offset decreases in interception (Biederman et al. 2012). The observed magnitude of change in snow water equivalent (SWE) or accumulation is directly related to the ability of ablation at the ground surface to offset increased snow inputs from decreased interception. In general, ablation rates tend to be higher in infested watersheds (Potts 1984; Boon 2009; Pugh and Small 2012). Teti (2009) found that ablation rate variability could be described by radiation transmittance and basal area. Initial model results from Boon (2007) found a modest reduction in ablation rate; however, incorporation of a more detailed water balance by Boon (2009) suggests that rates increase in impacted stands. In addition to the increase in exposure due to solar radiation, increased needle-fall on the snow surface decreases snow albedo, in turn leading to increased snow ablation energy (Winkler et al. 2010; Pugh and Small 2012). This phenomenon is particularly important in red and grey phase stands, which receive more shortwave radiation than green stands (Boon 2009). However, the albedo decrease is only observed during the red phase when needles are falling and by the time the tree has transitioned to grey phase and most needles have fallen, the albedo may actually be greater than in undisturbed pine forests (Winkler et al. 2012). The contribution of wind removal to ablation rates is insignificant relative to the increase from enhanced solar radiative fluxes (Biederman et al. 2012).
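
The offsetting between reduced interception and increased ablation can be made concrete with a simple stand-scale mass balance. The following is a minimal sketch, not a model taken from any of the cited studies: it assumes that net accumulation equals snowfall minus canopy interception loss minus ablation at the ground surface, and every number in it (snowfall, interception fractions, ablation totals) is a hypothetical value chosen only for illustration.

```python
def net_swe(snowfall_mm, interception_fraction, ablation_mm):
    """Net snow water equivalent (mm) under a simple mass balance.

    Assumptions (not from the cited studies): intercepted snow is lost
    entirely from the stand, and ablation cannot remove more snow than
    reaches the ground.
    """
    throughfall = snowfall_mm * (1.0 - interception_fraction)
    return max(throughfall - ablation_mm, 0.0)


# Hypothetical values for illustration only.
snowfall = 400.0  # seasonal snowfall, mm water equivalent

green = net_swe(snowfall, interception_fraction=0.30, ablation_mm=80.0)
# Grey stand: less interception, but more ablation at the ground surface.
grey_small_ablation = net_swe(snowfall, interception_fraction=0.15, ablation_mm=100.0)
grey_large_ablation = net_swe(snowfall, interception_fraction=0.15, ablation_mm=140.0)

print(f"green stand:                 {green:.0f} mm")
print(f"grey, modest ablation rise:  {grey_small_ablation:.0f} mm")
print(f"grey, large ablation rise:   {grey_large_ablation:.0f} mm")
```

With these assumed inputs, the grey stand accumulates more snow than the green stand when the ablation increase is modest, while a larger ablation increase cancels the gain from reduced interception entirely, which is the kind of outcome reported as minimal net accumulation change.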

When comparing green and grey stands across different sites, changes in snow accumulation display inconsistencies in magnitude and may be overshadowed by the spatial variability in winter weather conditions or possibly increased spatial variability of snowpack accumulation under beetle-killed trees (Teti 2009). In addition, stand scale spatial variability is important as denser canopy cover may result in increased SWE with tree death, while the opposite trend is observed in areas with sparser coverage (Biederman et al. 2012); however, beetles may more successfully kill trees that are naturally in areas of lower density (i.e. not managed or thinned stands) as a result of having potentially thicker phloem layers (Kaiser et al. 2012).

Temporal variability, specifically inter-annual differences in meteorological conditions and phase of tree death, also affects the response of snow accumulation to tree death (Pugh and Small 2012; Boon 2012; Winkler et al. 2012). Studies on the effects of widespread mountain pine beetle (MPB)-induced tree mortality on snow accumulation in British Columbia reveal greater differences in accumulation in years of low SWE, which the authors attribute to decreased interception (Winkler et al. 2012). This difference in interception also changes as tree death progresses. In contrast to the increase in snow accumulation observed in grey stands in Colorado, red phase trees exhibited no significant change in accumulation compared to green trees, as most needles still remain on the trees and canopy transmittance from red phase trees was similar to that of green phase trees (Pugh and Small 2012).

Other mechanistic modeling studies have also been applied to understand field observations (Bewley et al. 2010; Mikkelson et al. 2013b). Model simulations are useful to differentiate between bark beetle impacts and inherent annual and spatial variability in snow deposition and melt. Bewley et al. (2010) found that discrepancies between field and modeled snow observations could be attributed to the model sensitivity in averaged leaf-area index values, which were inversely related to net solar radiation and directly related to net long-wave radiation. Net radiation was found to be the dominant source of snowmelt energy in modeled red, grey and green stands; whereas in clear-cut and regenerating stands there were larger contributions from changes in sensible heat flux attributed to increased wind speed. It is reasonable that this latter mechanism may also be a more dominant factor in beetle-infested forests after tree fall, although fallen trees still reduce wind velocity at the ground level. In addition, Pugh and Gordon (2012) found grey lodgepole pine stands transmitted 11 to 13 % more sunlight to the forest floor than green stands. Red phase trees exhibited moderate (4–5 %) increases, which may not result in measurable increases in snow accumulation or melt, as observed in an earlier study (Pugh and Small 2012). Mikkelson et al. (2013b) found that increased radiation, and thus increased snowmelt and sublimation, can offset the increases in snow accumulation due to decreased canopy interception until the air and ground temperatures are below freezing and the enhanced melting trend gives way to the permanent winter snowpack. As a result, changes in the land energy budget may account for early differences in SWE across sites.

A larger body of literature investigates the interrelationship between vegetation and snow processes, independent of insect infestation (Gelfan et al. 2004; Woods et al. 2006; Jost et al. 2007; Musselman et al. 2008; Molotch et al. 2009). These studies report 15–47 % greater snow accumulation and 23–54 % faster ablation rates in open as compared to forested areas. Changes in snow accumulation and melt are predominantly attributed to differences in forest cover, elevation, and aspect. Precipitation variability also exerts an influence; the increase in accumulation in open spaces was 12 % greater in a mild winter than in a winter with above average snowfall (Jost et al. 2007). While percent forest cover accounts for a significant portion of the variability in snow accumulation, the spatial structure of canopy removal will also result in different degrees of change in snow accumulation. When canopy cover was removed in 0.2–0.8 ha groups, no change in snow accumulation was observed, likely due to increased ablation from solar radiation and wind. Conversely, when the same percent of canopy was removed, but evenly distributed over the domain, snow accumulation significantly increased (Woods et al. 2006). In forests impacted by the mountain pine beetle, tree death is characterized by more distributed canopy removal than clustered clear-cutting, particularly in the earlier stages of infestation when tree death is less uniform. The findings of Woods et al. (2006) suggest that earlier and dispersed MPB-induced tree death will result in significant increases in snow accumulation. In contrast, more widespread tree death, potentially related to later stages of infestation or large wind-thrown areas, may not result in significant increases. Overall, hydrologic impacts are difficult to generalize; the stage of die-off, as well as spatial and climatic variability, must be taken into account when predicting future snow accumulation scenarios within bark beetle infested watersheds.

Changes in snow accumulation, ablation, and possible alterations to snowmelt timing and water availability may also have implications for biogeochemical cycling in snow dominated high-altitude watersheds. For example, snowmelt timing and growing seasons influence ecosystem nitrogen exports. Increased nitrogen deposition over the last several decades suggests nitrogen saturation in the high elevation alpine regions of the Rocky Mountain Front Range (e.g. Baron et al. 1994), resulting in increased N export from these ecosystems. Much of the increase in streamwater nitrogen concentrations is observed during snowmelt and is temporally disconnected from the peak-growing season of the subalpine forests, where nitrogen is still limiting. Earlier snow melt caused by MPB-induced changes in snow dynamics may further disconnect this high nitrogen flux from nitrogen limited vegetation. In addition, active microbial biomass formation limits nitrate exports from alpine watersheds during snowmelt for consistently snow-covered sites; however, inconsistent snowpack does not result in the same protection for biomass formation. The limited buffering capacity in inconsistent or shallow snowpack may result in greater inorganic nitrogen exports during snowmelt (Brooks et al. 1998). As a result, spatial and temporal changes in snowpack resulting from widespread land cover change may significantly alter the biogeochemical cycle of nitrogen.

Soil moisture and evapotranspiration

Soil moisture and evapotranspiration (ET) are two interlinked processes that appear to be altered following bark beetle outbreaks. After snowmelt, models universally found decreases in ET following insect induced tree-mortality, as transpiration ceases soon after initial outbreak (Zimmermann et al. 2000; Beudert 2007; Hubbard et al. 2013; Mikkelson et al. 2013b). However, after tree death, increased radiation exposure and moisture in the upper soil horizons can increase ground evaporation, and prolonged soil moisture during the growing season may increase transpiration as vegetation recovers. Restoration of transpiration in regenerating forests is a function of (1) the regrowth of the canopy cover, represented in many models as the leaf area index and (2) the development of roots able to reach deeper soil horizons, particularly in dry environments. Therefore, the ability of regrowth to mitigate the reduced transpiration from pine mortality is directly related to the rooting depth and depth to soil saturation; it is less likely to offset transpiration losses on steep slopes, with deeper, less accessible, shallow groundwater and lower density plant growth (Mikkelson et al. 2013b). Evaporative moisture loss, as reflected by latent heat, is also a function of wind speed which has been linked to observations of increased evaporation in clear-cut harvested areas; this increased flux partially offsets soil moisture increases from reduced transpiration (Woods et al. 2006). Increased wind speed may also be expected in MPB-impacted forests in the dieback phases, although fallen trees may reduce the effect and result in smaller increases in evaporation than observed in clearcut areas.
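
The competing ET components described above can be tracked with simple bookkeeping. The sketch below is illustrative only: it assumes that growing-season ET is the sum of overstory transpiration, understory transpiration, ground evaporation and interception loss, and the `EtComponents` class and all values in it are hypothetical rather than taken from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class EtComponents:
    """Growing-season evapotranspiration components, all in mm."""
    overstory_transpiration: float
    understory_transpiration: float
    ground_evaporation: float
    interception_loss: float

    def total(self) -> float:
        return (self.overstory_transpiration + self.understory_transpiration
                + self.ground_evaporation + self.interception_loss)


# Hypothetical values for illustration only (not from the cited studies).
green = EtComponents(250.0, 40.0, 60.0, 80.0)
# Grey phase: overstory transpiration has ceased and interception is reduced,
# while ground evaporation and understory transpiration have increased.
grey = EtComponents(0.0, 90.0, 130.0, 30.0)

change = grey.total() - green.total()
print(f"green ET = {green.total():.0f} mm, grey ET = {grey.total():.0f} mm, "
      f"net change = {change:.0f} mm")
```

Under these assumed numbers total ET still declines, but by less than the lost overstory transpiration alone, because the increases in ground evaporation and understory transpiration partially offset it. Different assumed values would shift the balance, which is why the net ET response is described as variable.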

As a result of net interception and transpiration decreases, soil moisture increases are observed in bark beetle infested watersheds in field studies and are theoretically expected based on modeling studies. While Morehouse et al. (2008) observed greater soil moisture from spring through fall in infested plots, only the summer increases were statistically significant (p ≤ 0.05). Clow et al. (2011) found significantly higher soil moisture (p ≤ 0.1) under red and grey phase trees when compared with green trees in an October field investigation. Integrated hydrologic and land-cover modeling results from Mikkelson et al. (2013b) point to three interrelated controls on soil moisture: watershed slope, plant cover, and degree of soil moisture stress. They found that the effect of beetle infestation on soil moisture was more pronounced during periods of moisture stress when green phase trees continue to transpire, but the drier soil surface layer limits ground evaporation. Soil moisture effects are less distinct on steeper slopes because soils receive less moisture due to increased runoff, and are generally better drained. Similar to the previous hydrologic variables, spatial and temporal heterogeneity in infestation or land and soil characteristics must be considered when evaluating the impacts to soil moisture and ET, particularly at the watershed scale. For example, the loss of transpiration in an attacked tree is rapid and likely results in an increase in soil moisture observed over the first growing season after attack and reaching a maximum during the second growing season (Hubbard et al. 2013). As the infestation progresses in time and space, however, trees are not uniformly killed resulting in a range of transpiration and soil moisture changes at the watershed scale that will mute the expected increase in water yield. 
While the spatial distribution of attacked trees will affect the surrounding soil moisture, landscape characteristics and soil moisture also influence which trees are more susceptible to attack (Kaiser et al. 2012). For some landscape characteristics, the feedbacks between soil moisture and tree susceptibility are compounding. In general, trees under water stress are more prone to MPB attack (Kaiser et al. 2012) and the resulting effect on soil moisture is more pronounced (Mikkelson et al. 2013b). In contrast, trees on steep slopes are also more susceptible to attack; however, the effects of the attack are less distinct, as runoff is more prominent. Increases in soil moisture, as well as nutrient availability, will also facilitate compensatory growth from remaining and new vegetation (Hubbard et al. 2013). This increased water and nutrient uptake further complicates the expected watershed scale response.

Increases in soil moisture and radiation are often linked with increased rates of soil organic matter decomposition in forest soils (e.g. Wickland and Neff 2008). Carbon inputs from decomposition are important for the microbial assimilation of nitrogen. In clear-cut forests, research suggests that carbon limitations on assimilation, rather than faster litter decomposition, are responsible for increased nitrogen availability (Prescott 2002), further adding to nitrogen increases from decreased plant uptake. In unmanaged MPB-impacted forests, carbon limitations are less significant, and increased decomposition rates may therefore be an important factor causing increased nitrogen fluxes, in addition to increased organic matter degradation.

Water yield and peak flows

Bark beetle induced tree mortality has the potential to impact watershed-scale runoff properties due to changes in snow accumulation and melt, evapotranspiration and interception. The severity of the impact on streamflow response is related to the factors above, as well as to the magnitude, type and intensity of precipitation. Stednick (1996) proposed that watersheds receiving less than 20 inches (about 508 mm) of precipitation annually are not able to overcome increases in evaporation and will not see a streamflow response to dieback. In watersheds dominated by rain, not snow, peak flow increases should only occur when decreases in interception and transpiration are not mitigated by increases in evaporation from increased exposure. As a result, increased throughfall is a significant factor for small-storm events wherein interception is a larger component of the water balance. Thus, in rain-dominated watersheds, peak flow observations may not reflect observed changes in annual water yield (Alila et al. 2009). In snowmelt-dominated watersheds, however, models used to upscale observed changes in snow accumulation and melt rates produced increased peak flow associated with larger storm events (Alila et al. 2009).

Other watershed properties, such as size, also influence changes in peak flow rates. At the stand-scale, model results show that soil water outflow may increase by 92 % over control plots (Knight et al. 1991). Understanding runoff generation at the watershed scale is more challenging. Weiler et al. (2009) modeled watersheds in the Fraser Basin (British Columbia) at different scales assuming death of all pine trees and found that moderate increases in peak flow were more likely in larger, aggregate watersheds, while smaller order watersheds could experience either small (<23 %) or large (>88 %) increases in peak flows. The results suggest that simulating a variety of watershed properties at larger scales will result in muted responses in peak flow or desynchronized flows. Specifically, peak flows from larger watersheds combine flows from multiple sub-catchments, each with different aspects, forested extents, impacted areas, and degree of regeneration.
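
The muting effect of combining desynchronized sub-catchment flows can be illustrated numerically. The following is a toy sketch under stated assumptions, not a reproduction of the Weiler et al. (2009) model: the bell-shaped `hydrograph` function, the three sub-catchments, their peak timings and the neglect of channel routing are all hypothetical simplifications.

```python
import math


def hydrograph(peak_flow, peak_day, width_days, n_days=120):
    """Synthetic bell-shaped snowmelt hydrograph (a hypothetical shape)."""
    return [peak_flow * math.exp(-((day - peak_day) / width_days) ** 2)
            for day in range(n_days)]


# Three hypothetical sub-catchments whose melt peaks on different days,
# e.g. because of differing aspect, forested extent or degree of die-off.
subcatchments = [
    hydrograph(peak_flow=10.0, peak_day=40, width_days=8),
    hydrograph(peak_flow=10.0, peak_day=55, width_days=8),
    hydrograph(peak_flow=10.0, peak_day=70, width_days=8),
]

# Flow at the outlet, ignoring routing delays (a simplifying assumption).
outlet = [sum(flows) for flows in zip(*subcatchments)]

sum_of_peaks = sum(max(h) for h in subcatchments)
outlet_peak = max(outlet)
print(f"sum of sub-catchment peaks: {sum_of_peaks:.1f}")
print(f"peak of combined flow:      {outlet_peak:.1f}")
```

Because the individual peaks arrive on different days, the combined peak is well below the sum of the sub-catchment peaks. A change in any single sub-catchment therefore has a diluted effect on the aggregate peak, consistent with the more moderate responses simulated for larger watersheds.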

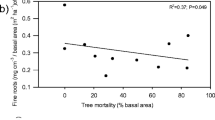

Evaporation rates and the percent of pine-covered forest have been shown to influence the change in peak flow rates in bark beetle infested watersheds. During a 1940s bark beetle outbreak further described below, Bethlahmy (1975) observed that streamflow increased more in low radiative energy watersheds, such as the north-facing Yampa River (Colorado, USA), than in high radiative energy watersheds, where net radiation and evaporative losses were greater. As expected, bark beetle infestation has a greater influence on peak flows in watersheds that receive most of their runoff from pine-dominated areas (Stednick and Jensen 2007; Weiler et al. 2009; Schnorbus et al. 2010) and are thus more sensitive to MPB infestation. Within the pine-dominated area, considerable forest regeneration and understory vegetation may prevent observable increases in water yield (Stednick 1996), likely due to transpiration that is restored in regrowth or facilitated by increased water availability in understory vegetation. It is also possible that a minimum percentage of the watershed must be infested before water yield is detectably altered. Zimmermann et al. (2000) proposed a threshold of 25 % deforestation prior to observing a runoff response. Stednick (1996) proposed a comparable minimum canopy reduction of 20 %. If the MPB-affected area does not exceed the minimum threshold, it is likely that the remaining vegetation is capable of transpiring enough of the excess soil moisture that increased flows are not detectable. In addition, if the threshold is not met in a snow-dominated watershed and the canopy is essentially thinned but interception is still occurring, SWE may actually decrease due to increased ablation, and increases in peak flow are therefore improbable. Although peak flow responses may only occur if a minimum area is deforested, the streamflow response may not be immediate upon reaching that threshold.
Streamflow observations from a bark beetle outbreak in the 1940s in the Rocky Mountains (Bethlahmy 1974; Bethlahmy 1975) demonstrated a delay in watershed response with little change in annual water yield in the first 5 years as the infestation escalated to epidemic levels. Interestingly, the maximum increase in water yield was observed 15 years later, and increases were still detected 25 years after the peak of the infestation (Bethlahmy 1974). The prolonged response in water yield is indicative of multiple flow paths and a range of residence times causing a continued signal for years following outbreak.
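
The precipitation and canopy-loss thresholds discussed in this section can be combined into a simple screening rule. The sketch below is a hypothetical illustration, not a method proposed in the cited work: the function name and its parameters are invented here, the defaults follow the 20 inch precipitation and 20 % canopy reduction values attributed to Stednick (1996), and treating a value exactly at a threshold as sufficient is an assumption.

```python
INCH_TO_MM = 25.4


def yield_response_expected(annual_precip_mm, canopy_loss_fraction,
                            min_precip_mm=20 * INCH_TO_MM,
                            min_canopy_loss=0.20):
    """Screen whether a detectable water-yield response is plausible.

    Hypothetical helper: returns True only when both the annual
    precipitation and the fraction of canopy lost reach their thresholds.
    It says nothing about the magnitude or timing of the response, which
    the historical record suggests can lag the infestation by years.
    """
    return (annual_precip_mm >= min_precip_mm
            and canopy_loss_fraction >= min_canopy_loss)


# Hypothetical watersheds for illustration only.
print(yield_response_expected(600.0, 0.30))  # wet enough, enough canopy lost
print(yield_response_expected(450.0, 0.60))  # below the precipitation threshold
# Using the 25 % deforestation threshold of Zimmermann et al. (2000) instead.
print(yield_response_expected(700.0, 0.22, min_canopy_loss=0.25))
```

A rule of this kind is only a first-pass filter. The factors discussed above (radiative energy, regeneration, understory uptake and delayed response) would still need to be assessed for any watershed that passes it.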

Watershed peak flow response may also be indicative of changes in flow path partitioning. We are aware of one study that has looked specifically at changes in flow paths from bark beetle infestation using historical data, isotope tracers, and simplified hydrologic models. Significant differences from isotopic partitioning into direct and indirect runoff during flood events were not found between paired catchments of different degrees of infestation (Beudert 2007). However, double mass analysis, comparing precipitation with components of the hydrologic cycle in infested and non-infested watersheds, highlighted increases in surface runoff and fast and slow groundwater runoff. This analysis suggests important changes in flow path partitioning in each watershed as infestation progressed.
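
For readers unfamiliar with double mass analysis, the idea is to plot cumulative runoff against cumulative precipitation and look for a change in slope. The sketch below shows a generic version of that calculation and is not the specific procedure of Beudert (2007): the function name, the choice of break point and the annual precipitation and runoff series are hypothetical.

```python
from itertools import accumulate


def double_mass_slopes(precip, runoff, break_index):
    """Slopes of cumulative runoff versus cumulative precipitation before
    and after a chosen break point (e.g. the onset of infestation)."""
    cum_p = list(accumulate(precip))
    cum_q = list(accumulate(runoff))

    def slope(i, j):
        return (cum_q[j] - cum_q[i]) / (cum_p[j] - cum_p[i])

    last = len(cum_p) - 1
    return slope(0, break_index), slope(break_index, last)


# Hypothetical annual series (mm); infestation assumed to begin after year 5.
precip = [900, 1100, 1000, 950, 1050, 1000, 1100, 900, 1050, 950]
runoff = [360, 440, 400, 380, 420, 500, 550, 450, 525, 475]

before, after = double_mass_slopes(precip, runoff, break_index=4)
print(f"runoff per unit precipitation before: {before:.2f}, after: {after:.2f}")
```

A steeper slope after the break point indicates that more runoff is generated per unit of precipitation. Applying the same calculation to individual runoff components (surface runoff, fast and slow groundwater runoff) would indicate which flow paths account for the change.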

The mechanisms responsible for competing watershed responses that influence peak flow rates are still unclear. Additional studies on the long-term alterations in water partitioning of infested watersheds are therefore needed to fully understand the water-quantity and hydrologic cycle impacts associated with beetle outbreak. Furthermore, perturbations in flow paths and timing in infested watersheds may impact nutrient transport and related biogeochemical processes with impacts on nutrient availability for reforestation and downstream water quality. For example, Beudert (2007) and Zimmermann et al. (2000) both observed higher nitrate concentrations in waters sourced from deeper soil water than from shallow soil water, suggesting hydrologic shifts toward this water fraction may result in higher nutrient fluxes.

Water quality and nutrient cycling

As suggested above, soil-vegetation disturbances from insect-induced tree-mortality may influence nutrient cycling and ultimately water quality by perturbing existing biogeochemical cycles. Specifically, hydrologic changes within the infested watershed may cause increased particulate transport, increased organic carbon fluxes from enhanced organic matter decomposition, and alterations in nitrogen cycling, which can potentially cause changes in soil pH and increased mobilization of metals with toxicological implications (Fig. 1). The available literature on biogeochemical outcomes and water quality perturbations resulting from a bark beetle outbreak (Table 2) is sparser than literature on water quantity; nonetheless, synthesis and interpretation of emerging literature suggests potential impacts. Biogeochemical changes in these systems are not yet well understood mechanistically or observationally, and evidence exists for intertwined and competing processes. In the sections below, we discuss potential implications of the beetle infestation on biogeochemistry and water quality with a focus on nutrients and metals.

Nitrogen

High tree mortality rates due to a bark beetle infestation can alter nitrogen cycling. As trees die, uptake of nitrogen (N) ceases, which can lead to excess nitrogen pools in underlying soils until vegetation regrowth compensates. Increased litter from tree mortality (Griffin et al. 2011) can also lead to increased inorganic nitrogen pools (Cullings et al. 2003). Transformation processes such as nitrification/denitrification and mineralization could be enhanced due to an abrupt increase in carbon sources, increased soil moisture, and increased microbial activity from higher energy fluxes to the ground; however, confounding factors such as catchment nitrogen deposition, surviving vegetation and climate can lead to differing responses post beetle infestation.

Typically, it has been found that nitrogen saturated watersheds in Europe and Southeast Asia exhibited a much larger nitrate response (in both soil and surface waters) than more nitrogen-limited watersheds such as the arid Rocky Mountain West in North America after a bark beetle attack. Three studies (Zimmermann et al. 2000; Huber et al. 2004; Huber 2005) looked at tree-scale nitrogen cycling post beetle infestation in a German spruce forest and saw a large soil nitrate response. Increases in nitrate concentrations were found in soil water at 50–100 cm depths for 4 years after initial die-off with maximum concentrations observed in years one and two after initial die-off (Zimmermann et al. 2000). However, shallower soil layers showed only slight increases in NO3 concentrations following die-off, possibly due to increased denitrification in the upper soil layers where there is typically a higher organic content in the soil and increased soil moisture.

In contrast, Huber et al. (2004) saw a more delayed nitrate response in seepage water samples that occurred approximately 4 years after die-off (which corresponds roughly with the grey phase). Seepage water NO3 concentrations were elevated for 7 years after outbreak and showed a clear seasonal trend with highest concentrations in autumn at the end of the vegetation growing period and lowest values after spring runoff. Surviving vegetation and vegetation regrowth are common themes when determining the biogeochemical response; they can be compensatory mechanisms that explain why a large nitrate response is not often seen post beetle infestation but is more frequently seen after large disturbances such as timber harvesting without streamside buffers, wind-thrown forests or wildfire (Griffin et al. 2013; Rhoades et al. 2013).

Along with compensatory nitrogen uptake by surviving vegetation, the differences in atmospheric N deposition and tree mortality spread over multiple years may be factors contributing to varied surface and groundwater nitrate-nitrogen responses post beetle infestation (Rhoades et al. 2013). Tokuchi et al. (2004), who investigated a Japanese catchment that had recently experienced pine wilt disease, provide the only study besides Zimmermann et al. (2000) to find increased NO3 concentrations in stream water. Both studies saw increased groundwater nitrate concentrations as well; however, in the German forest groundwater nitrate concentrations (5–10 m depth) lagged 2 years behind streamwater nitrate concentrations, while in the Japanese forest increases in groundwater nitrate concentrations preceded increases in streamwater nitrate concentrations by 2 years. These findings suggest that groundwater may play a large role in the long-term transport of NO3; however, temporal trends vary among catchments.

Unlike the Japanese (Tokuchi et al. 2004) and German studies (Zimmermann et al. 2000), two studies in Colorado (Clow et al. 2011; Rhoades et al. 2013) did not find increased NO3 concentrations in stream water in response to the MPB infestation even though one study (Clow et al. 2011) observed larger NO3 concentrations in soil (15 cm depth) under red and grey phase trees which would suggest the potential for elevated groundwater and stream water concentrations as the infestation progresses through the dieback phases. Clow et al. (2011) did find that the fourteen streams sampled during the 2007 runoff season in Grand County, CO, exhibited NO3 concentrations that were strongly correlated with the snowmelt hydrograph, typical of mountainous watersheds. While they did not detect increases in NO3 at the three study sites analyzed for temporal trends, total streamwater N did increase over the study period (2001–2009), which encompassed the year when most trees were initially infected and was attributed to increased litter breakdown or warming soil temperatures. They also found that percent forest cover (which included both dead and alive trees) in the basin was the strongest predictor of stream water NO3. Denitrification in groundwater is also feasible, and could mitigate increases in nitrogen transport in stream baseflow.

Tree-scale soil–water ammonium concentrations exhibit a more pronounced, universal increasing trend after bark beetle infestation regardless of other compensatory factors present with nitrate response. This implies that reduced vegetation uptake of NH4 and increased inorganic N pools due to leaf litter are the primary release mechanisms associated with beetle-impacted catchments. Griffin et al. (2011, 2012, 2013), Morehouse et al. (2008), Xiong et al. (2011) and Kaňa et al. (2012) all investigated soil nitrogen pools after insect outbreak. The studies by Griffin et al. (2011, 2012, 2013), Xiong et al. (2011) and Morehouse et al. (2008) were all in the United States (in Yellowstone National Park, the Colorado Front Range and Arizona, respectively), while the study by Kaňa et al. (2012) was located in the Czech Republic. Four of these studies (Morehouse et al. 2008; Griffin et al. 2011; Griffin and Turner 2012; Kaňa et al. 2012) found elevated N concentrations in needle litter, likely due to lack of N retranslocation associated with tree mortality. In contrast to the findings of Huber (2005), only soil NH4, not NO3, was shown to significantly increase during the outbreak (Morehouse et al. 2008; Griffin et al. 2011, 2013; Xiong et al. 2011; Kaňa et al. 2012) despite observed increases in net mineralization and net nitrification (Griffin et al. 2011). Morehouse et al. (2008) also found higher soil NH4 and higher laboratory net nitrification rates from infected stand soils. Thirty years post outbreak, N concentrations in needle litter still remained elevated; however, soil N fluxes and pools (NO3 and NH4) were not significantly different from those in undisturbed stands (Griffin et al. 2011).

As results from beetle infested forests in Europe and beetle infested forests in western North America appear different, it is probable that because coniferous forests of western North America receive much less atmospheric N deposition than forests in Europe and eastern North America they have a smaller NO3 response following disturbance. Additionally, the climate in western North America is more arid than in Germany and Japan, where streamwater N concentrations were elevated in infested forests. It has been shown that locations with greater annual precipitation are more sensitive to harvesting practices and exhibit a larger hydrologic response and thus, possibly a larger nutrient response (Stednick 1996). This difference between watersheds in humid and arid regions is consistent with the finding that stream water N differences between developed and undeveloped watersheds were smaller in arid regions (Clark et al. 2000; Adams et al. 2011).

The magnitude of observed changes in nitrogen cycling associated with bark beetle outbreaks varies among literature studies. Bark beetle outbreaks will likely cause increased NH4 in the soil beneath dead trees due to increased mineralization of elevated organic nitrogen inputs from leaf litter, and reduced NH4 uptake by trees. Nitrate has also been found to increase in soil beneath bark beetle killed trees; however, it appears more likely under trees located in forests that receive higher atmospheric nitrogen deposition and in deeper soil horizons. Whether or not increased NO3 or NH4 concentrations reach the stream water remains uncertain. Depending on the hydrologic flow-paths of the catchment, surviving understory vegetation and vegetation regrowth, atmospheric N inputs, N pools and sinks, and potential denitrification in underlying deeper soils and aquifers, it is possible that bark beetle outbreaks will not affect N concentrations in surface waters, although the mechanisms controlling timing of N transport (i.e. hydrologic flow paths) need further elucidation.

Phosphorus

Phosphorus (P) flux, either in the form of dissolved phosphate or particulate P, has the potential to be altered after a bark beetle attack because phosphate is readily released from decaying organic matter. Many studies have researched phosphorus changes after forest clear-cutting (e.g. Piirainen et al. 2004; Pike et al. 2010) and found increased inorganic phosphorus loading in soils beneath cut forests. The only published study to investigate phosphorus loading in soils after bark beetle attack (Kaňa et al. 2012) found only slight increases in total P concentrations despite large increases in the P input via litterfall in a Czech Republic spruce forest. However, the soluble reactive fraction of the total P increased by over five times the original concentration which has implications for enhanced P transport to nearby lakes and streams.

Complementing these findings in soils, two Colorado studies investigated stream water P concentrations post beetle attack. Clow et al. (2011) found that while total P in stream water increased in conjunction with beetle attack, the dissolved phosphate fraction decreased. The drought conditions during the early record period may explain the decrease in dissolved phosphate observed by Clow et al. (2011). Stednick et al. (2010) found that both dissolved and particulate phosphorus increased in beetle-killed areas. Increases in particulate P could be indicative of (1) the conversion of dissolved nutrients to particulate form by benthic algae due to warming temperatures and increased productivity or (2) an increase in particulate fluxes to surface waters due to increased erosion. It would be insightful to determine if major water quality concerns arise from increases in particulate P loading following outbreaks due to enhanced erosion and litter decomposition or increased sub-surface transport of dissolved P from changing hydrologic pathways or the altered composition. Distinguishing this trend may be challenging as many mountain watersheds have experienced significant population growth during the MPB infestations and increased development may also result in higher dissolved and particulate phosphorus loading to streams (Geza et al. 2010).

Dissolved organic carbon (DOC)

DOC is ubiquitous in surface and groundwaters and originates from both allochthonous and autochthonous sources. Typically, spring runoff in mountainous watersheds flushes accumulated dissolved organic matter into surface waters (Boyer et al. 1997). In general, soil-DOC originates from litter leachates, root exudates and microbial degradation (Zsolnay 1996). Bark beetle infestations have the potential to influence DOC concentrations as decreases in canopy cover can increase runoff rates, and excess needle loss onto the forest floor, compounded by increased soil moisture and warmer soil temperatures, leads to increased decomposition and soil organic matter leaching. However, the increase in DOC to the soil matrix may be delayed due to the termination of rhizodeposition and mycorrhizal turnover in dead trees (i.e. chemical cycling associated with roots and related fungi), which are major contributors to DOC in soil water (Högberg and Högberg 2002; Godbold et al. 2006).

Studies of DOC in soils associated with infected trees suggest competing processes between litter decay and root exudates. Xiong et al. (2011) analyzed soil DOC in the top 5 cm of mineral soil below the organic horizon of living and attacked trees that had not yet dropped their needles. They observed lower DOC below dead trees than live trees, which is consistent with the termination of rhizodeposition and mycorrhizal turnover. However, long-term analysis of soil DOC was not reported at this site to determine if DOC concentrations eventually rebound as litter decay increases. In a complementary study, Kaňa et al. (2012) found increased soil DOC concentrations in both the litter layer and the organic-rich mineral horizon 3 years after beetle attack. They hypothesized that increased mineralization due to the increased litter input to the forest floor resulted in an increase in DOC concentrations. It appears that increasing DOC concentrations, at least in the soil, lag behind the initial beetle attack by several years due to (1) the immediate cessation of root processes and (2) the time delay until the needles drop to the forest floor and continue the decomposition process.

Huber et al. (2004) compared DOC fluxes in beetle-killed stands versus healthy stands; however, their studies were limited to throughfall and the humus layer and did not extend into the soil mineral horizons. These authors found that DOC concentrations in throughfall were highest in recently killed stands (1–2 years after attack); however, their comparison was limited to adjacent stands of varying ages since attack, rather than temporally sampling a particular stand through the die-off phases. Higher DOC concentrations were also found in the humus efflux of recently attacked stands (1–4 years after attack) relative to healthy stands, with concentrations ranging from 25 to 225 mg/L.

Similar to observations for nitrate, increases in stream water DOC in response to the bark beetle outbreak were not seen in the Colorado study by Clow et al. (2011); however, typical seasonal fluctuations were observed. Classic watershed characteristics such as percent forest cover, precipitation and basin area were more highly correlated to DOC concentrations than percent of forest killed by bark beetles. On the other hand, in a complementary study, Mikkelson et al. (2013a) found approximately four times the amount of total organic carbon (TOC) entering Colorado water treatment facilities that receive their source water from MPB-infested watersheds as compared to control watersheds. However, although the TOC concentrations were shown to increase in conjunction with the level of bark beetle infestation at impacted facilities, this increase was not statistically significant. In light of the varied findings on surface water DOC concentrations, we need a better understanding of near-surface and subsurface reactive transport mechanisms for DOC, along with the dominant hydrologic flow paths.

The ultimate impact of beetle outbreaks on DOC concentrations in adjacent waters is still unclear. However, if DOC concentrations increase or change in chemical composition (i.e. increased aromaticity), a water-treatment concern is the increased potential for the formation of disinfection byproducts (DBPs), which are regulated in drinking water and considered harmful to human health. DBPs are formed when DOC reacts with chlorine during water treatment. Humic-like substances typically found in dissolved organic matter have been found to be common DBP precursors (i.e. likely to form toxic DBPs after chlorination) (Nikolaou and Lekkas 2001) and therefore, even without increases in DOC there may be increases in DBP concentration as the DOC composition becomes more humic in nature. Beggs and Summers (2011) characterized litter DOC from varying stages of attacked lodgepole pine trees and quantified DOC reactivity with chlorine. They found that fresh litter leachates from beetle-killed trees exhibited concentrations within typical coniferous litter ranges and had low proportions of aromatic humic dissolved organic material (DOM) relative to the polyphenolics/protein-like contribution. However, after 2 months of biodegradation the litter leachate lost 80 % of its DOC while experiencing an 85 % increase in SUVA (specific ultraviolet absorption; indicative of the aromaticity of the material); all samples also increased their specific humic peak intensities. The findings of this study suggest that as the needles drop to the forest floor following attack, and the more time they are exposed to weathering and biodegradation, the higher the DBP formation potential.
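The two percentages reported by Beggs and Summers (2011) can be combined in a short worked calculation. The sketch below assumes the conventional definition of SUVA as UV absorbance at 254 nm (per metre) divided by DOC concentration (mg C/L), and assumes that both percentages describe the same leachate sample; the starting values `doc_initial` and `uv254_initial` are hypothetical and chosen only to make the arithmetic concrete. Under these assumptions, an 80 % loss of DOC together with an 85 % rise in SUVA implies that UV-absorbing (aromatic) carbon declined far less than bulk DOC.

```python
def suva(uv254_per_m, doc_mg_per_l):
    """Specific UV absorbance in L mg-C^-1 m^-1 (UV254 normalized to DOC)."""
    return uv254_per_m / doc_mg_per_l


# Hypothetical fresh-leachate values (illustrative, not from the study).
doc_initial = 50.0      # mg C/L
uv254_initial = 100.0   # absorbance per metre at 254 nm
suva_initial = suva(uv254_initial, doc_initial)

# Percent changes reported in the text after 2 months of biodegradation.
doc_final = doc_initial * (1.0 - 0.80)     # 80 % of DOC lost
suva_final = suva_initial * (1.0 + 0.85)   # 85 % increase in SUVA

# Back out the UV254 absorbance implied by the two reported changes.
uv254_final = suva_final * doc_final
uv254_remaining = uv254_final / uv254_initial

print(f"DOC remaining:   {doc_final / doc_initial:.0%}")
print(f"UV254 remaining: {uv254_remaining:.0%}")
```

The ratio of remaining UV254 absorbance does not depend on the hypothetical starting values, since it is simply the product of the DOC fraction remaining and the relative SUVA change.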

These mechanistic findings, in which pine litter leachate becomes more aromatic and humic as it degrades, were supported observationally in a variety of catchments by Mikkelson et al. (2013a). Water treatment facilities receiving their source waters from MPB-impacted catchments had significantly higher levels of DBPs, such as trihalomethanes (THMs) and haloacetic acids, after their TOC-rich waters reacted with chlorine. From 2004 to 2011, THM concentrations in impacted water treatment facilities exhibited a significant increasing trend that was correlated with time since bark beetle outbreak. Additionally, THM concentrations peaked in the late summer and early fall, despite TOC concentrations peaking during spring runoff. This seasonal decoupling of THMs and TOC, along with a more pronounced temporal increase in THM formation than TOC concentration, suggests that a higher aromatic and humic fraction, and hence alterations in TOC structure, are occurring as a result of infestation.
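A monotonic trend of this kind is often assessed with a non-parametric test. The sketch below implements the Mann-Kendall test as one common choice; it is not necessarily the method used by Mikkelson et al. (2013a), and the annual THM values are synthetic placeholders rather than reported data. The function name `mann_kendall` and the variable `thm_annual` are illustrative, and the variance formula used here assumes no tied values.

```python
import math


def mann_kendall(values):
    """Mann-Kendall trend test without tie correction.

    Returns (S, z, p) where S is the sum of pairwise signs,
    z is the continuity-corrected normal score and p is two-sided.
    """
    n = len(values)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = values[j] - values[i]
            s += (diff > 0) - (diff < 0)
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return s, z, p


# Synthetic annual mean THM concentrations for 8 years (illustrative only).
thm_annual = [30, 34, 33, 38, 41, 40, 46, 49]

s_stat, z_score, p_value = mann_kendall(thm_annual)
print(f"S={s_stat}, z={z_score:.2f}, p={p_value:.4f}")
```

With only eight annual values the normal approximation is rough, so exact tables or a longer record would be preferable in a real analysis.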

In summary, litter decomposition under beetle-attacked trees can increase DOC concentration and aromaticity in surface and soil leachates. However, increases may be delayed or sequestered in deeper soil layers due to the termination of root exudates, carbon-dependent microbial processes and abiotic sorption. It is not yet clear whether surface water DOC will universally increase due to bark beetle infestations or whether the characteristics of DOC will be altered. Clow et al. (2011) did not observe increases in DOC, which could be due to delayed release of DOC, or the catchment could have other characteristics mitigating the release. Conversely, the study by Mikkelson et al. (2013a) saw a small increase in TOC concentrations after bark beetle infestation and more compelling evidence for changes in its refractory nature. One potential explanation is that Mikkelson et al. (2013a) considered multiple watersheds and included both surface and groundwaters, while Clow et al. (2011) only sampled surface waters in one watershed. It is possible that groundwater transport is important for understanding the link between bark beetle outbreaks and DOC transport. Interesting opportunities for future work include determining if the primary transport pathway of bark beetle induced DOC release is via leaching through the soil layers into the groundwater or increased runoff transporting terrestrial organic carbon to surface waters. It is also imperative to further understand how the characteristics of the DOC are changing and how these changes are expressed both temporally and spatially. A shift toward more aromatic, humic DOC carries a higher DBP formation potential, with human health and water treatment ramifications in beetle-infested watersheds.

Metals and base cations

Bark beetle infestations may alter cation and aluminum fluxes as increased nitrification reduces the soil pH and leads to the exchange and loss of base cations (Ca2+, K+ and Mg2+) and aluminum from the soil (Huber et al. 2004; Tokuchi et al. 2004). Aluminum concentrations in seepage water have been shown to increase post attack and correlate with NO3 concentrations (Zimmermann et al. 2000; Huber et al. 2004; Tokuchi et al. 2004) as the negatively charged NO3 ion attracts the positively charged aluminum ions. Mineral soil (Huber et al. 2004) and surface water concentrations (Tokuchi et al. 2004) of base cations have also been shown to increase for up to 7 years post attack. It seems likely that if there is an increased NO3 flux after bark beetle infestation it will lead to base cation or aluminum leaching, as determined by the soil geochemistry and initial metal content within the soil.

While Kaňa et al. (2012) did not measure soil–water concentrations, they did find elevated base cation concentrations in the soil below attacked trees in a Czech Republic forest. K+ concentrations responded immediately, indicative of the rapid release of K+ from decaying spruce residues or lack of K+ uptake from vegetation, followed by a slower increase in Ca2+. The drastic increase in base cations caused replacement of Al3+ and H+ ions in the soil sorption complex, leading to a higher soil pH after beetle infestation despite acidic needle drop. It appears with the increasing soil pH and base saturation that the quality of soils in the Czech Republic catchment increased post-beetle infestation as compared to before the infestation; however, the increased mobility of other cationic metals such as Cu, Zn, and Cd which were not investigated in these studies may lead to detrimental water quality impacts.

Literature regarding changes in transport of other metals (besides aluminum) following bark beetle infestation is limited. Complementary studies investigating the effect of land use change (agricultural versus forest) on metal mobility have found up to 80 % of the measured Zn and Cd levels to be above current ground water quality standards after forest soil acidification (Römkens and Salomons 1998). The effects on metal flux are complex due to intertwined processes such as ligand affinity and aqueous solubility as a function of pH. DOC is known to form complexes with metals such as cadmium, copper, nickel and zinc (e.g. Christensen et al. 1996; Antoniadis and Alloway 2002), thus increasing their mobility. Accordingly, it is possible that metal mobility could be increased by complexation with DOC resulting from large-scale tree die-off and an initial rapid loss of carbon from the soil (Xiong et al. 2011) followed by prolonged release into the adjacent water supplies (Huber et al. 2004; Mikkelson et al. 2013a). If DOC-metal complexation is the primary mechanism for metal transport in the watershed, then it is important to also take into consideration hydrologic controls on DOC release. If the infested watersheds exhibit a typical seasonal peak release of DOC (approximately 1 month prior to peak stream discharge) (e.g. Hornberger et al. 1994), then peak metal concentrations can be expected around a similar seasonal timeframe. However, as it is still unknown which dominant DOC transport pathways are altered post beetle infestation, it is possible that the typical hydrologic controls on DOC release will change. Therefore, pre-infestation watershed characteristics such as soil metal concentrations and dominant hydrologic flow paths are critical in determining watershed response. It has also been shown that in waters receiving little urban input (i.e. waters from forested catchments), aromatic humic substances are the dominant metal chelators (Baken et al. 2011). If bark beetle infestations increase the aromaticity of DOC, as suggested by the field data of Mikkelson et al. (2013a) discussed above, then metal transport via DOC complexation could be enhanced, especially in low flow months.

Along with DOC complexation, pH is well known to influence metal mobility (Gabler 1997; Römkens and Salomons 1998; Sauve et al. 2000) where lower pH generally increases solubility, desorption rates from soil, and thus mobility. Acidification is possible following forest die-off due to increased nitrification (Hélie et al. 2005) and an increased acidic needle pulse to the forest floor and therefore could lead to increased cationic metal solubility. Conversely, increases in pH as were observed in Kaňa et al. (2012) and Xiong et al. (2011) potentially could increase metal mobility as the solubility of DOC increases with increasing pH (Guggenberger et al. 1994) and DOC is more readily available for complexation. Although there is no literature that addresses metal-mobility impacts of the bark beetle infestation, it is important to determine if metal mobility will be altered because of the potential impacts on ecological health as well as possible complications in municipal water treatment and drinking water quality. Research needs to begin with stand-scale observations of soil–water metal concentrations and expand out to watershed and regional-scale observations of surface and groundwater metal concentrations over the entire beetle infestation progression as other land use changes with similar biogeochemical phenomena (e.g. Römkens and Salomons 1998) have resulted in large differences in metal mobility.

Summary and synthesis

Coupled hydrologic and biogeochemical processes resulting from bark beetle outbreaks have the potential to alter water quality and quantity; however, impacts on water supply and ecological ramifications are not yet fully understood. From a critical analysis of the current literature, the following hydrologic generalizations can be made about bark beetle infested forests:

- While the magnitude of the shift appears variable, snow depth will increase in infested catchments.
- Changes in evapotranspiration are more variable and may be offset by competing components, such as decreased transpiration but increased ground evaporation.
- Soil moisture increases are probable, although seasonal fluctuations may be influential in determining overall increases.
- Stand-scale water yield will increase; however, confounding factors such as percent infested area, evaporation rates, and climate make predicting changes in water yield difficult on a watershed or regional scale.

These hydrologic shifts combined with other beetle-induced impacts will in turn affect terrestrial biogeochemistry with potential adverse impacts on nutrient cycling and water quality. While these effects appear to have regional variability linked to factors such as soil type and precipitation, some possible trends are:

- A lag in time between initial infestation and observance of concentration changes exists for most nutrient responses; however, this time-scale lag differs depending on nutrient and watershed characteristics.
- Nitrate soil response may depend on atmospheric N deposition and climatic factors.
- Ammonium is likely to increase in soil beneath dead trees due to increased mineralization of organic matter and cessation of nutrient uptake through the roots of dead trees.
- Changes in stream water NO3 and NH4 concentrations depend on catchment hydrologic flow-paths, atmospheric N inputs, N pools and sinks and potential denitrification in underlying soils, aquifers and riparian zones.
- Increases in nitrate will be coupled to increased aluminum transport in seepage and soil waters.
- Changes to the composition of total P (dissolved vs. particulate) are probable, although the actual concentrations of P may not increase.
- Soil DOC initially decreases due to cessation of root exudate processes until needle drop, after which decomposition causes soil DOC concentrations to rebound.
- There is a potential for increases in both DOC concentration and refractory properties in stream and groundwaters.

As a result of competing hydrologic processes and the inherent variability of natural watershed characteristics and infestations, coupled and indirect water resource effects related to beetle infestation are difficult to predict. It is still necessary to distil the essential climatic, watershed, forest stand, and beetle attack variables that impact overall water supply to support predictive understanding and models for future water management that work across different scales of distance and time.

In addition, detailed studies investigating the impact of watershed flow paths and residence times after a beetle infestation are needed to better understand the link between water quantity and water quality alterations (e.g. Römkens and Salomons 1998) and determine the length of time during which a catchment response to beetle infestations might be expected. If beetle infested watersheds exhibit fractal scaling, then the watersheds have the potential to retain a long memory of past inputs (e.g. Kirchner et al. 2000; Kollet and Maxwell 2008), extending the impact of the bark beetle infestation well beyond what was initially anticipated. Conversely, if beetle epidemics increase preferential flow through the soil in response to increased snowmelt, spatial variability in snowmelt processes, and changes to melt timing, then significant buffering may not occur, and we should expect to see more dramatic changes in surface water composition (Godsey et al. 2010). However, matrix flow is known to often provide significant buffering to rapid changes (Frisbee et al. 2012), which could explain why streamflow alterations post beetle infestation are only observed within certain watersheds. Beetle kill results in temporal and spatial heterogeneity in several hydrologic processes, and this heterogeneity can play a large role in watershed response. Whether a catchment delivers a rapid, concentrated pulse of released nutrients, DOC, and metals to surface waters or a less concentrated but prolonged pulse depends upon a specific catchment’s residence time and dilution capacity, which may or may not mitigate the beetle infestation impacts. Further research is needed to understand the transport and buffering mechanisms that may offset potential water quality effects in infested watersheds.

The potential for perturbations to nutrient cycling and to metal speciation and mobility is unclear, though recent research suggests that shifts may impact downstream ecological and municipal water quality (Frisbee et al. 2012). Research agrees that alterations to N and DOC cycling will occur at the tree-scale (Table 2), although compilations of large-scale responses are uncertain and still emerging in the literature. A better understanding of outcomes requires field evidence as well as a better mechanistic understanding of these integrated and sometimes competing processes, as it has been argued that insect infestations have the potential to affect C cycling on a regional scale (Mikkelson et al. 2013a). This understanding can then be complemented by model development and validation to further explore the potential severity and duration of the impacts of bark beetle infestations on water quality in order to plan for and mitigate potentially adverse outcomes. Timing and transport are additional variables where a strong integration and understanding of traditional hydrological approaches will play an important role in better understanding biogeochemical ramifications. Understanding these water quality and supply alterations is particularly important, as climate change continues to increase the range and severity of insect infestations in coniferous forests throughout the world.

References

Adams HD, Luce CH, Breshears DD, Allen CD, Weiler M, Hale VC, Smith A, Huxman TE (2011) Ecohydrological consequences of drought- and infestation-triggered tree die-off: insights and hypotheses. Ecohydrology 5(2):145–159

Alila Y, Bewley D, Kuras P, Marren P, Hassan M, Luo C, Blair T (2009) Effects of pine beetle infestations and treatments on hydrology and geomorphology: integrating stand-level data and knowledge into mesoscale watershed functions. Natural Resources Canada, Canadian Forest Service, Pacific Forestry Centre, Victoria. Mountain Pine Beetle Working Paper 2009-06

Antoniadis V, Alloway B (2002) The role of dissolved organic carbon in the mobility of Cd, Ni and Zn in sewage sludge-amended soils. Environ Pollut 117(3):515–521

Ayres MP, Lombardero MJ (2000) Assessing the consequences of global change for forest disturbance from herbivores and pathogens. Sci Total Environ 262(3):263–286

Baken S, Degryse F, Verheyen L, Merckx R, Smolders E (2011) Metal complexation properties of freshwater dissolved organic matter are explained by its aromaticity and by anthropogenic ligands. Environ Sci Technol 45(7):2584–2590

Baron JS, Ojima DS, Holland EA, Parton WJ (1994) Analysis of nitrogen saturation potential in Rocky Mountain tundra and forest: implications for aquatic systems. Biogeochemistry 27(1):61–82

Beggs KMH, Summers RS (2011) Character and chlorine reactivity of dissolved organic matter from a mountain pine beetle impacted watershed. Environ Sci Technol 45(13):5717–5724

Bethlahmy N (1974) More streamflow after a bark beetle epidemic. J Hydrol 23(3–4):185–189

Bethlahmy N (1975) A Colorado episode: beetle epidemic, ghost forests, more streamflow. Northwest Science 49(2):95–105

Beudert B (2007) Forest hydrology: results of research in Germany and Russia. IHP/HWRP-Sekretariat, Koblenz

Bewley D, Alila Y, Varhola A (2010) Variability of snow water equivalent and snow energetics across a large catchment subject to Mountain Pine Beetle infestation and rapid salvage logging. J Hydrol 388(3–4):464–479

Biederman JA, Brooks P, Harpold A, Gochis D, Gutmann E, Reed D, Pendall E, Ewers B (2012) Multiscale observations of snow accumulation and peak snowpack following widespread, insect-induced lodgepole pine mortality. Ecohydrology. doi:10.1002/eco.1342

Boon S (2007) Snow accumulation and ablation in a beetle-killed pine stand in Northern Interior British Columbia. BC Journal of Ecosystems and Management 8(3):1–13

Boon S (2009) Snow ablation energy balance in a dead forest stand. Hydrol Process 23(18):2600–2610

Boon S (2012) Snow accumulation following forest disturbance. Ecohydrology 5:279–285

Boyer EW, Hornberger GM, Bencala KE, McKnight DM (1997) Response characteristics of DOC flushing in an alpine catchment. Hydrol Process 11(12):1635–1647

Bricker OP, Jones BF (1995) Main factors affecting the composition of natural waters. CRC Press, Boca Raton

Brooks PD, Williams MW, Schmidt SK (1998) Inorganic nitrogen and microbial biomass dynamics before and during spring snowmelt. Biogeochemistry 43(1):1–15

Christensen JB, Jensen DL, Christensen TH (1996) Effect of dissolved organic carbon on the mobility of cadmium, nickel and zinc in leachate polluted groundwater. Water Res 30(12):3037–3049

Clark GM, Mueller DK, Mast MA (2000) Nutrient concentrations and yields in undeveloped stream basins of the United States. J Am Water Resour Assoc 36(4):849–860

Clow DW, Rhoades C, Briggs J, Caldwell M, Lewis WM Jr (2011) Responses of soil and water chemistry to mountain pine beetle induced tree mortality in Grand County, Colorado, USA. Appl Geochem 26:S174–S178

Coley PD (1998) Possible effects of climate change on plant/herbivore interactions in moist tropical forests. Climatic Change 39(2–3):455–472

Cullings KW, New MH, Makhija S, Parker VT (2003) Effects of litter addition on ectomycorrhizal associates of a lodgepole pine (Pinus contorta) stand in Yellowstone National Park. Appl Environ Microbiol 69(7):3772–3776

Dale VH, Joyce LA, McNulty S, Neilson RP, Ayres MP, Flannigan MD, Hanson PJ, Irland LC, Lugo AE, Peterson CJ (2001) Climate change and forest disturbances. Bioscience 51(9):723–734

Edburg SL, Hicke JA, Lawrence DM, Thornton PE (2011) Simulating coupled carbon and nitrogen dynamics following mountain pine beetle outbreaks in the western United States. J Geophys Res 116(G4):G04033

Edburg SL, Hicke JA, Brooks PD, Pendall EG, Ewers BE, Norton U, Gochis D, Gutmann ED, Meddens AJH (2012) Cascading impacts of bark beetle-caused tree mortality on coupled biogeophysical and biogeochemical processes. Front Ecol Environ 10(8):416–424

Fleming RA (1996) A mechanistic perspective of possible influences of climate change on defoliating insects in North America’s boreal forests. Silva Fennica 30(2–3):281–294

Frisbee MD, Phillips FM, Weissmann GS, Brooks PD, Wilson JL, Campbell AR, Liu F (2012) Unraveling the mysteries of the large watershed black box: implications for the streamflow response to climate and landscape perturbations. Geophys Res Lett 39(1):L01404

Gabler H-E (1997) Mobility of heavy metals as a function of pH of samples from an overbank sediment profile contaminated by mining activities. J Geochem Explor 58(2):185–194

Gelfan AN, Pomeroy JW, Kuchment LS (2004) Modeling forest cover influences on snow accumulation, sublimation, and melt. Journal of Hydrometeorology 5(5):785–803

Geza M, McCray JE, Murray KE (2010) Model evaluation of potential impacts of on-site wastewater systems on phosphorus in Turkey Creek watershed. J Environ Qual 39(5):1636–1646

Godbold DL, Hoosbeek MR, Lukac M, Cotrufo MF, Janssens IA, Ceulemans R, Polle A, Velthorst EJ, Scarascia-Mugnozza G, De Angelis P (2006) Mycorrhizal hyphal turnover as a dominant process for carbon input into soil organic matter. Plant Soil 281(1):15–24

Godsey SE, Aas W, Clair TA, De Wit HA, Fernandez IJ, Kahl JS, Malcolm IA, Neal C, Neal M, Nelson SJ (2010) Generality of fractal 1/f scaling in catchment tracer time series, and its implications for catchment travel time distributions. Hydrol Process 24(12):1660–1671

Griffin JM, Turner MG (2012) Changes to the N cycle following bark beetle outbreaks in two contrasting conifer forest types. Oecologia 170(2):551–565

Griffin JM, Turner MG, Simard M (2011) Nitrogen cycling following mountain pine beetle disturbance in lodgepole pine forests of Greater Yellowstone. For Ecol Manage 261(6):1077–1089

Griffin JM, Simard M, Turner MG (2013) Salvage harvest effects on advance tree regeneration, soil nitrogen, and fuels following mountain pine beetle outbreak in lodgepole pine. For Ecol Manage 291:228–239

Guggenberger G, Glaser B, Zech W (1994) Heavy metal binding by hydrophobic and hydrophilic dissolved organic carbon fractions in a spodosol A and B horizon. Water Air Soil Pollut 72(1):111–127

Hélie JF, Peters DL, Tattrie KR, Gibson JJ (2005) Review and synthesis of potential hydrologic impacts of mountain pine beetle and related harvesting activities in British Columbia. Natural Resources Canada, Canadian Forest Service, Pacific Forestry Centre, Victoria. Mountain Pine Beetle Initiative Working Paper 2005-23

Högberg MN, Högberg P (2002) Extramatrical ectomycorrhizal mycelium contributes one-third of microbial biomass and produces, together with associated roots, half the dissolved organic carbon in a forest soil. New Phytol 154(3):791–795

Hornberger G, Bencala K, McKnight D (1994) Hydrological controls on dissolved organic carbon during snowmelt in the Snake River near Montezuma, Colorado. Biogeochemistry 25(3):147–165

Hubbard RM, Rhoades CC, Elder K, Negron J (2013) Changes in transpiration and foliage growth in lodgepole pine trees following mountain pine beetle attack and mechanical girdling. For Ecol Manage 289:312–317

Huber C (2005) Long lasting nitrate leaching after bark beetle attack in the highlands of the Bavarian forest national park. J Environ Qual 34(5):1772

Huber C, Baumgarten M, Gottlein A, Rotter V (2004) Nitrogen turnover and nitrate leaching after bark beetle attack in mountainous spruce stands of the Bavarian forest national park. Water Air Soil Pollut Focus 4(2):391–414

Jönsson AM, Appelberg G, Harding S, Barring L (2009) Spatio-temporal impact of climate change on the activity and voltinism of the spruce bark beetle, Ips typographus. Global Change Biology 15(2):486–499

Jost G, Weiler M, Gluns DR, Alila Y (2007) The influence of forest and topography on snow accumulation and melt at the watershed-scale. J Hydrol 347(1–2):101–115

Kaiser KE, McGlynn BL, Emanuel RE (2012) Ecohydrology of an outbreak: mountain pine beetle impacts trees in drier landscape positions first. Ecohydrology. doi:10.1002/eco.1286

Kaňa J, Tahovská K, Kopáček J (2012) Response of soil chemistry to forest dieback after bark beetle infestation. Biogeochemistry 113(1–3). doi:10.1007/s10533-012-9765-5

Kim JJ, Allen EA, Humble LM, Breuil C (2005) Ophiostomatoid and basidiomycetous fungi associated with green, red, and grey lodgepole pines after mountain pine beetle (Dendroctonus ponderosae) infestation. Can J For Res 35(2):274–284

Kirchner JW, Feng X, Neal C (2000) Fractal stream chemistry and its implications for contaminant transport in catchments. Nature 403(6769):524–527

Knight DH, Yavitt JB, Joyce GD (1991) Water and nitrogen outflow from lodgepole pine forest after two levels of tree mortality. For Ecol Manage 46(3–4):215–225

Kollet SJ, Maxwell RM (2008) Demonstrating fractal scaling of baseflow residence time distributions using a fully-coupled groundwater and land surface model. Geophys Res Lett 35(7):L07402

Kurz WA, Dymond C, Stinson G, Rampley G, Neilson E, Carroll A, Ebata T, Safranyik L (2008) Mountain pine beetle and forest carbon feedback to climate change. Nature 452(7190):987–990

Love L (1955) The effect on streamflow of the killing of spruce and pine by the Engelmann spruce beetle. Trans Am Geophys Union 36:113–118

Meddens AJH, Hicke JA, Ferguson CA (2012) Spatiotemporal patterns of observed bark beetle-caused tree mortality in British Columbia and the Western US. Ecol Appl 22(7):1876–1891

Mikkelson KM, Dickerson ERV, Maxwell RM, McCray JE, Sharp JO (2013a) Water-quality impacts from climate-induced forest die-off. Nature Climate Change 3:218–222

Mikkelson KM, Maxwell RM, Ferguson I, Stednick JD, McCray JE, Sharp JO (2013b) Mountain pine beetle infestation impacts: modeling water and energy budgets at the hill-slope scale. Ecohydrology 6(1):64–72

Mitchell RG, Preisler HK (1998) Fall rate of lodgepole pine killed by the mountain pine beetle in central Oregon. Western Journal of Applied Forestry 13(1):23–26

Mitton JB, Ferrenberg SM (2012) Mountain pine beetle develops an unprecedented summer generation in response to climate warming. Am Nat 179(5):E163

Molotch NP, Brooks PD, Burns SP, Litvak M, Monson RK, McConnell JR, Musselman K (2009) Ecohydrological controls on snowmelt partitioning in mixed-conifer sub-alpine forests. Ecohydrology 2(2):129–142

Morehouse K, Johns T, Kaye J, Kaye M (2008) Carbon and nitrogen cycling immediately following bark beetle outbreaks in southwestern ponderosa pine forests. For Ecol Manage 255(7):2698–2708

Musselman KN, Molotch NP, Brooks PD (2008) Effects of vegetation on snow accumulation and ablation in a mid-latitude sub-alpine forest. Hydrol Process 22(15):2767–2776

Nikolaou AD, Lekkas TD (2001) The role of natural organic matter during formation of chlorination by-products: a review. Acta Hydrochim Hydrobiol 29(2–3):63–77

Piirainen S, Finér L, Mannerkoski H, Starr M (2004) Effects of forest clear-cutting on the sulphur, phosphorus and base cations fluxes through podzolic soil horizons. Biogeochemistry 69(3):405–424

Pike RG, Feller MC, Stednick JD, Reisberger KJ, Carver M (2010) Chapter 12: water quality and forest management. Compendium of Forest Hydrology and Geomorphology in British Columbia. British Columbia Land Management Handbook, Victoria, pp 410–440

Potts DF (1984) Hydrologic impacts of a large-scale mountain pine beetle (Dendroctonus ponderosae Hopkins) epidemic. J Am Water Resour Assoc 20(3):373–377

Prescott CE (2002) The influence of the forest canopy on nutrient cycling. Tree Physiol 22(15–16):1193–1200

Pugh E, Gordon E (2012) A conceptual model of water yield impacts from beetle-induced tree death in snow-dominated lodgepole pine forests. Hydrol Process. doi:10.1002/hyp.9312

Pugh E, Small E (2012) The impact of pine beetle infestation on snow accumulation and melt in the headwaters of the Colorado River. Ecohydrology 5(4):467–477

Raffa KF, Aukema BH, Bentz BJ, Carroll AL, Hicke JA, Turner MG, Romme WH (2008) Cross-scale drivers of natural disturbances prone to anthropogenic amplification: the dynamics of bark beetle eruptions. Bioscience 58(6):501–517

Reid R (1961) Moisture changes in lodgepole pine before and after attack by the mountain pine beetle. The Forestry Chronicle 37(4):368–375

Rhoades CC, McCutchan JH, Cooper LA, Clow D, Detmer TM, Briggs JS, Stednick JD, Veblen TT, Ertz RM, Likens GE (2013) Biogeochemistry of beetle-killed forests: explaining a weak nitrate response. Proc Natl Acad Sci 110(5):1756–1760

Römkens PF, Salomons W (1998) Cd, Cu and Zn solubility in arable and forest soils: consequences of land use changes for metal mobility and risk assessment. Soil Sci 163(11):859–871

Sauve S, Hendershot W, Allen HE (2000) Solid-solution partitioning of metals in contaminated soils: dependence on pH, total metal burden, and organic matter. Environ Sci Technol 34(7):1125–1131

Schnorbus M, Bennett K, Werner A (2010) Quantifying the water resource impacts of mountain pine beetle and associated salvage harvest operations across a range of watershed scales: hydrologic modelling of the Fraser River Basin. MPBI Project 7.29, Natural Resources Canada, Canadian Forest Service, Pacific Forestry Centre, Information Report BC-X-423, p 64

Stednick JD (1996) Monitoring the effects of timber harvest on annual water yield. J Hydrol 176(1):79–95

Stednick JD, Jensen R (2007) Effects of Pine Beetle Infestations on Water Yield and Water Quality at the Watershed Scale in Northern Colorado. Unpublished technical report. Report to CWRRI, Project 2007CO153B. Available: http://water.usgs.gov/wrri/07grants/progress/2007CO153B.pdf

Stednick JD, Condon KE, Ginter FG (2010) Water quality of Willow Creek as affected by beetle-killed forests. Completion report submitted to Northern Colorado Water Conservancy District Berthoud, CO, 46 pp