Abstract

Climate change is predicted to lead to an increase in extreme rainfall and, in coastal areas, sea-salt deposition events. The impacts of these two climatic extremes on stream hydrochemistry were separately evaluated via a novel watering manipulation at the Gårdsjön experimental catchment, SW Sweden. In summer 2004, a 2000 m2 hillslope draining to a defined stream reach was brought to a high-flow hydrological steady state for a 9 day period by sustained addition of ‘clean’ water using a distributed sprinkler system. Marine ions were then added, to generate a realistic ‘sea salt’ episode. A remarkably well constrained hydrological response was observed, such that a simple two-compartment mixing model could reasonably well reproduce observed conservative tracer (chloride, Cl) measurements, and 78% of added water was recovered in runoff. Stream base cation concentrations and acidity responded predictably to clean water and sea-salt addition, with the former leading to an increase in pH and acid neutralising capacity, and the latter to episodic acidification through hydrogen ion and aluminium displacement from soil exchange sites by marine base cations. Anion responses were less predictable: water addition caused a flush of nitrate, but this was apparently independent of rainfall composition. Sulphate remained near-constant during clean water addition but declined sharply during sea-salt addition, indicative of a strong, pH-dependent solubility control on leaching, presumably adsorption/desorption in the mineral soil. Most strikingly, dissolved organic carbon (DOC) concentrations were stable during clean water addition but varied dramatically in response to sea-salt addition, exhibiting a strong negative correlation with Cl concentrations in water draining the organic soil. 
These observations provide a robust experimental verification of the hypothesis that deposition chemistry, through its influence on acidity and/or ionic strength, has a major influence on DOC leaching to surface waters.

Introduction

The ecological condition of running waters is, to a substantial extent, determined by the severity of physical and chemical extremes. These, in turn, are controlled by climatic factors, such as storm events and droughts. Among the potential consequences of climate change, the frequency and severity of storm events are predicted to increase (IPCC 2007; Pinto et al. 2009). Storm events affect streams directly through increased discharge, and indirectly through changes in water quality; in semi-natural catchments, storm events typically lead to increased flow through surface soil horizons, diluting base cation inputs from weathering and increasing inputs of dissolved organic carbon and/or nitrate from shallow soils. These processes can lead to ecologically damaging episodic acidification, particularly when superimposed on long-term catchment acidification associated with anthropogenically-derived sulphur (S) and nitrogen (N) deposition (Davies et al. 1992; Wigington et al. 1996; Evans et al. 2008a). Additionally, in coastal areas, heavy storms can result in pulses of sea salt deposition, due to entrainment of marine aerosols from breaking waves at high wind speeds. The major impact of marine ion deposition, generally termed the “sea-salt effect”, is the alteration of cation-exchange equilibria between soil and soil water. This is a transient process, in which episodic inputs of marine base cations, primarily sodium (Na) and magnesium (Mg), cause the displacement of adsorbed acid cations, protons (H) and inorganic aluminium (Ali). This in turn can lead to the episodic acidification of runoff (e.g. Wright et al. 1988; Evans et al. 2001). In many areas, the legacy of base saturation depletion due to historic acid deposition means that soils, and therefore surface waters, are likely to remain highly susceptible to sea salt episodes for many years to come (Evans 2005). 
Climate change induced increases in the future severity of high-flow events in general, and sea-salt episodes in particular, therefore have the potential to reduce, or even negate, the benefits of reduced S and N deposition for many aquatic ecosystems.

As acid deposition has decreased in many industrialised regions, widespread increases have been observed in surface water DOC concentrations across many areas of Europe and Northeastern North America (Erlandsson et al. 2008; Monteith et al. 2007; de Wit et al. 2007; Driscoll et al. 2003; Hejzlar et al. 2003; Hruška et al. 2009). DOC production by terrestrial ecosystems is intimately linked to biological activity, and therefore to climatic and other factors influencing productivity, including temperature (e.g. Freeman et al. 2001), nitrogen deposition (e.g. Pregitzer et al. 2001; Braggaza et al. 2006), atmospheric CO2 (Freeman et al. 2004) and drought-rewet cycles (e.g. Worrall and Burt 2004; Clark et al. 2005; Toberman et al. 2008). Transport of this DOC to runoff is in turn influenced by hydrological factors, in particular the proportion of streamflow routed through organic-rich surface horizons (McDowell and Likens 1988), such that DOC concentrations and (in particular) fluxes are in most cases positively correlated with discharge (Worrall and Burt 2007; Erlandsson et al. 2008; Hongve et al. 2004). Finally, there is growing evidence that observed rising DOC trends are causally linked to concurrent decreases in acid deposition (e.g. Monteith et al. 2007; Evans et al. 2006; Clark et al. 2006; Erlandsson et al. 2008). The precise mechanism for this link has not been fully resolved, but appears to be a solubility control on DOC mobility linked to soil solution acidity, aluminium concentration and/or ionic strength (Hruška et al. 2009; de Wit et al. 2007; Monteith et al. 2007). As well as anthropogenically-derived acid deposition, sea-salt deposition also affects all of these variables, and long-term changes in sea-salt deposition have been identified as a significant contributor to DOC variations in long-term studies (Monteith et al. 2007; Evans et al. 2006). 
The impact of sea-salt deposition on DOC leaching on an episodic timescale is, however, not well quantified.

For this study, we separately evaluated the impacts of episodic increases in (a) water input and (b) sea-salt input on runoff chemistry, utilising a unique catchment-scale experimental facility at Gårdsjön, Sweden. A distributed watering system was used to increase water inputs over a 9 day period, first by adding clean water to generate a high flow episode, and then (once the catchment was in hydrological steady state) by adding water spiked with sea salt. Experimentally-induced hydrochemical changes, including acidity, nitrate (NO3), sulphate (SO4) and DOC, were measured in catchment runoff and in hillslope soil solution. The aim of the experiment was to distinguish between the two main hypothesised drivers of event-scale runoff chemistry variations, namely (i) hydrological variation and (ii) precipitation chemistry.

Methods

Study site

Lake Gårdsjön is located at 130 m elevation, 12 km inland on the Swedish west coast (58°04′ N, 12°03′ E), Fig. 1. Since 1979 several small catchments around the lake have been monitored and used for various manipulation experiments to investigate the effects of air pollution, forestry practices and climate, on soils, waters and terrestrial and aquatic organisms (e.g. Andersson and Olsson 1985; Hultberg and Skeffington 1998; Moldan et al. 2006). The 6300 m2 catchment G1 has been monitored since 1988. In 1991 the catchment was covered by a below-canopy plastic roof and for 10 years all precipitation intercepted by the roof was replaced by sprinkling of a “clean” precipitation underneath the roof. The aim of the “roof” experiment was to study the rate and mechanisms of recovery from soil and water acidification. The experiment was completed and the roof removed in 2001, but the monitoring of the site has been continued.

The G1 catchment is covered by a 90 year old conifer forest of Norway spruce (Picea abies (L.) Karst.), with some Scots Pine (Pinus sylvestris) present. The bedrock is gneissic with intermediate to acid chemical composition. Soils are podsolic, with a thick LFH humus layer overlying an eluviated grey E horizon, and a lower mineral BC horizon grading into unaltered compacted glacial till. Both the organic horizon and the B/C horizons are thinner in hillslope areas, and deeper in valley areas (Table 1). Long term annual precipitation measured at the nearby F1 reference catchment is 1140 mm year−1, throughfall water input to forest floor is 750 mm, and runoff is 560 mm (1989–2007). A detailed description of the experimental area is given by Andersson and Olsson (1985) and by Hultberg and Skeffington (1998).

Experimental water addition

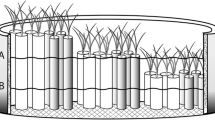

Manipulation was undertaken on a hydrologically discrete area of hillslope in the northwest of the catchment, which drains to the lower part of the stream channel (Fig. 2). The experiment started on July 22nd, 2004 and water was added for 9 days. Because conditions were dry prior to the experiment, with near zero discharge, the remainder of the catchment was hydrologically inactive throughout the experiment. To apply artificial rainfall evenly across the hillslope, thirty four water sprinklers were placed every 5 m over an area of about 1000 m2. In the valley bottom, an additional ca 1000 m2 of the catchment was watered indirectly by the water passing from the irrigated slope towards the catchment outlet. Thus the total slope area affected by the irrigation amounted to 2000 m2. Ambient precipitation during the experiment amounted to 3.2 mm on July 22nd and 2.6 mm on July 23rd–25th. These rains were largely intercepted in the canopy and the water amount reaching the forest floor was too small to cause a detectable runoff response.

A similar experimental design has been used repeatedly at the site over the years for a series of seven hydrological tracer experiments. In all seven campaigns the runoff rate during the high flow ‘steady state’ part of the experiment was lower than the irrigation rate (Lange et al. 1996; Lischeid et al. 2000). On an annual basis, however, there has been a nearly perfect match between 10 years of measured Cl input to the catchment from deposition and sprinkling and Cl output in runoff (Moldan et al. 1998). It has been concluded that under ambient conditions no measurable amount of water leaves the catchment to deep groundwater or bypasses the outlet through cracks in the bedrock. However, during the experiments the water table was strongly elevated in part of the catchment. As a result, the catchment border shifted and part of the water drained across the normal catchment border, and thus did not reach the catchment outlet. The amount of water ‘leaking’ was estimated as the difference between sprinkling rate and discharge rate in the mornings, when evapotranspiration could be assumed negligible (relative to irrigation rate). In the 2004 experiment described here, the steady state input amounted to 0.68 L s−1, and the output showed a diurnal pattern (Fig. 3) due to root water uptake by transpiring trees. Discharge peaked in the mornings at values some 0.10 L s−1 lower than the sprinkling rate. We assume that this difference of 0.10 L s−1 was water drained outside the normal catchment border. Therefore the effective sprinkling rate, i.e. the input of water which drained into the catchment, was estimated at 0.58 L s−1.
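The leakage correction described above is simple arithmetic, and a short sketch may make the unit handling explicit. The function names are illustrative only, and expressing the rate as a depth over the full 2000 m2 affected area is an assumption made here for convenience, not a calculation reported by the authors.

```python
SECONDS_PER_DAY = 86_400


def effective_sprinkling_rate(sprinkling_l_s, leakage_l_s):
    """Water input that actually drains towards the catchment outlet (L s-1)."""
    return sprinkling_l_s - leakage_l_s


def rate_to_mm_per_day(rate_l_s, area_m2):
    """Convert a flow in L s-1 to a water depth in mm day-1 (1 L on 1 m2 = 1 mm)."""
    return rate_l_s * SECONDS_PER_DAY / area_m2


# Values quoted in the text: 0.68 L s-1 sprinkled, 0.10 L s-1 morning shortfall.
effective = effective_sprinkling_rate(0.68, 0.10)
print(f"effective rate: {effective:.2f} L/s")
# Assumption: the depth is spread over the whole 2000 m2 affected area.
print(f"depth equivalent: {rate_to_mm_per_day(effective, 2000.0):.1f} mm/day")
```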

Water pumped from nearby Lake Gårdsjön was used for the experiment. Lake water was mechanically filtered and then de-ionised in cation and anion exchanger columns. For the first phase of the experiment, this ‘clean’ water was used for irrigation. The objective of the water addition was, firstly, to bring a part of the catchment to hydrological steady state, such that artificial rain of constant intensity resulted in a constant discharge rate. Hydrological pathways and residence times were then assumed to be in a steady state. A constant rate of clean water addition was then maintained for a total of 5 days, with the expectation that runoff chemistry would also stabilise to some extent (at least in relation to flowpath changes) within this period.

In the second phase of the experiment, deionised water was mixed with a small quantity of seawater, to simulate a sea-salt deposition event. 500 L of sea water were collected at the coast of Skagerrak, about 20 km from the site. The salinity of the sea water was 22.2 g L−1, equivalent to a chloride (Cl) concentration of 366700 μmol(c) L−1. Since the catchment was already at hydrological steady state and runoff chemistry had at least partly stabilised, chemical changes during this phase of the experiment were considered to be attributable primarily to the effects of the sea-salt addition. Addition was undertaken in two steps: firstly a lower dose, simulating a common “normal” sea salt input, was applied for a 2 day period (Tables 2 and 3). Subsequently, the dose was tripled to simulate high sea salt input. Again, this dose was maintained for 2 days, after which all water addition was halted. Both levels of sea salt addition were chosen based on analysis of Cl concentrations in the long term records of throughfall precipitation at the site (Hultberg and Skeffington 1998).

Sampling protocol

Runoff at the G1 catchment outlet was sampled automatically at a frequency proportional to the flow. Chemical analysis of runoff was performed at IVL Swedish Environmental Research Institute using routine accredited methods: pH was determined by glass electrode, Mg, Na, calcium (Ca), potassium (K), Cl, SO4 and NO3 by ion chromatography. Aluminium was determined colourimetrically by flow injection analysis. Al was speciated into organic (Alo) and inorganic (Ali) fractions using a method adapted from Driscoll (1984). The charge for inorganic Al was assumed to be 3+ (Moldan et al. 1995). DOC was determined using platinum-catalyst, high temperature oxidation using the non-purgeable organic C method. Ionic strength (IS) was calculated from the chemistry of inorganic constituents (mol L−1):

$$IS = \frac{1}{2}\sum_i c_i Z_i^2$$

where c_i is the concentration of ion i and Z_i is its ionic charge.
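As an illustration of the ionic strength calculation, the sketch below assumes the standard definition (half the sum of molar concentration times squared charge) and takes inputs in the charge-equivalent units used elsewhere in the paper, μmol(c) L−1. The function name and the example concentrations are hypothetical and are not measured values from the experiment.

```python
def ionic_strength(ions):
    """Ionic strength in mol L-1.

    `ions` maps an ion name to (concentration in umol(c) L-1, ionic charge).
    Charge-equivalent concentrations are converted to molar concentrations
    by dividing by |charge| before applying IS = 0.5 * sum(c_i * Z_i**2).
    """
    total = 0.0
    for conc_ueq, charge in ions.values():
        molar = conc_ueq * 1e-6 / abs(charge)  # mol L-1
        total += molar * charge ** 2
    return 0.5 * total


# Hypothetical, charge-balanced sample dominated by sea-salt ions.
sample = {
    "Na": (300.0, +1),
    "Mg": (100.0, +2),
    "Cl": (300.0, -1),
    "SO4": (100.0, -2),
}
print(f"IS = {ionic_strength(sample) * 1000:.2f} (IS units*1000)")
```

Because the inputs are charge equivalents, a divalent ion contributes twice as much per equivalent as a monovalent one.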

Apart from runoff water, samples were also collected inside the catchment at the low edge of the watered slope at the sampling point O6 (Fig. 2). At this location a steep outcrop of bare rock makes it possible to collect the dripping water draining the soils in the slope above before it re-enters the soil at the foot of the rock. Four samples collected at O6 were analysed for the full set of determinands above. The rest of the O6 samples were only analysed for Cl.

Mixing model construction

A simple mixing model was constructed to test the assumption that Cl concentrations in runoff could be adequately described by a mixing of pre-treatment soil water with water added during the experiment. Two compartments were considered; the 1000 m2 slope to which water was sprinkled and the 1000 m2 valley, to which the water from the slope drained. The sampling point O6 was taken to represent water draining the hillslope, and catchment runoff to represent the output from the valley. Initial Cl concentrations in pre-experiment soil water were assumed to be 420 μmol(c) L−1, equal to measured concentration in the first runoff collected after the start of the experiment. The amount of soil water in each compartment was manually calibrated (90 mm in the slope and 135 mm in the valley) to fit observed Cl variations in each compartment.
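The source does not give the model equations, so the following is only a minimal sketch of one way a two-compartment mixing model of this kind could be set up. It assumes each compartment is a well-mixed store of fixed size (90 mm slope, 135 mm valley, as calibrated above), that the same water flux passes through both stores, and that the valley receives the slope outflow. The hourly time step, the flux of 2 mm per step and the sea-salt Cl concentrations in the forcing are hypothetical placeholders, not values from the study.

```python
import math


def step_reservoir(conc, conc_in, flux_mm, storage_mm):
    """Exact one-step update of a well-mixed store with constant inflow concentration."""
    return conc_in + (conc - conc_in) * math.exp(-flux_mm / storage_mm)


def run_mixing_model(input_conc, flux_mm_per_step, initial_conc=420.0,
                     slope_storage_mm=90.0, valley_storage_mm=135.0):
    """Return (slope, valley) Cl series in umol(c) L-1, one value per time step."""
    slope = valley = initial_conc
    slope_series, valley_series = [], []
    for conc_in in input_conc:
        new_slope = step_reservoir(slope, conc_in, flux_mm_per_step, slope_storage_mm)
        # The valley receives slope drainage; use the mean slope concentration over the step.
        valley = step_reservoir(valley, 0.5 * (slope + new_slope),
                                flux_mm_per_step, valley_storage_mm)
        slope = new_slope
        slope_series.append(slope)
        valley_series.append(valley)
    return slope_series, valley_series


# Hypothetical hourly forcing: 5 days clean water, 2 days low and 2 days high sea salt.
HOURS_PER_DAY = 24
sprinkling_cl = ([0.0] * (5 * HOURS_PER_DAY)
                 + [300.0] * (2 * HOURS_PER_DAY)
                 + [900.0] * (2 * HOURS_PER_DAY))
slope_cl, valley_cl = run_mixing_model(sprinkling_cl, flux_mm_per_step=2.0)
print(f"end of clean water: slope {slope_cl[119]:.0f}, valley {valley_cl[119]:.0f}")
print(f"end of experiment:  slope {slope_cl[-1]:.0f}, valley {valley_cl[-1]:.0f}")
```

In this formulation the larger valley store responds more slowly than the slope store, which is the qualitative behaviour the calibration is meant to capture.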

Statistical significance of runoff chemistry changes

Pearson correlation was used to evaluate statistical significance of relationships between different solutes. Runoff chemistry time series were divided into three groups: 1. Addition of clean water only; 2. Addition of a low concentration of sea salt; and 3. Addition of a high concentration of sea salt. These groups were tested separately. All statistical analysis was performed with NCSS 2001 (Hintze 2001) software.
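The grouped correlation analysis can be sketched in a few lines of plain Python. This is not the NCSS procedure used by the authors; it is a generic implementation of the Pearson coefficient and its t statistic, and the per-group data shown are invented solely to demonstrate the calling pattern.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two equal-length series."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two series of equal length with at least 3 points")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Hypothetical (Cl, H+) pairs in umol(c) L-1 for the three treatment groups.
groups = {
    "clean water": ([402, 330, 260, 200, 156], [60, 52, 45, 38, 34]),
    "low sea salt": ([160, 175, 190, 210, 230], [34, 36, 39, 41, 45]),
    "high sea salt": ([240, 290, 340, 390, 423], [46, 50, 53, 57, 59]),
}
for name, (cl, h) in groups.items():
    r = pearson_r(cl, h)
    print(f"{name}: r = {r:.3f}, t = {t_statistic(r, len(cl)):.1f}")
```

The t statistic would then be compared against a t distribution with n − 2 degrees of freedom to obtain the p value.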

Results and discussion

Hydrological response

After initial tests and adjustments of the watering system, the watering of the catchment slope resulted in a total water addition of 547 m3 (274 mm, Table 2) over the 9 days, equivalent to 274 mm of rainfall within 2000 m2 of the irrigated area. Due to summer drought, the catchment had near-zero discharge before the experiment. A day before the start of the experiment a 12 mm rain event took place, which was insufficient to cause a discharge response but which conveniently helped to wet the soils. Six hours after the start of watering, discharge began to increase, and by 38 h had reached 90% of the sprinkling rate. At that point, the sprinkling rate was reduced from an initial 0.87 to 0.68 L s−1. Thereafter, discharge was in an approximate balance with effective sprinkling rate (Fig. 3), and the catchment was hydrologically at steady state. The discharge rate of between 0.5 and 0.6 L s−1 (Fig. 3) corresponds to the 98th percentile of long term recorded discharge. At Gårdsjön, once stream discharge is initiated, the capacity of the soil to store additional water is very limited and any additional water input results in rapid discharge rate increase (Nyberg 1995). The change in soil water storage from near-zero discharge at the beginning of the experiment and storage at peak discharge was about 15 mm. This was estimated from the discharge volume which left the catchment in the 2 days between the end of experimental watering and the return of the system to near-zero discharge (Fig. 3). Of the 274 mm of sprinkled water between July 22nd and August 2nd, 31 mm (11%) was drained to outside the catchment, 213 mm (78%) was measured in discharge and ca 30 mm (11%) was lost to evapotranspiration. This compared to approximately 750 mm year−1 of annual average throughfall precipitation at the area, and an annual discharge of 440 mm year−1. A distinct diurnal discharge pattern was observed, with morning maxima and evening minima, due to transpiration by the trees (Fig. 3).
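The water budget figures quoted above can be checked with a short calculation. The sketch below only re-derives the depth equivalent and the percentage shares from the numbers in the text; the function names are illustrative.

```python
def depth_mm(volume_m3, area_m2):
    """Convert a water volume over an area to a depth in mm."""
    return volume_m3 / area_m2 * 1000.0


def budget_shares(components_mm):
    """Express each budget component as a percentage of the total."""
    total = sum(components_mm.values())
    return {name: 100.0 * value / total for name, value in components_mm.items()}


# 547 m3 added over the 2000 m2 irrigated area.
added = depth_mm(547.0, 2000.0)
print(f"water added: {added:.1f} mm")

# Fate of the sprinkled water, in mm, as reported in the text.
shares = budget_shares({
    "drained outside catchment": 31.0,
    "discharge": 213.0,
    "evapotranspiration": 30.0,
})
for name, pct in shares.items():
    print(f"{name}: {pct:.0f}%")
```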

Chloride response and mixing model application

Chloride concentrations in the stream gradually decreased during the clean water addition, from the initial concentration of 402 μmol(c) L−1 to a minimum of 156 μmol(c) L−1 (Fig. 4). Concentrations were still gradually declining when sea salt addition was initiated (Table 3). This reversed the decline, and Cl rose to a peak of 423 μmol(c) L−1 by the end of the high sea-salt addition period. In runoff from the watered slope part of the catchment (O6), Cl fell to 95 μmol(c) L−1 during clean water steady state, and rose to 880 μmol(c) L−1 at the end of high sea-salt addition. Observed Cl variations were reasonably well reproduced by the simple two compartment mixing model (Fig. 4), with calibrated soil water storage of 90 mm in the hillslope and 135 mm in the valley bottom. The correspondence between modelled and observed variations in Cl concentration in runoff from the hillslope and runoff at the catchment outlet suggest that the hydrological interpretation on which the mixing model was based is realistic. The thinner hillslope soils, which received direct water addition from the sprinklers, responded rapidly to changes in rainfall chemistry. Deeper valley soils, receiving water additions indirectly via the hillslope, showed a slower and more damped chemical response (Fig. 4).

Fig. 4 Concentration of Cl in the sprinkling water (dashed line), in the water draining the irrigated slope (open circles) and in runoff (closed circles), and theoretical Cl concentrations produced by mixing of sprinkling water with pre-event water in the slope, and of water draining the slope with soil water in the valley (solid lines)

Sulphate response

Sulphate concentrations remained stable during clean water addition, ranging between 120 and 140 μmol(c) L−1 (Fig. 5). This occurred despite the high water throughput, and a zero concentration of SO4 in added water, which would have led to substantial dilution if SO4 had behaved conservatively. Previous observations from the catchment also indicate that SO4 does not decline significantly during high flows (Moldan et al. 2004). The lack of a decline strongly indicates that there must be large available pools of easily released SO4 in the soils. Mörth et al. (2005) estimated the amount of extractable SO4 in the soil at Gårdsjön to be 407 mmol m−2 in 1990 and 354 mmol m−2 in 1995 after 5 years of clean precipitation treatment (Moldan et al. 2004). The amount of extractable SO4 has not been re-measured since, but given that the roof experiment continued for another 5 years after 1995 and that SO4 deposition in 2004 was some 50–60% lower than in 1990, the extractable SO4 pool in 2004 could be expected to be less than 354 mmol m−2, perhaps less than 300 mmol m−2. The amount of SO4 leached to runoff during the 9 days was 13 mmol m−2, probably less than 5% of the extractable pool. Two possible sources are the desorption of SO4 from the B horizon, and mineralisation of organic S from the humus layer. As observed for NO3 (see below), the dry period prior to the experiment could have led to an accumulation of mineralised S from the organic soils (Torssander and Mörth 1998). However, the complete absence of any episodic SO4 pulse comparable to that observed for NO3 argues against the flushing of a pool of mobile SO4 accumulated through organic S mineralisation, and in favour of an equilibrium-controlled release of SO4 through desorption. Higher observed pH during this phase of the experiment would favour SO4 desorption from mineral soil surfaces (Johnson and Cole 1980), increasing the strength of this buffering process during the clean water addition period. 
Previous work at the Gårdsjön catchment (Mörth et al. 2005) suggests that SO4 desorption plays a relatively modest role in delaying recovery from acidification over the long term as S deposition declines; a conclusion which likely extends to similar glacial soils elsewhere in Scandinavia. Our data suggest, however, that short-term adsorption–desorption of SO4 may play a significant role in buffering runoff chemistry on an episodic timescale.

In marked contrast to the lack of a response to clean water addition, SO4 declined sharply following sea-salt addition, from 125 to 80 μmol(c) L−1. This decline occurred despite the fact that the sea-salt treatment solutions contained 55 and 165 μmol(c) L−1 of SO4 in the low and high sea-salt rain solutions respectively. Concentrations reached a minimum just at the end of the experiment, at which point they were less than half the concentrations in the sprinkling solution. The response clearly cannot therefore be explained by dilution, and the likeliest explanation is that the decrease was associated with an acidity pulse triggered by sea-salt addition (discussed below); episodic soil acidification has been shown to increase SO4 adsorption in the Bs horizons of podzolized soils (Gustafsson 1995). Again, therefore, the observed response to sea-salt addition supports the interpretation that mineral soil SO4 adsorption/desorption has a strong influence on runoff SO4 concentrations at this site. After sea-salt addition ceased, SO4 slowly returned to the pre-experiment level of ca. 120 μmol(c) L−1 within 3 weeks of the end of the experiment.

Nitrate response

Nitrate was not present in runoff at the first stage of watering (Fig. 6), but it increased steeply up to 25 μmol(c) L−1 after 2 days of clean water treatment. Concentrations then decreased gradually to 5 μmol(c) L−1 at the end of the experiment, and finally returned to non-detectable concentrations 5 days after the experiment ended (data not shown). NO3 showed no visible response to the sea salt additions. Long-term yearly weighted NO3 concentrations at the G1 catchment are very low (1–2 μmol(c) L−1, Hultberg et al. 1998), but peaks are occasionally observed in response to intensive rain or snowmelt (maxima of 40–70 μmol(c) L−1 were observed during the winters of 2000 and 2002). The peak observed in this experiment appears to represent a similar hydrologically-driven response, probably enhanced by dry antecedent conditions leading to increased nitrification within catchment soils. The increase of nitrate in runoff during heavy rains or snowmelt is a recognised phenomenon reported in many regions (e.g. Kaste et al. 1997; Oulehle et al. 2008). In normal conditions, NO3 produced within upper soil layers is transported vertically through the soil column by rainwater, and consumed by microbial or root uptake before reaching the stream. Under the very high rate of experimental water input, the soil profile was completely water saturated, near-surface lateral flow dominated, and water residence times were reduced to a few hours, greatly reducing efficiency of NO3 uptake. The subsequent decline of NO3 after 2 days of constant water input is indicative of exhaustion of the soil NO3 pool.

Base cations response

As for Cl and SO4, base cations were removed from the sprinkling water by the ion exchanger for the clean water addition, and added as part of the subsequent sea-salt addition. The temporal response in runoff concentrations was consequently similar to Cl; the sum of base cations (SBC = Ca + Mg + Na + K) decreased from ca. 550 to ca. 350 μmol(c) L−1 during the clean water sprinkling, and increased gradually to ca. 500 μmol(c) L−1 at the end of sea salt addition (Fig. 5). The changes were most pronounced for Na, and to a lesser extent for Mg, the two major base cations in sea-salt. K remained stable at around 8 μmol(c) L−1, while Ca increased slightly, from 20 to 26 μmol(c) L−1, during clean water addition. Ca is the main base cation derived from weathering in the mineral soil, and this response appears to be associated with the peak in NO3 leaching.

The overall increase in base cation concentration from the end of the clean water addition to the end of the sea-salt addition (150 μmol(c) L−1) was smaller than the net increase in [Cl + SO4] of 222 μmol(c) L−1. This provides clear evidence that sea-salt additions had the anticipated effect of altering the cation exchange equilibrium through displacement of non-marine acid and base cations by marine base cations (primarily Na and Mg). The impacts of this process on Acid Neutralising Capacity, acidity and aluminium concentrations are considered in the following section.
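The argument above rests on electroneutrality: any increase in anion charge that is not matched by base cations must be balanced by other cations, here taken to be the acid cations H+ and Ali. A minimal sketch of that bookkeeping, using only the two figures quoted in the text (the function name is illustrative):

```python
def unbalanced_anion_charge(delta_anions, delta_base_cations):
    """Anion charge increase not matched by base cations (umol(c) L-1).

    By electroneutrality this remainder must be carried by other cations,
    interpreted in the text as acid cations displaced from exchange sites.
    """
    return delta_anions - delta_base_cations


# Increases from the end of clean water addition to the end of sea-salt addition.
gap = unbalanced_anion_charge(delta_anions=222.0, delta_base_cations=150.0)
print(f"charge to be balanced by acid cations: {gap:.0f} umol(c)/L")
```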

Acidity, aluminium and ionic strength response

During clean water addition, runoff pH increased from 4.22 to 4.47. This occurred as a result of the more pronounced dilution of strong acid anions than base cations (Fig. 7a). A similar response was manifested by acid neutralising capacity (ANC, calculated as the sum of base cations minus the sum of acid anions) which increased gradually from −24 μmol(c) L−1, and reached +25 μmol(c) L−1 immediately prior to sea salt addition (Fig. 6). Ali concentration decreased from 20 to 5 μmol(c) L−1 during clean water treatment, and IS decreased from 0.44 to 0.23 (IS units*1000). Sea-salt addition effectively reversed all these chemical changes: pH fell from 4.47 prior to the sea-salt addition to a minimum of 4.2, and ANC dropped to −60 μmol(c) L−1 (i.e. an overall decrease of 100 μmol(c) L−1 during a 4 day period with no hydrological change). This was associated with an increase in Ali from 3 to 39 μmol(c) L−1 and with an increase in H+ from 33 to 59 μmol(c) L−1 at the end of sea-salt addition (Fig. 7b). IS increased to 0.45 at the end of the sea-salt addition.

Over the full experimental period, IS and H+ concentration were both strongly correlated with Cl concentration (Fig. 8). Correlations with ANC and Ali were weaker, but still significant (all at p < 0.001). Ali was positively correlated to H+ over the full course of the experiment (p < 0.001), but during sea-salt addition a more statistically significant correlation for Ali was observed with IS (p < 0.001) than for H+ (p < 0.01). This suggests that during the clean water addition, Ali was governed by standard Al–H equilibria, but that during sea-salt addition (for the high dose in particular) incoming Na and Mg effectively displaced Ali from soil cation exchange sites, resulting in the observed Al–IS correlation.

Changes in ANC did not correspond directly to those in hydrogen ion concentration, due to the presence of DOC in the stream. According to dissociation model of Hruška et al. (2003), there were ca. 30–55 μmol(c) L−1 of organic anions (A−, Fig. 7b) present in the stream (with dissociation ranging between 42 and 47%) at the level of DOC 7.5–12 mg L−1. This organic acidity contributes significantly to stream acidity, but has a positive influence on ANC, as reflected in an alternative definition of ANC (units: μmol(c) L−1):

$$\mathrm{ANC} = [\mathrm{HCO_3^-}] + [\mathrm{A^-}] - [\mathrm{H^+}] - [\mathrm{Al_i^{n+}}]$$

Since pH stayed below 4.47 during the experiment (i.e. hydrogen ion concentration always exceeded 35 μmol(c) L−1), Ali always exceeded 5 μmol(c) L−1, and since HCO3 concentration is zero at low pH, ANC would have been continuously negative through the experiment in the absence of organic acids.
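The reasoning in the preceding paragraph can be illustrated numerically. The sketch assumes the commonly used charge-balance form ANC = HCO3 + A− − H+ − Ali, with all terms in μmol(c) L−1; this form is an assumption made here rather than a quotation from the source. Pairing the upper organic anion estimate (55 μmol(c) L−1) with the highest pH is likewise purely illustrative.

```python
def h_from_ph(ph):
    """Hydrogen ion concentration in umol(c) L-1 for a given pH."""
    return 10.0 ** (-ph) * 1e6


def anc_charge_balance(hco3, organic_anions, h, al_i):
    """ANC from the assumed alternative definition (all terms in umol(c) L-1)."""
    return hco3 + organic_anions - h - al_i


# Least acidic conditions in the experiment: pH 4.47 and Ali of 5 umol(c) L-1.
h = h_from_ph(4.47)
anc_no_organics = anc_charge_balance(0.0, 0.0, h, 5.0)
# Illustrative only: upper organic anion estimate combined with the same sample.
anc_with_organics = anc_charge_balance(0.0, 55.0, h, 5.0)
print(f"H+ = {h:.1f} umol(c)/L")
print(f"ANC without organic anions: {anc_no_organics:.1f} umol(c)/L")
print(f"ANC with 55 umol(c)/L organic anions: {anc_with_organics:.1f} umol(c)/L")
```

With bicarbonate and organic anions set to zero, ANC reduces to the negative sum of H+ and Ali, so it cannot be positive at any point where both are present.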

Dissolved organic carbon response

DOC concentrations of 5–10 mg L−1 are typically observed in G1 stream water during base flow conditions (0.1–0.2 L s−1). During high discharge events (about 4 L s−1) DOC commonly increases, up to around 20 mg L−1. In this experiment, DOC showed highly contrasting responses to the two phases of treatment (as noted earlier, due to the summer drought there was no runoff before the start of the experiment). At the onset of clean water addition, runoff DOC concentration was relatively high (14.2 mg L−1). DOC concentrations then stabilised at around 12 mg L−1 for the remainder of the clean water addition. Several days of constant watering effectively saturated the soil. Hydrological flowpaths shifted from mineral soil horizons to more organic-rich upper layers, which enhanced DOC leaching (e.g. McDowell and Likens 1988). The amount of DOC leached out over the 5 days before the onset of sea salt addition was 1.5 g m−2. This compares to an estimated 2–3 g m−2 of potential DOC in shallow upland soils (Haaland and Mulder 2010). The markedly stable DOC concentrations during clean water addition suggest that (like SO4, but unlike NO3), the watering did not simply flush an accumulated DOC pool out of the soil, as observed in some other studies of similar systems (Haaland and Mulder 2010), but rather that a solubility control was in operation. On the other hand streamwater Cl, and consequently IS, declined by about 50% over the same period, while pH increased from 4.2 to 4.35 (Figs. 5 and 6). These observations are seemingly at odds with studies suggesting that DOC solubility is controlled by acidity or IS (Monteith et al. 2007; de Wit et al. 2007; Evans et al. 2006; Hruška et al. 2009). However, streamwater IS, pH and Ali concentrations probably do not fully reflect conditions in the organic horizons from which DOC was predominantly derived (e.g. Oulehle and Hruška 2005; Geisler et al. 1996). 
Whereas runoff chemistry was strongly influenced by the deeper mineral horizon in the valley soils, seepage data from the thin, organic-dominated hillslope soils sampled at the sampling point O6 (Fig. 2) may be a better proxy for organic horizon soil solution. Full chemical characterisation was not undertaken on all O6 samples, but Cl measurements (Fig. 4) suggest that organic horizon soil water chemistry may have stabilised relatively quickly (within 2 days) in response to clean water addition, leading to stable stream DOC over the same period. In addition, any DOC passing through the mineral soil may be affected by anion adsorption–desorption; higher pH may favour desorption of DOC, as was noted earlier for SO4. On the other hand, because DOC and SO4 compete for adsorption sites, desorption of SO4 from the soil during clean rain treatment could have enhanced DOC retention (Zech et al. 1994; Kalbitz et al. 2000). A similar response has been noted over the longer term at the Solling acidity manipulation study in Germany (Evans et al. 2008b). While anion adsorption–desorption provides long-term buffering in older, unglaciated soils, the results of the clean rain addition at Gårdsjön suggest that it can also play a significant short-term buffering role in glaciated catchments, for both SO4 and DOC.
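The area-normalised DOC flux discussed above (1.5 g m−2 over the 5 days of clean water addition) follows from concentration, discharge, duration and area. The sketch below shows that calculation under simplifying assumptions that are not taken from the source: a constant DOC concentration of 12 mg L−1, a constant discharge of 0.55 L s−1 (the midpoint of the reported 0.5–0.6 L s−1 range) and the 2000 m2 affected area. It is therefore an order-of-magnitude check rather than a reproduction of the authors' flux estimate.

```python
SECONDS_PER_DAY = 86_400


def areal_flux_g_m2(conc_mg_l, discharge_l_s, days, area_m2):
    """Solute export per unit area (g m-2) at constant concentration and discharge."""
    mass_mg = conc_mg_l * discharge_l_s * days * SECONDS_PER_DAY
    return mass_mg / 1000.0 / area_m2


# Assumed constant values for the clean water steady-state period.
clean_water_flux = areal_flux_g_m2(conc_mg_l=12.0, discharge_l_s=0.55,
                                   days=5, area_m2=2000.0)
print(f"approximate DOC export: {clean_water_flux:.2f} g/m2")
```

The result is close to the reported figure, which suggests the reported flux is consistent with near-constant concentration and discharge over this period.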

Following the onset of sea-salt addition, DOC began a steady decline, reaching 10 mg L−1 during the period of low sea-salt addition, and falling further to a minimum of 7.5 mg L−1 during high sea-salt addition. Overall, this represents a ca. 40% decline in DOC during 4 days of sea-salt addition at a constant discharge rate, and a total runoff DOC flux of 0.9 g m−2. In contrast to the period of clean water addition, hillslope seepage data suggest that organic horizon water chemistry was changing substantially, and continuously, through the sea-salt addition period (Fig. 6). Taking the entire period of hydrological steady state conditions (i.e. from 24 July onwards, including both clean water and sea-salt addition periods) there is a very strong correlation between stream water DOC and the Cl concentration in hillslope seepage water (p < 0.001, r² = 0.96, Fig. 9). This strongly supports the hypothesis that organic horizon acid–base chemistry is controlling DOC leaching.
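
For readers wishing to reproduce this kind of analysis on their own data, the coefficient of determination for a simple linear relationship between seepage Cl and stream DOC can be computed as sketched below. This is a generic ordinary least-squares calculation, not necessarily the procedure used in the study; the function name is an assumption, and the numbers are hypothetical placeholders rather than measurements from the experiment.

```python
def linear_fit_r2(x, y):
    """Least-squares slope, intercept and r^2 for paired observations."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have equal length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r2 = (sxy * sxy) / (sxx * syy)
    return slope, intercept, r2


# Hypothetical placeholder values, NOT measurements from the study.
seepage_cl = [100.0, 200.0, 300.0, 400.0, 500.0]  # arbitrary concentration units
stream_doc = [12.1, 10.9, 10.0, 8.8, 7.6]         # mg/L
slope, intercept, r2 = linear_fit_r2(seepage_cl, stream_doc)
```

A negative slope with r² close to 1 would correspond to the pattern described above, in which DOC falls as Cl in the organic horizon rises.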

The results of this study provide some insights regarding the drivers of recent, widely-observed increases in surface water DOC concentrations across Northern Europe and parts of North America. The stable DOC concentrations over the period of clean water addition suggest that, at least over the timescale and water flux magnitude of the experiment, DOC concentrations in runoff are not constrained by limitations on DOC supply. On the other hand, the rapid DOC response to changes in precipitation chemistry supports the conclusion of various studies (e.g. Evans et al. 2006; Monteith et al. 2007; de Wit et al. 2007; Hruška et al. 2009; Oulehle and Hruška 2009) that DOC increases are driven by solubility changes resulting from reductions in acid deposition. Our results also clearly demonstrate that runoff DOC concentrations are affected by changes in sea-salt deposition, as proposed by Monteith et al. (2007).

With regard to the mechanisms by which such changes occur, most previous studies have been based on observations of long-term change under ambient conditions, and were thus unable to differentiate between specific mechanisms determining organic matter solubility, in particular the relative importance of pH, Al concentrations and IS. A number of relevant experimental studies have been undertaken, however. The HUMEX field experiment in Norway, in which strong acid loadings were increased, showed a decrease in soil water DOC, associated with a decrease in pH (Vogt et al. 1994). Clark et al. (2006) found that peat pore water DOC decreased along with pH during laboratory simulated drought experiments. It is likely that (at least in mineral soils), increases in acidity are accompanied by increases in inorganic Al concentration, which acts as a coagulant for DOC (Tipping and Woof 1991; De Wit et al. 2001). A few studies have also specifically shown that increasing IS reduces DOC concentrations (Tipping and Hurley 1988; Evans et al. 1988). Our data show a high correlation between DOC and Cl concentration in the hillslope organic horizon (Fig. 9), and a strong correlation between Cl and IS at the catchment outflow (Fig. 8). However, runoff Cl concentration was also strongly correlated with H+, and to a lesser extent with Ali, and it is thus not possible to definitively distinguish between these proposed controls on DOC solubility. In reality, the three proposed drivers are highly interlinked in acidic, mineral soil catchments, and it is perhaps spurious to attempt to distinguish between them in these systems. A different situation might be expected in peats (where Ali is not present) or in more alkaline catchments, where IS can change without concurrent changes in pH or Ali (Hruška et al. 2009). 
In this study, our clear conclusion is that alterations in precipitation chemistry can result in near-instantaneous changes in runoff DOC concentrations, and that these changes appear to be mediated through solubility controls within the organic horizon. This is consistent with the hypothesis that long-term DOC increases are attributable to reducing levels of acid deposition, and that DOC is also affected on an inter-annual timescale by variations in sea-salt deposition.

Conclusions

Sustained, hillslope-scale water addition generated a remarkably well constrained hydrological response, which could be explained by a simple mixing model comprising hillslope and valley soil compartments. Adding ‘clean’ water at a constant rate had a positive effect on the runoff chemistry: over 4 days pH increased from 4.3 to 4.5, Ali decreased from 20 to 5 μmol(c) L−1, and ANC increased from −24 to +25 μmol(c) L−1. A flush of NO3 was observed, but DOC and SO4 both remained remarkably constant once hydrological steady state was achieved. Sea-salt addition, on the other hand, led to a rapid, and prolonged, deterioration of the runoff quality, to biologically toxic levels: Ali increased to 80 μmol(c) L−1, pH decreased to 4.2 and ANC fell to −60 μmol(c) L−1. Sea-salt addition also led to large reductions in both SO4 and DOC concentrations. The behaviour of these solutes suggests strong solubility controls on leaching. SO4 leaching appears to be controlled by pH-dependent adsorption and desorption in the mineral soil, and DOC leaching by acidity and/or ionic strength in the organic soil.
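
The idea of a two-compartment mixing model can be illustrated with the generic sketch below, in which a conservative tracer passes through two well-mixed stores in series. Only the division into hillslope and valley soil compartments comes from the text; the series arrangement, the governing equation, the explicit Euler time stepping and all parameter names are assumptions made for illustration and should not be read as the formulation used in this study.

```python
def two_store_tracer(c_in, flow, v_hillslope, v_valley,
                     c0_hill, c0_valley, dt=0.01):
    """Tracer concentration leaving two well-mixed stores in series.

    c_in: input concentrations, one per time step of length dt.
    flow: constant water flux through both stores (volume per unit time).
    v_hillslope, v_valley: fixed water volumes of the two stores.
    Returns the list of outflow (valley store) concentrations.
    """
    c_hill, c_valley = c0_hill, c0_valley
    out = []
    for c in c_in:
        # Explicit Euler step of dC/dt = (flow / V) * (C_inflow - C)
        new_hill = c_hill + dt * flow / v_hillslope * (c - c_hill)
        new_valley = c_valley + dt * flow / v_valley * (c_hill - c_valley)
        c_hill, c_valley = new_hill, new_valley
        out.append(c_valley)
    return out


# Hypothetical example: dilute input (50) replacing initial soil water (200).
outflow = two_store_tracer([50.0] * 5000, flow=1.0, v_hillslope=1.0,
                           v_valley=2.0, c0_hill=200.0, c0_valley=200.0)
```

With a constant input, the simulated outflow relaxes smoothly from the initial soil water concentration towards the input concentration, at a rate set by the ratio of flow to store volume. The time step must be small relative to V/flow for the explicit scheme to remain stable.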

The results of this study have a number of wider implications for understanding and prediction of surface water quality under changing climate and deposition. They confirm that biologically critical chemical extremes are highly sensitive to climate events, but indicate that this sensitivity is not simply related to the magnitude of precipitation inputs. For example, increased water fluxes will increase DOC leaching to surface waters, but increased sea-salt inputs will have the opposite effect. Acidity, Al and SO4 responses are also highly influenced by rainfall composition. Nevertheless, all of the responses observed appear explicable in terms of current mechanistic understanding, providing encouragement that future hydrochemical extremes may be accurately predicted, and quantitatively modelled, from the magnitude and composition of precipitation inputs.

Finally, we argue that large-scale manipulation experiments such as the one described in this study provide a uniquely valuable means for improving understanding of the biogeochemical response of natural ecosystems. Compared to laboratory and even plot-scale experiments, which tend to minimise heterogeneity within small, replicated and often carefully selected soil units (Schindler 1998), hillslope-scale manipulations capture more of the inherent complexity and heterogeneity of real ecosystems. On the other hand, they can control for some of the multiple sources of variability (in this case short-term hydrological fluctuation) that can confound interpretation of ambient monitoring data. While challenging to maintain, particularly for extended periods, large-scale experimental catchments remain a vital tool for biogeochemical research.

References

Andersson F, Olsson B (eds) (1985) Lake Gårdsjön, an acid forest lake and its catchment. Ecological Bulletins 37, AiO Print Ltd, Odense

Bragazza L, Freeman C, Jones T, Rydin H, Limpens J, Fenner N, Ellis T, Gerdol R, Hájek M, Hájek T, Iacumin P, Kutnar L, Tahvanainen T, Toberman H (2006) Atmospheric nitrogen deposition promotes carbon loss from peat bogs. Proc Natl Acad Sci 103:19386–19389

Clark JM, Chapman PJ, Adamson JK, Lane SN (2005) Influence of drought induced acidification on the mobility of dissolved organic carbon in peat soils. Global Change Biol 11:791–809

Clark JM, Chapman PJ, Heathwaite AL, Adamson JK (2006) Suppression of dissolved organic carbon by sulfate induced acidification during simulated droughts. Environ Sci Technol 40:1776–1783

Davies TD, Tranter M, Wigington PJ, Eshleman KN (1992) ‘Acidic episodes’ in surface waters in Europe. J Hydrol 132:25–69

De Wit HA, Mulder J, Nygaard PH, Aamlid D (2001) Testing the aluminium toxicity hypothesis: a field manipulation experiment in mature spruce forest in Norway. Water Air Soil Pollut 130:995–1000

de Wit HA, Mulder J, Hindar A, Hole L (2007) Long-term increase in dissolved organic carbon in streamwaters in Norway is response to reduced acid deposition. Environ Sci Technol 41:7706–7713

Driscoll CT (1984) A procedure for the fractionation of aqueous aluminium in dilute acidic waters. Int J Environ Anal Chem 16:267–284

Driscoll CT, Driscoll KM, Roy KM, Mitchell MJ (2003) Chemical response of lakes in the Adirondack region of New York to declines in acidic deposition. Environ Sci Technol 37:2036–2042

Erlandsson M, Buffam I, Fölster J, Laudon H, Temnerud J, Weyhenmeyer GA, Bishop K (2008) Thirty-five years of synchrony in the organic matter concentrations of Swedish rivers explained by variation in flow and sulphate. Global Change Biol 14:1–8

Evans CD (2005) Modelling the effects of climate change on an acidic upland stream. Biogeochemistry 74:21–46

Evans A, Zelazny LW, Zipper CE (1988) Solution parameters influencing dissolved organic carbon levels in three forest soils. Soil Sci Soc Am J 52:1789–1792

Evans CD, Monteith DT, Harriman R (2001) Long-term variability in the deposition of marine ions at west coast sites in the UK Acid Waters Monitoring Network: impacts on surface water chemistry and significance for trend determination. Sci Total Environ 265:115–129

Evans CD, Chapman PJ, Clark JM, Monteith DT, Cresser MS (2006) Alternative explanations for rising dissolved organic carbon export from organic soils. Global Change Biol 12:2044–2053

Evans CD, Reynolds B, Hinton C, Hughes S, Norris D, Grant S, Williams B (2008a) Effects of decreasing acid deposition and climate change on acid extremes in an upland stream. Hydrol Earth Syst Sci 12:337–351

Evans CD, Goodale CL, Caporn SJM, Dise NB, Emmett BA, Fernandez IJ, Field CD, Findlay SEG, Lovett GM, Meesenburg H, Moldan F, Sheppard LJ (2008b) Does elevated nitrogen deposition or ecosystem recovery from acidification drive increased dissolved organic carbon loss from upland soil? A review of evidence from field nitrogen addition experiments. Biogeochemistry 91:13–35

Freeman C, Evans CD, Monteith DT (2001) Export of organic carbon from peat soils. Nature 412:785

Freeman C, Fenner N, Ostle NJ, Kang H, Dowrick DJ, Reynolds B, Lock MA, Sleep D, Hughes S, Hudson J (2004) Export of dissolved organic carbon from peatlands under elevated carbon dioxide levels. Nature 430:195–198

Geisler R, Lundstrom US, Grip H (1996) Comparison of soil solution chemistry assessment using zero-tension lysimeters or centrifugation. Eur J Soil Sci 47:395–405

Gustafsson JP (1995) Modelling pH-dependent sulfate adsorption in the Bs horizons of podzolised soils. J Environ Qual 24:882–888

Haaland S, Mulder J (2010) Dissolved organic carbon concentrations in runoff from shallow heathland catchments: effects of frequent excessive leaching in summer and autumn. Biogeochemistry 97(1):45–53

Hejzlar J, Dubrovský M, Buchtele J, Růžička M (2003) The apparent and potential effects of climate change on the inferred concentration of dissolved organic matter in a temperate stream (the Malše River, South Bohemia). Sci Total Environ 310:142–152

Hintze J (2001) NCSS 2001. NCSS, LLC. Kaysville, Utah, USA. www.ncss.com

Hongve D, Riise G, Kristiansen JF (2004) Increased colour and organic acid concentrations in Norwegian forest lakes and drinking waters—a result of increased precipitation? Aquat Sci 66:231–238

Hruška J, Köhler S, Laudon H, Bishop K (2003) Is a universal model of organic acidity possible: comparison of the acid/base properties of dissolved organic carbon in the boreal and temperate zones. Environ Sci Technol 37:1726–1730

Hruška J, Krám P, McDowell WH, Oulehle F (2009) Dissolved Organic Carbon (DOC) in Central European streams is driven by reductions in ionic strength rather than climate change or decreasing acidity. Environ Sci Technol 43:4320–4326

Hultberg H, Skeffington R (eds) (1998) Experimental Reversal of Acid Rain Effects: The Gårdsjön Roof Project. Wiley, England

Hultberg H, Moldan F, Andersson IB, Skeffington RA (1998) Recovery from acidification in the forested cover catchment experiment at Gårdsjön: effects on biogeochemical output fluxes and concentrations. In: Hultberg H, Skeffington R (eds) Experimental Reversal of Acid Rain Effects: The Gårdsjön Roof Project. Wiley, England, pp 157–198

IPCC (2007) Climate Change 2007: Synthesis Report. Intergovernmental Panel on Climate Change, pp 73

Johnson DW, Cole DW (1980) Anion mobility in soils: relevance to nutrient transport from forest ecosystems. Environ Int 3:79–90

Kalbitz K, Solinger S, Park J-H, Michalzik B, Matzner E (2000) Controls on the dynamics of organic matter in soils: a review. Soil Sci 165:277–304

Kaste Ø, Henriksen A, Hindar A (1997) Retention of atmospherically-derived nitrogen in subcatchments of the Bjerkreim River in Southwestern Norway. Ambio 26(5):296–303

Lange H, Lischeid G, Hoch R, Hauhs M (1996) Water flow paths and residence times in a small headwater catchment at Gårdsjön, Sweden, during steady state storm flow conditions. Water Resour Res 32(6):1689–1698

Lischeid G, Lange H, Hauhs M (2000) Information gain by single tracers under steady state and transient flow conditions: the Gårdsjön G1 multiple tracer experiments. In: “TraM” 2000 Conference, 73–77. Red Books 262. IAHS

McDowell WH, Likens GE (1988) Origin, composition and flux of dissolved organic carbon in the Hubbard Brook valley. Ecol Monogr 58(3):177–195

Moldan F, Hultberg H, Andersson I (1995) Covered catchment experiment at Gårdsjön: changes in runoff chemistry after four years of experimentally reduced acid deposition. Water Air Soil Pollut 85:1599–1604

Moldan F, Andersson I, Bishop KH, Hultberg H (1998) Catchment-scale acidification reversal experiment at Gårdsjön, South-west Sweden: assessment of the experimental design. In: Hultberg H, Skeffington R (eds) Experimental reversal of acid rain effects: the Gårdsjön Roof Project. Wiley, New York, pp 85–108

Moldan F, Skeffington RA, Mörth C-M, Torssander P, Hultberg H, Munthe J (2004) Results from covered catchment experiment at Gårdsjön, Sweden, after ten years of clean precipitation treatment. Water Air Soil Pollut 154(1):371–384

Moldan F, Kjønaas OJ, Stuanes A, Wright RF (2006) Increased nitrogen in runoff and soil following thirteen years of experimentally-increased nitrogen deposition to a coniferous-forested catchment at Gårdsjön, Sweden. Environ Pollut 144(2):610–620

Monteith DT, Stoddard JL, Evans CD, de Wit HA, Forsius M, Høgåsen T, Wilander A, Skjelkvåle BL, Jeffries DS, Vuorenmaa J, Keller B, Kopáček J, Veselý J (2007) Dissolved organic carbon trends resulting from changes in atmospheric deposition chemistry. Nature 450:537–540

Mörth C-M, Torssander P, Kjønaas OJ, Stuanes A, Moldan F, Giesler R (2005) Mineralization of organic sulphur delays recovery from anthropogenic acidification. Environ Sci Technol 39:5234–5240

Nyberg L (1995) Soil and groundwater distribution, flowpaths, and transit times in a small till catchment. Comprehensive Summaries of Uppsala Dissertations from the Faculty of Sciences and Technology 97, Acta Universitatis Upsaliensis

Oulehle F, Hruška J (2005) Tree species (Picea abies and Fagus silvatica) effects on soil water acidification and aluminium chemistry at sites subjected to long-term acidification in the Ore Mts., Czech Republic. J Inorg Biochem 99:1822–1829

Oulehle F, Hruška J (2009) Rising trends of dissolved organic matter in drinking-water reservoirs as a result of recovery from acidification in the Ore Mts., Czech Republic. Environ Pollut 157(12):3433–3439

Oulehle F, McDowell WH, Aitkenhead-Peterson JA, Krám P, Hruška J, Navrátil T, Buzek F, Fottová D (2008) Long-term trends in stream nitrate concentrations and losses across watersheds undergoing recovery from acidification in the Czech Republic. Ecosystems 11:410–425

Pinto JG, Zacharias S, Fink AH, Leckebusch GC, Ulbrich U (2009) Factors contributing to the development of extreme North Atlantic cyclones and their relationship with the NAO. Clim Dyn 32:711–737

Pregitzer KS, Zak DR, Burton AJ, Ashby JA, MacDonald NW (2004) Chronic nitrate additions dramatically increase the export of carbon and nitrogen from northern hardwood ecosystems. Biogeochemistry 68:179–197

Schindler DW (1998) Replication versus realism: the need for ecosystem-scale experiments. Ecosystems 1:323–334

Tipping E, Hurley MA (1988) A model of solid-solution interactions in acid organic soils, based on complexation properties of humic substances. J Soil Sci 39:505–519

Tipping E, Woof C (1991) The distribution of humic substances between solid and aqueous phases of acid organic soils; a description based on humic heterogeneity and charge dependent sorption equilibria. J Soil Sci 42:437–448

Toberman H, Evans CD, Freeman C, Fenner N, White M, Emmett BA, Artz RRE (2008) Summer drought effects upon soil and litter extracellular phenol oxidase activity and soluble carbon release in an upland Calluna heathland. Soil Biol Biochem 40:1519–1532

Torssander P, Mörth CM (1998) Sulfur dynamics in the roof experiment at lake Gårdsjön deduced from sulfur and oxygen ratios in sulfate. In: Hultberg H, Skeffington R (eds) Experimental reversal of acid rain effects: the Gårdsjön Roof Project. Wiley, England, pp 185–206

Vogt RD, Ranneklev SB, Mykkelbost TC (1994) The impact of acid treatment on soilwater chemistry at the HUMEX site. Environ Int 3:277–286

Wigington PJ, DeWalle DR, Murdoch PS, Kretser WA, Simonin HA, Van Sickle J, Baker JP (1996) Episodic acidification of small streams in the northeastern United States: ionic controls of episodes. Ecol Appl 6:389–407

Worrall F, Burt TP (2004) Time series analysis of long term river DOC records: evidence for enzymic latch mechanisms? Hydrol Process 18:893–911

Worrall F, Burt TP (2007) Flux of dissolved organic carbon from UK rivers. Global Biogeochem Cycles 21:GB1013

Wright RF, Norton SA, Brakke DF, Frogner T (1988) Experimental verification of episodic acidification of freshwaters by seasalt. Nature 334:422–424

Zech W, Guggenberger G, Schulten H-R (1994) Budgets and chemistry of dissolved organic carbon in forest soil: effects of anthropogenic soil acidification. Sci Total Environ 152:49–62

Acknowledgments

This work was funded in part by the European Commission under the 6th Framework EU project EUROLIMPACS (GOCE-CT-2003-505540); project CZ0051 funded through EEA grant and Norwegian Financial Mechanism; the University of Bayreuth and the Swedish Environmental Protection Agency through research programme Climate Change and Environmental Objectives (CLEO).

Cite this article

Moldan, F., Hruška, J., Evans, C.D. et al. Experimental simulation of the effects of extreme climatic events on major ions, acidity and dissolved organic carbon leaching from a forested catchment, Gårdsjön, Sweden. Biogeochemistry 107, 455–469 (2012). https://doi.org/10.1007/s10533-010-9567-6

DOI: https://doi.org/10.1007/s10533-010-9567-6