Abstract

We analyze the results of a Probabilistic Seismic Hazard Assessment obtained for Saudi Arabia using a spatially smoothed seismicity model. The composite up-to-date earthquake catalog is used to model seismicity and to determine earthquake recurrence characteristics. Different techniques that are frequently used for the analysis of input data are applied in the study. The alternative techniques include the declustering procedures for catalog processing, statistical techniques for estimation the magnitude–frequency relationship (MFR), and the seismicity smoothing parameter. The scheme represents epistemic uncertainty that results from an incomplete knowledge of earthquake process and application of alternative mathematical models for a description of the process. Our calculations that are based on the Monte Carlo technique include also a consideration of the aleatory uncertainty related to the dimensions and depths of earthquake sources, parameters of MFR, and scatter of ground-motion parameter. The hazard maps show that the rock-site peak ground acceleration (PGA) is the highest for the seismically active areas in the north-western (Gulf of Aqaba, PGA > 300 cm/s2 for return period 2475 years) and south-western (PGA > 100 cm/s2 for return period 2475 years) parts of the country. We show that the procedures for catalog declustering have the highest influence on the results of hazard estimations, especially for high levels of hazard. The choice of smoothing parameter is a very important decision that requires proper caution. The relative uncertainty that is ratio between the 85th and 15th percentiles may reach 50–60% for the areas located near the zones of high-level seismic activity.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Probabilistic seismic hazard assessment (PSHA) in terms of strong ground motion parameters is widely used in seismic hazard mapping, development of design codes, retrofit design, and financial planning of earthquake losses (e.g., McGuire 2001, 2004). PSHA determines the frequency of exceeding various levels of ground motion during a specified period of time (Cornell 1968; McGuire 2004). Seismic sources that describe the seismogenic potential of active areas are a necessary input for probabilistic seismic hazard analysis. Three types of seismic sources are used: active geological faults, areal source zones, and smoothed seismicity. The active fault is the preferred model when the necessary parameters of the fault (activity rate, maximum expected magnitude, focal mechanism) are well known. In many cases the seismic activity can not be associated with an active fault and two other types of seismic sources are used in descriptions of seismicity in seismic hazard analysis.

Areal seismic zones are geographical regions that have experienced seismic activity in the past and serve as potential sources of earthquakes in the future. The seismicity characteristics (earthquake recurrence and maximum expected magnitude) in a particular seismic source zone are distinct from those in adjacent zones. It is assumed that the distribution of earthquakes is homogeneous inside the zone. The definition of a seismic source zone is based on the interpretation of the geological, geophysical and seismological data, and analysis of the known seismicity, as well as possible future activity inferred from geology and tectonic structures. The evaluation and interpretation of the various types of available information depend strongly on individual judgment or opinion. At the same time, the characteristics of seismic activity may be particularly uncertain in regions of low-to-moderate seismicity (e.g., Aspinall 2013).

As an alternative to source-zone-based models, the so-called zoneless approach (Frankel 1995; Woo 1996) that is based only on the seismic activity rate obtained from the earthquake catalog is frequently used in seismic hazard studies (e.g., Cao et al. 1996; Lapajne et al. 1997; Peláez Montilla et al. 2003; Chan and Grünthal 2009; Kolathayar and Sitharam 2012; Ramanna and Dodagoudar 2012; Goda et al. 2013; Zuccolo et al. 2013). The observed seismic activity is smoothed around the vicinity of epicenters of past events, and different forms of smoothing functions may be used (see, for example, Stock and Smith 2002, for a review and analysis).

Saudi Arabia has experienced considerable earthquake activity in the past (Poirier and Taher 1980; Ambraseys and Adams 1988; Ambraseys and Melville 1988, 1989; El-Isa and Al Shanti 1989; Ambraseys et al. 1994). Several large and moderate-size earthquakes shook Saudi Arabia and the adjacent territory during the last century, among which the following events should be mentioned: the Sa’dah earthquake of 11 January 1941, MS 6.5 (Ambraseys et al. 1994) and the Gulf of Aqaba earthquake of 22 November 1995, MW 7.3 (Klinger et al. 1999; Roobol et al. 1999). A review of PSHA studies for different regions of the Arabian Peninsula was provided by Zahran et al. (2015). The conventional Cornell-McGuire approach (Cornell 1968; McGuire 2004) and areal seismic source models were applied in almost all the studies. Zahran et al. (2015) used a Monte Carlo technique for PSHA based on a model composed of areal source zones compiled from the corresponding models available in literature. Stewart (2007) considered seismic moment release in small unit cells for estimation of seismic hazard in western Saudi Arabia. The short seismic catalog (1970–2005) was used in the study.

In this paper we analyze results of PSHA obtained for Saudi Arabia using the smoothed seismicity model. To the our knowledge, there is a lack of seismic hazard studies for the area based on the approach. We apply different techniques that are frequently used for processing of input data and calculation of the necessary input parameters. The composite up-to-date seismic catalog is used to model the spatial distribution of seismicity and to determine earthquake recurrence characteristics. We apply two declustering procedures (Gardner and Knopoff 1974; Uhrhammer 1986), two statistical techniques for the estimation of parameters of the recurrence relationship (ordinary least squares and maximum likelihood), and two values of the smoothing parameter (30 and 50 km). These variants represent epistemic or model uncertainty resulted from incomplete knowledge of the physics of the earthquake process and application of alternative mathematical models for a description of the process. We consider in our study different combinations of the alternative procedures and different metrics to measure the uncertainty are used. The analysis allows us to estimate the degree of influence of the procedures on the results of the PSHA based on smoothed seismicity. The results of the work may be used together with the hazard estimations obtained on the basis of areal seismic source zones, and this would allow to compile the up-to-date composite seismic hazard map for the territory.

2 Catalog analysis

The composite catalog used in this study was compiled from several sources. These are:

-

1.

The catalog compiled by National Center for Earthquakes and Volcanoes, Saudi Geological Survey (NCEV SGS). The catalog includes data from the International Seismological Center (ISC) online bulletin (http://www.isc.ac.uk/), the data collected from regional centers, namely the Seismic Studies Center at King Saud University (KSU), King Abdulaziz City for Science and Technology (KACST), and the data collected by the NCEV itself (e.g., Endo et al. 2007).

-

2.

The catalog compiled during a recent study in the framework of the Global Earth Model (GEM) and the Earthquake Model of the Middle East (EMME) projects. The aim of the study was to establish the new catalog of seismicity for the Middle East, using all historical (pre-1900), early and modern instrumental events up to 2006 (Zare et al. 2014).

-

3.

The earthquake data compiled by the Egyptian National Seismological Network (ENSN) up to 2009; besides Egypt, the data include events that occurred in the NW part of the Arabian Peninsula (El-Hadidy 2012).

The composite catalog was compiled for a region spanning from 30° to 60°E and 10° to 35°N and covers the time period from 31 AD up to December 2014. The data from different sources cover different time periods and may overlap each other. The control and removal of duplicate events in the composite catalog was performed after uniform magnitude recalculation and priority was given to events with moment magnitude estimates.

2.1 Uniform magnitude recalculation

The size of earthquakes in the sources mentioned above is expressed by different types of magnitude, namely surface wave magnitude (MS), body wave magnitude (mb), local magnitude (ML), and moment magnitude (MW). As a rule, the uniform magnitude scale of moment magnitude MW is consistent with ground-motion prediction equations (GMPE) and is used in seismic hazard calculation. Therefore, the magnitudes of all events should be converted to the moment magnitude scale and the conversion is usually performed using empirical relationships.

However, the following problems related to the magnitude conversion should be mentioned. Firstly, the empirical equations between different types of magnitude may be developed when these estimates of magnitude are available for the same events. The parameters of a function that relates two variables are usually estimated using the least squares technique (e.g., Rawlings et al. 1998), in which several implicit requirements should be accepted. In so-called ordinary least squares (OLS), it is assumed that the independent (predictor) variables are measured without error and all the errors are in the dependent (response) variables. Strictly speaking, when considering the relation between different magnitude scales (for example, the relation MW–MS), one cannot accept the requirement of a non-error predictor (MS in this case). Therefore, the standard regression procedures based on the OLS technique, in which the uncertainty of the predictor variable is assumed to be relatively small, may not be applicable. The so-called orthogonal regression, or general orthogonal regression (GOR), in which the model errors are distributed over the predictor and response variables, should be used to determine linear relationships between two types of magnitudes (see Castellaro et al. 2006; Castellaro and Bormann 2007; Gutdeutsch et al. 2011; Lolli and Gasperini 2012; Musson 2012; Gasperini et al. 2015, among others). The technique, which is also called as total least squares (TLS), minimizes the sum of the squared perpendicular distances from the data point to a regression line.

Secondly, as it has been mentioned in several studies (e.g., Scordilis 2006; Leonard et al. 2012; Zare et al. 2014) conversion from local magnitude ML to moment magnitude MW requires a consideration of regional magnitude equations, that is, a consideration of different effective magnifications of Wood-Anderson seismographs and distance corrections. Because of the problems arising in magnitude conversion, some researches preferred not to use any conversion, for example Leonard et al. (2012) for Australian seismicity.

Bearing in mind the necessity to consider epistemic or model uncertainty in empirical relationships, in our study for magnitude conversion we use a number of relationships that are available in the literature. We apply several conversion equations for one type of magnitude-predictor with similar weights. Conversion for surface wave magnitude was performed using the relationships provided by Johnston et al. (1994), Ambraseys and Free (1997), Scordilis (2006), El-Hussein et al. (2012), and Zare et al. (2014). For body wave magnitude the relationships from Johnston (1996), Scordilis (2006), Deif et al. (2009), El-Hussein et al. (2012), and Zare et al. (2014) were used. For local magnitude we applied the relationships from Bollinger et al. (1993), Grünthal and Wahlström (2003), and Zare et al. (2014). A description of the relationships is provided in the Electronic Supplement.

The empirical magnitude–conversion relationships have a stochastic nature, therefore we added a random residual value to the averaged moment magnitude values calculated using the conversion relationships. The conversion was performed as follows:

where \(M^{\prime}_{W}\) is the estimate of moment magnitude, \(\overline{{M_{W,\iota} }}\) is the median value of moment magnitude calculated using a particular conversion equation i, n is the number of conversion equations used, \(\delta\) is the random variate, i.e. random draws from a normal distribution with zero mean and unit standard deviation, \(\sigma\) is the accepted standard deviation for uncertainty of the conversion, where \(\sigma\) = 0.2 units of magnitude.

2.2 Catalog declustering

To be used in seismic hazard assessment, the catalog needs to omit duplicate events, aftershocks, and foreshocks, that is, there is a necessity to separate independent earthquakes (or background earthquakes) from earthquakes that depend on each other. The process of separating earthquakes into these two classes is known as “seismicity declustering” (e.g., van Stiphout et al. 2012). For large enough tectonic regions, the subset of independent earthquakes is expected to be homogeneous in time, that is a stationary Poisson process. Seismic swarms, typically caused by magma or fluid intrusions, are a special case that is more appropriately modeled as a strongly non-homogeneous Poisson process. Such a process is characterized by a time-varying rate. An example of the swarms in the Kingdom of Saudi Arabia is the series of earthquakes that occurred in 2009 under the Harrat Lunayyir lava field (Zahran et al. 2009; Pallister et al. 2010; Al-Zahrani et al. 2012). The swarm contained several earthquakes of magnitude 4 or greater including a magnitude 5.4 event that caused minor damage in the town of Al Ays, 40 km to the southeast from the field.

Several methods for catalog declustering have been proposed (see reviews in Zhuang et al. 2002; Console et al. 2010; Luen and Stark 2012; van Stiphout et al. 2012; Marzocchi and Taroni 2014). The most common declustering methods are the mainshock window (e.g., Gardner and Knopoff 1974) and linked declustering (e.g., Reasenberg 1985). A windowing technique is a simple way of identifying mainshocks and aftershocks. For each earthquake in the catalog with magnitude M, the subsequent shocks are identified as aftershocks if they occur within a specified time interval T(M), and within a correspondent distance interval R(M). Foreshocks, in most cases, are treated in the same way as aftershocks. In general, the bigger the magnitude of the mainshock, the larger the windows. The size of spatial and temporal windows may vary from one study (or region) to the other (see examples in van Stiphout et al. 2012; Zare et al. 2014).

In the PSHA practice, the windowing technique proposed by Gardner and Knopoff (1974) is usually applied, because it produces a declustered catalog that resembles a Poisson dataset (van Stiphout et al. 2012). As has been noted by Luen and Stark (2012), if enough events are deleted from a catalog, the remainder will always be consistent with a temporally homogeneous Poisson process. In our study we apply two variants of the windowing techniques, namely those of Gardner and Knopoff (1974) and Uhrhammer (1986) (the respective techniques and publications here referred to as G&K1974 and UHR1986). The sizes of spatial and temporal windows are shown in Table 1, and the total numbers of earthquakes in the declustered catalogs are given in Table 2.

Due to the much smaller sizes of spatial and temporal windows, application of the UHR1986 technique for catalog declustering resulted in the larger number of independent earthquakes with small and intermediate magnitudes than that when applying the G&K1974 technique. Marzocchi and Taroni (2014) suggest that the declustering may lead to significant underestimation of the “true seismic hazard” (see also Boyd 2012), especially for areas of a high level of hazard. Thus the possible variations between declustered catalogs derived using different methods (see also examples in Zare et al. 2014) require analysis of the effect of the chosen method on the results.

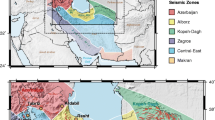

The distribution of earthquake epicenters from the declustered catalogs that was obtained using the UHR1986 technique is shown in Fig. 1 together with the slightly revised scheme of seismic source zones used in our previous study (Zahran et al. 2015).

Maps of epicenters from the declustered catalog (the UHR1986 technique) and the revised scheme of seismic source zones used in our previous study (Zahran et al. 2015). The numbers of the zones correspond to numbers in Table E1 (electronic supplement). a Earthquakes with magnitudes 3 < M ≤ 4, b earthquakes with magnitudes M > 4

2.3 Analysis of completeness of the catalog

An assessment of the catalog completeness is a necessary step in an analysis of seismicity. Several methods have been proposed to assess the magnitude MC above which an earthquake catalogue can be considered as reasonably complete (e.g., Woessner and Wiemer 2005; Mignan and Woessner 2012), or to assign time intervals in which a certain magnitude range is likely to be completely reported (e.g., Stepp 1972; Mulargia et al. 1987; Stucchi et al. 2004). In this work we concentrated on the determination of time intervals of completeness, which is required for the analysis of the Gutenberg-Richter relationship, and two methods are used. The first is the Visual Cumulative Method (VCM), which is based on plots of the cumulative number of seismic events of different magnitude intervals versus time (Tinti and Mulargia 1985; Mulargia et al. 1987). The slope change in the graphs indicates changes of the completeness. It is commonly assumed that the most recent change in slope occurs when the data became complete and the interval with the highest slope is selected. The method has been applied in regional PSHA studies by Deif et al. (2009), Al-Arifi et al. (2013), and Khan et al. (2013). The characteristics of completeness are, theoretically, functions of space (e.g., Wiemer and Wyss 2000), therefore an assessment of completeness in this study has been performed for the whole catalog and for sub-catalogs representing particular regions. The regions were composed based on areal seismic source zones (Zahran et al. 2015) and the density of epicenters. The regions are the following (Fig. 2): Gulf of Aden and Sheba Ridge, western Saudi Arabia, Gulf of Aqaba and the Dead Sea, the Red Sea, and the area that covers seismic zones in the Red Sea, western and northwestern Saudi Arabia, and Jordan (RSSAJR).

Figure 3 presents examples of plots of the cumulative number of earthquakes (CNE) for selected areas and particular magnitude ranges. The apparent flattening of the CNE slope is observed for the relatively small magnitude threshold or for ranges of relatively small magnitudes during the period of the recent few years. The phenomenon reflects the heterogeneity of the compiled catalog caused by different time periods covered by the source catalogs used. For example, the SGS NCEV catalog contains local events up to end of 2014, while the new catalog of seismicity for the Middle East (Zare et al. 2014) and the catalog compiled by El-Hadidy (2012) cover the time period up to 2006 and 2009, respectively. The flattening is less pronounced for the region of western Saudi Arabia that is covered by dense seismic networks.

The second method represents a statistical approach to completeness assessment proposed by Stepp (1972) that relies on the statistical property of the Poisson distribution highlighting time intervals during which the recorded earthquake occurrence rate is uniform. The method consists of a visual check of the parallelism of the cumulative experimental distribution against the Poisson (theoretical) standard deviation curve. Supposing that earthquake occurrences follow a Poisson distribution, the Stepp test evaluates the stability of the mean rate of occurrences (λ) of events which fall in a predefined magnitude range in a series of time windows (T). If \(k_{1} , k_{2} , \ldots , k_{n}\) are the number of events per unit time interval, then an unbiased estimate of the mean rate per unit time interval is \(\lambda = \frac{1}{n}\sum\nolimits_{i = 1}^{n} {k_{i} }\) and its variance is \(\sigma_{\lambda }^{2} = \lambda /n\), where n is the number of unit time intervals. If the rate λ is constant, then the standard deviation σ varies as \(1/\sqrt T\). On the contrary, if λ is not stable, σ deviates from the line of the \(1/\sqrt T\) slope. The length of the time interval at which no deviation from that line occurs defines the completeness time interval for the magnitude range. The method has been applied in regional PSHA studies by El-Hussein et al. (2012) and Mohindra et al. (2012).

Since Stepp’s method is based on a statistical analysis it is sensitive to the peculiarities of seismicity distribution. The dataset used in the method should represent a uniform distribution of seismicity across the study area and it should contain a statistically significant number of events. An analysis of catalog completeness using Stepp’s method is demonstrated in Fig. 4. Spatial heterogeneity of the compiled catalog that is caused by different time intervals covered by the source catalogs results in unstable estimates for the most recent 15–18 years even for small magnitudes. However the stable estimates for relatively small magnitudes may be obtained for short periods of observation when analyzing areas with homogeneous data. The region of western Saudi Arabia may be considered as an example of such an area, because the earthquake catalog for the region is compiled using dense networks.

Analysis of completeness of the catalog using the Stepp’s method, standard deviation of the estimate of the mean of the annual number of events (symbols) as a function of sample length (time interval T) for different magnitude range. The lines show \(1/\sqrt T\) behavior for particular magnitude ranges. a All data. b Particular areas

The insufficient number of recorded events with magnitudes more than 5.5–6.0 does not allow us to obtain stable estimates even for the whole composite catalog. Therefore, in this work for the determination of the period of completeness we used mostly the visual cumulative method; Stepp’s technique was applied to constrain the uncertainty related to selection of the points of the beginning of the highest slope. Table 3 provides estimates of completeness periods for different magnitude ranges and regions. A comparison of the estimates with the results of previous studies can be made only when the analyzed catalogs cover similar areas. The results obtained by Deif et al. (2009) and those for the RSSAJR region in this study may be considered to be examples of such correspondence, and the comparison is shown in Table 3.

2.4 Determination of parameters of the magnitude–frequency relation

A recurrence relation, that is, the relation between the frequency and the magnitude of the earthquakes, is estimated from the earthquake catalog provided that the data sample is characterized by time homogeneity and completeness. The most widely used form is the so-called Gutenberg-Richter (or magnitude–frequency) log-linear relation (Gutenberg and Richter 1956)

It may be interpreted either as being a cumulative relationship (N is the number of earthquakes of magnitude m or greater) or as being a density law (non-cumulative, N is the number of earthquakes in a certain small magnitude interval around m, i.e. \(m \pm\Delta m\)) (Herrmann 1977). Parameter a is the activity and defines the intercept of the relationship at m equals zero. The parameter b is the slope, which defines the relative proportion of small and large earthquakes. Many modifications of the magnitude–frequency relation have been proposed to represent observed peculiarities of earthquake recurrence (e.g., Tinti et al. 1987; Utsu 1999). The cumulative relationship \(N\left( { \ge m} \right)\) in a standard (Cornell–McGuire) PSHA is described by a doubly bounded (between arbitrary reference magnitude \(M_{min}\) and upper-bound magnitude \(M_{max}\)) exponential distribution (e.g., Cornell and Vanmarcke 1969) in the following way:

where \(\alpha = {\text{N}}\left( {{\text{M}}_{\hbox{min} } } \right)\), \({\text{M}}_{ \hbox{min} }\) is an arbitrary reference magnitude, \({\text{M}}_{ \hbox{max} }\) is an upper-bound magnitude, \(\beta = b \times \ln \left( {10} \right)\). This equation results in the earthquake frequency approaching zero for the upper-bound magnitude.

The ordinary least squares (OLS) and maximum likelihood (ML) (Aki 1965; Utsu 1965; Weichert 1980; Bender 1983; Kijko and Smit 2012) techniques are frequently used for estimation of the parameters a and b, while other methods have also been suggested (e.g., Musson 2004; Leonard et al. 2014; Wang et al. 2014). The question as to which technique is preferable has been widely discussed (e.g., Weichert 1980; Marzocchi and Sandri 2003; Bender 1983; Leonard 2012; Mohamed et al. 2014). The maximum likelihood method places a greater weight on numerous small events and therefore it is sensitive to uncertainties in the catalog completeness at small magnitudes and in magnitude estimates. The least squares method can be sensitive to off-trend extreme events (outliers) (e.g., Leonard 2012; Mohamed et al. 2014). It has been noted, however, that in regions of low activity, the least squares method may provide more reliable recurrence rates of large events than those obtained using the maximum likelihood method (Mohamed et al. 2014).

We analyze the cumulative magnitude–frequency relation that provides smooth curves when the data are sparse. Both OLS and ML techniques are applied, and the relations were estimated for the whole study area and for particular regions. The numerical procedure suggested by Weichert (1980) allows consideration of varying levels of catalogue completeness. The procedure is frequently used in seismic hazard analysis and it was selected here for the case of ML estimations. The minimum magnitude to be considered in the estimates varies from 4.0 to 4.5. The values of maximum magnitude assigned to the areal zones are taken from Zahran et al. (2015). Examples of the magnitude–frequency plots and the log-linear relations (doubly bounded exponential distribution) are shown in Fig. 5. The estimated values of coefficients b are listed in Tables E1 and E2 (Electronic supplement). In general, the data from the UHR1986 catalog reveal higher b-values (i.e. steeper slope of the magnitude–frequency relation) than the data from the G&K1974 catalog because the former contains a larger number of smaller earthquakes (see Table 2). The ML technique resulted in slightly higher earthquake rates than those obtained by the OLS technique.

Cumulative magnitude–frequency relations for particular regions shown together with b-values estimated using ordinary least squares (OLS, black) and maximum likelihood (ML, red) techniques. The solid lines denote the doubly bounded exponential magnitude–frequency plots obtained using OLS (line 1) and ML (line 2) techniques

In this study we do not determine parameters of the magnitude–frequency relation for the central part of Arabian Peninsula. As mentioned by Aldama-Bustos et al. (2009), the Arabian plate is a stable landmass that does not exhibit any discernable trace of interior deformation during the late Tertiary (e.g., Vita-Finzi 2001). The interior of the Arabian plate is also not known to have experienced any significant seismic events over the past 2000 years and it may be regarded as a stable cratonic region (e.g., Stern and Johnson 2010). The area was considered as aseismic in most recent studies (Thenhaus et al. 1989; Al-Haddad et al. 1994; Pascucci et al. 2008; Al-Arifi et al. 2013).

However, several earthquakes have occurred in the northern part of the Arabian plate. Many small events are concentrated within a relatively small area in the Eastern province of Saudi Arabia, in the Al-Ghawar anticlinal structure. Fnais (2011) suggested that the origin of these earthquakes is due to the extraction of oil and recent tectonic activity in the area. Some parts of the Arabian plate have been considered as active seismic source zones in several studies. Deif et al. (2009) assigned a maximum magnitude of 4.9 with b = 0.94 to the western part of the area. Al-Amri (2013) divided the Arabian plate into separate parts and assigned maximum magnitudes from 4.7 to 5.4 and b-values from 0.58 to 0.96 to the sections. Khan et al. (2013) considered the southwestern part of the Arabian plate (including the Arabian Gulf) as a seismically active area with Mmax 6.7, however with very low activity rate (α = 0.116 for Mmin 4.0, b = 0.502). Thus, a careful analysis of the location and magnitude of events that have occurred in the area, as well as the tectonic setting, should be performed to determine the characteristics of seismic source zones to be used in an updated seismic hazard assessment. In this study we use b = 0.94 and maximum magnitude 5.5 for the central part of the Arabian Peninsula.

3 Spatial distribution of seismicity

The so-called zoneless approach to probabilistic seismic hazard assessment implies smoothing of the observed seismic activity around the vicinity of epicenters of past events. In the method of Frankel (1995), the area of interest is subdivided into a grid of cells and characteristics of seismicity (e.g. cumulative number of events \(n_{i}\)) are estimated inside each cell \(i\). The Gaussian distribution function is used to smooth the values from each cell as follows:

where \(\hat{n}_{i}\) is the smoothed number of earthquakes in the i-th cell, \(n_{j}\) is the number of earthquakes in the j-th grid cell, c is parameter of the smoothing function (correlation distance), and \(\Delta _{i,j}\) is the distance between the i-th and j-th cells. The smoothing operator practically does not take into account cells located at distances more than 3c.

In the Kernel smoothing method (Woo 1996), the kernel function \(K(M,r)\) is introduced as a smoothing operation, and the contribution of each earthquake to the seismicity of region is a magnitude-dependent parameter. The mean activity rate \(\lambda (M,r)\) for a given magnitude M and point x is written as a kernel sum over the historical dataset of N events, in which the contribution of each event i located at point \(x_{i}\) is inversely weighted by its effective observation period \(T(x_{i} )\), that is:

The kernel function is most frequently used in the following form (Vere-Jones 1992):

where \(r\) is the horizontal distance from an epicenter of i-event, \(\gamma\) is the smoothing parameter, and \(H_{m}\) is the bandwidth function that can be expressed as \(H_{m} = a \times \exp(bm)\) with regional parameters a and b that depend on the spatial distribution of earthquake epicenters. Note the values of regional parameters are estimated using empirical data, that is, the least square fit between the nearest event distance versus magnitude, and the individual data points scatter significantly (Goda et al. 2013). Therefore it is suggested to consider the scatter using additional bandwidth functions (upper and lower) obtained using simple statistics of individual data points. Stock and Smith (2002) analyzed the performance of different forms of smoothing functions and showed that the Gaussian smoothing provided better estimates of future earthquake activity.

In this study we used the Frankel (1995) method (Eq. 4). The following scheme was applied to eliminate the influence of uncertainties in the estimation of magnitude for historical and instrumentally registered earthquakes and in the evaluation of periods of completeness. We divided the entire area (30°–60°E, 10°–35°N) into a grid spacing of 0.2° × 0.2°. The cumulative earthquake rates \(a_{{i,j,M \ge m_{j} }}\) (i.e. annual frequency of events with magnitude M equal to or greater than cutoff magnitude \(m_{j}\)) were calculated within every cell i for \(N_{m}\) values of cutoff magnitudes \(m_{j}\), namely \(m_{j}\) 4.0, 4.25, 4.50, and 4.75. The smoothing operator (Eq. 5) was applied for every of these four variants of earthquake rate distribution. Then the smoothed rates \(\hat{a}_{{i,j,M \ge m_{j} }}\) were converted to the zero magnitude level \(a_{i,j,M \ge 0 }\) as \(a_{i,j,M \ge 0} = a_{{i,M \ge m_{j} }} + b_{i} m_{j}\), where \(b_{i}\) is the b-value of the G–R relation assigned to the given cell i. Obviously it is not possible to estimate the b-value for every cell separately, therefore we used the area-dependent b-values calculated for different seismic source zones (Table E1 in the Electronic Attachment); the configuration of the zones are shown in Fig. 1. For the central part of the Arabian Peninsula, b = 0.95 is used (Deif et al. 2009; Al-Amri 2013). The correspondent seismic source zone is determined for the given cell and the b-value is assigned to the cell accordingly. Finally, the average smoothed rate \(\bar{a}_{i,M \ge 0}\) is calculated as

where \(w_{j}\) is the weight assigned to every cutoff magnitude.

The activity rate estimated for a seismic source is one of the main inputs for PSHA. However several factors may introduce a considerable level of uncertainty to the estimates, such as the conversion to uniform magnitude scale (Musson 2012b), the technique used for declustering that may either remove mainshocks or may consider dependent events as independent earthquakes, and the methods used for estimating the recurrence parameters (e.g., Leonard et al. 2014). In our study we use two variants of activity rate estimates, namely activity rates estimated using an ordinary least squares technique (OLS), and activity rates estimated using the maximum likelihood technique (ML).

What correlation distance should be selected for seismic assessment is a rather subjective decision and different criteria may be used for the selection (e.g., Cao et al. 1996; Lapajne et al. 1997; Barani et al. 2007). Larger correlation distances may lead to hiding of details of seismicity, whereas smaller values may result in an excessively fragmented patterns. On the other hand, the correlation distance, if a function of the grid size, should be larger than or at least equal to the size of grid. A sensitivity analysis is required to analyze the influence of the parameter on the results of the seismic hazard assessment. Unfortunately, many papers related to PSHA based on smoothed seismicity do not contain information about the used value of correlation distance and, that is more, the sensitivity analysis was not performed. Frankel (1995) used correlation distances of 50 and 75 km depending on input data (duration of catalogs). Lapajne et al. (1997) provided an example of the analysis for Slovenia: the correlation distance for different models of seismicity varied from 17 to 35 km.

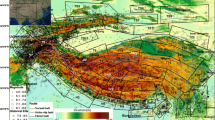

Examples of the distribution of annual rates \(\bar{a}_{M \ge 0}\) obtained using different correlation distances c (Eq. 4) are shown in Fig. 6. Note that the schemes represent seismic activity considering all possible earthquakes with magnitudes \(M \ge 0\). The level of seismic hazard, besides the activity rates, depends on the relative proportion of small and large earthquakes (i.e. b-value in the magnitude–frequency relation) and maximum possible magnitude. Thus, for example, the zones of apparently high level of \(M \ge 0\) activity that are located inside the Arabian plate may not correspond to areas of high seismic hazard.

Spatial distribution of smoothed seismicity, logarithm of annual number of events with magnitude \(M \ge 0, \log_{10} \left( {N_{M \ge 0} } \right),\) for areas 0.2° × 0.2° and considered values of correlation distance (Eq. 4) c = 30 km (a) and c = 50 km (b). The catalog was processed using the UHR1984 declustering method, and OLS technique was used for estimation of the G–R relation. National borders approximate

4 Probabilistic seismic hazard assessment

4.1 The basics of PSHA

The standard probabilistic seismic hazard assessment (e.g., McGuire 2004; Abrahamson 2000; Kijko 2011) is based on the evaluation of an annual frequency of exceedance (AFE) \({ \gamma }\) of a ground motion parameter y, that is \({{\upgamma}}({\mathbf{Y}} > y)\). The return period (or more precisely “the mean return period”) \({T}_{{\gamma}}\) is defined as the reciprocal of the annual frequency of exceedance, that is \({T}_{{\gamma}} = 1/{\gamma}\). The term “return period” is more frequently used in seismic hazard assessments than the term “annual frequency of exceedance” because of convenience and usability. For Poisson occurrence of earthquakes in time, the probability of observing at least one exceedance of the given ground motion level \(A_{0}\) in time interval t, that is \({P}_{{t}} [{A} > {A}_{0} ]\), is related to annual frequency \({\gamma}\) as:

The correspondent return period of such event is defined as:

For t = 1 year and small enough value \({ \gamma }[{A} > {A}_{{o}} ]\), the annual probability of at least one exceedance is numerically almost equal to the annual frequency of exceedance. Note, however, that these quantities have different dimensions. Thus, a PSHA result is represented by the frequency of exceedance, the probability of exceedance, and the return period. A plot showing the calculated annual frequencies of exceedance or the annual probabilities of exceedance for different levels of ground motion parameter is referred to as “hazard curve”.

The design seismic action Y 0 is associated with a reference probability of exceedance \({P}_{{{te}}} [{Y} > {Y}_{{o}} ]\) during the finite time period te (the exposure period). Several design levels may be defined in seismic codes. For example, if the ordinary structure (OS) is designed and constructed to withstand the design seismic action without local or global collapse (e.g. Eurocode 8, CEN 2004), the recommended values for reference probability POS is 10% in a 50-year exposure period of engineering interest, the correspondent return period (Eq. 8) is \(T_{OS} = 475\) years, and the annual frequency of exceedance is hence \({\gamma}_{{{OS}}} = 0.002105\). Note that for essential or hazardous facilities (EHF) the collapse prevention requirement may correspond to P EHF = 2% in a 50-year exposure period of engineering interest, the return period \(T_{EHF} = 2475\) years, and the annual frequency of exceedance is \({\gamma}_{{{EHF}}} = 0.000404\). The reference probability of 2% in a 50-year exposure period may be also considered for ordinary structures in low- and moderate-seismicity regions (e.g., in Saudi Arabia, SBC-301-2007; see also discussion in Tsang 2011), as well as for the almost whole of the U.S. (NEHRP 2004); the corresponding level of ground motion is called as probabilistic maximum considered earthquake (MCE) ground motion.

4.2 Monte Carlo approach to PSHA

Monte Carlo simulation is widely applied for probabilistic seismic hazard analysis (e.g., Ebel and Kafka 1999; Musson 1999, 2012a; Smith 2003; Weatherill and Burton 2010; Sokolov and Wenzel 2011; Assatourians and Atkinson 2013; Atkinson and Goda 2013). The method consists of two steps: (1) generation of a long-duration stochastic catalog of earthquakes (or a number of stochastic sub-catalogs that is more convenient computationally) for given seismic source parameters (geometry, maximum magnitude, earthquake recurrence, hypocentral depth), and (2) calculation of ground motion at selected sites of the study region using all earthquakes from the stochastic catalogs. Simple statistical data analysis is followed to develop seismic hazard curves. Through multiple repetitions of this process, uncertainties regarding different source models, maximum magnitude, parameters of the magnitude–frequency relationships, and choice of GMPEs can be easily incorporated.

The stochastic earthquake catalog represents the seismic process described by the input parameters used and considers uncertainty in the parameters. It is compatible with knowledge about regional seismicity and relations between ground motion parameters and the characteristics of particular earthquakes. On one hand, the duration of the synthetic catalog (or the sub-catalogs) should be long enough to include several events of maximum possible magnitude, as defined by the corresponding magnitude recurrence. On the other hand, the number of sub-catalogs and, correspondingly, the total number of simulated years \(T_{tot}\), should ensure a statistically reliable determination of the annual frequency of exceedance for high amplitudes of ground motion (i.e., for very low probability of exceedance) (e.g., Crowley and Bommer 2006; Musson 2012a).

The seismic source model describes the spatial and temporal distribution of earthquakes in a region. Each epicenter within the source zone is determined randomly assuming that any location within the source zone has an equal probability of being the epicenter of the next earthquake. The depth of the given earthquake source is also generated randomly considering the possible depth distribution. The number of earthquakes with a particular magnitude is determined against the magnitude–frequency distribution for that source zone. For each seismic event of stochastic catalogs the distribution of ground motion is calculated using the specified ground-motion prediction equations (GMPE) and corresponding random error considering the between-earthquake variability for the event, the within-earthquake variability at each site, and, if necessary, the ground-motion correlation (e.g., Sokolov and Wenzel 2013).

The hazard rates, that is, the annual frequency of exceedence \(\gamma_{t = 1} \,\left[ {A > A_{0} } \right]\) at the individual points are computed from the statistics of the simulated earthquakes. The rates were calculated by counting the number \(N(A > A_{0} )\) of ground-motion values A exceeding particular values A 0 in relation to the specified time interval \(T_{tot}\) (total duration of stochastic catalogs). If \(T_{sub}\) is the duration of every sub-catalog and the number of sub-catalogs is \(N_{cat}\), then the total duration is \(T_{tot} = T_{sub} \times N_{cat}\). Thus, the annual frequency \(\gamma_{t = 1} \,\left[ {A > A_{0} } \right]\) is determined as \(\gamma_{t = 1} \,\left[ {A > A_{0} } \right] = N(A > A_{0}^{{}} )/T_{tot}\).

4.3 The technique of PSHA based on smoothed seismicity and Monte Carlo approach

The Monte Carlo technique is applied in this study. The synthetic catalogs are generated separately for different combinations of the basic models for input data. The basic models are constructed as follows. Firstly, two declustering procedures (G&K1974 and UHR1986) that are based on the Gardner and Knopoff (1974) and the Uhrhammer (1986) spatial and temporal windows are applied for the composite catalog. Secondly, two statistical techniques, namely: ordinary least squares (OLS) and maximum likelihood (ML) are used for estimating the parameters of the magnitude–frequency relations (a and b-values) from the declustered catalogs. The parameters were determined for every areal seismic source zone (Fig. 1) and they are listed in Tables E1 and E2. Besides these catalog- and zone-based parameters, we consider a so-called “zero variant”, in which the uniform b-value equal to 1.0 is applied for the whole territory. Thirdly, two values of correlation distance c (Eq. 4), 30 and 50 km, are used in the seismicity smoothing procedure. The grid 0.2° × 0.2° is accepted for analysis of seismicity that corresponds to square sides between 19 and 22 km. Thus, the selected values of correlation distance are larger than the size of the grid, and the lowest value of 30 km is approximately equal to the diagonal distance between cells. As the result, twelve synthetic catalogs were generated. These variants represent the epistemic or model uncertainty and the structure of the corresponding logic tree is shown in Fig. 7.

Every variant of the synthetic catalogs is represented by 100 sub-catalogs with a duration each of 20,000 years that resulted in a total duration of 2,000,000 years. The sub-catalogs are generated as follows. As described above, every cell of a grid with 0.2° × 0.2° spacing is characterized by three parameters, namely the average annual frequency of events with magnitude M > 0 (parameter a), the slope of the magnitude–frequency relation (parameter b), and the maximum magnitude \({\text{M}}_{ \hbox{max} }\). Usually the uncertainty in b-values and in the maximum magnitude is considered via logic tree branches. The b-value uncertainty may be caused by uncertainty in magnitude (e.g., Musson 2012b), for example due to the application of empirical magnitude conversion equations, uncertain completeness times for different magnitude ranges, and, when using the Maximum Likelihood technique (e.g., Weichert 1980), by the uncertainty in maximum magnitudes. Thus, it seems that both the b-value and maximum magnitude should be considered as random variables. Musson (2004) suggested the use of Monte Carlo simulation to find credible values of the activity rate, slope of the magnitude–frequency relationship, and maximum magnitude.

In our study, the standard errors of the b-value that were estimated by regression procedures are used when counting the number of earthquakes in a given cell, and the normal distribution of the errors is accepted following Chatterjee and Hadi (2006). For every sub-catalog, the b-value is generated randomly considering the mean value estimated from magnitude–frequency relation plus the random residual value obtained via sampling from the normal distribution that is characterized by the corresponding standard errors (Tables E1 and E2 in the Electronic Supplement). Note that the constant b-value (b = 1.0) is used for all sub-catalogs when considering the “zero variant”. The maximum magnitude values should also be considered as random variables, however in this study we do not use that option. The parameters assigned to a given cell are used for determination of the number of earthquakes with magnitudes M ± 0.125 that may occur within the cell. It is assumed that any location within the cell has an equal probability of being the epicenter of the next earthquake. The depth of a given earthquake source is generated randomly considering a uniform depth distribution between 5 km and 25 km. Each sub-catalog has a fixed combination of input parameters (magnitude–frequency parameters and maximum magnitude). Examples of the synthetic sub-catalogs are shown in Fig. 8 (the models UHOLS30 and UHOL50).

Note, that the total number of simulated years, or total duration of catalog, should ensure statistically reliable determination of annual frequency of exceedance for high amplitudes of ground motion (i.e. for a low and very low probability of exceedance) (e.g., Crowley and Bommer 2006; Musson 1999, 2012a). At the same time, duration of a sub-catalog should be long enough to contain several maximum magnitude events. The number of sub-catalogs should allow reliable representation of accepted continuous distributions of random input parameters. Thus, the selection of a set of stochastic catalogs may represent a source of epistemic or model uncertainty and corresponding sensitivity analysis is needed. On the other hand, a large number of long sub-catalogs requires a huge amount of computing resources and the calculations may be extremely computationally expensive. In this study, due to technical reasons, we do not perform sensitivity analysis related to the duration and number of sub-catalog assuming that the accepted characteristics (100 sub-catalogs with a duration each of 20,000 years) meet the requirements.

The seismic hazard analysis typically uses multiple GMPEs deemed applicable to the region or the site of interest; the alternative GMPEs represent epistemic uncertainties in the median motions. As noted by Zahran et al. (2015), seismic hazard assessments for Arabian Peninsula were performed using alternative ground-motion models for various tectonic provinces: the active shallow crustal sources, the stable regions and extensional zones. In this work we consider the same scheme as used by Zahran et al. (2015) selecting ground motion prediction equations according to the tectonic regime associated with the earthquakes in each source zone. The preference is given to the recently developed GMPEs and to the GMPEs based on large amount strong-motion data, i.e. so-called Next Generation of Ground Motion Attenuation Models, NGA (Power et al. 2008). In seismic hazard assessment for important facilities, e.g. nuclear power plants, another technique for selection of appropriate GMPEs is suggested (e.g. Atkinson et al. 2014; Bommer et al. 2015). The goal of the approach is to use small number of GMPEs (for example, three equations) and create multiple versions of each equation to obtain desired distribution. The approach may require additional information about seismological characteristics of the region (e.g. shear wave crustal profiles) and for GMPEs developed with the stochastic method it is necessary to perform numerous simulations using sampled ranges of the stochastic source and path parameters. The necessary data may not be available in the studied region, therefore in our regional estimations we use “traditional” multiple-GMPE approach.

For peak ground acceleration, the models of Zhao et al. (2006), Boore and Atkinson (2008), Campbell and Bozorgnia (2008), and Akkar et al. (2014) are used for active shallow crustal sources (i.e. the Dead Sea and Jordan), and equal weights (0.25) were assigned to these models. The model of Akkar et al. (2014) supersedes previous GMPEs derived for Europe and the Middle East, and address shortcomings identified in those models. The model of Zhao et al. (2006) was derived predominantly from Japanese data; however it has been identified by Bommer et al. (2010) as a proper candidate for selection within PSHA for shallow crustal seismicity. The Atkinson and Boore (2006) model is used for the stable continental region of Saudi Arabia in conjunction with the above mentioned equations for active shallow crustal sources. The largest weight (0.60) is assigned to the Atkinson and Boore model for stable regions and equal weights (0.10) are assigned for crustal source equations. The model of Pankow and Pechmann (2004) that supersedes a previous study of strong ground motions in extensional tectonic regimes by Spudich et al. (1999) is used for extensional zones in the Red Sea and the Indian Ocean. The model of Zhao et al. (2006) does not consider peak ground velocity, therefore for estimating hazard in terms of PGV we use the model recently developed by Cauzzi et al. (2015). All the GMPEs allow taking into consideration local site effects through the average shear-wave velocity of the upper 30-m column (Vs30).

In this study we do not create separate logic tree branches for every GMPE and the following scheme is applied. Firstly, for every seismic event and every location a single value of standard normal variate \(\delta\) is generated. Secondly, the ground-motion residual value \(\varepsilon\) is calculated for every GMPE using the GMPE-specific standard deviation \(\sigma_{i}\) as \(\varepsilon_{i} = \delta \times \sigma_{i}\). Thirdly, a set of ground-motion parameters \(Y_{i}\) is estimated as \(\ln Y_{i} = \overline{{\ln Y_{i} }} + \varepsilon_{i}\), where \(\overline{{\ln Y_{i} }}\) denotes the predicted (by the GMPE) median ground-motion parameter that depends on magnitude, distance, and local-site conditions. Finally, the value of ground motion is calculated as a weighted average

where n is the total number of GMPEs used for given location and \(w_{i}\) is the weight assigned for a particular GMPE.

The selected ground motion models use different distance metrics, that is the minimum distance between the rupture and the site (R RUP ) and the minimum distance between the surface projection of the source plane and the site (Joyner–Boore distance, R JB ). For small to intermediate-size earthquakes (M < 5.5–6.0), the hypocentral distance may be used instead of R RUP , and the epicentral distance instead of R JB . Determination of the distance metrics for the area located in the vicinity of large earthquakes requires information about the dimensions and orientation of the source plane. The Monte Carlo technique that is based on event-by-event calculation allows consideration of the variations in strike and dip angles of the rupture, as well as in the source dimensions. We applied the following scheme for the source orientation and dimensions of the M > 6.0 earthquakes within every source zone (see also Zahran et al. 2015). The dip angles are fixed as 85° (almost vertical fault), and the strike angles are determined using a uniform distribution between 0° and 360°, that is all strike angles are equally possible. There is a lack of information related to focal mechanism solutions for intermediate-size and large earthquakes in the Arabian shield, and therefore it is difficult to assign representative focal mechanisms for seismic zones located in the area. The source dimensions are estimated using sampling from normal distribution considering magnitude–dimension relations provided by Vakov (1996) that define mean values of source area and length, and the error terms. The ground-motion parameters were calculated as the average between normal and strike-slip faulting.

5 Results of PSHA and analysis of uncertainty

The analysis was performed on the basis of twelve input models (Fig. 7) that resulted from different techniques used for the analysis of input data and calculation of necessary input parameters. These variants represent epistemic or model uncertainty that resulted from incomplete knowledge of the physics of the earthquake process and the application of alternative mathematical models for description of the process. Correspondingly, twelve variants of input synthetic catalogs were generated. Aleatory variability related to the natural randomness in the process is characterized in this study by considering random dimensions of earthquake sources and focal depth, random b-values assigned to every sub-catalog, and random scatter of the ground-motion parameter estimated for a given magnitude and distance. Probabilistic seismic hazard calculations resulted in twelve sets of seismic hazard maps that were compiled for engineering ground motion parameters (peak ground acceleration PGA, peak ground velocity PGV, 5% damped pseudo-spectral acceleration PSA for natural periods of 0.2 and 1.0 s, i.e. PSA (0.2 s) and PSA (1 s)). These sets represent branches of a logic tree that are used for the treatment of epistemic uncertainty; in this study all branches are weighted equally.

The hazard curves were calculated for the nodes of 0.25° × 0.25° grid and only earthquakes of MW ≥ 4.5 were considered. The curves were used for the estimation of ground motion values for a particular annual frequency of exceedance (AFE). Twelve input models (Fig. 7) produce twelve variants of hazard results (the set of hazard curves and corresponding hazard maps). Figure 9 presents the composite contour hazard maps (PGA, cm/s2) for a given rock condition (average shear wave velocity of the top 30 m of soil column, Vs30, 800 m/s) with 10% and 2% probabilities of exceedance in 50 years, which correspond to AFE 1/475 and 1/2475. The PGA values showed in the maps are obtained as the arithmetic mean from all twelve variants (branches). The maps show that the rock-site PGA hazard, which is estimated using smoothed seismicity, is less than 50 cm/s2 for the most of the territory of Saudi Arabia except the seismically active areas in the north-western (Gulf of Aqaba) and south-western parts of the Kingdom. The hazard maps for PGV and PSA are presented in the Electronic Supplement (Figs. E1–E3).

PGA hazard maps, rock condition. The PGA values are calculated as the arithmetic mean from all twelve variants of hazard estimations (see Fig. 7). National borders approximate

5.1 Overall uncertainty

Uncertainty in the ground motion levels estimated for a given return period of exceedance is usually quantified using the percentiles (15th or 16th, 50th and 84th or 85th) of the distribution of the ground motion parameter obtained via a logic tree scheme (e.g., Giardini et al. 2004; Barani et al. 2007; Douglas et al. 2014). In this study we use only twelve branches of the logic tree, and therefore it is not possible to estimate percentiles statistically in a traditional manner, in which results from all branches of the logic tree are used for analysis. Instead of the percentliles, for analysis of the entire collection of results we consider the minimum (\(A_{min}\)), the maximum (\(A_{max}\)), and the mean (\(A_{mean}\)) values from the set of twelve hazard estimates obtained for every location. Two metrics to measure uncertainty are used: absolute difference \(D_{abs} = A_{max} - A_{min}\), and relative or normalized difference \(D_{norm} = (A_{max} - A_{min} )/A_{mean}\). A specific scheme for the percentiles calculation is used for particular locations (cities) and the analysis is described in the next section. Note that the \(A_{mean}\) values for PGA are shown in Fig. 9.

Examples of the spatial distribution of the \(D_{abs}\) and the \(D_{norm}\) values together with the mean estimates \(A_{mean}\) are shown in Fig. 10 (PGA hazard, rock site, AFE 1/475). Figure 11 shows plots of the uncertainty metrics versus the level of mean hazard \(A_{mean}\). The absolute difference reveals a clear dependency on the level of hazard, i.e. the large \(D_{abs}\) values correspond to the large \(A_{mean}\) values. The dependency may be described by a log-linear relationship. Two variants of the relationship were considered. The first variant was obtained using orthogonal regression, in which the model errors are distributed over the predictor (\(A_{mean}\)) and dependent (\(D_{abs}\)) variable. The second variant was obtained using ordinary least squares regression, in which all the errors are in the dependent variables. The normalized difference reveals no dependency on the level of hazard and, in general, \(D_{norm}\) values are less than 2.0–1.5.

Uncertainty metrics plotted in grey scale together with the PGA hazard levels plotted as contours (AFE 1/475, see also Fig. 9). a Absolute difference \(D_{abs}\); b relative or normalized difference \(D_{norm}\). National borders approximate

5.2 Uncertainty related to particular techniques for input data processing

Figures 10 and 11 reflect the uncertainty that resulted from a combination of all twelve input models. To analyze the contribution of the different techniques applied for preparation of the input data to uncertainty in the hazard estimates, we consider the \(\log D_{abs}\)–\(\log A_{mean}\) relationships for different combinations of the input models. The combinations were selected using the following principle—one particular technique was selected as the primary and the combination based on the technique included several variants of the other techniques. The following combinations were analyzed: the models based on the smoothing distance 30 km (ALL30) and 50 km (ALL50), the models based on the zone-dependent b-values and different smoothing distances (OLSML30 and OLSML50), the models based on different techniques for estimation of the magnitude–frequency relationship (OLSALL and MLALL), and the models based on different declustering techniques and different smoothing distances (GK30; GK50; UH30; UH50). Table 4 provides a description of the combinations.

The comparisons of the \(\log D_{abs}\)–\(\log A_{mean}\) relationships (orthogonal regression, PGA hazard) are shown in Fig. 12. There is a clear evidence of a prominent influence of the declustering technique—the separation of variants based on the G&K1974 and UHR1986 techniques sufficiently reduce the absolute difference, as compared with the combination of all models (Fig. 12b, d). At the same time, it is possible to distinguish the influence of variations in the smoothing distance and in the b-values (Fig. 12a, b). The combinations, which are based on a smoothing distance of 30 km and which include all three variants of b-values (ALL30), resulted in the larger absolute difference, especially for regions of high levels of hazard (high \(A_{mean}\) values), than the combinations, which are based on a smoothing distance of 50 km and the zone-dependent b-values (OLSML50). Comparison of the \(\log D_{abs}\)–\(\log A_{mean}\) relationships for PSA (1.0 s) (Fig. E4 in the Electronic Supplement) shows similar behavior. However, in this case the reduction of absolute difference for the models, which are based on different declustering techniques (Figs. E4b and E4d), depends also on the smoothing distance.

Logarithmic relationships (orthogonal regression) between the mean values of PGA hazard (\(A_{mean}\)) and the absolute difference (\(D_{abs}\)) for different combinations of the basic hazard input models. Description of the combinations and indexes is given in Table 4

Let us analyze the distribution of the \(A_{min}\) and the \(A_{max}\) values, that is, the characteristics of the hazard that are responsible for the absolute difference, along with all twelve variants of hazard calculations for a given location. Examples of the distribution for the PGA hazard (AFE 1/475) are shown in Fig. 13 for two levels of the mean hazard values \(A_{mean}\). Here we consider all sites for which \(A_{mean}\) is higher than the selected threshold level, namely 100 and 50 cm/s2. The plots show how many times the maximum or the minimum values from the set of twelve variants are obtained using every basic model (stochastic catalog). The highest hazard estimates are more frequently obtained when using the input stochastic catalogs that are based on the UHR1986 declustering technique and/or smoothing distance of 50 km. The G&K1974 declustering technique and the smoothing distance of 30 km are mainly responsible for the lowest values of hazard \(A_{min}\). The relative percentage contribution for the maximum and minimum values is listed in Table 5.

Distribution of the maximum and minimum values of the PGA hazard (AFE 1/475) along twelve combinations of hazard calculations for the locations, in which the mean hazard level \(A_{mean}\) exceeds selected threshold levels 50 and 100 cm/s2. Description of the variants and indexes is given in Table 4

6 Discussion

Analysis of the results of probabilistic seismic hazard estimates for the Kingdom of Saudi Arabia using the zoneless (smoothed seismicity) approach and using different input models showed that the characteristics of the declustering technique, that is, the size of the spatial and temporal windows, have the largest influence on the results. The choice of smoothing parameter (correlation distance), as well as the regression technique used for determination of the magnitude–frequency relation, may also be an important decision (see Sects. 2.4 and 3). The influence of these techniques and characteristics is studied here in more detail.

The relations between the PGA hazard estimates obtained using different declustering techniques (models GK3050 and UH3050), different smoothing distances (models ALL30 and ALL50), and different techniques used for estimation of the magnitude–frequency relationship (models OLSALL and MLALL) are illustrated by Figs. 14, 15 and 16, respectively. The figures show the direct point-by-point relations between two values calculated as the average from the corresponding sets of results for a particular location of hazard estimation (left plot) and the logarithmic difference between these values, i.e. \(\log_{10} A_{y}{-}\log_{10} A_{x}\), where \(A_{x}\) and \(A_{y}\) are the averaged values obtained using the two models considered, which correspond to the x-axis and y-axis.

Relations between the PGA hazard estimations obtained using the input models based on different declustering techniques: models GK3050 and UH3050 (see Table 4 for description of the models). Left Point-by-point relations, line shows one-to-one relation. Right Logarithmic difference \(\log_{10} A_{G\& K1974}{-}\log_{10} A_{UHR1986}\), black dots show mean values within interval 0.1 log unit

Relations between the PGA hazard estimates obtained using the input models based on different smoothing distances: models ALL30 (30 km) and ALL50 (50 km) (see Table 4 for description of the models). Left Point-by-point relations, line show one-to-one relation. Right Logarithmic difference. \(\log_{10} A_{ALL30}{-}\log_{10} A_{ALL50}\), black dots show mean values within interval 0.1 log unit

Relations between the PGA hazard estimations obtained using the input models based on different regression procedures (maximum likelihood, ML; and ordinary least squares, OLS) for estimation of parameters of magnitude–frequency relationship: models OLSALL and MLALL (see Table 4 for description of the models). Left Point-by-point relations, line show one-to-one relation. Right Logarithmic difference. \(\log_{10} A_{MLALL}{-}\log_{10} A_{OLSALL}\), black dots show mean values within interval 0.1 log unit

The peculiarities of the relation, which reflect the influence of the declustering technique, may be explained as follows. Due to the much smaller sizes of the spatial and temporal windows (Table 1), application of the UHR1986 technique for the declustering resulted in the larger number of independent earthquakes of small and intermediate magnitudes in the declustered catalog, and consequently to the higher estimated earthquake rates, than that obtained when applying the G&K1974 technique (Table 2). Therefore, the stochastic catalogs based on the UHR1986 technique would contain a larger number of earthquakes of small and intermediate magnitudes (at least less than M 6) than the G&K1974 stochastic catalogs. Such earthquakes, frequently occurring near the site of hazard estimation, may cause high values of peak acceleration during a relatively large number of events, which may be comparable with the number of exceedances from the stronger and less frequent earthquakes. Consequently, starting from 50–70 cm/s2 for AFE 1/475 and from 100–200 cm/s2 for AFE 1/2475, the higher levels of hazard were obtained from the UHR1986 stochastic catalogs (Fig. 14). Similar features of the UHR1986–G&K1974 data distribution can be seen for the cases of PGV and PSA (Figs. E5–E7 in the Electronic Supplement).

Figure 15 presents relations between the PGA hazard estimations \(PGA_{30}\) and \(PGA_{50}\) obtained using smoothing distances 30 km and 50 km, respectively. Similar relations for the PGV and PSA hazard are shown in the Electronic Supplement (Figs. E8–E10). The larger correlation distance assigns the smaller weights to the central grid and conversely the larger weights to the neighboring grids than the smaller correlation distances. Therefore, the distribution of earthquake rates obtained using a small smoothing parameter would be characterized by the relatively high peaks at the locations of the observed earthquakes and by the sharp decrease of the rate level away from the peaks (Fig. 6). The larger smoothing parameter effectively reduces the peaks and spreads the rate over larger distances from the epicenters. Consequently, the relationship between the levels of hazard obtained using relatively small and large correlation distances is characterized by the scattered and relatively large excesses of the \(PGA_{30}\) over the \(PGA_{50}\) estimates and by the stable and confined excesses of the \(PGA_{50}\) over the \(PGA_{30}\). Note that for relatively high level of hazard, i.e. 70–90 cm/s2 for AFE 1/475 and 150–200 cm/s2 for AFE 1/2475, the \(PGA_{50}\) estimates are constantly larger than the \(PGA_{30}\) values. It seems that this is a consequence of the higher rate levels and, therefore, the larger number of possible events around the epicenters of the observed earthquakes that results from the smoothing based on a larger correlation distance.

It has been shown in Sect. 2.4 that application of the maximum likelihood (ML) technique for determination of parameters of the magnitude–frequency relation resulted in the slightly higher earthquake rates than those obtained by the ordinary least squares (OLS) technique. As expected, the average values of the hazard level that are estimated using the models based on the ML technique are slightly larger, in general, than the OLS based estimations (Fig. 16). The difference has a tendency to increase with the level of hazard. Similar relations for the PGV and PSA hazard are shown in the Electronic Supplement (Figs. E11–E13).

Despite of small number of logic tree branches, the scheme of seismic hazard analysis applied in our study allows consideration of fractile hazard. In the traditional scheme, all possible combinations of uncertain inputs forming branches of the logic tree were used to produce a number of output hazard curves. The set is used to calculate a mean- or median-hazard value and fractiles (percentiles) that allow an analysis of uncertainty of the results given the identified epistemic uncertainties. A different scheme for the fractile calculation may be used in the Monte Carlo approach that is based on stochastic sub-catalogs (see also Assatourians and Atkinson 2013). Each sub-catalog is characterized by a fixed combination of input parameters, some of which are estimated using a random draw from corresponding probability distribution (Sect. 4.3), and the combination may be considered as a branch of a logic tree. Thus the set of hazard values that is necessary for estimation of the mean hazard and fractiles may be compiled from the sub-catalogs. In our study we use 12 basic input models (Fig. 7) and 100 sub-catalogs were generated for every model, therefore the necessary set contains \(N_{basic} \times 100\) values, where \(N_{basic}\) is the number of the basic models used in the given combination (Table 4).

Due to technical reasons, in this study the analysis of fractiles was performed only for 20 locations (cities) shown in Fig. 17. As the metric to measure the uncertainty, we use the so-called “relative uncertainty”, lettered as RU here, that is based on the 15th and the 85th percentiles (Giardini et al. 2004; Douglas et al. 2014), i.e.

where HAZ is the considered ground motion characteristic. Distribution of the RU values for different combinations of the basic models is shown in Fig. 18 together with the mean-hazard PGA values (AFE 1/475). For most locations, the relative uncertainty is less than 40% and the high level of uncertainty does not necessarily correspond to a high level of hazard. The highest values of the uncertainty metric, that may reach 50or 60%, are obtained for Yanbu, Jeddah, Makkah, and Jizan locations. The cities are situated near relatively narrow zones of high-level seismic activity (Figs. 6, 8), and variations in the parameters involved in different procedures for input data processing and hazard calculation (catalog desclustering, analysis of the magnitude–frequency relation, etc.) may result in significant variations in the calculated level of hazard. Note that the hazard analysis based on a relatively large smoothing distance (50 km) resulted in the lowest uncertainty.

Comparison of the hazard levels and relative uncertainty RU estimated for several locations (cities). a PGA hazard, AFE 1/475, averaged from all combinations. b Distribution of the relative uncertainty estimated for different combinations of the basic models (see Table 4 for description of the combinations). Location of the cities are shown in Fig. 17

7 Conclusion

As has been mentioned by Beven et al. (2015), among others, epistemic uncertainty is the dominate source of uncertainty in natural hazard assessment. In this study we analyze the influence of different alternative procedures for calculating seismic hazard on the results of the calculations. The smoothed seismicity approach that is based only on seismic activity rate obtained from the earthquake catalog is used together with the Monte Carlo technique. The alternative procedures and incorporated mathematical models are considered as the sources of epistemic uncertainty, namely two mainshock window declustering procedures (Gardner and Knopoff 1974; Uhrhammer 1986), two statistical techniques for estimation of parameters of the recurrence relationship (ordinary least squares and maximum likelihood), and two values of smoothing parameter (30 and 50 km). Our calculations that are based on Monte Carlo technique include also a consideration of aleatory uncertainty related to the dimensions and depths of the earthquake sources, the parameters of the magnitude–frequency relationship, and the scatter of ground-motion parameters estimated for a given magnitude and distance.

We consider in our analysis different combinations of the alternative procedures and different metrics to measure the uncertainty are used. We found that the windowing procedures for catalog declustering have the highest influence on the results of the hazard estimations, especially for relatively high levels of hazard. This is an accordance with recent findings (Marzocchi and Taroni 2014; Boyd 2012), that showed that the declustering may lead to significant underestimation of the “true seismic hazard”. The choice of correlation distance, that is, the parameter of the smoothing function, is also a very important decision that requires proper caution. The smaller correlation distance resulted in relatively high hazard levels at the locations of the observed earthquakes and in the sharp decrease of the level when moving away from these locations. The choice of statistical procedure for estimation of the magnitude–frequency relation (maximum likelihood or least squares) reveals a moderate influence on the results of the hazard estimation.

In this study we do not analyze uncertainty related to the selection of ground-motion prediction equations that, as has been frequently noted (e.g., Bradley et al. 2012), may be much higher than the uncertainty related to earthquake source characteristics. Possible variations of the maximum magnitude were also not considered. From our point of view, the maximum magnitude value for a given seismic source zone should be considered as a random variable, for example, distributed uniformly within a certain range. In the frame of the Monte Carlo approach, the maximum magnitude is randomly assigned for every stochastic catalog. Estimation of the maximum magnitude and the range of uncertainty using a statistical approach (e.g., Kijko 2004) may provide a necessary complement and alternative to the estimates based on the observed maximum magnitude.

As the result of the complex influence of different, sometimes interrelated, factors on the results of probabilistic seismic hazard assessment, the level of hazard may vary substantially. The level of uncertainty depends not only on the procedure and the parameters selected for processing of data and preparation of inputs for hazard assessment, but also on relative position of the given point of calculation and the active seismic areas. Bearing in mind the rather subjective nature of the decision as to what procedures should be used, we suggest applying different procedures and the use of different parameters at all stages of the seismic catalog processing, starting from the conversion to a uniform magnitude scale and finishing with spatial smoothing of seismicity rates. A corresponding sensitivity analysis should be performed and the uncertainty expressed in a quantitative metric should be reported (Woo 2002; Douglas et al. 2014).

The future tasks in the treatment of epistemic uncertainty related to application of a smoothed seismicity approach for PSHA in the Saudi Arabian region may include an analysis of the influence of different regression procedures (OLS or GOR) applied for magnitude conversion, or a complete neglect of conversion, on the results of earthquake recurrence estimates, as well as the development of regional magnitude conversion equations. Uncertainty in maximum magnitude should also be considered in seismic hazard assessment.

The smoothing seismicity approach, in general, provides smaller hazard estimates (except the vicinity of observed large earthquakes) than the area-based procedures (e.g., Molina et al. 2001; Hong et al. 2006; Barani et al. 2007; Goda et al. 2013), however the relation between the estimates obtained using these approaches depends on the level of seismicity. In this study we do not perform detailed comparative analysis between our smoothed-seismicity estimations and the area-based estimations obtained by Zahran et al. (2015). The up-to-date area-based PSHA is one of our future tasks and, as suggested by several researchers (e.g., Beauval et al. 2006; Zuccolo et al. 2013; Leonard et al. 2014), both approaches will be used together and the results, as well as the uncertainty analysis, will be combined in order to construct up-to-date seismic hazard maps for Saudi Arabia that meet modern standards of rigor, quality, and engineering practice, and which may be used in developing building codes and emergency planning. Obviously, all conclusions and suggestions related to the treatment of epistemic uncertainty for the case of smoothed seismicity, except the smoothing parameter, are also of concern in the case of area-based procedures.

As a closing remark, it necessary to note that the seismic hazard maps obtained in the study should be considered as preliminary and they should not be used for practical purposes.

References

Abrahamson NA (2000) State of the practice of seismic hazard evaluation. In: Proceedings of GeoEng2000, invited papers, vol 1, Melbourne, Australia, pp 659–685

Aki K (1965) Maximum likelihood estimation of b in the formula log N = a − bM and its confidence limits. Bull Earthq Res Inst Tokyo Univ 43:237–239

Akkar S, Sandikkaya MA, Bommer JJ (2014) Empirical ground-motion models for point- and extended-source crustal earthquake scenarios in Europe and the Middle East. Bull Earthq Eng 12(1):359–387. doi:10.1007/s10518-013-9461-4

Al-Amri AM (2013) Seismotectonics and seismogenic source zones of the Arabian Platform. In: K. Al Hosani et al (eds) Lithosphere dynamics and sedimentary basins: the Arabian plate and analogues. Springer Frontiers in Earth Sciences, pp 295–316. doi:10.1007/978-3-642-30609-9-15

Al-Arifi NS, Fat-Helbary RE, Khalil AR, Lashin AA (2013) A new evaluation of seismic hazard for the northwestern part of Saudi Arabia. Nat Hazards 69:1435–1457. doi:10.1007/s11069-013-0756-1