Abstract

Standard semantic information processing models—information in; information processed; information out (in the form of utterances or actions)—lend themselves to standard models of the functioning of the brain in terms, e.g., of threshold-switch neurons connected via classical synapses. That is, in terms of sophisticated descendants of McCulloch and Pitts models (Bull Math Biophys 7:115–133, 1943). I argue that both the cognition and the brain sides of this framework are incorrect: cognition and thought are not constituted as forms of semantic information processing, and the brain does not function in terms of passive input processing units (e.g., threshold switch neurons or connectionist nodes) organized as neural nets. An alternative framework is developed that models cognition and thought not in terms of semantic information processing, and, correspondingly, models brain functional processes also not in terms of semantic information processing. As an alternative to such models: (1) I outline a pragmatist oriented, interaction based (rather than reception or input-processing based), model of representation; (2) derive from this model a fundamental framework of constraints on how the brain must function; (3) show that such a framework is in fact found in the brain; and (4) develop the outlines of a broader model of how mental processes can be realized within this alternative framework. Part I of this discussion focuses on some criticisms of standard modeling frameworks for representation and cognition, and outlines an alternative interactivist, pragmatist oriented, model. In Part II, the focus is on the fact that the brain does not, in fact, function in accordance with standard passive input processing models—e.g., information processing models. Instead, there are multiple endogenously active processes at multiple spatial and temporal scales across multiple kinds of cells.
A micro-functional model that accounts for, and even predicts, these multi-scale phenomena in generating emergent representation and cognition is outlined. That is, I argue that the interactivist model of representation outlined offers constraints on how the brain should function that are in fact empirically found, and, in reverse, that the multifarious details of brain functioning entail the pragmatist representational model—a very strong interrelationship. In the sequel paper, starting with Part III, this model is extended to address macro-functioning in the CNS. In Part IV, I offer a discussion of an approach to brain functioning that has some similarities with, as well as differences from, the model presented here: sometimes called the predictive brain approach.


Concepts which have proved useful for ordinary things easily assume so great an authority over us, that we forget their terrestrial origin and accept them as unalterable facts. They then become labeled as “conceptual necessities”, a priori situations, etc. The road of scientific progress is frequently blocked for long periods by such errors.

A. EinsteinFootnote 1

1 Introduction

Assumptions that representation is constituted as some form of encoding of what is represented dominate the contemporary scene—in philosophy (e.g., Fodor 1990a, 1998), psychology (e.g., Matlin 2012), cognitive science (e.g., Bermudez 2010), and studies of the brain (e.g., Carlson 2013). Most commonly, this takes the form of an assumption of semantic information processing, in which perceptual inputs generate presumed informational encodings of the sources of such inputs, thus providing resultant signals and processes with representational content concerning those sources. Neural processes then, so it is assumed, process that semantic information via complex neural nets, and, ultimately, may generate outputs in the form of language or action.

I argue to the contrary that, while encodings certainly exist, such as Morse code and computer codes, the assumption that all representation is constituted as encodings—encodingism—is conceptually fatally flawed. Correspondingly, the brain cannot function in terms of such semantic information processing: an alternative framework for modeling brain functioning is required that transcends the flaws of standard models.

In the first section of this paper, I depict a few of the (rather large) family of arguments against encodingist, semantic information processing, models, and outline an alternative interaction-based (rather than reception based) model of representation. In Part II, I argue that the brain does in fact function in ways that are consistent with, and even predicted by, this alternative functional framework. That is, philosophical and theoretical considerations impose constraints on how the brain could function, and these constraints are in fact honored in what we know about how the brain does in fact function. These discussions will proceed with a focus on the micro-functioning of the brain, laying the ground for a discussion in Part III (second of two papers) of a macro-functional model that is consistent with the micro-functional model.

In Part IV (second of two papers), some comparisons are made with a family of approaches known as predictive brain models (and various other terms).

2 Part I: Representation: Encoding or Anticipation?

2.1 Underlying Metaphysical Problems

I argue that a fundamental conceptual problem blocks the understanding of the emergence of representation and cognition, and thus contributes to the lack of models of actual CNS processing that can make sense of cognition and other mental phenomena. The conceptual problem is the presupposition of a substance metaphysical framework, which, among its consequences, blocks the possibilities of emergence,Footnote 2 and, in particular, possibilities of normative emergence such as that of representational truth value. Representation is the heart of cognition, so a conceptual framework that blocks a naturalized model of representation thereby blocks a model of how cognition is emergently realized in the brain. Conversely, so I argue, recognition that the fundamental ontology of the world is that of process (Rescher 1996; Seibt 1996, 2000a, b, 2003, 2009), not substance or entity or atom, enables models of emergence, and, in particular, of the emergence of representation and cognition (Bickhard 2009a, b). These points are argued extensively elsewhere; for the purposes of this discussion, I will assume that normative emergence is a metaphysical possibility, and address the emergence of representation within that framework.

2.2 Representation

Arguing for an alternative model of representation and cognition involves, among other things, demonstrating that there is a need for an alternative model: that current models are unsatisfactory. I begin with an overview of some fatal flaws of standard models.Footnote 3

2.2.1 Problems with Encodingism

Normativities of representation have been problematic for millennia. How can you point to something that doesn’t exist, or to something that is false? How can you have an encoding correspondence with something that doesn’t exist, or something that is false? How can a representational correspondence exist but be false? If the correspondence exists, then the represented end of the correspondence exists, and the representation is true. If the correspondence does not exist, then the representation does not exist. There is no third modeling possibility for accounting for a representation that exists but is false.

There is, in fact, a large family of arguments and problems with encodingist models of representation, some of ancient provenance, and some discovered or created more recently. Here are a few:

An encoding can be defined in terms of other encodings, but this requires some base level of encoding atoms in terms of which all others can be defined. How could such a base level exist, how could it come into being? Fodor, among others, has argued that it must be innate (Fodor 1981; Carey 2009). But Fodor offers no model of how it could have emerged in evolution, nor—assuming that it could emerge in evolution—why such emergence of representation could not occur in individual level learning and development.Footnote 4

Worse, if that base level is assumed to itself be constituted as encodings, an incoherence is encountered. An encoding definition is a form of borrowing content: the defined term becomes a representation, becomes an encoding, by borrowing the content specified in the defining term or phrase or clause. But presumed base level encodings cannot borrow their content from other encodings, else they would not be base level. So, they would have to “borrow” their content from themselves: “X” represents the same as “X”. This does not provide any content to “X”, thus does not transform “X” into an encoding, so no such base level of atomic encodings can exist. Encodingism, the assumption that all representation is constituted as encodings, is an incoherent position (Bickhard 1993, 2000b; Shanon 1993).

Most commonly, of course, the assumption is that some kind of factual, perceptual or conceptual, correspondence is what yields (or even constitutes) content for perceptual and cognitive encodings. But such assumptions directly encounter the normativity problems—how could they be false?

2.2.1.1 Encoding as Correspondence

Consider a kind of converse of this problem: for whatever is posited as the special representation-constituting relationship—informational, causal, lawful, or structural—there are myriads of instances of such relationships throughout the universe: every correlated set of properties (information); every causal connection; every lawful relationship; every structural homomorphism. Almost none, if any, of these relationships are representational, so, at a minimum, such correspondence models are at best seriously incomplete—something in addition needs to differentiate the representational instances from those that are not representational. I argue that they are, more basically, flawed in-principle.

Information semantics, for example, consists of the assumption that a correlation relationship, which is the basic naturalistic or technical definition of information, is somehow simultaneously a representational relationship. In addition to the impossibility of accounting for the possibility of being false with just this assumption, there is the problem pointed out here that there are correlations everywhere, virtually none of which are representational.

One source of this confusion is the following: If X is correlated with Y, then someone knowing about X and Y and the correlation between them can make use of Y—infer on the basis of knowledge of Y—to gain knowledge about X. That is, use Y as a representation of (some property of) X. This is fine—it is the basic form of external representation, such as a map or Morse code—but it requires an epistemic agent who can know such properties and relationships and engage in the appropriate inferences. When attempting to model representation in the mind (or brain), we are attempting to model the representing that is involved in such interpreting, and that cannot be yet more interpretation on pain of vicious regress. Mental representing cannot be the same as external representation.

2.2.1.2 Yet More Problems

There are many other problems with, and arguments concerning, models of representation. One is Piaget’s copy argument: if our representations of the world are in some sense copies of that world, then we would have to already know the world in order to construct our copies of it. Various forms of such circularities often emerge in examining models of representation (such as the circularities of definition in the incoherence argument above). Note that Piaget’s copy argument carries less weight if it is assumed that representation is simply impressed into a passive mind like the form of a signet ring into wax, or contemporary versions of signet rings such as “transduction”, but such models again directly encounter the problem of how representations could possibly be false.Footnote 5

An argument that has been around a long time is the radical skeptical argument (Rescher 1980). This addresses not just the possibility of representational error, but the possibility of organism detectable representational error. The basic argument is that, in order to detect whether my representations are correct, I would have to step outside of myself, have some independent epistemic access to what I am representing, and compare my representation with what is actually there to determine if my representation is correct or not. Such stepping outside of myself is not possible, so consequently it is impossible for me (or any other organism) to determine the truth or falsity of our own representations. This conclusion seems unacceptable, but, nevertheless, the argument has stood for centuries.

But the conclusion is in fact unacceptable: if organism detectable error is not possible, then error corrected behavior and error corrected learning are not possible. We know that error based behavior and learning occur, so something has to be wrong with the radical skeptical argument; it cannot be simply ignored as a merely armchair philosophical problem. Not just representational “error” per se has to be modeled, but organism detectable error must be modeled.

I argue that the radical skeptical argument is a valid argument, but that it is unsound. The faulty premise is that of the background assumption that (all) representation is some form of encoding correspondence; that we know our worlds by somehow looking backwards down the perceptual input stream to the sources of such streams—by being spectators of our worlds (Dewey 1960/1929; Tiles 1990). If our representations of the world are some kind of encoding correspondences with the world, then the only way to check the accuracy of those correspondences is to be able to compare both ends of the correspondence, but we only have access to one end, the representation—the other end is what is supposedly being represented.

In place of such a backward looking, past oriented, assumption, I offer a model of representation based on future oriented anticipation—anticipation of future interactive possibilities. Note, among other characteristics, that any such model is intrinsically (inter-)active: there are no passive signet ring impressions (or transductions).Footnote 6

A False “Solution”

A common response to the radical skeptical argument is to claim that an organism may not be able to directly check some alleged representational correspondence, but an organism can check consequences. There is a glimmer here of what I argue is the correct resolution of the argument, but as stated, it does not work. In fact, if the radical skeptical argument were that simple to overturn, it would not have lasted for millennia (Bickhard 2014).

The problem is that the consequences to be checked must themselves be represented, and this simply re-introduces the radical skeptical problem again.

One common response to this recognition is to consider the external ends of the alleged representational relationships as a superfluous posit: all we have are our internal representations, and all we can do is to check them against each other—a coherence model.

The model that I propose below is based on anticipations, or anticipated consequences, but they are internal to the dynamics of the organism—functional anticipations of internal process flow—and, thus, do not have to be represented in order to be checked: they can be checked internally in completely functional manners. Thus, the skeptical problem is not re-introduced. And the model proposed below does involve organizations or nets of conditional anticipations, but it does not constitute a coherence model because every such anticipation can, in principle, be checked by engaging in the indication process, to check if it anticipates correctly, independently of the remainder of the organization of anticipations.

2.2.2 Representation as Anticipation

Adopting a process metaphysical framework enables addressing multiple ranges and kinds of emergence—in particular, emergences of normative phenomena, and, with special relevance to this discussion, the emergence of representation (Bickhard 2009a).

Representation emerged naturally in the evolution of animals. For any complex agent, one basic issue is how to select and guide actions and interactions. Such a selection must be among interactions that are actually possible in the current situation: it does no good to reach for the refrigerator door if you’re in the forest. Agents, then, must have some functional indications of what kinds or ranges of interaction are possible, and must keep them updated with respect to time, events in the world, and their own actions (Bickhard 2004, 2009a, b; Bickhard and Richie 1983). Setting up such indications of interaction potentiality is similar to Gibson’s notion of picking up affordances (Bickhard and Richie 1983).

What is crucial to accounting for representational truth value is that such indications of interaction potentialities can be true, or can be false: indicated interaction possibilities might be indicated as possible, but in fact not be possible. Furthermore, if the organism selects such an indicated possibility and it does not proceed as indicated, then it has been falsified in a manner that is, at least in principle, functionally detectable by the organism, and therefore available for error corrected behavior or learning.

Error is detectable without the organism having to step outside of itself to compare representation with represented. Instead, it can compare anticipated future with actual future—an internal functional comparison, not an external epistemic comparison.
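The comparison of anticipated with actual internal outcome can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions: the names `Indication`, `reach_for_handle`, `engage`, and the outcome labels are hypothetical and are not part of the interactivist model's own formalism.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet


@dataclass(frozen=True)
class Indication:
    """Hypothetical stand-in for an indicated interaction possibility."""
    interaction: Callable[[str], str]  # environment -> internal outcome of the process
    anticipated: FrozenSet[str]        # internal outcomes the organism anticipates


def reach_for_handle(environment: str) -> str:
    """Toy interaction: the internal process flow depends on the environment."""
    return "grasp_feedback" if environment == "kitchen" else "no_contact"


def engage(indication: Indication, environment: str) -> bool:
    """Engage in the interaction and check the anticipation internally.

    Returns True if the anticipation held and False if it was falsified.
    The check consults only the organism's own internal outcome; the
    environment is never inspected or compared against directly.
    """
    actual = indication.interaction(environment)
    return actual in indication.anticipated


# Assumed example: the organism indicates that refrigerator-opening is possible.
open_refrigerator = Indication(reach_for_handle, frozenset({"grasp_feedback"}))
```

In this toy, engaging `open_refrigerator` in a forest yields an internal outcome outside the anticipated set, so the error is detected functionally, without any independent access to what the environment actually is.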

There is no other model in the literature that can address this criterion of organism detectable representational error (Bickhard 2004, 2009a, b, 2014, in preparation). Fodor (1975, 1981, 1987, 1990a, b, 1991, 1994, 1998, 2003), Millikan (1984, 1993), Dretske (1988) and Cummins (1996) all attempt to address the problem of the possibility of representational error per se, but none succeed (Bickhard 2004, 2009a, b), and none of them attempts to address organism detectable representational error. As mentioned, the problem of organism detectable representational error is the focus of the radical skeptical argument: we cannot detect error in our own representations because, to do so, we would have to step outside of ourselves to compare what we are actually representing with our representation of it, and we cannot do that. This is an unsound argument, with the faulty premise being a conception of representation that is motivated by underlying substance conceptions, and that traces back to the Pre-Socratics (Bickhard 2009a, in preparation; Campbell 1992; Graham 2006; Mourelatos 1973).

Thus, we have the crucial normative aspect of representation—truth value, and truth value for the organism itself—emergent in indications of future interactive potentialities.Footnote 7 Because of this emergence of representation in indications of interaction, the model has been called interactivism.Footnote 8

2.2.2.1 More Complex Representation

Indications of interaction possibilities do not seem to be much like more familiar sorts of representations, such as of objects, but these more complex representations can be emergently constructed out of an underlying action base in a manner similar to Piaget’s model (Bickhard 2004, 2009a, b; Piaget 1954, 1971).

Consider, for example, a frog who might have opportunities for tongue flicking in one direction for a fly, in another direction for another fly, and downward straight-ahead for a worm: indications of interaction possibilities can branch into multiple such possibilities. Furthermore, if the frog were to shift to the left a bit, that might open up the possibility of a tongue flick for a different worm: indications of interaction possibilities can iterate, with some creating or detecting the conditions for others.

Such branching and iterating indications can become extremely complex, forming webs of anticipations of interaction possibilities. Within such a complex web, perhaps in an infant or toddler, consider the subweb for interacting with a child’s toy block. There are multiple visual scans and multiple manipulations that are available with the block, and they are all interrelated in such a way that any one scan or manipulation can be made available from any other with the appropriate “setting up” or intermediate manipulation(s). The subweb for interacting with the toy block is internally completely reachable—any point from any other point.

Still further, this internally reachable subweb of interaction possibilities is itself invariant under a range of other activities that the child can engage in, such as dropping the block, leaving it on the floor, putting it in the toy box, and so on. It is not, however, invariant under all activities, such as crushing or burning it. Such internally reachable, invariant under manipulations and transportations, subwebs constitute a candidate for a child’s representation of a toy block. This is “just” Piaget’s model translated into the terms of the interactivist model (Piaget 1954).
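The two properties used here, internal reachability and invariance under a range of activities, can be illustrated with a small directed graph. The sketch below is a simplification under assumptions: the node names, the `web_after` rule, and the list of destructive activities are hypothetical, chosen only to mirror the toy block example.

```python
def reachable_from(web, start):
    """Return every interaction possibility reachable from `start`."""
    seen = {start}
    stack = [start]
    while stack:
        node = stack.pop()
        for nxt in web.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def internally_reachable(web):
    """True if every point of the subweb can be reached from every other point."""
    nodes = set(web)
    return all(reachable_from(web, n) == nodes for n in nodes)


def web_after(activity, web):
    """Assumed toy rule: destructive activities eliminate the subweb."""
    return {} if activity in {"crush", "burn"} else web


def invariant_under(web, activities):
    """True if the subweb survives each of the given activities unchanged."""
    return all(web_after(a, web) == web for a in activities)


# Hypothetical subweb of scans and manipulations for a toy block.
block_web = {
    "scan_front": ["scan_side", "grasp"],
    "scan_side": ["scan_front"],
    "grasp": ["turn_over"],
    "turn_over": ["scan_front"],
}
```

With these definitions, `block_web` is internally reachable and invariant under dropping or storing the block, but not under crushing it.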

Such borrowing of Piagetian models is possible because of the common action base. The “toy block” model illustrates how an action framework for representation can account for more complex representations, such as of physical objects.Footnote 9

In connecting with Piaget’s action base, the interactivist model has strong convergences with the process orientation and action framework of Peirce (Rosenthal 1983). In fact, Piaget is part of this general pragmatist perspective, with the intellectual descent being from Peirce through James and Baldwin to Piaget. Piaget is among the very few in the current scene who has attempted a model of emergent representation on an action base (Bickhard 1988a; Bickhard and Campbell 1989).Footnote 10

There are also additional convergences: for example, any action based model forces an embodied model (Bickhard 2008a), and the interaction aspect of this model has interesting convergences with classical cybernetics and certain configurations of abstract machine theory (Ashby 1960; Bickhard 1973, 1980, 2014, in press, in preparation; Bickhard and Richie 1983). There are also intuitive convergences with notions of autopoiesis and enactivism (Maturana and Varela 1980, 1987; Varela 1997; Varela et al. 1991), though important differences as well (Bickhard 2014, in preparation; Christensen and Hooker 2000; Di Paolo 2005; Moreno et al. 2008): most centrally, enactivism focuses on a system reproducing its components, while the interactivist model focuses on a far from thermodynamic equilibrium system maintaining its (ontologically necessary) thermodynamic relationships with its environment (Bickhard 2009a).Footnote 11

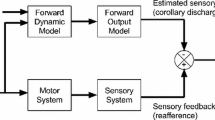

A Potential Confusion

There are a number of frameworks in the literature that can superficially sound like the interactivist model. Feed-forward models, for example, involve interaction that influences sensory inputs; top-down processing can involve anticipating and/or influencing representation; and re-entrant models posit a kind of recursive feedback of semantic information. Such models, however, presuppose the notion of representation—generally in a semantic information processing sense. They presuppose a model of what gets influenced or anticipated or ‘re-entered’. They do not address the nature of representation, except via such presuppositions. The interactivist model is not completely inconsistent in spirit with such models, but it focuses on the emergent nature of representation—and it is inconsistent with the presuppositions made concerning the nature of representation. In the second of these papers, I will discuss more closely one such class of models, that of predictive brain models.

2.2.2.2 Contact and Content

One powerful seduction into an encodingism framework is the point that sensory systems involve the evocation of processes in the nervous system in response to inputs from the environment. These evoked activities are variously described as sensory encodings, mappings, the results of transduction, and so on. Such correspondences certainly do exist, and they are necessary for the viable functioning of the organism, but they do not, and cannot, and do not need to, constitute representations of the inputs that evoked them. Instead, as will be argued, they serve as the functional basis for setting up indications of what the organism could do next, and it is such future oriented indications that have truth value (values of ‘true’ or ‘false’), and, thus, that constitute representation.

Consider a system interacting with an environment. The internal course of the process will depend in part on the environment that is being interacted with. That internal course, therefore, and/or its outcome, will differentiate those kinds of environments that yield that course or outcome from those that don’t. If such an interaction has no outputs—it is passive input processing—then it will still differentiate, just not as potentially powerfully as a full interactive process.

But such differentiations, especially when they involve sensory systems, are standardly assumed to yield representations—perhaps sensations—of what has been differentiated (e.g., ‘transduced’ sensory encodings). But differentiation does not constitute representation—a differentiation does not represent what it has differentiated—and there is no possibility that such a differentiation per se can be true or false. So such differentiations cannot be representational.
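A small sketch may make the notion of differentiation concrete. It assumes a hypothetical `probe` interaction and a made-up set of environments; the point it illustrates is that an internal outcome sorts environments into classes while carrying no information, for the system itself, about what those environments are.

```python
def differentiate(interaction, environments):
    """Group environments by the internal outcome an interaction yields in them.

    The grouping is visible only to an outside observer who already knows the
    environments; the system itself has nothing but the outcome label.
    """
    classes = {}
    for name, properties in environments.items():
        outcome = interaction(properties)
        classes.setdefault(outcome, set()).add(name)
    return classes


def probe(properties):
    """Hypothetical interaction whose internal outcome depends on solidity."""
    return "resistance" if properties["solid"] else "no_resistance"


# Assumed toy environments, described from the observer's point of view.
environments = {
    "rock": {"solid": True},
    "tree": {"solid": True},
    "fog": {"solid": False},
}

classes = differentiate(probe, environments)
```

The outcome `"resistance"` differentiates rocks and trees from fog, but nothing in the outcome itself says "rock" or "tree", and there is nothing about the outcome per se that could be true or false.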

Nevertheless, such differentiations are necessary for the organism: they constitute its contact with the world. Such correspondences or correlations provide the organism with the ability to engage in interactions with the world that have a chance of success; they serve as a functional basis for setting up anticipations of what the organism could do in environments of this differentiated type. It does not succeed to attempt to open the refrigerator if you’re in the forest.

On the other hand, the anticipations of what could be done next in a differentiated type of environment can be true or false. They have truth value. The environment might actually support the indicated interaction if it were to be engaged in, or it might not. Such anticipations might or might not be true of the actual environment; such anticipations, thus, yield content.Footnote 12 Such future oriented anticipations, thus, emergently manifest the crucial normative properties of representation. Functional interactive process anticipations constitute representation.

2.3 The Importance of Timing

There are multiple differences between this interactivist-pragmatist model of representation and standard models. Most important, representation can emerge, according to this model, in constructions of indications of interaction potentialities, and such constructions are functional in nature, not representational themselves. The construction of new representation, therefore, can be out of non-representational functional organization: the representation is emergent.Footnote 13

This interactive model is, like pragmatist models in general, future oriented, not past oriented—not looking backwards down the input stream attempting to “see” where that stream originated.Footnote 14 It is this future orientation that makes organism detectable error possible: the indications about future possibilities can be checked by finding out if they are in fact future possibilities (Bickhard 2004, 2009a, b).

Another difference from standard models is that the interactivist model is inherently modal. The indications are of interaction possibilities. We find that children do not add modal considerations on top of prior non-modal representation, but, instead, they begin with poorly differentiated modal understandings and develop progressively more sophisticated differentiations within the modal realm (Piaget 1987; Bickhard 1988b).

There are multiple additional differences between the interactive model and standard models, important for varying purposes and interests. The crucial difference that I will pursue here is that, in being emergent in interaction systems, representation inherently involves and requires timing. (Inter-)actions with the environment can be in error, can fail, by being too fast or too slow—coordinative timing is what is required (Bickhard and Richie 1983; Port and van Gelder 1995; van Gelder and Port 1995).

This is in contrast to, for example, standard computationalism. Computationalist models are equivalent to Turing machines, and Turing machines have sequence but no timing. Nothing in Turing machine theory changes if the temporal durations between steps are short or long or even highly variable. Sequence is all that matters. Sequence can model sequential steps of symbol manipulations, but sequence cannot capture timing.
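The contrast between sequence and timing can be illustrated with a toy comparison. The functions `run_sequence` and `catch`, and the tolerance value, are hypothetical and serve only as a sketch of the distinction drawn above.

```python
def run_sequence(steps, durations):
    """Apply steps in order; durations are accumulated but never affect the result."""
    state = 0
    elapsed = 0.0
    for step, dt in zip(steps, durations):
        state = step(state)
        elapsed += dt
    return state, elapsed


def catch(arrival_time, closure_time, tolerance=0.05):
    """A toy interaction that succeeds only if two events are temporally coordinated."""
    return abs(arrival_time - closure_time) <= tolerance


# Assumed example steps: the same symbol manipulations run fast or slow.
steps = [lambda s: s + 1, lambda s: s * 3, lambda s: s - 2]
fast_result, fast_elapsed = run_sequence(steps, [0.001, 0.001, 0.001])
slow_result, slow_elapsed = run_sequence(steps, [10.0, 0.5, 99.0])
```

The sequential computation yields the same result whatever the durations between steps, whereas `catch` fails if the hand closes too early or too late, which is the sense in which interaction requires timing and not just sequence.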

Computers have timing, so they are more than just Turing machines. But computer timing is via a clock and dedicated timing circuitry. Organisms require timing, but there is an easier way for evolution to have met this requirement: put clocks everywhere. Clocks are, functionally, just oscillators, so “putting clocks everywhere” becomes “put oscillators everywhere”—and constitute functional relationships as modulatory relationships among the oscillators. On this basis, then, we should expect a range of modulatory functional types and scales to be involved in CNS architectures and processes (Bickhard and Terveen 1995).

Such an architecture is at least as powerful as Turing machines in that the limit of A modulating B is for A to switch B ON or OFF, and Turing machines can be built out of switches. An oscillatory/modulatory architecture is more powerful than Turing machine architecture in that it has inherent timing.
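The claim that switching is the limiting case of modulation can be sketched numerically. The functions below are illustrative assumptions only: sinusoidal oscillators, a logistic gain, and a `sharpness` parameter are hypothetical choices, not a model of any actual neural process.

```python
import math


def oscillator(freq_hz, t):
    """Value at time t (seconds) of a unit-amplitude sinusoidal oscillator."""
    return math.sin(2 * math.pi * freq_hz * t)


def a_gain(t, a_freq_hz=1.0, sharpness=1.0):
    """Modulatory gain in (0, 1) that oscillator A exerts on B.

    Low sharpness gives graded modulation; as sharpness grows, the gain
    approaches a two-valued ON/OFF switch. Sharpness is kept moderate in
    this sketch to avoid floating point overflow in math.exp.
    """
    x = sharpness * oscillator(a_freq_hz, t)
    return 1.0 / (1.0 + math.exp(-x))


def modulated_b(t, gain, b_freq_hz=10.0):
    """Activity of oscillator B with its amplitude scaled by the gain from A."""
    return gain * oscillator(b_freq_hz, t)
```

At the peak of A's cycle, a sharpness of 50 drives the gain to essentially 1, and at the trough to essentially 0, so B is in effect switched ON and OFF; at a sharpness of 1 the same relationship is a graded modulation, and in both cases the modulation is inherently timed by A's oscillation.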

And a complex of oscillatory/modulatory architectures is precisely what we find in the brain (Bickhard 1997).

3 Part II: Central Nervous System Functional Micro-architecture

3.1 (Micro-)Introduction

The brain does not contain simple threshold-switch neurons; neurons are not the only functional kind of cell; and synapses are not the only mode by which cells influence each other. Assumptions to the contrary (and related errors) abound in discussions and models of CNS functioning. Instead, the central nervous system functions in multifarious ways, across multiple physical and temporal scales, via endogenous oscillatory processes that engage in ongoing mutual modulatory influences. The complexities can seem bewildering to model and understand.

In this section of the paper, I offer a model of CNS functional processes that accommodates these multiple kinds of cells and multiple scales and forms of influence. The first part of the model focuses on micro-functional processes; the second part extends the micro-functional model into the range of macro-functional processes in the brain.Footnote 15

Many of the complexities of CNS functioning are well known, though more are discovered almost daily, but accounting for them in an overall functional model that can address issues of cognition and other mental phenomena is largely absent. Instead, the general input-processing model, whether of connectionist or neural net variety, still dominates. These descendants of the classic McCulloch and Pitts model (1943) are still the dominant kind of model of mental processes in the brain (e.g., Carlson 2013) because they form the dominant framework within which cognitive processes are thought of across multiple disciplines (e.g., Bermudez 2010). Thus this framework is imposed on discussions and models of brain functioning because that is the only (or the dominant) manner in which it is assumed that cognition ultimately occurs, so this kind of processing (so the reasoning goes) must somehow be realized in brain processes. This despite the fact that what is known about actual CNS processes is massively unexplained by, and mostly contradictory to, standard information processing models.

3.2 The Induction of Central Nervous System Attractor Landscapes

In fact, we find that actual CNS neurons are endogenously active, with baseline rates of oscillation, and with multiple modulatory relationships across a wide range of temporal and spatial scales:

- silent neurons that rarely or never fire, but that do carry slow potential waves (Bullock 1981; Fuxe and Agnati 1991; Haag and Borst 1998; Roberts and Bush 1981);
- volume transmitters, released into intercellular regions and diffused throughout populations of neurons rather than being constrained to a synaptic cleft (Agnati et al. 1992; Agnati et al. 2000); such neuromodulators can reconfigure the functional properties of “circuits” and even reconfigure functional connectivity (Marder and Thirumalai 2002; Marder 2012);
- gaseous transmitter substances, such as NO, that diffuse unconstrained by synapses and cell membranes (e.g., Brann et al. 1997);
- gap junctions, which function extremely fast and without any transmitter substance (Dowling 1992; Hall 1992; Nauta and Feirtag 1986);
- neurons, and neural circuits, that have resonance frequencies, and, thus, can selectively respond to modulatory influences with the “right” carrier frequencies (Izhikevich 2001, 2002, 2007);
- astrocytes that:[Footnote 16]
  - have neurotransmitter receptors,
  - secrete neurotransmitters,
  - modulate synaptogenesis,
  - modulate synapses with respect to the degree to which they function as volume transmission synapses,
  - create enclosed “bubbles” within which they control the local environment in which neurons interact with each other,
  - carry calcium waves across populations of astrocytes via gap junctions.

These aspects of CNS processes make little sense in standard neural information processing models. In these, the central nervous system is considered to consist of passive threshold-switch or input-transforming neurons functioning in complex micro- and macro-circuits.[Footnote 17] Enough is known about alternative functional processes in the CNS, however, to know that this cannot be correct. The multifarious toolbox of short through long temporal scale forms of modulation, many realized in ways that contradict orthodoxy concerning standard integrate-and-fire models of neurons communicating via classical synapses, is, on such models, at best a wildly extravagant and unnecessary range of evolutionary implementations of simple circuits of neural threshold switches. This range, however, is precisely what is to be expected in a functional architecture composed of multiple scale modulatory influences among oscillatory processes (Bickhard in preparation; Bickhard and Campbell 1996; Bickhard and Terveen 1995).

The interactivist model of representation, therefore, argues for a kind of functional framework for the central nervous system that we actually find. No other model in the contemporary literature can make sense of this complex toolbox of multiple ways in which the nervous system functions (Bickhard in preparation; Bickhard and Terveen 1995). There are individual models of many of these phenomena, but they are in general at the neural and molecular level and do not connect with more general functional models. Those that do posit functional realizations do so within a semantic information processing framework. Such a framework is not only incorrect (Bickhard 2009a); it also could not explain the existence of such an array of kinds of modulatory dynamics: (semantic) information processing does not require anything like such an array, so why does it exist? Why did evolution create and maintain such a superfluous array of kinds of dynamics, if something so much simpler would suffice? If oscillatory modulations are the central form of functional dynamics, however, then it makes perfectly good sense that evolution would have created a range of such kinds of dynamics for multiple kinds and scales of modulatory functioning.

In this sense, what is known about micro-functional processes in the CNS confirms the implication of the interactive model that functional relations should be modulatory relations among oscillatory processes. The confirmation lies in the fact that the nervous system does function in terms of multiple scales of temporal and spatial oscillatory-modulatory relationships.

These basic phenomena of CNS functioning, however, not only confirm the basic predictions of the interactive model of representation, they also entail the central core of that model.

3.2.1 Entailment

Demonstrating the entailment requires explicating a deeper level of the representational model: Recall that, according to the interactivist model, representational truth value emerges most simply in CNS processes that functionally anticipate the further potentialities for near-future interactive processes. In some circumstances, such anticipations will be correct, and, thus, the implicit presuppositions concerning those circumstances will be true, while in others those supporting circumstances will not hold, and thus the functional anticipations will be false. This constitutes the primitive ground of the emergence of representational normativity: the emergence of truth value.

What we find in CNS functioning are wide ranges of spatial and temporal modulatory relationships. The larger scale, temporally slower, processes modulate the intercellular environments within which faster and smaller processes take place. Smaller scale processes, such as neural oscillations and impulses, take place in relation to the ambient environments—e.g., membrane potential in a neuron is a relation between ionic concentrations inside the neuron and concentrations in its local environment. Larger scale processes, thus, modulate the activities of smaller, faster processes via modulations of these local environments.
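The dependence of membrane potential on the local environment can be illustrated with the standard Nernst relation for a single ion species. The following is only a minimal sketch: the function name and the concentration values are illustrative assumptions, not taken from the studies cited here, and an actual membrane potential depends on several ion species and their permeabilities rather than on one equilibrium potential.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_potential(conc_out_mM, conc_in_mM, valence=1, temp_K=310.15):
    """Equilibrium (Nernst) potential, in volts, for a single ion species.

    The result depends on the ratio of the concentration outside the cell
    to the concentration inside it, so a change in the extracellular
    environment alone shifts the potential.
    """
    return (R * temp_K) / (valence * F) * math.log(conc_out_mM / conc_in_mM)


if __name__ == "__main__":
    inside = 140.0  # illustrative intracellular K+ concentration, mM (assumed)
    for outside in (5.0, 10.0):  # illustrative extracellular K+, mM (assumed)
        e_k = nernst_potential(outside, inside)
        print(f"[K+]out = {outside} mM -> E_K = {1000 * e_k:.1f} mV")
```

In this toy calculation, doubling the extracellular concentration makes the equilibrium potential less negative with no change inside the cell, which is the sense in which a process that alters the shared extracellular environment can modulate the faster processes taking place within it.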

The slower, more spatially widespread processes will be relatively constant on the time scales of the faster processes at smaller spatial scales. Thus, they will set the parameters within which the faster processes occur. For dynamic systems,[Footnote 18] parameter setting is the equivalent of programming in discrete systems. The slower processes, such as those of volume transmitters and astrocytes, will therefore “program” the faster dynamics. This local “programming” constitutes a kind of set-up, a microgenesis, of dynamic readiness for the thereby anticipated interaction potentialities (Bickhard 2006, 2009c; Brown 1991; Deacon 1997; Ogmen and Breitmeyer 2006; Werner and Kaplan 1963).[Footnote 19]
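The sense in which parameter setting acts as “programming” can be seen in a deliberately simple toy system. The sketch below is an illustrative assumption, not a model of any neural process and not part of the interactivist model itself: a fast process obeys dx/dt = mu*x - x^3, and a slow quantity mu is held constant on the fast time scale. The equation, the function names, and all numerical values are hypothetical choices made only for illustration.

```python
def settle(x0, mu, dt=0.01, steps=20000):
    """Integrate the fast process dx/dt = mu*x - x**3 with forward Euler,
    holding the slow parameter mu fixed, and return the final state."""
    x = x0
    for _ in range(steps):
        x += dt * (mu * x - x ** 3)
    return x


def attractors(mu, starts=(-2.0, -0.5, 0.5, 2.0)):
    """Return the distinct states (rounded to 3 decimals) that the fast
    process settles into from several starting points, for one fixed mu.
    Adding 0.0 normalizes a possible -0.0 to 0.0."""
    return sorted({round(settle(x0, mu), 3) + 0.0 for x0 in starts})


if __name__ == "__main__":
    # The same fast process under two settings of the slow parameter.
    print(attractors(mu=-1.0))  # a single attractor at zero
    print(attractors(mu=1.0))   # two attractors, near -1 and +1
```

Nothing in the fast process itself changes between the two runs; only the parameter differs, and with it the set of states into which the fast process can settle. That is the limited sense of “programming” at issue: the slow setting determines what the fast dynamics is ready to do.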

Such microgenetic readiness, in turn, can be correct, if its presuppositions about the environment are correct, or incorrect, if those presuppositions are incorrect. Microgenesis, hence, constitutes a functional kind of anticipation, with emergent representational truth value. Dynamic microgenesis, thus, yields the anticipatory truth values that ground representation.[Footnote 20] The facts of CNS processes, therefore, entail the interactivist model of representation.

Thus, when we examine how the nervous system in fact functions, we find precisely the kind of anticipatory processes—microgenetic set-up processes—that are at the center of the emergence of interactive representational truth value. The theoretical model and the facts of CNS functioning entail each other—a very strong interrelationship.

In this model, it is the slower microgenesis processes that constitute the core of cognition. Axonal spikes and oscillations carry the results of more local dynamic “computations”; they do not do the processing themselves.[Footnote 21] Some further points that provide a broader framework for this perspective include (Bickhard in preparation; Bickhard and Terveen 1995):

- transmitter substances have evolved from early colony regulating hormones (Bonner 2000);
- these became volume transmitters (Nieuwenhuys 2000);
- classical synapses were a later evolution (Agnati et al. 1992; Agnati et al. 2000; Nieuwenhuys 2000);
- in all cases, transmitters are relatively local hormones, the degree of locality depending on how widely the transmitter substance diffuses;
- in some cases, precisely the same molecule serves as a transmitter in the CNS and as a whole body hormone outside of the blood–brain barrier;
- percentages of astrocytes and other glia increase with increasing CNS complexity; as one review puts the point: “astrocytes tell neurons what to do, besides just cleaning up their mess.” (Nedergaard et al. 2003, p. 523).

3.2.2 The Dynamics of Attractor Landscapes

The slower microgenetic processes, in setting parameters for faster processes, thereby modulate the dynamics—the dynamic spaces—of the faster processes. This is what “programming” amounts to within an endogenously active framework.

A further perspective on these microgenetic processes derives from the recognition that the larger spatial processes (thus the slower processes) induce local “weak coupling” among smaller scale oscillatory processes. The weak coupling follows from the larger scale: multiple smaller scale processes will have similar local modulatory environments from particular larger scale processes, and will, thus, be (weakly) coupled via those local environments.[Footnote 22] Such weak coupling, in turn, induces attractor landscapes for the faster processes (Hoppensteadt and Izhikevich 1997). The “programming”, thus, is constituted in the induction and control of the dynamic attractor landscapes in which the faster processes occur.
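How weak coupling can induce an attractor where none existed can be illustrated with two phase oscillators. This is a textbook-style toy, not the formalism of Hoppensteadt and Izhikevich and not a model of actual neural tissue. It assumes two oscillators whose natural frequencies differ by delta_omega and which are symmetrically coupled with strength k, so that their phase difference phi obeys d(phi)/dt = delta_omega - 2*k*sin(phi). The coupling strength k stands in, by assumption, for the shared local environment; all names and values are hypothetical.

```python
import math


def phase_difference(delta_omega, k, phi0, dt=0.01, steps=20000):
    """Integrate d(phi)/dt = delta_omega - 2*k*sin(phi) with forward Euler.

    phi is the phase difference between two oscillators whose natural
    frequencies differ by delta_omega and which are coupled with strength k.
    Returns the phase difference after steps*dt time units.
    """
    phi = phi0
    for _ in range(steps):
        phi += dt * (delta_omega - 2.0 * k * math.sin(phi))
    return phi


if __name__ == "__main__":
    delta_omega = 0.1  # small frequency mismatch (hypothetical value)
    for k in (0.0, 0.2):  # uncoupled, then weakly coupled
        finals = [phase_difference(delta_omega, k, phi0)
                  for phi0 in (0.5, 1.5, 2.5)]
        print(f"k = {k}:", [round(p, 3) for p in finals])
```

Without coupling, the phase difference simply drifts and its final value depends on where it started. With weak coupling, all three starting points converge on the same locked phase difference, asin(delta_omega / (2*k)): the coupling has created an attractor in the space of phase relations, and changing k or delta_omega reshapes it.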

The interactivist model, thus, induces a view of CNS functioning based on multiple scale inductions and controls of dynamic attractor landscapes. Control of such dynamic landscape microgenesis, therefore, is the center of the control of action and interaction, including internal action and interaction: thought. Thought as internal (inter-)action is a strong convergence of this framework with that of Piaget and pragmatism more broadly, but it is strikingly different from the passive input processing models that still dominate the contemporary literature.

In general, then, (local) temporally slow processes set parameters for—thus modulate—the dynamics of faster processes, and large spatial scale processes can induce weak coupling among smaller scale processes, thus inducing and modulating attractor landscapes in the dynamics of those faster, smaller scale processes.

3.3 Toward Central Nervous System Functional Macro-architecture

Modeling of cognitive brain processes is almost universally in terms of some sort of computational approach, whether symbol manipulation, information processing, or connectionist. Dynamical approaches to cognitive phenomena exist, but tend to be anti-representational (Brooks 1991; Freeman and Skarda 1990; Thelen and Smith 1996; van Gelder 1995). The interactivist model, in contrast, provides a fully dynamic, process framework for the modeling of representational and cognitive processes—especially of emergent representational and cognitive processes.

The interactivist model implies an oscillatory/modulatory functional architecture and this implication receives strong empirical support. Furthermore, not only does this approach imply such a functional framework, it is the only model currently on offer that makes in-principle sense of the multitude of kinds of modulatory relationships actually found. Still further, when the anticipatory nature of microgenetic set-up is recognized, these known properties of neural functioning themselves imply an anticipatory emergence of representational truth value. The model of representation and the phenomena of CNS processing imply each other.[Footnote 23]

Beyond specifics of micro-functioning, however, is the range of issues involved in how local microgenesis is itself modulated. In general, local microgenesis will be modulated by processes occurring within larger scale architectures in the brain. Constructing this part of the model, then, involves integrating what is known about the involvement of more macro-circuits in the CNS within the general dynamic framework of the interactivist model. In other words, how is local microgenesis itself controlled (or modulated)?

Such processes are likely to depend on:

- Modulation of reciprocal couplings between thalamus and cortex, especially the intralaminar and reticular nuclei of the thalamus (Churchland 1995; Hoppensteadt and Izhikevich 1998; Izhikevich 2002; Izhikevich et al. 2003; Purpura and Schiff 1997; Steriade 1996; Steriade et al. 1997a, 1997b);
- Loops from prefrontal cortex through thalamus, and through basal ganglia to thalamus, to other regions of the cortex (Crosson and Haaland 2003; Edelman and Tononi 2000; Fuster 2004, 2008; Koziol and Budding 2009; Marzinzik et al. 2008; Middleton and Strick 2000; Smith et al. 2004);
- Baseline chaotic processes from which functional attractor landscapes can be induced and controlled (Freeman 1995, 2000a, b; Freeman and Barrie 1994; Bickhard 2008b); and
- Involvements of the limbic system in modulating the overall dynamic process with respect to the evaluative aspects of emotions (Bickhard 2007, in preparation; Damasio 1995, 1999; Panksepp 1998).

As with the local microgenetic processes, a great deal that is relevant is known about these more global architectures and processes, and more is being discovered rapidly, but little of it receives a modeling interpretation in terms of a coherent model of cognitive dynamics.

The issue of internal interactions with cognitive processes, of the modulation and control of cognitive processes, is one of the edges of this model. It is the issue of the emergent nature of thought. I turn in the second paper of this discussion to an integrative framework for such macro-CNS functioning. CNS architecture is enormously complex. I will not address this complexity in detail; instead I will provide several functional and evolutionary themes that can help make sense of the macro-CNS as fundamentally an oscillatory-modulatory system.

3.4 (Micro-)Conclusion

The interactivist model provides a dynamic approach to emergent cognitive neural and glial functioning. This model constitutes an application of an underlying process metaphysics and model of representation that legitimates emergence, including normative emergence and, in particular, emergent representation. The model has novel implications for micro-level functioning, implications that are in fact supported by, and, conversely, are themselves implied by, what we know of neural and glial functioning.

This framework is suited for exploring more macro-functioning in the nervous system. It is an alternative to computationalist and connectionist approaches. It involves a model of representation as emergent in certain kinds of dynamic organizations, rather than in transduced encodings or connectionist trained encodings. It is a process model from its non-representational base through the emergence of representational and cognitive processes, and, thus, is optimally suited for exploring the relationships between CNS dynamics and cognitive dynamics.

4 Conclusion

Representational truth value emerges in microgenetic anticipations of future courses of interactive processes. The oscillatory and modulatory basis for such microgenesis can be developed either in terms of the interactive nature of representation and cognition or in terms of what we know about how the micro-functioning of the brain actually works—and either consideration yields the other as a consequence.

The model thus developed, however, is a model of micro-functioning, and the issue that now needs to be addressed is how these micro-level processes are themselves modulated by macro-brain processes. This is the primary focus of the second of these two papers (Bickhard in press).

Notes

Einstein (1990, p. 31).

Note that representation did not exist 13+ billion years ago, and it does now. So it has to have emerged. Any model that cannot account for the emergence of representation is thereby refuted.

Technically, transduction refers to a change in the form of energy. How that could possibly generate a representation, e.g., a sensation, is just as mysterious as how the signet ring impression could do so.

“Transduction, remember, is the function that Descartes assigned to the pineal gland.” (Haugeland 1998, p. 223).

I have (in this discussion) skipped over a preliminary normative emergence—that of normative function. I argue that normative function emerges naturally in living systems, in a manner differing from the standard etiological model of function, and that interaction indication is the crucial (normative) function from which representation emerges (Bickhard 1993, 2004, 2009a; Christensen and Bickhard 2002). Interaction indication is the interface between functional normativity and representational normativity.

This is just an illustration, and leaves multiple issues unaddressed. For example, how can abstractions, such as the number three, be represented within an interactivist framework? The answer is again roughly Piagetian in spirit, though with more changes from Piaget than for small physical objects (Bickhard 2009a). The general programme of modeling the myriad kinds of representational phenomena requires addressing each kind in its own terms.

There are numerous models today that posit effects of action on perception, but not that attempt to model the emergent constitution of representation in terms of (potential) (inter)action. Piaget is among the few who have made that attempt.

The central idea is that processes that are inherently far from thermodynamic equilibrium must be maintained, perhaps via (recursive) self-maintenance, in those far from equilibrium relationships with their environments. Examples range from candle flames to living organisms. Maintenance of such far from equilibrium conditions is, thus, contributory to—normatively functional for—the existence of the system (Bickhard 2009a).

There can also be a distinction between the anticipation and the environmental conditions that would support it. It is the latter conditions that might be called implicit ‘content’.

The impossibility of emergent representation in standard models, e.g., information semantics models, is reflected in arguments for the innatism of a base level of representations (Fodor 1981). But such a position assumes that representation emerged in evolution, and there is no model of how that could occur, nor is there any argument that such evolutionary emergence could not also occur in a single organism’s learning and development (Bickhard 2009c). Instead:

I am inclined to think that the argument has to be wrong, that a nativism pushed to that point becomes unsupportable, that something important must have been left aside. What I think it shows is really not so much an a priori argument for nativism as that there must be some notion of learning that is so incredibly different from the one we have imagined that we don’t even know what it would be like as things now stand. Fodor in Piatelli-Palmarini (1980, p. 269).

The second (macro-level) part of the brain model is discussed in the second of this pair of papers.

The literature on astrocytes has expanded dramatically in the last decades: e.g., Bushong et al. (2004), Chvátal and Syková (2000), Hertz and Zielker (2004), Nedergaard et al. (2003), Newman (2003), Perea and Araque (2007), Ransom et al. (2003), Slezak and Pfreiger (2003), Verkhratsky and Butt (2007), Viggiano et al. (2000).

Note that some models may posit a dynamics by which the threshold per se can be changed. So, in that sense, the threshold is passive relative to whatever changes it, but the focus here is on the sense in which the threshold is passive relative to its inputs, and that form of passivity holds whether or not the threshold per se can be changed.

This is a simplification of a more complex dynamics: (1) there are not just two levels, but, rather, a range of temporal and spatial scales of processes, and (2) influences occur from faster to slower as well as from slower to faster. But the focus here is on the sense in which the slower processes set parameters for faster processes, and that is an asymmetric functional relationship. Faster processes can influence slower processes, but, because they are faster, they do so with a kind of moving average of activity, if at all. Furthermore, as will be discussed in the second paper, the slower processes tend to be modulated by processes external to the local domains, and, thus, potentially less malleable to the influences of local faster processes.

E.g., Zacks et al. (2007).

In doing so, they participate in larger scale oscillatory/modulatory processes. They do not engage in the transmission of semantic information.

Such coupling via larger scale processes will be a meta-modulation of local coupling modulations among small scale processes that occur via shared local extra-cellular environments.

New non-standard modulatory phenomena are today discovered with startling frequency, and these need to be integrated into an overall model. The interactivist model is uniquely suited to be able to address this integration. It is an ongoing, always-under-construction, project.

References

Agnati LF, Bjelke B, Fuxe K (1992) Volume transmission in the brain. Am Sci 80(4):362–373

Agnati LF, Fuxe K, Nicholson C, Syková E (2000) Volume transmission revisited. Progress in brain research, vol 125. Elsevier, Amsterdam

Ashby WR (1960) Design for a brain. Chapman and Hall, London

Bermudez JL (2010) Cognitive science. Cambridge University Press, Cambridge

Bickhard MH (1973) A model of developmental and psychological processes. Ph.D. dissertation, University of Chicago. Also Bickhard MH (1980) A model of developmental and psychological processes. Genet Psychol Monogr 102:61–116

Bickhard MH (1980) Cognition, convention, and communication. Praeger Publishers, New York

Bickhard MH (1988a) Piaget on variation and selection models: structuralism, logical necessity, and interactivism. Hum Dev 31:274–312

Bickhard MH (1988b) The necessity of possibility and necessity. Review of Piaget’s possibility and necessity. Harv Educ Rev 58(4):502–507

Bickhard MH (1993) Representational content in humans and machines. J Exp Theor Artif Intell 5:285–333

Bickhard MH (1997) Cognitive representation in the brain. In: Dulbecco R (ed) Encyclopedia of human biology, 2nd edn. Academic Press, San Diego, pp 865–876

Bickhard MH (2000a) Information and representation in autonomous agents. J Cogn Syst Res 1:65–75

Bickhard MH (2000b) Review of B. Shanon, the representational and the presentational. Mind Mach 10(2):313–317

Bickhard MH (2004) Process and emergence: normative function and representation. Axiomathes Int J Ontol Cogn Syst 14:135–169. Reprinted from: Bickhard MH (2003) Process and emergence: normative function and representation. In: Seibt J (ed) Process theories: crossdisciplinary studies in dynamic categories. Kluwer, Dordrecht, pp 121–155

Bickhard MH (2006) Developmental normativity and normative development. In: Smith L, Voneche J (eds) Norms in human development. Cambridge University Press, Cambridge, pp 57–76

Bickhard MH (2007) The evolutionary exploitation of microgenesis. Interactivist Summer Institute, The American University in Paris

Bickhard MH (2008a) Is embodiment necessary? In: Calvo P, Gomila T (eds) Handbook of cognitive science: an embodied approach. Elsevier, Amsterdam, pp 29–40

Bickhard MH (2008b) The microgenetic dynamics of cortical attractor landscapes. Workshop on “Dynamics in and of Attractor Landscapes”, Parmenides Foundation, Isola d’Elba, Italy

Bickhard MH (2009a) The interactivist model. Synthese 166(3):547–591

Bickhard MH (2009b) Interactivism. In: Symons J, Calvo P (eds) The Routledge Companion to philosophy of psychology. Routledge, London, pp 346–359

Bickhard MH (2009c) The biological foundations of cognitive science. New Ideas Psychol 27:75–84

Bickhard MH (2014) What could cognition be, if not computation … or connectionism, or dynamic systems? J Theor Philos Psychol 35(1):53–66

Bickhard MH (in preparation) The whole person: toward a naturalism of persons—contributions to an ontological psychology

Bickhard MH (in press) Toward a model of functional brain processes II: central nervous system functional macro-architecture. Axiomathes

Bickhard MH, Campbell RL (1989) Interactivism and genetic epistemology. Arch Psychol 57(221):99–121

Bickhard MH, Campbell RL (1996) Topologies of learning and development. New Ideas Psychol 14(2):111–156

Bickhard MH, Richie DM (1983) On the nature of representation: a case study of James Gibson’s theory of perception. Praeger Publishers, New York

Bickhard MH, Terveen L (1995) Foundational issues in artificial intelligence and cognitive science: impasse and solution. Elsevier, Amsterdam

Bonner JT (2000) First signals: the evolution of multicellular development. Princeton University Press, Princeton

Brann DW, Ganapathy KB, Lamar CA, Mahesh VB (1997) Gaseous transmitters and neuroendocrine regulation. Neuroendocrinology 65:385–395

Brooks RA (1991) Intelligence without representation. Artif Intell 47(1–3):139–159

Brown JW (1991) Mental states and perceptual experience. In: Hanlon RE (ed) Cognitive microgenesis: a neuropsychological perspective. Springer, New York, pp 53–78

Bullock TH (1981) Spikeless neurones: Where do we go from here? In: Roberts A, Bush BMH (eds) Neurones without impulses. Cambridge University Press, Cambridge, pp 269–284

Bushong EA, Martone ME, Ellisman MH (2004) Maturation of astrocyte morphology and the establishment of astrocyte domains during postnatal hippocampal development. Int J Dev Neurosci 22(2):73–86

Campbell RJ (1992) Truth and historicity. Oxford University Press, Oxford

Campbell RJ (2009) A process-based model for an interactive ontology. Synthese 166(3):453–477

Carey S (2009) The origin of concepts. Oxford University Press, Oxford

Carlson NR (2013) Physiology of behavior, 11th edn. Pearson, Upper Saddle River

Christensen WD, Bickhard MH (2002) The process dynamics of normative function. Monist 85(1):3–28

Christensen WD, Hooker CA (2000) Autonomy and the emergence of intelligence: organised interactive construction. Commun Cogn Artif Intell 17(3–4):133–157

Churchland PM (1995) The engine of reason, the seat of the soul. MIT Press, Cambridge

Chvátal A, Syková E (2000) Glial influence on neuronal signaling. In: Agnati LF, Fuxe K, Nicholson C, Syková E (eds) Volume transmission revisited. Progress in brain research, vol 125. Elsevier, Amsterdam, pp 199–216

Clayton P, Davies P (2006) The re-emergence of emergence. Oxford University Press, Oxford

Crosson B, Haaland KY (2003) Subcortical functions in cognition: toward a consensus. J Int Neuropsychol Soc 9:1027–1030

Cummins R (1996) Representations, targets, and attitudes. MIT Press, Cambridge

Damasio AR (1995) Descartes’ error: emotion, reason, and the human brain. Avon, New York

Damasio AR (1999) The feeling of what happens. Harcourt, New York

Deacon TW (1997) The symbolic species. Norton, New York

Deacon TW (2006) Emergence: the hole at the wheel’s hub. In: Clayton P, Davies P (eds) The re-emergence of emergence. Oxford University Press, Oxford, pp 111–150

Deacon TW (2012) Incomplete nature. Norton, New York

Dewey J (1960/1929) The quest for certainty. Capricorn Books, New York

Di Paolo EA (2005) Autopoiesis, adaptivity, teleology, agency. Phenomenol Cogn Sci 4(4):429–452

Dowling JE (1992) Neurons and networks. Harvard University Press, Cambridge

Dretske FI (1988) Explaining behavior. MIT Press, Cambridge

Edelman GM, Tononi G (2000) A universe of consciousness. Basic, New York

Einstein A (1990) Math Intell 12(2):31; from a letter written in 1916

Fodor JA (1975) The language of thought. Crowell, New York

Fodor JA (1981) The present status of the innateness controversy. In: Fodor J (ed) RePresentations. MIT Press, Cambridge, pp 257–316

Fodor JA (1987) Psychosemantics. MIT Press, Cambridge

Fodor JA (1990a) A theory of content and other essays. MIT Press, Cambridge

Fodor JA (1990b) Information and representation. In: Hanson PP (ed) Information, language, and cognition. University of British Columbia Press, Vancouver, pp 175–190

Fodor JA (1991) Replies. In: Loewer B, Rey G (eds) Meaning in mind: Fodor and his critics. Blackwell, Oxford, pp 255–319

Fodor JA (1994) Concepts: a potboiler. Cognition 50:95–113

Fodor JA (1998) Concepts: where cognitive science went wrong. Oxford University Press, Oxford

Fodor JA (2003) Hume variations. Oxford University Press, Oxford

Freeman WJ (1995) Societies of brains. Erlbaum, Mahwah

Freeman WJ (2000a) How brains make up their minds. Columbia, New York

Freeman WJ (2000b) Mesoscopic brain dynamics. Springer, London

Freeman WJ, Barrie JM (1994) Chaotic oscillations and the genesis of meaning in cerebral cortex. In: Buzsaki G, Llinas R, Singer W, Berthoz A, Christen Y (eds) Temporal coding in the brain. Springer, Berlin, pp 13–37

Freeman WJ, Skarda CA (1990) Representations: Who needs them? In: McGaugh JL, Weinberger NM, Lynch G (eds) Brain organization and memory. Oxford University Press, Oxford, pp 375–380

Fuster JM (2004) Upper processing stages of the perception-action cycle. Trends Cogn Sci 8(4):143–145

Fuster JM (2008) The prefrontal cortex, 4th edn. Elsevier, Amsterdam

Fuxe K, Agnati LF (1991) Two principal modes of electrochemical communication in the brain: volume versus wiring transmission. In: Fuxe K, Agnati LF (eds) Volume transmission in the brain: novel mechanisms for neural transmission. Raven, New York, pp 1–9

Galves A, Hale JK, Rocha C (2002) Differential equations and dynamical systems. American Mathematical Society, Providence

Graham DW (2006) Explaining the cosmos. Princeton University Press, Princeton

Haag J, Borst A (1998) Active membrane properties and signal encoding in graded potential neurons. J Neurosci 18(19):7972–7986

Hale J, Koçak H (1991) Dynamics and bifurcations. Springer, New York

Hall ZW (1992) Molecular neurobiology. Sinauer, Sunderland

Haugeland J (1998) Having thought. Harvard University Press, Cambridge

Hertz L, Zielker HR (2004) Astrocytic control of glutamatergic activity: astrocytes as stars of the show. Trends Neurosci 27(12):735–743

Hirsch MW, Smale S, Devaney RL (2004) Differential equations, dynamical systems, and an introduction to chaos, 2nd edn. Elsevier, Amsterdam

Hooker CA (2009) Interaction and bio-cognitive order. Synthese 166(3):513–546

Hoppensteadt FC, Izhikevich EM (1997) Weakly connected neural networks. Springer, New York

Hoppensteadt FC, Izhikevich EM (1998) Thalamo-cortical interactions modeled by weakly connected oscillators: could the brain use FM radio principles? Biosystems 48:85–94

Ivancevic VG, Ivancevic TT (2006) Geometrical dynamics of complex systems. Springer, Dordrecht

Izhikevich EM (2001) Resonate and fire neurons. Neural Netw 14:883–894

Izhikevich EM (2002) Resonance and selective communication via bursts in neurons. Biosystems 67:95–102

Izhikevich EM (2007) Dynamical systems in neuroscience. MIT Press, Cambridge

Izhikevich EM, Desai NS, Walcott EC, Hoppensteadt FC (2003) Bursts as a unit of neural information: selective communication via resonance. Trends Neurosci 26(3):161–167

Jost J (2005) Dynamical systems. Springer, Berlin

Koziol LF, Budding DE (2009) Subcortical structures and cognition. Springer, New York

Levine A (2009) Partition epistemology and arguments from analogy. Synthese 166(3):593–600

Lyubich M, Milnor JW, Minsky YN (2001) Laminations and foliations in dynamics, geometry and topology. American Mathematical Society, Providence

Marder E (2012) Neuromodulation of neuronal circuits: back to the future. Neuron 76:1–11

Marder E, Thirumalai V (2002) Cellular, synaptic and network effects of neuromodulation. Neural Netw 15:479–493

Marzinzik F, Wahl M, Schneider G-H, Kupsch A, Curio G, Klostermann F (2008) The human thalamus is crucially involved in executive control operations. J Cogn Neurosci 20(10):1903–1914

Matlin MW (2012) Cognition. Wiley, Hoboken

Maturana HR, Varela FJ (1980) Autopoiesis and cognition. Reidel, Dordrecht

Maturana HR, Varela FJ (1987) The tree of knowledge. New Science Library, Boston

McCulloch W, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5:115–133

Middleton FA, Strick PL (2000) Basal ganglia and cerebellar loops: motor and cognitive circuits. Brain Res Rev 31:236–250

Millikan RG (1984) Language, thought, and other biological categories. MIT Press, Cambridge

Millikan RG (1993) White queen psychology and other essays for Alice. MIT Press, Cambridge

Moreno A, Etxeberria A, Umerez J (2008) The autonomy of biological individuals and artificial models. Biosystems 91:309–319

Mourelatos APD (1973) Heraclitus, Parmenides, and the naïve metaphysics of things. In: Lee EN, Mourelatos APD, Rorty RM (eds) Exegesis and argument: studies in Greek philosophy presented to Gregory Vlastos. Humanities Press, New York, pp 16–48

Nauta WJH, Feirtag M (1986) Fundamental neuroanatomy. Freeman, San Francisco

Nedergaard M, Ransom B, Goldman SA (2003) New roles for astrocytes: redefining the functional architecture of the brain. Trends Neurosci 26(10):523–530

Newman EA (2003) New roles for astrocytes: regulation of synaptic transmission. Trends Neurosci 26(10):536–542

Nieuwenhuys R (2000) Comparative aspects of volume transmission, with sidelight on other forms of intercellular communication. In: Agnati LF, Fuxe K, Nicholson C, Syková E (eds) Volume transmission revisited. Progress in brain research, vol 125. Elsevier, Amsterdam, pp 49–126

Ogmen H, Breitmeyer BG (2006) The first half second. MIT Press, Cambridge

Panksepp J (1998) Affective neuroscience. Oxford University Press, Oxford

Perea G, Araque A (2007) Astrocytes potentiate transmitter release at single hippocampal synapses. Science 317:1083–1086

Piaget J (1954) The construction of reality in the child. Basic, New York

Piaget J (1971) Biology and knowledge. University of Chicago Press, Chicago

Piaget J (1987) Possibility and necessity, vols 1 and 2. University of Minnesota Press, Minneapolis

Port R, van Gelder TJ (1995) Mind as motion: dynamics, behavior, and cognition. MIT Press, Cambridge

Purpura KP, Schiff ND (1997) The thalamic intralaminar nuclei. Neuroscientist 3:8–15

Ransom B, Behar T, Nedergaard M (2003) New roles for astrocytes (stars at last). Trends Neurosci 26(10):520–522

Rescher N (1980) Scepticism. Rowman and Littlefield, Totowa

Rescher N (1996) Process metaphysics. SUNY Press, Albany

Roberts A, Bush BMH (eds) (1981) Neurones without impulses. Cambridge University Press, Cambridge

Rosenthal SB (1983) Meaning as habit: some systematic implications of Peirce’s pragmatism. In: Freeman E (ed) The relevance of Charles Peirce. Monist, La Salle, pp 312–327

Seibt J (1996) Existence in time: from substance to process. In: Faye J, Scheffler U, Urchs M (eds) Perspectives on time. Boston studies in philosophy of science. Kluwer, Dordrecht, pp 143–182

Seibt J (2000a) Pure processes and projective metaphysics. Philos Stud 101:253–289

Seibt J (2000b) The dynamic constitution of things. In: Faye J, Scheffler U, Urchs M (eds) Things, facts, and events. Poznan studies in the philosophy of the sciences and the humanities, vol 72, pp 241–278

Seibt J (2003) Free process theory: towards a typology of occurrings. In: Seibt J (ed) Process theories: cross disciplinary studies in dynamic categories. Kluwer, Dordrecht, pp 23–55

Seibt J (2009) Forms of emergent interaction in general process theory. Synthese 166(3):479–512

Shanon B (1993) The representational and the presentational. Harvester Wheatsheaf, Hertfordshire

Slezak M, Pfrieger FW (2003) New roles for astrocytes: regulation of CNS synaptogenesis. Trends Neurosci 26(10):531–535

Smith Y, Raju DV, Pare J-F, Sidibe M (2004) The thalamostriatal system: a highly specific network of the basal ganglia circuitry. Trends Neurosci 27(9):520–527

Steriade M (1996) Arousal: revisiting the reticular activating system. Science 272:225–226

Steriade M, Jones EG, McCormick DA (1997a) Thalamus. Vol. I. Organisation and function. Elsevier, Amsterdam

Steriade M, Jones EG, McCormick DA (1997b) Thalamus. Vol. II. Experimental and clinical aspects. Elsevier, Amsterdam

Thelen E, Smith LB (1996) A dynamic systems approach to the development of cognition and action. MIT Press, Cambridge

Tiles JE (1990) Dewey. Routledge, London

van Gelder TJ (1995) What might cognition be, if not computation? J Philos 92(7):345–381

van Gelder T, Port RF (1995) It’s about time: an overview of the dynamical approach to cognition. In: Port RF, van Gelder T (eds) Mind as motion. MIT Press, Cambridge, pp 1–43

Varela FJ (1997) Patterns of life: intertwining identity and cognition. Brain Cogn 34:72–87

Varela FJ, Thompson E, Rosch E (1991) The embodied mind. MIT Press, Cambridge

Verkhratsky A, Butt A (2007) Glial neurobiology. Wiley, Chichester

Viggiano D, Ibrahim M, Celio MR (2000) Relationship between glia and the perineuronal nets of extracellular matrix in the rat cerebral cortex: importance for volume transmission in the brain. In: Agnati LF, Fuxe K, Nicholson C, Syková E (eds) Volume transmission revisited. Progress in brain research, vol 125. Elsevier, Amsterdam, pp 193–198

Vuyk R (1981) Piaget’s genetic epistemology 1965–1980, vol II. Academic, New York

Werner H, Kaplan B (1963) Symbol formation. Wiley, New York

Zacks JM, Speer NK, Swallow KM, Braver TS, Reynolds JR (2007) Event perception: a mind–brain perspective. Psychol Bull 133(2):273–293

Acknowledgments

Thanks are due to Cliff Hooker for comments on an earlier version of this paper.

Cite this article

Bickhard, M.H. Toward a Model of Functional Brain Processes I: Central Nervous System Functional Micro-architecture. Axiomathes 25, 217–238 (2015). https://doi.org/10.1007/s10516-015-9275-x
