Abstract

A saliency detection task simulates the attention mechanism of the human visual system: it focuses on what draws the most attention in a picture and performs accurate localization and pixel-level segmentation of the object. Existing detection methods based on neural networks usually compute the object position information and edge information separately in each layer, resulting in computational redundancy and insufficient use of information. To address this issue, this paper proposes an attention-guided detection network using an autoencoder (AGA-Net). First, with a proposed attention-guided multi-scale (AM) module, results from deep layers are used to highlight foreground features, suppress background features, and extract features at different scales that are more relevant to the detection task. Second, a bi-refinement (BR) module composed of two sub-networks is proposed. One sub-network extracts foreground information to find redundant areas in the prediction results, and the other uses background information to supplement missing boundary information. Finally, the new model uses a variational autoencoder (VAE) branch to perform an image restoration task. This branch shares the encoder module with the object detection task and helps it escape from local minima during convergence. Extensive experiments on six benchmark datasets compare the proposed method with 19 state-of-the-art methods and demonstrate that the new method achieves the best results.

1 Introduction

Salient object detection methods aim to automatically, quickly, and accurately identify the most attractive objects in static pictures or dynamic videos while ignoring the surrounding information, mimicking the human visual attention mechanism [1]. Numerous effective models were proposed before deep learning became widespread in image recognition and classification. Since Wang et al. [2] applied deep learning models to images containing salient objects, new and more powerful models have continued to appear. Because neural networks learn from large amounts of data and can select the most discriminative features without manual intervention, they outperform traditional methods in both processing time and detection results, and they provide state-of-the-art performance in salient object detection [3].

Salient object detection networks generally adopt an encoder-decoder structure [4]. In the encoder stage, features are extracted from the input image by convolution. In the decoder stage, which mirrors the encoder, a deconvolution or interpolation algorithm restores the features layer by layer to the original resolution, as in BASNet [5] and MINet [6]. Such a structure is simple, has relatively few parameters, extracts features at different scales, and processes images end to end. Similar structures are widely used in medical imaging [7], image semantic segmentation [8], and image attribute editing [9]. However, this design has several problems that limit performance: detailed information is lost through down-sampling and the decoder module lacks supervision; directly fusing inaccurate encoder information with decoder information causes information confusion; and the confidence of object boundaries in the predicted map is low.

Down-sampling reduces the image’s resolution, which inevitably loses detailed information, especially that of small targets, and hinders accurate localization of boundaries. To address this problem, some methods divide the entire image into sub-blocks with a super-pixel segmentation algorithm, which reduces how often down-sampling is applied. However, these methods are time-consuming and not end-to-end, because they must switch back and forth between super-pixel segmentation and the saliency detection algorithm. To build end-to-end models, many networks take the whole picture as input, which is closer to the human visual mechanism. When such models use down-sampling, they alleviate the resulting information loss by passing the features from before sampling to the higher-level features after sampling through skip (jump), shortcut, or dense connections, which supplement the missing information. These methods have a simple structure and improve detection results. They also alleviate vanishing or exploding gradients, allowing the network depth to increase further. Because an attention mechanism can enhance the ability to distinguish similar colors and textures and lets a model concentrate on the primary features as needed, it has been widely used in computer vision [10].
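To make the detail-loss argument concrete, the following minimal Python sketch pools a one-pixel-wide line with 2 × 2 max pooling and upsamples it again. It is an illustration only, assuming single-channel maps stored as nested lists; nearest-neighbour upsampling is a stand-in for the deconvolution or interpolation used in real decoders. The restored line is two pixels wide, so its exact boundary position is lost, which is the information a skip connection forwarding the pre-pooling map can restore.

```python
def max_pool2x2(x):
    """2x2 max pooling with stride 2; assumes even height and width."""
    return [
        [max(x[r][c], x[r][c + 1], x[r + 1][c], x[r + 1][c + 1]) for c in range(0, len(x[0]), 2)]
        for r in range(0, len(x), 2)
    ]


def upsample_nearest2x(x):
    """Nearest-neighbour 2x upsampling (stand-in for a decoder's upsampling step)."""
    out = []
    for row in x:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))  # repeat each row twice
    return out


# A one-pixel-wide vertical line at column 3 of an 8x8 map.
line = [[1.0 if c == 3 else 0.0 for c in range(8)] for _ in range(8)]
restored = upsample_nearest2x(max_pool2x2(line))
print(restored[0])  # [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]: the line is now two pixels wide
```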

Post-processing is a standard procedure for improving boundary segmentation; examples include super-pixel-based filters and conditional random fields (CRFs). However, these methods cannot be integrated into the network and require switching back and forth between the CPU and GPU, which increases the complexity of the overall model. Some networks instead refine the edge region one or more times by adding sub-networks [11]. These models also have shortcomings. On the one hand, the refinement structure is shallower than the main model, so its feature extraction ability is limited and it cannot fully correct boundary errors. On the other hand, the refinement structure does not fully use the information in the original image.

To address the problems above, this study proposes an optimized model based on U2Net [12] that simultaneously handles two different tasks: salient object detection and reconstruction of the original image. While a symmetric encoder and decoder cooperate to detect salient objects, a variational autoencoder (VAE) branch is integrated into the network. To reduce the difference between the reconstructed image and the input image, the new task forces the shared encoder to thoroughly learn the hidden features, the overall structure, and the texture details of the image [13], which improves image information extraction. Shared learning across tasks favours general representations and lets the tasks complement each other, and the additional loss function constrains the space in which the optimal solution is sought, which helps the salient object detection model escape from local minima during convergence.

To highlight more important and relevant features, an attention-guided multi-scale (AM) module is designed. It processes the information iteratively across several groups of convolutions. Setting different dilation rates for the dilated convolutions, together with a down-sampling operation, enlarges the receptive field. More importantly, the module highlights information related to the detection task and filters out irrelevant information while adding only a small amount of computation.

Furthermore, we propose a bi-refinement (BR) module to refine the boundary in the coarse predicted saliency map. As a branch of the prediction network, its structure is similar to the coarse detection network but shallower and with fewer parameters. Unlike the refinement structure in BASNet [5], which uses only the coarse predicted map at the original scale, the BR module also takes the original image as input, supplying more information for the network to learn. By multiplying the predicted results with the original image, the foreground and background areas of the original image are extracted and used to cut redundant areas and supplement missing information, respectively. Compared with the prediction results of the main network, the fused output is considerably improved, especially in the edge region.

The main contributions of this study are as follows:

1. The task of reconstructing the original image is added, and the encoder’s ability to compress the information of the whole image and its textures is improved through the joint optimization of different tasks.

2. An attention-guided multi-scale (AM) module is proposed to alleviate the performance degradation caused by directly fusing inaccurate encoder information with decoder information, and to increase the correlation between the extracted information and the task.

3. We propose a bi-refinement (BR) module that uses the foreground information in the original image to refine the edges of the prediction result and the background information to supplement missing details, so that the edge area is more complete and more accurate.

4. We comprehensively evaluate the proposed attention-guided detection network using an autoencoder (AGA-Net) on six saliency detection datasets and compare the results with 19 state-of-the-art models; AGA-Net achieves the best performance and more accurate object boundaries.

2 Related work

Early deep-learning-based saliency detection methods combine the powerful automatic feature extraction of deep networks with traditional methods to obtain more accurate results. SuperCNN [14] takes super-pixel sequences as input and, through two weight-sharing branches, learns a pixel-level saliency information flow and a super-pixel information flow; it trains and optimizes the two flows alternately and combines them to generate the saliency map. Information at different scales helps a detection network accurately identify salient objects of different sizes. Wang et al. [2] designed two networks, one extracting local information from sliding windows and the other learning global information from the RGB image, and merged their results to obtain the final saliency map. These networks must cut image regions out with super-pixels or sliding windows, which increases the computational cost.

2.1 Fusion of different scale features

An end-to-end structure can take an entire image directly as input [15]. DUS [16] constructed a fully convolutional neural network (CNN) with dilated convolution and learned latent saliency at the pixel level. The DSS model [17] adds shortcut connections between different layers and fuses low-level and high-level image features through a series of side outputs. Liu et al. [18] designed the PiCANet model to learn informative contextual features for every pixel and embedded it into U-Net, improving saliency detection performance by integrating global and multi-scale local context information.

Fusing features of different scales enlarges the receptive field of the convolutions, which helps recognize salient objects of different sizes. Liu et al. [19] proposed a pyramid pooling module (PPM) that integrates features at four different scales: after information is extracted at each scale, a 1 × 1 convolution reduces the number of channels, and the features are fused by concatenation. However, Pang et al. [6] argue that directly fusing features of different scales extracted by a pyramid module causes fusion interference, so they proposed an aggregate interaction module (AIM), which effectively integrates information of different resolutions through collaborative learning. The information at different scales is rescaled to the same resolution, and the weights of the different features are then learned to reduce repetition and interference between them. However, because these networks lack a module designed specifically for object edges, their saliency maps have low confidence at the edges and do not fully use multi-scale features to refine the edge region.

2.2 Attention

The attention mechanism was first proposed for machine translation [20], where an alignment model learns the weight of each word in a source sentence. Because its structure is relatively simple and it can effectively weight meaningful information, it is widely used in fields such as image captioning, medical organ segmentation, and visual question answering. In salient object detection, Li et al. [21] add a recurrent attention network to repeatedly extract and refine the image features of different regions. The bidirectional message passing network BDMP [22] controls the interaction between information at different layers through carefully designed gate functions. Sun et al. [23] use an attention mechanism to transfer top-level global semantic information layer by layer to the shallow layers, solving the problem that shallow layers of the backbone cannot extract global information. In subsequent research, Zhang et al. [24] proposed a progressive attention guided (PAG) recurrent mechanism for saliency detection, in which high-level semantics guide the low-level network, from top to bottom, to extract the spatial location information of salient targets. Channel attention and spatial attention have also been introduced alongside a pyramid feature extraction module (CPFE) to focus on critical channels and image regions. The experimental results of these models show that the attention mechanism can effectively highlight important information [25] and improve detection results.

3 Proposed approach

This study designs a network model for salient object detection, as shown in Fig. 1. The model processes an input image end to end and uses a deep supervision mechanism [26]. To balance computational load and network depth, we use max pooling to gradually reduce the image’s resolution while increasing the number of feature channels. Forcing the encoder to compress the image into compact hidden features can effectively improve the model’s ability to understand images.

For this reason, we add a VAE branch that performs an image restoration task, forcing the network to fully extract global information and local texture information. In the skip-connection fusion stage, the output of each encoder layer is additionally processed by an attention-guided multi-scale (AM) module to avoid information confusion and to highlight features related to the detection task. The module also includes dilated convolutions with different rates and down-sampling operations, which enlarge the receptive field of the features. To obtain more accurate boundaries, the prediction results of the main network and the original image are further fed into the refinement module to optimize the edge details of the salient object, and its output is taken as the saliency prediction of the whole model.

In this research, the saliency detection branch uses a hybrid loss function that measures the difference between the predicted results and the ground truth; it combines the binary cross-entropy (BCE), structural similarity (SSIM), and intersection over union (IOU) losses:

\[ L_{SOD} = \sum_{k} \left( w_{1} L_{BCE}^{(k)} + w_{2} L_{SSIM}^{(k)} + w_{3} L_{IOU}^{(k)} \right) \]

where LSOD covers the losses of every supervised output k of the saliency detection branch and of the refinement module, w1, w2 and w3 are the weights of the different loss functions, and their values in this study are all 1.

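Binary cross-entropy loss measures the distance between the predicted value and the ground-truth label at the pixel level:

\[ L_{BCE} = -\sum_{(i,j)} \left[ G(i,j)\log P(i,j) + \left(1 - G(i,j)\right)\log\left(1 - P(i,j)\right) \right] \]

where P(i,j) is the predicted saliency value at pixel (i,j), and G(i,j) ∈ {0,1} is the ground truth at the corresponding position.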

SSIM loss captures the structural relationships within image regions at the patch level:

\[ L_{SSIM} = 1 - \frac{(2\mu_{i}\mu_{j} + C_{1})(2\sigma_{ij} + C_{2})}{(\mu_{i}^{2} + \mu_{j}^{2} + C_{1})(\sigma_{i}^{2} + \sigma_{j}^{2} + C_{2})} \]

where μi, μj, σi and σj are the means and standard deviations of the pixels in the patches taken by a sliding window on the predicted map and the ground-truth map, σij is their covariance, and C1 and C2 prevent division by zero; their values are 0.01² and 0.03², respectively.

Unlike the other two losses, the IOU loss guides the network at the image level, making the overall shape, size, and structure of the detected object more similar to the ground truth:

\[ L_{IOU} = 1 - \frac{\sum_{(i,j)} P(i,j)G(i,j)}{\sum_{(i,j)} \left[ P(i,j) + G(i,j) - P(i,j)G(i,j) \right]} \]
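The three losses and their sum over the supervised outputs can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' code: maps are single-channel nested lists with values in [0, 1], the BCE term is summed over pixels, the SSIM term uses a single window covering the whole map instead of a sliding window, and the weights default to 1 as stated for w1, w2 and w3.

```python
import math

EPS = 1e-7  # clamps probabilities away from 0 and 1 before taking logs


def bce_loss(pred, gt):
    """Pixel-level binary cross-entropy, summed over all pixels."""
    total = 0.0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            p = min(max(p, EPS), 1.0 - EPS)
            total -= g * math.log(p) + (1.0 - g) * math.log(1.0 - p)
    return total


def ssim_loss(pred, gt, c1=0.01 ** 2, c2=0.03 ** 2):
    """1 - SSIM over ONE window covering the whole map (simplification of the sliding window)."""
    xs = [p for row in pred for p in row]
    ys = [g for row in gt for g in row]
    n = len(xs)
    mu_x, mu_y = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mu_x) ** 2 for x in xs) / n
    var_y = sum((y - mu_y) ** 2 for y in ys) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(xs, ys)) / n
    ssim = ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
    return 1.0 - ssim


def iou_loss(pred, gt):
    """Soft IoU loss computed over the whole image."""
    pairs = [(p, g) for prow, grow in zip(pred, gt) for p, g in zip(prow, grow)]
    inter = sum(p * g for p, g in pairs)
    union = sum(p + g - p * g for p, g in pairs)
    return 1.0 - inter / union if union > 0 else 0.0


def hybrid_loss(pred, gt, w=(1.0, 1.0, 1.0)):
    """w1 * BCE + w2 * SSIM + w3 * IoU for one predicted map."""
    return w[0] * bce_loss(pred, gt) + w[1] * ssim_loss(pred, gt) + w[2] * iou_loss(pred, gt)


def sod_loss(outputs, gt):
    """Sum of the hybrid loss over every supervised output (side outputs and refinement output)."""
    return sum(hybrid_loss(o, gt) for o in outputs)
```

The three terms are complementary: BCE penalizes each pixel independently, SSIM compares local structure, and IoU scores the whole foreground region, so a prediction has to be right at all three levels to drive the total towards zero.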

3.1 Autoencoder regularization

In salient object detection, the category of each pixel in the image must be determined accurately. However, salient objects belong to diverse and complicated classes. A more significant problem is that an object in an image may belong to the foreground while other objects of the same category belong to the background [27]. On the surface, each image appears independent of the others. On the public ImageNet [28] dataset, well-designed network models can classify images accurately after sufficient training. When these models and their trained parameters are transferred to other image-related tasks, fine-tuning alone achieves excellent results with fast convergence. One explanation is that natural images are composed of small textures with similar structures: although images differ greatly on the surface, they share an implicit underlying distribution. During training, a neural network can extract this hidden information by learning the relationships between pixel contexts. However, to avoid the influence of the additional training images provided by other datasets, the model proposed in this article is trained from scratch. Instead, it learns information from similar structures through an additional task.

To fully mine the implicit information in the image data, a VAE branch is added to the model. A general autoencoder regularization module consists of an encoder and a decoder. The encoder maps the input image to the hidden layer, and the decoder reconstructs the original image from the hidden layer. The difference between the input image and the restored image is taken as the reconstruction error. Minimizing this error yields better network parameters; ideally, the input and output images agree at every pixel. During reconstruction, only the hidden-layer features, that is, samples drawn from the estimated normal distribution, are used instead of the original input image. Therefore, all the information needed for reconstruction must come from low-resolution deep features. Because of this, the demands on the encoder are high: it must compress redundant information while preserving both the local details and the global information of the image. The overall structure of the autoencoder is similar to that of the saliency detection network, so this study shares the encoder module between the two tasks, which reduces the number of model parameters and exploits the advantages of multi-task learning. The loss of this branch consists mainly of the reconstruction error and a penalty on the estimated normal distribution.

Here \(w_{1}^{\prime }\) represents the weight of each loss function, the \(L_{L_{2}}\) loss is used to match the VAE branch output to the original image, LKL is the penalty term between the estimated normal distribution \({N\left (\mu , \sigma ^{2}\right )}\) and the prior distribution N(0,1), and N is the number of pixels in the image.

Therefore, the loss function of the whole network combines the loss of the object saliency detection network and the loss of the VAE branch, where δ weights the losses of the two tasks and is set to 0.5 in this paper.
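The VAE-branch terms and the task weighting can be sketched as below. The exact formulas are not reproduced in this text, so several choices here are assumptions: the KL term uses the standard closed form for a diagonal Gaussian against N(0,1), divided by the number of pixels N; the reconstruction term is a mean squared (L2) error; the loss weights `w_l2` and `w_kl` are hypothetical; and the additive form L = L_SOD + δ·L_VAE is one plausible reading of how δ = 0.5 is applied.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), element-wise (the VAE sampling step)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var, n_pixels):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)) summed over latent units, divided by N pixels."""
    total = sum(0.5 * (m * m + math.exp(lv) - lv - 1.0) for m, lv in zip(mu, log_var))
    return total / n_pixels


def l2_reconstruction(x, x_hat):
    """Mean squared error between the (flattened) input image and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def vae_loss(x, x_hat, mu, log_var, w_l2=1.0, w_kl=1.0):
    # w_l2 and w_kl are hypothetical weights; their values are not given in this text.
    return w_l2 * l2_reconstruction(x, x_hat) + w_kl * kl_to_standard_normal(mu, log_var, len(x))


def total_loss(l_sod, l_vae, delta=0.5):
    # Assumed additive combination of the two task losses, with delta = 0.5 as in the paper.
    return l_sod + delta * l_vae
```

The KL term is zero only when the encoder predicts μ = 0 and σ = 1, so it pulls the latent distribution towards the prior while the L2 term pulls the reconstruction towards the input.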

3.2 Attention-guided multi-scale module

To highlight the features most relevant to the task and learn information at different scales, we propose an attention-guided multi-scale (AM) module. Salient objects vary in size, and using dilated convolutions with different rates to adapt to objects of different sizes is an effective method [29]. In the new model structure, shown in Fig. 2, the encoder output \(En_{n}(X_{i,j})\) is multiplied element-wise by the prediction result \(Out_{n-1}\) passed from the lower-resolution layer, giving \(En_{n}(X_{i,j}) \otimes Out_{n-1}\), where \(X_{i,j}\) denotes the input feature. Using the predicted result as attention highlights the foreground region and suppresses the background region, so the selected features are task-related. Then, several groups of 1 × 1 convolutions compress the encoder features. The number of convolution kernels can be chosen according to the resolution of the feature map, which controls the number of module parameters: in the skip connections between the shallow, higher-resolution encoder and decoder layers, the number of channels can be reduced to avoid excessive model complexity. Next, the information in each group is extracted by a 3 × 3 convolution whose dilation rate doubles from one branch to the next, starting from the first branch, until it reaches 16. The remaining group reduces the resolution through max-pooling down-sampling before being fed into the convolution, and its output feature map is restored to the original resolution by interpolation. Directly using an even larger dilation rate would gather information from an overly large window and reduce the reliability of the features. Reducing the resolution through down-sampling instead keeps adding information at new scales while the dilation rate stays unchanged, and it effectively reduces the module’s complexity.

Illustration of the attention-guided multi-scale module. First, the transmitted prediction results are taken as the attention and the foreground information more relevant to the task is highlighted. Then, the information of different scales is learned by setting the atrous convolution of different rates. It is worth noting that the scale parameter changes with the feature depth of encoders. The smaller the resolution of the input picture, the smaller the scale, and vice versa
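The two core operations of the AM module, attention gating by the transmitted prediction and a dilated 3 × 3 convolution, can be sketched on single-channel nested lists as follows. This is a minimal illustration, not the module itself: it omits the 1 × 1 compression, the channel dimension, and the pooling branch, and the convolution is a zero-padded cross-correlation (the convention deep-learning frameworks use).

```python
def attention_gate(enc, pred):
    """Element-wise product En_n(X) * Out_{n-1}: keeps the foreground, suppresses the background."""
    return [[e * p for e, p in zip(er, pr)] for er, pr in zip(enc, pred)]


def dilated_conv3x3(x, kernel, d):
    """Zero-padded 3x3 cross-correlation with dilation rate d (stride 1, same-size output)."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    rr, cc = r + (i - 1) * d, c + (j - 1) * d  # taps are d pixels apart
                    if 0 <= rr < h and 0 <= cc < w:
                        acc += kernel[i][j] * x[rr][cc]
            out[r][c] = acc
    return out
```

With an impulse at the centre of a 9 × 9 map and an all-ones kernel, rate d = 2 spreads the response to a 5 × 5 footprint sampled every second pixel; if the prediction map is zero at the impulse, the gate removes it and the convolution output is empty, which is how background responses are suppressed before multi-scale extraction.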

We also add short connections within and between branches: the output of the previous group is added to the input of the next group. In this way, each group obtains the information of the previous group together with the accumulated information extracted at different scales, which further extends the fusion of multi-scale information. Inspired by [30], the scale parameter of the AM module changes with the feature depth of the encoder: the deeper the encoder layer, the smaller the scale parameter, and vice versa. The module can thus adapt to how thoroughly the features have already been extracted. For example, the output of En_1 has passed through only one convolution layer but must be fused with the decoder features De_2, which have passed through many feature extraction layers, so the scale value must be larger to balance the information.
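The receptive-field gain from the dilation rates and the cascaded groups can be checked with a few lines of arithmetic. The sketch assumes rates 1, 2, 4, 8, 16 (doubling from 1 up to 16), stride-1 3 × 3 convolutions, and a 2 × 2, stride-2 max pool for the pooling branch; the 1 × 1 compression convolutions are ignored because they do not enlarge the receptive field.

```python
def effective_kernel(k, d):
    """Span of a k x k convolution with dilation rate d: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (d - 1)


def cascaded_receptive_fields(rates, k=3):
    """Receptive field of each group when the previous group's output feeds the next group.

    Each stacked stride-1 convolution adds (k - 1) * d to the receptive field.
    """
    rf, fields = 1, []
    for d in rates:
        rf += (k - 1) * d
        fields.append(rf)
    return fields


def pooled_branch_rf(s, k, d):
    """Receptive field of an s x s, stride-s max pool followed by a dilated k x k convolution."""
    return s * effective_kernel(k, d)


rates = [1, 2, 4, 8, 16]  # assumed: doubling from 1 up to 16
print([effective_kernel(3, d) for d in rates])  # [3, 5, 9, 17, 33]
print(cascaded_receptive_fields(rates))         # [3, 7, 15, 31, 63]
print(pooled_branch_rf(2, 3, 16))               # 66
```

Independent branches would reach at most 33 pixels, while chaining the groups roughly doubles that to 63, and pooling before a rate-16 convolution reaches 66 without raising the dilation rate further.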

After the AM module processes the encoder output features, the background is suppressed by the transmitted prediction, so the filtered information is more closely related to the detection task. In addition, the dilated convolutions with different rates make full use of different receptive fields to capture as much target information as possible.

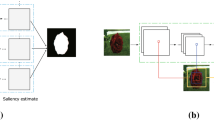

3.3 Bi-refinement module

In the saliency maps predicted by the main network, object edges are blurred, mainly because the network lacks a structure specifically optimized for edges. Therefore, the refinement module is built as a dedicated network whose overall structure is shown in Fig. 3. Unlike the main network, whose only input is the original image, the new module takes both the original image and the predicted map as input. The original image \(X_{i,j}\) is multiplied element-wise by the predicted map \(Out_{1}\) and by the reversed predicted map \(1-Out_{1}\), giving \(X_{i,j} \otimes Out_{1}\) and \(X_{i,j} \otimes (1-Out_{1})\), respectively. These products highlight the foreground and background areas of the original image and are processed by two sub-networks with identical structures, which trim redundant regions and supplement missing information, respectively. Like the main network, the sub-networks consist of encoder and decoder modules, but they are more compact: each layer consists of a 3 × 3 convolution, batch normalization, and a ReLU activation function, and the multi-supervision mechanism is removed. The sub-network outputs \(Rf_{1}(X_{i,j} \otimes Out_{1})\) and \(Rf_{2}(X_{i,j} \otimes (1-Out_{1}))\) are concatenated along the channel dimension, and convolutions then extract and compress features to promote the fusion of the two kinds of information, giving \(Convs(Cat(Rf_{1}(X_{i,j} \otimes Out_{1}), Rf_{2}(X_{i,j} \otimes (1-Out_{1}))))\). Finally, the transmitted prediction result is added, and a convolution with one output channel compresses the features into the output. The output \(Out_{RF}\) of the refinement module can therefore be expressed as:

\[ Out_{RF} = Conv\left( Convs\left( Cat\left( Rf_{1}(X_{i,j} \otimes Out_{1}),\, Rf_{2}(X_{i,j} \otimes (1-Out_{1})) \right) \right) + Out_{1} \right) \]

where Conv denotes the final convolution with one output channel.

Illustration of the bi-refinement (BR) module. The module makes full use of the prediction results, filters the foreground and background information in the original image, and then inputs them into sub-networks with the same structure to process the edge area. It supplements the edge information and deletes the redundant region

Combining the prediction results with the original image makes full use of the existing information by highlighting the foreground and background areas of the original image. The foreground is used to optimize the edge, and the rich information in the background is used to supplement missing areas at the edge. By supplementing deficiencies and cutting redundant areas, the module makes the edge more complete and more accurate.
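The data flow of the BR module can be sketched for a single-channel image as below. The sub-networks and the fusion convolutions are passed in as hypothetical callables (`rf_fg`, `rf_bg`, `fuse`), and the final one-output-channel convolution is modelled as a 1 × 1 convolution on one channel, i.e. a scalar weight and bias; a real implementation would use multi-channel tensors and trained layers.

```python
def elementwise(f, a, b):
    """Apply f pixel by pixel to two equally sized single-channel maps."""
    return [[f(x, y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split_foreground_background(image, out1):
    """Return X * Out1 (foreground view) and X * (1 - Out1) (background view)."""
    fg = elementwise(lambda x, p: x * p, image, out1)
    bg = elementwise(lambda x, p: x * (1.0 - p), image, out1)
    return fg, bg


def bi_refine(image, out1, rf_fg, rf_bg, fuse, w=1.0, b=0.0):
    """Out_RF = Conv(Convs(Cat(Rf_1(fg), Rf_2(bg))) + Out1), single-channel sketch.

    rf_fg, rf_bg and fuse are hypothetical stand-ins for the two sub-networks and the
    Convs(Cat(...)) fusion; (w, b) models the final one-output-channel convolution.
    """
    fg, bg = split_foreground_background(image, out1)
    fused = fuse(rf_fg(fg), rf_bg(bg))
    residual = elementwise(lambda f, p: f + p, fused, out1)  # add the transmitted prediction
    return [[w * v + b for v in row] for row in residual]
```

Because the foreground and background views sum back to the original image, no pixel information is discarded by the split; and because the prediction is added back before the last convolution, sub-networks that output zeros leave the coarse prediction unchanged, so they only have to learn corrections.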

Figure 4 shows some feature maps from the calculation process. Comparing the edges of the input prediction map and the processed map shows that the edge confidence in the input prediction map is low. In Fig. 4(d), the edge is fuzzy, with significant prediction errors, especially at the tail of the object; in Fig. 4(j), the edge confidence is relatively high, the background area is clearly suppressed, and the tail of the object is processed correctly. This demonstrates that the BR module is effective and can improve the detection result.

Some of the feature diagrams in the calculation of the bi-refinement (BR) module. For a better display, the size of the image is scaled appropriately. (a) original image; (b) ground-truth image; (c) weight map of the obtained prediction map; (d) obtained prediction map; (e) original image element-wise multiplication with obtained prediction map; (f) original image element-wise multiplication with reverse obtained prediction map; (g) weight map of foreground after sub-network processing; (h) weight map of background after sub-network processing; (i) weight map after fusion; (j) prediction map output by the BR module

4 Experimental results

By qualitatively and quantitatively comparing with other outstanding models on the same dataset, our model can be analysed more effectively. For more comprehensive comparison, this paper selects six challenging public datasets that are commonly used in the field of SOD. All models are trained on the salient object detection dataset DUTS-TR [31] and evaluated on six popular datasets, the DUTS-TE [31], DUT-OMRON [32], HKU-IS [33], ECSSD [34], PASCAL-S [35], and SOD [36] datasets. The details are shown in Table 1.

Our model is end to end and can process input images of any size. In the training phase, training images are resized to 320 × 320 and then randomly cropped to 288 × 288, and random vertical flipping is used for data augmentation. The whole network is trained with the Adam optimizer, whose hyperparameters (initial learning rate lr, betas, eps, and weight_decay) are set to 1e-3, (0.9, 0.9999), 1e-8, and 0, respectively. The batch size is 12; our model is implemented in PyTorch 1.8 and trained on four 1080Ti GPUs. In the testing phase, images are resized to 320 × 320, and the prediction map is resized back to the resolution of the original image. In both training and testing, images are scaled by bilinear interpolation.
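The training-time preprocessing can be sketched in plain Python for single-channel images stored as nested lists. The sketch assumes the half-pixel-centre convention for bilinear resizing and applies the same random crop and flip to the image and its ground truth; function names are illustrative, not taken from the authors' code. The same `resize_bilinear` could also map a 320 × 320 prediction back to the original resolution at test time.

```python
import random


def resize_bilinear(img, out_h, out_w):
    """Bilinear resize of a single-channel image (half-pixel-centre convention)."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for r in range(out_h):
        y = min(max((r + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1)
        y0 = int(y)
        y1, fy = min(y0 + 1, in_h - 1), y - y0
        row = []
        for c in range(out_w):
            x = min(max((c + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1)
            x0 = int(x)
            x1, fx = min(x0 + 1, in_w - 1), x - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def random_crop(img, gt, size, rng):
    """Crop the same size x size window from the image and its ground truth."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return ([row[left:left + size] for row in img[top:top + size]],
            [row[left:left + size] for row in gt[top:top + size]])


def random_vertical_flip(img, gt, rng, p=0.5):
    """Flip image and ground truth upside down together with probability p."""
    if rng.random() < p:
        return img[::-1], gt[::-1]
    return img, gt


def train_transform(img, gt, rng, load_size=320, crop_size=288):
    img = resize_bilinear(img, load_size, load_size)
    gt = resize_bilinear(gt, load_size, load_size)
    img, gt = random_crop(img, gt, crop_size, rng)
    return random_vertical_flip(img, gt, rng)
```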

4.1 Evaluation metrics

We use four metrics that are commonly used in salient object detection tasks to evaluate the introduced model comprehensively: the Precision-Recall (PR) curve, F-measure curve, maximum F-measure (maxFβ), and Mean Absolute Error (MAE).

The PR curve is drawn with recall on the horizontal axis and the corresponding precision on the vertical axis as the threshold varies uniformly from 0 to 1:

\[ Precision = \frac{\#(S_{t} \cap G)}{\#(S_{t})}, \qquad Recall = \frac{\#(S_{t} \cap G)}{\#(G)} \]

where #(⋅) counts the non-zero elements of a binary map, G is the ground truth, and St ∈ {0,1} is the binary map obtained by thresholding the predicted result at threshold t. The PR curve is plotted by sweeping the threshold, and the resulting precision and recall values indicate how accurate the prediction is.

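The F-measure combines precision and recall into a single score:

\[ F_{\beta} = \frac{(1+\beta^{2}) \times Precision \times Recall}{\beta^{2} \times Precision + Recall} \]

Similar to previous research [12], we set β² to 0.3 and report the maximum F-measure (maxFβ) over all thresholds; the F-measure curve plots Fβ against the threshold.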
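For a single image, the PR curve and maxFβ can be computed as in the sketch below. It assumes 256 evenly spaced thresholds in [0, 1] and binarizes with `p >= t`; for a whole dataset, the per-threshold values would be aggregated over all images before taking the maximum.

```python
def precision_recall(pred, gt, t):
    """Precision and recall of the map binarized at threshold t against a binary ground truth."""
    tp = fp = fn = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            s = 1 if p >= t else 0
            if s and g:
                tp += 1
            elif s and not g:
                fp += 1
            elif g and not s:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def pr_curve(pred, gt, steps=256):
    """(precision, recall) pairs for evenly spaced thresholds from 0 to 1."""
    return [precision_recall(pred, gt, i / (steps - 1)) for i in range(steps)]


def f_measure(p, r, beta2=0.3):
    """F_beta with beta^2 = 0.3, weighting precision more heavily than recall."""
    denom = beta2 * p + r
    return (1 + beta2) * p * r / denom if denom > 0 else 0.0


def max_f_measure(pred, gt, steps=256):
    return max(f_measure(p, r) for p, r in pr_curve(pred, gt, steps))
```

Setting β² = 0.3 below 1 makes a false-positive pixel cost more than a missed one, which matches the goal of not marking background as salient.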

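The MAE is the average absolute difference, over all pixels (x,y), between the saliency map P(x,y) predicted by the model and the ground truth G(x,y):

\[ MAE = \frac{1}{H \times W}\sum_{x=1}^{W}\sum_{y=1}^{H} \left| P(x,y) - G(x,y) \right| \]

where H and W are the height and width of the map.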
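A direct transcription of the MAE for one image follows, assuming both maps are nested lists with values in [0, 1]; the dataset-level score is the mean of the per-image values.

```python
def mae(pred, gt):
    """Mean absolute error between a saliency map and its ground truth (values in [0, 1])."""
    h, w = len(pred), len(pred[0])
    total = sum(abs(p - g) for prow, grow in zip(pred, gt) for p, g in zip(prow, grow))
    return total / (h * w)


print(mae([[0.5, 1.0], [0.0, 0.25]], [[0, 1], [0, 0]]))  # 0.1875
```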

4.2 Comparisons with the state-of-the-arts

We compare the results of each metric on the six datasets with 19 state-of-the-art saliency detection methods, U2Net [12], ITSD [37], EDNS [38], HVPNet [39], DFI [40], BASNet [5], PoolNet [19], MWS [41], AFNet [42], MLMS [43], PAGRN [24], PiCANet [2], PiCANetR [18], DGRL [44], RAS [45], SRM [46], DSS+ [17], NLDF+ [47] and UCF [48], as shown in Tables 2 and 3. Additionally, we show the PR and F-measure curves in Fig. 5 with the optimum results shown in red.

4.2.1 Quantitative evaluation

Our model achieves the best results on the DUTS-TE, ECSSD, HKU-IS, and DUT-OMRON datasets, improving the F-measure by 0.8%, 0.4%, 0.8%, and 1.4%, respectively. On the PASCAL-S dataset, only our MAE falls behind that of DFI, by 1.0%, mainly because DFI uses additional datasets during training, such as BSDS500 and VOC Context, which expand its number of training samples. Our predicted maps also compare favourably with those of the other models. In addition, all metrics of our model on the six test datasets are better than those of the baseline model, showing that our model is effective and competitive.

Figure 5 shows the F-measure and PR curves. The red lines representing our results are above other lines on the DUTS-TE, ECSSD, SOD, HKU-IS and OMRON datasets, which means our results are very competitive.

4.2.2 Qualitative evaluation

Fig. 6 shows some saliency maps obtained by our method. Compared with the other models, our results have higher overall confidence and more accurate boundaries and clearly highlight the salient regions, including images with large, complex patterns (first and second rows), small foreground areas (third and fourth rows), and multiple salient objects (sixth and seventh rows). More importantly, the model performs well on images with complex backgrounds. For example, in the fifth row there are overlapping people in the background; our model accurately identifies the foreground area with sharper edges, whereas the other models detect overly large regions and mistakenly recognize some background areas as foreground.

4.3 Ablation analysis

To demonstrate the effectiveness of each module, we analyse them in detail through ablation experiments, taking U2Net as the baseline and adding the modules one at a time. The results are shown in Table 4. Adding the VAE branch increases the max F-measure on the DUTS-TE, SOD and DUT-OMRON datasets by 0.7%, 1.2% and 0.3%, respectively. Adding the AM and BR modules improves the results further, and the final model improves on the baseline by 1.8%, 1.9% and 1.4% on the three datasets, respectively.
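The VAE branch added in the first ablation step shares the encoder with the detection decoder and is trained to reconstruct the input image, so its losses can simply be added to the detection loss. The following sketch shows one way to combine them. It assumes binary cross-entropy as the saliency loss (as in the U2Net baseline [12], with deep supervision omitted), an L2 reconstruction loss, and the closed-form KL divergence to a standard normal prior. The weights of 0.1 are borrowed from Myronenko [13] and are not necessarily those used in AGA-Net. Tensors are flattened into plain lists to keep the example self-contained.

```python
"""Sketch of a saliency loss regularized by a VAE reconstruction branch (assumed form)."""
import math
import random
from typing import List, Sequence

LAMBDA_REC = 0.1  # assumed weight, borrowed from Myronenko [13]
LAMBDA_KL = 0.1   # assumed weight, borrowed from Myronenko [13]
EPS = 1e-7


def bce(pred: Sequence[float], gt: Sequence[int]) -> float:
    """Binary cross-entropy between saliency probabilities and a binary mask."""
    total = 0.0
    for p, g in zip(pred, gt):
        p = min(max(p, EPS), 1.0 - EPS)  # clamp to avoid log(0)
        total -= g * math.log(p) + (1 - g) * math.log(1.0 - p)
    return total / len(pred)


def mse(recon: Sequence[float], image: Sequence[float]) -> float:
    """L2 error between the VAE decoder output and the input image."""
    return sum((r - x) ** 2 for r, x in zip(recon, image)) / len(image)


def kl_to_standard_normal(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), averaged over latent dimensions."""
    return sum(0.5 * (m * m + math.exp(lv) - lv - 1.0) for m, lv in zip(mu, logvar)) / len(mu)


def reparameterize(mu: Sequence[float], logvar: Sequence[float], rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps; in an autodiff framework this keeps mu and logvar trainable."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def total_loss(
    sal_pred: Sequence[float],
    sal_gt: Sequence[int],
    recon: Sequence[float],
    image: Sequence[float],
    mu: Sequence[float],
    logvar: Sequence[float],
) -> float:
    """Detection loss plus the two VAE regularization terms on the shared encoder."""
    return (bce(sal_pred, sal_gt)
            + LAMBDA_REC * mse(recon, image)
            + LAMBDA_KL * kl_to_standard_normal(mu, logvar))
```

For instance, a prediction of 0.5 on a salient pixel, a perfect reconstruction and a latent code with μ = 0 and log σ² = 0 give a total loss of ln 2 ≈ 0.693, because both regularization terms vanish.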

Fig. 7 shows the detection results and the corresponding object contours obtained as different modules are added to the baseline model. As modules are added, the object outline becomes more accurate and clearer, especially at the tail of the object, and redundant regions gradually shrink. In particular, once the BR module is added, the model highlights both the foreground and background regions, refines details, fills in missing boundary information and removes redundant edges, which greatly improves the object contour.

Fig. 7 The predicted results of different models and the contours of salient objects. (a) Ground-truth image; (b) predictions after adding the VAE module to the baseline model; (c) predictions after adding the AM module to the baseline model; (d) predictions after adding the AM and BR modules to the baseline model; (e) predictions of our model
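The behaviour described for the BR module, removing redundant foreground and restoring missed boundary regions, can be illustrated with a toy residual update. This section does not specify the module's layers, so the sketch is hypothetical: each learned sub-network is replaced by a ReLU on one feature channel scaled by a weight, and the names `fg_feat`, `bg_feat`, `w_fg` and `w_bg` are illustrative only. The foreground branch is gated by the sigmoid of the coarse prediction and the background branch by its complement, in the style of the reverse attention used by RAS [45]. The foreground residual is subtracted and the background residual is added.

```python
"""Toy, hypothetical sketch of a bi-directional (foreground/background) refinement step."""
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def bi_refine(
    logits: Sequence[float],
    fg_feat: Sequence[float],
    bg_feat: Sequence[float],
    w_fg: float = 1.0,
    w_bg: float = 1.0,
) -> List[float]:
    """Refine per-pixel saliency logits with two attention-gated residuals.

    Hypothetical simplification: each sub-network is a ReLU on one feature
    channel scaled by a scalar weight instead of learned convolutions.
    """
    refined = []
    for z, f_fg, f_bg in zip(logits, fg_feat, bg_feat):
        s = sigmoid(z)                               # foreground attention from the coarse map
        remove = w_fg * relu(f_fg) * s               # suppresses redundant predicted foreground
        supplement = w_bg * relu(f_bg) * (1.0 - s)   # reverse attention: restores missed regions
        refined.append(z - remove + supplement)
    return refined
```

With coarse logits [4.0, 4.0, -4.0, -4.0], `fg_feat = [0, 10, 0, 0]` and `bg_feat = [0, 0, 10, 0]`, the refined logits are approximately [4.0, -5.82, 5.82, -4.0]: the second pixel, a redundant foreground prediction, is suppressed, and the third, a missed region, is recovered.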

To further examine the role of the BR module, we transplanted it into other models, namely U2Net, RASNet and BASNet. Since BASNet already contains a refinement module, we replaced that module with BR. As shown in Table 5, adding BR clearly improves the detection results of all three models, especially U2Net and RASNet. Fig. 8 compares each model's original predictions with those obtained after adding the BR module, together with the corresponding object contours. With BR, the object contours are more accurate, especially in the tail area of the object, which demonstrates both the effectiveness and the portability of the BR module.

Fig. 8 Comparison of the predicted maps of other models with and without the BR module, including the corresponding contour diagrams. The object contour is more accurate, especially in the tail area of the object, and the falsely predicted area is reduced, which demonstrates the effectiveness of the proposed BR module

5 Conclusion

This paper proposes an attention-guided detection network using an autoencoder (AGA-Net) that makes full use of the information available across different layers. First, a VAE branch is added to the detection task; the additional image-restoration task forces the encoder to learn image details effectively and enriches the features it extracts. Second, to make full use of existing prediction results, an attention-guided multi-scale (AM) module is added to the main model to extract multi-scale information that is more relevant to the task. Third, a bi-refinement (BR) module highlights the foreground and background regions, supplementing missing object boundaries and removing redundant predictions. Together, these components improve on the baseline by 1.8%, 1.9% and 1.4% on the DUTS-TE, SOD and DUT-OMRON datasets, respectively. Experiments on six benchmark datasets and comparisons with 19 state-of-the-art methods demonstrate the effectiveness of the new model. In future work, we plan to further improve the detection of object boundaries by redesigning the loss function, in particular the way the loss is computed in edge regions.

Data Availability

The datasets and materials used or analysed during the current study are available from the corresponding author on reasonable request.

Code Availability

The code used or analysed during the current study is available from the corresponding author on reasonable request.

References

Borji A, Cheng M-M, Hou Q, Jiang H, Li J (2019) Salient object detection: a survey. Comput Vis Media 5(2):117–150

Wang L, Lu H, Ruan X, Yang M-H (2015) Deep networks for saliency detection via local estimation and global search. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3183–3192

Liu Y, Zhang X-Y, Bian J-W, Zhang L, Cheng M-M (2021) SAMNet: stereoscopically attentive multi-scale network for lightweight salient object detection. IEEE Trans Image Process 30:3804–3814

Ji Y, Zhang H, Zhang Z, Liu M (2021) CNN-based encoder-decoder networks for salient object detection: a comprehensive review and recent advances. Inf Sci 546:835–857

Qin X, Zhang Z, Huang C, Gao C, Dehghan M, Jagersand M (2019) BASNet: boundary-aware salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7479–7489

Pang Y, Zhao X, Zhang L, Lu H (2020) Multi-scale interactive network for salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9413–9422

Wang S, Liu X, Zhao J, Liu Y, Liu S, Liu Y, Zhao J (2021) Computer auxiliary diagnosis technique of detecting cholangiocarcinoma based on medical imaging: a review. Comput Methods Programs Biomed 208:106265

Shi L, Wang Z, Pan B, Shi Z (2020) An end-to-end network for remote sensing imagery semantic segmentation via joint pixel-and representation-level domain adaptation. IEEE Geosci Remote Sens Lett 18(11):1896–1900

Dogan Y, Keles HY (2020) Semi-supervised image attribute editing using generative adversarial networks. Neurocomputing 401:338–352

Chen S, Tan X, Wang B, Lu H, Hu X, Fu Y (2020) Reverse attention-based residual network for salient object detection. IEEE Trans Image Process 29:3763–3776

Fan D-P, Zhai Y, Borji A, Yang J, Shao L (2020) BBS-Net: RGB-D salient object detection with a bifurcated backbone strategy network. In: Proceedings of the European conference on computer vision (ECCV), pp 275–292

Qin X, Zhang Z, Huang C, Dehghan M, Zaiane OR, Jagersand M (2020) U2-Net: going deeper with nested U-structure for salient object detection. Pattern Recogn 106:107404

Myronenko A (2019) 3D MRI brain tumor segmentation using autoencoder regularization. In: Brainlesion: glioma, multiple sclerosis, stroke and traumatic brain injuries, pp 311–320

He S, Lau RW, Liu W, Huang Z, Yang Q (2015) SuperCNN: a superpixelwise convolutional neural network for salient object detection. Int J Comput Vis 115(3):330–344

Hu T, Yang M, Yang W, Li A (2019) An end-to-end differential network learning method for semantic segmentation. Int J Mach Learn Cybern 10(7):1909–1924

Zhang J, Zhang T, Dai Y, Harandi M, Hartley R (2018) Deep unsupervised saliency detection: a multiple noisy labeling perspective. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9029–9038

Hou Q, Cheng M-M, Hu X, Borji A, Tu Z, Torr PH (2017) Deeply supervised salient object detection with short connections. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3203–3212

Liu N, Han J, Yang M-H (2018) PiCANet: learning pixel-wise contextual attention for saliency detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3089–3098

Liu J-J, Hou Q, Cheng M-M, Feng J, Jiang J (2019) A simple pooling-based design for real-time salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3917–3926

Gehring J, Auli M, Grangier D, Yarats D, Dauphin YN (2017) Convolutional sequence to sequence learning. In: Proceedings of the 34th international conference on machine learning, pp 1243–1252

Zhang X, Wang T, Qi J, Lu H, Wang G (2018) Progressive attention guided recurrent network for salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 714–722

Zhang L, Dai J, Lu H, He Y, Wang G (2018) A bi-directional message passing model for salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1741–1750

Sun F, Li W, Guan Y (2019) Self-attention recurrent network for saliency detection. Multimed Tools Appl 78(21):30793–30807

Zhang X, Wang T, Qi J, Lu H, Wang G (2018) Progressive attention guided recurrent network for salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 714–722

Wang H, Dai L, Cai Y, Sun X, Chen L (2018) Salient object detection based on multi-scale contrast. Neural Netw 101:47–56

Wang H, Fan R, Cai P, Liu M (2021) SNE-RoadSeg+: rethinking depth-normal translation and deep supervision for freespace detection. In: Proceedings of the IEEE/RSJ international conference on intelligent robots and systems, pp 1140–1145

Su J, Li J, Zhang Y, Xia C, Tian Y (2019) Selectivity or invariance: boundary-aware salient object detection. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 3799–3808

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 248–255

Zhang C, Li G, Du S (2019) Multi-scale dense networks for hyperspectral remote sensing image classification. IEEE Trans Geosci Remote Sens 57(11):9201–9222

Gao S, Cheng M-M, Zhao K, Zhang X-Y, Yang M-H, Torr PH (2021) Res2Net: a new multi-scale backbone architecture. IEEE Trans Pattern Anal Mach Intell 43(2):652–662

Wang L, Lu H, Wang Y, Feng M, Wang D, Yin B, Ruan X (2017) Learning to detect salient objects with image-level supervision. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 136–145

Yang C, Zhang L, Lu H, Ruan X, Yang M-H (2013) Saliency detection via graph-based manifold ranking. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3166–3173

Li G, Yu Y (2015) Visual saliency based on multiscale deep features. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5455–5463

Yan Q, Xu L, Shi J, Jia J (2013) Hierarchical saliency detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1155–1162

Li Y, Hou X, Koch C, Rehg JM, Yuille AL (2014) The secrets of salient object segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 280–287

Movahedi V, Elder JH (2010) Design and perceptual validation of performance measures for salient object segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 49–56

Zhou H, Xie X, Lai J-H, Chen Z, Yang L (2020) Interactive two-stream decoder for accurate and fast saliency detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9141–9150

Zhang J, Xie J, Barnes N (2020) Learning noise-aware encoder-decoder from noisy labels by alternating back-propagation for saliency detection. In: Proceedings of the European conference on computer vision (ECCV), pp 349–366

Liu Y, Gu Y-C, Zhang X-Y, Wang W, Cheng M-M (2020) Lightweight salient object detection via hierarchical visual perception learning. IEEE Trans Cybern 51(9):4439–4449

Liu J-J, Hou Q, Cheng M-M (2020) Dynamic feature integration for simultaneous detection of salient object, edge, and skeleton. IEEE Trans Image Process 29:8652–8667

Zeng Y, Zhuge Y, Lu H, Zhang L, Qian M, Yu Y (2019) Multi-source weak supervision for saliency detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6074–6083

Feng M, Lu H, Ding E (2019) Attentive feedback network for boundary-aware salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1623–1632

Wu R, Feng M, Guan W, Wang D, Lu H, Ding E (2019) A mutual learning method for salient object detection with intertwined multi-supervision. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8150–8159

Wang T, Zhang L, Wang S, Lu H, Yang G, Ruan X, Borji A (2018) Detect globally, refine locally: a novel approach to saliency detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3127–3135

Chen S, Tan X, Wang B, Hu X (2018) Reverse attention for salient object detection. In: Proceedings of the European conference on computer vision (ECCV), pp 234–250

Wang T, Borji A, Zhang L, Zhang P, Lu H (2017) A stagewise refinement model for detecting salient objects in images. In: Proceedings of the IEEE international conference on computer vision, pp 4019–4028

Luo Z, Mishra A, Achkar A, Eichel J, Li S, Jodoin P-M (2017) Non-local deep features for salient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6609–6617

Zhang P, Wang D, Lu H, Wang H, Yin B (2017) Learning uncertain convolutional features for accurate saliency detection. In: Proceedings of the IEEE international conference on computer vision, pp 212–221

Funding

This work was supported by the National Key Research and Development Program of China (No. 2018YFB1404402).

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Xu, C., Liu, X. & Zhao, W. Attention-guided salient object detection using autoencoder regularization. Appl Intell 53, 6481–6495 (2023). https://doi.org/10.1007/s10489-022-03917-2

DOI: https://doi.org/10.1007/s10489-022-03917-2