Abstract

Arrhythmia is a heart disease that manifests as an irregular heartbeat. Atrial fibrillation (Afib), atrial flutter (Afl), and ventricular fibrillation (Vfib) are heart arrhythmias that predominantly affect senior citizens. An electrocardiograph measures the electrocardiogram (ECG) signal, which is used to diagnose abnormal patterns that represent heartbeat defects. Although these signals can be analyzed manually, this is often difficult because the signal is frequently distorted by noise. Furthermore, manual analysis of patterns is subjective and can lead to an inaccurate diagnosis. Automated computer-aided diagnosis (CAD) is a technique that eliminates these shortcomings. In this work, we present a 6-layer deep convolutional neural network (CNN) for automatic ECG pattern classification into the normal (Nr), Afib, Afl, and Vfib classes. The proposed CNN model requires only simple feature extraction and no pre-processing of ECG signals. For two-second ECG segments, the model obtained an accuracy of 97.78%, with a specificity and sensitivity of 98.82% and 99.76%, respectively. The proposed system can be used in a clinical environment as an automatic decision support tool.

1 Introduction

Arrhythmia is a group of heart diseases whose common feature is an irregular rhythm caused by abnormal electrical impulses. It is the most common heart disease, and its prevalence is rising [13]. Arrhythmia affects men more often than women [12, 31] and mainly senior citizens [12, 14]; however, a proportion of deaths also occurs among young people [31]. Moreover, the number of people with the most common arrhythmia, atrial fibrillation (Afib), is expected to more than double [22].

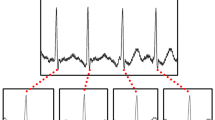

Afib is a tachyarrhythmia with uncoordinated atrial activation, which produces irregular R-R intervals and an absence of repeating P waves before the QRS complex [20]. The reason for this is the way the atrioventricular node (AVN), which conducts the electrical impulse, works: a large number of irregular impulses originate in the atrial myocardium, and the AVN reacts only to impulses of sufficient intensity. The most common symptom of Afib is weariness, and Afib is associated with an increased risk of stroke and heart failure. Afl, unlike Afib, does not produce an irregular pulse, but its other symptoms are similar. Moreover, Afl and Afib may occur in the same person because of the interrelationship between them [32], so it is not easy to tell them apart. Typical Afl is defined as atrial activity characterized by a repeating saw-tooth pattern at a rate of 240 to 340 beats per minute. Vfib and ventricular tachycardia (VT) are sustained ventricular arrhythmias that often arise during myocardial infarction and can cause sudden death. In that case, the individual cardiomyocytes of the ventricles contract in a completely unsynchronized way, and the ECG signal lacks the QRS complex that is necessary for the heart to function correctly [29]. These waves are measured by a noninvasive electrocardiograph, which compares voltage differences of heart activity between suitably placed electrodes. Classical ECG patterns of all these heart disorders and of the normal (Nr) ECG signal are shown in Fig. 1.

Because ECG patterns carry vital information about the state of the patient's heart, the ECG signal is valuable for detecting and diagnosing cardiac problems. However, ECG signals are highly nonlinear and are often distorted by noise or unexpected artifacts, which can cause symptoms of disease to be overlooked or hidden and can ultimately lead to an inaccurate diagnosis. Therefore, it is desirable to implement a computer-aided diagnosis (CAD) system that reduces the human factor and serves as a decision support system in the clinical environment. CAD tools are used to detect such disorders and to minimize intra- and inter-observer variability [24].

2 Related works

Many different, as well as similar, techniques concerning heart function and ECG rhythms have been published in the last decade (refer to Table 7). Researchers have developed diverse models: four different methods combined with three classification techniques [25], a rule-based data mining approach [11], k-nearest neighbor (KNN) classification [23], nonlinear feature extraction with ANOVA ranking and decision tree classification [3], and Recurrence Quantification Analysis (RQA) with Decision Tree (DT), Random Forest (RAF), and Rotation Forest (ROF) methods [15]. All these techniques depend on thorough noise removal and on detailed knowledge of data labeling and annotation. Because of this preprocessing cost, the usefulness of such works for practical CAD tools is very limited.

The convolutional neural network (CNN) is a popular emerging research topic in machine learning and has proven successful in image classification [30], text analysis [21], and speech recognition [1]. A key aspect of a CNN is that it relies on the order of the data within a sample to recognize the relevant patterns. Hence, a CNN can also be applied to signals given as a one-dimensional input vector [5,6,7,8,9], and it is a powerful and widely used tool that does not require R-peak detection to extract features in CAD systems. Traditional CAD systems comprise pre-processing, feature extraction, feature selection, and classification tasks in their flowchart [18]. However, some studies omit feature extraction in order to optimize the algorithm and to reduce the asymptotic complexity and the time needed to obtain results. In this study, our approach does not follow the traditional process of an automated CAD system, because it omits pre-processing tasks such as denoising or normalization of the dataset.

Preprocessing serves to normalize, smooth, or denoise the data before the next task in the classification flowchart. Several articles use input-editing methods of this type. However, some of these approaches are tied to a specific dataset. Acharya et al. [7, 8] implemented Z-score normalization, which depends on the mean and standard deviation of the dataset. This means that the Z-score of each sample depends on the remaining values. In contrast, we present an approach that is tied not to a particular dataset but to the ECG domain in general, as in [2, 4, 16], and that is combined with feature extraction.
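The dependence of Z-score normalization on the rest of the data can be illustrated with a minimal sketch. The amplitudes below are hypothetical, and the use of the population standard deviation is an assumption, since the exact variant used in [7, 8] is not stated here.

```python
from statistics import mean, pstdev

def z_score(values):
    """Normalize values to zero mean and unit variance using their own statistics."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (assumed variant)
    return [(v - mu) / sigma for v in values]

# Hypothetical amplitudes in arbitrary units, for illustration only.
base = z_score([0.1, 0.4, 1.2, 0.3])

# Only the third sample differs, yet the normalized first sample changes too.
shifted = z_score([0.1, 0.4, 2.4, 0.3])
print(base[0], shifted[0])
```

The first sample has the same raw value in both calls, but its normalized value differs because the mean and standard deviation are computed from all samples.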

3 Data used

The ECG signals used in this work were obtained from the publicly available PhysioBank arrhythmia databases [17]. We obtained Vfib ECG signals from the MIT-BIH malignant ventricular arrhythmia database (vfdb), Afib and Afl ECG signals from the MIT-BIH atrial fibrillation database (afdb), and Afib, Afl, Vfib, and Nr ECG signals from the MIT-BIH arrhythmia database (mitdb), as described in Table 1. These databases are frequently used in articles on ECG signal classification and contain raw data without smoothing or other modification. From these databases, 25,459 segments of 2-second duration were used (Table 2).

4 Methodology

4.1 Feature extraction

Before classification by the CNN, the continuous wavelet transformation (CWT) shown in (1) decomposed the ECG signal into wavelets.

Here Ψ denotes a Morse wavelet [10] with time bandwidth T and symmetry s at time t of the extracted sample. In this paper, T was set to 1 and s to 2 after a closer examination of the extraction results. In addition, every value produced by the CWT that was greater than 0.1 was raised to the power of two for optimal extraction of the necessary features. The power of two was used to ensure only positive numbers and an easier conversion to the range 0-255 representing the color components of the image. Examples of the feature extraction output for each class, converted to gray scale, are shown in Fig. 2.
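For orientation, the textbook definition of the continuous wavelet transform of a signal x(t) can be written as follows. This is the generic form and not necessarily the exact expression referred to as (1); the scale a > 0, the translation b, and the symbol W_x are notation assumed here, and the overline denotes complex conjugation.

```latex
W_x(a, b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} x(t)\,
            \overline{\Psi\!\left(\frac{t - b}{a}\right)}\, dt
```

Evaluating W_x over a grid of scales and translations yields a 2-dimensional matrix of coefficients, which is the kind of output that can be rendered as an image.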

Since the afdb and vfdb databases are sampled at 250 Hz and mitdb is sampled at 360 Hz, the length of the input vector to the CWT was 500 and 720 samples, respectively, representing an ECG signal of 2-second duration. The output 2-dimensional matrix was resized to a width and height of 50 and 30 pixels, respectively, which guarantees an optimal input to the CNN without prior up-sampling, down-sampling, or normalization of the signal.
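The arithmetic above, together with the squaring and gray-scale conversion, can be sketched in plain Python. This is an illustration under assumptions, not the authors' implementation: the linear scaling by the maximum, the nearest-neighbour resizing, and the helper names are all hypothetical, the 0.1 threshold is omitted, and the coefficient matrix is a made-up stand-in for a real CWT output.

```python
def segment_length(sampling_hz, seconds=2):
    """Number of samples in a segment of the given duration."""
    return int(sampling_hz * seconds)

def to_gray(coeffs):
    """Square every coefficient, then scale linearly to the range 0-255."""
    squared = [[c * c for c in row] for row in coeffs]
    peak = max(max(row) for row in squared) or 1.0  # avoid division by zero
    return [[round(255 * v / peak) for v in row] for row in squared]

def resize_nearest(image, width=50, height=30):
    """Nearest-neighbour resize of a 2-D list to `height` rows by `width` columns."""
    src_h, src_w = len(image), len(image[0])
    return [
        [image[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]

for fs in (250, 360):
    n = segment_length(fs)
    # Hypothetical 4 x n coefficient matrix standing in for a real CWT output.
    fake = [[(i + 1) * (j % 7 - 3) / 10 for j in range(n)] for i in range(4)]
    image = resize_nearest(to_gray(fake))
    print(fs, n, len(image[0]), len(image))
```

Both sampling frequencies end in an image of the same 50 x 30 size, which is why no resampling of the raw signal is needed in this scheme.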

4.2 CNN architecture

The architecture of the proposed CNN model is shown graphically in Fig. 3 and tabulated in Table 3. Model parameters such as the number of layers, their type, kernel size, stride, and activation or optimization function were set on the basis of several experiments. In these experiments, the results, weights, and biases were examined to obtain not only optimum performance but also a model without unnecessary computations. Hence, the presented model includes 2 convolutional, 2 max-pooling, and 2 fully-connected layers. The stride, which represents the size of the shift across the input image, is set to 1 for the convolutional layers and 2 for the max-pooling layers. These layers form the main structure of the CNN, where convolution extracts important features from the input. The max-pooling operation reduces the dimensionality while retaining the significant features for subsequent operations. In this work, max-pooling is applied after every convolutional layer. Lastly, the fully-connected layers are used to flatten the 3-dimensional neurons (the 2-dimensional resolution of each filter from the last max-pooling layer) into a vector, to reduce the previous fully-connected layer to a small number of neurons, or to convert the previous layer into a four-class (Nr, Afib, Afl, or Vfib) probability distribution.

Layer 0 (the input layer) is convolved with a filter of kernel size 3 to produce layer 1. Then, a max-pooling operation (kernel 2) is performed on layer 1 (48 x 28 x 46) to form layer 2 (24 x 14 x 46). After that, a convolution with a filter of size 3 is applied to layer 2 to produce layer 3. Then, a last max-pooling is applied to decrease the number of output neurons from 22 x 12 x 7 to 11 x 6 x 7 (layer 4). This layer is fully connected to the 24 output neurons of layer 5, and layer 5 is in turn fully connected to the final layer (layer 6) with 4 outputs, which represent the four classification classes (Nr, Afib, Afl, and Vfib).
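The spatial sizes of the layers can be checked with the usual output-size formula for convolution and pooling. The sketch below assumes no padding, which is not stated explicitly but is consistent with a 50 x 30 input shrinking to 48 x 28 after a kernel of size 3; the helper names are hypothetical.

```python
def conv_out(size, kernel=3, stride=1):
    """Output length of an unpadded convolution along one axis."""
    return (size - kernel) // stride + 1

def pool_out(size, kernel=2, stride=2):
    """Output length of an unpadded max-pooling along one axis."""
    return (size - kernel) // stride + 1

def trace_shapes(width=50, height=30):
    """Return the (width, height) of layers 1 to 4 for the given input size."""
    shapes = []
    for step in (conv_out, pool_out, conv_out, pool_out):
        width, height = step(width), step(height)
        shapes.append((width, height))
    return shapes

shapes = trace_shapes()
print(shapes)  # [(48, 28), (24, 14), (22, 12), (11, 6)]

# 7 filters in the last pooling layer give the flattened input of layer 5.
flat = shapes[-1][0] * shapes[-1][1] * 7
print(flat)  # 462
```

Under these assumptions, the first fully-connected layer maps 462 flattened values to 24 neurons.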

4.3 Training and testing of CNN model

In this research, backpropagation [28] with a batch size of 20 is used to update the CNN model. The learning rate, which helps the training converge, equals 1 × 10⁻³ for the first 12 epochs and 3 × 10⁻⁴ for the next 8. To adjust the speed of learning, adaptive moment estimation (Adam) is used in the proposed model. Furthermore, the leaky rectified linear unit (LeakyReLU) is used as the activation function for layers 1 and 3. A dropout layer with a value of 0.3 is applied to the output of layer 5, whereas the last layer uses the SoftMax function instead of Sigmoid because the classification is non-binary.
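The training settings described above can be sketched as small standalone functions. This is only an illustration: the zero-based epoch indexing, the function names, and the negative slope of 0.01 for LeakyReLU are assumptions, since the slope is not given in the text.

```python
import math

def learning_rate(epoch):
    """Learning rate for a zero-based epoch: 1e-3 for epochs 0-11, 3e-4 for 12-19."""
    if not 0 <= epoch < 20:
        raise ValueError("the schedule covers 20 epochs")
    return 1e-3 if epoch < 12 else 3e-4

def leaky_relu(x, slope=0.01):
    """Leaky ReLU; the negative slope of 0.01 is an assumed value."""
    return x if x > 0 else slope * x

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for the four classes (Nr, Afib, Afl, Vfib).
probabilities = softmax([2.0, 1.0, 0.1, -1.0])
print([learning_rate(e) for e in (0, 11, 12, 19)], probabilities)
```

Unlike a per-output Sigmoid, SoftMax yields four probabilities that sum to one, which suits a classification into mutually exclusive classes.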

A stratified ten-fold cross-validation strategy [26] is implemented in this work. The feature-extraction images of the four ECG classes are divided into ten equally large parts. Nine parts are used to train the model and the remaining part serves to test it. Each part contains approximately the same percentage of every output class as the entire dataset. The procedure is repeated as many times as there are parts, so that each part is used once for testing. The average of these iterations for the four classes is shown in Table 5.
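A stratified split of this kind can be sketched with the standard library alone. The label counts below are hypothetical and deliberately unbalanced (they are not the counts from Table 2), and the round-robin assignment is one simple way to keep class proportions, not necessarily the procedure used in this work.

```python
import random
from collections import defaultdict

def stratified_folds(labels, k=10, seed=0):
    """Split sample indices into k folds that preserve the class proportions."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, index in enumerate(indices):
            folds[position % k].append(index)  # deal out round-robin
    return folds

# Hypothetical labels for illustration only.
labels = ["Nr"] * 50 + ["Afib"] * 200 + ["Afl"] * 30 + ["Vfib"] * 20
folds = stratified_folds(labels)

for test_fold in folds:
    held_out = set(test_fold)
    train = [i for i in range(len(labels)) if i not in held_out]
    # Train the model on `train` and evaluate it on `test_fold` here.
```

Each of the ten iterations holds out a different fold, so every sample is used for testing exactly once.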

5 Results

The proposed network was trained on a workstation with an Intel Core i7 2.50 GHz processor and 16 GB of RAM. For comparison purposes, we also tested the proposed model on a GeForce GTX 860M with 2 GB of memory and a GeForce GTX 1080 with 6 GB of memory. Table 4 shows the average time needed to train each epoch. As can be seen from the learning rate description in Section 4.3, 20 epochs are used in this study to train the model before testing. Table 5 shows the confusion matrix of the prediction results. It can be observed that 99.63% of the images are correctly classified as either normal rhythm or abnormal ECG patterns. The individual classes are correctly classified with a minimum accuracy of 98.03%, in the Afib class. The overall classification is tabulated in Table 6. An average accuracy of 97.78% is achieved. Furthermore, a sensitivity, specificity, and positive predictive value (PPV) of 99.76%, 98.82%, and 99.81%, respectively, are computed for the Nr class. For the remaining classes taken together, the PPV is estimated as 98.55%.
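The way such per-class measures follow from a confusion matrix can be shown with a short sketch. The counts below are made up for illustration and are not the values from Table 5; the function name and the row/column convention are assumptions.

```python
def one_vs_rest(confusion, classes, target):
    """Accuracy, sensitivity, specificity and PPV for one class against the rest.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    t = classes.index(target)
    total = sum(sum(row) for row in confusion)
    tp = confusion[t][t]
    fn = sum(confusion[t]) - tp                  # target samples that were missed
    fp = sum(row[t] for row in confusion) - tp   # other samples predicted as target
    tn = total - tp - fn - fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
    }

classes = ["Nr", "Afib", "Afl", "Vfib"]
# Made-up counts for illustration; these are NOT the values from Table 5.
confusion = [
    [90, 5, 5, 0],
    [4, 180, 16, 0],
    [2, 10, 38, 0],
    [0, 1, 1, 48],
]
nr = one_vs_rest(confusion, classes, "Nr")
print(nr)
```

Sensitivity measures how many true samples of the class are found, specificity how many samples of the other classes are correctly rejected, and PPV how many predictions of the class are correct.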

6 Discussion

It is evident from Table 5 that every individual result is higher than 92%, even with the unbalanced dataset (see Table 2), except the sensitivity of Afl. This results from the difficulty of distinguishing Afl from Afib, which are related types of heart disease with similar characteristics. A second reason, found after a closer study of the data, is inaccurate labeling introduced when the databases were created. This can be addressed by further study of feature extraction under specific labeling assumptions, which we have not taken into account in this study. On the other hand, the 100% accuracy in distinguishing Nr from Vfib is very satisfactory. More significant results are given in Table 6, where normal rhythm is distinguished from all types of heart problems with an accuracy of 97.78%.

Table 7 summarizes the different approaches developed for the automated detection of arrhythmia using ECG signals from the PhysioBank databases. Desai et al. [15] proposed recurrence plots with recurrence quantification analysis for feature extraction, ANOVA statistics for feature ranking, and the WEKA machine-learning tool for classification. This tool contains decision tree, random forest, and rotation forest classifiers, of which the last achieved the best result in that paper. Acharya et al. [3] also used ANOVA statistics for feature ranking, but with thirteen nonlinear entropies for feature extraction and classification by K-Nearest Neighbor (KNN) and Decision Tree (DT), where the DT classifier performed better. However, separate feature extraction and ranking require deep knowledge of all the methods used in order to implement a functioning system. Hamed and Owis [19] implemented the discrete wavelet transform for feature extraction together with a support vector machine classifier. In addition, a white Gaussian noise method is used as a signal denoiser, which is not necessary when a frequency-dependent wavelet transform is used. Pudukotai Dinakarrao and Jantsch [27] presented a low-complexity digital hardware implementation (ADDHard) based on the detection of anomalies. The achieved accuracy of 97.28% points to a high-quality system, but the sensitivity of 78.70% suggests an unbalanced dataset with problematic prediction of some class. Moreover, all these groups of researchers used a QRS detection algorithm, which needs a long segment to recognize the R-peak. Acharya et al. developed an 11-layer CNN architecture without R-peak detection and achieved 92.50% accuracy. Nevertheless, the Vfib dataset used was too small, and the prediction of ventricular arrhythmias gives unbalanced specificities and sensitivities for the individual classes. In contrast, our proposed CNN model does not require any type of ECG data pre-processing and can be used for real-time classification of streamed input without recomputing parameters that depend on previous data. Furthermore, using the CWT and resizing the resulting image guarantees that different sampling frequencies of the signal measured by the ECG device cause no problem.

Although the accuracy of the designed CNN model does not reach 100.00% in distinguishing normal from other ECG signals, this study achieves significant results in classifying ECG signals into normal and other classes. Unlike our proposed algorithm, most of the studies in Table 7 rely on the conventional machine learning techniques described in Section 2. Hence, the novelty of this work is the development of a 6-layer deep CNN model that works without pre-processing and with the use of feature extraction.

Also, the CNN does not depend on the position of the P wave or the QRS complex, because its filters search for patterns across the whole sample. The CNN's attributes depend only on the kernel size of the convolutional layer filter, and the stride lets the filter cover all important image features. Besides, there is no need to implement R-peak detection to achieve optimum diagnostic performance.

The advantages of the proposed CNN model are:

- A 6-layer deep CNN model is presented
- Pre-processing is not required
- Denoising is not required
- R-peak detection is not required
- A GPU is not required for training

The limitations of the presented CNN model are:

- A large amount of data is needed for training
- Knowledge of feature extraction is required before training the model

It is evident that a graphics processing unit (GPU) can decrease the computational time for training the neural network (see Table 4). Even with a bigger batch size, the time required to fit the CNN model can be shorter. However, training on the CPU did not reveal as serious a need for a GPU as we expected at the beginning. Compared with [7], our approach additionally requires feature extraction, but to its advantage it needs no data pre-processing, which should be kept in mind when the results are compared with [7]. Still, in our future work, we would like to focus on developing a CNN model with as little data pre-processing as possible.

7 Conclusion

In contrast to traditional machine learning techniques, this study presents a 6-layer deep CNN model for recognizing 4 classes (Nr, Afib, Afl, and Vfib) of ECG rhythms. The proposed model needs no user intervention, and R-peak detection is not required. Three MIT-BIH PhysioBank databases were used to train and test the CNN model. Our proposed model achieved an accuracy, specificity, and sensitivity of 97.78%, 98.82%, and 99.76%, respectively. The CNN model can be implemented in the health-care industry as an additional tool that assists physicians by providing decision support for diagnosis. The presented model can also be used in the home environment, where family members watch over elderly patients, to analyze heart problems.

In approaches of this type, where some of the processes of the traditional system flowchart are omitted, it is very important to take some threats to validity into account. From an external perspective, the inputs need to be normalized into the same form. A typical normalization issue is the different sampling frequency of the measurements. If the data are not normalized, the input to the system may contain too little or too much information and the classification process is not valid. Another problematic point is that our approach works with lead II ECG signals, out of the 12 possible leads. Every lead can have a different projection onto the polarization vector and provides a different view of heart activity. Consequently, using more than one lead can cause the prediction to malfunction. The internal perspective comprises the operations performed on the data before the classification itself. Insufficient knowledge of the input and of the preprocessing or feature extraction method used can lead to the loss of information that is important for correct classification. Another important aspect is the design of the neural network architecture. A frequent problem is an oversized solution, in which the network includes more neurons than are needed for sufficient functionality. At first sight, this may seem to be only a problem of computational complexity, but it can actually lead to dysfunction of the whole system. If the network contains too many neurons, many inputs can be classified by a single branch regardless of the others; in such a case, classification can show great accuracy, but prediction on a completely new dataset could give insufficient results.

References

1. Abdel-Hamid O, Mohamed AR, Jiang H, Deng L, Penn G, Yu D (2014) Convolutional neural networks for speech recognition. IEEE/ACM Trans Audio Speech Lang Process 22(10):1533–1545

2. Acharya UR, Fujita H, Sudarshan VK, Sree VS, Eugene LWJ, Ghista DN, San Tan R (2015) An integrated index for detection of sudden cardiac death using discrete wavelet transform and nonlinear features. Knowl-Based Syst 83:149–158

3. Acharya UR, Fujita H, Adam M, Lih OS, Hong TJ, Sudarshan VK, Koh JE (2016) Automated characterization of arrhythmias using nonlinear features from tachycardia ECG beats. In: 2016 IEEE international conference on systems, man, and cybernetics (SMC). IEEE, pp 000533–000538

4. Acharya UR, Fujita H, Sudarshan VK, Oh SL, Adam M, Koh JE, Tan JH, Ghista DN, Martis RJ, Chua CK et al (2016) Automated detection and localization of myocardial infarction using electrocardiogram: a comparative study of different leads. Knowl-Based Syst 99:146–156

5. Acharya UR, Fujita H, Adam M, Lih OS, Sudarshan VK, Hong TJ, Koh JE, Hagiwara Y, Chua CK, Poo CK et al (2017) Automated characterization and classification of coronary artery disease and myocardial infarction by decomposition of ECG signals: a comparative study. Inf Sci 377:17–29

6. Acharya UR, Fujita H, Lih OS, Adam M, Tan JH, Chua CK (2017) Automated detection of coronary artery disease using different durations of ECG segments with convolutional neural network. Knowl-Based Syst 132:62–71

7. Acharya UR, Fujita H, Lih OS, Hagiwara Y, Tan JH, Adam M (2017) Automated detection of arrhythmias using different intervals of tachycardia ECG segments with convolutional neural network. Inf Sci 405:81–90

8. Acharya UR, Fujita H, Oh SL, Hagiwara Y, Tan JH, Adam M, Tan RS (2018) Deep convolutional neural network for the automated diagnosis of congestive heart failure using ECG signals. Appl Intell 49(1):16–27. https://doi.org/10.1007/s10489-018-1179-1

9. Acharya UR, Fujita H, Oh SL, Raghavendra U, Tan JH, Adam M, Gertych A, Hagiwara Y (2018) Automated identification of shockable and non-shockable life-threatening ventricular arrhythmias using convolutional neural network. Futur Gener Comput Syst 79:952–959

10. Amiri M, Lina JM, Pizzo F, Gotman J (2016) High frequency oscillations and spikes: separating real HFOs from false oscillations. Clin Neurophysiol 127(1):187–196

11. Banaee H, Ahmed M, Loutfi A (2013) Data mining for wearable sensors in health monitoring systems: a review of recent trends and challenges. Sensors 13(12):17472–17500

12. Chow GV, Marine JE, Fleg JL (2012) Epidemiology of arrhythmias and conduction disorders in older adults. Clin Geriatr Med 28(4):539–553

13. Chugh SS, Havmoeller R, Narayanan K, Singh D, Rienstra M, Benjamin EJ, Gillum RF, Kim YH, McAnulty JH, Zheng ZJ et al (2013) Worldwide epidemiology of atrial fibrillation: a global burden of disease 2010 study. Circulation, pp CIRCULATIONAHA–113

14. DESA U (2015) United Nations Department of Economic and Social Affairs, Population Division. World population prospects: the 2015 revision, key findings and advance tables. Tech. rep., Working Paper No ESA/P/WP. 241

15. Desai U, Martis RJ, Acharya UR, Nayak CG, Seshikala G, Shetty KR (2016) Diagnosis of multiclass tachycardia beats using recurrence quantification analysis and ensemble classifiers. J Mech Med Biol 16(01):1640005

16. Fujita H, Acharya UR, Sudarshan VK, Ghista DN, Sree SV, Eugene LWJ, Koh JE (2016) Sudden cardiac death (SCD) prediction based on nonlinear heart rate variability features and SCD index. Appl Soft Comput 43:510–519

17. Goldberger AL, Amaral LA, Glass L, Hausdorff JM, Ivanov PC, Mark RG, Mietus JE, Moody GB, Peng CK, Stanley HE (2000) PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation 101(23):e215–e220

18. Hagiwara Y, Fujita H, Oh SL, Tan JH, San Tan R, Ciaccio EJ, Acharya UR (2018) Computer-aided diagnosis of atrial fibrillation based on ECG signals: a review. Inf Sci 467:99–114

19. Hamed I, Owis MI (2016) Automatic arrhythmia detection using support vector machine based on discrete wavelet transform. J Med Imaging Health Inf 6(1):204–209

20. January CT, Wann LS, Alpert JS, Calkins H, Cigarroa JE, Conti JB, Ellinor PT, Ezekowitz MD, Field ME, Murray KT et al (2014) 2014 AHA/ACC/HRS guideline for the management of patients with atrial fibrillation: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines and the Heart Rhythm Society. J Amer Coll Cardiol 64(21):e1–e76

21. Kim Y (2014) Convolutional neural networks for sentence classification. arXiv:1408.5882

22. Lip GY, Brechin CM, Lane DA (2012) The global burden of atrial fibrillation and stroke: a systematic review of the epidemiology of atrial fibrillation in regions outside North America and Europe. Chest 142(6):1489–1498

23. Martis RJ, Acharya UR, Prasad H, Chua CK, Lim CM, Suri JS (2013) Application of higher order statistics for atrial arrhythmia classification. Biomed Signal Process Control 8(6):888–900

24. Martis RJ, Acharya UR, Adeli H (2014) Current methods in electrocardiogram characterization. Comput Biol Med 48:133–149

25. Martis RJ, Acharya UR, Adeli H, Prasad H, Tan JH, Chua KC, Too CL, Yeo SWJ, Tong L (2014) Computer aided diagnosis of atrial arrhythmia using dimensionality reduction methods on transform domain representation. Biomed Signal Process Control 13:295–305

26. Moreno-Torres JG, Sáez JA, Herrera F (2012) Study on the impact of partition-induced dataset shift on k-fold cross-validation. IEEE Trans Neural Netw Learn Syst 23(8):1304–1312

27. Pudukotai Dinakarrao SM, Jantsch A (2018) ADDHard: arrhythmia detection with digital hardware by learning ECG signal. In: Proceedings of the 2018 on great lakes symposium on VLSI. ACM, pp 495–498

28. Rezende DJ, Mohamed S, Wierstra D (2014) Stochastic backpropagation and approximate inference in deep generative models. arXiv:1401.4082

29. Ruschitzka F, Abraham WT, Singh JP, Bax JJ, Borer JS, Brugada J, Dickstein K, Ford I, Gorcsan J III, Gras D et al (2013) Cardiac-resynchronization therapy in heart failure with a narrow QRS complex. N Engl J Med 369(15):1395–1405

30. Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

31. Tseng WC, Wu MH, Chen HC, Kao FY, Huang SK (2016) Ventricular fibrillation in a general population – a national database study. Circ J 80(11):2310–2316

32. Waldo AL (2017) Atrial fibrillation and atrial flutter: two sides of the same coin! Int J Cardiol 240:251–252

Acknowledgements

Support from the Specific Research Project “Socio-economic models and autonomous systems 2” in Faculty of Informatics and Management, University of Hradec Kralove, is gratefully acknowledged.

Cite this article

Fujita, H., Cimr, D. Decision support system for arrhythmia prediction using convolutional neural network structure without preprocessing. Appl Intell 49, 3383–3391 (2019). https://doi.org/10.1007/s10489-019-01461-0

DOI: https://doi.org/10.1007/s10489-019-01461-0