Abstract

We demonstrate the application of NUDGE (Narrow, Understand, Discover, Generate, Evaluate), a behavioral economics approach to systematically identifying behavioral barriers that impede behavior enactment, to the challenge of evidence-based practice (EBP) use in community behavioral health. Drawing on 65 clinician responses to a system-wide crowdsourcing challenge about EBP underutilization, we applied NUDGE to discover, synthesize and validate specific behavioral barriers to EBP utilization that directly inform the design of tailored implementation strategies. To our knowledge, this is the first study to apply behavioral economic insights to clinician-proposed solutions to implementation challenges in order to design implementation strategies. The study demonstrates the successful application of NUDGE to implementation strategy design and provides novel targets for intervention.

The majority of people seeking mental health services do not receive treatments shown to improve clinical outcomes in rigorous randomized controlled trials, despite the accumulation of evidence and consensus for effective psychosocial treatments for psychiatric disorders (Ghandour et al. 2019; United States Congress Senate Committee on Health Pensions Subcommittee on Substance Abuse Mental Health Services 2004; United States President's New Freedom Commission on Mental Health 2003), and significant policy efforts to endorse, promote, and actively implement these evidence-based practices (EBPs). The field of implementation science was developed to better understand factors that facilitate or impede the implementation of EBPs and to develop and test strategies to increase effective implementation (Williams and Beidas 2019).

Though the field of implementation science has advanced considerably in the past two decades, implementation strategies, the interventions used to increase adoption, implementation, and sustainment of EBPs in health services (Brownson et al. 2017), are still rarely developed in a systematic way that targets specific barriers to implementation (Powell et al. 2019). For example, a recent systematic review found that only 10% of trials testing an implementation strategy in mental health settings identified a priori the specific barrier the strategy addressed or the mechanism through which the strategy was believed to improve implementation, and none of the trials that tested a mechanism or barrier supported the strategy’s underlying theory of change (Williams 2016). Additionally, there is evidence that traditional elicitation methods for identifying barriers (e.g., qualitative interviews, surveys) often fail to surface the actual barrier to behavior, because people may not be consciously aware of the true impediments to their behavior (Lopetegui et al. 2014). This suggests that (a) many implementation strategies are not designed using a clear, scientifically grounded theory of change regarding the specific behavioral barriers that impede or facilitate the use of EBPs, and (b) those that are designed with an underlying theory of change are not working in the way the investigators anticipated.

Behavioral barriers are cognitive or psychological processes, operating before or during decision-making or behavioral enactment, that impede or otherwise get in the way of achieving a target or focal behavior. They are distinct from structural barriers, which are factors external to the person that may impede the person’s ability to select or enact a specific behavior. Behavioral barriers often operate outside of conscious awareness and are the focus of a large body of empirical research within the field of behavioral economics (Buttenheim et al. 2019; Datta and Mullainathan 2014; Spring et al. 2016). Behavioral barriers emerge at the intersection of psychological or cognitive factors and the context of the decisions and actions that comprise the behavioral target.

In the current study, we used a novel approach (called NUDGE, for Narrow, Understand, Discover, Generate, and Evaluate) to rigorously identify behavioral barriers to EBP implementation in community mental health settings. NUDGE is a systematic design approach adapted by one author (AB) from existing behavioral economics, behavioral design, and innovation methodologies (e.g., Asch et al. 2014; Council 2005; Darling et al. 2017; Datta and Mullainathan 2014; Kok et al. 2016; Tantia 2017) as a methodological advance in implementation strategy design. NUDGE was developed for a parent project focused on applying insights from behavioral economics to advance the science and practice of implementing EBPs in community mental health. NUDGE distills barriers to an identified problem and identifies principles and constructs from behavioral economics that can inform the design of implementation strategies to address those barriers. A central thesis of behavioral economics is that people often do not behave or make decisions “rationally,” as most traditional utility-maximization models posit (Fiske and Taylor 2013; Kahneman and Tversky 1979; Tversky and Kahneman 1981). Instead, people make the best choices they can given their preferences, their limited cognitive and attentional resources, and the information available to them. The field of behavioral economics emerged from research on the limitations of human judgment and decision-making pioneered by Tversky and Kahneman, and offers novel ways to identify and leverage these “predictably irrational” (Ariely 2008) tendencies to design behavioral solutions. Examples of strategies that leverage insights from behavioral economics include strategically deployed financial incentives and “nudges,” such as changing the default setting in an electronic health record to make it easier for individuals to perform a desired behavior (Patel et al. 2016).
Behavioral economic theories and methods, drawn from fields as diverse as cognitive psychology and economics, have been successful in identifying behavioral barriers to address public health challenges (Kooreman and Prast 2010), and have recently shown promise in solving implementation problems in medical settings (Patel et al. 2018). However, they have not yet been employed to aid implementation strategy design in mental health.

Despite evidence that tailoring strategies to obstacles may increase the effectiveness of implementation efforts, the development of implementation strategies to enhance the use of evidence-based practices is frequently not itself evidence-based (Baker et al. 2015; Powell et al. 2017). One way of addressing the implementation gap in mental health services, and the premise of the current paper, is to design implementation strategies using approaches that delineate the specific behavioral barriers that impede EBP use in mental health settings. The current study applies the NUDGE method to a novel set of data (ideas generated by clinicians through a crowdsourcing challenge) to identify behavioral barriers and insights that can directly inform implementation strategy design in community mental health.

Methods

Setting

We conducted this study within a publicly-funded behavioral health system in Philadelphia County. The Philadelphia Department of Behavioral Health and Intellectual disAbility Services (DBHIDS) is a large publicly-funded behavioral health system that oversees services provided to approximately 169,000 individuals annually. Since 2007, DBHIDS has supported the implementation of five EBPs by providing training and ongoing consultation and by dedicating internal staff time to coordinate implementation: Cognitive Behavior Therapy (Creed et al. 2014; Stirman et al. 2009), Trauma-Focused Cognitive-Behavioral Therapy (Beidas et al. 2016; Cohen et al. 2004), Prolonged Exposure (Foa et al. 2005), Dialectical Behavior Therapy (Linehan et al. 2006), and Parent–Child Interaction Therapy (Thomas et al. 2017). The Evidence-Based Practice and Innovation Center (EPIC) was launched in 2013 to provide a centralized infrastructure for these EBP initiatives and to align policy, fiscal, and contracting processes for the delivery of EBPs, including an EBP “designation” process coupled with enhanced rates (Beidas et al. 2013, 2015, 2019b; Powell et al. 2016). This study is part of a federally-funded research program applying behavioral economics to the implementation of evidence-based practices in community mental health settings, and is situated within one of its research projects, which focuses on eliciting clinician preferences and processes around EBP implementation (Beidas et al. 2019a).

Data

The raw data used in our analysis were 65 proposed solutions to the problem of EBP underutilization submitted by 55 clinicians in Philadelphia’s publicly-funded mental health system (Stewart et al. 2019). Clinicians submitted their ideas in response to a system-wide, web-based crowdsourcing challenge, called an innovation tournament, that was conducted as part of the parent program described above (Beidas et al. 2019a). Innovation tournaments are a form of crowdsourcing designed to elicit divergent and novel solutions to complex and intractable problems by leveraging the direct experience, expertise, and practice wisdom of the frontline providers and staff who work within a system and encounter the challenge frequently. Innovation tournaments have become increasingly popular and have been used in a variety of settings, including healthcare, technology, law, and energy (Terwiesch and Ulrich 2009; Bjelland and Wood 2008; Jouret 2009; Mak et al. 2019; Merchant et al. 2014; Wong et al. 2020). “The Philadelphia Clinician Crowdsourcing Challenge” invited clinicians from the 210 publicly-funded behavioral health organizations in Philadelphia to submit an idea via email in response to the following prompt: “How can your organization help you use evidence-based practices in your work?” The 65 proposed solutions to EBP underutilization were categorized into eight non-mutually exclusive categories: training (42%), financing and compensation (26%), clinician support and preparation tools (22%), client support (22%), EBP-focused supervision (17%), changes to the scope or definition of EBPs (8%), changes to the system and structure of publicly-funded behavioral health care (6%), and Other (11%). The tournament process and results are detailed elsewhere (Stewart et al. 2019).
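Because the categories are non-mutually exclusive, the reported shares need not sum to 100%. The minimal Python sketch below uses only the percentages reported above to make that arithmetic explicit. The shortened category labels and the derived "categories per idea" figure are illustrative rather than reported values, and because the published shares are rounded, the derived figure is approximate.

```python
# Category shares reported for the 65 tournament ideas (percent of ideas).
# Categories are non-mutually exclusive, so one idea can count toward several.
category_share = {
    "training": 42,
    "financing and compensation": 26,
    "clinician support and preparation tools": 22,
    "client support": 22,
    "EBP-focused supervision": 17,
    "scope or definition of EBPs": 8,
    "system and structure of care": 6,
    "other": 11,
}

# The total exceeds 100 because ideas could be coded into multiple categories.
total_share = sum(category_share.values())

# Approximate average number of categories per idea (shares are rounded).
mean_labels_per_idea = total_share / 100

print(f"Sum of category shares: {total_share}%")
print(f"Approximate categories per idea: {mean_labels_per_idea:.2f}")
```

In this reading, the average idea touched roughly one and a half categories, which is why no single category share should be interpreted as an exclusive count.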

Approach

Framework

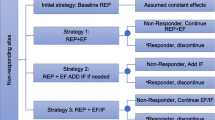

We used the NUDGE framework for designing behaviorally-informed interventions (see Fig. 1). The NUDGE approach begins when investigators Narrow the focus of the analysis to a specific, relevant behavioral target. Next, investigators seek to Understand the context of the behavior through inquiry into the decision-making process and related actions. Investigators then Discover insights about barriers to the target behavior by combining the rich contextual inquiry from the prior step (practitioner-proposed solutions, in the current adaptation) with core principles from behavioral science (cognitive biases and heuristic thinking) in a structured brainstorming process around the cues, alternatives, and meanings of the target behavior. These insights are used to Generate intervention strategies and designs, and to Evaluate those designs through iterative prototyping and trialing. In this paper, we report the results of the Narrow, Understand, and Discover steps; the Generate and Evaluate steps will be completed in future work.

Analysis

The Narrow and Understand steps of the NUDGE process were undertaken in the Innovation Tournament described above. The target behavior was identified a priori as implementing EBPs with fidelity in community mental health settings. This study reports the results of the Discover step, which was carried out by a multidisciplinary team including licensed mental health clinicians; doctorally-prepared researchers in economics, public health, and psychology; and graduate student trainees in psychology, nursing, and behavioral and decision science. We identified and mapped barriers to successful implementation of EBPs in session (the behavioral target identified in the Narrow step), using a three-step process:

(1) Formulate hypotheses about behavioral barriers to the target behavior through a structured process that linked our understanding of the target behavior, as reflected in the raw ideas, to known cognitive biases, heuristics, and other psychological elements that may generate behavioral barriers; (2) synthesize the hypothesized barriers; and (3) rapidly validate the identified barriers through member checking, expert consultation, and literature review. Importantly, Steps 2 and 3 are iterative and were cycled through multiple times. The output of the process was a coherent, validated set of barriers to the target behavior (i.e., implementing EBPs with fidelity in community mental health settings) that can be used to generate focused, targeted implementation strategy designs. Below, we describe our methodology in detail for transparency and reproducibility.

Step 1: Brainstorm Hypotheses About Behavioral Barriers

Step 1 begins a process of structured brainstorming to identify behavioral barriers to the target behavior by linking information from the contextual inquiry to common biases and heuristics from the cognitive psychology, social psychology, and behavioral economics literature. First, six investigators immersed themselves in the full set of ideas generated in the innovation tournament and familiarized themselves with leading cognitive, social, and behavioral biases and heuristics from the psychology and behavioral sciences literature. Many lists, references, textbooks, and taxonomies of common psychological factors exist (e.g., Behavioral Science Concepts, n.d.; Benson 2016; Health Interventions, n.d.; ideas42 2018; “List of Cognitive Biases” n.d.; Luoto and Carman 2014; Pinto et al. 2014; Samson 2017). Because of its usability and comprehensiveness, the authors used the Cognitive Bias Codex as the primary source for cognitive biases and other relevant constructs from behavioral economics (Benson 2016). Following immersion in the raw ideas and the Cognitive Bias Codex, investigators worked independently to formulate multiple hypotheses about possible behavioral barriers that impeded the target behavior (i.e., use of EBP with fidelity in community mental health settings). During hypothesis brainstorming and formulation, investigators drew on a set of prompts about the cues, alternatives, and meanings associated with each decision or action step of the target behavior; these prompts helped link the raw data (from the Understand step) to specific behavioral principles in order to discover novel insights about the target behavior. Prompts related to cues examined the point at which a decision or action was prompted (e.g., How does the environment cue action or fail to cue action?).
Prompts related to alternatives examined what other choices were available, or how easy it was to choose something else (e.g., How large or numerous is the choice set of possible competing behaviors?). Prompts related to meanings examined the identities that a particular action may invoke for the participant (e.g., What identities are associated with this behavior?). There were two sets of prompts: one focused on the decision or intention, before a session, to use EBP, and one focused on the action and deployment of EBP in the session. Drawing on these prompts, the raw data, and the Cognitive Bias Codex, the investigators formulated specific hypotheses about barriers to EBP implementation related to empirically-validated cognitive biases and heuristics. At the Discover step, the goal is to brainstorm as many hypotheses as possible in order to uncover a broad and comprehensive range of possible barriers.

For example, numerous ideas submitted to the tournament proposed checklists, “one-pagers,” or other decision aids that a clinician could bring into the session. This suggested that one barrier to delivering evidence-based treatments is remembering the multi-step, protocolized repertoires of behavior required for high-fidelity therapy. An underlying psychological principle is Miller’s Law (Miller 1956; Robert 2005), which holds that people can hold only a limited number of items (roughly seven, plus or minus two) in working memory at once. This principle yielded multiple specific insights and hypothesized barriers related to the difficulty of executing complex protocols and the high cognitive load on therapists, particularly when cognitive and attentional resources are scarce.
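One way to make the Step 1 structure concrete is to treat each hypothesis as a record linking a raw idea, a prompt set (phase), a prompt lens, a behavioral principle, and a hypothesized barrier. The Python sketch below is hypothetical: the `Hypothesis` record layout and its field names are an illustration, not a tool used in the study, and the phase and lens assigned to the checklist example are assumptions, since the text does not report them.

```python
from dataclasses import dataclass
from itertools import product

# The two prompt sets and three prompt lenses described in Step 1.
PHASES = ("decision before session", "action in session")
LENSES = ("cues", "alternatives", "meanings")


@dataclass(frozen=True)
class Hypothesis:
    """One brainstormed hypothesis (hypothetical record layout)."""
    source_idea: str  # raw tournament idea (Understand step)
    phase: str        # which prompt set was used
    lens: str         # cue, alternative, or meaning prompt
    principle: str    # behavioral principle, e.g., from the Cognitive Bias Codex
    barrier: str      # hypothesized behavioral barrier

    def __post_init__(self):
        # Reject records that do not map onto the prompt grid.
        if self.phase not in PHASES or self.lens not in LENSES:
            raise ValueError("phase and lens must come from the prompt grid")


# Every phase x lens cell an investigator could work through for a given idea.
prompt_cells = list(product(PHASES, LENSES))

# Encoding of the checklist example; the phase and lens are assumed, not reported.
example = Hypothesis(
    source_idea="checklists or one-pagers to bring into session",
    phase="action in session",
    lens="cues",
    principle="Miller's Law (working-memory limits)",
    barrier="difficulty remembering multi-step EBP protocols in session",
)

print(len(prompt_cells))
print(example.barrier)
```

Crossing the two prompt sets with the three lenses gives six prompt cells per idea, which conveys why independent brainstorming across six investigators produced a large, overlapping pool of hypotheses.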

Step 2: Synthesize Hypothesized Barriers

Because the investigators worked separately and did not limit or curtail brainstorming at this stage, many hypotheses were duplicated across sets. Additionally, some hypotheses could be combined with others to form an overarching theme. In Step 2, two investigators (AB, RS) de-duplicated and synthesized the full set of hypothesized barriers generated by the six investigators in Step 1, using an iterative process that included both independent and collaborative steps to ensure rigor and validity. Differences were resolved through discussion and consensus. Step 2 was completed iteratively with Step 3.

Step 3: Rapidly Validate Identified Barriers

To ensure the trustworthiness and reproducibility of the findings, the researchers subjected their list of hypotheses to a validation process that included expert consultation (two licensed psychologists), literature review, and two interviews with clinicians who had participated in other aspects of the larger project. Interviews followed a structured guide that elicited clinicians’ feedback on the EBP implementation process, focusing on topics relevant to assessing the face validity of specific hypotheses. Step 3 was completed iteratively with Step 2.
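The retention rule reported in the Results (a hypothesis counted as validated if one or more validation methods supported it) amounts to a simple "any of" decision rule. In the hypothetical Python sketch below, the three methods mirror those listed above, but the hypothesis labels and their support sets are invented placeholders, not study data.

```python
from enum import Enum
from typing import AbstractSet


class Method(Enum):
    """The three validation methods described in Step 3."""
    EXPERT = "expert consultation"
    LITERATURE = "literature review"
    INTERVIEW = "clinician interview"


def is_validated(support: AbstractSet[Method]) -> bool:
    """Retain a hypothesis if at least one validation method supports it."""
    return len(support) >= 1


# Hypothetical placeholders for illustration only; not study data.
support_by_hypothesis = {
    "hypothesis A": {Method.EXPERT, Method.LITERATURE},
    "hypothesis B": {Method.INTERVIEW},
    "hypothesis C": set(),
}

# Dicts preserve insertion order, so retained hypotheses keep their listed order.
retained = [
    name for name, support in support_by_hypothesis.items() if is_validated(support)
]
print(retained)
```

An "any of" rule is deliberately permissive; a stricter variant (e.g., requiring two methods) would be a one-line change to `is_validated`, but the text reports only the one-or-more threshold.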

Results

In Step 1, the six investigators separately generated 156 hypothesized behavioral barriers to the focal behavior (range: 18–35 hypotheses per investigator). Two investigators (RS, AB) then carried out Step 2, independently de-duplicating and synthesizing the full set of 156 hypotheses; one investigator (AB) identified 41 discrete hypotheses, and the other (RS) identified 29. The two lists were combined and, through discussion, further synthesized into 21 hypotheses.

Step 3 (rapid validation) proceeded as described above. Two clinical experts (the first author, RS, and a licensed clinical psychologist who has watched 10,000 hours of video of community clinicians in session and is an expert in the Therapy Process Observational Coding System for Child Psychotherapy Strategies) reviewed the hypotheses for face validity. The first author (RS), a licensed psychologist and expert in the clinician decision-making literature, conducted a literature review for evidence of these hypotheses in the published scientific literature. Two interviews with community-based clinicians who had previously engaged with the parent project were conducted to elicit further input on clinicians’ experiences with EBPs, with prompts focused on themes relevant for validation. Ultimately, 16 of the 21 hypotheses were validated by one or more validation methods. Two investigators then returned to Step 2 for further synthesis. For example, through Steps 2 and 3, “EBP seems good for other clients, but not for mine,” “My clients are different,” and “EBPs don’t work with this population” were combined into one hypothesized barrier (i.e., EBPs don’t work here). Similarly, the hypothesized barriers “my plan to do an EBP goes out the window when the patient comes in upset” and “when talking to a client there is so much going on I can’t fit my EBP techniques in” were synthesized into one barrier (i.e., Just get through the session). The hypothesis “What I do works” arose from a synthesis of hypothesized barriers such as “I’m not an EBP person” and “I meet the client where s/he is instead of following procedural details.” Our final list of six validated hypotheses is organized along the temporal continuum implicit in our target behavior, from deciding or planning to do EBP through preparation and real-time deployment of the selected EBP during the session. Figure 1 summarizes the hypothesis distillation process from 156 to 6.
The six hypotheses, the associated cognitive biases, and the evidence from the contextual inquiry are described below, summarized in Table 1, and organized into two categories: Planning to Do EBP and Deploying EBPs. The last column of Table 1 describes potential implementation strategy designs that might emerge in the Generate phase of NUDGE, which will follow the Discover phase detailed in this paper.
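The counts reported for the Discover step can be tabulated as a simple funnel. The Python sketch below uses only the numbers reported above; the stage labels are paraphrases, and the printed ratios compare each count with the 156 initial hypotheses rather than measuring how many original hypotheses "survived," because synthesis merges several hypotheses into one.

```python
# Hypothesis counts at each stage of the Discover step, as reported in the text.
# The two independent Step 2 syntheses (AB: 41, RS: 29) are listed separately.
funnel = [
    ("Step 1: brainstormed (six investigators)", 156),
    ("Step 2: independent synthesis by AB", 41),
    ("Step 2: independent synthesis by RS", 29),
    ("Step 2: combined through discussion", 21),
    ("Step 3: validated by one or more methods", 16),
    ("Steps 2-3 iterated: final barriers", 6),
]

initial = funnel[0][1]
for stage, count in funnel:
    # Ratio of counts only: merging makes this a compression ratio,
    # not a retention rate for individual hypotheses.
    print(f"{stage:<42} {count:>4}  {count / initial:6.1%} of initial count")

# Mean hypotheses per investigator in Step 1 (reported range: 18-35).
mean_per_investigator = initial / 6

# Share of the 21 combined hypotheses supported by at least one validation method.
validation_rate = 16 / 21

print(f"Mean per investigator: {mean_per_investigator:.1f}")
print(f"Validation rate: {validation_rate:.0%}")
```

The mean of 26 hypotheses per investigator falls inside the reported 18–35 range, and roughly three quarters of the 21 combined hypotheses were supported by at least one validation method before the final synthesis into six barriers.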

Planning to Do EBP

This category encompasses barriers related to forming the initial plan to do EBP in a session. These barriers emerge before a session, when a clinician is deciding or planning whether to use EBP during the session. We found three hypothesized barriers that emerge at this stage.

EBPs Don’t Work Here

A striking behavioral barrier that emerged at the planning step is resistance to EBP due to a belief that EBPs don’t work for a community population or that efficacy data do not generalize to clinical practice in the community. A common feature of the tournament ideas was requests for demonstrations that EBPs are applicable and relevant to the populations seen in community clinics. For example, one idea suggested the development of a database of EBPs for transgender populations. Another participant proposed that organizations support case conferences in which employees show how EBPs work with the organization’s own clients. These ideas link to the base rate fallacy (ignoring background probabilities in favor of event-specific information; Bar-Hillel 1980) and the anecdotal fallacy (generalizing from possibly isolated incidents; Nisbett et al. 1985); clinicians may over-anchor on a small number of client experiences in which an EBP was not appropriate or effective. Confirmation bias (selectively seeking or attending to information that confirms existing beliefs; Nickerson 1998) can also reinforce the “EBPs don’t work” barrier.

What I Do Works

The counterpart to the “EBPs don’t work” barrier is a strongly held belief that “What I do works.” Our mapping process revealed that some clinicians’ professional identities or therapeutic mindsets do not include EBP use, which impedes planning to use EBPs. This hypothesis was motivated by ideas that suggested training opportunities focused on the “art” of therapy (e.g., building rapport, fostering client engagement, and serving as a patient advocate), in contrast to training in the formalized techniques and skills more characteristic of EBP protocols. Other tournament ideas suggested that less emphasis should be placed on one-size-fits-all implementation of EBPs if current therapeutic approaches were working better. The “What I do works” barrier highlights the strength of mental models (deeply held beliefs about how things work that help people interpret their world; Johnson-Laird 2010) and identity priming (when one’s identity influences one’s response to a stimulus; Benjamin et al. 2010). Clinicians’ experiences, dating back to their graduate training and continuing through current clinical practice, produce a mental model about the efficacy or appropriateness of the therapeutic practices in which they are better trained and more comfortable. Finally, status quo bias (Kahneman et al. 1991) suggests that clinicians will stick with current therapeutic modalities as the default unless motivated or incentivized to train in and deliver EBPs.

No One Else is Doing EBP

Humans are highly influenced by social norms (Meeker et al. 2016; Sherif 1936), both descriptive (what people actually do) and injunctive (what people think they should do), and clinicians are no exception. Our third hypothesized barrier to forming a plan or deciding to implement EBPs was the perception that other clinicians (and the agency more broadly) do not value or engage in EBPs. Multiple tournament ideas suggested that clinicians make their EBP use more visible, or that agencies more strongly signal that they value and support EBP use, for example by providing EBP-specific supervision. Clinicians’ requests that their peers actively demonstrate use of EBPs suggest that following socially normative practices is important to them. In a busy clinical environment with heavy demands on cognitive and attentional bandwidth, the salience (Kahneman 2003) of EBPs and EBP use is also low. When a practice such as EBP use does not appear normative and has low salience, status quo bias (Kahneman et al. 1991) may also be activated.

Deploying EBPs

This category consists of barriers that emerged at the moment of preparing to execute EBPs in session or at the actual moment of execution in session.

I Do Not Make a Plan

Multiple ideas in the tournament proposed paying clinicians for session preparation time or offering other incentives to prepare for EBP sessions. This indicated that a potential barrier to EBP delivery in session is a lack of time to plan and prepare before sessions. Clinicians may hold “EBP mindsets” and intend to use EBPs but forget or change their minds in the moment. This is related to the behavioral insight of prospective memory failure (Brandimonte et al. 1996), or forgetting to perform an intended action at the right time. It may also relate to hassle factors (Bertrand et al. 2004): clinicians may want to deliver an EBP session but lack the time to do the necessary planning, especially with a full caseload. These hassle factors may prevent them from executing an EBP in the session.

EBPs are Hard

A consistent theme in the literature on EBPs in mental health practice, strongly echoed in our innovation tournament, is that EBP delivery, particularly with fidelity, is difficult. Ideas submitted through the innovation tournament suggested breaking EBPs down into small, doable steps with decision aids, reminders, and memory aids, including “one-pagers,” checklists, and short demonstration videos. Our insight into this barrier is informed by behavioral principles related to cognitive load (Bertrand et al. 2004; Paas and Van Merriënboer 1994), or the very real demand on a person’s mental resources from executing complex, multi-faceted, multi-component procedures such as EBPs while processing complex information. Even a well-trained clinician with strong EBP intentions has to make decisions about executing techniques in session that are not specified in any EBP manual. This uncertainty may elicit a negative affective response (Slovic et al. 2002) and result in avoidance of the technique. This avoidance may be fueled by ambiguity aversion (Akerlof 1991; Karlsson et al. 2009), a preference for actions that lead to known or certain outcomes over those that lead to unknown or risky outcomes (such as embarking on an EBP technique with limited time left in the session).

Just Get Through the Session

The final barrier, emerging at the execution stage, is “getting through the session.” It was informed by ideas proposing strategies to prepare and calm clients before the session, such as a more relaxing waiting room, or funding case managers to shift social services work outside of the therapy room. Our insight into this barrier is informed by the hot–cold empathy gap, the phenomenon that people have difficulty predicting how they will behave in future affective states that differ from their current state (Loewenstein 2005). Clinicians may have difficulty executing an EBP while in session with an emotional patient, and may also persistently fail to remember (when in a “cold state”) how disruptive the client’s and clinician’s “hot states” will be to effective EBP delivery. This can leave clinicians unprepared or underprepared to bring a session back to EBP delivery if it goes off track, or overoptimistic or overambitious about their ability to deliver an EBP with fidelity in a session where both client and clinician are in a “hot state.” At the same time, a clinician in the middle of a session with a challenging client or a challenging situation is unable to recall the plans, intentions, and coping strategies that their “cold state” self put in place for the session.

Discussion

To our knowledge, this is the first study to infer behavioral barriers to EBP implementation from clinicians’ proposed solutions as a basis for developing implementation strategies. We applied a novel methodology informed by behavioral economics to data from a diverse swath of clinicians engaged through an innovation tournament. We treated these ideas as raw data, that is, as signals or cues for identifying the behavioral barriers (and corresponding cognitive biases) to the implementation of EBPs. These results deepen our understanding of clinician decision-making through the lens of cognitive biases, facilitating our ability to design targeted and novel implementation strategies.

Consistent with other studies (Beidas et al. 2015; Stewart et al. 2012), the behavioral barriers we identified through NUDGE largely fell into two broad categories: those related to making a plan to do EBP and those related to execution of the EBP in session. These different categories of behavioral barriers (and corresponding cognitive biases) may represent different populations of clinicians, and will require different strategies for intervention. Specifically, clinicians who are encumbered by attitudinal barriers related to making a plan to do EBP may lack the desire or motivation to use EBP. The implications for design (in the forthcoming Generate phase) are that strategies should seek to disrupt clinicians’ current mental models of what EBP signifies (e.g., by leveraging the “vividness effect” (Taylor and Thompson 1982) via realistic, detailed, and emotionally compelling studies) or to make descriptive or prescriptive norms more salient and persuasive via social comparison (Meeker et al. 2016).

The problem of EBP use is different for clinicians who are encumbered by behavioral barriers related to executing the EBP. The challenge for this group is not attitudinal; rather, it is helping these clinicians take action to meet their espoused EBP goals. This may require concrete planning, coping, and checklist strategies, because objectives are more likely to be achieved when they are accompanied by simple, specific plans (Casper 2008; Gollwitzer 1999; Milkman et al. 2011). Effective strategies for this group developed in the Generate stage might also target hassle factors and cognitive load, helping therapists to “chunk” sessions into manageable, simple techniques.

The present study illustrates the application of NUDGE, a systematic theoretically-informed approach to the identification of behavioral barriers informed by behavioral economics, that can also accompany conceptual frameworks that traditionally guide practice and research within implementation science (e.g., CFIR; Damschroder et al. 2009). NUDGE fills a gap in the implementation science literature related to tailored design by showing how raw inputs from frontline practitioners in community settings can be leveraged to generate robust, theory-informed behavioral insights about the drivers of implementation behavior which directly inform strategy design. A key contribution of NUDGE, distinct from intervention mapping and other traditional contextual inquiry approaches (e.g. Kok et al. 2016), is the explicit linking of well-characterized cognitive biases and heuristics to contextual data in order to identify specific barriers to the desired behavior that can inform intervention targets.

Using raw stated-preference data as a starting point from which to infer behavioral barriers that can be targeted with implementation strategies is an essential feature of NUDGE. Why not take participants’ stated preferences at face value? One reason is that asking people for ideas or to report on barriers does not always reveal the actual impediments to their behavior (Asch and Rosin 2015). Participants’ responses can nonetheless generate insights into behavioral barriers by revealing the underlying assumptions, cognitive biases, or heuristics that participants draw on when explaining the behavior. Our approach differs from traditional contextual inquiry in that we systematically investigated the implicit cognitive biases and heuristics that may contribute to implementation challenges. In other words, we do not aim to implement the preferences themselves, but rather implementation strategies informed by the behavioral insights that the preferences reveal.

NUDGE offers an opportunity to build implementation strategies from the ground up, supporting an in-depth understanding of clinicians’ real difficulties and the design of implementation strategies that map back to these challenges. The approach goes beyond simply eliciting stakeholder preferences; it gave us perspective on where barriers appear to get in the way of using EBPs and provided insight into how those barriers might be overcome. This rigorous process allowed us to reverse-engineer clinicians’ ideas into hypotheses about salient barriers to behavior. Importantly, some of these biases can be harnessed through implementation strategy design to encourage greater use of evidence-based practices by reframing the context of the choice.

We gleaned a rich set of hypotheses through this behavioral design process. Many of the hypothesized barriers have design implications unrelated to incentives (e.g., strategies that make EBPs easier to use). Additionally, many of the ideas in the tournament pertained to training strategies; yet clinicians in Philadelphia County have more training than the average community clinician, owing to a decade of system-supported initiatives that have trained hundreds of clinicians (Powell et al. 2016). We believe our diagnosis captured that training is not always the true preference but instead emerges downstream from a thought process such as, “I guess I don’t use EBP because I don’t feel prepared. How could I be better prepared? Oh, I guess I must need more training.” We suspect, instead, that additional training would not enhance this sense of competence or confidence, whereas simplification strategies (Service et al. 2014), such as skill-building techniques, preparation tools, reminders (Karlan et al. 2010), or aids that make implementation easier (and reduce cognitive load), may improve clinicians’ sense of mastery and therefore their execution. This again speaks to a strength of the study: going beyond ideas or suggestions at face value, similar to the “5 Whys” or “5 So Whats” used in process improvement, a technique in which one asks “why” a failure or problem has occurred five times in succession to get to its root cause (Arnheiter and Maleyeff 2005).

The NUDGE process is most useful for uncovering behavioral barriers to desired actions. In the context of mental health care, these actions might be taken by patients, clinicians, or administrators of health care delivery systems. In the current study, we narrowed our focus (the first step of the NUDGE process) to the clinician’s decision to use an EBP and the related action of deploying the EBP in session. The resulting behavioral barriers were therefore also focused at that level, although implementation strategies that emerge from this analysis may require organizational change (or at least leadership buy-in and commitment) to put in place. One promising area for future research is whether NUDGE can help identify behavioral biases in organizational decision-making (Behavioral Insights Team 2017). Example target behaviors include how payers and organizational leaders select and resource professional development activities for clinicians, or how they make decisions about reimbursement policies and incentive schemes.

Some limitations should be noted. First, our greatest strength is also a potential limitation: we inferred problems from suggested solutions, which are still self-reported. Second, these ideas came from community clinicians in one system in one part of the country; thus, the inferred behavioral barriers and insights may not generalize to community behavioral health at large. The value of NUDGE is that it can be applied in unique contexts to identify behavioral barriers for targeted populations; the extent to which behavioral barriers to EBP implementation identified in one population generalize to other populations remains an open empirical question. Third, although there are hundreds of EBPs from which to choose, we did not focus on that choice but rather on behavior further downstream, after a particular EBP has been selected. Lastly, NUDGE is designed to address only behavioral barriers rooted in cognitive biases and heuristics. By definition, then, this approach cannot address structural barriers (e.g., scarcity of resources), of which there are many in publicly funded behavioral health (Beidas et al. 2015; Skriner et al. 2017).

Conclusion and Future Directions

We gleaned a rich set of six hypothesized barriers to EBP implementation through the hybrid design of our contextual inquiry and used a novel method, NUDGE, to inform implementation strategy design. This study represents a first step toward understanding how a behavioral design process can inform implementation strategy development in mental health care settings. By enhancing methods for rigorous implementation strategy design, such as NUDGE, we can better tailor implementation strategies and advance the field of implementation science. Future work will transform these hypotheses into testable implementation strategies, such as paying clinicians for pre-session preparation time. We are optimistic about the continued application of this approach within implementation science to design relevant and impactful strategies that improve the quality of behavioral health services.

Availability of data and materials

Data will be made available upon request. Requests for access to the data can be sent to the Penn ALACRITY Data Sharing Committee. This Committee comprises the following individuals: Rinad Beidas, PhD, David Mandell, ScD, Kevin Volpp, MD, PhD, Alison Buttenheim, PhD, MBA, Steven Marcus, PhD, and Nathaniel Williams, PhD. Requests can be sent to the Committee’s coordinator, Kelly Zentgraf, at zentgraf@upenn.edu, 3535 Market Street, 3rd Floor, Philadelphia, PA 19107, 215-746-6038.

References

Akerlof, G. A. (1991). Procrastination and obedience. American Economic Review, 81(2), 1–19.

Ariely, D. (2008). Predictably irrational. New York: HarperCollins Publishers.

Arnheiter, E. D., & Maleyeff, J. (2005). The integration of lean management and Six Sigma. The TQM Magazine, 17(1), 5–18.

Asch, D. A., & Rosin, R. (2015). Innovation as discipline, not fad. New England Journal of Medicine, 373(7), 592–594.

Asch, D. A., Terwiesch, C., Mahoney, K. B., & Rosin, R. (2014). Insourcing health care innovation. New England Journal of Medicine, 370, 1775.

Baker, R., Camosso-Stefinovic, J., Gillies, C., Shaw, E. J., Cheater, F., Flottorp, S., et al. (2015). Tailored interventions to address determinants of practice. Cochrane Database of Systematic Reviews, 4, CD005470.

Bar-Hillel, M. (1980). The base-rate fallacy in probability judgments. Acta Psychologica, 44(3), 211–233.

Behavioral Insights Team. (2017). An exploration of potential biases in project delivery in the Department for Transport. Retrieved February 6, 2020 from https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/627788/exploration-of-behavioural-biases.pdf.

Behavioral Science Concepts. (n.d.). Retrieved February 6, 2020 from https://www.behavioraleconomics.com/resources/mini-encyclopedia-of-be/.

Beidas, R. S., Aarons, G. A., Barg, F., Evans, A. C., Hadley, T., Hoagwood, K. E., et al. (2013). Policy to implementation: Evidence-based practice in community mental health–study protocol. Implementation Science, 8(1), 38.

Beidas, R. S., Adams, D. R., Kratz, H. E., Jackson, K., Berkowitz, S., Zinny, A., et al. (2016). Lessons learned while building a trauma-informed public behavioral health system in the city of Philadelphia. Evaluation and Program Planning, 59, 21–32.

Beidas, R. S., Stewart, R. E., Adams, D. R., Fernandez, T., Lustbader, S., Powell, B. J., et al. (2015). A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Administration and Policy in Mental Health, 43(6), 893–908.

Beidas, R. S., Volpp, K. G., Buttenheim, A. N., Marcus, S. C., Olfson, M., Pellecchia, M., et al. (2019a). Transforming mental health delivery through behavioral economics and implementation science: Protocol for three exploratory projects. JMIR Research Protocols, 8(2), e12121.

Beidas, R. S., Williams, N. J., Becker-Haimes, E., Aarons, G., Barg, F., Evans, A., et al. (2019b). A repeated cross-sectional study of clinicians’ use of psychotherapy techniques during 5 years of a system-wide effort to implement evidence-based practices in Philadelphia. Implementation Science, 14(1), 67. https://doi.org/10.1186/s13012-019-0912-4.

Benjamin, D. J., Choi, J. J., & Strickland, A. J. (2010). Social identity and preferences. American Economic Review, 100(4), 1913–1928.

Benson, B. (2016). Cognitive bias cheat sheet. Better Humans. Retrieved February 6, 2020 from https://betterhumans.coach.me/cognitive-bias-cheat-sheet-55a472476b18.

Bertrand, M., Mullainathan, S., & Shafir, E. (2004). A behavioral-economics view of poverty. American Economic Review, 94(2), 419–423.

Bjelland, O. M., & Wood, R. C. (2008). An inside view of IBM’s ‘Innovation Jam’. MIT Sloan Management Review, 50(1), 32–40.

Brandimonte, M. A., Einstein, G. O., & McDaniel, M. A. (1996). Prospective memory: Theory and applications. New York: Psychology Press.

Brownson, R. C., Colditz, G. A., & Proctor, E. K. (2017). Dissemination and implementation research in health: Translating science to practice. Oxford: Oxford University Press.

Buttenheim, A. M., Levy, M. Z., Castillo-Neyra, R., McGuire, M., Vizcarra, A. M., Riveros, L. M., et al. (2019). A behavioral design approach to improving a Chagas disease vector control campaign in Peru. BMC Public Health, 19(1), 1272.

Casper, E. S. (2008). Using implementation intentions to teach practitioners: Changing practice behaviors via continuing education. Psychiatric Services, 59(7), 747–752.

Cohen, J. A., Deblinger, E., Mannarino, A. P., & Steer, R. A. (2004). A multisite, randomized controlled trial for children with sexual abuse–related PTSD symptoms. Journal of the American Academy of Child and Adolescent Psychiatry, 43(4), 393–402.

Design Council. (2005). Eleven lessons: A study of the design process. Retrieved February 6, 2020 from https://www.designcouncil.org.uk/sites/default/files/asset/document/ElevenLessons_Design_Council%20(2).pdf.

Creed, T. A., Stirman, S. W., & Evans, A. C. (2014). A model for implementation of cognitive therapy in community mental health: The Beck Initiative. Behavior Therapist, 37(3), 56–64.

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 50.

Darling, M., Lefkowitz, J., Amin, S., Perez-Johnson, I., Chojnacki, G., & Manley, M. (2017). Practitioner's playbook for applying behavioral insights into labor programs. Retrieved March 1, 2020 from https://www.ideas42.org/wp-content/uploads/2017/06/7-Practitioner-Playbook-Final-20170517.pdf.

Datta, S., & Mullainathan, S. (2014). Behavioral design: A new approach to development policy. Review of Income and Wealth, 60(1), 7–35.

Fiske, S. T., & Taylor, S. E. (2013). Social cognition: From brains to culture (2nd ed.). Thousand Oaks, CA: Sage.

Foa, E. B., Hembree, E. A., Cahill, S. P., Rauch, S. A., Riggs, D. S., Feeny, N. C., et al. (2005). Randomized trial of prolonged exposure for posttraumatic stress disorder with and without cognitive restructuring: Outcome at academic and community clinics. Journal of Consulting and Clinical Psychology, 73(5), 953.

Ghandour, R. M., Sherman, L. J., Vladutiu, C. J., Ali, M. M., Lynch, S. E., Bitsko, R. H., et al. (2019). Prevalence and treatment of depression, anxiety, and conduct problems in US children. The Journal of Pediatrics, 206, 256–267.e3.

Gollwitzer, P. M. (1999). Implementation intentions: Strong effects of simple plans. American Psychologist, 54(7), 493.

ideas42. (2018). Behavioral design for digital financial services: How to increase engagement with products and services that build financial health. Retrieved February 6, 2020 from https://www.ideas42.org/dfsplaybook/.

Johnson-Laird, P. N. (2010). Mental models and human reasoning. Proceedings of the National Academy of Sciences, 107(43), 18243–18250.

Jouret, G. (2009). Inside Cisco’s search for the next big idea. Harvard Business Review. Retrieved February 6, 2020 from https://hbr.org/2009/09/inside-ciscos-search-for-the-next-big-idea.

Kahneman, D. (2003). Maps of bounded rationality: Psychology for behavioral economics. American Economic Review, 93(5), 1449–1475.

Kahneman, D., Knetsch, J. L., & Thaler, R. H. (1991). Anomalies: The endowment effect, loss aversion, and status quo bias. Journal of Economic Perspectives, 5(1), 193–206.

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263–292.

Karlan, D., McConnell, M., Mullainathan, S., & Zinman, J. (2010). Getting to the top of mind: How reminders increase saving. Cambridge: National Bureau of Economic Research.

Karlsson, N., Loewenstein, G., & Seppi, D. (2009). The ostrich effect: Selective attention to information. Journal of Risk and Uncertainty, 38(2), 95–115.

Kok, G., Gottlieb, N. H., Peters, G.-J. Y., Mullen, P. D., Parcel, G. S., Ruiter, R. A., et al. (2016). A taxonomy of behaviour change methods: An Intervention Mapping approach. Health Psychology Review, 10(3), 297–312.

Kooreman, P., & Prast, H. (2010). What does behavioral economics mean for policy? Challenges to savings and health policies in the Netherlands. De Economist, 158(2), 101–122.

Linehan, M. M., Comtois, K. A., Murray, A. M., Brown, M. Z., Gallop, R. J., Heard, H. L., et al. (2006). Two-year randomized controlled trial and follow-up of dialectical behavior therapy vs therapy by experts for suicidal behaviors and borderline personality disorder. Archives of General Psychiatry, 63(7), 757–766.

List of cognitive biases. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/List_of_cognitive_biases.

Loewenstein, G. (2005). Hot-cold empathy gaps and medical decision making. Health Psychology, 24(4S), S49.

Lopetegui, M., Yen, P.-Y., Lai, A., Jeffries, J., Embi, P., & Payne, P. (2014). Time motion studies in healthcare: What are we talking about? Journal of Biomedical Informatics, 49, 292–299.

Luoto, J., & Carman, K. G. (2014). Behavioral economics guidelines with applications for health interventions. Washington, DC: Inter-American Development Bank. Retrieved March 1, 2020 from https://publications.iadb.org/publications/english/document/Behavioral-Economics-Guidelines-with-Applications-for-Health-Interventions.pdf.

Mak, R. H., Endres, M. G., Paik, J. H., Sergeev, R. A., Aerts, H., Williams, C. L., et al. (2019). Use of crowd innovation to develop an artificial intelligence-based solution for radiation therapy targeting. JAMA Oncology, 5(5), 654–661.

Meeker, D., Linder, J. A., Fox, C. R., Friedberg, M. W., Persell, S. D., Goldstein, N. J., et al. (2016). Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: A randomized clinical trial. JAMA, 315(6), 562–570.

Merchant, R. M., Griffis, H. M., Ha, Y. P., Kilaru, A. S., Sellers, A. M., Hershey, J. C., et al. (2014). Hidden in plain sight: A crowdsourced public art contest to make automated external defibrillators more visible. American Journal of Public Health, 104(12), 2306–2312.

Milkman, K. L., Beshears, J., Choi, J. J., Laibson, D., & Madrian, B. C. (2011). Using implementation intentions prompts to enhance influenza vaccination rates. Proceedings of the National Academy of Sciences of the United States of America, 108(26), 10415–10420.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175–220.

Nisbett, R. E., Borgida, E., Crandall, R., & Reed, H. (1985). Popular induction: Information is not necessarily informative. In D. Kahneman, P. Slovic, & A. Tversky (Eds.), Judgment under uncertainty: Heuristics and biases. New York: Cambridge University Press.

Paas, F. G., & Van Merriënboer, J. J. (1994). Variability of worked examples and transfer of geometrical problem-solving skills: A cognitive-load approach. Journal of Educational Psychology, 86(1), 122.

Patel, M. S., Day, S. C., Halpern, S. D., Hanson, C. W., Martinez, J. R., Honeywell, S., et al. (2016). Generic medication prescription rates after health system–wide redesign of default options within the electronic health record. JAMA Internal Medicine, 176(6), 847–848.

Patel, M. S., Volpp, K. G., & Asch, D. A. (2018). Nudge units to improve the delivery of health care. New England Journal of Medicine, 378(3), 214.

Pinto, D. M., Ibarrarán, P., Stampini, M., Carman, K. G., Guanais, F. C., Luoto, J., et al. (2014). Applying behavioral tools to the design of health projects. Washington, DC: Inter-American Development Bank. Retrieved February 6, 2020 from https://publications.iadb.org/bitstream/handle/11319/6563/Applying-Behavioral-Tools-to-the-Design-of-Health-Projects.pdf?sequence=1.

Powell, B. J., Beidas, R. S., Lewis, C. C., Aarons, G. A., McMillen, J. C., Proctor, E. K., et al. (2017). Methods to improve the selection and tailoring of implementation strategies. Journal of Behavioral Health Services and Research, 44(2), 177–194.

Powell, B. J., Beidas, R. S., Rubin, R. M., Stewart, R. E., Wolk, C. B., Matlin, S. L., et al. (2016). Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Administration and Policy in Mental Health, 43(6), 909–926.

Powell, B. J., Fernandez, M. E., Williams, N. J., Aarons, G. A., Beidas, R. S., Lewis, C. C., et al. (2019). Enhancing the impact of implementation strategies in healthcare: A research agenda. Frontiers in Public Health, 7, 3.

Robert, S. (2005). Categorization, reasoning, and memory from a neo-logical point of view. In H. Cohen & C. Lefebvre (Eds.), Handbook of categorization in cognitive science (pp. 699–717). Amsterdam: Elsevier.

Samson, A. (2017). The behavioral economics guide 2019 (with an introduction by Uri Gneezy). Retrieved February 6, 2020 from https://www.behavioraleconomics.com/BEGuide2019.pdf.

Service, O., Hallsworth, M., Halpern, D., Algate, F., Gallagher, R., Nguyen, S., et al. (2014). EAST: Four simple ways to apply behavioural insights. Behavioural Insights Team. Retrieved March 1, 2020 from https://www.behaviouralinsights.co.uk/wp-content/uploads/2015/07/BIT-Publication-EAST_FA_WEB.pdf.

Sherif, M. (1936). The psychology of social norms. Oxford, England: Harper.

Skriner, L. C., Wolk, C. B., Stewart, R. E., Adams, D. R., Rubin, R. M., Evans, A. C., et al. (2017). Therapist and organizational factors associated with participation in evidence-based practice initiatives in a large urban publicly-funded mental health system. Journal of Behavioral Health Services and Research, 45(2), 174–186.

Slovic, P., Finucane, M., Peters, E., & MacGregor, D. G. (2002). The affect heuristic. In T. Gilovich, D. W. Griffin, & D. Kahneman (Eds.), Heuristics and biases: The psychology of intuitive judgment. New York: Cambridge University Press.

Spring, H., Datta, S., & Sapkota, S. (2016). Using behavioral science to design a peer comparison intervention for postabortion family planning in Nepal. Frontiers in Public Health, 4, 123.

Stewart, R. E., Chambless, D. L., & Baron, J. (2012). Theoretical and practical barriers to practitioners' willingness to seek training in empirically supported treatments. Journal of Clinical Psychology, 68(1), 8–23.

Stewart, R. E., Williams, N. J., Byeon, Y. V., Buttenheim, A., Sridharan, S., Zentgraf, K., et al. (2019). The clinician crowdsourcing challenge: Using participatory design to seed implementation strategies. Implementation Science, 14, 63.

Stirman, S. W., Buchhofer, R., McLaulin, J. B., Evans, A. C., & Beck, A. T. (2009). Public-academic partnerships: The Beck Initiative: A partnership to implement cognitive therapy in a community behavioral health system. Psychiatric Services, 60(10), 1302–1304.

Tantia, P. (2017). The new science of designing for humans. Design Thinking. Retrieved February 6, 2020 from https://ssir.org/articles/entry/the_new_science_of_designing_for_humans.

Taylor, S. E., & Thompson, S. C. (1982). Stalking the elusive “vividness” effect. Psychological Review, 89(2), 155.

Terwiesch, C., & Ulrich, K. T. (2009). Innovation tournaments: Creating and selecting exceptional opportunities. Boston, MA: Harvard Business Press.

Thomas, R., Abell, B., Webb, H. J., Avdagic, E., & Zimmer-Gembeck, M. J. (2017). Parent-child interaction therapy: A meta-analysis. Pediatrics, 140(3), e20170352.

Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211(4481), 453–458.

United States Congress Senate Committee on Health Pensions Subcommittee on Substance Abuse Mental Health Services. (2004). Recommendations to improve mental health care in America: Report from the President's New Freedom Commission on Mental Health: Hearing before the Subcommittee on Substance Abuse and Mental Health Services of the Committee on Health, Education, Labor, and Pensions, United States Senate, One Hundred Eighth Congress, first session, on examining the report from the President's New Freedom Commission on Mental Health relating to recommendations to improve mental health care in America, November 4, 2003. Government Printing Office.

United States President's New Freedom Commission on Mental Health. (2003). Achieving the promise: Transforming mental health care in America. Rockville, MD: President's New Freedom Commission on Mental Health.

Williams, N. J. (2016). Multilevel mechanisms of implementation strategies in mental health: Integrating theory, research, and practice. Administration and Policy in Mental Health and Mental Health Services Research, 43(5), 783–798.

Williams, N. J., & Beidas, R. S. (2019). Annual research review: The state of implementation science in child psychology and psychiatry: A review and suggestions to advance the field. Journal of Child Psychology and Psychiatry, 60(4), 430–450.

Wong, W. C. W., Song, L., See, C., Lau, S. T. H., Sun, W. H., Choi, K. W. Y., et al. (2020). Using crowdsourcing to develop a peer-led intervention for safer dating app use: Pilot Study. JMIR Formative Research, 4(4), e12098.

Acknowledgements

We are especially grateful for the support that DBHIDS has provided for this project. We gratefully acknowledge Emily Becker-Haimes, Sriram Sridharan and Molly Candon for their assistance with hypothesis generation and confirmation. We would also like to thank all of the clinicians who participated in the innovation tournament and all who helped recruit those clinicians, making it possible.

Funding

P50MH113840 (Beidas, Mandell, Volpp).

Author information

Contributions

All authors substantially contributed to the conception, design, and analysis of the work and provided final approval of the version to be published.

Ethics declarations

Conflict of interest

The authors report no conflicts of interest.

Ethics Approval

The Institutional Review Boards of the University of Pennsylvania and the City of Philadelphia approved all study procedures and all ethical guidelines were followed.

About this article

Cite this article

Stewart, R.E., Beidas, R.S., Last, B.S. et al. Applying NUDGE to Inform Design of EBP Implementation Strategies in Community Mental Health Settings. Adm Policy Ment Health 48, 131–142 (2021). https://doi.org/10.1007/s10488-020-01052-z
