Abstract

Building on a narrative synthesis of adoption theories by Wisdom et al. (2013), this review identifies 118 measures associated with the 27 adoption predictors in the synthesis. The distribution of measures is uneven across the predictors and predictors vary in modifiability. Multiple dimensions and definitions of predictors further complicate measurement efforts. For state policymakers and researchers, more effective and integrated measurement can advance the adoption of complex innovations such as evidence-based practices.


Introduction

Adoption is the complete or partial decision to proceed with the implementation of an innovation, a distinct process that precedes but is separate from actual implementation. As a concept, it is at an early stage of development among state policymakers, organizational directors, deliverers of services, and implementation researchers (Glisson & Schoenwald 2005; Panzano & Roth 2006; Schoenwald & Hoagwood 2001). In health and behavioral health, adoption is a key implementation outcome (Proctor et al. 2011; Proctor & Brownson 2012) because implementation cannot occur without adoption, and implementation does not necessarily follow the contemplation, decision, and commitment to adopt an innovation such as an evidence-based practice (EBP). Adoption is a complex, multi-faceted decision-making process. Understanding this process may provide valuable insights for the development of strategies to facilitate effective uptake of EBPs or guide thoughtful de-adoption in order to avoid costly missteps in organizational efforts to improve care quality (Fixsen et al. 2005; Saldana et al. 2013; Wisdom et al. 2013). Research to date, however, has focused more on implementation, and less on adoption as a distinct outcome (Wisdom et al. 2013). Further, reviews of measures have focused more on general predictors of broad implementation outcomes, rather than specific predictors of adoption (Chaudoir et al. 2013; National Cancer Institute 2013; Seattle Implementation Research Collaborative 2013).

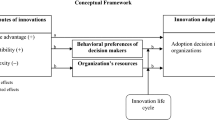

This review builds on the theoretical framework by Wisdom et al. (2013) that organizes 27 predictors of adoption by four contextual levels: external system, organization, innovation, and individual (see Fig. 1 and Appendix 1). These four contextual levels are consistent with those in other theoretical frameworks such as the Advanced Conceptual Model of EBP Implementation (Aarons et al. 2011), the Practical Robust Implementation Sustainability Model (Feldstein & Glasgow 2008), and the Consolidated Framework for Implementation Research (Damschroder et al. 2009). The goals of this review are to: (1) identify measures and their properties for the 27 adoption predictors described in Wisdom et al. (2013); (2) describe the measures’ relationships to the predictors, to other related measures, and to adoption, especially EBP adoption; (3) highlight the challenges of measurement; and (4), where possible, propose ways to effectively integrate measures for key adoption predictors. Linking the 27 predictors of adoption with their measures will assist systems, organizations, and individuals in identifying and measuring critical predictors of adoption decision-making. Although understanding adoption is important in many areas (e.g., primary medical care), it is sorely needed in state mental health systems given the demands on quality and accountability in the Patient Protection and Affordable Care Act of 2010 (P.L. 111–148). As states expand efforts to improve the uptake of EBPs, state policy leaders and agency directors are faced with the challenge of selectively adopting innovations to improve the quality of services and de-adopting ineffective innovations. Depending on the financial resources, time, and staffing involved, this decision-making (adopt, not adopt, adopt later, or de-adopt) has important consequences for future implementation and sustainability of EBPs in state-supported services.

Fig. 1 Theoretical framework for the 27 predictors of adoption organized by four contextual levels (Wisdom et al. 2013)

Methods

To identify measures for the factors associated with adoption, this review used the same search strategy as the narrative synthesis review of adoption theories (Wisdom et al. 2013). This approach ensures that the measures are consistent with the theoretical framework of adoption. Appendix 2 illustrates database searches in Ovid Medline, PsycINFO, and Web of Science that yielded 322 unique journal articles. For the synthesis, the articles were screened and rescreened to yield theories that formed the framework. These 322 journal articles were used to abstract measures associated with the 27 predictors of adoption in the following steps:

1. Measures championed by the theoretical framework by Wisdom et al. (2013) were included, in order to establish a one-to-one relationship between theory and measure.
2. The articles not specifically used in the framework were reviewed to identify additional measures that could be mapped onto the theoretical framework.
3. Snowball search of references of references and related themes (Pawson et al. 2005) was conducted.
4. After identifying the measures for inclusion, all authors independently mapped each measure first to each of the four levels of predictors, then to each of the 27 predictors contained in the theoretical framework.
5. The final mapping was achieved by consensus brainstorming among the authors and consultation with field experts to resolve discrepancies in mapping.
6. Measures with the most relevance to the predictors were included. The threshold of exhaustiveness and the final selection decision were determined by discussions among the authors and consultation with the field experts. A measure with multiple subscales can be mapped to more than one predictor.
7. The final set of measures and references were further reviewed for the availability of psychometric data (e.g., reliability, validity), empirical adoption data (i.e., whether a measure associated with an adoption predictor was applied in an empirical study), and the modifiability of predictors associated with the measures (i.e., whether a predictor is more or less malleable). Final agreement on these categories (yes/no) was required to be 100% after resolving discrepancies among the authors, similar to Step 6 above.

Additional details regarding the literature search method are reported in Wisdom et al. (2013).

Results

Table 1 summarizes the 118 measures for the 27 adoption predictors, which are organized by the contextual levels: external system, organization, innovation, and individual (staff or client). For each measure, descriptive information is provided about its domains and items, its availability (i.e., whether the measure is accessible), its structure (e.g., single- vs. multi-items/domains, survey/interview, computation formula, etc.), its psychometric properties, the availability of supportive adoption data, and the modifiability of the corresponding adoption predictor(s). While Table 1 serves as a detailed compendium of measures, below we synthesize measures for each contextual level, describe how measures address similar or different aspects of the predictors, and highlight measures that are associated with EBP adoption.

External System

Overview of Measures

Eighteen measures are related to the external system. All measures are supported by empirical adoption data such as census data (Damanpour & Schneider 2009; Meyer & Goes 1988). Measure types vary: nine measures are rating scales, five measures are derived from computation of frequency data, and four measures are either based on state documentation or open-ended surveys/interviews. The 18 measures assess discrete aspects of the external system and complement one another.

External Environment (n = 10)

Of the 10 measures, seven address economic consideration of the market/industry (i.e., community wealth, income growth, industry concentration, competition, hostility, complexity, and dynamism) (Damanpour & Schneider 2009; Gatignon & Robertson 1989; Meyer & Goes 1988; Peltier et al. 2009; Ravichandran 2000); two measures assess population-based characteristics (urbanization, density) (Damanpour & Schneider 2009; Meyer & Goes 1988), and one measure focuses on political influence (e.g., mayor) (Damanpour & Schneider 2009). Although the economic measures were used in studying adoption of innovations such as management in the public sector (e.g., government) and technology in the private sector (e.g., retail), their relevance to EBP adoption is yet to be tested.

Government Policy and Regulation (n = 3)

State policies and regulations can mandate innovation adoption. Their specificity and complexity necessitate diverse measurement methods, including reviews of state guidelines (Ganju 2003) and open-ended surveys/interviews with state directors about EBP integration in state contracts (Knudsen & Abraham 2012). Most relevantly, a multi-dimensional scoring system known as the State Health Authority Yardstick (SHAY) measures a state’s planning policies and regulations. The development of the measure was based on the adoption and implementation of EBPs in eight states (Finnerty et al. 2009).

Reinforcing Regulation with Financial Incentives to Improve Quality Service Delivery (n = 2)

Innovation adoption in the public mental health systems often relies on incentive funding from the government. Through open-ended surveys/interviews with state opinion leaders, the specific incentive conditions can be identified (Fitzgerald et al. 2002). A more in-depth approach requires the review of state documentation on incentive conditions (i.e., mandate vs. fiscal vs. technical assistance) and the use of administrative data (e.g., Medicaid claims) to measure the statewide adoption of large-scale quality improvement initiatives (Finnerty et al. 2012). Financial incentives have a consistent and significant positive effect on adoption.

Social Network (Inter-Systems) (n = 3)

Social connections among state agencies and community leaders (e.g., mental health and substance abuse) can have a top-down effect on organizational and individual adoption. The three measures of inter-systems social networks are based on complex computations involving the identification of the appropriate unit (e.g., community leader, county) and linkages among them. Centralization and density are complementary metrics that account for the number of ties linked to and from an identified community leader. A higher centralization value indicates a more centralized network; a higher density value indicates a more connected network among all possible ties (Fujimoto et al. 2009). Centralization, in particular, was significantly associated with the increased adoption of substance abuse prevention programs across system stakeholders (Fujimoto et al. 2009). Neighborhood Effect measures the influence of the adoption level of neighboring counties. It was associated with the increased adoption of Family Group Decision Making in child welfare systems (Rauktis et al. 2010).
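As a rough illustration of how density and centralization behave, the sketch below uses standard undirected definitions: density as the share of possible ties that are present, and Freeman's degree centralization. These formulas, the function names, and the five-leader example networks are assumptions for illustration. They are not taken from Fujimoto et al. (2009), and the sketch ignores tie direction, which the measures described above take into account.

```python
from itertools import combinations


def density(nodes, ties):
    """Share of all possible undirected ties that are actually present."""
    n = len(nodes)
    possible = n * (n - 1) / 2
    return len(ties) / possible if possible else 0.0


def degree_centralization(nodes, ties):
    """Freeman degree centralization for an undirected network (0 to 1)."""
    n = len(nodes)
    if n < 3:
        return 0.0
    degree = {node: 0 for node in nodes}
    for a, b in ties:
        degree[a] += 1
        degree[b] += 1
    max_degree = max(degree.values())
    # Sum of gaps between the best-connected node and every other node,
    # divided by the largest possible sum (reached by a star network).
    return sum(max_degree - d for d in degree.values()) / ((n - 1) * (n - 2))


# Hypothetical community leaders A-E.
leaders = ["A", "B", "C", "D", "E"]
star = [("A", other) for other in leaders[1:]]   # every tie runs through A
complete = list(combinations(leaders, 2))        # everyone tied to everyone

for name, ties in (("star", star), ("complete", complete)):
    print(name, density(leaders, ties), degree_centralization(leaders, ties))
```

In this toy example the star network is sparse but fully centralized around one leader, while the complete network is fully connected with no centralization, which is why the two metrics are treated as complementary.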

Organization

Overview of Measures

Organizational measures form the largest group of measures in this review. The nine organizational predictors of adoption are assessed by 41 measures, all of which are available and accessible. Key organizational predictors such as leadership, culture, climate, readiness for change, and operational size and structure are multi-dimensional. The predictive utilities of the majority of these measures are supported by data linking these predictors with adoption of EBPs and other psychosocial interventions. Consistent with the literature, most measures suggest that organizational predictors are potentially modifiable, although these predictors may take considerable time to change.

Absorptive Capacity (n = 6)

Absorptive capacity indicates the extent to which an organization utilizes knowledge and research. Based on the six measures, absorptive capacity is broadly measured in three ways: by productivity such as the number of projects and patents (Zahra & George 2002), the number of staff with advanced education/certification/licensure (Knudsen & Roman 2004), and the ratio of research and development expenditure to organizational revenue (Cohen & Levinthal 1990); by the assessment of staff’s collective knowledge of innovations (Knudsen & Roman 2004); or by the use of client satisfaction data to improve organizational knowledge utilization (Knudsen & Roman 2004). Knudsen and Roman (2004) found that absorptive capacity was associated with increased adoption of EBPs.

Leadership and Champion of Innovation (n = 7)

All but one leadership measure (i.e., median age) (Meyer & Goes 1988) focus on the different dimensions of leadership. The six multi-domain, multi-item measures address specific leadership styles (i.e., the Texas Christian University [TCU] Survey of Transformational Leadership [STL-S] and the Multi-Factor Leadership Questionnaire [MLQ]) (Aarons 2006; Bass et al. 1996; Edwards et al. 2010), leadership management practices that are associated with quality improvement (i.e., Management Practice Survey Tool, Quality Orientation of the Host Organization, Management Support for Quality) (McConnell et al. 2009; Ravichandran 2000), or the combination of leadership support of adoption and the level of decision-making power (Meyer & Goes 1988). Empirical findings using these measures showed that transformational and transactional leadership were associated with positive attitudes towards adoption of EBPs (Aarons 2006). Similarly, a quality improvement leadership orientation was associated with the swiftness and intensity of the adoption of management innovations, though the association was not maintained when adjustments for additional factors were made (Ravichandran 2000).

Network with Innovation Developers and Consultants (n = 2)

Two measures assess the quantity and quality of communication between an organization and innovation developers and consultants. Convergence between innovation developers and innovation users can be measured by the frequency of communication and the richness of the communication, both predictive of technology adoption (Lind & Zmud 1991). Tracking the frequency and the type of communication (e.g., phone consultation) is especially important for complex innovations that require ongoing assistance, such as organizational EBP training.

Norms, Values, and Cultures (n = 7)

Organizational norms, values, and cultures are multi-dimensional and require multi-domain measures with valid psychometric properties. Three measures identify distinct culture types in health and behavioral health organizations that are related to EBP adoption. The Organizational Culture Inventory (OCI) identifies three culture types and an organization’s proximity to an ideal culture. Specifically, a constructive culture promotes innovations and quality improvement (Cooke & Lafferty 1994; Ingersoll et al. 2000). The Children’s Services Survey confirmed that a constructive organizational culture (derived from the OCI) enhanced positive attitudes towards EBPs (Aarons & Sawitzky 2006). The Organizational Social Context (OSC) showed that a “good” organizational culture profile (a high level of proficiency and low levels of rigidity and resistance to change) was linked to adoption, improved service quality, satisfaction, work attitudes, and decreased staff turnover (Glisson et al. 2008).

Four measures define organizational norms, values, and cultures based on employees’ affiliation with and opinions of their organizations, though they have not been directly linked to adoption data. The Practice Culture Questionnaire (PCQ) measures primary care teams’ attitudes toward quality improvement (QI) (e.g., whether QI improves staff performance and client satisfaction) as the basis for organizational attitudes toward QI (Stevenson & Baker 2005). It suggests a relationship between organizational culture and resistance toward QI based on the median and spread of each team’s score. The Competing Values Framework (Shortell et al. 2004) identifies four organizational culture types based on the distribution of endorsement points by employees for each culture type. The Pasmore Sociotechnical Systems Assessment Survey (STSAS) measures employees’ perceptions of organizational culture that can influence the employees’ commitment and ideals (e.g., responsibility, helping an organization succeed) (Ingersoll et al. 2000). Similarly, the Hospital Culture Questionnaire measures organizational culture based on employees’ viewpoints on organizational functions (e.g., workload), self-concept (e.g., hospital image, role significance), and support (e.g., benefits, supervision) (Sieveking et al. 1993).
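The PCQ summary mentioned above, a median and a spread for each team, could be computed along the following lines. The team names and scores are invented, and the range (highest minus lowest score) is only one assumed choice of spread. The text does not specify which statistic Stevenson and Baker (2005) used.

```python
from statistics import median


def summarize_team(scores):
    """Return the median and range of one team's questionnaire scores."""
    return {"median": median(scores), "spread": max(scores) - min(scores)}


# Hypothetical scores for two primary care teams (higher = more favorable to QI).
teams = {
    "team_a": [62, 65, 66, 68, 70],
    "team_b": [40, 55, 66, 75, 90],
}

summary = {name: summarize_team(scores) for name, scores in teams.items()}
for name, stats in summary.items():
    print(name, stats)
```

Both hypothetical teams share the same median, but the much wider range in `team_b` points to less agreement within that team, which is the kind of contrast a median-plus-spread summary is meant to expose.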

Operational Size and Structure (n = 9)

Operational size and structure refer to measurable characteristics such as organizational volume (e.g., staffing), portfolios of services provided, or the power and decision-making structure. The nine measures involve the use of quantitative and qualitative data.

Four measures report the number of staff and clients, amount of organizational funding, and self-assessment of organizational economic health (Damanpour & Schneider 2009; Meyer & Goes 1988; Miller 2001). In particular, the Organizational Characteristics and Program Adoption survey was used to distinguish low, moderate, and high adopters among substance abuse treatment organizations (Miller 2001).

The Texas Christian University (TCU) Organizational Readiness for Change (ORC) has a specific domain that assesses physical organizational resources (e.g., office space, equipment, internet, etc.), which was associated with greater openness to and adoption of innovations in substance abuse treatment (Lehman et al. 2011; Simpson et al. 2007).

Two measures, Organizational Diversity and the TCU Survey of Structure and Operations (SSO), focus on the diversity of organizational services, clients served, and resources supported by functional affiliation with other entities (e.g., training program, medical school) (Burns & Wholey 1993; Lehman et al. 2011). Organizational Diversity was empirically linked with the adoption of management programs (Burns & Wholey 1993).

Two measures, Organizational Structure and Structural Complexity, provide information similar to an organizational chart in terms of task division, hierarchy, rules, procedures, and participation in decision-making (Ravichandran 2000; Schoenwald et al. 2003). Structural complexity based on organizational self-report was associated with the adoption of management support innovations (Ravichandran 2000).

Social Climate (n = 3)

Social climate generally refers to the multi-dimensional social environment among staff within an organization. The Work Environment Scale (WES) measures 10 such dimensions (Moos 1981; Savicki & Cooley 1987). Specifically, supportive and goal-directed work environments significantly predicted an increase in staff’s adoption of substance abuse treatment innovations (Moos & Moos 1998). The Organizational Social Context (OSC), which measures organizational culture as described above, has a distinct domain on organizational climate (Glisson et al. 2008). Organizational climate aggregates staff-level psychological climate ratings. Poor organizational climate characterized by high depersonalization, emotional exhaustion, and role conflict was associated with perceptions of EBPs as not clinically useful and less important than clinical experience (Aarons & Sawitzky 2006). The TCU Organizational Readiness for Change (ORC) measures five dimensions of organizational climate and showed that clarity of mission, cohesion, and openness to change were associated with positive perception of substance treatment adoption (Lehman et al. 2011; Simpson et al. 2007).

Social Network (Inter-Organizations) (n = 3)

The three measures quantify either the degree of linkages among organizations or the degree of exposure of an organization to influential adoption sources. First, the degree of linkage among organizations is indicated by three dimensions: the relative position of an organization within a network (central vs. peripheral), the directionality and intensity of the inter-organizational relationships, and the number of inter-organizational ties among all possible ties (Valente 2005). These dimensions are similar to measures for system-level social networks (e.g., community leaders and counties) described above, but assess these factors from the perspective of an organization.

Other measures operationalize influential adoption sources based on experience in adoption among networked organizations (e.g., the number of hospitals in the same region adopting the same innovation), perceived prestige of an organization in the network (e.g., reputation), and connections to field-specific innovations (e.g., organizational subscription to journals, exposure to media campaign) (Burns & Wholey 1993; Valente 1996). Empirical data indicated that by accounting for these influential sources, early adopters were differentiated from late adopters in medical, farming, and family planning innovations (Valente 1996), and the adoption of management innovations improved (Burns & Wholey 1993).

Training Readiness and Efforts (n = 2)

Organizational training readiness and efforts can be measured by the availability of physical resources that support training and training foci such as strategy and content. The Texas Christian University (TCU) Workshop Evaluation Form (WEVAL) uses a multi-item rating scale to measure the availability of staffing, time, and other program resources to set up training. It showed that increased program resources were linked to increased adoption of substance abuse treatment innovations (Bartholomew et al. 2007). The TCU Program Training Needs (PTN) is a focused measure of three training dimensions: efforts, needs, and resources. In a 2-year study of 60 treatment programs, staff attitudes about training needs and past experiences were predictive of their adoption of new treatments in the following year (Simpson et al. 2007).

Traits and Readiness for Change (n = 2)

Organizational propensity to change and innovate is multi-dimensional, and is usually predicated on past experience with change. Of the four TCU Organizational Readiness for Change (ORC) domains, program needs/pressure for change identifies specific organizational drivers and motivations for change (e.g., client or staff needs) (Lehman et al. 2011; Simpson et al. 2007). The Pasmore Sociotechnical Systems Assessment Survey (STSAS) measures key organizational readiness constructs such as organizational flexibility and maintenance of a futuristic orientation. When organizational change such as innovation adoption was perceived positively, employees were more likely to commit to the work and the innovative mission of the organization (Ingersoll et al. 2000).

Innovation

Overview of Measures

The 29 innovation measures represent the second largest group of measures in this review. Driven by the principles of Rogers’ (2003) Diffusion of Innovation Theory, they measure innovation characteristics using mainly multi-item or single-item rating scales. Innovation characteristics are either primary characteristics intrinsic to an innovation (e.g., cost) or characteristics that depend on the interaction between the adopter and the innovation (e.g., ease of use) (Damanpour & Schneider 2009; Richardson 2011). The more complex, interaction-dependent characteristics, such as the level of skill required for innovation use and the visibility of an innovation’s impact, may require expert panel ratings. Because adopter and innovation characteristics interact, their malleability is likely dependent on this interaction. Twenty-six of the 29 measures are supported by empirical adoption data, with measures of an innovation’s cost-efficacy and risk assessment being especially relevant to EBP adoption.

Complexity, Relative Advantage and Observability (n = 8)

The eight measures originate from different fields, ranging from public health and medical to technology innovations. Rogers’s Adoption Questionnaire simultaneously measures three dimensions: Relative Advantage, Complexity, and Observability. It was used to demonstrate the adoption of health promotion innovations and can be modified for other innovations in different settings (Steckler et al. 1992).

The remaining seven measures assess single dimensions of innovation characteristics. Two measures are related to the perceived complexity and the specialized skills required to use an innovation (Damanpour & Schneider 2009; Meyer & Goes 1988). Three measures are related to the perceived relative advantage of adopting an innovation over existing practice based on perceived effectiveness and benefits (Dirksen et al. 1996; Peltier et al. 2009; Richardson 2011). Two measures are related to the observability, or the degree to which an innovation’s impact is deemed visible and evaluable (Meyer & Goes 1988; Richardson 2011). Empirical studies showed that these measures were positively associated with the decision to adopt technology innovations (Dirksen et al. 1996; Meyer & Goes 1988; Peltier et al. 2009; Richardson 2011).

Cost-efficacy and Feasibility (n = 6)

All six measures require objective computation or subjective ratings of the cost associated with an innovation, provided that each cost component can be delineated. Cost data allow potential adopters to assess the feasibility of adoption given their fiscal status. Three measures, the Cost of Implementing New Strategies (COINS), the TCU Treatment Cost Analysis Tool (TCAT) and the Costing Behavioral Interventions tool, use an Excel platform to identify cost components retrospectively or prospectively at each stage of the adoption process (Chamberlain et al. 2011; Flynn et al. 2009; Lehman et al. 2011; Ritzwoller et al. 2009; Saldana et al. 2013). Specifically, the COINS was used to illustrate the costs of adopting and implementing Multidimensional Treatment Foster Care in a two-state randomized controlled trial (Saldana et al. 2013). A fourth approach uses administrative data on service reimbursement rates to compare the cost-efficacy ratios across EBPs to aid adoption decision-making (McHugh et al. 2007). These techniques for estimating costs are recommended for policymakers, researchers, and potential adopters (Ritzwoller et al. 2009). The remaining two measures involve subjective ratings on the cost of the new innovation or of switching to the new innovation (Damanpour & Schneider 2009; Peltier et al. 2009).

Perceived Evidence and Compatibility (n = 4)

The perceived evidence that an innovation works and is compatible operationally with an organization can influence adoption decision-making (Damanpour & Schneider 2009; Richardson 2011). Three measures are unidimensional rating scales on innovation impact (i.e., evidence) and compatibility with staff knowledge, experiences, and needs (Damanpour & Schneider 2009; Meyer & Goes 1988; Richardson 2011). An open-ended interview protocol based on Rogers’ Diffusion of Innovation Theory can identify adoption and rejection experiences with innovations, and the compatibility of innovation characteristics with an organization’s philosophy (Miller 2001).

Innovation Fit with Users’ Norms and Values (n = 2)

The goodness-of-fit between an innovation and personal values goes beyond technical compatibility between an innovation and an individual adopter. Derived from the information technology literature, the two measures operationalize this “fit” using multi-item rating scales to assess the perceived job fit (i.e., how an innovation fits with job performance) and perceived long-term consequences (i.e., how an innovation will produce long-term changes in personal meanings and career opportunities) (Thompson et al. 1991). These two measures can be applied to other types of innovations.

Risk (n = 3)

The perceived risk of adopting an innovation may refer to physical risk, propensity to risk-taking, and capacity for risk management. The three measures address these aspects using a combination of simple and in-depth approaches. In medical innovations, subjective level of injury and risk can be measured by an organizational rating scale to ensure an innovation’s viability in hospital settings (Meyer & Goes 1988). The Personal Risk Orientation uses multiple items to assess individual psychological attributes of risk-taking (e.g., competitiveness, creativity, confidence, achievement orientation, etc.) (Peltier et al. 2009). The Survey of Risk measures three organizational dimensions of risk. Specifically, perceived risk significantly differentiated adopters from non-adopters of EBPs, while expected capacity to manage risk and past propensity to take risks were positively related to the propensity to adopt an EBP (Panzano & Roth 2006).

Trialability, Relevance and Ease (n = 6)

All six measures emphasize adopters’ perceptions of how relevant, easy to use and applicable on a trial basis an innovation is, whether the innovation is a medication, an EBP, or technology. They consist of rating scales that are discretely mapped to these innovation characteristics.

Two measures focus on trialability. One measure defines trialability as an organization’s participation status in a clinical trial network and whether the organization has an opportunity to experiment with a medication treatment offered by the network (Ducharme et al. 2007). Results showed that the trialability of an evidence-based medication significantly increased subsequent adoption of that same medication. A second measure uses two items to assess the degree to which information and communication technology skills (e.g., computer and software) can be experimented with or practiced prior to full adoption. In a discriminant analysis, however, trialability contributed less than voluntariness of use to discriminating five adopter groups (early adopters, late adopters, adopters who reinvented the innovation, de-adopters, and rejecters) based on usage rates of the skills (Richardson 2011).

Two multi-item measures are related to relevance. The Task Relevance and Task Usefulness items measure an innovation’s relevance to the day-to-day job performance of the user, which significantly contributed to the adoption of information system innovations (Yetton et al. 1999). The TCU Workshop Evaluation (WEVAL) is more expansive. It measures an innovation’s relevance to client needs and staff comfort level (Bartholomew et al. 2007). In one study, higher ratings of relevance on the TCU WEVAL were related to greater future trial usage of an EBP (Bartholomew et al. 2007).

Ease can be measured by two multi-item measures. Ease of Use defines ease in multiple dimensions (e.g., ease of learning and controlling an innovation) (Davis 1989). Perceived Usefulness defines ease based on user experience at their job (e.g., an innovation making the job easier and increasing productivity) (Davis 1989). Empirical studies showed that Ease of Use served as an antecedent to Perceived Usefulness, which in turn was significantly associated with current and self-predicted future adoption of information technology innovations (Davis 1989).

Individual: Staff

Overview of Measures

Twenty-six measures are related to six individual predictors of adoption, ranging from personal characteristics such as knowledge, skill and attitudes to more external influences such as social connections with other adopters. Dimensions of individual predictors are mainly measured by multi-domain or multi-item scales.

Affiliation with Organizational Culture (n = 1)

Since staff’s affiliation with their organization is the building block of organizational culture (Glisson et al. 2008), staff who view their organizational culture as welcoming or exploring innovations are likely to adopt or explore the use of EBPs (Aarons et al. 2011). The Organizational Culture Profile (OCP) uses a Q-sort approach to measure staff-culture fit by correlating staff’s preferences with organizational values; this fit was characterized by an overlap in the “innovation” dimension (O’Reilly et al. 1991).
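The staff-culture fit computation can be sketched as a profile correlation: one person's Q-sort placements are correlated with the organization's value profile across the same items. The five value labels and the placements below are hypothetical rather than the OCP's actual items, and the helper `pearson` is written out here for illustration instead of being taken from a library.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical value items; each placement runs from 1 (least characteristic)
# to 9 (most characteristic), in the same item order for every profile.
items = ["innovation", "stability", "team orientation",
         "attention to detail", "outcome focus"]
organization = [9, 3, 7, 4, 6]    # how the organization's values are described
staff_aligned = [8, 2, 7, 5, 6]   # a staff member with similar preferences
staff_opposed = [2, 8, 3, 6, 4]   # a staff member with contrasting preferences

fit_aligned = pearson(staff_aligned, organization)
fit_opposed = pearson(staff_opposed, organization)
print(round(fit_aligned, 2), round(fit_opposed, 2))
```

A correlation near 1 indicates a close match between what a staff member prefers and what the organization values, while a negative correlation indicates a mismatch.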

Attitudes, Motivation, Readiness Towards Quality Improvement and Reward (n = 4)

Using multi-domain and multi-item scales, three of the four measures capture staff’s attitudes towards innovations such as EBPs, QIs, and general organizational changes that may lead to perceived benefits. The Evidence-Based Practice Attitude Scale (EBPAS) and the SFTRC Course Evaluation measure clinicians’ general attitudes towards EBPs based on their clinical experience, clinical innovativeness, and perceived appeal of EBPs (Aarons & Sawitzky 2006; Haug et al. 2008). In one study, the SFTRC Course Evaluation was used to differentiate individual clinicians’ readiness for change (e.g., pre-contemplation, preparation, action) (Haug et al. 2008). Applicable to diverse types of innovations, Readiness for Organizational Change measures individual readiness for change based on staff’s opinions of their ability to innovate, their organizational support for change, and the appropriateness and benefits of change (Holt et al. 2007). In addition, the Texas Christian University (TCU) Workshop Assessment Follow-Up (WAFU) measures individual attitudes towards training based on resource and procedural factors, which were related to trial adoption of substance abuse treatment innovations (Bartholomew et al. 2007).

Feedback on Execution and Fidelity (n = 1)

Adoption theories indicate that feedback to staff about their alignment with or deviation from the proper usage of innovations can influence adoption prior to full implementation (Wisdom et al. 2013). Measures specific to the adoption stage are lacking compared with those for the implementation stage. Nevertheless, the Alternative Stages of Concern Questionnaire (SoCQ) measures the importance of student feedback on improving teachers’ awareness, concern, and adoption of educational innovations, for example, by identifying areas of an innovation that need change and enhancement (Cheung et al. 2001).

Individual Characteristics (n = 7)

The seven measures focus on individual adopters’ awareness, knowledge of innovations, and competence in current practice. These measures involve a variety of self-ratings by individuals.

Four measures are related to awareness. Personal Innovativeness consists of rating scales that assess an individual’s propensity to innovate quickly and scientifically in the workplace (Leonard-Barton & Deschamps 1988). Subjective Importance of the Task requires an individual to rank innovations based on perceived importance, which was associated with management support prior to adoption (Leonard-Barton & Deschamps 1988). The Alternative Stages of Concern Questionnaire (SoCQ) (Cheung et al. 2001) and the Awareness-Concern-Interest Questionnaire (Steckler et al. 1992) measure individual awareness, concern, and interest about public health or educational innovations at each adoption stage.

The remaining three measures address knowledge/skill and competence in current practice, with consistent association with adoption. Job performance benchmarks (e.g., project goal reached) (Leonard-Barton & Deschamps 1988) can be complemented by subjective ratings of competence (from beginner to expert) on a particular task (Leonard-Barton & Deschamps 1988) and ratings on a class of knowledge about innovations (Peltier et al. 2009).

Managerial Characteristics (n = 6)

Managers play a key role in staff’s motivation to change and innovate. Of the six self-ratings, three measure qualifications, such as experience within a product class (Igbaria 1993), and education and tenure at current position (Damanpour & Schneider 2009). The other three measures identify managerial traits such as self-concepts (e.g., efficacy, esteem) and risk-taking dispositions (Judge et al. 1999), and entrepreneurial and innovative attitudes (Damanpour & Schneider 2009). Empirical studies of technology or government management innovations showed that five of the six measures were positively associated with adoption and receptiveness to change; the exception was tenure, which had an inverted U-shaped relationship with adoption (Damanpour & Schneider 2009).

Social Network (Individual’s Personal Network) (n = 7)

Unlike measures for inter-systems and inter-organizational social networks, measures for intra-organizational social networks among staff focus on person-to-person influence on adoption. Of the seven measures, four measures are rating scales that assess the positive impact of social influence on adoption, based on organizational or personal hierarchy. A Social Factors measure assesses influence from colleagues and superiors (Thompson et al. 1991), while Subjective Norm measures the impact of important others on the use of an innovation (Venkatesh & Davis 2000). Work Group Integration measures the sense of belonging to a work group as an additional source of social influence on adoption (Kraut et al. 1998). Social Network further accounts for the influence of peers in the same discipline or in similar organizations who are adopting an innovation (Talukder & Quazi 2011).

The other three measures quantify peer influence on adoption within an organization. Subscribers to a System measures the number of colleagues in the same work group who use an innovation, which is used to calculate the proportion of adopters within the work group, or Subscribers within the Work Group (Kraut et al. 1998). Sociometric data on peer influence can be used to calculate contagion effects and network exposure scores, both a function of the number of social ties and nominations associated with an individual (Valente 2005). These measures are useful in analyzing time until adoption and estimating the extent of peer influence on adoption, given the correct denominator of adopters.
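The two quantities described above can be sketched as follows. This is an illustration under stated assumptions, not the scoring used by Kraut et al. (1998) or Valente (2005): exposure is taken here as the unweighted share of a person's nominated peers who have already adopted, and all names (`adopter_proportion`, `network_exposure`, the staff labels) are hypothetical.

```python
def adopter_proportion(members, adopters):
    """Share of a work group's members who use the innovation.

    The denominator is the full work group, not only the adopters.
    """
    members = set(members)
    if not members:
        raise ValueError("work group must have at least one member")
    return len(members & set(adopters)) / len(members)


def network_exposure(ties, adopters):
    """Share of each person's nominated peers who have adopted.

    `ties` maps a person to the peers they nominated. People with no
    nominations get None, because exposure is undefined without ties.
    """
    adopters = set(adopters)
    exposure = {}
    for person, peers in ties.items():
        peers = set(peers)
        if not peers:
            exposure[person] = None
        else:
            exposure[person] = len(peers & adopters) / len(peers)
    return exposure


# Hypothetical four-person work group and sociometric nominations.
work_group = ["ana", "ben", "cy", "dee"]
current_adopters = {"ben", "dee"}
nominations = {
    "ana": ["ben", "cy"],
    "ben": ["dee"],
    "cy": [],
    "dee": ["ana", "cy"],
}

group_share = adopter_proportion(work_group, current_adopters)
exposure_scores = network_exposure(nominations, current_adopters)
```

Recomputing these scores at each time point would give the time-varying exposure needed for a time-to-adoption analysis. Weighted ties or other contagion models would require a different formula.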

Individual: Client

Readiness for Change/Capacity to Adopt (n = 1)

Although the adoption literature on individual predictors mainly focuses on the innovation user’s perspective (e.g., clinician’s), the perspective of the recipient of an innovation—the perspective of the client—is equally important. A potential measure is the Texas Christian University (TCU) Client Evaluation of Self and Treatment (CEST), which contains specific domains on client motivation and readiness for change (Simpson et al. 2007).

Measures for Multiple Levels of Predictors

Five measurement systems are notably broader in that they simultaneously assess predictors of adoption across multiple contextual levels. Two measurement systems employ both qualitative and quantitative approaches to characterize facilitators and barriers of EBP adoption. The Management of Ideas in the Creating Organizations is a system that measures facilitators and barriers of EBP adoption at the individual (individual provider characteristics, competencies, and desire for change) and organizational (conditions and contexts) levels (Gioia & Dziadosz 2008). It can be re-administered to show changes in attitudes towards EBP adoption over time (Gioia & Dziadosz 2008). The Concept System is an expansive approach that measures facilitators and barriers based on 14 clusters of 105 statements related to the individual (staff and consumers), innovation, organization, and external system. Its ratings can differentiate the relative importance and changeability of each cluster, such as insufficient EBP training as a barrier, or provision of ongoing EBP supervision as a facilitator (Aarons et al. 2009).

Three measures, previously noted within each contextual level above, are described here again because they contain distinct domains that cut across multiple levels of adoption predictors. The Reinventing Government (RG) and Alternative Service Delivery (ASD) surveys include a set of rating scales that assess the external environment, organizational size and structure, and individual managerial characteristics that are associated with adoption (Damanpour & Schneider 2009). Two other measures are training evaluations that have been used to understand the relationships of organizational-level, innovation-level, and/or individual-level variables with innovation uptake: The San Francisco Treatment Research Center (SFTRC) Course Evaluation contains domains that measure both organizational barriers to adoption and individual staff readiness for change and attitudes towards EBPs (Haug et al. 2008); and the Texas Christian University (TCU) Workshop Evaluation Form (WEVAL) contains domains that measure organizational training readiness and efforts to support innovation adoption, as well as the relevance of an innovation to staff’s work (Bartholomew et al. 2007).

Discussion

This review identifies 118 measures for the 27 predictors of innovation adoption associated with a theoretical framework (Wisdom et al. 2013) and augments related reviews of measures, such as the work of Chaudoir et al. (2013), as well as large-scale measurement databases such as the Seattle Implementation Research Collaborative (SIRC) (2013) Instrument Review Project founded on the Consolidated Framework for Implementation Research (http://www.wiki.cf-ir.net/index.php?title=Main_Page) (Damschroder et al. 2009) and the Grid-Enabled Measures (GEM) (National Cancer Institute 2013), which target broad implementation outcomes (e.g., acceptability, feasibility, fidelity, penetration, sustainability). Although there are conceptual overlaps (e.g., leadership) between predictors of adoption and predictors of broad implementation outcomes, this review shows that adoption-specific predictors and their measures are distinct from those associated with broad implementation outcomes.

The 118 measures are not distributed equally among the 27 predictors. Measures for predictors at the organizational, innovation, and individual staff levels make up the majority of the measures. Specific predictors such as organizational size, discrete innovation characteristics, and individual staff characteristics appear easier to measure based on the number of available measures. A key understudied predictor is client characteristics (Chaudoir et al. 2013), which has direct implications for the adoption of EBPs in mental health services, as client motivation and receptiveness to change could potentially deter or promote the initial adoption of EBPs by clinicians and by organizations.

Measures from outside the fields of health and mental health may need to be tailored for use in these areas. For instance, it may be enough to measure inter-organizational relationships in the corporate world based on market share. However, to measure relationships among non-profit organizations and their social impact on EBP adoption, we need to know which non-profits to include, their sources of knowledge about EBPs, and the greater political environment that could potentially impact such relationships. Further, the specificity of innovation characteristics means factors influential to adoption may also differ by the type of innovation. Overall, innovations that require less specialized skills and show clear and measurable benefit are more strongly related to adoption. This review suggests that some measures can be directly imported (e.g., measures for organizational culture), while other measures (e.g., rating scales for ease of use) will need to have their content modified and their psychometric properties re-established. Importantly, regardless of measurement issues, whether predictors of adoption from fields other than health continue to be important drivers of adoption of mental health EBPs will need to be established.

Multi-dimensional predictors are more challenging to measure because they require in-depth measures or diverse methods that range from surveys/semi-structured interviews of key informants to expert panel ratings. These predictors include organizational culture, climate, readiness for change, leadership, operational size and structure, facilitators and barriers of adoption, and risks associated with an innovation. Measures that are linked to empirical adoption data can guide the selection of measures. Having a specific dimension of interest (e.g., leadership style vs. leadership decision-making) that is hypothesized to influence adoption can also help prioritize measures.

Multiple definitions of adoption predictors pose additional challenges to the measurement field. For instance, what are the key characteristics of an effective leader who fosters adoption of EBPs? While many leadership measures have been developed, few existing measures have been compared to one another. Without direct comparisons of leadership measures that assess different aspects of leadership (e.g., the MLQ, Quality Orientation of the Host Organization, Management Practice Survey Tool), it is difficult to determine which leadership characteristics are important for adoption of EBPs (Chaudoir et al. 2013). A related challenge is the lack of comparisons between related predictors, for example, between organizational culture and other basic organizational characteristics (e.g., leadership). Similar predictors may also present potential redundancy in measurement (e.g., cost versus risk, or fit with individual work demands versus task relevance and usefulness of an innovation). To reduce such redundancy, it is important to gain a clear understanding of the level of analysis (e.g., individual data versus aggregating individual data to form organizational descriptors) and the nuances between similar predictors that may have different theoretical and empirical bases.

Adoption is multifaceted and involves many predictors that may relate to one another in complex ways (Aarons et al. 2011; Wisdom et al. 2013). For example, innovation characteristics inherently reflect individual perceptions of those characteristics (Damanpour & Schneider 2009; Peltier et al. 2009; Richardson 2011). An EBP can objectively be characterized as long-term based on its length of treatment, or as individual versus group treatment based on its modality. Conversely, its trialability, relevance, and observability reflect an adopter’s perceptions and evaluations of the EBP (i.e., innovation fit). Thus, innovation characteristics (e.g., modality, skills required, target clients) and individual characteristics (e.g., adopter’s skill level, innovativeness, appraisal of the EBP) are likely inter-related and analyses of adoption should reflect these potential relationships as well as evaluate under what circumstances (e.g., contextual variability or adoption stage) predictors relate to one another. In practice, while it is neither feasible nor even desirable to measure all potential predictors, selection of measures should be thoughtfully guided by existing or local knowledge about the context of adoption and variables that are theorized to have the biggest impact on adoption.

Some measures appear to have a circular relationship with adoption, such as the TCU WEVAL and the EBPAS. The WEVAL measures organizational support and resources, which can simultaneously represent adoption predictors or adoption outcomes. Similarly, the EBPAS measures clinician attitudes toward EBPs. Clinicians with positive attitudes are more likely to adopt an EBP, although adopting an EBP can subsequently increase positive attitudes toward EBPs. To avoid circularity, researchers must be clear on the intended purpose and timing of measures that have more than one application.

The modifiability of predictors varies across the four levels of predictors. External system predictors are generally not amenable to modification (e.g., urbanicity). In addition, organizational and individual predictors can be difficult to change, and innovation predictors may be limited in their modifiability because of their interactions with individual predictors. Not all predictors can change, and not all modifiable predictors change at the same pace. To improve adoption, research must focus on measuring predictors that are modifiable (e.g., organizational culture, relevance of an innovation), so that these measures can then be used to assess change over time. Future research must also advance the measurement of these predictors (Chaudoir et al. 2013) to make their measures accessible, affordable, and useful across multiple adoption efforts.

Implications for EBP Adoption: A State System Perspective

This critique of measures for 27 possible predictors of adoption outlined in Wisdom et al. (2013) suggests the following implications for states’ efforts to improve the adoption of EBPs.

Valid, Reliable, and Theory-Driven Measures are Critical to Understanding Characteristics Related to EBP Adoption

Introducing EBPs is more complex than installing discrete innovations such as computer software or a hand-washing practice (Comer & Barlow 2013). Therefore, measuring predictors of EBP adoption is also more complex because it is multi-level (organization, staff, client), multi-phasic (initial training, supervision, and ongoing consultation), and costly due to training, staff time and lost clinical revenue while staff are being trained. Having the appropriate measures helps to accurately determine what the drivers of EBP adoption are as well as how they differ by the specific EBP (e.g., learning a new treatment vs. modifying existing treatment) and by the adopter environment (e.g., organizational social context, operational structure).

Improving Measurement Efforts Enhances Development of Adoption Interventions

A state can potentially characterize and differentiate non-adopters from adopters, based on measurable differences in adoption facilitators. EBP roll-out strategies can then be customized to distinct adopter profiles. To illustrate, since 2011, the New York State Office of Mental Health (NYSOMH) has been offering technical assistance in business and clinical practices for child-serving mental health clinics through the Clinic Technical Assistance Center (CTAC). To understand possible drivers of clinic adoption patterns of the trainings (e.g., by type, number, and intensity of trainings), state administrative and fiscal data can be used to describe clinic operational size and structure (e.g., gain/loss per unit of service, productivity), clinic type (e.g., hospital vs. community-based), and client need (e.g., proportion of clients with persistent and severe mental illness). Findings will help the state allocate resources to improve adoption when appropriate, for example, by encouraging non-adopters who are financially strained to access free or affordable training options on EBPs. Characterizing non-adopters through better measurement can also help the state tailor future roll-outs more efficiently.

Equal Emphasis on Measuring Predictors of Adoption, Non-adoption, Delayed-Adoption, and De-adoption

A glaring omission in the adoption measurement literature is any focus on failure to adopt and de-adoption (Panzano & Roth 2006). Indiscriminate adoption is not always an ideal outcome. If an organization experiences innovation fatigue, financial challenges or is understaffed, it may exercise caution by resisting adoption in order to reduce costs and maintain productivity. Important lessons can be learned from this failure to adopt. To understand the rational decision-making of non-adoption, delayed adoption, or de-adoption, a state needs appropriate measures for the features related to each of these actions.

Conclusion

As healthcare reform increasingly demands accountability and quality measures for tracking change processes, states will need to increase the use of EBPs in their state-funded mental health systems. To do this efficiently will require a clear understanding of the drivers of adoption and the ability to measure these drivers accurately. Although a reasonably “young” scientific discipline (Stamatakis et al. 2013), implementation science needs to focus on measurement to create a common language and to develop interventions that promote strategic uptake of EBPs.

References

Aarons, G. A. (2006). Transformational and transactional leadership: Association with attitudes toward evidence-based practice. Psychiatric Services, 57(8), 1162–1169. doi:10.1176/appi.ps.57.8.1162.

Aarons, G. A., Hurlburt, M., & Horwitz, S. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23. doi:10.1007/s10488-010-0327-7.

Aarons, G. A., & Sawitzky, A. C. (2006). Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychological Services, 3(1), 61–72. doi:10.1037/1541-1559.3.1.61.

Aarons, G. A., Wells, R. S., Zagursky, K., Fettes, D. L., & Palinkas, L. A. (2009). Implementing evidence-based practice in community mental health agencies: A multiple stakeholder analysis. American Journal of Public Health, 99(11), 2087–2095. doi:10.2105/ajph.2009.161711.

Bartholomew, N. G., Joe, G. W., Rowan-Szal, G. A., & Simpson, D. D. (2007). Counselor assessments of training and adoption barriers. Journal of Substance Abuse Treatment, 33(2), 193–199. doi:10.1016/j.jsat.2007.01.005.

Bass, B. M., Avolio, B. J., & Atwater, L. (1996). The transformational and transactional leadership of men and women. Applied Psychology, 45(1), 5–34. doi:10.1111/j.1464-0597.1996.tb00847.x.

Burns, L. R., & Wholey, D. R. (1993). Adoption and abandonment of matrix management programs: Effects of organizational characteristics and interorganizational networks. Academy of Management Journal, 36(1), 106–138.

Chamberlain, P., Brown, C. H., & Saldana, L. (2011). Observational measure of implementation progress in community based settings: The Stages of implementation completion (SIC). Implementation Science. doi:10.1186/1748-5908-6-116.

Chaudoir, S. R., Dugan, A. G., & Barr, C. H. (2013). Measuring factors affecting implementation of health innovations: A systematic review of structural, organizational, provider, patient, and innovation level measures. Implementation Science, 8(1), 22. doi:10.1186/1748-5908-8-22.

Cheung, D., Hattie, J., & Ng, D. (2001). Reexamining the stages of concern questionnaire: A test of alternative models. The Journal of Educational Research, 94(4), 226–236.

Cohen, W. M., & Levinthal, D. A. (1990). Absorptive capacity: A new perspective on learning and innovation. Administrative Science Quarterly, 35(1), 128–152.

Comer, J. S., & Barlow, D. H. (2013). The occasional case against broad dissemination and implementation: Retaining a role for specialty care in the delivery of psychological treatments. American Psychologist. doi:10.1037/a0033582.

Cooke, R. A., & Lafferty, J. C. (1994). Organizational culture inventory. Plymouth: Human Synergistics International.

Damanpour, F., & Schneider, M. (2009). Characteristics of innovation and innovation adoption in public organizations: Assessing the role of managers. Journal of Public Administration Research and Theory, 19(3), 495–522. doi:10.1093/jopart/mun021.

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. doi:10.1186/1748-5908-4-50.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. Management Information Systems Quarterly, 13(3), 319–340.

Dirksen, C. D., Ament, A. H., & Go, P. M. N. (1996). Diffusion of six surgical endoscopic procedures in the Netherlands. Stimulating and restraining factors. Health Policy, 37(2), 91–104. doi:10.1016/s0168-8510(96)90054-8.

Ducharme, L. J., Knudsen, H. K., Roman, P. M., & Johnson, J. A. (2007). Innovation adoption in substance abuse treatment: Exposure, trialability, and the clinical trials network. Journal of Substance Abuse Treatment, 32(4), 321–329. doi:10.1016/j.jsat.2006.05.021.

Edwards, J. R., Knight, D. K., Broome, K. M., & Flynn, P. M. (2010). The development and validation of a transformational leadership survey for substance use treatment programs. Substance Use and Misuse, 45(9), 1279–1302. doi:10.3109/10826081003682834.

Feldstein, A. C., & Glasgow, R. E. (2008). A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Joint Commission Journal on Quality and Patient Safety, 34(4), 228–243.

Finnerty, M., Leckman-Westin, E., Kealey, E., Wisdom, J. P., Olin, S., Horwitz, S., & Hoagwood, K. (2012). Impact of state incentives on mental health agency decisions to participate in a large state CQI initiative. Paper presented at the 5th Annual NIH Conference on the Science of Dissemination and Implementation, Bethesda, MD.

Finnerty, M., Rapp, C., Bond, G., Lynde, D., Ganju, V., & Goldman, H. (2009). The state health authority yardstick (SHAY). Community Mental Health Journal, 45(3), 228–236. doi:10.1007/s10597-009-9181-z.

Fitzgerald, L., Ferlie, E., Wood, M., & Hawkins, C. (2002). Interlocking interactions, the diffusion of innovations in health care. Human Relations, 55(12), 1429–1449. doi:10.1177/001872602128782213.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network.

Flynn, P. M., Broome, K. M., Beaston-Blaakman, A., Knight, D. K., Horgan, C. M., & Shepard, D. S. (2009). Treatment Cost Analysis Tool (TCAT) for estimating costs of outpatient treatment services. Drug and Alcohol Dependence, 100(1–2), 47–53. doi:10.1016/j.drugalcdep.2008.08.015.

Fujimoto, K., Valente, T. W., & Pentz, M. A. (2009). Network structural influences on the adoption of evidence-based prevention in communities. Journal of Community Psychology, 37(7), 830–845. doi:10.1002/Jcop.20333.

Ganju, V. (2003). Implementation of evidence-based practices in state mental health systems: Implications for research and effectiveness studies. Schizophrenia Bulletin, 29(1), 125–131.

Gatignon, H., & Robertson, T. S. (1989). Technology diffusion: An empirical test of competitive effects. The Journal of Marketing, 53(1), 35–49.

Gioia, D., & Dziadosz, G. (2008). Adoption of evidence-based practices in community mental health: A mixed-method study of practitioner experience. Community Mental Health Journal, 44(5), 347–357. doi:10.1007/s10597-008-9136-9.

Glisson, C., Landsverk, J., Schoenwald, S., Kelleher, K., Hoagwood, K. E., Mayberg, S., et al. (2008). Assessing the organizational social context (OSC) of mental health services: Implications for research and practice. Administration and Policy in Mental Health, 35(1–2), 98–113. doi:10.1007/s10488-007-0148-5.

Glisson, C., & Schoenwald, S. K. (2005). The ARC organizational and community intervention strategy for implementing evidence-based children’s mental health treatments. Mental Health Services Research, 7(4), 243–259. doi:10.1007/s11020-005-7456-1.

Haug, N. A., Shopshire, M., Tajima, B., Gruber, V., & Guydish, J. (2008). Adoption of evidence-based practices among substance abuse treatment providers. Journal of Drug Education, 38(2), 181–192. doi:10.2190/De.38.2.F.

Holt, D. T., Armenakis, A. A., Feild, H. S., & Harris, S. G. (2007). Readiness for organizational change: The systematic development of a scale. Journal of Applied Behavioral Science, 43(2), 232–255. doi:10.1177/0021886306295295.

Igbaria, M. (1993). User acceptance of microcomputer technology: An empirical test. Omega, 21(1), 73–90. doi:10.1016/0305-0483(93)90040-r.

Ingersoll, G. L., Kirsch, J. C., Merk, S. E., & Lightfoot, J. (2000). Relationship of organizational culture and readiness for change to employee commitment to the organization. Journal of Nursing Administration, 30(1), 11–20.

Judge, T. A., Thoresen, C. J., Pucik, V., & Welbourne, T. M. (1999). Managerial coping with organizational change: A dispositional perspective. Journal of Applied Psychology, 84(1), 107–122. doi:10.1037/0021-9010.84.1.107.

Knudsen, H. K., & Abraham, A. J. (2012). Perceptions of the state policy environment and adoption of medications in the treatment of substance use disorders. Psychiatric Services, 63(1), 19–25.

Knudsen, H. K., & Roman, P. M. (2004). Modeling the use of innovations in private treatment organizations: The role of absorptive capacity. Journal of Substance Abuse Treatment, 26(1), 51–59. doi:10.1016/s0740-5472(03)00158-2.

Kraut, R. E., Rice, R. E., Cool, C., & Fish, R. S. (1998). Varieties of social influence: The role of utility and norms in the success of a new communication medium. Organization Science, 9(4), 437–453.

Lehman, W. E. K., Simpson, D. D., Knight, D. K., & Flynn, P. M. (2011). Integration of treatment innovation planning and implementation: Strategic process models and organizational challenges. Psychology of Addictive Behaviors, 25(2), 252–261. doi:10.1037/a0022682.

Leonard-Barton, D., & Deschamps, I. (1988). Managerial influence in the implementation of new technology. Management Science, 34(10), 1252–1265.

Lind, M. R., & Zmud, R. W. (1991). The influence of a convergence in understanding between technology providers and users on information technology innovativeness. Organization Science, 2(2), 195–217.

McConnell, K. J., Hoffman, K. A., Quanbeck, A., & McCarty, D. (2009). Management practices in substance abuse treatment programs. Journal of Substance Abuse Treatment, 37(1), 79–89. doi:10.1016/j.jsat.2008.11.002.

McHugh, R. K., Otto, M. W., Barlow, D. H., Gorman, J. M., Shear, M. K., & Woods, S. W. (2007). Cost-efficacy of individual and combined treatments for panic disorder. Journal of Clinical Psychiatry, 68(7), 1038–1044.

Meyer, A. D., & Goes, J. B. (1988). Organizational assimilation of innovations: A multilevel contextual analysis. Academy of Management Journal, 31(4), 897–923.

Miller, R. L. (2001). Innovation in HIV prevention: Organizational and intervention characteristics affecting program adoption. American Journal of Community Psychology, 29(4), 621–647.

Moos, R. H. (1981). Work Environment Scale manual. Palo Alto: Consulting Psychologists Press.

Moos, R. H., & Moos, B. S. (1998). The staff workplace and the quality and outcome of substance abuse treatment. Journal of Studies on Alcohol, 59(1), 43–51.

National Cancer Institute. (2013). Grid-enabled measure database. Measures. https://www.gem-beta.org/public/Home.aspx?cat=0. Retrieved January 3, 2014.

O’Reilly, C. A., Chatman, J., & Caldwell, D. F. (1991). People and organizational culture: A profile comparison approach to assessing person-organization fit. Academy of Management Journal, 34(3), 487–516. doi:10.2307/256404.

Panzano, P. C., & Roth, D. (2006). The decision to adopt evidence-based and other innovative mental health practices: Risky business? Psychiatric Services, 57(8), 1153–1161. doi:10.1176/appi.ps.57.8.1153.

Patient Protection and Affordable Care Act of 2010, Pub. L. No. 111-148, 124 Stat. 119–1205. (2010).

Pawson, R., Greenhalgh, T., Harvey, G., & Walshe, K. (2005). Realist review: A new method of systematic review designed for complex policy interventions. Journal of Health Services Research and Policy, 10(Suppl 1), 21–34. doi:10.1258/1355819054308530.

Peltier, J. W., Schibrowsky, J. A., & Zhao, Y. S. (2009). Understanding the antecedents to the adoption of CRM technology by small retailers: Entrepreneurs vs owner-managers. International Small Business Journal, 27(3), 307–336. doi:10.1177/0266242609102276.

Proctor, E. K., & Brownson, R. C. (2012). Measurement issues in dissemination and implementation research. In R. C. Brownson, G. A. Colditz, & E. K. Proctor (Eds.), Dissemination and implementation research in health: Translating science to practice (pp. 261–280). New York: Oxford University Press.

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., et al. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76. doi:10.1007/s10488-010-0319-7.

Rauktis, M. E., McCarthy, S., Krackhardt, D., & Cahalane, H. (2010). Innovation in child welfare: The adoption and implementation of Family Group Decision Making in Pennsylvania. Children and Youth Services Review, 32(5), 732–739. doi:10.1016/j.childyouth.2010.01.010.

Ravichandran, T. (2000). Swiftness and intensity of administrative innovation adoption: An empirical study of TQM in information systems. Decision Sciences, 31(3), 691–724. doi:10.1111/j.1540-5915.2000.tb00939.x.

Richardson, J. W. (2011). Technology adoption in Cambodia: Measuring factors impacting adoption rates. Journal of International Development, 23(5), 697–710. doi:10.1002/Jid.1661.

Ritzwoller, D. P., Sukhanova, A., Gaglio, B., & Glasgow, R. E. (2009). Costing behavioral interventions: A practical guide to enhance translation. Annals of Behavioral Medicine, 37(2), 218–227. doi:10.1007/s12160-009-9088-5.

Saldana, L., Chamberlain, P., Bradford, W. D., Campbell, M., & Landsverk, J. (2013). The cost of implementing new strategies (COINS): A method for mapping implementation resources using the Stages of Implementation Completion. Children and Youth Services Review. doi:10.1016/j.childyouth.2013.10.006.

Savicki, V., & Cooley, E. (1987). The relationship of work environment and client contact to burnout in mental health professionals. Journal of Counseling & Development, 65(5), 249.

Schoenwald, S. K., & Hoagwood, K. (2001). Effectiveness, transportability, and dissemination of interventions: What matters when? Psychiatric Services, 52(9), 1190–1197.

Schoenwald, S. K., Sheidow, A. J., Letourneau, E. J., & Liao, J. G. (2003). Transportability of multisystemic therapy: Evidence for multilevel influences. Mental Health Services Research, 5(4), 223–239. doi:10.1023/a:1026229102151.

Seattle Implementation Research Collaborative. (2013). Instrument Review Project: A comprehensive review of dissemination and implementation science instruments. http://www.seattleimplementation.org/sirc-projects/sirc-instrument-project/. Retrieved January 3, 2014.

Shortell, S. M., Marsteller, J. A., Lin, M., Pearson, M. L., Wu, S.-Y., Mendel, P., et al. (2004). The role of perceived team effectiveness in improving chronic illness care. Medical Care, 42(11), 1040–1048.

Sieveking, N., Bellet, W., & Marston, R. C. (1993). Employees’ views of their work experience in private hospital. Health Services Management Research, 6(2), 129–138.

Simpson, D. D., Joe, G. W., & Rowan-Szal, G. A. (2007). Linking the elements of change: Program and client responses to innovation. Journal of Substance Abuse Treatment, 33(2), 201–209. doi:10.1016/j.jsat.2006.12.022.

Stamatakis, K., Norton, W., Stirman, S., Melvin, C., & Brownson, R. (2013). Developing the next generation of dissemination and implementation researchers: Insights from initial trainees. Implementation Science, 8(29). doi:10.1186/1748-5908-8-29.

Steckler, A., Goodman, R. M., McLeroy, K. R., Davis, S., & Koch, G. (1992). Measuring the diffusion of innovative health promotion programs. American Journal of Health Promotion, 6(3), 214–224.

Stevenson, K., & Baker, R. (2005). Investigating organisational culture in primary care. Quality in Primary Care, 13(4), 191–200.

Talukder, M., & Quazi, A. (2011). The impact of social influence on individuals’ adoption of innovation. Journal of Organizational Computing and Electronic Commerce, 21(2), 111–135. doi:10.1080/10919392.2011.564483.

Thompson, R. L., Higgins, C. A., & Howell, J. M. (1991). Personal computing: Toward a conceptual model of utilization. Management Information Systems Quarterly, 15(1), 125–143.

Valente, T. W. (1996). Social network thresholds in the diffusion of innovations. Social Networks, 18(1), 69–89. doi:10.1016/0378-8733(95)00256-1.

Valente, T. W. (2005). Network models and methods for studying the diffusion of innovations. In P. J. Carrington, J. Scott, & S. Wasserman (Eds.), Models and methods in social network analysis. Cambridge: Cambridge University Press.

Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204.

Wisdom, J. P., Chor, K. H. B., Hoagwood, K. E., & Horwitz, S. M. (2013). Innovation adoption: A review of theories and constructs. Administration and Policy in Mental Health and Mental Health Services Research. doi:10.1007/s10488-013-0486-4. (Advance online publication).

Yetton, P., Sharma, R., & Southon, G. (1999). Successful IS innovation: The contingent contributions of innovation characteristics and implementation process. Journal of Information Technology, 14(1), 53–68. doi:10.1080/026839699344746.

Zahra, S. A., & George, G. (2002). Absorptive capacity: A review, reconceptualization, and extension. Academy of Management Review, 27(2), 185–203. doi:10.5465/amr.2002.6587995.

Acknowledgments

This study was funded by the National Institute of Mental Health (P30 MH090322: Advanced Center for State Research to Scale up EBPs for Children, PI: Hoagwood).

Appendices

Appendix 2: Databases and Search Strategy (Wisdom et al. 2013)

Ovid Medline, PsycINFO, and Web of Science were the major electronic databases used for Medical Subject Heading (MeSH) and article keyword searches.

1. Ovid Medline provided the first and primary source of literature. First, exploratory searches were conducted using these individual MeSH terms:

- DIFFUSION OF INNOVATION (13,774 hits);
- EVIDENCE-BASED PRACTICE (51,940 hits);
- EVIDENCE-BASED MEDICINE (47,305 hits), a subset of EVIDENCE-BASED PRACTICE;
- MODELS, THEORETICAL (1,120,350 hits).

Next, the following MeSH terms were combined with the AND Boolean operator:

- DIFFUSION OF INNOVATION AND EVIDENCE-BASED PRACTICE (1,781 hits);
- DIFFUSION OF INNOVATION AND MODELS, THEORETICAL (1,299 hits);
- DIFFUSION OF INNOVATION AND EVIDENCE-BASED MEDICINE (1,397 hits).

Because theoretical frameworks are the focus of this review, the following MeSH terms were further combined using the AND Boolean operator:

- DIFFUSION OF INNOVATION AND EVIDENCE-BASED PRACTICE AND MODELS, THEORETICAL (320 hits);
- DIFFUSION OF INNOVATION AND EVIDENCE-BASED MEDICINE AND MODELS, THEORETICAL (237 hits).

Since EVIDENCE-BASED MEDICINE is a subset of EVIDENCE-BASED PRACTICE in the MeSH grouping, the search narrowed down to DIFFUSION OF INNOVATION AND EVIDENCE-BASED PRACTICE AND MODELS, THEORETICAL.

2. We used PsycINFO to supplement the original pool of literature, applying similar search logic to the following MeSH terms:

- ADOPTION (15,535 hits);
- EVIDENCE BASED PRACTICE (8,940 hits);
- INNOVATION (3,995 hits);
- MODELS (65,923 hits);
- THEORIES (91,148 hits).

Because ADOPTION as a MeSH term in PsycINFO is quite broad and often refers to the child welfare taxonomy, we used the AND Boolean operator for the following combinations:

- ADOPTION AND EVIDENCE BASED PRACTICE (291 hits);
- ADOPTION AND INNOVATION (339 hits).

Next, to add a theoretical focus to this pool, we used the AND Boolean operator for the following combinations:

- ADOPTION AND EVIDENCE BASED PRACTICE AND INNOVATION (10 hits);
- ADOPTION AND EVIDENCE BASED PRACTICE AND MODELS (11 hits);
- ADOPTION AND EVIDENCE BASED PRACTICE AND THEORIES (9 hits);
- ADOPTION AND INNOVATION AND MODELS (18 hits);
- ADOPTION AND INNOVATION AND THEORIES (6 hits).

All articles from these last searches in PsycINFO were screened for overlaps.

3. To gain a broader perspective on other fields, we used Web of Science to expand the pool of literature obtained from Ovid Medline and PsycINFO, using the following topic searches:

- ADOPTION (45,440 hits);
- DIFFUSION (425,401 hits);
- EVIDENCE-BASE (47,294 hits);
- INNOVATION (76,818 hits);
- MODEL (3,894,846 hits);
- THEORY (1,172,347 hits).

Given these large yields, we used the Boolean operator AND to combine the following topic searches:

- ADOPTION AND EVIDENCE-BASE (899 hits);
- ADOPTION AND INNOVATION (4,209 hits);
- ADOPTION AND DIFFUSION (3,059 hits);
- ADOPTION AND EVIDENCE-BASE AND INNOVATION (135 hits).

To narrow the focus on theoretical models, further combinations using the Boolean operator AND were used:

- ADOPTION AND INNOVATION AND MODEL (48 hits);
- ADOPTION AND INNOVATION AND THEORY (194 hits);
- ADOPTION AND EVIDENCE-BASE AND MODEL (23 hits);
- ADOPTION AND EVIDENCE-BASE AND THEORY (80 hits);
- ADOPTION AND INNOVATION AND MODEL AND THEORY (439 hits);
- ADOPTION AND EVIDENCE-BASE AND MODEL AND THEORY (42 hits).

The last-step searches from these three databases formed the preliminary pool of literature. All articles were checked for overlaps within and between databases, which yielded 332 unique hits. To systematically zero in on specific adoption theories and their measures, we screened article keywords so that at least one of the following keywords was included:

- ADOPTION;
- ADOPTION OF INNOVATION;
- CONCEPTUAL MODEL;
- DIFFUSION;
- EVIDENCE-BASED INTERVENTIONS;
- EVIDENCE-BASED PRACTICE;
- FRAMEWORK;
- INNOVATION;
- THEORY.

In addition, article titles were screened so that at least one of the following words was included:

- ADOPTION;
- DIFFUSION;
- DIFFUSION OF INNOVATION;
- DIFFUSION OF INNOVATIONS;
- EVIDENCE-BASED INTERVENTIONS;
- EVIDENCE-BASED MENTAL HEALTH TREATMENTS;
- EVIDENCE-BASED PRACTICE;
- FRAMEWORK;
- INNOVATION;
- INNOVATION ADOPTION;
- MODEL;
- MULTILEVEL;
- RESEARCH-BASED PRACTICE;
- THEORETICAL MODELS.

Finally, we screened the actual titles of the adoption theories within the texts of the articles so that at least one of the following words was included:

- ADOPTION;
- DIFFUSION;
- EVIDENCE-BASED;
- EVIDENCE-BASED PRACTICE;
- FRAMEWORK;
- INNOVATION;
- MODEL.
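For readers who wish to reproduce the overlap check and the three screening steps programmatically, the procedure might be sketched as follows. This is an illustrative sketch only, not the procedure's original tooling: the `Record` fields, the title-based overlap rule, the substring matching, and the toy records are all assumptions introduced here, and the three screens are assumed to apply jointly (a record must pass all of them).

```python
import re
from dataclasses import dataclass

# Screening vocabularies copied from the three lists in this appendix.
KEYWORD_TERMS = (
    "ADOPTION", "ADOPTION OF INNOVATION", "CONCEPTUAL MODEL", "DIFFUSION",
    "EVIDENCE-BASED INTERVENTIONS", "EVIDENCE-BASED PRACTICE", "FRAMEWORK",
    "INNOVATION", "THEORY",
)
TITLE_TERMS = (
    "ADOPTION", "DIFFUSION", "DIFFUSION OF INNOVATION", "DIFFUSION OF INNOVATIONS",
    "EVIDENCE-BASED INTERVENTIONS", "EVIDENCE-BASED MENTAL HEALTH TREATMENTS",
    "EVIDENCE-BASED PRACTICE", "FRAMEWORK", "INNOVATION", "INNOVATION ADOPTION",
    "MODEL", "MULTILEVEL", "RESEARCH-BASED PRACTICE", "THEORETICAL MODELS",
)
THEORY_TERMS = (
    "ADOPTION", "DIFFUSION", "EVIDENCE-BASED", "EVIDENCE-BASED PRACTICE",
    "FRAMEWORK", "INNOVATION", "MODEL",
)


@dataclass(frozen=True)
class Record:
    """One bibliographic record; the field names are hypothetical."""
    title: str
    keywords: tuple      # author- or database-assigned keywords
    theory_names: tuple  # titles of adoption theories named in the full text
    database: str


def normalize(text):
    """Uppercase and collapse punctuation and whitespace into single spaces."""
    return re.sub(r"[^A-Z0-9]+", " ", text.upper()).strip()


def contains_any(text, terms):
    """True if any term occurs as a substring of the normalized text (assumed rule)."""
    norm = normalize(text)
    return any(normalize(term) in norm for term in terms)


def deduplicate(records):
    """Keep the first record per normalized title (assumed overlap criterion)."""
    seen, unique = set(), []
    for rec in records:
        key = normalize(rec.title)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def screen(records):
    """Apply the keyword, title, and theory-name screens in sequence."""
    allowed_keywords = {normalize(term) for term in KEYWORD_TERMS}
    kept = []
    for rec in records:
        keyword_ok = any(normalize(k) in allowed_keywords for k in rec.keywords)
        title_ok = contains_any(rec.title, TITLE_TERMS)
        theory_ok = any(contains_any(n, THEORY_TERMS) for n in rec.theory_names)
        if keyword_ok and title_ok and theory_ok:
            kept.append(rec)
    return kept


# Toy records, invented purely to exercise the functions above.
records = [
    Record("Toy study A: a framework for innovation adoption",
           ("adoption", "framework"), ("Hypothetical adoption framework",), "Ovid Medline"),
    Record("Toy Study A - A Framework for Innovation Adoption",
           ("adoption",), ("Hypothetical adoption framework",), "PsycINFO"),
    Record("Toy study B: burnout among clinic staff",
           ("burnout",), (), "Web of Science"),
    Record("Toy study C: a model of program uptake",
           ("theory",), ("Hypothetical uptake typology",), "Web of Science"),
]
pool = deduplicate(records)
kept = screen(pool)
print(len(pool), [rec.title for rec in kept])
```

Substring matching is deliberately permissive (for example, MODEL also matches MODELS); a stricter word-boundary rule would be a reasonable alternative if the screen admits too many records.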

Cite this article

Chor, K.H.B., Wisdom, J.P., Olin, SC.S. et al. Measures for Predictors of Innovation Adoption. Adm Policy Ment Health 42, 545–573 (2015). https://doi.org/10.1007/s10488-014-0551-7

DOI: https://doi.org/10.1007/s10488-014-0551-7