Abstract

Quantum Bridge Analytics relates generally to methods and systems for hybrid classical-quantum computing, and more particularly is devoted to developing tools for bridging classical and quantum computing to gain the benefits of their alliance in the present and enable enhanced practical application of quantum computing in the future. This is the first of a two-part tutorial that surveys key elements of Quantum Bridge Analytics and its applications, with an emphasis on supplementing models with numerical illustrations. In Part 1 (the present paper) we focus on the Quadratic Unconstrained Binary Optimization model which is presently the most widely applied optimization model in the quantum computing area, and which unifies a rich variety of combinatorial optimization problems. This document extends an original version published in 4OR to include a section on advanced models related to quantum optimization and a section reporting comparative computational results on challenging combinatorial applications.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The field of Combinatorial Optimization (CO) is one of the most important areas in the field of optimization, with practical applications found in every industry, including both the private and.

Public sectors. It is also one of the most active research areas pursued by the research communities of Operations Research, Computer Science and Analytics as they work to design and test new methods for solving real world CO problems.

Generally, these problems are concerned with making wise choices in settings where a large number of yes/no decisions must be made and each set of decisions yields a corresponding objective function value-like a cost or profit value. Finding good solutions in these settings is extremely difficult. The traditional approach is for the analyst to develop a solution algorithm that is tailored to the mathematical structure of the problem at hand. While this approach has produced good results in certain problem settings, it has the disadvantage that the diversity of applications arising in practice requires the creation of a diversity of solution techniques, each with limited application outside their original intended use.

As observed in Glover et al. (2019), in recent years we have discovered that a mathematical formulation known as QUBO, an acronym for a Quadratic Unconstrained Binary Optimization problem, can embrace an exceptional variety of important CO problems found in industry, science and government, as documented in studies such as Kochenberger et al. (2014) and Anthony et al. (2017). Through special reformulation techniques that are easy to apply, the power of QUBO solvers can be used to efficiently solve many important problems once they are put into the QUBO framework.

The QUBO model has emerged as an underpinning of the quantum computing area known as quantum annealing and Fujitsu's digital annealing, and has become a subject of study in neuromorphic computing. Through these connections, QUBO models lie at the heart of experimentation carried out with quantum computers developed by D-Wave Systems and neuromorphic computers developed by IBM. The consequences of these new discoveries linking QUBO models to quantum computing are being explored in initiatives by organizations such as IBM, Google, Amazon, Microsoft, D-Wave and Lockheed Martin in the commercial realm and Los Alamos National Laboratory, Oak Ridge National Laboratory, Lawrence Livermore National Laboratory and NASA’s Ames Research Center in the public sector. Computational experience is being amassed by both the classical and the quantum computing communities that highlights not only the potential of the QUBO model but also its effectiveness as an alternative to traditional modeling and solution methodologies.

The connection with Quantum Bridge Analytics derives from the gains to be achieved by building on these developments to bridge the gap between classical and quantum computational methods and technologies. As emphasized in the 2019 Consensus Study Report titled Quantum Computing: Progress and Prospects, by the National Academies of Sciences, Engineering and Medicine (https://www.nap.edu/catalog/25196/quantum-computing-progress-and-prospects) quantum computing will remain in its infancy for perhaps another decade, and in the interim “formulating an R&D program with the aim of developing commercial applications for near-term quantum computing is critical to the health of the field.” The report further notes that such a program will rest on developing “hybrid classical-quantum techniques.” Innovations that underlie and enable these hybrid classical-quantum techniques are the focus of Quantum Bridge Analytics and draw heavily on the QUBO model for their inspiration.

The significance of the ability of the QUBO model to encompass many models in combinatorial optimization is enhanced by the fact that the QUBO model can be shown to be equivalent to the Ising model that plays a prominent role in physics, as highlighted in in the paper by Lucas (2014). Consequently, the broad range of optimization problems solved effectively by state-of-the-art QUBO solution methods are joined by an important domain of problems arising in physics applications.

The materials provided in the sections that follow illustrate the process of reformulating important optimization problems as QUBO models through a series of explicit examples. Collectively these examples highlight the application breadth of the QUBO model. We disclose the unexpected advantages of modeling a wide range of problems in a form that differs from the linear models classically adopted in the optimization community. We show how many different types of constraining relationships arising in practice can be embodied within the “unconstrained” QUBO formulation in a very natural manner using penalty functions, yielding exact model representations in contrast to the approximate representations produced by customary uses of penalty functions. Each step of generating such models is illustrated in detail by simple numerical examples, to highlight the convenience of using QUBO models in numerous settings. As part of this, we provide techniques that can be used to recast a variety of problems that may not seem at first to fit within an unconstrained binary optimization structure into an equivalent QUBO model. We also describe recent innovations for solving QUBO models that offer a fertile avenue for integrating classical and quantum computing and for applying these models in machine learning.

As pointed out in Kochenberger and Glover (2006), the QUBO model encompasses the following important optimization problems:

-

Quadratic Assignment Problems

-

Capital Budgeting Problems

-

Multiple Knapsack Problems

-

Task Allocation Problems (distributed computer systems)

-

Maximum Diversity Problems

-

P-Median Problems

-

Asymmetric Assignment Problems

-

Symmetric Assignment Problems

-

Side Constrained Assignment Problems

-

Quadratic Knapsack Problems

-

Constraint Satisfaction Problems (CSPs)

-

Discrete Tomography Problems

-

Set Partitioning Problems

-

Set Packing Problems

-

Warehouse Location Problems

-

Maximum Clique Problems

-

Maximum Independent Set Problems

-

Maximum Cut Problems

-

Graph Coloring Problems

-

Number Partitioning Problems

-

Linear Ordering Problems

-

Clique Partitioning Problems

-

SAT problems

Details of such applications are elaborated more fully in Kochenberger et al. (2014).

In the following development we describe approaches that make it possible to model these and many other types of problems in the QUBO framework and provide information about recent developments linking QUBO to machine learning and quantum computing.

1.1 Basic QUBO problem formulation

We now give a formal definition of the QUBO model whose significance will be made clearer by numerical examples that give a sense of the diverse array of practical QUBO applications.

Definition

The QUBO model is expressed by the optimization problem:

where x is a vector of binary decision variables and Q is a square matrix of constants.

It is common to assume that the Q matrix is symmetric or in upper triangular form, which can be achieved without loss of generality simply as follows:

1.1.1 Symmetric form

For all i and j except i = j, replace \(q_{ij}\) by \((q_{ij} + q_{ji} )/2\).

1.1.2 Upper triangular form

For all \(i\) and \(j\) with \(j > i\), replace \(q_{ij}\) by \(q_{ij} + q_{ji}\). Then replace all \(q_{ij}\) for \(j < i\) by 0. (If the matrix is already symmetric, this just doubles the \(q_{ij}\) values above the main diagonal, and then sets all values below the main diagonal to 0).

In the examples given in the following sections, we will work with the full, symmetric Q matrix rather than adopting the “upper triangular form.”

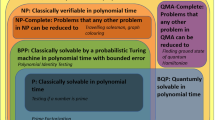

1.1.3 Comment on the formal classification of QUBO models and their solution

QUBO models belong to a class of problems known to be NP-hard. The practical meaning of this is that exact solvers designed to find “optimal” solutions (like the commercial CPLEX and Gurobi solvers) will most likely be unsuccessful except for very small problem instances. Using such methods, realistic sized problems can run for days and even weeks without producing high quality solutions. Fortunately, as we disclose in the sections that follow, impressive successes are being achieved by using modern metaheuristic methods that are designed to find high quality but not necessarily optimal solutions in a modest amount of computer time. These approaches are opening valuable possibilities for joining classical and quantum computing.

2 Illustrative examples and definitions

Before presenting common practical applications, we first give examples and definitions to lay the groundwork to see better how these applications can be cast in QUBO form.

To begin, consider the optimization problem.

where the variables, \(x_{j}\), are binary. We can make several observations:

-

1.

The function to be minimized is a quadratic function in binary variables with a linear part \(- 5x_{1} - 3x_{2} - 8x_{3} - 6x_{4}\) and a quadratic part \(4x_{1} x_{2} + 8x_{1} x_{3}^{{}} + 2x_{2} x_{3} + 10x_{3} x_{4}\).

-

2.

Since binary variables satisfy \(x_{j} = x_{j}^{2}\), the linear part can be written as

$$ - 5x_{1}^{2} - 3x_{2}^{2} - 8x_{3}^{2} - 6x_{4}^{2} $$ -

3.

Then we can re-write the model in the following matrix form:

\({\text{Minimize}}\,y = \left( {x_{1} x_{2} x_{3} x_{4} } \right)\left[ {\begin{array}{*{20}c} { - 5} & 2 & 4 & 0 \\ 2 & { - 3} & 1 & 0 \\ 4 & 1 & { - 8} & 5 \\ 0 & 0 & 5 & { - 6} \\ \end{array} } \right]\left[ {\begin{array}{*{20}c} {x_{1} } \\ {x_{2} } \\ {x_{3} } \\ {x_{4} } \\ \end{array} } \right]\).

-

4.

In turn, this can be written in the matrix notation introduced in Sect. 1 as

-

a.

Minimize \(y = x^{t} Qx\).

-

b.

where x is a column vector of binary variables. Note that the coefficients of the original linear terms appear on the main diagonal of the Q matrix. In this case Q is symmetric about the main diagonal without needing to modify the coefficients by the approach shown in Sect. 1.

-

a.

-

5.

Other than the 0/1 restrictions on the decision variables, QUBO is an unconstrained model with all problem data being contained in the Q matrix. These characteristics make the QUBO model particularly attractive as a modeling framework for combinatorial optimization problems, offering a novel alternative to classically constrained representations.

-

6.

The solution to the model in (3) above is: \(y = - 11,\;\;x_{1} = x_{4} = 1,\;x_{2} = x_{3} = 0.\)

Remarks

-

As already noted, the stipulation that Q is symmetric about the main diagonal does not limit the generality of the model.

-

As previously emphasized, a variety of optimization problems can naturally be formulated and solved as an instance of the QUBO model. In addition, many other problems that don’t appear to be related to QUBO problems can be re-formulated as a QUBO model. We illustrate this special feature of the QUBO model in the sections that follow.

3 Natural QUBO formulations

As mentioned earlier, several important problems fall naturally into the QUBO class. To illustrate such cases, we provide two examples of important applications whose formulations naturally take the form of a QUBO model.

3.1 The number partitioning problem

The Number Partitioning problem has numerous applications cited in the Bibliography section of these notes. A common version of this problem involves partitioning a set of numbers into two subsets such that the subset sums are as close to each other as possible. We model this problem as a QUBO instance as follows:

Consider a set of numbers \(S = \left\{ {s_{1} ,s_{2} ,s_{3} ,...,s_{m} } \right\}\). Let \(x_{j} = 1\;\;\) if \(s_{j} \;\) is assigned to subset 1; 0 otherwise. Then the sum for subset 1 is given by.

\({\text{sum}}_{1} = \sum\nolimits_{j = 1}^{m} {s_{j} x_{j} }\) and the sum for subset 2 is given by \({\text{sum}}_{2} = \sum\nolimits_{j = 1}^{m} {s_{j} } - \sum\nolimits_{j = 1}^{m} {s_{j} x_{j} }\).The difference in the sums is then.

\({\text{diff}} = \sum\limits_{j = 1}^{m} {s_{j} } - 2\sum\limits_{j = 1}^{m} {s_{j} x_{j} = c - 2\sum\limits_{j = 1}^{m} {s_{j} x_{j} } }\).

We approach the goal of minimizing this difference by minimizing

where

Dropping the additive and multiplicative constants, our QUBO optimization problem becomes:

where the Q matrix is constructed with \(q_{ii}\) and \(q_{ij}\) as defined above.

Numerical Example: Consider the set of eight numbers

By the development above, we have \(c^{2} = 27,556\) and the equivalent QUBO problem is \(\min \;y = x^{t} Qx\) with

Solving QUBO gives \(x = \left( {0,0,0,1,1,0,0,1} \right)\) for which \(y = - 6889\), yielding perfectly matched sums which equal 83. The development employed here can be expanded to address other forms of the number partitioning problem, including problems where the numbers must be partitioned into three or more subsets, as discussed in Alidaee et al. (2005).

3.2 The max-cut problem

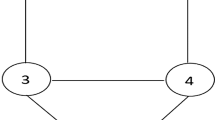

The Max Cut problem is one of the most famous problems in combinatorial optimization. Given an undirected graph G(V, E) with a vertex set V and an edge set E, the Max Cut problem seeks to partition V into two sets such that the number of edges between the two sets (considered to be severed by the cut), is a large as possible.

We can model this problem by introducing binary variables satisfying \(x_{j} = 1\) if vertex j is in one set and \(x_{j} = 0\) if it is in the other set. Viewing a cut as severing edges joining two sets, to leave endpoints of the edges in different vertex sets, the quantity \(x_{i} + x_{j} - 2x_{i} x_{j}\) identifies whether the edge \(\left( {i,j} \right)\) is in the cut. That is, if \((x_{i} + x_{j} - 2x_{i} x_{j} )\) is equal to 1, then exactly one of \(x_{i}\) and \(x_{j}\) equals 1, which implies edge \(\left( {i,j} \right)\) is in the cut. Otherwise \((x_{i} + x_{j} - 2x_{i} x_{j} )\) is equal to zero and the edge is not in the cut.

Thus, the problem of maximizing the number of edges in the cut can be formulated as.

Maximize \(y = \sum\limits_{{\left( {i,j} \right) \in E}} {\left( {x_{i} + x_{j} - 2x_{i} x_{j} } \right)}\).

which is an instance of

The linear terms determine the elements on the main diagonal of Q and the quadratic terms determine the off-diagonal elements. See Boros and Hammer (1991, 2002) and Kochenberger et al. (2013) for further discussions of QUBO and the Max Cut problem.

Numerical Example: To illustrate the Max Cut problem, consider the following undirected graph with 5 vertices and 6 edges.

Explicitly taking into account all edges in the graph gives the following formulation:

or

This takes the desired form

By writing the symmetric Q matrix as:

Solving this QUBO model gives \(x = (0,\;1,\;1,\;0,\;0)\). Hence vertices 2 and 3 are in one set and vertices 1, 4, and 5 are in the other, with a maximum cut value of 5.

In the above examples, the problem characteristics led directly to an optimization problem in QUBO form. As previously remarked, many other problems require “re-casting” to create the desired QUBO form. We introduce a widely-used form of such re-casting in the next section.

4 Creating QUBO models using known penalties

The “natural form” of a QUBO model illustrated thus far contains no constraints other than those requiring the variables to be binary. However, by far the largest number of problems of interest include additional constraints that must be satisfied as the optimizer searches for good solutions.

Many of these constrained models can be effectively re-formulated as a QUBO model by introducing quadratic penalties into the objective function as an alternative to explicitly imposing constraints in the classical sense. The penalties introduced are chosen so that the influence of the original constraints on the solution process can alternatively be achieved by the natural functioning of the optimizer as it looks for solutions that avoid incurring the penalties. That is, the penalties are formulated so that they equal zero for feasible solutions and equal some positive penalty amount for infeasible solutions. For a minimization problem, these penalties are added to create an augmented objective function to be minimized. If the penalty terms can be driven to zero, the augmented objective function becomes the original function to be minimized.

For certain types of constraints, quadratic penalties useful for creating QUBO models are known in advance and readily available to be used in transforming a given constrained problem into a QUBO model. Examples of such penalties for some commonly encountered constraints are given in the table below. Note that in the table, all variables are intended to be binary and the parameter P is a positive, scalar penalty value. This value must be chosen sufficiently large to assure the penalty term is indeed equivalent to the classical constraint, but in practice an acceptable value for P is usually easy to specify. We discuss this matter more thoroughly later.

Classical constraint | Equivalent penalty |

\(x + y \le 1\) | \(P(xy)\) |

\(x + y \ge 1\) | \(P(1 - x - y + xy)\) |

\(x + y = 1\) | \(P(1 - x - y + 2xy)\) |

\(x \le y\) | \(P(x - xy)\) |

\(x_{1} + x_{2} + x_{3} \le 1\) | \(P(x_{1} x_{2} + x_{1} x_{3} + x_{2} x_{3} )\) |

\(x = y\) | \(P(x + y - 2xy)\) |

Table of a few Known constraint/penalty pairs.

To illustrate the main idea, consider a traditionally constrained problem of the form:

subject to the constraint

where \(x{}_{1}\) and \(x_{2}\) are binary variables. Note that this constraint allows either or neither x variable to be chosen. It explicitly precludes both from being chosen (i.e., both cannot be set to 1).

From the 1st row in the table above, we see that a quadratic penalty that corresponds to our constraint is

where P is a positive scalar. For P chosen sufficiently large, the unconstrained problem.

minimize \(y = f(x) + Px_{1} x_{2}\).

Has the same optimal solution as the original constrained problem. If f(x) is linear or quadratic, then this unconstrained model will be in the form of a QUBO model. In our present example, any optimizer trying to minimize \(y\) will tend to avoid solutions having both \(x{}_{1}\) and \(x_{2}\) equal to 1, else a large positive amount will be added to the objective function. That is, the objective function incurs a penalty corresponding to infeasible solutions. This simple penalty has been used effectively by Pardalos and Xue (1999) in the context of the maximum clique and related problems.

4.1 The minimum vertex cover (MVC) problem

In Sect. 3.2 we saw how the QUBO model could be used to represent the famous Max Cut problem. Here we consider another well-known optimization problem on graphs called the Minimum Vertex Cover problem. Given an undirected graph with a vertex set V and an edge set E, a vertex cover is a subset of the vertices (nodes) such that each edge in the graph is incident to at least one vertex in the subset. The Minimum Vertex Cover problem seeks to find a cover with a minimum number of vertices in the subset.

A standard optimization model for MVC can be formulated as follows. Let \(x_{j} = 1\) if vertex j is in the cover (i.e., in the subset) and \(x_{j} = 0\) otherwise. Then the standard constrained, linear 0/1 optimization model for this problem is:

\({\text{Minimize}}\sum\limits_{j \in V} {x_{j} }\).

Subject to.

\(x_{i} + x_{j} \ge 1\) for all \((i,j) \in E\).

Note the constraints ensure that at least one of the endpoints of each edge will be in the cover and the objective function seeks to find the cover using the least number of vertices. Note also that we have a constraint for each edge in the graph, meaning that even for modest sized graphs we can have many constraints. Each constraint will alternatively be imposed by adding a penalty to the objective function in the equivalent QUBO model.

Referring to our table above, we see that the constraints in the standard MVC model can be represented by a penalty of the form \(P(1 - x - y + xy)\). Thus, an unconstrained alternative to the constrained model for MVC is. \({\text{Minimize}}\,y = \sum\limits_{j \in V} {x_{j} } + P\left( {\sum\limits_{{\left( {i,j} \right) \in E}} {\left( {1 - x_{i} - x_{j} + x_{i} x_{j} } \right)} } \right)\)Where P again represents a positive scalar penalty. In turn, we can write this as minimize \(x^{t} Qx\) plus a constant term. Dropping the additive constant, which has no impact on the optimization, we have an optimization problem in the form of a QUBO model.

Remark

A common extension of this problem allows a weight \(w_{j}\) to be associated with each vertex j. Following the development above, the QUBO model for the Weighted Vertex Cover problem is given by:

\({\text{Minimize}}\,y = \sum\limits_{j \in V} {w_{j} x_{j} } + P\left( {\sum\limits_{{\left( {i,j} \right) \in E}} {\left( {1 - x_{i} - x_{j} + x_{i} x_{j} } \right)} } \right)\) Numerical Example: Consider the graph of Sect. 3.2 again but this time we want to determine a minimum vertex cover.

For this graph with n = 6 edges and m = 5 nodes, the model becomes:

Which can be written as

Arbitrarily choosing P to be equal to 8 and dropping the additive constant (6P = 48) gives our QUBO model

With the Q matrix given by

Note that we went from a constrained model with 5 variables and 6 constraints to an unconstrained QUBO model in the same 5 variables. Solving this QUBO model gives: \(x^{t} Qx = - 45\) at \(x = (0,1,1,0,1)\) for which \(y = 48 - 45 = 3\), meaning that a minimum cover is given by nodes 2, 3, and 5. It’s easy to check that at this solution, all the penalty functions are equal to 0.

4.1.1 Comment on the scalar penalty P

As we have indicated, the reformulation process for many problems requires the introduction of a scalar penalty P for which a numerical value must be given. These penalties are not unique, meaning that many different values can be successfully employed. For a particular problem, a workable value is typically set based on domain knowledge and on what needs to be accomplished. Often, we use the same penalty for all constraints but there is nothing wrong with having different penalties for different constraints if there is a good reason to differentially treat various constraints. If a constraint must absolutely be satisfied, i.e., a “hard” constraint, then P must be large enough to preclude a violation. Some constraints, however, are “soft”, meaning that it is desirable to satisfy them but slight violations can be tolerated. For such cases, a more moderate penalty value will suffice.

A penalty value that is too large can impede the solution process as the penalty terms overwhelm the original objective function information, making it difficult to distinguish the quality of one solution from another. On the other hand, a penalty value that is too small jeopardizes the search for feasible solutions. Generally, there is a ‘Goldilocks region’ of considerable size that contains penalty values that work well. A little preliminary thought about the model can yield a ballpark estimate of the original objective function value. Taking P to be some percentage (75 to 150%) of this estimate is often a good place to start. In the end, solutions generated can always be checked for feasibility, leading to changes in penalties and further rounds of the solution process as needed to zero in on an acceptable solution.

5 The set packing problem

The Set Packing problem is a well-known optimization problem in binary variables with a general (traditional) formulation given by

where the \(a_{ij}\) are 0/1 coefficients, the \(w_{j}\) are weights and the \(x_{j}\) variables are binary. Using the penalties of the form shown in the first and fifth rows of the table given earlier, we can easily construct a quadratic penalty corresponding to each of the constraints in the traditional model. Then by subtracting the penalties from the objective function, we have an unconstrained representation of the problem in the form of a QUBO model.

Numerical Example: Consider the following small example of a set packing problem:

Here all the objective function coefficients, the \(w_{j}\) values, are equal to 1. Using the penalties mentioned above, the equivalent unconstrained problem is:

This has our customary QUBO form

where the Q matrix, with P arbitrarily chosen to be 6, is given by

Solving the QUBO model gives \(y = 2\) at \(x = (0,1,1,0)\). Note that at this solution, all four penalty terms are equal to zero.

Remark

Set packing problems with thousands of variables and constraints have been efficiently reformulated and solved in Alidaee et al. (2008) using the QUBO reformulation illustrated in this example.

5.1 The max 2-sat problem

Satisfiability problems, in their various guises, have applications in many different settings. Often these problems are represented in terms of clauses, in conjunctive normal form, consisting of several true/false literals. The challenge is to determine the literals so that as many clauses as possible are satisfied.

For our optimization approach, we’ll represent the literals as 0/1 values and formulate models that can be re-cast into the QUBO framework and solved with QUBO solvers. To illustrate the approach, we consider the category of satisfiability problems known as Max 2-Sat problems.

For Max 2-Sat, each clause consists of two literals and a clause is satisfied if either or both literals are true. There are three possible types of clauses for this problem, each with a traditional constraint that must be satisfied if the clause is to be true. In turn, each of these three constraints has a known quadratic penalty given in our previous table.

The three clause types along with their traditional constraints and associated penalties are:

-

1.

No negations: Example (\(x_{i} \vee x_{j}\))

-

Traditional constraint: \(\;x_{i} + x_{j} \ge 1\)

Quadratic Penalty: \((1 - x_{i} - x_{j} + x_{i} x_{j} )\)

-

-

2.

One negation: Example (\(x_{i} \vee \overline{x}_{j}\))

-

Traditional constraint: \(x_{i} + \overline{x}_{j} \ge 1\)

Quadratic Penalty: \((x_{j} - x_{i} x_{j} )\)

-

-

3.

Two negations: Example (\(\overline{x}_{i} \vee \overline{x}_{j}\))

-

Traditional constraint: \(\overline{x}_{i} + \overline{x}_{j} \ge 1\)

Quadratic Penalty: \((x_{i} x_{j} )\)

-

Note that \(x_{j}\) = 1 or 0 denoting whether literal j is true or false. The notation \(\overline{x}_{j}\), the complement of \(x_{j}\), is equal to \((1 - x_{j} )\).)

For each clause type, if the traditional constraint is satisfied, the corresponding penalty is equal to zero, while if the traditional constraint is not satisfied, the quadratic penalty is equal to 1. Given this one-to-one correspondence, we can approach the problem of maximizing the number of clauses satisfied by equivalently minimizing the number of clauses not satisfied. This perspective, as we will see, gives us a QUBO model.

For a given Max 2-Sat instance then, we can add the quadratic penalties associated with the problem clauses to get a composite penalty function which we want to minimize. Since the penalties are all quadratic, this penalty function takes the form of a QUBO model,

\(\min \;y = x^{t} Qx\). Moreover, if \(y\) turns out to be equal to zero when minimizing the QUBO model, this means we have a solution that satisfies all of the clauses; if \(y\) turns out to equal 5, that means we have a solution that satisfies all but 5 of the clauses; and so forth.

This modeling and solution procedure is illustrated by the following example with 4 variables and 12 clauses where the penalties are determined by the clause type.

5.1.1 Clause # clause quadratic penalty

-

1.

\(\begin{array}{*{20}c} {x_{1} \vee x_{2} } & {(1 - x_{1} - x_{2} + x_{1} x_{2} )} \\ \end{array}\)

-

2.

\(\begin{array}{*{20}c} {x_{1} \vee \overline{x}_{2} } & {(x_{2} - x_{1} x_{2} )} \\ \end{array}\)

-

3.

\(\begin{array}{*{20}c} {\overline{x}_{1} \vee x_{2} } & {(x_{1} - x_{1} x_{2} )} \\ \end{array}\)

-

4.

\(\begin{array}{*{20}c} {\overline{x}_{1} \vee \overline{x}_{2} } & {(x_{1} x_{2} )} \\ \end{array}\)

-

5.

\(\begin{array}{*{20}c} {\overline{x}_{1} \vee x_{3} } & {(x_{1} - x_{1} x_{3} )} \\ \end{array}\)

-

6.

\(\begin{array}{*{20}c} {\overline{x}_{1} \vee \overline{x}_{3} } & {(x_{1} x_{3} )} \\ \end{array}\)

-

7.

\(\begin{array}{*{20}c} {x_{2} \vee \overline{x}_{3} } & {(x_{3} - x_{2} x_{3} )} \\ \end{array}\)

-

8.

\(\begin{array}{*{20}c} {x_{2} \vee x_{4} } & {(1 - x_{2} - x_{4} + x_{2} x_{4} )} \\ \end{array}\)

-

9.

\(\begin{array}{*{20}c} {\overline{x}_{2} \vee x_{3} } & {(x_{2} - x_{2} x_{3} )} \\ \end{array}\)

-

10.

\(\begin{array}{*{20}c} {\overline{x}_{2} \vee \overline{x}_{3} } & {(x_{2} x_{3} )} \\ \end{array}\)

-

11.

\(\begin{array}{*{20}c} {x_{3} \vee x_{4} } & {(1 - x_{3} - x_{4} + x_{3} x_{4} )} \\ \end{array}\)

-

12.

\(\begin{array}{*{20}c} {\overline{x}_{3} \vee \overline{x}_{4} } & {(x_{3} x_{4} )} \\ \end{array}\)

Adding the individual clause penalties together gives our QUBO model

Or,

Where the Q matrix is given by

Solving QUBO gives: \(y = 3 - 2 = 1\) at \(x_{1} = x_{2} = x_{3} = 0,\;x_{4} = 1\), meaning that all clauses but one are satisfied.

Remarks

The QUBO approach illustrated above has been successfully used in Kochenberger etal. (2005a, b, c) to solve Max 2-sat problems with hundreds of variables and thousands of clauses. An interesting feature of this approach for solving Max 2-sat problems is that the size of the resulting QUBO model to be solved is independent of the number of clauses in the problem and is determined only by the number of variables at hand. Thus, a Max 2-Sat problem with 200 variables and 30,000 clauses can be modeled and solved as a QUBO model with just 200 variables.

6 Creating QUBO models: a general purpose approach

In this section, we illustrate how to construct an appropriate QUBO model in cases where a QUBO formulation doesn’t arise naturally (as we saw in Sect. 3) or where useable penalties are not known in advance (as we saw in Sect. 4). It turns out that for these more general cases, we can always “discover” useable penalties by adopting the procedure outlined below.

For this purpose, consider the general 0/1 optimization problem of the form:

This model accommodates both quadratic and linear objective functions since the linear case results when C is a diagonal matrix (observing that \(x_{j}^{2} = x_{j}\) when \(x{}_{j}\) is a 0–1 variable). Under the assumption that A and b have integer components, problems with inequality constraints can always be put in this form by including slack variables and then representing the slack variables by a binary expansion. (For example, this would introduce a slack variable s to convert the inequality \(4x_{1} + 5x_{2} - x_{3} \le 6\) into \(4x_{1} + 5x_{2} - x_{3} + s = 6\), and since clearly \(s \le 7\) (in case \(x_{3} = 1\)), \(s\) could be represented by the binary expansion \(s_{1} + 2s_{2} + 4s{}_{3}\) where \(s_{1} ,s_{2,}\) and \(s{}_{3}\) are additional binary variables. If it is additionally known that at not both \(x_{1}\) and \(x_{2}\) can be 0, then \(s\) can be at most 3 and can be represented by the expansion \(s_{1} + 2s_{2}\). A fuller treatment of slack variables is given subsequently.) These constrained quadratic optimization models are converted into equivalent unconstrained QUBO models by converting the constraints \(Ax = b\) (representing slack variables as x variables) into quadratic penalties to be added to the objective function, following the same re-casting as we illustrated in Sect. 4.

Specifically, for a positive scalar P, we add a quadratic penalty \(P\left( {Ax - b} \right)^{t} \left( {Ax - b} \right)\) to the objective function to get

where the matrix D and the additive constant c result directly from the matrix multiplication indicated. Dropping the additive constant, the equivalent unconstrained version of the constrained problem becomes

Remarks

-

1.

A suitable choice of the penalty scalar P, as we commented earlier, can always be chosen so that the optimal solution to QUBO is the optimal solution to the original constrained problem. Solutions obtained can always be checked for feasibility to confirm whether or not appropriate penalty choices have been made.

-

2.

For ease of reference, the preceding procedure that transforms the general problem into an equivalent QUBO model will be called Transformation # 1. The mechanics of Transformation #1 can be employed whenever we need to convert linear constraints of the form \(Ax = b\) into usable quadratic penalties in our efforts to re-cast a given problem with equality constraints into the QUBO form. Boros and Hammer (2002) give a discussion of this approach which is the basis for establishing the generality of QUBO.

For realistic applications, a program will need to be written implementing Transformation # 1 and producing the Q matrix needed for the QUBO model. Any convenient language, like C++, Python, Matlab, etc., can be used for this purpose. For small problems, or for preliminary tests preceding large-scale applications, we can usually proceed manually as we’ll do in these notes.

-

3.

Note that the additive constant, c, does not impact the optimization and can be ignored during the optimization process. Once the QUBO model has been solved, the constant c can be used to recover the original objective function value. Alternatively, the original objective function value can always be determined by using the optimal \(x_{j}\) found when QUBO is solved.

Transformation #1 is the “go to” approach in cases where appropriate quadratic penalty functions are not known in advance. In general, it represents an approach that can be adopted for any problem. Due to this generality, Transformation # 1 has proven to be an important modeling tool in many problem settings.

Before moving on to applications in this section, we want to single out another constraint/penalty pair for special recognition that we worked with before in Sect. 4:

Constraints of this form appear in many important applications. Due to their importance and frequency of use, we refer to this special case as Transformation #2. We’ll have occasion to use this as well as Transformation # 1 later in this section.

6.1 Set partitioning

The set partitioning problem (SPP) has to do with partitioning a set of items into subsets so that each item appears in exactly one subset and the cost of the subsets chosen is minimized. This problem appears in many settings including the airline and other industries and is traditionally formulated in binary variables as

where \(x_{j}\) denotes whether or not subset j is chosen, \(c_{j}\) is the cost of subset j, and the \(a_{ij}\) coefficients are 0 or 1 denoting whether or not variable \(x_{j}\) explicitly appears in constraint i. Note that his model has the form of the general model given at the beginning of this section where, in this case, the objective function matrix C is a diagonal matrix with all off-diagonal elements equal to zero and the diagonal elements are given by the original linear objective function coefficients. Thus, we can re-cast the model into a QUBO model directly by using Transformation # 1. We illustrate this with the following example.

Numerical Example: Consider a set partitioning problem

Subject to

And x binary. Normally, Transformation # 1 would be embodied in a supporting computer routine and employed to re-cast this problem into an equivalent instance of a QUBO model. For this small example, however, we can proceed manually as follows: The conversion to an equivalent QUBO model via Transformation # 1 involves forming quadratic penalties and adding them to the original objective function. In general, the quadratic penalties to be added (for a minimization problem) are given by \(P\sum\limits_{i} {\left( {\sum\nolimits_{j = 1}^{n} {a_{ij} x_{ij} - b_{i} } } \right)}^{2}\) where the outer summation is taken over all constraints in the system \(Ax = b\).

For our example we have

Arbitrarily taking P to be 10, and recalling that \(x_{j}^{2} = x_{j}\) since our variables are binary, this becomes

Dropping the additive constant 40, we then have our QUBO model

where the \(Q\) matrix is

Solving this QUBO formulation gives an optimal solution \(x_{1} = x_{5} = 1\) (with all other variables equal to 0) to yield \(y = 6\).

Remarks:

-

1.

The QUBO approach to solving set partitioning problems has been successfully applied in Lewis et al. (2008) to solve large instances with thousands of variables and hundreds of constraints.

-

2.

The special nature of the set partitioning model allows an alternative to Transformation #1 for constructing the QUBO model. Let \(k_{j}\) denote the number of 1’s in the jth column of the constraint matrix A and let \(r_{ij}\) denote the number of times variables i and j appear in the same constraint. Then the diagonal elements of Q are given by \(q_{ii} = c_{i} - Pk_{i}\) and the off–diagonal elements of Q are given by \(q_{ij} = q_{ji} = \Pr_{ij}\). The additive constant is given by \(m*P\). These relationships make it easy to formulate the QUBO model for any set partitioning problem without having to go through the explicit algebra of Transformation # 1.

-

3.

The set partitioning problem may be viewed as a form of clustering problem and is elaborated further in Sect. 6.

6.2 Graph coloring

In many applications, Transformation # 1 and Transformation # 2 can be used in concert to produce an equivalent QUBO model, as demonstrated next in the context of graph coloring. Vertex coloring problems seek to assign colors to nodes of a graph in such a way that adjacent nodes receive different colors. The K-coloring problem attempts to find such a coloring using exactly K colors. A wide range of applications, ranging from frequency assignment problems to printed circuit board design problems, can be represented by the K-coloring model.

These problems can be modeled as satisfiability problems as follows:

Let \(x_{ij}\) = 1 if node i is assigned color j, and 0 otherwise.

Since each node must be colored, we have the constraints

where n is the number of nodes in the graph. A feasible coloring, in which adjacent nodes are assigned different colors, is assured by imposing the constraints

For all adjacent nodes (i,j) in the graph.

This problem, then, can be re-cast in the form of a QUBO model by using Transformation # 1 on the node assignment constraints and using Transformation # 2 on the adjacency constraints. This problem does not have an objective function in its original formulation, meaning our focus is on finding a feasible coloring using the K colors allowed. As a result, any positive value for the penalty P will do. (The resulting QUBO model of course has an objective function given by \(x^{t} Qx\) where Q is determined by the foregoing re-formulation.)

Numerical Example: Consider the problem of finding a feasible coloring of the following graph using K = 3 colors.

Given the discussion above, we see that the goal is to find a solution to the system:

In this traditional form, the model has 15 variables and 26 constraints. As suggested above, to recast this problem into the QUBO form, we can use Transformation # 1 on the node assignment equations and Transformation # 2 on adjacency inequalities. One way to proceed here is to start with a 15-by-15 Q matrix where initially all the elements are equal to zero and then re-define appropriate elements based on the penalties obtained from Transformations # 1 and # 2. To clarify the approach, we’ll take these two sources of penalties one at a time. For ease of notation and to be consistent with earlier applications, we’ll first re-number the variables using a single subscript, from 1 to 15, as follows:

As we develop our QUBO model, we’ll use the variables with a single subscript.

First, we’ll consider the node assignment equations and the penalties we get from Transformation # 1. Taking these equations in turn we have.

Taking P to equal 4 and inserting these penalties in the “developing” Q matrix gives the following partially completed Q matrix along with an additive constant of 5P.

Note the block diagonal structure. Many problems have patterns that can be exploited in developing Q matrices needed for their QUBO representation. Looking for patterns is often a useful de-bugging tool.

To complete our Q matrix, it’s a simple matter of inserting the penalties representing the adjacency constraints into the above matrix. For these, we use the penalties of Transformation # 2, namely \(Px_{i} x_{j}\), for each adjacent pair of nodes and each of the three allowed colors. We have 7 adjacent pairs of nodes and three colors, yielding a total of 21 adjacency constraints. Allowing for symmetry, we’ll insert 42 penalties into the matrix, augmenting the penalties already in place. For example, for the constraint ensuring that nodes 1 and 2 can not both have color #1, the penalty is \(Px_{1} x_{4}\), implying that we insert the penalty value “2” in row 1 and column 4 of our matrix and also in column 1 and row 4. (Recall that we have relabeled our variables so that the original variables \(x_{1,1}\) and \(x_{2,1}\) are now variables \(x_{1}\) and \(x_{4}\).) Including the penalties for the other adjacency constraints completes the Q matrix as shown below

The above matrix incorporates all of the constraints of our coloring problem, yielding the equivalent QUBO model

Solving this model yields the feasible coloring:

\(x_{2} = x_{4} = x_{9} = x_{11} = x_{15} = 1\) with all other variables equal to zero.

Switching back to our original variables, this solution means that nodes 1 and 4 get color #2, node 2 gets color # 1, and nodes 3 and 5 get color # 3.

Remark

This approach to graph coloring problems has proven to be very effective for a wide variety of coloring instances with hundreds of nodes, as demonstrated in Kochenberger et. al. (2005a, b, c).

6.3 General 0/1 programming

Many important problems in industry and government can be modeled as 0/1 linear programs with a mixture of constraint types. The general problem of this nature can be represented in matrix form by

where slack variables are introduced as needed to convert inequality constraints into equalities. Given a problem in this form, Transformation # 1 can be used to re-cast the problem into the QUBO form

As discussed earlier, problems with inequality constraints can be handled by introducing slack variables, via a binary expansion, to create the system of constraints \(Ax = b\).

Numerical Example: Consider the general 0/1 problem

Since Transformation # 1 requires all constraints to be equations rather than inequalities, we convert the 1st and 3rd constraints to equations by including slack variables via a binary expansion. To do this, we first estimate upper bounds on the slack activities as a basis for determining how many binary variables will be required to represent the slack variables in the binary expansions. Typically, the upper bounds are determined simply by examining the constraints and estimating a reasonable value for how large the slack activity could be. For the problem at hand, we can refer to the slack variables for constraints 1 and 3 as \(s_{1}\) and \(s_{3}\) with upper bounds 3 and 6 respectively. Our binary expansions are:

where \(x_{6} ,x_{7} ,x{}_{8},x_{9}\) and \(x_{10}\) are new binary variables. Note that these new variables will have objective function coefficients equal to zero. Including these slack variables gives the system \(Ax = b\) with \(A\) given by:

A = \(\left[ {\begin{array}{*{20}c} 2 & 2 & 4 & 3 & 2 & 1 & 2 & 0 & 0 & 0 \\ 1 & 2 & 2 & 1 & 2 & 0 & 0 & 0 & 0 & 0 \\ 3 & 3 & 2 & 4 & 4 & 0 & 0 & { - 1} & { - 2} & { - 4} \\ \end{array} } \right]\).

We can now use Transformation # 1 to reformulate our problem as a QUBO instance. Adding the penalties to the objective function gives

Taking P = 10 and re-writing this in the QUBO format gives

With an additive constant of -900 and a Q matrix.

Q = \(\left[ {\begin{array}{*{20}c} {526} & { - 150} & { - 160} & { - 190} & { - 180} & { - 20} & { - 40} & {30} & {60} & {120} \\ { - 150} & {574} & { - 180} & { - 200} & { - 200} & { - 20} & { - 40} & {30} & {60} & {120} \\ { - 160} & { - 180} & {688} & { - 220} & { - 200} & { - 40} & { - 80} & {20} & {40} & {80} \\ { - 190} & { - 200} & { - 220} & {645} & { - 240} & { - 30} & { - 60} & {40} & {80} & {160} \\ { - 180} & { - 200} & { - 200} & { - 240} & {605} & { - 20} & { - 40} & {40} & {80} & {160} \\ { - 20} & { - 20} & { - 40} & { - 30} & { - 20} & {130} & { - 20} & 0 & 0 & 0 \\ { - 40} & { - 40} & { - 80} & { - 60} & { - 40} & { - 20} & {240} & 0 & 0 & 0 \\ {30} & {30} & {20} & {40} & {40} & 0 & 0 & { - 110} & { - 20} & { - 40} \\ {60} & {60} & {40} & {80} & {80} & 0 & 0 & { - 20} & { - 240} & { - 80} \\ {120} & {120} & {80} & {160} & {160} & 0 & 0 & { - 40} & { - 80} & { - 560} \\ \end{array} } \right]\).

Solving \(\max \;y = x^{\prime}Qx\) gives the non-zero values

For which \(y = 916.\) Note that the third constraint is loose. Adjusting for the additive constant, it gives an objective function value of 16. Alternatively, we could have simply evaluated the original objective function at the solution \(x_{1} = x_{4} = x_{5} = 1\) to get the objective function value of 16.

Remarks

Any problem in linear constraints and bounded integer variables can be converted through a binary expansion into \(\max {\kern 1pt} \;y = x^{\prime}Qx\) as illustrated here. In such applications, however, the elements of the Q matrix can, depending on the data, get unacceptably large and may require suitable scaling to mitigate this problem.

6.4 Quadratic assignment

The Quadratic Assignment Problem (QAP) is a renowned problem in combinatorial optimization with applications in a wide variety of settings. It is also one of the more challenging models to solve. The problem setting is as follows: We are given n facilities and n locations along with a flow matrix (\(f_{ij}\)) denoting the flow of material between facilities i and j. A distance matrix (\(d_{ij}\)) specifies the distance between sites i and j. The optimization problem is to find an assignment of facilities to locations to minimize the weighted flow across the system. Cost information can be explicitly introduced to yield a cost minimization model, as is common in some applications.

The decision variables are \(x_{ij} = 1\) if facility i is assigned to location j; otherwise, \(x_{ij} = 0\). Then the classic QAP model can be stated as:

\({\text{Minimize}}\sum\limits_{i = 1}^{n} {\sum\limits_{j = 1}^{n} {\sum\limits_{k = 1}^{n} {\sum\limits_{l = 1}^{n} {f_{ij} d_{kl} x_{ik} x_{jl} } } } }\).

Subject to \(\begin{gathered} \sum\limits_{i = 1}^{n} {x_{ij} = 1\quad j = 1,n} \hfill \\ \sum\limits_{j = 1}^{n} {x_{ij} = 1\quad i = 1,n} \hfill \\ \begin{array}{*{20}c} {x_{ij} \in \left\{ {0,1} \right\},} & {i,j = 1,n} \\ \end{array} \hfill \\ \end{gathered}\)

All QAP problems have \(n^{2}\) variables, which often yields large models in practical settings.

This model has the general form presented at the beginning of this section and consequently Transformation # 1 can be used to convert any QAP problem into a QUBO instance.

Numerical Example: Consider a small example with n = 3 facilities and 3 locations with flow and Distance matrices respectively given as follows: \(\left[ {\begin{array}{*{20}c} 0 & 5 & 2 \\ 5 & 0 & 3 \\ 2 & 3 & 0 \\ \end{array} } \right]\) and \(\left[ {\begin{array}{*{20}c} 0 & 8 & {15} \\ 8 & 0 & {13} \\ {15} & {13} & 0 \\ \end{array} } \right]\).

It is convenient to re-label the variables using only a single subscript as we did previously in the graph coloring problem, thus replacing.

Given the flow and distance matrices our QAP model becomes:

Subject to \(\begin{gathered} x_{1} + x_{2} + x_{3} = 1 \hfill \\ x_{4} + x_{5} + x_{6} = 1 \hfill \\ x_{7} + x_{8} + x_{9} = 1 \hfill \\ x_{1} + x_{4} + x_{7} = 1 \hfill \\ x_{2} + x_{5} + x_{8} = 1 \hfill \\ x_{3} + x_{6} + x_{9} = 1 \hfill \\ \end{gathered}\)

Converting the constraints into quadratic penalty terms and adding them to the objective function gives the unconstrained quadratic model

Choosing a penalty value of P = 200, this becomes the standard QUBO problem.

QUBO: \(\min {\kern 1pt} \quad y = x^{t} Qx\).

With an additive constant of 1200 and the following 9-by-9 Q matrix:

Solving QUBO gives \(y = - 982\) at \(x_{1} = x_{5} = x_{9} = 1\) and all other variables = 0. Adjusting for the additive constant, we get the original objective function value of \(1200 - 982 = 218.\)

Remark

A QUBO approach to solving QAP problems, as illustrated above, has been successfully applied to problems with more than 30 facilities and locations in (Wang et al. 2016).

6.5 Quadratic knapsack

Knapsack problems, like the other problems presented earlier in this section, play a prominent role in the field of combinatorial optimization, having widespread application in such areas as project selection and capital budgeting. In such settings, a set of attractive potential projects is identified and the goal is to identify a subset of maximum value (or profit) that satisfies the budget limitations. The classic linear knapsack problem applies when the value of a project depends only on the individual projects under consideration. The quadratic version of this problem arises when there is an interaction between pairs of projects affecting the value obtained.

For the general case with n projects, the Quadratic Knapsack Problem (QKP) is commonly modeled as

Subject to the budget constraint

where \(x_{j} = 1\) if project j is chosen: else, \(x_{j} = 0\). The parameters \(v_{ij} ,\;a_{j} \;{\text{and}}\;b\) represent, respectively, the value associated with choosing projects i and j, the resource requirement of project j, and the total resource budget. Generalizations involving multiple knapsack constraints are found in a variety of application settings.

Numerical Example: Consider the QKP model with four projects:

Subject to the knapsack constraint:

We re-cast this into the form of a QUBO model by first converting the constraint into an equation and then using the ideas embedded in Transformation # 1. Introducing a slack variable in the form of the binary expansion \(1x_{5} + 2x_{6}\), we get the equality constraint

Which we can convert to penalties to produce our QUBO model as follows.

Including the penalty term in the objective function gives the unconstrained quadratic model:

Choosing a penalty P = 10, and cleaning up the algebra gives the QUBO model.

QUBO: \(\max \;y = x^{t} Qx\).

With an additive constant of − 2560 and the Q matrix

Solving QUBO gives \(y = 2588\) at \(x = (1,0,1,1,0,0)\). Adjusting for the additive constant, gives the value 28 for the original objective function.

Remark

The QUBO approach to QKP has proven to be successful on problems with several hundred variables as shown in Glover et al. (2002a, b).

7 Connections to quantum computing and machine learning

7.1 Quantum computing QUBO developments

As noted in Sect. 1, one of the most significant applications of QUBO emerges from its equivalence to the famous Ising problem in physics. In common with the earlier demonstration that a remarkable array of NP-hard problems can converted into the QUBO form, (Lucas, 2014) more recently has observed that such problems can be converted into the Ising form, including graph and number partitioning, covering and set packing, satisfiability, matching, and constrained spanning tree problems, among others. Pakin (2017) presents an algorithm for finding the shortest path through a maze by expressing the shortest path as the globally optimal value of an Ising Hamiltonian instead of via a traditional backtracking mechanism. Ising problems replace x ∈ {0, 1}n by x ∈ {− 1, 1} n and can be put in the QUBO form by defining xj' = (xj + 1)/2 and then redefining xj to be xj'.Footnote 1 Efforts to solve Ising problems are often carried out with annealing approaches, motivated by the perspective in physics of applying annealing methods to find a lowest energy state.

More effective methods for QUBO problems, and hence for Ising problems, are obtained using modern metaheuristics. Among the best metaheuristic methods for QUBO are those based on tabu search and path relinking as described in Glover (1996, 1997), Glover and Laguna (1997) and adapted to QUBO in Wang et al. (2012, 2013).

A bonus from this development has been to create a link between QUBO problems and quantum computing.Footnote 2 A quantum computer based on quantum annealing with an integrated physical network structure of qubits known as a Chimera graph has incorporated ideas from Wang et al. (2012) in its software and has been implemented on the D-Wave System. The ability to obtain a quantum speedup effect for this system applied to QUBO problems has been demonstrated in Boixo et al. (2014).

Additional advances incorporating methodology from Wang et al. (2012, 2013) are provided in the D-Wave open source software system Qbsolv (2017) and in the supplementary QMASM system by Pakin (2018). Qbsolv is a hyrid classical/hardware accelerator tool, which takes as input a QUBO that may be larger/denser/higher-precision than the accelerator, and solves subQUBOs on an accelerator and combines the results for full QUBO solution. It has enabled widespread experimentation to map optimization problems to the QUBO form for execution on classical and D-wave computers. D-Wave has now upgraded this system by drawing on the MIT Kerberos system (Kerberos, 2019) which offers many convenience features for users. The Quantum Bridge Analytics perspective, as elaborated below, is providing additional gains.

Recent QUBO quantum computing applications, complementing earlier applications on classical computing systems, include those for graph partitioning problems in Mniszewski et al. (2017) and Ushijima-Mwesigwa et al. (2017); graph clustering (quantum community detection problems) in Negre et al. (2019); traffic-flow optimization in Neukart et al. (2017); vehicle routing problems in Feld et al. (2018), Clark et al. (2019) and Ohzeki et al.(2018); maximum clique problems in Chapuis et al. (2018); cybersecurity problems in Munch et al. (2018) and Reinhardt et al. (2018); predictive health analytics problems in Oliveira et al. (2018) and Sahner et al. (2018); and financial portfolio management problems in Elsokkary et al. (2017) and Kalra et al. (2018). In another recent development, QUBO models are being studied using the IBM neuromorphic computer at as reported in Alom et al. (2017) and Aimone et al. (2018). Still more recently, Aramon, et al. (2019) have investigated and tested the Fujitsu Digital Annealer approach, which is also designed to solve fully connected QUBO problems, implemented on application-specific CMOS hardware and solved problems of 1024 variables.

Multiple quantum computational paradigms are emerging as important research topics, and their relative merits have been the source of some controversy. One of the most active debates concerns the promise of quantum gate systems, also known as quantum circuit systems, versus the promise of adiabatic or quantum annealing systems. Part of this debate has concerned the question of whether adiabatic quantum computing incorporates the critical element of quantum entanglement. After some period, the debate was finally resolved by Albash et al. (2015) and Lanting et al. (2014), demonstrating that this question can be answered in the affirmative.

Yet another key consideration involves the role of decoherence. Some of the main issues are discussed in Amin et al. (2008) and Albash and Lidar (2015). The challenge is for the gate model to handle decoherence effectively. Superconducting qubit techniques have very short-lived coherence times and the adiabatic approach does not require them, while the gate model does.

An important discovery by Yu et al. (2018) shows that the adiabatic and gate systems offer effectively the same potential for achieving the gains inherent in quantum computing processes, with a mathematical demonstration that the quantum circuit algorithm can be transformed into the quantum adiabatic algorithm with the exact same time complexity. This has useful implications for the relevance of QUBO models that have been implemented in an adiabatic quantum annealing setting, disclosing that analogous advances associated with QUBO models may ultimately be realized through quantum circuit systems.

Complementing this analysis, Shaydulin et al. (2018) have conducted a first performance comparison of these two leading paradigms, showing that quantum local search approach with both frameworks can achieve results comparable to state-of-the-art local search using classical computing architectures, with a potential for the quantum approaches to outperform the classical systems as hardware evolves. However, the time frame for realizing such potential has been estimated by some analysts to lie 10 or more years in the future (Debenedictis, 2019; Reedy, 2017).

Regardless of which quantum paradigm proves superior (and when this paradigm will become competitive with the best classical computing systems), the studies of Alom et al. (2017) and Aimone et al. (2018) in neuromorphic computing reinforce the studies of adiabatic and gate based models by indicating the growing significance of the QUBO/Ising model across multiple frameworks.

However, to set the stage for solving QUBO problems on quantum computers, these problems must be embedded (or compiled) onto quantum computing hardware, which in itself is a very hard problem. Date et al. (2019) address this issue by proposing an efficient algorithm for embedding QUBO problems that runs fast, uses less qubits than previous approaches and gets an objective function value close to the global minimum value. In a computational comparison, they find that their embedding algorithm outperforms the embedding algorithm of D-Wave, which is the current state of the art.

Vyskocil et al. (2019) observe that the transformation in Sect. 5.3 for handling general inequality constraints of the form \(\sum_{i=1}^{n}{x}_{i} \le k\) introduces penalties for numerous cross products, which poses difficulties for current quantum annealers such as those by D-Wave Systems. The authors give a scalable and modular two-level approach for handling this situation that first solves a small preliminary mixed integer optimization problem with 16 binary variables and 16 constraints, and then uses this to create a transformation that increases the number of QUBO variables but keeps the number of cross product terms in check, thereby aiding a quantum computer implementation.

Nevertheless, other considerations are relevant for evaluating the performance of different computational paradigms for solving QUBO problems, among them the use of reduction and preprocessing methods for decomposing large scale QUBO problem instances into smaller ones. Hahn et al. (2017) and Pelofske et al. (2019) investigate such preprocessing methods that utilize upper and lower bound heuristics in conjunction with graph decomposition, vertex and edge extraction and persistency analysis. Additional preprocessing methods are introduced in ) as described subsequently in the context of machine learning.

7.2 Quantum bridge analytics: joining classical and quantum computing paradigms

As emphasized in the 2019 Consensus Study Report titled Quantum Computing: Progress and Prospects, by the National Academies of Sciences, Engineering and Medicine (2019), quantum computing will remain in its infancy for some years to come, and in the interim “formulating an R&D program with the aim of developing commercial applications for near-term quantum computing is critical to the health of the field.” As noted in this report, such a program will rest on developing “hybrid classical-quantum techniques,” which is the focus of Quantum Bridge Analytics. With the emergence of Quantum Bridge Analytics (QBA), a field devoted to bridging the gap between classical and quantum computational methods and technologies, the creation of effective foundations for such hybrid systems is being actively pursued with the development of the AlphaQUBO solver (AlphaQUBO, 2021). This work is paving the way for a wide range of additional QUBO and QUBO-related applications in commercial and academic research settings. The power of the QBA approach has been demonstrated in Glover and Kochenberger (2019), with computational tests showing that a predecessor of AlphaQUBO solves QUBO problems between 100 and 500 variables up to three orders of magnitude faster than a mainstream quantum computing system using Kerberos, and as noted in Sect. 8, AlphaQUBO is additionally capable of solving much larger problems involving upward of a million variables.

Another blend of classical and quantum computing, known as the Quantum Approximate Optimization Algorithm (QAOA), is a hybrid variational algorithm introduced by Farhi et al. (2014) that produces approximate solutions for combinatorial optimization problems. The QAOA approach has been recently been applied in Zhou et al. (2018) to MaxCut (MC) problems, including a variant in process for Max Independent Set (MIS) problems, and is claimed by its authors to have the potential to challenge the leading classical algorithms. In theory, QAOA methods can be applied to more types of combinatorial optimization problems than embraced by the QUBO model, but at present the MC and MIS problems studied by QAOA are a very small segment of the QUBO family and no time frame is offered for gaining the ability to tackle additional QUBO problem instances. Significantly, the parameters of the QAOA framework must be modified to produce different algorithms to appropriately handle different problem types. Whether this may limit the universality of this approach in a practical sense remains to be seen.

Wang and Abdullah (2018) acknowledge that the acclaim given to QAOA for exhibiting the feature called "quantum supremacy" does not imply that QAOA will be able to outperform classical algorithms on important combinatorial optimization problems such as Constraint Satisfaction Problems, and current implementations of QAOA are subject to a gate fidelity limitation, where the potential advantages of larger values of the parameter p in QAOA applications are likely to be countered by a decrease in solution accuracy.

QAOA has inspired many researchers to laud its potential virtues, though the practical significance of this potential at present is not well established. Investigations are currently underway in Kochenberger et al. (2019) to examine this issue by computational testing on a range of QUBO models that fall within the scope of QAOA implementations presently available, to determine the promise of QAOA in relation to classical optimization on these models.

We now examine realms of QUBO models that are actively being investigated apart from issues of alternative computational frameworks for solving them efficiently.

7.3 Unsupervised machine learning with QUBO

One of the most salient forms of unsupervised machine learning is represented by clustering. The QUBO set partitioning model provides a very natural form of clustering and gives this model a useful link to unsupervised machine learning. As observed in Ailon et al. (2008) and Aloise et al. (2010), the CPP (clique partitioning problem) is popular in the area of machine learning as it offers a general model for correlation clustering (CC) and the modularity maximization (MM). Pudenz and Lidar (2013) further show how a QUBO based quantum computing model can be used in unsupervised machine learning. A related application in O’Malley et al. (2018) investigates nonnegative/binary matrix factorization with a D-Wave quantum annealer.

An application of QUBO to unsupervised machine learning in ) provides an approach that can be employed either together with quantum computing or independently. In a complementary development, clustering is used to facilitate the solution of QUBO models in Samorani et al. (2019), thereby providing a foundation for studying additional uses of clustering in this context.

7.4 Supervised machine learning with QUBO

A proposal to use QUBO in supervised machine learning is introduced in Schneidman et al. (2006). From the physics perspective, the authors argue that the equivalent Ising model is useful for any representation of neural function, based on the supposition that a statistical model for neural activity should be chosen using the principle of maximum entropy. Consequently, this model has a natural role in statistical neural models of supervised machine learning. Hamilton et al. (2018) discussed the potential to use advance computing such as neuromorphic processing units and quantum annealers in spin-glass networks, Boltzmann machines, convolutional neural networks and constraint satisfaction problems.

7.5 Machine learning to improve QUBO solution processes

The development of rules and strategies to learn the implications of specific model instances has had a long history. Today this type of machine learning permeates the field of mixed integer programming to identify relationships such as values (or bounds) that can be assigned to variables, or inequalities that can constrain feasible spaces more tightly. Although not traditionally viewed through the lens of machine learning, due in part to being classified under the name of preprocessing, these approaches are now widely acknowledged to constitute a viable and important part of the machine learning domain.

Efforts to apply machine learning to uncover the implications of QUBO problem structures have proceeded more slowly than those devoted to identifying such implications in the mixed integer programming field. A landmark paper in the QUBO area is the work of Boros et al. (2008), which uses roof duality and a max-flow algorithm to provide useful model inferences. More recently, sets of logical tests have been developed in Glover et al. (2018a, b) to learn relationships among variables in QUBO applications which achieved a 45% reduction in size for about half of the problems tested, and in 10 cases succeeded in fixing all the variables, exactly solving these problems. The rules also identified implied relationships between pairs of variables that resulted in simple logical inequalities to facilitate solving these problems.

Other types of machine learning approaches also merit a closer look in the future for applications with QUBO. Among these are the Programming by Optimization approach of Hoos (2012) and the Integrative Population Analysis approach of Glover et al. (1998a, 1998b).

8 Advanced quantum-related applications

We examine developments in quantum-related models that have recently gained particular attention in the quantum computing community and exhibit the diverse possibilities for handling important applications using the QUBO model.

8.1 Satellite tracking formulations

Satellite tracking problems that involve scheduling the daily communications between satellites and ground station are becoming increasingly critical with the growth in the number of satellites and the number of important targets to be tracked by them. These problems come in a variety of forms. The following identifies three instances of a fundamental tracking problem that will give an idea of QUBO formations for others. (See, for example, Lu et al. 2017; Xie et al. 2019)

The fundamental problem is to schedule the tracking done by satellites in order to satisfy a set of requests RQh, h ∈ H for viewing particular targets by the satellites.

Each request RQh consists of a set of resources i ∈ RQh, and may be interpreted as identifying a target object Th (satellite, space debris, etc.) to be viewed by all resources i ∈ RQh, using different time slots to view the target Th for each resource (so that no two resources are engaged in viewing Th simultaneously). The time units are discretized and target Th is viewed for as many time periods as possible within the collective span of periods the resources are available for viewing Th.

Let StartView(h,i) = the starting time for the period when Th becomes available for viewing with resource i, and let EndView(h,i) = the ending time for this view period. These starting and ending times are given by the problem data and depend on both the target object Th being viewed (determined by request RQh) and the resource i used to view Th. The period available for viewing Th by resource i is therefore given by

The actual span of periods during which viewing takes place is determined by the decision variable xh,i,t defined below. However, not all times t within this view period are admissible for starting the observation of Th by resource i, and we let StartTime(h,i) = the set of admissible starting values t within the view period. Then, also by definition

Observation: The value d(h,i) can be the same value for all resources i and all targets Th, or may depend only on resource i, or only on the target Th (determined by the request RQh), or may depend on both h and i.

8.1.1 Decision variable

We introduce a 0–1 decision variable xh,i,t, where h ∈ H, i ∈ RQh, and t ∈ StartTime(h,i), and where setting xh,i,t = 1 corresponds to choosing to view the target object Th with resource i during the time interval from t to t + d(h,i).

Restriction 1 If i ∈ RQh, then we must choose exactly one (or at most one) variable xh,i,t to receive the value xh,i,t = 1 for t ∈ StartTime(h,i).

Restriction 1 may be represented formally as follows.

Formulation 1: For each h ∈ H and each i ∈ RQh,

The constraint (1) is readily represented by a quadratic penalty term in a QUBO formulation for both the “ = 1” and the “ ≤ 1” versions. The “ ≤ 1” version can produce a more versatile QUBO formulation by starting with an objective function

And then subtracting the weighted penalty terms from this xo representation to produce a quadratic objective for a QUBO problem as shown in Sect. 4.

The maximization of the sum of the xh,i,t variables assures that the solution will make as many of these variables equal to 1 as possible, and hence the “ = 1” version will be achieved in an optimal solution if (1) admits a feasible solution for the “ = 1” case. Instead of giving each xh,i,t variable a coefficient of 1 in this starting xo expression, a positive coefficient ch,i,t can be used, giving the possibility to compel xh,i,t = 1 for some variables in preference to others, hence for viewing some targets Th more thoroughly than others or employing some resources for viewing more intensively than others.

Restriction 2 No two resources i1 and i2 ∈ RQh are allowed to view the target object Th in the same time period t.

This restriction is introduced chiefly for the purpose of economy, since viewing Th by more than one resource in each time period is wasteful. Without it, the maximization of the sum of the xh,i,t variables in xo would produce unnecessary viewings, and such viewings could likewise result even if the coefficients for the xh,i,t variables in xo were all 0 before introducing the penalty terms. To express Restriction 2 more formally, it and to facilitate the discussion of its implications with the diagrams that follow, we define ti1(start) = StartView(h,i1) and ti1(end) = EndView(h,i1), and similarly define ti2(start) = StartView(h,i2) and ti2(end) = EndView(h,i2). Then the interval [ti1(start), ti1(end)] corresponds to ViewPeriod(h,i1), and the interval [ti2(start), ti2(end)] corresponds to ViewPeriod(h,i2).

Formulation 2 By the preceding definitions, Restriction 2 is equivalent to stipulating

For all time periods t1 and t2 such that

And such that there is a non-empty intersection of the intervals

And of the intervals

The inequality (2) is readily penalized and taken into the objective function for a QUBO problem, again as shown in Sect. 4.

It is useful to identify the implication of the conditions formulated for (2.1), (2.2) and (2.3) to understand how the model captures the key problem elements and to get a clearer understanding of the problem. Assume without loss of generality that ti1(start) ≤ ti2(start). Then we have the following three cases.

Case 1. ti1(end) < ti2(start).

This case may be visualized as follows.

Then no t2 value for i2 in the interval [ti2(start), ti2(end)] that overlaps with any t1 in the interval [ti1(start), ti1(end)], and hence there are no restrictions to be expressed by (2).

Case 2. ti2(start) ≤ ti1(end) and ti2(end) ≤ ti1(end).

This case may be visualized by.

In this instance the interval [ti2(start), ti2(end)] lies entirely inside the [ti1(start), ti1(end)] interval. Hence any t1 that satisfies.

Will cause the interval [t1, t1 – d(h,i1)] to have a non-empty intersection with the interval [ti2(start), ti2(end)], and any t2 at all such that.

Will cause the interval [t2, t2 – d(h,i2)] to have a non-empty intersection with the interval [ti1(start), ti1(end)]. Hence, for Case 2 the inequality (2) must hold for all t1 satisfying (2a) and all t2 satisfying (2b).

Case 3. ti2(start) ≤ ti1(end) and ti1(end) ≤ ti2(end).

The visualization for this case is given by.

Consequently, any t1 that satisfies.

Will cause the interval [t1, t1 – d(h,i1)] to have a non-empty intersection with the interval [ti2(start), ti2(end)]. Also, all t2 satisfying.

Will cause the interval [t2, t2 – d(h,i2)] to have a non-empty intersection with the interval [ti1(start), ti1(end)]. Hence, for Case 3 the inequality (2) must be imposed for all t1 satisfying (3a) and all t2 satisfying (3b).

As in the case of the equation or inequality (1) for Formulation 1, in all of the Cases 1, 2 and 3, the inequality (2) for Formulation 2 is readily translated into a quadratic penalty for a QUBO problem as previously observed.

Formulations 1 and 2 make no mention of the case where a resource i1 may be assigned to view a target Th1 and a resource i2 may be assigned to view a target Th2. This case is handled automatically by the formulations without imposing any restrictions.

8.2 Portfolio optimization formulations

The optimization community, from both the conventional and quantum computing sides, have shown a strong interest in testing the applicability of the QUBO model for addressing important problems arising in a variety of financial areas. In these pursuits, applications have been reported in problem domains such as index tracking (QC Ware Corporation 2018; Hong et.al., 2021), credit scoring (1Qbit, 2017), and credit fraud (Multivers 2020a, b).

By far, however, the financial application receiving the most attention is Portfolio modeling as evidenced by the articles of Mugel et al. (2020), Phillipson and Bhatia (2020), Grant and Humble (2020), Venturelli and Kondratyev (2018), Palmer et al. (2021) and Meta-Analytics (2020).

At the core of most of the Portfolio models reported in the literature is the basic Markowitz idea of finding an optimal tradeoff between risk and return. Given a set of candidate assets, the problem is to form a portfolio by choosing a subset of the assets that optimize the risk-vs-return tradeoff. Following Markowitz, risk is typically represented by a covariance matrix that is estimated from past returns. As we will show, however, a different model provides a highly attractive alternative.

In the basic model, the decision variables, denoted by \(w_{i}\), represent the percentage of the total portfolio that is represented by asset i. These decision variables are continuous between 0 and 1 and their sum, taken over all assets chosen for the portfolio, is equal to 1. Keeping in the spirit of QUBO modeling where constraints are represented by quadratic penalties appended to the original objective function, a Markowitz inspired model in QUBO-ready form can we written as.

where the various model components are defined as:

-

wi :Decision variable. The percentage of the total portfolio represented by asset i.

-

\(r\): Vector of Return data.

-

-

C: Covariance Matrix.

-

\(\beta\): Risk Tolerance parameter.

-