Abstract

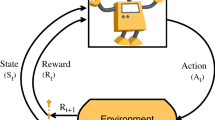

Deep reinforcement learning has shown remarkable success in the past few years. Highly complex sequential decision-making problems from game playing and robotics have been solved with deep model-free methods. Unfortunately, the sample complexity of model-free methods is often high. Model-based reinforcement learning, in contrast, can reduce the number of environment samples by learning an explicit internal model of the environment dynamics. However, achieving good model accuracy in high-dimensional problems is challenging. In recent years, a diverse landscape of model-based methods has been introduced to improve model accuracy, using techniques such as probabilistic inference, model-predictive control, latent models, and end-to-end learning and planning. Some of these methods succeed in achieving high accuracy at low sample complexity in typical benchmark applications. In this paper, we survey these methods; we explain how they work and what their strengths and weaknesses are. We conclude with a research agenda for future work to make the methods more robust and applicable to a wider range of applications.

Notes

A dataset is static. In reinforcement learning the choice of actions may depend on the rewards that are returned during the learning process, giving rise to a dynamic, potentially unstable, learning process.

Acknowledgements

We thank the members of the Leiden Reinforcement Learning Group, and especially Thomas Moerland, Mike Huisman, Matthias Müller-Brockhausen, Zhao Yang, Erman Acar, and Andreas Sauter for many discussions and insights. We thank the anonymous reviewers for their valuable insights, which improved the paper greatly.

About this article

Cite this article

Plaat, A., Kosters, W. & Preuss, M. High-accuracy model-based reinforcement learning, a survey. Artif Intell Rev 56, 9541–9573 (2023). https://doi.org/10.1007/s10462-022-10335-w

DOI: https://doi.org/10.1007/s10462-022-10335-w