Abstract

Within natural language processing and text mining, sentiment analysis (SA) has received considerable attention from researchers across the globe. With the prevalence of Web 2.0, users have become more eager to share, promote, and express themselves, along with the issues and challenges they encounter in daily activities, through the Internet (social media, micro-blogs, e-commerce, etc.). Expressions and opinions are complex sequences of acts that convey a huge volume of data, which poses a challenge for computational researchers to decode. Over time, researchers from various segments of the public and private sectors have explored SA with the aim of understanding the behavioral perspective of various stakeholders in society. Although efforts to construct SA systems have been successful, challenges in efficiency still remain. This article presents an organized survey of SA (also known as opinion mining) along with its methodologies and algorithms. The survey classifies SA into categories based on levels, tasks, and sub-tasks, along with the techniques used to perform them. It focuses explicitly on the different directions in which research has been explored in the area of cross-domain opinion classification. The article concludes with an exhaustive analysis of opinion mining, covering the approaches, datasets, languages, and applications used. The observations made are expected to help researchers gain a greater understanding of emerging trends and state-of-the-art methods to be applied in future exploration.

1 Introduction

At present, the Internet plays an important role in people’s lives. With the help of the latest technologies, people can access, share, and generate content over the Internet. Web 1.0 refers to the first generation of the World Wide Web, which was made up entirely of web pages connected by hyperlinks: people could explore a website and read the content of its pages, but could not write or add anything to a page. Internet users have been moving from Web 1.0 to Web 2.0 since 2004. Web 2.0 (the “Interactive Web” or “Social Web”) describes a new stage of web facilities, social websites, and applications with an increasing emphasis on user collaboration (user-generated content and the read–write web). People consume as well as contribute information through sites such as YouTube, Flickr, Digg, and blogs. Web 3.0 (the “web of meaning” or “Semantic Web”) refers to the third generation of the World Wide Web and adds smart search and behavioral advertising to the features of Web 2.0. Web content may be structured, semi-structured, or unstructured, and is often misspelled and noisy, so Natural Language Processing techniques are required to analyze the data.

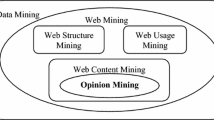

Social networking sites play an important role in Internet activities, and both Internet users and Internet content are increasing day by day. People are eager to share and express their feelings about issues and day-to-day activities on the Internet. Micro text (short text) is one of the biggest challenges in text analysis, and different approaches are used for micro text normalization (Satapathy et al. 2020; Cambria 2016). The explosive growth of online activities (conferencing, chatting, social media communication, ticket booking, surveillance, e-commerce, online transactions, micro-blogging, blogging, etc.) requires us to load, transform, extract, and analyse a very large volume of data, referred to as Big Data. This data can be analysed in several real-life applications by using a combination of data mining, text mining, web mining, and information retrieval techniques. Blogs, forums, e-commerce websites, news reports, social networks, and other web resources serve as platforms for expressing views, which can be used to observe or report the feelings of customers and the general public about public occasions, organisational plans, reputation monitoring, political activities, promotion campaigns, and product preferences (Ravi and Ravi 2015). Such a huge amount of raw data is difficult to analyse, and methods are needed to obtain a comprehensive review summary. The world population and the number of Internet users continue to increase. People pay considerable attention to Web 1.0 and Web 2.0, and Internet users perform many activities on them, as shown in Fig. 1 (Balqisnadiah 2016).

Fig. 1 Web 1.0 and Web 2.0 (Balqisnadiah 2016)

User-generated views are the main source of raw text. With the rapid growth of user-generated text on the web, automatically mining valuable data from large numbers of documents is receiving increasing research interest in numerous areas of Natural Language Processing (NLP) (Sun et al. 2017). Artificial intelligence is now used everywhere, from phones to other devices, for example in Amazon’s Alexa. Machine learning methods are increasingly used in artificial intelligence, and many companies (Netflix, Google, data companies, etc.) combine artificial intelligence with large amounts of data. NLP focuses on human language in order to extract insight, assist with text, and much more. Every day, people say words that can be interpreted in countless ways, because the meaning of every word is context-dependent. NLP is used for many purposes, such as word suggestion, quick compilation of data, voice-to-text conversion (Google Assistant, Alexa), search engine optimization (SEO) applications, handwriting recognition (online and offline), speech recognition systems, and opinion mining. Sentiment analysis is the computational study of a person’s thoughts, moods, reviews, feelings, emotions, appraisals, and attitudes towards events, issues, and topics. The following subsection summarizes the earlier reviews in the field of sentiment analysis.

1.1 Sentiment analysis: the earlier reviews

Pang and Lee (2008) reviewed and analyzed more than 300 research articles, covering the major tasks (opinion summarization, opinion classification, sentiment mining, polarity determination, opinion spam detection, different levels of sentiment analysis, etc.), challenges, and applications of opinion mining. Later, Tang et al. (2009) highlighted several issues in sentiment analysis or opinion mining, such as sentiment extraction, document opinion classification, word opinion classification, and subjectivity classification. The authors also specified some approaches to subjectivity classification, such as a cut-based classifier, a multiple Naïve Bayes classifier, a Naïve Bayes classifier, and a similarity-dependent approach.

O’Leary (2011) reviewed blog mining and outlined different kinds of blog search, forums, the sentiments to be analysed, and their applications. Montoyo et al. (2012) discussed applications, achievements, and some open issues in sentiment mining and subjectivity classification. Tsytsarau and Palpanas (2012) focused on opinion spam detection, contradiction analysis, opinion aggregation, and opinion mining. The authors compared different opinion mining techniques and approaches applied to a common dataset.

Liu (2012) surveyed more than four hundred research articles in the area of opinion mining and sentiment classification. This survey covered NLP issues, sentiment analysis applications, sentiment lexicon and its issues, different levels of analysis, opinion summarization, cross-domain sentiment classification, cross-lingual sentiment classification, aspect-based sentiment analysis, sentence subjectivity classification, quality of reviews, sentiment lexicon generation, and some challenging issues in the field of opinion classification and sentiment analysis.

Feldman (2013) studied sentiment analysis and pointed out some specific problems: sentiment lexicon acquisition, comparative sentiment analysis, sentence-level sentiment classification, document-level sentiment classification, and aspect-based sentiment classification, as well as open challenges such as sarcasm detection, automatic entity recognition, discussion of multiple entities in the same review, and the composition of statements in sentiment analysis. The survey by Cambria et al. (2013) focused on the complexities involved in opinion mining with respect to demand and future directions.

After that, Medhat et al. (2014) approached the problem of sentiment analysis from the point of view of techniques rather than applications. The authors categorized the articles according to the techniques involved and classified the various techniques of sentiment analysis with brief details of the algorithms. They described the available datasets and categorized them according to application. They then discussed some fields related to sentiment analysis that are open to enhancement, such as transfer learning, building resources, and emotion detection. Finally, they summarized fifty-four research articles, listing the task accomplished, language, data source, polarity, data scope, algorithm used, and domain.

Later, Ravi and Ravi (2015) reviewed about 251 research articles published during 2002–2015 and organized the survey by opinion mining approaches, applications, and tasks. The survey covered different tasks of sentiment analysis and pointed out major issues in sentiment classification, review spam detection, degree of usefulness measurement, subjectivity classification, and lexicon creation. Finally, it summarized thirty-two publicly available datasets and one hundred sixty-one research articles in tabular form, listing the concepts and techniques used, language, polarity, type of data, and dictionary.

Further, Hussein (2016) identified challenges relevant to the techniques and methods of opinion mining. Based on two comparisons among forty-seven research articles, the author discussed the effects and importance of opinion mining challenges in opinion evaluation, and then summarized sentiment challenges and ways to improve accuracy based on previous work. After that, Al-Moslmi et al. (2017) studied about 91 research articles from 2010 to 2016 in the field of cross-domain sentiment classification. The study focused on the techniques, algorithms, and approaches used in cross-domain opinion classification, highlighted some open issues, and summarized the methodologies and findings of twenty-eight research articles in the area. The survey analysis indicates that no perfect solution has yet been found for cross-domain opinion mining.

Recently, Sun et al. (2017) reviewed opinion mining using NLP techniques. First, the authors explained information fusion techniques for combining information from multiple sources to solve certain tasks, and presented some natural language processing techniques for text processing. Second, they introduced the different approaches, methods, and resources of sentiment analysis for different levels and situations. The aim of opinion mining is to extract the sentiment orientation (positive or negative) at different levels of sentiment analysis (sentence level, document level, fine-grained level, word level, etc.) using supervised, unsupervised, and semi-supervised learning methods. Finally, they discussed some advanced topics (opinion spam detection, review usefulness measurement, opinion summarization, etc.), some open problems (annotated corpora and cumulative errors from pre-processing), and some challenges (deep learning for accuracy) in opinion mining. Most recently, Young et al. (2018) explained the latest trends of deep learning in NLP, compared various deep learning models, and discussed the past, present, and future of deep learning in NLP.

This literature survey differs from earlier review articles in several ways: (1) it categorizes existing studies by the different tasks and levels of sentiment analysis; (2) it emphasizes cross-domain sentiment classification, one of the most challenging tasks in sentiment analysis; (3) it summarizes the different tasks of sentiment classification in terms of the approaches, techniques or methodologies, datasets, lexicons or corpora, and languages used; (4) it provides a detailed list of publicly available toolkits for sentiment analysis tasks and the languages they support; (5) it provides a detailed list of available datasets, data sources, annotated corpora, and sentiment lexicons, along with the languages used in the field of sentiment analysis; (6) it classifies the baseline methods and research articles of cross-domain opinion classification by four aspects (approaches and methods used, datasets and languages used, corpora or dictionaries used, and a detailed description of each research article); (7) it discusses challenging issues, open problems, and future directions in sentiment analysis; and (8) it summarizes more than one hundred research articles on sentiment analysis in terms of techniques, methodologies, datasets, data sources, and languages.

The main aim of this survey is to understand the different techniques, approaches, and datasets used in the field of sentiment analysis to achieve accuracy. The rest of the article is organized as follows: Sect. 2 explains sentiment analysis and its different levels. Section 3 presents the different tasks and sub-tasks of sentiment analysis and a state-of-the-art discussion of opinion mining, along with publicly available datasets, lexicons, and toolkits. Section 4 explains one of the most challenging tasks of sentiment classification, cross-domain opinion classification. Sections 5 and 6 cover the outcomes of the survey, the pros and cons of different baseline methods, challenges, open issues, and future directions in the field of sentiment analysis. Section 7 concludes the survey.

2 Sentiment analysis

Sentiment analysis is the computational study of people’s views or attitudes towards an entity. Here, an entity can be an individual thing such as a topic, blog, or event. The term sentiment analysis was first introduced early in this century, and the field has since become an active area of research. Following Liu (2012), a sentiment is represented as a quintuple.

Definition: a sentiment is a quintuple $(e_i, a_{ij}, s_{ijkl}, h_k, t_l)$, where $e_i$ represents the $i$th entity, $a_{ij}$ represents the $j$th aspect of the $i$th entity, $h_k$ represents the $k$th sentiment holder, $t_l$ represents the time at which the sentiment is conveyed, and $s_{ijkl}$ represents the sentiment on aspect $a_{ij}$ of entity $e_i$ expressed by opinion holder $h_k$ at time $t_l$. The sentiment or opinion $s_{ijkl}$ is neutral, positive, or negative.

For example, in “The power backup of a power bank is excellent!”, “power bank” is the entity, “power backup” is the aspect, and the sentiment expressed is positive.
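The quintuple can be written down as a small record type. The following is a minimal sketch in Python; the class name `Opinion` and its field names are illustrative assumptions, and the holder and time are left unset because the example sentence does not state them.

```python
from dataclasses import dataclass
from typing import Literal, Optional

Polarity = Literal["positive", "negative", "neutral"]


@dataclass(frozen=True)
class Opinion:
    """One sentiment quintuple (entity, aspect, sentiment, holder, time)."""
    entity: str                    # e_i: the entity being discussed
    aspect: str                    # a_ij: an aspect of that entity
    sentiment: Polarity            # s_ijkl: polarity of the opinion
    holder: Optional[str] = None   # h_k: who holds the opinion (unknown here)
    time: Optional[str] = None     # t_l: when it was expressed (unknown here)


# The power bank example, with only the components stated in the text.
example = Opinion(entity="power bank", aspect="power backup", sentiment="positive")
```

Under this view, document-level and sentence-level analysis mainly fill in the `sentiment` field, while aspect-level analysis must also recover `entity` and `aspect`.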

Sentiment analysis examines people’s moods, aspects, views, opinions, attitudes, feelings, and emotions towards entities (services, products, research, political issues, organizations, random issues, and any other topics). Its aim is to find the polarity towards the entity, which can be positive, negative, or neutral.

Machine learning, lexicon-based, and hybrid approaches play an important role in obtaining the polarity in sentiment analysis. Sentiment analysis can be used in applications such as movie sales prediction, market prediction, recommender systems, customer satisfaction measurement, and many more, all with one goal: analysing the opinions in people’s reviews. Owing to its rapid growth and the interest in it, e-commerce is one of the prominent sources in which people express opinions and from which they can be analysed. Opinions are important from both the customer’s and the manager’s point of view, and many customers base their decisions on reviews available on the Internet. Sentiment analysis is a multifaceted problem rather than a single problem. Texts for sentiment analysis come from various sources in diverse formats, and various pre-processing steps are needed before analysis. Sentiment analysis supports tasks such as sentiment classification, spam detection, usefulness measurement, subjectivity classification, and many more. Data acquisition and pre-processing are the most common sub-tasks required for text classification and sentiment analysis, and are shown in Fig. 2. The next subsection explains the different levels of sentiment analysis, i.e., document, sentence, and aspect level.

2.1 Levels of sentiment analysis

In general, sentiment analysis is classified into three levels: document level, sentence level, and entity/aspect level. Some studies also describe user-level and concept-level sentiment analysis, as shown in Fig. 3. Concept-level sentiment analysis emphasizes semantic analysis of the text using semantic networks (Cambria 2013). User-level sentiment analysis analyzes the opinions expressed in individual texts (what people think) (Tan et al. 2011). A brief explanation of these levels is presented below.

2.1.1 Document-level sentiment analysis

The main task of document-level sentiment analysis is to determine the overall opinion polarity of a document, such as a blog, tweet, movie review, institute review, product review, or a discussion of any issue. In terms of the definition of sentiment analysis, the objective is to determine the third component of the quintuple. The general framework of document-level sentiment analysis is shown in Fig. 4. Research articles on document-level sentiment analysis are summarized in Table 1.

For document-level sentiment analysis, Moraes et al. (2013) compared machine learning approaches such as Support Vector Machine (SVM), Neural Network, and Naïve Bayes on product reviews in the English language. Du et al. (2014) used a neural network and SVM on microblogs (box office) in the Chinese language. Geva and Zahavi (2014) used a neural network, decision tree, stepwise logistic regression, genetic algorithm, and SVM on stock market data and news counts in the English language. Xia et al. (2011) used Maximum Entropy, Naïve Bayes, and SVM on product reviews in the English language. Lin and He (2009) proposed a new unsupervised learning approach based on Latent Dirichlet Allocation (LDA), named joint sentiment topic. Li et al. (2007) proposed a framework named Dependency-Sentiment-LDA, in which, with the help of a Markov model, the sentiment of a word is assumed to depend on that of the previous word. In document-level sentiment analysis, the word sentiment information is consistent with the labeled document (Li et al. 2017), and the topics and sentiments are determined simultaneously for each document. Despite the research conducted in document-level sentiment analysis, areas such as opinion mining, usefulness, and opinion spam detection remain a challenge.

2.1.2 Sentence-level sentiment analysis

Sentence-level sentiment analysis is similar to document-level sentiment analysis, since a sentence can be regarded as a short document. The objective is to categorize the opinion expressed in each sentence. Before analysing the polarity of a sentence, one first determines whether the sentence is objective or subjective. If the sentence is subjective, its orientation or polarity (positive or negative) is then determined. The process of sentence-level sentiment analysis is shown in Fig. 5. Research studies on sentence-level sentiment analysis are summarized in Table 2.
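The two-stage process (subjectivity first, then polarity) can be sketched with a toy lexicon. This is a minimal illustration only: the word lists and the function name `classify_sentence` are assumptions made for the example, not a published resource or any of the surveyed methods, and a sentence is treated as subjective simply when it contains at least one lexicon word.

```python
import re

# Toy opinion lexicons (illustrative assumptions, not a published resource).
POSITIVE = {"good", "excellent", "clear", "great"}
NEGATIVE = {"bad", "poor", "terrible", "blurry"}


def tokenize(sentence):
    """Lower-case the sentence and keep alphabetic tokens only."""
    return re.findall(r"[a-z']+", sentence.lower())


def classify_sentence(sentence):
    """Stage 1: objective vs. subjective. Stage 2: polarity of subjective text."""
    tokens = tokenize(sentence)
    pos = sum(token in POSITIVE for token in tokens)
    neg = sum(token in NEGATIVE for token in tokens)
    if pos + neg == 0:
        return "objective"   # no opinion words found
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return "neutral"         # subjective, but the evidence is balanced


print(classify_sentence("This is a university"))
print(classify_sentence("The power backup of a power bank is excellent!"))
```

Real systems replace the lexicon lookup in stage 1 with a trained subjectivity classifier, but the control flow stays the same.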

The applications of sentence-level SA in opinion mining include multilingual and cross-lingual analysis, review spam detection, polarity detection, etc.

2.1.3 Aspect-level sentiment analysis

Classifying text at the sentence level or the document level provides valuable information in several applications, but sometimes that information is not sufficient for more advanced applications. To acquire finer-grained information from opinionated text, we need to go to the aspect level, which has received great research interest. The aspect level includes several variations, such as word-level (also known as entity-, attitude-, or feature-level) and concept-level sentiment analysis. In terms of the definition of sentiment analysis, aspect-level sentiment analysis determines the first three components of the quintuple (entity, aspect, sentiment) and categorizes the opinion with respect to particular aspects of entities. It aims to determine the particular targets (entities or aspects) and the corresponding polarities.

For example, “iPhone is made by Apple company. The iPhone’s picture quality is very clear”.

First, the comment is split into sentences: [‘iPhone is made by Apple company.’, ‘The iPhone’s picture quality is very clear.’].

Second, each sentence is split into words: [‘iPhone’, ‘is’, ‘made’, ‘by’, ‘Apple’, ‘company’, ‘.’] and [‘The’, ‘iPhone’s’, ‘picture’, ‘quality’, ‘is’, ‘very’, ‘clear’, ‘.’].

Next, each word is tagged by a POS tagger: [(‘iPhone’, ‘NN’), (‘is’, ‘VBZ’), (‘made’, ‘VBN’), (‘by’, ‘IN’), (‘Apple’, ‘NNP’), (‘company’, ‘NN’), (‘.’, ‘.’)] and [(‘The’, ‘DT’), (‘iPhone’s’, ‘JJ’), (‘picture’, ‘NN’), (‘quality’, ‘NN’), (‘is’, ‘VBZ’), (‘very’, ‘RB’), (‘clear’, ‘JJ’), (‘.’, ‘.’)].

The entity, aspect, and sentiment are then identified in each sentence. From the first sentence, entity: “iPhone”, aspect: “made by Apple company”, and sentiment: “neutral” are extracted. The sentiment is neutral because the sentence is objective and states a fact.

From the second sentence, entity: “iPhone” and aspect: “picture quality very clear” are extracted; this sentence expresses a sentiment because it is subjective in nature and reflects an opinion about the quality of the iPhone.

Now, a sentiment is assigned to each aspect based on its polarity, using a dictionary-based, statistical, lexicon-based, or other approach.

Finally, the sentence is classified as positive or negative based on the sentiment assigned at the aspect level.
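The walkthrough above can be approximated in a few lines of Python. This is a simplified sketch under stated assumptions: the aspect list and opinion lexicon are hypothetical toy resources, the regular expressions stand in for a real sentence splitter and tokenizer, and the POS-tagging step is omitted because aspects are matched against a fixed list instead of being discovered from tags.

```python
import re

# Hypothetical toy resources, for illustration only.
ASPECT_TERMS = ["picture quality", "battery", "screen"]
OPINION_LEXICON = {"clear": 1, "excellent": 1, "poor": -1, "blurry": -1}


def split_sentences(text):
    """Split on whitespace that follows sentence-ending punctuation."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    """Return words (keeping possessives such as iPhone's) and end punctuation."""
    return re.findall(r"[A-Za-z]+(?:'[a-z]+)?|[.!?]", sentence)


def aspect_sentiments(text):
    """Return (aspect, polarity) pairs for every known aspect found in the text."""
    results = []
    for sentence in split_sentences(text):
        tokens = [token.lower() for token in tokenize(sentence)]
        joined = " ".join(tokens)
        # Sentence score = sum of the lexicon polarities of its tokens.
        score = sum(OPINION_LEXICON.get(token, 0) for token in tokens)
        for aspect in ASPECT_TERMS:
            if aspect in joined:
                if score > 0:
                    label = "positive"
                elif score < 0:
                    label = "negative"
                else:
                    label = "neutral"
                results.append((aspect, label))
    return results


review = "iPhone is made by Apple company. The iPhone's picture quality is very clear."
print(aspect_sentiments(review))
```

The first sentence contributes nothing because it mentions no listed aspect, which mirrors its treatment as an objective statement in the walkthrough. Assigning the whole sentence score to every aspect in the sentence is a deliberate simplification; it breaks down when one sentence praises one aspect and criticizes another.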

Figure 6 summarizes the process of aspect-level SA, and current research work on aspect-level sentiment analysis is shown in Table 3.

The lack of annotated corpora at the feature level and the complicated ways in which sentiments appear are the main problems in aspect-level sentiment analysis (Ravi and Ravi 2015). The applications of aspect-level sentiment analysis in opinion mining include polarity determination, entity recognition, feature extraction, etc. The next section explains the different tasks, approaches, and methods of sentiment analysis, which use the different levels of sentiment analysis to achieve their objectives.

3 Different tasks of sentiment analysis

In general, sentiment analysis is used to perform multiple tasks. This article covers some important tasks of sentiment analysis, presented in Fig. 7: subjectivity analysis, spam review detection, degree of usefulness measurement, opinion summarization, aspect selection, sentiment lexicon creation, and opinion classification. Some tasks are further divided into sub-tasks; for example, opinion classification is divided into polarity extraction, cross-lingual and multi-lingual opinion analysis, and cross-domain opinion classification. This section discusses all the tasks and sub-tasks and the approaches, methods, or techniques applied in each, as shown in Fig. 7a, b. The applied methods are generally categorized into four approaches (lexicon-based, machine learning, deep learning, and hybrid), which are further classified into more specific approaches. This study presents the literature on the tasks of sentiment analysis and the techniques applied so that new researchers can become familiar with the state of the art in the field.

3.1 Subjectivity analysis

Subjectivity analysis deals with the recognition of “personal states”, a term that encompasses speculations, evaluations, opinions, emotions, sentiments, and beliefs. Sentences are divided into two categories: objective and subjective. An objective sentence contains factual information, such as “This is a university”, whereas a subjective sentence contains personal views, attitudes, feelings, or beliefs, such as “This is a good university”. Subjective sentences can take many forms, including speculations, allegations, suspicions, desires, opinions, and beliefs. The process of determining whether a given sentence is subjective or objective is known as subjectivity analysis. Subjectivity analysis is an interesting task that bridges the gap between many applications and fields. In the past decade, considerable research has been reported, and improvements in sentence subjectivity analysis are still being made. Determining the subjectivity of a sentence is more complex than determining its orientation (Chaturvedi et al. 2018). Improvement in subjectivity analysis leads directly to improvement in polarity determination. Figure 8 shows the process of subjectivity analysis of a sentence. Table 4 shows the research work in the field of subjectivity analysis.

The applications of subjectivity analysis in opinion mining are feature extraction, polarity determination, and sentence sentiment mining.

3.2 Spam review detection

With the increasing popularity of online reviews and e-commerce, an interested party may engage experts to write fake reviews with the intention of promoting a product or service. In Web 2.0, everyone is free to respond or express their feelings from anywhere in the world without disclosing their identity. Reviews are extremely valuable to customers. Here the interested party may be a manufacturer, dealer, service provider, political leader, market predictor, etc. Fake reviews are also referred to as false reviews, fraudulent reviews, or opinion spam. A spammer is a person who writes a fake review; to promote a low-quality product, for example, a spammer writes a false opinion aimed at customers. Finding fake reviews or opinion spam is a very difficult task in the field of opinion mining. The process of spam review detection is shown in Fig. 9, and research works are summarized in Table 5.

Opinion spam detection is one of the challenging tasks in the field of sentiment analysis, and further work is required to improve accuracy in identifying spam reviews. The main application of spam review detection in opinion mining is determining the genuine polarity of a product or activity in e-commerce.

3.3 Opinion summarization

Opinion summarization can be viewed as multi-document summarization (Sun et al. 2017). It focuses on the opinion part of documents and the corresponding sentiments towards the entity. The subjective information in a sentence contains opinions, feelings, and beliefs. One opinion is not sufficient for a decision; a large number of views on a particular thing is needed to analyse the opinion. As in traditional text summarization, the emphasis is on eliminating redundancy, but only the subjective part is mined. Figure 10 shows the process of opinion summarization. Table 6 summarizes research works in the area of opinion summarization.

Opinion summarization is a challenging area that requires attention to improve accuracy. Its applications are finding the overall polarity of documents and producing a summarized form of them.

3.4 Degree of usefulness measurement

The number of reviews on e-commerce sites and on social issues is increasing day by day. People examine the reviews and make their decisions based on review ratings. To promote their services and products, some managers hire third parties to write fake reviews, which may increase the sales of some products. At present, spam review detection and degree of usefulness measurement have gained considerable attention from researchers; both are sub-tasks of sentiment analysis. In spam detection, only the good reviews are considered and analysed, because professionals write fake reviews very skilfully to increase or reduce the sales of a product. The aim of usefulness measurement is to rank reviews according to their degree of usefulness, which can be expressed as a regression problem with features such as review length, term frequency-inverse document frequency (TF-IDF) weighting scores, sentiment words, review rating scores, POS tags, the timeliness of reviews, reviewers’ expertise, the subjectivity of reviews, review styles, and the social contexts of reviewers (Sun et al. 2017). The process of degree of usefulness measurement is shown in Fig. 11. Research on degree of usefulness measurement is summarized in Table 7.
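Of the features listed above, TF-IDF is the one with a simple closed form. The sketch below computes one common variant, relative term frequency multiplied by log(N / document frequency); other variants add smoothing or normalization, and the function name `tf_idf` and the two sample reviews are assumptions made for the example.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    weight = (count of term in doc / doc length) * log(N / docs containing term)
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


reviews = ["great battery", "great screen"]  # hypothetical reviews
for review, scores in zip(reviews, tf_idf(reviews)):
    print(review, scores)
```

A term that appears in every review, such as "great" here, receives weight zero, so the feature highlights the words that distinguish one review from the rest. Such weights would be one group of inputs to the regression model, alongside features like review length and rating.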

Review usefulness is a challenging task that still requires more attention to improve accuracy. The applications of the degree of review usefulness in opinion mining include market prediction, box office prediction, and many more.

3.5 Sentiment lexicon creation

3.5.1 Opinion lexica and corpora creation

A lexicon is a vocabulary of opinion words with corresponding strength values and opinion polarities. Lexicon creation starts with a primary set of words called opinion seed words; the list is then extended with the synonyms and antonyms of the seed words with the help of the WordNet dictionary (Ravi and Ravi 2015). This process continues until the list stops growing. Corpus creation starts with a seed list of sentiment words and searches a large corpus for additional sentiment words with context-specific orientations. There are three main approaches to collecting or compiling the list of opinion words: the manual (brute-force) approach, the corpus-based approach, and the dictionary-based approach. The research work on sentiment lexicon creation is summarized in Table 8, and the process is shown in Fig. 12.
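The dictionary-based expansion can be sketched as a breadth-first traversal in which synonyms inherit the polarity of the word they were reached from and antonyms receive the opposite polarity. In this sketch the `SYNONYMS` and `ANTONYMS` tables are a hypothetical toy thesaurus standing in for a dictionary such as WordNet, and the function name `expand_lexicon` is likewise an assumption.

```python
from collections import deque

# Hypothetical toy thesaurus standing in for a dictionary such as WordNet.
SYNONYMS = {
    "good": ["great", "fine"],
    "great": ["excellent"],
    "bad": ["poor"],
    "poor": ["awful"],
}
ANTONYMS = {
    "good": ["bad"],
    "excellent": ["awful"],
}


def expand_lexicon(seeds):
    """Grow {word: polarity} from seed words until no new word is found."""
    lexicon = dict(seeds)
    queue = deque(seeds)
    while queue:
        word = queue.popleft()
        polarity = lexicon[word]
        # Synonyms keep the polarity; antonyms flip it.
        neighbours = [(syn, polarity) for syn in SYNONYMS.get(word, [])]
        neighbours += [(ant, -polarity) for ant in ANTONYMS.get(word, [])]
        for neighbour, neighbour_polarity in neighbours:
            if neighbour not in lexicon:
                lexicon[neighbour] = neighbour_polarity
                queue.append(neighbour)
    return lexicon


print(expand_lexicon({"good": 1}))
```

The loop ends when the queue is empty, which is the point at which the list stops growing. A word keeps the polarity from its first discovery, so conflicting paths through the thesaurus are silently ignored; practical lexicon builders usually add a manual or statistical check for such cases.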

Researchers are still working on creating general lexicons and corpora that work for all applications, such as social media, e-commerce, and blogs.

3.6 Aspect selection

3.6.1 Feature selection for opinion classification

Feature selection is one of the most important tasks in opinion classification. Selecting and extracting features from text is the first step in an opinion classification problem. Text features include negation, opinion words and phrases, part of speech (POS), and term presence and frequency. Feature selection methods are divided into two sub-categories: lexicon-based methods and statistical methods. Lexicon-based methods require human annotation and start with a small set of words called ‘seed’ words; from these seed words, a larger lexicon is obtained through synonyms and antonyms. Statistical methods, which work automatically, are the most frequently used for feature selection. These techniques treat the text document either as a string or as a group of words (Bag of Words (BOW)). After the pre-processing steps, feature selection plays an important role in extracting good features. The most frequently used statistical methods for feature selection are Chi-square (χ²), Point-wise Mutual Information (PMI), Principal Component Analysis (PCA), Hidden Markov Model (HMM), Latent Dirichlet Allocation (LDA), etc. Figure 13 explains the process of aspect selection. Table 9 summarizes existing research work on aspect selection for opinion classification.
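As an illustration of a statistical method, the chi-square score of a term for a class can be computed from a 2×2 contingency table of document counts. The sketch below uses the standard formula χ² = N(AD − CB)² / ((A + C)(B + D)(A + B)(C + D)); the four labeled documents and the function name `chi_square` are hypothetical and serve only to make the example runnable.

```python
def chi_square(term, label, docs):
    """Chi-square association between a term and a class label.

    docs is a list of (text, label) pairs; documents are bags of words.
    """
    a = b = c = d = 0
    for text, doc_label in docs:
        has_term = term in text.lower().split()
        in_class = doc_label == label
        if has_term and in_class:
            a += 1          # term present, document in class
        elif has_term:
            b += 1          # term present, document in another class
        elif in_class:
            c += 1          # term absent, document in class
        else:
            d += 1          # term absent, document in another class
    n = a + b + c + d
    denominator = (a + c) * (b + d) * (a + b) * (c + d)
    if denominator == 0:
        return 0.0          # term or class never (or always) occurs
    return n * (a * d - c * b) ** 2 / denominator


# Hypothetical labeled reviews.
DOCS = [
    ("good clear picture", "pos"),
    ("good battery", "pos"),
    ("poor battery", "neg"),
    ("poor blurry picture", "neg"),
]

for term in ("good", "battery", "picture"):
    print(term, chi_square(term, "pos", DOCS))
```

Here "good" occurs only in positive documents and scores highest, while "battery" and "picture" are spread evenly across both classes and score zero, so a selector that keeps the top-scoring terms would retain the opinion word and drop the two aspect words.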

Some challenging tasks in feature detection, such as irony detection, sparsity, and polysemy detection, require more attention.
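As an illustration of statistical feature selection, the following minimal sketch scores bag-of-words terms with the Chi-square statistic computed from a 2x2 contingency table (term present or absent versus document in the class or not). The toy documents, the labels, and the function name `chi_square` are assumptions made for the example.

```python
def chi_square(term, docs, labels, target):
    """Chi-square score of `term` for class `target` from a 2x2 contingency table."""
    a = b = c = d = 0
    for tokens, label in zip(docs, labels):
        has_term = term in tokens
        in_class = label == target
        if has_term and in_class:
            a += 1          # term present, document in class
        elif has_term:
            b += 1          # term present, document not in class
        elif in_class:
            c += 1          # term absent, document in class
        else:
            d += 1          # term absent, document not in class
    n = a + b + c + d
    denom = (a + c) * (b + d) * (a + b) * (c + d)
    return 0.0 if denom == 0 else n * (a * d - c * b) ** 2 / denom


# Each document is a bag (set) of words; labels are invented for illustration.
docs = [
    {"great", "battery"},
    {"great", "screen"},
    {"poor", "battery"},
    {"poor", "screen"},
]
labels = ["pos", "pos", "neg", "neg"]

vocab = sorted(set().union(*docs))
scores = {t: chi_square(t, docs, labels, "pos") for t in vocab}
ranked = sorted(vocab, key=lambda t: scores[t], reverse=True)
print(ranked[:2])
```

In this toy corpus the opinion words "great" and "poor" receive the highest scores, while the aspect words "battery" and "screen" score zero because they occur equally often in both classes.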

3.7 Opinion classification

The main objective of opinion classification is to determine the sentiment orientation of a given text, that is, whether the text expresses a positive, negative, or neutral opinion towards its subject. The polarity classes can be binary (positive or negative), ternary (positive, negative, or neutral), or n-ary. Three families of techniques are available: machine learning, lexicon-based, and hybrid. The machine learning technique applies well-known machine learning algorithms to linguistic features. For text classification, machine learning techniques are divided into supervised and unsupervised learning methods. Supervised approaches are used when a large amount of labeled training data is available, whereas unsupervised approaches are used when labeled training data is difficult to obtain. Some frequently used machine learning methods are Support Vector Machine, Naïve Bayes, Maximum Entropy, Decision Tree, Neural Network, Bayesian Network, and rule-based classifiers. The lexicon-based approach depends on an opinion lexicon, a collection of known and precompiled opinion terms, which is used to analyse the text. It has two variants: the dictionary-based approach and the corpus-based approach. The dictionary-based approach starts from seed words and looks up their synonyms and antonyms in a dictionary. The corpus-based approach begins with a seed list of sentiment words and uses statistical or semantic methods to find other sentiment words, with context-specific orientations, in a large corpus. The hybrid technique combines the machine learning and lexicon-based approaches to find the polarity of the text (sentences, documents, etc.) towards the subject.
Opinion classification is also used to determine polarity in multi-lingual, cross-lingual, and cross-domain settings. The process of opinion classification is explained in Fig. 14. The current state of the art, techniques, and common datasets used for polarity determination are explained in Table 10.

Researchers are still working to determine the polarity of texts, documents, and sentences using machine learning, lexicon-based, and hybrid approaches along with feature extraction techniques. The main challenge in polarity determination is achieving good accuracy.
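To make the supervised machine learning route concrete, here is a minimal sketch of a multinomial Naïve Bayes polarity classifier with Laplace smoothing. It is one possible instance of the methods listed above, not the setup of any surveyed paper; the training reviews and the function names `train_naive_bayes` and `predict` are invented for illustration.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(docs, labels):
    """Estimate class counts and per-class word counts from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab


def predict(model, tokens):
    """Return the class with the highest log-posterior (Laplace smoothing)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n_docs / total_docs)            # log prior
        for w in tokens:
            if w in vocab:                               # skip unseen words
                count = word_counts[label][w] + 1        # add-one smoothing
                score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy labeled reviews (invented for illustration).
train_docs = [
    ["great", "phone"],
    ["love", "great", "screen"],
    ["poor", "battery"],
    ["awful", "poor", "screen"],
]
train_labels = ["pos", "pos", "neg", "neg"]

model = train_naive_bayes(train_docs, train_labels)
print(predict(model, ["great", "battery"]))
print(predict(model, ["poor", "screen"]))
```

A hybrid technique in the sense used above could, for example, add lexicon polarity counts as extra features to such a classifier.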

3.7.1 Cross-lingual and multi-lingual opinion classification

Sentiment resources, i.e., annotated corpora and sentiment lexicons, are crucial for determining opinion. However, most existing resources are written in English. Opinions are expressed in many languages across the world, each with a different degree of expressive power, which makes it difficult to achieve precise analysis for texts in languages such as Spanish, Arabic, and Japanese. Creating a sentiment lexicon or sentiment corpus for every language is very expensive (Dashtipour et al. 2016). In cross-lingual opinion mining, the machine is trained on a dataset in one language (the source language, which has reliable resources, e.g., English) and tested on a dataset in another language (the target or resource-poor language), whereas in multi-lingual opinion analysis the text itself contains a mix of languages (Singh and Sachan 2019). Cross-lingual and multi-lingual opinion analysis can be performed using two different approaches: the lexicon-based approach and the corpus-based approach. The generalized process of multi-lingual opinion classification is described in Fig. 15. The current state of the art, techniques, and common datasets used for cross-lingual and multi-lingual opinion analysis are explained in Table 11.

Cross-lingual and multi-lingual sentiment analysis requires more attention in areas such as code-mixed text, phonetic words, code-mixed social media text, and social media content (Lo et al. 2017).

3.7.2 Cross-domain opinion classification

Cross-domain sentiment analysis is one of the challenging and interesting problems in the field of sentiment analysis. Opinions are expressed differently in different domains: a word that expresses positive sentiment in one domain may express negative sentiment in another (“A Domain is a class that consists of different objects”). Consider two domains, “Hotel” and “Computer”, and the word “hot”. In the computer domain, in “The computer is too hot when it is working”, the word “hot” expresses negative sentiment. In the hotel domain, in “The shower has great hot water”, the word “hot” expresses positive sentiment. Because of such shifts, the performance of a system trained on one domain drops drastically when it is applied to another. Creating a corpus for every domain is not feasible, since it is a very time-consuming and costly process. Cross-domain sentiment analysis requires at least two domains: a source domain and a target domain. The training set is drawn from the source and target domains, and the classifier is trained on it. Once trained, the classifier is tested on the target domain and its accuracy is measured.

Let’s take the formal definition: \(D_{Src}^{l}\) denotes the labeled data of the source domain and \(D_{trg}^{l}\) denotes the labeled data of the target domain. The set of labeled data for the source domain \(D_{Src}^{l}\) is defined as:

\[D_{Src}^{l} = \{(O^{l}_{s1}, P_{1}), (O^{l}_{s2}, P_{2}), \ldots, (O^{l}_{sm}, P_{m})\}\]

where \(O^{l}_{s1} ,O^{l}_{s2} , \ldots ,O^{l}_{sm}\) are m sampled reviews from the source domain and the sentiment labels are denoted as P1, P2, …, Pm \(\in\) {+ 1, − 1}, where + 1 and − 1 denote the polarity, i.e., positive and negative sentiment respectively.

The set of labeled data for the target domain \(D_{trg}^{l}\) is defined as:

\[D_{trg}^{l} = \{(O^{l}_{t1}, Q_{1}), (O^{l}_{t2}, Q_{2}), \ldots, (O^{l}_{tn}, Q_{n})\}\]

where \(O^{l}_{t1} ,O^{l}_{t2} , \ldots ,O^{l}_{tn}\) are n sampled reviews from the target domain and the sentiment labels are denoted as Q1, Q2, …, Qn \(\in\) {+ 1, − 1}, where + 1 and − 1 denote the polarity, i.e., positive and negative sentiment respectively. In addition to the labeled data sets in the source and target domains, some unlabeled data also exist in both domains.

The set of unlabeled data for the source domain \(D_{Src}^{U}\) is denoted as:

\[D_{Src}^{U} = \{O^{U}_{s1}, O^{U}_{s2}, \ldots, O^{U}_{si}\}\]

where \(O^{U}_{s1} ,O^{U}_{s2} , \ldots ,O^{U}_{si}\) are i unlabeled sampled reviews.

The set of unlabeled data for the target domain \(D_{trg}^{U}\) is denoted as:

\[D_{trg}^{U} = \{O^{U}_{t1}, O^{U}_{t2}, \ldots, O^{U}_{tj}\}\]

where \(O^{U}_{t1} ,O^{U}_{t2} , \ldots ,O^{U}_{tj}\) are j unlabeled sampled reviews. The task of cross-domain opinion analysis is to train an n-ary classifier on the combination of labeled and unlabeled datasets {\(D_{Src}^{l}\),\(D_{trg}^{l}\), \(D_{Src}^{U}\), \(D_{trg}^{U}\)} available in the source and target domains.
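The four data sets of the formal definition map naturally onto a small container type. The sketch below is only an illustration of that structure: the class name `CrossDomainData`, its field names, and the unlabeled example reviews are assumptions, while the two labeled reviews reuse the “hot” example from the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

LabeledReview = Tuple[str, int]   # (review text, polarity in {+1, -1})


@dataclass
class CrossDomainData:
    """Labeled and unlabeled reviews for a source and a target domain."""
    src_labeled: List[LabeledReview] = field(default_factory=list)
    trg_labeled: List[LabeledReview] = field(default_factory=list)
    src_unlabeled: List[str] = field(default_factory=list)
    trg_unlabeled: List[str] = field(default_factory=list)

    def validate(self) -> None:
        """Check that every label is a valid binary polarity."""
        for _, polarity in self.src_labeled + self.trg_labeled:
            if polarity not in (+1, -1):
                raise ValueError(f"polarity must be +1 or -1, got {polarity}")


data = CrossDomainData(
    src_labeled=[("The computer is too hot when it is working", -1)],
    trg_labeled=[("The shower has great hot water", +1)],
    src_unlabeled=["The fan is loud"],        # invented unlabeled review
    trg_unlabeled=["The room was quiet"],     # invented unlabeled review
)
data.validate()
print(len(data.src_labeled), len(data.trg_unlabeled))
```

A cross-domain method then differs mainly in which of the four fields it consumes; several of the baselines in the next section use no labeled target data at all.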

3.7.3 Basic terminologies

Pre-processing: Sentiment analysis requires many pre-processing steps to structure the text data and extract features. Data is collected from many sources, and the text needs to be pre-processed before use. Common pre-processing steps for text data include stop word removal, tokenization, parsing, part-of-speech (POS) tagging, stemming, word segmentation, and feature extraction. Some general pre-processing techniques are explained below.

Stop words do not contribute to the analysis of the text, so they are removed during pre-processing. Examples of stop words are “a”, “the”, “as”, and “on”. Tokenization is the process of breaking a sentence into words, phrases, symbols, or other meaningful tokens, eliminating some punctuation along the way. In English, it is trivial to divide words at the spaces. Tokenization is one of the most fundamental techniques for natural language processing tasks. Tokens make it possible to extract additional information such as named entities or opinion phrases. Many tools are available for tokenization, such as the OpenNLP Tokenizer and the Stanford Tokenizer.

Parsing and part-of-speech tagging are methods that analyze the syntactic and lexical information of the text. POS tagging assigns the appropriate POS tag to each word and is vital for natural language processing. POS tags such as noun, adjective, verb, and adverb, as well as combinations of two consecutive words such as adverb-adjective and adverb-verb, n-grams, etc., are extracted using the parser. Parsing is an important phase because it yields the sentiment words as output. Sentence parsing involves assigning different POS tags to the given text.

Stemming reduces a word to its root form, disregarding the different parts of speech of the word. Because textual data is noisy and sparse, it often needs extensive feature extraction, which is one of the important steps in pre-processing.

Word segmentation is used when the text has no explicit word boundary markers, as in Japanese or Chinese. It is a sequential labeling problem. Several tools and approaches are available for this task, such as Stanford Segmenter, THULAC, ICTCLAS, Conditional Random Fields (CRFs), maximum-entropy Markov models, and Hidden Markov models.

Some publicly available toolkits for data pre-processing are summarized in Table 12.
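The first three steps (tokenization, stop word removal, and stemming) can be chained into a small pipeline. The sketch below uses only the standard library; the stop word list is a tiny illustrative sample, and the suffix-stripping `stem` function is a deliberately crude assumption rather than a real stemmer such as Porter's.

```python
import re

STOP_WORDS = {"a", "the", "as", "on", "is", "and", "of"}   # small illustrative list
SUFFIXES = ("ing", "ed", "es", "s")                          # crude, not Porter's rules


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens, dropping punctuation."""
    return re.findall(r"[a-z]+", text.lower())


def remove_stop_words(tokens):
    """Drop tokens that appear in the stop word list."""
    return [t for t in tokens if t not in STOP_WORDS]


def stem(token):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    """Tokenize, remove stop words, then stem each remaining token."""
    return [stem(t) for t in remove_stop_words(tokenize(text))]


print(preprocess("The screens cracked on the charging phone."))
# prints ['screen', 'crack', 'charg', 'phone']
```

The truncated stem "charg" shows why stems need not be dictionary words; POS tagging and word segmentation are not covered here and would normally rely on one of the toolkits named above.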

3.7.4 Languages and available datasets

Table 13 lists some well-known datasets, data sources, sentiment lexicons, and opinion corpora in the field of sentiment analysis. These datasets are used to accomplish different tasks in sentiment analysis. English is the most frequently used language across the datasets, owing to the availability of resources. Research on non-English languages is ongoing, and creating lexicons, corpora, and resources for different languages remains a very challenging task. Non-English languages include Chinese, German, Hindi, Spanish, French, etc.

4 Baseline methods and techniques for cross-domain opinion classification

This section outlines the early baseline methods and techniques for cross-domain opinion classification. Transfer learning and domain adaptation (or knowledge adaptation) play an important role in this field. In cross-domain opinion classification, a machine is trained on the available labeled and unlabeled data of the source and target domains and then tested on a different domain to check whether it still works properly. Most of the early articles used the Amazon multi-domain dataset, which consists of four domains, Kitchen, Electronics, DVDs, and Books, with 89,478, 104,027, 179,879, and 188,050 features respectively. The key techniques and approaches for cross-domain opinion classification are shown in Fig. 16.
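With four domains, training on one domain and testing on another yields twelve ordered source-target pairs. The following minimal sketch enumerates them; it assumes a deliberately trivial majority-label baseline trained on labeled source data only, and the toy reviews, `train_majority`, and `evaluate_pairs` are all invented for illustration rather than taken from the surveyed papers.

```python
from itertools import permutations

DOMAINS = ["books", "dvd", "electronics", "kitchen"]


def train_majority(examples):
    """Hypothetical baseline: remember the most frequent label in the source data."""
    labels = [label for _, label in examples]
    return max(sorted(set(labels)), key=labels.count)


def accuracy(model, examples):
    """Share of examples whose label equals the single label the baseline predicts."""
    return sum(label == model for _, label in examples) / len(examples)


def evaluate_pairs(data):
    """Train on each source domain and test on every other (target) domain."""
    results = {}
    for source, target in permutations(DOMAINS, 2):
        model = train_majority(data[source])
        results[(source, target)] = accuracy(model, data[target])
    return results


# Toy labeled reviews per domain (invented for illustration).
data = {
    "books": [("gripping plot", 1), ("great read", 1), ("dull story", -1)],
    "dvd": [("sharp picture", 1), ("boring film", -1), ("bad sound", -1)],
    "electronics": [("fast laptop", 1), ("long battery life", 1), ("crisp screen", 1)],
    "kitchen": [("blunt knife", -1), ("leaky kettle", -1), ("rusty pan", -1)],
}

results = evaluate_pairs(data)
print(len(results))
print(results[("books", "electronics")])
```

Any of the adaptation techniques discussed below could be substituted for `train_majority`, typically with an extra argument for the unlabeled target data.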

4.1 Structural correspondence learning (SCL) technique

The SCL technique was introduced by:

- Blitzer et al. (2006)
  - Approach: Structural correspondence learning, support vector machine, part-of-speech tagging, and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: A structural correspondence learning algorithm is introduced to induce correspondences among features from different domains. Its key step is to identify correspondences between features through their associations with pivot features. Pivot features are features that behave in the same way for discriminative learning in both domains. In their experiment, the authors used unlabeled data from the source and target domains and labeled data from the source domain. On this dataset, structural correspondence learning outperformed both the semi-supervised and the supervised learning approaches. This work is extended by Blitzer et al. (2007).

- Blitzer et al. (2007)
  - Approach: Structural correspondence learning with mutual information (unigram or bigram and domain label), support vector machine, part-of-speech tagging, and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: Structural correspondence learning depends on the selection of pivot features, and poorly selected pivot features directly degrade the performance of the classifier. To overcome this problem, the authors extend the algorithm to structural correspondence learning with mutual information (SCL-MI). SCL-MI is more suitable for cross-domain opinion classification than plain SCL because it selects the top pivot features using the mutual information between a domain label and unigram or bigram features. To measure the loss caused by adapting from one domain to another, they evaluated the A-distance. The A-distance is measured on unlabeled data and helps to quantify the divergence that affects classification accuracy. Most recently, the concept of Blitzer et al. (2006) was borrowed by Yu and Jiang (2016).

- Yu and Jiang (2016)
  - Approach: Neural network, sentence embeddings, deep learning (convolutional neural networks and recurrent neural networks), Movie dataset (Pang and Lee 2004; Footnote 1), Movie dataset (Socher et al. 2013; Footnote 2), Digital products (Camera, MP3) (Hu and Liu 2004; Footnote 3), and Laptop and Restaurant from SemEval (2015) (English language)
  - Corpora: Five benchmark product review datasets, word embeddings from word2vec (Footnote 4)
  - Explanation: For domain adaptation, the authors induced a sentence embedding based on two auxiliary tasks (Sequential-auxiliary and Joint-auxiliary). The experiments were performed on five benchmark datasets, and the proposed joint method outperformed several baseline methods.
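Pivot selection by mutual information, as described for SCL-MI above, can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes the label in question is the sentiment label of the source-domain reviews, it requires a pivot to occur at least `min_count` times in both domains, and the toy reviews and function names are invented.

```python
import math
from collections import Counter


def mutual_information(feature, docs, labels):
    """Mutual information (in nats) between feature presence and the label."""
    n = len(docs)
    joint = Counter((feature in doc, label) for doc, label in zip(docs, labels))
    mi = 0.0
    for (present, label), count in joint.items():
        p_xy = count / n
        p_x = sum(c for (p, _), c in joint.items() if p == present) / n
        p_y = sum(c for (_, l), c in joint.items() if l == label) / n
        mi += p_xy * math.log(p_xy / (p_x * p_y))
    return mi


def select_pivots(src_docs, src_labels, trg_docs, k, min_count=2):
    """Pick the k features frequent in both domains with highest MI to the source label."""
    src_df = Counter(f for doc in src_docs for f in doc)   # document frequencies
    trg_df = Counter(f for doc in trg_docs for f in doc)
    shared = [f for f in src_df if src_df[f] >= min_count and trg_df[f] >= min_count]
    shared.sort(key=lambda f: mutual_information(f, src_docs, src_labels), reverse=True)
    return shared[:k]


# Labeled source reviews (books) and unlabeled target reviews (kitchen), as word sets.
src_docs = [{"excellent", "plot"}, {"excellent", "read"}, {"poor", "plot"}, {"poor", "read"}]
src_labels = [1, 1, -1, -1]
trg_docs = [{"excellent", "blade"}, {"poor", "blade"}, {"excellent", "sharp"}, {"poor", "leak"}]

pivots = select_pivots(src_docs, src_labels, trg_docs, k=2)
print(sorted(pivots))
```

Here "excellent" and "poor" qualify as pivots because they are frequent in both domains and predictive of the source label, whereas "plot" and "read" never occur in the target domain. The later SCL steps (training pivot predictors and projecting features) are not shown.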

4.2 Spectral feature alignment (SFA) technique

The spectral feature alignment technique was introduced by:

- Pan et al. (2010)
  - Approach: Spectral feature alignment and Amazon multi-domain datasets, Yelp and CitySearch websites (English language)
  - Corpora: Kitchen, DVDs, Electronics, Books, video game, electronics and software from Amazon; hotel from Yelp and CitySearch
  - Explanation: Proposed a new algorithm, spectral feature alignment (SFA), to bridge the gap between two different domains. Cross-domain sentiment data contain two categories of words: domain-specific words and domain-independent words. SFA aligns domain-specific words collected from different domains into unified clusters, with domain-independent words working as a bridge. The proposed framework consists of graph construction and the spectral feature alignment algorithm, which together reduce the gap between the domains. The bipartite graph is constructed from co-occurrence information between domain-independent words and domain-specific words. A spectral clustering algorithm is then applied to the bipartite graph to co-align domain-independent and domain-specific words into unified word clusters and to minimize the mismatch between the domain-specific words of both domains. SFA outperformed existing approaches such as SCL. This work was later extended by Lin et al. (2014).

- Lin et al. (2014)
  - Approach: Spectral feature alignment, support vector machine, taxonomy-based regression model (TBRM), cosine function, and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, Electronics, DVDs, and Books
  - Explanation: Introduced two approaches, a taxonomy-based regression model (TBRM) and a cosine function, to choose the most similar models based on the target node. They also utilized a support vector machine classifier, a domain adaptation algorithm (spectral feature alignment), and a weight adjustment technique. The experimental results showed that the proposed approaches outperformed the baseline approaches. More recently in cross-domain opinion classification:

- Deshmukh and Tripathy (2018)
  - Approach: Modified maximum entropy for classification, bipartite graph clustering, POS + unigram, and Amazon Product Review (English language)
  - Corpora: Kitchen, Electronics, DVDs, and Books
  - Explanation: Utilized a semi-supervised approach, combining bipartite graph clustering with modified maximum entropy (entropy enhanced with a modified increment quantity), to extract and classify opinion words from one domain (using a set of labeled lexicon entries from the source domain) and to analyze the opinion words of another domain (labeled and unlabeled data from the target domain). The methodology has two phases. In the first phase, the datasets are pre-processed (part-of-speech tagging using the Stanford parser). In the second phase, a classifier (modified maximum entropy, applied to the opinions and to all words tagged during POS tagging) and clustering (using the bipartite graph) are applied to the datasets. The experiments used reviews from four product domains (DVD, Books, Kitchen appliances, and Electronics), with F-measure and accuracy as performance measures. Across four experiments, the proposed approach performed better than the baseline methods and achieved accuracy between 70% and 88.35%.
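The graph construction step that SFA relies on can be sketched as a co-occurrence count between the two word categories. This minimal example covers only the bipartite graph; the spectral clustering step is omitted, and the set of domain-independent words, the toy reviews, and the function name `build_bipartite_graph` are assumptions made for illustration.

```python
from collections import defaultdict


def build_bipartite_graph(docs, domain_independent):
    """Count co-occurrences between domain-specific and domain-independent words."""
    weights = defaultdict(int)   # (domain-specific word, domain-independent word) -> count
    for tokens in docs:
        pivots = tokens & domain_independent      # bridge words in this review
        specific = tokens - domain_independent    # remaining, domain-specific words
        for s in specific:
            for p in pivots:
                weights[(s, p)] += 1
    return dict(weights)


# Hypothetical domain-independent words and reviews from two domains, as word sets.
domain_independent = {"good", "bad"}
docs = [
    {"good", "compact", "sharp"},      # electronics
    {"bad", "blurry"},                 # electronics
    {"good", "realistic", "hooked"},   # video games
    {"bad", "boring"},                 # video games
    {"good", "sharp"},                 # electronics
]

graph = build_bipartite_graph(docs, domain_independent)
print(graph[("sharp", "good")])
```

Domain-specific words from different domains that attach to the same domain-independent word, such as "sharp" and "hooked" with "good", are the ones a subsequent spectral clustering step would tend to place in the same cluster.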

4.3 Joint sentiment-topic (JST) technique

- He et al. (2011)
  - Approach: Modified joint sentiment-topic model, maximum entropy from MALLET, bag of words, Amazon multi-domain datasets, and MPQA subjectivity lexicon (English language)
  - Corpora: Kitchen, Electronics, DVDs, and Books
  - Explanation: Introduced a modified joint sentiment-topic (JST) model that incorporates word polarity. The JST model is an extension of Latent Dirichlet Allocation (LDA) that extracts opinion and topic simultaneously from text. It is a probabilistic model based on polarity-bearing topics, which are used to augment the feature space, and its learning relies on prior information about domain-independent polarity words. Using the JST approach, the authors performed polarity word extraction on the combined datasets and transfer learning (domain adaptation) on the Amazon multi-domain datasets. The experimental results showed that the proposed JST method outperforms structural correspondence learning on average and achieves results comparable to spectral feature alignment. The JST model was further improved by He et al. (2013).

- He et al. (2013)
  - Approach: Dynamic joint sentiment-topic model, expectation–maximization (EM), part-of-speech tagging, unigrams + phrases, Mozilla Add-ons web site (Footnote 5), and MPQA subjectivity lexicon (English language)
  - Corpora: Personas Plus, Fast Dial, Echofon for Twitter, Firefox Sync, Video DownloadHelper, and Adblock Plus
  - Explanation: Proposed the dynamic joint sentiment-topic model (dJST) to overcome two limitations of the JST model: its assumption of static word co-occurrence patterns in text and its difficulty in fitting large-scale data. The proposed approach allows current and recurrent interests to be detected and tracked, along with shifts in topic and sentiment. The dJST model is updated with newly arrived data through online inference procedures based on the expectation–maximization (EM) algorithm. Both topic and sentiment dynamics are captured by assuming that the current sentiment-topic-specific word distributions are generated from the word distributions at previous epochs. To obtain information on these dependencies, the authors used three different approaches: a sliding window, a skip model, and a multiscale model. The experiments showed that both the skip model and the multiscale model are better than the sliding window for sentiment classification.

4.4 Active learning and deep learning approach

Active and deep learning techniques select the data from which they learn, so that they can perform effectively with less training. Active learning is a special case of semi-supervised machine learning in which the learner can interactively query an information source to obtain the desired outputs at new data points. In the cross-domain setting, active learning uses the source-domain information to acquire additional labeled target-domain data. Active learning involves three types of scenarios (pool-based sampling, stream-based selective sampling, and membership query synthesis) together with query strategies. Deep learning, on the other hand, is applied as an unsupervised approach that uses an unlabeled dataset to mine good features and obtain meaningful sentiments. Very few research studies in cross-domain opinion classification have used active and deep learning. Some of them are:

- Li et al. (2013)
  - Approach: Query-by-Committee (QBC), label propagation (LP), bag of words (unigram and bigram), maximum entropy (ME), Mallet Toolkit (Footnote 6), and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: Introduced an active learning approach that incorporates Query-by-Committee (QBC) for sentiment classification and informative sample selection. Two classifiers are trained, a source classifier on the source-domain labeled data and a target classifier on the target-domain labeled data, while fully exploiting the unlabeled data in the target domain through the proposed label propagation (LP) approach; QBC is then used to select informative samples. The experiments covered four product domains: Kitchen, Electronics, DVDs, and Books. The proposed approach outperformed the state of the art. The active learning approach was subsequently used by Tsai et al. (2014).

- Tsai et al. (2014)
  - Approach: Query-by-Committee (QBC), bag of words (unigram and bigram), and Chinese language
  - Explanation: Used an active learning approach that incorporates Query-by-Committee (QBC) to identify opinion words.

- Glorot et al. (2011)
  - Approach: Deep learning, Stacked Denoising Autoencoder (SDA) with rectifier units, linear SVM with squared hinge loss, and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: For domain adaptation in opinion classification, the authors utilized a deep learning approach based on Stacked Denoising Autoencoders with sparse rectifier units; a linear support vector machine is then trained on the transformed labeled data of the source domain. The experimental results show that the proposed approach outperforms the state of the art at the time, with SCL, SFA, and MCT as the compared methods. The deep learning approach was later utilized by Nozza et al. (2016).

- Nozza et al. (2016)
  - Approach: Deep learning, marginalized Stacked Denoising Autoencoder (mSDA), ensemble learning methods (Bagging, Boosting, Random SubSpace, and Simple Voting), and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: Proposed a new framework based on deep learning and ensemble methods for domain adaptation. In this framework, deep learning is used to obtain high-level cross-domain features, and ensemble learning methods are used to minimize the cross-domain generalization error. The experiments were performed on the Amazon multi-domain datasets, and the proposed approach outperformed the state-of-the-art approaches at the time. Deep learning was further used for domain adaptation by Long et al. (2016).

- Long et al. (2016)
  - Approach: Deep learning, Transfer Denoising Autoencoder (TDA), deep neural networks, multi-kernel maximum mean discrepancy (MK-MMD), TF-IDF, Amazon multi-domain datasets, Email Spam Filtering Dataset (Footnote 7), Newsgroup Classification Dataset (Footnote 8), and Visual Object Recognition Dataset (English language)
  - Corpora: Kitchen, DVDs, Electronics, Books, public and user email, Amazon, Webcam, DSLR, and Caltech-256
  - Explanation: The proposed framework outperformed state-of-the-art methods on different adaptation tasks such as visual object recognition, newsgroup content categorization, email spam filtering, and sentiment polarity prediction on multi-domain datasets.
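The Query-by-Committee idea used in the active learning studies above can be illustrated with vote entropy, one common disagreement measure. The sketch is not the method of Li et al. (2013): the two keyword-based committee members are hypothetical stand-ins for a source-domain and a target-domain classifier, and the pool sentences are invented.

```python
import math
from collections import Counter


def vote_entropy(votes):
    """Entropy of the committee's label votes; higher means more disagreement."""
    total = len(votes)
    return -sum((c / total) * math.log(c / total) for c in Counter(votes).values())


def select_queries(committee, pool, k):
    """Return the k unlabeled samples on which the committee disagrees most."""
    scored = [(vote_entropy([clf(x) for clf in committee]), x) for x in pool]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [x for _, x in scored[:k]]


def source_clf(text):
    """Hypothetical classifier shaped by a computer domain: 'hot' is negative."""
    return -1 if "hot" in text or "dirty" in text else +1


def target_clf(text):
    """Hypothetical classifier shaped by a hotel domain: 'hot' is positive."""
    return +1 if "hot" in text or "clean" in text else -1


pool = ["great hot water", "clean room", "dirty room"]
queries = select_queries([source_clf, target_clf], pool, k=1)
print(queries)
# prints ['great hot water']
```

The selected sample is the one whose label the two classifiers dispute, which is exactly the kind of review worth sending to a human annotator in the target domain.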

4.5 Topic modeling

Topic modeling approaches, which use latent semantic indexing and clustering techniques, are employed to reduce the high dimensionality of a term-document matrix to a low-dimensional representation. Some research in this category is:

- Wu and Tan (2011)
  - Approach: SentiRank algorithm, manifold-ranking algorithm, bag of words, Chinese text POS tool ICTCLAS (Footnote 9), and Chinese domain-specific datasets Book (Footnote 10), Hotel (Footnote 11), and Notebook (Footnote 12) (Chinese language)
  - Corpora: Book (Footnote 13), Hotel (Footnote 14), and Notebook (Footnote 15)
  - Explanation: To address domain adaptation in sentiment analysis, the authors proposed a two-stage framework in which the first stage is “building a bridge” (by applying the SentiRank algorithm) and the second stage is “following the structure” (by employing the manifold-ranking process). In the first stage, they build a bridge that collects some confidently labeled data from the target data and reduces the gap between the source domain and the target domain. In the second stage, they use the manifold-ranking algorithm and its scores to exploit the intrinsic structure collectively revealed by the target domain and to label the target-domain data. For the experiment, they used Chinese domain-specific datasets from the Book, Hotel, and Notebook domains and compared the proposed framework with baseline methods (Proto, transductive SVM (TSVM), the SentiRank algorithm, expectation–maximization (EM) based on Proto, EM based on SentiRank, and Manifold based on Proto), obtaining comparable results. Later in topic modeling:

- Roy et al. (2012)
  - Approach: Online Streaming Latent Dirichlet Allocation (OSLDA), Learning Transfer Graph Spectra, SocialTransfer (transfer learning from social streams), bag of words, and microblogs (English language)
  - Corpora: YouTube and NIST Twitter dataset
  - Explanation: Proposed SocialTransfer, a new framework for cross-domain opinion classification based on social streams (by employing Online Streaming Latent Dirichlet Allocation (OSLDA)). SocialTransfer is used in numerous multimedia applications to acquire knowledge from cross-domain data. The experiment considered real-world large-scale datasets, namely 10.2 million tweets from the NIST Twitter dataset (the source domain) and 5.7 million tweets from YouTube (the target domain), and SocialTransfer outperformed traditional learners significantly. The properties and common structure shared, directly and indirectly, across different domains were further examined by Yang et al. (2013).

- Yang et al. (2013)
  - Approach: Probabilistic Link-Bridged Topic (LBT) model, expectation–maximization (EM), Probabilistic Latent Semantic Analysis (PLSA), support vector machine, Global domain (Footnote 16), and scientific research papers (English language)
  - Corpora: Industry Sectors dataset (topics include a computer science research papers dataset covering data structures, encryption and compression, networking, operating systems, machine learning, etc.)
  - Explanation: Proposed a new model named Link-Bridged Topic (LBT) for cross-domain transfer learning. The model first identifies the direct or indirect correlations, properties, and common structure among the documents by using an auxiliary link network. It then simultaneously combines the link structures and the content information into a unified latent topic model. The aim of LBT is to bridge the gap across different domains. The experiment considered two different domains, scientific research paper datasets and web page datasets, and the proposed model significantly improved generalization performance. Work in topic modeling was further extended, indirectly, by Zhao and Mao (2014).

- Zhao and Mao (2014)
  - Approach: Supervised Adaptive-transfer Probabilistic Latent Semantic Analysis (SAtPLSA), Probabilistic Latent Semantic Analysis (PLSA), expectation–maximization (EM), 20 Newsgroups (Footnote 17), and Reuters-21578 (Footnote 18) (English language)
  - Corpora: 20 subcategories of newsgroups and Reuters-21578 (Footnote 19)
  - Explanation: Introduced a new model named Supervised Adaptive-transfer Probabilistic Latent Semantic Analysis (SAtPLSA) to overcome two issues in knowledge transfer: partial utilization of the source domain’s labeled information and failure to exploit the source domain’s knowledge in the later stage of the training process. It is an extended version of Probabilistic Latent Semantic Analysis (PLSA). The model parameters are learned with the expectation–maximization (EM) approach. The experiments considered nine benchmark datasets (from 20 Newsgroups and Reuters-21578) and compared the proposed approach with five state-of-the-art domain adaptation approaches (Partially Supervised Cross-Collection LDA (PSCCLDA), Collaborative Dual-PLSA (CDPLSA), Topic-bridge PLSA (TPLSA), Spectral Feature Alignment (SFA), and Topic Correlation Analysis (TCA)) and two classical supervised learning methods (Logistic Regression (LR) and Support Vector Machines (SVM)), with effective results. Further in topic modeling:

- Zhou et al. (2015)
  - Approach: Topical correspondence transfer (TCT), Support Vector Machine (SVM), bag of words (unigram and bigram), and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: Proposed a new algorithm named Topical correspondence transfer (TCT) to bridge the gap between different domains when labeled data is available only in the source domain. TCT assumes that there exists a set of shared topics and a set of domain-specific topics for the target and source domains. To reduce the gap between the domains with the help of the shared topics, TCT learns domain-specific information from different domains into unified topics. The experiments were performed on the Amazon multi-domain datasets, and the results of TCT were compared with SCL, SFA, and NMTF for cross-domain opinion classification. TCT achieved significant improvements over these state-of-the-art methods. Later in topic modeling:

- Liang et al. (2016)
  - Approach: Latent sentiment factorization (LSF), A Library for Support Vector Machines (LIBSVM) (Footnote 20), unigram and bigram, Word2Vec (Footnote 21), probabilistic matrix factorization, and Amazon multi-domain datasets (English language)
  - Corpora: Kitchen, DVDs, Electronics, and Books
  - Explanation: Proposed a new algorithm based on probabilistic matrix factorization, named Latent sentiment factorization (LSF), to capture the opinion associations of words more efficiently and to bridge the gap between the domains in cross-domain opinion classification. LSF first maps the documents and the words of the source and target domains into a unified two-dimensional space based on domain-shared words; it then uses the sentiment polarities of labeled source-domain documents and prior opinion information about words to constrain the latent space. In their experiments, the authors used the Amazon multi-domain datasets, and the proposed approach performed well compared with five baseline techniques: NoTransf, Upperbound, SCL, SFA, and TCT. Most recently the work was extended by Wang et al. (2018a, b).

-

-

Approach: Sentiment Related Index (SRI), pointwise mutual information (PMI), Support Vector Machine (SVM), Unigram and bigram, SentiRelated algorithm and RewData, DoubanData datasets (Chinese language)

-

Corpora: Rew Data (Computer, Hotel, Education), Douban data (Books, Music, and Movie)Footnote 22 and sentiment lexicons (NTUSD),Footnote 23 HowNetFootnote 24

-

Explanation: To measure the correlation between different lexical features in a specific domain, the authors created the Sentiment Related Index (SRI) and, based on it, proposed a new algorithm named SentiRelated. With this approach, they bridged the gap between the source and target domains and validated it on two Chinese-language datasets (RewData and DoubanData). The experimental results showed that the SentiRelated algorithm performs well in analyzing opinion polarity.

-
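The entry above lists pointwise mutual information (PMI) among its building blocks. The survey does not give the definition of SRI, so the following is only a minimal sketch of PMI between a word and a polarity label, estimated from document-level co-occurrence counts. The function name `pmi_scores` and the toy reviews are hypothetical and are not taken from the surveyed work.

```python
import math
from collections import Counter


def pmi_scores(docs, labels):
    """Return {(word, label): PMI in bits} from document-level co-occurrence.

    docs   : list of token lists (one list per document)
    labels : list of polarity labels, aligned with docs
    """
    n_docs = len(docs)
    word_count = Counter()          # number of documents containing the word
    label_count = Counter(labels)   # number of documents carrying the label
    joint_count = Counter()         # number of documents with word AND label

    for tokens, label in zip(docs, labels):
        for word in set(tokens):    # count each word once per document
            word_count[word] += 1
            joint_count[(word, label)] += 1

    scores = {}
    for (word, label), n_joint in joint_count.items():
        p_joint = n_joint / n_docs
        p_word = word_count[word] / n_docs
        p_label = label_count[label] / n_docs
        scores[(word, label)] = math.log2(p_joint / (p_word * p_label))
    return scores


# Hypothetical toy reviews, for illustration only.
docs = [["good", "screen"], ["bad", "screen"],
        ["good", "battery"], ["bad", "battery"]]
labels = ["pos", "neg", "pos", "neg"]
scores = pmi_scores(docs, labels)
```

In this toy data, a polarity word such as "good" receives a positive PMI with the "pos" label, while a domain word such as "screen", which occurs equally often under both labels, receives a PMI of zero.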

4.6 Thesaurus-based techniques

To transfer knowledge across domains, a thesaurus-based approach was introduced in cross-domain sentiment classification.

-

Bollegala et al. (2013)

-

Approach: Sentiment Sensitive Thesaurus (SST), Query Expansion, Pointwise Mutual Information (PMI), L1 regularization logistic regression,Footnote 25 POS tagging+ (Unigram and bigram), rating information and Amazon multi-domain datasets (English Language)

-

Corpora: Kitchen, DVDs, Electronics and Books

-

Explanation: Proposed a new approach for cross-domain sentiment classification named the Sentiment Sensitive Thesaurus (SST). First, the authors used labeled data from the source domain and unlabeled data from both the source and target domains to create a sentiment-sensitive distributional thesaurus. They then used the thesaurus to expand the feature vectors (query expansion) at training and test time for an L1-regularized logistic regression-based binary classifier. The proposed approach outperformed numerous previous cross-domain approaches and baseline methods in both multi-source and single-source domain adaptation settings, and with both supervised and unsupervised domain adaptation approaches. Moreover, the authors compared the proposed approach with a lexical resource of word polarity, SentiWordNet,Footnote 26 and showed that the created thesaurus accurately picks up words that express similar opinions. Further, Sanju and Mirnalinee (2014) enhanced the work of Bollegala et al. (2013) by using Wiktionary in the sentiment sensitive thesaurus (SST) to reduce the mismatch between domains. Later, Jimenez et al. (2016) suggested a framework for cross-domain sentiment classification using a thesaurus.

-

-

Jimenez et al. (2016)

-

Approach: Bootstrapping algorithm (BS), Term Frequency (TF), POS tagging, (Unigram and bigram) and Spanish MuchoCine corpus (MC), iSOL Spanish polarity lexicon (Spanish language)

-

Corpora: Movie

-

Explanation: For the transfer learning of a polarity lexicon, the authors introduced two corpus-based techniques that are language independent and work in any domain. The first technique, based on term frequency (TF), achieves very promising results by using previously polarity-tagged documents. The second technique, based on the bootstrapping algorithm (BS), does not require an annotated corpus and improves on the baseline system. To benefit from the strengths of each, they combined both methods and obtained an improvement of 11.50% in accuracy. Recently in cross-domain sentiment classification using the thesaurus technique:

-

-

Bollegala and Mu (2016)

-

Approach: Rule-based modeling, K-NN, Pointwise Mutual Information (PMI) based pivot selection, a numeric Python library for decomposition,Footnote 27 L2 regularization logistic regression in scikit-learn,Footnote 28 POS tagging + (Unigram and bigram), rating information and Amazon multi-domain datasets (English language)

-

Corpora: Kitchen, DVDs, Electronics and Books

-

Explanation: Developed an embedding technique for the training phase of cross-domain opinion classification that considers the following objective functions both in isolation and together: (a) the distributional properties of pivots, (b) the label constraints of source-domain documents, and (c) the geometric properties of unlabeled source- and target-domain documents. The experimental results showed that optimizing the three objective functions together yields better performance than optimizing each function individually. This confirms the importance of domain-specific embedding learning for cross-domain opinion classification. The authors also identified which individual objective function gives the best performance.

-
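The feature vector expansion described for the Sentiment Sensitive Thesaurus in this subsection can be illustrated with a small sketch. It assumes a thesaurus is already available as a mapping from a feature to a ranked list of related features. The helper `expand_features`, the `EXP=` prefix, the per-feature lookup, and the 1/rank weighting are illustrative assumptions rather than the authors' exact procedure.

```python
from collections import Counter


def expand_features(tokens, thesaurus, k=2):
    """Append up to k thesaurus neighbours per feature, weighted by 1/rank.

    tokens    : list of features (e.g. unigrams) extracted from one review
    thesaurus : dict mapping a feature to a ranked list of related features
    k         : number of neighbours to add per original feature (assumption)
    """
    vector = Counter(tokens)        # original bag-of-words counts
    expanded = dict(vector)
    for feature, count in vector.items():
        neighbours = thesaurus.get(feature, [])[:k]
        for rank, neighbour in enumerate(neighbours, start=1):
            # Expanded features get their own namespace so that a classifier
            # can weight them separately from observed features.
            key = "EXP=" + neighbour
            expanded[key] = expanded.get(key, 0.0) + count / rank
    return expanded


# Hypothetical thesaurus entries, for illustration only.
thesaurus = {"excellent": ["great", "delicious"], "sharp": ["durable"]}
vec = expand_features(["excellent", "sharp", "knife"], thesaurus)
```

A classifier trained on such expanded vectors can match a target-domain review that uses "great" against a source-domain review that only used "excellent", which is the kind of feature mismatch the thesaurus is meant to reduce.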

4.7 Case-based reasoning (CBR) techniques

Case-based reasoning uses past experience to predict the outcomes of new problems.

-

Ohana et al. (2012)

-

Approach: Case-based reasoning (CBR), kNN, Euclidean distance, Stanford POS TaggerFootnote 29 and The General Inquirer (GI) lexicon, the Subjectivity Clues lexicon (Clues), SentiWordNet (SWN), the Moby lexicon and the MSOL lexicon (English Language)

-

Corpora: Hotel reviews dataset, IMDB dataset of film reviews, Amazon product reviews (Electronics, books, music, and apparel)

-

Explanation: Proposed a case-based reasoning approach for cross-domain sentiment classification. The case analysis is a feature vector based on document data, and the case explanation comprises all lexicons that made correct predictions during training. The authors considered six different domains for sentiment classification: film reviews (IMDB), hotel reviews,Footnote 30 and Amazon product reviews (electronics, books, music, and apparel). The experimental results are comparable to the state of the art.

-

4.8 Graph-based techniques

Graph-based techniques represent data as a weighted graph in which nodes are data instances and weighted edges represent the relationships between those instances. In the graph-based approach, the data is assumed to lie on a manifold structure that reflects the behavior of and connections between instances. If the data is not given in such a structure, a similarity function can be used to compute the similarity between graph vertices. A good graph provides a suitable assessment of the similarity between data instances. One of the most popular graph-based algorithms is label propagation, developed by Zhu and Ghahramani (2002), which learns from both labeled and unlabeled data and has been applied to sentiment classification. Graph-based techniques for cross-domain sentiment classification were introduced by Ponomareva and Thelwall (2012a, b).

-

Ponomareva and Thelwall (2012a, b)

-

Approach: Optimisation problem (OPTIM), ranking algorithm (RANK), kNN, Graph-based approach, Support Vector Machines (SVMs) (LIBSVM library) and Amazon product review (English Language)

-

Corpora: Electronics, Books, DVDs, and Kitchen

-

Explanation: Compared the performance and effectiveness of two existing graph-based approaches, a ranking algorithm (RANK) and an optimisation problem (OPTIM), for cross-domain opinion classification. OPTIM treats opinion classification as an optimisation problem, whereas RANK uses a ranking to assign opinion scores. To compute document similarity, the authors analysed various sentiment similarity measures, both feature-based and lexicon-based. In their experiments, they used the Amazon multi-domain dataset and compared the graph-based approaches with each other and with other state-of-the-art approaches (SCL and SFA) for cross-domain opinion classification. The graph-based approaches achieved comparable results. Later, the graph-based work was extended by Ponomareva and Thelwall (2013).

-

-

Ponomareva and Thelwall (2013)

-

Approach: Graph-based approach, modified label propagation (LP), semi-supervised learning (SSL), Cross-domain learning (CDL), linear-kernel Support vector machine (LIBSVM library), Class Mass Normalisation (CMN) and Amazon product review (English Language)

-

Corpora: Electronics, Books, DVDs and Kitchen

-

Explanation: Proposed a modified label propagation (LP) graph-based approach based on semi-supervised learning (SSL) and cross-domain learning (CDL) algorithms. In their experiments on the Amazon multi-domain datasets, the authors evaluated graph-based LP along with its three variants (\(LP_{\alpha \beta }\), \(LP_{\gamma }^{n}\), \(LP_{\gamma }\)) and its combination with class mass normalisation (CMN). Further in the graph-based approach, Zhu et al. (2013) used emotion keywords to extract labeled data with high precision from the target domain and combined the labeled source-domain data with the generated labeled target-domain data. They then performed cross-domain sentiment classification using the label propagation (LP) algorithm together with the unlabeled and labeled data of the target domain. They used the Amazon multi-domain dataset, and the proposed approach achieved better performance.

-
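The label propagation idea that underlies the graph-based techniques in this subsection can be sketched as follows. This is a simplified binary variant in the spirit of Zhu and Ghahramani (2002), not the modified algorithms evaluated in the surveyed papers. The function name `label_propagation`, the fixed iteration count, and the toy chain graph are assumptions made for illustration.

```python
def label_propagation(weights, labels, n_iter=100):
    """Propagate binary sentiment scores over a weighted graph.

    weights : symmetric n x n list of lists of non-negative edge weights
    labels  : list with 1.0 (positive), 0.0 (negative) or None (unlabeled)
    Returns a list of scores in [0, 1], one per node.
    """
    n = len(weights)
    # Unlabeled nodes start at a neutral score.
    scores = [0.5 if y is None else y for y in labels]
    for _ in range(n_iter):
        new_scores = []
        for i in range(n):
            if labels[i] is not None:
                new_scores.append(labels[i])      # clamp labeled nodes
                continue
            total = sum(weights[i])
            if total == 0:
                new_scores.append(scores[i])      # isolated node: unchanged
            else:
                # Weighted average of the neighbours' current scores.
                avg = sum(w * s for w, s in zip(weights[i], scores)) / total
                new_scores.append(avg)
        scores = new_scores
    return scores


# Toy chain graph: node 0 (positive) - node 1 - node 2 - node 3 (negative).
weights = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
labels = [1.0, None, None, 0.0]
scores = label_propagation(weights, labels)
```

In a cross-domain setting, the labeled nodes would typically be source-domain documents and the unlabeled nodes target-domain documents, with edge weights given by a document similarity measure. The unlabeled node nearer the positive review ends up with the higher score.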

4.9 Domain similarity and complexity techniques

Domain similarity is one of the approaches that can be used in domain adaptation to select the features from the source domain that are most similar to the target domain. Remus (2012) introduced a framework to measure domain similarity and the variance in the complexity of the domains.

-

Remus (2012)

-

Approach: Domain similarity (pair-wise Jensen-Shannon (JS) divergence, unigram distributions, Kullback–Leibler (KL) divergence), Domain Complexity, Instance selection (ranked instances), Support Vector Machines (SVMs) with linear kernel, LibSVM,Footnote 31 unigram and bigram features and Multi-domain Sentiment Dataset v2.0Footnote 32 (English Language)

-

Corpora: Kitchen and housewares, health and personal care, electronics, books, music, apparel, DVD, toys and games, sports and outdoors and video

-

Explanation: To achieve high accuracy in cross-domain sentiment classification, the authors tried to find features in the training data that are similar to those in the test domain. The study used domain similarity, domain complexity, and an instance selection parameter in the proposed approach and achieved comparable results in domain adaptation. For the experiments, they considered 10 different domains and the rating information of the reviews. They employed unigram distributions, Jensen-Shannon (JS) divergence, and a support vector machine with its cost parameter. Further, this work was extended by Ponomareva and Thelwall (2012a, b).

-

-

Ponomareva and Thelwall (2012a, b)

-

Approach: Domain similarity, Domain Complexity, parts-of-speech (POS), unigram distribution, linear regression, Support Vector Machines (SVMs) and Amazon Multi-domain Sentiment Dataset (English Language)

-

Corpora: Kitchen, Electronics, books, and DVD

-

Explanation: The authors used domain similarity (divergence) and domain complexity (domain self-similarity) to estimate the performance loss of a cross-domain classifier, predicting it with an average error of 1.5% and a maximum error of 3.4%.

-
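The domain similarity measure named in this subsection, Jensen-Shannon (JS) divergence over unigram distributions, can be sketched as follows. The helper names and the toy token lists are hypothetical. The sketch uses base-2 logarithms, so the divergence lies between 0 (identical distributions) and 1 (disjoint vocabularies).

```python
import math
from collections import Counter


def unigram_distribution(tokens, vocab):
    """Relative frequency of each vocabulary word in a token list."""
    counts = Counter(tokens)
    total = sum(counts[w] for w in vocab)
    return [counts[w] / total for w in vocab]


def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) in bits; terms with p_i = 0 are skipped."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL of p and q to their mixture m."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical token samples from three domains, for illustration only.
books = "plot story plot author".split()
dvd = "plot story actor scene".split()
kitchen = "knife blade knife handle".split()

vocab = sorted(set(books) | set(dvd) | set(kitchen))
p_books = unigram_distribution(books, vocab)
p_dvd = unigram_distribution(dvd, vocab)
p_kitchen = unigram_distribution(kitchen, vocab)

books_vs_dvd = js_divergence(p_books, p_dvd)
books_vs_kitchen = js_divergence(p_books, p_kitchen)
```

Here `books_vs_dvd` is smaller than `books_vs_kitchen`, so under this measure the DVD sample would be judged the more similar source domain for a books classifier. Because the mixture `m` is non-zero wherever `p` or `q` is non-zero, the JS divergence stays finite even when the two vocabularies do not overlap, unlike plain KL divergence.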

4.10 Feature-based techniques

To improve the performance of cross-domain sentiment classification, feature-based techniques are used.

-

Xia et al. (2013)

-

Approach: Feature ensemble plus sample selection (SS-FE), PCA-based sample selection (PCA-SS), Labeling adaptation, Instance adaptation (sample selection bias), part-of-speech (POS), Naive Bayes (NB) and Amazon Multi-domain Sentiment Dataset (English Language)

-

Corpora: Kitchen, Electronics, books, and DVD

-

Explanation: Introduced a joint approach, named feature ensemble plus sample selection (SS-FE), that considers both instance adaptation and labeling adaptation. The feature ensemble (FE) model learns a new labeling function in a feature re-weighting manner, and sample selection uses principal component analysis as an aid to FE for instance adaptation. The experiments were performed on the Amazon multi-domain dataset, and the outcomes indicated the effectiveness of SS-FE in both instance adaptation and labeling adaptation. Further:

-

-

Tsakalidis et al. (2014)

-

Approach: Text-Based Representation (TBR), Feature-Based Representation (FBR), Lexicon-Based Representation (LBR), Combined Representation (CR) (parts-of-speech (POS) using the Stanford POS Tagger + TBR), Ensemble Classifier (hybrid classifier (HC) and Lexicon-based (LC)), n-gram, TF-IDF and Twitter Test Datasets (English Language)

-

Corpora: Stanford Twitter Dataset Test Set (STS), Obama Healthcare Reform (HCR), and Obama-McCain Debate (OMD)

-

Explanation: Introduced an ensemble classifier that is trained on one domain and adapts to the test domain without needing additional ground truth before classifying a document. To deal with the domain dependence problem in cross-domain sentiment classification, the ensemble algorithm was applied to Twitter datasets, and the results are comparable to state-of-the-art approaches.

-

-

Zhang et al. (2015)

-