Abstract

The increase in the number of sports fans in games like football, cricket, etc. has created a need for mining, analyzing and presenting more and more multidimensional information to them. Different classes of people require different kinds of information, which expands the space and scale of the required information. Tracking the ball's movement is of utmost importance for extracting any information from ball-based sports video sequences. Based on the literature survey, we first propose a block diagram depicting the different steps and flow of a general tracking process, and the paper follows the same flow throughout. Detection is the first step of tracking. The dynamic and unpredictable nature of the ball's appearance and movement, together with a continuously changing background, makes detection and tracking challenging. These challenges have attracted many researchers, who have produced good results under specific conditions. However, generalizing the published work and algorithms to different sports remains a distant goal. This paper is an effort to present an exhaustive survey of the published research on ball tracking in a categorical manner. It also reviews the techniques used, their performance, advantages and limitations, and their suitability for particular sports. Finally, we discuss the work published so far, present our views and opinions, and propose a modified block diagram of the tracking process. The paper concludes with final observations and suggestions on the scope of future work.

1 Introduction

Huge efforts have been put into tracking, understanding and visualizing events in sports environments. Due to the increase in the number and variety of sports fans and their interests, there is a great demand for obtaining multidimensional data from the game. The public may want to view events from an arbitrary viewpoint. Amateurs need to understand the precise movements of professional sportspersons to tune their own plans or moves. Sportspersons and coaches need to analyze the tactical movements of opposing players and teams in order to design their own counter plans. Broadcasting and publishing agencies need to detect, annotate, store, categorize and summarize the available video sequences based on the events occurring during the game. This should allow a particular match event or moment to be retrieved quickly and highlights to be produced as and when required. Since the ball is the center of attention in the games considered in this work, tracking it precisely facilitates the above tasks; hence we present a comprehensive survey of ball tracking.

We categorize ball games by the similarity of their challenges, including the different levels of difficulty arising from the size, randomness and speed of movement of the ball; the categories are shown in Table 1.

Tracking sport entities is not a problem-free task. Many challenges are involved, including occlusion, misdetection, illumination variation, videos of different quality with varying frame rates, and real-time constraints, and they pose different levels of difficulty for ball tracking algorithms. This paper presents a comprehensive survey of detection and tracking techniques for the ball in various sport events. Wherever possible, the quantitative performance of the published algorithms is reported using well-known measures such as accuracy, precision, recall and F-score. This survey covers the physical infrastructure, algorithmic approaches and steps, milestones in tracking research, and performance benchmarking of the available ball tracking algorithms. We also propose a modified model of a ball tracking system.

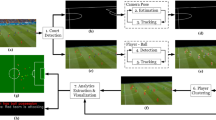

A ball tracking system is composed of several stages, including system modeling and pre-processing blocks, foreground extraction, ball detection and ball tracking. Figure 1 shows the conventional block diagram of a ball tracking system.

The conventional system invariably requires some pre-processing before actual detection and tracking can begin. The region within the dashed line in Fig. 1 shows the pre-processing stage. Its first stage consists of placing the camera(s) and acquiring their initialization parameters. Stage 2 constructs a model of the game field from the dimensions given in the rulebook of the game. The third stage involves camera modeling and calibration of each camera, using homography computations to register the playfield; this requires extracting the field lines and the feature points. Stage 4 constructs a well-defined set of ground truths using the game rules.

Section 2 presents the pre-processing stage, covering the physical setups introduced so far for ball tracking systems, including camera placement, field modeling and registration, camera calibration, ground truth and foreground extraction. Section 3 presents the features and methods used for ball detection. Section 4 presents tracking algorithms under categories such as Kalman filter, particle filter, trajectory-based and other miscellaneous types. Section 5 discusses the major milestones in the literature, our observations and our insights into the developments so far. Section 6 presents concluding remarks and the scope for future work.

2 Pre-processing and foreground extraction

To get ready for detection and tracking, it is important to set up the system, calibrate it, make it robust to noise and clutter, obtain ground truth and extract the foreground. The pre-processing stage serves this purpose. The rest of this section briefly describes each sub-block of the pre-processing module.

2.1 Camera placement

To capture the precise movements of the players, bat, racket, ball, etc. on the field or court, it is important to employ an appropriate number of cameras around it. Although the algorithms are expected to track the ball, these other objects also need to be tracked in the dynamically changing area of interest for detailed analysis of events and identification of rule infringements. The video acquisition method has to be non-invasive, so that the rules of the game remain unchanged while even the smallest relevant events are still captured.

2.1.1 Single camera system

Researchers generally use a single fixed camera to capture many sports events. For example, the camera may be placed on one side of the soccer field with its optical axis lying on the goal-mouth plane (D’Orazio et al. 2004), as shown in Fig. 2a, or focused on the goal area (Ancona et al. 2003). Sometimes a fixed camera without zooming or panning capability is used (Bloom and Bradley 2003; Chen et al. 2012; Shah et al. 2007). Cameras with particular properties, such as monochrome (Chen and Wang 2007), low resolution (Teachabarikiti et al. 2010), high speed (Glover and Kaelbling 2014; Shum and Komura 2005), high vision (Ariki et al. 2008) or an attached light source (Zhang et al. 2011) (Fig. 2b), are also used to capture a fixed view of the field. High-speed cameras are generally used to track the translational, rotational and spin movements of the ball. To avoid the burden of building a two-camera binocular vision system, ceiling-mounted cameras (Fig. 2c) are preferred (Perše et al. 2005; Santiago et al. 2011). A single moving camera uses Pan–Tilt–Zoom (PTZ) capabilities (Dearden et al. 2006; Han et al. 2003; Yan et al. 2006b). The camera may also be mounted on a robotic hand and used in an eye-in-hand configuration for tracking and catching a ball (Cigliano et al. 2015). The camera of a hand-held device (Uchiyama and Saito 2007) or a camcorder (Chakraborty and Meher 2011) may also be used to record sports videos for tracking.

2.1.2 Two camera system

The general purpose of a two-camera system is 3D localization of the ball and players. Reducing the effect of occlusion and obtaining clearer views from multiple angles are other major reasons for using such a system. Such systems use stereo cameras (Birbach and Frese 2009; Zhang et al. 2010), which may be unsynchronized (Liu et al. 2014) (Fig. 3a), synchronized (Ishii et al. 2007; Kasuya et al. 2010) (Fig. 3b, c) or even dynamically coordinated (Hoyningen-Huene and Beetz 2009). The cameras may be placed outside the field, either at the average height of a player or at a greater height to reduce occlusion (Kasuya et al. 2010) and obtain a bird’s eye view of the court (Misu et al. 2007).

2.1.3 Multi-camera systems

Apart from 3D localization of the ball, such systems are usually employed where the ground is too big to be captured by one or two cameras. Other purposes of such systems are to obtain a continuous shot of the complete game field or to cover every nook and corner of the stadium clearly, without occlusion or intrusion. Some of these systems employ four cameras (Figueroa et al. 2004), which may be either static (Choi and Seo 2011) or dynamic high-speed cameras (D’Orazio et al. 2009a,b) (Fig. 4a), to cover the whole field. In the latter case, two of the four high-speed cameras, placed on both sides of one goal post, are used to observe goal events. The six-camera system of Pingali et al. (2000) consists of four cameras placed on the sides and two at the court ends, at appropriate heights; every side camera forms a pair with its adjacent end camera, as seen in Fig. 4b. It can also be part of an eight-camera monochrome system (Pingali et al. 2002), in which the other two cameras are used for player tracking; there, each of the four side cameras was paired with the two end cameras in order to observe the ball in 3D. Alternatively, the six cameras may be placed as three high-speed moving cameras on each side of the ground (D’Orazio et al. 2009a,b; Leo et al. 2008), so that every area is covered twice, either by adjacent or by opposite cameras. The ISSIA dataset, prepared with this arrangement as in Leo et al. (2008), was used in Wang et al. (2014a), as in Fig. 4c. The APIDIS basketball dataset was prepared using seven unsynchronized cameras, one placed at the top and six around the court.

To obtain multi-camera shots, Iwase and Saito (2003) used eight stable cameras, four lined up on each side of the ground and aimed at the penalty area, as shown in Fig. 5a; the cameras may also be placed elsewhere around the ground (Ren et al. 2004b, 2008, 2009), as shown in Fig. 5b. Conaire et al. (2009) used an infrastructure comprising the UbiSense spatial location system and nine cameras with pan, tilt and zoom capability positioned around the court, as shown in Fig. 5c. Ohno et al. (2000) placed four cameras on each side of the soccer stadium, as shown in Fig. 5d.

Single cameras are used where the field of view is small and fixed, e.g., the goalpost, or where the court is small enough to be captured from a single position, as in tennis. A single high-speed camera can track all three motions of a ball: rotational, translational and spin. An external robotic mechanism can move a single camera in 3D to keep the ball in its field of view. However, a single-camera system cannot solve the problem of the ball being occluded by players, due to its limited viewing angle. Multi-camera systems deal with occlusion by providing clearer views from multiple angles, and they can cover every nook and corner of the stadium. Modern sports entertainment needs 3D localization of the ball and players as well as \(180^{\circ}\) shots from multiple cameras.

2.2 Field modeling and registration

The standard dimensions of the court, the lines and circles on it, the net in tennis, the ring in basketball, etc. are available in the rulebook of the respective game and are used as domain knowledge. These dimensions are of much help in marking the field of interest and modeling the court, ground or field. The crossing points of the model lines form the feature points that are required for calibrating the camera(s). To increase the number of intersection points, virtual intersection points (Tong et al. 2011) are also employed along with real intersection points.
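Crossing points of field lines are conveniently computed in homogeneous coordinates: the line through two points is their cross product, and the intersection of two lines is again a cross product. Because the lines are treated as infinite, an intersection lying outside the visible image (one possible way of obtaining a "virtual" intersection point) falls out of the same computation. The sketch below assumes lines are given by two image points each; the function names are hypothetical.

```python
def cross(a, b):
    """Cross product of two homogeneous 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def line_through(p, q):
    """Homogeneous line l = (a, b, c), with a*x + b*y + c = 0, through image points p and q."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def intersect(l1, l2, eps=1e-12):
    """Intersection of two homogeneous lines, or None if they are parallel."""
    x, y, w = cross(l1, l2)
    if abs(w) < eps:
        return None  # parallel lines meet at infinity
    return (x / w, y / w)
```

For example, the line through (0, 0) and (1, 0) and the line through (2, 0) and (2, 5) intersect at (2.0, 0.0).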

The registration stage involves extracting feature points from the ground lines and their crossings. Common approaches detect lines with the Hough transform (Chen et al. 2012; Farin et al. 2004; Miyamori and Iisaku 2000; Tong et al. 2011; Yu et al. 2009) or the Canny edge detector (Ren et al. 2009) and take their crossings as feature points, and/or use custom ellipse detection (Yu et al. 2003d, 2004a) to extract tangential feature points. Feature points are also searched for in other planes, such as the tennis net (Chen et al. 2012; Miyamori and Iisaku 2000; Yu et al. 2009), using a similar procedure. This is followed by tuning the homography matrix (or refining the camera parameters (Farin et al. 2004)) and updating it for more accurate feature points. After all the straight lines in the different planes are obtained, a reference such as the net is used to fit the straight lines of the image to the model (Yu et al. 2009). The lines may cause false alarms, which are removed using masks made from the Field of View (FOV) of each camera in a multi-camera system (Ren et al. 2009). The Hough transform for line detection has the disadvantage of being slow. To add to the complexity, the lines in Broadcast Soccer Video (BSV) are long and sparse. Yu et al. (2004c) addressed this with a grid-based algorithm for line detection in goal-mouth scenes. The system designed by Farin et al. (2004) required 1 s for initialization, which is too slow for real-time applications. Farin and Han (2005) modified it by replacing the Hough transform with a RANSAC (Fischler and Bolles 1981) based line detector for line parameter estimation, reducing the time to 30 ms for the first frame and 6 ms during court tracking. Along the lines of Farin and Han (2005), Chang et al. (2009) also modified the approach of Farin et al. (2004) and made it applicable to broadcast basketball videos (BBV).
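The RANSAC idea mentioned above can be illustrated with a generic line fit: repeatedly pick two candidate line pixels, form the line through them, and keep the line supported by the most pixels within a distance tolerance. This is a minimal sketch assuming the candidate pixels have already been extracted (for example, white field-line pixels); it is not the detector of Farin and Han (2005), and the function name, iteration count and tolerance are hypothetical.

```python
import math
import random


def fit_line_ransac(points, n_iter=200, inlier_tol=1.0, seed=0):
    """Return ((a, b, c), inliers) for the line a*x + b*y + c = 0 (with a^2 + b^2 = 1)
    supported by the most points lying within inlier_tol pixels of it."""
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(n_iter):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        a, b = y2 - y1, x1 - x2          # normal to the direction (x2 - x1, y2 - y1)
        norm = math.hypot(a, b)
        if norm == 0:
            continue                     # coincident sample points define no line
        a, b = a / norm, b / norm
        c = -(a * x1 + b * y1)
        # With a unit normal, |a*x + b*y + c| is the point-to-line distance.
        inliers = [(x, y) for x, y in points if abs(a * x + b * y + c) <= inlier_tol]
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = (a, b, c), inliers
    return best_line, best_inliers
```

In practice the winning line is usually refined by a least-squares fit to its inliers; because each hypothesis needs only two points, the cost grows with the number of iterations rather than with a parameter-space accumulator as in the Hough transform.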

2.3 Camera model and calibration

Camera calibration estimates the model of an uncalibrated camera. Its main objective is to find the external (extrinsic) and internal (intrinsic) parameters of the camera. The external parameters include the camera's position and orientation with respect to the world coordinate system, whereas the internal parameters include the image center, distortion coefficients and focal length. A calibrated camera provides an accurate and consistent mapping between the positions and dimensions of real-world objects and their representation in the video frames.

2.3.1 Ideal pinhole camera model

Video acquisition grabs frames through an image acquisition process, so for completeness we describe the basic static camera model for image acquisition. The model defines the geometric relation between a 3D point in the real-world coordinate system and its corresponding 2D projection on the image plane. A real-world point \((X,Y,Z)^{T}\) is written in 3D homogeneous coordinates as \(P=[X,Y,Z,1]^{T}\) by adding a fourth auxiliary coordinate 1. Its corresponding 2D point \((x,y)^{T}\) on the image is \(p=[x,y,1]^{T}\), with a third auxiliary coordinate 1. The points in 3D and 2D coordinates are related as in Eq. (1):

\[\lambda p = \mathbf{H}P = \mathbf{K}\,[\mathbf{R}\mid\mathbf{T}]\,P \qquad (1)\]

In Eq. (1),

\[\mathbf{K}=\begin{bmatrix} f_x & c & o_x \\ 0 & f_y & o_y \\ 0 & 0 & 1 \end{bmatrix},\quad \mathbf{R}=[\mathbf{r}_0\ \mathbf{r}_1\ \mathbf{r}_2]=\begin{bmatrix} r_{00} & r_{01} & r_{02} \\ r_{10} & r_{11} & r_{12} \\ r_{20} & r_{21} & r_{22} \end{bmatrix},\quad \mathbf{T}=[t_x\ t_y\ t_z]^{T},\]

where \(\mathbf{H}\) is the \(3\times 4\) perspective projection matrix and \(\mathbf{K}\) is the camera intrinsic parameter matrix. \(\mathbf{K}\) contains \((f_x, f_y)\), the focal lengths along the x and y axes of the image plane; \((o_x, o_y)\), the principal point, i.e., the intersection of the camera's optical axis with the image plane; and \(c\), the skew coefficient between the two image axes. \(\mathbf{R}\) and \(\mathbf{T}\) denote the rotation matrix and translation vector, which form the extrinsic parameters, and \(\lambda\) is the homogeneous scaling factor. If the scale factor is unity, Eq. (1) becomes \(p\approx \mathbf{H}P\). In total there are eleven camera parameters to be estimated during calibration: five intrinsic and six extrinsic.
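The projection in Eq. (1) can be checked numerically: transform the world point into camera coordinates with \(\mathbf{R}\) and \(\mathbf{T}\), multiply by \(\mathbf{K}\), and divide by the third homogeneous coordinate, which plays the role of \(\lambda\). This is a minimal pure-Python sketch assuming matrices are given as nested lists; the function names are hypothetical.

```python
def mat_vec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def project(K, R, T, P):
    """Project world point P = (X, Y, Z) to pixel (x, y) using lambda * p = K [R | T] P."""
    cam = [c + t for c, t in zip(mat_vec(R, P), T)]  # R P + T: camera coordinates
    u, v, lam = mat_vec(K, cam)                       # homogeneous image point, scaled by lambda
    return (u / lam, v / lam)                         # divide out lambda
```

For instance, with \(f_x=f_y=800\), \(c=0\), \((o_x, o_y)=(320, 240)\), \(\mathbf{R}=\mathbf{I}\) and \(\mathbf{T}=[0,0,10]^{T}\), the world point (1, 0.5, 0) projects to pixel (400, 280).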

At least six pairs of non-coplanar point correspondences between world and image coordinates are required to estimate all eleven parameters (Chen et al. 2009), since each pair yields two equations. If all world points are assumed to lie on a plane of constant height, taken as \(Z=0\), and the scale factor is unity, Eq. (1) reduces to Eq. (2), in which the column \(\mathbf{r}_2\) of the rotation matrix drops out together with the Z component (Hartley and Zisserman 2003):

\[p \approx \mathbf{K}\,[\mathbf{r}_0\ \mathbf{r}_1\ \mathbf{T}]\,[X,Y,1]^{T} \qquad (2)\]

The perspective projection matrix thus reduces from \(3\times 4\) to \(3\times 3\), and the number of camera parameters from eleven to eight. The eight parameters can therefore be computed from only four pairs of coplanar points, no three of which are collinear (Chang et al. 2009; Liu et al. 2006; Mauthner et al. 2007; Ohno et al. 1999; Yu et al. 2004c). If the image's \(\mathbf{H}\) is known and invertible, its inverse can be used to compute the point on the world plane (in the 3D coordinate frame) that corresponds to a given point in the 2D image (Liang et al. 2007; Liu et al. 2006).

The method covered so far in this subsection is direct homography estimation, which is used only if sufficient key points are available. If the current frame has too few key points, an indirect method of homography estimation is used instead (Tong et al. 2011). A few camera calibration methods (Chen et al. 2012; Yu et al. 2004c) used the direct linear transform (DLT) (Hartley and Zisserman 2003).
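The four-point case above can be sketched as a DLT with \(h_{33}\) fixed to 1: each correspondence \((X, Y) \to (u, v)\) contributes two linear equations, giving an 8×8 system. The inverse of the resulting matrix then maps image points back to the world plane. This is an illustrative sketch under the assumption that \(h_{33} \neq 0\); a normalized DLT solved by SVD over more than four points is the more robust choice in practice. All function names are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration (e.g. three collinear points)")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4(world, image):
    """Estimate 3x3 H (h33 = 1) mapping plane points (X, Y) to pixels (u, v)."""
    A, b = [], []
    for (X, Y), (u, v) in zip(world, image):
        # u * (h31 X + h32 Y + 1) = h11 X + h12 Y + h13, rearranged to be linear in h
        A.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y]); b.append(u)
        A.append([0, 0, 0, X, Y, 1, -v * X, -v * Y]); b.append(v)
    h = solve(A, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_h(H, pt):
    """Map an inhomogeneous 2D point through H and dehomogenize."""
    X, Y = pt
    u, v, w = (H[i][0] * X + H[i][1] * Y + H[i][2] for i in range(3))
    return (u / w, v / w)


def invert3(H):
    """Inverse of a 3x3 matrix via the adjugate (image plane -> world plane)."""
    (a, b, c), (d, e, f), (g, h, i) = H
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]
```

Typical use is to pass four field-model corners (in metres) and their detected pixel positions to `homography_from_4`, then call `apply_h(invert3(H), pixel)` to locate a detected ball on the ground plane.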

2.3.2 Tsai camera calibration model

This is one of the most popular camera calibration methods: it is suitable for wide areas because it can handle coplanar as well as non-coplanar points, and it allows the internal and external parameters to be calibrated separately. It is mostly suited to cases where the internal parameters are known and the external parameters are unknown. The method transforms 3D world coordinates to undistorted image-plane coordinates, then to distorted image-plane coordinates, and finally to image coordinates (Kumar et al. 2011; Li et al. 2005; Pingali et al. 2000, 2002), as shown in Fig. 6.
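The chain of transformations in Tsai's model can be sketched as a forward projection. The sketch assumes a single radial distortion coefficient \(\kappa_1\), with undistorted and distorted coordinates related by \(x_u = x_d(1 + \kappa_1 r^2)\), \(r^2 = x_d^2 + y_d^2\), and pixel conversion through sensor-element sizes `dx`, `dy`, a horizontal scale factor `sx` and an image center `(cx, cy)`. These names follow common descriptions of Tsai's model but are assumptions here, and the distortion is inverted by a simple fixed-point iteration, which converges only for mild distortion. It shows the forward model only, not the calibration procedure that estimates these parameters.

```python
def tsai_project(P, R, T, f, kappa1, dx, dy, sx, cx, cy, n_iter=20):
    """Project world point P through a Tsai-style chain (sketch, one radial coefficient)."""
    # 1) world -> camera coordinates
    x, y, z = (sum(R[i][j] * P[j] for j in range(3)) + T[i] for i in range(3))
    # 2) perspective projection -> undistorted image-plane coordinates (metric units)
    xu, yu = f * x / z, f * y / z
    # 3) radial distortion: solve xu = xd * (1 + kappa1 * r^2) for (xd, yd) by fixed-point iteration
    xd, yd = xu, yu
    for _ in range(n_iter):
        s = 1.0 + kappa1 * (xd * xd + yd * yd)
        xd, yd = xu / s, yu / s
    # 4) distorted metric coordinates -> pixel (frame buffer) coordinates
    return (sx * xd / dx + cx, yd / dy + cy)
```

With `kappa1 = 0` the chain reduces to the ideal pinhole model; a positive `kappa1` pulls points slightly toward the image center.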

Along with the intrinsic and extrinsic parameters, distortion factors can also be calculated, as in Choi and Seo (2011). Object measurements in world coordinates can be computed from image measurements by treating the pixel dimensions as two fixed intrinsic constants (Ren et al. 2008). The manually mapped corner points of the 2D image model and of the 3D pitch model are the inputs to Tsai’s calibration. Tsai’s algorithm is of less use for ceiling-mounted cameras, as it requires 3D coordinates for calibration, whereas such cameras observe the field as a 2D plane.

2.3.3 Using geometric relations and multi-camera homography

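A 2D projective transformation (planar homography) is a linear transformation on homogeneous 3-vectors represented by a non-singular \(3\times 3\) matrix \(\mathbf{H}\), as in Eq. (3):

\[\mathbf{x}=\mathbf{H}\mathbf{X},\qquad \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}=\begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}\begin{bmatrix} X_1 \\ X_2 \\ X_3 \end{bmatrix} \qquad (3)\]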

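Here \(\mathbf{H}\) is a homogeneous matrix, defined only up to scale. Let the inhomogeneous coordinates of a pair of matching points \(\mathbf{X}\) and \(\mathbf{x}\) on the world (ground) plane and the image plane be \((X, Y)\) and \((u, v)\), respectively. The projective transformation in inhomogeneous form is then written as in Eq. (4):

\[u=\frac{h_{11}X+h_{12}Y+h_{13}}{h_{31}X+h_{32}Y+h_{33}},\qquad v=\frac{h_{21}X+h_{22}Y+h_{23}}{h_{31}X+h_{32}Y+h_{33}} \qquad (4)\]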

Multiplying out the denominators in Eq. (4) gives, for each point correspondence, two equations that are linear in the entries of \(\mathbf{H}\). Since \(\mathbf{H}\) has eight degrees of freedom, four point correspondences, leading to eight such equations, are sufficient to solve for it. This method is usually applied in systems where two (Choi and Seo 2004; D’Orazio et al. 2009a,b) or more cameras [six in the case of D’Orazio et al. (2009a,b) and eight in the case of Iwase and Saito (2003)] are used and the homography between them must be computed. The feature points may be derived automatically (Zhu et al. 2008) or supplied manually (Choi and Seo 2004). Such reference points usually depend on the scene the cameras are capturing. In football, if the scene is the goal-mouth region, the penalty area or goal area lines (Iwase and Saito 2003) may be used; if it is the center of the field, the tangential points on the ellipse are used (Yu et al. 2003d, 2004a). For two cameras whose focal points are close to each other, their stitched image is used for computation (Choi and Seo 2004), and the method then computes the homography between the stitched image and one of the two images.

2.3.4 Toolboxes

Camera parameters may also be calibrated automatically using the OpenCV camera calibration toolbox, as in Conaire et al. (2009), or the Matlab camera calibration toolbox, as in Kumar et al. (2011). Intrinsic and extrinsic parameters may be calibrated separately, as in Kelly et al. (2010), which used the Matlab toolbox for the intrinsic parameters and the OpenCV toolbox for the extrinsic ones. Kumar et al. (2011) used the Matlab calibration toolbox to calibrate each camera individually and obtained 3D points using 2D point triangulation and back-projection. They used a weighted averaging method to reduce the effect of camera calibration errors and 2D tracker errors.
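Triangulating a 3D ball position from two calibrated views can be sketched with the linear (DLT-style) method: each view's \(3\times 4\) projection matrix and observed pixel give two linear equations in \((X, Y, Z)\), solved here in the least-squares sense through the normal equations. A weighted average can then combine estimates from several camera pairs. This is a generic sketch, not necessarily the procedure of Kumar et al. (2011); the function names and the choice of weights (for example, inverse reprojection error) are hypothetical.

```python
def det3(M):
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def cramer3(M, y):
    """Solve the 3x3 system M x = y by Cramer's rule."""
    D = det3(M)
    return [det3([row[:k] + [y[i]] + row[k + 1:] for i, row in enumerate(M)]) / D
            for k in range(3)]


def triangulate(P1, P2, p1, p2):
    """Least-squares 3D point from pixels p1, p2 seen by 3x4 projection matrices P1, P2."""
    rows, rhs = [], []
    for P, (u, v) in ((P1, p1), (P2, p2)):
        for coef, k in ((u, 0), (v, 1)):
            # (coef * P[2] - P[k]) . [X, Y, Z, 1] = 0
            r = [coef * P[2][j] - P[k][j] for j in range(4)]
            rows.append(r[:3])
            rhs.append(-r[3])
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return cramer3(AtA, Atb)


def weighted_average(points, weights):
    """Combine several 3D estimates, e.g. from different camera pairs."""
    s = sum(weights)
    return [sum(w * p[i] for p, w in zip(points, weights)) / s for i in range(3)]
```

For two cameras with identical intrinsics and a one-unit baseline along x, the pixels (400, 272) and (240, 272) triangulate back to the world point (0.5, 0.2, 5).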

For a single static camera covering a fixed and limited area, the ideal pinhole camera model may prove beneficial, as it is the simplest case of homography estimation. However, if the system has more than one camera, as most games and sports require, the multi-camera homography method is more suitable than the ideal single pinhole camera model. Tsai’s calibration method is preferred when a wide area is to be covered; it can handle both coplanar and non-coplanar key points and allows internal and external parameters to be calibrated separately. However, it is less suitable for ceiling-mounted camera systems, as it requires 3D coordinates for calibration while the field seen by a ceiling-mounted camera is a 2D plane.

2.4 Ground truth

In tracking, ground truth refers to the actual 2D or 3D position or contour of the object in every frame of the video sequence (Zhang et al. 2010). The ground truth data of a video sequence is the standard for quantitative evaluation of any tracking algorithm, so capturing it is an important step after camera calibration and registration. Usually an operator obtains the data manually by observing every frame and selecting the region or points, which is a cumbersome and time-consuming task. Software such as Video Performance Evaluation Resource (ViPER) (Chakraborty and Meher 2013b; Li et al. 2005), Context Aware Vision using image based Active Recognition (CAVIAR) (Li et al. 2005) and Open Development for Video Surveillance (ODViS) (Li et al. 2005) provides an interactive framework for defining ground truth. Data generated by a tracking system is compared with the ground truth for performance evaluation or for detecting rule infringements.
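Comparing tracker output with ground truth to obtain precision, recall and F-score can be sketched per frame: a detection within a pixel tolerance of the annotated ball counts as a true positive, a detection with no matching ball counts as a false positive, and an annotated ball without a matching detection counts as a false negative. The matching rule (a distant detection counts as both a false positive and a false negative), the 5-pixel tolerance and the function name are assumptions for illustration.

```python
import math


def evaluate(detections, ground_truth, tol=5.0):
    """detections / ground_truth: dict frame_id -> (x, y), or None if no ball is detected / present."""
    tp = fp = fn = 0
    for frame in set(detections) | set(ground_truth):
        det, gt = detections.get(frame), ground_truth.get(frame)
        if det is not None and gt is not None and math.dist(det, gt) <= tol:
            tp += 1
        else:
            if det is not None:
                fp += 1  # detection that matches no ground-truth ball
            if gt is not None:
                fn += 1  # ground-truth ball that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f_score
```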

2.5 Foreground extraction

The term ‘foreground’ refers to a set of pixel intensities whose positions change drastically in the current frame with respect to the previous frame. Such pixels form an object that appears to move from frame to frame. Conversely, ‘background’ refers to a set of pixel intensity values that either do not change their positions or move by only a few pixels per frame. Foreground extraction assumes that the object to be tracked is part of the foreground and that detection, and eventually tracking, is not feasible without separating it from the background. Thus, foreground detection and background detection both aim at object (target) detection.

The target object, the ball, is played with by players on a ground or court while spectators watch the game. If visible in a video sequence, the spectators are the biggest source of noise and distortion. Thus, some approaches (Barnard and Odobez 2004) introduce playfield extraction as the first step of foreground extraction, building a mask to prune the noisy stands region; the non-field regions inside the playfield are then extracted as foreground. The mask is generally built from the dominant color of the playfield, sometimes coupled with histogram back-projection (Dearden et al. 2006). Masks built by fusing geometry-based and dominant-color-based masks are also used (Ren et al. 2008). Court masks can also be generated by RANSAC-based or Hough-transform-based detection of court boundary lines (Naidoo and Tapamo 2006) combined with a dominant-color method (Chang et al. 2009). Sometimes color alone is not sufficient, so multiple cues, including color, motion (correlation between successive frames) and shape, are used to build the playfield segmentor (Xing et al. 2011). In long-shot frames, however, the small size of the ball may hamper detection. Thus, a few methods pre-process frames using a Saliency Map (SM) and then construct a Saliency Binary Map (SBM) to facilitate detection (Chakraborty and Meher 2013a). In BSV, the above approaches are used to retain the frames containing a field view. The color ranges of the field, lines, ball and player uniforms are provided as input to the algorithm that performs this sorting (Yu et al. 2006).
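As a rough illustration of a dominant-color playfield mask, the sketch below takes the peak of a hue histogram over saturated pixels as the field color and marks pixels whose hue lies near that peak. This is a simple stand-in assumed for illustration, not the GMM or histogram back-projection methods cited above; the function name, the 36-bin histogram, the hue tolerance and the minimum saturation are hypothetical choices.

```python
import colorsys


def dominant_hue_mask(image, n_bins=36, hue_tol=1, min_sat=0.2):
    """image: list of rows of (r, g, b) values in 0..255.
    Returns (mask, peak_bin); mask is True where the pixel hue lies within
    hue_tol bins of the most frequent hue bin (assumed to be the playfield)."""
    hsv = [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row] for row in image]

    def hue_bin(h):
        return min(int(h * n_bins), n_bins - 1)

    hist = [0] * n_bins
    for row in hsv:
        for h, s, _ in row:
            if s >= min_sat:  # ignore grey/white pixels (lines, white balls) when finding the peak
                hist[hue_bin(h)] += 1
    peak = max(range(n_bins), key=hist.__getitem__)

    def near_peak(h, s):
        if s < min_sat:
            return False
        d = abs(hue_bin(h) - peak)
        return min(d, n_bins - d) <= hue_tol  # hue is circular

    mask = [[near_peak(h, s) for h, s, _ in row] for row in hsv]
    return mask, peak
```

Pixels outside the mask but inside the playfield region (players, ball, lines) are then the foreground candidates.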

Playfield extraction is not always part of the foreground extraction process; sometimes background subtraction is used instead. It requires building an adaptive background model from which foreground objects are extracted. The model can be built either beforehand or at runtime. Two cues are generally used to decide whether an object is foreground: motion and color.

Using motion as a feature involves generating a background model based on a per-pixel Gaussian mixture model (GMM) (D’Orazio et al. 2009a,b; Li et al. 2005; Ren et al. 2008, 2009). The model must be updated to overcome errors in the initial background registration caused by lighting variations; algorithms such as the running average may be used to update it efficiently (Ren et al. 2008). Background subtraction algorithms such as recursive approximate median filtering (Chakraborty and Meher 2012b; Ekinci and Gokmen 2008) and successive frame differencing (Chakraborty and Meher 2011, 2013b; Chen and Wang 2007; Chen et al. 2007; Conaire et al. 2009; Ishii et al. 2007; Kokaram et al. 2005; Zhang et al. 2010) use a sequence of successive frames to distinguish moving pixels from static ones.
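The two background-update rules and the differencing step mentioned above can be sketched on grayscale frames stored as nested lists. The running average blends each new frame into the background as \(B_t = (1-\alpha)B_{t-1} + \alpha I_t\); the approximate median moves each background pixel one intensity step toward the current frame; thresholded absolute differences then give a binary foreground mask. The learning rate, the threshold of 25 and the function names are hypothetical.

```python
def running_average(bg, frame, alpha=0.05):
    """B_t = (1 - alpha) * B_{t-1} + alpha * I_t, applied per pixel."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(br, fr)] for br, fr in zip(bg, frame)]


def approx_median(bg, frame):
    """Approximate median filter: move each background pixel one step toward the frame."""
    return [[b + (f > b) - (f < b) for b, f in zip(br, fr)] for br, fr in zip(bg, frame)]


def foreground(a, b, thresh=25):
    """Binary mask of pixels whose absolute difference exceeds thresh.
    Use a=frame, b=background for background subtraction, or two successive
    frames for frame differencing."""
    return [[abs(x - y) > thresh for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]
```

A smaller `alpha` makes the running-average background more stable against a briefly passing ball but slower to follow lighting changes; the approximate median adapts at a fixed rate of one intensity level per frame regardless of how large the difference is.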

Using dominant color as a feature, statistical clustering such as a GMM was used to build a playfield color model based on the field color (Barnard and Odobez 2004; Choi and Seo 2004; Kasiri-Bidhendi and Safabakhsh 2009; Liang et al. 2007; Zhu et al. 2008). Dominant-color pixels may be used to build a binary court mask (Chang et al. 2009; Hossein-Khani et al. 2011; Hu et al. 2011; Kim and Kim 2009; Tong et al. 2004), while the non-dominant pixels form the player and ball candidates. The model needs to be updated periodically to counter lighting variations (Kelly et al. 2010). Model adaptation or parameter estimation may be carried out by maximum a posteriori (MAP) estimation or expectation–maximization (EM) (Liang et al. 2007). Foreground extraction can also be implemented by forming a lookup table of ball colors (Liu et al. 2006) and comparing each pixel with it using a distance measure.

The resulting image is converted into binary form so that the ball regions are clearly visible. Logical operations (Ishii et al. 2007; Kokaram et al. 2005; Pingali et al. 2000, 2002; Ren et al. 2008) may also help here. To remove noise and clutter, morphological operations such as opening (Batz et al. 2009; Chakraborty and Meher 2012a, 2013a, b; Chen et al. 2012; Kittler et al. 2005; Zaveri et al. 2004), closing (Chakraborty and Meher 2012b; Ekinci and Gokmen 2008; Hossein-Khani et al. 2011; Liu et al. 2006; Pingali et al. 2002; Ren et al. 2008; Shum and Komura 2005) and top-hat (Ekinci and Gokmen 2008) may be applied to the binary images. The number of false positives is thus reduced by morphological operations, which are sometimes assisted by region growing (Liang et al. 2007; Yu et al. 2004b). The resulting blobs are labeled to give them an identity: connected component labeling (Figueroa et al. 2004; Kim and Kim 2009) is performed, and a minimum bounding rectangle (MBR) may be fitted to each blob (Naidoo and Tapamo 2006).
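The clean-up and labeling steps above can be sketched on a binary image stored as nested lists of booleans: a morphological opening (erosion followed by dilation with a 3×3 square structuring element) removes isolated noise pixels, and an 8-connected breadth-first labeling returns each blob's area and MBR. The structuring element, the convention that pixels outside the image count as background, and the function names are assumptions for illustration.

```python
from collections import deque

NEIGHBOURS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _morph(img, combine):
    h, w = len(img), len(img[0])
    return [[combine(0 <= y + dy < h and 0 <= x + dx < w and img[y + dy][x + dx]
                     for dy, dx in NEIGHBOURS)
             for x in range(w)] for y in range(h)]


def erode(img):
    return _morph(img, all)   # pixel survives only if its whole 3x3 neighbourhood is set


def dilate(img):
    return _morph(img, any)   # pixel is set if any 3x3 neighbour is set


def opening(img):
    return dilate(erode(img))


def label_blobs(img):
    """8-connected component labeling; returns [(area, (xmin, ymin, xmax, ymax)), ...]."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if not img[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for dy, dx in NEIGHBOURS:
                    ny, nx = cy + dy, cx + dx
                    if 0 <= ny < h and 0 <= nx < w and img[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            blobs.append((len(pixels), (min(xs), min(ys), max(xs), max(ys))))
    return blobs
```

Note that opening with a 3×3 element also deletes any blob narrower than three pixels, so for a distant ball only a few pixels wide a smaller structuring element, or closing instead of opening, may be the safer choice.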

As with the players, tracking the ball is important because the players’ intentions cannot always be judged from their movements alone (Ohno et al. 1999).

3 Features and methods for ball detection

This section discusses the various features and methods used to detect the ball among the blobs obtained from the foreground extraction stage. A basic system for ball detection is shown in Fig. 7.

The initial stage involves modeling the ball region, followed by extracting features from samples and storing (or mapping) them for later use. The ball is modeled at different distances, under different illuminations and, for non-spherical balls, from different angles; this may also be required for multi-colored or multi-patterned balls. Some works call this the training stage. Either a similarity measure or a classifier is then chosen, which uses the features extracted from the blobs of the sequence to classify regions as ball or non-ball. This is sometimes referred to as the testing phase. The following subsections present the features and methods used by researchers to detect the ball from the blob regions.

3.1 Color

In most sports played worldwide, the ball has a color distinct from the playfield or ground, so color is one of the important cues for detecting a fast-moving ball. The detected blobs are searched for clusters of pixels of the specific color and, if such clusters are found, may be classified as a ball based on other criteria such as shape. The ball pixels are obtained manually beforehand during training. A ball color sieve may be designed to filter out objects with too few or no ball-color pixels (Ekinci and Gokmen 2008; Yu et al. 2003b, 2004b, 2005, 2007b, 2009). A general condition for segmenting ball candidate pixels in normalized color space is presented in Eq. (5) (Huang et al. 2008; Liang et al. 2005, 2007; Liu et al. 2006).

Here, B is the binary image, (x, y) are pixel locations, r(x, y) and b(x, y) denote the normalized red and blue components respectively, and I(x, y) is the luminance value. The empirical values of a and b are selected heuristically from the ball color during training. Detection of a blob also depends on the ratio of ball-color pixels to the total number of blob pixels (Ren et al. 2008; Yu et al. 2006). A template histogram (Wang et al. 2014a), formed by concatenating the histograms of the H, S and V channels obtained separately during training, has been used for comparison with the current histograms. Table 2 summarizes the ball detection results of a few research works.
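The ball-color-ratio test described above can be sketched as follows. Each pixel is converted to normalized chromaticity \(r = R/(R+G+B)\), \(b = B/(R+G+B)\) and a luminance value, classified as ball-colored by simple range checks, and the blob is scored by the fraction of ball-colored pixels. The box-shaped ranges, the BT.601 luma weights and all names are hypothetical; this is not a reproduction of Eq. (5), only an illustration that uses the same quantities.

```python
def chromaticity(r, g, b):
    """Normalized red and blue components r/(r+g+b) and b/(r+g+b)."""
    s = r + g + b
    return (r / s, b / s) if s else (0.0, 0.0)


def is_ball_color(pixel, r_range, b_range, min_lum):
    """Hypothetical ball-color test on normalized r, b and luminance."""
    r, g, b = pixel
    nr, nb = chromaticity(r, g, b)
    lum = 0.299 * r + 0.587 * g + 0.114 * b  # ITU-R BT.601 luma, used here as I(x, y)
    return r_range[0] <= nr <= r_range[1] and b_range[0] <= nb <= b_range[1] and lum >= min_lum


def ball_color_ratio(blob_pixels, **thresholds):
    """Fraction of a blob's pixels that pass the ball-color test."""
    hits = sum(is_ball_color(p, **thresholds) for p in blob_pixels)
    return hits / len(blob_pixels) if blob_pixels else 0.0
```

A blob would then be kept as a ball candidate if its ratio exceeds a threshold learned during training, in the spirit of the color sieve described above.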

However, the color of a ball is strongly affected by many factors. These include illumination, sunlight, floodlight, weather conditions, the type of lighting in an indoor court, ball speed, player uniforms, background objects of similar color, and an overly bright or overly dark background. Together, these factors make color alone unreliable for detection.

3.2 Size

Size is a key feature. The expected ball size in pixels must be estimated from the ball's real-world dimensions before the frames are searched. The size of an object is given either by its total number of pixels, determined through 8-connectivity (Ekinci and Gokmen 2008), or by the size of its bounding box. The admissible size range limits the length and width of the object's minimum bounding rectangle (MBR). The average player height can be used to determine the ball size range for each frame F, using a function \(R, R(F)=[R_{\min } , R_{\max } ]=[h/7,h/3]\), where h is the average height of all players in F (Chen et al. 2009; Yu et al. 2003a). Estimating the ball size from player height is less accurate than estimating it from the center ellipse, the goalmouth (Yu et al. 2003b, 2004c, 2006), court lines (Chen et al. 2012) or the tennis net (Yu et al. 2004b). The ball size range may be given as in Eq. (6).

The result replaces the ball size b(i, j), where \(\Delta _1 \) and \(\Delta _2 \) are extensions for error tolerance. Because the ball size may vary from frame to frame, Chakraborty and Meher (2011, 2012a, b, 2013a) adopted suitable pixel ranges for detecting a ball candidate in videos of varying frame sizes. Homography can also be used to estimate the ball size (Yu et al. 2007b, 2009). Ishii et al. (2007) implemented real-time ball detection using a ball-centered search, which took an average of 3.08 ms per frame. Chen et al. (2007) obtained an accuracy of 92.43%.
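The player-height rule \(R(F)=[h/7,h/3]\) can be sketched as a simple size filter on candidate MBRs. This is a minimal sketch. The way \(\Delta _1\) and \(\Delta _2\) widen the range here (subtracting \(\Delta _1\) from the lower bound and adding \(\Delta _2\) to the upper bound) is an assumption, not necessarily the exact form of Eq. (6). The function names are hypothetical.

```python
def ball_size_range(player_heights, delta1=0.0, delta2=0.0):
    """Ball size range R(F) = [h/7, h/3] from the mean player height h in frame F.

    The range is widened by error tolerances delta1 and delta2 (assumed form).
    """
    if not player_heights:
        raise ValueError("at least one player height is required")
    h = sum(player_heights) / len(player_heights)
    return h / 7 - delta1, h / 3 + delta2

def fits_size_range(mbr_width, mbr_height, size_range):
    """True if both sides of a candidate's minimum bounding rectangle lie in the range."""
    lo, hi = size_range
    return lo <= mbr_width <= hi and lo <= mbr_height <= hi
```

With players averaging 63 pixels in height, the admissible ball range is [9, 21] pixels. A 12 x 10 candidate is kept, while a 5 x 5 speck is rejected.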

3.3 Shape

Shape is another important feature for ball detection. It is used either to detect the ball directly or to remove non-ball objects, and it comprises several sub-features. Table 3 briefly compiles the different shape sub-features, their mathematical representations, and their typical values or ranges. Table 3 also lists the performance achieved by published algorithms using these shape features, along with their shortcomings, if any.

The apparent shape of the ball changes with the type of camera, its frame rate, ball speed, background, etc. Despite these problems, shape is the most widely used feature for ball detection.
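Circularity, 4πA/P², is one commonly used shape sub-feature. Its value is 1 for a perfect circle and smaller for elongated or irregular blobs. Below is a minimal sketch that computes it for a polygonal blob contour using the shoelace area. The acceptance threshold a detector would apply is left open. Definitions in individual works (e.g. pixel-count area, or a different perimeter estimate) may differ from this one.

```python
import math

def circularity(contour):
    """Circularity 4*pi*A / P**2 of a closed polygonal contour [(x, y), ...]."""
    n = len(contour)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0           # shoelace term
        perimeter += math.hypot(x1 - x0, y1 - y0)  # edge length
    area = abs(twice_area) / 2.0
    return 4.0 * math.pi * area / perimeter ** 2
```

A square scores π/4 ≈ 0.785, whereas a finely sampled circle scores close to 1. A motion-blurred ball stretched into an ellipse falls somewhere in between.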

3.4 Position/proximity to expected position

Position, or proximity to the expected position, is used when there are multiple detections. The ball candidate is expected to be found only at the expected position (Pingali et al. 2000; Teachabarikiti et al. 2010), and candidates far from the hitting position must be discarded (Yu et al. 2004b). However, accurate automatic detection of the hitting direction/position remains a major concern. Nevertheless, the position feature plays an important role in identifying true ball candidates. Because the camera always follows the ball, the ball is most likely to appear within the central \(2/3\) of the frame width and the bottom \(2/3\) of the frame height (Zhu et al. 2008).
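Both position cues can be sketched as simple geometric gates. One gate is the likely-region heuristic of Zhu et al. (2008), and the other is a distance check against an expected (e.g. hitting) position. This is a minimal sketch. It assumes image coordinates with y growing downwards. The function names and the `max_dist` threshold are hypothetical.

```python
import math

def in_likely_ball_region(x, y, frame_width, frame_height):
    """True if (x, y) is in the central 2/3 of the width and the bottom 2/3 of the height."""
    x_min = frame_width / 6.0          # central 2/3 leaves 1/6 of the width on each side
    x_max = frame_width * 5.0 / 6.0
    y_min = frame_height / 3.0         # bottom 2/3 starts one third down from the top
    return x_min <= x <= x_max and y_min <= y <= frame_height

def near_expected(candidate, expected, max_dist):
    """Discard candidates farther than max_dist pixels from the expected position."""
    return math.dist(candidate, expected) <= max_dist
```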

3.5 Template matching

This method requires a ball-like template to be formed beforehand. Template matching (TM) is performed at the player contour. It is then repeated along a straight-line path of the ball moving radially away from the player, and at a predicted position. TM is generally performed using the normalized cross-correlation between the template obtained from the first frame and the detected ball candidates (Ariki et al. 2008). The gray correlation coefficient (Tong et al. 2004) and the correlation measure (Leo et al. 2008) follow the same lines as Ariki et al. (2008). An adaptive TM method (Ishii et al. 2007) has also been used; it is based on ball movement and reduces the search area as much as possible. Probability-map-based TM (D’Orazio et al. 2009a,b) uses past information on ball motion. Positive and negative ball image templates may also be used to train a support vector machine (SVM) classifier for ball detection (Ancona et al. 2003). However, TM may give false positives when objects of similar appearance are present in the current frame. Examples include a player's head, socks or shoes, a ball-like pattern on a jersey, the scoreboard and the stands. Frequently updating the template is one way to reduce the number of false positives.
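Normalized cross-correlation, the similarity most TM variants above rely on, can be sketched in NumPy as follows. This is a minimal, deliberately unoptimized sketch that slides the template exhaustively over a grayscale image. A practical system would restrict the search to a window around the predicted position, or use an optimized routine such as OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED`.

```python
import numpy as np

def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equally sized arrays, in [-1, 1]."""
    p = patch.astype(np.float64) - patch.mean()
    t = template.astype(np.float64) - template.mean()
    denom = np.sqrt((p * p).sum() * (t * t).sum())
    return float((p * t).sum() / denom) if denom > 0 else 0.0

def best_match(image, template):
    """Slide the template over the image; return ((row, col), score) of the best match."""
    th, tw = template.shape
    best_score, best_pos = -2.0, (0, 0)
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            score = ncc(image[r:r + th, c:c + tw], template)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score
```

Because NCC is invariant to brightness offset and gain, a ball template still matches under uniform lighting changes. It does not help when a head or shoe looks ball-like, which is the false-positive problem noted above.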

3.6 Circle and ellipse detection

This subsection discusses a few algorithms dedicated to circle and ellipse detection, viz. the circle Hough transform (CHT), the modified circle Hough transform (MCHT) and ellipse fitting.

CHT extends the basic idea of the Hough transform from line detection to circle detection. The original CHT is robust to occlusion and noise but is computationally expensive. According to the comparison in Janssen et al. (2012), the speed of modified CHT techniques depends on the number of edge pixels and the size of the parameter space. A football has very few edge pixels, and its size does not change much. For these reasons, the original CHT was found more suitable for real-time use than modified CHT. In Wang et al. (2014a), color-histogram-based TM was used to find ball candidates. Pallavi et al. (2008) applied CHT to the obtained ball candidate regions.

MCHT uses either directional CHT with simple convolutions (D’Orazio et al. 2004, 2009a,b) or an improved circle response (Birbach and Frese 2009; Birbach et al. 2011; Cigliano et al. 2015). The former method requires knowledge of the ball's appearance (illumination conditions and ball dimensions) to prepare masks for the ball to be detected. It achieves high detection rates when the ball is fully visible, at a low computational cost. Its main drawback is that it fails to handle occlusion and the absence of the ball from the frame. Finally, a trained neural network (NN) was used to classify each candidate as ball or no ball. The latter method is illumination invariant and needs neither tuning parameters nor hard thresholds. D’Orazio et al. (2004) implemented a modified Atherton algorithm that detected the ball in 97% of cases under natural light conditions, at a processing speed of 0.3 s/frame. Birbach and Frese (2009) achieved 69.4% ball detection on a 417-frame stereo sequence with visible balls forming 32 trajectories. However, CHT requires prior knowledge of the radius of the ball to be detected. It therefore cannot be used across multiple shot types without modifying the radius. CHT with NN fails to detect the ball when non-ball objects look like the ball.
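The radius dependence of CHT is easiest to see in a fixed-radius implementation. In such an implementation, every edge pixel votes for all centres lying one known radius away from it. Below is a minimal sketch of the original (non-directional) CHT voting for a single known radius. The function name and the angular sampling `n_angles` are illustrative choices, not taken from the cited works.

```python
import numpy as np

def circle_hough_fixed_radius(edge_points, radius, shape, n_angles=360):
    """Vote for circle centres of a known radius.

    edge_points: iterable of (row, col) edge pixels; shape: (rows, cols) of the image.
    Returns the accumulator and the (row, col) of its peak.
    """
    acc = np.zeros(shape, dtype=np.int32)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    dr = np.rint(radius * np.sin(thetas)).astype(int)
    dc = np.rint(radius * np.cos(thetas)).astype(int)
    offsets = set(zip(dr.tolist(), dc.tolist()))  # distinct pixel offsets on the circle
    for (r, c) in edge_points:
        for (orow, ocol) in offsets:
            cr, cc = r - orow, c - ocol          # candidate centre for this edge pixel
            if 0 <= cr < shape[0] and 0 <= cc < shape[1]:
                acc[cr, cc] += 1
    peak = np.unravel_index(np.argmax(acc), acc.shape)
    return acc, (int(peak[0]), int(peak[1]))
```

If the ball's apparent radius changes with the shot type, the votes no longer concentrate at one cell. Handling several radii requires a 3D accumulator, which is the computational cost the text refers to.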

If the ball moves very fast or the frame rate is low, the ball appears as a blurred ellipse rather than a circle. Generally, an ellipse is fitted to the blob, with its major and minor axes passing through the ball center, using a criterion such as least squares (Yan et al. 2005). A quality measure of the fit can also be extracted, as in Christmas et al. (2007). Finally, a trained classifier such as Adaboost (Kittler et al. 2005) or SVM (Christmas et al. 2007) classifies the blobs as ball or non-ball. Some approaches, such as Yan et al. (2005), used the mean absolute difference between the gradient direction and the normal direction at all sample points.
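The least-squares idea can be sketched with a plain algebraic conic fit, \(Ax^2+Bxy+Cy^2+Dx+Ey=1\), solved with `numpy.linalg.lstsq`. The ellipse centre and the major/minor axis ratio (a simple elongation cue for motion blur) can then be read from the coefficients. This is an unconstrained fit and not necessarily the one used by Yan et al. (2005). It assumes the ellipse does not pass through the image origin, and it can return a hyperbola for noisy or partial blobs. Constrained alternatives such as OpenCV's `cv2.fitEllipse` are more robust.

```python
import numpy as np

def fit_ellipse(xs, ys):
    """Algebraic least-squares fit of A x^2 + B xy + C y^2 + D x + E y = 1.

    Returns the centre (x0, y0) and the major/minor axis ratio.
    """
    xs = np.asarray(xs, dtype=np.float64)
    ys = np.asarray(ys, dtype=np.float64)
    design = np.column_stack([xs * xs, xs * ys, ys * ys, xs, ys])
    (a, b, c, d, e), *_ = np.linalg.lstsq(design, np.ones_like(xs), rcond=None)
    # Centre: the point where the gradient of the conic vanishes.
    x0, y0 = np.linalg.solve([[2 * a, b], [b, 2 * c]], [-d, -e])
    # Semi-axes are proportional to 1/sqrt(|eigenvalue|) of the quadratic part.
    eig = np.abs(np.linalg.eigvalsh([[a, b / 2], [b / 2, c]]))
    ratio = float(np.sqrt(eig.max() / eig.min()))
    return (float(x0), float(y0)), ratio
```

An axis ratio near 1 suggests a sharp, round ball. A larger ratio suggests a blurred, fast-moving ball, or a non-ball blob that the subsequent classifier must reject.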

3.7 Motion

Once the player region is detected, the ball motion region is searched radially outward from the players, at the estimated position (Ohno et al. 2000, 1999). Wong and Dooley (2010) formulated the motion condition given in Eq. (7).

where M indicates whether or not the ball candidate is in motion, \(t_M \) is a preset threshold, and \(O_{{ diff}} \) is the Euclidean distance between the centroids of the blob in the current and previous frames. Pallavi et al. (2008) used motion-based detection for non-ball filtering in medium shots in BSV.
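The motion condition can be sketched directly from the definitions of \(O_{{ diff}}\) and \(t_M\). This is a minimal sketch. It assumes that M = 1 when the centroid displacement exceeds the threshold, which is the natural reading of the definitions; the exact form of Eq. (7) may differ. The function name is hypothetical.

```python
import math

def is_moving(centroid_prev, centroid_curr, t_m):
    """Motion condition M: 1 if the blob centroid moved more than t_m pixels, else 0."""
    o_diff = math.dist(centroid_prev, centroid_curr)  # Euclidean distance O_diff
    return 1 if o_diff > t_m else 0
```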

3.8 Trajectory

Trajectory-based detection uses the difference between the predicted and the actual trajectory. The condition T indicates whether or not the ball candidate follows the predicted trajectory. It was proposed by Wong and Dooley (2010) and is given in Eq. (8).

where \(t_T \) is a preset threshold and \(L_{{ diff}} \) is the Euclidean distance between the actual and predicted locations of the blob. The method is shown in Fig. 8, where the circles represent the ball positions along a trajectory in successive frames.

In frames 3 and 4, the red (upper) circles represent the actual ball trajectory, whereas the green (lower) circles belong to the predicted ball trajectory. The predicted locations are linear extrapolations of the blob positions in the previous frames 1 and 2. Ideally, the actual and predicted trajectory positions should match exactly. Pallavi et al. (2008) performed non-ball filtering in long shots using a trajectory obtained by dynamic programming. They achieved ball detection with an average recall of 94.2% and an average precision of 90.72%. This algorithm does not require pre-processing of the video and is efficient even under poor playfield conditions. However, it could not detect the ball when the ball was only partially visible in medium shots. In addition, the routes could be estimated only for medium and long shots, not for the whole match.
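The Fig. 8 scheme, linear extrapolation from frames 1 and 2 followed by the T check, can be sketched as follows. This is a minimal sketch. It assumes that T = 1 when \(L_{{ diff}}\) is below \(t_T\); the exact form of Eq. (8) may differ. Both function names are hypothetical.

```python
import math

def predict_linear(p1, p2, steps=1):
    """Extrapolate the position `steps` frames after p2, from positions in two consecutive frames."""
    vx, vy = p2[0] - p1[0], p2[1] - p1[1]  # per-frame displacement
    return (p2[0] + steps * vx, p2[1] + steps * vy)

def follows_trajectory(actual, predicted, t_t):
    """Trajectory condition T: 1 if the actual location is within t_t pixels of the prediction."""
    l_diff = math.dist(actual, predicted)  # Euclidean distance L_diff
    return 1 if l_diff < t_t else 0
```

For blob positions (10, 20) and (14, 23) in frames 1 and 2, the predicted positions for frames 3 and 4 are (18, 26) and (22, 29). A candidate at (19, 26) in frame 3 passes a 2-pixel threshold.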

3.9 Gradient directions

After an ellipse is fitted to a blob, the blob may be a ball if the gradient along its boundary is normal to the ellipse and points towards the ellipse center (Kittler et al. 2005). On this basis, the blobs are classified using an Adaboost classifier. Blobs are also classified as ball or no-ball using the gradient directions taken at the blob boundaries (Almajai et al. 2012; Huang et al. 2011).
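The gradient-direction cue can be turned into a single score: the mean absolute angle between the image gradient at each boundary point and the inward normal. A score near 0 is ball-like, and the score could serve as one input feature for a classifier such as Adaboost. This is a minimal sketch. For simplicity it assumes a circular blob, so the inward normal is the direction towards the centre; for a true ellipse the normal generally differs from the radial direction. The function name is hypothetical.

```python
import math

def mean_gradient_deviation(boundary_points, gradients, centre):
    """Mean absolute angle (radians) between each boundary gradient and the inward normal.

    Uses a circle approximation: the inward normal points from the boundary point to `centre`.
    """
    total = 0.0
    for (px, py), (gx, gy) in zip(boundary_points, gradients):
        nx, ny = centre[0] - px, centre[1] - py                 # inward normal direction
        angle = math.atan2(gx * ny - gy * nx, gx * nx + gy * ny)  # signed angle from g to n
        total += abs(angle)
    return total / len(boundary_points)
```

For a bright ball on a darker background, intensity increases towards the centre, so the gradients point inwards and the score is close to 0.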

3.10 Training based detector

Instead of detecting the ball from a single feature, a multi-feature Bayesian classifier may be used to classify objects as ball or non-ball. The features generally used are of two types, viz. appearance features and distance features. Appearance features (Yu et al. 2003b, 2004b, c) may include the surroundings of the candidate, color, shape, size, circularity, etc., whereas distance features (Yu et al. 2006, 2007b, 2009) include separation. Christmas et al. (2007) used an SVM classifier trained on blob color and shape features.
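A multi-feature Bayesian classifier can be sketched, in its simplest form, as Gaussian naive Bayes over per-blob feature vectors. Naive Bayes assumes the features are independent given the class, which is a simplification; the cited works may use a different Bayesian model. The two-feature vectors below (size in pixels, circularity) are hypothetical.

```python
import numpy as np

class GaussianNaiveBayes:
    """Minimal Gaussian naive Bayes for ball / non-ball classification of blob features."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=np.float64), np.asarray(y)
        self.classes = np.unique(y)
        self.means = np.array([X[y == k].mean(axis=0) for k in self.classes])
        self.vars = np.array([X[y == k].var(axis=0) + 1e-9 for k in self.classes])
        self.log_priors = np.log(np.array([np.mean(y == k) for k in self.classes]))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=np.float64)
        # Per-class Gaussian log-likelihood, summed over (assumed independent) features.
        diff2 = (X[:, None, :] - self.means[None, :, :]) ** 2
        ll = -0.5 * (np.log(2 * np.pi * self.vars)[None, :, :]
                     + diff2 / self.vars[None, :, :]).sum(axis=2)
        return self.classes[np.argmax(ll + self.log_priors[None, :], axis=1)]
```

In practice, each feature used by the cited works (color ratio, circularity, size, separation, etc.) would be one column of `X`.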

3.11 Speed/velocity

Speed is an important cue for ball detection and for removing false candidates (Ishii et al. 2007). The ball is the fastest object on the field, and its image shows motion blur proportional to its speed. Suppose a ball with a diameter of 22 cm moves at a maximum speed of 100 km/h and is filmed at 30 fps. It then travels about 93 cm on the ground during a single frame interval (27.8 m/s divided by 30), i.e. more than four times its own diameter. The ball therefore moves several times its apparent diameter between consecutive frames.
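The per-frame displacement in the example above follows from a unit conversion. The short sketch below reproduces it. The same quantity, converted to pixels, bounds how far a search window must extend between frames.

```python
def displacement_per_frame(speed_kmh, fps):
    """Distance in metres covered by the ball between two consecutive frames."""
    return speed_kmh * 1000.0 / 3600.0 / fps

d = displacement_per_frame(100, 30)
print(f"{d:.3f} m per frame, {d / 0.22:.1f} ball diameters")
```

This prints `0.926 m per frame, 4.2 ball diameters`.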

4 Ball tracking techniques

The main purpose of a ball-tracking algorithm is to establish correspondences between detected ball candidates across adjacent frames, forming a continuous trajectory. Ball tracking also helps overcome misdetection and localize a hidden or missing ball. The following subsections describe the various ball tracking approaches, their implementation, and their strengths and weaknesses.

4.1 Kalman filter

This approach estimates the optimal state of the ball in the current frame, given its initial (previous) state and the noise statistics. The Kalman filter (KF) works well for linear systems with Gaussian-distributed states and noise. For non-Gaussian object states and non-linear systems, however, the particle filter (PF) is preferred for state estimation.

Most early works employed the KF to estimate ball velocity and thereby predict the ball position, i.e. the detection in each frame. Ren et al. (2006) also modeled ball size, color and longevity to improve the detection process. They employed occlusion reasoning and back-tracking to recover a ball occluded by players and to remove false alarms. Ren et al. (2006) also improved the robustness and continuity of the tracking system using hysteresis-based thresholding. Ishii et al. (2007) likewise used the KF-predicted vector to improve the robustness and continuity of ball tracking. They modeled the position, velocity and acceleration of the ball in 2D or 3D space. Chen and Zhang (2006) combined an incremental Bayesian algorithm (EM) with the KF to update the dynamic motion and appearance parameters of the ball. Ren et al. (2009) used a multi-camera approach similar to Ren et al. (2006) for ball tracking in 3D space.

During ball tracking, if players occlude the ball for a long time, a conventional KF predicts the ball position poorly and the error grows. A dynamic KF (DKF) has been used to reduce the error under such dynamic conditions (Kim and Kim 2009). It uses information about the occluding player and controls the velocity component of the state vector. However, the framework performs poorly when players crowd around the ball. During any game, the appearance of the ball keeps changing due to various environmental factors. A large change in appearance causes the KF to fail, because the reference statistical appearance model no longer matches the ball. Such changes may be incorporated into the KF observations at regular intervals by adaptively updating the ball template (Liang et al. 2005, 2007; Liu et al. 2006). The KF predicts the location of the ball in the next frame and filters the tracking result in the current frame. The algorithm runs fast: in most cases the ball is detected around its expected position, so only a small portion of each frame needs to be processed.
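The predict/update cycle described above can be sketched with a constant-velocity KF on the image-plane ball position. The predicted position would centre the small search window, which is why only part of each frame needs processing. This is a minimal sketch, not the filter of any specific cited work. The state [x, y, vx, vy], the position-only measurement and the noise levels `q` and `r` are all assumptions; several cited works also model acceleration or 3D state.

```python
import numpy as np

class ConstantVelocityKF:
    """2D Kalman filter with state [x, y, vx, vy] and position-only measurements."""

    def __init__(self, x, y, q=1.0, r=4.0):
        self.s = np.array([x, y, 0.0, 0.0])             # state estimate
        self.P = np.eye(4) * 100.0                       # large initial uncertainty
        self.F = np.array([[1, 0, 1, 0],
                           [0, 1, 0, 1],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)   # one-frame transition
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)   # only position is observed
        self.Q = np.eye(4) * q                           # process noise covariance
        self.R = np.eye(2) * r                           # measurement noise covariance

    def predict(self):
        """Propagate the state one frame; return the predicted ball position."""
        self.s = self.F @ self.s
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.s[:2].copy()

    def update(self, zx, zy):
        """Correct the prediction with the detected ball position (zx, zy)."""
        innov = np.array([zx, zy]) - self.H @ self.s
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)         # Kalman gain
        self.s = self.s + K @ innov
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.s[:2].copy()
```

When the ball is occluded, one would call `predict()` without `update()`. The uncertainty `P` then grows each frame, which mirrors the error growth under long occlusions noted above.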

Ball motion in sports can be broadly classified as linear or non-linear, and a single linear filter cannot track the ball under non-linear motion. A few researchers used multiple-filter banks that handle both types of motion (Zaveri et al. 2004). These banks track the ball in both maneuvering and non-maneuvering states, using a fast switching method. They did not use any a priori information about the object dynamics. The system was robust to noisy backgrounds and to changes in ball size due to camera zoom. However, the datasets used were very small, of merely 100 and 32 frames. Desai et al. (2005) implemented a similar multi-filter-bank tracking algorithm on a Xilinx Virtex-II Pro FPGA, but presented no quantitative results. A single motion track using a KF cannot model the discontinuity in ball motion caused by a bounce or a hit. This problem can be solved by generating multiple hypotheses, i.e. one track for each motion model (in each new field), to cope with the discontinuities (Kittler et al. 2005).

KF is a basic tracker suitable for tracking non-occluded objects that move linearly, slowly and with constant acceleration. Thus, tracking a high-velocity ball with changing appearance is not possible with a KF alone. A few methods claim good results with KF. However, they either use smaller datasets with low-resolution frames, or deliberately avoid videos with conditions such as cluttered background, complex trajectories and occlusion. Table 4 summarizes all KF-based ball-tracking methods published so far in different sports, with their validation databases, results, strengths and shortcomings.

4.2 Particle filter

For non-linear systems, many extensions of the KF have been developed and applied to ball tracking. However, strong non-linearities such as high ball speed, acceleration and spin prevent the KF and its extensions from approximating the mean and covariance matrices precisely. This degrades tracker performance drastically. In such a scenario, the sequential Monte Carlo method, or particle filter (PF) (Arulampalam et al. 2002), is suitable for approximating the posterior probability density function of the state. The algorithm propagates a weighted set of particles that approximates this density function. PFs are restricted neither to Gaussian noise nor to linear systems, and thus provide flexible tracking frameworks. The PF suits ball tracking because balls vary in size, move with non-constant velocity, and are prone to occlusion by players and to confusion with objects and background of similar appearance. Generally, each particle represents a hypothetical ball state comprising position, velocity and acceleration in a 3D coordinate system.
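One predict/weight/resample cycle of a bootstrap PF (the motion model serves as the proposal) can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions. It uses a 2D constant-velocity state [x, y, vx, vy] instead of the 3D position/velocity/acceleration state mentioned above, and a Gaussian likelihood on a directly measured ball position. Most cited trackers compute the likelihood from appearance cues such as color instead. The noise levels `pos_std`, `vel_std` and `meas_std` are hypothetical.

```python
import numpy as np

def particle_filter_step(particles, weights, z, rng, pos_std=1.0, vel_std=0.5, meas_std=3.0):
    """One predict-weight-resample cycle of a bootstrap particle filter.

    particles: (N, 4) array of [x, y, vx, vy]; z: measured ball position (x, y).
    Returns resampled particles, uniform weights and the posterior-mean position.
    """
    n = len(particles)
    particles = particles.copy()
    # Predict: constant-velocity motion plus Gaussian process noise.
    particles[:, :2] += particles[:, 2:] + rng.normal(0.0, pos_std, (n, 2))
    particles[:, 2:] += rng.normal(0.0, vel_std, (n, 2))
    # Weight: Gaussian likelihood of the measurement given each particle's position.
    d2 = ((particles[:, :2] - np.asarray(z)) ** 2).sum(axis=1)
    weights = weights * np.exp(-0.5 * d2 / meas_std ** 2)
    weights += 1e-300                       # guard against all-zero weights
    weights /= weights.sum()
    estimate = weights @ particles[:, :2]   # posterior-mean ball position
    # Systematic resampling to counter weight degeneracy.
    positions = (rng.random() + np.arange(n)) / n
    idx = np.minimum(np.searchsorted(np.cumsum(weights), positions), n - 1)
    return particles[idx], np.full(n, 1.0 / n), estimate
```

A typical loop initialises `particles` around the first detection, then calls `particle_filter_step` once per frame with `rng = np.random.default_rng(seed)`. The spread of the particle cloud indicates tracking confidence.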

A PF generally uses appearance features to identify the ball, and the choice of appearance feature usually depends on the type of ball being tracked. Ball tracking in indoor games such as Snooker (Rea et al. 2004), Billiards, Tennis and Pool typically uses color as a strong feature in the PF. Ariki et al. (2008) proposed a switching search method that applies a conventional PF to search locally for a ball lost due to its small size or occlusion. ASPOGAMO (Beetz et al. 2009) implements per-frame ball tracking, in which the current state of the ball is updated according to the ball dynamics. However, tracking under motion blur and occlusion remains only partially solved, even with a PF. Ball tracking performance can be improved by combining an accumulation image with the PF, after removing player and noise blobs (Choi and Seo 2004, 2005). The accumulation image provides a proposal density for the PF. It also indicates whether the ball is present in the image frame.

To cope with cluttered background, occlusion and motion blur, a few modifications have been incorporated into the conventional PF. Huang et al. (2008) designed an adaptive PF in which a strong detector triggers the tracker and a weak detector enhances it. The strong detector is generated from the shape properties of foreground blobs. The weak detector is generated from the foreground detection likelihood and is integrated into the tracker's observation likelihood. The mixture model of the two detectors overcomes the ambiguity of template matching (TM) in cluttered background. Tracking becomes complicated when a model has to learn non-linear, non-periodic oscillatory motion, such as that of a Table Tennis ball. To overcome this problem, El Abed et al. (2006) incorporated an adaptive dynamical model into the PF. To adapt to changes in the ball's size, color and texture, Tong et al. (2004) augmented the factored-sampling-based Condensation algorithm with region optimization for soccer ball tracking. They also defined a scale-invariant soccer ball template confidence measure to bootstrap the re-detection of the ball region needed for continuous tracking. Although the system tracks in real time, it still fails under occlusion, complex background and small ball size. Yan et al. (2005) used two separate dynamic models for tracking a tennis ball: one for flight mode and one for transition mode. This PF algorithm, with improved sampling efficiency, has two advantages. First, the Monte Carlo simulation limits the computational complexity through the choice of the number of particles, without losing Bayesian optimality. Second, the samples are drawn directly from the posterior density instead of from the prior probability distribution.

Zhu et al. (2009) introduced a Support Vector Regression (SVR) based PF (SVRPF) reweighting scheme to re-approximate the posterior density in the PF. The sample distribution in SVRPF is maintained so that the ball can be detected again after out-of-view situations and momentary occlusions.

Misu et al. (2007) proposed an architecture for soccer ball tracking using a distributed PF, in which particles are exchanged among tracking units. By integrating multi-view information from two cameras, the system improved positional accuracy and robustness against occlusion, ball out of frame, system instability and time-outs due to hanging. None of the above approaches works on long video sequences. Cheng et al. (2015) explored the likelihood estimate of the PF and incorporated a ball-size-adaptive (BSA) tracking window. They proposed a corresponding ball feature likelihood model and an anti-occlusion likelihood measurement model for 3D ball tracking in volleyball, using four cameras. However, it is very difficult for this system to recover a target once it is lost. Cheng et al. (2016) addressed this shortcoming by adding automatic recovery of a lost target through 3D detection and an anti-occlusion observation model. However, discarding the information from cameras with low likelihood makes the particles unstable. Neither of these two approaches is suitable for low-resolution videos with frame rates below the standard 30 fps. In such videos, a fast-moving ball appears blurred and elliptical rather than circular, as the two approaches assume.

PF-based approaches work by tracking particles distributed over the whole target. A few methods claim to satisfactorily overcome problems such as small size, varying scale, partial occlusion, background clutter, and non-linear and non-periodic motion. However, when the ball becomes very small or its color differs from the original, the number of particles on the ball drops drastically and the tracker fails. When the ball touches a player wearing a uniform of similar color, the particles assigned to the ball are distributed over both regions. The same happens when the ball merges with a background of similar color. In such cases, the tracker may fail permanently unless a good re-detection mechanism is incorporated. In addition, the particles are not updated rapidly enough, which leads to poor performance in tracking fast-moving balls. One solution may be to reduce the number of particles assigned to a ball, but this may increase the number of false positives. This approach can work for close-up and medium shots but performs poorly for long shots, where the ball is small. Table 5 summarizes all PF-based ball-tracking methods published so far in different sports, with their validation databases, results, strengths and shortcomings.

4.3 Trajectory

The trajectory-based approach to ball tracking is a wide umbrella. It covers tracking techniques that use physical or dynamic models, physics-based approaches, TM and triangulation. These methods are grouped as a single approach because they mainly track the ball through its trajectory. Work under this approach focuses on building an optimal 2D or 3D ball trajectory that represents the ball's path on the sports ground. Generally, it is an offline procedure in which ball candidates are detected over a sequence of frames. The candidates are linked using prediction measures, false positives are filtered out and missed observations are handled, and finally the true ball trajectory is obtained. According to this approach, detecting the ball among ball candidates is more difficult than deciding whether a given trajectory is the ball trajectory.

Initial efforts focused on obtaining 3D ball positions using basic TM. These methods use the ball region in the current frame as the template for the next frame. They search a limited region around the previous position and use back-tracking to confirm the result (Ohno et al. 1999; Yow et al. 1995). If a ground model is available, the start and end points of the ball trajectory are located on the ground. If a perpendicular object is located on the ground, triangular geometric relations can be used to determine the 3D ball position (Kim et al. 1998). If the end points are unavailable, a physics-based search is used to determine them. Reid and North (1998) improved on Kim et al. (1998) by using the ball's shadow on the ground as a reference for predicting the 3D ball position, thereby reducing the dependence on a physics-based ball model. However, the perpendicular object and the shadow were selected manually. A few approaches (Ren et al. 2004a, 2008) categorized and modeled the ball motion in four phases, viz. rolling, flying, in-possession and out-of-play. They did not require additional cues, such as a reference player (Kim et al. 1998), the goalmouth (Kim et al. 1998) or shadows (Reid and North 1998), to estimate the 3D position of a soccer ball. A few researchers (Ohno et al. 2000; Yamada et al. 2002) estimated ball positions in 3D space by fitting a physical model of ball movement to the observed ball trajectory. However, human intervention is needed to estimate the ball speed from the image, which is a major limitation of this approach.

To deal with occlusion, Seo et al. (1997) employed a backprojection technique, but it required manual input of the starting locations. A multi-threaded approach was used for tracking a Tennis ball with a multiple-camera setup (Pingali et al. 2000, 2002). The system is continuously updated according to the current ball velocity. Segmentation and tracking parameters, such as intensity value ranges, expected size, search window size and various thresholds, are updated dynamically. However, monochrome cameras were preferred in order to speed up the process, thereby sacrificing a strong cue, i.e. the color of the tennis ball. After ball detection, Liu et al. (2006) applied an adaptive TM approach and its hybrid with KF for tracking. Most template-based methods thus require either frequent template updates or manual assistance for key tasks, such as selecting the perpendicular object or shadow. This reduces efficiency and introduces errors, and such methods cannot be considered automatic. TM fails to detect a fast-moving ball, because the ball's appearance changes with its velocity. Even adaptive TM could not sustain tracking.

In subsequent works, ball detection became more automated through the use of background subtraction algorithms, which yield moving regions in the form of blobs. The blobs are processed, and several filters (size, shape, color criteria, etc.) are applied to them to obtain ball candidates. Chen et al. (2007, 2012) classified the ball candidates into isolated and contacted candidates. Extracting the ball trajectory from a large number of noisy and false candidates is eased by plotting the centroids of the ball candidates. The resulting plots may be 2D (Candidate Feature Image (Yu et al. 2003a), X-Distribution Image and Y-Distribution Image (Chen and Wang 2007), X Time Plot and Y Time Plot (Chen et al. 2012), Candidate Distribution Plot (Chakraborty and Meher 2012a)) or 3D (Miura et al. 2009). For example, the X-Time plot shows the input video frame number on the X-axis and the x-coordinate of the ball position in that frame on the Y-axis. Initially, every ball candidate is linked to its nearest neighbor candidate in the next frame, forming a large number of trajectories. Estimation functions are then modeled from the observed ball movement along the Y- and X-axes. A parabolic function models the ball trajectory along the Y-axis, and a straight-line function models its projection along the X-axis. These estimation functions are used to predict ball positions in subsequent frames (Chakraborty and Meher 2012a, b; Chen et al. 2007, 2009, 2012). For non-team sports such as Tennis, a ball track is formed by blobs following a linear trajectory (Conaire et al. 2009). The trajectory is grown by adding ball candidates found at the estimated positions, and the estimation function is updated using regression. A few methods replaced the estimation function with a velocity constraint (Chen et al. 2009), KF-based candidate verification (Yu et al. 2003a, b, c, 2004b, 2006, 2007b) or a KF verification function (Chakraborty and Meher 2012b).
Instead of a single 2D KF, Ekinci and Gokmen (2008) and Yu et al. (2004b) used two 1D KFs. This eliminated the need for two separate dynamic models and for player position information. The next stage identifies the ball trajectory, either by least-squares fitting (Chen et al. 2009) or by assigning confidence degrees (Chen et al. 2007, 2012) or confidence indices (Yu et al. 2003a, c, 2006, 2007b) to the trajectories. The best-fitting trajectory, or the one with the highest confidence, is chosen as the ball trajectory. This trajectory is then extended by interpolation, patching the gaps to yield the actual ball trajectory. If a gap is large, localized KF-based model matching is used to narrow it (Yu et al. 2003c). However, without good initial and intermediate detection methods, object segmentation fails and the resulting trajectory is improper.
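The estimation-function scheme, a parabola for the Y-time projection and a straight line for the X-time projection refitted as the trajectory grows, can be sketched with `numpy.polyfit`. This is a minimal, greedy sketch, not the procedure of any single cited work. It starts from a seed of at least three linked candidates and stops at the first frame with no candidate within the hypothetical tolerance `tol`. The methods above instead tolerate gaps and patch them afterwards by interpolation.

```python
import numpy as np

def grow_trajectory(seed, candidates_by_frame, tol=5.0):
    """Grow a ball trajectory using the estimation functions x(t) (line) and y(t) (parabola).

    seed: list of (t, x, y) with at least 3 points (needed to fit a parabola).
    candidates_by_frame: dict mapping frame index -> list of (x, y) ball candidates.
    """
    track = list(seed)
    t = track[-1][0] + 1
    while t in candidates_by_frame:
        ts, xs, ys = (np.array(v, dtype=float) for v in zip(*track))
        fx = np.polyfit(ts, xs, 1)  # straight line for the X-time projection
        fy = np.polyfit(ts, ys, 2)  # parabola for the Y-time projection
        px, py = np.polyval(fx, t), np.polyval(fy, t)
        best = min(candidates_by_frame[t],
                   key=lambda c: np.hypot(c[0] - px, c[1] - py), default=None)
        if best is None or np.hypot(best[0] - px, best[1] - py) > tol:
            break                   # no candidate near the estimate: stop growing
        track.append((t, best[0], best[1]))  # refitted on the next iteration (regression update)
        t += 1
    return track
```

Because the fit is redone after every accepted candidate, distant false candidates are never linked. Clutter that happens to lie near the estimated position would still be accepted, which is why the confidence measures described above are needed.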

The obtained 2D ball trajectory, the calibrated camera parameters from multiple sources, and the physical characteristics of ball motion are used to reconstruct the 3D trajectory of the ball (Chen et al. 2009). The obtained ball locations can be superimposed on the original video frames for better visualization of the ball trajectory (Chakraborty and Meher 2012a). Ren et al. (2004a, 2008) used two methods to detect the position of the ball in a 3D trajectory. The first was triangulation using visual information from multiple sources. The second was to analyze the trajectory from a single data source and infer that the ball is in flight. Leo et al. (2008) approximated the ball trajectory by straight lines on a virtual playfield. Kumar et al. (2011) also triangulated 2D trajectories from two cameras to obtain the 3D trajectory of the ball. They used basic kinematic equations to predict the locations of missing balls in 3D space. However, most of the techniques discussed above did not take the bouncing of the ball into account. The ball-tracking work of Hawk-eye Innovations Ltd (2015) is based on triangulation from multiple high-speed HD cameras. Each camera determines the ball position and plots the ball trajectory in 2D. A central system combines all the 2D trajectories into a 3D trajectory, so that any shot can be visualized in \(360{^{\circ }}\). Given the initial conditions and environmental parameters, Poliakov et al. (2010) used a physical model (a flight model and a bounce model) to simulate the ball trajectory accurately. They used a genetic algorithm to fit the observations to the candidate trajectories. Their method performed ball tracking and 3D trajectory reconstruction simultaneously, with good resistance to noise and outliers.
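Triangulation from two calibrated cameras can be sketched with the standard linear (DLT) method. Each view contributes two linear equations in the homogeneous 3D point, and the solution is the singular vector with the smallest singular value. This is a minimal sketch. It assumes the 3x4 projection matrices are known from calibration, and it is not the specific procedure of Kumar et al. (2011) or of Hawk-eye. OpenCV offers an equivalent routine, `cv2.triangulatePoints`.

```python
import numpy as np

def triangulate(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one 3D ball position from two calibrated views.

    P1, P2: 3x4 camera projection matrices; uv1, uv2: pixel coordinates (u, v) of the ball.
    """
    u1, v1 = uv1
    u2, v2 = uv2
    A = np.array([u1 * P1[2] - P1[0],
                  v1 * P1[2] - P1[1],
                  u2 * P2[2] - P2[0],
                  v2 * P2[2] - P2[1]])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]              # right singular vector of the smallest singular value
    return X[:3] / X[3]     # homogeneous -> Euclidean coordinates
```

Applied frame by frame to the two 2D trajectories, this yields the 3D trajectory. A physical model can then fill the frames where one camera missed the ball.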

A very important advantage of this approach is that it can handle a ball that is missing, merged or partially occluded over a few frames. Most of the methods also do not need to remove salient objects, such as the lines on the ground or the basketball board, to track the ball successfully. However, most studies considered long shots with full trajectories. Such shots are easier to handle than close-up and medium shots, which contain a lot of dynamic activity and shorter passes. Changes in the ball's size, shape and color due to high velocity and illumination changes are not considered. Most of the methods use a KF for position estimation, which does not work for segments with fast and curved ball movements. Multiple moving ball-like objects in the background may cause the algorithm to fail. Calculating the camera parameters for 2D-to-3D conversion of the ball trajectory is computationally complex. Ignoring air friction and complex ball movements, such as swerve, spin axis and spin rate, may also lead to inefficient ball tracking. Commercial ball tracking solutions are strongly limited by viewing angle, the high cost of the camera system and its installation, and visible-area constraints. They are generally impractical for research. Table 6 summarizes all trajectory-based ball tracking methods published so far in different sports, with their validation databases, results, strengths and shortcomings.

4.4 Data association

Data association (DA) is the process of associating uncertain measurements (ball positions in the image obtained after detection) with known tracks. It requires detected ball candidates as input. The difficulty of DA increases with the number of targets to be tracked. DA for ball tracking relies on a Markov chain assumption and a predictive motion model. However, with noisy detections, false positives and false negatives make DA-based ball tracking a computationally complex process in complex environments.
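The simplest DA scheme, gated nearest-neighbour association, can be sketched as follows. The predicted position would come from a motion model such as a KF; the function name and the 20-pixel gate are hypothetical choices for illustration. Greedily taking the closest candidate is exactly what makes such a scheme fragile under the false positives and negatives described above.

```python
import math


def nearest_neighbour_association(predicted, candidates, gate=20.0):
    """Associate the detected candidate closest to the predicted ball position.

    predicted: (x, y) position predicted by a motion model.
    candidates: list of (x, y) ball candidates returned by the detector.
    gate: maximum accepted distance in pixels (hypothetical value).
    Returns the index of the associated candidate, or None if none is inside the gate.
    """
    best_index, best_dist = None, gate
    for i, (cx, cy) in enumerate(candidates):
        d = math.hypot(cx - predicted[0], cy - predicted[1])
        if d <= best_dist:
            best_index, best_dist = i, d
    return best_index


idx = nearest_neighbour_association((100.0, 100.0),
                                    [(300.0, 40.0), (104.0, 97.0), (90.0, 130.0)])
```

Returning `None` when every candidate lies outside the gate lets the tracker treat the frame as a misdetection rather than latching onto clutter.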

DA has been used in object tracking since its introduction almost three decades ago (Bar-Shalom 1987). It was first used for ball tracking in the form of Robust Data Association (RDA) (Lepetit et al. 2003). RDA treats DA as a motion-fitting problem and uses a RANSAC-like algorithm to solve the association and estimation problem, because such an algorithm is robust to abrupt motion changes. However, as the ratio of true positives drops in a highly cluttered environment, RANSAC becomes computationally more expensive. In RDA, multiple models give periodic estimates that are independent of each other. Since no global smoothness constraint is applied, wrongly picking a clutter-originated motion as the best model may result in an irreparable error. Yan et al. (2006a) used the Viterbi algorithm to search for the sequence of measurements with the maximum a posteriori probability. They used a bidirectional Viterbi search to improve ball tracking by recovering from errors caused by abrupt changes in ball motion. Yan et al. (2008, 2014) proposed a hierarchical, layered DA scheme with a graph-theoretic formulation to overcome the shortcomings of RDA. In the first pass, the framework uses a recursive estimation scheme to fit a dynamic model. In the second pass, Dijkstra's shortest path algorithm filters out false tracklets to find the best trajectory. Because Dijkstra's algorithm is a single-source shortest path algorithm, Yan's scheme requires manual labelling of the initial and final tracklets. The initiation and termination problems were dealt with by studying all pairs of shortest paths obtained from single object tracking (Yan et al. 2008). However, no mechanism was provided to handle a ball going out of the field or frequently occurring short-term misdetections.
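The motion-fitting idea behind RDA can be illustrated with a plain RANSAC loop that hypothesizes a quadratic (constant-acceleration) trajectory from three candidates in distinct frames and keeps the hypothesis with the most inliers. This is a generic sketch, not the RDA algorithm of Lepetit et al. (2003); the quadratic model, the inlier threshold, the iteration count and the synthetic clutter are assumptions.

```python
import random

import numpy as np


def ransac_trajectory(cands, iters=200, thresh=3.0, seed=0):
    """RANSAC-style fit of a quadratic (constant-acceleration) ball trajectory.

    cands: list of (t, x, y) ball candidates pooled over a temporal window,
           possibly several per frame, many of them clutter.
    Returns (coef_x, coef_y, inlier_mask); coefficients follow numpy.polyfit order.
    """
    rng = random.Random(seed)
    data = np.asarray(cands, dtype=float)
    t, x, y = data[:, 0], data[:, 1], data[:, 2]
    best_mask = None
    for _ in range(iters):
        idx = rng.sample(range(len(data)), 3)
        if len(set(t[idx])) < 3:
            continue  # three distinct frames are needed to define a parabola
        cx = np.polyfit(t[idx], x[idx], 2)
        cy = np.polyfit(t[idx], y[idx], 2)
        err = np.hypot(np.polyval(cx, t) - x, np.polyval(cy, t) - y)
        mask = err < thresh
        if best_mask is None or mask.sum() > best_mask.sum():
            best_mask = mask
    # Refit on all inliers of the best hypothesis (ordinary least squares).
    cx = np.polyfit(t[best_mask], x[best_mask], 2)
    cy = np.polyfit(t[best_mask], y[best_mask], 2)
    return cx, cy, best_mask


# Ten true ball positions on a parabola plus ten clutter candidates.
cands = [(t, 5.0 * t, 100.0 - 2.0 * t + 0.5 * t * t) for t in range(10)]
cands += [(t, 300.0 - 7.0 * t, 50.0 + 11.0 * (t % 3)) for t in range(10)]
cx, cy, inliers = ransac_trajectory(cands)
```

Each hypothesis is scored only within its own window, so nothing enforces smoothness across windows; this mirrors the missing global smoothness constraint of RDA noted above.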

Huang et al. (2012) used a Viterbi algorithm to estimate a globally smooth ball trajectory. However, the obtained trajectory lost some precision due to the absence of a well-defined kinematic motion model. Ball tracking has also been performed using either nearest neighbor DA (Zaveri et al. 2004) or DA with a KF (Kittler et al. 2005). Zhou et al. (2015) proposed a two-layered DA for tennis ball tracking, which is an extended version of Zhou et al. (2013). The approach uses both global and local information to search the possible trajectories efficiently. The authors claim that the approach, proposed for single object tracking, is extendable to tracking multiple objects as well. Unlike Huang et al. (2012), Zhou et al. (2015) use a motion model and a few measuring scores to acquire precise trajectories locally using a shift token transfer algorithm. At the local layer, the authors introduced virtual ball candidates to handle long-term absences and short-term misdetections. At the global layer, they used the Floyd algorithm, which searches trajectories over a weighted directed acyclic graph and thus avoids manual labelling of the initial and terminal tracklets. They introduced K-order directed arcs in the graph to tackle the absence of the ball in long video sequences.
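Global-layer linking with the Floyd (Floyd-Warshall) algorithm can be sketched on a small tracklet graph. Because all pairs of start and end tracklets are evaluated, no initial or terminal tracklet has to be labelled by hand. The edge weighting used here (linking cost minus the length of the tracklet being entered, so that longer chains score better) is a hypothetical choice, not the scoring of Zhou et al. (2015), and K-order arcs and virtual candidates are omitted.

```python
import math


def floyd_warshall(n, edges):
    """All-pairs shortest paths; edges maps (i, j) -> weight. Returns (dist, nxt)."""
    dist = [[0.0 if i == j else math.inf for j in range(n)] for i in range(n)]
    nxt = [[j if i == j else None for j in range(n)] for i in range(n)]
    for (i, j), w in edges.items():
        dist[i][j] = w
        nxt[i][j] = j
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
                    nxt[i][j] = nxt[i][k]
    return dist, nxt


def best_tracklet_chain(lengths, link_cost):
    """Pick the lowest-cost chain of tracklets over all start/end pairs.

    lengths: number of frames covered by each tracklet (sorted by start time).
    link_cost: (i, j) -> cost of linking tracklet i to a later tracklet j.
    The graph is acyclic, so the negative edge weights cannot form negative cycles.
    """
    n = len(lengths)
    edges = {(i, j): c - lengths[j] for (i, j), c in link_cost.items()}
    dist, nxt = floyd_warshall(n, edges)
    # A chain's score also rewards the length of its first tracklet.
    start, end = min(((i, j) for i in range(n) for j in range(n) if nxt[i][j] is not None),
                     key=lambda p: dist[p[0]][p[1]] - lengths[p[0]])
    path = [start]
    while path[-1] != end:
        path.append(nxt[path[-1]][end])
    return path


# Tracklet 1 is a short clutter tracklet; the best chain skips it.
chain = best_tracklet_chain([10, 2, 8, 9],
                            {(0, 1): 5.0, (0, 2): 1.0, (1, 2): 5.0, (1, 3): 1.0, (2, 3): 2.0})
```

Floyd-Warshall runs in O(n³) time in the number of nodes, which is affordable when the graph holds tracklets rather than raw per-frame candidates.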

DA algorithms are sensitive to misdetections and noisy backgrounds, and cannot track a ball that temporarily goes out of the field. DA has been applied to ball tracking only in tennis, where only two players are present on the court, occlusion is rare and the field of view is limited. In addition, DA is an offline method of ball tracking. There is good scope for applying DA to track the ball in team sports such as volleyball, soccer and basketball, although this may require some modifications to the present hierarchical approaches. Table 7 presents a summary of all DA-based ball-tracking methods published so far in different sports, with validating databases, obtained results, strengths and shortcomings.

4.5 Graph

The graph-based approach to ball tracking involves finding the actual ball route, which represents the required trajectory, in a graph constructed from the detected ball candidates. Features such as color, size, shape, motion and form factor are usually used to find ball candidates in each frame. Pallavi et al. (2008) deliberately avoided the color feature because it is sensitive to changes in illumination and velocity, and still presented a framework that is robust to poor playfield conditions without any pre-processing. Instead, they used a circle-detection method, the circle Hough transform (CHT). Whereas almost all other methods focus on long shots, Pallavi et al. (2008) addressed medium shots as well. They filtered non-ball candidates using motion information in medium shots and using size, position, velocity and direction of motion in long shots. A few methods formed a transition graph to represent ball transitions between objects such as players, ground lines, stands and other ball candidates (Miura et al. 2009; Shimawaki et al. 2006a, b). After obtaining the ball route candidates using spatiotemporal relations, these authors searched each ball candidate with a ‘Separability filter’, clustered ball-like regions and formed cluster segments by fitting a line to each cluster. Other methods used ball candidates to form a weighted graph in which each node represents a ball candidate and each link (edge) represents a possible transition between nodes. Each node is assigned a weight that denotes its resemblance to the ball, and each edge is assigned a weight that represents the correlation between the joined candidates (Liang et al. 2005; Pallavi et al. 2008; Yu et al. 2007a). Ball route candidates are estimated by applying dynamic programming, such as the Viterbi algorithm (Liang et al. 2005; Yu et al. 2007a), on the graph under constraints such as velocity (Shum and Komura 2004). 
To fine-tune the estimated route, Shum and Komura (2004) used a B-spline curve to interpolate the missing nodes. To find the actual ball trajectory, a few researchers (Miura et al. 2009; Shimawaki et al. 2006a, b) selected the ball candidate trajectory with the highest allotted score, whereas Yu et al. (2007a) used the least-squares method. Shum and Komura (2004) also constructed the 3D trajectory of the ball from its 2D trajectory and an aerodynamic model.
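The dynamic-programming step on the weighted candidate graph can be sketched as a Viterbi search that maximizes the sum of node weights (resemblance to the ball) and edge weights (plausibility of the transition). The scoring functions below, a stored "ballness" value and a distance penalty acting as a soft velocity constraint, are hypothetical stand-ins for the weights used by Liang et al. (2005) and Yu et al. (2007a).

```python
import math


def viterbi_ball_route(frames, node_score, edge_score):
    """Best route through per-frame ball candidates by dynamic programming.

    frames: list of per-frame candidate lists.
    node_score(c): how ball-like candidate c is (higher is better).
    edge_score(a, b): plausibility of moving from a to b between consecutive frames.
    Returns one candidate index per frame maximising the summed scores.
    """
    score = [node_score(c) for c in frames[0]]
    back = []
    for prev, cur in zip(frames, frames[1:]):
        new_score, ptr = [], []
        for c in cur:
            best = max(range(len(prev)), key=lambda i: score[i] + edge_score(prev[i], c))
            new_score.append(score[best] + edge_score(prev[best], c) + node_score(c))
            ptr.append(best)
        score = new_score
        back.append(ptr)
    # Trace back from the best candidate in the last frame.
    route = [max(range(len(score)), key=score.__getitem__)]
    for ptr in reversed(back):
        route.append(ptr[route[-1]])
    return route[::-1]


def ballness(c):
    """Hypothetical node weight: third field of an (x, y, ballness) candidate."""
    return c[2]


def transition(a, b):
    """Hypothetical edge weight: penalise large jumps (soft velocity constraint)."""
    return -math.hypot(b[0] - a[0], b[1] - a[1]) / 10.0


frames = [
    [(10, 10, 0.9), (200, 50, 0.8)],
    [(15, 12, 0.7), (205, 48, 0.9), (100, 100, 0.95)],
    [(20, 14, 0.8), (210, 47, 0.6)],
]
route = viterbi_ball_route(frames, ballness, transition)
```

The isolated, very ball-like candidate at (100, 100) is rejected because reaching it would require implausible jumps, which is the point of combining node and edge weights.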

Similar to DA algorithms, graph-based approaches can also handle occlusion to some extent. However, if the ball is missing for a large number of frames, the computational complexity of the algorithm increases and the candidate trajectories may become permanently discontinuous. A few methods were unable to detect a ball moving out of the field. None of the methods in this category discussed changes in the size and shape of the ball due to high velocity or heavy occlusion. Table 8 presents a summary of all graph-based ball-tracking methods published so far in different sports, with detection techniques, validating databases, obtained results, strengths and shortcomings.

4.6 Optimizing an objective energy function

In team sports, the ball-tracking algorithms discussed in the previous approaches face difficulty due to frequent occlusion or possession by players and unpredictable ball and player movements during short passes. Ball tracking during long passes is comparatively easy because the ball follows a second-order motion model. In addition, the previous approaches were confined to a single sport without any scope for generalization. They also track the ball and players separately, requiring two different methods that unnecessarily increase the computational complexity of the whole framework.
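The second-order motion model that makes long passes easier can be written per image axis as x(t) = at² + bt + c. A least-squares fit of this model over one flight segment can fill in frames where the ball was missed, similar in spirit to the least-squares trajectory estimation of Yu et al. (2007a). The sketch assumes the segment contains no bounce and no touch by a player; the function name and the synthetic data are hypothetical.

```python
import numpy as np


def fill_gaps_quadratic(frames, xs, ys, query):
    """Least-squares fit of a second-order (constant-acceleration) motion model.

    frames, xs, ys: frame indices and image coordinates of detected ball positions
    within one flight segment; query: frames to estimate (e.g. missed detections).
    Returns an array of (x, y) estimates, one row per queried frame.
    """
    cx = np.polyfit(frames, xs, 2)   # x(t) = a t^2 + b t + c
    cy = np.polyfit(frames, ys, 2)
    q = np.asarray(query, dtype=float)
    return np.column_stack([np.polyval(cx, q), np.polyval(cy, q)])


frames = [0, 1, 2, 5, 6, 7]                     # ball missed in frames 3 and 4
xs = [4.0 * t for t in frames]
ys = [50.0 + 3.0 * t - 0.5 * t * t for t in frames]
filled = fill_gaps_quadratic(frames, xs, ys, [3, 4])
```

During short passes and possession phases the ball is pushed by players between frames, so a single low-order polynomial no longer describes the segment; this is why the approaches below model the players as well.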

To overcome the problems discussed above, Wang et al. (2014a) formulated ball tracking as deciding which player is in possession of the ball at a given time. They introduced a focus on scene (FoS) tracker based on a Conditional Random Field (CRF) model. The tracker minimizes a global objective function that takes into account player trajectories, the phase of the game and image evidence (detections) of the ball. The system showed consistently high performance when applied to long basketball and soccer video sequences. It achieved a processing speed of 8–10 fps with good results, although the ball was misdetected in a few frames. Wei et al. (2014) extended the work of Wang et al. (2014a) by predicting the location of the ball in the short-term future instead of identifying the current ball possessor. Wei et al. (2014) used an augmented hidden conditional random field (a-HCRF), which incorporates local observations into the hidden conditional random field (HCRF) and boosts its prediction performance. To reduce dependency in tracking, Wang et al. (2014b, 2016) tracked the ball and players simultaneously using intertwined flow variables. By estimating the trajectories of players and ball jointly, the authors could recover ball trajectories that were not detected from image evidence alone. They formulated simultaneous tracking as a constrained Bayesian inference problem in which one of the players possesses the ball. They formed a directed acyclic graph (DAG) of discretized locations and poses, reframed it as a mixed integer program (MIP), and minimized the objective function subject to the flow constraints. The authors reduced the computational complexity first by pruning the graph and then by introducing a tracklet-based formulation of the optimization problem. 
Though this method is generic and applicable to many team sports, it cannot preserve the identity of an object during momentary occlusion and occasionally misdetects the ball. Ball possession is modeled explicitly, but tracking is performed on a discretized grid without physical constraints, which reduces the smoothness of the trajectory. Maksai et al. (2015) explicitly model ball-player interactions and the physical constraints obeyed by the ball during flight. The ball-tracking problem is first formulated as the maximization of a posteriori probability over a factor graph, which is then reformulated as an integer program over a ball graph. After adding various constraints, the final problem is solved as a MIP. Thus, like Wang et al. (2016), Maksai et al. (2015) take ball-player interactions into consideration, but they additionally impose physics-based constraints, and their method describes ball behavior better than the other approaches.
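The idea of tracking the ball through its possessor can be illustrated with a much simpler energy than those used by Wang et al. (2014a, 2016) or Maksai et al. (2015): a per-frame cost for assigning the ball to each player plus a penalty for every change of possession. On a chain like this, the minimum-energy assignment can be found exactly by dynamic programming. The distance-based unary cost, the `switch_penalty` value and the function name are assumptions; flight phases, player tracking and physical constraints are deliberately left out.

```python
def assign_possession(dist, switch_penalty=5.0):
    """Choose which player holds the ball in each frame by minimising an energy.

    dist[t][p]: distance between the ball evidence and player p at frame t
    (a hypothetical unary cost); switch_penalty: cost of a change of possession.
    Minimises sum_t dist[t][p_t] + switch_penalty * [p_t != p_(t-1)] exactly.
    """
    n_players = len(dist[0])
    cost = list(dist[0])
    back = []
    for row in dist[1:]:
        ptr, new_cost = [], []
        for p in range(n_players):
            prev = min(range(n_players),
                       key=lambda q: cost[q] + (switch_penalty if q != p else 0.0))
            ptr.append(prev)
            new_cost.append(cost[prev] + (switch_penalty if prev != p else 0.0) + row[p])
        cost = new_cost
        back.append(ptr)
    # Trace back from the cheapest final assignment.
    owner = [min(range(n_players), key=cost.__getitem__)]
    for ptr in reversed(back):
        owner.append(ptr[owner[-1]])
    return owner[::-1]


# Player 0 holds the ball; frame 2 has noisy evidence favouring player 1.
dist = [[0.5, 8.0], [0.6, 7.0], [3.0, 2.5], [0.7, 6.0], [0.4, 9.0]]
owners = assign_possession(dist)
```

Raising `switch_penalty` suppresses spurious possession changes caused by a single noisy frame, at the cost of reacting more slowly to genuine passes.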

The energy optimization approaches formulate the ball-tracking problem more precisely than the other approaches. They consider various phases of the ball, viz. in flight, in possession and occluded, and apply suitable constraints to the objective function, thereby taking fine details of the ball state into account. They yield superior tracking accuracy and robustness to occlusion, although occasional misdetections were reported. Table 9 presents a summary of all energy-optimization-based ball-tracking methods published so far in different sports, with validating databases, obtained results, strengths and shortcomings.

5 Discussion and our perspective

In this section, we present discussions on major ball detection and tracking techniques introduced so far followed by their classification and an improved model of a tracking system.

In most sports, the ball is a small, highly agile object that is frequently occluded, frequently changes contact, and shows changing contrast under varying illumination conditions. It is very important to detect the ball robustly, before or without tracking. Thus, all the approaches have used a large number of features for its detection, though this adds to the computational complexity. Preferred features of the ball include shape, size, color, position and motion. Curve detection methods such as the circle Hough transform and ellipse fitting, as well as SVM classifiers based on multiple features, were also used, providing a unified approach to detection rather than relying on individual features.
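The circle Hough transform mentioned above can be sketched for a single, known ball radius: every edge pixel votes for all centres lying at that radius from it, and the accumulator peak gives the ball centre. The binary edge map, the radius in pixels and the number of voting angles are assumptions of this sketch; library implementations such as OpenCV's `cv2.HoughCircles` search over a range of radii instead.

```python
import numpy as np


def hough_circle_fixed_radius(edges, radius, n_angles=90):
    """Vote for circle centres of one known radius (circle Hough transform).

    edges: 2D boolean array of edge pixels (e.g. from an edge detector).
    Returns the vote accumulator and the (row, col) of the strongest centre.
    """
    acc = np.zeros(edges.shape, dtype=int)
    rows, cols = np.nonzero(edges)
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    for r, c in zip(rows, cols):
        # Every edge pixel votes for all centres at distance `radius` from it.
        cr = np.round(r - radius * np.sin(theta)).astype(int)
        cc = np.round(c - radius * np.cos(theta)).astype(int)
        ok = (cr >= 0) & (cr < edges.shape[0]) & (cc >= 0) & (cc < edges.shape[1])
        np.add.at(acc, (cr[ok], cc[ok]), 1)   # add.at accumulates repeated indices
    centre = np.unravel_index(np.argmax(acc), acc.shape)
    return acc, centre


# Synthetic edge map: a ball outline of radius 6 centred at (30, 25) plus a field line.
edges = np.zeros((50, 50), dtype=bool)
t = np.linspace(0.0, 2.0 * np.pi, 90, endpoint=False)
edges[np.round(30 + 6 * np.sin(t)).astype(int), np.round(25 + 6 * np.cos(t)).astype(int)] = True
edges[5, 2:38] = True
acc, centre = hough_circle_fixed_radius(edges, 6)
```

The straight line spreads its votes along a band instead of concentrating them in one cell, which is why shape-based detection can tolerate field markings without removing them first.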

Initially, most of the KF-based approaches used a template to perform ball tracking. However, this technique proved inefficient under non-linear ball movement, illumination changes, occlusion, cluttered background and high ball speed. A large number of KF variants were subsequently adopted for ball tracking. They proved successful in terms of size and time complexity, but only on a few very small databases with low-resolution video frames. In general, KF-based methods failed to handle non-linearity and needed manual input of the initial ball location. Consequently, a large number of studies used PF-based methods for ball tracking. Increasing the number of assigned particles slowed down the tracking process, whereas reducing the number of particles increased the false positives and resulted in tracking failure. Balancing the number of particles against the number of false positives and negatives gives a better tracking experience. PF-based methods were successful in tracking non-linear ball motion, redetection and partial occlusion, but only marginally solved the problems of heavy occlusion, illumination changes, fast motion, motion blur, background clutter, and size and scale changes. Both probabilistic approaches require the removal of salient objects on the ground, such as lines, circles and nets, for better ball detection.
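The particle-count trade-off can be explored with a minimal bootstrap PF. The sketch below assumes a random-walk motion model and a Gaussian detection likelihood, both hypothetical simplifications; `n_particles` is the knob discussed above, and resampling is triggered when the effective sample size drops below half the particle count. Frames without a detection are passed as `None` and handled by prediction only.

```python
import numpy as np


def particle_filter(detections, n_particles=500, motion_std=4.0, meas_std=3.0, seed=0):
    """Bootstrap particle filter for a 2D ball position in image coordinates.

    detections: list of (x, y) detections, or None where the detector found nothing.
    Motion model: Gaussian random walk (a hypothetical simplification).
    Likelihood: isotropic Gaussian around the detection with std meas_std.
    Returns an array with one weighted-mean estimate per frame.
    """
    rng = np.random.default_rng(seed)
    first = next(d for d in detections if d is not None)
    particles = np.asarray(first, dtype=float) + rng.normal(0.0, meas_std, (n_particles, 2))
    log_w = np.zeros(n_particles)   # log-weights avoid underflow far from a detection
    estimates = []
    for z in detections:
        particles += rng.normal(0.0, motion_std, particles.shape)   # predict
        if z is not None:
            d2 = np.sum((particles - np.asarray(z, dtype=float)) ** 2, axis=1)
            log_w = log_w - 0.5 * d2 / meas_std ** 2                 # update
            log_w -= log_w.max()
        weights = np.exp(log_w)
        weights /= weights.sum()
        estimates.append(weights @ particles)
        # Resample when the effective sample size falls below half the particles.
        if 1.0 / np.sum(weights ** 2) < n_particles / 2:
            idx = rng.choice(n_particles, size=n_particles, p=weights)
            particles = particles[idx]
            log_w = np.zeros(n_particles)
    return np.array(estimates)


# Ball moving 2 px per frame along x, undetected in frames 15-19.
truth = [(100.0 + 2.0 * t, 50.0) for t in range(40)]
detections = [None if 15 <= t <= 19 else truth[t] for t in range(40)]
estimates = particle_filter(detections)
```

Each frame costs time proportional to `n_particles`; with too few particles, the cloud spread during a missed-detection gap may contain no particle near the reappearing ball, which is the failure mode described above.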