Abstract

Competency frameworks serve various roles, including outlining the characteristics of a competent workforce, facilitating mobility, and analysing or assessing expertise. Given these roles and their relevance in the health professions, we sought to understand the methods and strategies used in the development of existing competency frameworks. We applied the Arksey and O’Malley framework to undertake this scoping review. We searched six electronic databases (MEDLINE, CINAHL, PsycINFO, EMBASE, Scopus, and ERIC) and three grey literature sources (greylit.org, Trove and Google Scholar) using keywords related to competency frameworks. We screened studies for inclusion by title and abstract, and we included studies of any type that described the development of a competency framework in a healthcare profession. Two reviewers independently extracted data, including study characteristics. Data synthesis was both quantitative and qualitative. Among 5710 citations, we selected 190 for analysis. Most studies were conducted in the medicine and nursing professions. Literature reviews and group techniques were each used in 116 studies (61%), and 85 studies (45%) described some form of stakeholder deliberation. We observed considerable diversity in methodological strategies, and inconsistent adherence to existing guidance on the selection of methods and on who was involved. Given the variation we observed in the timeframes, combination, function, application and reporting of methods and strategies, there is no apparent gold standard or standardised approach to competency framework development. We also observed substantial variation in how the development process was conducted and reported.
While some variation can be expected given the differences across and within professions, our results suggest that it is difficult to determine whether methods were fit for purpose, and therefore to judge the appropriateness of the development process. This uncertainty may unwittingly create and legitimise uncertain or artificial outcomes. There is a need for improved guidance on the process of developing and reporting competency frameworks.


Introduction

As individual health professions evolve, professionals, educators, and regulators need to identify the competencies describing the knowledge, skills, attitudes and other characteristics (KSAOs) required for effective professional practice (Campion et al. 2011; Gonczi et al. 1990; Palermo et al. 2017). Identifying these competencies ensures that healthcare professions are well defined, promotes competent workforces, facilitates assessment and professional mobility, and helps to analyze and evaluate the expertise of the profession and the professional (Baer 1986; Campion et al. 2011; Eraut 1994; Gonczi et al. 1990; Heywood et al. 1992; ten Cate 2005; Whiddett and Hollyforde 1999; Winter and Maisch 2005; World Health Organization 2005). The CanMEDS framework, the ACGME Outcomes project, and the entry-level registered nurse practice competencies are examples of frameworks that have been used in these ways (Black et al. 2008; J. Frank et al. 2015; Swing 2007). Given the stakes that such frameworks hold for educators, learners, regulators, health professions and healthcare broadly, development guidelines have been created (see Table 1).

The development of competency frameworks requires strategies to capture and represent the complexity associated with healthcare practice. This complexity can emerge in a number of ways. For example, regional or contextual variability, unique practice patterns, the roles and attributes of individuals (both alone and within teams), and other interacting competencies all contribute to what makes practice in multiple contexts possible (Bordage and Harris 2011; Garavan and McGuire 2001; Heywood et al. 1992; Hodges and Lingard 2012; Knapp and Knapp 1995; Lingard 2012; Makulova et al. 2015; Roe 2002). The nature of clinical practice can also be difficult to define or understand fully (Garavan and McGuire 2001; Mendoza 1994). The role of tacit knowledge in professional practice, for example, can be difficult to represent; that is, there can be a disconnect between the personal knowledge of professionals, which becomes embedded in their practice, and the publicly accessible knowledge base of the profession (Collin 1989; Eraut 1994). Competence and its component parts are often inconsistently understood or defined, and are attributed multiple meanings depending on context (Hay-McBer 1996; Spencer and Spencer 1993; ten Cate and Scheele 2007). Other difficulties may include shifts in patient demographics or societal expectations, the role of technology, and changes in organizational structures (Duong et al. 2017; Jacox 1997; Whiddett and Hollyforde 1999). Any attempt to represent professional practice must therefore contend with these challenges, leaving developers to decide how best to address them (Garavan and McGuire 2001; Shilton et al. 2001).

Given this inherent complexity in capturing and accurately representing the features of a health profession, developers may employ a variety of approaches. Considerations such as practicality, efficiency, and what might be deemed acceptable to the profession may also play a role (see Table 1). While there is no guidance on which specific methods to use, or when and how to use them, there is consensus that a combination of approaches, akin to a process of triangulation, may be necessary to increase the validity and utility of competency frameworks (Heywood et al. 1992; van der Klink and Boon 2002; Kwan et al. 2016; Marrelli et al. 2005). However, feasibility, the complexity of practice, and access to appropriate stakeholders may prompt developers to prioritise some aspects of the development and validation process over others (Marrelli et al. 2005; Whiddett and Hollyforde 1999).

These challenges may result in variable or uncertain outcomes of limited validity and utility (Lester 2014; Shilton et al. 2001). Such outcomes may in turn represent the profession inaccurately or in unintended ways, and may inappropriately affect downstream dependent systems such as policy and standards development, accreditation, and curriculum. Despite considerable activity related to the development of competency frameworks in many health professions, little attention has been paid to the development process itself. Even where guidelines exist, the complexity associated with different professional practices may lead some developers to enact development activities differently. This emphasis on actual development processes, in the context of existing but perhaps incomplete or inadequate guidelines, is the focus of our study. Understanding these activities may provide insights into how these processes shape eventual outcomes and their validity and/or utility, and into what may hold value for the refinement of existing guidelines. As such, the primary objective of our study is to understand the way in which health professions develop competency frameworks, and then to consider these activities against existing guidance.

Methods

Design

We conducted a scoping review, which enabled us to identify, map and present an overview of a heterogeneous body of literature (Arksey and O’Malley 2005; Munn et al. 2018). We deemed a scoping review to be appropriate given our interest in identifying key characteristics of competency framework development as well as potential knowledge or practice gaps (Munn et al. 2018). We employed Arksey and O’Malley’s (2005) five-stage framework which included (1) identifying the research question, (2) identifying relevant studies, (3) refining the study selection criteria, (4) collecting relevant data from each article, and (5) collating, summarizing, reporting, and interpreting the results. We reported our process according to the PRISMA Extension for Scoping Reviews (Tricco et al. 2018).

Research questions

1. How are competency frameworks developed in healthcare professions?

2. How do competency framework development processes align with previous guidance?

3. What insights can be gleaned from the development activities of health professions and from their alignment (or lack of alignment) with previous guidance?

Identify relevant studies

Systematic search

We structured searches using terms that addressed the development of competency frameworks in healthcare professions. We also considered related concepts; the combinations of keywords and subject headings used are outlined in “Appendix 1”. We selected six databases to ensure that a broad range of disciplines was included: MEDLINE, CINAHL, PsycINFO, EMBASE, Scopus, and Education Resources Information Center (ERIC). We also searched the grey literature sites greylit.org and Trove, and we reviewed the first 1000 records from Google Scholar. In addition, we screened the titles of citations within articles and retrieved those that appeared relevant to the review (Greenhalgh and Peacock 2005). Our search was restricted to articles published in English; no limits were set on publication date, study design or country of origin. We conducted pilot searches in May and June 2018 with the help of two information specialists to refine and finalize the search strategy, and we conducted the final searches in August 2018.

Citations were imported into EndNote X8 (Clarivate Analytics, Philadelphia, PA) and we manually removed duplicate citations. The remaining articles were uploaded to the online systematic-review software Covidence (Veritas Health Innovation, Melbourne, Australia) for title and abstract screening, and data characterisation.

Select the studies

Eligibility criteria

Studies were eligible for inclusion if they involved a healthcare profession, produced a competency framework, and explicitly described the development process. Where the same data were reported in more than one publication (e.g., a journal article and a thesis), we only included the version that reported the most complete data. Studies of all types were included.

Title and abstract screening

Initial screening consisted of a review of titles and abstracts by two reviewers (AB and BW). Disagreements were resolved through discussion until consensus was achieved. Where disagreement remained or there was insufficient evidence to make a decision, the citation was included for full-text review.

Critical appraisal

In line with the scoping review framework, we did not conduct a critical appraisal (Arksey and O’Malley 2005).

Chart the data

To support the full-text review, we developed a standardised data extraction form to organize information, confirm relevance, and extract study characteristics (see “Appendix 2”) (Ritchie and Spencer 2002). The information we collected included study characteristics, study objectives, and citations. Relevance was confirmed on the basis of each study’s sampling population and objectives. Characteristics collected via this form included: Author (year), country; Sampling population; Objective/Aim; Methods used; Count of methods; Outcomes. Additional coding was performed in September 2019 in response to peer review, and included: Rationale; Rationale for methods; Triangulation; Funding. We compiled all data into a single spreadsheet in Microsoft Excel 2013 (Microsoft, Redmond, WA) for coding and analysis.

Data summary

Because terminology, methods, and strategies varied across studies, it was necessary to merge some of them to facilitate synthesis. This was an iterative process in which we reduced variation to produce a discrete list of codes while retaining the pertinent information in each study. For example, we considered ‘steering groups’, ‘working groups’, ‘committees’, and ‘expert panels’ sufficiently similar to be coded as forms of ‘group technique’, while we coded the Delphi process and nominal group technique (NGT) as forms of ‘consensus methods’. Stakeholder deliberation included conferences or workshops (although these may also have been used for other purposes) and alternative strategies such as input from professional associations. Codes and their definitions are outlined in Table 2.
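The merging step can be pictured as a lookup from the labels that study authors reported to the codes used for synthesis. The Python sketch below is illustrative only and is not the authors' procedure (coding was done in a spreadsheet): `CODEBOOK`, `normalise`, and `code_methods` are hypothetical names, the mapping covers only the examples named in the paragraph above (Table 2 holds the full list), and the label “Site visits” in the usage example is invented. It shows why each code is counted at most once per study, matching the per-study counts reported in the Results, and why unmatched labels are set aside for discussion.

```python
# Hypothetical sketch: mapping reported method labels to synthesis codes.
# Only the examples named in the text are included; Table 2 holds the full list.
CODEBOOK: dict[str, str] = {
    "steering groups": "group technique",
    "working groups": "group technique",
    "committees": "group technique",
    "expert panels": "group technique",
    "delphi process": "consensus methods",
    "nominal group technique": "consensus methods",
    "ngt": "consensus methods",
}


def normalise(label: str) -> str:
    """Lower-case a label and collapse runs of whitespace to single spaces."""
    return " ".join(label.lower().split())


def code_methods(raw_labels: list[str]) -> tuple[set[str], list[str]]:
    """Return the codes for one study plus any labels that matched no code.

    A set is used so that each code is counted at most once per study.
    """
    codes: set[str] = set()
    uncoded: list[str] = []
    for label in raw_labels:
        code = CODEBOOK.get(normalise(label))
        if code is None:
            uncoded.append(label)  # set aside for discussion, possibly a new code
        else:
            codes.add(code)
    return codes, uncoded


if __name__ == "__main__":
    codes, uncoded = code_methods(["Steering groups", "Delphi  process", "Expert panels", "Site visits"])
    print(sorted(codes), uncoded)
    # prints ['consensus methods', 'group technique'] ['Site visits']
```

Labels that fall through to `uncoded` correspond to the iterative part of the process: each one either joins an existing code or prompts a new one.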

Data synthesis

We further explored the codes outlined above to gain insight into their purpose and how they were operationalized. After synthesizing the results, we organized them by frequency of use, from most to least common. We outlined the variations within each code, including form, function, application, and intended outcomes. This qualitative analysis was performed inductively and iteratively, so that the findings remained grounded in the data. The synthesis and subsequent discussion are influenced by our perspective that context is important, both in the original studies and in our own interpretation of the literature. Additionally, study authors may have held underlying positions distinct from ours, which may result in differing interpretations of their studies.

Results

Search results and study selection

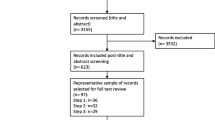

The database searches yielded 5669 citations, and we identified an additional 110 citations through grey literature searches and hand searching. After elimination of duplicates, we screened 5710 citations at the title and abstract level, which led to the exclusion of 5331 citations. After full-text review of 379 citations, we included 190 full texts for data extraction and analysis. See Fig. 1 for a PRISMA diagram of this process, and “Appendix 3” for a full list of included studies.

Fig. 1 PRISMA diagram. Source: Moher et al. (2009)
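The stage totals in the study selection process that are not stated explicitly follow from the reported counts by subtraction. The Python sketch below makes that arithmetic explicit with a hypothetical `PrismaFlow` class. It assumes that the 5669 citations came from the database searches and the 110 from grey literature and hand searching; the 69 duplicates and 189 full-text exclusions it prints are derived values, not figures quoted from Fig. 1, which should be treated as authoritative if they differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrismaFlow:
    """Counts reported at each stage of a PRISMA flow (hypothetical helper)."""

    database_records: int       # assumed: records from the six databases
    other_records: int          # grey literature and hand searching
    screened: int               # title/abstract screening after de-duplication
    excluded_at_screening: int
    included: int               # studies retained after full-text review

    @property
    def identified(self) -> int:
        return self.database_records + self.other_records

    @property
    def duplicates_removed(self) -> int:
        return self.identified - self.screened

    @property
    def full_text_assessed(self) -> int:
        return self.screened - self.excluded_at_screening

    @property
    def excluded_at_full_text(self) -> int:
        return self.full_text_assessed - self.included

    def check(self) -> None:
        """Raise ValueError if any derived stage count is negative."""
        for name in ("duplicates_removed", "full_text_assessed", "excluded_at_full_text"):
            value = getattr(self, name)
            if value < 0:
                raise ValueError(f"inconsistent flow: {name} = {value}")


flow = PrismaFlow(database_records=5669, other_records=110, screened=5710,
                  excluded_at_screening=5331, included=190)
flow.check()
print(flow.identified, flow.duplicates_removed, flow.full_text_assessed, flow.excluded_at_full_text)
# prints 5779 69 379 189
```

The derived 379 full texts agree with the number reported for full-text review, which is a quick consistency check on the flow.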

Characteristics of included studies

Included studies were published between 1978 and 2018. Most were published as peer-reviewed articles (n = 172), with the remainder comprising reports (n = 13) and theses (n = 5). The largest number of studies came from the USA (n = 65, 34%), followed by the United Kingdom, Canada, and Australia (n = 27 each, 14% each). Nursing and medicine frameworks together accounted for the majority (n = 65 each, 34% each), followed by multidisciplinary frameworks (n = 36, 19%). See Table 3 for further characteristics of included studies.

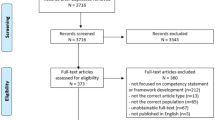

Literature reviews and group techniques were utilised in 116 studies each (61%). Strategies of stakeholder involvement were utilised in 85 studies (45%), and mapping exercises were conducted in 73 (38%). See Table 4 and Fig. 2 for frequency of the methods used.

Studies varied in the number of approaches used, from one (n = 20, 11%) to seven (n = 3, 2%) (see Table 5). The median number used was three, and a total of 132 studies (69%) used three or more methods or strategies. Combinations of methods varied, and no distinct pattern of use emerged when studies were analysed by profession, location or year. Triangulation of methods was mentioned in 18 studies (9%). Most studies did not report the study period; among those that did (n = 81, 43%), the timeframe for development ranged from 2 days to 6 years, with 41 of these (51%) completed in 12 months or less.

All included studies provided a rationale for the development of the framework. Improvement in education was the most commonly reported rationale (medicine), followed by the lack of a competency framework (see Table 6 and “Appendix 5” for more detail). A total of 79 studies (42%) provided a clearly outlined rationale for their choice of methods, and a further 27 (14%) provided a partial rationale. While a detailed analysis of evaluation was outside the scope of our review, evaluation of the final framework was reported in seven studies (4%), and a further 66 (35%) recommended or planned evaluation. Funding sources were outlined for 110 studies (58%).
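Percentages in this section use the 190 included studies as the denominator unless a subgroup is named (for example, 41 of the 81 studies that reported a timeframe). The Python sketch below recomputes several of the reported figures from their counts. It assumes whole-number percentages rounded half up, a rule inferred from the reported values rather than stated by the authors (none of these values falls exactly on a half, so ordinary rounding gives the same results); `percent` is a hypothetical helper name.

```python
TOTAL_STUDIES = 190  # included studies; the default denominator


def percent(n: int, denominator: int = TOTAL_STUDIES) -> int:
    """Return 100 * n / denominator as a whole number, rounding halves up.

    Integer arithmetic avoids floating-point error and Python's built-in
    round-half-to-even behaviour.
    """
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return (200 * n + denominator) // (2 * denominator)


# Counts taken from the Results; the percentages are recomputed here.
reported_counts = {
    "literature reviews": 116,
    "group techniques": 116,
    "stakeholder deliberation": 85,
    "mapping exercises": 73,
    "three or more methods": 132,
    "clear rationale for methods": 79,
    "funding reported": 110,
}

for label, n in reported_counts.items():
    print(f"{label}: {n}/{TOTAL_STUDIES} = {percent(n)}%")

# Subgroup figure: the denominator is the 81 studies that reported a timeframe.
print(f"completed in 12 months or less: 41/81 = {percent(41, 81)}%")
```

Making the denominator an explicit argument keeps subgroup percentages, such as the 51% of studies with a reported timeframe, from being silently computed against all 190 studies.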

Variation in application of approaches

While the methods and strategies used to develop competency frameworks were diverse, we also observed variability within these approaches in form, function, application and intended outcomes (e.g., to achieve consensus, to facilitate dissemination, or to review drafts). This variation suggests that authors made choices, not always explicitly, about what they valued as meaningful when using such techniques. As such, the functional alignment of these choices remains unclear, which poses a challenge when we attempt to infer alignment with framework objectives. Below, we elaborate on these findings for each approach except Developing a Curriculum (DACUM), which was rarely used. Examples are cited to illustrate variation and are not intended to be exhaustive. See “Appendix 3” for full details.

Literature reviews

The types of literature review included (a) systematic reviews (Galbraith et al. 2017; Klick et al. 2014), (b) scoping reviews (AlShammari et al. 2018; Redwood-Campbell et al. 2011), (c) integrative reviews (Camelo 2012), (d) focused reviews (Tavares et al. 2016; Yates et al. 2007), and (e) environmental scans (McCallum et al. 2018; National Physiotherapy Advisory Group 2017). Many authors did not explicitly state the type of review they conducted, instead describing it using generic terms such as ‘broad’, ‘extensive’, and ‘comprehensive’. Some authors performed a review to identify existing competencies (Chen et al. 2013; Hemalatha and Shakuntala 2018), while others performed one to inform subsequent methodology (Davey 1995; Sherbino et al. 2014). It appears that authors made decisions regarding the type, role and relevance of reviews, and integrated them (or not), for a variety of reasons that were often unclear and remained implicit.

Group techniques

Group techniques included working, steering, or expert groups (Aylward et al. 2014; Davis et al. 2005), and various group data collection strategies (e.g., conferences and workshops) (Klick et al. 2014; Skirton et al. 2010). Aylward et al. (2014) used a group technique to draft the initial framework, while Davis et al. (2005) used one to edit a draft created by an expert group. Some authors, including Klick et al. (2014), used a large conference to facilitate input and dissemination, whereas others, such as Skirton et al. (2010), opted for a smaller workshop to review drafts and gain input. Such variation was not unique to this category; it was also evident in the other approaches used to develop competency frameworks.

Stakeholder deliberation

We also noted variation in the role and relevance of stakeholder deliberation strategies, which involved (a) healthcare professionals (Frank 2005; Yates et al. 2007), (b) professional associations (Davey 1995; Gillan et al. 2013), (c) academics (du Toit et al. 2010; Tangayi et al. 2011), (d) charities and non-profit organisations (Tsaroucha et al. 2013), (e) regulatory bodies (du Toit et al. 2010), (f) trade unions and employers (Reetoo et al. 2005), and (g) patients and their families (Davis et al. 2008; Dewing and Traynor 2005). Authors elected to use focus groups (Hamburger et al. 2015), interviews (Tsaroucha et al. 2013), surveys (Tangayi et al. 2011), action research (Dewing and Traynor 2005), conferences and workshops (D. Davis et al. 2005), online wikis (Ambuel et al. 2011), and/or patient advocacy organisations (Yates et al. 2007). Stakeholder input served different purposes: it was used to draft the initial framework (Kirk et al. 2014; Macmillan Cancer Support 2017), to refine and revise draft frameworks (Aylward et al. 2014; Davey 1995), and to gain consensus for the adoption of frameworks (Brewer and Jones 2013; Santy et al. 2005). Despite the focus on ‘patient-centred’ care described in many frameworks, only 21 studies (11%) reported engaging patients or their caregivers. In other instances, the role of stakeholders was difficult to discern. As with other approaches, decisions about whom to engage as stakeholders, how to engage them, and for what purpose were idiosyncratic, which made it difficult to infer their alignment with framework goals.

Mapping exercises

The documents used for mapping exercises included (a) specialty board certification exams or reporting milestones (Cicutto et al. 2017; Klein et al. 2014), (b) national policies and health service agendas (Glanville Geake and Ryder 2009; Mills and Pritchard 2004), (c) relevant frameworks from other countries (L. Liu et al. 2014; McCallum et al. 2018), and (d) international or regional frameworks (Barry 2011; Wölfel et al. 2016). These mapping exercises were used as a foundation for framework development (Boyce et al. 2011; McCallum et al. 2018), to identify a pool of items to use in consensus methods (Liu et al. 2007), to generate behavioural items for identified competencies (Aylward et al. 2014), and to organise and tabulate responses from stakeholders (Loke and Fung 2014). Within this approach, adherence to previous development guidance regarding the importance of regional context appeared inconsistent.

Consensus methods

Consensus methods included (a) the Delphi method (Cappiello et al. 2016; Sousa and Alves 2015), (b) group priority sort (Ling et al. 2017), and (c) nominal group technique (Kirk et al. 2014; Landzaat et al. 2017). Cappiello et al. (2016) used a Delphi method to gain agreement on competencies early in the development of the framework, while Sousa and Alves (2015) used it as a final step to gain consensus. Kirk et al. (2014) used NGT as traditionally described (Delbecq and Van de Ven 1971), while Landzaat et al. (2017) used a hybrid of modified Delphi and NGT components. Ling et al. (2017) was the only study to use the group priority sort method. While consensus is a worthwhile strategy that aligns with previous guidance, the rationale for choosing one approach over another, its sequence, and its application were often unclear, which poses a challenge when we attempt to examine alignment.

Surveys

Surveys also varied by method, purpose, and characteristics of the survey population. Methodologically, some were conducted online or via e-mail (Barnes et al. 2010; Klick et al. 2014), some by post (Bluestein 1993; Davis et al. 2008), and some using a combination of approaches (Baldwin et al. 2007). In terms of function, surveys were used to identify initial competencies (Parkinson’s 2016), to elicit feedback during the development process (Smythe et al. 2014), and to validate the framework subsequently (Sherbino et al. 2014). Sample sizes varied from 33 (Ketterer et al. 2017) to 18,000 (National Physiotherapy Advisory Group [NPAG] 2017), while response rates varied from 3% (NPAG 2017) to 89% (Liu et al. 2016). The actual number of responses ranged from 20 (Ketterer et al. 2017) to 6247 (Liu et al. 2016). As with the other methods, we observed variation in the application and function of surveys.

Focus groups

Focus groups varied in composition, in the size and number of groups, and in purpose. For example, some groups comprised members of a single discipline (Halcomb et al. 2017; Palermo et al. 2016), while others drew members from different disciplines (Booth and Courtnell 2012; Gillan et al. 2013), in direct contrast to the sine qua non of focus groups (Lederman 1990). Some authors used the method in the initial identification and drafting of competencies (Booth and Courtnell 2012; Patterson et al. 2000), while others used it to engage stakeholders during the development process (Banfield and Lackie 2009; Smythe et al. 2014). Authors including Myers et al. (2015) used focus groups to validate draft frameworks. The reasons for developers’ choices, and the methodological variation evident in this approach, remain unclear and inconsistently reported.

Interviews

The forms of interviews included (a) semi-structured (Akbar et al. 2005; Daouk-Öyry et al. 2017), (b) structured (Amendola 2008), (c) in-depth (Blanchette 2015; Tavares et al. 2016), (d) group interviews (not focus groups) (Loke and Fung 2014), (e) critical incident (Lewis et al. 2010; McCarthy and Fitzpatrick 2009), and (f) behavioural event interviews (Calhoun et al. 2008; Chen et al. 2013). Participants in interviews included patients and family members (Dijkman et al. 2017; Patterson et al. 2000), academics (Chen et al. 2013; Gardner et al. 2006), and healthcare professionals (Calhoun et al. 2008; Chen et al. 2013). Interviews were conducted to gain expert input (Smythe et al. 2014; Tavares et al. 2016), to gain insight into practice (Dunn et al. 2000; McCarthy and Fitzpatrick 2009), to confirm findings from other methods (i.e., triangulation) (Dunn et al. 2000; Palermo et al. 2017), and to solicit contributions from diverse stakeholders (Kwan et al. 2016). The number of interviews conducted was often not reported; however, several authors provided details on the population, technique, and analysis for interviews in their studies (Palermo et al. 2017; Tavares et al. 2016). As with other approaches, whom to interview, how, and for what purpose were often not adequately reported, which presents a challenge when we attempt to evaluate the outcomes.

Practice analysis

Practice analysis involved methods such as (a) functional analysis (Bench et al. 2003; Palermo et al. 2016), (b) analysis of administrative data (Dressler et al. 2006; Stucky et al. 2010), (c) direct observation of practice (Dewing and Traynor 2005; Underwood et al. 1996), (d) critical incident technique (CIT) (Dunn et al. 2000; Lewis et al. 2010), (e) review of position descriptions (Akbar et al. 2005; Fidler 1997), and (f) task or role analysis (Cattini 1999; Chang et al. 2013). Dressler et al. (2006) identified commonly encountered conditions in billing data, while Stucky et al. (2010) and Shaughnessy et al. (2013) identified commonly recorded diagnostic codes to inform the development of competency frameworks. Dunn et al. (2000), Underwood et al. (1996), and Dewing and Traynor (2005) observed practice in person, while Patterson et al. (2000) observed video-recorded interactions to develop an understanding of practice. Practice analyses were used to inform the initial list of competencies (Dressler et al. 2006; Fidler 1997), as a means of capturing the complexity of practice in context (Dunn et al. 2000; Underwood et al. 1996), to triangulate data from other methods (Lewis et al. 2010; McCarthy and Fitzpatrick 2009), and as a means of validating frameworks (Carrington et al. 2011). Timeframes of data collection also varied considerably, and were not always reported. The variation within this approach was perhaps to be expected given the differences in practice between professions. Despite existing guidance on the importance of job/practice analysis (Lucia and Lepsinger 1999a, b; Roe 2002), this method was rarely used, which raises the question of why, given its capacity to explore the complexities of practice.

Discussion

Competency frameworks serve various roles, including outlining the characteristics of a competent workforce, facilitating mobility, and analysing or assessing expertise. Given the potential limitations of existing development guidelines, combined with the known complexities of practice and the practical challenges faced by framework developers, we sought to understand the choices made when developing competency frameworks. Having examined frameworks across multiple contexts, we suggest that: variability exists both in which methods or combinations of methods developers use and within methods; adherence to existing guidance is inconsistent (e.g., most studies neglect practice analyses, but include multiple methods); limited connections are made between intended use and methodological choices; and outcomes are inconsistently reported.

In examining how competency frameworks are developed, we identified a lack of guidance on how to identify the most appropriate methods. While existing guidance permits and/or encourages a certain flexibility (Table 1), we did not identify any guidance on making those choices or on examining their suitability for the intended purpose or for the claims authors intend to make about their outcomes (i.e., the competency framework). In other words, existing guidance acknowledges that what is fit for one setting, profession, or intended use may not be fit for another, hence the flexibility and variability (Whiddett and Hollyforde 1999). While this flexibility seems necessary, existing guidelines also appear to lack organizing conceptual frameworks. For example, social sciences and humanities research often includes conceptual or theoretical frameworks as a means to impose, organize, prioritize or align methodological choices. Such alignment, which underpins validity arguments, appears to be challenged by practicalities when frameworks are developed. That is, we infer from the heterogeneity of our findings that methodological choices may have been influenced by practicalities such as available resources, timeframes, and the experience and expertise of developers. Other factors may include the maturity of the profession, the perspectives and mandate of the developer (i.e., who is creating the framework), the consistency of roles within the profession, and the complexity of practice, which is enacted within broader social contexts. These influencing factors remained largely implicit. Without sufficient guidance on these conceptual and practical issues, the utility and validity of a framework become less clear, or difficult to examine.

Limitations in guidelines related to methodological choices ultimately leave producers and users struggling to judge the suitability, utility and validity of competency frameworks. By developing competency frameworks with limited conceptual, theoretical or “use” alignment, we risk perpetuating frameworks that acquire an unintended or unwarranted legitimacy. This may result in what we could term a ‘false-god’ framework: an object afforded high value that is illegitimate or inaccurate in its professed authority or capability (Toussaint 2009). That is, despite these limitations in development, the outcomes are ‘worshipped’, or treated as legitimate or accurate representations of practice, without sufficient conceptual or empirical support derived from the methods used, or alignment with the intended purpose. It has been argued, for example, that social contexts and the complexities of clinical practice remain largely ignored in current competency frameworks (Bradley et al. 2015). Outcomes (i.e., final products) could perhaps (unknowingly) be prioritised over accurate representations of practice, thus limiting their suitability and utility and threatening validity arguments. Existing guidance cautions that the more important the intended use of a framework, the more its validity needs to be assured (Heywood et al. 1992; Knapp and Knapp 1995). If validity is compromised, this ‘false-god’ could exert substantial downstream effects, including poor definitions of competence and threats to curriculum and assessment frameworks. These implications warrant improved guidance on development and evaluation processes.

As a way forward, we may need to revisit and refine guidance on competency framework development to include ways of capturing and/or representing the complexity of practice, borrowing from the philosophical guidance of mixed methods research to improve suitability, utility and validity, while also establishing reporting and evaluation principles (see Fig. 3 for a conceptual framework). First, development guidelines may need to leverage, if not require, the affordances of conceptual frameworks associated with systems theory, social contexts, and mixed-methods approaches to research. Doing so may provide developers with organizing frameworks that address underlying philosophical positions, assumptions, commitments, and what counts as evidence of rigour and validity. Second, those developing frameworks should consider three core issues in order to align purpose with process: “binary/continuum; atomistic/holistic; and, context-specific/context-general” (Child and Shaw 2019; see Table 1). These considerations require developers to explicitly consider the scope or intended use of the framework (which will inform their validity arguments); the level of granularity (which will inform their methods and alignment); and the contexts in which the framework may be enacted (which will inform the degree of contextual specificity required in the development process). Integrating organizing frameworks of this kind, and the associated arguments, into guidelines may lead to better alignment between intended uses, methods, and sequences, such that they are deemed “fit for purpose”. This shifts the emphasis from what or how many methods were used (since any one method can be aligned with more than one purpose) to the theoretical and functional alignment of methods with the rationale for development and intended uses (Child and Shaw 2019).
Until guidelines of this kind are implemented, we suggest that interpretations of the utility and validity of outcomes (i.e., competency frameworks) may remain variable and uncertain (Arundel et al. 2019; Child and Shaw 2019; Simera et al. 2008).

In addition to improving development guidelines, we may also support developers and users of competency frameworks by creating reporting guidelines that provide structure and clarity (Simera et al. 2008; Moher et al. 2010; Simera et al. 2010). Such guidelines might incorporate the recommendations above. They might also adopt a format that borrows from recently described layered analyses of educational interventions (Cianciolo and Regehr 2019; Horsley and Regehr 2018; Varpio et al. 2012). Applied to competency framework development, techniques (i.e., the selected methods) may be regarded as surface functions that are highly context dependent, whereas the underlying principles and philosophy are context independent. This distinction may help to accommodate the flexibility required when providing guidance to multiple professions across varying contexts. We submit that, without explicit consideration of the proposals outlined above, judgements about the suitability, utility and validity of outcomes may leave too much room for interpretation. However, the inconsistent adherence to existing guidance observed in this review suggests that future guidance may also face challenges to implementation.

Limitations

Our study should be considered in light of its limitations. Despite our attempts to be comprehensive, we may not have identified all relevant studies. Our search strategy included terms previously used to describe the development of competency frameworks in various professions, but other terms may exist. The keywords used to index papers are inconsistent, and a wide variety of descriptive terms appear in abstracts. Our search and review were restricted to articles published in English, although such a restriction does not inherently bias a review (Morrison et al. 2012). The Google Scholar search was limited to the first 1000 results, which exceeds the first 200–300 results considered adequate for grey literature searches (Haddaway et al. 2015). No new codes were generated after approximately 50 articles had been coded, which suggests that including additional literature would likely not have changed the overall findings of our review. Many authors provided little detail about their underlying assumptions, rationale, and selection and conduct of methods. As a result, our review cannot provide a complete account of every aspect of each included study. Finally, research on competency frameworks, EPAs and competency-based education more generally is evolving rapidly, so our findings may not capture the most recent developments. Nonetheless, our review offers a comprehensive overview of the development of competency frameworks to date, along with suggestions for future directions and research.

Conclusion

Our review identified and explored the research on competency framework development. Research to date has focused predominantly on framework outcomes, with considerably less attention devoted to the development process. Our findings demonstrate that this process varied substantially, both across and within professions, in the choice of methods and in how the process was reported. There is evidence of inconsistent adherence to existing guidance, and some indication that existing guidelines may be insufficient. This may create uncertainty about the utility and validity of the outcomes, which may in turn confer unintended or unwarranted legitimacy on them. In light of our findings, competency framework development may benefit from improved guidance. Such guidance should require a focus on organizing conceptual frameworks that promote the functional alignment of methods and strategies with intended uses and contexts. It should also help developers identify approaches better positioned to overcome the challenges associated with competency framework development, including sufficiently capturing the complexities of practice. Extending existing guidelines in these ways could be complemented by further research on the implementation, reporting and evaluation of competency framework outcomes.

References

Akbar, H., Hill, P. S., Rotem, A., Riley, I. D., Zwi, A. B., Marks, G. C., et al. (2005). Identifying competencies for Australian health professionals working in international health. Asia-Pacific Journal of Public Health, 17(2), 99–103. https://doi.org/10.1177/101053950501700207.

AlShammari, T., Jennings, P. A., & Williams, B. (2018). Emergency medical services core competencies: A scoping review. Health Professions Education. https://doi.org/10.1016/j.hpe.2018.03.009.

Ambuel, B., Trent, K., Lenahan, P., Cronholm, P., Downing, D., Jelley, M., et al. (2011). Competencies needed by health professionals for addressing exposure to violence and abuse in patient care. Eden Prairie, MN.

Amendola, M. L. (2008). An examination of the leadership competency requirements of nurse leaders in healthcare information technology. ProQuest Information & Learning US, US. Retrieved from http://media.proquest.com/media/pq/classic/doc/1674957421/fmt/ai/rep/NPDF?hl=information,information,for,for,nurses,nurse,nurses,nurse&cit:auth=Amendola,+Mark+Lawrence.

Arksey, H., & O’Malley, L. (2005). Scoping studies: Towards a methodological framework. International Journal of Social Research Methodology, 8(1), 19–32. https://doi.org/10.1080/1364557032000119616.

Arundel, C., James, S., Northgraves, M., & Booth, A. (2019). Study reporting guidelines: How valid are they? Contemporary Clinical Trials Communications, 14, 100343. https://doi.org/10.1016/j.conctc.2019.100343.

Aylward, M., Nixon, J., & Gladding, S. (2014). An entrustable professional activity (EPA) for handoffs as a model for EPA assessment development. Academic Medicine, 89(10), 1335–1340. https://doi.org/10.1097/ACM.0000000000000317.

Baer, W. (1986). Expertise and professional standards. Work and Occupations, 13(4), 532–552. https://doi.org/10.1177/0730888486013004005.

Baldwin, K. M., Lyon, B. L., Clark, A. P., Fulton, J., Davidson, S., Dayhoff, N., et al. (2007). Developing clinical nurse specialist practice competencies. Clinical Nurse Specialist, 21(6), 297–303. https://doi.org/10.1097/01.NUR.0000299619.28851.69.

Banfield, V., & Lackie, K. (2009). Performance-based competencies for culturally responsive interprofessional collaborative practice. Journal of Interprofessional Care, 23(6), 611–620. https://doi.org/10.3109/13561820902921654.

Barnes, T. A., Gale, D. D., Kacmarek, R. M., & Kageler, W. V. (2010). Competencies needed by graduate respiratory therapists in 2015 and beyond. Respiratory Care, 55(5), 601–616.

Barry, M. M. (2011). The CompHP core competencies framework for health promotion short version. Health Education & Behavior, 39(6), 648–662. https://doi.org/10.1177/1090198112465620.

Bench, S., Crowe, D., Day, T., Jones, M., & Wilebore, S. (2003). Developing a competency framework for critical care to match patient need. Intensive & Critical Care Nursing, 19(3), 136–142. https://doi.org/10.1016/S0964-3397(03)00030-2.

Black, J., Allen, D., Redfern, L., Muzio, L., Rushowick, B., Balaski, B., et al. (2008). Competencies in the context of entry-level registered nurse practice: A collaborative project in Canada. International Nursing Review, 55(2), 171–178. https://doi.org/10.1111/j.1466-7657.2007.00626.x.

Blanchette, L. (2015). An exploratory study of the role of the organization and the. ProQuest Information & Learning US, US. Retrieved from http://ovidsp.ovid.com/ovidweb.cgi?T=JS&PAGE=reference&D=psyc13a&NEWS=N&AN=2016-17339-289.

Bluestein, P. (1993). A model for developing standards of care of the chiropractic paraprofessional by task analysis. Journal of Manipulative and Physiological Therapeutics, 16(4), 228–237.

Booth, M., & Courtnell, T. (2012). Developing competencies and training to enable senior nurses to take on full responsibility for DNACPR processes. International Journal of Palliative Nursing, 18(4), 189–195. https://doi.org/10.12968/ijpn.2012.18.4.189.

Bordage, G., & Harris, I. (2011). Making a difference in curriculum reform and decision-making processes. Medical Education, 45(1), 87–94. https://doi.org/10.1111/j.1365-2923.2010.03727.x.

Boyce, P., Spratt, C., Davies, M., & McEvoy, P. (2011). Using entrustable professional activities to guide curriculum development in psychiatry training. BMC Medical Education, 11(1), 96. https://doi.org/10.1186/1472-6920-11-96.

Bradley, J. M., Unal, R., Pinto, C. A., & Cavin, E. S. (2015). Competencies for governance of complex systems of systems. International Journal of System of Systems Engineering, 6(1/2), 71. https://doi.org/10.1504/ijsse.2015.068804.

Brewer, M., & Jones, S. (2013). An interprofessional practice capability framework focusing on safe, high-quality, client-centred health service. Journal of Allied Health, 42(2), e45–e49.

Brown, B. (1968). Delphi process: A methodology used for the elicitation of opinions of experts. Santa Monica, CA: RAND Corporation.

Calhoun, J. G., Ramiah, K., Weist, E. M., Shortell, S. M., Dollett, L., Sinioris, M. E., et al. (2008). Development of an interprofessional competency model for healthcare leadership. Journal of Healthcare Management, 53(6), 375–391. https://doi.org/10.1097/00115514-200811000-00006.

Camelo, S. H. H. (2012). Professional competences of nurse to work in intensive care units: An integrative review. Revista Latino-Americana de Enfermagem, 20(1), 192–200. https://doi.org/10.1590/S0104-11692012000100025.

Campion, M. A., Fink, A. A., Ruggeberg, B. J., Carr, L., Phillips, G. M., & Odman, R. B. (2011). Doing competencies well: Best practices in competency modeling. Personnel Psychology, 64, 225–262. https://doi.org/10.1111/j.1744-6570.2010.01207.x.

Cappiello, J., Levi, A., & Nothnagle, M. (2016). Core competencies in sexual and reproductive health for the interprofessional primary care team. Contraception, 93(5), 438–445. https://doi.org/10.1016/j.contraception.2015.12.013.

Carrington, C., Weir, J., & Smith, P. (2011). The development of a competency framework for pharmacists providing cancer services. Journal of Oncology Pharmacy Practice, 17(3), 168–178. https://doi.org/10.1177/1078155210365582.

Cattini, P. (1999). Core competencies for clinical nurse specialists: A usable framework. Journal of Clinical Nursing, 8(5), 505–511. https://doi.org/10.1046/j.1365-2702.1999.00285.x.

Chang, A., Bowen, J. L., Buranosky, R. A., Frankel, R. M., Ghosh, N., Rosenblum, M. J., et al. (2013). Transforming primary care training—Patient-centered medical home entrustable professional activities for internal medicine residents. Journal of General Internal Medicine, 28(6), 801–809. https://doi.org/10.1007/s11606-012-2193-3.

Chen, S. P., Krupa, T., Lysaght, R., McCay, E., & Piat, M. (2013). The development of recovery competencies for in-patient mental health providers working with people with serious mental illness. Administration and Policy in Mental Health and Mental Health Services Research, 40(2), 96–116. https://doi.org/10.1007/s10488-011-0380-x.

Child, S. F. J., & Shaw, S. D. (2019). A purpose-led approach towards the development of competency frameworks. Journal of Further and Higher Education, 00(00), 1–14. https://doi.org/10.1080/0309877X.2019.1669773.

Cianciolo, A. T., & Regehr, G. (2019). Learning theory and educational intervention: Producing meaningful evidence of impact through layered analysis. Academic Medicine, 94(6), 789–794. https://doi.org/10.1097/ACM.0000000000002591.

Cicutto, L., Gleason, M., Haas-Howard, C., Jenkins-Nygren, L., Labonde, S., & Patrick, K. (2017). Competency-based framework and continuing education for preparing a skilled school health workforce for asthma care: The Colorado experience. Journal of School Nursing, 33(4), 277–284. https://doi.org/10.1177/1059840516675931.

Collin, A. (1989). Managers’ competence: Rhetoric, reality and research. Personnel Review, 18(6), 20–25. https://doi.org/10.1108/00483488910133459.

Daouk-Öyry, L., Zaatari, G., Sahakian, T., Rahal Alameh, B., & Mansour, N. (2017). Developing a competency framework for academic physicians. Medical Teacher, 39(3), 269–277. https://doi.org/10.1080/0142159X.2017.1270429.

Davey, G. D. (1995). Developing competency standards for occupational health nurses in Australia. AAOHN Journal, 43(3), 138–143.

Davis, D., Stullenbarger, E., Dearman, C., & Kelley, J. A. (2005). Proposed nurse educator competencies: Development and validation of a model. Nursing Outlook, 53(4), 206–211. https://doi.org/10.1016/j.outlook.2005.01.006.

Davis, R., Turner, E., Hicks, D., & Tipson, M. (2008). Developing an integrated career and competency framework for diabetes nursing. Journal of Clinical Nursing, 17(2), 168–174. https://doi.org/10.1111/j.1365-2702.2006.01866.x.

Delbecq, A. L., & Van de Ven, A. H. (1971). A group process model for problem identification and program planning. The Journal of Applied Behavioral Science, 7(4), 466–492. https://doi.org/10.1177/002188637100700404.

Dewing, J., & Traynor, V. (2005). Admiral nursing competency project: Practice development and action research. Journal of Clinical Nursing, 14(6), 695–703. https://doi.org/10.1111/j.1365-2702.2005.01158.x.

Dijkman, B., Reehuis, L., & Roodbol, P. (2017). Competences for working with older people: The development and verification of the European core competence framework for health and social care professionals working with older people. Educational Gerontology, 43(10), 483–497. https://doi.org/10.1080/03601277.2017.1348877.

Dressler, D. D., Pistoria, M. J., Budnitz, T. L., McKean, S. C. W., & Amin, A. N. (2006). Core competencies in hospital medicine: Development and methodology. Journal of Hospital Medicine, 1(1), 48–56. https://doi.org/10.1002/jhm.6.

du Toit, R., Cook, C., Minnies, D., & Brian, G. (2010). Developing a competency-based curriculum for eye care managers in Sub-Saharan Africa. Rural and Remote Health, 10(2), 1278.

Dunn, S. V., Lawson, D., Robertson, S., Underwood, M., Clark, R., Valentine, T., et al. (2000). The development of competency standards for specialist critical care nurses. Journal of Advanced Nursing, 31(2), 339–346. https://doi.org/10.1046/j.1365-2648.2000.01292.x.

Duong, H. V., Herrera, L. N., Moore, J. X., Donnelly, J., Jacobson, K. E., Carlson, J. N., et al. (2017). National characteristics of emergency medical services responses for older adults in the United States. Prehospital Emergency Care. https://doi.org/10.1080/10903127.2017.1347223.

Eraut, M. (1994). Developing professional knowledge and competence. London: Routledge. https://doi.org/10.4324/9780203486016.

Fidler, J. R. (1997). The role of the phlebotomy technician: Skills and knowledge required for successful clinical performance. Evaluation and the Health Professions, 20(3), 286–301. https://doi.org/10.1177/016327879702000303.

Frank, J. R. (Ed.). (2005). The CanMEDS 2005 physician competency framework. Better standards. Better physicians. Better care. Ottawa: The Royal College of Physicians and Surgeons of Canada.

Frank, J., Snell, L., & Sherbino, J. (2015). CanMEDS 2015 physician competency framework. Ottawa: Royal College of Physicians and Surgeons of Canada. http://www.royalcollege.ca/portal/page/portal/rc/canmeds/resources/publications.

Galbraith, K., Ward, A., & Heneghan, C. (2017). A real-world approach to evidence-based medicine in general practice: A competency framework derived from a systematic review and Delphi process. BMC Medical Education, 17(1), 1–15. https://doi.org/10.1186/s12909-017-0916-1.

Garavan, T., & McGuire, D. (2001). Competencies & workplace learning: The rhetoric & the reality. Journal of Workplace Learning, 13(4), 144–164.

Gardner, G., Carryer, J., Gardner, A., & Dunn, S. (2006). Nurse practitioner competency standards: Findings from collaborative Australian and New Zealand research. International Journal of Nursing Studies, 43(5), 601–610. https://doi.org/10.1016/j.ijnurstu.2005.09.002.

Gillan, C., Uchino, M., Giuliani, M., Millar, B.-A. A., & Catton, P. (2013). Defining imaging literacy in radiation oncology interprofessionally: Toward a competency profile for Canadian residency programs. Journal of Medical Imaging and Radiation Sciences, 44(3), 150–156. https://doi.org/10.1016/j.jmir.2013.03.002.

GlanvilleGeake, B., & Ryder, R. (2009). A community nurse clinical competency framework. British Journal of Community Nursing, 14(12), 525–528. https://doi.org/10.12968/bjcn.2009.14.12.45527.

Gonczi, A., Hager, P., Oliver, L., & Oliver, M. L. (1990). Establishing competency-based standards in the professions (Research Paper No. 1).

Grant, M. J., & Booth, A. (2009). A typology of reviews: An analysis of 14 review types and associated methodologies. Health Information & Libraries Journal, 26(2), 91–108. https://doi.org/10.1111/j.1471-1842.2009.00848.x.

Greenhalgh, T., & Peacock, R. (2005). Effectiveness and efficiency of search methods in systematic reviews of complex evidence: Audit of primary sources. British Medical Journal, 331(7524), 1064–1065. https://doi.org/10.1136/bmj.38636.593461.68.

Haddaway, N. R., Collins, A. M., Coughlin, D., & Kirk, S. (2015). The role of Google Scholar in evidence reviews and its applicability to grey literature searching. PLoS ONE, 10(9), 1–17. https://doi.org/10.1371/journal.pone.0138237.

Halcomb, E., Stephens, M., Bryce, J., Foley, E., & Ashley, C. (2017). The development of professional practice standards for Australian general practice nurses. Journal of Advanced Nursing, 73(8), 1958–1969. https://doi.org/10.1111/jan.13274.

Hamburger, E. K., Lane, J. L., Agrawal, D., Boogaard, C., Hanson, J. L., Weisz, J., et al. (2015). The referral and consultation entrustable professional activity: Defining the components in order to develop a curriculum for pediatric residents. Academic Pediatrics, 15(1), 5–8. https://doi.org/10.1016/j.acap.2014.10.012.

Hay-McBer. (1996). Scaled competency dictionary. Boston, MA: Hay-McBer.

Hemalatha, R., & Shakuntala, B. (2018). A Delphi approach to developing a core competency framework for registered nurses in Karnataka, India. Nitte University Journal of Health Science, 8(2), 3–7. https://doi.org/10.12927/cjnl.2011.22142.

Heywood, L., Gonczi, A., & Hager, P. (1992). A guide to development of competency standards for professions. Canberra.

Ho, M. J., Yu, K. H., Hirsh, D., Huang, T. S., & Yang, P. C. (2011). Does one size fit all? Building a framework for medical professionalism. Academic Medicine, 86(11), 1407–1414.

Hodges, B. D., & Lingard, L. (2012). The question of competence. New York: Cornell University Press. https://doi.org/10.7591/9780801465802.

Horsley, T., & Regehr, G. (2018). When are two interventions the same? Implications for reporting guidelines in education. Medical Education, 52(2), 141–143. https://doi.org/10.1111/medu.13496.

Jacobson, A., McGuire, M., Zorzi, R., Lowe, M., Oandasan, I., & Parker, K. (2013). The group priority sort: A participatory decision-making tool for healthcare leaders. Healthcare Quality, 14(4), 47–53.

Jacox, A. (1997). Determinants of who does what in health care. Online Journal of Issues in Nursing, 2(4), Manuscript 1. http://www.nursingworld.org/MainMenuCategories/ANAMarketplace/ANAPeriodicals/OJIN/TableofContents/Vol21997/No4Dec97/DeterminantsofWhoDoesWhatinHealthCare.aspx.

Ketterer, A., Salzman, D., Branzetti, J., & Gisondi, M. (2017). Supplemental milestones for emergency medicine residency programs: A validation study. Western Journal of Emergency Medicine, 18(1), 69–75. https://doi.org/10.5811/westjem.2016.10.31499.

Kirk, M., Tonkin, E., & Skirton, H. (2014). An iterative consensus-building approach to revising a genetics/genomics competency framework for nurse education in the UK. Journal of Advanced Nursing, 70(2), 405–420. https://doi.org/10.1111/jan.12207.

Klein, M. D., Schumacher, D. J., & Sandel, M. (2014). Assessing and managing the social determinants of health: Defining an entrustable professional activity to assess residents’ ability to meet societal needs. Academic Pediatrics, 14(1), 10–13. https://doi.org/10.1016/j.acap.2013.11.001.

Klick, J. C., Friebert, S., Hutton, N., Osenga, K., Pituch, K. J., Vesel, T., et al. (2014). Developing competencies for pediatric hospice and palliative medicine. Pediatrics, 134(6), e1670–e1677. https://doi.org/10.1542/peds.2014-0748.

Knapp, J. E., & Knapp, L. G. (1995). Practice analysis: Building the foundation for validity. In J. Impara (Ed.), Licensure testing: Purposes, procedures, and practices (pp. 93–116). Buros Center for Testing.

Kwan, J., Crampton, R., Mogensen, L. L., Weaver, R., Van Der Vleuten, C. P. M., & Hu, W. C. Y. (2016). Bridging the gap: A five stage approach for developing specialty-specific entrustable professional activities. BMC Medical Education, 16(1), 1–13. https://doi.org/10.1186/s12909-016-0637-x.

Landzaat, L. H., Barnett, M. D., Buckholz, G. T., Gustin, J. L., Hwang, J. M., Levine, S. K., et al. (2017). Development of entrustable professional activities for hospice and palliative medicine fellowship training in the United States. Journal of Pain and Symptom Management, 54(4), 609–616.e1. https://doi.org/10.1016/j.jpainsymman.2017.07.003.

Lederman, L. C. (1990). Assessing educational effectiveness: The focus group interview as a technique for data collection. Communication Education, 39(2), 117–127. https://doi.org/10.1080/03634529009378794.

Lester, S. (2014). Professional competence standards and frameworks in the United Kingdom. Assessment & Evaluation in Higher Education, 39(1), 38–52. https://doi.org/10.1080/02602938.2013.792106.

Lewis, R., Yarker, J., Donaldson-Feilder, E., Flaxman, P., & Munir, F. (2010). Using a competency-based approach to identify the management behaviours required to manage workplace stress in nursing: A critical incident study. International Journal of Nursing Studies, 47(3), 307–313. https://doi.org/10.1016/j.ijnurstu.2009.07.004.

Ling, S., Watson, A., & Gehrs, M. (2017). Developing an addictions nursing competency framework within a Canadian context. Journal of Addictions Nursing, 28(3), 110–116. https://doi.org/10.1097/JAN.0000000000000173.

Lingard, L. (2012). Rethinking competence in the context of teamwork. In B. D. Hodges & L. Lingard (Eds.), The Question of Competence (pp. 42–70). New York: Cornell University Press.

Liu, L., Curtis, J., & Crookes, P. (2014). Identifying essential infection control competencies for newly graduated nurses: A three-phase study in Australia and Taiwan. Journal of Hospital Infection, 86(2), 100–109. https://doi.org/10.1016/j.jhin.2013.08.009.

Liu, M., Kunaiktikul, W., Senaratana, W., Tonmukayakul, O., & Eriksen, L. (2007). Development of competency inventory for registered nurses in the People’s Republic of China: Scale development. International Journal of Nursing Studies, 44(5), 805–813. https://doi.org/10.1016/j.ijnurstu.2006.01.010.

Liu, Z., Tian, L., Chang, Q., Sun, B., & Zhao, Y. (2016). A competency model for clinical physicians in China: A cross-sectional survey. PLoS ONE, 11(12), e0166252. https://doi.org/10.1371/journal.pone.0166252.

Loke, A. Y., & Fung, O. W. M. (2014). Nurses’ competencies in disaster nursing: Implications for curriculum development and public health. International Journal of Environmental Research and Public Health, 11(3), 3289–3303. https://doi.org/10.3390/ijerph110303289.

Lucia, A. D., & Lepsinger, R. (1999). The art and science of competency models: Pinpointing critical success factors in organizations. Jossey-Bass/Pfeiffer. Retrieved October 3, 2019 from https://www.wiley.com/en-ca/The+Art+and+Science+of+Competency+Models%3A+Pinpointing+Critical+Success+Factors+in+Organizations-p-9780787946029.

Lucia, A. D., & Lepsinger, R. (1999b). The art and science of competency models: Pinpointing critical success factors in organizations. San Francisco: Jossey-Bass/Pfeiffer.

Macmillan Cancer Support. (2017). The Macmillan allied health professions competence framework for those working with people affected by cancer. London: Macmillan Cancer Support.

Makulova, A. T., Alimzhanova, G. M., Bekturganova, Z. M., Umirzakova, Z. A., Makulova, L. T., & Karymbayeva, K. M. (2015). Theory and practice of competency-based approach in education. International Education Studies, 8(8), 183. https://doi.org/10.5539/ies.v8n8p183.

Mansfield, R. S. (2000). Practical questions for building competency models. Communications, 1–34. Retrieved from http://workitect.performatechnologies.com/pdf/PracticalQuestions.pdf.

Marrelli, A. F., Tondora, J., & Hoge, M. A. (2005). Strategies for developing competency models. Administration and Policy In Mental Health, 32(5–6), 533–561. https://doi.org/10.1007/s10488-005-3264-0.

McCallum, M., Carver, J., Dupere, D., Ganong, S., Henderson, J. D., McKim, A., et al. (2018). Developing a palliative care competency framework for health professionals and volunteers: The Nova Scotian experience. Journal of Palliative Medicine, 21(7), 947–955. https://doi.org/10.1089/jpm.2017.0655.

McCarthy, G., & Fitzpatrick, J. J. (2009). Development of a competency framework for nurse managers in Ireland. The Journal of Continuing Education in Nursing, 40(8), 346–350. https://doi.org/10.3928/00220124-20090723-01.

Mendoza, J. (1994). ‘If the suit doesn’t fit, why wear it?’ Competency-based training and health promotion. Health Promotion Journal of Australia, 4(2), 9.

Mills, C., & Pritchard, T. (2004). A competency framework for nurses in specialist roles. Nursing Times, 26(43), 28–29.

Moaveni, A., Gallinaro, A., Conn, L., Callahan, S., Hammond, M., & Oandasan, I. (2010). A Delphi approach to developing a core competency framework for family practice registered nurses in Ontario. Nursing Leadership, 23(4), 45–60.

Moerkamp, T., & Onstenk, J. (1991). From profession to training: An inventory of procedures for the development of competency profiles. Referenced in van der Klink and Boon, 2002 (original in Dutch).

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & The PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7), e1000097. https://doi.org/10.1371/journal.pmed.1000097.

Moher, D., Schulz, K. F., Simera, I., & Altman, D. G. (2010). Guidance for developers of health research reporting guidelines. PLoS Medicine, 7(2), e1000217.

Morrison, A., Polisena, J., Husereau, D., Moulton, K., Clark, M., Fiander, M., et al. (2012). The effect of English-language restriction on systematic review-based meta-analyses: A systematic review of empirical studies. International Journal of Technology Assessment in Health Care, 28(2), 138–144. https://doi.org/10.1017/S0266462312000086.

Munn, Z., Peters, M. D. J., Stern, C., Tufanaru, C., McArthur, A., & Aromataris, E. (2018). Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Medical Research Methodology, 18(1), 143.

Myers, J., Krueger, P., Webster, F., Downar, J., Herx, L., Jeney, C., et al. (2015). Development and validation of a set of palliative medicine entrustable professional activities: Findings from a mixed methods study. Journal of Palliative Medicine, 18(8), 682–690. https://doi.org/10.1089/jpm.2014.0392.

National Physiotherapy Advisory Group. (2017). Competency Profile for Physiotherapists in Canada. National Physiotherapy Advisory Group.

Palermo, C., Capra, S., Beck, E. J., Dart, J., Conway, J., & Ash, S. (2017). Development of advanced practice competency standards for dietetics in Australia. Nutrition and Dietetics, 74(4), 327–333. https://doi.org/10.1111/1747-0080.12338.

Palermo, C., Conway, J., Beck, E. J., Dart, J., Capra, S., & Ash, S. (2016). Methodology for developing competency standards for dietitians in Australia. Nursing and Health Sciences, 18(1), 130–137. https://doi.org/10.1111/nhs.12247.

Parkinson’s UK. (2016). Competencies: A competency framework for nurses working in Parkinson’s disease management 3rd edition. Royal College of Nursing, PDNSA. London: Parkinson’s UK. https://doi.org/10.4137/nmi.s29530.

Patterson, F., Ferguson, E., Lane, P., Farrell, K., Martlew, J., & Wells, A. (2000). A competency model for general practice: Implications for selection, training, and development. British Journal of General Practice, 50(452), 188–193. https://doi.org/10.3399/bjgp13X667196.

Redwood-Campbell, L., Pakes, B., Rouleau, K., MacDonald, C. J., Arya, N., Purkey, E., et al. (2011). Developing a curriculum framework for global health in family medicine: Emerging principles, competencies, and educational approaches. BMC Medical Education, 11(1), 46. https://doi.org/10.1186/1472-6920-11-46.

Reetoo, K. N., Harrington, J. M., & Macdonald, E. B. (2005). Required competencies of occupational physicians: A Delphi survey of UK customers. Occupational and Environmental Medicine, 62(6), 406–413. https://doi.org/10.1136/oem.2004.017061.

Ritchie, J., & Spencer, L. (2002). Qualitative data analysis for applied policy research. In A. Huberman & M. Miles (Eds.), The Qualitative Researcher’s Companion (pp. 305–329). Thousand Oaks: SAGE Publications Inc.

Roe, R. (2002). What makes a competent psychologist? European Psychologist, 7(3), 192–202. https://doi.org/10.1027//1016-9040.7.3.192.

Santy, J., Rogers, J., Davis, P., Jester, R., Kneale, J., Knight, C., et al. (2005). A competency framework for orthopaedic and trauma nursing. Journal of Orthopaedic Nursing, 9(2), 81–86. https://doi.org/10.1016/j.joon.2005.02.003.

Shaughnessy, A. F., Sparks, J., Cohen-Osher, M., Goodell, K. H., Sawin, G. L., & Gravel, J. (2013). Entrustable professional activities in family medicine. Journal of Graduate Medical Education, 5(1), 112–118. https://doi.org/10.4300/JGME-D-12-00034.1.

Sherbino, J., Frank, J. R., & Snell, L. (2014). Defining the key roles and competencies of the clinician-educator of the 21st century: A national mixed-methods study. Academic Medicine, 89(5), 783–789. https://doi.org/10.1097/ACM.0000000000000217.

Shilton, T., Howat, P., James, R., & Lower, T. (2001). Health promotion workforce development and health promotion workforce competency in Australia. Health Promotion Journal of Australia, 12(2), 117–123.

Simera, I., Altman, D. G., Moher, D., Schulz, K. F., & Hoey, J. (2008). Guidelines for reporting health research: The EQUATOR network’s survey of guideline authors. PLoS Medicine, 5(6), 869–874. https://doi.org/10.1371/journal.pmed.0050139.

Simera, I., Moher, D., Hirst, A., Hoey, J., Schulz, K. F., & Altman, D. G. (2010). Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Medicine, 8(1), 24. https://doi.org/10.1186/1741-7015-8-24.

Skirton, H., Lewis, C., Kent, A., & Coviello, D. A. (2010). Genetic education and the challenge of genomic medicine: Development of core competences to support preparation of health professionals in Europe. European Journal of Human Genetics, 18(9), 972–977. https://doi.org/10.1038/ejhg.2010.64.

Smythe, A., Jenkins, C., Bentham, P., & Oyebode, J. (2014). Development of a competency framework for a specialist dementia service. Journal of Mental Health Training, Education and Practice, 9(1), 59–68. https://doi.org/10.1108/JMHTEP-08-2012-0024.

Sousa, J. M., & Alves, E. D. (2015). Nursing competencies for palliative care in home care. ACTA Paulista de Enfermagem, 28(3), 264–269. https://doi.org/10.1590/1982-0194201500044.

Spencer, L., & Spencer, S. (1993). Competence at work. New York: Wiley.

Stucky, E. R., Ottolini, M. C., & Maniscalco, J. (2010). Pediatric hospital medicine core competencies: Development and methodology. Journal of Hospital Medicine, 5(6), 339–343. https://doi.org/10.1002/jhm.774.

Swing, S. R. (2007). The ACGME outcome project: Retrospective and prospective. Medical Teacher, 29(7), 648–654. https://doi.org/10.1080/01421590701392903.

Tangayi, S., Anionwu, E., Westerdale, N., & Johnson, K. (2011). A skills framework for sickle cell disease and thalassaemia. Nursing Times, 107(41), 12–13.

Tavares, W., Bowles, R., & Donelon, B. (2016). Informing a Canadian paramedic profile: Framing concepts, roles and crosscutting themes. BMC Health Services Research, 16(1), 1–16. https://doi.org/10.1186/s12913-016-1739-1.

ten Cate, O. (2005). Entrustability of professional activities and competency-based training. Medical Education, 39(12), 1176–1177. https://doi.org/10.1111/j.1365-2929.2005.02341.x.

ten Cate, O., & Scheele, F. (2007). Competency-based postgraduate training: Can we bridge the gap between theory and clinical practice? Academic Medicine: Journal of the Association of American Medical Colleges, 82(6), 542–547. https://doi.org/10.1097/ACM.0b013e31805559c7.

Toussaint. (2009). False god (Wikipedia page). Retrieved May 30, 2019 from https://en.wikipedia.org/wiki/False_god.

Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., et al. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Annals of Internal Medicine. https://doi.org/10.7326/M18-0850.

Tsaroucha, A., Benbow, S. M., Kingston, P., & Le Mesurier, N. (2013). Dementia skills for all: A core competency framework for the workforce in the United Kingdom. Dementia, 12(1), 29–44. https://doi.org/10.1177/1471301211416302.

Underwood, M., Robertson, S., Clark, R., Crowder, K., Dunn, S., Lawson, D., et al. (1996). The emergence of competency standards for specialist critical care nurses. Australian Critical Care: Official Journal of the Confederation of Australian Critical Care Nurses, 9(2), 68–71. https://doi.org/10.1016/S1036-7314(96)70355-7.

van der Klink, M., & Boon, J. (2002). The investigation of competencies within professional domains. Human Resource Development International, 5(4), 411–424. https://doi.org/10.1080/13678860110059384.

Varpio, L., Bell, R., Hollingworth, G., Jalali, A., Haidet, P., Levine, R., et al. (2012). Is transferring an educational innovation actually a process of transformation? Advances in Health Sciences Education, 17(3), 357–367. https://doi.org/10.1007/s10459-011-9313-4.

Whiddett, S., & Hollyforde, S. (1999). The competencies handbook. London: Institute of Personnel and Development.

Whiddett, S., & Hollyforde, S. (2003). A practical guide to competencies: How to enhance individual and organisational performance. London: Chartered Institute of Personnel and Development.

Winter, R., & Maisch, M. (2005). Professional competence and higher education: The ASSET programme. Routledge.

Wölfel, T., Beltermann, E., Lottspeich, C., Vietz, E., Fischer, M. R., & Schmidmaier, R. (2016). Medical ward round competence in internal medicine - An interview study towards an interprofessional development of an Entrustable Professional Activity (EPA). BMC Medical Education, 16(1), 1–10. https://doi.org/10.1186/s12909-016-0697-y.

World Health Organization. (2005). Preparing a health care workforce for the 21st century: The challenge of chronic conditions. Geneva, Switzerland: World Health Organization.

Yates, P., Evans, A., Moore, A., Heartfield, M., Gibson, T., & Luxford, K. (2007). Competency standards and educational requirements for specialist breast nurses in Australia. Collegian (Royal College of Nursing, Australia), 14(1), 11–15. https://doi.org/10.1016/S1322-7696(08)60542-9.

Acknowledgements

The authors wish to thank Ms. Paula Todd and Ms. Megan Anderson for their valuable insights into the search strategy.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

Appendix 2

See Table 9.

Appendix 3: Citations for included studies

Ablah, E., Biberman, D. A., Weist, E. M., Buekens, P., Bentley, M. E., Burke, D., et al. (2014). Improving global health education: Development of a global health competency model. American Journal of Tropical Medicine and Hygiene, 90(3), 560–565. https://doi.org/10.4269/ajtmh.13-0537.

Akbar, H., Hill, P. S., Rotem, A., Riley, I. D., Zwi, A. B., Marks, G. C., & Mark, T. (2005). Identifying competencies for Australian health professionals working in international health. Asia–Pacific Journal of Public Health, 17(2), 99–103. https://doi.org/10.1177/101053950501700207.

Alfieri, E., Alebbi, A., Bedini, M. G., Boni, L., & Foà, C. (2017). Mapping the nursing competences in neonatology: A qualitative research. Acta Bio-Medica: Atenei Parmensis, 88(3–S), 51–58. https://doi.org/10.23750/abm.v88i3-s.6614.

Ali, A. M. (2012). Pharmacist prescribing in the Australian context: development and validation of competency standards and identification of pharmacists’ educational needs. Monash University. Retrieved from https://trove.nla.gov.au/work/186453738.

AlShammari, T., Jennings, P. A., & Williams, B. (2018). Emergency medical services core competencies: A scoping review. Health Professions Education. https://doi.org/10.1016/j.hpe.2018.03.009.

Ambuel, B., Trent, K., Lenahan, P., Cronholm, P., Downing, D., Jelley, M., et al. (2011). Competencies needed by health professionals for addressing exposure to violence and abuse in patient care. Eden Prairie, MN.

Amendola, M. L. (2008). An examination of the leadership competency requirements of nurse leaders in healthcare information technology. ProQuest Information & Learning US, US. Retrieved from http://media.proquest.com/media/pq/classic/doc/1674957421/fmt/ai/rep/NPDF?hl=information,information,for,for,nurses,nurse,nurses,nurse&cit:auth=Amendola,+Mark+Lawrence.

Anderson, R. O. (2016). Assessing nurse manager competencies in a military hospital. ProQuest Dissertations and Theses. ProQuest Information & Learning US, US. Retrieved from http://primo-pmtna01.hosted.exlibrisgroup.com/openurl/01WMU/01WMU_SERVICES??url_ver=Z39.88-2004&rft_val_fmt=info:ofi/fmt:kev:mtx:dissertation&genr.

Ash, S., Gonczi, A., & Hager, P. (1992). Combining research methodologies to develop competency-based standards for dietitians: A case study for the professions. Canberra.

Aylward, M., Nixon, J., & Gladding, S. (2014). An entrustable professional activity (EPA) for handoffs as a model for EPA assessment development. Academic Medicine, 89(10), 1335–1340. https://doi.org/10.1097/acm.0000000000000317.

Baldwin, K. M., Lyon, B. L., Clark, A. P., Fulton, J., Davidson, S., & Dayhoff, N. (2007). Developing clinical nurse specialist practice competencies. Clinical Nurse Specialist, 21(6), 297–302. https://doi.org/10.1097/01.nur.0000299619.28851.69.

Banfield, V., & Lackie, K. (2009). Performance-based competencies for culturally responsive interprofessional collaborative practice. Journal of Interprofessional Care, 23(6), 611–620. https://doi.org/10.3109/13561820902921654.

Barnes, T. A., Gale, D. D., Kacmarek, R. M., & Kageler, W. V. (2010). Competencies needed by graduate respiratory therapists in 2015 and beyond. Respiratory Care, 55(5), 601–616.

Barrett, H., & Bion, J. F. (2006). Development of core competencies for an international training programme in intensive care medicine. Intensive Care Medicine, 32(9), 1371–1383. https://doi.org/10.1007/s00134-006-0215-5.

Barry, M. M. (2011). The CompHP core competencies framework for health promotion short version. Health Education & Behavior: The Official Publication of the Society for Public Health Education, 39(6), 648–662. https://doi.org/10.1177/1090198112465620.

Basile, J. L., & Stone, D. B. (1986). Profile of an effective hospice team member. Omega: Journal of Death and Dying, 17(4), 353–362. https://doi.org/10.2190/046w-wvd7-x7p4-154k.

Bench, S., Crowe, D., Day, T., Jones, M., & Wilebore, S. (2003). Developing a competency framework for critical care to match patient need. Intensive & Critical Care Nursing, 19(3), 136–142. https://doi.org/10.1016/s0964-3397(03)00030-2.

Black, J., Allen, D., Redfern, L., Muzio, L., Rushowick, B., Balaski, B., et al. (2008). Competencies in the context of entry-level registered nurse practice: A collaborative project in Canada. International Nursing Review, 55(2), 171–178. https://doi.org/10.1111/j.1466-7657.2007.00626.x.

Blanchette, L. (2015). An exploratory study of the role of the organization and the… ProQuest Information & Learning US, US. Retrieved from http://ovidsp.ovid.com/ovidweb.cgi?T=JS&PAGE=reference&D=psyc13a&NEWS=N&AN=2016-17339-289.

Bluestein, P. (1993). A model for developing standards of care of the chiropractic paraprofessional by task analysis. Journal of Manipulative and Physiological Therapeutics, 16(4), 228–237.

Bobo, N., Adams, V. W., & Cooper, L. (2002). Excellence in school nursing practice: Developing a national perspective on school nurse competencies. The Journal of School Nursing: The Official Publication of the National Association of School Nurses, 18(5), 277–285.

Booth, M., & Courtnell, T. (2012). Developing competencies and training to enable senior nurses to take on full responsibility for DNACPR processes. International Journal of Palliative Nursing, 18(4), 189–195. https://doi.org/10.12968/ijpn.2012.18.4.189.

Boyce, P., Spratt, C., Davies, M., & McEvoy, P. (2011). Using entrustable professional activities to guide curriculum development in psychiatry training. BMC Medical Education, 11(1), 96. https://doi.org/10.1186/1472-6920-11-96.

Brewer, M., & Jones, S. (2013). An interprofessional practice capability framework focusing on safe, high-quality, client-centred health service. Journal of Allied Health, 42(2), e45–e49.

Brown, C. R. J., Criscione-Schreiber, L., O’Rourke, K. S., Fuchs, H. A., Putterman, C., Tan, I. J., et al. (2016). What is a rheumatologist and how do we make one? Arthritis Care & Research, 68(8), 1166–1172. https://doi.org/10.1002/acr.22817.

Brown, S. J. (1998). A framework for advanced practice nursing. Journal of Professional Nursing, 14(3), 157–164. https://doi.org/10.1016/s8755-7223(98)80091-4.

Cai, D., Kunaviktikul, W., Klunklin, A., Sripusanapan, A., & Avant, P. K. (2017). Developing a cultural competence inventory for nurses in China. International Nursing Review, 64(2), 205–214. https://doi.org/10.1111/inr.12350.

Calhoun, J. G., Ramiah, K., Weist, E. M., Shortell, S. M., Dollett, L., Sinioris, M. E., et al. (2008). Development of an interprofessional competency model for healthcare leadership. Journal of Healthcare Management / American College of Healthcare Executives, 53(6), 375–391. https://doi.org/10.1097/00115514-200811000-00006.

Camelo, S. H. H. (2012). Professional competences of nurse to work in Intensive Care Units: an integrative review. Revista Latino-Americana de Enfermagem, 20(1), 192–200. https://doi.org/10.1590/s0104-11692012000100025.

Cappiello, J., Levi, A., & Nothnagle, M. (2016). Core competencies in sexual and reproductive health for the interprofessional primary care team. Contraception, 93(5), 438–445. https://doi.org/10.1016/j.contraception.2015.12.013.

Carraccio, C., Englander, R., Gilhooly, J., Mink, R., Hofkosh, D., Barone, M. A., & Holmboe, E. S. (2017). Building a framework of entrustable professional activities, supported by competencies and milestones, to bridge the educational continuum. Academic Medicine: Journal of the Association of American Medical Colleges, 92(3), 324–330. https://doi.org/10.1097/ACM.0000000000001141.

Carrico, R. M., Rebmann, T., English, J. F., Mackey, J., & Cronin, S. N. (2008). Infection prevention and control competencies for hospital-based health care personnel. American Journal of Infection Control, 36(10), 691–701. https://doi.org/10.1016/j.ajic.2008.05.017.

Carrington, C., Weir, J., & Smith, P. (2011). The development of a competency framework for pharmacists providing cancer services. Journal of Oncology Pharmacy Practice, 17(3), 168–178. https://doi.org/10.1177/1078155210365582.

Cattini, P. (1999). Core competencies for Clinical Nurse Specialists: A usable framework. Journal of Clinical Nursing, 8(5), 505–511. https://doi.org/10.1046/j.1365-2702.1999.00285.x.

Caverzagie, K. J., Cooney, T. G., Hemmer, P. A., & Berkowitz, L. (2015). The development of entrustable professional activities for internal medicine residency training: A report from the Education Redesign Committee of the Alliance for Academic Internal Medicine. Academic Medicine, 90(4), 479–484. https://doi.org/10.1097/acm.0000000000000564.

Chan, B., Englander, H., Kent, K., Desai, S., Obley, A., Harmon, D., & Kansagara, D. (2014). Transitioning toward competency: A resident-faculty collaborative approach to developing a transitions of care EPA in an internal medicine residency program. Journal of Graduate Medical Education, 6(4), 760–764. https://doi.org/10.4300/jgme-d-13-00414.1.