Abstract

Learner engagement matters, particularly in simulation-based education. Indeed, it has been argued that instructional design only matters in the service of engaging learners in a simulation encounter. Yet despite its purported importance, our understanding of what engagement is, how to define it, how to measure it, and how to assess it is limited. The current study presents the results of a critical narrative review of literature outside of health sciences education, with the aim of summarizing existing knowledge in these areas and providing a research agenda to guide future scholarship on learner engagement in healthcare simulation. Building on this existing knowledge base, we provide a working definition for engagement and provide an outline for future research programs that will help us better understand how health professions’ learners experience engagement in the simulated setting. With this in hand, additional research questions can be addressed including: how do simulation instructional design features (fidelity, range of task difficulty, feedback, etc.) affect engagement? What is the relationship between engagement and simulation learning outcomes? And how is engagement related to or distinct from related variables like cognitive load, motivation, and self-regulated learning?

Introduction

Engagement matters in education, particularly in simulation-based education. We assume that if a student is motivated, attentive, and active in their learning process, then their learning will stick. By contrast, if they show an absence of these qualities, then we assume that their learning will suffer. These assumptions drive efforts to develop pedagogy that intentionally fosters student engagement. But such efforts are undermined by a poor and inconsistent understanding of what engagement is, how it should be defined, and how it can be measured. If engagement truly does matter, then more focused and robust attention to the construct is required.

Despite a lack of clarity on what engagement is, the construct is central to the simulation-focused literature. To illustrate the centrality of engagement in simulation instructional design, let us consider the role of “fidelity”. Fidelity indicates how accurately a simulation represents the clinical event or setting it is meant to replicate; high fidelity simulation refers to a very realistic representation of a clinical situation, while low fidelity indicates a less realistic one. The notion that higher fidelity simulation results in improved learning is pervasive in healthcare simulation (Haji et al. 2016), in part based on the belief that higher fidelity leads to “high engagement” among students with a resulting improvement in learning outcomes (Rudolph et al. 2007). This is thought to occur because improved realism facilitates the “suspension of disbelief”, such that students treat a simulation “as if” it is a real clinical encounter (Dieckmann et al. 2007). In light of this view, some have argued that fidelity only matters in the service of engagement (Rudolph et al. 2007), wherein “suspension of disbelief” is used as a marker of engagement (Footnote 1). However, the accuracy or adequacy of such a definition of engagement has not been systematically explored.

If fidelity is indeed central to designing effective simulations by way of optimizing a learner’s engagement, then engagement is a fundamental construct which requires serious and thorough consideration. However, before we can effectively study the relationship between engagement and fidelity, we must first understand what engagement is. The primary purpose of this paper is to provide some clarity on the concept of engagement and furthermore, to offer guidance on how to proceed with research on this phenomenon in simulation-based educational settings.

This paper aims to build a theoretical foundation for understanding and studying engagement. This foundation is based on the authors’ critical narrative review of the education and workplace literatures, where a great deal of research on engagement has been conducted. Specifically, we consider how engagement is defined, conceptualized, and measured within research contexts outside of health sciences education, and make suggestions for how these ideas can be applied to the study of engagement within the healthcare simulation context. To supplement our review, we also provide directions for future inquiry related to engagement in simulation-based education that stem from our proposed theoretical foundation. We hope to guide future research to address fundamental assumptions about the role engagement has to play in the design and subsequent outcomes of simulation-based education in the health professions.

Methods

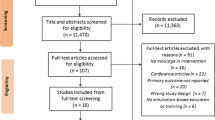

Our review was primarily based in two areas: the education literature (from Kindergarten to higher education) and the organizational sciences literature. These fields were chosen because they have spent a great deal of time on the topic of engagement, each approaching it from complementary yet distinct schools of thought. We also searched for uses of engagement in simulation-based medical education research to exemplify how fractured the use of this concept is in the current health sciences education literature.

The methodology used for this review is a critical narrative review, the primary focus of which is to outline the conceptual contribution of the included literature (Jesson and Lacey 2006). The goal of this type of review is not to systematically describe and evaluate a specific set of articles, but rather to review a diversity of sources to determine what is of value from a given body of work (Grant and Booth 2009). Critical narrative reviews aid researchers in understanding the key schools of scholarly thought on a given concept and, rather than providing an answer to a specific research question, provide a foundation from which further evaluation can spring. In keeping with critical narrative review methodology, this is a non-systematic review. It is neither exhaustive nor rigorously evaluative; rather, it amalgamates the subject of engagement from a selected literature, incorporating information that we deem relevant for guiding future simulation research (Baumeister and Leary 1997; Ng et al. 2015).

In the education and organizational science literatures, we primarily targeted reviews and theoretical or conceptual papers in order to capture the most significant and commonly used components of the subject, focusing on how engagement had been defined and theorized. However, for the health sciences education literature, the focus was on empirical papers, partly due to the lack of theoretical articles in this area but also to provide a sense of how the concept is being applied in healthcare simulation research.

The authors searched online databases, including Google Scholar, ProQuest, ERIC, PubMed, and Scopus, for articles from the three aforementioned literatures: health sciences education, education, and organizational sciences. The search process was iterative for each of the literatures, with each literature search undertaken separately. For all of the searches, combinations of terms included, but were not limited to, “engage*”, “theoretical”, and “review”. For the educational literature, specific terms like “student”, “learner”, and “school” were added. For the organizational sciences, additional search words included: “workplace”, “employee”, and “worker”. Finally, for the health sciences education literature, the search terms “simulation” and “fidelity” were added to those used in the education search. For each of the literatures, citation lists of relevant articles were also used to discover additional articles.
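One way to picture how the term lists above might be combined is the short Python sketch below. The terms themselves come from the search description; the Boolean structure (how terms are grouped with AND and OR), the function names, and the dictionary keys are assumptions made for illustration, since the exact query strings are not reported and query syntax differs across databases.

```python
# Terms taken from the search description above.
# The AND/OR grouping is an assumption, not the reported search strategy.
CORE_GROUP = ["theoretical", "review"]

CONTEXT_GROUPS = {
    "education": [["student", "learner", "school"]],
    "organizational sciences": [["workplace", "employee", "worker"]],
    # "simulation" and "fidelity" were added to the education terms.
    "health sciences education": [
        ["student", "learner", "school"],
        ["simulation", "fidelity"],
    ],
}


def or_group(terms):
    """Join a list of terms into a parenthesized OR clause."""
    return "(" + " OR ".join(terms) + ")"


def build_query(literature):
    """Build one hypothetical Boolean query string for a given literature."""
    groups = [CORE_GROUP] + CONTEXT_GROUPS[literature]
    return " AND ".join(["engage*"] + [or_group(g) for g in groups])


if __name__ == "__main__":
    for name in CONTEXT_GROUPS:
        print(f"{name}: {build_query(name)}")
```

Because the searches were iterative, a query like this would only be a starting point; terms would be added or dropped as relevant articles and their citation lists were examined.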

Our inclusion criteria began broadly as we searched for any mention of engagement, but narrowed as the project went on to focus on what the authors deemed most relevant to simulation-based education, similar to the process used in a critical interpretive synthesis (Dixon-Woods et al. 2006a, b). For example, within both the education and workplace literatures there are multiple forms of engagement that are discussed, such as workplace (engagement with the company/place of employment), school (engagement with the institution broadly, e.g. through extracurricular activities), and learner (engagement with the specific learning task at hand). During the review it became clear that “learner engagement” within the education literature most closely represented the form of engagement of interest in simulation-based education settings and as such became the primary focus of the review.

Relevant concepts and methods discovered in the literatures, such as learner engagement, were analyzed and, if appropriate for the study of engagement in simulation research, have been included in the results of this article. In an effort to better link the findings of our review with their relevance to healthcare simulation research, each results subsection provides an overview of how engagement is currently used in that field. In the discussion section that follows, we address how the views in health sciences education may be advanced based on the conceptualizations, definitions, and measures of engagement outlined in our results. In addition, our discussion provides an outline for a program of research in learner engagement in simulation-based education that builds on these concepts.

Results

In this section we summarize current views of engagement in the education and organizational science/workplace literatures as they pertain to three areas. First, we consider how engagement has been conceptualized in these fields, with particular attention to theoretical constructs that are assumed to underlie and relate to engagement. Second, we consider how engagement has been defined in these fields. Finally, we describe how engagement has been measured in these fields. Each section begins with a brief overview of our findings from the health sciences education literature, followed by a round up of the essential findings from the education and organizational sciences.

Conceptualizing engagement

As mentioned in the introduction, the concept of fidelity is an important one in health sciences education, especially in simulation-based programs. The theory of realism discussed by Dieckmann et al. (2007) and Rudolph et al. (2007) focuses on the importance of developing engaging simulations, yet most of the discussion is focused on how to achieve engagement with little consideration given to what engagement is. Similarly, health sciences education researchers have recently been interested in testing engagement in relation to simulation learning programs, but they too often ignore the fundamental step of explaining what they mean by engagement.

The focus of engagement varies across studies, being used as an outcome in some (e.g. Power et al. 2015) and a predictor in others (e.g. Courteille et al. 2014). Some researchers use other established concepts like metacognition (Gardner et al. 2016) and motivation (Pizzimenti and Axelson 2015) to explain engagement, but generally conceptualization is omitted altogether and the meaning of engagement has to be inferred by how it was measured (e.g. Barnett et al. 2016; Bergin et al. 2003). Thus, the primary issue with conceptualizing engagement in health sciences education research is that it simply tends not to be done. To address this, we need to build a conceptual framework that empirical researchers can draw from.

We begin by looking at how other literatures conceptualize engagement. Within the education and workplace literatures, explicit theories of engagement are generally lacking or ambiguous. Within the education literature, other theories, such as ‘flow’ and those developed for motivation, are often used in place of an explicit theoretical foundation for the construct of engagement. Flow is a subjective state of total immersion to the point of forgetting time, fatigue and anything but the task itself (Csikszentmihalyi et al. 2005). Some education researchers have argued that the state of flow is essentially describing engagement and can thus be useful in operationalizing and measuring it (Eccles 2016). However, researchers in the workplace literature argue that while there is likely a relationship between flow and engagement, the two are distinct, stating flow may be a consequence rather than a component or basis of engagement (Bailey et al. 2017).

More often, learner engagement is discussed in relation to theories of motivation, which itself has an abundance of perspectives and models. Motivation has been described as an emotional quality (Ainley 2006; La Rochelle et al. 2011), yet a definition of motivation from a recent review of its contemporary theories describes it more as a cognitive process, “whereby goal-directed activities are instigated and maintained” (Cook and Artino 2016). Two motivational theories that have been strongly associated with engagement are self-determination theory (SDT) and self-regulated learning (SRL). In SDT, engagement is considered a core component of strong student outcomes, acting as a mediator between motivation and student functioning (Jang et al. 2016). With SDT, engagement itself isn’t described or analyzed closely but is simply used as a piece in understanding how well students learn. Alternatively, SRL offers a definition of a self-regulated learner that might be similar to how we think of an engaged learner: that is, someone who is metacognitively, motivationally, and behaviorally active in their own learning (Pizzimenti and Axelson 2015).

Many education researchers agree that engagement is related to motivation; however, they clarify that engagement is a unique construct that should be distinguished and understood on its own (Fredricks et al. 2016). For instance, motivation, specifically intrinsic motivation, is often seen as an aspect of engagement whereby a learner’s motivation to learn may increase their level of engagement (Fredricks et al. 2004). In this sense, motivation tends to be seen as an attribute of the learner whereas engagement, on the other hand, is state-specific, a product of the interaction between a learner and their current learning environment (Fulmer et al. 2015).

Like education researchers, workplace researchers tend to conceptualize engagement as a distinct, stand-alone construct. Yet, rather than motivation, research on work engagement is more closely aligned with the concept of burnout. The idea that workplace and personal resources (extrinsic and intrinsic forms of motivation respectively) facilitate engagement is considered empirically, but motivation itself is not a key component of the conceptual discussions (Bakker et al. 2008).

There are two primary schools of thought on the relationship between engagement and burnout in the workplace literature. One places engagement on the opposite end of the spectrum to burnout, characterizing it by three main components: energy (as compared to exhaustion in burnout), involvement (contrasted with cynicism), and efficacy (as opposed to ineffectiveness) (Maslach and Leiter 1997). The alternative view still considers engagement to be the opposite of burnout, but argues that the two do not exist on a spectrum but are rather their own distinct concepts (Schaufeli et al. 2002b). According to a review by Bailey et al. (2017), the majority of research on workplace engagement (86% of studies in their review) adheres to the latter approach.

Aside from this general agreement that worker engagement is a unique construct that is negatively related to burnout, there is little theorizing beyond this within the workplace literature (Macey and Schneider 2008). However, there are some conceptual models within the education literature that do come closer to a theoretical framework, but these are often simply typologies (Boekaerts 2016; Kahu 2013). For example, Coates (2007) describes four types of student engagement: collaborative, independent, passive, and intense. Another approach focuses on clustering educational institutions themselves in terms of how and in what ways their students tend to be engaged, fitting schools into categories such as intellectually stimulating, interpersonally supportive, or diverse but interpersonally fragmented (Pike et al. 2011). These typologies offer broad clusters by which we might describe student behaviour and learning environments generally, but do not tell us much about the process of engagement itself, including what an engaged student looks like or how engagement may be measured.

The education literature does, however, offer one conceptual framework which we think would be the most useful for health sciences education settings—The Multidimensional Framework (MDF) of Engagement. MDF differs from these other examples, going beyond typologizing engagement to consider the process of engagement within the individual learner. It is also by far the most ubiquitous model of engagement in the education literature. While it aligns with general ideas of engagement in the workplace literature (e.g. Saks 2006; Shuck and Wollard 2010), workplace ideas have not been formalized into a clear structure in the manner that the MDF has in education.

The MDF focuses on the learner and how they experience engagement in a specific learning context. It describes engagement as a highly interactive, context-dependent process that consists of three dynamically interrelated components: behavioral, emotional, and cognitive (Fredricks et al. 2016). Behavioral engagement refers to actions such as participating, attending class, and putting forth consistent effort; cognitive engagement refers to learning strategies, depth of processing, and the investment put into a task that facilitates information processing; and emotional engagement refers to a learner’s affect and can include feelings of enjoyment, interest, and boredom (Shernoff et al. 2016). Recall the similarity of this description of engagement with the definition of a self-regulated learner discussed previously. The main difference is the broadness of the MDF’s categories (emotional engagement rather than just motivation, and cognitive engagement rather than the more specific metacognition). While both are useful, we believe the framework’s broad categories give a wider foundation on which to build our understanding of engagement with simulations.

Although the three components can be described and measured independently, the developers of this framework argue that they are interactive and work together to form a total representation of engagement (Fredricks et al. 2016). Some researchers have suggested additional components should be added to the MDF, such as agentic (Reeve and Tseng 2011) or social engagement (Wang et al. 2016) which offer a more constructivist view of learning. These additions focus on the socio-cultural components of the learning process, emphasizing the influence of the environment on the learner and the learner on the environment. Considering the dynamic environments of simulation-based education designs, this may be an important component to incorporate when developing a theoretical account of engagement for this field.

Defining engagement

Like conceptualizing engagement, defining engagement is often not considered in simulation-based education research. One study explicitly defined engagement as flow, interest, and relevance (McCoy et al. 2016), but for the others, the intended definition of engagement had to be inferred by their means of assessing it. In the studies reviewed, engagement seems to have been most commonly understood as different emotional states (Jorm et al. 2016; Bergin et al. 2003; Barnett et al. 2016) or cognitive states (Courteille et al. 2014; Gardner et al. 2016; Power et al. 2015). Yet even within these categories there is inconsistency across the studies in terms of which cognitive strategies or emotional states are being reflected. In order to build a unified body of research on engagement in the health sciences education literature, a clear and consistent definition is essential.

How to define student engagement has been a recurring topic in education research. In a review on this subject, Fredricks et al. (2016) stress how important a clear definition is for better understanding and improving engagement. Specifically, they argue that researchers need to think carefully about what they mean when they say “engagement” and ensure the definition isn’t so broad that it incorporates the entire educational experience of a student or overlaps too closely with other constructs, such as motivation.

Researchers in both organizational science and education agree that engagement is not an individual trait (Schaufeli et al. 2002a, b), but is rather a context-dependent state (Fulmer et al. 2015). An individual may be engaged in one environment and not another, or may find one activity easy to engage with and others more difficult. Following from this, engagement can be considered the dual property of the person and their environment, and the interaction between person and environment is what constitutes the degree of their engagement (Gresalfi and Barab 2011). This aligns well with the MDF of engagement, which describes engagement as an interactive process that is influenced by the context or environment. Thus, when defining engagement, simulation researchers should think of it in the context of the interaction between the learner and the simulation. This clarifies engagement as a state rather than an inherent trait and keeps it aligned with the conceptualization we discussed in the previous section.

When it comes to defining specifically what engagement looks like when experienced by a learner, researchers offer a variety of similar examples. In the workplace literature, the definition of engagement provided by Schaufeli et al. (2002a, b) as a positive, fulfilling, work-related state of mind characterized by vigor, dedication, and absorption, is used by most researchers of workplace engagement (Bailey et al. 2017). While similarities to the prevalent workplace definition are evident, in the education literature no single definition predominates. Engagement has been characterized as mild positive valence, moderate arousal, and intense, focused attention with a particular task (Fulmer et al. 2015). An engaged learner has also been defined as someone who has good learning strategies, a will to learn, and who is involved behaviorally, emotionally, and intellectually with their task (Järvelä et al. 2016). Other researchers suggest that the key factors that can be used to capture schoolwork engagement are energy, dedication, and absorption (Salmela-Aro and Upadyaya 2012), similar to the workplace definition. Finally, students have also been described as engaged with their work when they are involved, persist despite challenges and obstacles, and take visible delight in accomplishing their work (Schlechty 2011).

Measuring engagement

The final foundational component for studying engagement in simulation research is having good forms of measurement. Unsurprisingly, a lack of conceptualization and inconsistent definitions within this area have also meant significant variability in how engagement is measured. The studies in this review used different methodologies, including focus groups/interviews, observer ratings, and most commonly, self-report questionnaires. Researchers would sometimes develop their own questionnaires (e.g. Bergin et al. 2003; Barnett et al. 2016; McCoy et al. 2016) or use existing measures that were developed for variables other than engagement (e.g. Gardner et al. 2016; Pizzimenti and Axelson 2015). Diversity of measurement across different studies of the same topic is acceptable practice, but the absence of established measures for a critical construct creates challenges in establishing the reliability and validity of the construct.

Within education, measurement of engagement tends to take the form of self-report measures, checklists and rating scales, direct observations, or focused case studies (Chapman 2003). Focus groups, especially with mature populations such as students in post-secondary institutions, are also common (Bryson and Hand 2007). More recently, experience sampling methods have been used. An alarm sounds in the middle of a task and learners are asked to stop what they’re doing and respond to a short survey to capture their engagement in the moment (Eccles 2016).
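To make the experience sampling idea concrete, the following Python sketch shows one possible way of scheduling the in-task prompts. It is an illustration only: the stratified scheme (one randomly timed prompt per equal block of the session), the function name `sample_prompt_times`, and its parameters are assumptions and are not drawn from the studies cited above.

```python
import random


def sample_prompt_times(session_minutes, n_prompts, seed=None):
    """Return n_prompts alarm times (in minutes from the session start).

    Assumed scheme: split the session into n_prompts equal blocks and
    draw one uniformly random time inside each block, so prompts are
    unpredictable to the learner but spread across the whole task.
    """
    if session_minutes <= 0 or n_prompts <= 0:
        raise ValueError("session_minutes and n_prompts must be positive")
    rng = random.Random(seed)  # seed allows a reproducible schedule
    block = session_minutes / n_prompts
    return [rng.uniform(i * block, (i + 1) * block) for i in range(n_prompts)]


if __name__ == "__main__":
    # Hypothetical 30-minute simulation scenario with three prompts.
    for t in sample_prompt_times(30, 3, seed=1):
        print(f"Prompt learner at {t:.1f} min")
```

At each alarm the learner would pause and complete the short engagement survey, giving several momentary readings per session rather than a single retrospective rating.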

Given all these different formatting options and the variety of engagement definitions, it is not surprising that there is great variability and inconsistency in the measurement tools and approaches used by education researchers (Azevedo 2015). This inconsistency is compounded by the practice among researchers of using measures that were built for similar constructs, such as motivation, to capture specific aspects of engagement (DeBacker and Crowson 2006), a practice that has also been used in simulation research. Clearly, careful measurement standards are lacking in education research as they are in simulation.

Within the workplace literature there do exist well-established, commonly used measures of engagement. Because worker engagement was occasionally established as a construct characterized by the absence of burnout, some researchers use measures of burnout to assess employee engagement, simply by calculating reverse scores. These instruments include the Maslach Burnout Inventory (Maslach et al. 1996) and the Oldenburg Burnout Inventory (Demerouti and Bakker 2008; Demerouti et al. 2002). However, most researchers adhere to the approach of Schaufeli et al. (2002a, b), measuring engagement using the Utrecht Work Engagement Scale (Bakker et al. 2008) and its three subscales: vigor, dedication, and absorption. The original instrument has been well validated (Seppälä et al. 2009) and a student version of the scale with items such as “When I’m studying, I feel mentally strong” and “My studies inspire me” has also been developed and validated cross-nationally (Schaufeli et al. 2002a, b). However, these items may be a bit too broad to be sufficient for simulation-based education contexts.
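The two scoring approaches described above, reverse-scoring burnout items and averaging items within the vigor, dedication, and absorption subscales, can be sketched in a few lines of Python. This is a minimal illustration and not the scoring procedure of any published instrument: the 0 to 6 response range, the item identifiers, and the item-to-subscale mapping are all assumptions made for the example.

```python
from statistics import mean


def reverse_score(response, scale_min=0, scale_max=6):
    """Flip a response so that low burnout maps to a high score.

    The 0-6 default range is an assumption; pass the range of the
    instrument actually being used.
    """
    if not scale_min <= response <= scale_max:
        raise ValueError("response outside the scale range")
    return scale_min + scale_max - response


def subscale_means(responses, subscale_items):
    """Average the item responses that belong to each subscale."""
    return {
        name: mean(responses[item] for item in items)
        for name, items in subscale_items.items()
    }


if __name__ == "__main__":
    # Hypothetical item identifiers and responses for one respondent.
    responses = {"v1": 4, "v2": 6, "d1": 3, "d2": 5, "a1": 2, "a2": 2}
    mapping = {
        "vigor": ["v1", "v2"],
        "dedication": ["d1", "d2"],
        "absorption": ["a1", "a2"],
    }
    print(subscale_means(responses, mapping))
    print(reverse_score(2))  # a burnout response of 2 becomes 4
```

Reporting the three subscale means separately, rather than a single total, keeps visible which component of engagement is driving a given score.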

Discussion

Conceptualizing, defining, and measuring engagement in healthcare simulation

There are many challenges with the current approach to assessing engagement in simulation-based education contexts. The literature is lacking in any thorough conceptualization of engagement, has no clear or consistent definition of the construct, and does not have any sound means of measurement. However, our review has revealed that other literatures have considered the concept carefully, providing a fund of knowledge that the health sciences education community can draw from.

With respect to conceptualizing engagement and considering the unique learning experience in healthcare settings (particularly simulation-based ones), it will be important to develop a theory of engagement that is unique to this area rather than relying on theories developed for employees or learners in classroom contexts. However, we believe the MDF serves as a good starting point for a theoretical exploration of engagement within healthcare simulation contexts. Specifically, the components of the multidimensional model could serve as sensitizing concepts in research that focuses on understanding the experience of students participating in simulation-based learning activities. Qualitative methodologies such as Phenomenology (Sokolowski 2000) or Constructivist Grounded Theory (Charmaz 2014) could be useful to explore learners’ experiences and ideas around engagement in this educational context. By recognizing that learners hold ideas and values about what it means to be engaged during these educational experiences, such research may help us to better understand the differences between an engaged and unengaged learner, how engagement in health professions learners compares to that of other learners (e.g. elementary school students), the contextual factors that influence learner engagement in healthcare simulation, and the implications of being engaged (or not) for their educational experience. This work could help build a strong foundation for a theory of engagement in healthcare simulation that could be tested and on which future research designs could be modeled.

Next, for defining engagement, we have identified important commonalities amongst the definitions used in the education and organizational sciences literatures, applying them to our own definition of engagement for simulation contexts. Specifically, we noted that the definitions of engagement identified in the review typically incorporate the components of the MDF, whether intentionally or not. For example, definitions of engagement often describe it as involving good cognitive strategies, high positive affect, and consistent, enduring (behavioral) effort. By using these three components as a guide, we propose the following as a possible definition of engagement for simulation research: Learner engagement is a context-dependent state of dedicated focus towards a task wherein the learner is involved cognitively, behaviorally, and emotionally.

This definition approaches engagement as a state that is context-dependent, giving responsibility for engagement to both the learner and the learning activity, rather than as a trait where engagement is the full responsibility of the learner. It prioritizes focus, where the learner’s primary source of attention is the task at hand, but does not require an intense focus where peripheral vision is lost, which helps distinguish engagement from the state of flow. This definition further modifies focus with the term ‘dedicated’, which implies that if and when distractions do arise which impact the learner’s focus, they have the will and energy to return their attention to the task. Finally, the definition includes the three components from the MDF: cognitive, emotional, and behavioral.

Finally, we can begin to think about how best to measure engagement by using what we’ve learned from the education and organizational sciences literatures. As with the definition of engagement, it would be ideal for us to develop our own measures that best meet the unique needs of simulation-based education. Once again, the MDF may be of use here. It directs our focus to how engagement can manifest (e.g. cognitive strategies and related emotions and behaviors) and how it might be captured (e.g. observational and self-report measures). Since the MDF argues that engagement is a dynamic, fluctuating, and interactive process that is highly context-dependent, the developers of the framework argue that different models and measures of engagement are required for different contexts (Shernoff et al. 2016). In the area of primary and secondary education, this lends itself to the development of unique engagement measures for different subjects, such as English, History, or Math (Wang et al. 2016). If we follow the recommendations of the MDF developers, we could consider developing unique measures for specific types of learning environments. This could mean developing individualized engagement measures for different simulation types. Alternatively, it is possible that building broad measures, either self-report or observational, may be sufficient for the majority of simulation contexts. The most appropriate approach will certainly need to be a point of inquiry during the development and evaluation of these measures.

Advancing engagement research

Armed with an understanding of a learner’s experience of engagement, coupled with a clear definition and the means by which to measure it, we can begin to tackle a series of questions related to engagement in simulation-based education in the health professions. The associated lines of inquiry can be delineated into three areas: (1) the relationship between the instructional design of an educational activity (e.g. a simulation, including design elements like ‘fidelity’) and a learner’s level of engagement; (2) the relationship between learner engagement and subsequent learning outcomes; and (3) the relationship between engagement and other, related variables such as emotion, stress, cognitive load, motivation, flow, self-regulation, and metacognition.

The first line of inquiry should seek to clarify how the instructional design of a learning activity impacts learner engagement. Returning to the example of fidelity, a central point of study will be to investigate the widely-held belief that situating a learning activity in a realistic environment (i.e. increasing a simulation’s fidelity) facilitates suspension-of-disbelief, positively impacting engagement by allowing learners to participate in a simulation in an experientially and emotionally relevant manner (Rudolph et al. 2007; Dieckmann et al. 2007; Hamstra et al. 2014; La Rochelle et al. 2011; Issenberg and Scalese 2008; Kneebone 2005). Importantly, future research should move past the false dichotomy of “high” vs. “low” fidelity that has dominated the literature and instead investigate how variations in the physical, conceptual and emotional resemblances of a simulation impact learner engagement (Koens et al. 2005; Hamstra et al. 2014) and the mechanism by which increased engagement is achieved (increased cognitive effort, emotional involvement, etc.). For instance, a number of authors have argued that psychological fidelity (whether the simulation accurately represents the learner behaviours required to complete the task in the real world) and phenomenal realism (the degree to which emotions, beliefs, metacognitive thoughts of learners are represented in the simulation) are particularly relevant to learner engagement (Rudolph et al. 2007; Dieckmann et al. 2007; Norman et al. 2012). Similarly, others have argued that physical fidelity (i.e. the degree to which a simulation “looks and feels” like the real thing) may also impact learner engagement when the content relates to tasks requiring feedback from the environment (e.g. suturing a wound or performing a surgical procedure). Empirical study of these claims using a consistent, conceptual definition of both simulation fidelity and learner engagement is necessary. Data generated from such studies will help educators to understand how various dimensions of fidelity (physical, semantic, and psychological) should be blended to optimize engagement.

In addition to clarifying the relationship between simulation fidelity and engagement, other design features—such as range of task difficulty, practice schedule, feedback, group versus individualized learning, etc. (Cook et al. 2012)—should be investigated with similar rigour. What instructional design features impact a learner’s engagement in a simulation? How can these design features be manipulated to increase engagement? What (if any) is the concomitant effect of these design features on other variables theorized to impact learning (e.g., cognitive load)? Ultimately, this line of questioning will deepen our understanding of how the design features that influence engagement should (or should not) be used in the design of simulation-based education.

The second line of inquiry should investigate the relationship between variations in engagement and subsequent learning and performance outcomes. While it is theorized that higher engagement leads to improved learning (Rudolph et al. 2007; Dieckmann et al. 2007), we have limited evidence to support this claim in the simulation literature. Analogous to the relationship between fidelity or emotion and learning, it is likely that the relationship between engagement and learning will not be linear or one-dimensional. Thus, we need to question: what impact does variation in engagement have on learning outcomes? Are some learning outcomes influenced by engagement more than others? Is engagement along each of the dimensions outlined in the definition we’ve adopted (cognitive, behavioural, emotional) necessary in every learning encounter? There has been some suggestion in the healthcare simulation literature that specific skills (e.g., crisis resource management, team communication) require a greater degree of emotional engagement in order to generate authentic interactions between the learner and other team members (Rudolph et al. 2007; Dieckmann et al. 2007; Hamstra et al. 2014). This underscores that future research in this area will need to explore whether requirements for learner engagement depend on the nature of the knowledge/skills to be learned, or if they remain constant across multiple content domains.

Furthermore, consideration must be given to the role of engagement when we use methods like simulation for performance assessment. These methods are playing an increasingly important role in our assessment practices in the health professions—take for example the use of Objective Structured Clinical Examinations (OSCEs), which are currently used in settings ranging from assessment of medical students’ clinical skills through to national licensing examinations for specialty-based board certification. If engagement is indeed context specific, we must understand how its presence or absence in such assessment environments should be interpreted.

If we acknowledge that engagement is the shared responsibility of the learner and the educators/assessors, then considering engagement in the assessment arena opens a broad area of inquiry around the influence it has on assessment scores, rater cognition and bias, and perhaps most importantly, the validity of decisions made about the learner based on those assessments. Differentiating a poor performance from a disengaged performance may be difficult, but doing so is essential when we consider the plethora of domains in which simulation-based assessments are utilized and the implications of the decisions made based on their outcomes. Characterizing this challenge and developing strategies to mitigate any extraneous influence of engagement on assessment will only become more important as we move toward competency-based education paradigms in health professions education.

The final line of inquiry that requires further attention is the relationship between engagement and other, related variables. As both the education and simulation literature show, engagement is often conflated with other concepts such as flow, motivation, metacognition and emotion. Similarly, peripheral variables such as context (Koens et al. 2005; Zepke 2015), cognitive load (La Rochelle et al. 2011) and goal setting (Boekaerts 2016) have been studied in terms of their relation to engagement. Each of these variables could conceivably mediate the relationship between engagement and learning, wherein they influence or are influenced by a learner’s engagement in a given learning task. Thus, an important direction for future research would be to clearly differentiate engagement from these variables and to further assess how they relate to one another.

For instance, higher levels of engagement are theorized to lead to cognitive behaviours and strategies that improve learning (e.g., increased time on task, higher cognitive effort, effective information processing, and the use of deeper processing strategies) (La Rochelle et al. 2011; Koens et al. 2005; Artino and Durning 2012). Still unknown, however, is how variations in a learner’s level of engagement influence their willingness to invest cognitive resources (e.g., attention) in learning, the goals that they set during a given learning experience, or the nature of the (meta)cognitive and self-regulatory learning strategies that they subsequently employ. It is also postulated that states of cognitive under- or over-load can negatively impact learner engagement (e.g. by inciting boredom or reduced self-efficacy) (Brunken et al. 2003; Moreno 2010; Schweppe and Rummer 2014), while higher emotional content of a learning task is thought to increase engagement; however, empirical evidence in these areas is lacking.

Across these three lines of inquiry, a variety of research methods could be employed. Experimental methods may be used to systematically manipulate the design of a learning encounter (fidelity, emotional content, etc.) in order to determine its influence on learner engagement and downstream effects on learning outcomes. Mediation models can be developed that look at the relationship between a simulation’s instructional design, a student’s level of engagement with the simulation, and the student’s subsequent learning outcomes. Concomitant analysis of confounding variables (e.g. cognitive load, stress, motivation) will be essential to ensure a holistic understanding of these relationships. Where more complex relationships are identified, correlational analyses, structural equation modeling, and regression models may offer further insights. Mixed methods approaches that couple these with observational and interview data may provide further evidence on the subsequent impact on learners’ goal setting, motivation, allocation of cognitive resources, and self-regulatory behaviours.
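
As one illustration of the mediation models mentioned above, the following is a minimal sketch in Python, assuming a single mediator and ordinary least squares estimation (the product-of-coefficients approach). The data are synthetic, and the variable names (`design`, `engagement`, `outcome`), the effect sizes, and the sample size are hypothetical choices made for illustration only; they are not drawn from any study reviewed here.

```python
import random


def mean(values):
    return sum(values) / len(values)


def cov(xs, ys):
    """Population covariance of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def simple_slope(x, y):
    """OLS slope for the regression y ~ x (intercept included)."""
    return cov(x, y) / cov(x, x)


def two_predictor_slopes(x, m, y):
    """OLS slopes for y ~ x + m; returns (slope on x, slope on m)."""
    sxx, smm, sxm = cov(x, x), cov(m, m), cov(x, m)
    sxy, smy = cov(x, y), cov(m, y)
    det = sxx * smm - sxm ** 2
    slope_x = (sxy * smm - smy * sxm) / det
    slope_m = (smy * sxx - sxy * sxm) / det
    return slope_x, slope_m


def mediation(x, m, y):
    """Decompose the total effect of x on y into direct and indirect parts."""
    a = simple_slope(x, m)                 # design -> engagement
    total = simple_slope(x, y)             # design -> outcome, ignoring mediator
    direct, b = two_predictor_slopes(x, m, y)
    return {"a": a, "b": b, "indirect": a * b, "direct": direct, "total": total}


def simulate(n=500, seed=0):
    """Generate synthetic data; all effect sizes here are hypothetical."""
    rng = random.Random(seed)
    design = [i % 2 for i in range(n)]     # 0 = one design condition, 1 = another
    engagement = [0.5 * d + rng.gauss(0, 1) for d in design]
    outcome = [0.4 * e + 0.1 * d + rng.gauss(0, 1)
               for d, e in zip(design, engagement)]
    return design, engagement, outcome


if __name__ == "__main__":
    design, engagement, outcome = simulate()
    for name, value in mediation(design, engagement, outcome).items():
        print(f"{name}: {value:.3f}")
```

With least squares estimates, the total effect equals the sum of the direct and indirect effects, which gives a quick check on the decomposition. An actual study would likely need more than this sketch provides, for example adjustment for confounders such as cognitive load or stress and an interval estimate for the indirect effect (e.g. by bootstrapping).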

Limitations

The central aim of this paper was to build a foundation for research on learner engagement in healthcare simulation. As a result, our critical narrative review focused on literature we deemed relevant to learner engagement in educational settings, and our subsequent analysis and recommendations are intended to inform a conceptual basis from which to study engagement in simulation-based education. Although our findings may bear on the study of engagement in other health professions educational contexts (such as classroom or workplace-based learning), the specific features of those contexts would need to be taken into account before the definitions, measures, theories, and frameworks reviewed in this paper are applied to them.

We would like to reiterate that we intend the views presented in this paper to serve as a starting point for a broader dialogue about learner engagement in health professions education, and we anticipate that evidence from these other domains, as well as primary research within health sciences education, will help refine our understanding in this domain in the future.

Conclusion

Most educators and education researchers would agree that strong student engagement is necessary for good learning to take place. Indeed, this has been a central theoretical claim made by several simulation researchers in health professions contexts. Yet throughout the simulation literature, there is very little clarity, and certainly no consensus, on how engagement should be conceptualized, defined, or measured. The aim of this paper was to provide guidance on how we view engagement in the health professions, specifically with regard to simulation, so that more informed discussions and a delineated line of inquiry on engagement can begin. Moving forward, we must take on the challenging task of translating this knowledge from general education and workplace contexts to simulation. We argue that the focus of this program of research should be to first understand how learners in simulation learning contexts experience engagement, and second to establish strong methods and tools for measuring engagement. With a firmer grasp of what engagement is and the tools to capture it, we can begin to consider the relationship between engagement and learning with simulations, particularly how engagement relates to the instructional design of an educational program or activity, how engagement influences subsequent learning outcomes, and how engagement is connected to and distinguishable from other variables central to the learning process, such as cognitive load, motivation, and self-regulated learning. The pursuit of such research questions will help to further our understanding not only of engagement, but also of other concepts like fidelity whose theoretical and empirical characterization remains elusive in simulation scholarship.

Notes

While fidelity is widely believed to be essential to simulation instructional design, the empirical data supporting the relationship between high fidelity and the subsequent transfer of learning is tenuous at best (Hamstra et al. 2014). As a result, some authors have advocated abandoning the concept of fidelity entirely while others have suggested a complete conceptual overhaul of what we consider it to be (Dieckmann et al. 2007; Cook et al. 2011; Norman et al. 2012; Grierson 2014). It may be, however, that part of the controversy surrounding fidelity stems from our incomplete understanding of the variables theoretically postulated to mediate its effect—variables such as engagement. If we believe that fidelity matters for learning insofar as it increases engagement, but we do not understand what it means for a student to be engaged, do not have a consistent way to measure engagement, and lack clarity in how we approach engagement, then it should not be surprising that we have failed to demonstrate a consistent relationship between fidelity and learning.

References

Ainley, M. (2006). Connecting with learning: Motivation, affect and cognition in interest processes. Educational Psychology Review, 18(4), 391–405.

Artino, A. R., & Durning, S. J. (2012). ‘Media will never influence learning’: But will simulation? Medical Education, 46(7), 630–632.

Azevedo, R. (2015). Defining and measuring engagement and learning in science: Conceptual, theoretical, methodological, and analytical issues. Educational Psychologist, 50(1), 84–94.

Bailey, C., Madden, A., Alfes, K., & Fletcher, L. (2017). The meaning, antecedents and outcomes of employee engagement: A narrative synthesis. International Journal of Management Reviews, 19(1), 31–53. https://doi.org/10.1111/ijmr.12077.

Bakker, A. B., Schaufeli, W. B., Leiter, M. P., & Taris, T. W. (2008). Work engagement: An emerging concept in occupational health psychology. Work and Stress, 22(3), 187–200. https://doi.org/10.1080/02678370802393649.

Barnett, S. G., Gallimore, C. E., Pitterle, M., & Morrill, J. (2016). Impact of a paper vs virtual simulated patient case on student-perceived confidence and engagement. American Journal of Pharmaceutical Education, 80(1), 16. https://doi.org/10.5688/ajpe80116.

Baumeister, R. F., & Leary, M. R. (1997). Writing narrative literature reviews. Review of General Psychology, 1(3), 311.

Bergin, R., Youngblood, P., Ayers, M. K., Boberg, J., Bolander, K., Courteille, O., et al. (2003). Interactive simulated patient: Experiences with collaborative e-learning in medicine. Journal of Educational Computing Research, 29(3), 387–400. https://doi.org/10.2190/UT9B-F3E7-3P75-HPK5.

Boekaerts, M. (2016). Engagement as an inherent aspect of the learning process. Learning and Instruction, 43, 76–83.

Brunken, R., Plass, J. L., & Leutner, D. (2003). Direct measurement of cognitive load in multimedia learning. Educational Psychologist, 38(1), 53–61.

Bryson, C., & Hand, L. (2007). The role of engagement in inspiring teaching and learning. Innovations in Education and Teaching International, 44(4), 349–362.

Chapman, E. (2003). Alternative approaches to assessing student engagement rates. Practical Assessment, 8(13), 1–7.

Charmaz, K. (2014). Constructing grounded theory. Thousand Oaks: Sage.

Coates, H. (2007). A model of online and general campus-based student engagement. Assessment & Evaluation in Higher Education, 32(2), 121–141.

Cook, D. A., & Artino, A. R. (2016). Motivation to learn: An overview of contemporary theories. Medical Education, 50(10), 997–1014.

Cook, D. A., Brydges, R., Hamstra, S. J., Zendejas, B., Szostek, J. H., Wang, A. T., et al. (2012). Comparative effectiveness of technology-enhanced simulation versus other instructional methods: A systematic review and meta-analysis. Simulation in Healthcare, 7(5), 308–320.

Cook, D. A., Hatala, R., Brydges, R., Zendejas, B., Szostek, J. H., Wang, A. T., et al. (2011). Technology-enhanced simulation for health professions education: A systematic review and meta-analysis. JAMA, 306(9), 978–988.

Courteille, O., Anna, J., & Lars-Olof, L. (2014). Interpersonal behaviors and socioemotional interaction of medical students in a virtual clinical encounter. BMC Medical Education, 14(1), 64. https://doi.org/10.1186/1472-6920-14-64.

Csikszentmihalyi, M., Abuhamdeh, S., & Nakamura, J. (2005). Flow. In A. Elliot & C. Dweck (Eds.), Handbook of competence and motivation (pp. 598–623). New York: Guilford Publications.

DeBacker, T. K., & Crowson, H. M. (2006). Influences on cognitive engagement: Epistemological beliefs and need for closure. British Journal of Educational Psychology, 76(3), 535–551.

Demerouti, E., & Bakker, A. B. (2008). The Oldenburg Burnout Inventory: A good alternative to measure burnout and engagement. In J. R. Halbesleben (Ed.), Handbook of stress and burnout in health care (pp. 65–78). New York: Nova Science Publishers.

Demerouti, E., Bakker, A., Nachreiner, F., & Ebbinghaus, M. (2002). From mental strain to burnout. European Journal of Work and Organizational Psychology, 11(4), 423–441.

Dieckmann, P., Gaba, D., & Rall, M. (2007). Deepening the theoretical foundations of patient simulation as social practice. Simulation in Healthcare, 2(3), 183–193.

Dixon-Woods, M., Bonas, S., Booth, A., Jones, D., Miller, T., Sutton, A., et al. (2006a). How can systematic reviews incorporate qualitative research? A critical perspective. Qualitative Research, 6(1), 27–44. https://doi.org/10.1177/1468794106058867.

Dixon-Woods, M., Cavers, D., Agarwal, S., Annandale, E., Arthur, A., Harvey, J., et al. (2006b). Conducting a critical interpretive synthesis of the literature on access to healthcare by vulnerable groups. BMC Medical Research Methodology, 6, 1–13. https://doi.org/10.1186/1471-2288-6-35.

Eccles, J. S. (2016). Engagement: Where to next? Learning and Instruction, 43, 71–75.

Fredricks, J. A., Blumenfeld, P. C., & Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74(1), 59–109.

Fredricks, J. A., Filsecker, M., & Lawson, M. A. (2016). Student engagement, context, and adjustment: Addressing definitional, measurement, and methodological issues. Learning and Instruction, 43, 1–4.

Fulmer, S. M., D’Mello, S. K., Strain, A., & Graesser, A. C. (2015). Interest-based text preference moderates the effect of text difficulty on engagement and learning. Contemporary Educational Psychology, 41, 98–110.

Gardner, A. K., Jabbour, I. J., Williams, B. H., & Huerta, S. (2016). Different goals, different pathways: The role of metacognition and task engagement in surgical skill acquisition. Journal of Surgical Education, 73(1), 61–65. https://doi.org/10.1016/j.jsurg.2015.08.007.

Grant, M. J., & Booth, A. (2009). A typology of reviews: An analysis of 14 review types and associated methodologies. Health Information and Libraries Journal, 26(2), 91–108. https://doi.org/10.1111/j.1471-1842.2009.00848.x.

Gresalfi, M., & Barab, S. (2011). Learning for a reason: Supporting forms of engagement by designing tasks and orchestrating environments. Theory into Practice, 50(4), 300–310.

Grierson, L. E. (2014). Information processing, specificity of practice, and the transfer of learning: Considerations for reconsidering fidelity. Advances in Health Sciences Education, 19(2), 281–289.

Haji, F. A., Cheung, J. J., Woods, N., Regehr, G., Ribaupierre, S., & Dubrowski, A. (2016). Thrive or overload? The effect of task complexity on novices’ simulation-based learning. Medical Education, 50(9), 955–968.

Hamstra, S. J., Brydges, R., Hatala, R., Zendejas, B., & Cook, D. A. (2014). Reconsidering fidelity in simulation-based training. Academic Medicine, 89(3), 387–392.

Issenberg, S. B., & Scalese, R. J. (2008). Simulation in health care education. Perspectives in Biology and Medicine, 51(1), 31–46.

Jang, H., Kim, E. J., & Reeve, J. (2016). Why students become more engaged or more disengaged during the semester: A self-determination theory dual-process model. Learning and Instruction, 43, 27–38.

Järvelä, S., Järvenoja, H., Malmberg, J., Isohätälä, J., & Sobocinski, M. (2016). How do types of interaction and phases of self-regulated learning set a stage for collaborative engagement? Learning and Instruction, 43, 39–51.

Jesson, J., & Lacey, F. (2006). How to do (or not to do) a critical literature review. Pharmacy Education, 6.

Jorm, C., Roberts, C., Lim, R., Roper, J., Skinner, C., Robertson, J., et al. (2016). A large-scale mass casualty simulation to develop the non-technical skills medical students require for collaborative teamwork. BMC Medical Education, 16(1), 83.

Kahu, E. R. (2013). Framing student engagement in higher education. Studies in Higher Education, 38(5), 758–773.

Kneebone, R. (2005). Evaluating clinical simulations for learning procedural skills: A theory-based approach. Academic Medicine, 80(6), 549–553.

Koens, F., Mann, K. V., Custers, E. J., & Ten Cate, O. T. (2005). Analysing the concept of context in medical education. Medical Education, 39(12), 1243–1249.

La Rochelle, J. S., Durning, S. J., Pangaro, L. N., Artino, A. R., van der Vleuten, C. P., & Schuwirth, L. (2011). Authenticity of instruction and student performance: A prospective randomised trial. Medical Education, 45(8), 807–817.

Macey, W. H., & Schneider, B. (2008). The meaning of employee engagement. Industrial and Organizational Psychology, 1(01), 3–30. https://doi.org/10.1111/j.1754-9434.2007.0002.x.

Maslach, C., Jackson, S. E., & Leiter, M. P. (1996). MBI: Maslach burnout inventory. Sunnyvale (CA): CPP, Incorporated.

Maslach, C., & Leiter, M. P. (1997). The truth about burnout: How organizations cause personal stress and what to do about it. San Francisco, CA: Jossey-Bass.

McCoy, L., Pettit, R. K., Lewis, J. H., Allgood, J. A., Bay, C., & Schwartz, F. N. (2016). Evaluating medical student engagement during virtual patient simulations: A sequential, mixed methods study. BMC Medical Education, 16(1), 20. https://doi.org/10.1186/s12909-016-0530-7.

Moreno, R. (2010). Cognitive load theory: More food for thought. Instructional Science, 38(2), 135–141.

Ng, S. L., Kinsella, E. A., Friesen, F., & Hodges, B. (2015). Reclaiming a theoretical orientation to reflection in medical education research: A critical narrative review. Medical Education, 49(5), 461–475.

Norman, G., Dore, K., & Grierson, L. (2012). The minimal relationship between simulation fidelity and transfer of learning. Medical Education, 46(7), 636–647.

Pike, G. R., Kuh, G. D., & McCormick, A. C. (2011). An investigation of the contingent relationships between learning community participation and student engagement. Research in Higher Education, 52(3), 300–322.

Pizzimenti, M. A., & Axelson, R. D. (2015). Assessing student engagement and self-regulated learning in a medical gross anatomy course. Anatomical Sciences Education, 8(2), 104–110.

Power, T., Virdun, C., White, H., Hayes, C., Parker, N., Kelly, M., et al. (2015). Plastic with personality: Increasing student engagement with manikins. Nurse Education Today, 38, 126–131. https://doi.org/10.1016/j.nedt.2015.12.001.

Reeve, J., & Tseng, C. M. (2011). Agency as a fourth aspect of students’ engagement during learning activities. Contemporary Educational Psychology, 36(4), 257–267.

Rudolph, J. W., Simon, R., & Raemer, D. B. (2007). Which reality matters? Questions on the path to high engagement in healthcare simulation. Simulation in Healthcare, 2(3), 161–163.

Saks, A. M. (2006). Antecedents and consequences of employee engagement. Journal of Managerial Psychology, 21(7), 600–619.

Salmela-Aro, K., & Upadaya, K. (2012). The schoolwork engagement inventory. European Journal of Psychological Assessment, 28(1), 60–67.

Schaufeli, W. B., Martinez, I. M., Pinto, A. M., Salanova, M., & Bakker, A. B. (2002a). Burnout and engagement in university students: A cross-national study. Journal of Cross-Cultural Psychology, 33(5), 464–481.

Schaufeli, W. B., Salanova, M., González-Romá, V., & Bakker, A. B. (2002b). The measurement of engagement and burnout: A two sample confirmatory factor analytic approach. Journal of Happiness Studies, 3(1), 71–92.

Schlechty, P. C. (2011). Engaging students: The next level of working on the work. Hoboken: Wiley.

Schweppe, J., & Rummer, R. (2014). Attention, working memory, and long-term memory in multimedia learning: An integrated perspective based on process models of working memory. Educational Psychology Review, 26(2), 285–306.

Seppälä, P., Mauno, S., Feldt, T., Hakanen, J., Kinnunen, U., Tolvanen, A., et al. (2009). The construct validity of the Utrecht Work Engagement Scale: Multisample and longitudinal evidence. Journal of Happiness Studies, 10(4), 459–481. https://doi.org/10.1007/s10902-008-9100-y.

Shernoff, D. J., Kelly, S., Tonks, S. M., Anderson, B., Cavanagh, R. F., Sinha, S., et al. (2016). Student engagement as a function of environmental complexity in high school classrooms. Learning and Instruction, 43, 52–60.

Shuck, B., & Wollard, K. (2010). Employee engagement and HRD: A seminal review of the foundations. Human Resource Development Review, 9(1), 89–110.

Sokolowski, R. (2000). Introduction to phenomenology. Cambridge: Cambridge University Press.

Wang, M. T., Fredricks, J. A., Ye, F., Hofkens, T. L., & Linn, J. S. (2016). The math and science engagement scales: Scale development, validation, and psychometric properties. Learning and Instruction, 43, 16–26.

Zepke, N. (2015). Student engagement research: Thinking beyond the mainstream. Higher Education Research & Development, 34(6), 1311–1323.

Cite this article

Padgett, J., Cristancho, S., Lingard, L. et al. Engagement: what is it good for? The role of learner engagement in healthcare simulation contexts. Adv in Health Sci Educ 24, 811–825 (2019). https://doi.org/10.1007/s10459-018-9865-7

DOI: https://doi.org/10.1007/s10459-018-9865-7