Abstract

Image-guided interventions have become the standard of care for needle-based procedures. The success of image-guided procedures depends on the ability to precisely locate and track the needle. This work focuses on 2D ultrasound-based tracking of a hollow needle (cannula) that is composed of straight segments connected by shape memory alloy actuators. An in-plane tracking algorithm based on optical flow is proposed to track the cannula configuration in real time. Optical flow is a robust tracking algorithm that can easily run on a CPU. However, the algorithm does not perform well when it is applied to ultrasound images directly because of the intensity variation in the images. The method presented in this work enables the optical flow algorithm to be used on ultrasound images to track features of the needle. By taking advantage of the bevel tip, the circular Hough transform is used to accurately locate the needle tip when the imaging is out-of-plane. The success of the proposed tracking methods was demonstrated through experiments inside a tissue phantom and ex-vivo experiments in bovine kidney. Using the methods presented in this work, quantitative information about the needle configuration is obtained in real time, which is crucial for generating control inputs for the needle and automating the needle insertion.


Introduction

Percutaneous needle-based procedures such as biopsy, prostate brachytherapy and radio-frequency ablation (RFA) require guidance of the needle to a target location to deliver therapy or to remove tissue samples from a suspicious site for diagnosis. Tracking the needle in real time through imaging makes it easier to guide the needle inside soft tissue and enables automation of the needle insertion procedure. Percutaneous needle-based procedures are commonly performed using magnetic resonance imaging (MRI), ultrasound, computed tomography (CT) or fluoroscopy. The success of image-guided procedures depends strongly on the ability to precisely track and guide the needle.

There are many challenges in achieving accurate targeting in needle-based procedures such as needle deflection due to tissue deformation. The targeting errors due to initial alignment of the needle with the target can be reduced with robotic assistance.29,11,22 However, most of the targeting errors occur due to needle deflection when the needle is inside the soft-tissue. Furthermore, some sites are inaccessible using straight-line trajectories due to the anatomical structures that need to be avoided. Targeting errors may lead to false negatives in biopsy procedures, imprecise delivery of radiation therapy in brachytherapy procedures and ablation of healthy tissue in radio-frequency ablation procedures. In our previous work, a multi-degree-of-freedom hollow needle (cannula) was developed to account for the needle placement errors.4 The discretely actuated steerable cannula enables trajectories that are not limited to straight-line trajectories. The cannula is composed of straight segments that are connected by shape memory alloy (SMA) actuators at discrete locations along its length. When the SMA actuators are heated using resistive heating, they transform into an arc thereby generating joint torques. To control the bending angle at each joint and hence the tip of the cannula, two control approaches have been explored. One approach is to measure the bending angle or the tip position directly via the imaging modality. The second approach is an indirect approach where the strain of the SMA actuators is controlled by controlling the temperature of the SMA actuator. The constitutive model of the SMA relates the stress, strain and the temperature of the SMA.25 This work is focused primarily on the detection of the cannula in ultrasound-images for ultrasound-guided steering of the cannula.

Related Work on Ultrasound Imaging

Ultrasound imaging is inexpensive, portable and free of ionizing radiation. Real-time images can be obtained intraoperatively which makes it attractive for instrument guidance in interventional procedures. In clinics, the localization of the needle is done by an experienced physician. When ultrasound is intended to be used as a feedback to automate the needle insertion procedure, the ultrasound image needs to be analyzed to extract quantitative information about the needle shape and configuration.

Image analysis is commonly applied to ultrasound images to reduce noise and extract useful information about the needle position. Extraction of lines, edges and curves is a key step in image analysis. The Radon transform is a well-known tool for detecting parametrized shapes in an image.26 Radon transform was previously used to determine parametrized shapes of a curved needle.23 Hough transform is a special case of the Radon transform and is commonly used to determine line parameters such as slope and intersection points.13 Hough transform has been used to find the needle long-axis which is usually the brightest line in an ultrasound image.33,8 3D ultrasound imaging is advantageous since it enables 3D visualization of the surgical site. Novotny et al. tracked passive markers that were wrapped on a needle using 3D ultrasound to obtain needle position.8 The markers were detected at different positions after imaging for 5 s. Adebar and Okamura attached a piezoelectric actuator to the needle shaft outside the tissue to create vibrations that can be detected in the ultrasound images using 3D Doppler ultrasound.28 An active contour-based detection method was also used to detect a curved instrument and the results were demonstrated in 3D ultrasound images.35 Previous work on 3D ultrasound primarily focused on detection of the instruments in pre-acquired images.28,35 The implementation of the algorithms is computationally intensive, requires a GPU processor,23,21 and only a neighborhood of the region of interest is updated to decrease the computation time.8

2D ultrasound is the current standard in clinics. The images are obtained as slices, as opposed to voxels in 3D ultrasound. Hence, 2D ultrasound has a high acquisition rate and is advantageous for real-time active tracking. When the long axis of the needle is perpendicular to the plane of the ultrasound beam, imaging occurs out-of-plane (Fig. 1a). In out-of-plane tracking, the detected shape is the cross-section of the needle. This approach is mostly used to locate the needle tip.27,2 It is troublesome for active tracking because the geometry of the needle is cylindrical, and every cross-section gives a circle. The needle shaft can therefore easily be mistaken for the needle tip. The ultrasound transducer is usually placed at an angle with respect to the needle insertion direction to visualize the needle tip31,17 (Fig. 1c). In this case, the detected cross-section can be the needle shaft while the needle tip is at a greater depth. When the needle long axis and the needle tip lie in the same plane as the ultrasound beam, the imaging takes place in-plane (Fig. 1b). In-plane tracking makes guidance easier since the entire needle can be seen. In-plane tracking requires finding geometry-specific features of the needle. For a rigid needle, the feature to be detected can be a line, whereas detection of local or global curvature may be required for a curved needle.

To actively steer the discretely actuated steerable cannula, we need image processing algorithms that extract information about the needle tip and joint angles. The goal of this paper is to develop real-time imaging algorithms that detect the shape of the discretely actuated steerable cannula and achieve accurate localization of its tip. In-plane tracking gives information about the configuration of the cannula and can be used as feedback to control the motion of the cannula. Out-of-plane tip detection is achieved by rotating the ultrasound transducer until the ultrasound beam is perpendicular to the needle long axis and then scanning along the cannula to detect the exact location of its tip. In this way, any out-of-plane motion can be easily detected. The out-of-plane tracking method proposed in this paper guarantees that the detected shape is the needle tip.

In ultrasound imaging, artifacts of the needle result in missing boundaries. Even when the boundaries are clearly resolved, extracting the boundaries of the needle is not sufficient for tracking. Independent of which algorithm is used, there still has to be a post-processing step to extract quantitative information on the configuration of the needle. For instance, Hough line transform may work well in situations where there is only a single bright line. Despite the missing boundaries, the line parameters can be obtained when a portion of the line is detected. However, it would fail for the cases where there are multiple links. Since the goal is to find the slope between the links, one cannot specify a desired slope to eliminate the other lines. Hence, for real-time guidance of the needle, the performance of the tracking method used does not only depend on detecting the needle boundaries, but also depends on effectively getting quantitative information that describes the needle configuration. In this work, needle detection and quantitative information are obtained simultaneously.

Contributions

The main contribution of this paper is employing the optical flow algorithm and circular Hough transform to develop image processing algorithms that can detect the cannula and provide quantitative information about its configuration. This is a crucial first step towards automating the cannula insertion and controlling the cannula in a closed-loop using real-time image-feedback. To track the shape of the cannula in-plane, a tracking algorithm based on optical flow was developed. Lucas–Kanade optical flow is a powerful algorithm for motion estimation and feature tracking.1 This algorithm is advantageous since it is computationally efficient, and can run on a CPU. Optical flow algorithm has been previously used for temperature estimation in ultrasound images,6 estimation of muscle thickness during contraction,9 and myocardial strain analysis16 to name a few. While the optical flow algorithm has been applied in other applications, to the best of our knowledge, this is the first time the optical flow algorithm is applied to ultrasound images to track the features of a needle. Optical flow algorithm is based on the brightness smoothness assumption and hence it is sensitive to variation in brightness. Ultrasound imaging suffers from brightness variation. The brightness not only changes in space but also in time. Therefore, applying the optical flow algorithm to the ultrasound images directly gives poor results. We introduce a pre-processing step that enables overcoming the brightness variation problem and enables using the fast and powerful optical flow tracking algorithm on ultrasound images. In this paper, pre-processing refers to the steps before the optical flow algorithm and the circular Hough transform are used. Although the focus of the paper is primarily on tracking the discretely actuated steerable cannula, we show that the algorithms developed in this work can be extended to other generic needles as well. 
A needle-tip scanning method is also introduced that detects the tip of the cannula using circular Hough transform when the tracking is out-of-plane. The proposed method guarantees that the detected shape is the needle tip and not the needle shaft.

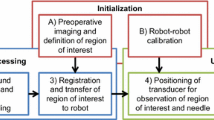

The paper is organized as follows. In Sect. 2, we describe the discretely actuated steerable cannula and the experimental setup used for tracking. We also describe the circular Hough transform along with the optical flow algorithm. In Sect. 3, the effectiveness of the proposed in-plane and out-of-plane tracking methods is demonstrated through experiments inside a tissue phantom made of gelatin and ex-vivo experiments in bovine kidney. Finally, in Sect. 4, we make some concluding remarks.

Materials and Methods

Discretely Actuated Steerable Cannula

The cannula is composed of straight segments that are connected by SMA actuators. The SMA actuators are 0.53 mm diameter wires that were annealed in an arc shape. When an SMA actuator is heated using resistive heating, it deforms into an arc shape, thereby generating joint torques. SMA actuators exhibit the one-way shape memory effect, so an antagonistic pair of SMA actuators is needed at each joint to achieve joint motion in a plane.

Figure 1d shows the discretely actuated steerable cannula, which has three straight segments and two joints. The straight segments have a 1.651 mm inner diameter and a 3.175 mm outer diameter. The lengths of the segments from the base to the tip are 3.8, 2.5 and 2.1 cm, respectively. In our previous work, the straight metal segments were coated with a high-temperature enamel coating for electrical insulation.12 In this work, the proximal link (closest to the base) is made of metal and coated with a high-temperature enamel coating, and the remaining straight segments are made of polycarbonate. All SMA actuators lie in a plane and the motion of the cannula occurs in that plane. The cannula is covered with a non-conductive sheath for thermal and electrical insulation of the SMA actuators inside soft tissue.

The kinematics of the cannula is straightforward. The schematic used for deriving the forward kinematics of the cannula is shown in Fig. 1e. There are 3 degrees-of-freedom (DOF): 1-DOF at each joint and 1 insertion DOF. The configuration of the cannula in a plane can be described using Eq. (1):

where \(\alpha_1\) and \(\alpha_2\) are the joint angles; \(u\) is the insertion distance; \(L_1\), \(L_2\), and \(L_3\) are the lengths of the straight segments from the base to the tip; \((x,y)\) is the position of the cannula tip and \(\beta\) describes the orientation of the distal link (and hence the cannula tip). The radii of curvature of the joints are given by \(r_1\) and \(r_2\). The relation between the arc radius, \(r\), and the joint angle, \(\alpha\), is \(r=\ell / \alpha\), where \(\ell\) is the length of the SMA actuator between consecutive links. The three equations representing the geometry of the cannula are solved using a 4th-order Runge–Kutta solver.
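The planar geometry described above can be illustrated with a short sketch. This is not the paper's Eq. (1) or its Runge–Kutta integration: it assumes each SMA joint bends as a circular arc of length \(\ell\) with \(r=\ell/\alpha\), and chains the straight links and arcs in closed form. The function names and the actuator length `ell = 5.0` mm are hypothetical; the default link lengths are the 3.8, 2.5 and 2.1 cm given for the prototype.

```python
import math

def arc_step(x, y, theta, ell, alpha):
    """Advance along a circular arc of length ell that turns the heading by alpha."""
    if abs(alpha) < 1e-12:
        # Straight limit: avoids dividing by zero when the joint is not bent
        return x + ell * math.cos(theta), y + ell * math.sin(theta), theta
    r = ell / alpha  # arc radius, r = ell / alpha as in the text
    x += r * (math.sin(theta + alpha) - math.sin(theta))
    y += r * (math.cos(theta) - math.cos(theta + alpha))
    return x, y, theta + alpha

def cannula_tip(u, alpha1, alpha2, lengths=(38.0, 25.0, 21.0), ell=5.0):
    """Return (x, y, beta): tip position in mm and distal-link angle in radians.

    ell is a hypothetical actuator length; lengths are L1, L2, L3 in mm.
    """
    L1, L2, L3 = lengths
    x, y, theta = u + L1, 0.0, 0.0                     # insertion plus proximal link
    x, y, theta = arc_step(x, y, theta, ell, alpha1)   # joint 1
    x, y = x + L2 * math.cos(theta), y + L2 * math.sin(theta)
    x, y, theta = arc_step(x, y, theta, ell, alpha2)   # joint 2
    x, y = x + L3 * math.cos(theta), y + L3 * math.sin(theta)
    return x, y, theta
```

Under these assumptions the tip orientation is simply \(\beta = \alpha_1 + \alpha_2\), and a straight cannula reduces to a tip at \(u + L_1 + L_2 + L_3 + 2\ell\) along the insertion axis.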

Experimental Setup

The experimental setup used for ultrasound guidance is shown in Fig. 2. Two linear rail systems (Haydon Motion Solutions) are mounted orthogonally to the top frame. Each rail is driven by a stepper motor (0.015875 mm per step resolution) and has a 305 mm travel length. These two rails are used to position the ultrasound transducer in a plane. The cannula is fixed between two plates. The plates are attached to a vertical support, and the vertical support is attached to the sliding element of rail 3. The height of the plates can be adjusted using screws. The vertical position of the ultrasound probe can also be adjusted prior to the experiments to make sure that the probe is in contact with the tissue sample. The dashed arrows indicate the adjustment directions for the cannula fixture and the ultrasound fixture. A DC motor (Model 247858, Maxon Precision Motors, Inc.) is attached to the sliding element of rail 2, and its rotation axis is aligned with the center of the ultrasound probe. Hence, the ultrasound probe has two translational degrees of freedom and one rotational degree of freedom.

Two PCs communicate through a serial port. One PC controls the rails and the cannula using a Sensoray 626 DAQ. The DAQ sends control signals to the solid state relays (SSRs) that control the current flow to the respective SMA actuators. The rails are controlled via a TTL-compatible microstepping chopper drive (DCM 8028, Haydon Motion Solutions). The TTL signals are generated using an Arduino UNO board at 500 Hz, which corresponds to a travel speed of 3.9 mm/s. The DC motor is controlled in two phases: a constant 2.5 V is supplied until the motor angle is within 10° of the desired angle, and once the error is less than 10° a proportional-integral (PI) controller takes over. The second PC processes the ultrasound images. The ultrasound system is a Philips Sonos 5500 ultrasound console with a 3–11 MHz linear array transducer (Model 11-3L). The ultrasound images are acquired in real time at 15 fps using a Matrox Morphis (Matrox Inc.) frame grabber. The Matrox Imaging Library (MIL) is used to grab and process the images in real time.
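The two-phase motor control described above can be sketched as a single update function. Only the 2.5 V level and the 10° switching threshold come from the text; the gains `kp` and `ki`, the choice to reset the integrator during the coarse phase, and the sign convention (positive error drives positive voltage) are assumptions made for illustration.

```python
def motor_voltage(error_deg, integral, dt, kp=0.1, ki=0.05,
                  coarse_volts=2.5, switch_deg=10.0):
    """Return (voltage, updated_integral) for one control step.

    kp and ki are hypothetical gains; 2.5 V and 10 degrees come from the text.
    """
    if abs(error_deg) >= switch_deg:
        # Coarse phase: constant voltage toward the target, integrator held at zero
        volts = coarse_volts if error_deg > 0 else -coarse_volts
        return volts, 0.0
    # Fine phase: PI control on the remaining angular error
    integral += error_deg * dt
    return kp * error_deg + ki * integral, integral
```

Holding the integrator at zero until the fine phase starts is one simple way to avoid windup from the large initial error; other hand-over strategies would fit the description equally well.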

Lucas–Kanade Optical Flow for In-Plane Tracking

Optical flow is a fast and robust tracking algorithm that can be used to track features or points in an image stream. The Lucas–Kanade optical flow algorithm is briefly described here for completeness, and to shed light on the underlying assumptions which make it hard to apply the algorithm to the ultrasound images directly. By comparing two consecutive images in an image stream, an intensity map can be obtained that describes the change in intensity per pixel. Let the increase in brightness in the first image at a pixel location \(p(x,y)\) along the \(x\) direction be \(I_x\) and \(y\) direction be \(I_y\). After a movement by \(v_x\) pixels in the \(x\) direction and \(v_y\) pixels in the \(y\) direction, the total increase in brightness is equal to the local difference in intensity \(I_{\rm{t}}\) between the two frames. Hence, the optical flow equation is given as:18

\[ I_x v_x + I_y v_y = -I_{\rm{t}} \tag{2} \]

where \(v_x\) and \(v_y\) are the unknown optical flow of the pixel in \(x\) and \(y\) direction, respectively. The displacements in \(x\) and \(y\) directions are defined in terms of pixels. In the more general form, the optical flow equation for the ith pixel, \(p_i\), is given as:

\[ \nabla I(p_i)\cdot [v_x, v_y]^{\rm{T}} + I_{\rm{t}}(p_i) = 0 \tag{3} \]

The first term is the spatial gradient and the second term is the temporal gradient. Since one pixel does not provide enough information for corresponding to another pixel in the next frame, the equations are formulated in a \(k\times k\) sized window around the pixel assuming they all move by \([v_x,v_y]^{\rm{T}}\). A set of \(k^2\) equations can be obtained and represented in the matrix form as:18

\[ \begin{bmatrix} I_x(p_1) & I_y(p_1) \\ I_x(p_2) & I_y(p_2) \\ \vdots & \vdots \\ I_x(p_{k^2}) & I_y(p_{k^2}) \end{bmatrix} \begin{bmatrix} v_x \\ v_y \end{bmatrix} = - \begin{bmatrix} I_{\rm{t}}(p_1) \\ I_{\rm{t}}(p_2) \\ \vdots \\ I_{\rm{t}}(p_{k^2}) \end{bmatrix} \tag{4} \]

The system of equations is overdetermined and is of the form:

\[ A d = b \tag{5} \]

The solution to Eq. (5) is formulated as a least-squares minimization of the equation by minimizing \(\left\| Ad -b\right\| ^2\). The solution \(d=[v_x,v_y]^{\rm{T}}\) is found by solving:

\[ (A^{\rm{T}}A)\, d = A^{\rm{T}} b \tag{6} \]

\(A^{\rm{T}}A\) is a \(2\times 2\) matrix built from products of the first-order image derivatives summed over the window; it is the Gauss–Newton approximation to the Hessian of the least-squares cost. Eigenvalue analysis of this matrix contains information about local image structure and reveals edges and corners that can be tracked between images.34 Hence, the detected features are the edges and corners in the \(k\times k\) sized window. Since the algorithm searches only a small spatial neighborhood of the pixel of interest, large motions can move the points outside the local neighborhood, and the tracked points can be lost. To overcome this problem, the pyramidal Lucas–Kanade algorithm was developed, which analyzes the motion flow from the coarsest level of detail to the finest.19 The pyramidal implementation of the Lucas–Kanade method is a fast and reliable optical flow estimator that can accommodate large motions. We use the OpenCV implementation of the algorithm.7 The mathematical formulation of the optical flow algorithm in pyramids (top to down) is beyond the scope of this work and is described elsewhere.19
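The single-window least-squares step can be illustrated with a small pure-Python sketch. It is not the pyramidal OpenCV implementation used in this work: it handles one window at one resolution, uses central differences for the spatial gradients, and solves the \(2\times 2\) normal equations by Cramer's rule. The function names and the synthetic quadratic test pattern are hypothetical.

```python
def lucas_kanade_window(prev, curr, cx, cy, half):
    """Estimate (vx, vy) for the (2*half+1)^2 window centred on (cx, cy).

    prev and curr are 2D lists indexed as image[y][x].
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # spatial gradient in x
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # spatial gradient in y
            it = curr[y][x] - prev[y][x]                  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy  # determinant of A^T A
    if abs(det) < 1e-12:
        raise ValueError("window has too little texture to solve for the flow")
    # Solve (A^T A) d = A^T b with b = -I_t by Cramer's rule
    vx = (-sxt * syy + syt * sxy) / det
    vy = (-syt * sxx + sxt * sxy) / det
    return vx, vy

def bowl(shift_x, shift_y, size=11, c=5):
    """Synthetic quadratic intensity pattern translated by (shift_x, shift_y)."""
    return [[(x - c - shift_x) ** 2 + 2.0 * (y - c - shift_y) ** 2
             for x in range(size)] for y in range(size)]

# Pattern moved by 0.3 px in x and -0.2 px in y between the two frames
flow = lucas_kanade_window(bowl(0.0, 0.0), bowl(0.3, -0.2), cx=5, cy=5, half=2)
```

A near-zero determinant corresponds to a window with no usable texture, which is why features with strong gradients in both directions are the ones that can be tracked. In OpenCV's Python bindings the pyramidal tracker is exposed as `cv2.calcOpticalFlowPyrLK`.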

Circular Hough Transform for Tip Detection

The Hough transform is commonly used to find the parametrized shapes in an image. It was originally used for the detection of straight lines14 and has been previously applied for the detection of the needle long-axis in ultrasound images.31,10 It was also used to detect curved needles by connecting the straight segments that are found using Hough transform with a polynomial fit.20 Hough transform can also be used to detect shapes other than lines such as circles and ellipses. Circular Hough transform is a particular example where Hough transform is employed to search a parameter space. A circle with radius \(r_{\rm{c}}\) and its center at \((x_{\rm{c}},y_{\rm{c}})\) is parametrized by the equation:

\[ (x-x_{\rm{c}})^2 + (y-y_{\rm{c}})^2 = r_{\rm{c}}^2 \tag{7} \]

The Hough transform usually employs an edge detector to detect the edges of the circle. An arbitrary edge point \((x_i,y_i)\) transforms into a circular cone in the \((x_{\rm{c}}, y_{\rm{c}}, r_{\rm{c}})\) parameter space.14 The circular Hough transform was previously used for automated localization of the retinal optic disk in images captured by a fundus camera,30 measurement of arterial diameter in ultrasound images during the cardiac cycle,24 and segmentation of the left ventricle in 3D echocardiography.5 We use the circular Hough transform to detect the needle tip when the tracking is out-of-plane. Since the diameter of the needle is known, the search reduces to two parameters. The algorithm also uses the edge gradient information, which determines the direction of the circle center with respect to the edge point.32
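The reduction to a two-parameter search can be illustrated with a minimal voting sketch. It assumes the radius is known and lets every edge point vote for all candidate centers at that distance; it does not use the edge gradient direction, so it is a simpler variant than the gradient-based method described above. The function name, the angular step and the synthetic edge map are hypothetical.

```python
import math
from collections import Counter

def hough_circle_center(edge_points, radius, angle_step_deg=2):
    """Vote for circle centres at a known radius; return (xc, yc, votes)."""
    votes = Counter()
    for (x, y) in edge_points:
        cells = set()
        for a in range(0, 360, angle_step_deg):
            t = math.radians(a)
            cells.add((round(x - radius * math.cos(t)),
                       round(y - radius * math.sin(t))))
        votes.update(cells)  # each edge point votes once per candidate cell
    (xc, yc), count = votes.most_common(1)[0]
    return xc, yc, count

# Synthetic edge map: pixels on a circle of radius 9 centred at (20, 15)
edges = {(round(20 + 9 * math.cos(math.radians(a))),
          round(15 + 9 * math.sin(math.radians(a)))) for a in range(0, 360, 5)}
xc, yc, count = hough_circle_center(edges, radius=9)
```

With the radius fixed, the accumulator is a 2D array over \((x_{\rm{c}}, y_{\rm{c}})\) rather than a 3D volume, which is what keeps the search inexpensive.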

Experiments and Results

In-Plane Tracking of the Cannula

The markers are the tracked features (points). A line was plotted to connect the two points to show the orientation of the cannula tip. (a) Two points were selected (shown on left). As the cannula was inserted further into the tissue phantom, the point at the tip shifted across the needle cross-section (shown on right, 21 frames later) because pixels further down the cross-section had a brightness similar to that of the pixels originally selected on the top surface. This introduces an error in the angle calculation. (b) Another example, in which the algorithm fails at the start when a point on the distal link (the link where the tip is located) was selected. When a point on the top surface was clicked with the mouse, the algorithm detected a feature (a brighter edge) lower down the cross-section.

There are two main assumptions of the Lucas–Kanade optical flow algorithm. Firstly, optical flow algorithm is sensitive to brightness variation and it expects brightness smoothness. The objects or features in the image should exhibit intensity levels that change smoothly. The second assumption is the spatial coherence. It is assumed that neighboring points in a region belong to the same surface and move in a similar fashion. These assumptions make it difficult to apply the optical flow algorithm to ultrasound images directly. In ultrasound images the brightness of a region can change both in time and space. The optical flow algorithm was tested on the ultrasound videos of the cannula. Initially, the desired features to be tracked (corner, edge) are selected by clicking on the image with the mouse. To get the cannula configuration, we track a corner (cannula tip) and an edge (a point on the distal link) on the top surface. The coordinates of these two points are enough to determine the tip location and orientation. Figure 3 demonstrates two cases where the algorithm fails to track the desired points in an image stream. To eliminate these problems, smooth brightness should be achieved on the surface from which the points are selected and the brightness variation of the tracked points in time should be minimized. Edge detection algorithms are useful to find the boundaries of the cannula. However, the boundaries of the cannula are not always clearly resolved since the artifacts result in missing boundaries. Pre-processing of the images is necessary to reduce noise, intensity variation, and missing boundaries. Using a fixed threshold value is risky since in ultrasound images the brightness not only varies in space but also in time as the needle is steered inside the soft tissue or the organ. 
Even when the threshold value is varied between images, there might be anatomical structures with the same brightness as the needle, and thresholding can remove portions of the needle as well. Hence, in this work we avoid thresholding and use only morphological operations to remove noise and detail from the images.

The image is first processed using downsampling followed by upsampling operation to reduce the amount of noise and detail. Erosion, dilation and blurring operations are also applied recursively to smooth the image and reduce brightness variation. The surface of the cannula facing the transducer has higher brightness in ultrasound images. Sobel operator is applied to the pre-processed image to detect the top surface of the cannula. The edges occur when the gradient is greatest and the Sobel operator finds the edges in the image. The Sobel Operator is a discrete differentiation operator and it computes an approximate image gradient in the \(x\) and \(y\) directions by convolving the image with a pair of \(3\times 3\) kernels. The kernels of the Sobel operator, \(S_x\) and \(S_y\), are defined as:

\[ S_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}, \qquad S_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix} \tag{8} \]

After the top surface of the cannula is detected, a linear blend operator is applied using Eq. (9) to combine the output of the Sobel operator with the original image.

where \(I\) is the original image, \(I_{\rm{sobel}}\) is the image that is obtained by convolving the output of the morphological operations with the Sobel operators, and \(\gamma\) is the weight of blending that ranges between 0 and 1. The blend operator overlays both images, thus enhancing the brightness of the top surface of the cannula on the original image. The value of \(\gamma\) was chosen as 0.65. This coefficient should be larger than 0.5 so that the output of the Sobel operator (top surface) has a higher weighting. The blend operator not only increases the brightness of the top layer that is detected using the Sobel operator but also reduces the brightness of the other regions since the original image intensities are multiplied by a coefficient that is smaller than 1. The resulting image is used as an input to the optical flow algorithm.
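The Sobel and blend steps can be sketched on a tiny synthetic image. The sketch assumes the blend takes the form \(\gamma I_{\rm{sobel}} + (1-\gamma) I\), which matches the description of \(\gamma\) above but is a reconstruction, and it applies the Sobel operator to the raw frame rather than to the morphologically processed image. It uses the gradient magnitude clipped to 8-bit range; the function names and the test frame are hypothetical, and any additional brightness scaling is omitted.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def convolve3(img, kernel, x, y):
    """Correlate a 3x3 kernel with img around (x, y); img is indexed img[y][x]."""
    return sum(kernel[j][i] * img[y + j - 1][x + i - 1]
               for j in range(3) for i in range(3))

def sobel_magnitude(img):
    """Gradient magnitude clipped to 0..255; the one-pixel border stays at zero."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = convolve3(img, SOBEL_X, x, y)
            gy = convolve3(img, SOBEL_Y, x, y)
            out[y][x] = min(255.0, (gx * gx + gy * gy) ** 0.5)
    return out

def blend(original, sobel, gamma=0.65):
    """Linear blend: gamma weights the Sobel output, (1 - gamma) the original."""
    return [[gamma * s + (1.0 - gamma) * o for s, o in zip(srow, orow)]
            for srow, orow in zip(sobel, original)]

# Synthetic frame: dark medium above row 3, uniformly bright cannula from row 3 down
frame = [[0.0] * 7 for _ in range(3)] + [[100.0] * 7 for _ in range(4)]
result = blend(frame, sobel_magnitude(frame))
```

In this toy frame the top-surface row ends up much brighter than the interior of the cannula, whose intensity is scaled down by \(1-\gamma\); this is the contrast enhancement that the text attributes to the blend operator.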

(a) The top surface of the cannula was clearly resolved after applying the Sobel operator to the pre-processed images. (b) The shape of the cannula was successfully detected and overlayed on the ultrasound images from the experiment. Since the blend coefficient \(\gamma\) was chosen as 0.3 the image has a tinge of gray. (c) The change in joint variables \(q=[\alpha _1,\alpha _2,u]\), in experiment shown in (b) were calculated in real-time. (d) The various steps of the pre-processing steps in ex-vivo bovine kidney experiment. (e) The shape of the cannula was successfully detected and drawn on the ultrasound images. (f) The change in joint variables in cannula insertion experiment shown in (e)

Videos of cannula insertion experiments were recorded to test and optimize the parameters of the tracking algorithm. The videos are 45 s long on average and were recorded at 15 fps. The tested parameters were the kernel size of the dilation and erosion (3, 5, 7, 9), the number of recursive morphological operations (between 1 and 10), the blend coefficient (between 0 and 1), and the brightness increase \(\lambda\) (between 1 and 3). Among these, the number of recursive morphological operations determines how much noise and detail is removed from the image. Five iterations give a clear image for both gelatin and soft tissue without substantially increasing the computation time. The kernel size of the dilation/erosion does not have a substantial effect on the outcome. \(\lambda\) can be selected between 2 and 3 to increase the brightness of the top surface; \(\lambda =3\) works better for soft tissue.

In the experiments, the transducer is stationary and the cannula is inserted into the gelatin. The cannula is aligned in-plane with the ultrasound beam. The tracking algorithm starts when the cannula has been inserted sufficiently far into the medium. Once the cannula is inside the imaging region, the tip of the cannula and one point on the top surface of the distal link are selected. A line is fitted between the two points and the tip orientation is obtained. This configuration is registered as the initial configuration. The motion of the two points is continuously tracked using the pyramidal Lucas–Kanade optical flow algorithm. The optical flow algorithm gives the cannula tip position and orientation in pixel coordinates. The pixel information is then converted into physical dimensions, and the joint variables, \(q = [u, \alpha _1, \alpha _2]\), are solved using inverse kinematics. Finally, the shape of the cannula is drawn on the image in real time using the kinematic equation given in Sect. 2.1. A full description of the in-plane tracking procedure is given in Algorithm 1.
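The step from two tracked pixel locations to a tip pose can be sketched as follows. The function name and the calibration value `mm_per_pixel` are hypothetical; the sketch assumes square pixels and the usual image convention in which the row index grows downward, so the vertical difference is negated to obtain a counter-clockwise-positive angle.

```python
import math

def tip_pose(tip_px, link_px, mm_per_pixel):
    """Return (x_mm, y_mm, beta_deg) from two tracked points given as (column, row).

    tip_px is the cannula tip and link_px a second point on the distal link.
    mm_per_pixel is an assumed calibration value, not taken from the text.
    """
    dx = tip_px[0] - link_px[0]
    dy = link_px[1] - tip_px[1]  # image rows grow downward, so flip the sign
    beta = math.degrees(math.atan2(dy, dx))
    return tip_px[0] * mm_per_pixel, tip_px[1] * mm_per_pixel, beta
```

For example, a tip at (100, 30) and a link point at (80, 50) give an orientation of 45°, since the tip lies 20 pixels to the right of and 20 pixels above the link point. The resulting position and angle are the inputs that the inverse kinematics would consume.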

Figure 4a shows the original ultrasound image, the pre-processed image and the output of the blend operator, which is given as an input to the tracking algorithm. Figures 4b–4c show the results from an in-plane tracking experiment. The cannula trajectory is displayed on the screen and the changes in the joint variables are recorded in real time. Any technique can be used to draw the cannula shape on the original image. In this work, the shape of the cannula is drawn in a separate window and overlaid on the original image using a blend operator. The cannula kinematics is overlaid on the image with \(\gamma = 0.3\) to demonstrate that the cannula shape is accurately detected. The choice of the coefficient for this overlay is not important as long as the drawing can be seen on the image.

The algorithm was also verified in an ex-vivo experiment. Figure 4d shows various steps of the image processing. Figures 4e–4f show the results of a cannula insertion experiment into a bovine kidney. The soft-tissue has more intensity variations compared to tissue phantom made of gelatin, yet the output of the Sobel edge detector clearly resolves the top surface of the cannula.

The pre-processing step was tested on two commercially available needles inside ex-vivo bovine tissue, as shown in Fig. 5a. One of the needles is an 18 gauge needle used for prostate brachytherapy (Mick Radio Nuclear Instruments, Inc.) and the other is a 12 gauge high-field MRI coaxial biopsy needle (Invivo, Corp.). The goal of this experiment is to demonstrate that the pre-processing step can highlight the top surface of generic metal needles as well. The cannula is currently made mostly of polycarbonate parts; future prototypes may involve metal parts. Since the pre-processing step uses a gradient operator (the Sobel operator), the algorithm responds to the relative intensity difference. Independent of which needle is used, the top surface has higher brightness since the transducer faces the top surface of the needle. Figures 5b and 5c show the original ultrasound images of the prostate brachytherapy needle and the biopsy needle, and the images after pre-processing. The brightest pixels are on the top surfaces of the needles, and the pre-processing step extracts these surfaces.

Image processing algorithms are intended for visualization or for obtaining information about the needle position, since the position of the needle is not known. It is difficult to give a quantitative measure of the performance of the algorithm because there is no ground truth that can be used for comparison. We could potentially introduce a magnetic tracker inside the cannula to verify the tip location. However, the error of magnetic trackers can be up to 2 mm.15 This corresponds to more than half the cannula diameter and is not a reliable way of providing a ground truth. In the tracking algorithm, the tracked features are trapped on the top surface of the cannula. Hence, the locations of the tracked points give an accurate measure of the joint angles. This was verified manually with digital protractor software.

Out-of-Plane Detection of the Cannula Tip

Figure 6. (a) To demonstrate tip detection using the circular Hough transform, the ultrasound transducer was slid over the cannula and images were recorded at 0.127 mm intervals. (b) From left to right: the cannula starts to appear; a bright region is observed, which corresponds to the upper part of the cross-section (bevel tip); the cannula cross-section is detected. The images show (i) the pre-processed images prior to the application of the circular Hough transform and (ii) the original images of the ROI where the cannula tip is located. When the circle was detected, it was drawn on the original image. The circular Hough transform gives the center of the detected circle. The center of the circle was marked in blue and the circle, which corresponds to the outer diameter of the cannula, was drawn in yellow on the frame in which it was detected. The circle was also drawn on the corresponding pre-processed image of that frame to show the actual location of the circle. The circular Hough transform operates on the pre-processed image.

In out-of-plane tracking, the ultrasound transducer is perpendicular to the direction of needle insertion. Hence, the ultrasound image shows cross-sections of the needle taken perpendicular to its long axis. To demonstrate tip detection using the circular Hough transform, the transducer was positioned away from the cannula tip such that the cannula tip was not visible (Fig. 6a). To reduce the computation time, the circular Hough transform searches for a circle within a 2.5–3 cm window (ROI), about 10 times the cannula diameter. The ROI is defined around the initial position of the cannula, which is known from the relative position of the rails. The transducer was moved towards the cannula tip in 0.127 mm increments. Downscaling, upscaling, dilation, and erosion operations followed by blurring were applied recursively to suppress noise and obtain a clear image (see Algorithm 2 for the parameters used). The result is a bright spot centered on the cannula axis. Figure 6b shows the original ultrasound images and the images after the pre-processing step. A range of pixel values can be specified to detect circles with a desired diameter. The circle radius was specified as 8–10 pixels, which corresponds to a diameter of 3.074–3.842 mm. The number of recorded frames from the time the tip started to appear to the frame in which the circle was detected is 39. This corresponds to a 4.953 mm displacement of the transducer. The length of the bevel tip is 5.5 mm, so the transducer travel before detection differs from the bevel length by about 0.55 mm, which indicates accurate detection of the tip.
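The numbers quoted in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below uses only values stated in the text; the variable names are illustrative, and the pixel spacing is inferred from the stated radius-to-diameter correspondence rather than reported directly.

```python
STEP_MM = 0.127            # transducer increment between recorded frames
FRAMES_TO_DETECTION = 39   # frames from first appearance of the tip to circle detection
BEVEL_LENGTH_MM = 5.5      # length of the bevel tip

# Transducer travel between first appearance of the tip and circle detection.
travel_mm = FRAMES_TO_DETECTION * STEP_MM

# Difference between the bevel length and the travel before detection.
gap_mm = BEVEL_LENGTH_MM - travel_mm

# Pixel spacing implied by "radius 8-10 px = diameter 3.074-3.842 mm".
mm_per_px_low = 3.074 / (2 * 8)
mm_per_px_high = 3.842 / (2 * 10)

print(f"travel before detection: {travel_mm:.3f} mm")
print(f"bevel length minus travel: {gap_mm:.3f} mm")
print(f"implied pixel spacing: {mm_per_px_low:.4f} and {mm_per_px_high:.4f} mm/px")
```

Both ends of the radius range imply a pixel spacing of about 0.192 mm per pixel, so the two stated ranges are mutually consistent.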

The out-of-plane detection can be used to track the needle tip in real-time. In our approach, the main tracking takes place in a plane. The cannula is initially aligned with the ultrasound beam in-plane. In the experimental setup, the relative configuration of the rails and the cannula is known. In a clinical scenario, the registration of the ultrasound probe with the cannula axis can be achieved using a camera system such as Micron Tracker (Claron Technologies Inc.), and the needle plane can be detected by jiggling the needle to differentiate its motion from that of the tissues or organs.3 The 3D location of the cannula tip can be obtained by rotating the ultrasound transducer by 90° around its axis and sliding the transducer over the cannula to obtain the location of the tip using the circular Hough transform. Using this approach, any off-plane movement of the cannula due to tissue interaction can be quantified. This method also guarantees that the detected shape is the needle tip and not the needle shaft. A full description of the out-of-plane tip detection procedure is given in Algorithm 2.
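The voting idea behind the circular Hough transform can be sketched in a few lines. The following is a brute-force illustration on synthetic edge points, assuming a binary edge map has already been extracted; it is not Algorithm 2 and not the implementation used in this work, and the function name, the angular sampling, and the test circle are all hypothetical.

```python
import math
from collections import Counter


def hough_circle(edge_points, r_min, r_max, n_angles=180):
    """Vote over candidate (cx, cy, r) triples and return the best-supported circle.

    Each edge point votes for every center that would place it on a circle of
    radius r, for each integer r in [r_min, r_max].
    """
    votes = Counter()
    for x, y in edge_points:
        for r in range(r_min, r_max + 1):
            candidates = set()
            for k in range(n_angles):
                theta = 2.0 * math.pi * k / n_angles
                cx = round(x - r * math.cos(theta))
                cy = round(y - r * math.sin(theta))
                candidates.add((cx, cy, r))
            # An edge point votes at most once for a given candidate circle.
            votes.update(candidates)
    (cx, cy, r), count = votes.most_common(1)[0]
    return cx, cy, r, count


# Synthetic edge points on a circle of radius 9 px centered at (40, 30),
# within the 8-10 px radius range quoted in the text.
edge_points = {
    (round(40 + 9 * math.cos(math.radians(t))),
     round(30 + 9 * math.sin(math.radians(t))))
    for t in range(360)
}

cx, cy, r, count = hough_circle(edge_points, r_min=8, r_max=10)
print(f"detected center=({cx}, {cy}), radius={r} px, votes={count}")
```

Restricting the radius range and the search window keeps the accumulator small, which is the reason a narrow radius range and an ROI reduce computation time.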

To demonstrate accurate localization of the cannula tip using combined in-plane and out-of-plane tracking, an experiment was carried out. The cannula is inserted in 0.508 cm increments and is actively tracked in-plane using the algorithm described in Sect. 3.1. Whenever the cannula tip reaches the center of the ultrasound image, the ultrasound transducer is also advanced in the direction of cannula insertion by 0.635 cm so that the cannula tip never crosses the rotation axis of the ultrasound transducer. When the user requests the 3D location of the tip, the ultrasound probe rotates 90° and the transducer is moved towards the cannula to detect the tip. Since the transducer always stays ahead of the cannula tip, it is guaranteed to be ahead of the tip once it is rotated.
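The "transducer stays ahead of the tip" argument can be checked with a one-dimensional simulation. This is a simplified sketch under stated assumptions: positions are measured along the insertion direction, the trigger is modeled as the tip reaching or passing the image center, and the starting positions are arbitrary. Only the two step sizes come from the text.

```python
INSERT_STEP_CM = 0.508   # cannula insertion increment (from the text)
PROBE_STEP_CM = 0.635    # transducer advance when the tip reaches the image center


def simulate(n_steps, tip_cm=0.0, probe_center_cm=1.0):
    """Return a list of (tip, probe_center) positions after each insertion step.

    probe_center_cm models the image center / rotation axis of the transducer;
    both starting values are hypothetical.
    """
    history = []
    for _ in range(n_steps):
        tip_cm += INSERT_STEP_CM
        # Advance the transducer whenever the tip has reached the image center.
        if tip_cm >= probe_center_cm:
            probe_center_cm += PROBE_STEP_CM
        history.append((tip_cm, probe_center_cm))
    return history


history = simulate(20)
always_ahead = all(probe > tip for tip, probe in history)
print("transducer ahead of tip after every step:", always_ahead)
```

The invariant holds because the tip can overshoot the image center by less than one insertion step (0.508 cm), which is smaller than the transducer advance (0.635 cm).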

Figure 7. (a) Ultrasound images: (i) before the ultrasound transducer was rotated (initially the tracking is in-plane), (ii) while the ultrasound transducer was rotating, (iii) after the 90° rotation was completed, with the cannula tip ahead of the ultrasound transducer, (iv) after the transducer moved towards the cannula and the circular cross-section was detected. The location of the tip is \([x,y,z]=[56.05,40.30,58.75]\) mm in the imaging plane. (b) Change in ultrasound transducer rotation and position, and the cannula displacement, with time.

The computation time is 43.4 ms for the in-plane tracking algorithm and 168.4 ms for the out-of-plane algorithm (roughly 23 and 6 frames per second, respectively). The reported computation times include the tracking algorithm, drawing the cannula (or the circle), and displaying the original and processed images. Figure 7b shows the change in cannula insertion distance, the displacement (rail 2), and the rotation of the ultrasound transducer. The transducer tracked the cannula tip until 22 s, at which point the user requested the out-of-plane tip location. The motor rotated 90°, the transducer started moving against the cannula insertion direction, and the circular Hough transform was activated. Since the vertical position of the tip in the plane is known prior to the rotation of the transducer, the ROI is defined automatically and the out-of-plane detection algorithm searches for a circle in the neighborhood of the previous location. When the cannula tip was detected, the program stopped. Figure 7a shows the ultrasound images at the locations marked as i, ii, iii and iv in Fig. 7b.
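Defining the ROI around a previously known location amounts to a clamped window computation. The sketch below assumes a square window, a hypothetical 640 x 480 image, a hypothetical previous tip location, and a pixel spacing of 0.1921 mm per pixel inferred from the radius-to-diameter figures quoted earlier; none of these are reported as implementation details in this work.

```python
def roi_around(center_xy, half_size_px, image_w, image_h):
    """Return (x0, y0, x1, y1) of a square window clamped to the image bounds."""
    cx, cy = center_xy
    x0 = max(0, cx - half_size_px)
    y0 = max(0, cy - half_size_px)
    x1 = min(image_w, cx + half_size_px)
    y1 = min(image_h, cy + half_size_px)
    return x0, y0, x1, y1


MM_PER_PX = 0.1921       # assumption: inferred from 10 px radius = 3.842 mm diameter
WINDOW_MM = 30.0         # upper end of the 2.5-3 cm search window

# Half-width of the search window in pixels.
half_size_px = round(0.5 * WINDOW_MM / MM_PER_PX)

# Hypothetical previous tip location near the top of a 640 x 480 image.
roi = roi_around((300, 50), half_size_px, image_w=640, image_h=480)
print("half window:", half_size_px, "px; ROI:", roi)
```

Clamping matters when the previous location is near an image border, as in this example, where the window is cut off at the top row.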

Conclusions

This work describes an in-plane needle tracking method that tracks the needle in 2D and an out-of-plane needle-tip detection method that localizes its tip. There are two main results. First, using the pre-processing method described in this work, the top surface of the needle can be highlighted and a smooth brightness profile can be achieved along it. This makes it possible to use the optical flow algorithm to track features of the needle in ultrasound images. Second, since the cross-section of the needle is a circle, the circular Hough transform can be used to localize the needle tip when the ultrasound beam is perpendicular to the tip of the needle. The in-plane tracking follows the orientation of the tip and its position on the top surface of the cannula. Out-of-plane tracking takes advantage of the bevel tip and the circular cross-section to detect the center of the bevel tip.

The effectiveness of the algorithm in extracting the top surface was demonstrated in tissue phantom and in ex-vivo bovine tissue. The algorithms presented here form the foundation of our future work on active 3D tracking of the cannula. The relative position of the ultrasound transducer and the cannula base was known since the transducer moves in the Cartesian plane. The algorithms start with the assumption that the cannula is in the imaging plane. In future work, we are interested in registration and positioning of the ultrasound transducer such that the ultrasound beam is aligned with the cannula plane. We have developed motion planning algorithms that can optimize the path between the entry point into the soft tissue and the target point based on multiple criteria. Future work will also focus on incorporating the motion planning algorithms into the tracking algorithms to steer the cannula to a desired region under ultrasound guidance.

References

Aboofazeli, M., P. Abolmaesumi, P. Mousavi, and G. Fichtinger. A new scheme for curved needle segmentation in three-dimensional ultrasound images. In: IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI), pp. 1067–1070, 2009.

Adebar, T. K., and A. Okamura. 3D segmentation of curved needles using Doppler ultrasound and vibration. In: Information Processing in Computer-Assisted Interventions. Lecture Notes in Computer Science, vol. 7915, pp. 61–70, 2013.

Ayvali, E., and J. P. Desai. Towards a discretely actuated steerable cannula. In: 2012 IEEE International Conference on Robotics and Automation (ICRA), pp. 1614–1619, 2012.

Ayvali, E., C. Liang, M. Ho, Y. Chen, and J. P. Desai. Towards a discretely actuated steerable cannula for diagnostic and therapeutic procedures. Int. J. Robotics Res. 31:588–603, 2012.

Bouguet, J. Y. Pyramidal Implementation of the Lucas Kanade Feature Tracker. Intel Corporation, Microprocessor Research Labs, 2000.

Bradski, G. The OpenCV Library. Dr. Dobb’s Journal of Software Tools, 2000.

Bradski, G., and A. Kaehler. Learning OpenCV: Computer Vision with the OpenCV Library. O’Reilly Media, 2008.

Chatelain, P., A. Krupa, and M. Marchal. Real-time needle detection and tracking using a visually servoed 3D ultrasound probe. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 1676–1681, May 2013.

Chen, X., L. Lu, and Y. Gao. A new concentric circle detection method based on Hough transform. In: International Conference on Computer Science Education (ICCSE), pp. 753–758, 2012.

Deans, S. R. Hough transform from the radon transform. IEEE Trans. Pattern Anal. Mach. Intell. 3:185–188, 1981.

Ding, M., and A. Fenster. A real-time biopsy needle segmentation technique using Hough transform. Med. Phys. 30:2222–2233, 2003.

Dong, B., E. Savitsky, and S. Osher. A novel method for enhanced needle localization using ultrasound-guidance. In: Advances in Visual Computing. Lecture Notes in Computer Science, vol. 5875. Berlin: Springer, pp. 914–923, 2009.

Duan, Q., K. M. Parker, A. Lorsakul, E. D. Angelini, E. Hyodo, S. Homma, J. W. Holmes, and A. F. Laine. Quantitative validation of optical flow based myocardial strain measures using sonomicrometry. In: Proceedings of IEEE International Symposium on Biomedical Imaging, vol. 2009, pp. 454–457, Jun 2009.

Duda, R. O., and P. E. Hart. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 15:11–15, 1972.

Fichtinger, G., J. Fiene, C. Kennedy, G. Kronreif, I. Iordachita, D. Song, E. C. Burdette, and P. Kazanzides. Robotic assistance for ultrasound-guided prostate brachytherapy. Med. Image Anal. 12:535–545, 2008.

Golemati, S., J. Stoitsis, E. G. Sifakis, T. Balkizas, and K. S. Nikita. Using the Hough transform to segment ultrasound images of longitudinal and transverse sections of the carotid artery. Ultrasound Med. Biol. 33:1918–1932, 2007.

Gottlieb, R. H., W. B. Robinette, D. J. Rubens, D. F. Hartley, P. J. Fultz, and M. R. Violante. Coating agent permits improved visualization of biopsy needles during sonography. Am. J. Roentgenol. 171:1301–1302, 1998.

Hata, N., J. Tokuda, S. Hurwitz, and S. Morikawa. MRI-compatible manipulator with remote-center-of-motion control. J. Magn. Reson. Imaging 27:1130–1138, 2008.

Hendriks, C., M. van Ginkel, P. Verbeek, and L. J. van Vliet. The generalized radon transform: sampling, accuracy and memory considerations. Pattern Recognit. 38:2494–2505, 2005.

Hong, J., T. Dohi, M. Hashizume, K. Konishi, and N. Hata. An ultrasound-driven needle insertion robot for percutaneous cholecystostomy. Phys. Med. Biol. 49:441, 2000.

Hong, J. S., T. Dohi, M. Hashizume, K. Konishi, and N. Hata. A motion adaptable needle placement instrument based on tumor specific ultrasonic image segmentation. In: Medical Image Computing and Computer-Assisted Intervention MICCAI 2002. Lecture Notes in Computer Science, vol. 2488. Berlin: Springer, pp. 122–129, 2002.

Hongliang, R., and P. Dupont. Tubular enhanced geodesic active contours for continuum robot detection using 3D ultrasound. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 2907–2912, May 2012.

Lucas, B., and T. Kanade. An iterative image registration technique with an application to stereo vision. In: Proceedings of the International Joint Conference on Artificial Intelligence, pp. 674–679, 1981.

Mascott, C. R. Comparison of magnetic tracking and optical tracking by simultaneous use of two independent frameless stereotactic systems. Neurosurgery 57:295–301, 2005.

Mehrabani, B., V. Tavakoli, M. Abolhassani, J. Alirezaie, and A. Ahmadian. An efficient temperature estimation using optical-flow in ultrasound B-mode digital images. In: 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS 2008), pp. 86–89, Aug 2008.

Neshat, H., and R. Patel. Real-time parametric curved needle segmentation in 3D ultrasound images. In: 2nd IEEE RAS EMBS International Conference on Biomedical Robotics and Biomechatronics (BIOROB), pp. 670–675, 2008.

Neubach, Z., and M. Shoham. Ultrasound-guided robot for flexible needle steering. IEEE Trans. Biomed. Eng. 57:799–805, 2010.

Novotny, P., J. A. Stoll, N. V. Vasilyev, P. J. del Nido, P. E. Dupont, T. E. Zickler, and R. D. Howe. GPU based real-time instrument tracking with three-dimensional ultrasound. Med. Image Anal. 11:458–464, 2007.

Qiaoliang, L., N. Dong, Y. Wanguan, C. Siping, W. Tianfu, and C. Xin. Use of optical flow to estimate continuous changes in muscle thickness from ultrasound image sequences. Ultrasound Med. Biol. 39:2194–2201, 2013.

Sekhar, S., W. Al-Nuaimy, and A. Nandi. Automated localisation of retinal optic disk using Hough transform. In: 5th IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI 2008), pp. 1577–1580, May 2008.

Shi, J., and C. Tomasi. Good features to track. In: 1994 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 593–600, 1994.

Tanaka, K. A thermomechanical sketch of shape memory effect: one-dimensional tensile behavior. Res. Mech. 18:251–263, 1986.

van Stralen, M., K. Leung, M. Voormolen, N. de Jong, A. van der Steen, J. Reiber, and J. Bosch. Time continuous detection of the left ventricular long axis and the mitral valve plane in 3-d echocardiography. Ultrasound Med. Biol. 34:196–207, 2008.

Vrooijink, G., M. Abayazid, and S. Misra. Real-time three-dimensional flexible needle tracking using two-dimensional ultrasound. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pp. 1676–1681, 2013.

Yang, B., U. Tan, A. McMillan, R. Gullapalli, and J. Desai. Towards the development of a master-slave surgical system for breast biopsy under continuous MRI. In: 13th International Symposium on Experimental Robotics, Quebec City, Canada, June 2012.

Acknowledgments

The project described was supported by Award Number R01EB008713 from the National Institute of Biomedical Imaging and Bioengineering. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Biomedical Imaging and Bioengineering or the National Institutes of Health.

Additional information

Associate Editor Dan Elson oversaw the review of this article.

Cite this article

Ayvali, E., Desai, J.P. Optical Flow-Based Tracking of Needles and Needle-Tip Localization Using Circular Hough Transform in Ultrasound Images. Ann Biomed Eng 43, 1828–1840 (2015). https://doi.org/10.1007/s10439-014-1208-0

DOI: https://doi.org/10.1007/s10439-014-1208-0