Abstract

This article presents a translational model of curricular design in which findings from investigating learning in university BME research laboratories (in vivo sites) are translated into design principles for educational laboratories (in vitro sites). Using these principles, an undergraduate systems physiology lab class was redesigned and then evaluated in a comparative study. Learning outcomes in a control section that used a technique-driven approach were compared with those in an experimental section that embraced a problem-driven approach. Students in the experimental section demonstrated greater learning gains even when they were tasked with solving complex, ill-structured problems at the bench. The findings suggest the need to develop new, more authentic models of learning that better approximate practices in industry and academia.


Introduction

As a hybrid discipline, biomedical engineering integrates the tools, knowledge, and methods from engineering with the sciences toward the development of healthcare applications. While this integration is critical to advances in disease prevention, detection, and treatment, it creates unique challenges for BME educators. Unlike other post-secondary engineering courses of study with well-practiced educational traditions codified in an assortment of textbooks, BME is still in the throes of developing those traditions both in the classroom and in the instructional laboratory, which is the focus of this article.

Laboratory courses in engineering education can serve many purposes: the development of technique and experimental skills, discovery, independent learning, and application of theoretical principles to real, tangible problems. In BME, the laboratory takes on special meaning. As Perreault, Litt, and Saterbak observed, such courses “provide students with the opportunity to observe how the physical world compares with the simplified quantitative descriptions taught in the classroom”.22 Indeed, the laboratory can be a place where students practice integrating the quantitative skills of engineering with the wet world of the bench. The question we address in this article is how best to design, structure and implement a rich and deep learning experience in the BME laboratory. How much structure should we impose and how bold should we be in setting goals for our students? Where should we take our inspirations and derive principles for the design of these “synthetic” laboratories?

In this article, we argue for a translational approach to the design and development of undergraduate laboratories. Such an approach entails studying complex in-the-world learning environments (in vivo sites) and then appropriately translating findings into design principles3,4 for classrooms (in vitro sites). Science educators and engineering educators (see Linsenmeier et al.16 and Flora and Cooper9) have also looked to authentic sites of science for inspiration in developing the inquiry approaches first advocated in the AAAS Benchmarks for Science Literacy.1 This approach to science instruction called for students to emulate the cognitive practices of real-world scientists. Thus, in inquiry classrooms, students learn to design and conduct experiments to answer questions, often of their own choosing, as opposed to traditional laboratories where they follow procedures to arrive at predetermined results. Subsequent studies have indicated that inquiry-based approaches in laboratory science courses can improve students’ ability to design experiments and analyze data,18 enhance conceptual knowledge,28 and increase interest in subject matter.12 Moreover, learning scientists more generally have an established tradition of looking at the reasoning practices of experts in a field as a starting point for establishing learning goals and anticipated developmental trajectories. In the realm of physics learning, for example, studies comparing the reasoning and problem solving of experts with those of novices6,11,14 have been important in identifying misconceptions and in developing curricular reform. What sets our translational approach apart from that of our learning-science colleagues is that, in addition to determining the expert cognitive practices that drive research in science and engineering, we have focused on the learning processes of graduate and undergraduate researchers in sites of authentic research activity.
This is in line with situated learning10 approaches that look not just at the “what” of instruction but also at the “where and how.” Numerous studies15 have demonstrated that context profoundly impacts cognition. Our approach therefore tries to account for both the cognitive practices of experts and the environment in which these practices take place.

In this article, we offer design principles for instructional laboratories in biomedical engineering derived from two NSF-funded studies. We then report on how we used these principles to redesign a laboratory course and on a preliminary study comparing learning effects in this new model with those in a traditional technique-driven version of the same course. The findings attest to the potential for new educational models to enhance learning outcomes in laboratory settings, but also reveal the challenges of moving from technique-driven to more problem-driven approaches.

Design Principles for Laboratory Environments

Design principles for our new models of instructional laboratories derive from two education-focused projects addressing interdisciplinary learning and cognition. The first project was a six-year study undertaken in three university research laboratories to investigate how learning unfolds and is supported in these complex sites of knowledge-making.21 Unquestionably, research laboratories are central to all graduate education in science and engineering, and numerous survey studies of undergraduate research experiences attest to the positive impacts of working in a lab,25,29 particularly for women and minorities.17 These authentic research settings are where undergraduates’ identity trajectories in science and engineering are often strengthened and where career paths that include graduate school come into view, which is one reason the National Science Foundation has pushed to create more opportunities for post-secondary students to participate in and be mentored through the Research Experiences for Undergraduates (REU) program. And while the number of undergraduate research opportunities is increasing, there will never be enough labs to accommodate more than a fraction of potential BME practitioners. Thus, our study of the research labs sought to identify ecological features of laboratory settings that are conducive to documented positive learning experiences, in the hope of replicating those features in classroom settings. Our research also addressed the more cognitive issue of interdisciplinary integration across engineering and science, an integration essential to innovation in biomedical engineering.

From more than 148 interviews with members of two BME research labs, sustained observation of lab work over a three-year period, and attendance at lab meetings, PhD proposals and defenses, mentoring meetings, and laboratory tours for visitors, we distilled five principles used to inform the design of new models for instructional laboratories. Some may question whether these principles are generalizable to all BME research settings; we do not dispute that each PI has a certain style and way of working that profoundly influences how work and learning are accomplished in the lab. Nevertheless, the fact that we found the same principles at work in two very different lab settings (tissue engineering and neuroengineering) suggests that the nature of the work itself, discovery and innovation in interdisciplinary engineering, demands a certain work/learning configuration. These principles represent the social–cultural–cognitive mechanisms that make it possible for undergraduates and new PhD students to find a foothold and then flourish in these rich, complex learning factories. While some may challenge our efforts to replicate the technology- and knowledge-rich features of a research laboratory in an undergraduate classroom, we contend that it is just these kinds of open-ended, ill-constrained, failure-imbued learning experiences that truly offer a glimpse of the real work done in the field to advance healthcare. The principles are as follows:

Learning Is Driven by the Need to Solve Complex Problems

Knowledge building in the labs is driven by the need to solve problems. Much work goes into continually re-articulating the larger problem and determining tractable pieces through which progress can be made. In working toward solutions, multiple questions need to be addressed, multiple forms of activity need to be undertaken, and data need to be generated, gathered, and analyzed in multiple ways. The complex, ill-defined nature of the problems promotes the distribution of problem-solving activities across a community of researchers. With so many problems and sub-problems to be solved, every lab member, even the greenest undergraduate, can find a niche from which s/he can begin to contribute to the larger problem solving of the lab.

Organizational/Social Structure Is Largely Non-Hierarchical

Knowledge building on the frontiers of science, and especially (though not exclusively) at the crossroads of two or more disciplines, is most often distributed across individuals while accruing individually. The lab director has the big picture in mind, but s/he does not have all the knowledge, skills, or expertise to answer all the questions or resolve all the problems. Actually, no one does. Rather, it is the group as a whole that possesses the expertise to move forward. This means that the oft-studied distinction between novice and expert is of less importance here. In a sense, everyone is a novice, which makes the new lab member’s novice status unremarkable. What is important is how, in this nonhierarchical setting, the newcomer can envision herself as a major group player, perhaps even the expert in her particular domain or part of the greater problem space. This is a great motivator for the learner to seek out more and more venues for developing knowledge and a scientific identity.

Learning Is Relational

Conducting research requires lab members to be agents in forming relationships. In our investigation of the research labs, we witnessed researchers forming relationships both with people and with the technological artifacts they design and build to carry out their research.19 In the instructional design project, we focused on the former. Research requires developing interdependence as well as independence. As we saw in the lab studies, a great deal of lab knowledge resides in the heads, experiences, and notebooks of the various members. As repositories of scientific and engineering know-how, senior lab members become identified with specific lab devices, techniques, research questions, and evolving protocols and assays. Newcomers to the lab need to develop relationships with these people, learning to ask questions and seek advice to gain access to this knowledge. In developing these relationships, they learn about the senior lab members’ experiences with particular devices and the requisite aspects of lab history that are often poorly chronicled elsewhere. With strong social relationships comes the potential for a wealth of problem-solving capacity and knowledge acquisition. But the lab newcomer has to develop the habit of first identifying and then going to the people in the know. Forming relationships means being part of a team, collaborating with others toward both local and global goals.

Multiple Support Systems Foster Resilience in the Face of Impasses and Failures

The social aspect of learning is critical for another reason. Learners need to understand that setbacks, impasses, and uncertainty are constant companions in doing the work of science, and that these can and do create frustration. Cells die, devices fail, and the data collected do not validate a hypothesis. These realities of research can be very hard to handle, especially when impasses and failures become a pattern. In the face of such setbacks, learners need a sense that they are not alone, that their failure is not singular but rather a feature of lab life. This sense of membership mitigates the feeling of futility that could otherwise pervade the community. Having relationships with others in times of failure affords two things: a point of commiseration and solidarity, and potential partners for problem solving. Without the close social fabric of the lab, failure would be experienced in isolation. Instead, “failure” in the lab becomes an opportunity to deepen one’s understanding of the project under investigation, the nature of research, and the ethos of the community. While failure is hard, it can be very instructive and even a profound starting point for new realizations and learning.

Taken together, the principles derived from the study of learning in research settings describe an educational model we characterize as agentive.20 In an agentive learning environment, students are agents of their own learning and determine their own course of action. In this sense, they actively construct understanding and knowledge as they work through problems. In the research labs, learners enlist and interact with people and with laboratory tools and devices. They develop strategies for solving the problems that arise, for dealing with impasses and failure, and for seeking help when they experience difficulties. They use their hands and minds to solve open-ended, ill-constrained, and ill-structured problems. They build when required to do so; they do research and then use this research to guide decision-making. In an agentive learning environment, students need to become self-directed, empowered learners and problem solvers who draw on previous learning experiences in the current learning situation, all features of what is called “constructivist learning.”7,23,27

A Comparative Study of Problem-Driven vs. Technique-Driven Instructional Laboratories

To assess the feasibility of translating principles of agentive learning environments to BME laboratory instruction, we conducted a comparative study of two sections of the Systems Physiology I lab, the most technique-driven lab in the Georgia Tech BME laboratory sequence. Before the study, the lab, developed in 2002 and retained in this form for the control section, very much mirrored a cell biology laboratory. A legacy course developed at the inception of the undergraduate curriculum, it followed the biology model of technique-driven bench top activity. Students practiced cell-based techniques such as Western blotting or PCR by following protocols and keeping lab notebooks of their procedures and outcomes; the course objective was to introduce students to analytical methods used in cell biology. The lab culminated in a more open exercise in which students had to propose two of the techniques they had practiced as tools for answering a question they had developed from the literature.

Prior to the reported study, it had been hypothesized that this lab was not serving the development of biomedical engineering skills. The learning outcomes for the course were poorly specified. Students were learning laboratory technique and how to take measurements and interpret data from biological systems, but they were not gaining experience in designing experiments or solving problems. There was also evidence from testing that students were not developing an understanding of the mechanisms behind the technologies. To better understand how students were participating in the activities as the class unfolded over time, from module to module, we undertook a qualitative study using observation of student pairs and open interviewing. The collected data were then coded and sorted for emerging and recurring themes. In our estimation, tracking a pair of teams over the term as they worked, interacting with each other and with the TA, was the best way to capture the “lived” experiences of the students, something that more quantitative instruments cannot provide. A survey could have been developed from this first study to generate more quantitative data, but we did not do so. Test scores, likewise, would not have been appropriate for capturing the kinds of insights that the qualitative approach was able to yield.

From a qualitative investigation of the lab over a semester, we developed a better understanding of how the various lab activities were unfolding so as to support or discourage learning. This investigation entailed the generation of extensive field notes derived from continuous observations of student pairs at work on the bench tops as well as assessment of their lab notebooks, presentations, tests, and final projects. Students were also interviewed informally as they worked in the lab. Data collected and then analyzed during the term suggested four major failings of this laboratory model of learning.

1. The design of the lab made it possible for students to follow the various procedures, such as a Western blot, without fully understanding the underlying mechanisms of the test itself. This was particularly evident in the lab reports when students attempted to figure out where their experiments had gone wrong. According to the lab director, “…the explanations of what went wrong usually went something like—the TA, he told me wrong or the moons weren’t lined up… and just some crazy explanations, and they really didn’t have any scientific merit.” It was apparent that with such shallow understanding of the techniques, students would be unable to generalize use of these tests to other situations or to trouble-shoot when they failed. They were “mindlessly” going through the procedures without understanding the scientific basis of the established protocols.

2. From the student perspective, there was no coherence between the labs. Each lab was experienced as an isolated set of procedures that had little or no relationship to the lab of the previous or following week. While an expert might have been able to make the links, the students were not. Thus, the labs were experienced as a series of disjointed physical bench top tasks that simply needed to be completed. The labs were also not coordinated with the lecture course they were attached to, so there was little opportunity for students to make connections between the procedures and the course content.

3. Because students worked in pairs, many conversations were unnecessarily repeated: the teaching assistants did not use questions arising in one pair to prompt a whole-class discussion. The pairwise configuration of parallel tasks was not conducive to a sense of the whole lab as a learning community, and the instructional staff missed opportunities to turn pair-based questions into teachable moments for the whole class.

4. The lab structure failed to clarify or bring home the connection between the various techniques and their practical uses in industry or research. Such a failing led students to dismiss the bench top work as so much busy work.

The redesign of the lab using agentive principles addressed three of the four problems: shallow understanding, the lack of a learning community in the lab, and the missing connection to practical uses of the techniques in authentic settings. By situating the desired learning outcomes in the context of problems to be solved, we hypothesized that learning would be deeper, that the lab community could be leveraged for better learning, and that students would gain a better sense of the global applications of the techniques. The problem of coherence between labs was not addressed in the redesign, as we wanted the new model to replicate the sequencing of activities found in the legacy model.

Experimental Design

To investigate whether a problem-driven instructional lab would yield the desired learning outcomes, a comparative study was conducted in the spring of 2007. The specific research questions for the study were:

-

How do the learning outcomes compare across the two sections of the same course?

-

How do students experience this new model? Is there a difference?

-

How do the lab manager and TAs experience this new model? Do they find it feasible in terms of the time allotted for the problem modules, the lab resources required, and the instructional support needed?

To address these questions, two sections of Systems Physiology I were run in parallel by the same lab director but with different teaching assistants. The content of the two sections was kept constant to the extent possible, but the mechanisms through which students engaged the material differed. To illustrate, we analyze the differences between the two models of instruction in the lab module that focused on protein analysis. These models differed along several dimensions: lab framing, instructions, supplies, equipment, and student configuration. By lab framing, we refer to the initial prompt that positioned the students in particular ways and set the stage for the lab to follow. Instructions refer to the scripted activities required of the students in the labs. Supplies and equipment are self-evident: what students work with and use. Student configuration refers to the formats of interaction required of students as they work. Comparing the two sections along these dimensions reveals marked differences in how students engaged the material (Table 1).

In the control section, the lab was framed as an “experiment”, comprising a chronology of tasks, “first…then” and a “series of steps”. The students were positioned as “observers”, “performers”, and “visualizers”. The framing introduced the technical terminology of “electrophoresis” and “electrophoretic mobilities”. Finally, the value of such a technique was stated for the student. Each team then followed the numerous intricate steps of the Western blot protocol provided in the lab packet, using the supplies and equipment specified in the instructions. Students worked in pairs throughout the lab, following the steps and keeping individual lab notebooks.

In contrast, the experimental lab was framed as a problem, with the students positioned as problem-solvers charged with identifying an unknown serum. They received no instructions on how to proceed. They did receive references to some relevant literature and a list of available supplies and equipment they could use. The list contained more than they needed, which required them to systematically evaluate the appropriateness of all supplies and equipment and then to eliminate items as required. In addition, they had to limit the possible negative and positive controls to four from a longer list, again making decisions in advance about how they were going to set up their identification process. Another feature of the experimental section was letting student groups learn through failure. Even when the lab director knew a group was headed down the wrong course, he let them proceed as long as the group had a sound reason. After getting unanticipated results, groups had to revisit their work and try to understand where they had gone wrong. Students worked in teams of five, with one student assuming the lead role for each lab. Other roles included a librarian, who provided feedback to the instructor in the form of weekly progress reports; a tool smith, who was tasked with learning the utility and use of the equipment and instruments required to complete given tasks; and a statistician, who provided input into the design of experiments and statistical analysis.

Throughout the semester, uniform assessment strategies were applied in both the control and experimental sections. Data from the comparative assessment comprised:

-

Post-lab quizzes

-

Student lab notebooks of their experiments

-

Final project presentations and reports

-

End-of-term student survey

-

End-of-term comprehension test

This comparative assessment was statistically analyzed with Student’s t-test for the post-lab quizzes and the final project presentations and reports, and with Fisher’s exact test for the end-of-term student survey and comprehension test.2 Questions for the post-lab quizzes were selected based on the knowledge expected to be gained from each lab module and reflected a generalized understanding of the topics covered in that module. The average score for each of the two modules was normalized to one and compared by Student’s t-test. For the final project, students in the experimental section were grouped into six teams of two students and one team of three students, similar to the control section (n = 14). Final presentations were scored independently by four graders, and the four scores were averaged to give each final presentation score; one grader graded all reports for the course, which were written individually (n = 30). The end-of-term student survey8 covered student perceptions of the class learning goals, and responses were scored on a five-point Likert scale. As part of the end-of-term survey, a comprehension test (which did not count toward the student grade) was given to each section; it comprised questions in three categories: definitions, equipment and instrumentation, and experimental design. The definitions section comprised four questions that tested students’ depth of understanding. For example, students had been exposed to both the terms “histology” and “immunology”; they were asked to define the term immunohistochemistry, a combination of the two. Raters classified student responses into three levels: incorrect, partially correct, and fully correct. Partially correct answers were defined as those that a rater could perceive to be correct but that did not convey a full depth of understanding.
For example, for the term immunohistochemistry, a partially correct answer would be one that described the use of antibodies on a histological section but failed to identify that a specific antigen is targeted for detection. Similarly, two questions in the equipment and instrumentation section required a deeper understanding of equipment and instrument usage by asking students to identify an instrument that could quantify DNA concentration, something they had not specifically done during the course, although they had quantified protein concentrations and understood both the Beer–Lambert law and DNA labeling for visualization. Finally, one question challenged students to design an experiment.
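The quiz and presentation comparisons above rest on an equal-variance two-sample Student’s t-test applied to normalized scores. The Python sketch below illustrates that computation under stated assumptions: “normalized to one” is taken to mean dividing each raw score by the maximum possible score, and the p-value is obtained by numerically integrating the t density (Simpson’s rule) rather than by the JMP routine the authors actually used. The function names and scores are hypothetical.

```python
import math
from statistics import mean, variance


def normalize(scores, max_score):
    """Rescale raw scores to the 0-1 range (assumed meaning of 'normalized to one')."""
    return [s / max_score for s in scores]


def t_pdf(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: 1 - 2 * (area under the density from 0 to |t|), via Simpson's rule."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3)


def students_t_test(a, b):
    """Independent two-sample Student's t-test with pooled (equal) variance."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, df, two_sided_p(t, df)


# Hypothetical quiz scores out of 10 points for each section.
control = normalize([7, 8, 6, 9, 7, 8, 7], 10)
experimental = normalize([8, 7, 9, 8, 6, 9, 8], 10)
t, df, p = students_t_test(control, experimental)
print(f"t = {t:.3f}, df = {df}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

The pooled-variance form matches the classic Student’s t-test named in the text; if the two sections had clearly unequal score variances, Welch’s variant would be the usual alternative.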

Using a blinded procedure, four independent reviewers (the lab director; two graduate student teaching assistants who were not linked to the study but had previously been TAs for the course; and an independent cell and molecular biologist) categorized each student response as incorrect, partially correct, or fully correct. Reviewers were given no scoring instructions for the first two question categories (definitions, and equipment and instrumentation). For the experimental design section, reviewers were asked to ensure that students had selected appropriate positive and negative controls for their study. The statistical program JMP was used for data analyses. P-values < 0.05 and < 0.1 were considered statistically significant for Student’s t-test and Fisher’s exact test, respectively. A multiple kappa statistic was computed to gauge inter-rater reliability for the comprehension portion of the final survey.
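Fisher’s exact test is usually presented for 2 × 2 tables, whereas the comprehension test compares two sections across three response levels (incorrect, partially correct, fully correct). A standard generalization to a 2 × k table is the Freeman–Halton extension, sketched below in Python. This is an assumption about what JMP computed, not a description of the authors’ software: the sketch enumerates every table with the observed row and column totals, weights each by its hypergeometric probability, and sums the probabilities of tables no more likely than the observed one. The counts in the example are hypothetical.

```python
from math import comb


def table_prob(row1, col_totals):
    """Hypergeometric probability of a 2 x k table, given its first row and fixed margins."""
    n = sum(col_totals)
    ways = 1
    for a, c in zip(row1, col_totals):
        ways *= comb(c, a)
    return ways / comb(n, sum(row1))


def first_rows(col_totals, remaining):
    """Yield every first-row vector that fits the column totals and sums to `remaining`."""
    if len(col_totals) == 1:
        if remaining <= col_totals[0]:
            yield (remaining,)
        return
    for a in range(min(col_totals[0], remaining) + 1):
        for rest in first_rows(col_totals[1:], remaining - a):
            yield (a,) + rest


def fisher_exact_2xk(row1, row2):
    """Two-sided Fisher-Freeman-Halton exact p-value for a 2 x k contingency table."""
    col_totals = [a + b for a, b in zip(row1, row2)]
    p_obs = table_prob(row1, col_totals)
    p = 0.0
    for candidate in first_rows(col_totals, sum(row1)):
        prob = table_prob(candidate, col_totals)
        # Small relative tolerance so ties with the observed table are not lost to rounding.
        if prob <= p_obs * (1 + 1e-7):
            p += prob
    return min(p, 1.0)


# Hypothetical counts of (incorrect, partially correct, fully correct) responses.
control = [6, 5, 3]
experimental = [1, 4, 10]
p = fisher_exact_2xk(control, experimental)
print(f"p = {p:.4f}, significant at the 0.1 threshold: {p < 0.1}")
```

The same function applies unchanged to a 2 × 5 table of Likert responses from the end-of-term survey; for a 2 × 2 table it reduces to the familiar two-sided Fisher’s exact test.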

Findings

Post Lab Quizzes

The final normalized comparison of the post-lab quizzes did not yield significant differences between sections (Fig. 1). This finding was reassuring in that we did not cause undue harm in the experimental section, given the very unstructured and ill-constrained nature of the problems.

Final Project/Presentations

Students in both the control and experimental sections were given identical instructions to propose a hypothetical experiment using techniques learned in the course. As shown in Fig. 2, oral presentation scores for the experimental section (gray bars) were significantly higher than those for the control section (white bars), as measured by Student’s t-test, p < 0.05 (n = 14 for presentations; n = 30 for reports).

It is not clear why the oral presentation scores were, on average, higher for the experimental section. One possibility is that the oral format allowed the scorers to gain better insight into the depth of student understanding.

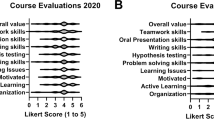

End-of-Term Survey

A final survey assessing student perceptions was administered after the completion of the term.8 Questions yielding a statistically significant difference are shown in Fig. 3; significance was defined as a p-value lower than 0.1. Students in the experimental section perceived themselves as better able to identify strategies to address lab objectives and to learn from their failures, while those in the control section perceived themselves as better able to execute the lab procedures and felt more confident in the lab.

End-of-term student survey probing student development in the following areas: (a) identifying critical problem features and attack strategies, (b) learning from failure, (c) executing lab techniques, and (d) confidence in a lab setting with lab equipment. The frequency of responses is shown as grayscale bars on a five-point Likert scale, where “strongly agree” corresponds to white and “strongly disagree” to black bars. The percentage of student responses in each category on a per-section basis is enumerated within the bars. Bars without this value represent one response, or ~7% of the section’s responses. There was a statistically significant difference between the control and experimental sections for each of the above questions (p < 0.1).
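The caption’s labeling convention (per-section percentages printed inside each bar, with single-response bars left unlabeled) is a small piece of arithmetic: a single response is about 7% of a section of 14 or 15 students (1/14 ≈ 7.1%, 1/15 ≈ 6.7%). The section sizes are inferred from that figure rather than stated. The Python sketch below reproduces the convention for one section’s Likert counts. The function name, the counts, and the rounding to whole percentages are assumptions for illustration.

```python
def bar_labels(counts, decimals=0):
    """Percentage label for each Likert category of one section.

    counts[i] is the number of students choosing category i
    (e.g. ordered from "strongly agree" to "strongly disagree").
    Categories with zero or one response get an empty label,
    following the convention described in the figure caption.
    """
    n = sum(counts)
    labels = []
    for count in counts:
        pct = 100 * count / n
        labels.append(f"{pct:.{decimals}f}%" if count > 1 else "")
    return labels


# Hypothetical responses of one 14-student section to one survey item.
section_counts = [5, 4, 3, 1, 1]
print(bar_labels(section_counts))  # ['36%', '29%', '21%', '', '']
```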

Comprehension Test

As a gauge of inter-rater agreement, a multiple kappa coefficient was calculated to be 0.27, suggesting only modest agreement between raters. Better instruction prior to blinded rating, along with greater consistency in the raters’ backgrounds, would most likely improve this agreement. Using all rater data, the experimental section performed statistically better than its control counterpart in each question category (Fig. 4). This finding suggests that, despite the varied backgrounds of the raters, students in the experimental section could better apply what they had learned in the laboratory.
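The text reports a “multiple kappa” of 0.27 for four raters sorting responses into three categories, but it does not say which multi-rater statistic JMP computed. Fleiss’ kappa is one widely used choice, and the Python sketch below assumes it. Each row of the input holds, for one student response, how many raters placed it in each category. The example counts are hypothetical. On the common Landis and Koch benchmarks, values between 0.21 and 0.40 are read as “fair” agreement.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for multiple raters assigning subjects to nominal categories.

    counts[i][j] is the number of raters who put subject i in category j;
    every subject must be rated by the same number of raters.
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each subject needs the same number (>= 2) of ratings")
    total = n_subjects * n_raters
    n_categories = len(counts[0])
    # Share of all ratings that fell into each category.
    p_cat = [sum(row[j] for row in counts) / total for j in range(n_categories)]
    # Observed agreement per subject: fraction of rater pairs that agree.
    p_subj = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1)) for row in counts]
    p_bar = sum(p_subj) / n_subjects
    p_exp = sum(p * p for p in p_cat)  # agreement expected by chance
    return (p_bar - p_exp) / (1 - p_exp)


# Hypothetical: 4 raters; categories (incorrect, partially correct, fully correct).
ratings = [
    [4, 0, 0],
    [2, 2, 0],
    [0, 3, 1],
    [1, 1, 2],
    [0, 0, 4],
    [0, 2, 2],
]
print(f"Fleiss' kappa = {fleiss_kappa(ratings):.2f}")
```

Because partially correct answers sit between the other two levels, a weighted or ordinal agreement measure would be a reasonable alternative. Fleiss’ kappa treats the three levels as unordered.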

End-of-term comprehension test examining students’ ability to apply acquired knowledge in three areas: (a) definitions, (b) instrumentation, and (c) experimental design. Student responses, correct (white), partially correct (dark gray), and incorrect (black), were evaluated by Fisher’s exact test. Statistically significant differences were found in each question category (p < 0.1). The percentage of student responses in each category on a per-section basis is enumerated in the bars.

Discussion

In this study, we sought to discover how participation in an agentive learning laboratory would affect understanding and skills development. We also wanted to explore the implementation and management challenges associated with this new model of laboratory instruction. For post-lab quizzes and end-of-term project reports, the two groups demonstrated no significant difference even though the instructional treatments in the two sections differed greatly. As noted earlier, this finding was reassuring in that a learner-centric, problem-driven environment could produce learning outcomes comparable to those of more traditional methods. As this was the first iteration of designing and implementing this kind of environment, it is possible that a second, refined iteration of this model could demonstrate greater learning gains. This finding of comparable learning outcomes between a more traditional transmission or technique-driven approach and a constructivist educational approach aligns with other comparative studies of problem-driven learning5,24,26 in which a post-treatment test showed no significant difference in learning outcomes.

As for the more positive learning outcomes the experimental group demonstrated in the oral presentations and the end-of-term comprehension test, we hypothesize that the very nature of the constructivist learning environment may account for the difference. Drawing on a series of experiments on problem-driven learning at the undergraduate level, Schwartz and Bransford24 propose two mechanisms, knowledge assembly and discovery as discernment, that may account for superior learning. Knowledge assembly works as follows:

Analyzing the cases encourages students to assemble relations that connect the case information to other pockets of prior knowledge. Conceivably this elaboration increases the number of possible retrieval paths (connections) to target concepts. The multiple retrieval paths increase(s) the chances of recovering the relevant concepts.24

In other words, when working on an ill-constrained problem rather than following predetermined steps (determining what the problem requires, what the list of supplies implies, and how a lab procedure can identify a species of animal), students need to assemble information from multiple sources and link it to prior knowledge. Both are integration activities that enhance retrieval and explanation. The discovery as discernment view contends that deliberation improves the encoding of knowledge for later access by promoting superior analysis and pattern recognition. More specifically, “individuals learn well when they have generatively discerned features and structures that differentiate relevant aspects of the world” (p. 493). The species identification problem called for just such discernment in determining the mystery animal. We further hypothesize that this need for discernment also explains the experimental group's greater appreciation of failure as a learning mechanism, as well as its grasp of experimental method. At a moment of failure or impasse, the researcher is called upon to mindfully discern what may have caused the difficulty, to reconsider the experimental design, and to retool for the next iteration. While some might argue that this is more appropriate for graduate students, if it enhances learning for undergraduates, perhaps we should support what we know to be the case in research settings: addressing failure is the starting point for learning.

We next consider the differences in student perception across sections: the control group felt more confident in their lab technique than the experimental group did. This confidence, however, was not grounded in any greater success with the Western blot; in fact, errors in technique were commonplace in both sections. Yet the control group derived a greater sense of confidence even as they were making errors. In this technique-driven lab, poor technique resulting in a failed procedure was merely to be noted and reflected upon. In the experimental group, failed technique had consequences: the team could not solve the problem. The control group was therefore not really pushed to recognize that their technique was less than perfect. Alternatively, since the perceived sole purpose of the control labs was the development of technique rather than problem solving, students may have felt pushed to confirm their mastery of these skills. In any event, even though the lab director and TAs perceived no difference in technique between the sections, self-perceptions differed across the experimental and control groups.

The greater expected time commitment for the experimental section was addressed by removing material from one of the nine modules of the control section. In the end-of-term student perception survey, one question probed this time commitment: “Compared to other courses, the amount of effort required of me as a team leader and individual student was….” This question did not provide enough resolution to discern a difference between groups, as students in both sections unanimously reported a much higher time commitment than in other courses. A survey question better suited to a laboratory setting might tease out differences between the groups.

Implementing and managing a problem-solving educational lab proved very trying at times for the lab director and TAs, for several reasons. A first challenge is determining team composition for optimal collaboration and learning. In this study, the three teams in the experimental group were self-selected, resulting in one highly efficient and effective team, one highly dysfunctional team, and one team somewhere in between. One factor contributing to the success of the first team was that two of its members had prior experience in university research labs. They had already been acculturated into the open lab setting and had skills, such as pipetting, that members of the less successful teams lacked. On the low-performing team, two members had significant difficulty working on a team even though they had had many prior experiences with collaborative work in other classes. Compounding this team's difficulties was an early split into two subteams, together with these two members' genuine problems working as part of a team. Leadership on this team was a continual problem, and at one point the lab director had to take over leadership tasks to model the kinds of behaviors he was looking for. By the end, there was little cohesion among the team members, and this was reflected in the quality of their work. In future iterations, it will be important to distribute students so as to create the best possible fit among team members.
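The team-composition problem described above suggests one simple, hypothetical heuristic: deal students with prior research-lab experience round-robin across teams before filling the remaining places, so that no team is left without experienced members. This is an illustration only; the `Student` record, its `prior_research_lab` flag, and `balanced_teams` are our own inventions, and the authors do not describe a specific assignment procedure. The team size of four follows the change planned in the Conclusions.

```python
from dataclasses import dataclass


@dataclass
class Student:
    name: str
    prior_research_lab: bool  # hypothetical flag: has worked in a university research lab


def balanced_teams(students: list[Student], team_size: int) -> list[list[Student]]:
    """Spread experienced students evenly, with no team exceeding team_size."""
    if team_size < 1:
        raise ValueError("team_size must be positive")
    n_teams = -(-len(students) // team_size)  # ceiling division
    teams: list[list[Student]] = [[] for _ in range(n_teams)]
    # Stable sort puts experienced students first, so they are dealt out before anyone else.
    ordered = sorted(students, key=lambda s: not s.prior_research_lab)
    for i, student in enumerate(ordered):
        teams[i % n_teams].append(student)  # round-robin keeps sizes within one of each other
    return teams
```

With 15 students, five of them experienced, and `team_size=4`, this yields four teams of sizes 4, 4, 4, and 3, with experienced students split 2, 1, 1, 1. Real team formation would also need to weigh factors, such as the collaboration difficulties described above, that a single flag cannot capture.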

Conclusions

With this research we were able to demonstrate that creating an agentive learning environment in undergraduate BME laboratories is possible and can yield positive learning outcomes. In future iterations of this lab, we have decided to constrain the problems somewhat more, to reduce the team size from five to four, to move away from assigning roles, and to rearrange certain elements of the course. There is no doubt, however, that taking our inspiration for the design of educational laboratories not from the technique-driven instructional labs typical of the sciences but from real research laboratories in the BME domain has the potential to bring BME as a discipline to the forefront of educational innovation in postsecondary engineering education.

Notes

During our study, the tissue engineering lab hosted approximately 25 members over a two-year period, including summer REU students, graduating PhD students, visiting scholars, and post-docs, while the neurolab generally comprised ten members, though it too saw members come and go. We collected the large number of interviews by repeatedly interviewing selected lab members over time and by interviewing even short-term visitors.

We used a mixed-methods approach to data collection. Prior to any data collection, we obtained IRB approval for the studies. Initially we collected ethnographic data using the techniques of participant observation of all aspects of lab life and work, sustained interviewing over time, artifact collection, and rigorous analysis using data-derived and validated coding schemes. These ethnographic activities and findings focused our cognitive-historical analysis on the devices that drive the work in the labs. Cognitive-historical study involves the in-depth and fine-grained analysis of practices over time spans of varying length, reaching from shorter spans defined by the analyzed activity itself to time spans of historical dimension. The goal of such analysis is to use the historical records as traces of the reasoning and problem-solving practices that led to conceptual shifts and innovation. In our case, we used lab notebooks, early lab papers, grant proposals, and interviews on the design history of artifacts to recover how the salient investigative and reasoning practices had been developed and used by lab members.13

References

American Association for the Advancement of Science. Benchmarks for Science Literacy: Project 2061. New York: Oxford University Press, 1993.

Behravesh, E., B. B. Fasse, M. C. Mancini, W. C. Newstetter, and B. D. Boyan. A Comparative Study of Traditional and Problem-Based Learning Instructional Methods in a Lab Setting. Los Angeles, CA: Biomedical Engineering Society, 2007.

Brown, A. L. Design experiments: theoretical and methodological challenges in creating complex interventions in classroom settings. J. Learning Sci. 2(2):141–178, 1992.

Brown, A. L., and J. C. Campione (eds.). Guided Discovery in a Community of Learners. Cambridge, MA: MIT Press, 1994.

Capon, N., and D. Kuhn. What’s so good about problem-based learning? Cogn. Instruct. 22(1):61–79, 2004.

Chi, M. T. H., P. J. Feltovich, and R. Glaser. Categorization and representation in physics problems by experts and novices. Cogn. Sci. 5:121–152, 1981.

Cobb, P. Theories of mathematical learning and constructivism: a personal view. In: Symposium on Trends and Perspectives in Mathematics Education. Austria: Institute of Mathematics, University of Klagenfurt, 1994.

Fasse, B. B., and E. Behravesh. Exit Survey: 3160. Atlanta, GA: Georgia Institute of Technology, 2007.

Flora, J. R. V., and A. T. Cooper. Incorporating inquiry-based laboratory experiment in undergraduate environmental engineering laboratory. J. Professional Issues Eng. Educ. Pract. 131(1):19–25, 2005.

Greeno, J. G., and Middle School Mathematics through Application Project Group. The situativity of knowing, learning and research. Am. Psychol. 53(1):5–26, 1998.

Halloun, I. Schematic modeling for meaningful learning of physics. J. Res. Sci. Teach. 33(9):1019–1041, 1996.

Howard, D. R., and J. A. Miskowski. Using a module-based laboratory approach to incorporate inquiry into a large cell biology course. Cell Biol. Educ. 4:249–260, 2005.

Kurz-Milcke, E., N. Nersessian, and W. Newstetter. What has history to do with cognition? Interactive methods for studying research laboratories. Cogn. Culture 4(3/4):663–700, 2004.

Larkin, J., J. McDermott, D. P. Simon, and H. A. Simon. Expert and novice performance in solving physics problems. Science 208:1335–1342, 1980.

Lave, J. Cognition in Practice. Cambridge, UK: Cambridge University Press, 1988.

Linsenmeier, R. A., D. E. Kanter, H. D. Smith, K. A. Linsenmeier, and A. F. McKenna. Evaluation of a challenge-based human metabolism laboratory for undergraduates. J. Eng. Educ. 97(2):213–222, 2008.

May, G. An Evaluation of the Research Experiences for Undergraduates Program at Georgia Institute of Technology. Pittsburgh, PA: Frontiers in Education, 1997.

Miles, M. J., and A. B. Burgess. Inquiry-based laboratory course improves students’ ability to design experiments and interpret data. Adv. Physiol. Educ. 27:26–33, 2003.

Newstetter, W., E. Kurz-Milcke, and N. Nersessian. Cognitive partnerships on the bench tops. In: International Conference on Learning Sciences. Santa Monica, CA: AACE, 2004.

Newstetter, W., E. Kurz-Milcke, and N. J. Nersessian. Agentive learning in engineering research labs. In: Proceedings of FIE Conference [CD-ROM]. Savannah, GA: IEEE, 2004.

Newstetter, W., N. J. Nersessian, E. Kurz-Milcke, and K. R. Malone. Laboratory learning, classroom learning: looking for convergence/divergence in biomedical engineering. ICLS Conference 02, AACE, 2002.

Perreault, E. J., M. Litt, and A. Saterbak. Educational methods and best practices in BME laboratories. Ann. Biomed. Eng. 34(2):209–216, 2006.

Piaget, J. The Origins of Intelligence in Children. New York, NY: International Universities Press, 1952.

Schwartz, D., and J. Bransford. A time for telling. Cogn. Instruct. 16:475–522, 1998.

Seymour, E., A. Hunter, S. L. Laursen, and T. DeAntoni. Establishing the benefits of research experiences for undergraduates in the sciences: first findings from a three year study. Sci. Educ. 88(4):493–534, 2004.

Vernon, D. T., and R. L. Blake. Does problem-based learning work? Acad. Med. 68:550–563, 1993.

Vygotsky, L. S. Thought and Language. Cambridge, MA: MIT Press, 1962.

Wallace, C. S., M. Y. Tsoi, J. Calkin, and D. Marshall. Learning from inquiry-based laboratories in nonmajor biology: an interpretive study of the relationships among inquiry, experience, epistemologies, and conceptual growth. J. Res. Sci. Teach. 40(10):986–1024, 2002.

Zydney, A. L., J. S. Bennett, A. Shahid, and K. Bauer. Impact of undergraduate research experience in engineering. J. Eng. Educ. 91(2):151–157, 2002.

Acknowledgments

We gratefully acknowledge the support of the National Science Foundation ROLE Grants REC0106773 and DRL0411825 to Nancy Nersessian and Wendy Newstetter in conducting the research on the laboratories. Our analysis has benefited from data collection and discussions with members of our research group (http://clic.gatech.edu/index.html), especially Aras Bilgas, Ellie Harmon, Yanlong Sun, Lisa Osbeck, and Christopher Patton. We thank the members of the research labs for allowing us into their work environment, letting us observe them, and granting us numerous interviews, and the members of our Problem-Driven Learning Classrooms for their enthusiastic participation in our learning experiments.


Additional information

Associate Editor Larry V. McIntire oversaw the review of this article.

About this article

Cite this article

Newstetter, W.C., Behravesh, E., Nersessian, N.J. et al. Design Principles for Problem-Driven Learning Laboratories in Biomedical Engineering Education. Ann Biomed Eng 38, 3257–3267 (2010). https://doi.org/10.1007/s10439-010-0063-x
