Abstract

A method for assessing Granger causal relationships in bivariate time series, based on nonlinear autoregressive (NAR) and nonlinear autoregressive exogenous (NARX) models is presented. The method evaluates bilateral interactions between two time series by quantifying the predictability improvement (PI) of the output time series when the dynamics associated with the input time series are included, i.e., moving from NAR to NARX prediction. The NARX model identification was performed by the optimal parameter search (OPS) algorithm, and its results were compared to the least-squares method to determine the most appropriate method to be used for experimental data. The statistical significance of the PI was assessed using a surrogate data technique. The proposed method was tested with simulation examples involving short realizations of linear stochastic processes and nonlinear deterministic signals in which either unidirectional or bidirectional coupling and varying strengths of interactions were imposed. It was found that the OPS-based NARX model was accurate and sensitive in detecting imposed Granger causality conditions. In addition, the OPS-based NARX model was more accurate than the least squares method. Application to the systolic blood pressure and heart rate variability signals demonstrated the feasibility of the method. In particular, we found a bilateral causal relationship between the two signals as evidenced by the significant reduction in the PI values with the NARX model prediction compared to the NAR model prediction, which was also confirmed by the surrogate data analysis. Furthermore, we found significant reduction in the complexity of the dynamics of the two causal pathways of the two signals as the body position was changed from the supine to upright. The proposed is a general method, thus, it can be applied to a wide variety of physiological signals to better understand causality and coupling that may be different between normal and diseased conditions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Detection and characterization of interdependence among simultaneously collected signals for coupled systems is one of the most relevant problems in cardiovascular pathophysiology.17 For example, understanding the baroreceptor dysregulation that is implicated in cases such as syncope and orthostatic hypotension provides the impetus for developing a quantitative and noninvasive method for the detection of imbalance,9 as most existing methods require invasive pharmacological interventions. Full characterization of the dynamics involved in coupled systems requires not only accurately detecting the presence or absence of interactions, but also identifying driver–response relationships, so that causality information between the two signals can be obtained. It is hoped that such combined information may provide better understanding of the pathophysiology involved in the baroreceptor imbalance. The issue of detection and quantification of bilateral interactions between variables measured from coupled systems is receiving significant attention in the scientific literature. Applications are ubiquitous in fields ranging from economics11 to climatology20 and to the analysis of biological systems, with particular emphasis on neural26 and cardiac signals. In the latter application, a large body of work has been developed to assess causality in both cardiovascular8,9,22,23,25 and cardiorespiratory25,32 interactions.

Although there has been no universally accepted definition of causality,12 it is commonly accepted that the notion of causality of two events describes to what extent one event is caused by the other. According to this very general concept, different approaches have been proposed to estimate causality in bivariate time series. Within the framework of chaos theory, methods deducing causality from asymmetries of nonlinear interdependency measures have been presented.15,34 However, such asymmetries can reflect differences in the intrinsic dynamics of the two series rather than the actual strength of the causal interactions,30 and thus they can provide misleading information about directionality of the coupling. Other approaches exploit information theory to quantify bilateral interdependences according to a probabilistic notion of causality (i.e., an event X causes another event Y if the likelihood of Y occurring given X is greater than the likelihood of Y occurring alone). These approaches are mostly based on the concept of transfer entropy,35 and have found application in various fields.12 Unfortunately, entropy calculations need the estimation of probability distributions in mixed state spaces, and therefore, require a large quantity of data, which in turn violates the stationarity assumption of the data. The inference of causal interactions has been investigated also within the framework of phase synchronization by assessing bilateral coupling between the phases of self-sustained interacting oscillators.33 This method was subsequently utilized to detect cardiorespiratory interactions in humans.32 However, this approach was designed for narrow-band signals with dominant oscillations, which poses constraints on cardiovascular signals as they exhibit largely broadband characteristics.21,24

An approach to analyze causality between two time series was formalized by Granger in the context of linear regression modeling of stochastic processes.11 According to Granger’s definition, if the prediction error of a time series is reduced by the inclusion of another time series in the regression model, then the second series is said to have a causal influence on the original time series. By reversing the roles of the two series, causal influences in the opposite direction can be quantified. While Granger causality was originally proposed for linear systems,11 it has been recently extended to nonlinear time series analysis.2,5,10 Nonlinear Granger causality methods are appropriate for studying the complex cardiovascular and cardiorespiratory systems, since couplings are most likely to be manifested by nonlinear dynamics.23,25,29,33 The main problem with the current nonlinear Granger causality methods is that they require long data records which preclude their application to short-term cardiovascular data.

In the present study, we propose a new approach to detect the presence of coupling as well as the causality between two signals via the use of a nonlinear autoregressive exogenous (NARX) model. The advantage of using the NARX model instead of other existing nonlinear methods is that our approach provides more compact representation of the system, as few parameters are needed to characterize the system dynamics. In addition, the parameters provide a physical interpretation of the dynamics of the system, as the NARX coefficients represent the linear and nonlinear transfer functions. Furthermore, the NARX model is an appropriate tool to use for short data records. One main caveat with either the linear ARX or NARX model is the proper determination of the model order, as the least squares-based methods are largely dependent on the appropriate initial selection of a model order. We overcome this complication with the use of the Optimal Parameter Search (OPS) Algorithm,16 a recently proposed method for parameter estimation that is able to identify and retain only the significant model parameters despite an a priori incorrectly over-determined model order selection. The OPS has been shown to outperform the widely utilized least squares (LS) approach for parameter estimation in linear ARX models,16 and in most cases is more accurate than other algorithms.14 Moreover, it is especially useful for NARX model identification as it is specifically designed to select only the significant model terms from the initial large pool of model coefficients that is composed of both linear and nonlinear terms. This allows performing nonlinear model identification without resorting to out-of-sample prediction schemes, which in short time series would be difficult.

As described above, the assessment of Granger’s causality involves evaluating the reduction of prediction error of a time series by the inclusion of another time series in the regression model. To quantitatively address the significance of reduction in the prediction error, we used a surrogate data analysis technique. Moreover, the use of surrogate data analysis allows us to investigate the nature of coupling (unidirectional or bidirectional) as well as to quantify the relative strength of coupling.

We examined the feasibility of the proposed approach using various simulation examples designed to exploit the full capabilities of the method, comparing it to the standard LS method based on the use of the Akaike Information model order criterion.1 The approach was then applied to heart rate and systolic arterial pressure time series measured from healthy humans. These signals are ideal for understanding causality and coupling as they are known to operate within a closed loop system.22

Methods

Nonlinear ARX Model Identification

Let x and y be two stationary series of N values, considered, respectively, as input and output of a closed-loop time-invariant nonlinear system described as:

where the coefficients c 0,{a 1(i); b 1(j)} and {a 2(i, j); b 2(i, j); c 2 (i, j)} represent constant (zero-th order), linear (first order), and nonlinear (second order) contributions to y(n), respectively, and e y (n) is the prediction error. The model orders P 1 and P 2 determine the maximum lags for linear and nonlinear autoregressive (AR) influences, respectively, while the maximum lags for linear and nonlinear exogenous (X) effects are indicated by the model orders Q 1 and Q 2.

The NARX model of Eq. (1) can be represented in the matrix form:

where the vector y = [y(1), y(2),..., y(N)]T contains all values of the available realization of the output series, e y = [e y (1), e y (2),..., e y (N)]T is the prediction error series, c 0 is the zero-th order coefficient, a 1 and b 1 are the first-order vector coefficients, and a 2 , b 2 , and c 2 are the second order vector coefficients. The matrices H y and H x contain the P 1 linear AR terms and the (Q 1 + 1) linear X terms, respectively:

The matrix H yy contains the P 2(P 2 + 1)/2 quadratic AR terms given by the product between the terms of the matrix H y . Similarly, the matrix H xx contains the (Q 2 + 1)(Q 2 + 2)/2 quadratic X terms, and the matrix H xy contains the P 2(Q 2 + 1) cross-terms. All these matrices, along with the constant vector h 1 formed by N unitary values, constitute the observation matrix H = [h 1 H y H x H yy H xx H xy ]. Thus:

The vector d of the model coefficients can be estimated through the traditional least squares (LS) approach, by means of the well known equation:

However, the LS algorithm estimates all of the model coefficients d (as determined by the initially chosen model order), whether or not they reflect the true dynamics of the investigated output series. The maximum model order can be determined by variants of the Akaike information criterion (AIC),1 which are appropriate for linear ARX models.

The OPS algorithm is designed to overcome the above limitations by means of a two step procedure, which is described in detail in Lu et al.16 and thus, is only briefly summarized here. First, the linearly independent vectors are selected from the observation matrix H. Being H i the i-th column of H, linear independence between the first two vectors H 1 and H 2 is tested. That is, H 1 is used to fit H 2 by the least squares method and, if the residual of the fit is larger than a preset threshold (in this study, 0.001, assuming normalized data in which the threshold is related to the variance of the data), then H 2 is considered to be independent from H 1. If not, H 2 is discarded, the third vector H 3 is selected, and the procedure is repeated. Once a vector H i is determined to be linearly independent from H 1, it is added to the set of independent vectors that will be used to estimate the linear interdependence of H i+1. This procedure is repeated until all the independent vectors are selected to form a new observation matrix containing only the linearly independent vectors:

where R is the maximum number of selected linearly independent vectors.

The second step of the OPS is to retain only the significant model terms among the pool W of independent candidate vectors. To this end, the output vector is projected onto the set of linearly independent vectors:

where Θ = [Θ(1), Θ(2),..., Θ(R)]T is the vector of the expansion coefficients. The least squares solution of Eq. (7) is:

The vectors of W are rearranged in descending order according to the value of their projection distance, which is defined as:

Finally, only the terms that reduce the error value significantly, that is, the vectors w i = [w i (1), w i (2),..., w i (N)]T for which the projection distance C i is large, are retained. Thus, the final model identification is performed (Eq. 8) after eliminating from the observation matrix W the vectors associated with the lower projection distances.

In the present study, an automatic criterion for the determination of the number of significant candidate vectors was adopted. It was based on retaining the first k vectors having associated cumulative projection distances larger than 95% of the sum of all projection distances, i.e., \( {\sum\nolimits_{r = 1}^k {C_{r} } } \ge 0.95{\sum\nolimits_{r = 1}^R {C_{r} } }. \) This way, the OPS algorithm provides only the k most significant model coefficients, despite an incorrectly over-determined initial model order selection. This feature also allows the method to identify any possible missing model terms that may be present in the signal.

Estimation of Nonlinear Causality

In this section, we show how the definition of Granger causality11 can be applied to NARX models by comparing the degrees of predictability yielded by NAR and NARX models. One approach to determine the degree of predictability is to examine the mean-squared prediction error (MSPE) of the fitted model:

To bound the MSPE between 0, which corresponds to a fully predictable series, and 1, corresponding to a fully unpredictable series, the index of Eq. (10) is divided by the variance of the output series, obtaining the normalized MSPE, termed, NMSPEy.

The prediction of the output series y can be performed either by means of a pure NAR model, forcing Q 1 = Q 2 = 0 in Eq. (1), or by a NARX model (Q 1 ≠ 0, Q 2 ≠ 0). Accordingly, the output series is predicted only from its own past for a NAR model, yielding the prediction error NMSPEy|y, or from both its own past and the past and present of the input series for a NARX model, yielding the prediction error NMSPEy|x,y. Thus, causality from the input to the output series can be quantified by calculating the absolute or relative predictability improvement (PI) obtained by the NARX model compared to the NAR model prediction:

Similarly, causality from y to x can be investigated by reversing the input–output roles of the two series and calculating the absolute and normalized PI, i.e., PIx|y and NPIx|y, resulting from the inclusion of y samples in the prediction of x.

Finally, the relative strength of the causal interactions from x to y and from y to x is quantified by the directionality index:

With this definition, D xy ranges from −1 to 1. A negative value implies that the direction of causality is from y to x, whereas a positive value indicates that causality is from x to y. A value of zero indicates balanced bilateral interactions between x and y.

Surrogate Data Analysis

In order to test the statistical level of significance of the proposed causality measures, we use a surrogate data technique. The purpose is to establish if the reduction in the prediction error of the output series y is made statistically significant by the inclusion of the input series x (i.e., comparison between NAR and NARX prediction). To accomplish this, surrogate data are generated in which the individual spectral properties of the series are maintained while influences of the input series on the output series are destroyed. The NMSPE yielded by NARX prediction of the surrogate data is used as a discriminating statistic to test whether the predictability improvement evaluated for the output series is statistically significant, in accordance with the null hypothesis of absence of any correlation between the two series.

Starting from the original series x and y, N s pairs of surrogate series are generated as follows. For the i-th surrogate pair, the output series y is left unchanged, while a surrogate of the input series x, x si , is generated using the iteratively refined amplitude adjusted Fourier transform (IAAFT) surrogate data technique.36 This method maintains an excellent approximation of both the power spectrum and the amplitude distribution of the original series. After IAAFT surrogate data are generated, NARX model prediction is performed for the original x and y series, yielding the error NMSPEy|x,y, and for the N s pairs of surrogate series, yielding the errors NMSPEy|x si ,y, i = 1,...,N s . The prediction errors derived from the original and surrogate data are compared and, if NMSPEy|x,y is lower than the threshold given by the 100·α-percentile of the NMSPEy|x s ,y distribution (where α is the prescribed significance level), the null hypothesis is rejected and the measured PI is considered statistically significant. A nonparametric test based on percentiles is preferred in order to account for possible deviations, from Gaussian behavior of the surrogate NSMPE distribution.

Simulated signals

The proposed approach was validated using simulation examples designed to reproduce different types of interactions between bivariate time series with short data lengths. We considered both linear stochastic processes and nonlinear deterministic signals, and the degree of interaction was varied to obtain uncoupled, unidirectionally coupled, and bidirectionally coupled dynamics.

For simulations involving linear dynamics, ARX model identification was performed both by the OPS algorithm and the LS approach with the AIC as the model selection criterion (with the same number of model coefficients), in order to compare the performance of the two approaches in identifying causality. For nonlinear dynamics, NARX models were identified by means of the OPS algorithm to assess the ability of the proposed approach to detect causal relationships in the presence of complex dynamics. In all simulations, the length of the simulated series was set to N = 300 points. The maximum linear model orders were set to P 1 = Q 1 = 12, and the maximum nonlinear model orders were set to P 2 = Q 2 = 0 for linear simulations and to P 2 = Q 2 = 5 for nonlinear simulations. The significance of the causality indices was assessed by generating N s = 100 surrogate pairs for each pair of the original series, and by setting the statistical significance level at the value α = 0.05.

Linear Unidirectional Coupling

In the first simulation, we considered unidirectionally coupled AR processes:7

where ρ is the modulus of the complex conjugate poles of the AR process (set to 0.8), and w x and w y are independent Gaussian white noise signals with zero mean and unit variance (GWN(0,1)). The coupling factor c, which determines the strength of the unidirectional interaction from x to y, was varied from 0 to 1, at an increment of 0.1.

Figure 1 shows the results of the OPS and LS based on 100 realizations of Eq. (13). For c = 0, the distributions of NMSPEy|y and NMSPEy|x,y are substantially superimposed (panels a and b), and the corresponding normalized PI, NPIy|x, is close to zero (panels e and f), correctly indicating the uncoupling of the two series. Increasing the causal coupling strength c from 0.1 to 1 resulted in progressive improvement in the predictability of the ARX identification as compared to AR identification, as noted by the larger difference between NMSPEy|y and NMSPEy|x,y (panels a and b) as well as by the increasing values of NPIy|x (panels e and f). In contrast, no PI was detected, for any value of c, in the direction from x to y (panels c–f). Consequently, the directionality index shifted progressively towards 1, indicating unidirectional coupling. While both OPS and LS correctly detected the unidirectional coupling, results for the OPS are more stable as we observe lower variances (see Fig. 1e vs. Fig. 1f).

Causal analysis of linear unidirectionally coupled signals. (a, b) Normalized mean squared prediction errors (NMSPE) obtained by linear AR prediction (filled symbols) and linear ARX prediction (empty symbols) of the series y and; (c, d) of the series x, generated by the simulation of Eq. (13); (e, f) Normalized predictability improvements (NPIy|x, circles; NPIx|y, squares) and directionality index (D, triangles) estimated for the same series. All indices are plotted as a function of the imposed degree c of unidirectional interaction from x to y, and are expressed as mean ± SD over 100 realizations of the simulation. Indices were calculated after using the OPS (a, c, e) and the LS (b, d, f) algorithms to perform model identification

For the series y, the average number over the 100 simulation trials of significant AR model terms in AR identification, and of significant AR and X model terms in ARX identification, was 2.6, 3.5, 2.0 with c = 0, and 4.3, 2.3, 3.7 with c = 1 for the OPS algorithm; 2.4, 2.2, 1.2 with c = 0, and 4.1, 1.8, 3.7 with c = 1 for the LS algorithm. The same numbers for the series x were 2.6, 3.7, 2.0 (OPS) and 2.4, 2.2, 1.3 (LS) with c = 0, and 2.6, 4.4, 2.0 (OPS) and 2.1, 2.3, 1.3 (LS) with c = 1.

With surrogate data analysis, the PI was statistically significant in only 4 out of 100 realizations for the OPS and in 11 out of 100 realizations for the LS with c = 0. When c = 0.1, the PI was significant in 42 out of 100 realizations for the OPS and in 83 out of 100 realizations for the LS. Both methods indicated significance for all realizations for all values of c greater than or equal to 0.2.

Linear Bidirectional Coupling

In the second simulation, bidirectionally coupled AR processes7 were generated by:

with w x and w y = GWN(0, 1) and ρ = 0.8. The two coupling factors c 1 and c 2 represent the degrees of coupling from x to y and from y to x, respectively. The value of c 2 was varied from 0 to 1 while c 1 was constant with a value of 1, in order to reproduce coupling conditions varying from unidirectional coupling from x to y (c 1 = 1, c 2 = 0) to bilateral balanced coupling (c 1 = c 2 = 1).

The results obtained by the OPS and LSQ algorithms, expressed as a function of the coupling factor c 2 over 100 realizations of the simulation, are shown in Fig. 2. The unidirectional coupling (c 1 = 1, c 2 = 0) was correctly detected, as evidenced by the significant reduction in the predictability of the series y (Fig. 2a, b) and the non-significant reduction for x (Fig. 2c, d), which consequently resulted in the directionality value being very close to 1 (Fig. 2e, f). Increasing the coupling from y to x towards the state of balanced interactions resulted in a progressive increase of the PI estimated for the series x, while the predictability of y remained almost constant. As a consequence, directionality decreased from 1 to 0, correctly indicating a shift from unidirectional to bidirectional coupling. As in the previous simulation, the OPS provided more consistent results than did the LS, as evidenced by the lower dispersion of the causality indices around their mean value (Fig. 2e, f).

Causal analysis of linear bidirectionally coupled signals. (a, b) Normalized mean squared prediction errors (NMSPE) obtained by linear AR prediction (filled symbols) and linear ARX prediction (empty symbols) of the series y and; (c, d) of the series x, generated by the simulation of Eq. (14); (e, f) Normalized predictability improvements (NPIy|x, circles; NPIx|y, squares) and directionality index (D, triangles) estimated for the same series. All indices are plotted as a function of the imposed degree c 2 of interaction from y to x with constant degree of interaction from x to y (c 1 = 1), and are expressed as mean ± SD over 100 realizations of the simulation. Indices were calculated after using the OPS (a, c, e) and the LS (b, d, f) algorithms to perform model identification

For the series y, the average number over the 100 simulation trials of significant AR model terms in AR identification, and of significant AR and X model terms in ARX identification, was, respectively, 4.2, 2.2, 3.4 with c 2 = 0, and 4.4, 2.6, 3.8 with c 2 = 1 for the OPS algorithm; 4.0, 1.8, 2.0 with c 2 = 0, and 4.0, 2.0, 1.3 with c 2 = 1 for the LA algorithm. The same numbers for the series x were 2.4, 4.4, 1.7 (OPS) and 2.1, 2.3, 1.1 (LS) with c 2 = 0, and 4.6, 2.8, 2.9 (OPS) and 4.1, 2.1, 1.3 (LS) with c 2 = 1.

According to the surrogate data analysis, the ARX model prediction yielded NMSPEy|x,y values that were significant in 4, 38, and 78 out of 100 realizations for the OPS and 7, 55, and 98 out of 100 realizations for the LS with c 2 = 0, 0.1, and 0.2, respectively. They were all significant for both methods when c 2 was higher than 0.3. The prediction error, NMSPEy|y,x, was below the IAAFT surrogate threshold in all realizations and for all c 2 values.

Linear Coupling with Long Memory

To assess the performance of the OPS and LS algorithms in the presence of interactions with long memory, we considered the following bivariate process:

where x is an AR process with pole modulus ρ = 0.8, and y is an ARX process in which the long memory of the X model terms (modeled by the terms x(n − 4) and x(n − 6)) was tuned by the factor c.

Results obtained over 100 realizations of Eq. (15) and expressed as a function of c are summarized in Fig. 3. With an ARX model identification based on the OPS, the series y was fully predictable as noted by the null values of NMSPEy|x (Fig. 3a). The same situation was observed for the ARX model prediction of the series x (NMSPEx|y is always null, Fig. 3c). This result is explained by the presence of instantaneous causality between the two series (i.e., both x(n) and y(n) are present in Eq. 15). As a consequence, the two PIs have unit values and the bilateral interactions are equal as the directionality index (Fig. 3e) had a value of zero. In contrast, the LS identification was not able to reproduce the above-mentioned behaviors in the presence of significantly long memory effects from x to y. Indeed, the ARX prediction of y (Fig. 3b) and x (Fig. 3d) yielded increasing errors when the coupling factor c was increased. In addition, an erroneous indication of reduction in causality and of unbalancing between the two dynamics was suggested by the progressive decrease of the NPI indices and the directionality index (Fig. 3f).

Causal analysis of linear coupling with long memory. (a, b) Normalized mean squared prediction errors (NMSPE) obtained by linear AR prediction (filled symbols) and linear ARX prediction (empty symbols) of the series y and; (c, d) of the series x, generated by the simulation of Eq. (15); (e, f) Normalized predictability improvements (NPIy|x, circles; NPIx|y, squares) and directionality index (D, triangles) estimated for the same series. All indices are plotted as a function of the coupling factor c, and are expressed as mean ± SD over 100 realizations of the simulation. Indices were calculated after using the OPS (a, c, e) and the LS (b, d, f) algorithms to perform model identification

The number of model terms selected by OPS and LS algorithms was substantially unvaried at changing the parameter c. The averages over 100 simulations and over all c values for the series y were: 6.7 AR terms (AR identification), 2 AR terms and 4 X terms (ARX identification) with the OPS; 6.5 AR terms (AR identification), 1.9 AR terms and 3.6 X terms (ARX identification) with the LS. The same numbers for the series x were 2.5, 3, 3 with the OPS and 2.2, 1.8, 3 with the LS. Note that ARX identification performed with the OPS always returned the exact number of coefficients according to Eq. (15).

Surrogate data analysis indicated the significance of the PIs for all realizations for the OPS, while the PI of y was often not significant when estimated by the LS method for high values of c (NMSPEy|y,x was significant in 70 and 50 out of 100 realizations with c = 0.9 and c = 1).

Nonlinear Unidirectional Coupling

As an example of unidirectional nonlinear interaction, we consider the following coupled non-identical Henon maps:34

where c denotes the degree of coupling from x to y. The values chosen for the parameters as well as the initial conditions resulted in Henon maps operating in a chaotic regime.

Figure 4 shows the results of the causality analysis performed by NARX model prediction with the OPS, with the coupling c varying in the range {0, 0.05, 0.1, 0.2,..., 1}. For each c > 0, the NARX model’s MSPE decreased with respect to NAR model prediction (Fig. 4a). The decrease was statistically significant, according to surrogate data analysis, for c = 0.05, 0.1,..., 1. Accordingly, the normalized predictability NPIy|x had a unit value (Fig. 4c). For c = 0, the difference between NMSPEy|y and NMSPEy|x,y, and thus the index NPIy|x, was not statistically significant. The PI of x was always zero (Fig. 4b, c), and was not statistically significant. Accordingly, the directionality index was not defined for c = 0, reflecting the uncoupling between the two dynamics. For all other values of c the method correctly detected the imposed unidirectional interactions.

Causal analysis of nonlinear unidirectionally coupled signals. (a, b) Normalized mean squared prediction errors (NMSPE) obtained by nonlinear AR prediction (filled symbols) and nonlinear ARX prediction (empty symbols) of the series y and of the series x generated by the simulation of Eq. (16); (c) Normalized predictability improvements (NPIy|x, circles; NPIx|y, squares) and directionality index (D, triangles) estimated for the same series. All indices are plotted as a function of the imposed degree c of unidirectional interaction from x to y

The OPS algorithm returned the exact number and value of the coefficients in all coupling conditions, except for AR identification of the series y when c > 0. In this case the model selected a number of linear AR terms variable from 3 to 5, and of nonlinear AR terms variable from 3 to 9, depending on the coupling c, thus explaining the high NMSPE of the AR prediction of y (see Fig. 4a). In all other cases, the OPS detected exactly 1 linear AR term equal to 0.1, 1 nonlinear AR term equal to c − 1 and 1 cross term equal to −c for the series y, and 1 linear AR term equal to 0.3 and 1 nonlinear AR term equal to −1 for the series x (for both AR and ARX identification), as well as the constant term equal to 1.4.

Nonlinear Bidirectional Coupling

For the final simulation, we consider bidirectionally coupled Henon maps:10

where c 1 and c 2 represent the coupling strengths from y to x and from x to y, respectively. These two coupling strengths were varied from 0 to 0.33, at an increment of 0.05. Values higher than 0.33 resulted in identical synchronization4 which cannot provide information about causality. The NARX predictability in each direction was tested for statistical significance by the surrogate data approach. For the results that were found not to be statistically significant, the corresponding PI index was set to zero so that a clear graphical representation of the results can be demonstrated. Similarly, the directionality index was set to zero when both PI indices were not statistically significant.

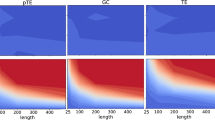

Figure 5 depicts the results of the causality analysis as a function of the coupling strengths in the two directions, c 1 and c 2. When the coupling from x to y was absent, the PI for the series y was always not statistically significant, as documented by the horizontal blue line present in the graph of PIy|x for c 2 = 0. The same situation was observed for the PI of x when c 1 = 0 (vertical blue line in the plot of PIx|y). Accordingly, the directionality index D xy had a low negative and high positive values, respectively. When significant coupling was imposed in the two causal directions (i.e., c 1 > 0 and c 2 > 0), the PI indices successfully detected the coupling strength in both directions. Indeed, for c 1 < 0.1 and c 2 > 0.2 causality was detected more from x to y than vice versa (i.e., PIy|x > PIx|y). A similar result, but in the opposite direction, was found for c 1 > 0.2 and c 2 < 0.1 (i.e., PIx|y > PIy|x). The difference between the two PIs, as well as the directionality D xy , reflected the asymmetry in the coupling degree between the two dynamics (see Fig. 5). Note the large areas with no PI in both directions (blue color), corresponding to zero directionality (green) that occurred when strong synchronization was present, which consequently eliminates causality.

Causal analysis of nonlinear bidirectionally coupled signals. Values of the predictability improvement for the series y (PIy|x) and x (PIx|y), as well as their difference (PIy|x − PIx|y) and the directionality index (D xy ) are represented according to the indicated color scales, as a function of the coupling strengths c 1 and c 2 of Eq. (17)

For all combinations of the imposed coupling strengths, ARX prediction of the two series returned exactly the number and values of the coefficients as in Eq. (17). On the contrary, AR prediction of one of the two series was accurate only when the coupling strength in the corresponding equation was set to zero, while nonzero coupling strengths yielded a larger number of AR terms thus increasing in the prediction error of the series.

Application to cardiovascular data

Subjects and Experimental Protocol

The study population consisted of 15 young healthy subjects (25 ± 3 years old). The surface ECG (lead II) and the noninvasive arterial pressure signals (Finapres) were acquired with subjects in sinus rhythm during spontaneous breathing. The experimental protocol consisted of 15 min of data collection in the resting supine position followed by another 15 min with subjects in the upright position using a motorized tilt table at 60° body position. Subjects were given 10 min of acclimatizing for each body position before data were collected.

After digitization of the continuous signals with a 1 kHz sampling rate and 12 bit precision, the beat-to-beat series of the cardiac cycle length (RR interval) and of the systolic arterial pressure (SAP) were determined as the temporal interval between two consecutive R peaks in the ECG and as the local maximum of the arterial pressure wave within each detected cardiac cycle, respectively. After removing artifacts and slow trends, two stationary segments of 300 points were selected for each subject, one in the supine and one in the upright position.

Data Analysis

For each selected data segment, the RR and SAP series were normalized by subtracting the mean and dividing by the standard deviation, thus obtaining the dimensionless series r(n) and s(n), n = 1,..., 300. To assess causality between the two cardiovascular series, model identification was performed by comparing the degree of predictability obtained by the NAR model and also by the NARX model. Nonlinear model identification and model order optimization were performed via the OPS algorithm, setting the maximum linear and nonlinear model orders to P 1 = Q 1 = 12 and P 2 = Q 2 = 5, respectively. Moreover, linear AR and ARX model identification (OPS with maximum model orders P 1 = Q 1 = 12 and P 2 = Q 2 = 0) were also performed to provide a comparison between linear and nonlinear approaches on cardiovascular data.

Causality from SAP to RR interval was assessed by setting x = s and y = r in Eq. (1), while the opposite (x = r and y = s) was set to study causal interactions from RR interval to SAP. In the latter case, the s series was one-beat delayed before model identification to properly account for the non-immediate effects from RR interval to SAP. The causality analysis should yield improvements in the predictability of RR intervals given SAP, via the index NPIr|s, and of SAP given RR intervals, via NPIs|r. The resulting directionality index D sr will be positive when the feedback interactions from SAP to RR are dominant, and negative when feedforward effects from RR to SAP are dominant. The significance of the prediction errors obtained by ARX prediction of the two series (NMSPEr|s,r and NMSPEs|s,r) was assessed by the 100 surrogate series and the statistical significance was evaluated at the threshold of α = 0.05.

Results

Results of NARX model prediction for a representative subject in the supine position and after head-up tilt are shown in Figs. 6 and 7, respectively. At rest, the NAR predictability was better for the SAP series than for the RR interval series (NMSPEs|s < NMSPEr|r). For both series, NARX model prediction led to a statistically significant reduction in the prediction error (i.e., NMSPEr|s,r and NMSPEs|s,r were below 5% of the distribution of their surrogate counterparts). Tilt position (Fig. 7) resulted in a marked reduction of NMSPEr|r that became slightly lower than NMSPEs|s. The improvements in predictability with the NARX model prediction for the tilt position, while less marked than in the supine position, were still statistically significant.

Causal analysis of the cardiovascular interactions for a subject in the supine position. (a, b) RR interval and systolic arterial pressure (SAP) variability series; (c) Normalized mean squared prediction error (NMSPE) obtained by nonlinear AR prediction (NMSPEr|r and NMSPEs|s, circles) and by nonlinear ARX prediction (NMSPEr|s,r and NMSPEs|s,r, squares) for the RR intervals (filled symbols) and for the SAP (empty symbols). The distributions of NMSPE yielded by ARX prediction on 100 surrogate series are also represented as 5-th percentile, median and 95-th percentile

Causal analysis of the cardiovascular interactions for the same subject of Fig. 6, performed in the upright position. (a, b) RR interval and systolic arterial pressure (SAP) variability series; (c) Normalized mean squared prediction error (NMSPE) obtained by nonlinear AR prediction (NMSPEr|r and NMSPEs|s, circles) and by nonlinear ARX prediction (NMSPEr|s,r and NMSPEs|s,r, squares) for the RR intervals (filled symbols) and for the SAP (empty symbols). The distributions of NMSPE yielded by ARX prediction on 100 surrogate series are also represented as 5-th percentile, median and 95-th percentile

Figure 8 summarizes the results of the causality analysis of the 15 subjects. In the supine position, the SAP (first two bars in panel b) was significantly more predictable than the RR interval (first two bars in panel a), as evidenced by the lower NMSPE with both NAR and NARX model predictions. After tilt, the predictability of the RR interval improved significantly. There was a tendency of the predictability of the SAP to be increased when the body position was changed from supine to tilt, although not significant. According to Fig. 8c, at rest the normalized PI was significantly higher in the direction from RR interval to SAP than in the reverse direction. This unbalancing was also confirmed by the negative values of the directionality index (D sr = −0.262 ± 0.398, mean ± SD over 15 subjects). The tilt position resulted in a significant increase of the PI in the feedback direction: from SAP to RR interval, while the normalized PI value relating RR interval to SAP did not change with alteration in the body position. The directionality index shifted towards zero (D sr = 0.036 ± 0.296, p < 0.05 vs. supine, t-test for paired data), thus providing more supporting evidence for causality in the feedback direction. The analysis performed by linear AR and ARX models yielded similar results, with values of the estimated NMSPE that were always slightly higher than those obtained with nonlinear model identification. The statistical differences shown in Fig. 8 were confirmed also with the linear approach, with the only exception that the increase of NPIr|s from the supine to the upright position was documented but was not statistically significant.

Causal analysis of the cardiovascular interactions for the 15 subjects. (a) Normalized mean squared prediction error (NMSPE) of the RR interval and; (b) systolic arterial pressure (SAP) series (NMSPEr and NMSPEs) obtained by nonlinear AR prediction (black bars) and by nonlinear ARX prediction (white bars); (c) Normalized predictability improvement of RR intervals given SAP (NPIr|s, black bars) and of SAP given RR intervals (NPIs|r, white bars). All indices are expressed as mean (bar) + SD (error bars) over the 15 subjects in the supine and upright positions. Student t-test for paired data: *p < 0.05, **p < 0.01, supine vs. upright; ## p < 0.01, RR vs. SAP; § p < 0.01, NPIr|s vs. NPIs|r

The number of linear model terms selected for the RR interval series by the OPS during NAR identification decreased significantly from supine (4.0 ± 1.7, mean ± SD) to upright (2.6 ± 1.2) position, while the number of nonlinear terms remained unaltered (7.3 ± 2.5 vs. 7.1 ± 2.5). For the SAP series, no significant changes were observed (supine: linear AR terms = 3.7 ± 2.4, nonlinear AR terms = 7.4 ± 1.8; upright: linear AR terms = 3.0 ± 0.9, nonlinear AR terms = 7.8 ± 1.8). As regards NARX identification, the number of model terms did not vary significantly from the supine (RR interval: linear terms = 4.9 ± 2.7, nonlinear terms = 29.0 ± 7.0; SAP: linear terms = 4.5 ± 2.6, nonlinear terms = 26.5 ± 8.5) to the upright position (RR interval: linear terms = 3.9 ± 3.1, nonlinear terms = 24.3 ± 8.3; SAP: linear terms = 6.3 ± 2.6, nonlinear terms = 24.3 ± 5.9). However, significant differences were found looking at the number of X terms, that decreased significantly during prediction of the RR series (linear X terms = 3.3 ± 2.3 (supine), 1.8 ± 2.5 (upright); nonlinear X terms = 12.9 ± 2.5 (supine), 8.5 ± 4.0 (upright)), and increased significantly during prediction of the SAP series (linear X terms = 0.9 ± 1.0 (supine), 2.9 ± 1.7 (upright); nonlinear X terms = 5.5 ± 5.0 (supine), 10.0 ± 3.6 (upright)). It is worth noting that the identification of a considerable number of nonlinear model terms could not be directly associated to the presence of nonlinear dynamics underlying RR and SAP series, as for stochastic time series NARX models tend to fit the dynamics using also nonlinear terms.

The surrogate data approach applied to the cardiovascular series measured in the supine position revealed that the PI of SAP given RR intervals was statistically significant in all subjects, while the PI of RR intervals given SAP was not significant in 3 out of 15 subjects. After tilt, the PI was significant in all subjects for the RR intervals, and in all but one subject for the SAP series.

Discussion and conclusions

In this paper we proposed a NARX model identification approach to assess nonlinear Granger causality in short bivariate time series. With the proposed approach, the PI resulting from moving from NAR to NARX models was taken as a measure of causal interaction from the input to the output series. The application of the OPS-based NARX model in combination with a surrogate data procedure enabled statistical determination of the strength of the causal interactions between bivariate time series.

The core of the proposed approach is the identification of the NARX model that was based on the recently proposed OPS algorithm.16 The salient feature of the OPS algorithm is its accuracy in reflecting only the model coefficients pertaining to the dynamics of the system despite overdetermined initial model order selection. This feature consequently leads to better performance over the other model order selection criteria.1,31 Note that both AIC1 and minimum description length31 model order selection criteria are mainly designed for linear model identification, and they only have the capability to provide the maximum model order of the system. Thus, their accuracy suffers when there are missing model terms (e.g., systems with long delays). The benefits of the OPS-based NARX algorithm were clearly demonstrated with simulation examples involving short data records. Such benefits were particularly evident in deterministic simulations where noise did not contaminate the dynamics (e.g., Eq. 15), as also demonstrated by the comparison between the numbers of significant model terms selected by the OPS and the AIC. Moreover, even for linear simulations with noise included in the generation of the dynamics (Eqs. 13 and 14) the OPS resulted more stable than the AIC, even though both identification techniques selected a similar number of significant model terms and thus yielded comparable prediction errors.

Other nonlinear approaches to assessing Granger causality have been proposed.2,5,10 However, most of these methods require a large amount of data in order to provide reliable causality indices, which is an obvious limitation for short-term cardiovascular variability applications. In our study, the reliability of the results with the proposed method was apparent even with short data records, as the simulation examples demonstrated. Another issue is the lack of accuracy with the other nonlinear methods, as in certain circumstance they could not be able to recognize variables without causality relationships.19 While a method based on the use of a radial basis function improved the accuracy of detecting causality,2,19 it could not provide compact system representation, as our proposed approach does. It should be mentioned, however, that the proposed approach based on NARX models is appropriate only up to 3rd-order nonlinearity as the number of model terms to be searched becomes excessively large after that. However, this is not a major problem for the cardiovascular system in general, since it has been shown that it can be characterized by nonlinearity lower than 3rd-order.6

The application of the approach to RR interval and SAP series in the supine and upright positions provided physiologically interpretable results. For example, the high predictability of the SAP series in either body position can be simply observed in the time series itself. On the contrary, the RR variability is much greater and appears more random than the SAP variability. The major contribution to SAP variability is that located in the low frequency region of the spectrum (LF, around 0.1 Hz), and has been theorized to be reflection of the sympathetic nervous activities.13,24 However, since respiratory-related high frequency (HF) oscillations are present in both body positions, it may be argued that the contemporaneous presence of low and high frequency rhythms does not directly imply complexity.27 A physiological interpretation of the observed higher complexity (i.e., lower predictability) in the RR intervals, especially in the supine position, is that this may reflect low synchronization among the several mechanisms that are responsible for heart rate regulation in the LF band (e.g., central oscillators at the brain stem level, baroreceptive and chemoreceptive feedback, peripheral vasomotion13,17). Our results are in agreement with those obtained in previous studies.27,29

In the upright position, both the SAP and the RR series became highly predictable. This is mainly because the LF oscillations are enhanced and the HF dynamics are nearly abolished due to the activation of the sympathetic nervous activities induced by tilt. It has been shown that dominant activation of the sympathetic activities can lead to a simplification of the cardiovascular dynamics, and hence reduction of RR and SAP complexity.21,24 The observed modifications of the predictability degrees with changing the body positions were reflected by corresponding variations of the significant number of model terms selected by OPS identification. In general, an increase in predictability was associated with a reduction in the number of model terms needed to describe a specific part of the observed dynamics, due to the fact that more pronounced phenomena can be described with lower coefficients required to capture the variability of the considered signal. For instance, the higher predictability of the RR in the upright position was measured with a significantly lower number of linear AR terms during NAR prediction, and with a significantly lower number of (linear and nonlinear) X terms during NARX prediction. These behaviors could be associated, respectively, to a larger involvement of linear dynamics in the generation of the RR oscillations,27 and to a larger contribution of SAP to the variability of RR,22,23 during head-up tilt.

The result indicating higher PI from RR intervals to SAP than in the reverse direction during supine position (Fig. 8c) may indicate an imbalance of the cardiovascular regulation in humans at a resting state. This observation suggests, from the point of view provided by Granger causality analysis that a major role in cardiovascular regulation is played by mechanisms operating along the mechanical pathway from heart rate to arterial pressure.18 Significant increase of the PI from SAP to RR intervals in the upright position can also be explained by the activation of the baroreflex control pathway as a consequence of tilt-induced sympathetic activation.17 Our results based on the directionality index are in agreement with the above-noted physiological interpretation, as we found the values to be negative in the supine position and then approached zero in the upright position. It is worth noting that our directionality analysis mostly confirms previous results obtained by methods exploiting the concept of Granger causality according to different paradigms, such as cross correlation of AR residuals,3 cross-conditional entropy,23 and causal coherence.22,28 In particular, the causal coherence is a tool proposed by Porta et al.28 to quantify in the frequency domain the strength of the linear causal coupling in bivariate time series, and allows to assess Granger causality within specific frequency bands. When applied to LF cardiovascular oscillations in healthy humans, this tool evidenced both the imbalance of the RR-SAP coupling with prevalence of interactions from RR to SAP,22,28 and the enhancement of the feedback regulation from SAP to RR after head-up tilt.22 The similarity of the conclusions drawn in previous studies using the linear causal coherence22,28 and in the present study using the NARX model suggests that a linear approach is appropriate to elicit directionality in the study of RR-SAP interactions in supine and upright healthy humans. We provided a direct evidence for this hypothesis through the comparison between linear and nonlinear ARX identification on the same data set, as we found analogous results using the two approaches. Nevertheless, the implementation of nonlinear parametric models should be preferred to infer directionality in experimental protocols (e.g., paced breathing) or pathological states (e.g., myocardial infarction) where nonlinear dynamics have been shown to play a greater role in the genesis of cardiovascular interactions.23,27

In conclusion, we demonstrated the effectiveness of the combined OPS-based ARX model and a surrogate data technique in evaluating nonlinear Granger causality. This combined method is effective even for short bivariate time series (a few hundred samples). Our simulation results have shown the efficacy of the method in accurately detecting directionality of the coupled linear and nonlinear dynamics. In addition, we demonstrated that the OPS’s inherent ability to discriminate only the significant model terms resulted in better performance than the least-squares based NARX model. Our application to cardiovascular data also illustrated the feasibility of the method, as the results were relevant and interpretable according to the known cardiovascular physiology.

References

Akaike H. A new look at the statistical model identification. IEEE Trans. Autom. Contr. 19:716–723, 1974

Ancona N., D. Marinazzo, S. Stramaglia. Radial basis function approach to nonlinear Granger causality of time series. Phys. Rev. E 70:056221, 2004

Baselli G., S. Cerutti, M. Livraghi, C. Meneghini, M. Pagani, O. Rimoldi. Causal relationship between heart rate and arterial blood pressure variability signals. Med. Biol. Eng. Comput. 26:374–378, 1988

Bhattacharya J., E. Pereda, H. Petsche. Effective detection of coupling in short and noisy bivariate data. IEEE Trans. Syst. Man Cyb. Part B: Cyb. 33:85–95, 2003

Chen Y., G. Rangarajan, J. Feng, M. Ding. Analyzing multiple nonlinear time series with extended Granger causality. Phys. Lett. A 324:26–35, 2004

Chon K. H., T. J. Mullen, R. J. Cohen. A dual-input nonlinear system analysis of autonomic modulation of heart rate. IEEE Trans. Biomed. Eng. 43:530–544, 1996

Faes L., R. Cucino, G. Nollo. Evaluation of a nonlinear prediction algorithm quantifying regularity, synchronization and directionality in short cardiovascular variability series. Comp. Cardiol. 33:177–180, 2006

Faes L., A. Porta, R. Cucino, S. Cerutti, R. Antolini, G. Nollo. Causal transfer function analysis to describe closed loop interactions between cardiovascular and cardiorespiratory variability signals. Biol. Cybern. 90:390–399, 2004

Faes L., L. Widesott, M. Del Greco, R. Antolini, G. Nollo. Causal cross-spectral analysis of heart rate and blood pressure variability for describing the impairment of the cardiovascular control in neurally mediated syncope. IEEE Trans. Biomed. Eng. 53:65–73, 2006

Feldmann U., J. Bhattacharya. Predictability improvement as an asymmetrical measure of interdependence in bivariate time series. Int. J. Bifurc. Chaos. 14:505–514, 2004

Granger C. W. J. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37:424–438, 1969

Hlavackova-Schindler K., M. Palus, M. Vejmelka, J. Bhattacharya. Causality detection based on information-theoretic approaches in time series analysis. Phys. Rep. 441:1–46, 2007

Koepchen H. P. Physiology of rhythms and control systems: an integrative approach. In: Haken H., Koepchen H. P., eds Rhythms in Physiological Systems. Berlin, Germany: Springer Verlag, 1991:3–20

Korenberg M. J. A robust orthogonal algorithm for system identification and time-series analysis. Biol. Cybern. 60:267–276, 1989

Le Van Quyen M., J. Martinerie, C. Adam, F. J. Varela. Nonlinear analyses of interictal EEG map the brain interdependences in human focal epilepsy. Physica D 127:250–266, 1999

Lu S., K. H. Ju, K. H. Chon. A new algorithm for linear and nonlinear ARMA model parameter estimation using affine geometry. IEEE Trans. Biomed. Eng. 48:1116–1124, 2001

Malliani A. Principles of Cardiovascular Neural Regulation in Health and Disease. Norwell, MA, USA: Kluwer academic publishers, 2000

Malpas S. C. Neural influences on cardiovascular variability: possibilities and pitfalls. Am. J. Physiol. Heart Circ. Physiol. 282:H6–20, 2002

Marinazzo D., M. Pellicoro, S. Stramaglia. Nonlinear parametric model for Granger causality of time series. Phys. Rev. E 73:066216, 2006

Mokhov I. I., D. A. Smirnov. El Nino-Southern Oscillation drives North Atlantic Oscillation as revealed with nonlinear techniques from climatic indices. Geophys. Res. Lett. 33:L03708, 2006

Montano N., T. Gnecchi Ruscone, A. Porta, F. Lombardi, M. Pagani, A. Malliani. Power spectrum analysis of heart rate variability to assess the change in sympathovagal balance during graded orthostatic tilt. Circulation 90:1826–1831, 1994

Nollo G., L. Faes, A. Porta, R. Antolini, F. Ravelli. Exploring directionality in spontaneous heart period and systolic pressure variability interactions in humans. Implications in baroreflex gain evaluation. Am. J. Physiol. Heart Circ. Physiol. 288:H1777–H1785, 2005

Nollo G., L. Faes, A. Porta, B. Pellegrini, F. Ravelli, M. Del Greco, M. Disertori, R. Antolini. Evidence of unbalanced regulatory mechanism of heart rate and systolic pressure after acute myocardial infarction. Am. J. Physiol. Heart Circ. Physiol. 283:H1200–H1207, 2002

Pagani M., F. Lombardi, S. Guzzetti, O. Rimoldi, R. Furlan, P. Pizzinelli, G. Sandrone, G. Malfatto, S. Dell’Orto, E. Piccaluga, M. Turiel, G. Baselli, S. Cerutti, A. Malliani. Power spectral analysis of heart rate and arterial pressure variabilities as a marker of sympatho-vagal interaction in man and conscious dog. Circ. Res. 59:178–193, 1986

Pereda E., D. M. de La Cruz, L. De Vera, J. J. Gonzalez. Comparing generalized and phase synchronization in cardiovascular and cardiorespiratory signals. IEEE Trans. Biomed. Eng. 52:578–583, 2005

Pereda E., R. Q. Quiroga, J. Bhattacharya. Nonlinear multivariate analysis of neurophysiological signals. Prog. Neurobiol. 77:1–37, 2005

Porta A., G. Baselli, S. Guzzetti, M. Pagani, A. Malliani, S. Cerutti. Prediction of short cardiovascular variability signals based on conditional distribution. IEEE Trans. Biomed. Eng. 47:1555–1564, 2000

Porta A., R. Furlan, O. Rimoldi, M. Pagani, A. Malliani, P. van de Borne. Quantifying the strength of the linear causal coupling in closed loop interacting cardiovascular variability signals. Biol. Cybern. 86:241–251, 2002

Porta A., S. Guzzetti, N. Montano, M. Pagani, V. Somers, A. Malliani, G. Baselli, S. Cerutti. Information domain analysis of cardiovascular variability signals: evaluation of regularity, synchronisation and co-ordination. Med. Biol. Eng. Comput. 38:180–188, 2000

Quiroga R. Q., J. Arnhold, P. Grassberger. Learning driver-response relationships from synchronization patterns. Phys. Rev. E 61:5142–5148, 2000

Rissanen J. An universal prior for the integers and estimation by minimum description length. Ann. Stat. 11:417–431, 1983

Rosenblum M. G., L. Cimponeriu, A. Bezerianos, A. Patzak, R. Mrowka. Identification of coupling direction: application to cardiorespiratory interaction. Phys. Rev. E 65:041909, 2002

Rosenblum M. G., A. S. Pikovsky. Detecting direction of coupling in interacting oscillators. Phys. Rev. E 64:045202, 2001

Schiff S. J., P. So, T. Chang, R. E. Burke, T. Sauer. Detecting dynamical interdependence and generalized synchrony through mutual prediction in a neural ensemble. Phys. Rev. E 54:6708–6724, 1996

Schreiber T. Measuring information transfer. Phys. Rev. Lett. 2000:461–464, 2000

Schreiber T., A. Schmitz. Improved surrogate data for nonlinearity tests. Phys. Rev. Lett. 77:635–638, 1996

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Faes, L., Nollo, G. & Chon, K.H. Assessment of Granger Causality by Nonlinear Model Identification: Application to Short-term Cardiovascular Variability. Ann Biomed Eng 36, 381–395 (2008). https://doi.org/10.1007/s10439-008-9441-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10439-008-9441-z