Abstract

A single-frequency, single-site GPS/Galileo algorithm for the retrieval of absolute total electron content is implemented. A single-layer approximation of the ionosphere is used for data modeling. In addition to a standard mapping function, the NeQuick model (version 2) of the ionosphere is now applied to derive improved mapping functions. This model is very attractive for this purpose because it implements a ray tracer. We compare the new algorithm with the old one, which uses an effective global height of the ionosphere of 450 km. Combined IGS IONEX gridded data sets serve as reference data. On global average, we find a small improvement of 1 % in precision (standard deviation) for the NeQuick2 mapping method versus the conventional approach. A site-by-site comparison indicates an improvement in precision for 34 % of the 44 sites under investigation. The level of improvement for these stations is 0.5 TECU on average. No improvement was observed for 41 % of the sites. Further comparisons of the single-frequency (code ranges and carrier phases) versus the dual-frequency (carrier phases only) single-site algorithm show that dual-frequency VTEC estimation is more accurate for the majority of the stations, but only by about 0.3 TECU (2.6 %) on average.

Introduction

The idea of using single-frequency GPS measurements for ionospheric delay estimation and/or correction has been recognized by several authors, although publications on this topic are relatively rare compared to the more common dual-frequency (network-based) methods. Xia (1992) describes an experiment to retrieve absolute ionospheric delay errors from a single-frequency GPS receiver. This approach shows that a code range minus carrier phase combination (CMC) makes it possible to determine the total electron content, although the author admitted that his study was a very first step and a demonstration of the technique. Cohen et al. (1992) presented a paper with similar content at the same conference. Two years later, Qiu et al. (1994) published results on the estimation of ionospheric propagation delay corrections from a single-frequency GPS receiver. Lestarquit et al. (1996) describe a sequential filter algorithm for L1-only ionospheric delay estimation, which is also mentioned by Issler et al. (2004). Finally, a more recent publication by Mayer et al. (2008) covers essentially the same subject.

A single-frequency approach is attractive for the following reasons. The Galileo global navigation satellite system will feature a highly precise signal, the E5 AltBOC modulated wideband signal (European Union 2010). Simsky et al. (2008) point out that this signal is expected to provide the most precise code range measurements in the history of GNSS. Unfortunately, E5 is the only signal of this kind broadcast by Galileo. Consequently, a single-frequency VTEC retrieval method could optimally exploit the benefits of the E5 signal. Schüler and Oladipo (2012) present initial results based on both real data from the experimental GIOVE-B satellite and simulated data. Moreover, the implemented algorithm is a single-site approach, so it can easily be integrated into a receiver. Our in-house software-defined receiver offers an API for such purposes (Stöber et al. 2010).

Given the precision of today’s code range measurements, GPS L1 VTEC retrieval also appears to provide useful results. Real-world data from the IGS LEO network were used for this study. The main topic of this research is the modeling error caused by inaccurate mapping functions for the ionospheric delay. For this reason, we used the NeQuick2 ionosphere model and its integrated ray tracer to obtain more precise obliquity factors.

Algorithm and data processing

Absolute VTEC retrieval from single-frequency GNSS data is not trivial, because the desired quantity cannot be estimated directly. We applied a single-layer model of the ionosphere according to Hofmann-Wellenhof et al. (1993) and used a horizontal interpolation function to combine the pierce-point-specific ionospheric delays and to separate VTEC from the bias terms.
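To make the single-layer geometry concrete, the following Python sketch computes the ionospheric pierce point of a receiver-to-satellite ray on a thin shell. It uses textbook spherical-Earth formulas and an assumed mean Earth radius of 6371 km; the function name `pierce_point` and its signature are illustrative and not taken from the authors' software.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def pierce_point(lat_deg, lon_deg, elev_deg, azim_deg, height_km=450.0):
    """Return (lat, lon) in degrees where the ray crosses a thin shell at height_km.

    Hypothetical helper: spherical Earth, straight ray, single-layer model.
    """
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    elev, azim = math.radians(elev_deg), math.radians(azim_deg)
    # Zenith angle z' of the ray at the shell: sin z' = R / (R + H) * cos(elev)
    sin_zp = EARTH_RADIUS_KM / (EARTH_RADIUS_KM + height_km) * math.cos(elev)
    # Earth-central angle between the receiver and the pierce point
    psi = math.pi / 2 - elev - math.asin(sin_zp)
    lat_ipp = math.asin(math.sin(lat) * math.cos(psi)
                        + math.cos(lat) * math.sin(psi) * math.cos(azim))
    lon_ipp = lon + math.asin(math.sin(psi) * math.sin(azim) / math.cos(lat_ipp))
    return math.degrees(lat_ipp), math.degrees(lon_ipp)
```

For a ray at 30° elevation towards the north from 50°N, the pierce point lies roughly 6° further north; at the zenith it coincides with the station.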

Observation equations

The appropriate observable containing the ionospheric delay in the slant direction is the code-minus-carrier observation. It eliminates all geometry-dependent components, leaving the ionospheric propagation delay as the prominent remainder in the observation equation

$$\mathrm{CMC}_i = \mathrm{PR}_i - \lambda \phi_i = 2\, m_i\, \frac{C}{f^2}\, \mathrm{VTEC}_i + b$$

where CMC is the code-minus-carrier observable containing the ionospheric delay, i is the satellite index, PR is the code range measurement, λ is the carrier wavelength, ϕ the carrier phase measurement (in cycles), m the mapping function that relates the vertical to the slant ionospheric delay, C ≈ 40.309 × 10^16 is a constant as defined in Petit and Luzum (2010), f is the carrier frequency, VTEC the vertical total electron content (in TECU) and b the combined bias term; the factor 2 reflects that the code range is delayed and the carrier phase advanced by the same amount. The bias term consists of the following main constituents

$$b = b_{\mathrm{REC}} + b_{\mathrm{CH}} + b_i - \lambda N$$

where b_REC is the receiver common bias, b_CH is the receiver inter-channel bias, b_i is a satellite-specific bias and N is the ambiguity term (in cycles). We estimate b for each satellite arc.
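As a numerical illustration of the observation model, the sketch below evaluates the code-minus-carrier value for GPS L1. It assumes that the code is delayed and the carrier advanced by the same one-way slant delay m·C/f²·VTEC, so that CMC contains twice that amount plus the bias; the function name `cmc_model` is hypothetical.

```python
C_IONO = 40.309e16   # constant C from the text (VTEC in TECU, delays in metres)
F_L1 = 1575.42e6     # GPS L1 carrier frequency [Hz]


def cmc_model(vtec_tecu, mapping, bias_m, freq_hz=F_L1):
    """Modeled code-minus-carrier value [m] for one satellite.

    The one-way slant delay is mapping * C / f^2 * VTEC; the code is delayed and
    the carrier advanced by this amount, hence the factor 2 in PR - lambda*phi.
    """
    slant_delay_m = mapping * C_IONO / freq_hz**2 * vtec_tecu
    return 2.0 * slant_delay_m + bias_m


one_tecu_zenith = cmc_model(1.0, 1.0, 0.0)
```

One TECU observed at the zenith thus amounts to roughly 0.32 m in the CMC observable on L1, i.e. about 0.16 m of one-way delay.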

The estimation of our target parameter VTEC from a set of CMC observables to various GNSS satellites is not straightforward. VTEC_i actually differs for each satellite i, because it refers to a satellite-specific ionosphere pierce point (single-layer model, see “Main contributors to error budget”). Moreover, the bias terms b for each satellite arc must be separated from the (vertically mapped) target parameters. These problems are addressed via a horizontal interpolation function, usually a low-order polynomial with 3 or 4 parameters. We determine the coefficients of the interpolation function rather than each pierce-point-related VTEC_i individually. A least-squares block adjustment with piecewise-linear modeling of the time-dependent variations of VTEC is used.
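The separation of VTEC and arc biases can be sketched as an ordinary least-squares problem. The Python example below is a simplified stand-in for the block adjustment described above: it assumes a 3-parameter plane as horizontal interpolation function, one bias per arc, and VTEC constant over the batch (the piecewise-linear time modeling is omitted). All names and the synthetic data are hypothetical.

```python
import numpy as np

C_IONO = 40.309e16          # constant C from the text (VTEC in TECU, delay in metres)
F_L1 = 1575.42e6            # GPS L1 frequency [Hz]
K = 2.0 * C_IONO / F_L1**2  # metres of CMC per TECU of slant TEC


def estimate_vtec_and_biases(cmc, mapping, dlat, dlon, arc, n_arcs):
    """Least-squares fit of CMC = K*m*(a0 + a1*dlat + a2*dlon) + b[arc].

    a0..a2 describe VTEC (TECU) as a plane around the station; one bias b
    (metres) is estimated per satellite arc. Returns (coefficients, biases).
    """
    n_obs = len(cmc)
    design = np.zeros((n_obs, 3 + n_arcs))
    design[:, 0] = K * mapping               # VTEC at the reference point
    design[:, 1] = K * mapping * dlat        # north-south gradient
    design[:, 2] = K * mapping * dlon        # east-west gradient
    design[np.arange(n_obs), 3 + arc] = 1.0  # bias of the arc each obs belongs to
    solution, *_ = np.linalg.lstsq(design, cmc, rcond=None)
    return solution[:3], solution[3:]


# Synthetic, noise-free test data (all values hypothetical)
rng = np.random.default_rng(1)
n_obs, n_arcs = 200, 4
mapping = rng.uniform(1.0, 2.5, n_obs)   # obliquity factors along the arcs
dlat = rng.uniform(-5.0, 5.0, n_obs)     # pierce-point offsets [deg]
dlon = rng.uniform(-8.0, 8.0, n_obs)
arc = np.arange(n_obs) % n_arcs
true_coef = np.array([12.0, 0.4, -0.2])
true_bias = np.array([3.1, -1.7, 0.5, 8.2])
cmc = K * mapping * (true_coef[0] + true_coef[1] * dlat + true_coef[2] * dlon) + true_bias[arc]

coef, bias = estimate_vtec_and_biases(cmc, mapping, dlat, dlon, arc, n_arcs)
```

The biases and the constant VTEC term are only separable because the mapping function varies along each arc; if the obliquity factor were constant within every arc, the corresponding design-matrix columns would be linearly dependent.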

Main contributors to error budget

The single-frequency single-site VTEC retrieval algorithm is prone to several deficiencies that can degrade its performance. These are:

1. Regarding the measurements, code range precision is of major concern, because code noise and multipath errors are much more severe on code ranges than on carrier phase measurements.

2. The inaccuracy of the mapping function is critical, even for an elevation mask of 20° as used in this study. This is the main subject of our research.

3. The horizontal interpolation function is not free of error either. We can expect degraded results under ionospheric storm conditions due to increased interpolation errors, as well as for stations close to the geomagnetic equator, where ionospheric activity is higher than in the mid latitudes. This effect is clearly visible in previous results presented in Schüler and Oladipo (2012).

Points 2 and 3 are data modeling errors. Under certain conditions, these errors can become more important than the limited accuracy of the code range measurements. In such cases, using the Galileo E5 AltBOC signal would not provide any significant gain compared to GPS L1 data.

Mapping function

The conventional mapping function is derived from the single-layer model of the ionosphere and can be found in standard textbooks. Note that our actual implementation uses a slightly modified mapping function that is also used in the Bernese GPS software (Dach et al. 2007). We use the nominal parameters adopted by these authors, in particular a global effective ionosphere height of 450 km.
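The conventional single-layer mapping function can be sketched in a few lines. The factor α applied to the zenith angle mimics a "modified" single-layer model; that the Bernese modification takes exactly this form, and which α it uses, are assumptions here, while α = 1 gives the textbook function. The Earth radius of 6371 km is likewise assumed.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def slm_mapping(zenith_deg, height_km=450.0, alpha=1.0):
    """Single-layer-model obliquity factor 1 / cos(z').

    sin z' = R / (R + H) * sin(alpha * z); alpha = 1 is the textbook form,
    alpha < 1 mimics a modified single-layer model (value is an assumption).
    """
    z = math.radians(zenith_deg)
    sin_zp = EARTH_RADIUS_KM / (EARTH_RADIUS_KM + height_km) * math.sin(alpha * z)
    return 1.0 / math.sqrt(1.0 - sin_zp**2)
```

At 20° elevation (70° zenith angle) and 450 km height, this gives an obliquity factor of about 2.09; lowering the shell increases the factor.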

The driving parameter of this mapping function is the effective height of the ionosphere. In our experience, an erroneous effective height systematically biases the results, whereas the empirical precision estimates remain virtually unaffected. As a consequence, it is impossible to estimate or otherwise improve the effective height from daily data batches if no external reference data are available. Our software implementation features an effective height calibration mode using gridded ionosphere data in IONEX format (http://igscb.jpl.nasa.gov/components/prods.html), so that a station-specific value can be obtained. However, calibration requires additional effort and is inconvenient, in particular for near real-time applications. This is exactly why we tried the NeQuick2 model for an improved mapping function computation that takes possible diurnal and annual variations into account.
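The effective-height calibration against external reference data could, for instance, be a simple grid search. The sketch below assumes slant TEC values with known zenith angles and co-located reference VTEC (e.g. interpolated from IONEX grids) and picks the shell height that best reproduces the reference; the functions, the search grid and the synthetic data are hypothetical and not the authors' calibration mode.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def slm_mapping(zenith_deg, height_km):
    """Textbook single-layer obliquity factor for a shell at height_km."""
    ratio = EARTH_RADIUS_KM / (EARTH_RADIUS_KM + height_km)
    sin_zp = ratio * math.sin(math.radians(zenith_deg))
    return 1.0 / math.sqrt(1.0 - sin_zp**2)


def calibrate_height(stec, zenith_deg, vtec_ref, heights_km):
    """Return the candidate height minimizing sum((STEC/m(H) - VTEC_ref)^2)."""
    def cost(h):
        return sum((s / slm_mapping(z, h) - v) ** 2
                   for s, z, v in zip(stec, zenith_deg, vtec_ref))
    return min(heights_km, key=cost)


# Synthetic example: slant TEC generated with a "true" shell height of 400 km
zeniths = [10.0, 30.0, 50.0, 65.0, 70.0]
vtec_ref = [20.0, 22.0, 25.0, 18.0, 15.0]  # reference VTEC [TECU]
stec = [v * slm_mapping(z, 400.0) for v, z in zip(vtec_ref, zeniths)]
best = calibrate_height(stec, zeniths, vtec_ref, range(300, 601, 10))
```

The cost function cannot be formed without `vtec_ref`, which mirrors the point that the height is not recoverable from the GNSS batches alone.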

Nava et al. (2008) discuss the NeQuick2 ionosphere model. Once its ray tracer is implemented, an improved mapping function can easily be derived: a first ray trace in the zenith direction yields the zenith ionospheric delay, and a second ray trace in the slant direction of the satellite yields the slant ionospheric propagation delay. The ratio of the slant and zenith delays is the mapping function value. The ray tracer can also be used to determine improved coordinates of the ionosphere pierce points for our single-layer modeling method.
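The ratio-of-integrals idea can be illustrated without NeQuick2 itself. In the Python sketch below, a single Chapman layer with assumed peak density (10^12 el/m³), peak height (350 km) and scale height (60 km) stands in for the NeQuick2 electron density profile, and the content is integrated along straight rays with the trapezoidal rule; all names and parameter values are illustrative.

```python
import math

R_E = 6371.0  # km, assumed spherical Earth


def chapman_density(h_km, nm=1.0e12, hm_km=350.0, scale_km=60.0):
    """Electron density [el/m^3] of one Chapman layer (stand-in for NeQuick2)."""
    x = (h_km - hm_km) / scale_km
    return nm * math.exp(0.5 * (1.0 - x - math.exp(-x)))


def integrated_density(elev_deg, h_top_km=2000.0, n_steps=4000):
    """Electron content [el/m^2] along a straight ray from the ground to h_top."""
    sin_e = math.sin(math.radians(elev_deg))
    # Path length s at which the ray reaches h_top (positive root of a quadratic)
    s_max = -R_E * sin_e + math.sqrt((R_E * sin_e) ** 2 + h_top_km**2 + 2 * R_E * h_top_km)
    ds = s_max / n_steps
    total = 0.0
    for k in range(n_steps + 1):
        s = k * ds
        h = math.sqrt(R_E**2 + s**2 + 2 * R_E * s * sin_e) - R_E  # height along ray
        weight = 0.5 if k in (0, n_steps) else 1.0                 # trapezoidal rule
        total += weight * chapman_density(h)
    return total * ds * 1.0e3  # step length km -> m


def ray_traced_mapping(elev_deg):
    """Mapping function as ratio of slant to zenith electron content."""
    return integrated_density(elev_deg) / integrated_density(90.0)
```

With these parameters the zenith integral is about 24.8 TECU, and the ratio at 20° elevation comes out roughly at the single-layer value for a shell near the layer peak. A realistic profile would make this ratio depend on location, time and solar activity.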

NeQuick2 ray tracer

NeQuick is a three dimensional and time dependent global ionospheric electron density model (Radicella and Leitinger 2001). It was created on the basis of the analytical model by Di Giovanni and Radicella (1990)—abbreviated as DGR—and later improved by Radicella and Zhang (1995). It gives an analytical representation of the vertical profile of electron density, with continuous first and second derivatives. It is called more properly a “profiler” (or a “ray tracer”) because its mathematical formulation of the electron density profile is characterized by anchor points mainly linked to the peaks of the different ionospheric layers. It belongs to the International Center for Theoretical Physics (ICTP)—University of Graz family of models according to Hochegger et al. (2000).

In an effort to ensure that NeQuick gives a more realistic global representation of the ionosphere, various improvements have been made to the model. Major changes were made to both the topside and the bottomside formulations, as documented by Leitinger et al. (2005) and Coïsson et al. (2006, 2008a, b). The bottomside formulation was modified with respect to the modeling of the F1 layer peak electron density, height and thickness parameter, following Leitinger et al. (2005). For the topside, a new formulation for the shape parameter k was adopted by Coïsson et al. (2006). Details on the evolution of NeQuick to its latest version (NeQuick2) can be found in Radicella (2009), while the list of major improvements can be found in Nava et al. (2008).

NeQuick is particularly tailored for trans-ionospheric applications: it allows the calculation of the electron concentration at any given location in the ionosphere and thus of the total electron content (TEC) along any ground-to-satellite ray path by means of numerical integration. The input parameters are the coordinates of the receiver (ray point 1) and the satellite (ray point 2), year, month, time of day, and an ionization parameter. The output is the electron density profile and, via integration, the slant TEC (STEC) for the receiver-to-satellite link. The input ionization parameter is either the monthly smoothed sunspot number (R12) or the 10.7 cm solar radio flux (F10.7).
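Since NeQuick accepts either R12 or F10.7, a conversion between the two is often needed. The sketch below uses the quadratic relation given in ITU-R Recommendation P.1239, which is commonly used with NeQuick; that it matches the authors' configuration is an assumption, and the function names are hypothetical.

```python
import math


def r12_to_f107(r12):
    """Solar radio flux F10.7 from the smoothed sunspot number R12 (ITU-R relation)."""
    return 63.7 + 0.728 * r12 + 0.00089 * r12**2


def f107_to_r12(f107):
    """Inverse of r12_to_f107, i.e. the positive root of the quadratic."""
    return math.sqrt(167273.0 + (f107 - 63.7) * 1123.6) - 408.99
```

For example, R12 = 100 corresponds to F10.7 ≈ 145.4 flux units, and converting back recovers R12 to within a few thousandths.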

Processing notes

The IGS LEO network is a sub-network of the IGS tracking network established in support of LEO satellite missions. These stations provide data with a sampling interval of 1 s, which our single-frequency VTEC retrieval algorithm needs. The normal data interval of IGS-archived data is 30 s, which can be too coarse for single-frequency cycle slip detection.
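To illustrate why high-rate data help, the following sketch flags cycle slips from epoch-to-epoch jumps of the CMC observable. It is a deliberately simple, hypothetical detector (the authors' method is not described here): at 1 s sampling the ionospheric change between epochs is tiny, so an assumed threshold of 1 m separates slips from slow variations, whereas at 30 s sampling the ionospheric and multipath changes between epochs are larger and small slips are harder to isolate.

```python
L1_WAVELENGTH_M = 299792458.0 / 1575.42e6  # GPS L1 wavelength, about 0.19 m


def detect_slips(cmc_m, threshold_m=1.0):
    """Return indices k where |CMC[k] - CMC[k-1]| exceeds threshold_m.

    Hypothetical detector assuming 1 s sampling, so that the ionospheric and
    multipath parts of CMC change only slightly between consecutive epochs.
    Slips smaller than the threshold (about five L1 cycles for 1 m) go unnoticed.
    """
    return [k for k in range(1, len(cmc_m))
            if abs(cmc_m[k] - cmc_m[k - 1]) > threshold_m]


# Synthetic CMC series [m]: slow ionospheric drift plus a 20-cycle slip at epoch 50
cmc = [5.0 + 0.001 * k + (20 * L1_WAVELENGTH_M if k >= 50 else 0.0) for k in range(100)]
slips = detect_slips(cmc)
```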

Data from the year 2003 from 44 reference stations, shown in Fig. 1, were processed using GPS L1 code and carrier data as well as GPS L1 and L2 carrier phase data. IGS IONEX grids (combined final product) serve as reference data. Their official accuracy is stated as 2–8 TECU (http://igscb.jpl.nasa.gov/components/prods.html). More precise reference data would be desirable for our statistical analyses, but alternatives are difficult to find. Digisonde data, for instance, mainly reflect the bottomside of the ionosphere, whereas the topside still contains a significant part of the ionospheric propagation delay.

GPS processing was carried out in daily data batches. VTEC is estimated with a temporal resolution of 30 min. IONEX-derived VTEC values are available every 2 h, yielding 12 samples for comparison per day. This means that up to 4,380 differences (12 × 365) can be computed for each site, provided there are no data outages in the 2003 data set. The actual average number of samples accepted for the statistical analysis is close to 3,480. VTEC estimates with a standard deviation higher than 9.99 TECU were rejected.
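The pairing of estimates with reference values and the rejection criterion can be sketched as follows. Epochs are represented as minutes of day, and the dictionaries used as containers, like the function name, are assumptions for illustration.

```python
SIGMA_MAX_TECU = 9.99  # rejection threshold stated in the text


def comparison_differences(estimates, ionex):
    """Differences estimate - reference [TECU] at epochs present in both series.

    estimates: {minute_of_day: (vtec, sigma)} every 30 min (assumed layout)
    ionex:     {minute_of_day: vtec} every 2 h
    Estimates whose standard deviation exceeds SIGMA_MAX_TECU are rejected.
    """
    return [vtec - ionex[t]
            for t, (vtec, sigma) in sorted(estimates.items())
            if t in ionex and sigma <= SIGMA_MAX_TECU]


# One synthetic day; the 04:00 estimate is flagged with a large sigma
estimates = {t: (20.0 + 0.01 * t, 12.0 if t == 240 else 1.0) for t in range(0, 1440, 30)}
ionex = {t: 20.0 + 0.01 * t - 0.5 for t in range(0, 1440, 120)}
diffs = comparison_differences(estimates, ionex)
max_per_year = 12 * 365  # 2-hourly comparisons over 365 days
```

Of the 48 half-hourly estimates, only the 12 that coincide with IONEX epochs can be compared, and one of them is rejected here, leaving 11 differences for this day.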

Single-frequency performance analysis

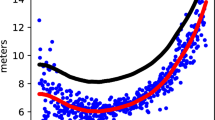

The global average results of single-frequency VTEC estimation using the two main approaches are illustrated in Fig. 2. The “old” (conventional) approach is denoted as “450 km fixed height” (abbreviated as “H450” below), whereas the “new” implementation is called “NeQuick2 mapper”.

The “bias” is the average difference over all available data for a station. The “global average” is then the average bias over all 44 stations. Similarly, the RMS is computed as the square root of the mean squared differences. In contrast, the standard deviation is bias-free, i.e. it is computed from the residuals (differences reduced by the bias) rather than from the differences themselves. Table 1 lists the numerical results, including the annual bias computed both as the arithmetic mean and as the median.
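The descriptors defined above can be written down directly. In this sketch the standard deviation uses the n − 1 normalization, which is an assumption, since the text does not state which one was used; the function names are illustrative.

```python
import statistics


def station_stats(diffs):
    """Descriptors of the VTEC differences (estimate - IONEX) of one station [TECU]."""
    n = len(diffs)
    bias = sum(diffs) / n                                          # arithmetic-mean bias
    rms = (sum(d * d for d in diffs) / n) ** 0.5                   # RMS of the differences
    std = (sum((d - bias) ** 2 for d in diffs) / (n - 1)) ** 0.5   # from bias-reduced residuals
    return {"bias": bias, "median": statistics.median(diffs), "rms": rms, "std": std}


def global_average(per_station, key):
    """Average of one descriptor over all stations."""
    return sum(s[key] for s in per_station) / len(per_station)


stats_a = station_stats([1.0, 2.0, 3.0, 4.0])
stats_b = station_stats([-1.0, 0.0, 0.5, 0.5])
```

The three quantities are linked by RMS² = bias² + (n − 1)/n · std², so a systematic bias inflates the RMS without affecting the standard deviation.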

The results are somewhat sobering: the annual biases as well as the RMS for the NeQuick2 mapping function approach are higher, although of similar magnitude. Usually, we refer to the RMS as a measure of “accuracy”. This, however, is only legitimate if the reference data are “significantly” more accurate than the data under investigation, preferably by at least one order of magnitude. In our case, the IONEX data are not entirely appropriate, because their estimated error can be up to 8 TECU, which is the same order of magnitude as the accuracy expected for our own results. This could compromise a meaningful statistical analysis. Moreover, and this is very important from our point of view, some IGS analysis centers generate IONEX maps using mapping functions similar or identical to the one we use in the conventional (old) approach. The CODE analysis center (University of Bern, Switzerland) uses its Bernese software with an identical mapping function. As a consequence, the IONEX maps could be biased in the same way as our results for the H450 method.

In essence, this means that we are not able to perform a true accuracy analysis. Instead, we should rather focus on the precision estimates, i.e. the standard deviation, which is computed from bias-reduced differences. Here, the NeQuick2 approach is slightly better, although only by 0.1 TECU (0.9 %) on global average.

Figure 3 portrays the individual standard deviations for each of the stations under investigation. The annual standard deviation of the H450 method minus that of the NeQuick2 mapper is plotted, so a positive difference indicates that the NeQuick2 approach is more precise. The standard deviation using the NeQuick2 mapper is smaller than that of the H450 mapper for 15 (34 %) stations and higher for 11 (25 %). We found no difference for 18 (41 %) stations. The classification threshold was set to 0.1 TECU: a site is considered to perform better using the NeQuick2 mapper if the difference in standard deviation exceeds this threshold, and for all stations within ±0.1 TECU we consider the precision to be identical.
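The ±0.1 TECU classification can be expressed as a small counting function. Treating a difference of exactly ±0.1 TECU as "identical" is an assumption, and the input values below are hypothetical.

```python
def classify(std_h450, std_nequick, threshold=0.1):
    """Count stations where NeQuick2 is better, identical (within ±threshold) or worse.

    A positive difference std_h450 - std_nequick means NeQuick2 is more precise.
    """
    better = same = worse = 0
    for a, b in zip(std_h450, std_nequick):
        d = a - b
        if d > threshold:
            better += 1
        elif d < -threshold:
            worse += 1
        else:
            same += 1
    return better, same, worse


counts = classify([10.0, 9.5, 11.2, 8.0], [8.2, 9.45, 11.5, 8.0])  # hypothetical [TECU]
```

Applied to the counts reported above, 15, 18 and 11 of 44 stations correspond to the rounded shares of 34 %, 41 % and 25 %.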

There are three stations that show an improvement of more than 1 TECU, namely KOKB (+1.8 TECU, Kokee Park, Waimea, USA, latitude 22.1263°N), MKEA (+1.4 TECU, Mauna Kea, USA, 19.8014°N) and MAS1 (+1.7 TECU, Maspalomas, Spain, 27.7637°N). Stations KOUR (Kourou, French Guiana, 5.2522°N) and PIMO (Quezon City, Philippines, 14.6357°N) show the strongest deterioration in our data set, −0.6 TECU. All these stations are located in the geomagnetic equatorial belt, which reaches up to the Canary Islands (MAS1). The average improvement for the 15 stations is +0.53 TECU; the average deterioration is −0.28 TECU.

The statistical analysis is carried out with respect to standard descriptors such as average bias, RMS and standard deviation, but also with respect to robust descriptors such as the median bias. Comparing the arithmetic mean with the median annual bias, we should expect similar values if the statistical distribution of the differences were symmetric and free of outliers, e.g. if it followed a Gaussian distribution. Outliers can be present because of anomalous behavior of the ionosphere, as was the case in 2003 during the October storm documented by Doherty et al. (2004). Moreover, data modeling errors induced by an insufficient horizontal interpolation function or vertical mapping function can also distort the statistical distribution.
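A toy example shows how a few storm-related outliers pull the arithmetic mean away from the median. The daily bias values are invented for illustration and do not come from the data set.

```python
import statistics


def mean_minus_median(sample):
    """Arithmetic mean minus median; close to zero for symmetric, outlier-free data."""
    return statistics.fmean(sample) - statistics.median(sample)


# Hypothetical daily biases [TECU]: symmetric scatter, then a few storm days added
quiet = [-1.0, -0.5, 0.0, 0.5, 1.0] * 10
stormy = quiet + [15.0, 20.0, 25.0]
for label, sample in (("quiet", quiet), ("with storm days", stormy)):
    print(f"{label}: mean - median = {mean_minus_median(sample):+.2f} TECU")
```

Three storm days among 53 shift the mean by more than 1 TECU while the median stays at zero.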

Figure 4 illustrates the differences between the arithmetic mean bias and the median bias for both the H450 method (red dots) and the NeQuick2 mapper (green dots). The closer these dots are to zero, the better, because we normally prefer to work with undistorted results. The green dots, i.e. the NeQuick2-related differences, are smaller than the red dots for the same site in the vast majority of cases. On global average, the difference is 0.68 TECU for the NeQuick2 mapper and 0.83 TECU for the H450 approach. It is smaller than for H450 in 36 out of 44 cases (82 %). A major reduction of the difference can be observed for certain stations such as KOKB and MAS1. It seems that the NeQuick2 mapper is able to deliver statistically slightly less distorted results.

Note that some equatorial stations feature strong deviations between median and mean bias, e.g. MALI (Malindi, Kenya, 2.9959°S), MSKU (Franceville, Gabon, 1.6316°S) and GLPS (Puerto Ayora, Galapagos Islands, Ecuador, 0.7430°S). SANT (Santiago, Chile, 33.1503°S) is actually located at a dip latitude of 18°S in the equatorial anomaly region of the American Sector. This underlines that the single-frequency single site algorithm can exhibit substantial shortcomings in regions where the ionosphere is more active or behaves anomalously compared to the mid latitudes.

Single versus dual-frequency performance

We have focused on single-frequency VTEC retrieval so far. However, the same site-specific algorithm can also be powered by dual-frequency carrier phase measurements:

$$\lambda_{L1}\phi_{L1,i} - \lambda_{L2}\phi_{L2,i} = m_i\, C \left(\frac{1}{f_{L2}^2} - \frac{1}{f_{L1}^2}\right) \mathrm{VTEC}_i + b$$

where L1/L2 designates the GPS L1/L2 carrier phase measurements (ϕ), frequencies f and wavelengths λ. Note that the bias term b now comprises the inter-frequency bias.
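A rough noise budget shows why a much larger improvement could be expected from the observations alone. The sketch computes how many metres each observable changes per TECU and propagates assumed per-epoch noise levels (0.3 m for code, 3 mm for phase; illustrative values, not taken from the text) into VTEC at the zenith.

```python
import math

C_IONO = 40.309e16                  # constant C from the text (TECU -> metres)
F_L1, F_L2 = 1575.42e6, 1227.60e6   # GPS carrier frequencies [Hz]

# Metres of observable per TECU of slant TEC
k_cmc = 2.0 * C_IONO / F_L1**2                   # single-frequency code minus carrier
k_gf = C_IONO * (1.0 / F_L2**2 - 1.0 / F_L1**2)  # L1 minus L2 carrier phases

# Assumed, illustrative per-epoch noise levels [m]
sigma_code_m = 0.30
sigma_phase_m = 0.003

# Per-epoch VTEC noise [TECU] at the zenith (mapping function = 1)
sigma_vtec_sf = math.hypot(sigma_code_m, sigma_phase_m) / k_cmc
sigma_vtec_df = math.sqrt(2.0) * sigma_phase_m / k_gf
```

Under these assumptions the phase-only combination is more than an order of magnitude less noisy per epoch (roughly 0.04 versus 0.9 TECU). Averaging over many epochs reduces the code noise considerably, while the data modeling errors remain unaffected.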

The modification of the existing single-frequency algorithm is straightforward. In this section, we investigate whether the use of dual-frequency carrier phase observations yields more precise results. Undoubtedly, the precision of the observations improves substantially. However, we have already highlighted the negative impact of the data modeling errors that can compromise VTEC accuracy. For this reason, we do not expect a drastic improvement of the results. We restrict the statistical analysis to the NeQuick2 mapper.

From Table 2 and Fig. 5, we can see that the systematic error, the RMS and the standard deviation are lower for the dual-frequency carrier-phase-only processing method than for the single-frequency code/carrier combination. However, the level of improvement is relatively low. Given the noise level of carrier phases versus code ranges, we might expect a larger improvement. Once again, this emphasizes that data modeling errors play a very important role in our site-specific VTEC estimation algorithm. The standard deviation is 2 % smaller for the dual-frequency results on global average, which corresponds to only 0.2 TECU. The RMS improves by 2.6 % (0.3 TECU).

Looking at the RMS and standard deviations of the individual stations plotted in Fig. 6, we see that the dual-frequency method shows an improved standard deviation in 38 out of 44 cases (86 %). This value is slightly higher with respect to the RMS (40 sites, 91 %). The strongest improvement is visible for SANT (+1.7 TECU; RMS and standard deviation overlap in the plot), KOKB (+1.3/1.1 TECU) and YELL (+1.1/0.9 TECU, Yellowknife, Canada, 62.4809°N). Five stations show a reduction in RMS or standard deviation of about 1 TECU or more. Deterioration is present for a few sites, the most pronounced being ARTU (−2.6/3.0 TECU, Arti, Russian Federation, 56.4298°N). A closer look at the results for this site showed that the L1 results were rejected during two longer time windows (days of year 1–43 and 248–273), whereas dual-frequency results were available (with a few outages only), but at a relatively high noise level for the first time window. This explains why ARTU appears to be more accurate in single-frequency mode.

We would like to stress that the statistical basis for the comparison between the single- and the dual-frequency method is not identical. As mentioned above, the rejection criterion applied to the empirical standard deviation of the VTEC estimates is 9.99 TECU in both cases. Because the dual-frequency algorithm is more precise, more results pass the outlier detector, so the database for that method is larger. This is illustrated in Fig. 7. The plotted percentage p is defined such that

$$n_1 \left(1 + \frac{p}{100}\right) = n_2$$

is fulfilled, where n_1 is the number of samples, i.e. result records, available from the single-frequency algorithm and n_2 is that of the dual-frequency algorithm. This percentage is usually higher than 5 %, reaches 16 % for QUIN (Quincy, USA, 39.9746°N) and a maximum of 29 % in the case of MSKU.
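As a worked example of the definition of p, the sketch below assumes hypothetical counts of 3,000 single-frequency and 3,480 dual-frequency result records for one station; the function name is illustrative.

```python
def extra_sample_percentage(n_single, n_dual):
    """Percentage p such that n_single * (1 + p/100) == n_dual."""
    return 100.0 * (n_dual - n_single) / n_single


p = extra_sample_percentage(3000, 3480)  # hypothetical counts for one station
```

With these counts the dual-frequency database is 16 % larger, the value reported above for QUIN.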

Summary and conclusions

Single-frequency single-site VTEC estimation requires some approximations of reality. In our implementation, these comprise the use of a single-layer model of the ionosphere with an associated mapping function and the use of a low-order polynomial for horizontal interpolation of VTEC. From the results obtained so far, we deduce that this method is suitable for ionosphere monitoring at mid- and high-latitude sites. We do not recommend this method for sites located within ±20° of the magnetic equator, because the data modeling errors grow considerably there.

In an effort to improve the algorithm, we implemented the NeQuick2 model of the ionosphere and used it to derive improved mapping functions. This is a straightforward method. Because the NeQuick model is a ray tracer, our hope was that it would supply improved mapping function values. It should be able to take seasonal, diurnal and spatial variations into account. Consequently, the mapping function calibration recommended for the old implementation is no longer needed.

We must admit that the level of improvement in precision is low, although the standard deviation formally improves for 34 % of the stations. The absolute level of improvement for these stations is around 0.5 TECU. We found no improvement for 41 % and deterioration for 25 % of the sites. Taking the considerable processing load into consideration, it remains questionable whether the approach outlined in this study is worth implementing in an operational version of the algorithm. The NeQuick ray tracing operations are considerably more time-consuming than the traditional mapping function computation.

Second, we investigated the level of improvement when using dual-frequency GPS carrier phase measurements in the single-site VTEC estimator versus the single-frequency code/carrier VTEC retrieval algorithm. As expected, an improvement is observed for 86 % of the sites under investigation. However, the level of improvement is only 2.6 % (0.3 TECU) in RMS. This underlines that data modeling errors are of major concern for the single-site algorithm, whereas observation accuracy appears to be of minor concern.

Abbreviations

- API: Application programming interface
- CMC: Code-minus-carrier (linear combination)
- DGR: Di Giovanni and Radicella model (of the ionosphere)
- ICTP: International Centre for Theoretical Physics
- STEC: Slant total electron content
- TEC: Total electron content
- VTEC: Vertical total electron content

References

Cohen CE, Pervan B, Parkinson BW (1992) Estimation of absolute ionospheric delay exclusively through single-frequency GPS measurements. In: Proceedings ION GPS 1992, The Institute of Navigation, Albuquerque, NM, USA, September 16–18, pp 325–329

Coïsson P, Radicella SM, Nava B, Leitinger R (2006) Topside electron density in IRI and NeQuick: features and limitations. Adv Space Res 37(5):937–942

Coïsson P, Nava B, Radicella SM, Oladipo OA, Adeniyi JO, Krishna SG, Rama Rao PVS, Ravindran S (2008a) NeQuick bottomside analysis at low latitude. J Atmos Solar Terr Phys 70(15):1911–1918

Coïsson P, Radicella SM, Nava B, Leitinger R (2008b) Low and equatorial latitudes topside in NeQuick. J Atmos Solar Terr Phys 70(6):901–906

Dach R, Hugentobler U, Fridez P, Meindl M (2007) Bernese GPS software version 5.0 (user manual of the Bernese GPS software version 5.0). AIUB—Astronomical Institute, University of Bern, Switzerland

Di Giovanni G, Radicella SM (1990) An analytical model of the electron density profile in the ionosphere. Adv Space Res 10(11):27–30

Doherty P, Coster AJ, Murtagh W (2004) Space weather effects of October–November 2003. GPS Solut 8(4):267–271. doi:10.1007/s10291-004-0109-3

European Union (2010) European GNSS (Galileo) Open Service Signal-in-Space Interface Control Document (OS SIS ICD), Issue 1.1, September 2010. Accessible via http://ec.europa.eu/enterprise/policies/satnav/galileo/open-service/index_en.htm

Hochegger G, Nava B, Radicella SM, Leitinger R (2000) A family of ionospheric models for different uses. Phys Chem Earth Part C Solar Terr Planet Sci 25(4):307–310

Hofmann-Wellenhof B, Lichtenegger H, Collins J (1993) GPS: theory and practice, 2nd edn. Springer, Wien

Issler JL, Ries L, Bourgeade JM, Lestarquit L, Macabiau C (2004) Probabilistic approach of frequency diversity as interference mitigation means. In: Proceedings ION GPS 2004, The Institute of Navigation, Long Beach, September 21–24, pp 2136–2145

Leitinger R, Zhang ML, Radicella SM (2005) An improved bottomside for the ionospheric electron density model NeQuick. Ann Geophys 48(3):525–534

Lestarquit L, Suard N, Issler JL (1996) Determination of the ionospheric error using only L1 frequency GPS receiver. In: Proceedings ION GPS-96, The Institute of Navigation, Kansas City, September 17–20

Mayer C, Jakowski N, Beckheinrich J, Engler E (2008) Mitigation of ionospheric range error in single-frequency GNSS applications. In: Proceedings ION GPS 2008, The Institute of Navigation, Savannah, Georgia, September 16–19, pp 2370–2375

Nava B, Coïsson P, Radicella SM (2008) A new version of the NeQuick ionosphere electron density model. J Atmos Sol-Terr Phys 70(15):1856–1862. doi:10.1016/j.jastp.2008.01.015

Petit G, Luzum B (eds) (2010) IERS Conventions (2010). IERS Technical Note No. 36, International Earth Rotation and Reference Systems Service (IERS), Verlag des Bundesamts für Kartographie und Geodäsie, Frankfurt am Main

Qiu W, Lachapelle G, Cannon ME (1994) Ionospheric Effect Modelling for Single Frequency GPS Users. In: Proceedings ION NTM 1994, The Institute of Navigation, San Diego, January 1994, pp 911–919

Radicella SM (2009) The NeQuick model genesis, uses and evolution. Ann Geophys 52(3):417–422

Radicella SM, Leitinger R (2001) The evolution of the DGR approach to model electron density profiles. Adv Space Res 27(1):35–40

Radicella SM, Zhang ML (1995) The improved DGR analytical model of electron density height profile and total electron content in the ionosphere. Ann Geofis XXXVIII(1):35–41

Schüler T, Oladipo OA (2012) Single-frequency GNSS ionospheric delay estimation—VTEC monitoring with GPS, GALILEO and COMPASS. 1st edition, Lulu Press, ISBN 978-1-4716-4225-8

Simsky A, Mertens D, Sleewaegen JM, De Wilde W, Hollreiser M, Crisci M (2008) Multipath and tracking performance of Galileo ranging signals transmitted by GIOVE-B. In: Proceedings ION GNSS 2008, The Institute of Navigation, Savannah, September 2008, pp 1525–1536

Stöber C, Anghileri M, Sicramaz Ayaz A, Dötterböck D, Krämer I, Kropp V, Won JH, Eissfeller B, Sanroma-Güixens D, Pany T (2010) ipexSR: A real-time multi-frequency software GNSS receiver. In: Proceedings of the 52nd International Symposium ELMAR-2010 IEEE, September 15–17, Zadar, Croatia

Xia R (1992) Determination of absolute ionospheric error using a single frequency GPS receiver. In: Proceedings ION GPS 1992, The Institute of Navigation, Albuquerque, 16–18 September, pp 483–490

Acknowledgments

The authors would like to thank the IGS (International GNSS Service) community for granting access to the high-rate dual-frequency GPS data of the IGS LEO network used in this study. We also thank NASA and the National Space Science Data Center (NSSDC) for making the IRI-2007 online model available, and the Aeronomy and Radiopropagation Laboratory of the Abdus Salam International Centre for Theoretical Physics (ICTP, Trieste, Italy) for making the NeQuick code openly available for scientific use. Trademarks possibly mentioned in the text are the property of their respective owners. Finally, the financial support of the European Union and the European GNSS Agency (GSA) in the framework of the FP7 research grant “SX5 – Scientific Service Support based on Galileo E5 Receivers” is highly appreciated.

Cite this article

Schüler, T., Oladipo, O.A. Single-frequency single-site VTEC retrieval using the NeQuick2 ray tracer for obliquity factor determination. GPS Solut 18, 115–122 (2014). https://doi.org/10.1007/s10291-013-0315-y

DOI: https://doi.org/10.1007/s10291-013-0315-y