Abstract

The complexity of new information technologies (IT) may limit the access of elderly people to the information society, exacerbating what is known as “the digital divide,” as they appear to be too challenging for elderly citizens regardless of the integrity of their cognitive status. This study is an attempt to clarify how some cognitive functions (such as attention or verbal memory) may determine the interaction of cognitively impaired elderly people with technology. Twenty participants ranging from mild cognitive impairment to moderate Alzheimer’s disease were assessed by means of a neuropsychological and functional battery and were asked to follow simple commands from an avatar appearing on a TV by means of a remote control, such as asking the participant to confirm their presence or to respond Yes/No to a proposal to see a TV program. The number of correct answers and command repetitions required for the user to respond were registered. The results show that participants with a better cognitive and functional state in specific tests show a significantly better performance in the TV task. The derived conclusion is that neuropsychological assessment may be used as a useful complementary tool for assistive technology developers in the adaptation of IT to the elderly with different cognitive and functional profiles. Further studies with larger samples are required to determine to what extent cognitive functions can actually predict older users’ interaction with technology.


1 Introduction

Patients in the mild to moderate stages of Alzheimer’s disease typically present cognitive and functional impairments affecting memory, concentration, and learning [1]. This decline of functions, together with the lack of information technology (IT) skills among people aged 60 and over, poses a barrier to exploiting the opportunities offered by technology. Hence, new technologies may exacerbate the digital divide if certain of their properties are too challenging for elderly citizens, regardless of the integrity of their cognitive status [2]. Indeed, some studies [3, 4] show that, when learning to use a computer, older adults take longer to master the system, make more errors, and require more help than younger people. Since software applications tend to increase in complexity over time, they may overload the processing capacity of elderly people. However, technology has also been identified as a tool that can be used to promote independent living, improve the safety and autonomy of people with dementia, and support their quality of life [5].

People with dementia are not used to learning how to operate new devices. Limitations in knowledge and understanding of the technology combine with limitations in communication between the user and the technology [6, 7]. However, the ACTION participatory design model [8] (which comprises the identification of user needs, early program development, testing, and refining) holds that people with dementia are able to enjoy computer training sessions and gain considerable satisfaction from learning a new skill that they previously thought was not feasible.

Also, family caregivers of patients suffering from Alzheimer’s disease spend almost all their time caring for their relatives [8–13]. There are many technological solutions that could assist in the care of patients with Alzheimer’s at home [5, 7, 14, 15]. However, caregivers usually fall into the same age range (i.e., elderly spouses or daughters taking care of husbands or parents with dementia), so the barriers they face are the same as for other elderly people.

In this context, the i2home project, funded by the European Commission 6th Framework Program, aims to build devices that let elderly and disabled people use domestic electronic and communication devices, based on industry standards. This means that devices developed in the project will follow the same standards used in the electric and electronic appliance industry (for example, standards for ovens, washers, dishwashers, and air conditioners), in order to facilitate the future integration of technologies and devices in the homes of these people. In other words, if an older person or a person with a disability wants to add new electric and electronic devices to their living environment in the future, they can do so easily, without making new physical or technological adaptations to their home, without buying a whole new set of devices, and without learning new and complex ways to use the electronic devices they will have at home. The scope of i2home is to make devices and appliances more accessible and to provide intuitive interaction (understood here as the “interaction based on the use of knowledge that people have gained from other products and/or experiences of their past” [16]) for people suffering from different degrees of cognitive decline, from mild cognitive impairment (MCI) to moderate Alzheimer’s disease.

Taking into account the lack of IT skills and the cognitive and functional impairments, the TV and the remote control were selected as user interfaces in the i2home project, because they are previously learned interfaces. A total of 98.3 % of the elderly from 60 to over 80 years old possess and regularly use a TV set [17], which is why TV sets are a very well-suited technological platform to improve the quality of life of elderly people through tailored information and communication technology (ICT)-based applications.

The particularity of the i2home interface for elderly patients suffering from cognitive decline is the inclusion of an avatar with the ultimate aim of giving specific commands that help in the supervision of the end user with dementia. An avatar is a life-like simulation of a virtual assistant generated through computer graphics, and previous studies performed by the authors’ research group [18–20] have indicated that interaction between avatars and patients with Alzheimer’s disease is possible. Though existing recent literature points to a greater differentiation between avatars and human faces relying on particular features of the face [21, 22], differential responses to human faces versus virtual avatar faces will not be presented here; facial emotional expressions of the avatar used (if compared to a real human face) were minimal, as can be seen in Fig. 1, with just a brief and precise movement of the lips when talking.

In terms of technical parameters of the avatar, as described in [17], an external application renders the avatar using OpenSceneGraph for graphical output and Loquendo 7 for speech output. The raw avatar video is supplied to FFmpeg for real-time encoding to MPEG-TS. An HTTP-streaming server conveys the video to the set-top box (STB), which is sufficiently reliable for cable home networks. The 2D GUI is also created on the IS using a VNC X-server. Widgets are created dynamically within the IS module using Gtk#. A VNC client in the STB plugin receives the GUI from the IS and renders it to the frame buffer of the Dreambox. It also transfers user input back to the IS. A Weemote® dV programmable remote control was initially considered for user input, though it was replaced by a simplified remote control with basic commands (YES, NO, +, −, arrow up, arrow down). The switch between the avatar, recorded game, and on-going TV shows was quite abrupt in order to catch users’ attention.

This study aims to evaluate which cognitive functions may be involved in correct interaction with the avatar; more specifically, to assess whether cognitive and functional measures are related to the interactions shown by users with different degrees of cognitive decline, from MCI to moderate Alzheimer’s disease.

2 Methods

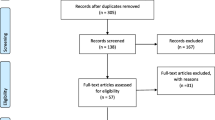

2.1 Participants

The sample was composed of 20 participants, 5 male and 15 female, from MCI to moderate Alzheimer’s disease (diagnosed according to NINCDS-ADRDA criteria), ranging from 3 to 5 in the Global Deterioration Scale (GDS) [23]. More specifically, ten subjects scored a GDS = 3, eight scored GDS = 4, and two scored GDS = 5. The average age of the group was 82.43 (SD = 7.65). In terms of education levels, 66.7 % had completed primary studies (i.e., 8 years of education, or schooling until the age of 14), 14.3 % had completed professional training, and the rest had not completed primary studies; among these, 9.5 % read and wrote normally, 4.8 % read and wrote with difficulty, and 4.8 % were illiterate. All of them were, at the time of the evaluation, attending a day care centre, and all had agreed to participate by means of a signed consent form.

2.2 Procedures

Prior to presenting the avatar to the subjects, they were assessed by means of a neuropsychological screening battery including the following:

- MiniExamen Cognoscitivo (MEC): adaptation of the Mini-Mental State Examination (MMSE) [24] to the Spanish population [25].

- GDS: Global Deterioration Scale [23]. It is a scale for the assessment of primary degenerative dementia and delineation of its stages.

- RAVLT: Rey Auditory Verbal Learning Test validated for the Spanish population [26]. This is a brief, easily administered measure that assesses immediate memory span, new learning, susceptibility to interference, and recognition memory [27], by means of a list of words read aloud for five consecutive trials; after free recall of each trial, a free recall of an interference list occurs; afterward, a delayed recall task and a subsequent recognition task of the first list take place.

- Barthel ADL Index Scale, Spanish version [28]. It is a scale used to measure performance in basic activities of daily living (ADL).

- Digit span (DS) subtest of the Wechsler Adult Intelligence Scale, Third Edition, adapted to the Spanish population [29]. It consists of two parts and requires the subject to repeat digits forward and in reverse order.

- Boston Diagnostic Aphasia Examination (BDAE): a test for the assessment and diagnosis of aphasia [30, 31]. It is composed of 10 subtests, each consisting of different items complemented by 16 images for its application. For the assessment of the sample, the “Commands” category of the auditory comprehension subtest was used, which assesses the comprehension of auditorily presented simple, semi-complex, and complex commands.

In addition to the previous evaluation protocol, simple tests such as a name writing task and a color identification test were created ad hoc and administered in order to measure whether the identification of the colors and symbols that appear on the remote control could affect the interaction with the avatar. The same colors and symbols included on the remote control were printed on a separate sheet of paper, and the participants had to point with their finger to each one of them when asked. That is, the symbols evaluated were those appearing on the simplified remote control: +, −, YES, NO, arrow up, arrow down. These would later show up as labels on the remote control, not on the TV screen. Moreover, the color identification test was administered to determine whether the person had any kind of visual, attentional, or cognitive impairment that would prevent them from following basic commands and differentiating basic stimuli when an avatar is presented on a TV set. No personalization of the avatar was considered at this stage of the project in terms of changing hair/eyes/clothes, but this suggestion will be considered for future developments.

Afterward, in a different session, each subject was positioned in front of the TV set. On a table next to the subject, a piece of paper, a pen, and a remote control (RC) with 2 buttons (labeled YES and NO) were placed.

In the application, the assistant explained the following instructions prior to the avatar’s presentation on the TV: “We are going to watch a TV program. At any moment, while we are watching TV, a girl will appear on the screen and will ask you some questions which you will have to answer using the remote control. Are you ready? Let’s turn the TV on. Is the TV loud enough for you?” After the volume adjustment, a TV program was presented.

The procedure started with the subject watching a TV program, and the following sequence of interactions with the avatar was required from the user:

- Presence confirmation of the avatar’s 1st presentation. After the subject had been watching the TV for some time, the screen turned black, and an avatar appeared on the screen saying “Mr./Mrs. [name]… Are you there? If you are there, press YES on the remote control.” If the subject did not produce any response with the RC (neither YES nor NO), the avatar’s speech was repeated a second time: “Mr./Mrs. [name]… Are you there? If you are there, press YES on the remote control.”

- See-a-program proposal. Whether the person confirmed their presence to the avatar or gave no answer with the remote control, the avatar continued as follows: “Mr./Mrs. [name], a Basque “pelota” match is going to start. If you want to watch it, press YES on the remote control.” If the subject answered YES, the avatar disappeared and a Basque “pelota” match started. If the subject answered NO, the avatar disappeared and the previous TV program continued. Again, if the subject did not produce any response with the RC (neither YES nor NO), the avatar’s speech was repeated: “Mr./Mrs. [name], a Basque “pelota” match is going to start. If you want to watch it, press YES on the remote control.” If no answer was given at this point, the previous program appeared on the TV screen again as if the answer were “NO” (but the answer was registered as “no response”).

- Presence confirmation of the avatar’s 2nd presentation. After the subject had been watching the TV for some time, the screen turned black and the avatar reappeared, asking the subject for a presence confirmation.

- Write-your-name proposal. “Mr./Mrs. [name], write your name on the piece of paper that you have in front of you.” After a while, the avatar would ask “Mr./Mrs. [name] have you already finished? If you have already finished, press YES on the remote control.” After the subject’s answer with the RC, the avatar said “Thank you very much for your cooperation. See you later!” and the application finished.
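The prompt-and-repeat logic shared by these interactions can be sketched in a few lines of Python. This is only an illustrative sketch, not the i2home implementation: the names `run_prompt`, `PromptResult`, and `scripted`, and the idea of a `read_button` callback that returns `None` when no button is pressed, are assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Assumption: each prompt is spoken once and repeated once if unanswered.
MAX_PRESENTATIONS = 2


@dataclass
class PromptResult:
    answer: Optional[str]  # "YES", "NO", or None when no button was pressed
    timing: str            # "immediate", "delayed", or "no response"
    repetitions: int       # how many times the prompt had to be repeated


def run_prompt(read_button: Callable[[], Optional[str]]) -> PromptResult:
    """Present one avatar prompt, repeating it once if no button is pressed."""
    for attempt in range(MAX_PRESENTATIONS):
        # Hypothetical callback: returns "YES", "NO", or None (no response).
        answer = read_button()
        if answer in ("YES", "NO"):
            timing = "immediate" if attempt == 0 else "delayed"
            return PromptResult(answer, timing, repetitions=attempt)
    # No button pressed after the repetition: registered as "no response".
    return PromptResult(None, "no response", repetitions=MAX_PRESENTATIONS - 1)


def scripted(responses):
    """Build a fake read_button that replays a fixed list of responses."""
    it = iter(responses)
    return lambda: next(it, None)


first = run_prompt(scripted(["YES"]))        # answered at the first attempt
second = run_prompt(scripted([None, "NO"]))  # answered after one repetition
third = run_prompt(scripted([None, None]))   # never answered
```

Recording the timing label and the repetition count separately mirrors the two outcome measures (moment of response and repetitions required) that are analyzed in the results.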

3 Results

3.1 Neuropsychological evaluation

Subjects showed a mild to moderate cognitive impairment as measured by the MEC and GDS. In addition, their memory processes, as illustrated by their performance in the RAVLT, were mildly impaired when faced with novel situations (trial 1), when it came to encoding and free retrieval (trial 5), as well as in recognition processes (false positives). Attentional processes also showed low scores when measured with direct and inverse digit span. The group also showed a mild functional dependency as measured with the Barthel Scale. All these results are summarized in Table 1.

3.2 Interactions with the avatar

For the purpose of describing the interactions between subjects and the avatar, frequencies of correct answers and repetitions required for a user to respond (“immediate,” i.e., the user responds at the first attempt, vs. “delayed,” i.e., the avatar had to repeat the question for the user to respond) were registered, and verbal responses were analyzed.

3.2.1 First i2home trial

On the first trial in which participants were presented with the i2home avatar, 100 % of the users confirmed their presence to the avatar using the remote control, but only 86.7 % agreed to watch the “pelota” match, and only 80 % confirmed having written their names on the paper. Hence, a decrease in RC use was observed with each subsequent task. Table 2 shows the moment of response (immediate vs. delayed).

It must be highlighted that, even if no directive was given with respect to giving a verbal response to the avatar, 80 % of the sample responded verbally to the avatar, which could reflect to some extent that verbal response is a more natural way of interaction than the use of a remote control for people with mild to moderate cognitive impairment. The relevance of these answers was taken into account, but they were not included in the analyses, since no speech recognition interface was being evaluated. Only answers using the remote control were analyzed.

3.2.2 Second i2home trial

When faced with the i2home task in the second administration, a mean of 3 weeks after the first exposure to the avatar, the sample of participants was reduced to 13, due to severe health problems of 6 and further hospitalization of 2 participants who took part in the first application. After a normal distribution was discarded for a large part of the variables, a Wilcoxon test was used to determine whether there were differences between the first and second application results.

For this second application, for which the instructions were reduced to the sentence “we are going to watch a TV program,” most of the users stated that they remembered having done a task like this before. In this context, the remote control was used by 84.6 % of the sample (n = 11) to confirm their presence at the avatar’s first presentation, 92.3 % (n = 12) at the second presentation and to confirm having written their names on the paper, and 76.9 % (n = 10) to accept watching a “pelota” match. Differences between the first and second application results regarding the use of the remote control were not statistically significant (i.e., the use of the remote control was similar in both i2home trials).

However, a decrease in verbal response to the avatar must be highlighted. On this second trial with the i2home avatar, 69.2 % (n = 9) gave a verbal response to the avatar the first time it appeared, and 76.9 % (n = 10) when it appeared for the second time. Moreover, the verbal response to the proposal to watch the “pelota” match was only 30.8 % (n = 4). A Wilcoxon test performed to examine differences between the first and second application results showed that these differences were close to being statistically significant (Z = −1.89, p = .059).
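For readers unfamiliar with the Wilcoxon signed-rank test used for these paired comparisons, the following is a minimal pure-Python sketch of the statistic with a tie-corrected normal approximation. It is not the procedure or software used in the study, and the sign convention for Z and the absence of a continuity correction are assumptions. The function name `wilcoxon_signed_rank` and the 0/1 data in `trial_1` and `trial_2` are hypothetical and are not the study's data.

```python
from math import sqrt


def wilcoxon_signed_rank(first, second):
    """Return (W_plus, W_minus, z) for paired samples.

    Zero differences are dropped, tied absolute differences receive
    average ranks, and z uses the tie-corrected normal approximation
    (no continuity correction).
    """
    diffs = [b - a for a, b in zip(first, second) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within tie groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        t = j - i + 1                 # size of this tie group
        tie_term += t ** 3 - t
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / sqrt(variance)
    return w_plus, w_minus, z


# Hypothetical data: 1 = gave a verbal response, 0 = did not.
trial_1 = [1, 1, 1, 1, 1, 0, 1, 1]
trial_2 = [1, 0, 0, 1, 0, 0, 1, 0]
w_plus, w_minus, z = wilcoxon_signed_rank(trial_1, trial_2)
```

With binary outcomes such as the presence or absence of a verbal response, every nonzero difference has the same magnitude, so the tie correction in the variance matters a great deal; this is why it is included even in such a short sketch.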

There is a plausible explanation for these results. First, regarding general performance, it is possible that the familiarity with the task stated by most of the participants oriented their answers to the use of the remote control rather than to a verbal response, which had no effect in practical terms (i.e., the i2home application responded to the RC answers, not to verbal answers). Moreover, a verbal response to the avatar’s question “Are you there?” seems considerably more natural than a verbal response to “A ‘pelota’ match is going to start. If you want to watch it, please press YES on your remote control,” where the avatar asks the user no direct question. It is very likely that an instruction phrased as a question would have elicited verbal responses at a rate similar to that of the first i2home avatar trial. It remains to be determined in the future whether attention/executive disruptions in mild to moderate Alzheimer patients make them less likely to inhibit verbal answers when asked direct questions, regardless of whether these questions are asked by a human being (i.e., a caregiver) or by a virtual avatar on the screen of a TV set.

3.3 Correlations between neuropsychological testing and human–avatar interactions

On the basis of the current results, statistical analyses were conducted to find out whether cognitive and functional measures could relate in any way to the performance shown by the subjects in their interactions with the avatar, both in the first i2home trial (i.e., the one with more elaborate instructions given to the user) and in the second trial (i.e., the one with the simple instruction “we are going to watch a TV program”).

3.3.1 Correlations for the first i2home trial

After a normal distribution was discarded for a large part of the variables by means of a Kolmogorov–Smirnov test, Spearman’s rho correlations were calculated, as shown in Table 3.
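As a reminder of what Spearman's rho measures, the coefficient is the Pearson correlation computed on the ranks of the two variables, with tied values sharing their average rank. The sketch below is a minimal pure-Python illustration under that definition; the function names and the data in `barthel` and `repetitions` are hypothetical and are not the study's data.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the ranks of x and y.

    Undefined (division by zero) if either variable is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical data: Barthel scores and repetitions needed per participant.
barthel = [60, 75, 80, 90, 95, 100]
repetitions = [1, 1, 1, 0, 0, 0]
rho = spearman_rho(barthel, repetitions)  # negative: higher score, fewer repetitions
```

Because it works on ranks, the coefficient does not require normally distributed variables, which is the reason it is preferred here over Pearson's r.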

These results show that the higher the Barthel score (that is, the higher the functional independence), the fewer repetitions are needed to answer the avatar. Also, the better the performance in an attentional task such as the inverse digit span, the shorter the time needed to respond to a command from the avatar, such as the “see-a-program” proposal. Moreover, the relationship between the production of false negative responses in the RAVLT recognition trial and the time needed to confirm presence at the avatar’s 1st presentation may be a sign of a distractibility component that prevents the subject from immediately answering such a simple question as the confirmation of their presence. Finally, the greater the ability to discriminate between relevant and irrelevant stimuli (RAVLT recognition trial), the faster the response to questions asked by the avatar, showing a higher level of sustained attention and concentration.

3.3.2 Correlations for the second i2home trial

As for the results from the first trial, after discarding a normal distribution for a great part of the variables, Spearman’s rho correlations were calculated.

As can be seen in Table 4, in this particular trial, the higher the general cognitive performance (measured by the MEC test, the Spanish adaptation of the MMSE), the less time was needed to react to the avatar.

With regard to the Barthel index for functional independence, a very strong negative correlation was found between Barthel index and the emission of a verbal response both to the avatar’s first presentation (ρ (11) = −.716, p < .01) and second presentation (ρ (11) = −.735, p < .01), showing that a higher functional independence was related to a higher probability for the participant to focus on the specific instructions of the avatar (i.e., use of the remote control). Moreover, as shown in Table 4, functional independence was associated with fewer repetitions and less time needed to give appropriate responses to the avatar’s proposal to watch a “pelota” match.

One interesting result was the correlation between false negative answers to the RAVLT and the presence of verbal responses to confirm presence to the avatar’s second presentation (ρ (11) = .670, p < .05). It is difficult to find an adequate interpretation for this result, since in the second i2home trial, some learning effect from the first trial was expected. One possibility is that verbal response may constitute a sign of a deficit of attention or executive processes, since there are no instructions to give a verbal response. Thus, emission of false negatives in RAVLT, which may be described as a response to relevant or familiar information incorrectly interpreted as irrelevant or unknown, may relate to further attention and memory problems. Maybe, after two applications of the i2home system and the users’ familiarity with the remote control, still giving a verbal response is less related to the users’ spontaneity and more related to cognitive problems. However, this is an issue that requires further research.

In line with this possibility that verbal response was more a sign of cognitive problems than a sign of spontaneity, RAVLT recognition trial showed that the better the recognition abilities, the shorter the time and the fewer the repetitions needed to give an appropriate response to requirements from the avatar.

However, the clearest differences for this second application derived from the initial screening tests administered, such as the name writing test and the color identification test, both administered in order to examine users’ abilities to follow simple commands, as stated in the Procedures section. A good performance in the name writing task meant a better focus on the use of the remote control, faster responses, and fewer repetitions (to confirm presence at the avatar’s second presentation). Finally, the color identification test was the measure that correlated best with the participants’ interactions with the avatar.

In contrast, the BDAE showed no statistically significant correlations with the interactions between the users and the avatar. It is likely that the simplicity of the required interactions with the avatar, which did not demand verbal expression and did not seem to affect verbal comprehension, may explain the fact that this test is not a reliable predictor of user interaction in these specific trials. However, to reach more reliable conclusions, further studies with larger samples including aphasic patients would be required.

4 Conclusions

Conclusions derived from this research show, firstly, that functional measures (such as the Barthel ADL index) can relate to the expected number of trials needed by a person to interact with an avatar. Secondly, cognitive measures, especially those related to attentional and processing speed domains (i.e., digit span) and to discrimination between relevant and irrelevant information (i.e., RAVLT), can relate to the latency of response that the subjects show when they respond to the avatar. A similar result was reported by Czaja et al. [32], who found that successful performance on computer-based information search and retrieval tasks was related to attentional and processing speed cognitive functions. However, to the authors’ knowledge, the study presented in this paper is one of the very few studies which address the usefulness of neuropsychological measures as complementary tools for personalizing interfaces for users with mild to moderate cognitive decline.

It is likely that even the simplest brief cognitive screening tools (i.e., name writing task, color identification) may be a shortcut for anticipating how a person with mild to moderate cognitive impairment will interact with technological devices, such as the one proposed in the i2home project. In other words, it is very likely that cognitive and functional measures may help to predict users’ expected response to the avatar if further trials with larger samples are performed, as correlation results with the small sample presented here point to this trend. Also, their cognitive status may explain how much time that interaction will take. It is clear that further research is required to establish whether these cognitive and functional measures could become by themselves predictors of the performance of the elderly with avatars. The size of the sample limits the extrapolation of the results, but still leaves the door open to using cognitive and functional measures as guidance for a better adaptation of ICTs to elderly people. As Slegers et al. [3] explain, knowing which cognitive abilities lead to problems with technology will make it possible to modify devices to accommodate older users’ capacities and, as a consequence, improve their efficiency. In this sense, it can be very useful for technology developers to get a quick idea of whether their end users will be able to interact with the technology they are developing, even before any prototype testing is carried out. Even for elderly people with no cognitive impairment, cognitive skills such as speed of information processing, psychomotor processes, working memory, and mental flexibility seem to be critical when using complex technological systems [3].

Also, cognitive and functional statuses observed in the users by means of neuropsychological testing may accurately orient technology developers in the adaptation of their interfaces in a more efficient way. A recent review [5] shows that research on the role of technology in dementia care is still in its infancy, but the aim to integrate technology in elderly people’s lives with different cognitive status (from normal to cognitive impairment) in order to maintain their quality of life and their autonomy is worthy of intensive efforts in this area.

One clear limitation of this study is the sample size. This must be seen as a preliminary study intended to validate the concept of presenting an innovative feature (i.e., the avatar) in a classical user interface (TV set) with a classical way to interact with it (i.e., remote control) that is familiar to all the users involved regardless of their cognitive status. Once it is confirmed that the users’ interaction with the avatar is as natural as if they were answering a real person, it is of course necessary to perform further studies with larger samples and control groups to validate this concept and allow generalizability of results to different populations and to commands of increasing complexity. Also, these larger studies are required to determine to what extent cognitive functions can actually predict older users’ interaction with technology.

In this regard, the results of the study could not confirm the findings of Blackler et al. [33] on the effect of familiarity with technology, since it was assumed that a TV set with a remote control was familiar to all the users involved in the study. Not having considered the effect of familiarity may have confounded the results, and the possibility remains that some of the observed effects attributed to cognitive abilities (mainly, attention and memory) may in part be due to the effects of familiarity.

The effects of previous experience and openness to technology have been clearly documented [34, 35] and should be taken into account in future research that overcomes the limitations affecting the current study. Such an in-depth study will of course benefit from the work done so far with regard to the use of cognitive and functional measures, which will help in the adaptation and simplification of user interfaces to users’ abilities. As stated by Gudur et al. [36, 37], keeping the interfaces clean and simple with minimal distractions to reduce the use of limited attention resources may be most helpful for older users, especially for those with mild to moderate decline such as the ones in this study. It is hoped that the work presented here will stimulate further research in this area.

References

Salmon, D.P., Bondi, M.W.: Neuropsychological assessment of dementia. Ann. Rev. Psychol. 60, 1–26 (2009)

Wu, Y., Van Slyke, C.: Interface complexity and elderly users: revisited. In: Proceedings of the Eighth Annual Conference of The Southern Association For Information Systems (SAIS 2005), Savannah, Georgia (2005)

Slegers, K., Van Boxtel, M.P., Jolles, J.: The efficiency of using everyday technological devices by older adults: the role of cognitive functions. Ageing Soc. 29, 309–325 (2009)

Hanson, V.L.: Influencing technology adoption by older adults. Interact. Comput. 22, 502–509 (2010)

Topo, P.: Technology studies to meet the needs of people with dementia and their caregivers: a literature review. J. Appl. Gerontol. 28, 5–37 (2009)

Nygård, L.: The meaning of everyday technology as experienced by people with dementia who live alone. Dementia 7, 481–502 (2008)

Hanson, E., Magnusson, L., Arvidsson, H., Claesson, A., Keady, J., Nolan, M.: Working together with persons with early stage dementia and their family members to design a user-friendly technology-based support service. Dementia 6, 411–434 (2007)

Bertrand, R.M., Fredman, L., Saczynski, J.: Are all caregivers created equal? Stress in caregivers to adults with and without dementia. J. Aging Health 18, 534–551 (2006)

Etxeberria, I., Yanguas, J.J., Buiza, C., Yanguas, E., Palacios, V., Rodríguez, S.: Effectivity of an early psychosocial intervention program with relative of patients with Alzheimer’s disease on first stages [Article in Spanish]. Rev. Esp. Geriatr. Gerontol. 39, 5–6 (2004)

IMSERSO (Spanish Institute for Elderly and Social Services). 93% of informal caregivers of dependent people show a low quality of life [Report in Spanish]. http://www.imsersodependencia.csic.es/documentacion/dossier-prensa/2008/not-13-06-2008.html (2008). Accessed 12 May 2009

Losada, A., Izal, M., Montorio, I., Marquez, M., Perez, G.: Differential efficacy of two psycho educational interventions for dementia family caregivers [Article in Spanish]. Rev. Neurol. 38, 701–708 (2004)

Lu, Y.Y., Wykle, M.: Relationships between caregiver stress and self-care behaviours in response to symptoms. Clin. Nurs. Res. 16, 29–43 (2007)

Son, J., Erno, A., Shea, D.G., Femia, E.E., Zarit, S.H., Parris, M.A.: The caregiver stress process and health outcomes. J. Aging Health 19, 871–887 (2007)

Elliot, R.: Assistive technology for the frail elderly: an introduction and overview. Department of Health and Human Services, Pennsylvania (1991)

Pilotto, A., D’Onofrio, G., Benelli, E., Zanesco, A., Cabello, A., Margelí, M.C., Wanche-Politis, S., Seferis, K., Sancarlo, D., Kilias, D.: Information and communication technology systems to improve quality of life and safety of Alzheimer’s disease patients: a multicenter international survey. J. Alzheimers Dis. 23, 131–141 (2011)

Blackler, A., Popovic, V., Mahar, D.: Investigating users’ intuitive interaction with complex artefacts. Appl. Ergon. 41, 72–92 (2010)

Carrasco, E., Göllner, C.M., Ortiz, A., García, I., Buiza, C., Urdaneta, E., Etxaniz, A., Gonzalez, M.F., Laskibar, I.: Enhanced TV for the promotion of active ageing. In: Eizmendi, G., Azkoitia, J.M., Craddock, G.M. (eds.) Challenges for assistive technology, pp. 159–163. IOS Press, Amsterdam (2007)

Buiza, C., Urdaneta, E., Yanguas, J.J., Carrasco, E., Göllner, C.M., Paloc, C.: i2home: intuitive interaction for everyone with home appliances based on industry standards. Poster session presented at the 9th European Conference for the Advancement of Assistive Technology, AAATE 2007, San Sebastian, Spain (2007)

Carrasco, E., Epelde, G., Moreno, A., Ortiz, A., Garcia, I., Buiza, C., Urdaneta, E., Etxaniz, A., Gonzalez, M.F., Arruti, A.: Natural interaction between virtual characters and persons with Alzheimer’s disease. In: Miesenberger, K., et al. (eds.) Computers helping people with special needs, pp. 38–45. Springer-Verlag, Berlin-Heidelberg (2008)

Ortiz, A., Carretero, M.P., Oyarzun, D., Yanguas, J.J., Buiza, C., Gonzalez, M.F., Etxeberria, I.: Elderly users in ambient intelligence: does an Avatar improve the interaction? In: Stephanidis, C. (ed.) Universal access in ambient intelligence environments, pp. 99–114. Springer-Verlag, Berlin-Heidelberg (2006)

Dyck, M., Winbeck, M., Leiberg, S., Chen, Y., Gur, R.C., Mathiak, K.: Recognition profile of emotions in natural and virtual faces. PLoS ONE 3, e3628 (2008)

Moser, E., Derntl, B., Robinson, S., Fink, B., Gur, R.C., Grammer, K.: Amygdala activation at 3T in response to human and Avatar facial expressions of emotions. J. Neurosci. Methods 161, 126–133 (2007)

Reisberg, B., Ferris, S.H., de Leon, M.J., Crook, T.: The global deterioration scale for assessment of primary degenerative dementia. Am. J. Psychiatr. 139, 1136–1139 (1982)

Folstein, M.F., Folstein, S.E., McHugh, P.R.: “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198 (1975)

Lobo, A., Ezquerra, J., Gómez-Burgada, F., et al.: The cognoscitive mini exam (a simple, practical ‘test’ to detect intellectual disorders in medical patients) [Article in Spanish]. Actas Luso Españolas de Neurología, Psiquiatría y Ciencias Afines 7, 189–202 (1979)

Ortiz-Marqués, N., Amayra-Caro, I., Uterga-Valiente, J.M., Martínez-Rodríguez, S.: Standardization of a Spanish version of Rey Auditory Verbal Learning Test (RAVLT) [Article in Spanish]. V Congress of Neuropsychology in Andalucia, Huelva, Spain (2008)

Spreen, O., Strauss, E.: A compendium of neuropsychological tests. Administration norms and commentary. Oxford University Press, New York (1998)

Baztán, J.J., Pérez del Molino, J., Alarcón, T., San Cristóbal, E., Izquierdo, G., Manzarbeitia, I.: Barthel index: valid instrument for the functional assessment of patients with cerebrovascular disease [Article in Spanish]. Rev. Esp. Geriatr. Gerontol. 28, 32–40 (1993)

Seisdedos, N., Wechsler, D.: WAIS-III: Wechsler adult intelligence scale, third edition. Technical manual [Book in Spanish]. TEA Ediciones, Madrid (1999)

Goodglass, H., Kaplan, E.: The assessment of aphasia and related disorders. Lea & Febiger, Philadelphia (1983)

García-Albea, J.E., Sánchez, M., del Viso, S.: Test de Boston para el diagnóstico de la afasia. Adaptación española. In: Goodglass, H., Kaplan, E. (eds.) La evaluación de la afasia y trastornos relacionados. Panamericana, Madrid (1986)

Czaja, S.J., Sharit, J., Ownby, D., Roth, D., Nair, S.N.: Examining age differences in performance of a complex information search and retrieval task. Psychol. Aging 16, 564–579 (2001)

Blackler, A., Mahar, D., Popovic, V.: Older adults, interface experience and cognitive decline, pp. 22–26. Paper presented at the OZCHI, Brisbane (2010)

Langdon, P., Lewis, T., Clarkson, J.: The effects of prior experience on the use of consumer products. Univers. Access. Inf. Soc. 6, 179–191 (2007)

Lewis, T., Langdon, P.M., Clarkson, P.J.: Prior experience of domestic microwave cooker interfaces: a user study. Des. Incl. Futur. 2, 3–14 (2008)

Gudur, R.R., Blackler, A., Mahar, D., Popovic, V.: The effects of cognitive ageing on use of complex interfaces, pp. 22–26. Paper presented at the OZCHI, Brisbane (2010)

Gudur, R.R., Blackler, A.L., Popovic, V., Mahar, D.P.: Ageing and use of complex product interfaces. In: Norbert R., Lin-Lin C., Pieter J.S. (eds.) Proceedings of 4th World Conference on Design Research, Delft, the Netherlands, 31 October–4 November (2011)

Acknowledgments

This research is being partially funded by the EU 6th Framework Program under grant FP6-033502 (i2home). The opinions herein are those of the authors and not necessarily those of the funding agencies.

Cite this article

Diaz-Orueta, U., Etxaniz, A., Gonzalez, M.F. et al. Role of cognitive and functional performance in the interactions between elderly people with cognitive decline and an avatar on TV. Univ Access Inf Soc 13, 89–97 (2014). https://doi.org/10.1007/s10209-013-0288-1