Abstract

Research with barn owls suggested that sound source location is represented topographically in the brain by an array of neurons each tuned to a narrow range of locations. However, research with small-headed mammals has offered an alternative view in which location is represented by the balance of activity in two opponent channels broadly tuned to the left and right auditory space. Both channels may be present in each auditory cortex, although the channel representing contralateral space may be dominant. Recent studies have suggested that opponent channel coding of space may also apply in humans, although these studies have used a restricted set of spatial cues or probed a restricted set of spatial locations, and there have been contradictory reports as to the relative dominance of the ipsilateral and contralateral channels in each cortex. The current study used electroencephalography (EEG) in conjunction with sound field stimulus presentation to address these issues and to inform the development of an explicit computational model of human sound source localization. Neural responses were compatible with the opponent channel account of sound source localization and with contralateral channel dominance in the left, but not the right, auditory cortex. A computational opponent channel model reproduced every important aspect of the EEG data and allowed inferences about the width of tuning in the spatial channels. Moreover, the model predicted the oft-reported decrease in spatial acuity measured psychophysically with increasing reference azimuth. Predictions of spatial acuity closely matched those measured psychophysically by previous authors.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The spatial location of a sound source is signaled to listeners by interaural differences in timing and level, as well as by the monaural stimulus spectrum (Middlebrooks and Green 1991). Jeffress (1948) proposed that sound source location is represented topographically in the brain by neurons narrowly tuned to particular interaural time differences (ITDs). In contrast, van Bergeijk (1962), following von Békésy (1930), postulated just two neural groups, one tuned to the left hemispace and the other tuned to the right hemispace, each summing the evidence that the source is located in the hemispace to which it is tuned. The perceived location would reflect the balance of activity in the left and right hemispace channels. While the topographic model is currently dominant and has received support from research with barn owls (Sullivan and Konishi 1986; Carr and Konishi 1990), the latter, “opponent channel,” model is more consistent with the results from small-headed mammals (McAlpine and Grothe 2003; McAlpine 2005; Grothe et al. 2010). For example, the topographic model predicts the existence of neurons tuned to spatial locations throughout the auditory space, whereas the majority of spatially sensitive neurons in inferior colliculus (McAlpine et al. 2001) and auditory cortex (Stecker et al. 2005) of small-headed mammals respond most strongly to extreme lateral positions. Both the left and right auditory cortex appear to contain subpopulations of neurons tuned to the left and right auditory space, although the subpopulation tuned to the contralateral hemispace may be larger in each cortex (Stecker et al. 2005).

Recent studies using electroencephalography (EEG) and magnetoencephalography (MEG) with stimuli that varied solely in ITD (Magezi and Krumbholz 2010; Salminen et al. 2010a) and stimuli that contained the full range of location cues (Salminen et al. 2009) have provided evidence for opponent channel coding of auditory space in humans. Magezi and Krumbholz presented pairs of noise stimuli in a “continuous stimulation paradigm” (CSP; Hewson-Stoate et al. 2006). On each trial, a control stimulus was followed immediately by a test stimulus that differed from the control in ITD. In the CSP, the macroscopic response to the test stimulus is assumed to reflect activity in neurons that respond more strongly to the test than the control. The size of the test response is assumed to reflect the difference between the responses of these neurons to the two stimuli. The authors found larger responses to outward-going (away from midline), than inward-going (toward midline), ITD shifts. This result is predicted by the opponent channel model because, for an outward-going shift, the test stimulus is lateral to the control and thus elicits a larger response from the channel tuned to its hemispace (Fig. 1A). In contrast, the topographic model predicts either no difference between responses to inward and outward shifts of the same magnitude (if the spatial array is uniform; Fig. 1B) or, if one assumes a greater proportion of neurons tuned to locations near the midline (Knudsen 1982), greater responses to inward shifts (as the test stimulus will activate a larger number of neurons than the control; Fig. 1C).

Schematic spatial tuning curves in the opponent channel (A) and topographic models (B, each location represented by an equal number of neurons; C larger number of neurons tuned to locations near the midline, represented by the relative heights of the tuning curves), after Magezi and Krumbholz (2010). The topographic model posits neurons tuned to locations throughout the horizontal plane; however, for visual clarity, tuning curves are plotted at 30 ° intervals. Solid upward arrows indicate the contribution of each channel to the test response following a shift away from (“Out”), or toward (“In”), straight ahead. Channels that contribute to the test response are highlighted in bold. It is assumed that only channels that respond more strongly to the test (“T”), than the control (“C”), contribute. The size of the contribution from each channel, then, is the difference between the channel’s response to the test (upward arrow) and control (downward arrow).

Both Salminen et al. and Magezi and Krumbholz presented test stimuli solely in the left auditory space. Further, while both groups measured responses consistent with opponent channel representation, neither attempted to model opponent channel coding explicitly. Finally, there is conflicting evidence as to the relative sizes of the contralateral and ipsilateral tuned subpopulations within each auditory cortex. The results of Krumbholz et al. (2005) suggest that the contralateral subpopulation is larger than the ipsilateral subpopulation in the left auditory cortex, while the subpopulations are of similar size in the right auditory cortex, while the results of Salminen et al. (2010b) suggest the opposite.

The current study used an efficient CSP paradigm in which each stimulus served as both control and test. Stimuli were presented from loudspeakers in the sound field, providing the full range of cues for sound location. Stimulus locations in both hemifields were used, so that differences in spatial representation between the hemifields could be examined. The aims of the study were (1) to examine evidence for opponent channel coding of auditory space in humans, (2) to examine whether the contralateral and ipsilateral tuned subpopulations are of similar size in the left and right auditory cortex, and (3) to produce a computational model of the cortical representation of auditory space in humans. Subsequently, we tested our model by using it to predict psychophysical measures of spatial acuity reported previously by other authors.

Methods

Participants

Eleven participants, with no history of audiological or neurological disease, participated (mean age ± SD, 23.3 ± 5.9 years; 10 females). All participants had pure-tone hearing thresholds at, or more favorable than, 20 dB HL at octave frequencies between 250 and 8,000 Hz, inclusive. Participants completed the Speech, Spatial and Qualities of Hearing Scale (Gatehouse and Noble 2004) to assess their difficulties with everyday listening. The mean scores (±SD), out of 10 (where 0 indicates profound difficulty and 10 indicates no difficulty), were 7.6 (±0.7), 7.1 (±1.2), and 7.6 (±0.8) on the Speech, Spatial and Qualities of Hearing scales, respectively. No subject scored poorer than one standard deviation below the mean scores for young, normally hearing adults who have participated in previous studies in our laboratory (N = 118; mean ± SD scores, 8.0 ± 1.0, 7.4 ± 1.3, and 8.3 ± 1.0 for the Speech, Spatial, and Qualities of Hearing scales). All participants provided written informed consent. The study was approved by the Research Ethics Committee of the Department of Psychology of the University of York.

Procedure

Experiments were conducted in a single-walled IAC audiology test room (5.3 × 3.7 m), located in a larger sound-treated enclosure. Participants sat on a chair in the center of a circular stage with a radius of 1.15 m, facing an arc of loudspeakers (Plus XS.2, Canton) positioned at approximately head height (1.1 m; the “AB-York Crescent of Sound”; Kitterick et al. 2011). On each trial of the “location shift” condition, a 1.51-s pink noise was presented from one of five loudspeakers, located at 30 ° intervals from −60 ° (left) to +60 ° (right) relative to straight ahead (Fig. 2). There were no silent intervals between trials. To avoid audible clicks, stimuli were gated on and off with overlapping 10-ms raised cosine ramps. Participants were instructed to ignore the auditory stimuli and to watch a subtitled film of their choice presented on a screen directly below the central loudspeaker (0 °). Participants also completed two control conditions. In these conditions, the stimuli always came from the central loudspeaker, but their spectra were altered to match the monaural spectra at the left ear and, separately, the right ear of the stimuli in the location shift condition. Participants completed four recording runs in each of three conditions (“location shift”, “left ear control” and “right ear control”) for a total of 12 runs. The order of runs was randomized, separately for each participant. Each run consisted of 751 trials and lasted 18.8 min. The order of trials within each run was randomized, with the constraint that each combination of pre- and post-shift location occurred 30 times. This meant that, across the 12 recording runs, each combination of condition, pre- and post-shift location was presented 120 times. Runs were presented in groups of four, to form three sessions. Nine participants completed two sessions in 1 day, and the remaining session on a different day. One participant completed the three sessions on separate days and one completed all sessions on the same day.

The participant sat at the center of a circular stage with radius of 1.15 m, facing an arc of loudspeakers positioned at approximately head height. On each trial of the location shift condition, the stimulus came from the loudspeaker at −60, −30, 0, +30, or +60 ° relative to straight ahead. In the control conditions, the stimulus always came from the central loudspeaker (0 °).

Stimulus presentation was controlled by a PC situated outside the test room. Stimuli were sent to audio amplifiers (RA-150, Alesis) via a digital-to-analogue convertor (Ultralite Mk3, MOTU). A spare audio channel was used to send a trigger pulse aligned with the onset of each stimulus. The trigger pulse was detected by custom-made hardware, which sent a marker to the EEG amplifier for storage alongside the EEG recordings.

Stimuli

Acoustical stimuli were generated digitally, with 16-bit amplitude resolution and a 44.1-kHz sampling rate, in Matlab (The Mathworks, Natick, MA, USA) by summing pure tones with frequencies at 0.2-Hz intervals between 100 and 5,000 Hz. The starting phases of the tones were selected randomly from a uniform distribution. In the location shift condition, the amplitude of each pure tone was inversely proportional to the square root of its frequency. The resulting noise, known as pink noise, contained equal power per octave. When presented from each of the five loudspeakers used in this experiment, the noise had an intensity of 55 ± 1 dBA SPL at the approximate position and head height of the participants (B&K Type 2260 Investigator sound level meter, with half-inch free-field microphone 4189).

Stimuli for the left ear and right ear control conditions were generated in four steps. First, a 15-s pink noise stimulus was presented from each of the loudspeakers in turn, and recordings were made using microphones placed in the ear canals of a Head and Torso Simulator (HATS; B&K Type 4100D). The HATS was positioned such that its head was in the approximate location and orientation of the participants when seated at the listening position. The long-term amplitude spectrum was computed for each of the 10 stimulus waveforms (left/right ear × 5 loudspeakers) by computing a 16384-point Hann-windowed FFT on each signal, advancing the window by half the FFT length, repeating the process over the duration of the signal, and averaging across the windows. For the left and right ears separately, the spectrum (in decibels) for the loudspeaker directly ahead was subtracted from the spectrum for each of the other loudspeakers to obtain a set of weighting coefficients. The coefficients were interpolated linearly to obtain values at 0.2 Hz spacing. The values were fed back into the program which generated the pink noise stimuli and used to alter the amplitudes of the individual pure tones. The new control stimuli were played from the loudspeaker at 0 ° and the new long-term amplitude spectra were computed. For each ear, root mean square (RMS) differences were computed between the amplitudes (in decibels) of the points in the spectra of the pink noise stimuli presented from each non-central location (−60, −30, +30, and +60 °) and the corresponding points in the spectra of the control stimuli presented at the central location (0 °). Figure 3 shows that the match was good at frequencies up to 3 kHz and acceptable up to 5 kHz, with RMS errors ranging across stimuli from 0.98 to 2.58 dB.

Long-term amplitude spectra derived from recordings made with microphones in the ear canals of a head and torso simulator situated at the approximate position that was occupied by the participants during the experiment. A 15-s version of the pink noise stimulus was presented from the loudspeakers at ±30 and ±60 ° (black, solid lines), along with a 15-s version of each of the control stimuli, presented from the loudspeaker at 0 ° (dashed, red lines). The stimulus bandwidth was 100–5,000 Hz. For the sake of clarity, the spectrum for the stimulus with the largest peak amplitude, +60 ° in the right ear, was shifted such that its peak amplitude was 0 dB. The spectrum for −60 ° in the left ear was shifted the same amount. In order of decreasing overall amplitude, each subsequent spectrum was shifted by an additional 10 dB. The stimulus location is labeled above each pair of spectra, along with the root mean square error (RMSE) between the “actual” and “simulated” spectra.

Before the first EEG session, participants completed a short psychophysical experiment to confirm that they could localize the pink noise stimulus accurately when it was presented from each of the five loudspeakers. The experiment was similar to the location shift condition described above, except that the pre- and post-shift locations were never identical and there were 10 trials per combination of pre- and post-shift location for a total of 200 trials. Screens below each loudspeaker numbered the locations from one to five. Participants were given a few familiarization trials and were then asked to fixate a cross displayed on the screen below the central loudspeaker and call out the location of the noise stimulus each time it changed. Performance was at ceiling for each post-shift location, with a mean accuracy of 99.5 % and each participant making at least 37 correct responses out of 40 for stimuli presented from each location.

Electroencephalography

EEG recordings were made using 64 Ag/AgCl electrodes, arranged according to the 5 % electrode scheme (an extension of the traditional 10/20 scheme), in an elasticated cap (ANT WaveGuard system, Enschede, Netherlands). The EEG system incorporated active noise cancellation, which improved signal quality, allowing higher than normal scalp electrode impedances. Nevertheless, all impedances were kept below 40 kΩ. During recording, signals were referenced to the mean across channels (average reference) and an electrode at AFz (central forehead) was used as ground. Signals were amplified and low-pass-filtered at 500 Hz, then sampled at 1,000 Hz, and sent via a fiber optic cable to a dedicated PC located outside the test room. ASA Lab software (ANT, Enschede, NL) stored the EEG recordings (and stimulus triggers) for offline processing. The timing accuracy of this setup was verified by presenting 101 triggers, separated by 1.5-s intervals (the stimulus onset asynchrony, “SOA,” used throughout this study). No interval measured by the ASA-Lab software differed from 1.5 s by more than one sample.

Data analysis

Recordings were processed offline with the EEGLAB toolbox (Delorme and Makeig 2004) running under Matlab. Recordings were bandpass-filtered between 0.1 and 35 Hz and down-sampled to 250 samples/s per channel. They were then split into epochs ranging from 100 ms before to 1,500 ms after the start of each stimulus (total duration of 1,600 ms). For each epoch and channel, a baseline correction was applied by subtracting the average voltage in the 100 ms preceding the stimulus from each sample of the response. Data from the four runs within each session were merged and epochs containing extreme values (joint probability limits of ±3.5 SD) were removed. In order to remove stereotyped artifacts resulting from eye blinks and lateral eye movements, data were submitted to an independent component analysis using the Infomax extended ICA algorithm (Bell and Sejnowski 1995; Lee et al. 1999), with an initial PCA decomposition that retained the 10 largest principal components. Independent components were removed by manual inspection and the corrected data were back-projected to the measurement electrodes (on average, 2.3 ± 0.9, mean ± SD, components were removed per session). Subsequently, for each participant, data from all three sessions were merged and epochs were averaged to obtain, for each of the three conditions, an event-related potential (ERP) for every combination of pre- and post-shift location. As 14.2 % (±5.3 %, mean ± SD) of epochs were rejected per session, each ERP was computed from an average of 103 epochs. ERPs were typically triphasic, with peaks corresponding to the P1, N1, and P2 components of the auditory event-related potential (Key et al. 2005; see “Results”), although the N1 and P2 were most prominent.

Due to volume conduction, any neural activity that gives rise to a measurable EEG signal at the scalp is recorded at all electrodes. The amplitude and sign of the activity, however, vary across electrodes; the patterns of variation depend on the location and orientation of the underlying brain source (Nunez and Srinivasan 2006). Given a model of the underlying sources expressed in terms of equivalent current dipoles, one can compute estimates of the activity within particular brain regions from the signals recorded at the scalp (Scherg 1990). As the current study used a passive listening task, it was expected that neural activity would be restricted to the left and right auditory cortices (Hall et al. 2000), and thus, responses were modeled with two sources. This expectation was corroborated by the simple, dipolar field maps that characterized the ERP peaks (see Fig. 4) and the small residual variances produced by the fits (see below).

Left: Event-related potentials in the location shift condition, averaged across participants and pre- and post-shift locations. Each black line is the response from one of the 64 recording electrodes. The response from the vertex electrode is plotted in red and the N1 and P2 peaks are labeled. The dashed vertical line marks the onset of the location shift. Right: Maps of field potential, calculated over 40-ms windows centered on the N1 and P2 peaks. The maps are viewed as if looking down onto the head. Each black dot marks the location of a recording electrode. Electrodes below the head center are plotted outside the head boundary.

For each participant, five source models were constructed, one for each post-shift location. Each model consisted of two equivalent current dipoles—one dipole at the centroid of primary auditory area TE1.0 in each hemisphere (Morosan et al. 2001). The orientations of the two dipoles were fitted simultaneously within a 40-ms window centered on the P2 peak of the response to the post-shift location of interest (averaged across pre-shift locations). All models were constructed using responses in the location shift condition. The orientations of the dipoles were fitted, while the dipole locations were held fixed, because EEG is more sensitive to variations in dipole orientation than dipole location (Nunez and Srinivasan 2006). Fits were centered on the P2 peak because of its prominence in the ERPs in comparison with the N1 peak (see Fig. 4). (In some participants, the N1 peak, despite being visible as a negative deflection around 120 ms, was close to the pre-stimulus baseline and rode on the subsequent P2 component, leading to inaccurate dipole fits. Nonetheless, fitting dipole orientations to the N1 peak led to qualitatively similar, though noisier, fits than the results reported below.)

In 10 out of the 11 participants, source models accounted for between 91 and 98 % of the variance in the P2 field maps (mean ± SD, averaged across models and participants, 95 ± 2 %). In one participant, the fits were poorer, accounting for between 78 and 89 % of the variance. This outcome reflected the smaller, noisier ERPs of that participant. Her responses were, however, qualitatively similar to other participants and removing them from the analyses did not affect the pattern, or significance, of any of the results. Her data were therefore retained. Each of the 75 ERPs per participant (5 pre-shift locations × 5 post-shift locations × 3 conditions) was passed through one of these five dipole models (depending on the post-shift location or, in the case of the control conditions, the post-shift location that was being simulated). The output of each dipole model consisted of two “source waveforms,” one for each auditory cortex. Subsequent analyses were carried out on these source waveforms. For the left auditory cortex, the right ear (contralateral) condition was used as a control, while for the right auditory cortex, the left ear condition was used as a control. Thus, there were only two conditions per auditory cortex—these will be termed the “location shift” condition and the “control” condition.

For each participant, an average source waveform was calculated (averaged across cortices, conditions, and pre- and post-shift locations). This waveform was visualized and the N1 and P2 peaks were identified manually, with the aid of a peak-picking algorithm. Subsequently, the latencies of these peaks were used as mid-points for 80-ms-wide peak-picking windows. In each of the individual source waveforms, the N1 was automatically identified as the largest negative deflection within the first window and the P2 as the largest positive deflection in the second window. The two windows did not overlap for any participant. The sizes of both the N1 and P2 were modulated by the spatial/spectral differences between the pre- and post-shift stimuli. As the components have overlapping time courses (Näätänen and Picton 1987; Makeig et al. 1997), but opposite polarities, it was expected that they would partially cancel each other out. Thus, an increase in one component could cause an apparent decrease in the other component. To overcome this problem, the response magnitude for each ERP was quantified as the peak-to-peak difference between the N1 and the P2 (cf. Briley et al. 2012).

Peak-to-peak response sizes were plotted as a function of the size and direction of location shift. One method used to characterize the resulting “location shift response curves” was to fit them with exponential functions of the form:

The variable g was the magnitude of the location shift in degrees, while b was the value in nanoampere-meters (nAm) required to bring the 0 ° point of the curve to the value for 0 ° observed in the data, averaged across cortical hemispheres and post-shift locations. As a result, p reflected the sharpness of the response curve, while r reflected the dynamic range of the curve. To facilitate comparisons across participants, the function was initially fitted to each participant’s mean location shift response curve, averaged across cortical hemispheres and post-shift locations; the obtained value of r was then used as an additional scale factor in subsequent fits for that participant. This served to emphasize the differences in the parameters between conditions, rather than the overall differences between participants. For the 0 ° post-shift location, the two sides of the location shift response curves (corresponding to leftward and rightward shifts) were fitted separately. However, as the fitted parameter values were similar for the two sides, their means were used in subsequent analyses to reduce noise. For the other post-shift locations (−60, −30, +30, and +60 °), only one side of the response curves was fitted. The average root mean square error (RMSE) for the fits, across all participants, auditory cortices, and post-shift locations, was 4.3 nA m, with an R 2 of 0.95.

Results

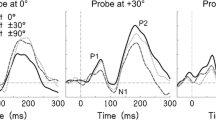

Location shifts elicited transient neural responses, dominated by peaks corresponding to the N1 (average latency of 120 ms after stimulus onset) and P2 (208 ms) components of the auditory event-related potential (Fig. 4; Key et al. 2005). The scalp topographies of the N1 and P2 displayed, respectively, vertex negativity and vertex positivity, with polarity inversion near the mastoids. Such dipolar field maps are consistent with bilateral generators in the auditory cortex (Scherg 1990). Source waveforms (see “Methods”) were estimated for each combination of pre- and post-shift location. In Figure 5, source waveforms have been averaged over participants and plotted for each absolute size of location shift. When there was no shift in location (black dotted lines), source waveforms were irregular with amplitudes close to zero. As the angular separation between the pre- and post-shift locations increased, the response magnitude also increased.

Source waveforms for the left and right auditory cortices, for each size of location shift (averaged across participants, post-shift locations and direction of shift). The dashed vertical line marks the onset of the location shift. The N1 and P2 peaks of the left hemisphere response to the 120 ° location shift are labeled. The magnitude of the location shift response was quantified as the peak-to-peak amplitude from the N1 to the P2. Note that the response magnitude increased as the size of the location shift increased.

In Figure 6, the black lines plot response magnitude (i.e., the peak-to-peak difference from the N1 to the P2) as a function of the size and direction of location shift, with data averaged across post-shift locations. For example, a location shift of +60 ° indicates that the post-shift stimulus was 60 ° to the right (clockwise) of the pre-shift stimulus. The corresponding data point is the average response magnitude resulting from shifts from −60 to 0 °, −30 to +30 °, and 0 to +60 °. As in the source waveforms in Figure 5, response magnitude in Figure 6 increases as the spatial separation between the pre- and post-shift stimuli increases. The resulting “location shift response curves” are steeper at small spatial separations than at larger separations, though they have large dynamic ranges and increase even between the two largest separations (90 and 120 °).

Response curves for the location shift (black lines and circles) and control (red lines and squares) conditions, averaged across participants and post-shift locations. Negative values of location shift mean that the post-shift stimulus was to the left of the pre-shift stimulus (a leftward, anti-clockwise, shift), while positive values mean that the post-shift stimulus was to the right of the pre-shift stimulus (a rightward, clockwise, shift). Error bars denote 95 % within-subjects confidence intervals (Morey 2008).

The location shift response curves should not be confused with neural tuning curves. As stimuli were presented in a continuous sequence, rather than in isolation, the transient location shift responses would be expected to reflect the differences between the responses to the pre- and post-shift stimuli from the auditory spatial channels, rather than the absolute responses from these channels (Magezi and Krumbholz 2010). Thus, the location shift response curves provide a measure of the sensitivity of the auditory cortices to shifts in spatial location. A steeper (“sharper”) response curve would suggest that the auditory cortex is more sensitive to small location shifts and, thus, presumably better able to distinguish locations that are close together. A shallower (“broader”) response curve would suggest that the cortex is less sensitive to small shifts and, thus, less able to distinguish closely spaced locations.

Control conditions

In the sound field, changes in the location of a source are accompanied by changes in interaural differences in level and arrival time and also by changes in the pattern of peaks and troughs in the stimulus spectrum at each ear. At the level of the cortex, it is likely that the auditory system has integrated all available cues to the location of sound sources, both binaural (ITDs and ILDs) and monaural (the spectra of sounds at each ear). Two control conditions were run to check that the location shift responses reflected both types of cue, rather than only the binaural type, or only the monaural type. In these conditions, the stimuli always came from the loudspeaker at 0 °, but their spectra were altered to match the monaural spectra observed in the main condition at the left ear and, separately, the right ear. The left ear spectra were used as a control for the responses from the right cortical hemisphere, while the right ear spectra were used a control for the left hemisphere responses. The contralateral ear was used as a control because the dominant ascending projection from each ear is to the contralateral, rather than the ipsilateral, cortical hemisphere (Rosenzweig 1951; Webster 1992). Consistent with this, a number of neuroimaging studies have demonstrated larger responses from the contralateral, than the ipsilateral, cortical hemisphere to monaural stimulation (e.g., Mäkelä et al. 1993; Pantev et al. 1998; Scheffler et al. 1998). Response curves for the control conditions are shown as red lines in Figure 6, plotted as a function of their matched location shift. The response increased as a function of the spectral dissimilarity between the pre- and post-shift stimuli. However, for all location shifts except 0 °, responses were larger in the location shift condition than in the matched control condition. This was confirmed in a linear mixed model (LMM) analysis with fixed factors of condition (location shift or control), shift (0, ±30, ±60, ±90, or ±120 °) and auditory cortex (left or right). As in all subsequent LMM analyses, the participants were treated as a random factor. There was a significant interaction between the effects of condition and shift [F(8, 358) = 10.769, p < 0.001], and all pairwise comparisons between the location shift and control conditions were highly significant (p < 0.001), except for the 0 ° shift (p = 0.635). The three-way interaction was nonsignificant [F(8, 350) = 0.502, p = 0.855]. The differences between the response curves in the location shift and control conditions are compatible with the idea that the response measured in the location shift condition reflects binaural and monaural evidence of spatial position, not just monaural evidence.

Differences in location shift responses for different post-shift locations

Location shift response curves were also obtained for each post-shift location separately (Fig. 7). In all cases, responses increased with increasing spatial separation between the pre- and post-shift locations, as was observed in the data averaged over post-shift locations in Figure 6. Nevertheless, there were systematic differences in the response curves between the five post-shift locations, between the left and right auditory cortices and between the location shift directions.

Top: Response curves in the location shift condition (averaged across participants). Each curve plots response magnitudes to one of the five post-shift locations as a function of the size and direction of location shift (see Fig. 6). Bottom: The response curves were fitted with exponential functions, to obtain measures of the curves’ sharpness and dynamic range. Each bar represents fits obtained for one of the five post-shift locations (the color scheme matches that used in the top part of the figure). The top of the bar denotes the mean parameter value and the error marker denotes the 95 % within-subjects confidence interval (Morey 2008). For the 0 ° post-shift location, the two sides of the response curves were fitted separately, but as the resulting parameter estimates were similar, the average estimates across the two sides are presented here to reduce noise arising from fitting few data points. For the ±30 and ±60 ° post-shift locations, only one side of each response curve was fitted.

Perhaps the most important differences were between the post-shift locations, in that the response curves for more central locations were sharper than the curves for more peripheral locations. This suggests that both auditory cortices are more sensitive to small location shifts near the midline than at the periphery. To quantify these differences, location shift response curves were fitted with exponential functions, to derive measures of curve sharpness and dynamic range (see “Methods”). The response curve for each participant, cortical hemisphere, and post-shift location was fitted separately (Fig. 7, bottom).

As expected, a LMM analysis found a main effect of post-shift location on the sharpness of the location shift response curves [F(4, 94) = 33.668, p < 0.001]. Pairwise comparisons found that all differences between post-shift locations were significant (p < 0.01), except those between −60 and +60 ° (p = 0.344) and between −30 and +30 ° (p = 0.615). There was no main effect of cortical hemisphere [F(1, 94) = 0.806, p = 0.372], nor an interaction of post-shift location and hemisphere [F(4, 90) = 0.406, p = 0.804]. There was also a main effect of post-shift location on the dynamic range parameter [F(4, 94) = 6.607, p < 0.001; main effect of hemisphere: F(1, 94) = 2.170, p = 0.144; interaction of location and hemisphere: F(4, 90) = 0.449, p = 0.773], with a larger range for the peripheral (±60 °), than the more central (0 °, ±30 °), post-shift locations (p < 0.005 in pairwise comparisons).

Differences in location shift responses between the auditory cortices

Figure 7 (top) also shows that, for each auditory cortex, location shift responses were generally larger for post-shift locations in the contralateral, than ipsilateral, hemispace. For the left auditory cortex, responses to the +30 ° (pink downward triangles) and +60 ° (purple diamonds) post-shift locations were larger than responses to the −30 ° (green upward triangles) and −60 ° (orange squares) post-shift locations, respectively, while this pattern was reversed for the right auditory cortex.

Figure 8A displays, for each auditory cortex, the mean response magnitude for the most extreme left (−60 °) and right (+60 °) post-shift locations, averaged across pre-shift locations. Interestingly, it can be seen here that, while the response was larger for the contralateral than ipsilateral post-shift location for both cortices, this effect was small for the right auditory cortex. Indeed, a LMM analysis found an interaction between the effects of cortical hemisphere and post-shift hemispace [F(1, 30) = 5.017, p = 0.033; main effect of hemispace: F(1, 30) = 3.254, p = 0.081; main effect of cortex: F(1, 30) = 1.156, p = 0.291]. The difference between the responses to the two post-shift locations was significant in the left (p = 0.008), but not the right (p = 0.760), auditory cortex. This suggests that the left auditory cortex contains more neurons tuned to the contralateral (right), than the ipsilateral (left), acoustic space (or that the contralateral tuned neurons respond more vigorously or consistently than the ipsilateral tuned neurons), while the right auditory cortex shows little such preference.

A Mean response magnitudes for the most extreme left (−60 °) and right (+60 °) post-shift locations (averaged across pre-shift locations), for each auditory cortex. Error bars denote 95 % within-subjects confidence intervals (Morey 2008). B Differences between response magnitudes to outward (away from straight ahead) and inward (toward straight ahead) location shifts, for post-shift locations in the left (−30 °) and right (+30 °) auditory space, for each auditory cortex. C Spatial tuning of the two opponent channels used in the computational model. Each channel was defined by a cumulative Gaussian function. In each case, the peak of the underlying Gaussian, and thus the point of maximum slope of the cumulative Gaussian, was 0 °. The standard deviation of the underlying Gaussian, and thus, the sharpness of tuning of the cumulative Gaussian, was 46 °. This value was derived from fits to the data.

The hemispheric preference of each auditory cortex was quantified as follows: where ω L is the preference for the left hemispace and ω R is the preference for the right hemispace (hemispace preference lies between 0 and 1, where 1 indicates that the auditory cortex responds solely to the given hemispace):

m L and m R are the mean response magnitudes to the leftmost (−60 °) and rightmost (+60 °) post-shift locations, respectively, averaged across pre-shift locations. The letter ω, here, stands for “weights,” a designation that will become clear later in the context of modeling the location shift response curves. Note that ω L and ω R sum to 1. For the left auditory cortex, ω L and ω R were 0.457 and 0.543, respectively (averaged across participants), reflecting the larger response to the rightmost, than the leftmost, post-shift location. ω L and ω R were more similar for the right auditory cortex, being 0.505 and 0.495, respectively.

Comparison of topographic and opponent process accounts

The topographic and opponent channel models make different predictions for the effect of the direction of a location shift on the size of neural responses (Magezi and Krumbholz 2010; see “Introduction”). According to the topographic model, responses to inward (toward straight ahead) and outward (away from straight ahead) shifts of the same size should either be equal or, if one assumes a greater number of neurons tuned to locations near straight ahead, responses should be larger to inward shifts. In contrast, the opponent channel model predicts larger responses to outward, than inward, shifts. The panel labeled “Data” in Figure 8B plots the differences in response magnitude in each auditory cortex between outward and inward location shifts towards the two post-shift locations which participated in both types of shift. These post-shift locations were −30 and +30 °. Positive values indicate that there was a larger response magnitude to outward shifts; negative values indicate that there was a larger response magnitude to inward shifts. Consistent with the opponent channel model of spatial tuning, outward shifts produced a larger response magnitude than inward shifts in all cases, though the difference was very small for the left auditory cortex and left (ipsilateral) post-shift location. A LMM analysis found a significant interaction between the effects of cortical hemisphere and post-shift hemispace [F(1, 30) = 4.313, p = 0.046; main effect of hemispace: F(1, 30) = 2.096, p = 0.158; main effect of cortex: F(1, 30) = 0.202, p = 0.657]. In the left auditory cortex, the difference between the response magnitudes to outward and inward shifts was significantly greater for the right (contralateral) than the left (ipsilateral) post-shift location (p = 0.018). Indeed, the difference was only significantly different from zero for the right post-shift location [left post-shift location: t(10) = 0.016, p = 1; right: t(10) = 2.930, p = 0.044; Šidák-corrected]. The effect of post-shift location was nonsignificant in the right auditory cortex (p = 0.660), and the difference between outward and inward shifts was significantly greater than zero [t(10) = 3.032, p = 0.037; Šidák-corrected].

Computational model

The larger responses for outward-going than inward-going location shifts indicate that an opponent process rather than a topographic model would best account for the location shift responses. However, such a model must also account for the other three main features observed in the data: (1) sharper response curves (i.e., greater sensitivity to small location shifts) for more central, than more peripheral, post-shift locations; (2) greater dynamic range for more peripheral post-shift locations; and (3) larger responses, in general, to contralateral, than ipsilateral, post-shift locations, particularly in the left auditory cortex.

The computational model assumed two spatial channels, one tuned to the left, and one tuned to the right, auditory space. The tuning of each spatial channel was expressed as a sigmoidal function of angular sound location in degrees using a cumulative Gaussian (Fig. 8C; it was assumed that such a function would be established at a lower processing level, but after the auditory system has integrated ITD, ILD, and spectral cues to sound source location). Thus, the spatial tuning of each channel was described by two parameters, both measured in degrees: the mean (M) of the underlying Gaussian, which is the point of maximum slope of the cumulative Gaussian, and the standard deviation (SD) of the underlying Gaussian, which determines the sharpness-of-tuning of the cumulative Gaussian (small and large values of SD correspond to sharp and broad spatial tuning, respectively). The tuning functions for the left and right spatial channels were assumed to be symmetric. In line with measurements of spatial tuning in mammalian auditory cortex (Stecker et al. 2005), M, the point of maximum slope, was set to 0 °. The sharpness of tuning, SD, was a free parameter to be fitted to the data. The responses from the left and right channels will be referred to as W l and W r, respectively.

Modeling the EEG response magnitudes

The response to a location shift was, for each channel, calculated as the post-shift activity minus the pre-shift activity, unless the pre-shift activity exceeded the post-shift activity, in which case the response was assumed to be zero. This latter assumption reflects a property of the continuous stimulation paradigm used to measure the location shift responses (see Magezi and Krumbholz 2010). In the CSP, the macroscopic response to a test (in this case, post-shift) stimulus after a sufficiently long control (in this case, pre-shift) stimulus is assumed to reflect activity in neurons that respond more strongly to the test than control.

The EEG response magnitude from a particular auditory cortex was calculated by weighting the responses, W l and W r, from the left and right spatial channels. Thus, it is worth noting that any differences in the responses from the left and right auditory cortex arose in the model solely from differences between the cortices in the weights given to the left and right spatial channels, and not from differences between the cortices in the tuning of each channel. The weights were ω L and ω R; these are the hemispace preference values calculated from the data that were described earlier. To recap, ω L and ω R were 0.457 and 0.543, respectively, for the left auditory cortex (indicating greater influence from the right hemispace channel), and 0.505 and 0.495, respectively, for the right auditory cortex (indicating only slightly greater influence from the left hemispace channel). After weighting, the response from each auditory cortex was subjected to the compressive nonlinearity:

where r was the response before compression, e was the base of the natural logarithm, and k was a compression factor that was fitted to the data. The nonlinearity served to compress large response values and expand small values. Thus, the response from each auditory cortex scaled as a compressed version of the weighted channel outputs.

Finally, the response from each auditory cortex was multiplied by a single scale factor, and then a single shift factor was added. The scale and shift factors were obtained from the data using the mean location shift response curve, averaged across participants, post-shift locations, and cortical hemispheres. First, the data response curve was shifted so that, at a location shift of 0 °, the response magnitude was zero. The size of this shift was taken as the shift factor (it was assumed to reflect the amount of noise in the EEG responses). The shifted response curve was then divided, point-by-point, by the mean response curve produced by the computational model. These values were averaged (excluding the 0 ° point) and the average served as the scale factor.

In summary, the model contained six parameters: one for the spatial channels (SD—the sharpness of tuning; recall that M—the point of maximum slope—was set to 0 ° in line with the findings from previous neurophysiological studies, and that the left and right hemispace channels were assumed symmetric), one weighting parameter for each auditory cortex (as ω L and ω R always summed to 1), one compression factor (k), one scale factor, and one shift factor. The scale factor, shift factor, and weighting parameters were meaningfully computed from portions of the data, as described above. SD and k served as free parameters that were fitted to the data by minimizing the error sum of squares. The parameters of the model are summarized in Table 1 (including whether each parameter was derived from, or fitted to, the EEG data). The model is available to download as a Microsoft Excel document, or as a Matlab program, from ModelDB (http://senselab.med.yale.edu/ModelDB/; accession number 146050).

Model fits

The model was fitted to the location shift response curves averaged across participants (Fig. 9, top). The fit was very good, with RMSE 4.0 nA m and an R 2 of 0.98. The model also reproduced the full pattern of results seen in the data response curves. Notably, the response curves for more central post-shift locations were sharper than those for more peripheral locations, while the curves for more peripheral locations had a larger dynamic range. These observations were confirmed when the location shift response curves were fitted with exponential functions (Fig. 9, bottom), as was done for the data curves in Figure 7. The sharper curves for more central locations arose in the model because the steepest portions of the spatial tuning curves lay at the midline. Thus, a shift near 0 ° corresponded to a greater change in channel output than a shift near 60 °. The larger dynamic range for more peripheral locations arose because there was a larger range of channel outputs to traverse along the spatial tuning curves if the end-point was at an extreme location. If the end-point was at the midline, then only around half of the spatial tuning curve was left to traverse.

Location shift response curves produced by the opponent channel computational model, with corresponding exponential fit parameters. Figure details, including axis scales, are the same as for Fig. 7.

Figure 8B (right) shows differences between the modeled responses to outward- and inward-going location shifts, for two post-shift locations (−30 and +30 °) for each auditory cortex. This panel can be compared directly with the observed data (Fig. 8B, left). As in the data, the model produced larger responses to outward-going, than inward-going, location shifts. The model also correctly produced the smallest out-minus-in value for the left auditory cortex and the left post-shift location, as well as the large difference between out-minus-in values in the left auditory cortex and the small difference between values in the right cortex. In the model, these effects arose due to the differential weighting of the ipsilateral and contralateral tuned channels in the left and right auditory cortices (the contralateral channel was weighted more heavily in the left auditory cortex, while the channels were weighted more equally in the right cortex).

The fitted value of SD, the sharpness of tuning of the spatial channels, was 46 °, and the fitted value of the compression factor, k, was 7. SD refers to the standard deviation of the Gaussian underlying the cumulative Gaussian used to model the channel tuning curves. Smaller values indicate sharper tuning and larger values indicate broader tuning. Figure 10 shows a plot of the RMSE between the modeled location shift response curves and the data response curves, as a function of the SD model parameter, with compression factor, k, held constant at 7 (allowing k to vary just lowers the right tail of the RMSE curve somewhat). The red dot indicates the fitted value (46 °), the point of lowest RMSE (4.0 nA m). The horizontal bar above the dot indicates the 95 % confidence interval for this fitted value, calculated by fitting the model to data from individual participants. This plot indicates that the critical feature of the computational model is that channel tuning is relatively broad. Decreasing tuning sharpness (i.e., increasing SD) has much less of an effect on the model fit than does increasing tuning sharpness (i.e., decreasing SD).

Root mean square error (RMSE) between the observed and modeled location shift response curves, as a function of the key parameter of the computational model—the standard deviation (SD) of the Gaussian underlying the channel tuning curves. Larger and smaller values of SD correspond to broader and sharper channel tuning, respectively. For this plot, the compression factor of the model, k, was fixed at its fitted value of 7. Allowing k to vary merely lowers the RMSE for larger values of SD. The red dot marks the point of minimum RMSE, which occurs at an SD of 46 °. The horizontal bar above the dot denotes the 95 % confidence interval for this parameter estimate, calculated from fits of the computational model to data from individual participants.

Estimates of psychophysical minimum audible angles

We sought to use the computational model to predict a psychophysical measure of spatial acuity, the minimum audible angle (MAA). The MAA is the smallest detectable spatial separation between two consecutive sound sources, one of which is at a specified reference location. The output of the opponent channel model, f(x), upon which perception is assumed to be based, was calculated as the difference in response between the left and right spatial channels, i.e., W r − W l (the tuning of the spatial channels being that determined from the EEG data, i.e., SD of 46 °). Thus, a larger response from the right, than left, channel, corresponding to a positive model output, would signal a sound source in the right auditory hemispace. The model output changes most rapidly with respect to x (azimuth in degrees) for midline sound source location. Thus, if it is assumed that, for any reference value of x, the smallest detectable location shift corresponds to a fixed change in f(x), the model predicts greater spatial acuity for midline sound sources.

The reciprocal of the rate-of-change of f(x) with respect to x was used to obtain quantitative estimates of the MAA for different reference locations. Larger values of the reciprocal (that is, smaller rates-of-change of f(x)) suggest larger MAAs. The reciprocal was multiplied by a constant, c, to obtain a measure of MAA. Thus,

where f′(x) is the rate-of-change of f(x) with respect to x. The value of c should be derived from psychophysical measures of the MAA for stimuli similar to those used in the current study, for a reference azimuth of 0 ° (MAA0). The value of c is then f′(0)·MAA0. Therefore, the full equation for the MAA is:

Litovsky and Macmillan (1994) reported a mean MAA0 value of 1.48 ° for short, wideband (500–8,500 Hz) noise bursts. Grantham (1995, Fig. 14A) summarized, with a curve fit, the results of a number of studies that have measured MAAs for low-frequency pure tones; the MAA0 value in this case was 1.54 °. As these two values for MAA0 are similar, their mean, 1.51 °, was used in the computational model. The value of f′(0) produced by the model was 0.0183 and thus c was 0.0276.

Figure 11 displays MAAs predicted by the model for reference azimuths between 0 and 75 ° (dashed red line). Note that, in terms of the MAA predictions, the model has no free parameters. The two parameters are the channel tuning width (SD), which was determined by the EEG data, and the value of MAA0, which was determined from the previous literature. The model reproduced the oft-described increase in MAA with increasing reference azimuth (Middlebrooks and Green 1991). Litovsky and Macmillan also measured MAAs for reference azimuths of 25 and 50 °, while Grantham’s curve fit explained data points at reference azimuths of 30, 45, 60, and 75 °. The MAA values from these studies are plotted together in black in the same figure. In summary, the model predictions follow the measured MAAs closely, though they underestimate the MAA for the largest reference azimuth (75 °).

Minimum audible angles (MAAs) as a function of reference azimuth, produced by the computational model (dashed red line), alongside those reported in psychophysical studies by previous authors (solid black line and circles). Note that the model prediction for the 0 ° reference azimuth was fixed at that observed in the psychophysical data (the model predicts the relative differences in MAA between the 0 ° and the more lateral reference azimuths). MAAs for reference azimuths of 25 and 50 ° were obtained from Litovsky and Macmillan (1994), and those for other reference azimuths were obtained from Grantham (1995).

Fits of a topographic model to the EEG data

To examine to what extent a topographic model could account for the responses to location shifts measured with EEG, an alternative computational model was developed. This model consisted of an array of 181 spatial channels with Gaussian tuning and peaks at azimuths from −90 to +90 ° in 1 ° intervals. The tuning widths (standard deviations of the Gaussians) could be varied independently of the peak response amplitudes. The widths, or amplitudes, could be identical across all channels in the array, or they could vary linearly between two values, one for the centermost (0 °) channel and one for the most peripheral (±90 °) channels. The variation in the amplitude of the peaks was intended to model differences in the number of neurons contributing to each spatial channel (see Fig. 1B, C), and thus to see whether assuming a larger number of neurons tuned to midline, than peripheral, locations could explain the EEG data. The variation in the channel widths was intended to model an alternative topographic model, in which neurons tuned to locations near the midline are more sharply tuned than neurons tuned to more peripheral locations. EEG responses to location shifts were calculated in essentially the same way as in the opponent channel computational model. That is, in each spatial channel, the activity to the pre-shift location was subtracted from the activity to the post-shift location and all positive differences were summed across channels. The location shift response curves produced by this process were normalized to their maximum value, and then subjected to compression, scaled and shifted as in the opponent channel model. In these analyses, no attempt was made to model differences between the auditory cortices. The model was always fitted (by minimizing the sum-of-squares error, SSE) to the average EEG data (averaged across participants) from the right auditory cortex, as this cortex showed little preference for the right or left hemispace.

The EEG location shift response curves contain 25 data points. Each model fit requires at least three parameters (compression factor, scale, and shift factors). Fits were compared by submitting the SSEs to F tests, which take into account differences in model complexity. Essentially, the F tests ask whether the more complex model fits the data significantly better than would be expected merely by increasing the number of fitted parameters. Each model fit was examined to see whether it reproduced the three key aspects of the EEG location shift response curves: greater sharpness for post-shift locations closer to the center (0 ° > ±30 ° > ±60 °); greater dynamic range for the most peripheral post-shift locations (±60 ° > ±30 ° and ±60 ° > 0 °, the ±30 ° versus 0 ° comparison was not included as this difference was not significant in the EEG analysis); and larger responses to outward-going, than inward-going, location shifts for the ±30 ° post-shift locations.

Firstly, as a baseline, the tuning width and amplitudes were allowed to vary, but were constrained to be identical across channels. The fitted tuning width was 30 °. The SSE was 463 nA m2 and neither the dynamic range, nor the outward-versus-inward, criteria were met. The sharpness criterion was met; this was because the most extreme spatial channels had peaks at ±90 ° so, with the channels having standard deviations of 30 °, responses to more central post-shift locations received contributions from a greater number of channels than responses to more peripheral locations.

In the next fit, the peak amplitude was allowed to vary across channels, with the constraint, imposed by the topographic model, that the amplitudes for central channels were greater than, or equal to, the amplitudes for peripheral channels. The results were identical to those produced with the amplitude constrained to be the same across channels, as the minimum SSE was obtained when the amplitude was identical for central and peripheral channels. Exploration of parameter values revealed that part of the difficulty the model had in reproducing the data location shift response curves arose because the modeled response curves had larger dynamic range for central, than peripheral, post-shift locations. In addition, responses were larger to inward-going, than outward-going, shifts. Both effects are contrary to the data.

Interestingly, removing the constraint on the peak amplitudes greatly improved the model fit. The obtained SSE was 308.35 nA m2, and all three criteria (sharpness, dynamic range, and larger responses to outward-going shifts) were met. The fitted peak amplitude was 0 for the centermost channel and 0.9 for the most peripheral channels. Thus, channels near the center contributed little to the location shift response curves produced by the model (indeed, the centermost channel contributed nothing). The fitted tuning width was 55 °. This model, having six parameters, was compared to the model in which the amplitudes and widths of the channels were the same for the central and peripheral locations (five parameters), and the improvement was significant [F(1, 19) = 9.548, p = 0.006]. Subsequently, the peak amplitude was fixed across channels and the tuning width was allowed to vary. Even when the width could vary in any manner across the channels (narrower or broader for more central locations), the fit was not significantly better than that observed when neither the width nor the amplitude could vary across channels [F(1, 19) = 2.24, p = 0.151]. Moreover, when the width could vary in any manner, and the amplitude could vary but only in a manner consistent with the topographic model (greater, or equal, for more central locations), the model fit remained poor, with an SSE of 449 nA m2 [F(2, 18) = 0.281, p = 0.758, relative to the baseline case]. Only when the amplitude could vary in any manner did the model fit improve (and all three criteria were met). In this case, the SSE was 299.97 nA m2, a significant reduction relative to the baseline case [F(2, 18) = 4.300, p = 0.020]. The peak amplitudes were 0 and 0.9 for channels at 0 and ±90 °, respectively. The corresponding tuning widths were 40 and 60 °.

Thus, the computational model best fitted the EEG data when the model assumed a greater number of neurons tuned to peripheral, than central, locations. To see whether, despite their reduced size, the central channels made an important contribution to the modeled responses, an additional parameter was introduced into the model. The value of this “cut-off” parameter was the azimuth magnitude below which all channels had zero peak amplitude. Thus, if the central channels played an important role, the fitted value of the cut-off parameter should be near 0 °, whereas if the central channels played an insignificant role, the fitted value could be higher. For this analysis, above the cut-off, both the peak amplitude and tuning width stayed the same. The SSE was 282 nA m2, the best so far, indicating that, far from being important to the model predictions, the central channels were actually detrimental. All criteria were met and the model fitted significantly better than the baseline case [F(1, 19) = 12.248, p = 0.002]. The value of the cut-off parameter was 55 °, the channel peak was 0.8, and the width was 75 °. A final fit, allowing the peak amplitude to vary above the cut-off, produced a similar result [SSE of 281 nA m2, with all criteria met. Cut-off parameter of 60 ° and peak amplitudes ranging from 0.9 at ±60 ° to 0.3 at ±90 °. Channel width of 75 °. The fit was significantly better than baseline, F(2, 18) = 5.813, p = 0.011]. In summary, the best versions of the topographic model included only broadly tuned channels at peripheral spatial locations. Structurally and functionally, these models are very similar to the opponent channel computational model.

Discussion

The current study measured neural responses from the human auditory cortex to shifts in sound source location in the sound field, using EEG. Responses were larger than responses to matched spectral and level changes, compatible with the idea that the location shift responses reflected both monaural and binaural location cues. Responses to outward-going shifts were larger than responses to inward-going shifts, consistent with an opponent channel model of spatial representation, but inconsistent with a topographic model. A computational opponent channel model explained other key aspects of the EEG data. One aspect was greater sensitivity to small location shifts near the midline than near the periphery. The model could account for this as the steepest portions of the tuning curves for the opponent channels lay near the midline. Thus, small location shifts near the midline led to larger changes in channel output than small shifts near the periphery. A second key aspect was larger dynamic range for responses toward more peripheral, than more central, locations. This was predicted as there was a larger range of channel outputs to traverse along the spatial tuning curves if the end-point was at an extreme location. Thirdly, responses were generally larger to contralateral, than ipsilateral, post-shift locations, particularly in left auditory cortex. The model could account for this by assuming that the response from each auditory cortex reflects a weighted sum of the responses from the left hemispace and right hemispace opponent channels, and that, more so in the left auditory cortex, the weight for the contralateral channel is greater.

Using the parameters derived from fits to the EEG data, the model was used to make predictions of psychophysical spatial acuity for different reference locations. The model predicted the oft-reported increase in spatial acuity for reference locations near the midline, than near the periphery. Spatial acuity was predicted to be the greatest near the midline because the outputs of the spatial channels changed most rapidly with azimuth in this region. Interestingly, this is the region in which the acoustic cues for location change most rapidly with azimuth when stimuli are presented in the sound field (Middlebrooks and Green 1991), an effect that could contribute to the shape of neural spatial tuning. Spatial acuity predictions made by the computational model closely followed those measured psychophysically by previous authors.

Finally, an alternative, topographic, computational model was produced and fitted to the EEG data. The best model fit, both in terms of minimum residual error and in terms of reproducing key aspects of the EEG data, arose when the model parameters took values such that the model closely resembled the opponent channel model. The primary contribution of this study, therefore, is the demonstration, using data and computational modeling, that the opponent channel model best accounts for neural responses from human auditory cortex and can reproduce psychophysical properties of sound localization.

Plausibility of the opponent channel account

Evidence for the opponent channel model came originally from single-unit recordings in small-headed mammals. Using stimuli presented from loudspeakers in the sound field, or stimuli presented over headphones that differed solely in ITD or ILD, these studies found that single units in auditory cortex and subcortex displayed broad spatial tuning with peaks mostly at extreme positions in the contralateral hemispace (Phillips and Irvine 1981; Phillips and Brugge 1985; McAlpine et al. 2001; McAlpine 2005; Stecker et al. 2005). Narrow tuning, with different neurons tuned to different positions throughout the auditory space, consistent with the classic topographical model of spatial representation, has been found mainly in birds, notably the barn owl (Konishi 2003).

The evidence provided by the current study for opponent channel representation of space in the human auditory cortex is consistent with the recent work by Magezi and Krumbholz (2010) and Salminen et al. (2009, 2010a). Magezi and Krumbholz used EEG with a CSP paradigm to obtain evidence for opponent channel coding of ITD in the left auditory space. Salminen et al. (2010a) obtained similar evidence using MEG and a paradigm based on response adaptation (also known as “repetition suppression”; Grill-Spector et al. 2006). Salminen et al. (2009) also used MEG adaptation, but presented stimuli in such a way so as to retain the full range of cues for sound location. They found that the extent to which an adapter stimulus suppressed the response to a subsequent probe stimulus presented in the left auditory space depended primarily on whether the adapter was located in the same, or different, hemispace, rather than on the size of the spatial separation between the stimuli as would be expected from the topographic model. This result is predicted by the opponent channel model, assuming that stimuli in the same hemifield activate, and thus adapt, the same spatial channel, whereas stimuli in different hemifields largely activate different channels.

Differences in spatial representation between the auditory cortices

In its original form, the opponent channel model postulated left- and right-sided neural structures each tuned to the contralateral hemispace (van Bergeijk 1962). Consistent with this conception, the majority of neurons in macaque primary auditory cortex and caudomedial field respond most strongly to contralateral locations, though ipsilateral and midline tuned neurons are also present (Recanzone et al. 2000). Stecker et al. (2005) proposed instead that each auditory cortex in mammals contains both contralateral and ipsilateral tuned channels, though the contralateral channel is dominant. Our results are consistent with the view that, in humans, each auditory cortex contains both contralateral and ipsilateral channels. In addition, we found that the left auditory cortex responds more strongly to contralateral, than ipsilateral, post-shift locations, whereas the right auditory cortex responds similarly to contralateral and ipsilateral locations. Our computational model was able to match the detailed pattern of the measured location shift response curves when the difference between the cortices in contralateral and ipsilateral sensitivity was included. Our results are consistent with previous neuroimaging work by Krumbholz et al. (2005, 2007) and Johnson and Hautus (2010). Moreover, while patients with left temporal lesions can exhibit sound localization deficits, deficits are typically greatest in the contralateral hemispace (Clarke et al. 2000), whereas deficits in patients with right temporal lesions are considerably more pronounced and cover the whole auditory space (Zatorre and Penhune 2001). This has been confirmed recently with a large number of patients by Spierer et al. (2009). Interestingly, recent work by Salminen et al. (2010b), using MEG adaptation, has come to the opposite conclusion, namely, that the right auditory cortex prefers contralateral to ipsilateral space, while the left auditory cortex shows little preference. One potential reason given by Salminen et al. for this discrepancy is that much of the previous work demonstrating contralateral preference in the left auditory cortex used stimuli containing ITDs or ILDs alone, while Salminen et al. used stimuli containing the full range of location cues. However, the current study presented stimuli in the sound field, thus preserving ITD, ILD, and monaural spectral cues, but still found larger contralateral preference in left auditory cortex. Another reason they suggest is that many previous studies have used location shifts presented in an ongoing sound, thus potentially giving rise to the percept of movement. Location shifts in the study of Salminen et al. occurred in consecutive sounds separated by silent gaps, so presumably elicited less of a movement percept. Location shifts were abrupt in the current study, but nevertheless occurred during continuous stimulation, so this explanation remains a possibility to be explored in future work, particularly as Getzmann (2009) has shown changes in the topography of cortical responses as a function of the velocity of sound motion.

Representation of interaural cues

It is unclear whether the location shift responses arose from a mixture of neurons, each sensitive to a single spatial cue, or from neurons sensitive to the combination of ITD, ILD, and spectral cues. ITDs and ILDs are initially coded separately, in the medial, and lateral, subdivisions of the brainstem superior olivary complex, respectively (Tsuchitani and Johnson 1991; Irvine 1992). The extent to which the cues are combined at later processing stages to form a unified representation of sound source location is a subject of debate. Phillips et al. (2006) measured the perceived laterality of a test stimulus as a function of its ITD or ILD, before and after presentation of a highly lateralized adapter stimulus. The adapter was lateralized with the different location cue to the test. The authors found shifts in the perceived laterality of the test stimulus away from the adapted side, and these shifts were of the same magnitude as those found in an earlier study when the adapter and test shared the same location cue (Phillips and Hall 2005). This outcome suggests that, at some stage in the auditory pathway, there is a common neural substrate for the processing of ITDs and ILDs. The above view is consistent with earlier studies demonstrating an interaction between the effects of ITD and ILD on the responses of cat (Hall 1965; Brugge et al. 1969; Yin et al. 1985) and bat (Pollak 1988; Grothe and Park 1995) single neurons. For example, Brugge et al. (1969) described neurons in cat auditory cortex whose response to a contralateral stimulus was reduced as the level of an ipsilateral stimulus was increased (corresponding to a reduction in ILD). The response reduction could be abolished by introducing an ITD favoring the contralateral ear. However, Schröger (1996) and Ungan et al. (2001) have provided EEG evidence for an, at least partial, separation of ITD and ILD processing in human auditory cortex. Schröger (1996) measured differences between responses to frequent, midline-lateralized (zero ITD and ILD), tones and responses to infrequent tones lateralized with ITD, ILD, or the combination of ITD and ILD. Consistent with parallel processing of ITD and ILD, the surface-negative portion of the difference response (termed the “mismatch negativity,” or MMN; Näätänen et al. 2007) was larger for the combination stimulus than for either the ITD or ILD stimulus alone. Moreover, the combination MMN was similar in magnitude to the sum of the ITD- and ILD-only MMNs. Ungan et al. (2001) found that the N1 responses to brief shifts in ITD or ILD superposed on rapid click trains had significantly different scalp topographies and significant differences in underlying generators (using equivalent current dipole modeling).

Attention

Teder-Sälejärvi and Hillyard (1998; see also Teder-Sälejärvi et al. 1999) suggest that attention can enhance auditory spatial tuning. In their EEG study, a noise was presented from one of seven loudspeakers on each trial; participants were required to attend to the leftmost, rightmost, or central loudspeaker. Evoked responses were largest to stimuli presented from the attended location and decreased in amplitude as a function of the spatial separation between the presented and attended locations. The current study used a passive listening paradigm, in which participants watched a subtitled film of their choice displayed on a screen below the loudspeaker from straight ahead. When questioned, participants typically recalled little about the stimuli or presentation conditions, suggesting that the stimuli did not re-orient their attention, as might be expected given that each stimulus location was equiprobable and all stimuli had fixed length. Nevertheless, it remains possible that the sustained attention that participants had on the film, and thus, by implication, the straight-ahead spatial location, could explain some of the differences in the sharpness of the location shift response curves for different post-shift locations. It would be interesting to examine whether location shift response curves like those measured in the current study change if the direction of attention no longer aligns with the direction of gaze. If so, computational modeling could help to decide whether such a change results from a reduction in the widths of the channel tuning curves (“attentional sharpening”), or an increase in the output of either channel (“attentional gain”).

References

Bell AJ, Sejnowski TJ (1995) An information-maximization approach to blind separation and blind deconvolution. Neural Comput 7:1129–1159

Briley PM, Breakey C, Krumbholz K (2012) Evidence for pitch chroma mapping in human auditory cortex. Cereb Cortex. doi:10.1093/cercor/bhs242

Brugge JF, Dubrovsky NA, Aitkin LM, Anderson DJ (1969) Sensitivity of single neurons in auditory cortex of cat to binaural tonal stimulation; effects of varying interaural time and intensity. J Neurophysiol 32:1005–1024

Carr CE, Konishi M (1990) A circuit for detection of interaural time differences in the brain stem of the barn owl. J Neurosci 10:3227–3246

Clarke S, Bellmann A, Meuli RA, Assal G, Steck AJ (2000) Auditory agnosia and auditory spatial deficits following left hemispheric lesions: evidence for distinct processing pathways. Neuropsychologia 38:797–807

Delorme A, Makeig S (2004) EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods 134:9–21

Gatehouse S, Noble W (2004) The Speech, Spatial and Qualities of Hearing Scale (SSQ). Int J Audiol 43:85–99

Getzmann S (2009) Effect of auditory motion velocity on reaction time and cortical processes. Neuropsychologia 47:2625–2633

Grantham DW (1995) Spatial hearing and related phenomena. In: Moore BCJ (ed) Hearing. Academic, San Diego, pp 297–345

Grill-Spector K, Henson R, Martin A (2006) Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Neurosci 10:14–23

Grothe B, Park TJ (1995) Time can be traded for intensity in the lower auditory system. Naturwissenschaften 82:521–523

Grothe B, Pecka M, McAlpine D (2010) Mechanisms of sound localization in mammals. Physiol Rev 90:983–1012

Hall JL (1965) Binaural interaction in the accessory superior-olivary nucleus of the cat. J Acoust Soc Am 37:814–823

Hall DA, Haggard MP, Akeroyd MA, Summerfield AQ, Palmer AR, Elliott MR, Bowtell RW (2000) Modulation and task effects in auditory processing measured using fMRI. Hum Brain Mapp 10:107–119

Hewson-Stoate N, Schönwiesner M, Krumbholz K (2006) Vowel processing evokes a large sustained response. Eur J Neurosci 24:2661–2671

Irvine DRF (1992) Physiology of the auditory brainstem. In: Popper A, Fay R (eds) The mammalian auditory pathway: neurophysiology. Springer, New York, pp 153–231

Jeffress LA (1948) A place theory of sound localization. J Comp Physiol Psychol 41:35–39

Johnson BW, Hautus MJ (2010) Processing of binaural spatial information in human auditory cortex: neuromagnetic responses to interaural timing and level differences. Neuropsychologia 48:2610–2619

Key APF, Dove GO, Maguire MJ (2005) Linking brainwaves to the brain: an ERP primer. Dev Neuropsychol 27:183–215

Kitterick PT, Lovett RES, Goman AM, Summerfield AQ (2011) The AB-York crescent of sound: an apparatus for assessing spatial-listening skills in children and adults. Cochlear Implant Int 12:164–169

Knudsen EI (1982) Auditory and visual maps of space in the optic tectum of the owl. J Neurosci 2:1177–1194

Konishi M (2003) Coding of auditory space. Ann Rev Neurosci 26:31–55

Krumbholz K, Schönwiesner M, von Cramon DY, Rübsamen R, Shah NJ, Zilles K, Fink GR (2005) Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cereb Cortex 15:317–324

Krumbholz K, Hewson-Stoate N, Schönwiesner M (2007) Cortical response to auditory motion suggests an asymmetry in the reliance on inter-hemispheric connections between the left and right auditory cortices. J Neurophysiol 97:1649–1655