Abstract

Neuronavigation has become an essential neurosurgical tool in pursuing minimal invasiveness and maximal safety, even though it has several technical limitations. Augmented reality (AR) neuronavigation is a significant advance, providing a real-time updated 3D virtual model of anatomical details, overlaid on the real surgical field. Currently, only a few AR systems have been tested in a clinical setting. The aim of this work is to review such devices. We performed a PubMed search of reports restricted to human studies of in vivo applications of AR in any neurosurgical procedure using the search terms “Augmented reality” and “Neurosurgery.” Eligibility assessment was performed independently by two reviewers in an unblinded standardized manner. The systems were qualitatively evaluated on the basis of the following: neurosurgical subspecialty of application, pathology of treated lesions and lesion locations, real data source, virtual data source, tracking modality, registration technique, visualization processing, display type, and perception location. Eighteen studies were included during the period 1996 to September 30, 2015. The AR systems were grouped by the real data source: microscope (8), hand- or head-held cameras (4), direct patient view (2), endoscope (1), X-ray fluoroscopy (1), and head-mounted display (1). A total of 195 lesions were treated: 75 (38.46 %) were neoplastic, 77 (39.48 %) neurovascular, and 1 (0.51 %) hydrocephalus, and 42 (21.53 %) were undetermined. Current literature confirms that AR is a reliable and versatile tool when performing minimally invasive approaches in a wide range of neurosurgical diseases, although prospective randomized studies are not yet available and technical improvements are needed.

Introduction

During the last 15 years, neuronavigation has become an essential neurosurgical tool for pursuing minimal invasiveness and maximal safety [14]. Unfortunately, the ergonomics of such devices are still not optimal [23]. The neurosurgeon has to look away from the surgical field into a dedicated workstation screen. Then, the operator is required to transfer the information from the “virtual” environment of the navigation system to the real surgical field. The virtual environment includes virtual surgical instruments and patient-specific virtual anatomy details (generally obtained from pre-operative 3D images). Augmented reality (AR) allows merging data from the real environment with virtual information and vice versa [33]. In the context of surgical navigation, AR may represent the next significant technological development because AR complements and integrates the concepts of traditional surgical navigation that rely solely on virtual reality [1]. The main goal of AR systems is to provide a real-time updated 3D virtual model of anatomical details, overlaid on the real surgical field. In this sense, AR is the process of enriching reality with additional virtual content.

In neurosurgery, there is a special need for AR to enhance the surgeon’s perception of the surgical environment. The surgical field is often small and the neurosurgeon has to develop an “X-ray” view through the anatomical borders of the surgical approach itself [37] in order to avoid unnecessary manipulation or inadvertent injuries to vascular or nervous structures, which becomes even more important with the introduction of minimally invasive neurosurgery mandating the smallest possible accesses for a given intracranial pathology [35]. Although the benefits to patients of minimally invasive neurosurgery are well established, the use of small approaches still represents a surgical challenge.

Although AR in neurosurgery is a promising frontier and several devices have been tested in vitro [19, 21], the clinical experience with such systems appears to be quite limited [3–5, 8, 11, 14, 15, 17, 22, 23, 29, 32]. We present a literature review aiming to describe and evaluate the advantages and shortcomings of each of the different AR setups tested in vivo in humans, to understand the efficacy of AR in the treatment of neurosurgical diseases, and to define potential future research directions.

Materials and methods

The present review was conducted according to the PRISMA statement criteria [27]. The literature search was updated to September 30, 2015. No other temporal limits were applied. The search was restricted to human studies. The inclusion criterion was as follows: report of a human in vivo application of AR in any neurosurgical procedure. Exclusion criteria were as follows: surgical simulation in virtual environment, in vitro studies, language of publication other than English, lack of new original experiments on humans, field of application other than neurosurgery, commentaries, and abstracts. The search was performed using the PubMed database and scanning reference lists of the resulting articles. The search terms were “Augmented reality” and “Neurosurgery.” Eligibility assessment was performed independently in an unblinded standardized manner by two reviewers (AM and FCa). Disagreements between reviewers were resolved by consensus. The following clinical data were extracted from each paper: neurosurgical subspecialty of application (neurovascular surgery, neuro-oncological surgery, non-neurovascular, non-neuro-oncological), lesion pathology, and lesion location. We evaluated a number of additional relevant technical aspects, as listed, in part, in the data, visualization processing and view (DVV) taxonomy published in 2010 [19]: real data source, virtual data source, tracking modality, registration technique, visualization processing (AR visualization modality), display type where the final image is presented, and perception location (where the operator focused).

Unfortunately, qualitative parameters concerning the clinical usefulness and feasibility of the presented systems were not gathered primarily because of the subjective nature of the evaluation by the operators and the lack of consensus as to the definition of the qualitative parameters and, consequently, the evaluation tools, such as questionnaires. In a similar fashion, the accuracy of the AR systems was not included because, when reported, its definition was not consistent across different papers, obviating a meaningful comparison.

Finally, due to the nature of the studies (small case series) and the subjective nature of the qualitative assessments, publication bias should be considered. For the same reasons, no statistical analysis was performed.

Results

A total of 18 studies were included in our review. The PubMed search provided 60 items. No duplicates were identified. Of these, 44 studies were discarded because they did not meet the inclusion criteria: four papers were written in languages other than English, seven were in vitro studies, five were virtual reality studies, nine were reviews or commentaries, 12 papers were about disciplines other than neurosurgery (i.e., maxillofacial surgery, ENT surgery), seven papers were not pertinent to AR. The full text of the remaining 16 citations was obtained. After carefully reviewing the bibliography of each of the papers, two additional citations were included. No other relevant unpublished studies or congress abstracts were included. To the best of authors’ knowledge, no other pertinent papers are available today.
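The screening arithmetic above can be checked with a short tally. This is only an illustrative sketch using the counts reported in the text; the variable names and category labels are chosen here for readability and are not part of the original review.

```python
# Screening tally using the counts reported in the paragraph above.
identified = 60  # records returned by the PubMed search

# Records discarded at screening, by reason (labels are shorthand).
excluded = {
    "language other than English": 4,
    "in vitro studies": 7,
    "virtual reality studies": 5,
    "reviews or commentaries": 9,
    "disciplines other than neurosurgery": 12,
    "not pertinent to AR": 7,
}

added_from_reference_lists = 2  # found by scanning bibliographies

total_excluded = sum(excluded.values())
full_text_assessed = identified - total_excluded
included = full_text_assessed + added_from_reference_lists

print(total_excluded, full_text_assessed, included)
```

The three printed values correspond to the discarded records, the full texts obtained, and the studies finally included.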

Table 1 summarizes the 18 papers published from 1996 to September, 2015. The specific technical advantages and shortcomings of each system in the clinical setting are reported in “AR in neurosurgery: technical implementations” section and in Table 1; the clinical applications of AR in neurosurgery are illustrated in “AR in neurosurgery: clinical applications” section and Table 2.

AR in neurosurgery: technical implementations

AR systems are composed of functionalities and devices that may be the same although used in different implementations. The real and virtual data sources, the registration of the virtual content with the real content, the visualization of the AR content, and all the other factors shown in Table 1 are often handled with similar or identical approaches in the different systems described in the reported papers. Therefore, the discussion of some parts of selected papers reported here may appear somewhat redundant [20]. We describe the papers grouped by real data source because the type of capturing device used during the actual procedure is, from a surgical point of view, the most important factor.

Real data source

Overall, the real data sources were the microscope (eight studies), different types of cameras (four studies in total), including hand-held cameras (two studies) and head-held cameras (two studies), direct patient view with or without the interposition of a semitransparent mirror (one study each), endoscope (one study), X-ray fluoroscopy (one study), and, finally, a rudimentary head-mounted display (one study).

In most of the systems, the real data source is a surgical microscope. These systems allow overlaying 3D projections derived from preoperative surgical images into bilateral eyepieces of the binocular optics of the operating microscope, precisely aligned with the surgical field [3–5, 11, 16, 22, 34, 38]. To achieve a coherent fusion between real images and virtual content, these systems monitor microscope optics pose, focus, zoom, and all internal camera parameters [13]. This is an important advantage because other, simpler systems require manual alignment with the surgical field [29]. The microscope-based AR system represented by microscope-assisted guided interventions (MAGI) requires an invasive preoperative placement of skull-fixed fiducials and/or locking acrylic dental stents. More recently, surface-based registration approaches have been used [4] without any additional referencing device, as in modern conventional neuronavigation systems.
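To illustrate why zoom and the internal camera parameters must be monitored for the overlay to stay aligned, the following is a minimal sketch of a pinhole projection. It is not the method of any system reviewed here: the `Intrinsics` class, the `zoomed` helper, and all numeric values are assumptions made for illustration, and the point is assumed to be already expressed in the camera frame (that is, the tracked pose has been applied).

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics, in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def project(point_cam, k):
    """Project a 3D point given in the camera frame (mm) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def zoomed(k, factor):
    """Assumed zoom model: scale the focal lengths, keep the principal point."""
    return Intrinsics(k.fx * factor, k.fy * factor, k.cx, k.cy)


base = Intrinsics(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
target = (10.0, -5.0, 250.0)  # virtual landmark, mm, camera frame

u1, v1 = project(target, base)
u2, v2 = project(target, zoomed(base, 2.0))
print((u1, v1), (u2, v2))
```

The same virtual landmark lands on different pixels once the zoom changes, so an overlay rendered with stale parameters would drift away from the real anatomy.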

It is significant that a microscope-based AR system does not require the bayonet pointer typical of the common neuronavigation systems. In traditional neuronavigation systems, the bayonet pointer, tracked and shown in the external display, is the sole link between the real and the virtual environments. In fact, in order to see the correspondence between a real and a virtual point, the surgeon places the pointer tip on a real anatomical target and observes its correspondence with the virtual one. In an AR scene, the correspondence between the real and virtual worlds is shown on the augmented images themselves without any additional physical device as a pointer, which might be a potential source of damage in the surgical field. Additionally, when brain shift occurs during intradural maneuvers, the AR view can be used as a guide for a limited correction of the initial image coregistration [10, 16].

A special type of microscope-based AR system is created by a neuronavigation-tracked microscope that serves uniquely as an input source for software integrating the data with preoperative virtual models [34]. The image is not displayed in the microscope, but rather, on a screen separate from the actual surgical scene. This is probably due to technical issues related to the re-send of the augmented images as input data to the microscope display. These microscope-based AR systems have two main shortcomings: first, the microscope itself is not practical for the initial macroscopic part of the surgical procedure, consisting of skin incision, craniotomy, and dural opening; second, current microscopes display only a monoscopic visualization of the surgical field [23]. As a consequence, a potential stereoscopic virtual image is superposed on a bidimensional field. From a practical point of view, there are perceptual issues particularly related to depth perception of an AR scene [23].

In four papers, the real data source was an additional hand-held and/or head-held camera [14, 23, 29, 30]. These systems are based on the use of a camera connected to a neuronavigation system. Currently, four main setups have been reported.

The Dex-Ray [23, 30] consists of a small, lipstick-shaped video camera positioned on a tracked hand-held pointer. The AR scene consists of a rendered 3D virtual model superimposed on a bidimensional (monoscopic) view of the surgical field. Finally, the scene is shown on a display remote from the patient. Dex-Ray has several advantages and some limitations. There is a perfect alignment between the pointer and the camera, so the surgeon is aware of the spatial relationship between the tip of the pointer, the borders of the surgical corridor, and the target. Nevertheless, this feature has two limitations. First, in deep and narrow corridors, the camera has a limited ability to depict the anatomical structures due to lack of light and unsatisfactory magnification. Second, the surgeon’s viewpoint is different from that of the camera, with two main consequences: oculomotor issues occur due to camera movement, and the different points of view raise uncertainty as to the actual position of camera-recorded objects (parallax problem).

Additionally, the Dex-Ray requires the surgeon to look away from the surgical field to a screen where the AR scene is shown, rendering this setup quite similar to common neuronavigation systems. Unlike the microscope, the Dex-Ray can be handled easily without obstruction of the surgical field and can be conveniently used in all steps of the surgery, from skin incision to tumor resection.

The second camera-based setup is quite similar. The AR scene was created by overlapping intraoperative pictures (taken using a standard digital camera) on a 3D virtual model of the brain [29]. The virtual model was elaborated by dedicated neuroimaging 3D rendering software. The “real” intraoperative picture and the virtual model were matched using anatomical landmarks (sulci and gyri) and shown to the surgeon with a short delay. The AR system was then validated by verifying the actual position of the surgical target with intraoperative US or stereotactic biopsy. The main advantage of this system is that it is extremely cost-effective, making it a suitable option in developing countries where traditional commercially available neuronavigation systems are not available [29]. The two main disadvantages are that the image guidance is not displayed in real time, so the delay depends on the frequency of acquired pictures, and that the guidance becomes unreliable when lesions are far from the cortical surface because the sole anatomical landmark is almost lost. Conversely, it works surprisingly well for lesions hidden in the depth of a sulcus.

Recently, a new system was designed by using a hand-held or head-held camera tracked by a classical neuronavigation system [14]. The AR scene was displayed on a separate monitor by overlapping the 3D virtual model onto the real bidimensional surgical field, as acquired by the camera. The head-mounted camera partially resolves the issues related to a conflicting point of view, at least as far as camera movement is concerned. Nevertheless, it still requires that the surgeon look away from the actual surgical scene to observe the AR scene on a separate screen. Additionally, the point of view of the camera is not aligned with the surgeon’s line of sight. Consequently, eye-hand coordination may constitute a challenge.

More recently, a tablet-based AR system [8] was applied to neurosurgery. It consists of a navigational tablet that superimposes a virtual 3D model on the surgical field as it is recorded by the tablet’s posterior camera. In this case, the camera’s point of view can be considered aligned with the surgeon’s line of sight, offering favorable ergonomics in terms of eye-hand coordination. However, the tablet cannot be draped, so a second surgeon needs to hold the tablet while the operator performs the surgery. Although the tablet allows magnification of the surgical field, it cannot provide the magnification and resolution necessary for microsurgical use.

The real data source can be represented by the direct view of the patient’s head. Two systems were proposed with this aim in mind. One is based on commercially available video projectors with LED technology [2]. The virtual image is projected onto the patient’s head, and it is rigidly and statically registered by hand, moving the head or the projector until the fiducial points are aligned. The main advantage is a potential intuitive visualization of the site of the skin incision and craniotomy (not explained by the authors) with a highly ergonomic setup, potentially resolving the eye-hand coordination problem. Unfortunately, the point of view of the operator is not the same as that of the projector, so that a parallax error is created, primarily for deep structures [12].

In 1997, a new, interesting AR system was created [15], with a paradigm completely different from the previously described systems. It has continuously been improved during the ensuing years until the present [39, 40]. It consists of a semi-transparent mirror positioned at 45° in front of a light field display [26] developed with the integral imaging approach [28]. The display technology is the same as that employed in glass-free 3D television and allows obtaining a realistic full-parallax view of a virtual scene. The user can perceive motion parallax by moving with respect to the display. The half mirror allows the user to see the patient’s head with his/her unaided eyes, and this view is mixed with the full-parallax light field rendering of the virtual information. The registration of the CT or MRI patient-specific 3D model with the patient’s head is performed manually by aligning artificial markers [7]. The main advantage of the system is the full-parallax visualization of the virtual information and the unaided view of the real surgical environment, an advantage over a camera-mediated view in cases of open surgery.

The application of the AR to endovascular surgery consisted of the superimposition of a CT or MRI-derived 3D model of the vascular tree and its lesions on the real bidimensional image acquired, in this case, with angiography [32]. Since the craniotomy is not needed, the brain shift is null in this application field. Accordingly, it can be considered the one AR system that is completely reliable for surgical access into the brain.

The use of an endoscope with AR consists of the superimposition of virtual 3D models, obtained, as in the previous case, from CT or MRI images, on the bidimensional view of the surgical field as acquired by the endoscopic camera [17]. It requires registration of the patient’s head and the tracking of the rigid endoscope itself. Two types of information were shown on the traditional endoscopic monitor: the surgical target (and surrounding critical structures) and the position of the endoscope inside the nasal cavities. The second aspect may be especially important when an angled endoscope is used, as the endoscope axis differs from the surgeon’s viewing axis. Typical limitations of common endoscopes still persist, including the bidimensional view of the operative field and a limited magnification ability with respect to the surgical microscope.

The oldest system in our review, reported in 1996, did not show the virtual information superimposed and aligned with the real anatomy [9]. The graphic user interface essentially shows the surgeon the tip of a magnetically tracked digitizer with respect to the CT or MRI pre-operative images, as in traditional (non-AR) neuronavigators. This pioneering system can be considered a first example of AR, as the authors employed a semi-transparent head-mounted display so as to offer the user the possibility of seeing the navigator images and the real patient at the same time.

Tracking

Overall, tracking was not needed in four setups; when tracking was performed, optical trackers were generally used (13 studies). Magnetic tracking was reported in only one of the oldest reports, from 1996 [9].

There is wide consensus that optical tracking is the best option for AR systems in neurosurgery [6]. In fact, optical tracking is very practical because it does not require wires to connect the tracked object. Additionally, it is readily available because it can rely on widely available cameras included in smartphones, tablets [8], and digital recording cameras [14]. The main shortcoming is that tracked objects have to be in the line of sight of the tracking system.

Registration technique

Patient registration was mainly based on superficial fiducial markers (six studies), on face surface matching systems (six studies), or on manual procedures/refinement (five studies). Skull-implanted or dental-fixed fiducials were used in two older reports in 1999 and 2004 [11, 22].

Thus, in most of the reported studies, the image registration is based on fiducial markers or on skin surface identification. Indeed, these two techniques proved to be faster and more accurate than manual registration [25], as well as less invasive and laborious than skull-implanted or dental-fixed fiducials.

Visualization processing

Visualization processing represented the virtual content in several different ways, including the following: surface mesh (eight studies), texture maps (four studies), wireframes (three studies), and transparencies (three studies). Older reports described rudimentary visualizations: in one case [9], the MRI slices were directly visualized in a fashion similar to the current neuronavigation devices; in another case [15], a light field object rendering was performed.

The visualization processing affects the global manner (“style”) that is used to represent the virtual content. From a practical point of view, visualization processing should be as simple and intuitive as possible, resembling the real-life experience: wireframes and texture maps are less intuitive than surface meshes because the latter clearly and realistically represent the margin and shape of the object of interest. Indeed, they were used in seven of the more recent studies (Table 1).

Display type and perception location

The display type determines the perception location that can be a remote monitor (eight studies) or the patient himself (ten studies). The first type of perception location is provided by a hand-held camera (two studies), a head-held camera (two studies), a digital camera (one study), an endoscope (one study), an X-ray fluoroscopy (one study), and a microscope when the AR scene is projected on an external monitor (one study) instead of eyepieces. The latter kind of perception location is achieved by using several different displays, including microscope eyepieces (five studies) or oculars via an external beam (two studies), a tablet display, a light field display with a 45-degree-oriented mirror, or an image created by a common video projector (one study each).

The perception location has two main practical consequences. First, when the AR is displayed on an external monitor [23, 29, 30, 34], the surgeon has to move his/her attention from the actual surgical field to the monitor, in order to gather information that will be “mentally” transferred to the real surgical field, as currently occurs with the neuronavigation systems. In contrast, a perception location on the patient is much more intuitive. This goal can be achieved either by the unaided eye [2] or by devices presenting the AR scene in the line of view between the surgeon and the surgical field, as in the case of the microscope [3–5, 16, 38], the tablet [8], and the light field display [15].

The second crucial aspect is the different points of view of the same surgical target that can be achieved by the surgeon’s eye and optic devices (the parallax problem). When the surgeon’s point of view is the same as that of the real data source, there is no mismatch between what the operator sees and what the device actually captures. Conversely, when the operator and the data source have different points of view, there may be uncertainty as to the actual position of the target [23]. The position of the AR display in relation to the surgeon is very important also for future developments.
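The parallax problem can be made concrete with a small geometric sketch. It assumes a flat surface at z = 0, a target below it, and two viewpoints above it (the device and the surgeon); the function names and all coordinates are hypothetical and are not taken from any of the reviewed systems.

```python
import math


def surface_crossing(viewpoint, target):
    """Point where the line of sight from viewpoint to target crosses z = 0.

    Assumes the viewpoint is above the surface (z > 0) and the target is
    on or below it (z <= 0). Coordinates are in millimetres.
    """
    vx, vy, vz = viewpoint
    tx, ty, tz = target
    if vz <= 0 or tz > 0:
        raise ValueError("viewpoint must be above and target on/below z = 0")
    t = vz / (vz - tz)  # fraction of the way from viewpoint to target
    return (vx + t * (tx - vx), vy + t * (ty - vy))


def parallax_error(view_a, view_b, target):
    """Distance on the surface between the two apparent target positions."""
    ax, ay = surface_crossing(view_a, target)
    bx, by = surface_crossing(view_b, target)
    return math.hypot(ax - bx, ay - by)


device = (0.0, 0.0, 500.0)     # e.g. projector or camera, straight above
surgeon = (200.0, 0.0, 500.0)  # surgeon's eye, offset by 200 mm

shallow = parallax_error(device, surgeon, (0.0, 0.0, -10.0))
deep = parallax_error(device, surgeon, (0.0, 0.0, -50.0))
print(round(shallow, 2), round(deep, 2))
```

Under these assumptions the mismatch vanishes when the two viewpoints coincide or the target lies on the surface, and it grows with target depth, which is consistent with the observation that parallax error mainly affects deep structures.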

AR in neurosurgery: clinical applications

Six of the 18 studies reported neuro-oncological applications only (one of them mainly reported epileptogenic tumors) [9], six reported neurovascular applications only, five reported both neuro-oncological and neurovascular applications, and one reported neuro-oncological, neurovascular, and non-neuro-oncological non-neurovascular applications, the latter being the use of AR for external ventricular drainage placement [29].

The lesions listed in Table 2 are classified by pathology type. A total of 195 treated lesions were analyzed in the selected works. Of these, 75 (38.46 %) were neoplastic lesions, mainly gliomas (14 lesions, 7.17 %) and meningiomas in supratentorial (12 lesions, 6.15 %) or infratentorial/skull base (7 lesions, 3.58 %) locations, pituitary adenomas (12 lesions, 6.15 %), and metastases (11 lesions, 5.64 %). There were 77 (39.48 %) neurovascular lesions, mainly aneurysms of the anterior circulation (39 lesions, 20 % of the total) and posterior circulation (four lesions, 2.05 % of the total), cavernomas (20 lesions, 10.25 %), and arteriovenous malformations (AVMs) (eight lesions, 4.10 %). Non-neoplastic, non-vascular lesions included just one case (0.51 % of total) of external ventricular drainage under AR guidance. The histology was not specified for 42 lesions (21.53 %). The “epileptogenic lesions” (40 lesions, 20.51 %) were reported to be mainly tumors. Adding these lesions to the neoplastic lesions listed above (75), we may conclude that the neuro-oncological application is the most frequent type of use for AR in neurosurgery.

According to the pathological process and the type of intervention required, the virtual reality sources were as follows: CT (13 studies), angio-CT (four studies), MRI (14 studies), angio-MRI (seven studies), functional MRI (one study), tractography (one study), and angiography (one study).

Although AR has been applied to a wide range of diseases, including neoplastic, vascular, and other lesions (non-neoplastic non-vascular), the small number of cases in each series allowed only a qualitative assessment of the usefulness of AR in such neurosurgical procedures.

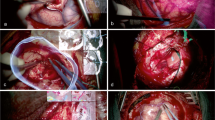

In neuro-oncological surgery, the AR has been applied in open treatment, mainly of gliomas and meningiomas. The largest tumor series [11, 23] reports an advantage in minimizing skin incisions and craniotomies. When the dura is to be opened, the AR allows a clear visualization of the venous sinuses underneath: for example, in the case of falcine meningiomas [23, 30], the sagittal sinus can be seen as a virtual model and spared. In addition, when tumors are hidden in the depths of a cerebral sulcus, the visualization of the tumor shape under the brain surface can aid in the selection of the sulcus to be dissected [29]. When the surgeon performs the corticectomy and tumor resection, the relevant surrounding vascular and nervous structures can be visualized, including eloquent areas and white matter tracts [14]. In an older, yet broad series of mixed oncological and epilepsy cases, AR allowed reducing craniotomy size needed to position subdural electrode monitoring cortical activity [9]. In skull base surgery, the AR provides an optimal visualization of cranial nerves and major vessels and their relationships with bony structures [11], potentially reducing morbidity and mortality. This advantage is especially relevant in endoscopic endonasal approaches. In fact, AR allows the surgeon to orient his tracked instruments in the nasal cavities perfectly, having a precise awareness of the midline position and, when the approach moves laterally, visualizing the carotids and optic nerves [17]. Such an advantage is particularly relevant when the endonasal anatomy is distorted by previous interventions.

In vascular neurosurgery, the AR was mainly applied to the open treatment of aneurysms [3, 5] and AVMs [4] and to the endovascular treatment of aneurysms [32].

The microscope-based AR systems were found to be a particularly useful asset in neurovascular surgery because they improve the craniotomy placement and dural opening [3–5], as also demonstrated in neuro-oncological cases [11, 22]. Specifically, microscope-based AR systems were useful in aneurysm treatment because they allowed optimal adjustment of the head position, minimizing subarachnoid dissection, and selecting the proper clip placement by a thorough visualization of the vascular anatomy near the aneurysm itself [3]. Furthermore, when by-pass surgery was the selected treatment option for multiple aneurysms, the microscope-based AR systems allowed for a reliable identification of the donor vessel and of the recipient intracranial vessel [5]. In the case of AVMs, results were less encouraging. Microscope-based AR systems did allow a reliable visualization of the main arterial feeders of an AVM, indicating precisely where proximal control should be performed in case of an intraoperative AVM rupture. However, they were not able to reveal the detailed anatomy of vessels surrounding or actually feeding the AVM, a detail of critical importance during AVM resection. The information about AVM venous drainage seems to be irrelevant because a large number of cases underwent preoperative embolization.

Microscope-based AR systems were found to be a useful tool for resecting cavernomas close to eloquent or deeply seated areas [38]. Unfortunately, the virtual component may partially obstruct the surgeon’s point of view and not function when the cavernoma itself has been reached surgically.

AR also improved the endovascular treatment of cerebral aneurysms. In fact, the angiographic visualization of cerebral vessels does not allow the observer any intuitive deduction about the spatial relationship between structures. A 3D model of one or more vascular branches is a valuable aid for the surgeon [32].

Finally, AR can also improve the treatment of non-neoplastic non-vascular pathology, as in the case of hydrocephalus secondary to subarachnoid hemorrhage [29]. The external ventricular drain positioning can be easier and faster, especially if the lateral ventricle is not well dilated yet due to sudden obstruction of the ventricular system.

Discussion

AR in neurosurgery was demonstrated as a useful asset in different subspecialties. Nonetheless, there are a number of uncertainties limiting the introduction of AR in the daily practice. Currently, there are no prospective studies showing a significant difference between AR-aided surgeries versus navigation-guided procedures in terms of morbidity, mortality, and clinical effectiveness.

Thus, the aims of the present study were not to draw definitive conclusions on the “best” AR system, but to provide a practical tool to analyze the different aspects and limitations of existing neuronavigation systems and to stimulate the development of new solutions for AR in neurosurgery. The most relevant parameters, and their main current options, are summarized in Table 3.

First, the field of use must be defined. Indeed, the adequacy of an AR system should be primarily evaluated with respect to the different procedures or steps in the procedure. When only a macroscopic view of the surgical field is required (e.g., ventricular drain placement, standard craniotomy), microscope-based AR systems are potentially impractical because of the ergonomics of the microscope itself. When a relevant magnification is required, the microscope-based AR systems appear to be the best option. In a similar fashion, the endoscope and angiography represent the main, and sole, tools to perform, respectively, endoscopic and endovascular surgeries.

Then, the AR setup in the operating room should consider five parameters: the real data source, the tracking modality, the registration technique, the display type, and the perception location. As described above, there is near-unanimous agreement across different neurosurgical subspecialties that optical tracking is the best tracking method and that fiducial markers or skin surface registration are the easiest accurate registration techniques. All the other parameters must be tailored to the specific surgical needs, as well as to the available tools.
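As an illustration of how the accuracy of a fiducial-based registration is commonly quantified, the following minimal Python sketch computes the root-mean-square fiducial registration error (FRE) for a given rigid transform. It is not taken from any of the reviewed systems: the function names, the point format (millimetre coordinates as 3-tuples), and the assumption that the rotation and translation have already been estimated by the navigation software are all illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def apply_rigid(rotation: Sequence[Sequence[float]],
                translation: Sequence[float],
                p: Point) -> Point:
    """Map an image-space point into patient space: R * p + t."""
    x, y, z = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


def fiducial_registration_error(rotation: Sequence[Sequence[float]],
                                translation: Sequence[float],
                                image_pts: Sequence[Point],
                                patient_pts: Sequence[Point]) -> float:
    """RMS distance between mapped image fiducials and tracked patient fiducials.

    Assumes image_pts[k] and patient_pts[k] are the same physical marker
    (hypothetical pairing; real systems establish it during registration).
    """
    if not image_pts or len(image_pts) != len(patient_pts):
        raise ValueError("need equally sized, non-empty fiducial lists")
    squared_sum = 0.0
    for img, pat in zip(image_pts, patient_pts):
        mapped = apply_rigid(rotation, translation, img)
        squared_sum += sum((m - q) ** 2 for m, q in zip(mapped, pat))
    return math.sqrt(squared_sum / len(image_pts))
```

A low FRE only indicates that the markers themselves are well aligned; it does not by itself guarantee accuracy at the surgical target, which is one reason the registration technique has to be chosen and checked with care.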

Unfortunately, the monetary cost of the different systems has not yet been determined. The necessary equipment for microscope-based AR systems is primarily based on a neuronavigation system and a surgical microscope that are available in most modern operating rooms, and their introduction into daily practice would not require additional costs. Some AR systems may also be cheaper than a standard neuronavigation system. In fact, in developing countries, very rudimentary AR systems, composed of a 3D rendering software running on a computer and a digital camera [29], have partially replaced the use of neuronavigation systems, although with evident limitations.

The third main aspect to define in an AR system is the layout of the actual surgical scene, as provided by different visualization techniques. The depth perception of the overlaid 3D models is still quite difficult for all the AR systems [24, 36]. Binocular cues, partially offered by 3D stereoscopic displays, are not always sufficient for inferring the spatial relationships between objects in a three-dimensional scene. The 3D perception is necessarily associated not only with the binocular perception of the scene, but also with the different visualization techniques, as reported above. For this reason, many researchers try to improve depth perception by means of visualization processing techniques [19]. For example, specific color coding, one of several methods, can be associated with the distance from the surgical target [17]. Other more sophisticated tools consist of progressive transparency of colors as the structures are deeper [18].
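The two depth cues mentioned above, color coding by distance from the target and progressive transparency with depth, can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the linear mappings, the red-to-blue color scale, the 50 mm range, and the function names are hypothetical choices and do not reproduce the methods of the cited studies.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def clamp01(x: float) -> float:
    """Restrict a value to the interval [0, 1]."""
    return max(0.0, min(1.0, x))


def distance_to_color(distance_mm: float, max_distance_mm: float = 50.0) -> RGB:
    """Blend linearly from red (at the target) to blue (at or beyond the range).

    The red-to-blue scale and the default range are assumptions for illustration.
    """
    t = clamp01(distance_mm / max_distance_mm)
    return (1.0 - t, 0.0, t)


def depth_to_alpha(depth_mm: float,
                   max_depth_mm: float = 50.0,
                   min_alpha: float = 0.1) -> float:
    """Opacity decreases linearly from 1.0 at the surface to min_alpha at max depth."""
    t = clamp01(depth_mm / max_depth_mm)
    return 1.0 - t * (1.0 - min_alpha)


if __name__ == "__main__":
    # Example: a virtual vessel segment 25 mm from the target and 10 mm deep.
    print(distance_to_color(25.0), depth_to_alpha(10.0))
```

Keeping the two mappings separate lets a renderer combine them freely, for example coloring a structure by its distance from the target while fading it according to its depth below the exposed surface.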

On the other hand, crowding of the surgical view must be avoided. The virtual models should be presented to the surgeon in the most intuitive as well as most effective way. Only essential virtual details should be presented because the overlapped models may hide a part of the actual surgical field. This issue could be a potential source of morbidity and mortality. Conversely, all AR systems that require the surgeon to look away from the surgical field eliminate this risk.

Great effort should be invested in not only improving the visualization of 3D models, but also in introducing information derived from new advanced imaging techniques. AR systems represent a suitable option for multimodal imaging integration involving not only CT, MRI (and related techniques), and angiography, but also other techniques such as magnetoencephalography and transcranial magnetic stimulation.

Further, when the arachnoid is opened, the resulting brain shift irreversibly and progressively compromises the reliability of the virtual models of both AR systems and neuronavigation. This problem was recently addressed by manually optimizing the overlay of the virtual model in the surgical microscope. It has been reported [16] that when severe deformation occurs during advanced tumor resection, compensation of any sort becomes impossible because of the parenchymal deformation. The brain shift problem could be dealt with by refreshing the virtual 3D models with intraoperative imaging, such as intraoperative MRI and intraoperative ultrasound, as with traditional neuronavigation systems.

Conclusions

AR represents a meaningful improvement of current neuronavigation systems. The prompt availability of virtual patient-derived information superimposed onto the surgical field view aids the surgeon in performing minimally invasive approaches. In particular, the large variety of technical implementations provides the neurosurgeon valid options for different surgeries (mainly neuro-oncological and neurovascular), for different treatment modalities (endovascular, endonasal, open), and for different steps of the same surgery (microscopic part and macroscopic part). Current literature confirms that AR in neurosurgery is a reliable, versatile, and promising tool, although prospective randomized studies have not yet been published.

Efforts should be invested in improving AR system setups, making them user-friendly throughout all the different steps of the surgery (microscopic and macroscopic parts) and across different surgeries. The virtual models need to be refined so that they merge seamlessly with the surrounding real environment. Finally, new imaging techniques such as magnetoencephalography, transcranial magnetic stimulation, intraoperative MRI, and intraoperative ultrasound have the potential for providing new details for virtual models and improved registration.

References

Badiali G, Ferrari V, Cutolo F, Freschi C, Caramella D, Bianchi A, Marchetti C (2014) Augmented reality as an aid in maxillofacial surgery: validation of a wearable system allowing maxillary repositioning. J Cranio-Maxillofac Surg. doi:10.1016/j.jcms.2014.09.001

Besharati Tabrizi L, Mahvash M (2015) Augmented reality-guided neurosurgery: accuracy and intraoperative application of an image projection technique. J Neurosurg 123:206–211. doi:10.3171/2014.9.JNS141001

Cabrilo I, Bijlenga P, Schaller K (2014) Augmented reality in the surgery of cerebral aneurysms: a technical report. Neurosurgery 10(Suppl 2):252–260. doi:10.1227/NEU.0000000000000328, discussion 260–251

Cabrilo I, Bijlenga P, Schaller K (2014) Augmented reality in the surgery of cerebral arteriovenous malformations: technique assessment and considerations. Acta Neurochir 156:1769–1774. doi:10.1007/s00701-014-2183-9

Cabrilo I, Schaller K, Bijlenga P (2015) Augmented reality-assisted bypass surgery: embracing minimal invasiveness. World Neurosurgery 83:596–602. doi:10.1016/j.wneu.2014.12.020

Craig AB (2013) Understanding augmented reality: concepts and applications. Morgan Kaufmann, Waltham

Cutolo F, Badiali G, Ferrari V (2015) Human-PnP: ergonomic AR interaction paradigm for manual placement of rigid bodies. In: Augmented environments for computer-assisted interventions. Springer International Publishing, pp 50–60

Deng W, Li F, Wang M, Song Z (2014) Easy-to-use augmented reality neuronavigation using a wireless tablet PC. Stereotact Funct Neurosurg 92:17–24. doi:10.1159/000354816

Doyle WK (1996) Low end interactive image-directed neurosurgery. Update on rudimentary augmented reality used in epilepsy surgery. Stud Health Technol Inform 29:1–11. doi:10.3233/978-1-60750-873-1-1

Drouin S, Kersten-Oertel M, Collins DL (2015) Interaction-based registration correction for improved augmented reality overlay in neurosurgery. In: Linte C, Yaniv Z, Fallavollita P (eds) Augmented environments for computer-assisted interventions, vol 9365. Lecture Notes in Computer Science. Springer International Publishing, pp 21–29. doi:10.1007/978-3-319-24601-7_3

Edwards PJ, Johnson LG, Hawkes DJ, Fenlon MR, Strong A, Gleeson M (2004) Clinical experience and perception in stereo augmented reality surgical navigation. In: YG Z, Jiang T (eds) MIAR. Springer-Verlag, Berlin, pp 369–376

Ferrari V, Cutolo F (2016) Letter to the Editor on “Augmented reality-guided neurosurgery: accuracy and intraoperative application of an image projection technique”. Accepted by Journal of Neurosurgery

Ferrari V, Megali G, Troia E, Pietrabissa A, Mosca F (2009) A 3-D mixed-reality system for stereoscopic visualization of medical dataset. IEEE Trans Biomed Eng 56:2627–2633. doi:10.1109/TBME.2009.2028013

Inoue D, Cho B, Mori M, Kikkawa Y, Amano T, Nakamizo A, Yoshimoto K, Mizoguchi M, Tomikawa M, Hong J, Hashizume M, Sasaki T (2013) Preliminary study on the clinical application of augmented reality neuronavigation. J Neurol Surg Part A, Central European Neurosurg 74:71–76. doi:10.1055/s-0032-1333415

Iseki H, Masutani Y, Iwahara M, Tanikawa T, Muragaki Y, Taira T, Dohi T, Takakura K (1997) Volumegraph (overlaid three-dimensional image-guided navigation). Clinical application of augmented reality in neurosurgery. Stereotact Funct Neurosurg 68:18–24. doi:10.1159/000099897

Kantelhardt SR, Gutenberg A, Neulen A, Keric N, Renovanz M, Giese A (2015) Video-assisted navigation for adjustment of image-guidance accuracy to slight brain shift. Neurosurgery. doi:10.1227/NEU.0000000000000921

Kawamata T, Iseki H, Shibasaki T, Hori T (2002) Endoscopic augmented reality navigation system for endonasal transsphenoidal surgery to treat pituitary tumors: technical note. Neurosurgery 50:1393–1397

Kersten-Oertel M, Chen SJ, Collins DL (2014) An evaluation of depth enhancing perceptual cues for vascular volume visualization in neurosurgery. IEEE Trans Vis Comput Graph 20:391–403. doi:10.1109/TVCG.2013.240

Kersten-Oertel M, Jannin P, Collins DL (2010) DVV: towards a taxonomy for mixed reality visualization in image guided surgery. Lect Notes Comput Sc 6326:334–343

Kersten-Oertel M, Jannin P, Collins DL (2012) DVV: a taxonomy for mixed reality visualization in image guided surgery. IEEE Trans Vis Comput Graph 18:332–352. doi:10.1109/TVCG.2011.50

Kersten-Oertel M, Jannin P, Collins DL (2013) The state of the art of visualization in mixed reality image guided surgery. Comput Med Imaging Graph 37:98–112. doi:10.1016/j.compmedimag.2013.01.009

King AP, Edwards PJ, Maurer CR Jr, de Cunha DA, Hawkes DJ, Hill DL, Gaston RP, Fenlon MR, Strong AJ, Chandler CL, Richards A, Gleeson MJ (1999) A system for microscope-assisted guided interventions. Stereotact Funct Neurosurg 72:107–111

Kockro RA, Tsai YT, Ng I, Hwang P, Zhu C, Agusanto K, Hong LX, Serra L (2009) Dex-ray: augmented reality neurosurgical navigation with a handheld video probe. Neurosurgery 65:795–807. doi:10.1227/01.NEU.0000349918.36700.1C, discussion 807–798

Kruijff E, Swan JE, Feiner S (2010) Perceptual issues in augmented reality revisited. In: 9th IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 13–16 Oct 2010, pp 3–12. doi:10.1109/ISMAR.2010.5643530

Le Moigne J, Netanyahu NS, Eastman RD (2010) Image registration for remote sensing. Cambridge University Press, Cambridge

Levoy M (2006) Light fields and computational imaging. Computer:46–55

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D (2009) The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ 339:b2700. doi:10.1136/bmj.b2700

Lippmann G (1908) Epreuves reversibles donnant la sensation du relief. J Phys Theor Appl 7:821–825

Lovo EE, Quintana JC, Puebla MC, Torrealba G, Santos JL, Lira IH, Tagle P (2007) A novel, inexpensive method of image coregistration for applications in image-guided surgery using augmented reality. Neurosurgery 60:366–371. doi:10.1227/01.NEU.0000255360.32689.FA, discussion 371–362

Low D, Lee CK, Dip LL, Ng WH, Ang BT, Ng I (2010) Augmented reality neurosurgical planning and navigation for surgical excision of parasagittal, falcine and convexity meningiomas. Br J Neurosurg 24:69–74. doi:10.3109/02688690903506093

Mahvash M, Besharati Tabrizi L (2013) A novel augmented reality system of image projection for image-guided neurosurgery. Acta Neurochir 155:943–947. doi:10.1007/s00701-013-1668-2

Masutani Y, Dohi T, Yamane F, Iseki H, Takakura K (1998) Augmented reality visualization system for intravascular neurosurgery. Comput Aided Surg 3:239–247. doi:10.1002/(SICI)1097-0150(1998)3:5<239::AID-IGS3>3.0.CO;2-B

Milgram P, Kishino F (1994) A taxonomy of mixed reality visual displays. Ieice T Inf Syst E77d:1321–1329

Paul P, Fleig O, Jannin P (2005) Augmented virtuality based on stereoscopic reconstruction in multimodal image-guided neurosurgery: methods and performance evaluation. IEEE Trans Med Imaging 24:1500–1511. doi:10.1109/TMI.2005.857029

Perneczky A, Reisch R, Tschabitscher M (2008) Keyhole approaches in neurosurgery. Springer, Wien

Reichelt S, Häussler R, Fütterer G, Leister N (2010) Depth cues in human visual perception and their realization in 3D displays. In: SPIE Defense, Security, and Sensing. International Society for Optics and Photonics, pp 76900B-76900B-76912

Rhoton AL, Congress of Neurological Surgeons (2003) Rhoton cranial anatomy and surgical approaches. Neurosurgery, vol 53. Lippincott Williams & Wilkins, Philadelphia

Stadie AT, Reisch R, Kockro RA, Fischer G, Schwandt E, Boor S, Stoeter P (2009) Minimally invasive cerebral cavernoma surgery using keyhole approaches—solutions for technique-related limitations. Minimally Invasive Neurosurgery: MIN 52:9–16. doi:10.1055/s-0028-1103305

Wang J, Suenaga H, Hoshi K, Yang L, Kobayashi E, Sakuma I, Liao H (2014) Augmented reality navigation with automatic marker-free image registration using 3-D image overlay for dental surgery. IEEE Trans Biomed Eng 61:1295–1304. doi:10.1109/TBME.2014.2301191

Zhang X, Chen G, Liao H (2015) A high-accuracy surgical augmented reality system using enhanced integral videography image overlay. In: 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milano

Acknowledgments

Dr. Meola is supported by an NIH award (R25CA089017). We sincerely thank Nina Geller, PhD, for the careful and rigorous editing of the present manuscript.

Comments

Yavor Enchev, Varna, Bulgaria

Neuronavigation exemplifies one of the newest and most rapidly developing neurosurgical technologies. Neuronavigation has gradually become an inseparable part of the efforts of neurosurgeons to achieve minimal invasiveness with maximal effect while simultaneously reducing the hazards to patient safety. It is an extremely diverse technique with multiple forms and subtypes. Augmented reality represents a separate direction in the development of image-guided technology, allowing incorporation of virtual image data into the real surgical field. However, the clinical experience with augmented reality in neurosurgery is quite limited.

The authors performed a meticulous review of the literature in PubMed pertinent to augmented reality in neurosurgery. The eligible papers were analyzed according to the relevant neurosurgical subspecialty, type of pathologies and their location, as well as many additional related technical aspects. Quantitative assessments of clinical usefulness and feasibility were not available from the selected data. A significant issue is the lack of data on the accuracy of augmented reality devices, owing to the inconsistency of its definition across the different papers.

Meaningful and practically useful conclusions could not be drawn from this interesting review because of the limited patient population, the lack of data for quantitative assessment, and the impossibility of statistical analysis. Future, larger series will be crucial for the potentially wider distribution and application of this approach.

Uwe Spetzger, Karlsruhe, Germany

The paper “Augmented reality in neurosurgery” provides a perfect and systematic overview and demonstrates the development and improvement of neuronavigation systems with the implementation of AR in recent years. Augmented reality is a helpful device to visualize hidden structures in the skull. However, the paper of A. Meola et al. demonstrates that AR is not only a device or tool; it is more a strategy or philosophy to improve our surgical planning, and it provides a high-end simulation of the procedure. The ability to look through or behind anatomical structures is the key benefit, and AR will be utilized more frequently in the near future.

During the 1990s, neuronavigation came more and more into focus, and it has meanwhile become a routine tool in our daily neurosurgical practice (1). Initially arm-based systems, and subsequently also the first optical navigation systems, allowed a detailed depiction of radiological data and integrated them into the real anatomy and the microscopic view of the neurosurgeon. The integration of neuronavigation into our routine work in cranial and also in spinal neurosurgery was one of the milestones of modern neurosurgery, and the acceptance of AR in modern neurosurgery will increase continuously.

The paper perfectly compares different augmented reality systems and also shows different philosophies of AR and their focus on cranial neurosurgery. This review gives detailed information about the development of AR and also demonstrates its usefulness in different pathologies. The authors point out that AR is an additional part of modern neuronavigation and indicate in their review that further efforts are necessary to make these systems more user-friendly and intuitive. Another aspect could be using AR as a platform for the integration of functional data to enhance these systems.

In this review, I miss the really important aspect that augmented reality systems are perfect tools for education and especially for practical surgical training (2). The capability of high-end visual representation of the anatomy and the combined radiological data will create a perfect simulation and educational tool to learn surgical anatomy much better than from textbooks. Therefore, I want to point out the importance of integrating AR systems more into the education and training of our young neurosurgeons. However, as a general warning, we should always be aware that all augmented reality tools bear the potential risk of inaccuracy and errors, and we have to keep an eye on precise registration and exact handling. Just as in all other navigation systems, the accuracy of the registration and of the navigation is the basis of all our AR data (3).

The planning and manufacturing of 3D implants for the reconstruction of the skull is also an upcoming field for using AR (4). Likewise, modern computer-assisted pre-planning, and especially the exact surgical implantation of individualized, patient-specific 3D laser-printed spinal implants, will be the next important issue for AR in spine surgery (5).

References

(1) Spetzger U, Laborde G, Gilsbach JM. Frameless neuronavigation in modern neurosurgery. Minim Invasive Neurosurg. 1995 Dec. 38(4):163–6. Review

(2) Krombach G, Ganser A, Fricke C, Rohde V, Reinges M, Gilsbach J, Spetzger U. Virtual placement of frontal ventricular catheters using frameless neuronavigation: an “unbloody training” for young neurosurgeons. Minim Invasive Neurosurg. 2000 Dec. 43(4):171–5

(3) Spetzger U, Hubbe U, Struffert T, Reinges MH, Krings T, Krombach GA, Zentner J, Gilsbach JM, Stiehl HS. Error analysis in cranial neuronavigation. Minim Invasive Neurosurg. 2002 Mar; 45(1):6–10

(4) Vougioukas VI, Hubbe U, van Velthoven V, Freiman TM, Schramm A, Spetzger U. Neuronavigation-assisted cranial reconstruction. Neurosurgery. 2004 Jul; 55(1):162–7; discussion 167

(5) Spetzger U, Frasca M, König SA. Surgical planning, manufacturing and implantation of an individualized cervical fusion titanium cage using patient-specific data. Eur Spine J. 2016 Mar 1 [Epub ahead of print]

Cite this article

Meola, A., Cutolo, F., Carbone, M. et al. Augmented reality in neurosurgery: a systematic review. Neurosurg Rev 40, 537–548 (2017). https://doi.org/10.1007/s10143-016-0732-9

DOI: https://doi.org/10.1007/s10143-016-0732-9