Abstract

Robotics technology is advancing steadily, and elder care is one of the work areas with the potential to involve a robotic workforce. It is therefore important to focus on the interaction between humans and potential robot workers in order to prepare organizations for possible challenges. The current study examined the relationships between trust in robots and the anthropomorphism of robots, intention to work with robots, and preference of automation levels. For this purpose, 102 caregivers (aged between 19 and 40) who work in elder care in a nursing home participated in an experimental study. According to the results, the anthropomorphism of robots made no difference in terms of trust in them. Trust in robots was significantly related to intention to work with them and to preference of automation levels. Organizations may consider employees’ trust in robots an important factor before introducing robots into the workplace.

1 Introduction

Because the aged population is growing rapidly, the need for human resources in elder care is inevitably rising, and the aging population is a major concern for care service institutions (Ahn et al. 2015). Older people may have health problems (Ahn et al. 2015) that require long-term care (Robinson et al. 2013), and it may be difficult to find staff for these tasks (Jacobzone 2000). There are serious concerns about the shortage of caregiving personnel for elders (Sargen et al. 2011). The limited number of caregiving staff in elder care facilities often prompts the suggestion of using service robots for elder care (Oulton 2006). Research teams are therefore increasingly focusing on developing assistive technologies for elder care personnel and on improving older people’s quality of life (Berns and Mehdi 2010).

Machines are better at repeating routine duties (Cummings and Thornburg 2011), so once the routine tasks in the workplace have been identified, the process of allocating tasks between human and robot may start. Robots may do many tasks in elder care facilities, leaving human workers free to spend most of their time on the activities that robots cannot perform for elders. Given the wide variety of elders’ needs (from physical to psycho-social), robots may fill a big gap. Because caregivers may work with these robots, their attitudes toward and views of these robots should be scrutinized.

One of the biggest constraints of the technology-centered view is that it only seeks solutions to improve automation and excludes users, that is, the human factor (Endsley 1995). As a result of this approach, users have to deal with more difficult tasks (Lockhart et al. 1993), which leads to increased training needs and naturally increases the cost (Parasuraman et al. 1993). With a human-centered viewpoint, however, human and robot can be evaluated as a team, and solutions can be obtained by considering this team. The organization may therefore achieve more productive strategic outcomes from human–robot cooperation in a shared workplace (Jouglet et al. 2003) if designers fully understand the work before putting a new technology into practice (Holden et al. 2013). That is why, in our study, we focus on human–robot interaction in elder care facilities by taking care technology into consideration.

1.1 Robots, anthropomorphism, and trust in robots

Care robots may help their users to improve their quality of life by doing tasks. In the healthcare environment, the need for robots first emerged for rehabilitation purposes, which mostly include physical training and support for daily activities (Butter et al. 2008). However, robotics with social purposes is a growing area of implementation (Butter et al. 2007). Many types of robots are in use in the healthcare environment. All these innovations in care technology may help care personnel with their heavy workload and improve the quality of life of both personnel and older people (Hansen et al. 2010). Furthermore, in nursing homes, robots can play a crucial role by monitoring elders, particularly dementia patients, and giving immediate information to the staff (Pineau et al. 2003). With these robots, a facility can offer higher standards of care service even if it does not increase the number of staff it employs (Kageyama 2002).

In robotic technology, the number of human-like robots is increasing. The main reason for applying anthropomorphism to robots is the intention to obtain an effective system that can adapt to social interaction more easily (Duffy 2002). In this way, robots can communicate more naturally in predicted social scenarios, which mostly involve humans. Moreover, humans tend to anthropomorphize (Duffy 2002). This tendency suggests that we may interact more easily and effectively with robots that have a more anthropomorphic form, because familiarity helps (Choi and Kim 2008; Fasola and Mataric 2012). It is necessary to distinguish between the physical characteristics of a robot and the psychological mechanism of anthropomorphizing. In this study, human-likeness is therefore treated as the degree to which a robot’s appearance resembles a human.

In this study, we focus on trust as an interaction between user and technology (in particular, robot–human). This kind of trust is a fundamental issue in human–machine interaction, because if a human has no trust in a system, efficient and productive outputs cannot be obtained (Muir and Moray 1996; Lee and Moray 1992). Trusting a technological device or automated system requires the expectation that the device or system will not fail (Sheridan 2002). Human trust in automated systems has been examined in many studies (see Lee and See 2004; Madhavan et al. 2006; Reidy et al. 2015). Trust can be a determinant of effective use, disuse, misuse, or abuse of an automated device (Parasuraman and Riley 1997). When a person attributes a human-like characteristic to a non-human entity, she/he may think that the entity has the capacity to manage its own actions and perform well; therefore, she/he may trust it more during the task allocation process. If a technology has more human-like characteristics, people place more trust in the task it performs (Epley et al. 2006). Therefore, humanizing technology may bring acceptance and trust.

All in all, according to the studies, it can be suggested that appearance of robots plays an important role in human–robot interaction by influencing human cognitive processes about the robot. Hence, anthropomorphism has an important effect in perceiving other entities. So, anthropomorphic perception may be a determinant of the trust towards potential robot coworkers.

1.2 Intention to work with robot

Attitudes guide us by selecting the information that influences our perception and behaviors (Fazio 1990). If a person accepts a technological device attitudinally, she/he may also accept it intentionally (Davis 1989). A person’s intention to use technology largely determines the degree to which she/he uses it, and his/her attitude toward using technology largely determines that intention (Davis 1989). Trust is characterized as an attitude (Hertzberg 1988; Jones 1996; Spier 2013). Therefore, if an individual’s trust (as an attitude) toward an automated machine (i.e. a robot) is positive, she/he may have the intention to work with it.

There are several studies that link trust to behavioral intention such as online purchasing (Everard and Galletta 2005; Lee and Turban 2001; Njite and Parsa 2005), revisiting the hotel (Kim et al. 2009), e-procurement (Kusuma and Pramunita 2011), mobile banking (Gu et al. 2009), and using healthcare robots (Alaiad and Zhou 2013). When we combine results of all these studies, we see the strong association between trust and intention. In this research, it is proposed that trust can be associated with the attitudinal determinant of behavioral intention.

1.3 Preferences of automation levels

In human–machine cooperation, a task can be executed fully by a human or fully by a machine. A human or a machine can also complete a task to different degrees, and this is the basic way of allocating the task (Scerbo 1996). Between these two extremes, task allocation can vary according to the intended degree of control. Many studies have tried to discover the elements behind users’ trust in automation and how this trust affects users’ behavior. Studies have indicated that trust influences the usage of automation (see Dzindolet et al. 2003; Muir 1987; Lee and Moray 1991; Lee and See 2004). If the user trusts automation more, she/he may tend to use it at a higher automation level. Trust may therefore be seen as an important factor affecting the usage of automation (Moray et al. 2000; Lee and Moray 1992; Lee and See 2004), particularly in choosing an appropriate level of automation.

1.4 Present study

Given all these research results and theoretical propositions, it is necessary to understand employees’ standpoint on their potential robot coworkers. Elder care facilities need immediate robotic service, and this need will keep increasing. Caregivers who work in these facilities will be in contact with robots during daily tasks if robots become their coworkers. Hence, it may be beneficial to take their perceptions and preferred automation levels for robots into consideration for the sake of adaptation, better performance, and productivity. Furthermore, we believe that trust in automation and the anthropomorphic characteristics of robots may help us to better explain the process of working with robots. In particular, we propose that the human-likeness of a robot determines a caregiver’s trust in the robot, and that this trust may determine the intention to work with a robot and the preferred level of automation in robots (see Fig. 1).

So, we propose the following hypotheses:

Hypothesis 1

A robot with more human-likeness, such as an android robot, will be trusted by caregivers more than a robot with less human-likeness, such as a humanoid robot.

Hypothesis 2

Caregivers who trust robots more will have a stronger intention to work with them.

Hypothesis 3

Caregivers who trust robots more will prefer a higher level of automation in robots.

2 Methods

2.1 Sample

A total of 102 caregivers (43 females, 59 males) who work in an elder care facility in Istanbul, Turkey, participated in the experiment. This research complied with the American Psychological Association Code of Ethics and was approved by the Institutional Review Board at Directorate of Almshouse of Istanbul Metropolitan Municipality. Fifty caregivers (21 females, 29 males) saw the picture of the humanoid robot as one of the experimental manipulations. Of those, 2 had a primary school diploma, 30 had a high school diploma, and 18 had a college diploma. Their mean age was 30 (range 20 to 39), and their mean tenure was 5 years (range 1 to 14). Fifty-two caregivers (22 females, 30 males) saw the picture of the android robot, the other experimental manipulation. Of those, 2 had a primary school diploma, 34 had a high school diploma, and 16 had a college diploma. Their mean age was 31 (range 19 to 40), and their mean tenure was 5 years (range 1 to 16).

2.2 Procedure

The experiment took place in consecutive sessions in the psychological counseling rooms of the nursing home. The aim was to host four participants in each session. In each room, participants sat around a table from which they could easily see the computer’s 17-inch LCD screen, which was placed on the other side of the table. Once the participants were seated, the experimenter gave information about the experiment.

After this briefing, the pictures were shown and the scales were handed out. Each group had been assigned to one of the two experimental conditions before entering the room. Participants in the first experimental condition saw the picture of a humanoid robot called AILA, developed by the German Research Center for Artificial Intelligence (see Fig. 2). Participants in the second experimental condition saw the picture of an android robot called HRP-4C, developed by the National Institute of Advanced Industrial Science and Technology in Japan (see Fig. 3). The pictures were obtained from these institutes’ web pages. The robot pictures consisted of a body picture and a face picture. Both robots can easily be perceived as having a female appearance.

The picture stayed on the screen throughout the experiment, so participants had the opportunity to reexamine it while answering the scales. After answering the scales, participants completed the demographic questions. Finally, they were given the researcher’s contact information in case they wanted to learn the results of the study, and the experimenter asked them not to talk about the experiment with other possible participants.

2.3 Measures

The original language of the scales was English, so they were translated into Turkish. To make sure that the translated scales could give valid and reliable results, a pilot study was run with them on a sample of 32 participants (17 females, 15 males) from the same elder care facility (these participants were excluded from the main experiment). The participants were taken to the room one by one. In the room, the same procedure as in the main experiment was followed to the end. In addition, each participant was asked to point out any scale questions that caused confusion or ambiguity. The experimenter noted this feedback. After the feedback was evaluated, the following scales were chosen for use in the experiment (see “Appendix”).

2.3.1 Manipulation check

Feedback from participants showed that they had difficulty understanding and answering the Godspeed I: Anthropomorphism scale of Bartneck et al. (2009). A new three-item scale was therefore developed to measure the anthropomorphism of robots, based on one of the items of Godspeed I, in order to check whether the manipulation led participants to perceive the android robot as more human-like than the humanoid robot. That item ranges from machinelike to human-like. Because we conceptualize anthropomorphism in terms of appearance and showed only pictures of the robots, this item was the most understandable and appropriate one. The Cronbach’s alpha value was 0.73.

2.3.2 Checklist for trust between people and automation

Caregivers’ trust in automation was measured with the checklist developed by Jian et al. (2000). The checklist was translated into Turkish using the back translation method. The word “robot” was used in place of the word “system” in the Turkish version. The checklist originally contains 12 items that rate the intensity of people’s feeling of trust or their impression of operating the machine; however, after the back translation, three items were omitted because they repeated the same expressions. Participants indicated their response on a 6-point scale ranging from 1 (strongly disagree) to 6 (strongly agree). Two further items were excluded to reach a Cronbach’s alpha value of 0.70.
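As an illustration of the reliability statistic reported for these scales, the following minimal Python sketch computes Cronbach's alpha and the alpha obtained when each item is dropped in turn, which is one common way of deciding which items to exclude. It is not the analysis script used in the study: the function names and the toy responses are hypothetical, and the text does not state which software or item-selection rule was applied.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of item columns; each column holds one score per
    respondent, with respondents in the same order in every column.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Total scale score of each respondent (sum across items).
    totals = [sum(scores) for scores in zip(*items)]
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


def alpha_if_item_deleted(items):
    """Alpha recomputed with each item left out in turn (needs >= 3 items)."""
    return [cronbach_alpha(items[:i] + items[i + 1:]) for i in range(len(items))]


# Hypothetical 6-point responses: 4 items x 6 respondents (NOT study data).
toy_items = [
    [4, 5, 3, 6, 2, 5],
    [4, 4, 3, 5, 2, 6],
    [5, 5, 2, 6, 3, 4],
    [2, 6, 4, 3, 5, 1],
]

print(round(cronbach_alpha(toy_items), 2))
print([round(a, 2) for a in alpha_if_item_deleted(toy_items)])
```

An item whose removal raises alpha noticeably is a candidate for exclusion, although such a decision would normally also weigh the content of the item.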

2.3.3 Intention to work

Caregivers’ intention to work with robots was measured with items adapted from Chang and Cheung’s study (2001). Two items were used. These items were translated into Turkish using the back translation method. Participants indicated their response on a 6-point scale ranging from 1 (strongly disagree) to 6 (strongly agree). The Cronbach’s alpha value was 0.92.

2.3.4 Automation levels of robots

Caregivers’ preferences for automation levels for robots were determined with an adaptation of the automated functions of Parasuraman et al. (2000). These functions fall into four classes: acquisition of data, analysis of the data, decision making, and implementation of the decision. Each of these functions was matched with an item. Participants rated their preferred level of automation for executing the tasks that a caregiver does in elder care on a 6-point scale ranging from 1 (strongly disagree) to 6 (strongly agree). For example, for the function of analyzing the data, the following item was formed: “I make robot analyze the data which was collected about the situation of the resident”. The Cronbach’s alpha value was 0.84.

2.3.5 Demographics

Demographic information about the participants was collected during the experiment. It includes age, sex, tenure in caregiving, education, and also familiarity with the robot shown in the picture.

3 Findings

In the first step, an independent samples t test was run to check whether the two robot types in the experiment were rated differently on the anthropomorphism scale. This manipulation check showed that the android robot was perceived as significantly more anthropomorphic than the humanoid robot (see Table 1).

Second, the first hypothesis was tested by comparing trust in the robot between the two experimental groups. Independent samples t test results revealed that participants who saw the android robot did not differ significantly in trust in the robot from those who saw the humanoid robot (see Table 2). Since no influence of anthropomorphism on trust in the robot was detected, the first hypothesis was not supported.
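The group comparison used for the manipulation check and for the first hypothesis can be sketched as follows. This is a minimal illustration, assuming the classic pooled-variance (Student) form of the independent samples t test; the text does not state whether equal variances were assumed, and the function name and the scores below are hypothetical rather than study data.

```python
import math
from statistics import mean, variance


def independent_t(group_a, group_b):
    """Pooled-variance independent samples t statistic and its degrees of freedom."""
    n1, n2 = len(group_a), len(group_b)
    df = n1 + n2 - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b)) / df
    # Standard error of the difference between the two group means.
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(group_a) - mean(group_b)) / std_err, df


# Hypothetical mean trust scores on a 6-point scale (NOT study data).
android_group = [3.8, 4.1, 3.5, 4.4, 3.9, 4.0]
humanoid_group = [3.7, 4.2, 3.6, 4.1, 3.8, 4.3]

t_value, df = independent_t(android_group, humanoid_group)
print(round(t_value, 3), df)
```

With group sizes of 50 and 52, as in this study, the pooled test would have 50 + 52 - 2 = 100 degrees of freedom. The p value is then read from the t distribution with that many degrees of freedom, which a statistics package would normally supply.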

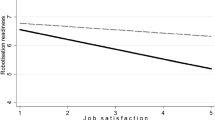

Third, the second and third hypotheses were tested through Pearson correlation and simple regression analyses (see Table 3). The second hypothesis stated that participants who trusted robots more would have more intention to work with them. Correlation analysis indicated a moderate positive association between trust in robots and intention to work with them. Similarly, a simple regression analysis was run to predict intention to work with robots based on trust in robots (see Table 4). The analysis yielded a significant equation. Therefore, the second hypothesis was supported.
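The two analyses named above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and the toy scores are hypothetical, significance testing is omitted, and the reported coefficients are those in the tables, not the values printed here.

```python
import math
from statistics import mean


def _sums_of_squares(x, y):
    """Return (Sxy, Sxx, Syy): sums of cross-products and squared deviations."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy, sxx, syy


def pearson_r(x, y):
    """Pearson product-moment correlation between two score lists."""
    sxy, sxx, syy = _sums_of_squares(x, y)
    return sxy / math.sqrt(sxx * syy)


def simple_regression(x, y):
    """Least-squares slope and intercept for predicting y from x."""
    sxy, sxx, _ = _sums_of_squares(x, y)
    slope = sxy / sxx
    intercept = mean(y) - slope * mean(x)
    return slope, intercept


# Hypothetical scores on 6-point scales (NOT study data).
trust = [2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
intention = [2.0, 3.5, 3.0, 4.5, 4.0, 5.5]

r = pearson_r(trust, intention)
slope, intercept = simple_regression(trust, intention)
print(round(r, 2), round(slope, 2), round(intercept, 2))
```

In a simple regression with one predictor, the proportion of variance explained equals the square of the Pearson correlation, so the two analyses describe the same linear association from different angles.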

According to the third hypothesis, participants who trusted robots more would prefer a higher automation level for all the functions (information acquisition, information analysis, decision selection, and action implementation). To test the hypothesis, correlation analyses were run first (see Table 3). The results indicated weak yet significant positive correlations between trust in robots and the preferred level of automation for information acquisition, information analysis, decision selection, and action implementation, as well as the mean of the preferred levels of automation across all the functions. Hence, the third hypothesis was supported.

Furthermore, simple regression analyses were carried out for each function and for their mean to predict the preferred levels of the automated functions based on trust in robots (see Tables 5, 6, 7, 8, 9). These analyses yielded significant equations that can predict the preferred automation level based on trust in robots.

To sum up, according to the results, the degree of human-likeness of robots was not a predictor of trust in robots. However, trust in robots was related to intention to work with them and to preference of automation levels. Participants who trusted robots more had more intention to work with them and preferred higher levels of automation.

4 Discussion

As organizations are scrutinized from multiple perspectives, their potential employees, robots, should also be taken into consideration. Over the last century, it became well understood that machines changed routine work life. As machines become more autonomous, more changes may be expected. In line with these expectations, this study focused on the possible relationship between humans and robots. The study was designed around three main hypotheses.

In hypothesis one, it was proposed that people trust android robots more than humanoid robots because of the difference in their anthropomorphism levels. Although the manipulation check showed that their anthropomorphism levels differed significantly, participants’ trust in the robots did not change with the anthropomorphism level. By contrast, studies that relate trust in automation to anthropomorphism have found positive relationships between these factors (see Bass et al. 2011; Branyon and Pak 2015; Epley et al. 2007; Gong 2008; Reidy et al. 2015; Schaefer 2013; Waytz et al. 2014), and there was no study reporting a negative relationship between the degree of anthropomorphism and trust in automation. However, studies that examine other features of perceptions of robots have found some contradictory results. Although some of them confirmed a positive relationship between anthropomorphism and positive perception of robots (see Chee et al. 2012; Haring et al. 2012; Prakash and Rogers 2015), others found that robots with a human-like appearance cause negative perception (Ferrari et al. 2016; Woods 2006). It can be predicted that people who perceive robots more positively may tend to trust them, because trust is by nature a positive perception. By the same reasoning, if people perceive a robot negatively, their trust in it may decrease. Since some of the studies cited above found that robots with more human-like features were perceived negatively, some participants in our study may have perceived the android robot negatively and therefore trusted it less. This may have lowered the mean trust in the android robot, which would explain why no significant difference was found between the robots.

There may also be various reasons why caregivers did not trust android robots more than humanoid ones. In the current study, both robots have a certain degree of anthropomorphism. Although the android robot was more anthropomorphic, only a certain amount of anthropomorphism may be necessary to trust a robot; beyond that point, other factors may come into play. Furthermore, many factors complete a robot’s anthropomorphism, such as a human voice and facial expressions. Although only the robot’s appearance was taken as the basis for anthropomorphism in the current study, these other anthropomorphic factors might help an android robot to be trusted more than a humanoid robot.

According to Mori’s hypothesis, as the degree of similarity between human and robot increases, people start to consider the robot as a human at some point (Mori et al. 2012). However, at some level they realize that the robot is not human. It then becomes difficult for them to accept it because it seems uncanny. Mori therefore proposed that too much similarity may cause unpleasantness in people. The graph of Mori’s hypothesis shows that as the similarity between robot and human increases, people experience increasingly positive emotions up to a point. Beyond that point, the positive response drops sharply, and at a later point it starts increasing again. The android robot used in this study may thus have been perceived as too human-like, placing it in Mori’s hypothetical valley, so the positive perception of this robot may have started to fall. This may have brought the level of trust in the android robot down to the level of trust in the humanoid robot used in this study.

In hypothesis two, it was proposed that people who trust robots more have more intention to work with them. The results of the study confirmed this proposition and also made it possible to form an equation that predicts intention to work based on trust in robots. The relationship between trust and intention has been examined in many different ways in the literature. In particular, studies that focus on the antecedents of behavior have used trust as an effective factor. Not surprisingly, many studies found results similar to those of the current study (see Alaiad and Zhou 2013; Kim et al. 2009; Njite and Parsa 2005; Lee and Turban 2001). Thus, it can be said that people who trust robots more have more intention to work with them. As other studies of behavioral intention suggest, it may be expected that if these people come face to face with robots, they may work with them more easily.

In hypothesis three, it was proposed that people who trust robots more prefer higher automation levels for robots. In the study, these automation levels were asked about for the four automated functions developed by Parasuraman et al. (2000). Our results revealed a positive relationship between caregivers’ trust in robots and their preferred levels of automation for these functions: both each individual function and the mean of the automation levels across the functions were positively related to participants’ trust in robots. Many studies also point to the relationship between trust in robots and automation levels (see Dassonville et al. 1996; Desai 2007; Dzindolet et al. 2003; Lee and See 2004; Pak et al. 2012). Furthermore, when these preferences are examined, it can be seen that participants preferred lower automation levels for the function of decision selection than for the other functions. Participants may have perceived decision making as closely related to cognitive superiority and may not have wanted robots to take over this superiority.

Although the results of the current study indicate a relationship between trust and preferences of automation levels, these relationships were not strong. Caregivers may have job-related anxiety about robots. Machines have been changing workers’ lives for more than a century, and they continue to do so. People are aware of this change through reading news, watching TV, or experiencing it themselves. In this context, an unavoidable question arises: “Do robots support us or replace us?” Although participants in the study were encouraged to think of robots as supporters, people who had this question in mind may not have overcome this anxiety, and this may have been reflected in their preferences. Although the experimenter explained the purpose of the research, participants may have thought that by preferring higher levels of automation, they would increase the likelihood of the adoption of robots that could replace them.

As mentioned in Sect. 2, the robots used in the experiment could easily be perceived as female. The robots’ perceived gender may have affected caregivers’ preferences of automation levels. Participants’ perceptions of gender capabilities may have been projected onto these robots, so they may have preferred lower levels of automation for female robots.

As the number of robots in work life increases, it is crucial to focus on the potential interaction between humans and robots. With this in mind, a growing number of studies are being carried out. Similarly, the current study tried to emphasize that technology has three dimensions that have to be examined. The first is the cognitive dimension, which suggests that our perception of anthropomorphism affects our trust in robots. This study could not support that idea, but we believe that research including observations from long-term interactions between humans and robots may find supporting results. The second is the psychological dimension, which concerns how our attitudes, particularly trust in robots, affect our intention to work with robots and our preferences about automation levels. The third is the organizational dimension, which may undergo many changes because of automation. All workflows that include humans and robots at the same time may need to be rearranged in the near future. Organizations that make these arrangements more efficiently may be better placed to survive.

5 Conclusion

If a human-centered technology approach is used prior to production, high-tech machines can provide qualified service in cooperation with human workers. With this idea in mind, we focused on the robots and human workers who are expected to meet a great need in the field of elderly care. Caregivers’ attitudes towards robots were seen to influence possible cooperation with robots. Moreover, the main contribution of this study is to emphasize the importance of trust in automation in elder care. Thus, in line with the need for human factor analysis in technology development (Sætren et al. 2016), companies that produce these robots may take the issue of trust in robots into consideration while designing them and introducing them to potential users.

6 Limitation and future work

The main limitation of the study is the lack of real interaction between participants and robots. Nevertheless, the use of photographs also had the advantage that the robots used in the experiment were presented on equal terms. The manipulation was the difference in perception arising from the appearance of the robots. The possibility that, in a real-life interaction, participants would be confused by the mobility skills of the robots was thus eliminated.

In future studies, all the work done in elderly care may be rated according to the level of cooperation required between human and robot. The robot design may then be determined according to the required standard of cooperation. Moreover, in elderly care, robots may be expected to work by touching people, so caregivers may take into account the sensitivities of elderly individuals’ bodies. Producing anthropomorphic designs in response to this anxiety, that is, the concern that robots may hurt people, may have beneficial consequences. In addition, employees may be given training in attitude development before such technologies are put into practice.

References

Ahn HS, Datta C, Kuo IH, Stafford R, Kerse N, Peri K, MacDonald BA et al (2015) Entertainment services of a healthcare robot system for older people in private and public spaces. In: Sixth international conference on automation, robotics and applications (ICARA). IEEE, pp 217–222. https://doi.org/10.1109/icara.2015.7081150

Alaiad A, Zhou L (2013) Patients’ behavioral intention toward using healthcare robots. In: Proceedings of the nineteenth Americas conference on information systems, Chicago

Bartneck C, Kulić D, Croft E, Zoghbi S (2009) Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int J Soc Robot 1(1):71–81. https://doi.org/10.1007/s12369-008-0001-3

Bass B, Fink N, Price M, Sturre L, Hentschel E, Pak R (2011) How does anthropomorphism affect user’s trust, compliance, and performance on a decision making automation task? Proc Hum Factors Ergon Soc Annu Meet 55(1):1952–1956. https://doi.org/10.1037/e578902012-416

Berns K, Mehdi SA (2010) Use of an autonomous mobile robot for elderly care. In: Advanced technologies for enhancing quality of life (AT-EQUAL), pp 121–126. https://doi.org/10.1109/atequal.2010.30

Branyon J, Pak R (2015) Investigating older adults’ trust, causal attributions, and perception of capabilities in robots as a function of robot appearance, task, and reliability. Proc Hum Factors Ergon Soc Annu Meet 59(1):1550–1554. https://doi.org/10.1177/1541931215591335

Butter M, Jv B, Kalisingh S (2007) Robotics for healthcare, state of the art report. TNO, Delft

Butter M, Rensma A, Boxsel JV, Kalisingh S, Schoone M, Leis M, Thielmaan A et al (2008) Robotics for healthcare: final report. DG Information Society, European Commission, Brussels

Chang MK, Cheung W (2001) Determinants of the intention to use Internet/WWW at work: a confirmatory study. Inf Manag 39(1):1–14. https://doi.org/10.1016/s0378-7206(01)00075-1

Chee BTT, Taezoon P, Xu Q, Ng J, Tan O (2012) Personality of social robots perceived through the appearance. Work 41(Suppl 1):272–276

Choi JG, Kim M (2008) The usage and evaluation of anthropomorphic form in robot design. In: Undisciplined! Design research society conference 2008. Sheffield Hallam University, Sheffield

Cummings ML, Thornburg KM (2011) Paying attention to the man behind the curtain. IEEE Pervasive Comput 10(1):58–62. https://doi.org/10.1109/mprv.2011.7

Dassonville I, Jolly D, Desodt AM (1996) Trust between man and machine in a teleoperation system. Reliab Eng Syst Saf 53(3):319–325. https://doi.org/10.1016/s0951-8320(96)00042-7

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 13:319–340. https://doi.org/10.2307/249008

Desai M (2007) Sliding scale autonomy and trust in human–robot interaction. Unpublished Doctoral dissertation. University of Massachusetts, Lowell

Duffy BR (2002) Anthropomorphism and robotics. The Society for the Study of Artificial Intelligence and the Simulation of Behaviour 20. https://doi.org/10.1016/s0921-8890(02)00374-3

Dzindolet MT, Peterson SA, Pomranky RA, Pierce LG, Beck HP (2003) The role of trust in automation reliance. Int J Hum Comput Stud 58(6):697–718. https://doi.org/10.1016/s1071-5819(03)00038-7

Endsley MR (1995) Towards a new paradigm for automation: designing for situation awareness. IFAC Proc 28(15):365–370. https://doi.org/10.1016/s1474-6670(17)45259-1

Epley N, Caruso E, Bazerman MH (2006) When perspective taking increases taking: reactive egoism in social interaction. J Pers Soc Psychol 91(5):872–889. https://doi.org/10.2139/ssrn.785989

Epley N, Waytz A, Cacioppo JT (2007) On seeing human: a three-factor theory of anthropomorphism. Psychol Rev 114(4):864–886. https://doi.org/10.1037/0033-295x.114.4.864

Everard A, Galletta DF (2005) How presentation flaws affect perceived site quality, trust, and intention to purchase from an online store. J Manag Inf Syst 22(3):56–95. https://doi.org/10.2753/mis0742-1222220303

Fasola J, Mataric MJ (2012) Using socially assistive human–robot interaction to motivate physical exercise for older adults. Proc IEEE 100(8):2512–2526. https://doi.org/10.1016/b978-0-12-800881-2.00022-0

Fazio RH (1990) Multiple processes by which attitudes guide behavior: the MODE model as an integrative framework. Adv Exp Soc Psychol 23:75–109. https://doi.org/10.1016/s0065-2601(08)60318-4

Ferrari F, Paladino MP, Jetten J (2016) Blurring human–machine distinctions: anthropomorphic appearance in social robots as a threat to human distinctiveness. Int J Soc Robot 8(2):287–302. https://doi.org/10.1007/s12369-016-0338-y

Gong L (2008) How social is social responses to computers? The function of the degree of anthropomorphism in computer representations. Comput Hum Behav 24(4):1494–1509. https://doi.org/10.1016/j.chb.2007.05.007

Gu JC, Lee SC, Suh YH (2009) Determinants of behavioral intention to mobile banking. Expert Syst Appl 36(9):11605–11616. https://doi.org/10.1016/j.eswa.2009.03.024

Hansen ST, Andersen HJ, Bak T (2010) Practical evaluation of robots for elderly in Denmark: an overview. In: Proceedings of the 5th ACM/IEEE international conference on human–robot interaction. IEEE Press, pp 149–150. https://doi.org/10.1145/1734454.1734517

Haring KS, Watanabe K, Mougenot C (2012) The influence of robot appearance on assessment. In: Proceedings of the 8th ACM/IEEE international conference on human–robot interaction. IEEE Press, pp 131–132. https://doi.org/10.1109/hri.2013.6483536

Hertzberg L (1988) On the attitude of trust. Inquiry 31(3):307–322. https://doi.org/10.1080/00201748808602157

Holden RJ, Rivera-Rodriguez AJ, Faye H, Scanlon MC, Karsh BT (2013) Automation and adaptation: nurses’ problem-solving behavior following the implementation of bar-coded medication administration technology. Cogn Technol Work 15(3):283–296. https://doi.org/10.1007/s10111-012-0229-4

Jacobzone S (2000) Coping with aging: international challenges. Health Aff 19(3):213–225. https://doi.org/10.1377/hlthaff.19.3.213

Jian JY, Bisantz AM, Drury CG (2000) Foundations for an empirically determined scale of trust in automated systems. Int J Cogn Ergon 4(1):53–71. https://doi.org/10.1207/s15327566ijce0401_04

Jones K (1996) Trust as an affective attitude. Ethics 107(1):4–25. https://doi.org/10.1007/978-0-230-20409-6_11

Jouglet D, Piechowiak S, Vanderhaegen F (2003) A shared workspace to support man–machine reasoning: application to cooperative distant diagnosis. Cogn Technol Work 5(2):127–139. https://doi.org/10.1007/s10111-002-0108-5

Kageyama Y (2002) Nurse gadget patrols the wards. Age. https://doi.org/10.7748/ns.11.7.6.s6

Kim TT, Kim WG, Kim HB (2009) The effects of perceived justice on recovery satisfaction, trust, word-of-mouth, and revisit intention in upscale hotels. Tour Manag 30(1):51–62. https://doi.org/10.1016/j.tourman.2008.04.003

Kusuma H, Pramunita R (2011) The effect of risk and trust on the behavioral intention of using e-procurement system. Eur J Econ Financ Admin Sci 40:138–145

Lee J, Moray N (1991) Trust, self-confidence and supervisory control in a process control simulation. In: 1991 IEEE international conference on systems, man, and cybernetics. Decision aiding for complex systems, conference proceedings. IEEE, pp 291–295. https://doi.org/10.1109/icsmc.1991.169700

Lee J, Moray N (1992) Trust, control strategies and allocation of function in human–machine systems. Ergonomics 35(10):1243–1270. https://doi.org/10.1080/00140139208967392

Lee JD, See KA (2004) Trust in automation: designing for appropriate reliance. Hum Factors J Hum Factors Ergon Soc 46(1):50–80. https://doi.org/10.1518/hfes.46.1.50.30392

Lee MK, Turban E (2001) A trust model for consumer internet shopping. Int J Electron Commer 6(1):75–91. https://doi.org/10.1080/10864415.2001.11044227

Lockhart JM, Strub MH, Hawley JK, Tapia LA (1993) Automation and supervisory control: a perspective on human performance, training, and performance aiding. In: Proceedings of the Human Factors and Ergonomics Society annual meeting, vol 37, no 18. SAGE Publications, pp 1211–1215. https://doi.org/10.1177/154193129303701802

Madhavan P, Wiegmann DA, Lacson FC (2006) Automation failures on tasks easily performed by operators undermine trust in automated aids. Hum Factors J Hum Factors Ergon Soc 48(2):241–256. https://doi.org/10.1037/e577042012-018

Moray N, Inagaki T, Itoh M (2000) Adaptive automation, trust, and self-confidence in fault management of time-critical tasks. J Exp Psychol Appl 6(1):44. https://doi.org/10.1037//1076-898x.6.1.44

Mori M, MacDorman KF, Kageki N (2012) The uncanny valley [from the field]. IEEE Robot Autom Mag 19(2):98–100. https://doi.org/10.1109/mra.2012.2192811

Muir BM (1987) Trust between humans and machines, and the design of decision aids. Int J Hum Comput Stud 27(5–6):527–539. https://doi.org/10.1016/s0020-7373(87)80013-5

Muir BM, Moray N (1996) Trust in automation. Part II. Experimental studies of trust and human intervention in a process control simulation. Ergonomics 39(3):429–460. https://doi.org/10.1080/00140139608964474

Njite D, Parsa HG (2005) Structural equation modeling of factors that influence consumer internet purchase intentions of services. J Serv Res 5(1):43

Oulton JA (2006) The global nursing shortage: an overview of issues and actions. Policy Polit Nurs Pract 7(Suppl 3):34S–39S. https://doi.org/10.1177/1527154406293968

Pak R, Fink N, Price M, Bass B, Sturre L (2012) Decision support aids with anthropomorphic characteristics influence trust and performance in younger and older adults. Ergonomics 55(9):1059–1072. https://doi.org/10.1080/00140139.2012.691554

Parasuraman R, Riley V (1997) Humans and automation: use, misuse, disuse, abuse. Hum Factors J Hum Factors Ergon Soc 39(2):230–253. https://doi.org/10.1518/001872097778543886

Parasuraman R, Molloy R, Singh IL (1993) Performance consequences of automation-induced ‘complacency’. Int J Aviat Psychol 3(1):1–23. https://doi.org/10.1207/s15327108ijap0301_1

Parasuraman R, Sheridan TB, Wickens CD (2000) A model for types and levels of human interaction with automation. IEEE Trans Syst Man Cybern A Syst Hum 30(3):286–297. https://doi.org/10.1109/3468.844354

Pineau J, Montemerlo M, Pollack M, Roy N, Thrun S (2003) Towards robotic assistants in nursing homes: challenges and results. Robot Auton Syst 42(3):271–281. https://doi.org/10.1016/s0921-8890(02)00381-0

Prakash A, Rogers WA (2015) Why some humanoid faces are perceived more positively than others: effects of human-likeness and task. Int J Soc Robot 7(2):309–331. https://doi.org/10.1007/s12369-014-0269-4

Reidy K, Markin K, Kohn S, Wiese E (2015) Effects of perspective taking on ratings of human likeness and trust. In: International conference on social robotics. Springer International Publishing, pp 564–573. https://doi.org/10.1007/978-3-319-25554-5_56

Robinson H, MacDonald BA, Kerse N, Broadbent E (2013) Suitability of healthcare robots for a dementia unit and suggested improvements. J Am Med Dir Assoc 14(1):34–40

Sætren GB, Hogenboom S, Laumann K (2016) A study of a technological development process: human factors—the forgotten factors? Cogn Technol Work 18(3):595–611. https://doi.org/10.1007/s10111-016-0379-x

Sargen M, Hooker RS, Cooper RA (2011) Gaps in the supply of physicians, advance practice nurses, and physician assistants. J Am Coll Surg 212(6):991–999. https://doi.org/10.1016/j.jamcollsurg.2011.03.005

Scerbo MW (1996) Theoretical perspectives on adaptive automation. In: Parasuraman R, Mouloua M (eds) Automation and human performance: theory and applications. Erlbaum, Mahwah, pp 37–63

Schaefer KE (2013) The perception and measurement of human–robot trust. Unpublished Doctoral dissertation. University of Central Florida, Orlando

Sheridan TB (2002) Humans and automation: system design and research issues. Wiley, New York

Spier R (2013) Trust as a necessary attitude in learning and research. http://www.portlandpress.com/pp/books/online/wg86/086/0015/0860015.pdf. Retrieved 5 Sept 2016

Waytz A, Heafner J, Epley N (2014) The mind in the machine: anthropomorphism increases trust in an autonomous vehicle. J Exp Soc Psychol 52:113–117. https://doi.org/10.1016/j.jesp.2014.01.005

Woods S (2006) Exploring the design space of robots: children’s perspectives. Interact Comput 18(6):1390–1418. https://doi.org/10.1016/j.intcom.2006.05.001

Appendix: Measurement items of scales

| Scale | Measurement item |
|---|---|
| Manipulation check | This robot’s head looks like a human head; This robot’s face looks like a human face; This robot’s body looks like a human body |
| Checklist for trust between people and automation | This robot is deceptive; I am suspicious of this robot’s intent, action, or output; I am wary of this robot; This robot’s action will have a harmful or injurious outcome; I am confident in this robot; This robot provides security; This robot is dependable; This robot is reliable; I can trust this robot |
| Intention to work | I want to work with this robot when I work in the future; I can easily work with this robot when I work in the future |
| Automation levels of robots | |
| Information acquisition | I would assign this robot to collect information on whether or not there is any need of the resident |
| Information analysis | I would assign this robot to analyze the collected information |
| Decision selection | After the analysis of the information, I would assign this robot to decide what to do |
| Action implementation | I would assign this robot to implement the decision |

Cite this article

Erebak, S., Turgut, T. Caregivers’ attitudes toward potential robot coworkers in elder care. Cogn Tech Work 21, 327–336 (2019). https://doi.org/10.1007/s10111-018-0512-0

DOI: https://doi.org/10.1007/s10111-018-0512-0