Abstract

Today’s automation is typically tied into work processes as tools actively supporting the human operator in fulfilling certain well-defined sub-tasks. The human operator is in the role of the high-end decision component determining and supervising the work process. With emerging technology, highly automated work systems can be beneficial on the one hand, but automation may as well cause problems of its own. This article advocates a new way of introducing automation into work systems, one that overcomes the classical pitfalls of automation while retaining its desired benefits. This shall be achieved by so-called cognitive automation, i.e. providing human-like problem-solving, decision-making and knowledge processing capabilities to machines in order to obtain goal-directed behaviour and effective operator assistance. A key feature of cognitive automation is the ability to create its own comprehensive representation of the current situation and to provide reasonable action. By additionally providing the automation with full knowledge of the prime work objectives, it will be enabled to co-operate with the human operator in supervision and decision tasks, thus acting as an intelligent machine assistant for the human operator in his work place. Such assistant systems understand the work objective and will strive to achieve the overall desired work result. They will understand the situation (e.g. opportunities, conflicts) and actions of team members, whether humans or assistant systems, and will pursue goals for co-operation and co-ordination (e.g. task coverage, avoidance of redundancy or of overtaxing team members). On the other hand, cognitive automation can be developed towards highly automated intelligent agents in charge of certain supportive tasks to be performed in a semi-autonomous mode.
These cognitive semi-autonomous systems and the cognitive assistants shall be denoted as the two faces of dual-mode cognitive automation (Onken and Schulte, in preparation).

1 Introduction

In the field of airborne vehicle guidance, and of course not only there, the general principle of integrating human and automation in the manner of supervisory control (Sheridan 1987) is very common. While the automation typically performs fast inner control loops, the human operator is responsible for mode selection and command value setting as the observable outcome of planning, decision-making, deliberation and anticipation for the sake of a safe and efficient mission accomplishment. Thereby, a hierarchically organised work system is established.

The insufficiency of human mental resources becomes obvious when the supervision of multiple uninhabited aerial vehicles (UAV) by a single operator is required. Inevitably, this would lead to erroneous action and performance decline. Nevertheless, such ideas are currently being discussed in the military community under the term manned–unmanned teaming. This is an approach to simultaneously control several UAVs and their payload from a manned aircraft in order to increase the effectiveness of the manned system in performing its mission. In order to cope with the task of supervising multiple automated processes of vehicle guidance in a co-ordinated manner, while maintaining control over one’s own vehicle, much more cognition has to be built into the system. How can that be done?

From a purely technology-driven point of view, a superficial answer could probably be to make the UAVs autonomous. But, what are the requirements of an appropriate autonomous system and what is the difference to the aforementioned automated system? Commonly, an autonomous system would be expected to pursue the work objective of the considered mission, to be reactive to perceived external situational dynamics, to modify the work objective on the basis of the actual situation if necessary, and to generate solutions by means of anticipation, deliberation and planning and execute them without human intervention; in short to be an artificial cognitive system equivalent to the human operator. But of what use might such an artificial autonomous creature completely detached from human input be? On the other hand, is the consideration of human factors issues in the context of the autonomy debate still adequate? This paper will show that giving machines full autonomy, in the limited sense of a purely technical treatment, will not be the solution.

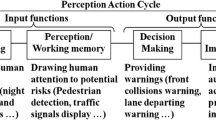

In order to embed automation into a highly interactive work environment such as a multi-agent scenario, teamwork is compulsory. The basis of teamwork is the appreciation of the behavioural traits of team members, whether they are humans or machines. Establishing the capability of teaming between humans and automation will be referred to as co-operative control, as opposed to the classical paradigm of supervisory control. Interaction shall no longer occur on the level of mode selection and command value settings but through negotiation of requests and commitments on the level of tasks and intents. This will be based upon a common understanding of the current situation by both humans and machines, subject to a common mission objective. Although the final decision authority for high-end decisions shall stay with the human operator, a peer team of humans and artificial cognitive units will be established, the latter being intelligent systems which assist the human operator in the process of pursuing the mission objective. Therefore, the following machine capabilities have to be implemented:

- semi-autonomous task accomplishment, i.e. the capability to comply with a given task with little human intervention if necessary,
- operator assistance, i.e. to direct the human’s attention by technical means to the most urgent task and to balance his workload whenever demanded by the situation,
- human–machine and machine–machine co-operation, i.e. to achieve the common top-level goals of teaming by a co-ordinated pursuit of the common mission objective.

The following sections will provide a theoretical approach of, firstly, how to introduce cognitive automation into work, secondly, what is required to make the automation behave in a co-operative manner, and thirdly, how to design artificial cognitive systems. Finally, an insight into current application-related research will be given.

2 Introducing artificial cognition into the work system

2.1 The work system as human factors engineering framework

The introduction gave a brief outline of the challenges for future vehicle guidance systems. To figure out a solution, the first step shall be the characterisation of conventional automation in the work process as opposed to the introduction of cognitive automation. For this purpose, the consideration of the work system as top-level human factors engineering framework shall be helpful. The work system (see Fig. 1) as a general ergonomics concept, probably defined for the first time in REFA (1984), has been utilised in a modified definition, adapted to the application domain of human–machine co-operation in flight guidance by Onken (2002) and to machine–machine co-operation by Meitinger and Schulte (2006b).

The work system is defined by the work objective, being the main input into the process of work. The work objective mostly comes as an instruction, order or command from a different supervising agency with its own work processes. Further constraining factors to the work process are environmental conditions and supplies. At its output, the work system provides the work result including the current state of work and what has been accomplished by the work process as a physical change of the real world (Onken et al. in preparation).

The work system itself consists of two major elements, i.e. the operating force and the operation-supporting means, as characterised in some more detail as follows:

- The operating force is the high-end decision component of the work system. It is the only component which pursues the complete work objective. It determines and supervises what will happen in the course of the work process and which operation-supporting means will be deployed at what time. The operating force is the work system component with the highest authority level. One major characteristic of the operating force, especially when represented by a human, is the capability of self-defining the work objective (see Fig. 1). Besides operating on the basis of full authority competence, this is the decisive criterion for an autonomous system.

- The concept of the operation-supporting means can be seen as a container for whatever artefacts are available to make use of in the work process, including basic work site settings, non-powered tools and machines. The latter might be a vehicle in the case of a transport work process, but also computerised devices of automation. In the application domain of flight guidance, currently used auto-flight or autopilot systems including the human–machine control interface can serve as typical examples. Common to the nature of various operation-supporting means is the fact that they only facilitate the performance of certain sub-tasks. By nature, such a sub-task does not form a work system itself, obviously being only a part of another higher level work task. According to the common ergonomic design philosophy, it is mostly the operation-supporting means that are subjected to the endeavours of optimisation in order to achieve overall system requirements and accomplish further improvements.

These elements will be combined to the work system set up in order to achieve a certain work result on the basis of a given work objective. The accomplishment of a flight mission (i.e. the work objective) may give a good idea of what is meant here. In this case, the work system will consist of an air-crew being the operating force, and the aircraft including its automated on-board functions as well as any required infrastructure represents the operation-supporting means.
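The structure of the work system described above can be illustrated by a small data model. This is only a sketch under assumptions made here: the names `Component`, `WorkSystem` and `who_pursues_objective` are hypothetical and do not stem from the cited definitions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Component:
    """Any element of a work system (illustrative naming only)."""
    name: str
    cognitive: bool  # True for humans (and ACUs), False for conventional tools


@dataclass
class WorkSystem:
    work_objective: str                   # main input, given from outside
    operating_force: List[Component]      # pursues the complete objective
    operation_supporting_means: List[Component] = field(default_factory=list)

    def who_pursues_objective(self) -> List[str]:
        # Only the operating force pursues the complete work objective;
        # operation-supporting means merely facilitate sub-tasks.
        return [c.name for c in self.operating_force]


# The flight mission example from the text.
flight_mission = WorkSystem(
    work_objective="accomplish the flight mission",
    operating_force=[Component("air-crew", cognitive=True)],
    operation_supporting_means=[
        Component("aircraft", cognitive=False),
        Component("autopilot", cognitive=False),
        Component("ground infrastructure", cognitive=False),
    ],
)
print(flight_mission.who_pursues_objective())
```

In this conventional configuration, cognition resides in the operating force only, which is why every entry of the operation-supporting means carries `cognitive=False`.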

2.2 Artificial cognitive units in the work system

Traditionally, a human or a human team (cf. Onken 2002) represents the operating force in the work system. In the conventional sense, the human operating force provides the capability of cognition within a work system, whereas the operation-supporting means do not. In order to overcome known shortcomings of such conventional automation (Billings 1997), a configuration of the work system shall be suggested, where so-called artificial cognitive units (ACU) are introduced. Introducing ACUs into the work system, as opposed to the further enhancement of conventionally automated functions or the addition of further humans, adds a new level of automation, i.e. the cognitive level, to the work system. The possibility to shape an ACU as being either part of the operating force (operating ACU) or part of the operation-supporting means (supporting ACU) defines two modes of cognitive automation (see Fig. 2). Both modes might be combined with conventional automation within one work system.

Both modes of automation have in common that they incorporate artificial cognition. Onken (2002) describes the nature of suchlike cognitive automation as follows:

“As opposed to conventional automation, cognitive automation works on the basis of comprehensive knowledge about the work process objectives and goals […], pertinent task options and necessary data describing the current situation in the work process. Therefore, cognitive automation is prime-goal-oriented.” (Onken 2002)

Concerning the application of cognitive automation as operating ACU, i.e. on the right hand side of Fig. 2, where the human operator and the ACU form the operating force as a team, Onken (2002) comments that in this configuration the ACU has reached

“[…] the high-end authority level for decisions in the work system, which was, so far, occupied by the human operator alone.” (Onken 2002)

As a consequence of this consideration, both team members (human operator and ACU) are obliged to apply their specific capabilities, which might be overlapping, in order to best pursue the overall work objective. Hence, an operating ACU is always characterised by the incorporation of the functionality of what we call an assistant system.

Onken (1994) formulates two basic functional requirements for specification purposes of such an assistant system being part of the operating force. An amended version of these requirements is the following:

Requirement (1):

The assistant system has to assess/interpret the work situation on its own and has to do its best by own initiatives to ensure that the attention of the assisted human operator(s) is placed with priority to the objectively most urgent task or subtask.

Requirement (2)—optional:

If the assistant system can securely identify as part of the situation interpretation according to requirement (1) that the assisted human operator(s) is (are) overtaxed, then the assistant system has to do its best by own initiatives to transfer this situation into another one which can be handled normally by the assisted human operator(s).

In these so-called two basic requirements for human–machine interaction the way is paved for automation as part of the operating force of a work system in the sense of cognitively facilitated human–machine co-operation.
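The two requirements can be illustrated by a minimal decision sketch. Everything concrete in it is an assumption made for illustration: the numeric `urgency` score, the `workload` estimate, the threshold of 0.8, and the choice of taking over the least urgent remaining task as one possible way of relieving an overtaxed operator.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Task:
    name: str
    urgency: float  # hypothetical score: higher means more urgent


def assist(tasks: List[Task], operator_focus: Optional[str],
           workload: float, overload_threshold: float = 0.8) -> List[str]:
    """Return the initiatives an assistant system might take."""
    initiatives: List[str] = []
    if not tasks:
        return initiatives
    most_urgent = max(tasks, key=lambda t: t.urgency)
    # Requirement (1): guide attention to the objectively most urgent task.
    if operator_focus != most_urgent.name:
        initiatives.append(f"direct attention to {most_urgent.name}")
    # Requirement (2): act only if overtaxing has been identified.
    if workload > overload_threshold:
        others = [t for t in tasks if t is not most_urgent]
        if others:
            least_urgent = min(others, key=lambda t: t.urgency)
            initiatives.append(f"take over {least_urgent.name}")
    return initiatives


tasks = [Task("radio call", 0.3), Task("terrain avoidance", 0.9)]
print(assist(tasks, operator_focus="radio call", workload=0.5))
```

Note that the second branch depends on the situation interpretation of the first: the assistant intervenes in the task allocation only when overload is detected, and otherwise leaves the work to the operator.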

2.3 Dealing with expanding automation in work systems

The idea of supplementing the operating force of a work system with an ACU might be driven up to a degree where the human operator’s capabilities would be completely substituted by the capabilities of the ACU and, therefore, consequently the human could theoretically be dropped out of the work system (see Fig. 3, top right). In case that this includes the analogue of the human capability to self-assign a work objective, the resulting artefact could be called an artificial autonomous system.

Considering such an artificial autonomous system there are two good reasons, why it is not desirable to create an artefact like this:

(1) From an ethical point of view we want to refuse building machines, which potentially could self-assign a work objective implying an unforeseeable or even harmful consequence for humans.

(2) From a pragmatic point of view we do not need machines not being subjected to human authority. Such technological artefacts for themselves are of no use, since they are no longer serving the human for his work in its broadest sense.

Therefore, a reasonable conclusion at this point is the consideration that the cognitive artefact as a substitute of a work system becomes a part of the operation-supporting means of a higher level work system whose operating force has got at least one human operator (possibly among other team members, humans or operating ACUs). According to the principal concept of human autonomy, only this operator is entitled to define the overall work objective (see Fig. 3, bottom). The artificial work system substitute, now being part of the operation-supporting means of the upper-level work system as a supporting ACU, receives its task instructions purely from the operating force of that work system. In this regard, we would speak of a highly automated or semi-autonomous system. Thus, the consideration of human factors issues in the field of the control of such ‘uninhabited’ systems is not an oxymoron as it might have been speculated at the first glance.

This migration of work from humans to machines, mostly not having any intelligence, could be observed throughout the whole history of industrial mechanisation and automation. Figure 4 depicts the resulting vicious circle of escalating automation as part of the operation-supporting means in the work system and the suggested way out by operating ACUs in the application field of UAV guidance and control. The replacement of human work by continuously expanding automation (as part of the operation-supporting means) leads to technical solutions of steadily increased authority. Simultaneously, the human operator is continuously being pushed further into the role of supervising more and more machines and, at the same time, more and more complex ones. The concept of co-operative automation by operating ACUs, being part of a work system on the side of the operating force, is supposed to break with the stubborn design philosophy of increasing replacement of human work by increasingly complex automation and the resulting shift of human work to more detached and more burdening supervision (cf. Fig. 4).

In contrast to automation aiming at autonomous performance in the sense of taking over human responsibilities, no matter how dumb or intelligent that kind of automation might be, co-operative automation in the sense of human–machine co-agency shall be enabled

(1) to collaborate in a close-partner work relationship with the human operator,

(2) to negotiate the allocation of tasks adapted to the needs of the current situation, and

(3) to jointly supervise the performance of sub-tasks under the consideration of the overall work objective by highly automated (semi-autonomous) systems, if applicable.

3 Co-operative automation

The introduction of ACUs into the work system, both as part of the operating force and as operation-supporting means (i.e. dual-mode cognitive automation), demands co-operative capabilities on the side of the ACUs for the following reasons:

(1) Operating ACUs have to be capable of co-operating with the human operator in order to be able to successfully compensate for possible human resource limitations for the sake of mission accomplishment.

(2) There might be several supporting ACUs, which should be capable of co-operating for the accomplishment of a task commanded to them by the operating force.

Obviously, co-operation becomes a key issue in work systems, if there are humans and ACUs involved as several cognitive units. This section will take a look at co-operation and its implications of co-ordination and communication. In this context, we will also touch on selected approaches how machines can be enabled to co-operate.

3.1 Co-operation

Generally, the fact that the co-players have a common objective is considered a fundamental characteristic of co-operation. Within a work system as shown in Fig. 5 there are different levels of co-operation.

On the first level, human–machine co-operation can be present within the operating force, where an ACU representing an assistant system is teaming with the human operator. This level of co-operation allows the assistant system to co-operate with the human operator just as a human assistant would. A typical example for a purely human team on this level of co-operation is the pilot team in the two-man cockpit of a commercial transport aircraft. Just like them, the human operator and the assistant system operate the work process in a co-ordinated way in order to accomplish a common, externally given work objective. This level of co-operation can be called the collaborative level and might go as far as the assistant system taking over full authority in case of incapacity of the human operator. Not all assistant systems on that co-operation level, as part of the team constituting the operating force, are of that high-end capacity. We can think of others which are considerably constrained in their authority level and might have no capacity at all to directly operate the work process, although they know everything that is known by any other team member of the operating force, including the externally given work objective. Their assistance can be like that of a harbour pilot who advises the captain of a ship, thereby supporting him in bringing his vessel safely to the dock, but who would never have his hands on the rudder.

On a second level, co-operation exists between supporting ACUs within the operation-supporting means, consisting of several cognitive systems working together in order to achieve the sub-tasks assigned to them and monitored by the operating force. This level of co-operation allows for extended capabilities within the operation-supporting means. Although it is in principle desirable to have as much automation as possible within the operating force (Onken et al. in preparation), there are cases in which this is not possible. An example for such co-operative semi-autonomous systems might be a team of UAVs which is capable of accomplishing a given task, such as the reconnaissance of a certain area, with little or no further intervention of the human operator, including the co-ordination of responsibilities for sub-areas and the appropriate usage of different resources, e.g. sensors.

Figure 5 also indicates what we know as supervision as opposed to co-operation, taking place between the operating force and the operation-supporting means. Note that double-sided vertical arrows in Fig. 5 designate the interaction type of co-operation and horizontal arrows indicate the direction of supervising interaction. From the engineering point of view, it is important to distinguish carefully between supervision and co-operation. In particular, the requirements and challenges for the realisation are considerably different. The main difference between supervision and co-operation lies in the fact that, under supervision, the goals of the supervisor and the supervised unit are not necessarily the same. It is not even guaranteed that the goals are compatible under all circumstances. In that sense, the term manned–unmanned teaming (MUM-T) is not necessarily the same as what we have defined here as teaming of co-operating units (Schulte 2006). There, teaming is used for team structures which can be associated with both supervision and co-operation, as depicted in Fig. 5.

Billings’ principles of human-centred automation as stated in Billings (1997) give an idea of what requirements have to be met on the side of both the human and automation in order to enable safe and efficient aircraft guidance or air traffic control. They refer to a human–machine team, but can be generalised for teams consisting of several humans and ACUs working in similarly structured task domains, as suggested in Ertl and Schulte (2004). Thus, all team members must

- be actively involved,
- keep each other adequately informed,
- monitor each other, and therefore should be predictable, and
- know the intent(s) of all other team members.

These requirements demand various capabilities of the ACUs, such as comparing expected and observed activities of team members, including the human operator, in order to be able to monitor them in terms of their intents. This requirement has been thoroughly investigated in the context of knowledge-based crew assistant systems by Wittig and Onken (1993) and Strohal and Onken (1998).
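The comparison of expected and observed activities mentioned above can be sketched in a few lines. This is a deliberately simplified illustration and not the method of the cited assistant systems: activities are assumed to be plain labels, and the function name `monitor` is hypothetical.

```python
from typing import Dict, Optional, Tuple


def monitor(expected: Dict[str, str],
            observed: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Report team members whose observed activity differs from the expected one."""
    deviations: Dict[str, Tuple[str, Optional[str]]] = {}
    for member, expected_activity in expected.items():
        seen = observed.get(member)  # None if no activity was observed
        if seen != expected_activity:
            deviations[member] = (expected_activity, seen)
    return deviations


expected = {"pilot": "descend", "uav1": "loiter"}
observed = {"pilot": "climb", "uav1": "loiter"}
print(monitor(expected, observed))
```

A detected deviation is only the starting point: deciding whether it reflects an error or a changed intent of the team member requires further interpretation.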

3.2 Co-ordination

Accomplishing a work objective in co-operation only makes sense, if it is actually possible and useful to work on the given task with several actors, due to the following reasons (cf. Jennings 1996; Wooldridge 2002):

- No individual team member has enough competence, resources or information to solve the entire problem.
- Activities of team members depend on each other with respect to the usage of shared resources or the outcome of previously completed tasks.
- The efficiency of the work process or the utility of the outcome is increased, e.g.
  - by avoiding the completion of tasks in unnecessary redundancy or
  - by informing team members about relevant situational changes so that task completion will be optimised.

In order to achieve the desired positive effects of co-operative work, the activities of the participating team members have to be co-ordinated, i.e. interdependencies between these activities have to be managed (Malone and Crowston 1994). Interdependencies include not only negative ones such as the usage of shared resources and the allocation of tasks to actors, but also positive relationships such as equal activities (von Martial 1992).

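Although there are many approaches to co-ordination (cf. e.g. Wooldridge 2002), Jennings’ approach will be discussed briefly here, as it is well suited for the application within human–machine teams. He reduces co-ordination to the formula

$$\text{Co-ordination} = \text{Commitments} + \text{Conventions} + \text{Social Conventions} + \text{Local Reasoning}$$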

Therein, commitments are described as

“[…] pledges [of agents] to undertake a specified course of actions” (Jennings 1996)

Conventions are explained as

Finally, local reasoning stands for the capability to think about own actions and related ones of others.

By choosing conventions and social conventions appropriately, the distribution of commitments in a team can be adapted to the current situation and can consider aspects such as the workload of the human operator, opportunities of team members, or unexpected changes of the environmental conditions.
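How conventions might adapt the distribution of commitments to the situation can be sketched as follows. The sketch rests on an assumed reading that is not taken from Jennings: a convention is modelled as a rule deciding whether a commitment is kept in the current situation, and a social convention as the duty to inform the team when a commitment is dropped. All names and the workload threshold of 0.8 are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Commitment:
    agent: str
    task: str
    active: bool = True


# Assumed reading: a convention returns True if the commitment is to be kept.
Convention = Callable[[Commitment, Dict[str, float]], bool]


def keep_unless_overloaded(c: Commitment, situation: Dict[str, float]) -> bool:
    """Hypothetical convention: drop pledges of overloaded team members."""
    return situation.get(f"workload:{c.agent}", 0.0) <= 0.8


def review(commitments: List[Commitment], situation: Dict[str, float],
           convention: Convention) -> List[str]:
    """Apply a convention and return the resulting team notifications."""
    messages: List[str] = []
    for c in commitments:
        if c.active and not convention(c, situation):
            c.active = False
            # Assumed social convention: tell the team about dropped pledges.
            messages.append(f"{c.agent} drops commitment to {c.task}")
    return messages


team = [Commitment("operator", "sensor check"), Commitment("acu", "route planning")]
print(review(team, {"workload:operator": 0.95}, keep_unless_overloaded))
```

The notification gives the remaining team members the chance to take up the released task, which is where local reasoning about own and others' actions comes in.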

3.3 Communication

The prevailing means for co-ordinating team members is communication, which can be either explicit or implicit. Explicit communication in the area of multi-agent research is usually based on the speech act theory founded by Austin (1962) and further developed by Searle (1969). It states that communicative acts can change the state of the environment as much as other actions, i.e. to communicate explicitly means to send messages to team members, usually in order to achieve a certain desired state. Implicit communication in contrast is based on the observation of team members and an inference of their intentions in order to be able to conclude, which content messages could have had, if explicitly sent.

In order to enable machines to communicate with team members explicitly, agent communication languages can be used, one of them being the FIPA ACL (Foundation for Intelligent Physical Agents Agent Communication Language, http://www.fipa.org). It specifies

- a message protocol (FIPA 2002a),
- communicative acts (performatives) (FIPA 2002b),
- content languages, and
- interaction protocols.

Whereas the former three are focused on single messages, interaction protocols describe possible successions of messages. In Fig. 6, for instance, a typical request interaction based on the FIPA specification (FIPA 2002c) is shown as a state automaton (cf. Winograd and Flores 1986) with messages representing state transitions.

Usually, a request interaction is started because a team member wants another one to accomplish a certain task. Therefore, initially a message detailing the request is sent. The participant can either refuse or accept the request and, in case of acceptance, informs the initiator of the outcome of task accomplishment. Such a formalisation of interactions ensures that, no matter how the participant decides, the initiator always receives feedback on the request and can use this as a basis for selecting future actions.

Although humans mostly tend to stick to such protocols, they may, in contrast to machines, send redundant messages or use unintended short-cuts. Thus, in case machines are supposed to communicate with humans, either the humans have to stick to protocols or, as a much more human-friendly alternative, the machines have to be enabled to cope with such imperfect human behaviour. In a first step, this might be a notification that a message has not been understood (a possibility already provided for by the FIPA ACL) together with information about the expected messages. A more advanced approach could imply an interpretation of the deviation and an inference of the underlying intention with an appropriate adaptation of the interaction.

This possibility already connects explicit with implicit communication, the latter requiring a model of the dialogue partner from which intentions can be inferred. Whereas for machine partners the development of such models is quite straightforward due to the fact that their internal mechanisms are usually well known, many human factors engineering research issues regarding human behaviours in dialogues remain.
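The request interaction described in this section, together with the first-step reaction to unexpected messages, can be sketched as a small state automaton. This is a simplified illustration and not a complete rendering of the FIPA request interaction protocol: the state names are assumptions made here, the performatives `request`, `refuse`, `agree`, `inform`, `failure` and `not-understood` follow FIPA ACL naming, and the format of the reply string is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Messages act as state transitions: (current state, performative) -> next state.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("start", "request"): "requested",
    ("requested", "refuse"): "refused",
    ("requested", "agree"): "agreed",
    ("agreed", "inform"): "done",
    ("agreed", "failure"): "failed",
}


def expected_messages(state: str) -> List[str]:
    """List the performatives the protocol allows in the given state."""
    return sorted(p for (s, p) in TRANSITIONS if s == state)


def step(state: str, performative: str) -> Tuple[str, Optional[str]]:
    """Advance the interaction; tolerate messages outside the protocol."""
    next_state = TRANSITIONS.get((state, performative))
    if next_state is None:
        # Stay in the current state and tell the sender what was expected.
        hint = ", ".join(expected_messages(state))
        return state, f"not-understood; expected: {hint}"
    return next_state, None


state = "start"
for message in ["request", "inform", "agree", "inform"]:
    state, reply = step(state, message)
    print(message, "->", state, "|", reply)
```

Because an unexpected message leaves the state unchanged, a human partner who skips a step can simply continue the dialogue after the notification. Inferring the intention behind the deviation, as the more advanced approach would require, is beyond this sketch.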

4 Approaching artificial cognition

In Sect. 2, the introduction of artificial cognition into work systems has been advocated. Section 3 gave an overview of the required attributes of an artificial cognitive unit used as a piece of co-operative automation supplementing human performance as part of the operating force in a work system or providing advanced capabilities as part of the operation-supporting means, respectively. This section shall provide an engineering approach to artificial cognition.

The concept of automation being a team-player in a mixed human–machine team, or having a machine taking over responsibility for work objectives to a large extent, promotes the approach of deriving required machine functions from models of human performance. The following sections will describe the concept of the so-called cognitive process and its implementation in a system engineering framework in some more detail.

4.1 Cognitive process

The cognitive process (CP) can be seen as a model of intelligent machine performance, which is well suited for the design of ACUs with goal-directed decision-making and problem-solving capabilities based on a symbolic representation of the perceived situation. It aims at the development of technical systems, which are capable of exhibiting behaviour on all levels of performance as stated by Rasmussen (1983), i.e. the skill-based, rule-based, and knowledge-based level. Particularly, the possibility to perform on the knowledge-based level makes it possible to develop systems, which are very flexible and adaptive to environments, the configuration of which is not exactly known in advance.

From an architectural point of view, the CP follows the approach of knowledge-based systems in computer science, i.e. it separates application-specific knowledge from application-independent processing of this knowledge (inference). Figure 7 shows the CP consisting of the body (knowledge; inner part) and the transformers (inference; outer extremities) (Putzer and Onken 2003).

The body consists of two kinds of knowledge: the a priori knowledge, which is modelled by the developer of the ACU, and the situational knowledge, which is created by the CP during runtime by processing information from the environment, already existent situational knowledge, and a priori knowledge.

The above-mentioned transformers are the underlying mechanism for the handling of situational knowledge. They read input data in mainly one area of the situational knowledge, use a priori knowledge to transform or process the knowledge and write output data (‘new’ or modified knowledge) to a designated area of the situational knowledge. The following transformation steps work together in order to generate observable CP or ACU behaviour, respectively. Although they are described sequentially here, they are performed according to the situation being represented by the situational knowledge as appropriate.

- Information concerning the current state of the environment (input data) is acquired via the input interface. These input data include anything the CP is designed to perceive, subject to relevance for further knowledge processing steps. The input data in real-world applications are usually limited by the equipment of the underlying implementation in terms of communication systems and sensors, both concerning the vehicle and the surrounding environment.

- An internal representation of the current situation (belief), comprising the mental model of the external physical world as well as its comprehension on a higher conceptual level, is obtained by interpreting the input data using environment models. These are concepts of objects, relations and abstract structures, which might be part of the situation.

- Based on the belief, it is determined which desires (potential goals) are to be pursued given the current situation. These abstract desires are instantiated as active goals describing the state of the environment which the CP intends to achieve.

- Planning determines the steps, i.e. situation changes, which are necessary to alter the current state of the environment in such a way that the desired state is achieved. For each planning step, models of action alternatives are used.

- Instruction models are then needed to schedule the steps required to execute the plan, resulting in instructions.

- Instructions are finally put into effect by appropriate effectors, which are part of the environment, resulting in the desired change of the environment.
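The transformation steps listed above can be illustrated by a minimal Python sketch. All names used here (`SituationalKnowledge`, `interpret`, `determine_goals`, `plan`, `schedule`, `simulate`) and the one-dimensional toy world are assumptions made purely for illustration; they are not taken from the CP theory or its implementation. The fixed sequential order is also a simplification, since the CP performs the steps as the situation requires.

```python
from dataclasses import dataclass, field


@dataclass
class SituationalKnowledge:
    """Runtime knowledge read and written by the transformers (hypothetical layout)."""
    input_data: dict = field(default_factory=dict)
    belief: dict = field(default_factory=dict)
    goals: list = field(default_factory=list)
    plan: list = field(default_factory=list)
    instructions: list = field(default_factory=list)


def interpret(sk):
    # Environment model (a priori knowledge): derive the concept 'at_target' from raw data.
    pos, target = sk.input_data["position"], sk.input_data["target"]
    sk.belief = {"position": pos, "target": target, "at_target": pos == target}


def determine_goals(sk):
    # The desire 'be at target' becomes an active goal only while it is not yet satisfied.
    sk.goals = [] if sk.belief["at_target"] else ["be_at_target"]


def plan(sk):
    # Action alternative 'move': changes the position by one unit per step.
    sk.plan = []
    if "be_at_target" in sk.goals:
        pos, target = sk.belief["position"], sk.belief["target"]
        step = 1 if target > pos else -1
        sk.plan = [("move", step)] * abs(target - pos)


def schedule(sk):
    # Instruction model: translate the first plan step into an effector command.
    sk.instructions = [{"effector": "drive", "delta": sk.plan[0][1]}] if sk.plan else []


def run_cycle(sk, sensed):
    """One pass through all transformers; returns the instructions for the effectors."""
    sk.input_data = sensed
    for transformer in (interpret, determine_goals, plan, schedule):
        transformer(sk)
    return sk.instructions


def simulate(position, target):
    """Closes the loop: effectors change the environment until no goal remains."""
    sk, cycles = SituationalKnowledge(), 0
    while True:
        instructions = run_cycle(sk, {"position": position, "target": target})
        if not instructions:
            return position, cycles
        position += instructions[0]["delta"]
        cycles += 1
```

Calling `simulate(0, 3)` runs the cycle until the belief states that the target has been reached. The a priori knowledge lives in the transformer functions, whereas everything that changes at runtime is held in `SituationalKnowledge`, mirroring the separation described above.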

4.2 Different views on the cognitive process

For the development of complex systems, it is desirable to be able to consider different application capabilities separately. The theory of the cognitive process facilitates the design of so-called ‘packages’ encapsulating application capabilities (horizontal view, Fig. 8).

The packages are linked by dedicated joints in the a priori knowledge and by the use of common situational knowledge. Therefore, the use of a common ontology in the design of the different packages is of great importance. Together, the packages form the complete system. As they are all designed according to the blueprint of the CP, a uniform structure can be recognised when looking at the packages vertically (vertical view, Fig. 8).

4.3 The framework: from theory to implementation

An appropriate architecture for the development of cognitive systems is COSA (cognitive system architecture) (Putzer and Onken 2003), which offers a framework to implement applications according to the theory of the CP. It supports the developer in two ways. Firstly, COSA provides an implementation of the application-independent inference mechanism, so that the development of a cognitive system is reduced to the implementation of interfaces and the acquisition and modelling of a priori knowledge. Secondly, knowledge modelling is supported by the provision of the cognitive programming language (CPL), the programming paradigm of which is based on the theoretical approach of the CP. CPL facilitates coding of complex behaviour on a high level of abstraction. In this context, ‘programming’ means describing the situation-dependent behaviour of concepts (knowledge models). These knowledge models are environment models, models of desires and action alternatives, and instruction models as known from the CP. Thus, it differs from conventional programming in that it does not represent a merely procedural implementation of functions.

5 Application

In the previous sections, the idea of introducing artificial cognition into the work process has been elaborated and discussed to some extent. The discussion resulted in the postulation of co-operative automation as an approach to overcome human-factors-related shortcomings of the current automation paradigm. Some basic considerations were made concerning fundamental features of co-operative automation, and a brief introduction to the concept of artificial cognition as the underlying technology has been given. This final section is dedicated to some application-related issues.

Earlier in this contribution (Fig. 5) the various forms of co-operation between intelligent agents in the work system have been mentioned, i.e. the human–machine co-operation (assistant system in co-operation with human operator) and the machine–machine co-operation (co-agency of multiple highly automated, i.e. semi-autonomous, entities under human supervision). The following two sub-sections give some insight into these two aspects. The issue of knowledge-based assistant systems has already been reported on very extensively by our research group in many previous publications. For this reason, its treatment here will be very brief. Machine–machine co-operation, in contrast, will be treated in more detail. We expect that there will be massive spin-off for a more human-centred view on how humans and machines may co-operate in the very near future.

5.1 Assistant systems

Researchers at the Universität der Bundeswehr München (Munich University of the German Armed Forces) have been working on co-operative automation technology in the field of aircraft guidance for almost two decades.

Early approaches concerned knowledge-based systems assisting airline pilots in IFR flight. The cockpit assistant system (CASSY) was successfully flight-tested in 1994, being the first prototype of its kind worldwide (Prévôt et al. 1995). The crew assistant military aircraft (CAMA) followed in the late 1990s, incorporating technology capable of semi-autonomously performing mission tasks (e.g. tactical situation analysis, tactical re-planning) on the basis of goal-oriented behaviours while keeping up a situation-adapted dialogue with the pilot in order to balance his workload (Walsdorf et al. 1997; Schulte and Stütz 1998; Stütz and Schulte 2000; Frey et al. 2001).

Both systems can be regarded as operating ACUs according to the definition given earlier (cf. Fig. 2), although they do not fully follow today’s strict design philosophy, which was not yet available at that time. However, they already exhibit some crucial capabilities: they model expected pilot behaviour on the level of control actions (Ruckdeschel and Onken 1994; Stütz and Onken 2001), recognise the pilot’s intents and errors through comparison between expected and observed pilot behaviour (Wittig and Onken 1993), and manage the human–machine dialogue by the use of adaptive speech recognition (Gerlach and Onken 1993).

Future work in this field will cover two new application domains, the support of helicopter pilots and assistant systems for the UAV ground operator. Technology-wise the consideration of user-adaptive automation will be an issue.

5.2 Machine–machine co-operation as basis for manned–unmanned teaming

While work in the field of assistant systems focuses on the introduction of ACUs as artificial team members for the human operator being the operating force in a work system, work in the area of manned–unmanned teaming additionally considers the integration of semi-autonomous systems into a work system as discussed earlier in this contribution.

In a first step towards a work system of manned–unmanned teaming the co-agency of a team of semi-autonomous co-operating uninhabited combat aerial vehicles (UCAVs) is investigated (Meitinger and Schulte 2006a, b). This team of UCAVs is supposed to be capable of co-operatively accomplishing a mission as specified by the operating force (in the following being referred to as ‘command and control’). The subject of this investigation is the co-ordination of the UCAVs as machine agents in a simplified SEAD/attack scenario on the basis of dialogues on the knowledge-based level of behaviour.

The scenario, which is used for the development of the required semi-autonomous and co-operative capabilities, consists of some surface-to-air missile sites (SAM-sites) and a high-value target in a hostile area, which has to be destroyed by the team of UCAVs. Some of the threats are known a priori at the beginning of the mission; others pop up unexpectedly during the course of the mission. One UCAV is equipped with a weapon which can destroy the target. The other UCAVs carry sensors for the detection of pop-up threats and incoming missiles as well as high-speed anti-radiation missiles (HARMs) for the suppression or destruction of SAM-sites.

In order to tackle the problem, an artificial cognitive unit (ACU) has been introduced onboard each UCAV, covering the following capabilities:

- use of operation-supporting means

- safe flight

- single vehicle mission accomplishment

- co-operative mission accomplishment

5.2.1 Use of conventional operation-supporting means

This package enables the ACUs to handle other conventional operation-supporting means as automation equipment of the UCAV, namely an autopilot, a flight management system and a flight planner minimising threat.

The availability of these functionalities is modelled by action alternatives such as ‘generate flight plan’ and ‘fly to a location’ including their effects and pre-conditions for execution. Besides, instruction models containing knowledge about the usage of interfaces to the vehicle and the above-mentioned equipment are implemented, thus enabling the ACU not only to plan actions but also to execute them (Meitinger and Schulte 2006a).
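How action alternatives with pre-conditions and effects can drive planning may be sketched as follows. This is only an illustration under simplifying assumptions: the class `ActionAlternative`, the set-of-facts state representation, the fact names and the greedy forward search are hypothetical and are not taken from the implementation described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionAlternative:
    """An action alternative described by its pre-conditions and effects (hypothetical)."""
    name: str
    preconditions: frozenset
    effects: frozenset

    def applicable(self, state):
        return self.preconditions <= state

    def apply(self, state):
        return state | self.effects


GENERATE_FLIGHT_PLAN = ActionAlternative(
    "generate flight plan",
    preconditions=frozenset({"destination known"}),
    effects=frozenset({"flight plan available"}),
)
FLY_TO_LOCATION = ActionAlternative(
    "fly to a location",
    preconditions=frozenset({"flight plan available"}),
    effects=frozenset({"at location"}),
)


def forward_plan(state, goal, alternatives):
    """Greedy forward chaining: apply any applicable action that adds a new fact."""
    state, steps = frozenset(state), []
    progress = True
    while goal not in state and progress:
        progress = False
        for action in alternatives:
            if action.applicable(state) and not action.effects <= state:
                state = action.apply(state)
                steps.append(action.name)
                progress = True
                break
    return steps if goal in state else None
```

With the initial fact ‘destination known’ and the goal ‘at location’, `forward_plan` first selects ‘generate flight plan’ and then ‘fly to a location’, because the effect of the former satisfies the pre-condition of the latter.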

5.2.2 Safe flight

Basic flight safety is ensured by the implementation of desires concerning traffic collision avoidance and of environment models enabling the ACU to comply with these desires.

As indicated in Fig. 9, instances of the environment model ‘vehicle’ are created for both the own vehicle and another vehicle and updated according to sensor data. Their relative position, distance and flight altitudes are considered. As soon as a collision-prone situation is recognised, e.g. both vehicles flying towards each other at the same altitude and at a small distance, the model ‘danger of collision’ is instantiated, leading to an activation of the desire ‘avoid collision’ as well as the selection of the action alternative ‘evade’ and appropriate instruction models described in the previous section.
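The recognition of a collision-prone situation can be sketched in a few lines of Python. The flat two-dimensional geometry, the threshold values `max_distance` and `max_altitude_diff`, and the use of a negative range rate as the criterion for ‘flying towards each other’ are assumptions chosen for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass
class Vehicle:
    """Instance of the environment model 'vehicle' (hypothetical attributes)."""
    x: float         # position east [m]
    y: float         # position north [m]
    altitude: float  # flight altitude [m]
    vx: float        # velocity east [m/s]
    vy: float        # velocity north [m/s]


def danger_of_collision(own, other, max_distance=5000.0, max_altitude_diff=150.0):
    """True if both vehicles are close, at a similar altitude and converging."""
    dx, dy = other.x - own.x, other.y - own.y
    close = math.hypot(dx, dy) < max_distance
    same_level = abs(other.altitude - own.altitude) < max_altitude_diff
    # Negative range rate: relative position and relative velocity point in opposite directions.
    converging = dx * (other.vx - own.vx) + dy * (other.vy - own.vy) < 0
    return close and same_level and converging


def select_action(own, other):
    """Returns the activated desires and the selected action alternative."""
    if danger_of_collision(own, other):
        return ["avoid collision"], "evade"
    return [], None
```

Only if all three conditions hold is ‘danger of collision’ recognised, which in turn activates the desire ‘avoid collision’ and selects ‘evade’.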

5.2.3 Single vehicle mission accomplishment

The package ‘single vehicle mission accomplishment’ is supposed to provide the basis for group behaviours and implements the capabilities necessary for semi-autonomous mission accomplishment in a threatened area, namely:

- communication with command and control, i.e. reception of a mission order and acceptance or refusal based on individual resources, and

- weapon deployment and threat avoidance as basic tactical manoeuvres for an attack of the target, attack of SAM-sites and avoidance of threats.

Central within this package are the concepts of an ‘actor’ and its ‘commitments’. In case of single vehicle mission accomplishment, there is just one instance of an actor representing the ACU itself with its commitments, resources etc. The actor enters into a commitment, if a mission is requested and if there are enough resources. It is dropped again, if the mission is accomplished or if it has become unachievable due to an unexpected change of the situation.

Communication with command and control is based on the FIPA ACL as described earlier. To get a mission order, a dialogue based on a request interaction is used. For the dialogue representation, environment models referring to all dialogues (‘dialog’), specific dialogues (‘dialog-request’), dialogue states and dialogue transitions as well as actors and dialogue contents are necessary. These are instantiated as often as necessary and linked to each other. An instance of ‘dialog-request’, for example, is linked to an actor (‘command-and-control’) as initiator, another actor (‘actor-self’) as participant and an instance of the requested mission order as subject of the dialogue (cf. Fig. 10).
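A linked dialogue instance with its states and transitions might be represented as in the following sketch. The class `DialogRequest`, its attribute names and the transition table are hypothetical; the table only loosely follows the message sequence of the FIPA request interaction protocol and is not the representation used in the actual implementation.

```python
from dataclasses import dataclass

# Messages allowed after each dialogue state (simplified, assumed for illustration).
REQUEST_TRANSITIONS = {
    "start": {"request"},
    "request": {"agree", "refuse"},
    "agree": {"inform-done", "inform-result", "failure"},
}


@dataclass
class DialogRequest:
    """Instance of a request dialogue linked to its actors and its subject."""
    initiator: str
    participant: str
    subject: str
    state: str = "start"

    def next_messages(self):
        """Messages that may follow the current state; empty once the dialogue has ended."""
        return REQUEST_TRANSITIONS.get(self.state, set())

    def advance(self, message):
        if message not in self.next_messages():
            raise ValueError(f"'{message}' is not allowed in state '{self.state}'")
        self.state = message

    def next_sender(self):
        """Actor responsible for the next step; None if the dialogue has ended."""
        if not self.next_messages():
            return None
        return self.initiator if self.state == "start" else self.participant
```

For a dialogue created as `DialogRequest("command-and-control", "actor-self", "mission order")`, `next_sender()` returns ‘actor-self’ once the request has been received, i.e. the ACU itself is responsible for the next step of the dialogue.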

An instance of the desire ‘continue dialogue’ is created as a goal if the ACU itself is responsible for the execution of the next step of a dialogue. The only possible kind of action to achieve this goal is to send a message. As there are usually several kinds of message that can be sent (e.g. agree or refuse), several instances of ‘send message’ will be created and decision knowledge will be used to select one option. In case the options are to accept or refuse a mission order, the available resources of the actor are compared to the required resources. If enough resources are available, the mission will be accepted; otherwise it will be refused. For this decision, the mission has to be comprehended by the ACU. Therefore, several environment models are designed. Whereas an instance of a model ‘mission order’ represents the content of the respective message received from command and control, all mission tasks such as ‘destroy target’ or ‘destroy SAM-site’ are represented as instances of appropriate environment models and linked to the mission order. They have to be completed in order to accomplish the mission. For these tasks, the required resources are estimated. In addition, concepts of a ‘target’ and a ‘SAM-site’ are represented as well as an ‘attack location’, at which the UCAV has to be before it can attack an object. For the execution of ‘send message’, knowledge about the message structure is necessary, which is stored in an instruction model.
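The decision between accepting and refusing a mission order, together with the handling of the resulting commitment, can be sketched as follows. The classes `MissionOrder` and `Actor`, the resource names and the dictionary-based resource comparison are assumptions for illustration; the source does not specify how resources are represented or estimated.

```python
from dataclasses import dataclass, field


@dataclass
class MissionOrder:
    name: str
    required_resources: dict  # estimated demand, e.g. {"fuel": 40, "HARM": 1}


@dataclass
class Actor:
    name: str
    resources: dict
    commitments: list = field(default_factory=list)

    def has_resources_for(self, order):
        """Compare available resources with the resources required by the order."""
        return all(self.resources.get(kind, 0) >= amount
                   for kind, amount in order.required_resources.items())

    def answer_request(self, order):
        """Select between the 'agree' and 'refuse' options of 'send message'."""
        if self.has_resources_for(order):
            self.commitments.append(order.name)
            return "agree"
        return "refuse"

    def update_commitment(self, order, accomplished=False, achievable=True):
        """Drop the commitment once the mission is accomplished or unachievable."""
        if order.name in self.commitments and (accomplished or not achievable):
            self.commitments.remove(order.name)
```

An actor with sufficient resources answers with ‘agree’ and enters into the commitment; calling `update_commitment` with `accomplished=True` or `achievable=False` drops it again.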

With respect to actual mission accomplishment, the desire ‘comply with commitment’ is essential, an instance of which is created in case a commitment is the next one to be accomplished. Depending on the actual commitment, the appropriate sub-ordinate goals such as ‘be at attack location’ and action alternatives such as ‘fly to location’ mainly being part of the package ‘use of operation-supporting means’ are activated and executed.

Threat avoidance is implemented by the desire ‘avoid threat’. This is activated in case there is a threat by a SAM-site, for example if a flight plan passes through a threatened area. In order to be able to decide whether the respective SAM-site should be destroyed or whether re-planning is also an option, flight plans are attributed not only with the destination, but also with the SAM-sites which were considered while planning.

A description of simulation results obtained with an implementation of parts of this package is given in Meitinger and Schulte (2006a).
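Why a flight plan carries the list of SAM-sites considered during planning can be illustrated by the following sketch. The decision rule used here is an assumed reading and is not stated in this form in the source: if the threatening site was unknown to the planner, re-planning is offered as an option; if it was already considered and the route still crosses its threat area, only its destruction remains. The circular threat area and all names are likewise hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SamSite:
    name: str
    x: float
    y: float
    threat_radius: float


@dataclass
class FlightPlan:
    destination: str
    waypoints: list                                      # [(x, y), ...]
    considered_sites: set = field(default_factory=set)   # site names known while planning


def _distance_to_segment(p, a, b):
    """Shortest distance from point p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    abx, aby = bx - ax, by - ay
    length_sq = abx * abx + aby * aby
    if length_sq == 0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, ((px - ax) * abx + (py - ay) * aby) / length_sq))
    return math.hypot(px - (ax + t * abx), py - (ay + t * aby))


def threatens(site, plan):
    """True if any leg of the flight plan passes through the threat area of the site."""
    legs = zip(plan.waypoints, plan.waypoints[1:])
    return any(_distance_to_segment((site.x, site.y), a, b) < site.threat_radius
               for a, b in legs)


def options_for(site, plan):
    """Options left open by the desire 'avoid threat' (assumed decision rule)."""
    if not threatens(site, plan):
        return []
    if site.name in plan.considered_sites:
        # The planner already knew this site and still could not route around it.
        return ["destroy SAM-site"]
    return ["re-plan", "destroy SAM-site"]
```

A pop-up threat that was not part of `considered_sites` therefore yields both options, whereas a site that the planner had already taken into account leaves destruction as the only option.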

5.2.4 Co-operative mission accomplishment

The package ‘co-operative mission accomplishment’ extends the previous package by enabling an ACU not only to accomplish tasks on single-mission level, but in co-operation with others.

Both co-operation and co-ordination, as discussed earlier, are addressed within this package. Conventions and social conventions are considered by the implementation of the behaviour of the models concerning the appropriate desires and action alternatives. For instance, given the situation that there is a task belonging to a mission to which the team is committed, but no team member is committed to actually performing this task, a desire ‘ensure task coverage’ becomes an active goal, leading to a proposal to commit to the task or a request. Likewise, if two team members are committed to the same task in unnecessary redundancy, a desire ‘avoid redundant task completion’ is instantiated, leading to either a proposal or a request of commitment cancellation. Furthermore, if own commitments change, the desire to keep team members informed becomes an active goal, bringing forth an inform dialogue.
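The activation of the two desires mentioned above can be sketched as a simple check of the team’s commitments against the mission tasks. The function `team_desires` and the representation of commitments as a mapping from team member to task names are assumptions made for illustration.

```python
def team_desires(mission_tasks, commitments):
    """Derive active team goals from the tasks of the mission and the known commitments.

    commitments maps a team member's name to the set of task names it is committed to.
    Returns a list of (desire, task, committed members) tuples.
    """
    goals = []
    for task in mission_tasks:
        committed = sorted(member for member, tasks in commitments.items() if task in tasks)
        if not committed:
            # Nobody performs the task: propose to commit or request a commitment.
            goals.append(("ensure task coverage", task, []))
        elif len(committed) > 1:
            # Unnecessary redundancy: propose or request a commitment cancellation.
            goals.append(("avoid redundant task completion", task, committed))
    return goals
```

For a mission with the tasks ‘destroy target’, ‘destroy SAM-site 1’ and ‘destroy SAM-site 2’, in which two UCAVs are both committed to ‘destroy SAM-site 1’ and nobody to ‘destroy SAM-site 2’, the function yields one goal of each kind.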

The desires of this package are detailed in Fig. 11. Starting from the top-level abstract desires ‘form a team’ and ‘achieve common objective’ subordinate ones are deduced. To form a team includes (1) to know team members with respect to resources, capabilities, commitments, and opportunities, (2) to support them by continuing dialogues and keeping them informed about relevant situation changes such as the occurrence of a pop-up threat, and (3) to balance the work among the team members. In order to achieve the common objective, all tasks related to it have to be completed, but redundant task completion should be avoided. Moreover, each team member should comply with its commitments.

In order to achieve these goals, several action alternatives are available (cf. Fig. 12), the execution of which leads to the initiation of dialogues in most cases. Besides the ‘request’ dialogue already known from single vehicle mission accomplishment, which can be used to request the completion or cancellation of a task, a ‘propose’, an ‘inform’, a ‘query’, and a ‘subscribe’ dialogue are introduced. ‘propose’ dialogues can be used to suggest task completion or task cancellation to others, ‘inform’ dialogues usually consist of an ‘inform’ message containing information for others, and ‘query’ and ‘subscribe’ dialogues make it possible to ask others for information. In addition to starting dialogues, the actor can commit itself to perform a task or drop an existing commitment.

As the representation of the new dialogues is in accordance with the representation discussed in the previous section, no new desires, action alternatives, and instruction models are needed for the continuation of dialogues, which are initiated by other actors. Additional knowledge is needed for the selection of options, when there are several possibilities to answer a message (e.g. accept or refuse a request to cancel task accomplishment). Moreover, instruction models are needed for the creation of new dialogues, which are implemented for all dialogues mentioned above.

In order to be able to activate appropriate goals and select actions, a variety of environment models is necessary. Within this package, the focus is on the team with its team members and their resources, capabilities, commitments, and opportunities, where each team member is an instance of an actor. Moreover, the common objective is represented, which corresponds to the mission assignment of a single actor. The results obtained with an implementation of parts of this package are described in Meitinger and Schulte (2006a).

5.3 Experimental facilities

Upcoming activities will include field tests of the presented work on the UAV demonstrator platform of the Universität der Bundeswehr München, currently under construction (see Fig. 13) (Ertl et al. 2005; Kriegel et al. 2007; Höse et al. 2007). Further research in the fields of adaptive automation in military helicopter guidance and manned–unmanned teaming for airborne army missions will make use of the capability of machine–machine co-operation and moreover involve technologies of cognitive automation in the context of human–machine co-agency.

6 Summary and future prospects

Starting from the slightly ironic question regarding the self-conception of human factors considerations in the guidance of so-called ‘autonomous’ systems, this contribution has clarified the clear necessity of ergonomics research with regard to highly automated systems. Based upon the human factors engineering framework of the so-called work system, clear definitions and distinctions of the terms autonomous system and semi-autonomous system have been elaborated. According to the given definition, a work system consists of an operating force, usually a human or human team, and the operation-supporting means, i.e. the technology used for work. An autonomous system would emerge if the human function in the work system were completely replaced by a technological artefact, including the ability to self-define its work objective. An artificial system has to do without this latter capability and will, therefore, be part of the operation-supporting means under supervision of the human operator. In order to counteract obvious problems with human supervisory control of highly automated complex systems, the approach of co-operative control has been suggested by this contribution. In this case, the respective automation, i.e. co-operative automation, which we call an assistant system, shall become part of the operating force, building a team with the human operator. Co-operative capabilities on the side of the operation-supporting means working under supervision of the operating force have been discussed as well.

The question is how to build such co-operative automation. The answer resulting from the work of our research group is the approach of cognitive automation. Cognitive automation is based upon the idea of mimicking the rational behaviour aspects of human cognition, i.e. the cognitive process, on the machine side. An approach to engineering such an artificial cognitive system has been discussed. In order to enable it to perform as a team member, the required capabilities have been specified. These include the capability to perform the relevant mission tasks, supplemented by the capability to co-operate and to co-ordinate on task level.

Currently, two major streams of co-operative automation are under consideration at the Universität der Bundeswehr München: the intelligent pilot assistant systems and the multi-UAV guidance systems. Whereas the requirements for human–machine co-operation in assistant systems have been studied thoroughly in several recent and current projects (references have been given in the paper), this contribution has focused more on the systematic engineering of machine–machine co-operation in the domain of uninhabited vehicles.

Although currently focused mainly on the machine aspects, this work provides very interesting approaches for the modelling of human–machine co-operation by consistently providing models of goals for co-operation, of co-ordination techniques, and of dialogue-management-related knowledge. Future work will join the different aspects of human–machine and machine–machine cognitive co-operation in manned–unmanned multi-agent scenarios. In the field of operator assistance, the aspect of adaptive automation will be considered. Therefore, the modelling of human mental resources and workload will be a prerequisite for the assistant system’s decision on when to intervene and how to do it. In the field of supervisory control of UAVs, the question of how to reduce the operator-to-vehicle ratio will be predominant. Cognitive and co-operative automation offers an approach to tackle many forthcoming questions in this field.

References

Austin JL (1962) How to do things with words. Oxford University Press, Oxford

Billings CE (1997) Aviation automation: the search for a human-centered approach. Lawrence Erlbaum Associates, Mahwah

Ertl C, Kriegel M, Schulte A (2005) Experimental set-up for the development of autonomous capabilities and operator assistance in UAV guidance. In: Proceedings of 6th conference on engineering psychology and cognitive ergonomics, in conjunction with HCI international, Las Vegas, USA, 22–27 July 2005

Ertl C, Schulte A (2004) System design concept for co-operative and autonomous mission accomplishment of UAVs. In: Deutscher Luft- und Raumfahrtkongress 2004, Dresden, 20–23 September 2004

FIPA ACL Message Structure Specification. Document Number SC00061G. http://www.fipa.org/specs/fipa00061/SC00061G.pdf. Accessed 7 Dec 2005

FIPA ACL Communicative Act Library Specification. Document Number SC00037J. http://www.fipa.org/specs/fipa00037/SC00037J.pdf. Accessed 7 Dec 2005

FIPA Request Interaction Protocol Specification. Document Number SC00026H. http://www.fipa.org/specs/fipa00026/SC00026H.pdf. Accessed 7 Dec 2005

Frey A, Lenz A, Putzer H, Walsdorf A, Onken R (2001) In-flight evaluation of CAMA—the crew assistant military aircraft. In: Deutscher Luft- und Raumfahrtkongress, Hamburg, 17–20 September 2001

Gerlach M, Onken R (1993) A dialogue manager as interface between pilots and a pilot assistant system. In: Salvendy, Smith (eds) Proceedings of the 5th international conference on human computer interaction HCII’93, Orlando

Höse D, Kriegel M, Schulte A (2007) Development of a microcontroller based sensor acquisition system for uninhabited aerial vehicles. In: First CEAS European air and space conference, Berlin, 10–13 September 2007

Jennings NR (1996) Coordination techniques for distributed artificial intelligence. In: O’Hare GMP, Jennings NR (eds) Foundations of distributed artificial intelligence. Wiley, New York, pp 187–210

Kriegel M, Meitinger C, Schulte A (2007) Operator assistance and semi-autonomous functions as key elements of future systems for multiple UAV guidance. In: 7th Conference on engineering psychology and cognitive ergonomics, in conjunction with HCI international, Beijing, 22–27 July 2007

Malone TW, Crowston K (1994) The interdisciplinary study of coordination. ACM Comput Surv 26(1):87–119

von Martial F (1992) Coordinating plans of autonomous agents. In: Lecture notes in artificial intelligence 610. Springer, Berlin

Meitinger C, Schulte A (2006a) Onboard artificial cognition as basis for autonomous UAV co-operation. In: Platts JT (ed) Autonomy in UAVs. Final report of the GARTEUR Flight Mechanics Action Group FM-AG-14

Meitinger C, Schulte A (2006b) Cognitive machine co-operation as basis for guidance of multiple UAVs. NATO RTO HFM symposium on human factors of uninhabited military vehicles as force multipliers, Biarritz, 9–11 October 2006

Onken R (1994) Basic requirements concerning man–machine interactions in combat aircraft. In: Workshop on human factors/future combat aircraft, Ottobrunn, 4–6 October 1994

Onken R (2002) Cognitive cooperation for the sake of the human–machine team effectiveness. In: RTO-meeting procedures MP-088, HFM-084: the role of humans in intelligent and automated systems, Warsaw, 7–9 October 2002

Onken R, Schulte A. Dual-mode cognitive design in vehicle guidance and control human–machine systems. Springer, Heidelberg (in preparation)

Prévôt T, Gerlach M, Ruckdeschel W, Wittig T, Onken R (1995) Evaluation of intelligent on-board pilot assistance in in-flight field trials. In: 6th IFAC/IFIP/IFORS/IEA Symposium on analysis, design and evaluation of man–machine systems. Massachusetts Institute of Technology, Cambridge

Putzer H, Onken R (2003) COSA—a generic cognitive system architecture based on a cognitive model of human behaviour. Int J Cognit Technol Work 5:140–151

Rasmussen J (1983) Skills, rules, and knowledge; signals, signs, and symbols, and other distinctions in human performance models. IEEE Trans Syst Man Cybern SMC-13(3):257–266

REFA (1984) Methodenlehre des Arbeitsstudiums, Teil 1–3. 7. Auflage. Verband für Arbeitsstudien und Betriebsorganisation. Carl Hanser Verlag, München

Ruckdeschel W, Onken R (1994) Modelling of pilot behaviour using petri nets. In: Valette R (ed) Application and theory of petri nets, LNCS Bd. 815. Springer, Berlin

Schulte A, Stütz P (1998) Evaluation of the crew assistant military aircraft (CAMA) in simulator trials. In: NATO research and technology agency, system concepts and integration panel. Symposium on sensor data fusion and integration of human element. Ottawa, 14–17 September 1998

Schulte A (2006) Manned–unmanned missions: chance or challenge? In: The Journal of the JAPCC (Joint Air Power Competence Centre), Kalkar, edn 3

Searle JR (1969) Speech acts: an essay in the philosophy of language. Cambridge University Press, Cambridge

Sheridan TB (1987) Supervisory control. In: Salvendy G (ed) Handbook of human factors. Wiley, New York

Strohal M, Onken R (1998) Intent and error recognition as part of the knowledge-based cockpit assistant. In: Proceedings of the SPIE ‘98 conference. The International Society for Optical Engineering, Orlando

Stütz P, Onken R (2001) Adaptive pilot modelling for cockpit crew assistance: concept, realisation and results. In: The cognitive work process: automation and interaction. 8th European conference on cognitive science applications in process control, CSAPC’01. Munich, 24–26 September 2001

Stütz P, Schulte A (2000) Evaluation of the cockpit assistant military aircraft CAMA in flight trials. In: Third international conference on engineering psychology and cognitive ergonomics, Edinburgh, 25–27 October 2000

Wooldridge M (2002) An introduction to multi agent systems. Wiley, Chichester

Walsdorf A, Onken R, Eibl H, Helmke H, Suikat R, Schulte A (1997) The crew assistant military aircraft (CAMA). In: The human–electronic crew: the right stuff? 4th Joint GAF/RAF/USAF workshop on human–computer teamwork, Kreuth

Winograd T, Flores F (1986) Understanding computers and cognition. Ablex Publishing Corporation, Norwood

Wittig T, Onken R (1993) Inferring pilot intent and error as a basis for electronic crew assistance. In: Fifth international conference on human–computer interaction (HCI International 93), Orlando


Schulte, A., Meitinger, C. & Onken, R. Human factors in the guidance of uninhabited vehicles: oxymoron or tautology?. Cogn Tech Work 11, 71–86 (2009). https://doi.org/10.1007/s10111-008-0123-2
