Abstract

Modern developments in the use of information technology within command and control allow unprecedented scope for flexibility in the way teams deal with tasks. These developments, together with the increased recognition of the importance of knowledge management within teams, present difficulties for the analyst in evaluating the impact of changes to task composition or team membership. In this paper we present an approach to this problem that represents team behaviour in terms of three linked networks (task, social network structure and knowledge) within the integrative WESTT software tool. In addition, by automating analyses of workload and error based on the same data that generate the networks, WESTT allows the user to engage in rapid and iterative “analytical prototyping”. For purposes of illustration, we present an example of the use of this technique with a simple tactical vignette.


1 Introduction

The field of command and control (C2) is undergoing significant changes as a result of the gradual adoption of modern information and networking technologies. Indeed, it is argued that the advent of network enabled capability (NEC), as it is termed in the UK, will transform the way in which UK armed forces operate: “It offers a new way of not just ‘doing things better’ but of ‘doing better things’” (MoD 2005). It is envisaged that NEC, by linking sensors, effectors, decision makers and other individuals, will, through the rapid and timely sharing of information, create a widespread shared understanding of a situation that will in turn allow swifter actions, based on better-informed decisions, to be taken (MoD 2005). Another anticipated benefit of NEC is greater flexibility and agility within teams, resulting from more flexible information sharing and communications, with the aim of creating “…highly responsive, well integrated and flexible joint force elements that have assured access to and unprecedented freedom of manoeuvre within the entire battlespace.” (MoD 2004). Whilst NEC will be based on the roll-out of interconnected technologies, the locus of its effect will be upon human cognition and decision making, enabling better actions to be taken (see Houghton and Baber 2005).

The situation created by the adoption of NEC technology poses considerable challenges for the analyst hoping to understand and evaluate the sociotechnical systems it produces. First, the emphasis on agility and flexibility suggests that there are many possible system designs we might wish to consider at any one time; therefore a system allowing rapid modelling, and then reconfiguration of that model, is required. This general approach is termed analytical prototyping and is based on the concept that initial system descriptions can be quantitatively explored in order to evaluate the potential benefits of modification (Baber and Stanton 1999, 2001). Second, the importance attached to the possession and fluid sharing of information prompts us to find a way of usefully representing and understanding the distribution and use of information within the sociotechnical systems that NEC will create. Our proposed solution takes the form of linking what we call “knowledge objects” to individuals and tasks to produce a systems-level rendering of knowledge use. Knowledge objects are discussed in more detail later in this paper, but in essence they represent forms of knowledge that must in some way be accessed or owned for a task to be completed successfully. Third, the integration of sensors, effectors and humans suggests the need for a systems-level depiction of NEC that considers the interactions of both humans and technological items. Our approach to this issue is to represent all entities as “agents” within the system that have essentially the same characteristics whether human or non-human (that is, they can possess and share knowledge objects, can be said to carry out certain tasks or operations, and can form nodes in social networks linked to other agents through communications). By adopting this approach one can investigate, on a like-for-like basis, the impact of augmenting or even replacing elements of teams with new technology, and how these changes alter the system as a whole.

The WESTT (workload, error, situational awareness, time and teamwork) software tool has a range of possible applications that are not necessarily limited to the military sphere. Specific uses include the analysis of field data and the evaluation of current practice; comparison between actual performance and design/doctrine; evaluation of changes to current practice through modelling; and evaluation of performance in training and virtual environments. Although our approach was initially motivated by military operations, we believe the WESTT tool can be used widely in the study of any organization in which C2 and complex team/collaborative performance are important elements (e.g., emergency services operations and disaster management). WESTT is an integrative approach, bringing together many metrics and modes of representing team activity, but the analyst is by no means required to use all of its elements.

1.1 Underlying approach

Key to the design of WESTT is the contention that, with the advent of NEC and the increased acknowledgment of the importance of knowledge management in military operations, fully understanding C2 performance requires an appreciation of what happened (or might happen) in a given situation or scenario from at least three distinct but closely interrelated perspectives. First, we must represent the tasks being performed by the team over time; second, the nature of the social network the team forms must be made clear; and third, the knowledge that is being used and exchanged must be represented. Thus we suggest that C2 and related team processes are best understood as the product of tightly coupled network structures, wherein the assessment of one network requires knowledge of the structure of the others and of the bindings across to them. This interrelation is depicted in Fig. 1.

Whilst WESTT is ostensibly a software tool, it should also be noted that it implies a particular methodological approach to the study of team and C2 behaviour and a relatively novel theoretical stance on the nature of human-centred systems (see also Stanton et al. 2007). An implication of this view is that changing an element of one network has repercussions in the other two. For example, adjusting manning to meet performance targets in the task network will have repercussions both for the social network within the team and for the distribution and flow of knowledge within it, and both of these will in turn have further repercussions on task performance itself. These changes may, of course, be either beneficial or harmful to overall performance. WESTT allows analysts to investigate these complexities so as to prevent unforeseen damage, both in terms of network topography (e.g., creating bottlenecks in information flow) and in terms of metrics of predicted error, workload and task duration. More positively, this approach also allows analysts to produce original and non-obvious solutions to problems. For example, what appears to be a manning problem may actually be a symptom of an underlying knowledge distribution problem, which in turn might be solved by altering communications networks without changing staffing levels.

The WESTT network-centric method of representation is consistent with claims that a networked force is not one that merely employs a physical communications network, but one whose whole operation should, from a conceptual perspective, be understood in terms of the complex web of dynamic relationships between entities (e.g., Atkinson and Moffat 2005). The approach taken in WESTT can also be seen as meeting the requirements for the analysis of organisational factors in the NATO Code of Best Practice for C2 Assessment (NATO 2002): “Organizational factors must be addressed as part of most C2 analyses. Organizational factors include structural (e.g. number of echelons, span of control), functional (e.g. distribution of functions, information, and authority), and capacity (e.g. personnel, communications) factors” (p. 17). Structural factors can be addressed through social networks and social network metrics such as centrality, which, in a topological sense at least, measures how well a node may potentially exert influence as a function of its distance from other nodes. Functional factors are described through a combination of all three networks: specifically, by relating the social network to the task network to show the distribution of functions, and by relating the social network to the knowledge network to show the distribution of information. Capacity factors can be assessed through measures of workload, through social networks (which depict the varying weight of communications traffic and indicate the busiest nodes through metrics such as Sociometric Status) and through error metrics.

1.2 The Lloyd (2004) tactical vignette

In the next section of this paper we describe in some depth the various modules that make up the WESTT software package. In doing so, we refer to an example of how WESTT can be used to support a Use case investigation of a hypothetical military action described by Lloyd (2004). The scenario describes a brigade-level action in urban terrain in which Brigade HQ plays the central command and control role, passing reconnaissance from special forces and an unmanned aerial vehicle (UAV) down to the Urban Combat Team and communicating with a convoy. We used WESTT to evaluate a change in network architecture suggested by Lloyd (2004) in which, rather than information being routed via Brigade HQ, a direct data feed is added between the Urban Combat Team and the two reconnaissance assets (special forces and the UAV), in line with NEC-style thinking about providing information directly to “on the ground” decision makers and effectors. WESTT allows rapid construction, assessment and modification of designs: the figures and data reported here took no more than 20 min of keyboard time to produce. It should be noted, however, that the time needed to come to an understanding of a scenario can vary greatly, depending on the analyst’s expertise in the domain being studied, access to subject matter experts and the complexity of what is being studied. Where data required for a WESTT analysis were not present in the original text, we have taken the liberty of inventing some data points informed by the text, as we would expect an analyst to employ professional judgment about a situation and to seek the advice of subject matter experts where required.

1.3 The main data table

At the heart of WESTT, in terms of both the user interface and the underlying method of analysis, is the main data table. As the name implies, the data table simply displays an ordered list of events over time, together with the agents (human or technological) involved and relevant details (for example, labels breaking the action down into phases, and other descriptive material, can be added). In essence the data table is very similar to a spreadsheet and has similar editing capabilities; indeed, data can readily be imported into WESTT from Microsoft Excel. In addition to the familiar spreadsheet functions, one can also ask WESTT to check that all the observations in the table are in chronological order, to prevent errors in analysis. A screenshot of the main data table is shown in Fig. 2.
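To make the idea of the data table concrete, the sketch below models each row as a record and performs the chronological-order check described above. It is a minimal illustration built on our own assumptions. The field names (`timestamp`, `sender`, `receiver`, `operation`, `phase`) and the helper `out_of_order` are hypothetical and do not describe WESTT's actual file format or internals.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Event:
    """One row of a WESTT-style data table (hypothetical field names)."""
    timestamp: time     # when the event occurred
    sender: str         # agent performing the action (human or technological)
    receiver: str       # agent on the receiving end, or "" for a solo action
    operation: str      # e.g. "transmit", "inspect", "decide"
    phase: str = ""     # optional label breaking the action into phases


def out_of_order(events: list[Event]) -> list[int]:
    """Return the indices of rows timed earlier than the row immediately above them."""
    return [i for i in range(1, len(events))
            if events[i].timestamp < events[i - 1].timestamp]


# Illustrative rows (invented): the third row was entered out of sequence.
table = [
    Event(time(10, 0), "UAV", "Brigade HQ", "transmit", "recce"),
    Event(time(10, 2), "Brigade HQ", "Urban Combat Team", "transmit", "recce"),
    Event(time(10, 1), "Urban Combat Team", "", "decide", "recce"),
]
flagged = out_of_order(table)  # [2]
```

Flagging rows, rather than silently re-sorting them, leaves the analyst to decide whether the timestamp or the row position is wrong.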

1.4 Representation of task structure: operation sequence diagram and unified modelling language (UML) renderings

In terms of the tripartite model depicted in Fig. 1, we consider the following forms of representing actions over time to fulfil the role of task networks. On the basis of the information in the main data table, WESTT will automatically draw and label an operation sequence diagram (OSD). The OSD has been widely used in the ergonomics community since the 1950s and provides a means of representing the activity of agents within a system over the course of a mission. As Meister (1985) pointed out, “The OSD can be drawn at a system or task level and it can be utilized at any time in the system development cycle provided the necessary information is available. It can aid the analyst in examining the behavioural implementation of design alternatives by permitting the comparison of actions involved in these design alternatives.” (p. 67). In an OSD, standard symbols indicate types of activity (e.g., transmit, inspect, decide). The order of steps in a task begins at the top of the page and moves down (i.e., time runs from top to bottom). Each agent has its own column on the diagram. Looking down the page, we can therefore see the tasks that an agent undertakes over time in relation to the actions of other agents, which helps to identify critical events and instances of task overloading. However, the use of the OSD has hitherto been hampered by the difficulty of creating it: “…the task of drawing a complex OSD can be extremely cumbersome and expensive.” (Meister 1985, p. 68). Various attempts have been made to automate the drawing of OSDs (particularly during the 1980s), although there are few, if any, commercial products currently available that do this directly from source data. WESTT therefore fills this gap (see Fig. 3).
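As a rough illustration of the layout rules just described, the following sketch renders rows as a plain-text grid. Each agent gets a column, time runs from top to bottom and each activity type has its own symbol. The single-character symbols are placeholders of our own, not the standard OSD symbol set. `render_osd` is likewise hypothetical and is not WESTT's drawing routine.

```python
# Placeholder one-character stand-ins for standard OSD activity symbols (our assumption).
SYMBOLS = {"transmit": ">", "receive": "<", "inspect": "o", "decide": "D"}


def render_osd(rows, agents, width=20):
    """Render (timestamp, agent, operation, label) rows as a text OSD.

    Each agent gets a fixed-width column; rows are printed in the order given,
    so the caller should pass them in chronological order (top = earliest).
    """
    lines = ["time  " + "".join(a[:width - 1].ljust(width) for a in agents)]
    for stamp, agent, op, label in rows:
        cells = [" " * width] * len(agents)
        text = f"{SYMBOLS.get(op, '?')} {label}"
        cells[agents.index(agent)] = text[:width - 1].ljust(width)
        lines.append(f"{stamp:<6}" + "".join(cells))
    return "\n".join(lines)


agents = ["Brigade HQ", "UAV", "Urban Combat Team"]
rows = [
    ("10:00", "UAV", "transmit", "imagery"),
    ("10:00", "Brigade HQ", "receive", "imagery"),
    ("10:02", "Brigade HQ", "decide", "task UCT"),
]
print(render_osd(rows, agents))
```

A real OSD would also draw links between matching transmit and receive events. The sketch omits them to keep the column-per-agent principle visible.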

Operation sequence diagram for the Lloyd (2004) urban operations scenario

In addition to the OSD, WESTT can automatically generate two unified modelling language (UML) diagrams: a sequence diagram and a Use case diagram. Whilst the OSD may be familiar to human factors and operational research experts, in engineering (and particularly software engineering) UML is popular and widely understood. We added these UML options to make the tool useful to as wide an audience as possible. They should also help communication between human factors analysts and the engineers who produce C2 technology. The sequence diagram (see Fig. 4) is very similar to the OSD in that it portrays much the same information (actions by agent over time), albeit with a slightly different layout and symbology. The Use case diagram displays the associations between individual actors and the tasks (termed Use cases here) in which they are involved. By offering a range of representations of the data, we allow analysts to pick the one most relevant to their needs at the time. For example, if one is interested in which agents are collaborating on particular tasks but has no interest in the timings involved, the Use case diagram may be a more suitable rendering of the information than the OSD or the UML sequence diagram.
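The Use case view discards timing and keeps only who is involved in what. A minimal sketch of that reduction follows. It assumes the data table supplies rows of (timestamp, agent, task). The function name is hypothetical and the rows are invented.

```python
from collections import defaultdict


def use_case_associations(rows):
    """Map each task (Use case) to the sorted agents involved in it, ignoring timing."""
    assoc = defaultdict(set)
    for _stamp, agent, task in rows:   # the timestamp is deliberately discarded
        assoc[task].add(agent)
    return {task: sorted(agents) for task, agents in assoc.items()}


rows = [
    ("10:00", "UAV", "provide reconnaissance"),
    ("10:00", "Brigade HQ", "provide reconnaissance"),
    ("10:02", "Brigade HQ", "task Urban Combat Team"),
    ("10:03", "Urban Combat Team", "task Urban Combat Team"),
]
links = use_case_associations(rows)
```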

UML sequence diagrams. The left-hand pane depicts a version of the scenario without linkages between Special Forces and UAV reconnaissance and the Urban Combat Team, the right-hand pane shows the scenario with those links, and the extra events that would necessarily occur as a result. Note that these differences are clearly apparent at a glance

1.5 Social network analysis

Social network analysis is based on the simple intuition that the structure of relationships between entities plays a determining role in the performance or action of the social network and of the entities within it. It is worth noting from the outset that social network theory is not merely about drawing diagrams, useful as these are; it is also a branch of mathematical analysis. Indeed, modern social network analysis techniques would not exist had graph theory not undergone rapid development as a mathematical field in the 1970s. Today, social network theory is used across myriad disciplines as a tool to investigate organizations, decision making, the spread of information, the spread of disease, mental health support systems, anthropology and child development, among others. Most recently there has been a great deal of enthusiasm for using the techniques of social network analysis (SNA hereon) to study the internet. This work covers both the connections between web pages (e.g., Google’s PageRank technology) and those between internet users (e.g., see Adamic et al. 2003). For studying the architectures encountered in increasingly complex network enabled command and control networks (both pre-designed and formed ad hoc), SNA would appear to be the logical choice of analysis tool. Modelling of military command and control networks using this general approach has already yielded intriguing results (e.g., Dekker 2002).

Teamwork is therefore explored and quantified in WESTT through methods of social network analysis. On the basis of the central data table, WESTT automatically extracts a social network diagram that graphically portrays the interconnections between agents within a system. Each agent is represented as a node and is connected to others via lines termed “edges”. Again, whilst there are products that will draw social networks, WESTT is notable for allowing one to go directly from empirical data to a full social network in a single step. Qualitative analysis of social networks can yield interesting results; one can, for example, identify nodes that are acting as hubs connecting other nodes. In some cases this function may not have been deliberately assigned to an individual, with implications for their performance in other tasks. Fig. 5 shows social network outputs for the urban operations tactical vignette discussed earlier. It illustrates how the extra links between the reconnaissance assets and the Urban Combat Team change the topography of the network. These changes notably introduce extra redundancy: even if something happens to Brigade HQ, it is clear from the right-hand social network that vital reconnaissance data will still be available to the Urban Combat Team and to those linked to it. By contrast, the left-hand social network shows relatively little redundancy; if something happens to Brigade HQ, the network will be shattered into four isolated units.
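The single step from data table to social network, and the redundancy argument above, can be sketched as follows. The agent names follow the vignette. The communication lists, however, are a simplified and hypothetical reconstruction, not the data behind Fig. 5. `social_network` and `components_without` are illustrative helpers, not WESTT functions.

```python
from collections import Counter


def social_network(comms):
    """Collapse (sender, receiver) communication events into undirected weighted edges."""
    return Counter(frozenset(pair) for pair in comms if pair[0] != pair[1])


def components_without(edges, removed):
    """Connected components (sets of agents) left after deleting the node `removed`."""
    nodes = {n for edge in edges for n in edge} - {removed}
    adj = {n: set() for n in nodes}
    for edge in edges:
        if removed not in edge:
            a, b = tuple(edge)
            adj[a].add(b)
            adj[b].add(a)
    seen, components = set(), []
    for start in sorted(nodes):
        if start in seen:
            continue
        comp, stack = set(), [start]
        while stack:                      # depth-first flood fill
            node = stack.pop()
            if node not in comp:
                comp.add(node)
                stack.extend(adj[node] - comp)
        seen |= comp
        components.append(comp)
    return components


HQ, SF, UAV, UCT, CONVOY = "Brigade HQ", "Special Forces", "UAV", "Urban Combat Team", "Convoy"
baseline = [(SF, HQ), (UAV, HQ), (HQ, UCT), (HQ, UCT), (HQ, CONVOY), (CONVOY, HQ)]
direct_links = baseline + [(SF, UCT), (UAV, UCT)]

for name, comms in [("baseline", baseline), ("direct links", direct_links)]:
    print(name, len(components_without(social_network(comms), HQ)), "fragments without HQ")
```

On this toy version, removing Brigade HQ leaves four isolated agents in the baseline network. With the direct links, it leaves two fragments: the Convoy on its own, and the reconnaissance assets still joined to the Urban Combat Team.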

As well as giving the analyst a visual representation of the relationships between agents, which can be used to understand the general character of the social network, the data on which the network is based can be analyzed using algorithms and statistical techniques (for a comprehensive review see Wasserman and Faust 1992). For our purposes these metrics fall into two main camps: measures of the activity of nodes and measures that pertain to the topography of the network. Each class of metric provides a different perspective on the structure and performance of the social network. A measure of node activity implemented in WESTT is Sociometric Status, which indicates the contribution a given node makes to the overall amount of communication in the network. The other two social network metrics currently implemented in WESTT, geodesic distance and centrality, relate more directly to the physical form of the network. Geodesic distance is the length of the shortest possible path between two nodes in a network. It can thus be taken as the shortest route by which a communication can pass between two agents. Typically, the greater the geodesic distance between two agents, the longer information will take to propagate from one to the other. The risk that the information will lose its value also grows, in two ways. It may be degraded through inaccurate reception or retransmission (as in a game of “Chinese whispers”), and information about a rapidly changing situation may become obsolete before it reaches its eventual recipient. If the information in question is intelligence, this might mean that plans are formed or orders issued that are inappropriate to the extant situation, with the result that clumsy, uncoordinated or even unnecessarily hazardous actions are taken. Centrality is an overall indication of how close a given node is (in terms of geodesic distance) to all other nodes in the network.
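For reference, common textbook formulations of the three metrics are given below. Here g is the number of nodes in the network and x_ij is the number of communications sent from agent i to agent j. The paper does not spell out WESTT's exact formulas, so treat these as standard definitions rather than a description of the tool. In particular, closeness is shown in its usual normalised form, which is only defined for a connected network.

```latex
\mathrm{St}_i = \frac{1}{g-1} \sum_{j \neq i} \left( x_{ij} + x_{ji} \right)
\qquad \text{(Sociometric Status)}

d(i,j) = \text{number of edges on a shortest path between } i \text{ and } j
\qquad \text{(geodesic distance)}

C_i = \frac{g-1}{\sum_{j \neq i} d(i,j)}
\qquad \text{(closeness centrality)}
```

A node adjacent to every other node has C_i = 1, and longer paths push C_i towards 0.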

Measures of Sociometric Status and centrality for the two variations on the urban operations scenario are shown in Table 1.

From Table 1 we can see that increased connectivity raises Sociometric Status for most nodes. More interestingly, even quite minor changes have altered the roles that nodes play within the network. For example, the Special Forces assets have increased centrality, meaning they are now closer to other units within the network. In principle, they would therefore be expected to play a far more important role within the network. Equally, their loss, or the infiltration of their communications, would have a more profound impact upon the mission.
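The direction of the change reported for Special Forces can be reproduced on a toy version of the two network variants. The sketch below is hypothetical throughout. The communication counts are invented, and the five-node networks are far simpler than those behind Table 1. Closeness is the standard normalised formulation, not a confirmed WESTT formula. The sketch only shows how adding direct links shortens geodesic distances and so raises centrality.

```python
from collections import defaultdict, deque

NODES = ["Brigade HQ", "Special Forces", "UAV", "Urban Combat Team", "Convoy"]
# Invented directed message counts: (sender, receiver) -> number of communications.
BASELINE = {("Special Forces", "Brigade HQ"): 2, ("UAV", "Brigade HQ"): 2,
            ("Brigade HQ", "Urban Combat Team"): 3, ("Brigade HQ", "Convoy"): 1,
            ("Convoy", "Brigade HQ"): 1}
DIRECT = {**BASELINE, ("Special Forces", "Urban Combat Team"): 2,
          ("UAV", "Urban Combat Team"): 2}


def sociometric_status(comms, nodes):
    """(messages sent + received by each node) / (g - 1), with g the number of nodes."""
    total = defaultdict(int)
    for (sender, receiver), n in comms.items():
        total[sender] += n
        total[receiver] += n
    return {v: total[v] / (len(nodes) - 1) for v in nodes}


def geodesics(adj, source):
    """Breadth-first search: shortest path length (in edges) from source to each node."""
    dist, queue = {source: 0}, deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def closeness(comms, nodes):
    """(g - 1) / sum of geodesic distances; assumes the network is connected."""
    adj = {v: set() for v in nodes}
    for sender, receiver in comms:
        adj[sender].add(receiver)
        adj[receiver].add(sender)
    return {v: (len(nodes) - 1) / sum(geodesics(adj, v).values()) for v in nodes}


for label, comms in [("via Brigade HQ", BASELINE), ("direct links", DIRECT)]:
    sf = "Special Forces"
    print(f"{label}: status={sociometric_status(comms, NODES)[sf]:.2f}, "
          f"closeness={closeness(comms, NODES)[sf]:.3f}")
# via Brigade HQ: status=0.50, closeness=0.571
# direct links: status=1.00, closeness=0.667
```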

1.6 Representing system knowledge structure: propositional networks

In addition to importing and representing observational data, WESTT supports the construction of what are termed propositional networks. The basic approach is for the analyst to collect information from debriefs, interviews, procedural manuals and other sources in order to define the “knowledge objects” relevant to the mission. These knowledge objects are then linked by semantic propositions that define the relationship between them (e.g., IS, HAS, KNOWS), thus producing a network of knowledge (see, for example, Anderson 1983). In constraining what a proposition can be, we follow Anderson’s approach that a proposition is a basic statement, “…the smallest unit about which it makes sense to make the judgment true or false” (Anderson 1990, p. 123). To date, we have found that the best way to elicit knowledge objects is to use the critical decision method (CDM) for structuring interviews. The CDM (Klein 1989) is a form of critical incident technique. According to Klein (1989), “The CDM is a retrospective interview strategy that applies a set of cognitive probes to actual non-routine incidents that required expert judgment or decision making” (p. 464). In our implementation of this approach, the interview proceeds through four stages: briefing and initial recall of incidents; identifying decision points in a specific incident; probing the decision points; and, finally, checking. To derive knowledge objects from this procedure, we list the types of knowledge needed to make each critical decision. It is important to note that by knowledge objects we do not mean specific values but rather the names of variables that need to be known (e.g., “speed” would be used rather than “55 miles per hour”).
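A propositional network can be held as a list of subject-relation-object triples over knowledge objects. An adjacency structure and simple node metrics then follow directly. The sketch below assumes that representation. The propositions are invented examples in the spirit of the vignette. In keeping with the text, they name variables (“location”) rather than values (a specific grid reference).

```python
from collections import defaultdict

# Invented propositions: (knowledge object, relation, knowledge object).
PROPOSITIONS = [
    ("enemy", "HAS", "location"),
    ("enemy", "HAS", "strength"),
    ("convoy", "HAS", "route"),
    ("route", "HAS", "threat"),
    ("threat", "IS", "enemy"),
]


def propositional_network(props):
    """Build an undirected adjacency map; each link remembers its relation label."""
    net = defaultdict(dict)
    for subj, rel, obj in props:
        net[subj][obj] = rel
        net[obj][subj] = rel
    return dict(net)


net = propositional_network(PROPOSITIONS)
# Degree: how many other knowledge objects each object is linked to.
degree = {node: len(links) for node, links in net.items()}
hub = max(degree, key=degree.get)  # the most connected knowledge object
```

Degree is just one of the network measures that could be applied here. The same centrality and distance measures used for social networks carry over unchanged.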

In WESTT itself, the knowledge objects are entered into a matrix which allows the analyst to define the relationships between the objects. WESTT then automatically provides a graphical representation of the “space” of knowledge objects involved in the mission. An example is given in Fig. 6, although it should be noted that this is a somewhat simplified version of what we would expect in the typical case. Ideally, these networks are then presented to subject matter experts (SMEs) in order to validate the level of detail and the inclusion of specific knowledge objects. Once the network has reached an acceptable state, it can be subjected to network analysis in a similar way to the social networks, to identify trends in the relationships between items of information. It is this network of propositions that embodies the novel conceptualization of situation awareness used by WESTT (see also Stanton et al. 2007). Rather than focusing on aspects of “awareness”, we are more concerned with defining the “situation”. From this perspective, we consider the situation as comprising a collection of objects about which the agents within the system require some knowledge in order to operate effectively. Nodes in a propositional network (PN) can easily be associated with specific agents through colour coding. This provides an intuitive representation of “who knows what” during the phases of an incident. Such a view can be useful for considering gaps in awareness, requirements for shared knowledge or the potential for conflicting interpretations of the same knowledge. WESTT provides a facility to “play back” the spread of activation in the propositional network, so that the analyst can watch knowledge being used and shared over the course of the scenario.
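The colour-coded “who knows what” view and the playback facility both amount to associating knowledge objects with agents, phase by phase. A minimal sketch follows, assuming a hypothetical activation record (phase → agent → knowledge objects in use) with invented contents. `holders`, `sole_holders` and `playback` are illustrative names, not WESTT's.

```python
# Hypothetical activation record: phase -> agent -> knowledge objects in use.
ACTIVATION = {
    "recce": {"UAV": {"enemy location"},
              "Brigade HQ": {"enemy location", "route"}},
    "assault": {"Urban Combat Team": {"enemy location", "objective"},
                "Brigade HQ": {"route", "objective"},
                "Convoy": {"route"}},
}


def holders(activation, phase):
    """Invert agent -> objects into object -> set of agents for one phase."""
    out = {}
    for agent, objects in activation[phase].items():
        for obj in objects:
            out.setdefault(obj, set()).add(agent)
    return out


def sole_holders(activation, phase):
    """Objects known to only one agent in a phase: candidate gaps in shared awareness."""
    return {obj: next(iter(agents))
            for obj, agents in holders(activation, phase).items() if len(agents) == 1}


def playback(activation):
    """Yield each phase with the knowledge objects activated for the first time in it."""
    seen = set()
    for phase in activation:          # dicts keep insertion order (Python 3.7+)
        active = set(holders(activation, phase))
        yield phase, sorted(active - seen)
        seen |= active


for phase, new_objects in playback(ACTIVATION):
    print(phase, new_objects, sole_holders(ACTIVATION, phase))
```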

1.7 Workload, error and time metrics

The final part of WESTT is the calculation of workload, error and time metrics. Many forms of human reliability analysis (HRA) take an approach analogous to failure mode, effects and criticality analysis (FMECA) (see Kirwan 1994). This is a standard engineering approach that defines failure modes for specific elements of a problem. In HRA approaches, an element might be a task and a failure mode might be “done too early/too late”. WESTT uses this notion for its initial (analyst-driven) inspection of operations. Each operation can, via a pop-up menu, be assigned one or more specific failure modes. The resulting table shows each operation against the failure modes assigned to it.
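The operation-by-failure-mode table can be pictured as a Boolean matrix. The sketch below assumes a small, hypothetical taxonomy of HRA-style failure modes and an analyst's assignments held in a dictionary. Neither the taxonomy nor `failure_table` is taken from WESTT.

```python
# Hypothetical HRA-style failure-mode taxonomy (column order of the table).
FAILURE_MODES = ["omitted", "too early", "too late", "wrong recipient"]


def failure_table(assignments):
    """Turn operation -> chosen failure modes into rows of (operation, [bool per mode])."""
    rows = []
    for op, modes in assignments.items():
        unknown = set(modes) - set(FAILURE_MODES)
        if unknown:                       # reject modes outside the taxonomy
            raise ValueError(f"{op}: unknown failure modes {sorted(unknown)}")
        rows.append((op, [mode in modes for mode in FAILURE_MODES]))
    return rows


table = failure_table({
    "relay UAV imagery": {"too late", "wrong recipient"},
    "task Urban Combat Team": {"omitted"},
})
for op, marks in table:
    print(f"{op:<24}", " ".join("X" if m else "." for m in marks))
```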

Given that each operation is made up of specific tasks, assigning times to tasks is simply a matter of linking tasks to a database of typical unit times. WESTT can combine these times into a simple linear model of performance, i.e., by summing all the times in a manner similar to keystroke-level models (e.g., Card et al. 1983). As a more sophisticated alternative, we have implemented a method of extending this analysis into a critical path model (e.g., Gray et al. 1993; Baber and Mellor 2001), which can better account for the parallelism of human activity. Essentially, the critical path technique identifies which activities may safely occur in parallel and which tasks depend on the completion of other tasks before they can commence. From this it determines which tasks are critical to maintaining a schedule and which are not. We use the OSD/UML renderings as a task network, since they show the nature and order of actions and thus the tasks carried out. The critical path model is therefore a useful adjunct: it can be used to constrain possible modifications to the order of actions carried out within a specified length of time.
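The difference between the simple linear model and the critical path model can be shown on a handful of tasks. In the sketch below the task names, durations (arbitrary units) and dependencies are invented. `graphlib.TopologicalSorter` is the Python standard-library topological sorter (Python 3.9+). Resource limits, such as one agent being unable to do two things at once, are ignored. In this example, tasks that run in parallel belong to different agents.

```python
from graphlib import TopologicalSorter

# Invented tasks: name -> (duration in arbitrary units, prerequisite tasks).
TASKS = {
    "UAV sends imagery":    (5, []),
    "SF sends report":      (8, []),
    "HQ fuses reports":     (12, ["UAV sends imagery", "SF sends report"]),
    "UCT plans assault":    (10, ["HQ fuses reports"]),
    "Convoy adjusts route": (7, ["HQ fuses reports"]),
}


def linear_time(tasks):
    """Keystroke-level-style model: every task done in series, so times simply add."""
    return sum(duration for duration, _ in tasks.values())


def critical_path(tasks):
    """Longest dependency chain; tasks off this chain can slip without delaying the end."""
    finish, prev = {}, {}
    graph = {name: deps for name, (_, deps) in tasks.items()}
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((finish[d] for d in deps), default=0)   # wait for all prerequisites
        prev[name] = max(deps, key=finish.get, default=None)  # the binding prerequisite
        finish[name] = start + duration
    end = max(finish, key=finish.get)
    path = [end]
    while prev[path[-1]] is not None:
        path.append(prev[path[-1]])
    return finish[end], path[::-1]


print(linear_time(TASKS))    # 42
print(critical_path(TASKS))  # (30, ['SF sends report', 'HQ fuses reports', 'UCT plans assault'])
```

Here the linear model predicts 42 units. The critical path model predicts 30, because the UAV and Convoy tasks overlap with longer chains.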

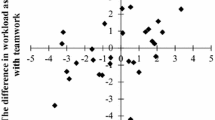

The study of human factors has developed a range of measures to describe how busy a person is in terms of the cognitive and physical activity they are required to perform. In predictive analysis of workload, the general approach appears to follow the notion that changes in activity can be mapped over time to provide an index of loading (Parks and Boucek 1989). This can be considered as a function of task scheduling (Moray et al. 1991) or in terms of competition between cognitive resources (North and Riley 1989). At present WESTT provides a simple workload metric based on the operations performed by a given agent during a defined phase of the mission. This is derived from the OSD and provides an index of “operations demand”, stating how many actions of a given type an individual, or the team in total, performed. However, workload also has a cognitive component. Subsequent developments of the workload algorithms in WESTT may therefore take into account the number of knowledge objects (as represented in the propositional network) to provide a scaling factor for the operations demand. That is to say, we will consider task complexity as related to the number of knowledge objects a task requires in order to be completed successfully.
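The operations-demand index, and the knowledge-object scaling proposed as a future extension, can be sketched as simple counts. The rows below are invented. `scaled_demand` and its `objects_per_op` weights are our own hypothetical reading of the proposed extension, not an existing WESTT feature.

```python
from collections import Counter

# Invented rows: (phase, agent, operation type), as might be read off the OSD.
ROWS = [
    ("recce", "Brigade HQ", "receive"),
    ("recce", "Brigade HQ", "receive"),
    ("recce", "Brigade HQ", "transmit"),
    ("recce", "UAV", "transmit"),
    ("assault", "Urban Combat Team", "decide"),
    ("assault", "Urban Combat Team", "transmit"),
]


def operations_demand(rows, phase):
    """Count operations of each type performed by each agent within one phase."""
    return Counter((agent, op) for p, agent, op in rows if p == phase)


def team_demand(rows, phase):
    """Team total: operations of each type summed over all agents in the phase."""
    return Counter(op for p, _agent, op in rows if p == phase)


def scaled_demand(rows, phase, objects_per_op):
    """Hypothetical extension: weight each operation by the knowledge objects it needs.

    Operation types missing from `objects_per_op` default to a weight of 1.
    """
    demand = Counter()
    for p, agent, op in rows:
        if p == phase:
            demand[agent] += objects_per_op.get(op, 1)
    return demand


print(operations_demand(ROWS, "recce"))
print(scaled_demand(ROWS, "assault", {"decide": 3}))
```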

2 Discussion

This paper has described the rationale behind, and the use of, the WESTT software tool. It is envisaged that WESTT will provide a novel and useful means of representing team activity and will be particularly beneficial for exploring future configurations of command and control system structure. Because the analyses and metrics are largely automated, WESTT can be used as an analytical prototyping tool, allowing one to compare different approaches rapidly by manipulating data in the main data table. By supporting analysis at several levels, it is possible to explore the effects of changes in system structure and of introducing or removing knowledge objects (which might be operating procedures, cultural knowledge or tactical information). It is also possible to explore the effects of replacing or augmenting human agents with technology. One way of understanding how the various networks are linked is to consider that, within WESTT, the following information is available for each agent (that is, a human or technological entity): what they have done or will be doing (operation sequence diagram); what they know (knowledge objects embedded in a propositional network); who they know (social network diagram and metrics); what their modes of failure might be at various points (error); and, broadly, their capacity for adapting to a different schedule of tasks (OSD/critical path analysis of time/workload). To answer a design question using WESTT, we might select a subset of these attributes for a subset of agents. Alternatively, we may survey the overall structures of knowledge, social networks and tasks to gain an appreciation of the functioning of the system as an entity in itself.

As WESTT is an integrative tool, many of its individual components have already been subject to extensive testing, academic discussion and validation. For example, there is a large literature discussing the uses of social network modelling (e.g., Wasserman and Faust 1992), and many of the workload, error and time metrics are in common use by human factors practitioners. The use of “plug-in” databases of task time and error also allows one to employ pre-validated and reliability-tested estimates.

Validation of WESTT as a whole has so far consisted of discussions with subject matter experts in police, fire and power industry scenarios. These discussions addressed the face validity of the outputs and the usefulness of comparing different types of representation as an aid to analysts investigating complex datasets. In addition, we are currently pursuing two more formal approaches to validation. First, we are comparing WESTT predictions with the outputs of simulation tools (such as MicroSAINT). The second is perhaps more important, given that an analysis tool’s ultimate duty is to produce metrics and data that accord with reality. We are conducting experimental work on how different network structures and technologies affect the activities of teams of human participants in different games and scenarios, and we are comparing the outputs of WESTT analyses against those real-world patterns of performance. Future directions for the development of WESTT include adding more metrics as appropriate, in particular “headline figures” that describe the performance of the system as a whole more succinctly.

References

Adamic LA, Buyukkokten O, Adar E (2003) A social network caught in the web. Internal report, HP and Google Labs

Anderson JR (1983) The architecture of cognition. Harvard University Press, Cambridge

Anderson JR (1990) Cognitive psychology and its implications, 3rd edn. W.H. Freeman, New York

Atkinson SR, Moffat J (2005) The agile organization: from informal networks to complex effects and agility. DODCCRP Publications, Washington

Baber C, Mellor BA (2001) Modelling multimodal human-computer interaction using critical path analysis. Int J Hum–Comput Stud 54:613–636

Baber C, Stanton NA (1999) Analytical prototyping. In: Noyes JM, Cook M (eds) Interface technology. Research Studies Press, Baldock, pp 175–194

Baber C, Stanton NA (2001) Analytical prototyping of personal technologies: using predictions of time and error to evaluate user interfaces. In: Hirose M (ed) Interact’01. IOS Press, Amsterdam, pp 585–592

Card SK, Moran TP, Newell A (1983) The psychology of human–computer interaction. LEA, Hillsdale

Dekker AH (2002) C4ISR architectures, social network analysis and the FINC methodology: an experiment in military organisational structure. DSTO report DSTO-GD-0313

Gray WD, John BE, Atwood ME (1993) Project ERNESTINE: validating a GOMS analysis for predicting and explaining real-world performance. Hum–Comput Interact 8:237–309

Houghton RJ, Baber C (2005) Social aspects of network enabled capability: information sharing and decision-making. IEE people and systems conference: who are we designing for? London, November 2005

Kirwan B (1994) A guide to practical human reliability assessment. Taylor & Francis, London

Klein GA (1989) Recognition-primed decisions. In: Rouse WB (ed) Advances in man–machine systems research, vol 5. JAI, Greenwich, pp 47–92

Lloyd M (2004) The NEC use-case methodology. Distillation 8:16–23

Meister D (1985) Behavioral analysis and measurement methods. Wiley, New York

Ministry of Defence (2004) Network enabled capability, an introduction. Ministry of Defence, UK

Ministry of Defence (2005) Network enabled capability. Joint Services Publication 777, London

Moray N, Dessouky MI, Kijowski BA, Adapathya R (1991) Strategic behaviour, workload and performance in task scheduling. Hum Factors 33:607–629

NATO (2002) Code of best practice for C2 assessment (Decision maker’s guide)

North RA, Riley VA (1989) A predictive model of operator workload. In: MacMillan GR et al. (eds) Applications of human performance models to system design. Plenum, New York, pp 81–90

Parks DL, Boucek GP (1989) Workload prediction, diagnosis and continuing challenges. In: MacMillan GR et al. (eds) Applications of human performance models to system design. Plenum, New York, pp 47–64

Stanton NA, Stewart RJ, Baber C, Harris D, Houghton RJ, McMaster R, Salmon P, Hoyle G, Walker G, Young MS, Linsell M, Dymott R (2007) Distributed situational awareness in dynamic systems: theoretical development and application of an ergonomics methodology. Ergonomics (in press)

Wasserman S, Faust K (1992) Social network analysis: methods and applications. Cambridge University Press, Cambridge

Acknowledgments

This work from the Human Factors Integration Defence Technology Centre was part-funded by the Human Sciences Domain of the UK Ministry of Defence Scientific Research Programme. The WESTT software package is available on request via our website: http://www.hfidtc.com.


Cite this article

Houghton, R.J., Baber, C., Cowton, M. et al. WESTT (workload, error, situational awareness, time and teamwork): an analytical prototyping system for command and control. Cogn Tech Work 10, 199–207 (2008). https://doi.org/10.1007/s10111-007-0098-4
