Abstract

Consider the problem of minimizing the expected value of a cost function parameterized by a random variable. The classical sample average approximation method for solving this problem requires minimization of an ensemble average of the objective at each step, which can be expensive. In this paper, we propose a stochastic successive upper-bound minimization method (SSUM) which minimizes an approximate ensemble average at each iteration. To ensure convergence and to facilitate computation, we require the approximate ensemble average to be a locally tight upper-bound of the expected cost function and be easily optimized. The main contributions of this work include the development and analysis of the SSUM method as well as its applications in linear transceiver design for wireless communication networks and online dictionary learning. Moreover, using the SSUM framework, we extend the classical stochastic (sub-)gradient method to the case of minimizing a nonsmooth nonconvex objective function and establish its convergence.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the optimization problem

where \({\mathcal {X}} \subseteq \mathbb {R}^{n}\) is a bounded closed convex set; \(\xi \) is a random vector drawn from a set \(\varXi \in \mathbb {R}^m\), and \(g:{\mathcal {X}}\times \varXi \mapsto \mathbb {R}\) is a real-valued function. A classical approach for solving the above optimization problem is the sample average approximation (SAA) method. At each iteration of the SAA method, a new realization of the random vector \(\xi \) is obtained and the optimization variable x is updated by solving

Here \(\xi ^1,\xi ^2, \ldots \) are some independent, identically distributed realizations of the random vector \(\xi \). We refer the readers to [1–5] for the roots of the SAA method and [6–8] for several surveys on SAA.

A main drawback of the SAA method is the complexity of each step. In general, solving (2) may not be easy due to the non-convexity and/or non-smoothness of \(g(\cdot ,\xi )\). Even when (2) is convex, finding a solution of it might require the use of an iterative procedure which may be inefficient. To overcome the difficulties in solving the subproblem (2), we propose an inexact SAA method whereby at each step a well-chosen approximation of the function \(g(\cdot , \xi )\) in (2) is minimized. Specifically, at each iteration r, we update the optimization variable according to

where \({\hat{g}}(\cdot ,x^{i-1},\xi ^i)\) is an approximation of the function \(g(\cdot ,\xi ^i)\) around the point \(x^{i-1}\). To ensure the convergence of this method, we require the approximation function \({\hat{g}}(\cdot , x^{i-1}, \xi ^i)\) to be a locally tight upper bound of the original function \(g(\cdot ,\xi ^i)\) around the point \(x^{i-1}\), for each \(i=0,\ldots ,r-1\). For this reason, we call the above algorithm (3) a stochastic successive upper-bound minimization method (SSUM).

The idea of successive upper-bound minimization (also known as majorization minimization or successive convex optimization) has been widely studied in the literature for deterministic optimization problems; see [9] and the references therein. In the successive upper-bound minimization (SUM) framework, a locally tight approximation of the function is minimized at each step of the algorithm. This technique is key to many important practical algorithms such as the concave-convex procedure [10] and the expectation maximization algorithm [11, 12].

While the successive upper-bound minimization idea is well studied and widely used in deterministic settings, very little is known about its use in the stochastic setup. The main contributions of this paper are to extend the technique of successive upper-bound minimization to the stochastic setup (1) and to illustrate its use in applications. In particular, we first establish the convergence of SSUM defined by (3), and then describe two important applications of the SSUM framework: the sum rate maximization problem for wireless communication networks and the online dictionary learning problem. For the stochastic wireless beamforming problem, our numerical experiments indicate that the SSUM approach significantly outperforms the other existing algorithms in terms of the achievable ergodic sum rate in the network. In addition, we show that the traditional stochastic gradient (SG) algorithm for unconstrained smooth minimization is a special case of the SSUM method. Moreover, using the SSUM framework, we extend the SG algorithm to the problem of minimizing a nonsmooth nonconvex objective function and establish its convergence.

1.1 Technical preliminaries

Throughout the paper we adopt the following notations. We use \(\mathbb {R}\) to signify the set of real numbers. The notation \(\mathbb {E}(\cdot )\) is used to represent the expectation operator. Unless stated otherwise, all relations between random variables hold almost surely in this paper. A list of other definitions adopted in the paper are given below.

-

Distance of a point to a set: Given a non-empty set \(S\subset \mathbb {R}^{n}\) and a point \(x\in \mathbb {R}^{n}\), the distance of the point x to the set S is defined as

$$\begin{aligned} d(x,S)~\triangleq ~\inf _{s\in S}~\Vert x-s\Vert , \end{aligned}$$where \(\Vert \cdot \Vert \) denotes the 2-norm in \(\mathbb {R}^{n}\).

-

Directional derivative: Let \(h:~D\mapsto \mathbb {R}\) be a function, where \(D\subseteq \mathbb {R}^{n}\) is a convex set. The directional derivative of the function h at a point \(x\in D\) in the direction \(d\in R^{n}\) is defined as

$$\begin{aligned} h'(x;d)~\triangleq ~\liminf _{t\downarrow 0}\; \frac{h(x+td)-h(x)}{t}. \end{aligned}$$Moreover, we define \(h'(x;d) \triangleq +\infty \), if \(x+td\notin D\), \(\forall \; t>0\).

-

Stationary points of an optimization problem: The point \(\bar{x}\) is a stationary point of problem (1) if

$$\begin{aligned} f^\prime (\bar{x};x - \bar{x}) \ge 0, \quad \forall x\in {\mathcal {X}}, \end{aligned}$$i.e., the directional derivative of the objective function is non-negative for every feasible direction at \(\bar{x}\).

-

Natural history of a stochastic process: Consider a real valued stochastic process \(\{Z^{r}\}_{r=1}^{\infty }\). For each r, we define the natural history of the stochastic process up to time r as

$$\begin{aligned} \mathcal {F}^{r}~=~\sigma (Z^{1},\ldots ,Z^{r}), \end{aligned}$$where \(\sigma (Z^{1},\ldots ,Z^{r})\) denotes the \(\sigma \)-algebra generated by the random variables \(Z^{1},\ldots ,Z^{r}\).

-

Infinity norm of a function: Let \(h:~D\mapsto \mathbb {R}\) be a function, where \(D\subseteq \mathbb {R}^{n}\). The infinity norm of the function \(h(\cdot )\) is defined as

$$\begin{aligned} \Vert h\Vert _\infty \triangleq \sup _{x\in D} |h(x)|. \end{aligned}$$

2 Stochastic successive upper-bound minimization

In the introduction section, we have briefly outlined the stochastic successive upper-bound minimization (SSUM) algorithm as an inexact version of the SAA method. In this section, we describe the SSUM algorithm and the associated assumptions more precisely, and provide a complete convergence analysis.

Consider the optimization problem

where \({\mathcal {X}}\) is a bounded closed convex set and \(\xi \) is a random vector drawn from a set \(\varXi \in \mathbb {R}^{m}\). We assume that the function \(g_{1}:~{\mathcal {X}}^\prime \times \varXi \mapsto \mathbb {R}\) is a twice continuously differentiable (and possibly non-convex) function in x, while \(g_{2}:~{\mathcal {X}}\times \varXi \mapsto \mathbb {R}\) is a convex continuous (and possibly non-smooth) function in x. Here \({\mathcal {X}}^\prime \) is a bounded open set containing the set \({\mathcal {X}}\). Due to the non-convexity and non-smoothness of the objective function, it may be difficult to solve the subproblems (2) in the SAA method. This motivates us to consider an inexact SAA method by using an approximation of the function \(g(\cdot ,\xi )\) in the SAA method (2) as follows:

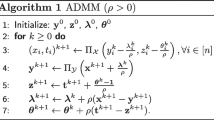

where \({\hat{g}}_{1}(x, x^{i-1},\xi ^{i})\) is an approximation of the function \(g_{1}(x,\xi ^{i})\) around the point \(x^{i-1}\). Table 1 summarizes the SSUM algorithm.

Clearly, the function \({\hat{g}}_1(x,y,\xi )\) should be related to the original function \(g_1(x,\xi )\). In this paper, we assume that the approximation function \({\hat{g}}_1(x,y,\xi )\) satisfies the following conditions.

Assumption A

Suppose the approximation function \({\hat{g}}(x,y,\xi )\) satisfies the following

-

A1.

\({\hat{g}}_1(y,y,\xi ) = g_1(y,\xi ), \quad \forall \ y \in {\mathcal {X}}, \;\forall \ \xi \in \varXi \)

-

A2.

\({\hat{g}}_1(x,y,\xi ) \ge g_1 (x,\xi ),\quad \forall \ x\in {\mathcal {X}}', \;\forall \ y\in {\mathcal {X}},\; \forall \ \xi \in \varXi \)

-

A3.

\({\hat{g}}(x,y,\xi ) \triangleq {\hat{g}}_1(x,y,\xi ) + g_2(x,\xi )\) is uniformly strongly convex in x, i.e., for all \(\ (x,y,\xi ) \in {\mathcal {X}}\times {\mathcal {X}}\times \varXi \),

$$\begin{aligned} {\hat{g}}(x+d,y,\xi ) - {\hat{g}}(x,y,\xi ) \ge {\hat{g}}'(x,y,\xi ;d) + \frac{\gamma }{2}\Vert d\Vert ^2,\ \;\forall \ d\in \mathbb {R}^n, \end{aligned}$$

where \(\gamma >0\) is a constant.

The assumptions A1–A2 imply that the approximation function \({\hat{g}}_1(\cdot ,y,\xi )\) should be a locally tight approximation of the original function \(g_1(\cdot ,\xi )\). We point out that the above assumptions can be satisfied in many cases by the right choice of the approximation function and hence are not restrictive. For example, when the gradient of the function \(g_1(\cdot , \xi )\) is Lipschitz continuous with a given constant L, then it is not hard to check that the function

is a valid approximation and satisfies A1–A3 with \(\alpha \) being a large enough positive constant. Note that in this example the approximation function \({\hat{g}}_1(\cdot , y,\xi )\) is strongly convex even though the function \(g_1(\cdot ,y)\) itself may not even be convex; see Sects. 3 and 4 for other examples.

To ensure the convergence of the SSUM algorithm, we further make the following assumptions.

Assumption B

-

B1.

The functions \(g_1(x,\xi )\) and \({\hat{g}}_1(x,y,\xi )\) are continuous in x for every fixed \(y\in {\mathcal {X}}^\prime \) and \(\xi \in \varXi \)

-

B2.

The feasible set \({\mathcal {X}}\) is bounded

-

B3.

The functions \(g_1(\cdot ,\xi )\) and \({\hat{g}}_1(\cdot ,y,\xi )\), and their derivatives are uniformly bounded. More precisely, there exists a constant \(K>0\) such that for all \((x,y,\xi ) \in {\mathcal {X}}^\prime \times {\mathcal {X}}\times \varXi \), we have

$$\begin{aligned} |g_1(x,\xi )|\le & {} K, \quad \Vert \nabla _x g_1(x,\xi )\Vert \le K, \\ |{\hat{g}}_1(x,y,\xi )|\le & {} K, \quad \Vert \nabla _x {\hat{g}}_1(x,y,\xi )\Vert \le K, \quad \Vert \nabla _x^2 {\hat{g}}_1(x,y,\xi )\Vert \le K. \end{aligned}$$ -

B4.

The function \(g_2(x,\xi )\) is convex in x for every fixed \(\xi \in \varXi \)

-

B5.

The function \(g_2(x,\xi )\) and its directional derivative are uniformly bounded. In other words, there exists \(K'>0\) such that for all \((x,\xi )\in {\mathcal {X}}\times \varXi \), we have \(|g_2(x,\xi )|\le K'\) and

$$\begin{aligned} |g_2'(x,\xi ;d)| \le K' \Vert d\Vert , \quad \ \forall \ d \in \mathbb {R}^n \; \mathrm{with}\; x+d \in {\mathcal {X}}. \end{aligned}$$ -

B6.

Let \({\hat{g}}(x,y,\xi )={\hat{g}}_1(x,y,\xi )+g_2(x,y,\xi )\). There exists \( \bar{g} \in \mathbb {R}\) such that

$$\begin{aligned} |{\hat{g}}(x,y,\xi )|\le \bar{g}, \quad \forall \;(x,y,\xi ) \in {\mathcal {X}}\times {\mathcal {X}}\times \varXi . \end{aligned}$$

Notice that in the assumptions B3 and B5, the derivatives are taken with respect to the x variable only. Furthermore, one can easily check that the assumption B3 is automatically satisfied if the mappings \(g_1(x,\xi )\), \(\nabla _x g_1(x,\xi )\), \({\hat{g}}_1(x,y,\xi )\), \(\nabla _x {\hat{g}}_1(x,y,\xi )\), \(\nabla _x^2{\hat{g}}_1(x,y,\xi )\) are continuous in \((x,y,\xi )\) and the set \(\varXi \) is bounded; or when the above mappings are continuous in (x, y) and \(\varXi \) is finite. As will be seen later, this assumption can be easily satisfied in various practical problems. It is also worth mentioning that since the function \(g_2(x,\xi )\) is assumed to be convex in x in B4, its directional derivative with respect to x in B5 exists and can be written as

The following theorem establishes the convergence of the SSUM algorithm.

Theorem 1

Suppose that Assumptions A and B are satisfied. Then the iterates generated by the SSUM algorithm converge to the set of stationary points of (1) almost surely, i.e.,

where \({\mathcal {X}}^*\) denotes the set of stationary points of (1).

To facilitate the presentation of the proof, let us define the random functions

for \(r = 1,2,\ldots \). Clearly, the above random functions depend on the realization \(\xi ^1,\xi ^2,\ldots \) and some of these random functions depend on the choice of the initial point \(x^0\) as well. Now we are ready to prove Theorem 1.

Proof

First of all, since the iterates \(\{x^r\}\) lie in a compact set, it suffices to show that every limit point of the iterates is a stationary point. To show this, let us consider a subsequence \(\{x^{r_j}\}_{j=1}^\infty \) converging to a limit point \({\bar{x}}\). Note that since \({\mathcal {X}}\) is closed, \({\bar{x}} \in {\mathcal {X}}\) and therefore \({\bar{x}}\) is a feasible point. Moreover, since \(|g_1(x,\xi )|<K, \; |g_2(x,\xi )|<K'\) for all \(\xi \in \varXi \) (due to B3 and B5), using the strong law of large numbers [13], one can write

Furthermore, the assumptions B3, B5, and a simple use of mean value theorem implies that the family of functions \(\{f_1^{r_j}(\cdot )\}_{j=1}^\infty \) and \(\{f_2^{r_j}(\cdot )\}_{j=1}^\infty \) are uniformly equicontinuous in \({\mathcal {X}}\);Footnote 1 and therefore by restricting to a subsequence, we have

almost surely. On the other hand, \(\Vert \nabla _x {\hat{g}}_1(x,y,\xi )\Vert <K ,\; \forall \ x,y,\xi \) due to the assumption B3 and therefore the family of functions \(\{{\hat{f}}_1^r(\cdot )\}\) is uniformly equicontinuous in \(\bar{{\mathcal {X}}}\) for some compact set \(\bar{{\mathcal {X}}}\) satisfying \({\mathcal {X}} \subset \bar{{\mathcal {X}}} \subset {\mathcal {X}}^\prime \) and \({\mathcal {X}}\) being in the interior of \(\bar{{\mathcal {X}}}\). Hence the Arzelà–Ascoli theorem [14] implies that, by restricting to a subsequence, there exists a uniformly continuous function \({\hat{f}}_1(x)\) such that

and

Furthermore, it follows from assumption A2 that

Letting \(j \rightarrow \infty \) and using (7) and (11), we obtain

On the other hand, using the update rule of the SSUM algorithm, one can show the following lemma.

Lemma 1

\(\lim _{r \rightarrow \infty } {\hat{f}}_1^r(x^r) - f_1^r(x^r) = 0, \; \mathrm{almost \; surely.}\)

Proof

The proof of Lemma 1 is relegated to the appendix section.

Combining Lemma 1 with (9) and (12) yields

It follows from (13) and (14) that the function \({\hat{f}}_1(x) - f_1(x)\) takes its minimum value at the point \({\bar{x}}\) over the set \(\bar{{\mathcal {X}}}\). On the other hand, the combination of the assumption B3 and the Arzelà–Ascoli theorem implies that the function \({\hat{f}}_1(\cdot )\) is differentiable at the point \({\bar{x}}\). Since \(\bar{x} \in {\mathcal {X}}\) is in the interior of \(\bar{{\mathcal {X}}}\), the first order optimality condition implies that

or equivalently

On the other hand, using the update rule of the SSUM algorithm, we have

Letting \(j \rightarrow \infty \) and using (10) and (12) yield

Moreover, the directional derivative of \(f_2(\cdot )\) exists due to the bounded convergence theorem [13]. Therefore, (16) implies that

Combining this with (15), we get

or equivalently

which means that \({\bar{x}}\) is a stationary point of \(f(\cdot )\). \(\square \)

Few remarks regarding the SSUM algorithm and its convergence are in order:

Remark 1

In Theorem 1, we assume that the set \({\mathcal {X}}\) is bounded. It is not hard to see that the result of the theorem still holds even if \({\mathcal {X}}\) is unbounded, so long as the iterates lie in a bounded set; see Remark 8 for an example of this scenario.

Remark 2

The boundedness of the Hessian of \(\hat{g}_1(\cdot )\) in assumption B3 is used to show the existence of the gradient of \(\hat{f}_1(\cdot )\) at the point \(\bar{x}\). It is worth noticing that this assumption can be relaxed by just assuming that the gradient of \(\hat{g}_1(\cdot )\) is uniformly locally Lipschitz continuous around the limit point \({\bar{x}}\).

Remark 3

It is worth noticing to the special cases: \(g_1(x,\xi ) = 0\) and \(g_2(x,\xi )=0\). When \(g_1(x,\xi ) = 0\), then the SSUM algorithm reduces to the traditional SAA algorithm. On the other hand, when \(g_2(x,\xi )=0\), we successively approximate the whole objective function at each iteration. An example of this case is given in Sects. 3.3 and 4.

Remark 4

From the proof of Theorem 1, it can be observed that for the result of the theorem to hold, it is sufficient to calculate the solution of (5) at each iteration within the accuracy level of \(\mathcal {O}\big (\frac{1}{r}\big )\).

Remark 5

An alternative approach is to solve the SAA subproblem (2) to a stationary point at each iteration. Under very mild set of assumptions and by using strong law of large numbers, it can be shown that this approach is also guaranteed to converge to the set of stationary points. In addition, one may obtain further guarantees on the quality of the limit points; see [6, 15] and the references therein. However, this approach could be computationally costly since it requires an iterative procedure at each iteration to find the stationary point of the subproblem (2). We will discuss this method further for the transceiver design problem in the next section.

Remark 6

When the objective function is smooth, the empirical estimate of the gradient can be used as a stopping criterion in SSUM algorithm. In addition, when the nonsmooth part \(g_2(x,\xi )\) is not random, i.e., \(g_2(x,\xi ) \equiv g_2(x)\), one can use the estimated proximal gradient for termination criteria of the SSUM method.

3 Applications: transceiver design and online dictionary learning

In this section, we describe two important applications of the SSUM approach.

3.1 Expected sum-rate maximization for wireless networks

The ergodic/stochastic transceiver design problem is a long standing problem in the signal processing and communication area, and yet no efficient algorithm has been developed to date which can deliver good practical performance. In contrast, substantial progress has been made in recent years for the deterministic counterpart of this problem; see [16–25]. That said, it is important to point out that most of the proposed methods require the perfect and full channel state information (CSI) of all links – an assumption that is clearly impractical due to channel aging and channel estimation errors. More importantly, obtaining the full CSI for all links would inevitably require a prohibitively large amount of training overhead and is therefore practically infeasible.

One approach to deal with the channel aging and the full CSI problem is to use the robust optimization methodology. To date, various robust optimization algorithms have been proposed to address this issue [26–31]. However, these methods are typically rather complex compared to their non-robust counterparts. Moreover, they are mostly designed for the worst case scenarios and therefore, due to their nature, are suboptimal when the worst cases happen with small probability. An alternative approach is to design the transceivers by optimizing the average performance using a stochastic optimization framework which requires only the statistical channel knowledge rather than the full instantaneous CSI. In what follows, we propose a simple iterative algorithm for ergodic/stochastic sum rate maximization problem using the SSUM framework. Unlike the previous approach of [32] which maximizes a lower bound of the expected weighted sum rate problem, our approach directly maximizes the ergodic sum rate and is guaranteed to converge to the set of stationary points of the ergodic sum rate maximization problem. Furthermore, the proposed algorithm is computationally simple, fully distributed, and has a per-iteration complexity comparable to that of the deterministic counterpart [21].

For concreteness, let us consider a K cell interfering broadcast channel [33, 34], where each base station k, \(k~=~1,\ldots ,K\) is equipped with \(M_{k}\) antennas and serves \(L_{k}\) users located in the cell k. We denote the i-th receiver in the k-th cell by user \(i_{k}\). Let us also assume that base station k wishes to transmit \(d_{i_{k}}\) data streams \(\mathbf {s}_{i_{k}}\in \mathbb {C}^{d_{i_{k}}}\) to the user \(i_{k}\) equipped with \(N_{i_{k}}\) antennas. Furthermore, we assume that the data streams are independent Gaussian random variables with \(\mathbb {E}[\mathbf {s}_{i_{k}}\mathbf {s}_{i_{k}}^{H}]=\mathbf {I}\), where \(\mathbf {I}\) is the identity matrix of the appropriate size. In order to keep the encoding and decoding procedure simple, we consider linear precoding strategies. In other words, the transmitted signal \(\mathbf {x}_{k}\) at transmitter k is

where \(\mathbf{V }_{i_{k}}\in \mathbb {C}^{M_{k}\times d_{i_{k}}}\) is the transmit precoding matrix of user \(i_k\). Due to the power consumption limitations, we assume that the average transmission power of transmitter k is constrained by a budget \(P_{k}\), i.e.,

As the transmitters use the same frequency band, the received signal is the superposition of the signals from different transmitters. Hence the received signal of user \(i_k\) can be written as

where \(\mathbf {n}_{i_{k}}\) denotes the additive white Gaussian noise with distribution \(\mathcal {CN}(0,\sigma _{i_{k}}^{2}\mathbf {I})\) and \({\mathbf {H}}_{i_{k}j}\in \mathbb {C}^{N_{i_{k}}\times M_{j}}\) is the channel matrix from transmitter j to user \(i_k\). Furthermore, we assume that receiver \(i_{k}\) utilizes a linear precoder \(\mathbf {U}_{i_{k}}\in \mathbb {C}^{N_{i_{k}}\times d_{i_{k}}}\) to estimate the data stream \(\mathbf {s}_{i_{k}}\), i.e.,

where \({\hat{\mathbf {s}}}_{i_{k}}\) is the estimated signal.

Under these assumptions, it is known that the instantaneous achievable rate of user \(i_k\) is given by [34–36]:

where

denotes the mean square error matrix. Furthermore, it is not hard to check that the optimum receive beamformer \(\mathbf {U}_{i_k}\) which maximizes (21) is the MMSE receiver [34–36]:

where \(\mathbf {J}_{i_k}\triangleq \sum _{j=1}^K \sum _{\ell =1}^{I_j}{\mathbf {H}}_{i_kj}\mathbf{V }_{\ell _j}\mathbf{V }_{\ell _j}^H{\mathbf {H}}_{i_kj}^H+\sigma _{i_k}^2\mathbf {I}\) is the covariance matrix of the total received signal at receiver \(i_k\).

To maximize the sum of the rates of all users in the network, we need to solve

which requires the knowledge of all instantaneous channel matrices \({\mathbf {H}}\) at the transmitters. Due to practical limitations, the exact channel state information (CSI) of all channels, which changes rapidly in time, is typically not available at the base stations. A more realistic assumption is to assume that an approximate CSI is know for a few links, while a statistical model of the CSI is known for the rest of the links. The latter changes more slowly in time and is easier to track. In such situations, we are naturally led to maximize the expected sum rate of all users, where the expectation is taken over the channel statistics.

Notice that, in practice, the optimum receive beamformer in (23) can be updated by measuring the received signal covariance matrix. Hence even though the complete channel knowledge is not available at the transmitters, the receive beamformers can be optimized according to the instantaneous channel values by measuring the received signal covariance matrices. Therefore, the expected sum rate maximization problem can be written as

To be consistent with the rest of the paper, let us rewrite (24) as a minimization problem:

where

It can be checked that \(g_{1}\) is smooth but non-convex in \(\mathbf{V }\) [34]. In practice, due to other design requirements, one might be interested in adding some convex non-smooth regularizer to the above objective function. For example, the authors of [37] added a convex group sparsity promoting regularizer term to the objective for the purpose of joint base station assignment and beamforming optimization. In such a case, since the non-smooth part is convex, the SSUM algorithm is still applicable. For simplicity, we consider only the simple case of \(g_{2}\equiv 0\) in this section.

In order to utilize the SSUM algorithm, we need to find a convex tight upper-bound approximation of \(g_{1}(\mathbf{V },{\mathbf {H}})\). To do so, let us introduce a set of variables \(\mathbf {P}\triangleq (\mathbf{W},\mathbf {U},\mathbf{Z})\), where \(\mathbf{W}_{i_{k}}\in \mathbb {C}^{d_{i_{k}}\times d_{i_{k}}}\) (with \(\mathbf{W}_{i_{k}}\succeq \mathbf {0}\)) and \(\mathbf{Z}_{i_{k}}\in \mathbb {C}^{M_{k}\times d_{i_{k}}}\) for any \(i=1,\ldots ,L_k\) and for all \(k=1,\ldots ,K\). Furthermore, define

for some fixed \(\rho >0\) and

Using the first order optimality condition, we can check that

Now, let us define

where

Clearly, we have

and

Furthermore, \({\hat{g}}_1(\mathbf{V },\bar{\mathbf{V }},{\mathbf {H}})\) is strongly convex in \(\mathbf{V }\) with parameter \(\rho \) due to the quadratic term in (27). Hence \({\hat{g}}_1(\mathbf{V },\bar{\mathbf{V }},{\mathbf {H}})\) satisfies the assumptions A1–A3. In addition, if the channels lie in a bounded subset with probability one and the noise power \(\sigma _{i_k}^2\) is strictly positive for all users, then it can be checked that \(g_1(\mathbf{V },{\mathbf {H}})\) and \({\hat{g}}_1(\mathbf{V },\bar{\mathbf{V }},{\mathbf {H}})\) satisfy the assumptions B1-B6. Consequently, we can apply the SSUM algorithm to solve (25).

Define \({\mathbf {H}}^r\) to be the r-th channel realization. Let us further define

where \(\mathbf{V }^{r-1}\) denotes the transmit beamformer at iteration \(r-1\). Notice that \(\mathbf {P}^r\) is well defined since the optimizer of (29) is unique. With these definitions, the update rule of the SSUM algorithm becomes

or equivalently

In order to make sure that the SSUM algorithm can efficiently solve (25), we need to confirm that the update rules of the variables \(\mathbf{V }\) and \(\mathbf {P}\) can be performed in a computationally efficient manner in (29) and (30). Checking the first order optimality condition of (29), it can be shown that the updates of the variable \(\mathbf {P}= (\mathbf{W},\mathbf {U},\mathbf{Z})\) can be done in closed form; see Table 2. Moreover, for updating the variable \(\mathbf{V }\), we need to solve a simple quadratic problem in (30). Using the Lagrange multipliers, the update rule of the variable \(\mathbf{V }\) can be performed using a one dimensional search method over the Lagrange multiplier [34]. Table 2 summarizes the SSUM algorithm applied to the expected sum rate maximization problem; we name this algorithm as stochastic weighted mean square error minimizations (stochastic WMMSE) algorithm. Notice that although in the SSUM algorithm the update of the precoder \(\mathbf{V }_{i_k}\) depends on all the past realizations, Table 2 shows that all the required information (for updating \(\mathbf{V }_{i_{k}}\)) can be encoded into two matrices \(\mathbf{A }_{i_{k}}\) and \(\mathbf {B}_{i_{k}}\), which are updated recursively.

Remark 7

Similar to the deterministic WMMSE algorithm [34] which works for the general \(\alpha \)-fairness utility functions, the Stochastic WMMSE algorithm can also be extended to maximize the expected sum of such utility functions; see [34] for more details on the derivations of the respective update rules.

3.2 Numerical experiments

In this section we numerically evaluate the performance of the SSUM algorithm for maximizing the expected sum-rate in a wireless network. In our simulations, we consider \(K=57\) base stations each equipped with \(M=4\) antennas and serve a two antenna user in its own cell. The path loss and the power budget of the transmitters are generated using the 3GPP (TR 36.814) evaluation methodology [38]. We assume that partial channel state information is available for some of the links. In particular, each user estimates only its direct link, plus the interfering links whose powers are at most \(\eta \) (dB) below its direct channel power. For these estimated links, we assume a channel estimation error model in the form of \(\hat{h}=h+z\), where h is the actual channel; \(\hat{h}\) is the estimated channel, and z is the estimation error. Given a MMSE channel estimate \(\hat{h}\), we can determine the distribution of h as \(\mathcal {CN} (\hat{h}, \frac{\sigma _{l}^{2}}{1+\gamma \mathrm{SNR}})\) where \(\gamma \) is the effective signal to noise ratio (SNR) coefficient depending on the system parameters (e.g. the number of pilot symbols used for channel estimation) and \(\sigma _{l}\) is the path loss. Moreover, for the channels which are not estimated, we assume the availability of estimates of the path loss \(\sigma _{l}\) and use them to construct statistical models (Rayleigh fading is considered on top of the path loss).

In our first set of simulations, we compare the performance of four different algorithms: one sample WMMSE, mean WMMSE, stochastic gradient, and Stochastic WMMSE. In “one sample WMMSE”, we apply the WMMSE algorithm [34] on one realization of all channels, i.e., we fix \(\xi = \hat{\xi }\) and apply the deterministic WMMSE algorithm to it. On the other hand, in the “mean WMMSE”, the realization is assumed to be the mean channel matrices, i.e., \(\hat{\xi } = \mathbb {E}[\xi ]\) is used in the deterministic WMMSE algorithm. In the SG method, we apply the stochastic gradient method with two different step-size selection strategies: 1) diminishing step size rule of \(\gamma ^r = 10^{13}/r\); and 2) self-adaptive step-size selection scheme [39] of \(\gamma ^r = \gamma ^{r-1} \left( 1-10^{-14} \gamma ^{r-1}\right) \) with \(\gamma ^0 = 10^{13}\). There parameters are the best candidates based on our extensive numerical experiments for the ergodic sum rate maximization problem. In the Stochastic WMMSE method, it is observed that, when the number of users is large, the approximation function \(\hat{g}(\cdot )\) is strongly convex by itself in most of the cases and therefore adding the regularizer is not necessary. However, to be consistent with our theoretical part, we add the proximal regularizer with \(\rho = 10^{-12}\). It is further observed that smaller values of \(\rho \), which result in tighter upper-bound, perform better in our numerical experiments. We have also considered a forgetting factor, similar to [40], in the first 100 iterations to reduce the initialization effects on the algorithm. This modification is not directly applicable to the other algorithms in Fig. 1. In particular, the other algorithms either use just one channel instance, or they are stochastic gradient based and therefore, given the current iterate and channel realization, the new iterate is independent of the past iterates and initialization. Also in the SAA methods in Fig 4, given the channel realizations \({\mathbf {H}}^1,\ldots , {\mathbf {H}}^r,\) the new iterate \(\mathbf {V}^{r}\) is independent of the past iterates and initialization. Figure 1 shows our simulation results when each user only estimates about 3 % of its channels, while the others are generated synthetically according to the channel distributions. The expected sum rate in each iteration is approximated in this figure by a Monte-Carlo averaging over 500 independent channel realizations. As can be seen from Fig. 1, the Stochastic WMMSE algorithm significantly outperforms the rest of the algorithms. Although the stochastic gradient algorithm with diminishing step size (of order 1 / r) is guaranteed to converge to a stationary solution, its convergence speed is sensitive to the step size selection and is usually slow. We have also experimented the SG method with different constant step sizes in our numerical simulations, but they typically led to divergence. It is worth noticing that the per-iteration complexity of the stochastic WMMSE algorithm is \(\mathcal {O}(K^2 M^3)\) with K being the total number of users in the system and M denoting the number of antennas per user. In fact, the per iteration computational complexity is the same as the WMMSE algorithm; see [34] for more details and comparison with existing algorithms. On the other hand, calculating each component of the gradient requires K inversions of the received signal covariance matrices which leads to the same computational complexity order of \(\mathcal {O}(K^2 M^3)\). Hence the stochastic WMMSE algorithm has the same computational complexity order as in the stochastic gradient method.

Figure 2 illustrates the performance of the algorithms for \(\eta =12\) whereby about 6 % of the channels are estimated.

In our second set of numerical experiments, we compare the performance of the stochastic WMMSE algorithm with the sample average approximation (SAA) method. In order to apply the SAA method, we need to solve (2) for the sum rate maximization problem. In other words, one needs to solve the following optimization problem at each step:

Clearly, the above problem is NP-hard since it covers the instantaneous sum rate maximization as a special case [41]. Moreover, most of the off-the-shelf solvers which are not customized to this problem perform poorly in practice and the global solvers could only solve problems of small sizes; see [25, 42]. Therefore, we utilize the idea of the WMMSE algorithm, which is one of the most efficient algorithms for the instantaneous sum rate maximization [34, 43–47], to solve the above SAA subproblem. More precisely, the optimization problem (31) can be rewritten as

By duplicating the auxiliary variables \(\mathbf {U}\) and \(\mathbf{W}\), (32) can be equivalently written as

The above problem can be solved using the block coordinate descent method on the variables \(\mathbf {U},\mathbf{V },\mathbf{W}\), and every step is closed form [34]. The resulting algorithm is called “SAA – WMMSE” and compared with stochastic WMMSE method. Another approach tried in our simulation results is “SAA—Gradient Descent” method, which is the result of solving (31) via gradient descent method. Figure 3 compares these three methods. Notice that in the SAA—WMMSE and SAA—Gradient Descent methods, we set \(r=300\) and input all the 300 channel realizations to the algorithm at the first iteration and solve (31); while in the stochastic WMMSE method, only one channel realization is used at each iteration. Therefore the SAA method have access to more information in the initial stages. As can be seen from this figure, the SAA algorithm with gradient descent method results in a local optimum point with a poor objective. However, the SAA method solved with the WMMSE algorithm can reach almost the same objective value as the stochastic WMMSE method. On the other hand, each step of the SAA—WMMSE method is much more expensive than the stochastic WMMSE method since the dimension of the problem is much larger. This fact can be seen in Fig. 4. As can be seen in this figure, even one iteration of the SAA—WMMSE method takes more time than the Stochastic WMMSE method.

3.3 Online dictionary learning

Consider the classical dictionary learning problem: Given a random signal \(y \in \mathbb {R}^n\) drawn from a distribution \(P_{Y}(y)\), we are interested in finding a dictionary \(D \in \mathbb {R}^{n\times k}\) so that the stochastic cost function

is minimized over the feasible set \(\mathcal {D}\); see [48–50]. The loss function g(D, y) measures the fitting error of the dictionary D to the signal y. Most of the classical and modern loss functions can be represented in the form of

where \(\mathcal {A} \subseteq \mathbb {R}^k\) and \(h(\alpha ,D,y)\) is a convex function in \(\alpha \) and D separately. For example, by choosing \(h(\alpha ,D,y) = \frac{1}{2} \Vert y-D\alpha \Vert _2^2 + \lambda \Vert \alpha \Vert _1\), we obtain the sparse dictionary learning problem; see [50].

In order to apply the SSUM framework to the online dictionary learning problem, we need to choose an appropriate approximation function \({\hat{g}}(\cdot )\). To this end, let us define

where

Clearly, we have

and

Furthermore, if we assume that the solution of (34) is unique, the function \(g(\cdot )\) is smooth due to Danskin’s Theorem [51]. Moreover, the function \({\hat{g}}(D,\bar{D},y)\) is strongly convex in D. Therefore, the assumptions A1–A3 are satisfied. In addition, if we assume that the feasible set \(\mathcal {D}\) is bounded and the signal vector y lies in a bounded set \(\mathcal {Y}\), the assumptions B1-B6 are satisfied as well. Hence the SSUM algorithm is applicable to the online dictionary learning problem.

Remark 8

Choosing \(h(\alpha ,D,y) = \frac{1}{2} \Vert y-D\alpha \Vert _2^2 + \lambda \Vert \alpha \Vert _1\) and \(\gamma =0\) leads to the online sparse dictionary learning algorithm in [50]. Notice that the authors of [50] had to assume the uniform strong convxity of \(\frac{1}{2} \Vert y-D\alpha \Vert _2^2\) for all \(\alpha \in \mathcal {A}\) since they did not consider the quadratic proximal term \(\gamma \Vert D-{\bar{D}}\Vert ^2\). An alternative approach to make the approximation function strongly convex - and also in order to avoid overfitting - is to use the ridge regularizer \(g_2(D) = \gamma \Vert D\Vert ^2\) [52]. It is worth noticing that in this case when the signal vector y is bounded and \(\mathcal {A}\) is the probability simplex, then the sequence of SSUM iterates \(\{\mathcal {D}^r\}\) remains bounded.

4 Stochastic gradient method and its extensions

In this section, we show that the classical SG method and the incremental gradient method are special cases of the SSUM method. We also present an extension of these classical methods using the SSUM framework.

To describe the SG method, let us consider a special (unconstrained smooth) case of the optimization problem (1), where \(g_{2}\equiv 0\) and \({\mathcal {X}} = \mathbb {R}^n\). One of the popular algorithms for solving this problem is the stochastic gradient (also known as stochastic approximation) method. At each iteration r of the stochastic gradient (SG) algorithm, a new realization \(\xi ^{r}\) is obtained and x is updated based on the following simple rule [7, 53–59]:

Here \(\gamma ^{r}\) is the step size at iteration r. Due to its simple update rule, the SG algorithm has been widely used in various applications such as data classification [60, 61], training multi-layer neural networks [62–65], the expected risk minimization [66], solving least squares in statistics [67], and distributed inference in sensor networks [68–70]. Also the convergence of the SG algorithm is well-studied in the literature; see, e.g., [7, 55, 71, 72].

The popular incremental gradient method [64–67, 73] can be viewed as a special case of the SG method where the set \(\varXi \) is finite. In the incremental gradient methods, a large but finite set of samples \(\varXi \) is available and the objective is to minimize the empirical expectation

At each iteration r of the incremental gradient method (with random updating order), a new realization \(\xi ^{r}\in \varXi \) is chosen randomly and uniformly, and then (35) is used to update x. This is precisely the SG algorithm applied to the minimization of (36). In contrast to the batch gradient algorithm which requires computing \(\sum _{\xi \in \varXi }\nabla _{x}g(x,\xi )\), the updates of the incremental gradient algorithm are computationally cheaper, especially if \(|\varXi |\) is very large.

In general, the convergence of the SG method depends on the proper choice of the step size \(\gamma ^{r}\). It is known that for the constant step size rule, the SG algorithm might diverge even for a convex objective function; see [64] for an example. There are many variants of the SG algorithm with different step size rules [74, 75] and even different inexact versions [76]. In the following, we introduce a special form of the SSUM algorithm that can be interpreted as the SG algorithm with diminishing step sizes. Let us define

where \(\alpha \) is a function of y and is chosen so that \({\hat{g}}_{1}(x,y,\xi )\ge g_{1}(x,\xi )\). One simple choice is \(\alpha ^{r} = L\), where L is the Lipschitz constant of \(\nabla _{x}g_{1}(x,\xi )\). Choosing \({\hat{g}}_{1}\) in this way, the assumptions A1–A3 are clearly satisfied. Moreover, the update rule of the SSUM algorithm becomes

Checking the first order optimality condition of (38), we obtain

Rewriting (39) in a recursive form yields

which can be interpreted as the stochastic gradient method (35) with \(\gamma ^{r}=\frac{1}{\sum _{i=1}^{r}\alpha _{i}}\). Notice that the simple constant choice of \(\alpha ^{i}=L\) yields \(\gamma ^{r}=\frac{1}{rL}\), which gives the most popular diminishing step size rule of the SG method.

Remark 9

When \({\mathcal {X}}\) is bounded and using the approximation function in (37), we see that the SSUM algorithm steps become

where \(\varPi _{\mathcal {X}} (\cdot )\) signifies the projection operator to the constraint set \({\mathcal {X}}\). Notice that this update rule is different from the classical SG method as it requires generating the auxiliary iterates \(\{z^r\}\) which may not lie in the feasible set \({\mathcal {X}}\).

It is also worth noting that in the presence of the non-smooth part of the objective function, the SSUM algorithm becomes different from the classical stochastic sub-gradient method [7, 53–55]. To illustrate the ideas, let us consider a simple deterministic nonsmooth function \(g_2(x)\) to be added to the objective function. The resulting optimization problem becomes

Using the approximation introduced in (37), the SSUM update rule can be written as

Although this update rule is similar to the (regularized) dual averaging method [77, 78] for convex problems, its convergence is guaranteed even for the nonconvex nonsmooth objective function under the assumptions of Theorem 1. Moreover, similar to the (regularized) dual averaging method, the steps of the SSUM algorithm are computationally cheap for some special nonsmooth functions. As an example, let us consider the special non-smooth function \(g_2(x) \triangleq \lambda \Vert x\Vert _1\). Setting \(\alpha ^r = L\), the first order optimality condition of (41) yields the following update rule:

where \(\{z^{r+1}\}_{r=1}^{\infty }\) is an auxiliary variable sequence and \(\mathrm{shrink}_{\tau }(z)\) is the soft shrinkage operator defined as

Notice that the algorithm obtained in (42) is different from the existing stochastic sub-gradient algorithm and the stochastic proximal gradient algorithm [73, 79]; furthermore, if the conditions in Theorem 1 is satisfied, its convergence is guaranteed even for nonconvex objective functions.

Notes

The family of functions \(\mathcal {F}\) is uniformly equicontinuous over the set \({\mathcal {X}}\) if for every \(\epsilon >0\), there exists a \(\delta >0\) such that \(|f(x) - f(y)| <\epsilon , \;\forall f \in \mathcal {F}\) and for all \(x ,y \in {\mathcal {X}}\) with \(|x - y| <\delta \).

References

Plambeck, E.L., Fu, B.R., Robinson, S.M., Suri, R.: Sample-path optimization of convex stochastic performance functions. Math. Program. 75(2), 137–176 (1996)

Robinson, S.M.: Analysis of sample-path optimization. Math. Oper. Res. 21(3), 513–528 (1996)

Healy, K., Schruben, L.W.: Retrospective simulation response optimization. In: Proceedings of the 23rd Conference on Winter Simulation, pp. 901–906. IEEE Computer Society (1991)

Rubinstein, R.Y., Shapiro, A.: Optimization of static simulation models by the score function method. Math. Comput. Simul. 32(4), 373–392 (1990)

Rubinstein, R.Y., Shapiro, A.: Discrete Event Systems: Sensitivity Analysis and Stochastic Optimization by the Score Function Method, vol. 346. Wiley, New York (1993)

Shapiro, A.: Monte carlo sampling methods. Handb. Oper. Res. Manag. Sci. 10, 353–426 (2003)

Shapiro, A., Dentcheva, D., Ruszczyński, A.: Lectures on Stochastic Programming: Modeling and Theory, vol. 9. Society for Industrial and Applied Mathematics, Philadelphia (2009)

Kim, S., Pasupathy, R., Henderson, S.: A guide to sample average approximation In: Fu, M.C. (ed.) Handbook of Simulation Optimization, pp. 207–243. Springer, New York (2015)

Razaviyayn, M., Hong, M., Luo, Z.Q.: A unified convergence analysis of block successive minimization methods for non-smooth optimization. arXiv preprint, arXiv:1209.2385 (2012)

Yuille, A.L., Rangarajan, A.: The concave–convex procedure. Neural Comput. 15, 915–936 (2003)

Borman, S.: The expectation maximization algorithm—a short tutorial. Unpublished paper http://ftp.csd.uwo.ca/faculty/olga/Courses/Fall2006/Papers/EM_algorithm.pdf

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 39, 1–38 (1977)

Fristedt, B.E., Gray, L.F.: A Modern Approach to Probability Theory. Birkhuser, Boston (1996)

Dunford, N., Schwartz, J.T.: Linear Operators. Part 1: General Theory. Interscience Publications, New York (1958)

Bastin, F., Cirillo, C., Toint, P.L.: Convergence theory for nonconvex stochastic programming with an application to mixed logit. Math. Program. 108(2–3), 207–234 (2006)

Larsson, E., Jorswieck, E.: Competition versus cooperation on the MISO interference channel. IEEE J. Sel. Areas Commun. 26, 1059–1069 (2008)

Razaviyayn, M., Luo, Z.Q., Tseng, P., Pang, J.S.: A stackelberg game approach to distributed spectrum management. Math. Program. 129, 197–224 (2011)

Bengtsson, M., Ottersten, B.: Handbook of antennas in wireless communications. In: Godara, L.C. (ed.) Optimal and Suboptimal Transmit Beamforming, CRC, Boco Raton (2001)

Shi, C., Berry, R.A., Honig, M.L.: Local interference pricing for distributed beamforming in MIMO networks. In: Military Communications Conference, MILCOM, pp. 1–6 (2009)

Kim, S.J., Giannakis, G.B.: Optimal resource allocation for MIMO ad hoc cognitive radio networks. IEEE Trans. Inf. Theory 57, 3117–3131 (2011)

Shi, Q., Razaviyayn, M., Luo, Z.Q., He, C.: An iteratively weighted MMSE approach to distributed sum-utility maximization for a MIMO interfering broadcast channel. IEEE Trans. Signal Process. 59, 4331–4340 (2011)

Razaviyayn, M., Sanjabi, M., Luo, Z.Q.: Linear transceiver design for interference alignment: complexity and computation. IEEE Trans. Inf. Theory 58, 2896–2910 (2012)

Scutari, G., Facchinei, F., Song, P., Palomar, D.P., Pang, J.S.: Decomposition by partial linearization: parallel optimization of multi-agent systems. arXiv preprint, arXiv:1302.0756 (2013)

Scutari, G., Palomar, D.P., Facchinei, F., Pang, J.S.: Distributed dynamic pricing for mimo interfering multiuser systems: a unified approach. In: 2011 5th International Conference on Network Games, Control and Optimization (NetGCooP), pp. 1–5 (2011)

Hong, M., Luo, Z.Q.: Signal processing and optimal resource allocation for the interference channel. arXiv preprint, arXiv:1206.5144 (2012)

Wajid, I., Eldar, Y.C., Gershman, A.: Robust downlink beamforming using covariance channel state information. In: IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, pp. 2285–2288 (2009)

Vucic, N., Boche, H.: Downlink precoding for multiuser MISO systems with imperfect channel knowledge. In: IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, pp. 3121–3124 (2008)

Song, E., Shi, Q., Sanjabi, M., Sun, R., Luo, Z.Q.: Robust SINR-constrained MISO downlink beamforming: When is semidefinite programming relaxation tight? In: IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, pp. 3096–3099 (2011)

Tajer, A., Prasad, N., Wang, X.: Robust linear precoder design for multi-cell downlink transmission. IEEE Trans. Signal Process. 59, 235–251 (2011)

Shenouda, M., Davidson, T.N.: On the design of linear transceivers for multiuser systems with channel uncertainty. IEEE J. Sel. Areas Commun. 26, 1015–1024 (2008)

Li, W.C., Chang, T.H., Lin, C., Chi, C.Y.: Coordinated beamforming for multiuser miso interference channel under rate outage constraints. IEEE Trans. Signal Process. 61(5), 1087–1103 (2013)

Negro, F., Ghauri, I., Slock, D.: Sum rate maximization in the noisy MIMO interfering broadcast channel with partial CSIT via the expected weighted MSE. In: International Symposium on Wireless Communication Systems, ISWCS, pp. 576–580 (2012)

Razaviyayn, M., Baligh, H., Callard, A., Luo, Z.Q.: Joint transceiver design and user grouping in a MIMO interfering broadcast channel. In: 45th Annual Conference on Information Sciences and Systems (CISS) pp. 1–6 (2011)

Shi, Q., Razaviyayn, M., Luo, Z.Q., He, C.: An iteratively weighted MMSE approach to distributed sum-utility maximization for a MIMO interfering broadcast channel. IEEE Trans. Signal Process. 59, 4331–4340 (2011)

Guo, D., Shamai, S., Verdú, S.: Mutual information and minimum mean-square error in Gaussian channels. IEEE Trans. Inf. Theory 51(4), 1261–1282 (2005)

Sampath, H., Stoica, P., Paulraj, A.: Generalized linear precoder and decoder design for MIMO channels using the weighted MMSE criterion. IEEE Trans. Commun. 49(12), 2198–2206 (2001)

Hong, M., Sun, R., Baligh, H., Luo, Z.Q.: Joint base station clustering and beamformer design for partial coordinated transmission in heterogeneous networks. IEEE J. Sel. Areas Commun. 31(2), 226–240 (2013)

3GPP TR 36.814. In: http://www.3gpp.org/ftp/specs/archive/36_series/36.814/

Yousefian, F., Nedić, A., Shanbhag, U.V.: On stochastic gradient and subgradient methods with adaptive steplength sequences. Automatica 48(1), 56–67 (2012)

Razaviyayn, M., Sanjabi, M., Luo, Z.Q.: A stochastic weighted MMSE approach to sum rate maximization for a MIMO interference channel. In: IEEE 14th Workshop on Signal Processing Advances in Wireless Communications (SPAWC), pp. 325–329 (2013)

Luo, Z.Q., Zhang, S.: Dynamic spectrum management: complexity and duality. IEEE J. Sel. Top. Signal Process. 2(1), 57–73 (2008)

Weeraddana, P.C., Codreanu, M., Latva-aho, M., Ephremides, A., Fischione, C.: Weighted Sum-Rate Maximization in Wireless Networks: A Review. Now Publishers, Hanover (2012)

Christensen, S.S., Agarwal, R., Carvalho, E., Cioffi, J.M.: Weighted sum-rate maximization using weighted MMSE for MIMO-BC beamforming design. IEEE Trans. Wirel. Commun. 7(12), 4792–4799 (2008)

Schmidt, D.A., Shi, C., Berry, R.A., Honig, M.L., Utschick, W.: Minimum mean squared error interference alignment. In: Forty-Third Asilomar Conference on Signals, Systems and Computers, pp. 1106–1110 (2009)

Negro, F., Shenoy, S.P., Ghauri, I., Slock, D.T.: On the MIMO interference channel. In: Information Theory and Applications Workshop (ITA), 2010, pp. 1–9 (2010)

Shin, J., Moon, J.: Weighted sum rate maximizing transceiver design in MIMO interference channel. In: Global Telecommunications Conference (GLOBECOM), pp. 1–5 (2011)

Razaviyayn, M., Baligh, H., Callard, A., Luo, Z.Q.: Joint transceiver design and user grouping in a MIMO interfering broadcast channel. In: 45th Annual Conference on Information Sciences and Systems (CISS), pp. 1–6 (2011)

Aharon, M., Elad, M., Bruckstein, A.: K-SVD: design of dictionaries for sparse representation. Proc. SPARS 5, 9–12 (2005)

Lewicki, M.S., Sejnowski, T.J.: Learning overcomplete representations. Neural Comput. 12(2), 337–365 (2000)

Mairal, J., Bach, F., Ponce, J., Sapiro, G.: Online learning for matrix factorization and sparse coding. J. Mach. Learn. Res. 11, 19–60 (2010)

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena-Scientific, Belmont (1999)

Razaviyayn, M., Tseng, H.W., Luo, Z.Q.: Dictionary learning for sparse representation: Complexity and algorithms. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5247–5251 (2014)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22, 400–407 (1951)

Kiefer, J., Wolfowitz, J.: Stochastic estimation of the maximum of a regression function. Ann. Math. Stat. 23(3), 462–466 (1952)

Nemirovski, A., Juditsky, A., Lan, G., Shapiro, A.: Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 19(4), 1574–1609 (2009)

Koshal, J., Nedić, A., Shanbhag, U.V.: Regularized iterative stochastic approximation methods for stochastic variational inequality problems. IEEE Trans. Autom. Control 58(3), 594–609 (2013)

Nemirovsky, A.S., Yudin, D.B.: Problem Complexity and Method Efficiency in Optimization. Wiley, New York (1983)

Chung, K.L.: On a stochastic approximation method. Ann. Math. Stat. 25, 463–483 (1954)

Ermoliev, Y.: stochastic quasigradient methods and their application to system optimization. Stoch. Int. J. Probab. Stoch. Process. 9(1–2), 1–36 (1983)

Amari, S.: A theory of adaptive pattern classifiers. IEEE Trans. Electron. Comput. 16(3), 299–307 (1967)

Wijnhoven, R., With, P.H.N.D.: Fast training of object detection using stochastic gradient descent. In: Proceedings of IEEE International Conference on Pattern Recognition (ICPR), pp. 424–427 (2010)

Grippo, L.: Convergent on-line algorithms for supervised learning in neural networks. IEEE Trans. Neural Netw. 11(6), 1284–1299 (2000)

Mangasarian, O.L., Solodov, M.V.: Serial and parallel backpropagation convergence via nonmonotone perturbed minimization. Optim. Methods Softw. 4(2), 103–116 (1994)

Luo, Z.Q.: On the convergence of the LMS algorithm with adaptive learning rate for linear feedforward networks. Neural Comput. 3(2), 226–245 (1991)

Luo, Z.Q., Tseng, P.: Analysis of an approximate gradient projection method with applications to the backpropagation algorithm. Optim. Methods Softw. 4(2), 85–101 (1994)

Bottou, L.: Online learning and stochastic approximations. In: On-Line Learning in Neural Networks, vol. 17(9) (1998)

Bertsekas, D.P.: A new class of incremental gradient methods for least squares problems. SIAM J. Optim. 7(4), 913–926 (1997)

Bertsekas, D.P., Tsitsiklis, J.N.: Parallel and Distributed Computation: Numerical Methods, 2nd edn. Athena-Scientific, Belmont (1999)

Tsitsiklis, J., Bertsekas, D.P., Athans, M.: Distributed asynchronous deterministic and stochastic gradient optimization algorithms. IEEE Trans. Autom. Control 31(9), 803–812 (1986)

Bertsekas, D.P.: Distributed asynchronous computation of fixed points. Math. Program. 27(1), 107–120 (1983)

Ermol’ev, Y.M., Norkin, V.I.: Stochastic generalized gradient method for nonconvex nonsmooth stochastic optimization. Cybern. Syst. Anal. 34(2), 196–215 (1998)

Bertsekas, D.P., Tsitsiklis, J.N.: Gradient convergence in gradient methods with errors. SIAM J. Optim. 10(3), 627–642 (2000)

Bertsekas, D.P.: Incremental gradient, subgradient, and proximal methods for convex optimization: a survey. In: Optimization for Machine Learning 2010, pp. 1–38 (2011)

Tseng, P.: An incremental gradient(-projection) method with momentum term and adaptive stepsize rule. SIAM J. Optim. 8(2), 506–531 (1998)

George, A.P., Powell, W.B.: Adaptive stepsizes for recursive estimation with applications in approximate dynamic programming. Mach. Learn. 65(1), 167–198 (2006)

Broadie, M., Cicek, D., Zeevi, A.: General bounds and finite-time improvement for the Kiefer–Wolfowitz stochastic approximation algorithm. Oper. Res. 59(5), 1211–1224 (2011)

Nesterov, Y.: Primal-dual subgradient methods for convex problems. Math. Program. 120(1), 221–259 (2009)

Xiao, L.: Dual averaging methods for regularized stochastic learning and online optimization. J. Mach. Learn. Res. 11, 2543–2596 (2010)

Shalev-Shwartz, S., Tewari, A.: Stochastic methods for \(\ell _1\)-regularized loss minimization. J. Mach. Learn. Res. 12, 1865–1892 (2011)

Fisk, D.L.: Quasi-martingales. Trans. Am. Math. Soc. 120, 369–389 (1965)

Van der Vaart, A.W.: Asymptotic Statistics, vol. 3. Cambridge University Press, Cambridge (2000)

Hewitt, E., Savage, L.J.: Symmetric measures on cartesian products. Trans. Am. Math. Soc. 80, 470–501 (1955)

Bonnans, J.F., Shapiro, A.: Perturbation Analysis of Optimization Problems. Springer, New York (2000)

Acknowledgments

The authors are grateful to Maury Bramson of the University of Minnesota for valuable discussions.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Proof of Lemma 1

First of all, it can be observed that \({\hat{f}}_1^r(x^r) - f_1^r(x^r) = {\hat{f}}^r(x^r) - f^r(x^r)\) according to the definition of the functions \({\hat{f}}(\cdot )\) and \(f(\cdot )\). Therefore it suffices to show that \(\lim _{r\rightarrow \infty } {\hat{f}}^r(x^r) - f^r(x^r)=0,\;\mathrm{almost \;surely}\). The proof of this fact requires the use of quasi martingale convergence theorem [80], much like the convergence proof of online learning algorithms [50, Proposition 3]. In particular, we will show that the sequence \(\{{\hat{f}}^r(x^r)\}_{r=1}^{\infty }\) converges almost surely. Notice that

where the last equality is due to the assumption A1 and the inequality is due to the update rule of the SSUM algorithm. Taking the expectation with respect to the natural history yields

where (44) is due to the assumption A2 and (45) follows from the definition of \(\Vert \cdot \Vert _\infty \). On the other hand, the Donsker theorem (see [50, Lemma 7] and [81, Chapter 19]) implies that there exists a constant k such that

Combining (45) and (46) yields

where \((a)_+ \triangleq \max \{0,a\}\) is the projection to the non-negative orthant. Summing (47) over r, we obtain

where \(M \triangleq \sum _{r=1}^\infty \frac{k}{r^{3/2}}\). The equation (48) combined with the quasi-martingale convergence theorem (see [80] and [50, Theorem 6]) implies that the stochastic process\(\{{\hat{f}}^r(x^r) + \bar{g}\}_{r=1}^{\infty }\) is a quasi-martingale with respect to the natural history \(\{\mathcal {F}^r\}_{r=1}^{\infty }\) and \({\hat{f}}^r(x^r)\) converges. Moreover, we have

Next we use (49) to show that \(\sum _{r=1}^\infty \frac{{\hat{f}}^r(x^r) - f^r(x^r)}{r+1} <\infty ,\; \mathrm{almost\;surely}\). To this end, let us rewrite (43) as

Using the fact that \({\hat{f}}^r(x^r) \ge f^r(x^r),\;\forall \ r\) and summing (50) over all values of r, we have

Notice that the first term in the right hand side is finite due to (49). Hence in order to show \(\sum _{r=1}^\infty \frac{{\hat{f}}^r(x^r) - f^r(x^r)}{r+1}<\infty ,\;\mathrm{almost\;surely}\), it suffices to show that \(\sum _{r=1}^\infty \frac{\Vert f-f^r\Vert _\infty }{r+1} < \infty ,\;\mathrm{almost\;surely}\). To show this, we use the Hewitt-Savage zero-one law; see [82, Theorem 11.3] and [13, Chapter 12, Theorem 19]. Let us define the event

It can be checked that the event \(\mathcal {A}\) is permutable, i.e., any finite permutation of each element of \(\mathcal {A}\) is inside \(\mathcal {A}\); see [82, Theorem 11.3] and [13, Chapter 12, Theorem 19]. Therefore, due to the Hewitt-Savage zero-one law [82], probability of the event \(\mathcal {A}\) is either zero or one. On the other hand, it follows from (46) that there exists \(M'>0\) such that

Using Markov’s inequality, (52) implies that

Hence combining this result with the result of the Hewitt-Savage zero-one law, we obtain \(Pr(\mathcal {A}) =1\); or equivalently

As a result of (51) and (53), we have

On the other hand, it follows from the triangle inequality that

and

where (56) is due to the assumption B3 (with \(\kappa =(K+K') \)); (57) follows from the assumption B6, and (58) will be shown in Lemma 2. Similarly, one can show that

It follows from (55), (58), and (59) that

Let us fix a random realization \(\{\xi ^r\}_{r=1}^\infty \) in the set of probability one for which (54) and (60) hold. Define

Clearly, \(\varpi ^r \ge 0\) and \(\sum _r \frac{\varpi ^r}{r}<\infty \) due to (54). Moreover, it follows from (60) that \(|\varpi ^{r+1}-\varpi ^r| < \frac{\tau }{r}\) for some constant \(\tau >0\). Hence Lemma 3 implies that

which is the desired result. \(\square \)

Lemma 2

\(\Vert x^{r+1} - x^r\Vert = \mathcal {O}(\frac{1}{r}).\)

Proof

The proof of this lemma is similar to the proof of [50, Lemma 1]; see also [83, Proposition 4.32]. First of all, since \(x^r\) is the minimizer of \({\hat{f}}^r(\cdot )\), the first order optimality condition implies

Hence, it follows from the assumption A3 that

On the other hand,

where (62) follows from the fact that \(x^{r+1}\) is the minimizer of \({\hat{f}}^{r+1}(\cdot )\), the second inequality is due to the definitions of \({\hat{f}}^r\) and \({\hat{f}}^{r+1}\), while (63) is the result of the assumptions B3 and B5. Combining (61) and (63) yields the desired result. \(\square \)

Lemma 3

Assume \(\varpi ^r > 0\) and \(\sum _{r=1}^\infty \frac{\varpi ^r}{r} <\infty \). Furthermore, suppose that \(|\varpi ^{r+1}-\varpi ^r| \le {\tau }/{r}\) for all r. Then \(\lim _{r\rightarrow \infty } \varpi ^r = 0\).

Proof

Since \(\sum _{r=1}^{\infty } \frac{\varpi ^r}{r} < \infty \), we have \(\liminf _{r \rightarrow \infty } \varpi ^r = 0\). Now, we prove the result using contradiction. Assume the contrary so that

for some \(\epsilon >0\). Hence there should exist subsequences \(\{m_j\}\) and \(\{n_j\}\) with \(m_j\le n_j<m_{j+1},\forall \ j\) so that

On the other hand, since \(\sum _{r=1}^{\infty } \frac{\varpi ^r}{r} < \infty \), there exists an index \(\bar{r}\) such that

Therefore, for every \(r_0 \ge \bar{r}\) with \(m_j \le r_0 \le n_j-1\), we have

where the equation (69) follows from (65), and (70) is the direct consequence of (67). Hence the triangle inequality implies

for any \(r_0 \ge \bar{r}\), which contradicts (64), implying that

\(\square \)

Rights and permissions

About this article

Cite this article

Razaviyayn, M., Sanjabi, M. & Luo, ZQ. A Stochastic Successive Minimization Method for Nonsmooth Nonconvex Optimization with Applications to Transceiver Design in Wireless Communication Networks. Math. Program. 157, 515–545 (2016). https://doi.org/10.1007/s10107-016-1021-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-016-1021-7