Abstract

We consider two-stage adjustable robust linear optimization problems with uncertain right hand side \(\mathbf {b}\) belonging to a convex and compact uncertainty set \(\mathcal{U}\). We provide an a priori approximation bound on the ratio of the optimal affine (in \(\mathbf {b}\) ) solution to the optimal adjustable solution that depends on two fundamental geometric properties of \(\mathcal{U}\): (a) the “symmetry” and (b) the “simplex dilation factor” of the uncertainty set \(\mathcal{U}\) and provides deeper insight on the power of affine policies for this class of problems. The bound improves upon a priori bounds obtained for robust and affine policies proposed in the literature. We also find that the proposed a priori bound is quite close to a posteriori bounds computed in specific instances of an inventory control problem, illustrating that the proposed bound is informative.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider the following two-stage adaptive robust linear optimization problem, \(\varPi _{Adapt}(\mathcal{U})\) with an uncertain right hand side.

where \(\mathbf {A}\in {\mathbb R}^{m \times n_1}, \mathbf {B}\in {\mathbb R}^{m \times n_2}, \mathbf {c}\in {\mathbb R}^{n_1}_+, \mathbf {d}\in {\mathbb R}^{n_2}_+\), \(\mathcal{U} \subseteq {\mathbb R}^m_+\) is a convex and compact uncertainty set of possible values of the right hand side of the constraints. For any \(\mathbf {b}\in \mathcal{U}\), \(\mathbf {y(b)}\) denotes the value of the second-stage variables in the scenario when the right hand side is \(\mathbf {b}\). Problem (1) models a wide variety of adaptive optimization problems such as the set covering, security-commitment for power generation [12, 13] and inventory control problems (see our reformulation in Sect. 5).

In this paper, we study for Problem (1) the power of affine policies, i.e., solutions \(\mathbf {y(b)}\) that are affine functions of \(\mathbf {b}\). Given that finding the best such policy is tractable, affine policies are widely used to solve multi-stage dynamic optimization problems. While it has been observed empirically that these policies perform well in practice, to the best of our knowledge, there are no theoretical performance bounds for affine policies in general.

Hardness. The problem \(\varPi _{Adapt}(\mathcal{U})\) is intractable in general. Feige et al. [14] prove that a special case of Problem (1) can not be approximated within a factor better than \(O(\log m)\) in polynomial time unless \(\mathsf{NP} \subseteq \mathsf{TIME}(2^{O(\sqrt{n})})\), where \(n\) refers to the problem input size. However, an exact or an approximate solution to Problem (1) can be computed efficiently in certain special cases.

If \(\mathcal{U}\) is a polytope with a small (polynomial) number of extreme points, Problem (1) can be solved efficiently. Instead of considering the constraint \(\mathbf {Ax} + \mathbf {B y(b)} \ge \mathbf {b}\) for all \(\mathbf {b}\in \mathcal{U}\), it suffices to consider the constraint only for all the extreme points of \(\mathcal{U}\). Thus, the resulting expanded formulation of Problem (1) has only a small (polynomial) number of variables and constraints which can be solved efficiently. Dhamdhere et al. [13] consider Problem (1) with \(\mathbf {A} =\mathbf {B}\). In this case the problem can model the set covering, Steiner tree and facility location problems. In this case, and when the set \(\mathcal{U}\) has a small number of extreme points, Dhamdhere et al. [13] give approximation algorithms with similar performance bounds as the deterministic versions. Feige et al. [14] extend to a special case of the uncertainty set with an exponential number of extreme points and give a polylogarithmic approximation for the set covering problem in this setting. Khandekar et al. [18] consider a similar uncertainty set with an exponential number of extreme points as [14] and give constant factor approximations for several network design problems such as the Steiner tree and uncapacitated facility location problems. In these papers the algorithm and the analysis is very specific to the specific problem addressed and does not provide insights for a tractable solution for the general two-stage Problem (1).

Approximability. Given the intractability of \(\varPi _{Adapt}(\mathcal{U})\), it is natural to consider simplifications of \(\varPi _{Adapt}(\mathcal{U})\). A natural approximation is to consider solutions that are non-adaptive – that is \(\mathbf {y(b)}\)=\(\mathbf {y}\). In this case, Problem \(\varPi _{Adapt}(\mathcal{U})\) reduces to the robust optimization problem

Problem (2) is solvable efficiently. Bertsimas et al. [11], generalizing earlier work in [9], provide a bound for the performance of robust solutions which only depends on the geometry of the uncertainty set. In particular, for a general convex, compact and full-dimensional uncertainty set \(\mathcal{U}\subseteq {\mathbb R}^m_+\), they provide the following bound for two-stage adaptive optimization problems:

where \(\rho \) and \(s\) are the translation factor and the symmetry of the uncertainty set \(\mathcal{U}\) which described in Sect. 2.

Affine Policies. Under this class of policies, \(\mathbf {y(b)}=\mathbf {Pb+q}\), that is the second stage decisions depend linearly on \(\mathbf {b}\):

Affine solutions were introduced in the context of stochastic optimization in [23] and then later in robust optimization in [5] and also extended to linear systems theory [3, 4]. Affine policies have also been considered extensively in control theory of dynamic systems (see [2, 6, 7, 15, 21, 24] and the references therein). In all these papers, the authors show how to reformulate the multi-stage linear optimization problem such that an optimal affine policy can be computed efficiently by solving convex optimization problems such as linear, quadratic, conic and semi-definite. Goulart et al. [15] first consider the problem of theoretically analyzing the properties of such policies and show that, under suitable conditions, the affine policy has certain desirable properties such as stability and robust invariance. There are two complementary approaches for studying the performance of affine policies: (a) a priori methods which evaluate the worst-case approximation ratio of affine policies over a class of instances and (b) a posteriori methods that estimate the approximation error for each problem instance individually. The bound we propose in this paper is an a priori bound. There have been various studies in the literature for developing a posteriori bounds to evaluate the performance of affine policies for particular instances [15, 16, 25–27]. Kuhn et al. [19] consider one-stage and multi-stage stochastic optimization problems, give efficient algorithms to compute the primal and dual approximations using affine policies, and determine a posteriori performance bounds for affine policies on an instance by instance basis in contrast to the a priori performance bounds we derive in this paper.

Bertsimas and Goyal [10] show that the best affine policy can be \(\varOmega (m^{1/2-\delta })\) times the optimal cost of a fully-adaptable two-stage solution for \(\varPi _{Adapt}(\mathcal{U})\) for any \(\delta >0\). Furthermore, for the case \(\mathbf {A} \in {\mathbb R}^{n_1\times m}_+\), they show that the worst-case cost of an optimal affine policy for \(\varPi _{Adapt}(\mathcal{U})\) is \(O(\sqrt{m})\) times the worst-case cost of an optimal fully-adaptable two-stage solution. However, unlike the bounds in [11], these bounds do not depend on \(\mathcal{U}\).

Our main contribution in this paper is a new approximation bound on the power of affine policies relative to optimal adaptive policies that depends on fundamental geometric properties of \(\mathcal{U}\) and offers deeper insight on the power of affine policies. Specifically, we show that

where \(\lambda \), \(\rho \) and \(s\) are the simplex dilation factor, the translation factor and the symmetry of the uncertainty set \(\mathcal{U}\) described in Sect. 2. We also show that for many interesting uncertainty sets this new bound improves the bounds discussed in [10]. The bound demonstrates theoretically that the improvement on the performance of affine policies compared to robust policies can be significant. We further provide computational evidence in the context of an inventory control problem that shows that the geometric a priori bound we obtain in this paper is quite close to the a posteriori bounds that we get for a random instance of the inventory control problem. This suggests that our geometric a priori bound is indeed informative.

The structure of the paper is as follows. In the next section, we introduce the geometric properties of convex sets we will utilize and in Sect. 3, we state and prove the main approximation bound. In Sect. 4, we compute the bounds for specific examples of \(\mathcal{U}\) and compare it with earlier bounds illustrated in [11]. Finally, in Sect. 5, we demonstrate the effectiveness of the proposed bound in the context of an inventory control problem.

2 Geometric properties of convex sets

In this section, we review several geometric properties of convex sets \(\mathcal{U}\) that we use in the paper.

2.1 The symmetry of a convex set

Given a nonempty compact convex set \(\mathcal{U}\subset \mathbb {R}^m\) and a point \(\mathbf { b}\in \mathcal{U}\), we define the symmetry of \(\mathbf { b}\) with respect to \(\mathcal{U}\) as follows:

In order to develop geometric intuition on \(\mathbf {sym}(\mathbf { b},\mathcal{U})\), we first show the following result. For this discussion, we assume that \(\mathcal{U}\) is full-dimensional.

Lemma 1

Let \(\mathcal{L}\) be the set of lines in \({\mathbb R}^m\) passing through \(\mathbf { b}\). For any line \(\ell \in \mathcal{L}\), let \(\mathbf { b}'_{\ell }\) and \(\mathbf { b}''_{\ell }\) be the points of intersection of line \(\ell \) with the boundary of \(\mathcal{U}\), denoted \(\delta (\mathcal{U})\) (these exist as \(\mathcal{U}\) is full-dimensional and compact). Then,

-

a)

\({\mathbf {sym}(\mathbf { b},\mathcal{U}) \le \min \left\{ \frac{\Vert \mathbf { b} - \mathbf {b}''_\mathbf {\ell } \Vert _2}{\Vert \mathbf { b} - \mathbf {b}'_\mathbf {\ell }\Vert _2}, \frac{\Vert \mathbf { b} - \mathbf {b}'_\mathbf {\ell } \Vert _2}{\Vert \mathbf { b} - \mathbf {b}''_\mathbf {\ell }\Vert _2}\right\} }\),

-

b)

\( {\mathbf {sym}(\mathbf { b},\mathcal{U}) = \min \limits _{\ell \in \mathcal{L}} {\min }\left\{ \frac{\Vert \mathbf { b} - \mathbf {b}''_\mathbf {\ell } \Vert _2}{\Vert \mathbf { b} - \mathbf {b}'_\mathbf {\ell }\Vert _2},\frac{\Vert \mathbf { b} - \mathbf {b}'_\mathbf {\ell } \Vert _2}{\Vert \mathbf { b} - \mathbf {b}''_\mathbf {\ell }\Vert _2}\right\} }\).

The symmetry of set \(\mathcal{U}\) is defined as

An optimizer \(\mathbf { b}_0\) of (5) is called a point of symmetry of \(\mathcal{U}\). This definition of symmetry can be traced back to [22] and is the first and the most widely used symmetry measure. We refer to [1] for a broad investigation of the properties of the symmetry measure defined in (4) and (5). Note that the above definition generalizes the notion of perfect symmetry considered by [9]. In [9], the authors define that a set \(\mathcal{U}\) is symmetric if there exists \(\mathbf { b}_0\in \mathcal{U}\) such that, for any \(\mathbf { z}\in {\mathbb R}^m\), \((\mathbf { b}_0+\mathbf { z}) \in \mathcal{U} \Leftrightarrow (\mathbf { b}_0-\mathbf { z})\in \mathcal{U}\). Equivalently, \(\mathbf { b} \in \mathcal{U} \Leftrightarrow (2\mathbf { b}_0 -\mathbf { b}) \in \mathcal{U}\). According to the definition in (5), \({\mathbf {sym}}(\mathcal{U}) =1\) for such a set.

Lemma 2

[1] For any nonempty convex compact set \(\mathcal{U}\subseteq {\mathbb R}^m\), the symmetry of \(\mathcal{U}\) satisfies

The symmetry of a convex set is at most \(1\), which is achieved for a perfectly symmetric set; and at least \(1/m\), which is achieved by a standard simplex defined as \(\varDelta :=\{\mathbf { x}\in \mathbb {R}^m_+\;|\; \sum _{i=1}^m x_i\le 1\}\). The lower bound follows from the Löwner-John Theorem (see [1]).

2.2 The translation factor of a convex set

For a convex compact set \(\mathcal {U}\subset {\mathbb R}^m_+\), we define the translation factor of \(\mathbf { b}\in \mathcal{U}\) with respect to \(\mathcal{U}\), as follows:

In other words, \(\mathcal {U}':=\mathcal {U}-(1-\rho )\mathbf { b}\) is the maximum possible translation of \(\mathcal {U}\) in the direction \(-\mathbf { b}\) such that \(\mathcal{U}' \subset {\mathbb R}^m_+\). Figure 1 depicts the geometry of the translation factor \(\rho \).

Lemma 3

[11] Let \(\mathcal{U}\subset \mathbb {R}^m_+\) be a convex and compact set such that \(\mathbf {b}_0\) is the point of symmetry of \(\mathcal{U}\). Let \(s:=\mathbf {sym}(\mathcal{U}) = \mathbf {sym}(\mathbf {b}_0, \mathcal{U})\) and \(\rho := \rho (\mathcal{U}) = \rho (\mathbf {b}_0, \mathcal{U})\). Then,

2.3 The simplex dilation factor of a convex set

In this section, we introduce a new geometric property of a convex set that is critical in bounding the performance of affine policies. For any convex set \(\mathcal{U}\) we associate a constant \(\lambda (\mathcal U)\) in the following way.

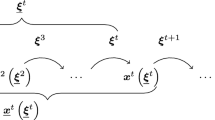

In dimension \(m\), a simplex \(S\) is a convex hull of \(m+1\) affinely independent points. Bertsimas and Goyal [10] show that the affine policy is optimal for two stage adaptive optimization problems when the uncertainty set is a simplex. For any convex set \(\mathcal{U} \in \mathbb {R}^m\), we define the simplex dilation factor \(\lambda :=\lambda (\mathcal {U})\) to be the smallest number such that there is a simplex \(S\) containing the symmetry point \(\mathbf { b_0}\) and

Figure 2 depicts the definition of \(\lambda \) and its calculation. We next provide some examples of calculation of \(\lambda \).

Lemma 4

For a unit ball \(\mathcal{U}:= \{\mathbf { b}: \Vert (\mathbf { b}-\overline{\mathbf { b}})\Vert _2\le 1\} \in \mathbb {R}^m\), \(\lambda (\mathcal{U})=m\).

Proof

Without loss of generality, let \(\mathcal {U}\) be a unit ball in \(\mathbb {R}^m\) (centered at the origin) and \(S\) be a regular (the distance of every two vertices is the same) maximum volume simplex inside the unit ball \(\mathcal {U}\). Let \(\mathbf {v_1},\ldots , \mathbf { v_{m+1}}\) be vertices of such a simplex \(S\). Then, we have:

-

1.

\({\Vert \mathbf { v_i}\Vert _2}=1\) for \(i=1,\ldots ,m+1\).

-

2.

\({\mathbf { v_i} \cdot \mathbf {v_j}}= -\frac{1}{m}\) for \(i \ne j\).

We next show that \( S \subset \mathcal{{U}} \subset m\cdot S\). We first claim that for any point \(\mathbf { w}\) in the boundary of \(S\), we have \({ \Vert \mathbf { w}\Vert _2}\ge \frac{1}{m}\). Since \(\mathbf { w}\) is on the boundary of \(S\), it is on one of its facets. Without loss of generality, we can assume that \(\mathbf { w}\) is on the facet containing the vertices \(\mathbf { v_1}, \ldots ,\mathbf { v_m}\). Therefore,

We then have

implying that \({ \Vert \mathbf { w}\Vert _2}\ge \frac{1}{m}\) for any point \(\mathbf { w}\) in the boundary of \(S\). Thus, a ball of radius \(\frac{1}{m}\) is contained in \(S\), i.e., \(\frac{\mathcal{U}}{m} \subset S\). So, we have:

Therefore, \(\lambda (\mathcal{U}) \le m\).

On the other hand, consider

We then have

This implies that \(\Vert \hat{\mathbf {w}}\Vert _2=\frac{1}{m}\), and so \(m\) is the smallest number \(\lambda (\mathcal U)\) such that

Therefore, \(\lambda (\mathcal{U})=m\). \(\square \)

We proceed with calculating the simplex dilation factor for different sets. We omit the proofs as they are not particularly illuminating.

Lemma 5

The simplex dilation factors for the following sets \(\mathcal{U}\) are:

-

1.

For \(\mathcal{U}_1:=\{\mathbf { b}: \Vert \mathbf { b}\Vert _p\le 1, \mathbf { b}\ge \mathbf { 0}\}\), \(\lambda (\mathcal{U} _1)=m^{\frac{p-1}{p}}\).

-

2.

For \(\mathcal{U}_2:=\{\mathbf { b}: \Vert \mathbf { E}(\mathbf { b}-\overline{\mathbf { b}})\Vert _2\le 1\}\subset \mathbb {R}^m_+\) where \(\mathbf {E}\) is invertible, \(\lambda (\mathcal{U}_2)=m\).

-

3.

For \(\mathcal{U}_3:=\left\{ \mathbf { b}\in [0,1]^m\;\biggl |\; \sum _{i=1}^m {b}_i = k\right\} , \) for \(k\in \{ 1,\dots ,m \}\), \(\lambda (\mathcal{U}_3) \le k\).

Finally we provide bounds for \(\lambda (\mathcal{U})\).

Theorem 1

[20] Let \(\mathcal{U}\) be a convex body in \( {\mathbb {R}}^m\) and let \(S\) be a simplex of maximal volume contained in \(\mathcal{U}\) and assume that the centroid of \(S\) is at the origin. Then \(S \subset \mathcal{U} \subset (m+2)\cdot S, \) i.e., \(\lambda (\mathcal{U})\le m+2\).

3 On the performance of affine policies

In this section, we state and prove a new bound on the suboptimality of affine policies. We first state a result from the literature that affine policies are optimal when the uncertainty set is a simplex.

Theorem 2

[10] Consider the problem \(\varPi _{Adapt}(\mathcal{{U}})\) such that \(\mathcal{{U}}\) is a simplex, i.e., \(\mathcal{{U}}=conv\{\mathbf { b^1},\ldots , \mathbf { b^{m+1}}\}\), where \(\mathbf { b^j} \in {\mathbb {R}}^m\) for all \( j = 1,\ldots , m\) such that \( {\mathbf { b^1}},\ldots , \mathbf { b^{m+1}}\) are affinely independent. Then, there is an optimal two-stage solution \(\mathbf { x}, \mathbf { y(b)}\) for all \(\mathbf {b} \in \mathcal{U}\) such that for all \(\mathbf { b} \in \mathcal{U}\), \(\mathbf { y(b) }= \mathbf { Pb} +\mathbf { q}\), where \(\mathbf {P}\) is a real matrix of dimension \(n_2\) and \(m\).

We next present a lemma that prepares the ground for our main result.

Lemma 6

Consider a convex and compact uncertainty set \(\mathcal{U}\), with a symmetry point \(\mathbf {b_0}\), symmetry \(s\), translation factor \(\rho \) and simplex dilation factor \(\lambda \). For \(\alpha = {\frac{{\lambda }(1+\frac{\rho }{s})}{\lambda +\frac{\rho }{s}}}\) we have

Proof

Let \(\alpha ={\frac{{\lambda }(1+\frac{\rho }{s})}{\lambda +\frac{\rho }{s}}},\) leading to

Applying Lemma 3, and substituting the above expression for \({1+\frac{\rho }{s}}\), we obtain that for all \(\mathbf {b}\in \mathcal{U}\),

leading to

\(\square \)

We are now ready to prove the main result of the paper.

Theorem 3

Consider a convex and compact uncertainty set \(\mathcal{U}\), with a symmetry point \(\mathbf {b_0}\), symmetry \(s\), translation factor \(\rho \) and simplex dilation factor \(\lambda \). Then,

Proof

First we show that when the uncertainty set is the simplex \(S \subset \mathcal{U}\), there is a two-stage affine optimal solution \(\mathbf { x}\) and \(\mathbf { P(b-b_0)+\mathbf {q}}\), where \(\mathbf { q} \ge \mathbf { 0}\).

Applying Theorem 2, we know that when the uncertainty set is the simplex \(S \subset \mathcal{U}\), there is an optimal affine solution \(\mathbf { x}\) and \(\mathbf {P(b)}+{\bar{\mathbf{q}}}\). We define \(\mathbf {{q}}:= {\bar{\mathbf{q}}}+\mathbf {P(b_0)}\). Therefore \(\mathbf { x}\) and \(\mathbf {y(b)}=\mathbf { P(b-b_0)+\mathbf {q}}\) is an optimal affine solution for the simplex \(S \subset \mathcal{U}\). By the properties of \(\varPi _{Adapt}(\mathcal{{U}})\), \(\mathbf {y(b_0)}=\mathbf { P(b_0-b_0)+\mathbf {q}}=\mathbf {q} \ge \mathbf {0}\). Therefore, there is a two-stage optimal affine solution \(\mathbf { x}\) and \(\mathbf { P(b-b_0)+\mathbf {q}},\) where \(\mathbf {q}\ge \mathbf{0}\).

Starting with the solutions \(\mathbf { x}\) and \(\mathbf { P(b-b_0)+\mathbf {q}}\) and letting \({\alpha = \frac{{\lambda }(1+\frac{\rho }{s})}{\lambda +\frac{\rho }{s}}}\), we define the following affine solution for the uncertainty set \(\mathcal{U}\) as follows:

and

We first show that \(\hat{\mathbf {x}}\) and \(\hat{\mathbf {y}}\mathbf {(b)}\) is a feasible affine solution for \(\varPi _{Adapt}(\mathcal{U})\).

-

1.

\(\mathbf {x} \ge \mathbf {0}\), therefore we have \(\hat{\mathbf {x}}=\alpha \mathbf {x} \ge \mathbf {0}\).

-

2.

Now we show that

$$\begin{aligned} \hat{\mathbf {y}}(\mathbf{b})=\frac{\alpha }{\lambda }\mathbf {P(b-b_0)}+ \alpha \mathbf {q} \ge \mathbf {0}. \end{aligned}$$Considering the definition of the simplex dilation factor, we know that

$$\begin{aligned} \mathcal{{U}} \subset \lambda \cdot (S - \mathbf { b_0}) +\mathbf { b_0}. \end{aligned}$$Therefore, for any \(\mathbf {b} \in \mathcal{{U}}\), \(\hat{\mathbf {b}} = {\frac{\mathbf {b}-\mathbf {b_0}}{\lambda }+ \mathbf {b_0}} \in S\). We know that \(\mathbf {x}\) and \(\mathbf {P}({\hat{\mathbf {b}}-\mathbf {b_0)}}+\mathbf {q}\) is a feasible solution for \(\varPi _{Adapt}(S)\). So,

$$\begin{aligned}&\mathbf {P}({\hat{\mathbf {b}}-\mathbf {b_0})}+\mathbf {q}\ge \mathbf {0} \\&\quad \Rightarrow \frac{1}{\lambda }\mathbf {P({b-b_0)}}+\mathbf {q}\ge \mathbf {0}\\&\quad \Rightarrow \alpha \left( \frac{1}{\lambda }\mathbf {P({b-b_0)}}+\mathbf {q} \right) \ge \mathbf {0}\\&\quad \Rightarrow \hat{\mathbf {y}}(\mathbf {b})=\frac{\alpha }{\lambda }\mathbf {P(b-b_0)}+ \alpha \mathbf {q} \ge \mathbf {0}. \end{aligned}$$ -

3.

Now, we show that

$$\begin{aligned} \mathbf {A}{\hat{\mathbf {x}}} + \mathbf {B} \hat{\mathbf {y}}\mathbf {(b)} \ge \mathbf {b},\; \forall \mathbf {b} \in \mathcal{{U}}. \end{aligned}$$Again, considering the definition of the simplex dilation factor, we know that

$$\begin{aligned} \mathcal{{U}} \subset \lambda \cdot (S - \mathbf { b_0}) +\mathbf { b_0}. \end{aligned}$$Therefore, for any \(\mathbf {b} \in \mathcal{{U}}\), \(\hat{\mathbf {b}} = {\frac{\mathbf {b}-\mathbf {b_0}}{\lambda }+ \mathbf {b_0}} \in S\). Therefore, \(\mathbf {x}\) and \(\mathbf {P}({\hat{\mathbf {b}}-\mathbf {b_0})}+\mathbf {q}\) is a feasible solution for \(\varPi _{Adapt}(S)\).

$$\begin{aligned}&\mathbf { A x}+ \mathbf {B} ({\mathbf {P}({\hat{\mathbf {b}}-\mathbf {b_0})}}+\mathbf { q} ) \ge \hat{\mathbf {b}}\\&\quad \Rightarrow \mathbf { A x}+ \mathbf {B} \left( \frac{1}{\lambda }{\mathbf { P(b-b_0)}}+\mathbf { q}\right) \ge \frac{\mathbf {b}-\mathbf {b_0}}{\lambda }+ \mathbf {b_0} (\text { by multiplying with } \alpha )\\&\quad \Rightarrow \alpha \left( \mathbf { A x}+ \mathbf {B} \left( \frac{1}{\lambda }{\mathbf { P(b-b_0)}}+\mathbf { q}\right) \right) \ge \frac{\alpha }{\lambda }{({\mathbf {b}-\mathbf {b_0})}+ \alpha \mathbf {b_0}}\\&\quad \Rightarrow \mathbf { A}\alpha \mathbf {x}+ \mathbf {B} \left( \frac{\alpha }{\lambda }{\mathbf { P(b-b_0)}}+\alpha \mathbf {q}\right) \ge \frac{\alpha }{\lambda }{({\mathbf {b}-\mathbf {b_0})}+ \alpha \mathbf {b_0}} \\&\qquad \text {By the definition of }\hat{\mathbf {x}}\text { and } \hat{\mathbf {y}}(\mathbf {b}) \text { and Lemma 6}\\&\quad \Rightarrow {\mathbf {A} \hat{\mathbf {x}} + \mathbf {B} \hat{\mathbf {y}}\mathbf {(b)}} \ge {\mathbf {b}}. \end{aligned}$$

It only remains to show

Recall that for any \(\mathbf {b} \in \mathcal{{U}}\), \(\hat{\mathbf {b}} = {\frac{\mathbf {b}-\mathbf {b_0}}{\lambda }+ \mathbf {b_0}} \in S\).

\(\square \)

4 On the power of the new bound

In this section, we compare the bound obtained in this paper with the bound in [11] for a variety of uncertainty sets. We first introduce two widely used uncertainty sets.

Budgeted uncertainty set. The budgeted uncertainty set is defined as,

[11] show that

Demand uncertainty set. We define the following set,

Such a set can model the demand uncertainty where \(\mathbf {b}\) is the demand of \(m\) products; \(\mu \) and \(\varGamma \) are the center and the span of the uncertain range.

The set \(\mathbf {DU}\) has different symmetry properties, depending on the relation between \(\mu \) and \(\varGamma \). If \(\mu \ge \varGamma \), the set \(\mathbf {DU}\) is in fact symmetric. Intuitively, \(\mathbf {DU}\) is the intersection of an \(L_{\infty }\) ball centered at \(\mu \mathbf {e}\) with \((2^m-2m)\) halfspaces that are symmetric with respect to \(\mu \mathbf {e}\). If \(\mu <\varGamma \), \(\mathbf {DU}\) is not symmetric any more. [11] show that

Proposition 1

Assume the uncertainty set is \(\mathbf {DU}\).

-

1.

If \(\mu \ge \varGamma \), then

$$\begin{aligned} \mathbf {sym}(\mathbf {DU})=1,\quad \mathbf {b}_\mathbf {0}(\mathbf {DU})=\mu \mathbf {e}. \end{aligned}$$(10) -

2.

If \({\frac{1}{\sqrt{m}}\varGamma <\mu < \varGamma }\), then

$$\begin{aligned} \mathbf {sym}(\mathbf {DU})=\frac{\sqrt{m}\mu + \varGamma }{(1+\sqrt{m})\varGamma },\quad \mathbf {b}_\mathbf {0}(\mathbf {DU}) = \frac{(\sqrt{m}\mu + \varGamma )(\mu +\varGamma )}{\sqrt{m}\mu +(2+\sqrt{m})\varGamma }\mathbf {e}. \end{aligned}$$(11) -

3.

If \({0\le \mu \le \frac{1}{\sqrt{m}}\varGamma }\), then,

$$\begin{aligned} \mathbf {sym}(\mathbf {DU})=\frac{\sqrt{m}\mu + \varGamma }{\sqrt{m}(\mu +\varGamma )},\quad \mathbf {b}_\mathbf {0}(\mathbf {DU}) = \frac{(\sqrt{m}\mu + \varGamma )(\mu +\varGamma )}{2\sqrt{m}\mu +(1+\sqrt{m})\varGamma }\mathbf {e}. \end{aligned}$$(12)

Define \(\mathbf{conv}\{ \varDelta _1,\{ \mathbf{e} \}\}\) to be the convex hull of the standard \(m\)-simplex \(\varDelta _1:=\{ \mathbf{b}\in \mathbb {R}^m\ |\ \mathbf{e}^T\mathbf{b}\le 1,\mathbf{b}\ge \mathbf{0} \}\) and a point \(\mathbf{e}:={\begin{bmatrix}1\\\vdots \\1\end{bmatrix}}\).

In Table 1, we calculate the symmetry and simplex dilation factor for several uncertainty sets.

In Table 2, we compare the proposed bound on the suboptimality of affine policies to the bound on the suboptimality of robust policies for \(\varPi _{Adapt}(\mathcal{U})\), which were obtained in [11]. In the following tables \(k\) is an integer. In Table 2, we define Eqs. (13), (14) and (15):

In Table 3, we calculate the ratios \(\frac{z_{\mathrm{Aff }}}{z_{Adapt}}\Big /\frac{z_{Rob}}{z_{Adapt}}\), which demonstrates that the improvement of the performance of the affine solution compared to the robust solution can be significant.

As illustrated in Table 3, the performance of the affine policy is significantly better than the robust solution for \(\varPi _{Adapt}(\mathcal{U})\) for many widely used uncertainty sets \(\mathcal{U}\). This includes the budgeted uncertainty set \(\varDelta _k\), the demand uncertainty set \(\mathbf {DU}(\mu ,\varGamma )\), \(\mathbf {conv}\{\varDelta _1,\{\mathbf { e}\}\}\) and \(\{\mathbf { B}\in \mathbb {S}^m_+\;|\; \mathbf { I}\bullet \mathbf { B}\le 1\}\).

5 Comparison with a posteriori bounds

In this numerical study, we consider a multi item two-stage inventory control model similar to the one discussed in [8]. The computational methods for solving the optimization problem and calculating the affine policy performance have been described in [17] and [19]. All of our numerical results are carried out using the IBM ILOG CPLEX 12.5 optimization package.

The inventory problem can be described as follows. At the beginning of the second period, the decision maker observes all product demands \(\mathbf {b}={\begin{bmatrix}b_1\\\vdots \\b_m\end{bmatrix}}\in \mathcal {U}\). Each product demand can be served by either placing an order \(x_i\), with unit cost \(c_{x_i}\) in the first period, which will be delivered at the beginning of the second period, or by placing an order \(z_i(\mathbf {b})\) at the beginning of the second period which has a more expensive unit cost \( c_{x_i} < c_{z_i}\) and it is delivered immediately. If the ordered quantity is greater than the demand, the excess units are stored in a warehouse, incurring a unit holding cost \(c_{h_i}\). If there is a shortfall in the available quantity, then the orders are backlogged incurring a unit cost \(c_{t_i}\). The level of available inventory for each item is given by \(I_i( \mathbf {b})\). The decision maker wishes to determine the ordering levels for all products \(x_i\) and \(z_i(\mathbf {b})\) that minimize the total ordering, backlogging and holding costs. The problem can be formulated as the following two-stage adaptive optimization problem.

We define \(\mathcal {U}^* :=\bigcup _{\mathbf {b} \in \mathcal {U}} {\begin{bmatrix}\mathbf {b}\\\mathbf {b}\\ \mathbf {0}_m\end{bmatrix}} \) where \(\mathbf {0}_m:= {\left. \begin{bmatrix} 0\\ \vdots \\ 0\end{bmatrix}\right\} } \, {\small m}\). So \(\bar{\mathbf {b}}= {\begin{bmatrix} \mathbf {b}\\ \mathbf {b}\\ \mathbf {0}_m \end{bmatrix}} \in \mathcal {U}^*\) if and only if \(\mathbf {b} \in \mathcal {U}\).

Lemma 7

The inventory control problem (16) can be reformulated to the standard form of Problem (1) as follows:

Proof

To bring the inventory control problem (16) into the standard form of Problem (17), we provide a reformulation with the following steps. We start with Problem (16).

-

(a)

First, \(\max \{c_{t_i}I_i(\mathbf{b}), c_{h_i}I_i(\mathbf{b}) \} \) can be replaced by introducing auxiliary decision variables \(o_i(\mathbf{b})\), serving as over-estimators for the max functions. Therefore, the objective function can be replaced with

$$\begin{aligned}&\min \sum _{i=1}^{m}c_{x_i}x_i + \max _{\mathbf{b}\in \mathcal {U}}\left( {\sum _{i=1}^{m} c_{z_i} z_i(\mathbf{b})}+\sum _{i=1}^{m} o_i(\mathbf{b})\right) . \end{aligned}$$and the following set of linear constraints are added to Problem (16)

$$\begin{aligned}&o_i(\mathbf{b}) \ge c_{t_i}I_i(\mathbf{b})\\&o_i(\mathbf{b}) \ge c_{h_i}I_i(\mathbf{b})\nonumber \\&o_i \ge 0.\nonumber \end{aligned}$$(18) -

(b)

Second, since for \(i=1,\ldots ,m\), \(c_{t_i}\) is negative and \(c_{h_i}\) is positive, we replace Constraints (18) with the following

$$\begin{aligned} {o_i(\mathbf{b})\over c_{h_i}} - x_i-z_i(\mathbf{b})&\ge -b_i\\ -{o_i(\mathbf{b})\over c_{t_i}} + x_i+z_i(\mathbf{b})&\ge b_i\nonumber \\ o_i&\ge 0.\nonumber \end{aligned}$$(19) -

(c)

Third, we introduce new auxiliary decision variables \(s_1(\mathbf{b}),\dots ,s_m(\mathbf{b})\) and we replace Constraints (19) with the following. Since \(s_1(\mathbf{b}),\dots ,s_m(\mathbf{b})\) do not appear in the objective function, the optimal value of the optimization problem will remain the same.

$$\begin{aligned} {o_i(\mathbf{b})\over {2c_{h_i}}} -\frac{ x_i}{2}-\frac{z_i(\mathbf{b})}{2}&\ge -\frac{b_i}{2}\\ -{o_i(\mathbf{b})\over c_{t_i}} + x_i+z_i(\mathbf{b})&\ge b_i\nonumber \\ 2 s_i(\mathbf{b}) - {x_i} - z_i(\mathbf{b})&\ge b_i\nonumber \\ o_i, s_i&\ge 0.\nonumber \end{aligned}$$(20) -

(d)

In the last step, we replace Constraints (20) with Constraints (21).

$$\begin{aligned} {o_i(\mathbf{b})\over {2c_{h_i}}} -{ x_i} + s_i(\mathbf{b}) - z_i(\mathbf{b})&\ge 0\\ -{o_i(\mathbf{b})\over c_{t_i}} + x_i+z_i(\mathbf{b})&\ge b_i\nonumber \\ 2 s_i(\mathbf{b}) -x_i - z_i(\mathbf{b})&\ge b_i\nonumber \\ o_i, s_i&\ge 0.\nonumber \end{aligned}$$(21)It is clear that the optimal value of the optimization problem (16) do not change during the steps \((a)\), \((b)\) and \((c)\). For step \((d)\), every \(o_i(\mathbf{b}), s_i(\mathbf{b}), x_i, z_i(\mathbf{b}) \) which satisfy Constraints (20), also satisfy Constraints (21). In addition, we can always find \(o_i(\hat{\mathbf{b}}), s_i(\hat{\mathbf{b}}), x_i, z_i(\hat{\mathbf{b}}) \) satisfy Constraints (21) and take the optimal value and also satisfy Constraints (20). So replacing Constraints (20) with Constraints (21) does not change the optimal value of the optimization problem. Moreover, since \( \bar{\mathbf{b}}= {\begin{bmatrix}\mathbf {b}\\\mathbf {b}\\ \mathbf {0}_m\end{bmatrix}} \in \mathcal {U}^*\) if and only if \(\mathbf {b} \in \mathcal {U}\). We can define \(s_i(\bar{\mathbf{b}}):=s_i(\mathbf{b})\), \(o_i(\bar{\mathbf{b}}):=o_i(\mathbf{b})\) and \(z_i(\bar{\mathbf{b}}):=z_i(\mathbf{b})\) and reformulate Problems (16), (17). \(\square \)

For our computational experiments we randomly generate instances of Problem (17) for various uncertainty sets, and compare the a posteriori bounds for the optimal affine policy with our geometric a priori bound. The parameters are randomly chosen using a uniform distribution from the following sets: advanced and instant ordering costs are chosen from \(c_{x_i} \in [0, 5]\) and \( c_{z_i} \in [0, 10]\), respectively, such that \(c_{x_i} < c_{z_i}\). Backlogging and holding costs are elements of \(c_{t_i} \in [-10,0]\) and \(c_{h_i} \in [0,5]\), respectively.

We consider \(\mathbf {DU}^*(\mu , \varGamma ,m)\), where

Also we consider \(\varDelta ^*_{(k,m)}\), where

Following the definition of symmetry and simplex dilation factor, we have \( \mathbf {sym}(\mathcal {U}^* ) = \mathbf {sym}(\mathcal {U} )\) and also \(\lambda (\mathcal {U}^* )=\lambda (\mathcal {U} )\).

We compare the a posteriori bounds for the optimal affine policy with our geometric a priori bound for randomly generated instances of Problem (17) in Table 4. We find that the geometric a priori bound is quite close to the a posteriori bounds for random instances of the inventory control problem. This suggests that our geometric a priori bound is indeed informative.

6 Concluding remarks

In this paper, we evaluate the performance of the affine policy for two stage adaptive optimization problems based on the geometry of the uncertainty sets. [11] has linked the performance of adaptive and robust policies based on the symmetry of the uncertainty set. In this work we link the performance of adaptive and affine policies based on the notion of the simplex dilation factor of the uncertainty set. We found (see Table 4) that our a priori bounds for affine policies significantly improve on the symmetry bounds for robust policies, and are close to the a posteriori bounds.

Natural extensions of this work include the study of the performance of affine and piecewise affine policies for multi-stage adaptive optimization problems.

References

Belloni, A., Freund, R.M.: On the symmetry function of a convex set. Math. Program. 111(1–2), 57–93 (2008)

Bemporad, A., Borrelli, F., Morari, M.: Min-max control of constrained uncertain discrete-time linear systems. IEEE Trans. Autom. Control 48(9), 1600–1606 (2003)

Ben-Tal, A., Boyd, S., Nemirovski, A.: Control of uncertainty-affected discrete time linear systems via convex programming (2005). http://www2.isye.gatech.edu/~nemirovs/FollowUpFinal.pdf

Ben-Tal, A., Boyd, S., Nemirovski, A.: Extending scope of robust optimization: comprehensive robust counterparts of uncertain problems. Math. Program. 107(1–2), 63–89 (2006)

Ben-Tal, A., Goryashko, A., Guslitzer, E., Nemirovski, A.: Adjustable robust solutions of uncertain linear programs. Math. Program. 99(2), 351–376 (2004)

Ben-Tal, A., Nemirovski, A.: Selected topics in robust convex optimization. Math. Program. 112(1), 125–158 (2008)

Bertsimas, D., Brown, D.: Constrained stochastic LQC: a tractable approach. IEEE Trans. Autom. Control 52(10), 1826–1841 (2007)

Bertsimas, D., Georghiou, A.: Design of near optimal decision rules in multistage adaptive mixed-integer optimization (2013). http://www.optimization-online.org/DB_FILE/2013/09/4027.pdf

Bertsimas, D., Goyal, V.: On the power of robust solutions in two-stage stochastic and adaptive optimization problems. Math. Oper. Res. 35(2), 284–305 (2010)

Bertsimas, D., Goyal, V.: On the power and limitations of affine policies in two-stage adaptive optimization. Math. Program. 134(2), 491–531 (2012)

Bertsimas, D., Goyal, V., Sun, X.A.: A geometric characterization of the power of finite adaptability in multistage stochastic and adaptive optimization. Math. Oper. Res. 36(1), 24–54 (2011)

Bertsimas, D., Litvinov, E., Sun, X.A., Zhao, J., Zheng, T.: Adaptive robust optimization for the security constrained unit commitment problem. IEEE Trans. Power Syst. 28(1), 52–63 (2013)

Dhamdhere, K., Goyal, V., Ravi, R., Singh, M.: How to pay, come what may: approximation algorithms for demand-robust covering problems. In: 46th Annual IEEE Symposium on Foundations of Computer Science, vol. 46, pp. 367–376 (2005)

Feige, U., Jain, K., Mahdian, M., Mirrokni, V.: Robust combinatorial optimization with exponential scenarios. In: Fischetti, M., Williamson, D. (eds.) Integer Programming and Combinatorial Optimization, vol. 4513 of Lecture Notes in Computer Science, pp. 439–453. Springer, Berlin (2007)

Goulart, P.J., Kerrigan, E.C., Maciejowski, J.M.: Optimization over state feedback policies for robust control with constraints. Automatica 42(4), 523–533 (2006)

Hadjiyiannis, M.J., Goulart, P.J., Kuhn, D.: An efficient method to estimate the suboptimality of affine controllers. IEEE Trans. Autom. Control 56(12), 2841–2853 (2011a)

Hadjiyiannis, M.J., Goulart, P.J., Kuhn, D.: A scenario approach for estimating the suboptimality of linear decision rules in two-stage robust optimization. In: 2011 50th IEEE Conference on Decision and Control and European Control Conference (CDC-ECC), pp. 7386–7391. IEEE (2011b)

Khandekar, R., Kortsarz, G., Mirrokni, V., Salavatipour, M.: Two-stage robust network design with exponential scenarios. In: Proceedings of the 16th Annual European Symposium on Algorithms, pp. 589–600. Springer, Berlin (2008)

Kuhn, D., Wiesemann, W., Georghiou, A.: Primal and dual linear decision rules in stochastic and robust optimization. Math. Program. 130(1), 177–209 (2011)

Lagarias, J.C., Ziegler, G.M.: Bounds for lattice polytopes containing a fixed number of interior points in a sublattice. Canad. J. Math. 43, 1022–1035 (1991)

Lofberg, J.: Approximations of closed-loop minimax MPC. In: Proceedings of 42nd IEEE Conference on Decision and Control, vol. 2, pp. 1438–1442 (2003)

Minkowski, H.: Allegemeine lehzätze über konvexe polyeder. Ges. Ahb. 2, 103–121 (1911)

Rockafellar, R., Wets, R.: The optimal recourse problem in discrete time: \(L^1\)-multipliers for inequality constraints. SIAM J. Control Optim. 16(1), 16–36 (1978)

Skaf, J., Boyd, S.P.: Design of affine controllers via convex optimization. IEEE Trans. Autom. Control 55(11), 2476–2487 (2010)

Van Parys, B., Goulart, P., Morari, M.: Infinite-horizon performance bounds for constrained stochastic systems. In: 2012 IEEE 51st Annual Conference on Decision and Control (CDC), pp. 2171–2176 (2012a)

Van Parys, B., Kuhn, D., Goulart, P., Morari, M.: Distributionally robust control of constrained stochastic systems (2013) http://www.optimization-online.org/DB_HTML/2013/05/3852.html

Van Parys, B.P.G., Goulart, P.J., Morari, M.: Performance bounds for min-max uncertain constrained systems. In: Nonlinear Model Predictive Control, vol. 4, pp. 107–112 (2012b)

Acknowledgments

We would like to thank two anonymous referees for very thoughtful comments that have improved the paper and Dr. Angelos Georghiou for sharing with us his code and very helpful discussions.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Bertsimas, D., Bidkhori, H. On the performance of affine policies for two-stage adaptive optimization: a geometric perspective. Math. Program. 153, 577–594 (2015). https://doi.org/10.1007/s10107-014-0818-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-014-0818-5