Abstract

This paper focuses on the simulation of bimanual assembly/disassembly operations for training or product design applications. Most assembly applications have been limited to simulating only unimanual tasks, or bimanual tasks with one hand. However, recent research has introduced the use of two haptic devices for bimanual assembly. We propose a more natural and lower-cost bimanual interaction than existing ones, based on Markerless motion capture (Mocap) systems. Specifically, this paper presents two interactions based on Markerless Mocap technology and one interaction that combines Markerless Mocap technology with haptic technology. A set of experiments following a within-subjects design has been carried out to test the usability of the proposed interfaces. The Markerless Mocap-based interactions were validated against two-haptic-based interactions, as the latter have been successfully integrated into bimanual assembly simulators. The pure Markerless Mocap interaction proved to be either the most or the least efficient depending on the configuration (with 2D or 3D tracking, respectively). Usability results for the proposed interactions and the two-haptic-based interaction showed no significant differences. These results suggest that Markerless Mocap or hybrid interactions are valid solutions for simulating bimanual assembly tasks when the precision of the motion is not critical. The decision on which technology to use should depend on the trade-off between the precision required to simulate the task, the cost, and the inherent features of the technology.

1 Introduction

Virtual reality (VR) is an artificial environment which simulates real or imaginary worlds by means of computers. This paper focuses on the simulation of bimanual assembly/disassembly operations, which are typically found in virtual training processes or as part of product design. The use of virtual mock-ups during the product design/development allows the reduction of physical prototypes (Jung et al. 1998), saving costs and time. In this specific context, virtual mock-ups allow designers to analyse accessibility problems and to validate whether the assembly and maintainability operations of a machine can be properly done. In the case of training activities, the user can practise the assembly task as many times as necessary, eliminating the constraints of using the physical environment such as availability of the machine, safety, time, or costs.

An important aspect of VR systems is the interaction between the user and the virtual world. As described by Bowman et al. (2004), there are three universal interaction tasks in 3D user interface design: selection of an object, viewpoint motion control, and manipulation of the object. The implementation of these tasks is constrained by the technology used for interaction. In existing virtual assembly applications, the main types of interaction are based on a mouse (2D or 3D), data-gloves, or haptic technology. Most commercial CAD applications, such as SolidWorks or Solid Edge, support standard input devices (i.e. keyboard and 2D mouse). In scientific publications, the proposed interaction is usually based on haptic technology (Leino et al. 2009; Poyade et al. 2009; Abate et al. 2009; Cheng-jun et al. 2010; Lu et al. 2012; Polistina 2010; Bordegoni et al. 2009). There are a few exceptions where the interaction is based solely on a Spaceball 5000 mouse (Jun et al. 2005) or a pinch glove (Lee et al. 2010), or where the interface supports several devices such as a mouse, data-glove, Spaceball mouse, or hand tracking (Jung et al. 1998). More examples of virtual assembly systems can be found in the surveys by Seth et al. (2011) and Gupta et al. (2008).

A second important aspect of VR systems is their degree of fidelity with respect to real tasks. Most virtual assembly applications, regardless of the interaction technology implemented, either do not simulate bimanual assembly operations or simulate them sequentially, so that only one-handed interaction is needed. However, in a real assembly task, the user will often work with both hands simultaneously. This means that a realistic assembly simulation should offer bimanual interaction. An example of bimanual simulation can be found in the work of Seth et al. (2008), who proposed a dual-handed haptic interface for realistic part interaction using two PHANToM® haptic devices.

However, the use of a dual-handed haptic interface presents two main drawbacks:

- High acquisition cost; professional devices may cost several thousand US dollars.

- Bimanual configuration is not always feasible; this is a common problem with haptic devices that have not been designed for bimanual operations, even if the haptic rendering library allows it. The reasons are workspace limitations (the size/configuration of some devices prevents two devices from working in the same workspace) or mechanical interference between the device arms (when two devices work in the same workspace, the haptic arms can collide with one another, impeding some motions).

Similarly, the mouse (2D or 3D) has not been designed for bimanual operations and would thus require an ad hoc approach to allow bimanual tasks. Moreover, it has been demonstrated that a 3D mouse is less efficient than a haptic device for assembly tasks (Leino et al. 2009; Bloomfield et al. 2003).

In this paper, we propose a new low-cost approach for natural bimanual interaction based on integrating a Markerless motion capture (Mocap) system into a virtual assembly system. We analyse the usability and user performance of a particular Mocap system in a virtual assembly task that requires bimanual interaction, and we compare its results with those of a two-haptic-based interaction. One might expect the lack of force feedback to affect the learning process, the accuracy, or the time needed to do the task. Volkov et al. (2001) studied the effectiveness of haptic sensation for the evaluation of virtual prototyping. They concluded that the addition of force feedback to the virtual environment allowed the participants to complete the task faster, but not more accurately. Adams et al. (2001) studied the learning effect of virtual training with haptics-based interaction, where two groups trained with and without force feedback, respectively. They did not find significant differences between the two groups.

Several approaches to Markerless Mocap systems have been proposed in the research literature (Moeslund et al. 2006; Poppe 2007; Zhu and Fujimura 2007; Siddiqui and Medioni 2010; Romero et al. 2010; Cao et al. 2010; Wang and Popovic 2009; Wang et al. 2011; Shotton et al. 2011; Oikonomidis et al. 2012). For this research, we implemented a particular Markerless Mocap system with two possible configurations. The first approach is based on tracking the user’s hands with one standard camera. In this case, the 2D hand positions are converted to 3D world positions. The second approach is based on tracking the user’s hands with a stereo RGB camera. The stereo camera returns a disparity map from which we can obtain 3D hand positions. The results obtained in our usability experiment could be extended to similar off-the-shelf Markerless Mocap systems, such as Organic Motion™, Kinect™, Omek™, and SoftKinetic™ for the 3D solution, or EyeToy™ for the 2D solution.
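As a rough illustration of how a 3D hand position can be obtained from a disparity map, the sketch below uses the standard pinhole stereo relation Z = f·B/d. It is not the implementation used in this work; the function name and the calibration values (focal length in pixels, baseline, principal point) are hypothetical and serve only to make the example concrete.

```python
def disparity_to_point(u, v, disparity, focal_px, baseline_m, cx, cy):
    """Back-project pixel (u, v) with a given disparity into camera coordinates.

    Assumes a rectified stereo pair and a pinhole camera model.
    All calibration parameters are hypothetical inputs.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    # Depth from disparity: Z = f * B / d
    z = focal_px * baseline_m / disparity
    # Lateral offsets scale with depth
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)


# Example: a hand blob centred on the principal point
hand = disparity_to_point(320, 240, 50, focal_px=500, baseline_m=0.1, cx=320, cy=240)
```

Because depth is inversely proportional to disparity, small disparity errors at long range translate into large depth errors, which is one plausible source of the tracking noise discussed later.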

In addition, in the cases in which we want to benefit from having a physical interaction with the virtual objects but it is not possible or adequate to interact with two haptic devices simultaneously, we decided to combine a haptic device with a Markerless Mocap system technology.

In summary, this paper proposes and analyses three interactions: a pure Markerless Mocap-based interaction, where both hands are tracked, in 2D or 3D, with a Markerless Mocap system; and a hybrid interaction, where one hand is tracked with a Markerless Mocap and the other hand with a haptic device. Specifically, this paper addresses the following research questions:

- Is it feasible to interact in a virtual assembly context by means of a Markerless Mocap system?

  Originally, Markerless Mocap systems offered poor precision compared to other technologies (e.g. haptic devices, mouse). However, off-the-shelf technologies (such as Organic Motion™, Kinect™, Omek™, etc.) have demonstrated that their precision has become good enough for a wide range of applications, e.g. TV control, medical and physical therapy, and in particular video games. Nevertheless, Markerless Mocap systems have rarely been applied to virtual assembly systems, and thus more research is needed. An example can be found in the work of Wang and Popovic (2009) and Wang et al. (2011), who presented a 6D hand tracking approach and tested it within a virtual assembly task. However, its performance was not assessed in comparison with other technologies.

- How usable are the Markerless Mocap-based interactions (with 2D or 3D tracking) and the hybrid interaction (Markerless Mocap + haptic) compared to a two-haptic-based interaction?

To address these research questions and in order to assess the usability of the proposed interactions, this paper presents two different experiments:

- A “usability” experiment was carried out to analyse the usability of the three interactions: the Markerless Mocap system (with 2D and 3D tracking) and a hybrid system. Two interactions based on two haptic devices were taken as the benchmark. The experiment was performed using two desktop haptic devices with a small workspace (OMNI® haptic device). The hybrid configuration is based on this haptic device and a Markerless Mocap system (with 2D tracking). Due to the difference in workspace size between the two technologies, a second experiment analyses the usability of the hybrid interaction with a more appropriate haptic device. The “usability” experiment follows a within-subjects design, i.e. participants repeat the same task with each interaction modality, with the interaction order randomly assigned. The task consists of assembling part of a virtual valve.

- A “hybrid versus haptic” experiment was conducted to assess the hybrid interaction with respect to two haptic devices with a similar workspace size. The experiment is similar to the “usability” experiment except for the haptic device used. In this case, the GRAB haptic device (Avizzano et al. 2003), which has a larger workspace, was used.

This paper starts by describing the virtual assembly system used for running the experiments. Then, the two experiments are presented in terms of their experimental task, experimental design, procedure, and results. After that, we discuss the results and summarise the conclusions.

2 The test-bed application

The experiments were conducted on a previously published demonstrator known as IMA-VR (Gutiérrez et al. 2010). This demonstrator was designed to train users on industrial assembly and disassembly tasks. The IMA-VR platform consists of a screen displaying the 3D graphical scene corresponding to the maintenance task, the device used for the interaction with the virtual scene, and the training software to simulate and teach assembly and disassembly tasks.

2.1 Interaction technologies

One of the main features of this platform is its flexibility to integrate new interaction devices. By default, this demonstrator can work with different types of haptic devices (e.g. desktop or large space devices, one or two contact points, etc.). For this research, a Markerless Mocap system was integrated.

The Markerless Mocap implementation is based on Unzueta’s work (Unzueta 2008), where the user’s body part is tracked by applying the condensation algorithm (Isard and Blake 1998) with a blob representation (Wren et al. 1997). A Kalman filter was applied to reduce white noise. In order to improve tracking, the users wore a chroma-key glove. The system was implemented to work with one or more standard cameras and with a stereo camera. The output is in 2D or 3D depending on the number of cameras.
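The state model of the Kalman filter is not detailed here, so the following is only a minimal sketch of the general idea: a scalar filter that smooths one coordinate of a tracked hand under an assumed constant-position model. The function name and the variance parameters are hypothetical.

```python
def kalman_smooth(measurements, process_var=1e-3, meas_var=1e-1):
    """Smooth a sequence of noisy scalar measurements.

    Assumes a constant-position model: the state is expected to stay put
    between frames, with uncertainty growing by process_var per step.
    """
    estimate = measurements[0]   # initialise from the first sample
    error = 1.0                  # initial estimate variance (assumed)
    smoothed = [estimate]
    for z in measurements[1:]:
        # Predict: the state is unchanged, uncertainty grows
        error += process_var
        # Update: blend prediction and measurement using the Kalman gain
        gain = error / (error + meas_var)
        estimate += gain * (z - estimate)
        error *= (1.0 - gain)
        smoothed.append(estimate)
    return smoothed


# Example: a jittery x-coordinate of a hand blob, in pixels
track = kalman_smooth([100.0, 103.0, 98.0, 101.0, 99.0, 102.0])
```

A larger `meas_var` relative to `process_var` gives smoother but laggier output, which is the usual trade-off when filtering hand positions for interactive use.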

Since Markerless Mocap systems allow natural interfaces to be designed, the interaction with the virtual objects must be consistent with how the real task is performed. Haptic-based interactions using force feedback devices such as PHANToM® differ from Markerless Mocap interactions, firstly, in that the user is constantly attached to a handle. Secondly, natural user reactions, such as swapping a tool from one hand to the other, make no sense with haptic devices. To allow this type of action and increase the naturalness of the Markerless Mocap interaction, we have used props. Props are real objects which are tracked and can interact with the virtual scene. These can be as complex as a steering wheel or as simple as a physical tool. A review of props can be found in Bowman et al. (2004). With the adopted approach, the user can grasp a physical tool to perform the virtual task.

2.2 System layout

The 3D graphical scene is divided into two areas. The assembly zone, with the machine to be assembled, is rendered in the centre of the scene, and the pieces to be assembled are placed on the back wall (see Fig. 2). On the right edge of the screen, there is a configurable “tools menu” with the virtual tools that can be chosen for the assembly. To grasp a piece or tool, the user has to select it and then grab it by means of a voice command. If the piece/tool is correct, it is grasped and follows the motion of the user’s hand. Otherwise, a message is displayed explaining the type of error.

The selection of small pieces, very common in assembly tasks (screws, nuts, washers, etc.), can be cumbersome with Mocap-based interactions due to the noise coming from the tracking process. To solve this problem, a new strategy was implemented to facilitate the selection of small pieces. This strategy was based on defining a tolerance region for each small object, so that the object was automatically selected when the user’s hand entered this region.
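The tolerance-region strategy can be sketched as follows. The shape of the region is not specified in the text, so this example assumes a sphere around each object's centre; the function name, the object names, and the radii are hypothetical.

```python
import math


def select_object(hand_pos, objects):
    """Return the name of the nearest object whose tolerance region
    contains the hand, or None if the hand is outside every region.

    objects maps a name to (centre, tolerance_radius); spherical
    regions are an assumption made for this sketch.
    """
    best_name, best_dist = None, math.inf
    for name, (centre, tolerance) in objects.items():
        dist = math.dist(hand_pos, centre)
        # Inside this region and closer than any previous candidate
        if dist <= tolerance and dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


# Example scene with two small pieces (positions in metres)
pieces = {
    "screw": ((0.00, 0.0, 0.0), 0.05),
    "nut": ((0.20, 0.0, 0.0), 0.05),
}
selected = select_object((0.01, 0.0, 0.0), pieces)
```

Picking the nearest candidate resolves the case where the regions of neighbouring pieces overlap, which is likely when many screws and washers sit close together.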

As previously mentioned, as an alternative to the “tools menu”, the Markerless Mocap system implementation provides a prop solution that allows users to operate with physical tools in a natural way. When the user grasps a physical tool, a virtual copy of the tool appears in the scene and follows the motion of the physical tool.

2.3 Commands

These experiments follow the Wizard of Oz strategy, i.e. the user does not know that the system is partially operated by a person. In this strategy, when the users want to send an order to the system (e.g. grasp a piece), they send the order by voice and the evaluator activates the corresponding command in the system.

3 Usability experiment I

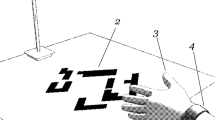

This section describes the experiment conducted to analyse the feasibility and usability of the proposed bimanual interactions for performing a virtual assembly task, followed by its results. The five types of interaction analysed are as follows (Fig. 1):

1. A Markerless Mocap system with 2D tracking (Mmocap2D).

2. A Markerless Mocap system with 3D tracking (Mmocap3D).

3. A hybrid system based on Mmocap2D and a haptic device. (We found that combining two different technologies and managing them in 3D space was far more complicated than in 2D space. To avoid significantly increasing the cognitive load, we decided to combine the haptic device, which operates in real 3D space, with the Markerless Mocap system with 2D tracking.)

4. Two haptic devices, with different real workspaces, placed one in front of the other so that the haptic arms were aligned (haptic w.a.w.), and

5. Two haptic devices, with different real workspaces, placed one beside the other so that the arms were not aligned (haptic w.n.w.).

The initial objective of the fourth type of interaction, with aligned haptic arms, was to evaluate the configuration of two haptic devices sharing the same real workspace. However, this was not feasible with the haptic devices used in this experiment: two PHANToM OMNI® devices. When both haptic devices were placed in the same workspace, the haptic arms collided with each other most of the time, impeding task completion. So, the two haptic devices were shifted to avoid collisions between the haptic arms, similar to the work of Seth et al. (2008). As a result, in the aligned haptic arms configuration, the offset along the x axis between both hands was smaller than in the non-aligned configuration. To analyse whether this factor could influence the interaction, we decided to analyse both configurations.

3.1 Experimental task

The selected experimental task consisted of assembling a part of an electro-hydraulic valve bimanually. This task consisted of 5 steps where participants had to place the cover of the valve (step 1) and to fix it using a set of pieces (e.g. screws, nuts, lock washers, and washers) and tools (steps 2–5).

Figure 2 shows the virtual task. As shown in Fig. 2a, the virtual scenario was divided into three areas: assembly area, pieces repository, and “tools menu”. At the beginning of every test, all pieces were located on the rear wall (Fig. 2a). When users had to assemble by means of the haptic interaction, they had to grab the tool from the tool menu. In case of the Markerless Mocap interaction, users had to grasp the corresponding prop from a table. After completion of the assembly task, the system displayed a message indicating the end of the task (Fig. 2c). The assembly task was explained before starting the experiment.

In this research work, an operation is considered unimanual or bimanual depending on the number of pieces/tools manipulated at the same time. Unimanual operations (i.e. only one object/tool is involved) are simulated with a single hand, regardless of whether the user needs a second hand as support when performing the real task. Bimanual operations (i.e. two or more pieces/tools are involved simultaneously) are simulated with both hands. Following this criterion, the experimental task consisted of one unimanual operation (step 1, placing the cover) and four bimanual operations (steps 2–5, fixing the cover with the screws and nuts, Fig. 2b).

3.1.1 Experimental design

In order to analyse the differences among the five interaction types, the experiment followed a within-subjects design with 25 users who performed the task 5 times, once for each type of interaction. Our interest was in analysing which technology was faster and more usable. Participants were 8 women and 17 men aged between 20 and 60 years. All of them were competent users of personal computers and new technology in general. Of them, 5 and 6 users were familiar with Mocap and haptic technology, respectively. All participants were right-handed and reported a normal sense of touch and vision. In order to block the possible learning effect between some of the interactions (e.g. between haptic w.a.w. and haptic w.n.w.), each user performed the experiment with a random order of the interaction conditions.
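The random ordering of conditions can be illustrated with a short sketch. The exact randomisation procedure is not described, so this example simply assumes an independent uniform shuffle per participant; the function name and the seed are hypothetical.

```python
import random

# Condition labels as used in this paper
CONDITIONS = ["Mmocap2D", "Mmocap3D", "hybrid-I", "haptic w.a.w.", "haptic w.n.w."]


def assign_orders(n_participants, seed=None):
    """Return one randomly shuffled condition order per participant."""
    rng = random.Random(seed)  # a local generator keeps the assignment reproducible
    orders = []
    for _ in range(n_participants):
        order = CONDITIONS[:]   # copy so the master list is not modified
        rng.shuffle(order)
        orders.append(order)
    return orders


# Example: orders for 25 participants
orders = assign_orders(25, seed=2024)
```

An independent shuffle does not guarantee that each condition appears equally often in each position; a Latin square would be an alternative if strict counterbalancing were required.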

3.1.2 Procedure

First, the experiment’s objective was explained, and the task to be performed was illustrated step by step. Thereafter, the participants had a familiarisation session with the platform and the interaction technologies. Then, they had to perform the task five times, once with each interaction condition, trying to complete it as fast as possible and without errors. Once the participants completed the experiment, they were asked to fill out a demographic questionnaire and a usability questionnaire in order to rate each interaction and comment on its advantages/disadvantages.

3.1.3 Performance measure

The main parameters considered to measure the efficiency and usability of each interaction corresponded to quantitative results (e.g. time to grab each piece, total time) and qualitative data gathered through the usability questionnaire.

3.2 Results

We have analysed the efficiency and usability of five interaction types in a bimanual assembly task. The interaction conditions were as follows: a two-haptic based interaction with the haptic arms aligned (haptic w.a.w), a two-haptic based interaction with the haptic arms non-aligned (haptic w.n.w), a hybrid system combining a haptic device with a 2D Markerless Mocap system (hybrid-I), a Markerless Mocap solution with 2D tracking (Mmocap2D), and a Markerless Mocap solution with 3D tracking (Mmocap3D). All results were analysed with the aid of Minitab.

3.2.1 Quantitative results

Figure 3 shows the total execution time for each interaction condition. The task consisted of one unimanual step and four repeated bimanual steps. Since the goal of this experiment was to check which technology was faster and more usable and the execution time for the first step could be influenced by the learning of the technology itself, we decided to analyse only the execution time of the last step. This step consisted of grasping one tool and two pieces for each hand and assembling them with both hands simultaneously.

A one-way within-subjects ANOVA (α = 0.05), adjusted for ties, was run to compare the effect of the interaction technology on the completion time for the last step (Fig. 4 shows these times). The main effect of the interaction technology on the dependent variable, time, was found to be significant (F(4, 94) = 8.10, p < 0.001). Tukey’s post hoc comparisons of the five interaction groups indicated that Mmocap2D (M = 22.974 s, SD = 3.913) and hybrid-I (M = 27.329 s, SD = 9.617) were the fastest interactions and were significantly faster than the Mmocap3D interaction (M = 40.320 s, SD = 13.010). The remaining interactions were haptic w.a.w. (M = 32.243 s, SD = 9.624) and haptic w.n.w. (M = 31.852 s, SD = 11.246).
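For readers unfamiliar with the test, the F statistic of a one-way within-subjects ANOVA can be computed by removing the between-subject variability from the error term. The sketch below is a textbook formulation for a balanced design, not the Minitab procedure used in this study; the function name and the toy data are hypothetical.

```python
def rm_anova_f(data):
    """One-way repeated-measures ANOVA for a balanced design.

    data: list of rows, one per subject, each holding one value per condition.
    Returns (F, df_conditions, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    # Partition the total sum of squares
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Toy data: 3 subjects x 3 conditions (made-up times, not study data)
f_value, df1, df2 = rm_anova_f([[1, 2, 4], [2, 3, 5], [3, 5, 6]])
```

Subtracting the subject sum of squares is what distinguishes this from a between-subjects ANOVA: consistent differences between fast and slow participants no longer inflate the error term.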

3.2.2 Qualitative results

Once the participants completed the experiment, they were asked to fill out a usability questionnaire of these interaction types in terms of:

- Q1: naturalness of interaction
- Q2: ease of use
- Q3: movement consistency
- Q4: user’s concentration in the task
- Q5: comfort
- Q6: satisfaction
- Q7: efficiency to complete the assembly task
- Q8: amusement

The usability questionnaire followed a 7-point Likert scale. Figure 5 shows the medians and quartiles for each question.

As seen in Fig. 5, the hybrid-I system was clearly rated worst in terms of usability. Mmocap2D was rated the easiest of all interactions, but similar to the others on the remaining usability questions. As for the other interactions (Mmocap3D, haptic w.n.w., haptic w.a.w.), we could not find significant differences.

3.2.3 User’s preference for interaction technology

Finally, users had to rank the interaction technology in decreasing order starting from the most preferable one (rate 1) to the least (rate 5). Figure 6 represents the medians and quartiles of the preferences for each interaction.

A Kruskal–Wallis test was conducted to evaluate differences in user preference among the five interaction types. The test, which was adjusted for ties, was significant, χ²(4, N = 25) = 20.67, p < 0.001. As seen in Fig. 6, there were few differences among the haptic (w.a.w. and w.n.w.) and Mmocap (2D and 3D) groups, whereas the hybrid-I interaction was rated worst. In fact, 60 % of participants rated the hybrid-I interaction as the worst.
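The tie-adjusted Kruskal–Wallis statistic can be computed as in the following sketch. It is a textbook formulation written for illustration, not the Minitab routine used here; the function name and the toy data are hypothetical.

```python
def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic with the standard correction for ties.

    groups: list of lists of observations, one list per group.
    H is compared against a chi-square distribution with len(groups) - 1 df.
    """
    pooled = sorted(x for g in groups for x in g)
    n = len(pooled)
    rank_of = {}
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        t = j - i                              # size of this tie block
        rank_of[pooled[i]] = (i + 1 + j) / 2   # average of ranks i+1 .. j
        tie_term += t ** 3 - t
        i = j
    rank_sums = sum(sum(rank_of[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_sums - 3 * (n + 1)
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction


# Toy data: three fully separated groups (made-up values, not study data)
h = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
```

With preference ranks from 1 to 5 there are many tied values across participants, which is why the tie correction matters for data of this kind.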

4 Usability experiment II: hybrid versus haptic interaction

Based on the results of the previous experiment, we realised that the hybrid configuration analysed (hybrid-I) combined two systems with a great difference in workspace size (16 cm × 12 cm × 12 cm for the OMNI® device against approximately 50 cm × 40 cm × 50 cm for the Markerless Mocap system). Consequently, the movements made by the user with each hand differed considerably in amplitude, resulting in an unnatural interaction.
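To make the amplitude mismatch concrete, the per-axis ratio between the two workspaces can be computed directly from the dimensions quoted above. The sketch assumes, purely for illustration, that both workspaces are mapped onto the same extent of the virtual scene; the names are hypothetical.

```python
# Workspace dimensions in centimetres, as quoted in the text
OMNI_CM = (16.0, 12.0, 12.0)
MOCAP_CM = (50.0, 40.0, 50.0)  # approximate


def amplitude_ratios(small, large):
    """Per-axis factor by which a movement in the large workspace exceeds
    the equivalent movement in the small one (same virtual displacement)."""
    return tuple(l / s for s, l in zip(small, large))


ratios = amplitude_ratios(OMNI_CM, MOCAP_CM)
```

Under this assumption, the hand tracked by the Mocap system has to travel roughly three to four times further than the hand on the haptic device to produce the same virtual displacement.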

In addition, the Markerless Mocap and the haptic system followed different strategies to grasp the tools: while the Markerless Mocap interaction used a strategy based on props, the haptic interaction used a strategy based on the “tools menu”. We found that having two different selection strategies in the hybrid interaction confused some participants when changing from one modality to the other. These factors, workspace size and grasp strategy, could have distorted the usability results for the hybrid system. Therefore, we decided to run another experiment based on the previous one, but with only one selection strategy (i.e. the “tools menu”) and using a haptic device with a larger workspace: the GRAB haptic device (Avizzano et al. 2003). This device has been designed for bimanual operations and is composed of two haptic arms working in the same workspace (60 cm × 40 cm × 40 cm).

Therefore, the goal of this experiment was to analyse the task performance and usability of a hybrid system that combines a Markerless Mocap system with one arm of the GRAB device with respect to a dual-handed haptic interaction where the haptic arms share the real workspace (the GRAB device), see Fig. 7.

4.1 Experimental task

This experiment used the same experimental task and followed the same procedure as usability experiment I. Thus, it followed a within-subjects design (i.e. participants had to repeat the same task twice, alternating the interaction system). There were 13 participants, 4 women and 9 men aged between 20 and 50 years, most of them with a technical degree. Of them, 4 users were familiar with haptic technology, and 4 users were familiar with Mocap technology. All participants were right-handed and reported a normal sense of touch and vision. In order to block the possible learning effect, two experimental groups were defined and each participant was randomly assigned to one of them. The groups were as follows:

Group 1 (7) → pre-test → task under strategy 1 → task under strategy 2 → usability test

Group 2 (7) → pre-test → task under strategy 2 → task under strategy 1 → usability test

4.2 Results

We have analysed the efficiency and usability of two interaction types in a bimanual assembly task. These were a two-haptic-based interaction with the arms sharing the same workspace (haptic w.s.w.) and a new hybrid configuration (hybrid-II).

A paired-sample t test (α = 0.05) was run to compare the effect of the interaction technology on the completion time. The normality assumption was checked with a probability plot (Fig. 8).

There was no significant difference in the time for haptic w.s.w. (M = 207 s, SD = 36.9) and hybrid-II (M = 198.6 s, SD = 41.5) conditions; t(12) = −1.45, p = 0.914.
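The paired-sample t statistic is computed from the per-participant differences between the two conditions. The following is a minimal textbook sketch with made-up numbers, not the study data; the function name is hypothetical.

```python
import math


def paired_t(a, b):
    """Paired-sample t statistic and degrees of freedom.

    a, b: equal-length sequences with one value per participant,
    measured under the two conditions being compared.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    # Sample variance of the differences (n - 1 in the denominator)
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    t = mean / math.sqrt(var / n)
    return t, n - 1


# Toy completion times in seconds for four participants (not study data)
t_value, df = paired_t([10, 12, 14, 16], [9, 10, 13, 13])
```

Working on differences means each participant acts as their own control, so variability between fast and slow participants does not enter the test.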

4.2.1 Qualitative results

The usability of both interaction types was studied in terms of:

- Q1: naturalness of interaction
- Q2: ease of use
- Q3: movement consistency
- Q4: user’s concentration in the task
- Q5: comfort
- Q6: user’s capability to control the events of the virtual system
- Q7: efficiency to complete the assembly task
- Q8: difficulty of interaction with the virtual system

The usability questionnaire followed a 7-point Likert scale. Figure 9 shows the medians and quartiles for each question.

From Fig. 9, we could not find any significant difference between both interactions in terms of usability.

5 Discussion

The discussion is presented for each experiment in the same order in which the experiments were described.

5.1 Usability experiment I

All participants performed and finished the virtual task with the tested interactions without major problems. Nevertheless, the recorded times show that the Markerless Mocap solution with 2D tracking and the hybrid-I interaction were significantly faster than the Markerless Mocap interaction based on 3D tracking. This is understandable, since working in 2D is easier than working in a 3D scene. The two haptic configurations (with/without aligned haptic arms) did not differ significantly from the other groups. Although they also operate directly in 3D space, their slight time difference with respect to Mmocap3D may be due to the different movement amplitudes required by the two technologies. The haptic workspace was around three times smaller than the 3D Markerless Mocap workspace.

One of the initial objectives of this experiment was also to compare the results with the use of two haptic devices sharing the same real workspace. When the haptic arms do not share the same real workspace, the positions of the virtual hands cannot be consistent with the positions of the real hands, which may confuse the user and affect task performance. An example is when both hands appear crossed in the virtual space when they actually are not. However, we could not analyse this configuration, since we found a problem when we set up two OMNI® haptic devices to share the workspace: the haptic arms collided in the central area, preventing bimanual operation most of the time. Hence, we analysed two different arrangements of the haptic devices (with/without aligned haptic arms) to find out whether the arrangement could influence the usability results. The results showed almost no difference between the two groups. In fact, most participants did not notice the difference between the two configurations.

5.1.1 Usability results

Overall, the analysis of the usability questionnaire shows no significant differences among four of the five interactions, namely Mmocap2D, Mmocap3D, and the two-haptic-based interactions (with/without aligned haptic arms). On the other hand, the hybrid interaction was rated significantly worse. We now discuss each factor.

Efficiency to complete the task (Q7): Overall, participants rated the hybrid-I interaction significantly worse than the other interaction groups, although the hybrid-I system was the second fastest in finishing the task. The reason for such a short time was most likely the Mmocap2D component, which was the fastest interaction. In any case, we found that some participants became confused because the workspace size for each hand was significantly different. This confusion was increased by the use of two different strategies to grasp the tool (props for one hand and the tools menu for the other). This assumption is also reflected in the ratings for concentration (Q4), satisfaction (Q6), and naturalness (Q1), where the hybrid-I interaction was rated significantly worse.

Naturalness (Q1): Only slight differences were noticeable, except for the hybrid-I interaction. The hybrid-I interaction, based on the Markerless Mocap and the haptic system, used a different strategy to grasp the tool for each hand (tools menu vs. props). This could have negatively affected the naturalness of this interaction type.

Concentration (Q4) and ease of use (Q2): When users are performing a virtual task, the interaction system can negatively influence their concentration on the task, and thus affect the efficiency of applications such as virtual assembly training. The concentration factor is also related to the ease of use of the corresponding technology. A complex interaction may increase the user’s cognitive load and hence reduce concentration on the task. In this sense, bimanual operations require users to work simultaneously with both hands, resulting in a more complex interaction than unimanual operations. The results show that users rated their concentration on the task higher with a natural interface (Mmocap2D and Mmocap3D) than with the other tested technologies (haptic and hybrid), with the hybrid-I interaction rated worst. These results are consistent with the ratings for ease of use, where the hybrid-I interaction was considered significantly more difficult to use. The main reason is that it requires the user to learn and use two different technologies simultaneously.

Consistency between real and virtual movements (Q3): Participants did not find significant differences between the tested interactions. This result is interesting because it relates to the precision of each technology. Markerless Mocap systems are inherently considerably noisier than haptic technology: while the haptic technology tracks the user’s movements directly, the Markerless Mocap system has to estimate them. Moreover, there is additional noise from the image quality and the hand tracking. These results suggest that participants’ perception is based on how closely the virtual movement resembles the real trajectory, rather than on how exact or precise the virtual movement really is.

Comfort and ergonomics (Q5): The hybrid-I interaction was rated considerably worse than the other technologies. However, we consider that the duration of this experiment (3–4 min per task) was not long enough to evaluate this aspect, and more experiments should be conducted. In the long term, the Markerless Mocap interaction seems to be more tiring than haptic desktop interactions, as a consequence of standing for a long time and making movements of larger amplitude.

Satisfaction (Q6) and amusement (Q8): Only slight differences were noticeable, except for the hybrid-I interaction. Overall, these ratings depended more on participants’ preferences than on the interaction technology itself.

At the end of the usability questionnaire, participants had to rank the interaction types from the most to the least preferable. The hybrid-I system was considered the worst system by the majority of the participants. The main reason is the difference in workspace size between the two hands, which makes the interaction awkward and less usable. The remaining interactions achieved similar scores.
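As an illustration of how such preference rankings can be summarised, the following minimal sketch computes each interaction's mean rank and its number of last-place votes. The rankings shown are hypothetical placeholders, not the study's data, and the function name `summarise_rankings` is an assumption introduced here; the paper does not state how the rankings were aggregated.

```python
from collections import Counter, defaultdict


def summarise_rankings(rankings):
    """Return (mean_rank, last_place_votes) for ordered preference lists.

    Each ranking lists the interactions from most to least preferable,
    so position 1 is the best rank.
    """
    rank_sums = defaultdict(int)
    last_place = Counter()
    for order in rankings:
        for position, name in enumerate(order, start=1):
            rank_sums[name] += position
        last_place[order[-1]] += 1
    n = len(rankings)
    mean_rank = {name: total / n for name, total in rank_sums.items()}
    return mean_rank, last_place


# Hypothetical rankings from four imaginary participants (NOT study data).
example_rankings = [
    ["Mmocap2D", "haptic", "Mmocap3D", "hybrid-I"],
    ["haptic", "Mmocap3D", "Mmocap2D", "hybrid-I"],
    ["Mmocap3D", "Mmocap2D", "haptic", "hybrid-I"],
    ["Mmocap2D", "hybrid-I", "haptic", "Mmocap3D"],
]

mean_rank, last_place = summarise_rankings(example_rankings)
for name in sorted(mean_rank, key=mean_rank.get):
    print(f"{name}: mean rank {mean_rank[name]:.2f}, "
          f"ranked last {last_place[name]} time(s)")
```

A lower mean rank indicates a more preferred interaction, while the last-place count corresponds to the "considered the worst by the majority" reading used in the text.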

Looking back at the usability responses, we can conclude that combining two technologies with different workspace sizes is significantly less usable. A second observation is that participants did not rate the slowest interaction negatively, as long as the interaction was perceived to respond in real time. The final observation is that the usability results are very similar between the purely Markerless Mocap interactions and the purely two-haptic-based interactions. Responses varied from one participant to another depending on what they personally valued most in each interaction.

5.2 Usability experiment II: hybrid versus haptic interaction

During the previous experiment, we noticed that two features of the tested hybrid-I system (Mmocap2D plus OMNI® device) could have had a great influence on the results: the use of two technologies with different workspace sizes and the use of different interaction strategies to grasp a tool. In order to analyse the potential of hybrid systems, we conducted a new experiment with a new configuration of the hybrid system, called hybrid-II, that did not present the above drawbacks. In addition, we compared this system with two haptic devices sharing the same real workspace.

All participants performed and finished the virtual task with the two systems. In contrast to the previous experiment, the recorded times showed no significant difference between the two interactions. The same was observed for the usability test. These results suggest that the new configuration of the hybrid-II system could substitute the two-haptic systems when the precision of the motion is not critical for simulating the task.
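A paired comparison of completion times in a within-subjects design can be sketched as follows. This section does not state which statistical test was applied, so the exact sign-flip permutation test below is only one possible choice, and the times are hypothetical placeholders rather than measured values.

```python
from itertools import product


def paired_permutation_pvalue(times_a, times_b):
    """Exact two-sided sign-flip permutation test on paired differences."""
    if len(times_a) != len(times_b):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(times_a, times_b)]
    n = len(diffs)
    observed = abs(sum(diffs) / n)
    extreme = 0
    total = 0
    # Under the null hypothesis each participant's difference is equally
    # likely to be positive or negative, so enumerate every sign pattern.
    for signs in product((1, -1), repeat=n):
        total += 1
        permuted = abs(sum(s * d for s, d in zip(signs, diffs)) / n)
        # Small tolerance guards against floating-point rounding.
        if permuted >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Hypothetical completion times in seconds, one pair per participant
# (NOT the study's measurements).
hybrid_ii_times = [182.0, 201.5, 175.0, 220.0, 195.5, 210.0]
two_haptic_times = [190.0, 198.0, 180.5, 214.0, 199.0, 205.5]

p_value = paired_permutation_pvalue(hybrid_ii_times, two_haptic_times)
print(f"two-sided p-value: {p_value:.3f}")
```

Because the enumeration grows as 2^n, this exact form is only practical for small participant groups; a p-value above the chosen significance level would be read as "no significant difference" between the two interactions.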

The difference between the results obtained in this experiment and the previous one shows the importance of the strategy used for combining two different technologies. In the first experiment, each technology (Markerless Mocap, OMNI-haptic) obtained good results on its own, but the usability results decreased when both technologies were combined. However, in this experiment, where we tried to minimise the differences between both technologies, the results of the hybrid system were similar to those of the two-haptic system. Thus, we conclude that in order to achieve a usable hybrid interaction, it is important to minimise the differences in the conditions under which both technologies are used (similar workspace, similar strategy to interact with the virtual objects, etc.).

6 Conclusions and future work

This paper has focused on studying the feasibility and usability of Markerless Mocap-based interactions for simulating bimanual assembly tasks. In particular, we have proposed three interactions: a pure Markerless Mocap-based interaction with two possible configurations (where both hands are tracked, in 2D or 3D, with a Markerless Mocap) and a hybrid interaction (where one hand is tracked with a 2D Markerless Mocap and the other hand with a haptic device). These interactions have been analysed in comparison with two-haptic-based interactions with two possible configurations (with/without aligned haptic arms) through different experiments. Taking all results into account, the initially formulated questions can be answered as follows:

- Is it feasible to simulate a virtual assembly task by means of a Markerless Mocap system?

All participants in the experiments performed and finished the tested tasks without major problems, showing that the Markerless Mocap system can be a valid option for simulating bimanual assembly tasks when the precision of the motion is not critical. Based on the participants’ comments, it seems that the use of props, such as physical tools, improves the naturalness of the interaction.

- And how usable are the Markerless Mocap-based interactions (with 2D and 3D tracking) and the hybrid (Mmocap2D + haptic) interaction compared to the two-haptic-based interaction?

Usability results have demonstrated that the Markerless Mocap and hybrid interactions are as usable as a dual-handed haptic interaction for assembly tasks when the precision of the motion is not critical. The efficiency of each interaction is determined by the movement amplitude and the DoF of the tracking system (2D vs. 3D). The decision about which interaction (haptic, Mmocap2D, Mmocap3D, or hybrid) to use depends on the trade-off between the requirements of simulating the target task (e.g. precision) and the cost of the interaction technology. Three additional considerations follow:

- In order to get a good hybrid system, one should minimise the differences in the conditions under which both technologies are used, e.g. tracking each hand with a considerably different workspace size leads to decreased usability.

- Time performance does not affect the perceived usability of a system, as long as the interaction responds in real time (i.e. users do not feel any lag).

- Results suggest that users’ perception is based on how alike the virtual movement and the real trajectory are, rather than on how exact or precise the virtual movement really is.

Future work should analyse the effect that the new Markerless Mocap approach has on the assembly process. A comparison against off-the-shelf solutions, or alternatively against traditional training systems, should be carried out.

As for the usability study, the simulation of assembly tasks can require more functionality (e.g. rotation of virtual objects and weight discrimination operations), which should be implemented and assessed. Finally, based on these results, it seems feasible to extend the use of Markerless Mocap systems to the simulation of assembly tasks with one hand.

References

Abate AF, Guida M, Leoncini P, Nappi M, Ricciardi S (2009) A haptic-based approach to virtual training for aerospace industry. J Vis Lang Comput 20:318–325. doi:10.1016/j.jvlc.2009.07.003

Adams JR, Clowden D, Hannaford B (2001) Virtual training for a manual assembly task. Haptics-e, Vol. 2(2)

Avizzano CA, Marcheschi S, Angerilli M (2003) A multi-finger haptic interface for visually impaired people. Works.on ROMAN, pp 165–170. doi:10.1109/ROMAN.2003.1251838

Bloomfield A, Deng Y, Wampler J, Rondot P, Harth D, Mcmanus M, Badler NI (2003) A taxonomy and comparison of haptic actions for disassembly tasks. In: Proceedings of IEEE VR Conference, Los Angeles, CA, USA. pp 225–231

Bordegoni M, Cugini U, Belluco Paolo, Aliverti M (2009) Evaluation of a haptic-based interaction system for virtual manual assembly. Virtual Mixed Real LNCS 5622:303–312. doi:10.1007/978-3-642-02771-0_34

Bowman D, Kruijff E, LaViola J, Poupyrev I (2004) 3D user interfaces: theory and practice. Addison-Wesley, Boston

Cao Y, Xia Y, Wang Z (2010) A close-form iterative algorithm for depth inferring from a single image. Comput Vis (ECCV). 6315:729–742. doi:10.1007/978-3-642-15555-0_53

Cheng-jun C, Yun-feng W, Niu L (2010) Research on interaction for virtual assembly system with force feedback. In: Proceedings ICIC, Wuxi, China, 2: 147–150. doi:10.1109/ICIC.2010.131

Gupta SK, An DK, Brough JE, Kavetsky RA, Schwartz M, Thakur A (2008) A survey of the virtual environments-based assembly training applications. Virtual manufacturing workshop (UMCP), Turin, Italy

Gutiérrez T, Rodríguez J, Vélaz Y, Casado S, Sánchez EJ, Suescun A (2010) IMA-VR: a multimodal virtual training system for skills transfer in industrial maintenance and assembly tasks. In: Proceedings ROMAN, pp 428–433. doi:10.1109/ROMAN.2010.5598643

Isard M, Blake A (1998) Condensation—conditional density propagation for visual tracking. Int J Comput Vis 29(1):5–28

Jun Y, Liu J, Ning R, Zhang Y (2005) Assembly process modeling for virtual assembly process planning. Int J Comput Integr Manuf 18(6):442–445. doi:10.1080/09511920400030153

Jung B, Latoschik M, Wachsmuth I (1998) Knowledge-based assembly simulation for virtual prototype modeling. In: Proceedings IECON, Aachen, Germany, 4: 2152–2157. doi:10.1109/IECON.1998.724054

Lee J, Rhee G, Seo D (2010) Hand gesture-based tangible interactions for manipulating virtual objects in a mixed reality environment. Int J Adv Manuf Tech 51(9):1069–1082. doi:10.1007/s00170-010-2671-x

Leino S-P, Lind S, Poyade M, Kiviranta S, Multanen P, Reyes-Lecuona A, Mäkiranta A, Muhammad A (2009) Enhanced industrial maintenance work task planning by using virtual engineering tools and haptic user interfaces. Virtual Mixed Real LNCS 5622:346–354. doi:10.1007/978-3-642-02771-0_39

Lu X, Qi Y, Zhou T, Yao X (2012) Constraint-based virtual assembly training system for aircraft engine. Adv Comput Environ Sci Adv Intell Soft Comput 142:105–112. doi:10.1007/978-3-642-27957-7_13

Moeslund TB, Hilton A, Krüger V (2006) A survey of advances in vision-based human motion capture and analysis. Comput Vis Image Underst 104(2):90–126. doi:10.1016/j.cviu.2006.08.002

Oikonomidis I, Kyriazis N, Argyros AA (2012) Tracking the articulated motion of two strongly interacting hands. To appear in the proceedings of IEEE conference on CVPR, Rhode Island, USA

Belluco P, Bordegoni M, Polistina, S (2010) Multimodal navigation for a haptic-based virtual assembly application. In: Conference on WINVR. Iowa, USA. pp 295–301. doi:10.1115/WINVR2010-3743

Poppe R (2007) Vision-based human motion analysis: an overview. Comput Vis Image Underst 108(1–2):4–18. doi:10.1016/j.cviu.2006.10.016

Poyade M, Reyes-Lecuona A, Leino S-P, Kiviranta S, Viciana-Abad R, Lind S (2009) A high-level haptic interface for enhanced interaction within virtools. Virtual Mixed Real LNCS 5622:365–374. doi:10.1007/978-3-642-02771-0_41

Romero J, Kjellström H, Kragic H (2010) Hands in action: real-time 3D reconstruction of hands in interaction with objects. In: Proceedings IEEE ICRA, pp 458–463. doi:10.1109/ROBOT.2010.5509753

Seth A, Su H-J, Vance JM (2008) Development of a dual-handed haptic assembly system: SHARP. J Comput Inf Sci Eng 8(4):044502

Seth A, Vance JM, Oliver JH (2011) Virtual reality for assembly methods prototyping—a review. Virtual Real Virtual Manuf Constr 15(1):5–20. doi:10.1007/s10055-009-0153-y

Shotton J, Fitzgibbon A, Cook M, Sharp T, Finocchio M, Moore R, Kipman A, Blake A (2011) Real-time human pose recognition in parts from single depth images. In: Proceedings CVPR’11. 2: 1297–1304

Siddiqui M, Medioni G (2010) Human pose estimation from a single view point, real-time range sensor. Conference in CVCG at CVPR, San Francisco, California, USA, pp. 1–8. doi:10.1109/CVPRW.2010.5543618

Unzueta L (2008) Markerless full-body human motion capture and combined motor action recognition for human-computer interaction. Ph. D. thesis, University of Navarra, tecnun

Volkov S, Vance JM (2001) Effectiveness of haptic sensation for the evaluation of virtual prototypes. ASME J Comput Inf Sci Eng 1(2):123–128. doi:10.1115/1.1384566

Wang RY, Popovic J (2009) Real-time hand-tracking with a color glove. In: Proceedeings SIGGRAPH’09. 28(3). doi:10.1145/1531326.1531369

Wang RY, Paris S, Popovic J (2011) 6D hands: Markerless hand-tracking for computer aided design. In: Proceeding UIST, pp 549–558. doi:10.1145/2047196.2047269

Wren CR, Azarbayejani A, Darrell T (1997) Pfinder: real-time tracking of the human body. IEEE Trans Pattern Anal Mach Intell 19(7):780–785

Zhu Y, Fujimura K (2007) Constrained optimization for human pose estimation from depth sequences. Proc ACCV 1:408–418

Cite this article

Vélaz, Y., Lozano-Rodero, A., Suescun, A. et al. Natural and hybrid bimanual interaction for virtual assembly tasks. Virtual Reality 18, 161–171 (2014). https://doi.org/10.1007/s10055-013-0240-y

DOI: https://doi.org/10.1007/s10055-013-0240-y