Abstract

An optimal steganography method is provided to embed the secret data into the low-order bits of host pixels. The main idea of the proposed method is that before the embedding process, the secret data are mapped to the optimal values using Bayesian optimization algorithm (along with introducing a novel mutation operator), in order to reduce the mean square error (MSE) and also maintain the structural similarity between the images before and after embedding (i.e., preserving the visual quality of the embedded-image). Then, the mapped data are embedded into the low-order bits of host pixels using modulus function and a systematic and reversible algorithm. Since the proposed method is able to embed data into more significant bits, it has enhanced the payload, while preserving the visual quality of the image. Extraction of data from the host image is possible without requiring the original image. The simulation results show that the proposed algorithm can lead to a minimum loss in MSE criterion and also a minimal reduction in visual quality of the image in terms of diagnostic criteria of the human eye, whereas there is no limitation on the improvement of payload, in comparison with other methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In contrast to cryptography methods [1], which prevent the illegal access, the data hiding methods are used to make the data incomprehensible or invisible to the unauthorized users to be seen or heard. The data hiding is a process for embedding the secret information into a cover media and then extracting it [2,3,4]. The embedding should be carried out in such a way that the unauthorized users do not become aware of secret information (cover media should not be affected by high visual distortion after the embedding); In other words, the eye cannot detect changes in the cover media. The digital watermarking and steganography are two major categories of techniques for data hiding [5, 6]. In order to demonstrate signal ownership, the watermarking is used for embedding a symbol such as a signature or a trademark into cover signals. The embedding data size in this category is usually small. The main purpose of watermarking is a focus on maintaining the embedded symbol even after destructive attacks such as adding noise, filtering, compression. The second category is used for embedding secret data with a relatively large size and that is considered in this paper. In steganography, the focus is on invisible data hiding as well as obtaining a high rate of embedding. In this paper, the gray-level digital images will be used as the cover media, and these images are called the host images. The image obtained after embedding is called the stego-image. In literature, there are three types of steganography conventions: pure, public key, and secret key [7, 8]. A steganography system that does not require a key to detect is called pure. This system is not very secure in practice because its security depends entirely on its secrecy. It is preferred in most applications because no key must be shared in the communication process, although it does not provide any security if the attacker knows the embedding method. Public key schemes do not depend on the exchange of a secret key. They use a secret key for embedding and a secret key for detection. Secret key schemes need a secret key for both embedding and detection. If the secret key is known to the recipient, it can extract the secret data. However, sharing the secret key in these methods may lead to the keys distribution problem [9]. An idea for dealing with this problem is presented in [10].

Two major parameters in the stego-image are the embedding capacity and visual quality. The embedding capacity is defined as the length of the information that can embed into the host image. The visual quality means the secret information that should be embedded into the host image in such a way that the human observer cannot detect the changes in the host image after embedding [11]. With respect to the need of researchers to determine the amount of image visual degradation in various areas of image processing (such as compression, denoising, data hiding), the quantitative criteria are used to measure the results [12,13,14,15]. The peak signal-to-noise ratio (PSNR) is one of the common criteria that is used to compare image quality between the stego-image and the original host image. The more PSNR is, the higher stego-image visual quality will be. One of the weaknesses of this criterion is failure to consider pixels position in the image, and it only focuses on average of brightness level difference between the images. In other words, all the pixels will play the same role in the evaluation, while in practice, the pixel value causes a special effect on the human eye with regard to its position in the image [16, 17]. To overcome this weakness, Wang et al. [17] proposed the mean structural similarity (MSSIM) criterion, in which the image structural features are obtained based on the statistical and experimental information. It is more consistent with the function of the human eye. For example, Fig. 1 shows several images in a variety of different distortions. In Fig. 1, all images have the same PSNR, but with different MSSIM values. Although MSSIM is more appropriate to consider the similarities of two images (such as edges), PSNR is also useful because of the calculation of the mean squared reconstruction error. Therefore, in this paper, both PSNR and MSSIM criteria will be used simultaneously as an objective in the optimization of the proposed steganography method.

An image with various types of distortions, all with PSNR = 25 dB [17]. a Original image, b contrast-stretched image, MSSIM = 0.9168, c mean-shifted image, MSSIM = 0.9900, d JPEG compressed image, MSSIM = 0.6949, e blurred image, MSSIM = 0.7052, f salt–pepper impulsive noise contaminated image, MSSIM = 0.7748

In terms of embedding domain, the steganography algorithms are classified into two categories, including spatial domain and spectral domain [18]. In general, the embedding methods in the spectral domain are more robust, but instead are more complex. For example, in the spectral domain, Motamedi and Jafari [19] proposed a steganography method based on wavelet transform by utilizing image denoising by wavelet thresholding. In the spatial domain, there are generally three categories of techniques for embedding data: least significant bit (LSB) substitution, LSB matching, and pixel-value differencing (PVD) [20]. In the first type which is the most common and simplest method, the secret bits directly replace the LSBs of the host image. In the second type, the LSBs of cover image are modified. In the third type, a difference between two consecutive pixels is calculated to determine the number of embedding bits. In our proposed method in this paper, the information will be embedded into the host image in the spatial domain.

Wang et al. [21] used the genetic algorithm (GA) to search the approximate optimal solutions and produce a substitution table. Their goal was to prevent a significant reduction in the image visual quality after simple embedding of important information into the low-order bits of the pixel. The substitution table determines that how should the low-order bits be transformed for embedding the secret data. Thien and Lin [22] proposed a simple method for embedding digit-by-digit data into the low-order bits using the modulus function. Their work increased the data hiding payload. Chang et al. [23] used a dynamic programming strategy to obtain the optimal solution with lower computational time compared to the GA method [21]. Ker [24] proposed a novel method called the LSB matching by using histogram characteristic function (HCF). Their purpose was to improve the efficiency of embedding into LSB. Mielikainen [25] proposed a modified method based on the LSB matching technique, which, assuming the equal payload, made fewer changes in the host image. The PVD method was first time proposed in the study by Wu and Tsai [26]. The PVD method is used for hiding secret message into the gray-level images. It provides a larger embedding capacity without a significant deterioration in image quality, unlike traditional LSB techniques. The authors of the work [26] considered this important feature that in each image, variations of gray-level value are easily detectable by eye in smooth areas, whereas in the edges, they are less visible. Chang et al. [27] achieved an appropriate payload using Hamming code (from the family of error-correcting codes) and LSB embedding, but their technique can only embed the information into the LSBs not the more significant bits. Molaei et al. [28, 29] provided two different steganography methods based on error-correcting codes, which are robust against some famous attacks. In addition, they improved the payload. Their methods lack technical restrictions in embedding into the more significant bits. Yang et al. [20] introduced an adaptive substitution technique for embedding data into the low-order bits. Their goal was to prevent sudden changes on the edges of the image and also to achieve a better quality of stego-image. Their technique uses masking of light, edges, and texture of host image to estimate the number of LSBs for hiding data. In their work, a larger number of bits are substituted into the pixels belonging to the regions of insensitivity to noise in comparison with regions of sensitivity to noise. In addition, an optimal pixel adjustment process is used by LSB substitution technique to increase the visual quality of stego-image. Zhang et al. [30] conducted a study on data hiding to improve the capacity and efficiency of embedding in the gray-level images. They provided two algorithms, high capacity of information hiding (HCIH) and high quality of information hiding (HQIH). The first algorithm aims to achieve a high embedding rate, and the second algorithm aims to achieve high embedding efficiency. In the study [31], in addition to the LSB substitution technique, a mixed edge detection mechanism is also employed to increase payload. In this mechanism, the combination of Canny edge detection and Log edge detection techniques is used. A second-order steganographic (SOS) method based on pixel pair matching and modification direction exploiting (MDE) is provided in [32]. Unlike the MDE-based methods [33,34,35,36,37] in which only one secret digit of each cover pixel pair can be concealed in base B, the SOS method is able to perform it for two secret digits. In the research [38], an image steganography method is presented to provide reliability by using the secret key and logistic map for generating random numbers.

In most of the provided methods, there is a trade-off between the payload and visual quality of stego-image, so that by increasing the payload, the visual quality of stego-image is reduced. Therefore, the main problem is to find an optimal technique for embedding secret information in order to minimize image degradation after embedding with maintaining the required payload. Most of the optimization methods that have been introduced for this purpose only considered MSE and PSNR criteria as the objective function and were trying to find the optimized solutions. However, as mentioned earlier, these alone cannot be the appropriate assessments to evaluate the image quality degradation from the perspective of a human observer. Therefore, in our proposed method, two objectives will be considered simultaneously in the optimization process, with keeping the value of PSNR high and value of MSSIM low. This can help us to find the true optimal solution. As a result, the deviations resulting from the use of traditional methods, which have done the optimization without considering the diagnostic criteria of the human eye, are compensated. On the other hand, one of the weaknesses in some of the provided algorithms is a restriction in the embedding payload. Because, their embedding process is designed in such a way that data can only be embedded into LSBs. But the technique proposed in this research does not have this limitation. In fact, in the proposed method, we will face no restrictions in the embedding payload, while maintaining desirable visual quality approved by the appropriate criteria (by construction and application of optimal mapping vector).

The purpose of this paper is to provide a new and optimal steganography technique using an efficient optimization algorithm (Bayesian algorithm optimization (BOA) [39]) and applying some structural modifications to it. Also, in order to enhance the stego-image visual quality, the modulus function [22] will be used after finding the optimal values and before the embedding process. To show the superiority of the proposed method, several experiments will be performed on the test images, and the results will be compared with similar methods. The experiment results confirm the acceptable performance of the proposed technique.

Rest of this paper is organized as follows: Sect. 2 will provide an overview of the low-order bits embedding method using the modulus function [22]; in Sect. 3, the BOA [39] will be introduced; in Sect. 4, the proposed algorithm will be fully described; Sect. 5 is allocated to the simulation results and the discussion; in Sect. 6, the conclusions are provided.

2 Low-order bits embedding using modulus function

In this section, the low-order substitution method that is presented by Thein and Lin [22] to improve the visual quality of the stego-image, is described. The method [22] embeds the secret data into the low-order bits of host image pixels using modulus function and then simply extracts them. Assuming data are embedded in \( r \) low-order bits of each pixel of the host image, first, the data are decomposed into \( r \)-bit units. To embed the \( i \)-th unit \( x_{i} \) into \( i \)-th pixel \( p_{i} \) of the host image, first, the difference value \( d_{i} \) is calculated by Eq. (1) [22]

where \( Z = X\bmod Y \) is the remainder of the division of \( X \) by \( Y \). Then, the optimized difference of \( d_{i} \) is calculated by Eq. (2) [22]

where \( \left\lfloor x \right\rfloor \) is the largest integer that does not exceed \( x \), and \( \left\lceil x \right\rceil \) is an integer that is obtained from upward rounding \( x \). Finally, since in some cases, the value of \( d_{i}^{'} \) may be out of the range from 0 to 255 and the modified gray-level value of the \( i \)-th pixel of host image after embedding (i.e., \( \hat{p}_{i} \)) is calculated by Eq. (3) [22]

To extract the embedded data, it is sufficient to calculate Eq. (4)

where \( r \) is the number of low-order bits used for embedding, \( \hat{p}_{i} \) is the value of the \( i \)-th pixel from stego-image, and \( x_{i} \) is the value that is hidden into \( r \) low-order bits of pixel \( \hat{p}_{i} \).

The low-order substitution techniques are simpler than other techniques, and also, the image quality after data embedding in them has lower loss. In this paper, after finding the optimal mapping vector, we will embed data into low-order bits of host pixels using the above method.

3 BOA

The BOA [39] belonged to the evolutionary algorithms (such as the GA), based on constructing, learning, and sampling of Bayesian probabilistic networks. The BOA has a stronger logical backing than other optimization algorithms, due to its completely mathematical and probabilistic structure. The BOA in finding optimum solutions for additively decomposable functions has much better performance than the GA. A performance analysis has been performed in [39]. In this paper, the BOA will be used to find the optimal mapping vector for optimum embedding of information into the low-order bits of the host image. In the following, first, the Bayesian networks, learning method, and generating method of new instances will be introduced, and then, the BOA will be expressed.

3.1 Bayesian networks

Bayesian network models a connection between available variables in data and the problem structure that is expressed using it [40, 41]. Each node in the network is corresponding to a variable \( X_{i} \). The connection between the two variables is represented by the edge between the two nodes. An acyclic Bayesian network with directed edges encodes a joint probability distribution and is expressed as Eq. (5)

where \( X = \left( {X_{0} , \ldots ,X_{n - 1} } \right) \) is a vector of variables and \( \varPi_{{X_{i} }} \) is the set of parents of \( X_{i} \) in the network (the set of nodes from where there exists an edge to \( X_{i} \)). A simple example of a Bayesian network and joint distribution encoded by it is shown in Fig. 2. This distribution can be used for generating new instances using the conditional and marginal probabilities in the modeled dataset.

Now, two general questions arise which are answered in the following: (1) How to determine a network that represents a proper model of data set? (2) How to determine a network that the instance generated by it has the maximum matching with the given data?

3.1.1 Network structure learning

There are two main components of network learning [42]. The first one is a scoring metric, and the second one is a search procedure. The scoring metric measures the quality of data modeled by the network. In the search procedure, a network can be found among all the possible networks, which increases the scoring metric as much as possible.

Different metrics can be used to measure the quality of a Bayesian network [42, 43]. One of the most useful criteria is called K2 metric. For network \( B \) and binary data set \( D \) with \( n \) variables, the K2 metric is defined in the simplified model as Eq. (6) [42, 43]

where \( m\left( {x_{i} ,\,\pi_{{X_{i} }} } \right) \) is the number of instances in \( D \), the variable \( X_{i} \) is equal to value \( x_{i} \), and also variable(s) \( \varPi_{{X_{i} }} \) is (are) equal to value \( \pi_{{X_{i} }} \). If the set \( \varPi_{{X_{i} }} \) is empty, (\( X_{i} \) has no parent), then there is only an instance 0 for \( \varPi_{{X_{i} }} \). \( m\left( {\pi_{{X_{i} }} } \right) \) is calculated by Eq. (7)

Now, a search is required to find the best network with regard to the scoring metric value. The greedy algorithm could be used to find the best network [42, 43]. In each iteration of the greedy search, the edge that increases the scoring metric is added to the network. The edge addition steps are continued to the point that no further improvement is achieved in the scoring metric. Also, the addition of the edges that take the network out of the acyclic model is not allowed. Also, to reduce the operations, a limitation is considered for at most incoming edges into each node.

3.1.2 New instances generating

A joint distribution encoded by \( B \) is expressed using Eq. (5), where \( p\left( {X_{i} |\varPi_{{X_{i} }} } \right) \) is defined as Eq. (8)

First, the variables are sorted in order of generations. Next, the probability of independent variables is calculated, and then, conditional probability of each possible instance of each variable is calculated with respect to the all possible instances of parents in data set [44]. The independent and conditional probabilities are used to generate each new instance, and the process is repeated until the values of all the variables are produced.

3.2 BOA description

Figure 3 shows the schematic diagram of the BOA (for more details, refer to [45]). Figure 4 shows the flowchart of the BOA. In the BOA [39], the first population of strings is randomly generated. From the present population, a number of best strings are selected with regard to the selection rate (e.g., a rate of 0.5 means selecting a half of best strings). Each of the conventional methods, such as random selection, tournament selection, roulette wheel selection, rank selection, can be used. Bayesian networks are constructed in accordance with the chosen set of strings. The K2 metric measures the quality of the network, and the greedy search algorithm is used to search for the best network in order to maximize the K2 metric. The new strings are generated using the joint distribution encoded by the network. The generated strings are added to the old population, replacing some of the old ones. The BOA steps are as follows:

BOA schematic [45]

-

1.

Set \( 0 \to t \). Generate an initial population randomly.

-

2.

Choose a set of good strings \( S\left( t \right) \) from \( P\left( t \right) \).

-

3.

Construct network \( B \) using K2 metric and constraints (at most incoming edges into each node, and keeping acyclic the network).

-

4.

Generate a set of new strings \( O\left( t \right) \) based on joint distribution encoded by \( B \).

-

5.

Create a new population \( P\left( {t + 1} \right) \) by replacing bad strings of \( P\left( t \right) \) with \( O\left( t \right) \). Set \( t + 1 \to t \).

-

6.

If the termination criterion is not met, return to step 2.

4 Proposed method

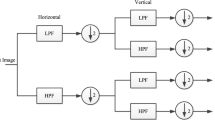

In this section, an optimal steganography method is provided with high embedding payload. The optimality of the proposed method is ensured by defining a new and practical cost function and minimizing it. The results of the proposed method are competitive with other methods. Three techniques for extracting secret data from cover (original) image include non-blind, semi-blind, and blind [46, 47]. Non-blind and semi-blind techniques need the cover image or some parts of it, whereas in blind detection, there is the only access to the stego-image. The proposed method is a blind steganography technique, since in the extraction process, there is no need for the original image. The overall block diagram of the proposed method is shown in Fig. 5. Secret data can be the binary information of an image, text, or any binary data. We provide a systematic approach for embedding and extracting information based on a secret key. First, the binary string of secret data is converted to an image with the same size of the original image, and then, a matrix of the sum of squared errors is constructed by comparing the obtained image with original image. Using this matrix, a cost function is defined. The cost function is obtained by combining two functions (one for controlling sum of squared errors and the other for controlling mean structural similarity between images before and after embedding). By minimizing this two-objective function using the BOA, we can construct an optimal mapping vector. During the optimization, to increase efficiency, a mutation operator is also included in this process. The optimal mapping vector is saved and given to authorized users to be used during information extraction. In the embedding process, a modified image will be provided with the same size as the original image from the secret data, by using the optimal mapping vector. After decomposition of pixels of the modified image and the original image to their constituent bits, the data embedding (into the low-order bits from pixels of the original image) is performed to obtain the stego-image, by using the modulus function. The purpose of the use of modulus function is to increase the stego-image visual quality, since in this paper, in addition to LSB, the more significant bits are also used for data embedding. Since the proposed method is reversible in the embedding stage, it is possible for authorized users to easily extract data. In the extracting stage, the low-order bits are decoded and secret data are extracted by the confidential key (optimal mapping vector) and the use of modulus function.

4.1 Embedding stage

Assume that we want to hide secret data \( S \) into the pixels of a gray-level image \( I \) of size \( L \times W \) as cover. \( r \) is considered as the number of low-order bits of the pixels where the secret information will be embedded. The value \( r \) can be chosen from 1 to 8 where \( r = 1 \) is LSB, and by increasing \( r \), the bit significant is increased.

First, the secret data \( S \) are decomposed into \( r \)-bits units and become like an image \( S_{M} \) with the same size of the host image. The value of each element in \( S_{M} \) is located in the range of \( 0 \) to \( 2^{r} - 1 \). Now, the value \( d_{i}^{'} \) is obtained using Eqs. (1) and (2), and then, since the value of \( y_{i} + d_{i}^{'} \, \) may be out of the range from 0 to 255, it is modified using Eq. (9)

Equations (1), (2) and (9) are integrated as a function named \( Difference\left( {d_{i} ,\,x_{i} } \right) \). For embedding \( x_{i} \) into \( d_{i} \), the difference between the original pixel and the embedded pixel will be \( d_{i}^{{^{{{\prime \prime }}} }} \), which returns the value of \( Difference\left( {d_{i} ,\,x_{i} } \right) \). Now, a squared error matrix is defined as

where \( N \) is equal to \( 2^{r} \), and \( e\left( {i,\,j} \right) \) is the sum of squared errors when all the pixels with value \( i \) in \( S_{M} \) are transformed to \( j \) and are embedded into the corresponding pixels of host image using Thien and Lin technique [17]. \( e\left( {i,\,j} \right) \) can be calculated as

The image \( S_{M} \) is scanned, and the pixels with value \( i \) are found; then, their corresponding value is found in the host image, which is equal to \( I_{c} \). In order to form the squared error matrix \( E \), all the values of \( e\left( {i,\,j} \right) \) must be calculated for \( 0 \le i,\,j \le 2^{r} - 1 \).

For example, in the case of \( r = 3 \) and for embedding binary data \( S = \left[ {001101010101010011101111110} \right]_{2} \) into the host image \( I = \left[ {\begin{array}{*{20}c} {163} & {27} & {121} \\ {220} & {219} & {215} \\ 7 & {131} & {233} \\ \end{array} } \right] \), we have \( S_{M} = \left[ {\begin{array}{*{20}c} {001} & {101} & {010} \\ {101} & {010} & {011} \\ {101} & {111} & {110} \\ \end{array} } \right]_{2} = \left[ {\begin{array}{*{20}c} 1 & 5 & 2 \\ 5 & 2 & 3 \\ 5 & 7 & 6 \\ \end{array} } \right]_{10} \). For calculating \( e\left( {1,\,0} \right) \), all pixels in the image \( S_{M} \) with a value of 1 must be found and their corresponding values in \( I \) should be extracted which is equal to \( I_{c} \). Thus, according to Eq. (11): \( e\left( {1,\,0} \right) = \left( {Difference(163,\,0)} \right)^{2} = 9 \). According to Eqs. (10) and (11), the other values \( e\left( {i,\,j} \right) \) are similarly calculated for \( 0 \le i,\,j \le 2^{r} - 1 \) to form the squared error matrix \( E \) as follows:

The next step for finding the optimal mapping vector is the cost function definition and then the minimization. Assume \( N \) values \( e\left( {0,\,j_{0} } \right) \), \( e\left( {1,\,j_{1} } \right) \), \( e\left( {2,\,j_{2} } \right) \), …, \( e\left( {N - 1,\,j_{N - 1} } \right) \) are selected from matrix \( E \) (i.e., an element from each row). The first purpose is the minimization of \( e\left( {0,\,j_{0} } \right) + e\left( {1,\,j_{1} } \right) + e\left( {2,\,j_{2} } \right) + \cdots + e\left( {N - 1,\,j_{N - 1} } \right) \), on the condition that if \( k \ne k^{'} \) then \( j_{k} \ne j_{{k^{'} }} \), where \( 0 \le k,\,k^{'} \le N - 1 \). The realization of this goal will result in PSNR minimization. The second objective function is the mean structural similarity between the two images before and after the embedding, which is calculated as [17]

where \( I \) and \( I^{'} \) are the original and embedded images, respectively, \( i_{l} \) and \( i_{l}^{'} \) are the image contents at the \( l \)-th local window, and \( M \) is the number of local windows in the image. In Eq. (12), \( SSIM\left( {x,y} \right) \) is calculated as [17]

where \( \mu_{x} \), \( \mu_{y} \), \( \sigma_{x} \), \( \sigma_{y} \), \( \sigma_{xy} \), are the means, standard deviations, and mutual local covariance for images \( x \) and \( y \), and \( C_{1} \) and \( C_{2} \) are constant. The MSSIM value in Eq. (12) can be located in the range of 0 to 1, so that the more the structural similarity between the two images is, the closer to 1 the value is.

There are various techniques for solving multi-objective optimization problems. One of these methods, which is not based on population, is the conventional weighted aggregation (CWA) [48]. In this method, the linear combination of objective functions is minimized. In the optimization, a maximization problem can be turned into a minimization problem in multiplying the objective function by − 1. Here, we define a total cost function for solving our desired problem by a linear combination of a minimization objective function and a maximization objective function as follows:

where \( e\left( {0,\,j_{0} } \right) \), \( e\left( {1,\,j_{1} } \right) \), \( e\left( {2,\,j_{2} } \right) \), …, \( e\left( {N - 1,\,j_{N - 1} } \right) \) are the elements of \( E \); \( L \times W \) is the size of the host image; and \( MSSIM\left( {I,I^{'} } \right) \) is the mean structural similarity between the two images before and after embedding which is calculated by Eqs. (12) and (13). We have weighed the cost function according to the size of the image. Thus, in short, the problem is to find the optimal values for \( j_{0} \), \( j_{1} \), \( j_{2} \), and \( j_{N - 1} \), so that (14) is minimized. This will be done using the BOA that is explained in Sect. 3. We will show that the use of the BOA can help us in finding the optimal solutions with a good convergence rate.

Basically, in the evolutionary algorithms, the population size is considered as one of the important parameters. The selection of a very large population size results in a significant increase in computations and is not affordable. On the other hand, a small initial population only explores a small part of the search space and causes difficulties in finding a global optimal solution. The use of mutation operator can largely prevent from the convergence of the optimization process to the local extrema and partially compensate the relevant problems of small population size. In principle, mutations are random [49]. Theory and experiments on the BOA [39] show when the new generation instances for each variable are all converged to the same value and BOA will not be able to change the status and search the other solutions. The use of mutation in the BOA can prevent falling into the trap. Consider the network of Fig. 6. Assume the value of variable \( X_{0} \) is equal to 1. If a mutation with probability \( p_{m} \) is allowed to occur on it and converts it from 1 to 0, the mutation will be effective on the conditional probability of other variables and as a result on the value of those variables. Such a process with probability \( p_{m} \) can be applied to other variables. In this paper, we use such a technique to improve the performance of the optimization.

After the optimization, the optimal values of \( j_{0} \), \( j_{1} \), \( j_{2} \), and \( j_{N - 1} \) are obtained and the optimal mapping vector \( J = \left[ {j_{0} ,\,j_{1} ,\,j_{2} ,\, \ldots ,\,j_{N - 1} } \right] \) is formed. Based on this vector, the pixel values of \( S_{M} \) are transformed to obtain the image \( S_{M}^{'} \). For example, for \( r = 2 \), if \( J = \left[ {\begin{array}{*{20}c} 2 & 1 & 0 & 3 \\ \end{array} } \right] \) and \( S_{M} = \left[ {\begin{array}{*{20}c} 1 & 2 \\ 3 & 1 \\ \end{array} } \right] \), then the secret image \( S_{M}^{'} \) is obtained as follows:

Finally, to obtain the stego-image, the elements of \( S_{M}^{'} \) are embedded into the host image using Thien and Lin (modulus function) [17] described in Sect. 2. The optimal mapping vector \( J \) (as a confidential key) is only given to the authorized users to be used during the extraction of hidden information.

The following is a step-by-step process for embedding. The implementation details of the BOA in the proposed algorithm are presented in steps 6 to 18.

Data embedding process

-

Input: A gray-level image \( I \) of the size \( L \times W \) that is used as a cover, binary secret data \( S \), number of low-order bits from each pixel used for embedding (\( r \)).

-

Output: A stego-image \( I^{'} \) of the size \( L \times W \), the optimal mapping vector \( J \) as a key.

-

Step 1: Scan the pixels of \( I \) (\( p_{i} \), \( 1 \le i \le L \times W \)) from top to bottom and left to right.

-

Step 2: Decompose the values of pixel \( p_{i} \) to their constituent bits, and consider \( r \) low-order bits from each pixel for embedding.

-

Step 3: Convert the binary string of secret data \( S \) to the image \( S_{M} \) of the size \( L \times W \).

-

Step 4: Form \( E \).

-

Step 5: Define a cost function as

$$ Cost\,function = e\left( {0,\,j_{0} } \right) + e\left( {1,\,j_{1} } \right) + e\left( {2,\,j_{2} } \right) + \cdots + e\left( {N - 1,\,j_{N - 1} } \right) - L \times W \times {\text{MSSIM}}\left( {I,I^{{^{\prime } }} } \right). $$ -

Step 6: Generate randomly \( n_{\text{pop}} \) different combinations of \( N \) integer with the range \( \left[ {0,\,N - 1} \right] \) as an initial population (a population with \( n_{\text{pop}} \) rows and \( N \) columns from integers). Now, convert the obtained population into the binary form (a population with \( n_{\text{pop}} \) rows and \( N \times r \) columns from binary numbers). Thus, the number of binary variables incoming to the BOA will be equal to \( n = N \,\times\, r \).

-

Step 7: Determine the selection rate \( s_{r} \).

-

Step 8: Start the main loop of the BOA and repeat the steps 9 to 16 until the stop condition is fulfilled.

-

Step 9: Compute \( Cost\,Function_{i} \) for members of the population (\( 1 \le i \le n_{\text{pop}} \)).

-

Step 10: Use a selection method (e.g., tournament selection), and choose the bests of the population with regard to selection rate \( s_{r} \) and cost function values.

-

Step 11: Consider the selected population as incoming data \( D \) to the Bayesian network \( B \).

-

Step 12: Construct an empty (edgeless) Bayesian network by \( n \) node.

-

Step 13: Find the best structure for the Bayesian network by addition of edge to the variables and calculation of K2 metric.

-

Step 14: Sort the variables with respect to the best network found in step 13 in order of generation.

-

Step 15: Generate \( n_{\text{pop}} - s_{r} \times n_{\text{pop}} \) new instances to replace with the removed instances (determine the value of each instance with regard to Eq. (8) and mutation probability \( p_{m} \)).

-

Step 16: Form the population in decimal values, and check if there is any repeated number in each row; if it was found, replace that row with a random string of different combinations of \( N \) integer within the range of \( \left[ {0,\,N - 1} \right] \).

-

Step 17: Get out of the main loop, if a stop condition is met.

-

Step 18: Select the best row of the population which has the lowest cost function value, and call it \( J \) and save it.

-

Step 19: Modify the pixels of \( S_{M} \) based on the mapping vector \( J \), and call it \( S_{M}^{'} \).

-

Step 20: Decompose the pixels of \( S_{M}^{'} \) to their constituent bits. (Each pixel will contain \( r \) bits.)

-

Step 21: Considering the new image \( S_{M}^{'} \), calculate \( d_{i} \) and \( d_{i}^{'} \) by Eqs. (1) and (2), respectively, where \( 1 \le i \le L \times W \).

-

Step 22: For \( 1 \le i \le L \times W \), compute the modified gray-level value of \( i \)-th pixel of \( I \) after the embedding process (\( \hat{p}_{i} \)) by Eq. (3) to obtain the stego-image.

4.2 Extracting stage

With the confidential key \( J \), the secret data will be easily extracted using an algorithm that will be explained in the following. The stego-image pixels will be called \( \hat{p}_{i} \), where \( 1 \le i \le L \times W \). First, by applying Eq. (4) to the received stego-image pixels, the values hidden into \( r \) low-order bits from pixel \( \hat{p}_{i} \) are obtained (i.e., \( x_{i} \)). Then, using the confidential key \( J \), \( x_{i} \) is modified (i.e., exactly the opposite of what in the embedding stage done for modifying the pixels to produce the optimal mapping vector \( J \)) to obtain the secret information \( S \).

Data extraction process

-

Input: A stego-image \( I^{'} \) of the size \( L \times W \), number of low-order bits from each pixel used for embedding (\( r \)), optimal mapping vector \( J \).

-

Output: Secret data.

-

Step 1: Use the modules function (Eq. (4)) for pixels of \( I^{'} \) (\( \hat{p}_{i} \), \( 1 \le i \le L \times W \)), so that \( x_{i} \) s (values hidden into the \( r \) low-order bits from pixel \( \hat{p}_{i} \)) are obtained.

-

Step 2: By using the confidential key \( J = \left[ {j_{0} ,\,j_{1} ,\, \ldots ,\,j_{N - 1} } \right] \), modify \( x_{i} \) (i.e., transform all \( x_{i} \) s with values \( j_{0} \), \( j_{1} \), …, \( j_{N - 1} \) to values 0, 1, …, \( N - 1 \), respectively) to obtain the secret data \( S \).

5 Simulation results and discussion

To evaluate the performance of the proposed algorithm, several gray-level images are considered of size \( 256 \times 256 \) as the host images and a gray-level image as the secret data. The dataset used in this work is the standard and common images in the field of image processing. The proposed algorithm will be compared with other methods, by using two criteria, visual quality and payload.

As noted in the introduction section, PSNR alone is not a perfect criterion for assessing the visual quality of images. So, in our work, to evaluate the visual quality, both PSNR and MSSIM criteria are used, which show the image distortion after the embedding. PSNR is measured in dB as [27]

In the above equation, MSE indicates the difference between values of pixels in original image and stego-image and is computed as \( {\text{MSE}} = \frac{1}{L \times W}\sum\nolimits_{i = 1}^{L} {\sum\nolimits_{j}^{W} {\left( {I_{ij} - I_{ij}^{'} } \right)^{2} } } \) [27], where \( I_{ij} \) s refer pixel values of the original image, \( I_{ij}^{'} \) s refer pixel values of the stego-image, and \( L \) and \( W \) are the image’s dimensions. The lower is the difference between the two images, the lower is the value of MSE, and as a result, the PSNR value will be higher, which is more desirable for stenography purposes. The structural similarity of both images is also calculated by Eqs. (12) and (13). In all experiments, the constants \( C_{1} \) and \( C_{2} \) are equal to 6.51 and 58.52, respectively (for further details, see Eqs. 7 and 9 in [17]). The local windows are considered Gaussian of size 11 and standard deviation equal to 1.5.

The second touchstone is the embedding payload, which is a ratio of the number of bits embedded into the host image to the number of pixels of the host image and is defined as [27]

where \( \left| S \right| \) refers the number of secret bits carried by the host image. \( P \) is measured in bits per pixel (bpp). The more is the value of \( P \), the better is the system performance in the embedding payload.

Parameters used in the BOA are as follows: (1) size (number) of the initial population \( n_{\text{pop}} = 3 \) for \( r = 1 \), and \( n_{pop} = 10 \) for \( r = 2 \); (2) selection rate \( s_{r} = 0.5 \); (3) tournament selection method; (4) the maximum number of iterations equal to 10; and (5) the mutation probability \( p_{m} = 0.1 \).

The type of secret data that is used in the simulations is the gray-level image of Lack that is converted into binary data. Each one of the test images with \( r = 1 \) and \( r = 2 \) carries 65,536 and 131,072 bits of information, respectively, in the embedding. Therefore, the payload is equal to 1 and 2 bpp, respectively. It should be noted that the proposed technique has no implementation restriction for embedding in the more significant bits. Of course, this is a lack of technical limitation, but in practice, there are certain restrictions on the acceptable (maximum) distortion. Figures 7 and 8 show the results of the visual quality of some test images. In Fig. 7, the left column shows the original image, the middle column shows the stego-image obtained by [27], and the right column shows the stego-image obtained by the proposed technique with \( r = 1 \). Also, in Fig. 8, the left column shows the original image, the middle column shows the stego-image obtained by [23] with \( r = 2 \), and the right column shows the stego-image obtained by the proposed technique with \( r = 2 \). In Table 1, a comparison is performed in terms of payload and visual quality of stego-image between the proposed method and other methods. In the proposed method, the standard deviations of PSNR are very low (< 0.02). The maximum payload is 1.00 bpp, in methods of [27], HCIH [30] and HQIH [30], whereas the proposed method has the capability of carrying data with payload above 1.00 bpp, with maintaining the visual quality. In a compromise between payload and visual quality, the proposed method is competitive with methods [20] and [31]. Among all of them, only methods [27] and [28] are robust against the attacks. There are also comparative results between the proposed method with methods [27] and [23] in terms of payload and visual quality (with both PSNR and MSSIM criteria) which shown in Tables 2, 3 and 4. From the results of Tables 2, 3 and 4, it can be found that the proposed method guarantees PSNR above 46.4 dB and MSSIM above 0.993, whereas the payload is increased by 101% compared to [27]. The proposed method has improved the average value of PSNR and MSSIM compared to method [23] by 0.04% and 0.20% with \( P = 1\,\,{\text{bpp}} \), and 3.93% and 0.47% with \( P = 2\,\,{\text{bpp}} \), respectively. Thus, in the proposed method, the quality improvement of steganography can be seen clearly for embedding in the more significant bit. This improvement is due to using an optimal data substitution for specified pixels. Figure 9 shows a comparative diagram of visual quality by PSNR criterion between LSB substitution method, optimal LSB substitution method [21], GA method [21], method [23], and the proposed method. In all of them, the payload value is considered equal to 1bpp. Figures 10 and 11 show comparative diagrams of the visual quality in terms of MSSIM criterion between methods [23, 27] and the proposed method. As shown in Figs. 9, 10, 11, the proposed method had a much better quality compared to the other methods, due to the definition of an appropriate cost function and also the use of modules function.

Results of the visual quality of some test images (\( r = 1 \)); from left to right: before embedding, after embedding by method [27], after embedding by the proposed method

Results of the visual quality of some test images (\( r = 2 \)); from left to right: before embedding, after embedding by method [23], after embedding by the proposed method

Comparative diagram between the proposed method (\( r = 2 \)) and the method [23], in terms of the visual quality by MSSIM

We also investigated the performance of the proposed method using the GA, instead of the use of BOA, and performed a comparison between them in terms of speed of convergence. The parameters used in the GA in all these experiments are as follows: (1) number of low-order bits for embedding \( r = 2 \); (2) size (number) of the initial population \( n_{\text{pop}} = 10 \); (3) maximum number of iterations equal to 10; (4) crossover probability equal to 70%; and (5) mutation probability equal to 10%. (The parameters were chosen the same for both the GA and BOA.) Figure 12 shows a comparison between the use of the BOA and GA in the proposed steganography technique for four test images in terms of the speed of convergence. As can be seen, the GA is converged on average after approximately six iterations, while the BOA is converged on average after approximately four iterations. So it can be concluded that the use of BOA generally led to finding the optimal solution (optimal mapping vector) in a lower number of iterations. It can reduce the number of computations (especially when calculating the cost function and also for larger values of \( r \)).

6 Conclusions

A new steganography method with blind detection was provided along with high embedding payload. Although this method embeds information into more significant bits of a pixel, the image visual quality can be preserved well.

The results of the simulations were compared with methods of [20, 21, 23, 27, 28, 30,31,32]. Due to the use of optimal mapping vector, we reduced the difference between images before and after embedding and achieved a PSNR above 46.4 dB and MSSIM above 0.993, while increasing the payload by 101% compared to [27]. Also, compared to [23], PSNR and MSSIM were on average improved by 0.04% and 0.20% with \( r = 1 \) and by 3.93% and 0.47% with \( r = 2 \), respectively. The results of simulations also showed that in the proposed method, visual quality of stego-image, in terms of both PSNR and MSSIM, is better than other methods, due to a proper definition of the cost function.

In the proposed approach, in addition to the use of the BOA, the GA was also employed in process of finding optimal mapping vector. The results showed that the BOA compared to the GA, generally, find the optimal solution in a fewer number of iterations, which can decrease the number of cost function calculations and as a result reduce the computational burden. Although the optimization process was implemented using the BOA, the embedding stage in the proposed approach can also be implemented by other optimization algorithms.

References

Schneier B (2007) Applied cryptography: protocols, algorithms, and source code in C. Wiley, New York

Zeng W (1998) Digital watermarking and data hiding: technologies and applications. In: Proceedings of international conference on information systems, analysis and synthesis, pp 223–229

Wu M, Liu B (2003) Data hiding in image and video. I. Fundamental issues and solutions. IEEE Trans Image Process 12(6):685–695

Wu M, Yu H, Liu B (2003) Data hiding in image and video. II. Designs and applications. IEEE Trans Image Process 12(6):696–705

Zhang X, Wang S (2004) Vulnerability of pixel-value differencing steganography to histogram analysis and modification for enhanced security. Pattern Recogn Lett 25(3):331–339

Wang C-T, Yu H-F (2012) A Markov-based reversible data hiding method based on histogram shifting. J Vis Commun Image Represent 23(5):798–811

Naji A, Zaidan A, Zaidan B, Hameed SA, Khalifa OO (2009) Novel approach for secure cover file of hidden data in the unused area within exe file using computation between cryptography and steganography. Int J Comput Sci Netw Secur 9(5):294–300

Ahadpour S, Majidpour M, Sadra Y (2012) Public key steganography using discrete cross-coupled chaotic maps. arXiv preprint arXiv:12110086

Alia MA, Yahya A (2010) Public–key steganography based on matching method. Eur J Sci Res 40(2):223–231

Diffie W, Hellman M (1976) New directions in cryptography. IEEE Trans Inf Theory 22(6):644–654

Zou D, Shi YQ, Ni Z, Su W (2006) A semi-fragile lossless digital watermarking scheme based on integer wavelet transform. IEEE Trans Circuits Syst Video Technol 16(10):1294–1300

Dosselmann R, Yang XD (2005) Existing and emerging image quality metrics. In: 2005 Canadian conference on electrical and computer engineering. IEEE, pp 1906–1913

Sheikh HR, Bovik AC (2006) Image information and visual quality. IEEE Trans Image Process 15(2):430–444

Wang Z, Bovik AC, Lu L (2002) Why is image quality assessment so difficult? In: 2002 IEEE international conference on acoustics, speech, and signal processing (ICASSP). IEEE, pp IV-3313–IV-3316

Eskicioglu AM, Fisher PS (1995) Image quality measures and their performance. IEEE Trans Commun 43(12):2959–2965

Winkler S, Susstrunk S (2004) Visibility of noise in natural images. In: 2004 international society for optics and photonics electronic imaging, pp 121–129

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Hamid N, Yahya A, Ahmad RB, Al-Qershi OM (2012) Image steganography techniques: an overview. Int J Comput Sci Secur (IJCSS) 6(3):168–187

Motamedi H, Jafari A (2012) A new image steganography based on denoising methods in wavelet domain. In: 2012 9th international ISC conference on information security and cryptology (ISCISC). IEEE, pp 18–25

Yang H, Sun X, Sun G (2009) A high-capacity image data hiding scheme using adaptive LSB substitution. Radioengineering 18(4):509–516

Wang R-Z, Lin C-F, Lin J-C (2001) Image hiding by optimal LSB substitution and genetic algorithm. Pattern Recogn 34(3):671–683

Thien C-C, Lin J-C (2003) A simple and high-hiding capacity method for hiding digit-by-digit data in images based on modulus function. Pattern Recogn 36(12):2875–2881

Chang C-C, Chan C-S, Fan Y-H (2006) Image hiding scheme with modulus function and dynamic programming strategy on partitioned pixels. Pattern Recogn 39(6):1155–1167

Ker AD (2005) Steganalysis of LSB matching in grayscale images. IEEE Signal Process Lett 12(6):441–444

Mielikainen J (2006) LSB matching revisited. IEEE Signal Process Lett 13(5):285–287

Wu D-C, Tsai W-H (2003) A steganographic method for images by pixel-value differencing. Pattern Recogn Lett 24(9):1613–1626

Chang C-C, Kieu TD, Chou Y-C (2008) A high payload steganographic scheme based on (7, 4) hamming code for digital images. In: 2008 international symposium on electronic commerce and security. IEEE, pp 16–21

Molaei AM, Sedaaghi MH, Ebrahimnezhad H (2014) a blind steganography based on Reed-Solomon codes and optimal substitution table with improving payload and robustness. Tabriz J Electr Eng 43(2):43–59 (In Persian)

Molaei A, Sedaaghi M, Ebrahimnezhad H (2017) Steganography scheme based on Reed-Muller code with improving payload and ability to retrieval of destroyed data for digital images. AUT J Electr Eng 49(1):53–62

Zhang Y, Jiang J, Zha Y, Zhang H, Zhao S (2013) Research on embedding capacity and efficiency of information hiding based on digital images. Int J Intell Sci 3(02):77

Jena B (2014) High payload digital image steganography using mixed edge detection mechanism. MTech thesis, National Institute of Technology Rourkela, India, Retrieved from http://ethesis.nitrkl.ac.in/6468/

Yin Z-X, Chang C-C, Xu Q, Luo B (2015) Second-order steganographic method based on adaptive reference matrix. IET Image Proc 9(4):300–305

Zhang X, Wang S (2006) Efficient steganographic embedding by exploiting modification direction. IEEE Commun Lett 10(11):781–783

Chang C-C, Chou Y-C, Kieu TD (2008) An information hiding scheme using Sudoku. In: 2008 3rd international conference on innovative computing information and control ICICIC’08. IEEE, p 17

Hong W, Chen T-S, Shiu C-W (2008) A minimal Euclidean distance searching technique for Sudoku steganography. In: 2008 international symposium on information science and engineering. IEEE, pp 515–518

Chao R-M, Wu H-C, Lee C-C, Chu Y-P (2009) A novel image data hiding scheme with diamond encoding. EURASIP J Inf Secur 1:1

Yin Z, Luo B (2015) MDE-based image steganography with large embedding capacity. Secur Commun Netw 9(8):721–728

Ulker M, Arslan B (2018) A novel secure model: image steganography with logistic map and secret key. In: 2018 6th international symposium on digital forensic and security (ISDFS). IEEE, pp 1–5

Pelikan M (2005) Hierarchical Bayesian optimization algorithm. In: Hierarchical Bayesian optimization algorithm. Springer, pp 105–129

Kjaerulff UB, Madsen AL (2008) Bayesian networks and influence diagrams, vol 200. Springer, Berlin, p 114

Cooper GF (1990) The computational complexity of probabilistic inference using Bayesian belief networks. Artif Intell 42(2–3):393–405

Heckerman D, Geiger D, Chickering DM (1995) Learning Bayesian networks: the combination of knowledge and statistical data. Mach Learn 20(3):197–243

Cooper GF, Herskovits E (1992) A Bayesian method for the induction of probabilistic networks from data. Mach Learn 9(4):309–347

Castillo E, Gutierrez JM, Hadi AS (2012) Expert systems and probabilistic network models. Springer, Berlin

Pelikan M, Goldberg DE, Cantu-Paz E (2000) Linkage problem, distribution estimation, and Bayesian networks. Evol Comput 8(3):311–340

Ingemar JC, Miller ML, Bloom JA, Fridrich J, Kalker T (2008) Digital watermarking and steganography. Morgan Kaufmann, Burlington

PourArian MR, Hanani A (2016) Blind steganography in color images by double wavelet transform and improved Arnold transform. Indones J Electr Eng Comput Sci 3(3):586–600

Jin Y, Olhofer M, Sendhoff B (2001) Dynamic weighted aggregation for evolutionary multi-objective optimization: Why does it work and how? In: Proceedings of the 3rd annual conference on genetic and evolutionary computation. Morgan Kaufmann Publishers Inc., pp 1042–1049

Da Ronco CC, Benini E (2014) A simplex-crossover-based multi-objective evolutionary algorithm. In: IAENG transactions on engineering technologies. Springer, pp 583–598

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Molaei, A.M., Ebrahimzadeh, A. Optimal steganography with blind detection based on Bayesian optimization algorithm. Pattern Anal Applic 22, 205–219 (2019). https://doi.org/10.1007/s10044-018-00773-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10044-018-00773-0